Perceived Stress and Perceived Lack of Control of Student Teachers in Field Practice Placements in Schools during the COVID-19 Pandemic: Validity of the PSS-10 Adapted to the Field Practice Context

Abstract

1. Introduction

1.1. Environmental and Perceived Stress in Field Practice Placements

1.2. Changes and Development in Student Teachers’ Perceived Stress Related to Field Practice Placements

1.3. Perceived Stress in Student Teachers’ Field Practice Placements and Outcomes

1.4. The Measurement of Perceived Stress in Teacher Education Field Practice Placements

1.5. The Current Study

2. Materials and Methods

2.1. Participants and Data Collection

2.2. Instrument

2.3. Item Analyses by Rasch Models

- Unidimensionality: The items of a scale assess a single underlying latent construct. Here, the perceived stress in field practice (PSFP) subscale assesses one construct and the perceived lack of control in field practice (PLCFP) subscale another.

- Monotonicity: The expected item scores on a scale should increase with increasing values of the latent variable. Here, the probability of occurrence of any of the experiences described in the items should increase with increasing scores on the subscale.

- Local independence of items (no local response dependence; LRD): Given the latent variable, the responses to a single item should be conditionally independent of the responses to any other item of the scale. For example, responses to any one PSFP item should depend only on the level of perceived stress in field practice and not also on the responses to the other items in the scale.

- No differential item functioning (DIF): Items and exogenous variables (i.e., background variables) should be conditionally independent given the latent variable. For example, responses to any one PSFP item should depend only on the level of perceived stress in field practice and not on subgroup membership such as gender or age.

- Homogeneity: The rank order of the item parameters (i.e., the item difficulties) should be the same for all persons, regardless of their level on the latent variable. For example, the item that requires the least perceived stress in field practice to be endorsed should be the same for all students, whether they experienced little or a great deal of stress in their field practice; the same applies to the item requiring the second-lowest perceived stress in field practice, and so on.
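The monotonicity requirement can be illustrated numerically. The sketch below assumes that each polytomous item (scored 0 to 4, as in the PSS-10) follows a partial credit parameterisation of the Rasch model; this parameterisation, the function names, and the threshold values are illustrative assumptions, not estimates or code from this study. It computes the category probabilities and the expected item score, which should rise steadily with the latent variable.

```python
import math


def pcm_probabilities(theta, thresholds):
    """Category probabilities P(X = x | theta) for x = 0..k under a
    partial credit parameterisation with thresholds b_1..b_k (assumed model)."""
    # Unnormalised log-probability of category x is the sum over j <= x of (theta - b_j).
    log_weights = [0.0]
    for b in thresholds:
        log_weights.append(log_weights[-1] + (theta - b))
    # Subtract the maximum before exponentiating to avoid overflow.
    top = max(log_weights)
    weights = [math.exp(v - top) for v in log_weights]
    total = sum(weights)
    return [w / total for w in weights]


def expected_item_score(theta, thresholds):
    """Expected item score E[X | theta]; monotonicity means this increases with theta."""
    probs = pcm_probabilities(theta, thresholds)
    return sum(x * p for x, p in enumerate(probs))


# Hypothetical thresholds for one item scored 0-4 (four thresholds, five categories).
HYPOTHETICAL_THRESHOLDS = [-1.5, -0.5, 0.5, 1.5]

if __name__ == "__main__":
    for theta in (-3.0, -1.0, 0.0, 1.0, 3.0):
        score = expected_item_score(theta, HYPOTHETICAL_THRESHOLDS)
        print(f"theta = {theta:+.1f}  expected score = {score:.3f}")
```

Under this kind of model, the slope of the expected score equals the conditional variance of the item score, which is always positive, so an item that fits the model satisfies monotonicity by construction.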

2.3.1. Strategy of Analysis

- Overall test of homogeneity of item parameters across low- and high-scoring groups.

- Overall tests of invariance in relation to the level of field practice placement (level I, level II, and level III), campus (A or B), Bachelor of Education program (regular or other), gender (female or male), and median-cut age groups (25 years and younger and 26 years and older). The first category serves as the reference group.

- Tests of no DIF for all items relative to the above background variables.

- Tests of local independence for all item pairs.

- Fit of the individual items to the Rasch model (RM).

- Assessment of the standard error and bias of the person parameter estimates.

- Evaluation of targeting and reliability relative to the current study population.
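The strategy above involves many tests: the local-independence step alone covers 15 item pairs for the six PSFP items and 6 pairs for the four PLCFP items, on top of the DIF tests for every item and background variable. Some correction for multiple testing is therefore usually warranted. The sketch below shows one common choice, the Benjamini-Hochberg false discovery rate procedure; whether and how such a correction was applied in this study is an assumption of the illustration, and the function names are hypothetical.

```python
def n_item_pairs(n_items):
    """Number of distinct item pairs to test for local dependence."""
    return n_items * (n_items - 1) // 2


def benjamini_hochberg(p_values, q=0.05):
    """Return one boolean per hypothesis: True where it is rejected under the
    Benjamini-Hochberg step-up procedure at false discovery rate q."""
    m = len(p_values)
    # Indices of the hypotheses ordered by ascending p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k (1-based) whose p-value satisfies p_(k) <= k * q / m.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * q / m:
            k_max = rank
    # Reject every hypothesis whose rank is at most k_max.
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= k_max:
            rejected[idx] = True
    return rejected


if __name__ == "__main__":
    print(n_item_pairs(6), n_item_pairs(4))  # prints 15 6
    # PSFP GLLRM p-values from the tests-of-fit table, used purely as an illustration.
    example = [0.386, 0.096, 0.168, 0.029, 0.026, 0.229]
    print(benjamini_hochberg(example))  # prints [False, False, False, False, False, False]
```

In this illustration the two smallest p-values (0.026 and 0.029) exceed their step-up thresholds (0.05/6 and 2 × 0.05/6), so no hypothesis is rejected once the six tests are treated as one family.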

2.3.2. Statistics

2.4. Criterion Validity

2.5. Software

3. Results

3.1. Item Analyses by Rasch Models

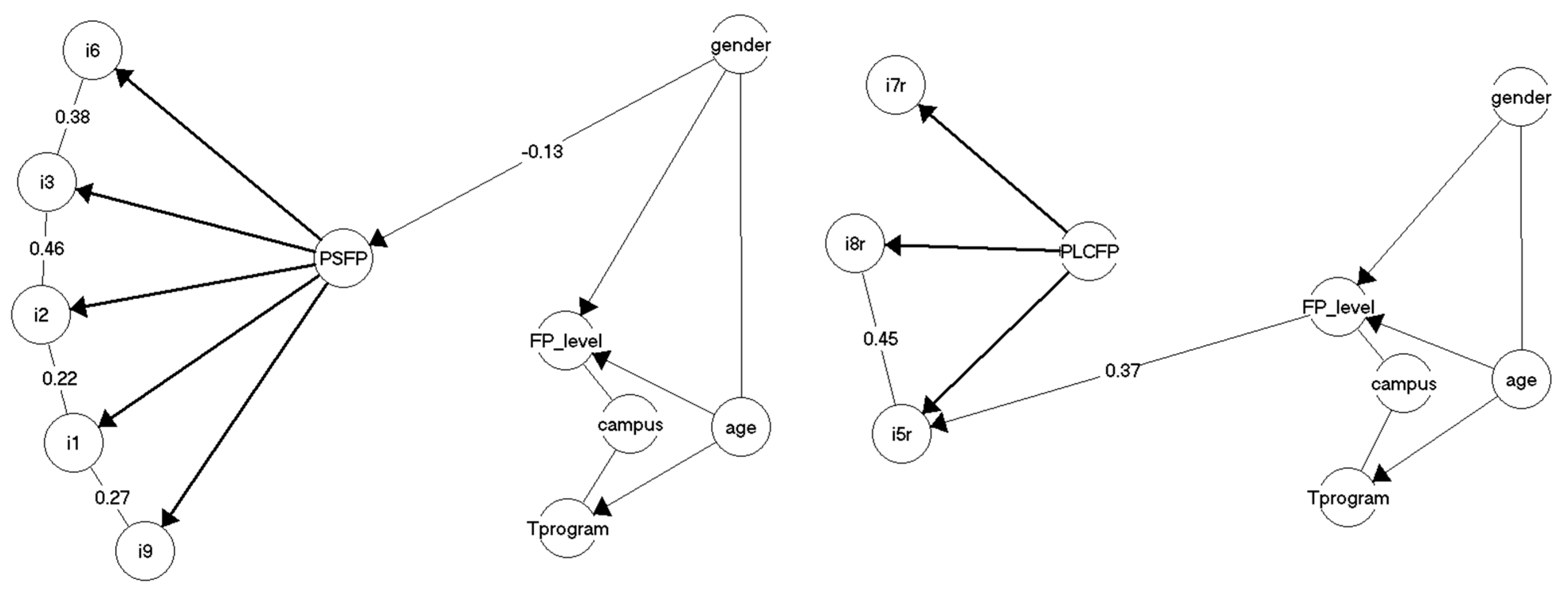

3.1.1. Local Response Dependence and Differential Item Functioning

3.1.2. Targeting and Reliability

3.2. Criterion Validity

3.2.1. Correlation of Perceived Stress and Perceived Lack of Control in Field Practice

3.2.2. Levels of Field Practice and Perceived Stress and Perceived Lack of Control in Field Practice

3.2.3. Perceived Stress and Perceived Lack of Control in Field Practice and Exam Grades

4. Discussion

4.1. Psychometric Properties

4.2. Criterion Validity

4.3. Perceived Stress and Lack of Control in Future and Broader Studies of Student Teachers in the Field Practice Context

4.4. Strengths and Limitations

5. Conclusions

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Frequency (%) |
|---|---|
| Field practice placement | |
| Level I | 56 (15.6) |
| Level II | 151 (42.1) |
| Level III | 152 (42.3) |
| Campus | |
| Campus A | 236 (65.7) |
| Campus B | 123 (34.3) |
| BA education program | |
| Regular | 300 (83.6) |
| Other | 59 (16.4) |
| Major teaching subject a | |
| Danish (grade 1–6) | 62 (17.5) |
| Danish (grade 4–10) | 118 (33.3) |
| Mathematics (grade 1–6) | 18 (5.1) |
| Mathematics (grade 4–10) | 125 (35.3) |
| English (grade 1–6) | 8 (2.3) |
| English (grade 4–10) | 23 (6.5) |
| Gender | |
| Female | 258 (71.9) |
| Male | 101 (28.1) |
| Age | |
| Mean (SD) | 27.2 (6.95) |

| Tests of Fit | PSFP RM a: CLR | df | p | PSFP GLLRM b: CLR | df | p | PLCFP RM a: CLR | df | p | PLCFP GLLRM c: CLR | df | p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Global homogeneity d | 74.2 | 23 | <0.001 | 81.0 | 78 | 0.386 | 68.6 | 15 | <0.001 | 12.8 | 26 | 0.985 |
| Invariance | | | | | | | | | | | | |
| Field practice level | 76.7 | 46 | 0.003 | 179.5 | 156 | 0.096 | 63.8 | 30 | <0.001 | 28.6 | 32 | 0.641 |
| Teacher program | 22.7 | 23 | 0.478 | 89.9 | 78 | 0.168 | 15.1 | 15 | 0.217 | 30.2 | 26 | 0.261 |
| Campus | 16.3 | 23 | 0.841 | 103.4 | 78 | 0.029 + | 18.9 | 15 | 0.443 | 21.2 | 26 | 0.730 |
| Gender | 49.7 | 23 | 0.001 | 104.0 | 78 | 0.026 + | 19.4 | 15 | 0.197 | 31.4 | 26 | 0.213 |
| Age | 31.8 | 23 | 0.104 | 86.9 | 78 | 0.229 | 24.7 | 15 | 0.055 | 43.5 | 26 | 0.017 + |
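The conditional likelihood ratio (CLR) statistics in the tests-of-fit table are referred to a chi-square distribution. The sketch below, written with the standard library only, computes the upper-tail p-value from a CLR value and its degrees of freedom. It also counts the degrees of freedom of an Andersen-type comparison of item parameters across groups, assuming every item keeps all five response categories (0 to 4). These counts reproduce the df of the RM columns (23 and 46 for the six PSFP items, 15 and 30 for the four PLCFP items); the GLLRM columns have different df, presumably because those models add DIF and local-dependence parameters. The function names are hypothetical.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variable with df degrees of freedom."""
    if x <= 0:
        return 1.0
    a = df / 2.0
    z = x / 2.0
    # Series for the regularised lower incomplete gamma function P(a, z):
    # P(a, z) = z^a e^(-z) / Gamma(a) * sum_{n>=0} z^n / (a (a+1) ... (a+n)).
    term = 1.0 / a
    total = term
    n = 1
    while term > 1e-16 * total:
        term *= z / (a + n)
        total += term
        n += 1
    lower = total * math.exp(a * math.log(z) - z - math.lgamma(a))
    # 1 - P loses relative precision for extremely small p-values; adequate for reporting.
    return max(0.0, 1.0 - lower)


def clr_df(n_items, n_categories, n_groups):
    """Degrees of freedom for comparing item parameters across n_groups,
    assuming every item keeps all of its response categories."""
    # Each item has n_categories - 1 thresholds; one parameter is fixed for identification.
    free_item_parameters = n_items * (n_categories - 1) - 1
    return free_item_parameters * (n_groups - 1)


if __name__ == "__main__":
    print(clr_df(6, 5, 2), clr_df(6, 5, 3))  # prints 23 46
    print(clr_df(4, 5, 2), clr_df(4, 5, 3))  # prints 15 30
    print(f"PSFP GLLRM global homogeneity: p = {chi2_sf(81.0, 78):.3f}")
```

A dedicated statistics library would normally be used for the chi-square tail; the series version is shown only to keep the example self-contained.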

Item/Rest Score Correlations

| PSFP Items | RM a: Obs γ | RM a: Exp γ | RM a: p | GLLRM b: Obs γ | GLLRM b: Exp γ | GLLRM b: p | PLCFP Items | RM a: Obs γ | RM a: Exp γ | RM a: p | GLLRM c: Obs γ | GLLRM c: Exp γ | GLLRM c: p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| i1 | 0.59 | 0.58 | 0.765 | 0.58 | 0.58 | 0.352 | i4r | 0.57 | 0.58 | 0.744 | - | - | - |
| i2 | 0.65 | 0.59 | 0.067 | 0.65 | 0.63 | 0.520 | i5r | 0.61 | 0.59 | 0.611 | 0.72 | 0.68 | 0.361 |
| i3 | 0.62 | 0.59 | 0.358 | 0.61 | 0.62 | 0.918 | i7r | 0.60 | 0.59 | 0.810 | 0.59 | 0.58 | 0.768 |
| i6 | 0.57 | 0.58 | 0.705 | 0.54 | 0.52 | 0.591 | i8r | 0.67 | 0.58 | 0.048 | 0.67 | 0.68 | 0.849 |
| i9 | 0.46 | 0.58 | <0.001 + | 0.43 | 0.46 | 0.434 | | | | | | | |
| i10 | 0.76 | 0.59 | <0.001 + | - | - | - | | | | | | | |
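The observed item/rest-score correlations (Obs γ) are Goodman-Kruskal gamma coefficients between each item and the sum of the remaining items of the same subscale. The sketch below computes the observed coefficient from a response matrix; the expected values (Exp γ) come from the fitted model and are not reproduced here. The response matrix and the function names are hypothetical.

```python
from itertools import combinations


def goodman_kruskal_gamma(x, y):
    """Goodman-Kruskal gamma for two ordinal sequences; pairs tied on either variable are ignored."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    concordant = discordant = 0
    for i, j in combinations(range(len(x)), 2):
        s = (x[i] - x[j]) * (y[i] - y[j])
        if s > 0:
            concordant += 1
        elif s < 0:
            discordant += 1
    if concordant + discordant == 0:
        raise ValueError("gamma is undefined when every pair is tied")
    return (concordant - discordant) / (concordant + discordant)


def item_rest_gamma(responses, item):
    """Observed gamma between one item and the rest score (sum of the other items)."""
    item_scores = [row[item] for row in responses]
    rest_scores = [sum(row) - row[item] for row in responses]
    return goodman_kruskal_gamma(item_scores, rest_scores)


# Hypothetical responses of five persons to three items scored 0-4.
TOY_RESPONSES = [
    [0, 1, 0],
    [1, 1, 2],
    [2, 3, 2],
    [3, 2, 4],
    [4, 4, 3],
]

if __name__ == "__main__":
    print(item_rest_gamma(TOY_RESPONSES, 1))  # prints 0.75
```

For item 1 of the toy data there are seven concordant pairs, one discordant pair, and two tied pairs, giving (7 - 1) / (7 + 1) = 0.75.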

| Scales and Subgroups (n) a | Target θ | Mean θ | TI Mean | TI Max | TI Target Index | RMSE Mean | RMSE Min | RMSE Target Index | Target Sum Score | Mean Sum Score | Mean SEM (Sum Score) | r b |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PSFP subscale | | | | | | | | | | | | |
| All students (359) | 0.22 | −0.52 | 6.120 | 8.533 | 0.717 | 0.425 | 0.342 | 0.805 | 12.80 | 7.64 | 2.45 | 0.65 |
| PLCFP subscale | | | | | | | | | | | | |
| Field practice level I (56) | 2.00 | −0.71 | 1.258 | 1.868 | 0.673 | 0.882 | 0.732 | 0.829 | 7.61 | 3.48 | 1.11 | 0.71 |
| Field practice level II (151) | 1.54 | −1.12 | 1.191 | 1.989 | 0.599 | 0.933 | 0.709 | 0.760 | 7.47 | 3.44 | 1.08 | 0.69 |
| Field practice level III (152) | 1.59 | −1.22 | 1.262 | 1.914 | 0.659 | 0.882 | 0.723 | 0.819 | 7.66 | 3.32 | 1.11 | 0.68 |
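The two target indices in the targeting table are consistent with simple ratios: the test information target index equals the mean test information divided by the maximum test information, and the RMSE target index equals the minimum RMSE divided by the mean RMSE. The sketch below recomputes them from the tabulated values; reading the indices as these ratios is an inference from the arithmetic rather than a definition stated in this section, and the function names are hypothetical.

```python
def ti_target_index(ti_mean, ti_max):
    """Mean test information over persons relative to the maximum test information."""
    return ti_mean / ti_max


def rmse_target_index(rmse_min, rmse_mean):
    """Smallest attainable RMSE relative to the mean RMSE over persons."""
    return rmse_min / rmse_mean


# (TI mean, TI max, RMSE mean, RMSE min) copied from the targeting table.
TABLE_ROWS = {
    "PSFP, all students": (6.120, 8.533, 0.425, 0.342),
    "PLCFP, level I": (1.258, 1.868, 0.882, 0.732),
    "PLCFP, level II": (1.191, 1.989, 0.933, 0.709),
    "PLCFP, level III": (1.262, 1.914, 0.882, 0.723),
}

if __name__ == "__main__":
    for label, (ti_mean, ti_max, rmse_mean, rmse_min) in TABLE_ROWS.items():
        print(f"{label}: TI index = {ti_target_index(ti_mean, ti_max):.3f}, "
              f"RMSE index = {rmse_target_index(rmse_min, rmse_mean):.3f}")
```

Recomputed from the rounded table entries, the RMSE indices for PLCFP levels I and III come out as 0.830 and 0.820 rather than the reported 0.829 and 0.819, a difference attributable to rounding of the inputs.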

| Level of Field Practice (n) | PSFP Mean Score | PSFP SE | PSFP 95% CI | p a | PLCFP Mean Score b | PLCFP SE | PLCFP 95% CI | p c |
|---|---|---|---|---|---|---|---|---|
| Level I (56) | 7.45 | 0.53 | [6.44; 8.49] | | 3.48 | 0.27 | [2.98; 4.04] | |
| Level II (151) | 7.49 | 0.34 | [6.86; 8.19] | | 3.02 | 0.15 | [2.73; 3.32] | |
| Level III (152) | 7.86 | 0.36 | [7.17; 8.52] | 0.355 | 2.85 | 0.15 | [2.58; 3.14] | 0.046 |

| Scale Score Groups (n) | Mean Grade | SE | 95% CI | p a | ES |
|---|---|---|---|---|---|
| PSFP b | | | | | |
| Low (187) | 8.61 | 0.21 | [8.20; 9.02] | | |
| High (160) | 7.88 | 0.13 | [7.37; 8.43] | 0.016 | 0.232 |
| PLCFP b | | | | | |
| Low (151) | 8.49 | 0.24 | [8.03; 8.99] | | |
| High (196) | 8.11 | 0.23 | [7.63; 8.54] | 0.132 | 0.121 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nielsen, T. Perceived Stress and Perceived Lack of Control of Student Teachers in Field Practice Placements in Schools during the COVID-19 Pandemic: Validity of the PSS-10 Adapted to the Field Practice Context. Educ. Sci. 2023, 13, 983. https://doi.org/10.3390/educsci13100983
