Abstract

Biomedical engineering (BME) is one of the fastest-growing engineering fields worldwide. BME professionals are extensively employed in the health technology and healthcare industries. Hence, their education must prepare them to face the challenge of a rapidly evolving technological environment. Biomedical signals and systems analysis is essential to BME undergraduate education. Unfortunately, students often underestimate the importance of their courses as they do not perceive these courses’ practical applications in their future professional practice. In this study, we propose using blended learning spaces to develop new learning experiences in the context of a biomedical signals and systems analysis course to enhance students’ motivation and interest and the relevance of the materials learned. We created a learning experience based on wearable devices and cloud-based collaborative development environments such that the students turned daily-life scenarios into experiential learning spaces. Overall, our results suggest a positive impact on the students’ perceptions of their learning experience concerning relevance, motivation, and interest. Namely, the evidence shows a reduction in the variability of such perceptions. However, further research must confirm this potential impact. This confirmation is required given the monetary and time investment this pedagogical approach would require if it were to be implemented at a larger scale.

1. Introduction

Biomedical engineering (BME) is defined as a discipline that “integrates physical, mathematical, and life sciences with engineering principles for the study of biology, medicine and health systems and for the application of technology to improving health and quality of life” [1]. With 117,935 biomedical engineers distributed in 129 countries in 2015, BME is one of the fastest-growing engineering fields worldwide [2,3]. BME professionals are extensively employed in the health technology and healthcare industries, hospitals and other institutions, academia, government institutions, and national regulatory agencies. Indeed, the World Health Organization (WHO) recently recognised that biomedical engineers play a critical role in the evolution of healthcare systems. Hence, BME education needs to prepare future biomedical engineers for the challenge, equipping them with new skills with which to apply their knowledge and tools of analysis, design, and implementation for problem solving within complex healthcare systems [4].

Biomedical signals and systems analysis is essential to BME undergraduate education [5,6]. Biomedical signal analysis entails extracting helpful information from biological and medical signals for diagnostic and therapeutic purposes [7]. For instance, cardiac signals can be studied to determine whether a patient is susceptible to a heart attack. Muscle signals can be analysed to extract features later used to control artificial limbs. Furthermore, analysing biomedical signals enables the characterisation of the systems that originate or manipulate them. For example, studying brain signals can lead to a deeper understanding of brain function during sleep. Thus, the “biomedical signals and systems analysis” course represents an opportunity to apply theoretical concepts and mathematical tools to real-life problems.

Despite the relevance of their future profession, BME undergraduate students frequently underestimate the importance of their courses as they do not perceive their practical applications in their future professional activities [5]. Thus, they show a lack of interest and motivation, which leads to suboptimal competency development. Such a gap may be reduced by implementing activities that allow students to build their knowledge in an immersive and engaging real-world scenario [8].

Several mechanisms have been identified to provide BME students with hands-on experiences throughout the curriculum, increasing their learning and engagement and promoting their preparedness to pursue careers in industry, academia, or elsewhere [5]. These mechanisms include computer simulation, laboratory experiments, design courses, guest speakers, industry-sponsored design projects, hospital field trips, placements at medical device companies, and internships. Additionally, instructional methods such as project-based learning (PBL) and challenge-based learning (CBL) have been proposed to improve BME education [9,10,11]. These methods collaboratively engage students in developing solutions to real-world problems concerning crucial concepts in the discipline, thus fostering disciplinary knowledge and creative thinking skills. Moreover, these methods have been found to increase students’ motivation and awareness of the connections between their in-class experiences and their future work [9,10,11].

In this work, we propose the use of blended learning spaces that combine direct instruction, experiential learning in daily-life scenarios, and cloud-based collaborative development environments in a “biomedical signals and systems analysis” course from the perspective of a teaching practice. Our proposal is grounded in an innovative concept of the “experiential learning space” previously presented [12,13]. Notably, we incorporate wearable devices as the enabling technology for experiential learning activities to record physiological and behavioural signals in everyday scenarios. In doing so, we intend to increase students’ perceived relevance of, interest in, and motivation for the course content and learning outcomes to improve their academic performance and competency development.

2. Theoretical Framework

The proposition of blended learning spaces for experiential learning supported by wearable technology and cloud-based collaborative development environments requires a clarification of the notions of relevance, interest, and the expected contribution to competency development in this work. These ideas are integrated into the fundamental assumptions behind the development of relevant learning experiences in the proposed blended learning spaces.

2.1. Relevance, Interest and Academic Performance

The term “interest” describes two different experiences: (1) an increased psychological state of attention, concentration, and affect experienced at a given time (situational interest), and (2) a durable predisposition to become involved with an object or a subject throughout time (personal interest) [14,15]. Situational interest combines affective (e.g., enthusiasm) and cognitive (e.g., perceived value) qualities produced by the characteristics of the situation (e.g., novelty) [15]. Experiencing situational interest promotes learning by increasing attention and dedication to the subject [16]. If situational interest shifts to personal interest, the student is more likely to delve deeper and become more involved in the topic. Thus, interest is a predictor of academic performance and, as a result, of competency development [17]. Such a transition from situational to personal interest arises when the assignments are perceived as relevant [14,16]. In this sense, relevance in education is considered to trigger interest as there is usefulness or practicality in preparing people to perform their current or future jobs or tasks competently under certain existing circumstances and requirements [8]. Therefore, using relevant situations in learning experiences becomes paramount for competency development as it highlights a purposive aspect of learning and the expected contribution students could obtain from their studies or what they learn. However, a question remains about “how to learn”, that is, the educational approach that best facilitates students’ learning. Although different approaches might be considered, meaningful and long-lasting alternatives based on a constructivist student-centred approach are required [18]. This view calls for “experiential learning”, which is claimed to be more effective than any other form of learning as it enhances the motivation to learn by supporting the active participation of students in the learning process to code and construct learning within their condition and reality [19].

2.2. Experiential Learning

Experiential learning has been proposed to contribute to students’ learning in a meaningful and long-lasting way because of the emphasis on learning-by-doing and experimentation [19]. Learning is considered to naturally occur as part of a continuous meaning-making process through personal and environmental experiences in which students develop interest and recognise relevance in their learning [20]. Experiential learning involves a four-stage circular feedback process known as Kolb’s Experiential Learning Cycle, in which concrete experience (CE), reflective observation (RO), abstract conceptualisation (AC), and active experimentation (AE) take place in a continuous learning loop [21]. Accordingly, two major interacting domains in which learning occurs can be recognised as part of the learning cycle. On the one hand, there is an intellectual or conceptual domain in which people think and reflect on a situation. On the other hand, there is an operational domain in which people experience and act in the situation. Hence, learning can be seen as a circular process composed of intellectual activities that guide students’ actions, whereas practical activities and tasks provide feedback for conceptual knowledge in a specific context. This idea is crucial for translating educational activities into experiential learning, considering both types of conceptual and practical domains of learning [22].

Therefore, approaching learning from this perspective requires a different understanding and an action framework to conceptualise, organise, and implement meaningful learning activities as learning experiences linked to real-life environments and reflective practices. Moreover, experiential learning has become highly important as there is a current orientation in education towards CBL and competency-based education (CBE) to develop abilities in students through hands-on experiential learning activities. Experiential learning emphasises involving individual students in meaningful situations where they can build their knowledge from problem-solving or decision-making in real-world scenarios.

The ideas of experiential learning relate to other works promoting the active participation of students in such a way that they undertake a hands-on, learner-centred, collaborative, and situated type of learning [18,23,24]. This type of learning calls for moving educational approaches from a knowledge-broadcasting type of teaching in which students sit and listen to a constructivist alternative in which students learn by executing tasks in a thoughtful way while immersed in a meaningful situation [18]. The theory of experiential learning has been criticised for overlooking personal, psychological, and contextual conditions affecting, for instance, learning modes, learner types, learning styles, knowledge creation, and whether learning occurs in identifiable stages [25]. However, it is also recognised for its popularity and wide use in teaching practice [26].

2.3. Blended Learning Spaces for Biomedical Engineering Education

A learning space is commonly recognised as a location or physical place where learning occurs [27]. This might be the case of a classroom, laboratory, or workshop. Learning spaces are claimed to integrate educational approaches, technology, and resources to develop learning outcomes [28]. That is, learning spaces represent the way educators conceptualise teaching and learning [29]. However, it is increasingly argued that learning spaces are largely ineffective as learning environments, especially when referring to traditional classrooms [30]. For instance, many higher education students in traditional learning environments cannot collaborate and apply much of what they have been taught. One possible explanation for this phenomenon is that classrooms are used to support the lecturing of content to passively listening students. Learning spaces should support effective learning in terms of active participation and collaborative work, considering the needs of learners, and facilitating learning wherever it takes place [30].

Thus, learning spaces should transcend locations and physical infrastructure to incorporate the whole dynamics of learning in different types of venues or environments. As educational resources, learning spaces should also allow for the incorporation of devices that enrich and enhance learning. This is the case with information technologies such as computers, gadgets, and specialised software, but also with devices that support learning through remote or online web-based alternatives across diverse scenarios [31]. Therefore, learning spaces must adopt a broader view away from the traditional, physical classrooms or academic locations. Instead, there should be a variety of real, virtual, hybrid, or blended learning spaces supporting learning synchronously or asynchronously in ubiquitous scenarios.

In science, technology, engineering, and mathematics (STEM) education in particular, several digital technologies have been proposed as didactic tools for enriching traditional learning spaces and facilitating active learning activities, thus improving the teaching–learning process [32]. Among these technologies are mobile devices (e.g., smartphones and tablets), web-based learning platforms (see, for instance, [33]), virtual environments (e.g., Second Life [34]), digital games (e.g., Minecraft Education Edition), robots (e.g., LEGO Mindstorms NXT [35]), virtual labs (see [36] for a review on the topic), virtual reality (see, for instance, [37]), and even social networks (e.g., Facebook [38]). Previous studies have reported that these technologies are effective in improving learning outcome gains [39], increasing students’ engagement and motivation [40,41], and fostering their cognitive and creative skills [39,42], among other benefits [32].

In this work, we consider learning spaces as those venues or environments where purposeful learning occurs, inside or outside of the classroom, and which support the contact, interactions, communications, and collective (or individual) actions that students undertake to produce their learning outcomes in different instructional formats over time [12]. This conceptualisation is extended to experiential learning spaces for BME education in which students can learn about improving health and the quality of life in diverse and relevant simulated, contrived, or real-world scenarios or situations. Learning spaces, in this case, can exist within dedicated teaching facilities within universities, healthcare institutions, or any suitable daily-life location. Accordingly, this view calls for adopting the necessary technologies and resources to support learning inside and outside classrooms, laboratories, and universities.

One possible strategy for undertaking this conceptualisation of learning spaces involves using Information and Communication Technologies (ICT). For example, a study on ICT use in higher education by Zweekhorst and Maas found that students deemed lectures using ICT tools more engaging [43]. Moreover, Cuetos Revuelta et al. found that faculty considered the use of ICT in teaching highly capable of motivating and stimulating students [44]. Therefore, this work leverages the potential of cloud-based collaborative development environments such as Google Colaboratory to foster students’ motivation and engagement.

A further possibility involves the use of wearable devices. Wearables refer to “any miniaturized electronic device that can be easily donned on and off the body, or incorporated into clothing or other body-worn accessories” [45]. Wearable technologies are traditionally used to measure and monitor vital human signs using head, wrist, or chest-based gadgets for well-being, leisure, sports, and healthcare applications in different situations and under various conditions. Nevertheless, there is a growing interest in using and deploying them to facilitate teaching and learning, as they are regarded to help collect data across multiple disciplines and improve students’ satisfaction and learning [46].

In healthcare, wearables have been successfully used to improve patients’ health literacy, treatment engagement, adherence to indications, medical follow-ups, and sociality, among others [47]. However, limitations exist because of the limited range of variables they monitor and the limited accuracy with which they measure health markers. As these limitations are overcome, wearables have a promising future in expanding the clinical repertoire of patient-specific measures and becoming an essential tool for precision health [47]. In the meantime, there is an increasing interest in wearables as an emergent technology and for training clinical professionals.

In higher education, wearables have been identified among the technologies transforming teaching practices, given their potential to support improved learning experiences [48,49,50]. This technology improves students’ interaction and engagement and enables the implementation of active learning [48]. Furthermore, the potential of wearables to facilitate distance teaching and learning and to compensate for the absence of physical learning spaces (for instance, during the COVID-19 health restrictions and requirements for social distancing) has also been highlighted [50]. Conversely, high cost and potential privacy issues have been noted as drawbacks of their use in education [48,50].

In BME education, wearables can be used to train students to collect data and learn about their use, applicability, and limitations. For instance, Kanna et al. incorporated a custom-made wearable device in classroom activities to allow students to collect and analyse their electrocardiogram and breathing data [51]. According to the authors, most students considered the course intellectually stimulating, enabling them to learn concepts not practically covered in the curriculum, and enhancing their creativity and curiosity [51]. However, the full potential of wearables was not exploited since they were only used in the classroom.

Therefore, the use of wearables in this work is demonstrated in three parts. First, wearables are considered part of a learning space as an educational resource to take learning outside the classroom and universities into everyday scenarios. Second, wearables improve students’ learning experience by enabling hands-on activities that increase their interest, motivation, and learning relevance. Third, wearables are used to collect data for disciplinary learning and research purposes within BME education.

3. Materials and Methods

The learning experience presented in this paper was implemented at Tecnologico de Monterrey (hereafter referred to as Tec), a private, non-profit university with 26 campuses in Mexico. Tec’s novel educational model, known as Tec21 Model, provides CBE grounded in learning experiences aiming to develop disciplinary, program-specific, and transversal skills that will allow students to face the opportunities and challenges of the 21st century [28]. Tec21 Model relies on three pillars:

- Flexible, personalised programs of study. Programs of study are divided into three stages:

  - Exploration: a three-term stage (i.e., 1.5 years) during which students obtain the foundational knowledge and skills of a discipline or area of study (e.g., engineering). Students are expected to choose a specific academic program by the end of this stage.

  - Focus: a three-term stage (i.e., 1.5 years) during which students obtain the core knowledge and skills of their chosen academic program (e.g., biomedical engineering).

  - Specialisation: a two-term stage (i.e., one year) during which students delve into their chosen area of specialisation (e.g., rehabilitation engineering). Various learning opportunities are made available to the student to achieve this specialisation. Among them are minor and study abroad programs, internships, and undergraduate research experiences.

- Challenge-based learning, as the core pedagogical approach. CBL involves students working with stakeholders to define a challenge that is real, relevant, and related to their environment and collaboratively develop a suitable solution. Other active learning methods such as problem-based learning (PrBL) and research-based learning (RBL) are also used in some courses.

- Inspirational professors, defined as professors who are experts in their field and are or have been actively engaged in research or professional activities. They are responsible for identifying the challenges or problems to be tackled by students and creating the active learning environments that will trigger the development of disciplinary, program-specific, and transversal skills.

More specifically, the learning experience reported in this paper was implemented in a biomedical signals and systems analysis course from the Bachelor of Science in Biomedical Engineering program. Further details about the course context, objectives, and structure; the proposed learning experience’s definition and structure; the assessment tools used to collect data about its impact; and the statistical methods used to analyse the data are provided below.

3.1. Course Context, Objective and Structure

The biomedical signals and systems analysis course is required for second-year students in the BME undergraduate program at Tecnologico de Monterrey. This course is typically part of the fourth term of the program and thus belongs to the “Focus” stage. Hence, it is one of the first BME disciplinary courses students take during their undergraduate studies.

This course addresses the application of analytical methods and computational simulation and modelling tools to analyse biomedical signals and systems. The course requires prior knowledge of statistics, calculus, differential equations, numerical methods, and basic human biology and physiology. As a learning outcome, the student applies time- and frequency-domain analysis methods to characterise biological and physiological signals and systems analytically and numerically. Moreover, this course promotes the development of three competencies—one program-specific and two transversal:

- Biomedical data analysis and modelling: the student analyses biomedical data using statistical methods, signals and systems analysis and modelling methods, and computational tools (program-specific).

- Critical thinking: the student assesses the soundness of their own and others’ arguments to make judgements (transversal).

- Digital culture: the student uses digital technologies to create value in different spheres of action (transversal).

The biomedical signals and systems analysis course runs for ten weeks with four classroom hours per week, including lectures and supervised learning activities (e.g., computer simulations). In addition, students are expected to spend up to four additional hours per week on course assignments to reach the learning outcome.

The course is organised into five modules (Table 1). The first three modules cover fundamental concepts in biomedical signals analysis. These modules are delivered over the first half of the course (i.e., five weeks), hereafter referred to as period 1. The fourth and fifth modules cover core concepts in biomedical systems analysis. These modules are delivered over the second half of the course, hereafter referred to as period 2.

Table 1.

Biomedical signals and systems analysis course modules at Tecnologico de Monterrey.

The learning experience reported in this paper was implemented in the spring 2022 term. Forty-eight students enrolled in two sections, which were randomly assigned as control (n = 26) and experimental (n = 22). The same professor taught both sections.

3.2. Learning Experience Definition and Structure

The biomedical signals and systems analysis course uses research-based learning (RBL). In RBL, the course content is extensively connected with inquiry-based activities, and the research experience of the teaching staff is strongly integrated into the students’ learning activities [52,53]. This pedagogical approach allows students to develop analysis, reflection, and argumentation skills as well as discipline-specific knowledge and skills [54,55]. Other benefits of RBL for faculty and students include students’ increased engagement and satisfaction with the course and their confidence as independent thinkers [55]. Due to its nature, RBL is related to other inquiry-based approaches, such as PrBL [53].

Accordingly, the biomedical signals and systems analysis course is organised around two RBL experiences: one for the signals-related and one for the systems-related course content (i.e., periods 1 and 2, respectively). These learning experiences concern a “problem situation”, i.e., a situation or scenario posing a problem that requires acquiring and applying knowledge and skills to be solved. This problem situation leads to some research questions. Notably, the learning experience described here addresses only the signals-related course content; thus, it is implemented over period 1. Specifically, the problem situation for period 1 requires students to characterise a biomedical signal using time- and frequency-domain analysis methods and tools.

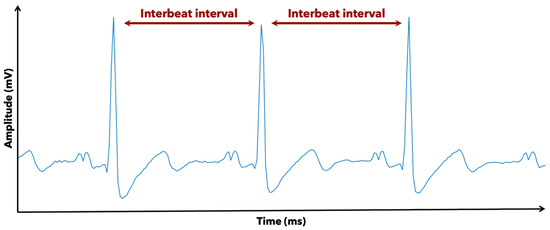

Students in the control group addressed the problem situation initially proposed by the course designers. In this situation, students analyse signals from a public dataset available in PhysioNet [56,57]. This dataset contains electrocardiography (ECG), skin conductance, and respiration signals from 57 spider-fearful individuals watching spider video clips [58]. These individuals were split into three experimental groups, each receiving different training in biofeedback techniques to control anxiety and stress [58]. In particular, students in the control group performed a heart rate variability (HRV) analysis to investigate potential differences between those experimental groups. HRV is the variation over time of the interval between consecutive heartbeats measured in milliseconds (i.e., interbeat intervals), which can be extracted from ECG signals (Figure 1) [59,60]. In HRV analysis, interbeat interval fluctuations are quantified using time- and frequency-domain methods for signal analysis (e.g., standard deviation and power spectral analysis of interbeat intervals, respectively) [59,60]. Therefore, HRV analysis was deemed optimal to link course content to real-life applications. HRV increases during resting periods and decreases during anxiety and stress periods [58]. As mentioned, students were expected to investigate potential HRV differences between groups and identify the signal analysis methods that proved more useful to the task. Furthermore, they were expected to critically appraise their results, providing a physiological interpretation of the observed differences.

Figure 1.

Electrocardiogram signal segment with two interbeat intervals identified. In heart rate variability (HRV) analysis, the fluctuations in interbeat intervals over time are quantified using time- and frequency-domain methods for signal analysis. Hence, HRV was deemed optimal to link course content to real-life applications.
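As a minimal sketch of the time-domain side of HRV analysis, the Python snippet below derives interbeat intervals from R-peak times and computes their standard deviation (commonly abbreviated SDNN), together with two further widely used quantities, the root mean square of successive differences (RMSSD) and the mean heart rate. The R-peak times are hypothetical values chosen for illustration, the function names are introduced here only for this example, and the code is not taken from the course materials.

```python
import math
import statistics


def interbeat_intervals_ms(r_peak_times_s):
    """Convert R-peak times (seconds) into interbeat intervals (milliseconds)."""
    return [(t1 - t0) * 1000.0
            for t0, t1 in zip(r_peak_times_s, r_peak_times_s[1:])]


def sdnn(ibi_ms):
    """Sample standard deviation of the interbeat intervals (ms)."""
    return statistics.stdev(ibi_ms)


def rmssd(ibi_ms):
    """Root mean square of successive interbeat-interval differences (ms)."""
    diffs = [b - a for a, b in zip(ibi_ms, ibi_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def mean_heart_rate_bpm(ibi_ms):
    """Mean heart rate (beats per minute) implied by the mean interval."""
    return 60000.0 / statistics.mean(ibi_ms)


# Hypothetical R-peak times in seconds (illustrative only, not real data).
r_peaks = [0.00, 0.80, 1.62, 2.40, 3.22, 4.00]
ibi = interbeat_intervals_ms(r_peaks)

print("Interbeat intervals (ms):", [round(v) for v in ibi])
print("SDNN (ms):", round(sdnn(ibi), 2))
print("RMSSD (ms):", round(rmssd(ibi), 2))
print("Mean heart rate (bpm):", round(mean_heart_rate_bpm(ibi), 1))
```

With real recordings, the R-peak times would come from a peak detector applied to the ECG signal, and far more than five intervals would be needed for these statistics to be meaningful.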

Despite its hands-on nature, the problem situation above represents a simplified and contrived learning experience since students are only expected to reproduce a previously performed analysis on data collected by someone else. Moreover, it addresses a situation that might not be relevant for students (i.e., stress assessment in arachnophobic individuals). Hence, students might not find it exciting and fail to fully engage in their learning experience.

To overcome these limitations, we proposed enriching the experiential learning cycle by incorporating a broader set of activities into the learning experience. Namely, students in the experimental group used wearable devices (Zephyr Bioharness 3, Medtronic Inc., Boulder, CO, USA; Figure 2) to acquire their own physiological and behavioural signals (e.g., ECG, respiration, and physical activity levels) during tasks of their interest in scenarios beyond the classroom (e.g., physical training in the gym). Hence, students’ daily-life activities, venues, and environments became part of their experiential learning spaces (i.e., the physical infrastructure, type of contact, communications, interactions, and relations with other students and faculty members in a learning setting [12]). In this sense, different learning spaces were configured according to each student’s possibilities, interests, and practical relevance, taking their learning experiences everywhere. We hypothesised that involving students in the signal acquisition process in a situation relevant to them would increase their interest in the learning experience. In turn, this interest would result in improved competency development and a stronger engagement with their learning of the course content linked to the problem situation. Furthermore, by encouraging students to carry out learning experiences within daily-life scenarios, we familiarised them with the concept of “ubiquitous healthcare” (i.e., the use of computing and communication devices to deliver healthcare services for patients and healthcare professionals, anytime and anywhere [61]) through “ubiquitous learning” [62,63].

Figure 2.

Wearable device (Zephyr Bioharness 3, Medtronic Inc., Boulder, CO, USA) that students used to acquire their own physiological and behavioural signals during tasks of their interest in scenarios beyond the classroom (e.g., physical training in the gym).

The student activities supporting this learning experience were similar for the control and experimental groups, with some exceptions derived from the use of the wearable device above. Table 2 summarises these learning activities, mapping their timing and relation to Kolb’s experiential learning cycle. Students were split into teams of four or five members to work on these activities. Further details are provided below.

Table 2.

Student learning activities as related to Kolb’s experiential learning cycle.

During the first week, all students were introduced to the problem situation, including a brief introduction to HRV analysis and its real-life applications. Moreover, students were given a demonstration of HRV analysis in action using actual data (i.e., ECG signals) and freeware [64,65]. Additionally, students in the control group downloaded and familiarised themselves with the dataset described above, whereas students in the experimental group were trained on setting up and using the wearable device. These activities allowed students to have a concrete experience of signal analysis methods applied to biomedical signals.

During the second week, all students critically read two essential papers on HRV analysis, emphasising time- and frequency-domain methods and their real-life applications [59,60]. This activity allowed students to make sense of the course content by connecting it with HRV analysis. Hence, this activity motivated a reflective observation of their previous concrete experience.

During the third week, students worked on the experimental protocol to be implemented later. For students in the control group, this meant reviewing and understanding a pre-designed experimental protocol that included the materials and methods required for the HRV analysis of the ECG signals available in the public dataset. For students in the experimental group, this involved selecting the scenario where they desired to acquire their physiological and behavioural signals (e.g., sleep or sport tracking) and defining the materials and methods required for analysing those signals. Therefore, this activity represented an abstract conceptualisation of the course content and learning outcomes.

Finally, during the fourth and fifth weeks, students implemented the experimental protocol reviewed or designed before, representing the active experimentation stage of Kolb’s experiential learning cycle. To facilitate HRV analysis, students were given a Jupyter Notebook (i.e., a web-based notebook combining live code, equations, narrative text, and visualisations) containing Python code to run an exemplary HRV analysis on real ECG signals using the Python toolboxes BioSPPy and pyHRV [66,67]. Students were encouraged to run this notebook on Google Colaboratory, a cloud-based collaborative development environment. This environment provided them with a virtual experiential learning space where they could collaborate synchronously or asynchronously to adapt the code to fit their respective purposes.
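To illustrate the kind of time-domain HRV analysis involved, the following is a minimal sketch that uses only the Python standard library. It is not the notebook given to the students, and it does not use BioSPPy or pyHRV. The function names and the R-peak times are hypothetical, and R-peak detection is assumed to have been done already.

```python
import math
import statistics


def rr_intervals_ms(r_peak_times_s):
    """Convert R-peak times (seconds) into successive RR intervals (milliseconds)."""
    return [(t1 - t0) * 1000.0 for t0, t1 in zip(r_peak_times_s, r_peak_times_s[1:])]


def time_domain_hrv(rr_ms):
    """Return common time-domain HRV indices from RR intervals given in ms."""
    if len(rr_ms) < 3:
        raise ValueError("need at least three RR intervals")
    # Differences between successive RR intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    mean_rr = statistics.fmean(rr_ms)
    return {
        "mean_rr_ms": mean_rr,
        # Approximate mean heart rate derived from the mean RR interval.
        "mean_hr_bpm": 60000.0 / mean_rr,
        # SDNN: sample standard deviation of the RR intervals.
        "sdnn_ms": statistics.stdev(rr_ms),
        # RMSSD: root mean square of successive differences.
        "rmssd_ms": math.sqrt(statistics.fmean([d * d for d in diffs])),
        # pNN50: share of successive differences larger than 50 ms.
        "pnn50_pct": 100.0 * sum(abs(d) > 50.0 for d in diffs) / len(diffs),
    }


# Hypothetical R-peak times in seconds (illustrative only, not study data).
r_peaks = [0.00, 0.80, 1.62, 2.40, 3.25, 4.05]
for name, value in time_domain_hrv(rr_intervals_ms(r_peaks)).items():
    print(f"{name}: {value:.1f}")
```

Frequency-domain indices would additionally require resampling the RR series and estimating its power spectrum, which is omitted here for brevity.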

Students were expected to gradually document the outcomes of these activities in a scientific research report following the IMRaD format [68]. Hence, students were encouraged to complete the Introduction (I), Methods (M), and Results and Discussion (RaD) sections by the end of weeks 2, 3, and 5, respectively. This report was graded and used to assess students’ learning outcomes. Further details are provided below.

3.3. Learning Experience Assessment

Quantitative and qualitative data were collected from students before and after the learning experience above (weeks one and five, respectively) using the Student Assessment of their Learning Gains (SALG) [69,70]. The SALG instrument was initially designed to collect data on student-reported understanding, skills, and attitudes towards course content and learning outcomes. However, for this course, the SALG instrument was tailored to inquire only about students’ attitudes. In particular, students were asked to rate their:

- Perception of the relevance of this course’s content and their learning outcomes to their education and future professional activity (hereafter simply referred to as “relevance”);

- Interest in learning this course content (hereafter simply referred to as “interest”);

- Motivation to learn this course content (hereafter simply referred to as “motivation”).

Therefore, the tailored SALG instrument contains three Likert-scale items. Additionally, it includes two open-ended questions allowing students to comment on these elements.

Furthermore, the program-specific competency fostered in this course (i.e., biomedical data analysis and modelling) was assessed using the institutional rubric from Tecnológico de Monterrey. This rubric defines the four levels of achievement as follows:

- Incipient: the student performs incorrect data analysis using a reduced number of techniques and computational tools, reaching incoherent conclusions that he/she/they report(s) in a confusing way.

- Basic: the student performs a limited data analysis using some techniques and computational tools, reaching coherent conclusions that he/she/they report(s) acceptably.

- Solid: the student performs a correct data analysis using several techniques and computational tools, reaching relevant conclusions that he/she/they report(s) clearly.

- Outstanding: the student performs a rigorous data analysis using various techniques and computational tools, reaching original conclusions that he/she/they report(s) precisely.
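As a small illustration of how these rubric levels can be summarised, the sketch below assumes an ordinal coding from 1 (incipient) to 4 (outstanding), which is consistent with the medians reported in the results. The function name and the example ratings are hypothetical and are not the study's data.

```python
import statistics

# Ordinal coding of the four rubric levels (1 = lowest, 4 = highest); assumed coding.
RUBRIC_LEVELS = {"incipient": 1, "basic": 2, "solid": 3, "outstanding": 4}


def summarise_levels(levels):
    """Return the median code and the share (%) of students at each level."""
    codes = [RUBRIC_LEVELS[level.lower()] for level in levels]
    shares = {
        name: 100.0 * codes.count(code) / len(codes)
        for name, code in RUBRIC_LEVELS.items()
    }
    return statistics.median(codes), shares


# Hypothetical ratings for a handful of students (illustrative only).
ratings = ["Basic", "Incipient", "Solid", "Basic", "Outstanding"]
median_code, shares = summarise_levels(ratings)
print("median level code:", median_code)
print("share per level (%):", shares)
```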

Moreover, students’ academic performance was assessed using their grades from an end-of-period written exam. This exam contained four items assessing the students’ knowledge and ability to apply analytical methods and computational tools to analyse signals (e.g., the Fourier transform and MATLAB). The statistical analysis also considered the grades for the research report described above and the course’s cumulative grade for period 1. These grades reflect both the students’ individual work and teamwork.

Finally, the students’ opinions about their learning experience were assessed using the Student Opinion Survey (SOS), a voluntary, anonymous internal survey with six closed-ended items and one open-ended question. Closed-ended items use a 10-point rating scale, with 10 representing the highest value (e.g., the highest level of agreement or satisfaction). The open-ended question allows students to comment on their learning experience in the course. For this study, only two closed-ended items were considered, as these were deemed the most relevant for assessing the students’ learning experiences. Namely:

- EMRET—The professor challenged me to do my best (develop new skills, new concepts and ideas, think differently, etc.)

- EMREC—Overall, my learning experience was…

A mean above nine in the EMREC question is considered an indicator of the optimal performance of the professor as the facilitator of the students’ learning experiences.

Even though the SOS addresses the course as a whole, the only difference between the course delivered to the control and experimental groups was the learning experience reported here. Thus, it is reasonable to think that the observed differences in SOS responses are due to this experience.

All students signed an informed consent form notifying them of the aggregate use of anonymised data collected through the above instruments for research-only purposes. Additionally, the consent form informed the students of their right to skip the SALG and SOS instruments for any reason and without any consequence to their evaluation.

3.4. Statistical Analysis

Descriptive statistics for each Likert-scale item in the SALG instrument were computed for each group (i.e., control and experimental), both before and after the learning experience above. Namely, the median and the interquartile range (IQR) were computed. The statistical significance of the differences between groups and between the measurements before and after the learning experience was evaluated using the Wilcoxon rank sum test, a nonparametric test suitable for small samples and ordered categorical data. A p-value < 0.05 was accepted as evidence of statistical significance. Moreover, Spearman’s correlation analysis was used to investigate potential associations between relevance, interest, and motivation. Spearman’s correlation is a nonparametric measure of rank correlation, i.e., the statistical dependence between the rankings of two variables. Thus, it is also suited for ordered categorical data (e.g., Likert-scale ratings).
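The descriptive statistics and the between-group test can be sketched as follows. This is an illustration in standard-library Python, not the R code used in the study. It assumes the two-sided normal approximation with tie and continuity corrections, which is a common choice for tied Likert data. The function names and example responses are hypothetical.

```python
import math
import statistics


def median_iqr(values):
    """Median and interquartile range (Q3 - Q1) of a sample."""
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q2, q3 - q1


def midranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank sum test (normal approximation with tie and
    continuity corrections). Returns the W statistic and the p-value."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    w = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    # Tie correction: sum of t^3 - t over groups of tied observations.
    tie_term = sum(t ** 3 - t for t in (pooled.count(v) for v in set(pooled)))
    var = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var == 0:
        return w, 1.0  # all observations are identical
    centred = w - n1 * n2 / 2
    z = (centred - math.copysign(0.5, centred)) / math.sqrt(var) if centred else 0.0
    return w, min(1.0, math.erfc(abs(z) / math.sqrt(2)))


# Hypothetical 5-point Likert responses (illustrative only, not study data).
control = [5, 4, 3, 5, 4, 2, 5, 4]
experimental = [5, 4, 4, 5, 4, 4, 5, 5]
print("control median, IQR:", median_iqr(control))
print("experimental median, IQR:", median_iqr(experimental))
w, p = rank_sum_test(control, experimental)
print(f"W = {w}, p = {p:.3f}, significant at 0.05: {p < 0.05}")
```

With samples this small and heavily tied, the normal approximation is only a rough guide, so the resulting p-values should be read with caution.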

Descriptive statistics for the remaining assessments (i.e., competency evaluation, grades and SOS) were also computed for each group (i.e., control and experimental). Namely, the median and the interquartile range (IQR) were calculated for competency evaluations and grades. The statistical significance of the differences between groups for these data was evaluated using the Wilcoxon rank sum test, given the small sample size and the non-normal distribution of the data. Again, a p-value < 0.05 was accepted as evidence of statistical significance.

Statistical analysis was performed using R (version 3.6.3, R Foundation for Statistical Computing, Vienna, Austria) and RStudio (2022.02.3+492 “Prairie Trillium” Release, RStudio, PBC, Boston, MA, USA) [71,72].

4. Results

4.1. Relevance, Interest, and Motivation

4.1.1. Before the Learning Experience

Forty-six students responded to the SALG instrument before the learning experience, resulting in a 95.8% response rate (96.2% and 95.5% for the control and experimental groups, respectively). The median and IQR for the variables “interest” and “motivation” were identical for both groups, while a slight difference in median values was observed for the variable “relevance”. However, this difference is not statistically significant (Table 3).

Table 3.

Descriptive statistics by group for the SALG instrument applied before the learning experience.

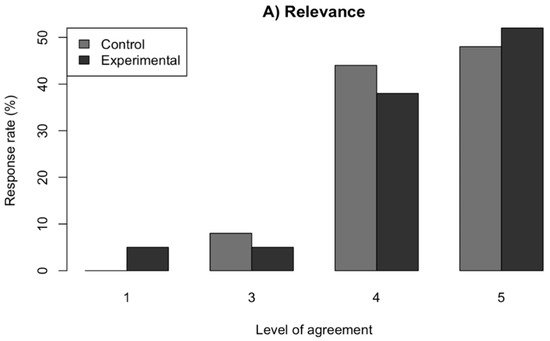

Overall, both groups highly rated their initial perceived relevance, interest, and motivation concerning the course content and learning outcomes (Figure 3). This may be because this course is one of the first disciplinary courses that BME undergraduate students take during their studies. Indeed, their comments via the SALG instrument express great excitement and expectations around this course.

Figure 3.

Response rate (%) by level of agreement for the SALG instrument applied before the learning experience: (A) relevance, (B) interest, and (C) motivation.

The correlation analysis revealed a moderate to strong positive correlation between “relevance” and “interest” (Spearman’s rho = 0.60), a moderate positive correlation between “relevance” and “motivation” (Spearman’s rho = 0.52), and a strong positive correlation between “interest” and “motivation” (Spearman’s rho = 0.78).
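For readers unfamiliar with the statistic, Spearman’s rho can be obtained as the Pearson correlation of the midranks of the two variables. The sketch below is a standard-library Python illustration, not the R code used in the study. The function names and the example responses are hypothetical.

```python
import math


def midranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(a, b):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    # Undefined (division by zero) if either sequence is constant.
    return cov / math.sqrt(var_a * var_b)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on midranks."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    return pearson(midranks(x), midranks(y))


# Hypothetical paired Likert responses from the same students (illustrative only).
interest = [5, 4, 5, 3, 4, 5, 2, 4]
motivation = [5, 4, 4, 3, 4, 5, 3, 5]
print(f"Spearman's rho = {spearman_rho(interest, motivation):.2f}")
```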

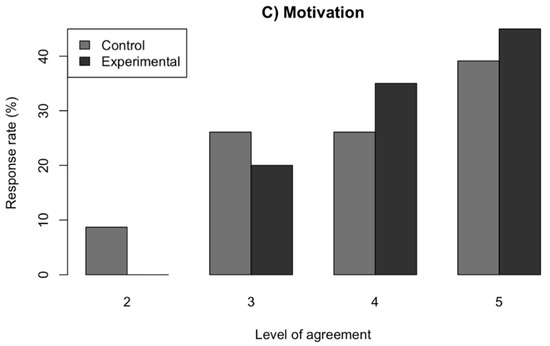

4.1.2. After the Learning Experience

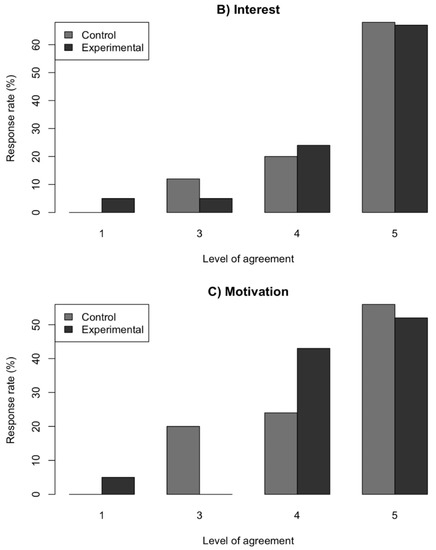

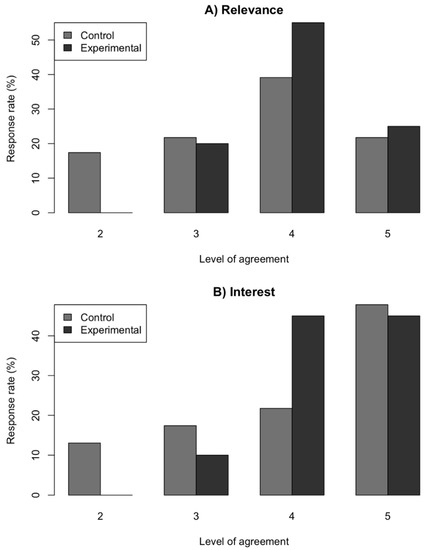

Forty-three students responded to the SALG instrument after the learning experience, amounting to an 89.6% response rate (88.5% and 90.9% for the control and experimental groups, respectively). The median values for the variables “relevance”, “interest”, and “motivation” were identical for both groups. However, a lower dispersion (i.e., IQR) was observed for the experimental group compared to the control group, suggesting a stronger within-group agreement about their attitude towards the course content and learning outcomes (Table 4). Moreover, a consistently larger proportion of responses from the experimental group fell in the higher levels (four and five) compared to the control group (Figure 4).

Table 4.

Descriptive statistics by group from the SALG instrument applied after the learning experience.

Figure 4.

Response rate (%) by level of agreement for the SALG instrument applied after the learning experience: (A) relevance, (B) interest, and (C) motivation.

The correlation analysis revealed a weak positive correlation between “relevance” and “interest” (Spearman’s rho = 0.33), a weak to moderate positive correlation between “relevance” and “motivation” (Spearman’s rho = 0.40), and a strong positive correlation between “interest” and “motivation” (Spearman’s rho = 0.81).

4.1.3. Before Versus After the Learning Experience

Both groups exhibited a lower median value for the variables “interest” and “motivation” after the learning experience than before the experience, yet this difference was not statistically significant (Table 5 and Table 6). Again, a lower dispersion (i.e., IQR) was observed for the experimental group compared to the control group.

Table 5.

Descriptive statistics before and after the learning experience for the control group.

Table 6.

Descriptive statistics before and after the learning experience for the experimental group.

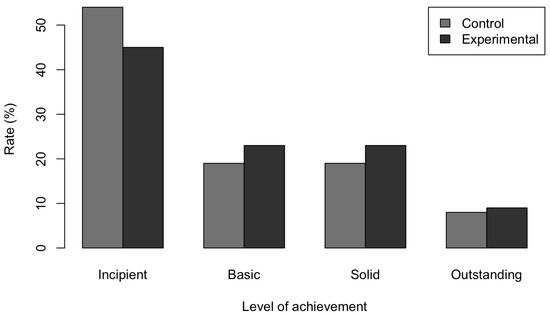

4.2. Program-Specific Competency Assessment

The experimental group exhibited a higher median value for the level of achievement of the assessed program-specific competency compared to the control group. However, this difference is not statistically significant (Table 7). In terms of the median, the experimental group reached a “basic” level of achievement of the assessed competency (median = 2), whereas the control group reached an “incipient” level of achievement (median = 1). Moreover, a larger proportion of higher levels of achievement (i.e., basic, solid, and outstanding) was observed in the experimental group than in the control group (Figure 5).

Table 7.

Descriptive statistics by group from the program-specific competency assessment.

Figure 5.

Percentage of students by level of achievement of the assessed competency.

4.3. Student Grades

The experimental group exhibited higher grades in the written exam than the control group, whereas the control group showed higher grades than the experimental group for the research report and the cumulative grade. None of these differences is statistically significant (Table 8).

Table 8.

Descriptive statistics by group for student grades.

4.4. Student Opinion Survey

Only nineteen students responded to the SOS, amounting to a 39.6% response rate (30.8% and 50% for the control and experimental groups, respectively). This response rate is consistent with the generally low levels of student participation in this voluntary survey.
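The response rates reported for the three survey applications can be cross-checked with a few lines of Python. The group sizes (26 and 22 students) and the per-group responder counts used below are not stated in this section. They are inferred here from the reported percentages and totals and should be read as assumptions.

```python
# Group sizes inferred from the reported percentages (assumption, not stated here).
ENROLLED = {"control": 26, "experimental": 22}


def response_rates(responders):
    """Response rate (%) per group and overall, rounded to one decimal place."""
    rates = {g: 100.0 * responders[g] / ENROLLED[g] for g in ENROLLED}
    rates["overall"] = 100.0 * sum(responders.values()) / sum(ENROLLED.values())
    return {g: round(r, 1) for g, r in rates.items()}


# Per-group responder counts inferred from the reported totals (46, 43, and 19).
surveys = {
    "SALG before": {"control": 25, "experimental": 21},
    "SALG after": {"control": 23, "experimental": 20},
    "SOS": {"control": 8, "experimental": 11},
}
for name, responders in surveys.items():
    print(name, response_rates(responders))
```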

The median and IQR for the item “EMRET” were identical for both groups, while a slight difference in median values was observed for the item “EMREC”. However, this difference is not statistically significant (Table 9). Again, a lower dispersion (i.e., IQR) was observed for the experimental group compared to the control group.

Table 9.

Descriptive statistics by group for the Student Opinion Survey.

5. Discussion

5.1. Main Findings

This work proposed using blended learning spaces combining direct instruction, experiential learning in daily-life scenarios, and cloud-based collaborative development environments in a “biomedical signals and systems analysis” course. This proposal incorporated wearable devices to enable experiential learning activities to collect physiological and behavioural data in daily-life scenarios. In doing so, we intended to increase students’ perceived relevance, interest, and motivation in the course content and learning outcomes to improve their competency development.

Interestingly, both groups rated their initial and final perceived relevance, interest, and motivation highly concerning the course content and learning outcomes (Figure 3 and Figure 4). Indeed, our statistical testing did not reveal any significant difference between groups or before and after the learning experience. This might be because this course is one of the first disciplinary courses that BME undergraduate students take during their studies. Accordingly, the students expressed excitement about the course in their responses to the SALG’s open-ended questions. However, despite this shared excitement, a consistently larger proportion of responses from the experimental group than from the control group fell in the higher levels (four and five) after the learning experience (Figure 4). This led to a lower dispersion (i.e., IQR) for relevance, interest, and motivation in the experimental group compared to the control group, suggesting a stronger within-group agreement about their attitude towards the course content and learning outcomes (Table 4). This suggests a positive impact of the proposed learning experience on students’ perceived relevance and motivation. Nevertheless, further work is required to support any conclusion, as this observation might have been produced by chance.

Furthermore, we proposed a three-dimensional framework to facilitate the assessment and interpretation of the results regarding students’ academic performance (i.e., grades), competency development, and satisfaction with their learning experience. The dimensions of this framework are:

- Effectiveness measured in terms of students’ grades and their competency level achievement;

- Efficiency measured by the resources involved in the design, development, and execution of the learning experience and the achieved learning outcomes;

- Efficacy measured by the students’ and professor’s satisfaction derived from the learning experience.

Based on this framework, we argue the following about the learning experience proposed:

- Effectiveness: there is no statistically significant evidence of increased levels of learning (as measured by grades) and student competency development due to the learning experience. However, the evidence suggests a positive impact of the learning experience on competency development. Namely, a larger proportion of higher levels of achievement (i.e., basic, solid, and outstanding) was observed in the experimental group than in the control group (Figure 5). Yet, further research is required to support or disprove this observation.

- Efficiency: the proposed learning experience was less efficient than the original one, as it involved monetary and time investments for the acquisition of wearable devices and the preparation/production of the learning experience, respectively. Given the learning outcomes above, this investment might not be justified. As previously mentioned, the high cost of commercial wearable devices has been an obstacle to their adoption in higher education for teaching purposes [48].

- Efficacy: there is no significant evidence of a higher efficacy of the proposed learning experience as measured by the SOS. However, the open-ended comments from the SALG suggested a positive impact of the learning experience on the students’ opinions about their overall learning experience in the course. These opinions align with previous studies on incorporating wearables in classroom activities, where students expressed increased satisfaction, engagement, and intellectual stimulation [46,51]. Moreover, the professor also observed more engagement and motivation among the students who used the wearables. This observation coincides with a previous study on the perception of faculty members about the potential of incorporating ICTs in higher education [44]. Furthermore, the professor reported an improved teaching experience, owing to the intellectual challenge of mentoring students under experiential learning approaches and the motivation derived from it.

In summary, the evidence gathered in this study suggests that incorporating blended learning spaces that combine direct instruction, experiential learning in daily-life scenarios enabled by wearable devices, and cloud-based collaborative development environments may have a positive impact on students’ perceptions of their learning experience concerning relevance, motivation, and interest. However, the evidence is not statistically significant, as there is only a reduction in the variability of their responses. Thus, further research must confirm this potential impact. This confirmation is required in other instances of learning experiences, given the monetary and time investment this pedagogical approach would need if it were to be implemented at a larger scale.

5.2. Limitations

The study reported in this paper has some limitations. First, although the SALG instrument applied before and after the educational intervention explored the same variables (i.e., relevance, interest, and motivation), by design, its questions had slight grammatical differences due to their time perspectives (i.e., the present tense for the survey applied before the intervention and past tense for the one used after it). This difference might have had an impact on the way students responded to the questions.

Furthermore, the relatively small sample size and the low response rate for the SOS made it difficult to draw solid conclusions about the impact of the proposed educational intervention on the students’ attitudes towards learning, learning achievement, and overall satisfaction with their learning experience.

6. Conclusions

This work contributes to creating active learning experiences for BME undergraduate students to enhance their perceived relevance of, interest in, and motivation for the course content, aiming to improve their learning outcomes and development of competencies. Considering the significance of “learning by doing” under the umbrella of the Tec21 Model, the pedagogic approaches undertaken must improve the performance of the learning experiences. Even though it was impossible to prove this approach’s higher effectiveness and efficacy, the evidence gathered in the present study suggests a positive impact on these variables. Nevertheless, this impact involves a higher financial cost for the acquisition of wearable devices, as well as a greater workload and more time spent by the professor on the design, preparation, and execution of the learning experience. Therefore, further research is needed to confirm the positive impact and justify the investment required to scale the educational intervention.

Author Contributions

Conceptualization, L.M., A.S.-D., D.E.S.-N. and L.C.-Z.; methodology, L.M., A.S.-D., D.E.S.-N. and L.C.-Z.; software, L.C.-Z.; formal analysis, L.M.; investigation, L.M.; resources, L.M.; data curation, L.M.; writing—original draft preparation, L.M., A.S.-D. and D.E.S.-N.; writing—review and editing, L.M., A.S.-D., D.E.S.-N. and L.C.-Z.; visualization, L.M.; supervision, L.M.; project administration, L.M.; funding acquisition, L.M., A.S.-D., D.E.S.-N. and L.C.-Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by NOVUS, Institute for the Future of Education, Tecnologico de Monterrey, Mexico, grant number N20-166. The APC was funded by the Writing Lab, Institute for the Future of Education, Tecnologico de Monterrey, Mexico.

Institutional Review Board Statement

Ethical review and approval were waived for this study because the review board deemed it “Research without risk”, i.e., studies using retrospective documentary research techniques and methods, as well as those that do not involve any intervention or intended modification of the physiological, psychological, and social variables of study participants. These include questionnaires, interviews, reviews of clinical records, and others in which participants are not identified and sensitive aspects of their behaviour are not addressed.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- International Federation of Medical and Biological Engineers. IFMBE’s Strategic Plan. Available online: https://ifmbe.org/about-ifmbe/strategic-plan/ (accessed on 29 November 2021).

- Velazquez Berumen, A.; Flood, M.; Rodriguez-Rodriguez, D.; Magjarevic, R.; Smith, M. Global Dimensions of Biomedical Engineering. In Human Resources for Medical Devices, the Role of Biomedical Engineers; World Health Organization: Geneva, Switzerland, 2017; pp. 30–52. ISBN 978-92-4-156547-9.

- Bureau of Labor Statistics, U.S. Department of Labor. Occupational Outlook Handbook: Bioengineers and Biomedical Engineers. Available online: https://www.bls.gov/ooh/architecture-and-engineering/biomedical-engineers.htm#tab-1 (accessed on 29 November 2021).

- Sloane, E.; Hosea, F. Role of Biomedical Engineers in the Evolution of Health-Care Systems. In Human Resources for Medical Devices, the Role of Biomedical Engineers; World Health Organization: Geneva, Switzerland, 2017; pp. 127–142. ISBN 978-92-4-156547-9.

- Linsenmeier, R.A. What Makes a Biomedical Engineer? IEEE Eng. Med. Biol. Mag. 2003, 22, 32–38.

- Voigt, H.F.; Hosea, F.; Nkuma-Udah, K.I.; Pallikarakis, N.; Lin, K.P.; Secca, M.; Zequera, M. Education and Training. In Human Resources for Medical Devices, the Role of Biomedical Engineers; World Health Organization: Geneva, Switzerland, 2017; pp. 39–52. ISBN 978-92-4-156547-9.

- IEEE Engineering in Medicine and Biology Society. Designing a Career in Biomedical Engineering. Available online: https://www.embs.org/wp-content/uploads/2016/01/EMB-CareerGuide.pdf (accessed on 23 August 2022).

- Gibbons, M. Higher Education Relevance in the 21st Century 1998; World Bank: Washington, DC, USA, 1998.

- Farrar, E.J. Implementing a Design Thinking Project in a Biomedical Instrumentation Course. IEEE Trans. Educ. 2020, 63, 240–245.

- Harris, T.R.; Brophy, S.P. Challenge-Based Instruction in Biomedical Engineering: A Scalable Method to Increase the Efficiency and Effectiveness of Teaching and Learning in Biomedical Engineering. Med. Eng. Phys. 2005, 27, 617–624.

- Martin, T.; Rivale, S.D.; Diller, K.R. Comparison of Student Learning in Challenge-Based and Traditional Instruction in Biomedical Engineering. Ann. Biomed. Eng. 2007, 35, 1312–1323.

- Salinas-Navarro, D.E.; Rodriguez Calvo, E.Z.; Garay Rondero, C.L. Expanding the Concept of Learning Spaces for Industrial Engineering Education. In Proceedings of the 2019 IEEE Global Engineering Education Conference (EDUCON), Dubai, United Arab Emirates, 8–11 April 2019; pp. 669–678.

- Salinas-Navarro, D.E.; Garay-Rondero, C.L.; Rodriguez Calvo, E.Z. Experiential Learning Spaces for Industrial Engineering Education. In Proceedings of the 2019 IEEE Frontiers in Education Conference (FIE), Covington, KY, USA, 16–19 October 2019; pp. 1–9.

- Hidi, S. Interest: A Unique Motivational Variable. Educ. Res. Rev. 2006, 1, 69–82.

- Hidi, S.; Renninger, K.A. The Four-Phase Model of Interest Development. Educ. Psychol. 2006, 41, 111–127.

- Harackiewicz, J.M.; Smith, J.L.; Priniski, S.J. Interest Matters: The Importance of Promoting Interest in Education. Policy Insights Behav. Brain Sci. 2016, 3, 220–227.

- Harackiewicz, J.M.; Durik, A.M.; Barron, K.E.; Linnenbrink-Garcia, L.; Tauer, J.M. The Role of Achievement Goals in the Development of Interest: Reciprocal Relations between Achievement Goals, Interest, and Performance. J. Educ. Psychol. 2008, 100, 105–122.

- Lalley, J.P.; Miller, R.H. The Learning Pyramid: Does It Point Teachers in the Right Direction? Education 2007, 128, 64–79.

- Kolb, D.A.; Fry, R.E. Toward an Applied Theory of Experiential Learning; Working Paper; MIT Alfred P. Sloan School of Management: Cambridge, MA, USA, 1974.

- Healey, M.; Jenkins, A. Kolb’s Experiential Learning Theory and Its Application in Geography in Higher Education. J. Geogr. 2000, 99, 185–195.

- Kolb, D.A. Experiential Learning: Experience as the Source of Learning and Development; Prentice-Hall: Englewood Cliffs, NJ, USA, 1984; ISBN 978-0-13-295261-3.

- Reyes, A.; Zarama, R. The Process of Embodying Distinctions—A Re-Construction of the Process of Learning. Cybern. Hum. Knowing 1998, 5, 19–33.

- Freeman, S.; Eddy, S.L.; McDonough, M.; Smith, M.K.; Okoroafor, N.; Jordt, H.; Wenderoth, M.P. Active Learning Increases Student Performance in Science, Engineering, and Mathematics. Proc. Natl. Acad. Sci. USA 2014, 111, 8410–8415.

- Aji, C.A.; Khan, M.J. The Impact of Active Learning on Students’ Academic Performance. JSS 2019, 7, 204–211.

- Bergsteiner, H.; Avery, G.C. The Twin-Cycle Experiential Learning Model: Reconceptualising Kolb’s Theory. Stud. Contin. Educ. 2014, 36, 257–274.

- Bergsteiner, H.; Avery, G.C.; Neumann, R. Kolb’s Experiential Learning Model: Critique from a Modelling Perspective. Stud. Contin. Educ. 2010, 32, 29–46.

- Thomas, H. Learning Spaces, Learning Environments and the Dis‘Placement’ of Learning. Br. J. Educ. Technol. 2010, 41, 502–511.

- Tecnologico de Monterrey. Model Tec21. Available online: https://tec.mx/en/model-tec21 (accessed on 10 December 2021).

- Alstete, J.W.; Beutell, N.J. Designing Learning Spaces for Management Education: A Mixed Methods Research Approach. JMD 2018, 37, 201–211.

- Long, P.D.; Ehrmann, S.C. Future of the Learning Space: Breaking Out of the Box. EDUCAUSE Rev. 2005, 40, 42–58.

- Oblinger, D. (Ed.) Learning Spaces; An EDUCAUSE e-Book; EDUCAUSE: Washington, DC, USA; Boulder, CO, USA, 2017; ISBN 978-0-9672853-7-5.

- Hernandez-de-Menendez, M.; Morales-Menendez, R. Technological Innovations and Practices in Engineering Education: A Review. Int. J. Interact. Des. Manuf. 2019, 13, 713–728.

- Geng, F.; Srinivasan, S.; Gao, Z.; Bogoslowski, S.; Rajabzadeh, A.R. An Online Approach to Project-Based Learning in Engineering and Technology for Post-Secondary Students. In New Realities, Mobile Systems and Applications; Auer, M.E., Tsiatsos, T., Eds.; Lecture Notes in Networks and Systems; Springer International Publishing: Cham, Switzerland, 2022; Volume 411, pp. 627–635. ISBN 978-3-030-96295-1.

- Gallego, M.D.; Bueno, S.; Noyes, J. Second Life Adoption in Education: A Motivational Model Based on Uses and Gratifications Theory. Comput. Educ. 2016, 100, 81–93.

- Gross, S.; Kim, M.; Schlosser, J.; Lluch, D.; Mohtadi, C.; Schneider, D. Fostering Computational Thinking in Engineering Education: Challenges, Examples, and Best Practices. In Proceedings of the 2014 IEEE Global Engineering Education Conference (EDUCON), Istanbul, Turkey, 3–5 April 2014; pp. 450–459.

- Potkonjak, V.; Gardner, M.; Callaghan, V.; Mattila, P.; Guetl, C.; Petrović, V.M.; Jovanović, K. Virtual Laboratories for Education in Science, Technology, and Engineering: A Review. Comput. Educ. 2016, 95, 309–327.

- Vergara, D.; Antón-Sancho, Á.; Dávila, L.P.; Fernández-Arias, P. Virtual Reality as a Didactic Resource from the Perspective of Engineering Teachers. Comp. Applic. Eng. Educ. 2022, 30, 1086–1101.

- Bastida-Escamilla, E.; Elias-Espinosa, M.C.; Franco-Herrera, F.; Covarrubias-Rodríguez, M. Bridging Theory and Practice Using Facebook: A Case Study. Educ. Sci. 2022, 12, 355.

- Merchant, Z.; Goetz, E.T.; Cifuentes, L.; Keeney-Kennicutt, W.; Davis, T.J. Effectiveness of Virtual Reality-Based Instruction on Students’ Learning Outcomes in K-12 and Higher Education: A Meta-Analysis. Comput. Educ. 2014, 70, 29–40.

- Dicheva, D.; Dichev, C.; Agre, G.; Angelova, G. Gamification in Education: A Systematic Mapping Study. Educ. Technol. Soc. 2015, 18, 75–88.

- Ferreira, M.J.; Moreira, F.; Pereira, C.S.; Durão, N. The Role of Mobile Technologies in the Teaching/Learning Process Improvement in Portugal. In Proceedings of the ICERI2015 Conference, Sevilla, Spain, 16 November 2015; p. 12.

- Sung, Y.-T.; Chang, K.-E.; Liu, T.-C. The Effects of Integrating Mobile Devices with Teaching and Learning on Students’ Learning Performance: A Meta-Analysis and Research Synthesis. Comput. Educ. 2016, 94, 252–275.

- Zweekhorst, M.B.M.; Maas, J. ICT in Higher Education: Students Perceive Increased Engagement. J. Appl. Res. High. Educ. 2015, 7, 2–18.

- Cuetos Revuelta, M.J.; Grijalbo Fernández, L.; Argüeso Vaca, E.; Escamilla Gómez, V.; Ballesteros Gómez, R. Potencialidades de las TIC y su papel fomentando la creatividad: Percepciones del profesorado. RIED 2020, 23, 287.

- Smith, C. What Is Wearable Tech? Everything You Need to Know Explained. Available online: https://www.wareable.com/wearable-tech/what-is-wearable-tech-753 (accessed on 11 August 2022).

- Khosravi, S.; Bailey, S.G.; Parvizi, H.; Ghannam, R. Learning Enhancement in Higher Education with Wearable Technology. arXiv 2021, arXiv:2111.07365. [Google Scholar]

- Smuck, M.; Odonkor, C.A.; Wilt, J.K.; Schmidt, N.; Swiernik, M.A. The Emerging Clinical Role of Wearables: Factors for Successful Implementation in Healthcare. NPJ Digit. Med. 2021, 4, 45. [Google Scholar] [CrossRef]

- Hernandez-de-Menendez, M.; Escobar Díaz, C.; Morales-Menendez, R. Technologies for the Future of Learning: State of the Art. Int. J. Interact. Des. Manuf. 2019, 14, 683–695. [Google Scholar] [CrossRef]

- Prendes Espinosa, M.P.; Cerdán Cartagena, F. Tecnologías avanzadas para afrontar el reto de la innovación educativa. RIED 2021, 24, 35. [Google Scholar] [CrossRef]

- Almusawi, H.A.; Durugbo, C.M.; Bugawa, A.M. Wearable Technology in Education: A Systematic Review. IEEE Trans. Learn. Technol. 2021, 14, 540–554.

- Kanna, S.; von Rosenberg, W.; Goverdovsky, V.; Constantinides, A.G.; Mandic, D.P. Bringing Wearable Sensors into the Classroom: A Participatory Approach. IEEE Signal Process. Mag. 2018, 35, 110–130.

- Griffiths, R. Knowledge Production and the Research–Teaching Nexus: The Case of the Built Environment Disciplines. Stud. High. Educ. 2004, 29, 709–726.

- Noguez, J.; Neri, L. Research-Based Learning: A Case Study for Engineering Students. Int. J. Interact. Des. Manuf. 2019, 13, 1283–1295.

- Vázquez Parra, J.C. How to Trigger Research-Based Learning in the Classroom? Available online: https://observatory.tec.mx/edu-bits-2/research-based-learning (accessed on 28 June 2022).

- Center for Teaching Excellence, University of South Carolina. Linking Teaching and Research. Available online: https://sc.edu/about/offices_and_divisions/cte/teaching_resources/maintainingbalance/link_teaching_research/ (accessed on 28 June 2022).

- Ihmig, F.R.; Gogeascoechea, A.; Schäfer, S.; Lass-Hennemann, J.; Michael, T. Electrocardiogram, Skin Conductance and Respiration from Spider-Fearful Individuals Watching Spider Video Clips (Version 1.0.0). PhysioNet 2020.

- Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a New Research Resource for Complex Physiologic Signals. Circulation 2000, 101, E215–E220.

- Schäfer, S.K.; Ihmig, F.R.; Lara, H.K.A.; Neurohr, F.; Kiefer, S.; Staginnus, M.; Lass-Hennemann, J.; Michael, T. Effects of Heart Rate Variability Biofeedback during Exposure to Fear-Provoking Stimuli within Spider-Fearful Individuals: Study Protocol for a Randomized Controlled Trial. Trials 2018, 19, 184.

- Malik, M.; Bigger, J.T.; Camm, A.J.; Kleiger, R.E.; Malliani, A.; Moss, A.J.; Schwartz, P.J. Heart Rate Variability: Standards of Measurement, Physiological Interpretation, and Clinical Use. Eur. Heart J. 1996, 17, 28.

- Rajendra Acharya, U.; Paul Joseph, K.; Kannathal, N.; Lim, C.M.; Suri, J.S. Heart Rate Variability: A Review. Med. Biol. Eng. Comput. 2006, 44, 1031–1051.

- Saleemi, M.; Anjum, M.; Rehman, M. Ubiquitous Healthcare: A Systematic Mapping Study. J. Ambient. Intell. Human Comput. 2020, 1, 1–26.

- Cárdenas-Robledo, L.A.; Peña-Ayala, A. Ubiquitous Learning: A Systematic Review. Telemat. Inform. 2018, 35, 1097–1132.

- Mota, F.P.; de Toledo, F.P.; Kwecko, V.; Devincenzi, S.; Nunez, P. Ubiquitous Learning: A Systematic Review. In Proceedings of the 2019 IEEE Frontiers in Education Conference, Covington, KY, USA, 16–19 October 2019; pp. 1–9.

- Tarvainen, M.P.; Niskanen, J.-P.; Lipponen, J.A.; Ranta-aho, P.O.; Karjalainen, P.A. Kubios HRV–Heart Rate Variability Analysis Software. Comput. Methods Programs Biomed. 2014, 113, 210–220.

- Pichot, V.; Roche, F.; Celle, S.; Barthélémy, J.-C.; Chouchou, F. HRVanalysis: A Free Software for Analyzing Cardiac Autonomic Activity. Front. Physiol. 2016, 7, 557.

- Instituto de Telecomunicacoes. BioSPPy: Biosignal Processing in Python. Available online: https://biosppy.readthedocs.io/en/latest/ (accessed on 23 August 2022).

- Gomes, P. PyHRV: Python Toolbox for Heart Rate Variability. Available online: https://pyhrv.readthedocs.io/en/latest/ (accessed on 23 August 2022).

- George Mason University Writing Center. Writing an IMRaD Report. Available online: https://writingcenter.gmu.edu/writing-resources/imrad/writing-an-imrad-report (accessed on 23 August 2022).

- Seymour, E.; Wiese, D.; Hunter, A.; Daffinrud, S.M. Creating a Better Mousetrap: On-Line Student Assessment of Their Learning Gains. In National Meeting of the American Chemical Society; National Institute of Science Education, University of Wisconsin-Madison: San Francisco, CA, USA, 2000.

- Scholl, K.; Olsen, H.M. Measuring Student Learning Outcomes Using the SALG Instrument. SCHOLE A J. Leis. Stud. Recreat. Educ. 2014, 29, 37–50.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2020.

- RStudio Team. RStudio: Integrated Development Environment for R; RStudio, Inc.: Boston, MA, USA, 2019.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).