Design and Assessment of Survey in a 360-Degree Feedback Environment for Student Satisfaction Analysis Applied to Industrial Engineering Degrees in Spain

Abstract

1. Introduction

2. Literature Review

3. Methods

Motivation and Objectives

- To increase students' active participation in their own assessment, using an innovative classroom activity to counter the often monotonous and individualistic nature of engineering study [5,15], and to improve their understanding of the evaluator's role and of how items are assessed in engineering subjects.

- To motivate students in their learning process by giving them tools to compare their performance with that of their classmates, so that the evaluation becomes a useful source of information rather than merely a control tool [16].

- To improve the student learning process in a peer-assessment environment, as reported in Martínez-Figueira et al. [17].

- To determine how satisfied students are with the way the evaluation is carried out, and to observe whether the results of the hetero-, peer- and self-assessment differ. To obtain students' feedback on the impartial and anonymous assessment experience.

- To validate the satisfaction survey used in the 360-degree feedback evaluation through a formal statistical process (confirmatory factor analysis), in order to assess its potential standardized use in other subjects of industrial engineering degrees in Spain and abroad.

4. Participants

4.1. Design of the Survey

4.2. Description of the 360-Degree Evaluation Method

5. Results

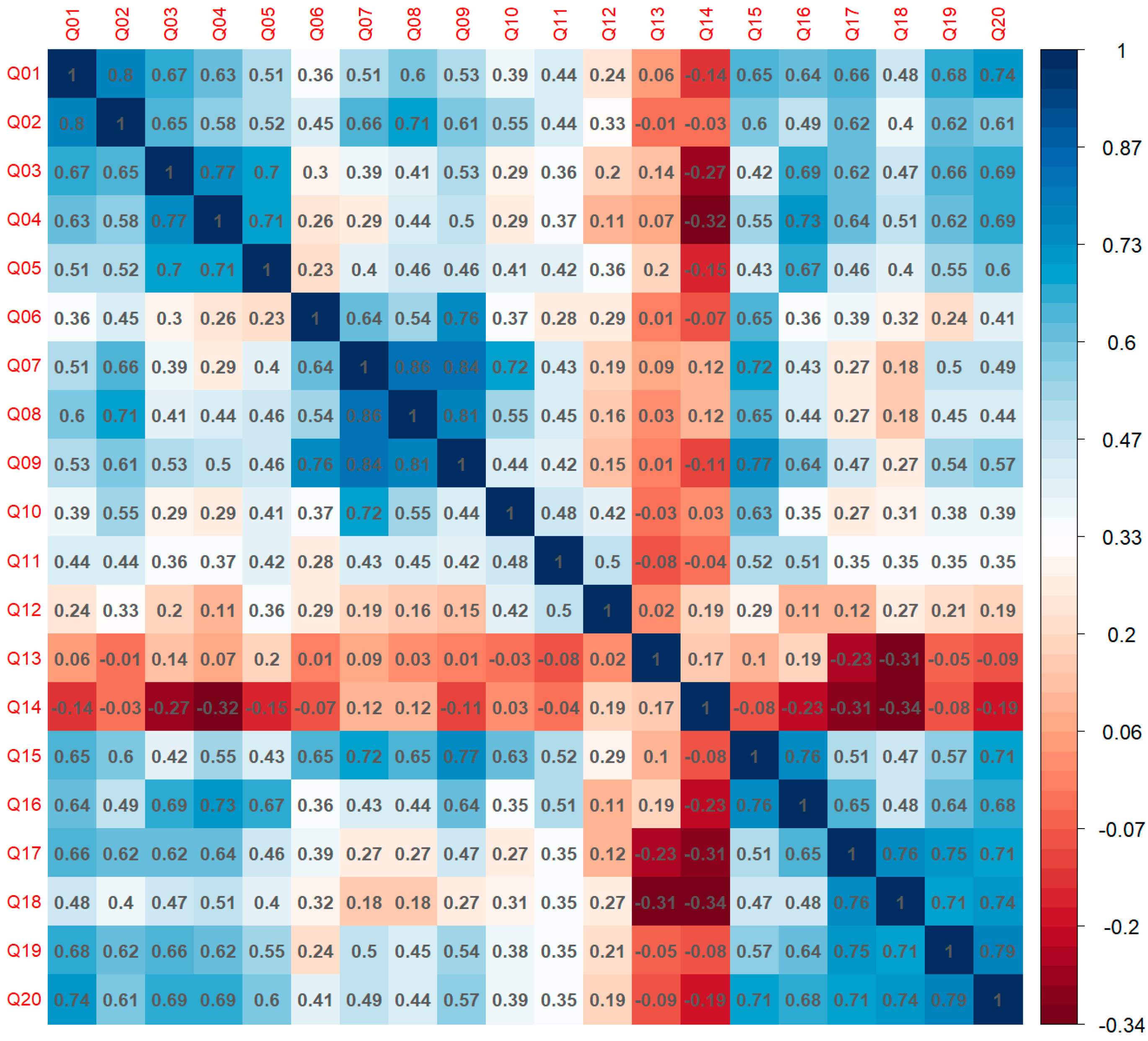

5.1. Confirmatory Factor Analysis

5.2. Internal Consistency of the Survey

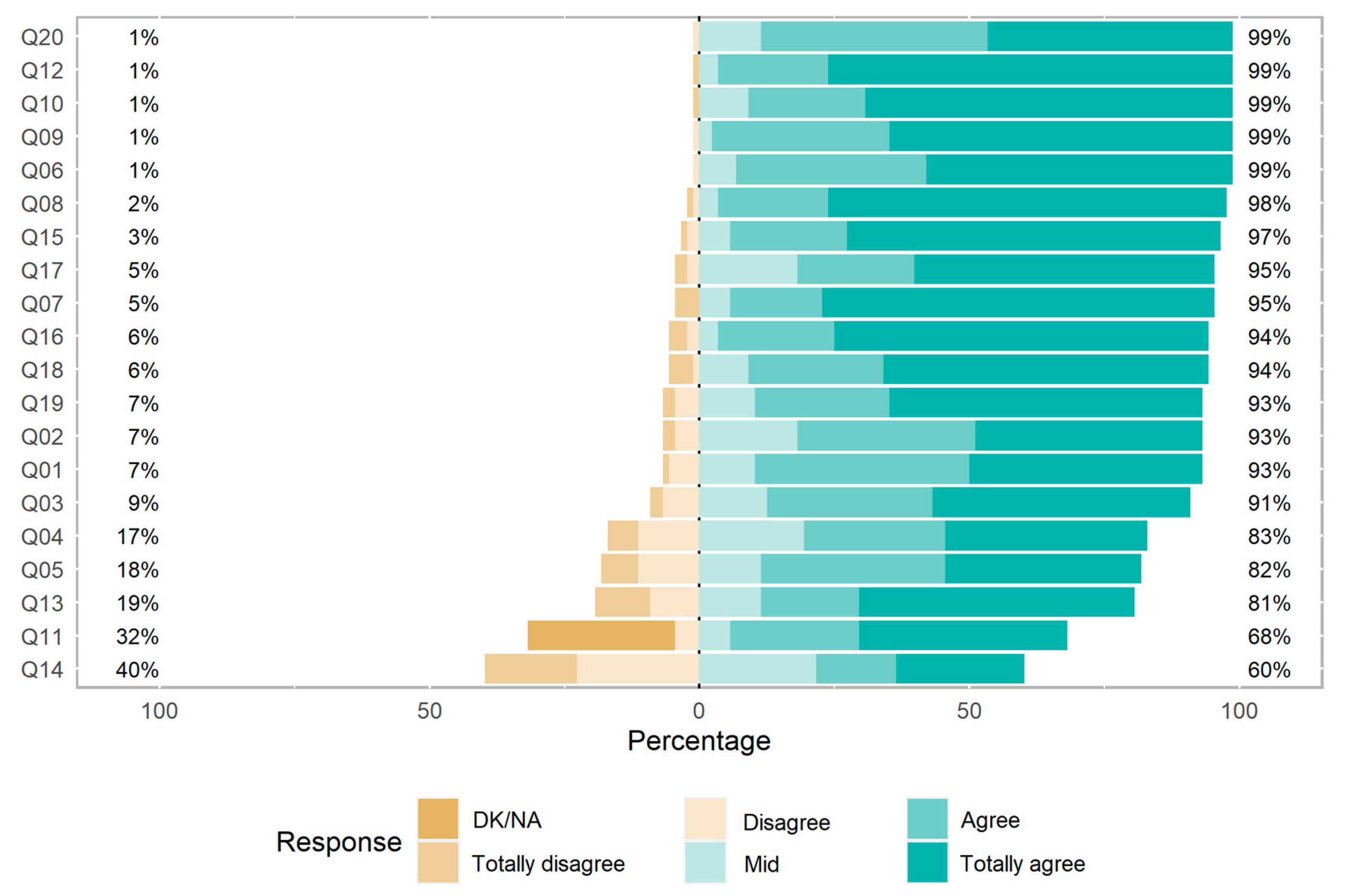

5.3. Overall Analysis of the Sample

5.4. Analysis of the Sample by Groups

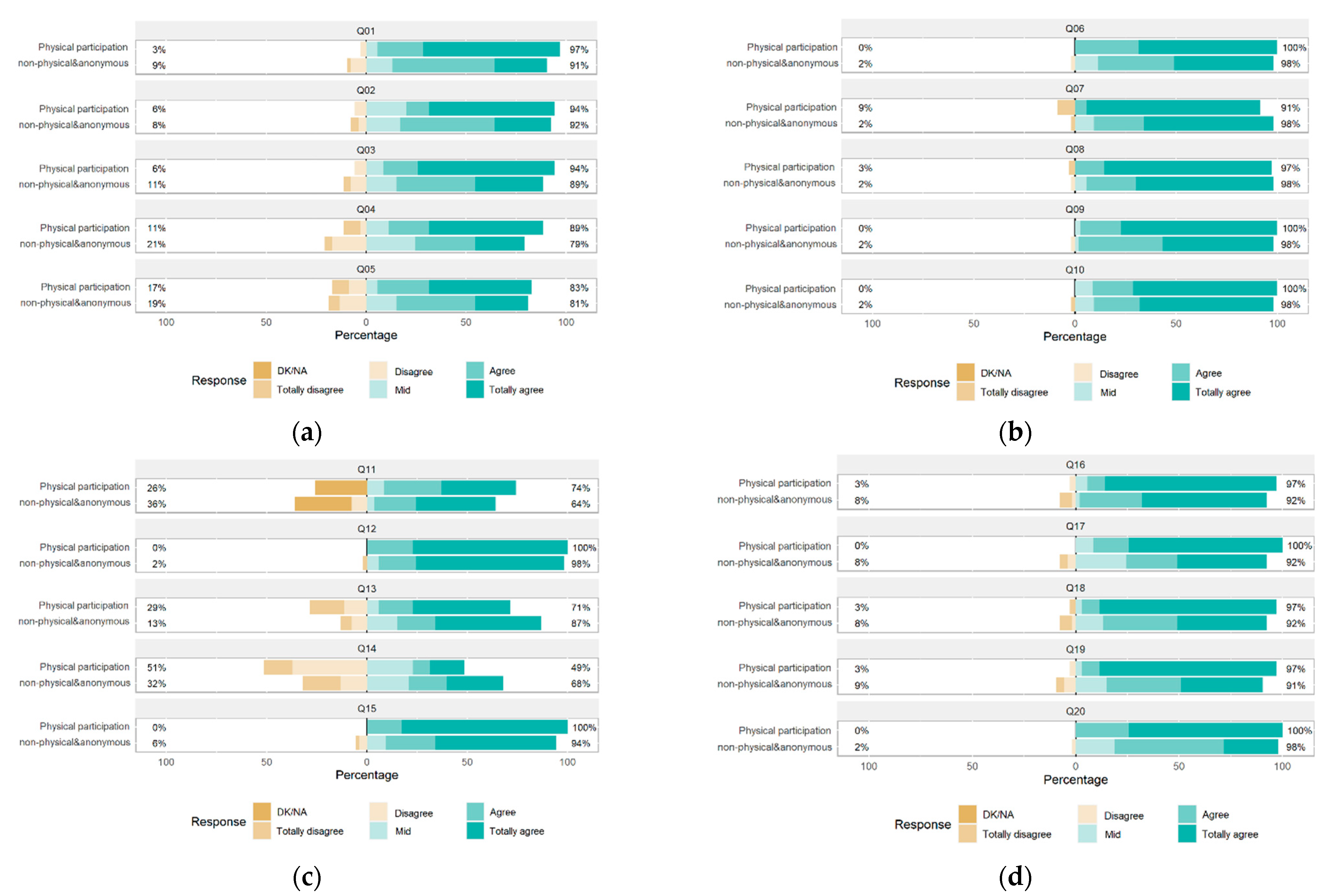

5.5. Analysis Due to Attendance and Anonymity

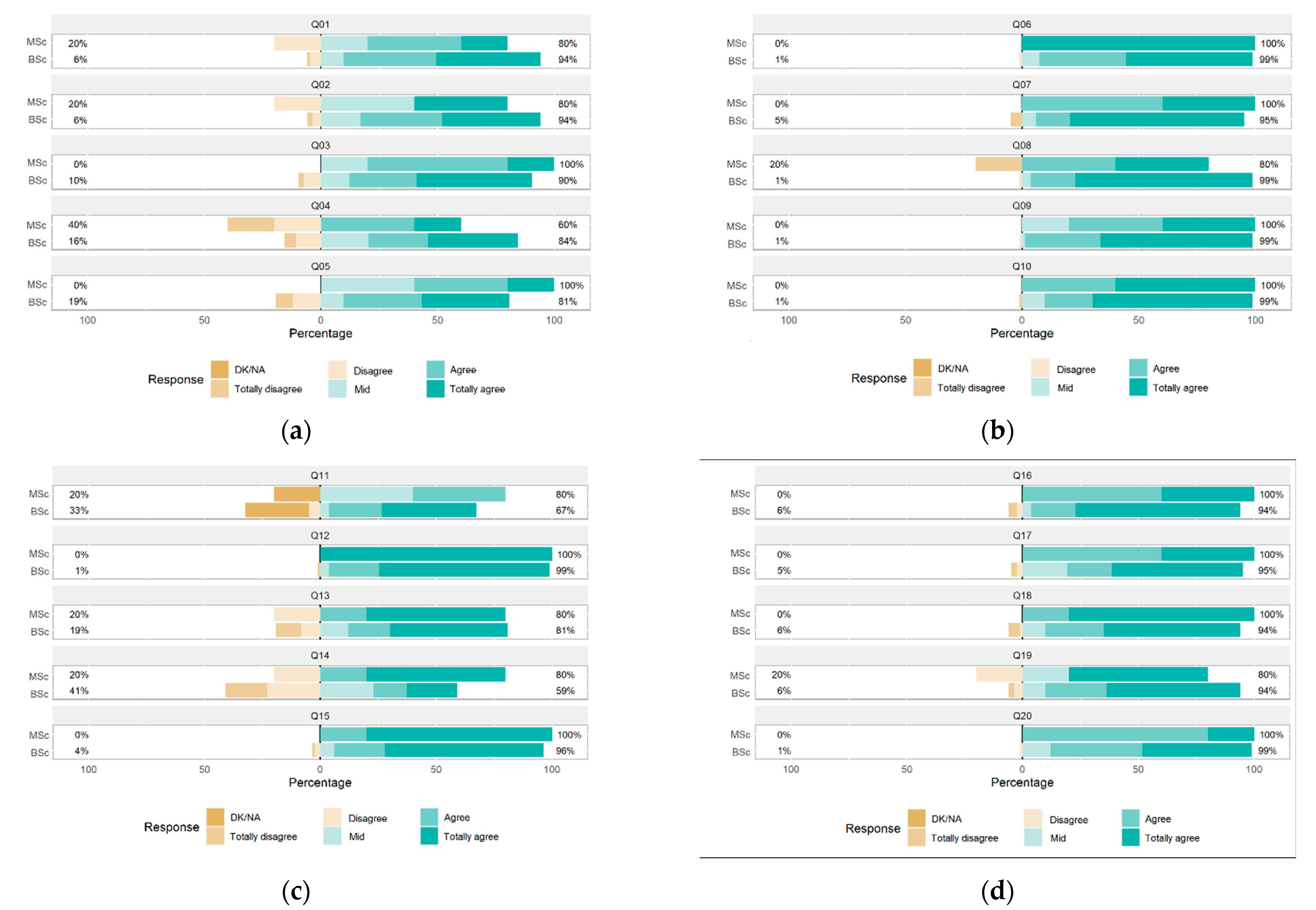

5.6. Analysis Grouped by Bachelor (BSc) and Master (MSc) Degrees

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Silió, E. (18 December 2019). La revolución 4.0 peligra: Los estudiantes de ingeniería caen un 30% en 20 años. El País. Available online: https://elpais.com/sociedad/2019/12/17/actualidad/1576612459_205974.html (accessed on 10 June 2022).

- Stegmann, J.G. (18 December 2019). En España nadie quiere estudiar ingenierías y el país se dirige a la «dependencia tecnológica». ABC. Available online: https://www.abc.es/sociedad/abci-espana-nadie-quiere-estudiar-ingenierias-y-pais-dirige-dependencia-tecnologica-201912181146_noticia.html (accessed on 10 June 2022).

- Servimedia. (18 December 2019). Los Estudiantes de Ciencias e Ingenierías caen un 30% desde 2.000 Porque el Mercado Laboral no Recompensa “el esfuerzo”. El Economista. Available online: https://www.eleconomista.es/ecoaula/noticias/10260885/12/19/Los-estudiantes-de-ciencias-e-ingenierias-caen-un-30-desde-2000-porque-el-mercado-laboral-no-recompensa-el-esfuerzo.html (accessed on 10 June 2022).

- Castillo-Martín, C. Análisis de la Eficiencia de los Estudios de Ingeniería. [Tesis Fin de Grado Ingeniería de Organización Industrial]. Bachelor’s thesis, Universidad de Sevilla, Sevilla, Spain, 2021.

- López-Cózar-Navarro, C.; Benito-Hernández, S.; Priede-Bergamini, T. Un análisis exploratorio de los factores que inciden en el abandono universitario en titulaciones de ingeniería. REDU Rev. Docencia Univ. 2020, 18, 81–96.

- Hernández, J.; Pérez, J.A. (dir.). La Universidad Española en Cifras. Información Académica, Productiva y Financiera de las Universidades Públicas de España. Indicadores Universitarios Curso académico 2017–2018. CRUE. 2019. Available online: http://www.crue.org/Boletin_SG/2020/UEC%202020/UEC%20WEB.pdf (accessed on 10 June 2022).

- Schertzer, C.B.; Schertzer, S.M. Student satisfaction and retention: A conceptual model. J. Mark. High. Educ. 2004, 14, 79–91.

- Rodríguez González, R.; Hernández García, J.; Alonso Gutiérrez, A.M.; Díez Itza, E. El absentismo en la Universidad: Resultados de una encuesta sobre motivos que señalan los estudiantes para no asistir a clase. Aula Abierta 2003, 82, 2003.

- García, M.E.; Gutiérrez, A.B.B.; Rodríguez-Muñiz, L.J. Permanencia en la universidad: La importancia de un buen comienzo. Aula Abierta 2016, 44, 1–6.

- Jiménez Galán, Y.I.; González Ramírez, M.A.; Hernández Jaime, J. Modelo 360 para la evaluación por competencias (enseñanza-aprendizaje). Innovación Educ. 2010, 10, 43–53. Available online: https://www.redalyc.org/pdf/1794/179420770003.pdf (accessed on 10 June 2022).

- Lévy-Leboyer, C. Feedback de 360; Grupo Planeta (GBS): Barcelona, Spain, 2004.

- Bisquerra Alzina, R.; Martínez Olmo, F.; Obiols Soler, M.; Pérez Escoda, N. Evaluación de 360°: Una aplicación a la educación emocional. Rev. Investig. Educ. 2006, 24, 187–203. Available online: https://revistas.um.es/rie/article/view/97371 (accessed on 10 June 2022).

- Alles, M.A. Desempeño por Competencias: Evaluación de 360; Ediciones Granica SA: Buenos Aires, Argentina, 2002.

- Hanrahan, S.J.; Isaacs, G. Assessing Self- and Peer-assessment: The students’ views. High. Educ. Res. Dev. 2001, 20, 53–70.

- Boud, D.; Cohen, R.; Sampson, J. Peer Learning and Assessment. Assess. Eval. High. Educ. 1999, 24, 413–426.

- Vivanco-Álvarez, R.V.; Pinto-Vilca, S.A. Efecto de la aplicación de coevaluación sobre la motivación de logro en estudiantes de nivel universitario. Paid. XXI 2018, 8, 57–78.

- Martínez-Figueira, E.; Tellado-González, F.; Raposo-Rivas, M. La rúbrica como instrumento para la autoevaluación: Un estudio piloto. REDU Rev. Docencia Univ. 2013, 11, 373–390.

- Mertler, C.A. Designing scoring rubrics for your classroom. Pract. Assess. Res. Eval. 2001, 7, 25.

- Basurto-Mendoza, S.T.; Cedeño, J.A.M.; Espinales, A.N.V.; Gámez, M.R. Autoevaluación, Coevaluación y Heteroevaluación como enfoque innovador en la práctica pedagógica y su efecto en el proceso de enseñanza-aprendizaje. Polo Del Conoc. Rev. Científico Prof. 2021, 6, 828–845.

- Lent, R.W.; Singley, D.; Sheu, H.B.; Schmidt, J.A.; Schmidt, L.C. Relation of social-cognitive factors to academic satisfaction in engineering students. J. Career Assess. 2007, 15, 87–97.

- Martínez-Caro, E.; Campuzano-Bolarín, F. Factors affecting students’ satisfaction in engineering disciplines: Traditional vs. blended approaches. Eur. J. Eng. Educ. 2011, 36, 473–483.

- Kim, E.; Rothrock, L.; Freivalds, A. An empirical study on the impact of lab gamification on engineering students’ satisfaction and learning. Int. J. Eng. Educ. 2018, 34, 201–216.

- González Rogado, A.B.; Rodríguez Conde, M.J.; Olmos Migueláñez, S.; Borham-Puyal, M.; García-Peñalvo, F.J. Key factors for determining student satisfaction in engineering: A regression study. Int. J. Eng. Educ. IJEE 2014, 30, 576–584.

- Olds, B.M.; Miller, R.L. The effect of a first-year integrated engineering curriculum on graduation rates and student satisfaction: A longitudinal study. J. Eng. Educ. 2004, 93, 23–35.

- Blanco, A. Las rúbricas: Un instrumento útil para la evaluación de competencias. In La Enseñanza Universitaria Centrada en el Aprendizaje: Estrategias Útiles para el Profesorado; Prieto, L., Ed.; Octaedro-ICE de la Universidad de Barcelona: Barcelona, Spain, 2008; pp. 171–188.

- Urbieta, J.M.E.; Garayalde, K.A.; Losada, D. Diseño de rúbricas en la formación inicial de maestros/as. Rev. Form. Innovación Educ. Univ. 2011, 4, 156–169.

- Ministerio de Universidades. Datos y Cifras del Sistema Universitario Español. 2019. Publicación 2020–2021. Available online: https://www.universidades.gob.es/stfls/universidades/Estadisticas/ficheros/Datos_y_Cifras_2020-21.pdf (accessed on 1 July 2022).

- Baker, R.W.; Siryk, B. Student Adaptation to College Questionnaire (SACQ); Western Psychological Services: Worcester, MA, USA, 1984.

- Tuan, H.L.; Chin, C.C.; Shieh, S.H. The development of a questionnaire to measure students’ motivation towards science learning. Int. J. Sci. Educ. 2005, 27, 639–654.

- Douglas, J.; Douglas, A.; Barnes, B. Measuring student satisfaction at a UK university. Qual. Assur. Educ. 2006, 14, 251–267.

- Alarcón, R.; Blanca, M.J.; Bendayan, R. Student satisfaction with educational podcasts questionnaire. Escr. De Psicol. Psychol. Writ. 2017, 10, 126–133.

- Santos-Pastor, M.L.; Cañadas, L.; Martínez-Muñoz, L.F.; García-Rico, L. Diseño y validación de una escala para evaluar el aprendizaje-servicio universitario en actividad física y deporte. Educ. XX1 2020, 23, 67–93.

- Casero-Martínez, A. Propuesta de un cuestionario de evaluación de la calidad docente universitaria consensuado entre alumnos y profesores. Rev. Investig. Educ. 2008, 26, 25–44. Available online: https://revistas.um.es/rie/article/view/94091 (accessed on 10 June 2022).

- González López, I.; López Cámara, A.B. Sentando las bases para la construcción de un modelo de evaluación a las competencias docentes del profesorado universitario. Rev. Investig. Educ. 2010, 28, 403–423. Available online: https://revistas.um.es/rie/article/view/109431 (accessed on 10 June 2022).

- López Pastor, V.M.L.; Pascual, M.G.; Martín, J.B. La participación del alumnado en la evaluación: La autoevaluación, la coevaluación y la evaluación compartida. Rev. Tándem: Didáctica Educ. Física 2005, 17, 21–37. Available online: http://hdl.handle.net/11162/21846 (accessed on 10 June 2022).

- Likert, R. A technique for the measurement of attitudes. Arch. Psychol. 1932, 22, 55.

- Albaum, G. The Likert scale revisited: An alternate version. Mark. Res. Society. J. 1997, 39, 331–348.

- Allen, I.E.; Seaman, C.A. Likert scales and data analyses. Qual. Prog. 2007, 40, 64–65.

- Jöreskog, K.G. On the estimation of polychoric correlations and their asymptotic covariance matrix. Psychometrika 1994, 59, 381–389.

- García Cueto, E.; Gallo Álvaro, P.M.; Miranda García, R. Bondad de ajuste en el análisis factorial confirmatorio. Psicothema 1998, 10, 717–724. Available online: http://hdl.handle.net/10651/29218 (accessed on 10 June 2022).

- González-Montesinos, M.J.; Backhoff, E. Validación de un cuestionario de contexto para evaluar sistemas educativos con modelos de ecuaciones estructurales. Relieve 2010, 16, 1–17.

- Rojas-Torres, L. Robustez de los índices de ajuste del Análisis Factorial Confirmatorio a los valores extremos. Rev. De Matemática: Teoría Y Apl. 2020, 27, 403–424.

- Rodríguez-Rodríguez, J.; Reguant-Álvarez, M. Calcular la fiabilidad de un cuestionario o escala mediante el SPSS: El coeficiente alfa de Cronbach. REIRE Rev. D’innovació I Recer. En Educ. 2020, 13, 1–13.

- Cronbach, L.J. Coefficient alpha and the internal structure of tests. Psychometrika 1951, 16, 297–334.

- Nunnally, J.C.; Bernstein, I. Psychometric Theory; McGraw-Hill: New York, NY, USA, 1978.

- Gong, G. Consideration of evaluation of teaching at colleges. Open J. Soc. Sci. 2016, 4, 82.

- Sotelo, A.F.; Arévalo, M.G.V. Proceso de autoevaluación, coevaluación y heteroevaluación para caracterizar el comportamiento estudiantil y mejorar su desempeño. Rev. San Gregor. 2015, 1, 6–15.

- Abadía Valle, A.R.; Bueno García, C.; Ubieto-Artur, M.I.; Márquez Cebrián, M.D.; Sabaté Díaz, S.; Jorba Noguera, H. Competencias del buen docente universitario. Opinión de los estudiantes. REDU. Rev. De Docencia Univ. 2015, 13, 363–390.

- Topping, K. Peer assessment between students in colleges and universities. Rev. Educ. Res. 1998, 68, 249–276.

| Number | Subject | Year and Degree | No. of Participants/Total |
|---|---|---|---|
| #1 | Computational simulation of fluid flows over vehicles | 1° Master | 7/8 |
| #2 | Fluid mechanics over vehicles | 1° Master | 3/4 |
| #3 | Teleoperations and telerobotics | 1° Master | 2/4 |
| #4 | Physics I | 1° BSc | 63/64 |
| #5 | Photovoltaic facilities | 4° BSc | 15/20 |
| #6 | Fault-tolerant mechatronic systems | 1° Master | 3/4 |
| #7 | Industrial processes | 3° BSc | 60/63 |
| TOTAL | | | 153/167 |

| Variable | Category | Percentage |
|---|---|---|
| Sex | Men | 70.45% |
| | Women | 29.55% |
| Degree | BSc | 90.2% |
| | MSc | 9.8% |
| Subject | Physics I | 41.2% |
| | Industrial processes | 39.22% |
| | Photovoltaic facilities | 9.8% |
| | Computational simulation of fluid flows over vehicles | 4.57% |
| | Fault-tolerant mechatronic systems | 1.96% |
| | Fluid mechanics over vehicles | 1.96% |
| | Teleoperations and telerobotics | 1.31% |
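
The subject percentages in the sample breakdown follow directly from the participant counts in the participation table. As a quick arithmetic check, the following Python sketch recomputes them; the function name `sample_shares` and the variable names are introduced here for illustration only and are not part of the study. The recomputed values agree with the reported ones up to rounding (for example, 7/153 is about 4.575%, reported as 4.57%).

```python
# (participants, total) per subject, copied from the participation table.
counts = {
    "Computational simulation of fluid flows over vehicles": (7, 8),
    "Fluid mechanics over vehicles": (3, 4),
    "Teleoperations and telerobotics": (2, 4),
    "Physics I": (63, 64),
    "Photovoltaic facilities": (15, 20),
    "Fault-tolerant mechatronic systems": (3, 4),
    "Industrial processes": (60, 63),
}


def sample_shares(counts):
    """Return each subject's share of all participants, in percent (2 decimals)."""
    total = sum(participants for participants, _ in counts.values())
    return {
        subject: round(100 * participants / total, 2)
        for subject, (participants, _) in counts.items()
    }


total_participants = sum(p for p, _ in counts.values())
total_enrolled = sum(t for _, t in counts.values())
shares = sample_shares(counts)

# Share of the sample coming from the three BSc subjects.
bsc_subjects = ("Physics I", "Photovoltaic facilities", "Industrial processes")
bsc_share = round(100 * sum(counts[s][0] for s in bsc_subjects) / total_participants, 1)

if __name__ == "__main__":
    print(f"Participants: {total_participants}/{total_enrolled}")
    for subject, share in sorted(shares.items(), key=lambda kv: -kv[1]):
        print(f"{subject}: {share}%")
    print(f"BSc share: {bsc_share}%")
```

Keeping the raw counts as the single source makes it easy to spot transcription or rounding differences between the two tables.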

| Group or Dimension | Item Numbering | Item |
|---|---|---|
| Personal evaluation | Q01 | Has participation in the evaluation experience helped me to better understand the contents of the subject? |
| | Q02 | Has participation in this experience helped me to detect misconceptions about the content of the subject? |
| | Q03 | Has participation in this experience helped me to be more responsible and leader of my own learning? |
| | Q04 | Has participation in this experience helped me to improve my study system? |
| | Q05 | To know that self-assessment is part of the process: Has this given me an “extra” motivation to carry out the activity? |
| Criteria | Q06 | Is the evaluation documentation provided by the teaching staff intuitive and easily interpretable? |
| | Q07 | Do I think the evaluation criteria have been adequate? |
| | Q08 | Do I think that the point marks of the sections to be evaluated is adequate? |
| | Q09 | Documentation provided by the teaching staff is valuable to carry out the evaluation (rubric, evaluation criteria, point marks and correction, etc.). |
| Objectivity of evaluation | Q10 | Has the self-assessment been objective and fair? |
| | Q11 | If you do not know, please answer 0: has the peer-assessment that I have received from my classmates been objective and fair? |
| | Q12 | Have I carried out a fair and objective peer-assessment of my peers? |
| | Q13 | Do you think that ensuring anonymity would be an important factor for peer-assessment among students? |
| | Q14 | Can I assure that friendship with evaluated peers has not influenced my peer-assessment carried out (positively or negatively)? |
| | Q15 | Has the evaluation carried out by your teaching staff been objective and fair? |
| Learning from the experience | Q16 | Has participation in this experience allowed me to better understand the evaluative role of teaching staff? |
| | Q17 | Do I consider that I have learned more with this experience than with the traditional method? |
| | Q18 | Do you think it would be interesting to apply this experience to other subjects? |
| | Q19 | Would you recommend the subject because of the experience you had with this evaluation method? |
| | Q20 | Objectively, how would you rate this experience? |

| Item | | | |
|---|---|---|---|
| Q01 | 0.757 | 0.911 | 0.947 |
| Q02 | 0.761 | 0.911 | 0.947 |
| Q03 | 0.730 | 0.912 | 0.948 |
| Q04 | 0.718 | 0.913 | 0.948 |
| Q05 | 0.655 | 0.916 | 0.949 |
| Q06 | 0.486 | 0.918 | 0.950 |
| Q07 | 0.573 | 0.917 | 0.946 |
| Q08 | 0.598 | 0.916 | 0.949 |
| Q09 | 0.682 | 0.914 | 0.947 |
| Q10 | 0.603 | 0.916 | 0.949 |
| Q11 | 0.579 | 0.916 | 0.951 |
| Q12 | 0.272 | 0.922 | 0.954 |
| Q15 | 0.716 | 0.913 | 0.945 |
| Q16 | 0.729 | 0.912 | 0.945 |
| Q17 | 0.721 | 0.912 | 0.947 |
| Q18 | 0.604 | 0.916 | 0.950 |
| Q19 | 0.757 | 0.911 | 0.947 |
| Q20 | 0.774 | 0.912 | 0.946 |
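
The internal consistency of a Likert-type survey is commonly summarized with Cronbach's alpha [44,45]. As a minimal sketch of how such a coefficient, and its value when each item is removed in turn, could be computed, the Python example below uses only the standard library. The response matrix `demo` is hypothetical data invented for illustration; it is not the study's data, and the function names are assumptions rather than the authors' implementation.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("at least two items are required")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def alpha_if_item_deleted(scores):
    """Alpha recomputed k times, each time leaving out one item."""
    k = len(scores[0])
    return [
        cronbach_alpha([[v for i, v in enumerate(row) if i != j] for row in scores])
        for j in range(k)
    ]


# Hypothetical responses: 5 respondents x 4 items on a 1-5 Likert scale.
demo = [
    [4, 5, 4, 3],
    [2, 3, 2, 4],
    [5, 5, 4, 2],
    [3, 3, 3, 3],
    [1, 2, 2, 5],
]

if __name__ == "__main__":
    print("alpha:", round(cronbach_alpha(demo), 3))
    for j, a in enumerate(alpha_if_item_deleted(demo), start=1):
        print(f"alpha without item {j}:", round(a, 3))
```

An item whose removal raises alpha noticeably is a candidate for review, since it correlates weakly with the rest of the scale.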

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Granados-Ortiz, F.-J.; Gómez-Merino, A.I.; Jiménez-Galea, J.J.; Santos-Ráez, I.M.; Fernandez-Lozano, J.J.; Gómez-de-Gabriel, J.M.; Ortega-Casanova, J. Design and Assessment of Survey in a 360-Degree Feedback Environment for Student Satisfaction Analysis Applied to Industrial Engineering Degrees in Spain. Educ. Sci. 2023, 13, 199. https://doi.org/10.3390/educsci13020199