1. Introduction

On 30 April 2021, the Australian Government announced a package of measures to support non-uni higher education providers (NUHEPs) most affected by COVID-19 and border closures [

1]. This package included

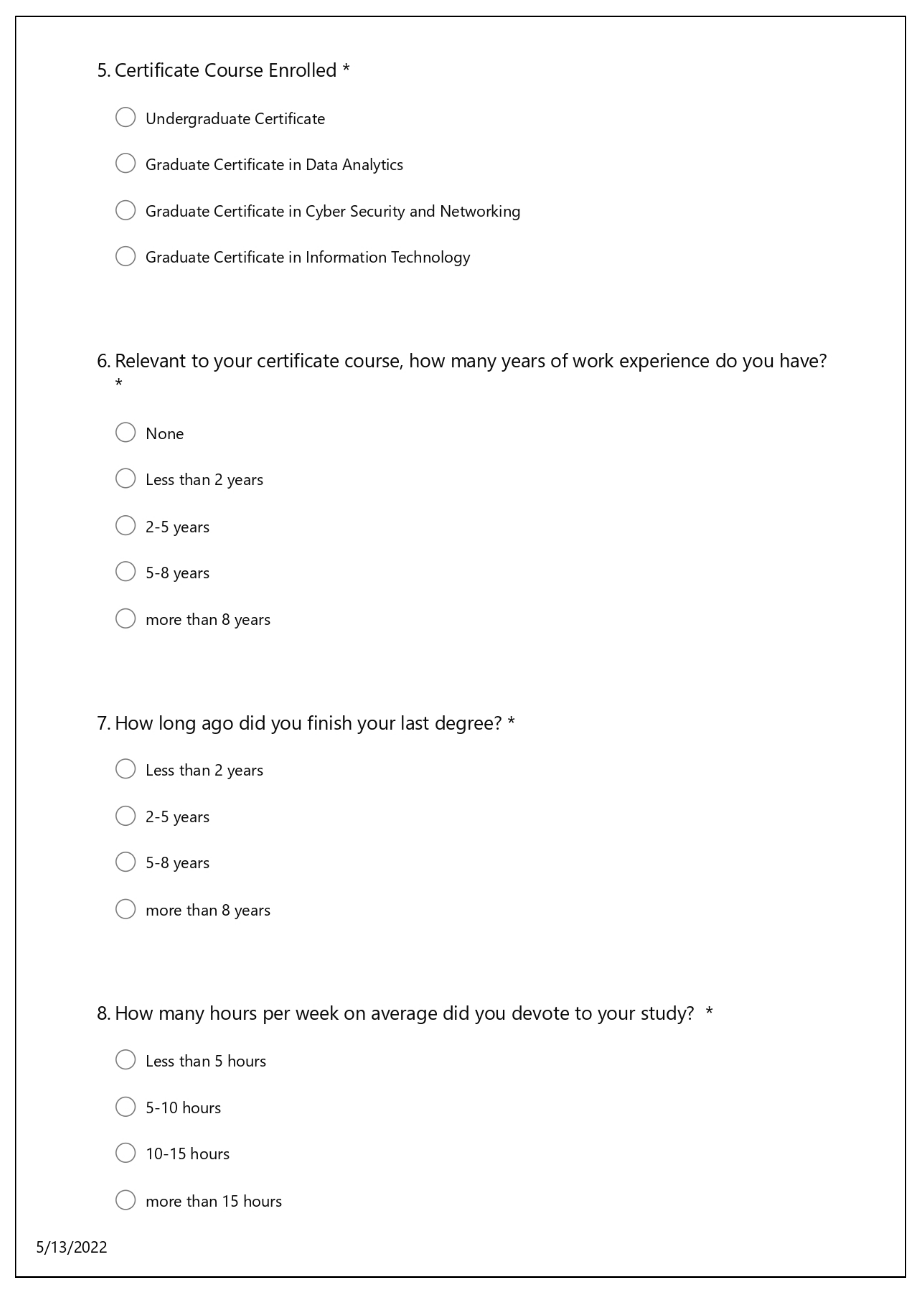

$26.1 million for 5000 short course places for domestic students at NUHEPs, for delivery in Semester 2, 2021. Melbourne Institute of Technology (MIT), being a NUHEP that has campuses both in Melbourne and Sydney, was successful in gaining federal support to offer several short courses in the form of two undergraduate courses, including Undergraduate Certificate in Information Technology (UCIT), Undergraduate Certificate in Cybersecurity and Networking (UCCN), and three postgraduate certificates, including Graduate Certificate in Cyber Security and Networking (GCCN), Graduate Certificate in Information Technology (GCIT), and Graduate Certificate in Data Analytics (GCDA). These undergraduate and postgraduate certificates were designed to be completed within a shorter duration than the normal degree, so that graduates could return to the workforce and become productive as soon as possible. In meeting such requirements, MIT designed the short courses to run in a 4-week (for undergraduate courses) or 5-week (for postgraduate courses) block mode, with students taking one unit after another, instead of concurrently, as in the normal semester system. In addition, due to the cohort of students which include both school leavers and students who are studying part-time, a flipped classroom approach was adopted, with most of the learning materials made available prior to the start of each block, and having a two-hour weekly workshop delivered through the Zoom platform, where students met their instructor and received the support they needed on their study.

Recent research has suggested that intensive modes of delivery might improve engagement, attendance, and achievement for students from diverse backgrounds [2]. One mode of intensive delivery that is related to this study is block teaching [3]. Block teaching has previously been applied in Australian tertiary education; for example, Victoria University (VU) experimented with block teaching in its first-year engineering degree and found that the delivery mode positively affected student outcomes [4]. However, while the VU block mode was delivered face-to-face, the national and state lockdowns in 2021 made face-to-face teaching infeasible, so MIT’s block mode was delivered exclusively online. There are thus several distinct differences between the previously reported block mode and MIT’s approach, presenting an opportunity to investigate the extent to which block mode delivery works in alternative educational contexts.

Firstly, each unit in a certificate course ran for a shorter duration, in a 4-week or 5-week block. Secondly, the units were delivered one after the other (i.e., students took one block at a time). Thirdly, they were taught using a flipped learning pedagogy and delivered entirely online. Fourthly, the courses were open both to school leavers and to those already in the workforce.
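As a minimal sketch of how sequential block delivery adds up, the Python below lays the units of a certificate out one after another and reports the total teaching span. It assumes, hypothetically, that consecutive blocks run back to back with no breaks; the unit counts (four 4-week units for an undergraduate certificate, three 5-week units for a postgraduate one) follow the course structure described in this section, while the names `Block`, `sequential_blocks` and `total_weeks` are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    """One unit taught as a single intensive block (weeks are 1-based, inclusive)."""
    unit: int
    start_week: int
    end_week: int


def sequential_blocks(num_units: int, weeks_per_block: int) -> list[Block]:
    """Schedule units one after another (block mode) instead of concurrently.

    Hypothetical assumption: each block starts the week after the previous
    block ends, with no breaks in between.
    """
    blocks = []
    for i in range(num_units):
        start = i * weeks_per_block + 1
        blocks.append(Block(unit=i + 1, start_week=start,
                            end_week=start + weeks_per_block - 1))
    return blocks


def total_weeks(blocks: list[Block]) -> int:
    """Teaching weeks from the first block's start to the last block's end."""
    return blocks[-1].end_week if blocks else 0


# Undergraduate certificate: four 4-week units; postgraduate: three 5-week units.
undergrad = sequential_blocks(num_units=4, weeks_per_block=4)
postgrad = sequential_blocks(num_units=3, weeks_per_block=5)

for b in undergrad:
    print(f"UG unit {b.unit}: weeks {b.start_week}-{b.end_week}")
print("UG total weeks:", total_weeks(undergrad))
print("PG total weeks:", total_weeks(postgrad))
```

Under these assumptions an undergraduate certificate spans 16 weeks and a postgraduate certificate 15 weeks, with exactly one unit active in any given week, whereas in a concurrent semester model all units would run in parallel over the same weeks.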

We have recently studied how the flipped learning (FL) approach has impacted our student’s perception in attaining the graduate attributes (GAs) in capstone project units at MIT [

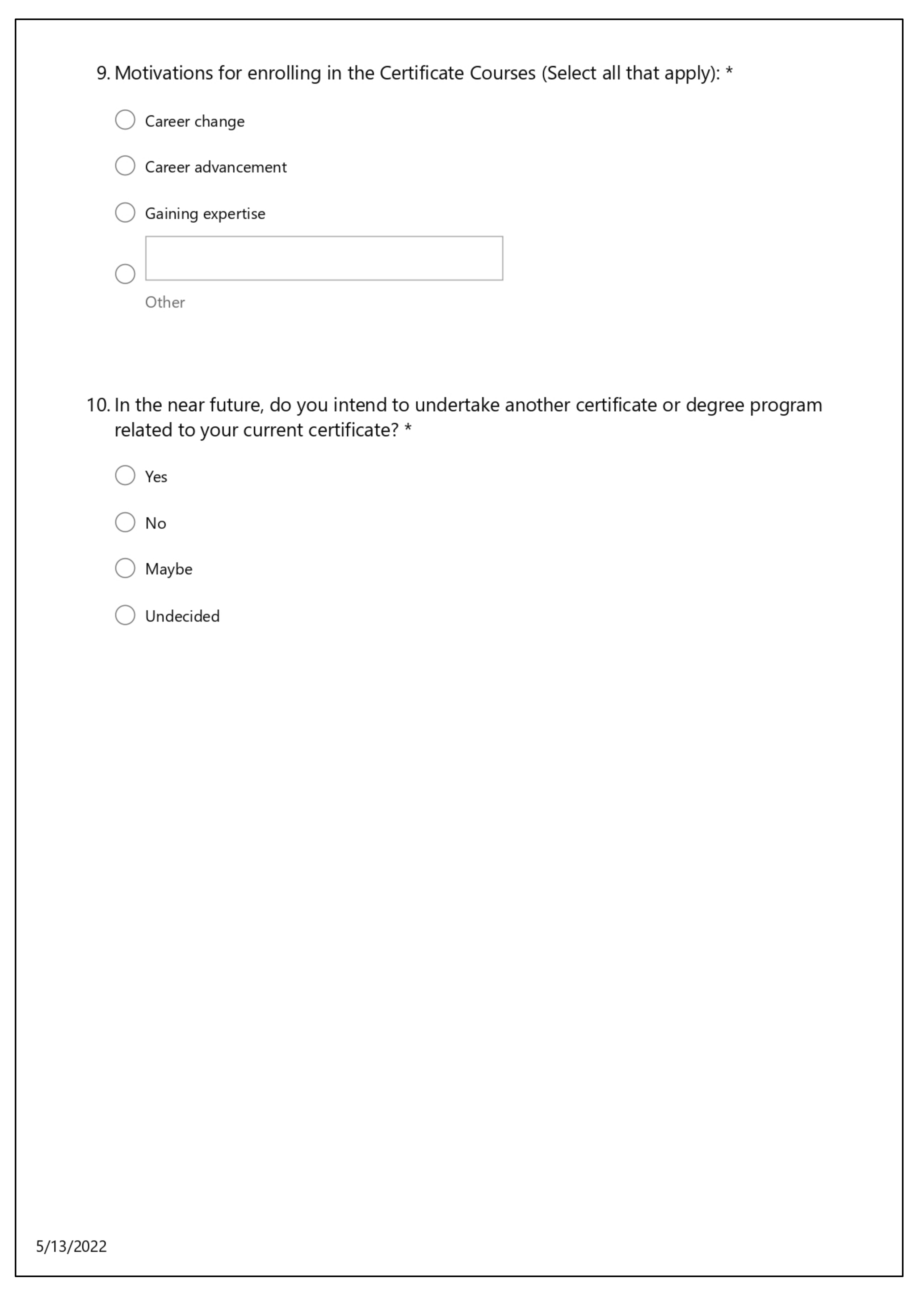

5]. Building on our recent work, this project addresses how students perceive their learning and engagement, while studying one of the five certificate courses at MIT between July 2021 and January 2022, over two repetitions of the undergraduate course and three repetitions of the postgraduate courses. Each repetition of the undergraduate certificate consists of four 4-week blocks, while each repetition of a postgraduate certificate consists of three 5-week blocks, one for each subject of each certificate. By capturing the students’ feedback using an online questionnaire, we want to understand how satisfied students are with their overall learning and engagement experiences based on 10 perception questions, the first nine of which focus on one key aspect of their learning and engagement experience, including the quality of learning materials, pre-recorded lectures, assessments, feedback, academic services, etc. In summary, the two main objectives of this research are:

Assessing students’ perception of the intensive block mode and flipped classroom (BMFC) delivery in meeting their expectations of learning and engagement.

Clarifying the key aspects or factors of the certificate courses, such as the quality of learning materials, pre-recorded lectures, assessments, and feedback, that significantly influence students’ overall satisfaction.

An understanding of these significant factors will provide guidance on how to improve students’ satisfaction in future offerings of these courses and on the viability of BMFC delivery as a whole, while also improving the theoretical understanding of student satisfaction with novel educational delivery modes.

The rest of the paper is organized as follows.

Section 1.1, on Literature Review, will overview related research to our study.

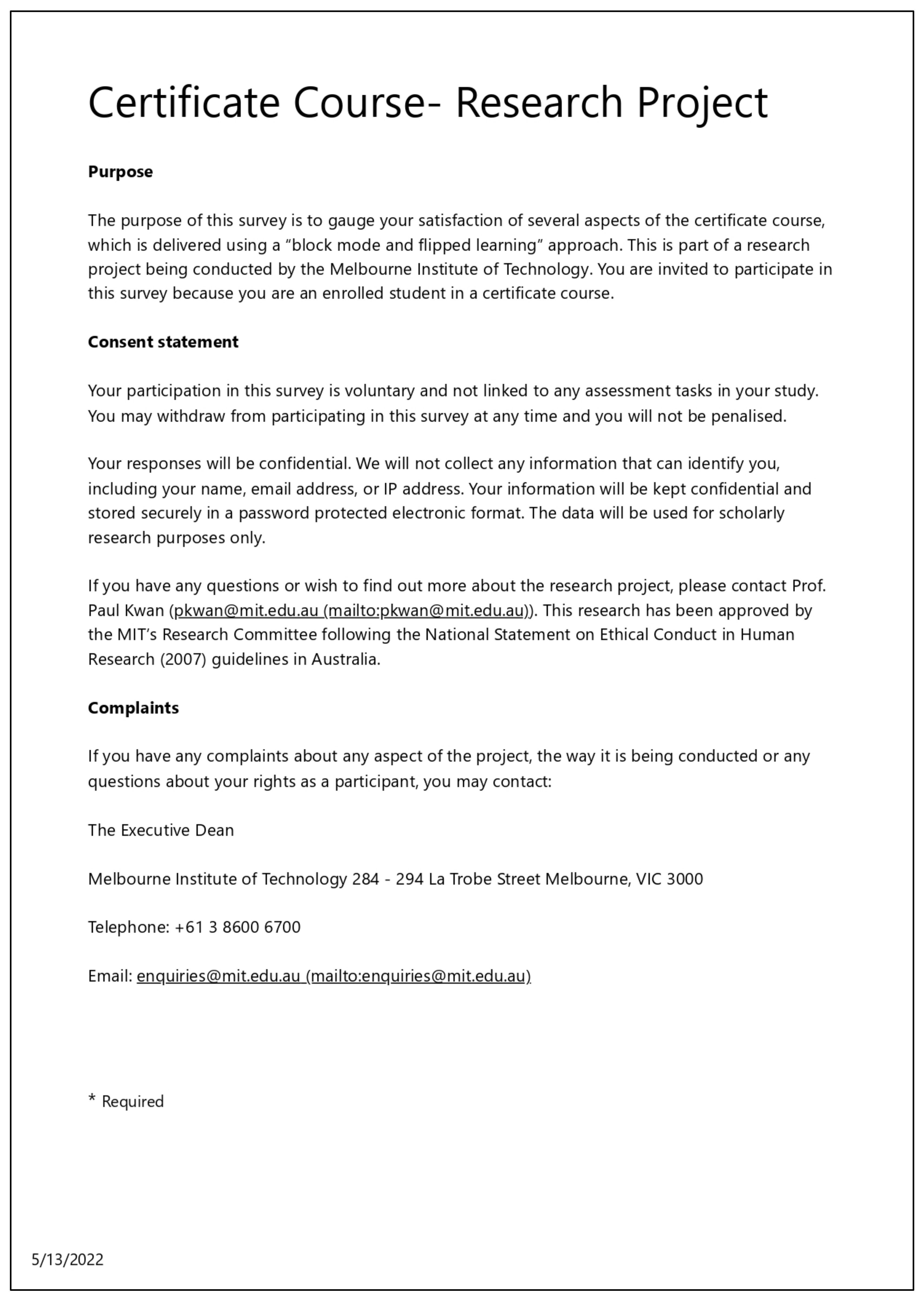

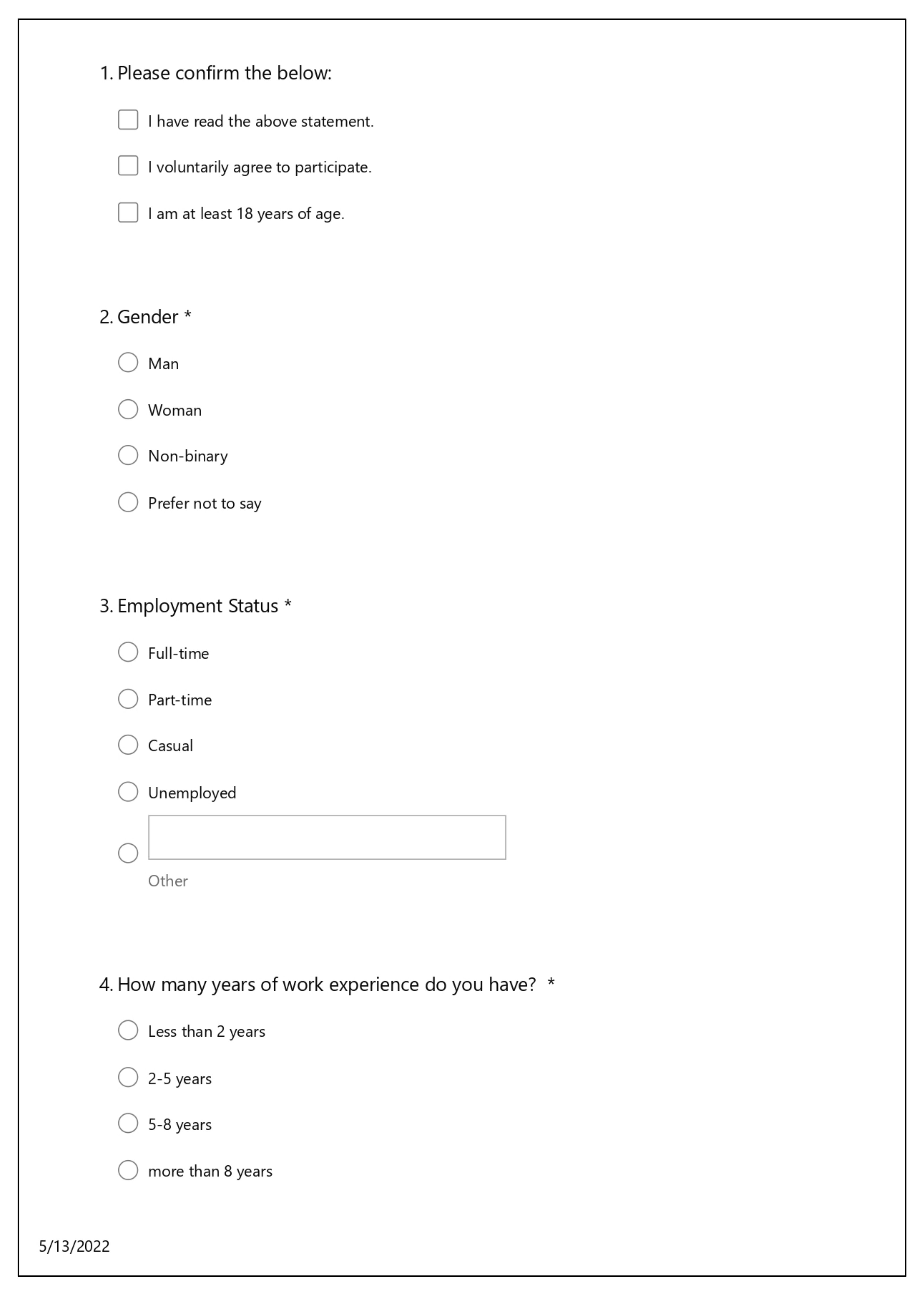

Section 2, on Materials and Methods, will describe the approach and methodology we have adopted for this study, and describe the empirical data collected using an online questionnaire we have developed to survey students’ perception on their experience studying the certificate courses at MIT, delivered in an intensive block mode, and flipped classroom (BMFC) pedagogy.

Section 3, on Results, will present statistical analyses of the survey data, while the Discussion in

Section 4 explains the implications of our study based on the results. Lastly, the Conclusion, in

Section 5, summarises the objectives and contributions of this research, as well as highlighting potential future work arising from this study.

1.1. Literature Review

In recent times, block mode has gained attention as an instructional delivery method in which students enroll in only one unit at a time, completing it in a short, intensive period before moving on to the next. This approach has the benefit of keeping students focused on a single unit at a time. In the Australian context, block mode delivery has been shown to positively affect students’ satisfaction with their courses, their academic outcomes, and their career mentality [6,7,8]. Students’ satisfaction and performance in the block mode delivery of a first-year undergraduate degree have also been reported [9]; the authors found a substantial increase in performance in a certain subset of students, but also a decrease in course satisfaction.

In [10], the authors analyzed the engagement and performance of first-year engineering students taught with a block mode, problem-based learning approach. The study revealed that students’ engagement and performance increased, along with the quality of their learning. The effects of block mode learning on the performance of students with specialized needs, such as those repeating units, were reported in [11], with the results showing an improvement in academic performance.

However, findings on the effectiveness of teaching and the quality of learning in intensive mode courses have not always been positive. In [12], the authors examined the practices and challenges of modular/block teaching in Ethiopian higher education institutions using surveys and interviews. Their analysis revealed that while modular/block teaching might help students focus on a single subject at a time, it does not give adequate attention to practical skills. Because of this, they argued that theory has not informed practice, and vice versa, thereby diminishing the credibility of learning. Another criticism of block teaching came in the form of an open letter by an anonymous Victoria University student, entitled “Against the block model” [13]. Writing as someone who had experienced the VU model firsthand, the student stated that block teaching had brought confusion and alienation to new or struggling students and caused them to fall behind. While there are inevitably pros and cons to most pedagogical approaches, including block teaching, the authors of [14], in a report commissioned by the Australian Department of Education and Training in 2015–2017, concluded that both students and teachers reported many benefits of the intensive model, provided that it was well planned to avoid risks such as student and staff burnout.

Flipped learning, in turn, is a method of teaching and learning that derives its name from the way it flips, or inverts, the structure of the traditional classroom model [15]. It can be considered a specific type of blended learning [16,17] that requires students to combine various types of learning to improve engagement, and to use teaching resources effectively [18,19,20,21,22]. The adoption of the flipped classroom approach is also partly driven by declining lecture attendance and the increasing demand for flexible learning [23,24]. The key objective of a flipped approach is to use class time productively by moving information transmission out of the classroom, promoting active exchange and socializing between teachers and learners, as well as among learners in class. According to the analysis in [24], flipped learning positively impacts student performance regardless of the level of education and discipline, although the effect may differ depending on the flipped classroom design.

In practice, the combination of block mode delivery and the flipped classroom has not been used commonly, or intentionally, in either online or traditional classroom settings. We believe this is a genuine research gap that requires careful investigation and reflection. Equally important, this study informs the improvement of the certificate courses at MIT, which are expected to be offered again in the future. It is critical that we understand students’ perceptions of the delivery and teaching approach of the certificate courses to optimize both educational quality and enrolment. We also anticipate that the findings of our study will add to the body of knowledge of the higher education community, particularly those considering the possible adoption of hybrid and intensive block mode teaching in the near future.

5. Conclusions

This paper reported an empirical study, performed at the authors’ own institution, Melbourne Institute of Technology (MIT), Australia, to understand how students perceived their experience studying one of several certificate courses between July 2021 and January 2022. These certificate courses were delivered in an intensive block mode with a flipped classroom (BMFC) pedagogy, which was a first at MIT; until recently, the institute had delivered its courses using a traditional 12-week trimester system. The certificate courses differed in several respects, including (a) a shorter duration of 4 weeks for undergraduate units or 5 weeks for postgraduate units, (b) units taken in sequence rather than in parallel, as in a normal trimester, (c) all activities conducted online rather than in class, and (d) a student cohort that included both school leavers and mature-aged students.

By capturing students’ feedback using an online questionnaire, this study aimed to understand how satisfied students were with their overall learning and engagement experiences, based on 10 perception questions, each of the first nine of which focused on one key aspect of the learning and engagement experience, such as the quality of learning materials, pre-recorded lectures, assessments, feedback, and academic services. Our goals and contributions included: (1) assessing students’ perception of the BMFC delivery in meeting their expectations of learning and engagement, and (2) clarifying the key aspects or factors of the certificate courses, such as the quality of learning materials, pre-recorded lectures, assessments, and feedback, that significantly influenced students’ overall satisfaction.

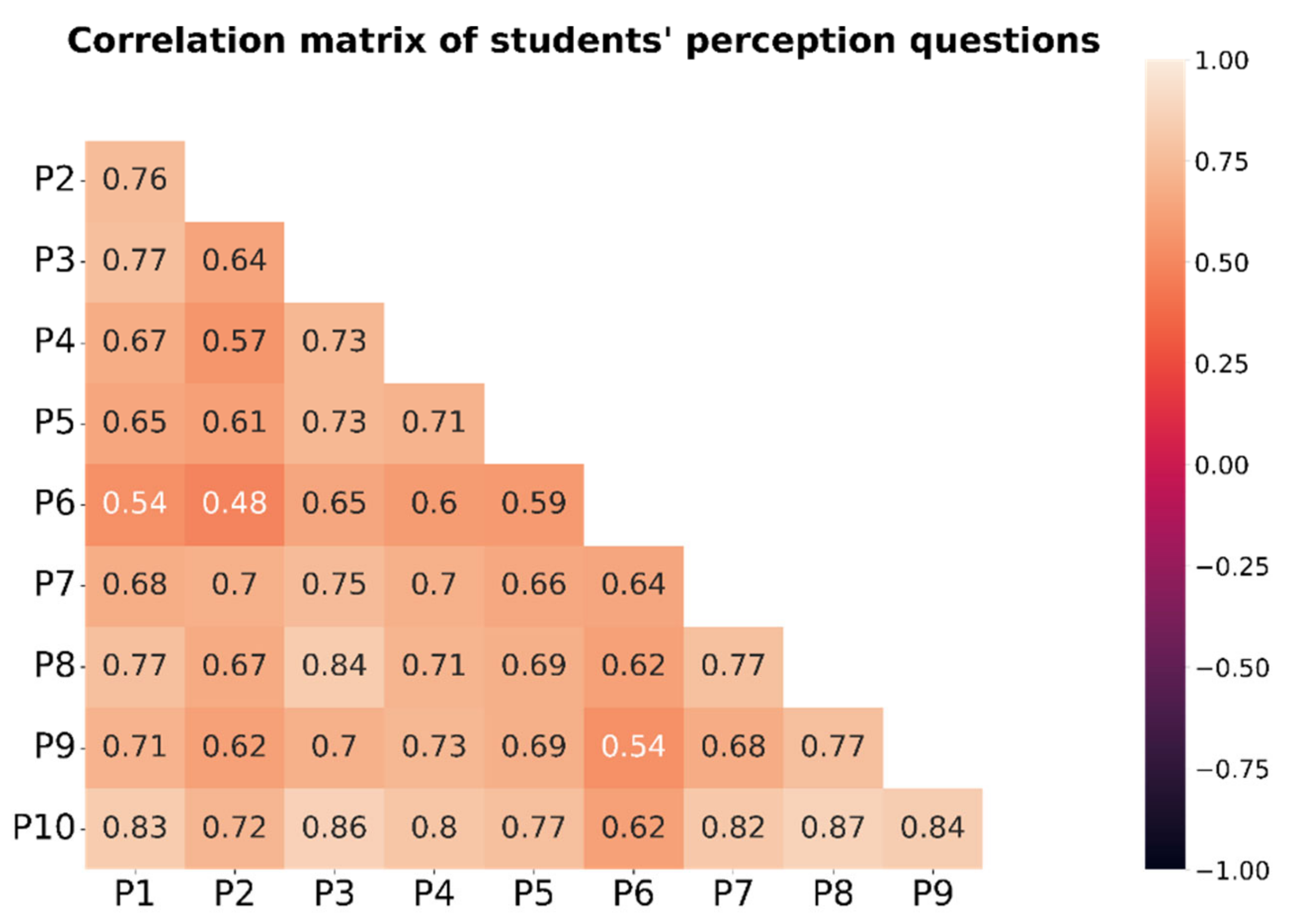

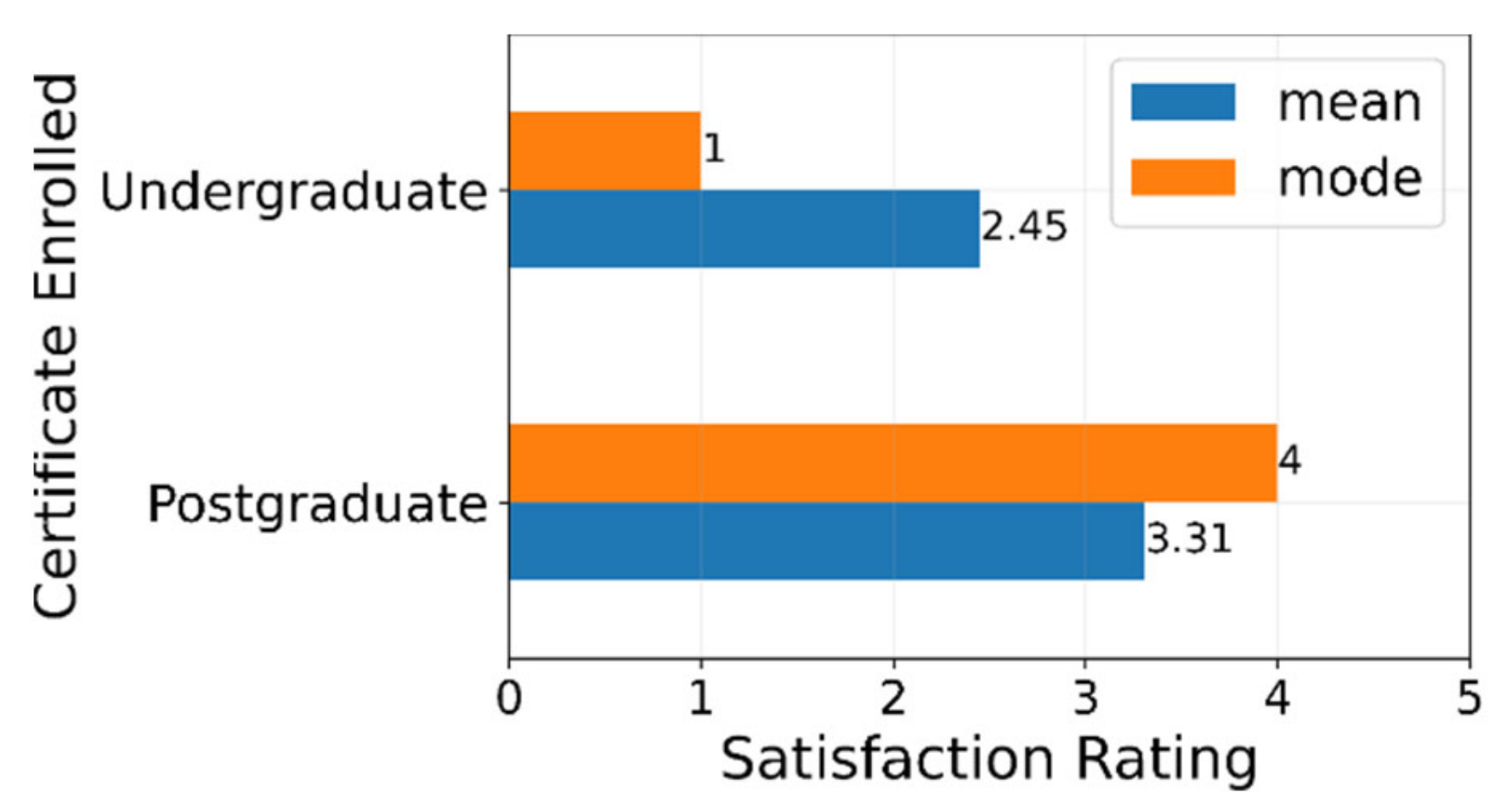

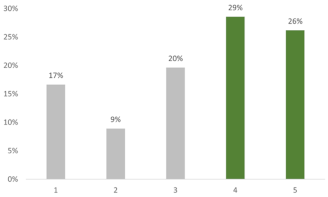

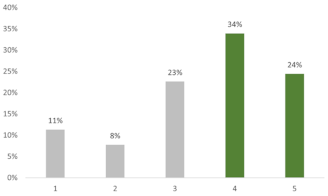

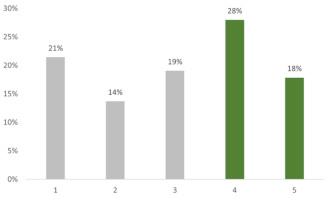

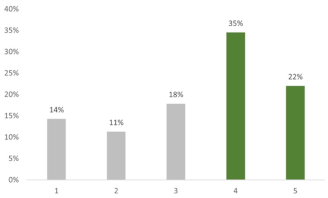

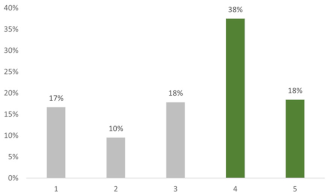

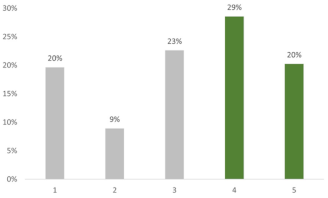

Statistical analysis of the questionnaire data was performed after the survey. The mean (standard deviation), median, and mode calculated from the responses revealed that, on a 5-star rating scale, students regarded the BMFC approach positively in 7 of the 10 perception questions. This is supported by high pairwise correlations among the questions (ranging from r = 0.48 to r = 0.87). In addition, a multiple regression analysis using the first nine perception questions as predictors of the 10th question (overall satisfaction) revealed that six of them were statistically significant predictors (p < 0.05) of the overall satisfaction of the students surveyed, implying that overall satisfaction could potentially be increased by improving these key factors of the BMFC-delivered certificate courses.
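The descriptive statistics and pairwise correlations described above can be sketched with Python’s standard library. This is an illustrative sketch, not the authors’ analysis code: the ratings below are hypothetical placeholders rather than the survey data, only three of the ten questions are shown, and the helper names `describe`, `pearson_r` and `pairwise_correlations` are hypothetical.

```python
import math
import statistics
from itertools import combinations


def describe(ratings: list[int]) -> dict[str, float]:
    """Mean, sample standard deviation, median and mode of one question's ratings."""
    return {
        "mean": statistics.mean(ratings),
        "sd": statistics.stdev(ratings),      # sample SD (n - 1 denominator)
        "median": statistics.median(ratings),
        "mode": statistics.mode(ratings),     # first mode encountered if tied (Python 3.8+)
    }


def pearson_r(x: list[int], y: list[int]) -> float:
    """Pearson correlation; raises ZeroDivisionError if either list is constant."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def pairwise_correlations(columns: dict[str, list[int]]) -> dict[tuple[str, str], float]:
    """Pearson r for every unordered pair of questions."""
    return {(a, b): pearson_r(columns[a], columns[b])
            for a, b in combinations(columns, 2)}


# Hypothetical 1-5 star ratings from five respondents (NOT the study's data);
# "Q10" stands for the overall-satisfaction question.
columns = {
    "Q1": [4, 5, 3, 4, 4],
    "Q2": [4, 4, 3, 4, 5],
    "Q10": [5, 5, 3, 4, 4],
}

for name, ratings in columns.items():
    print(name, describe(ratings))

corr = pairwise_correlations(columns)
print("lowest r:", min(corr.values()), "highest r:", max(corr.values()))
```

With the full survey, `columns` would hold all ten questions, giving 45 question pairs whose minimum and maximum correspond to the range of r values reported above.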

The findings of this study are equally relevant to other delivery modes in higher education, including distance learning and remote learning. By assessing students’ perceptions using a survey instrument such as the one proposed in this study, one can ascertain how satisfied students are with the learning and engagement in their study, and also identify the key factors that may affect students’ overall satisfaction under a particular delivery mode. In turn, we anticipate that the results reported here will add to the higher education community’s body of knowledge on the impact of innovative teaching and delivery approaches on students’ satisfaction with their learning and engagement after COVID-19.
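To illustrate how such key factors might be identified, the sketch below fits a multiple linear regression of an overall-satisfaction score on predictor questions by solving the normal equations with Gauss-Jordan elimination, using only the Python standard library. It is a hypothetical, minimal sketch: the paper does not state which software was used, the toy data use two predictors instead of nine, and the function name `ols_coefficients` is illustrative. It returns coefficients only; the significance test (p < 0.05) used in the study additionally requires standard errors and the t distribution.

```python
def ols_coefficients(X: list[list[float]], y: list[float]) -> list[float]:
    """Least-squares coefficients [b0, b1, ..., bk] for y = b0 + b1*x1 + ... + bk*xk.

    Solves the normal equations (X^T X) b = X^T y by Gauss-Jordan elimination
    with partial pivoting. Raises ValueError if the predictors are collinear.
    """
    rows = [[1.0, *map(float, r)] for r in X]  # prepend an intercept column
    k = len(rows[0])
    # Augmented matrix [X^T X | X^T y].
    a = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))]
         for i in range(k)]
    for col in range(k):
        # Partial pivoting: move the row with the largest entry in this column up.
        pivot = max(range(col, k), key=lambda row: abs(a[row][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear: X^T X is singular")
        a[col], a[pivot] = a[pivot], a[col]
        # Eliminate this column from every other row.
        for row in range(k):
            if row != col:
                factor = a[row][col] / a[col][col]
                for c in range(col, k + 1):
                    a[row][c] -= factor * a[col][c]
    return [a[i][k] / a[i][i] for i in range(k)]


# Hypothetical toy data (NOT the study's data): two predictor questions per
# respondent and an overall score constructed as 1 + 2*x1 + 3*x2, so the fit
# should recover those coefficients.
X = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1]]
y = [1, 3, 4, 6, 8]
print(ols_coefficients(X, y))
```

In practice a statistics package would usually be used instead; for example, statsmodels’ `sm.OLS(y, sm.add_constant(X)).fit()` reports the coefficients together with their p-values (`.pvalues`), which is what a significance threshold such as p < 0.05 is applied to.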