Self-Regulated Learning Strategies as Predictors of Perceived Learning Gains among Undergraduate Students in Ethiopian Universities

Abstract

1. Introduction

- What is the state of SRLSs and perceived learning among undergraduate students in Ethiopia?

- To what extent do the SRLS components relate to one another as perceived by undergraduate students in the context of Ethiopian universities?

- Do the SRLS components predict university students’ perceived learning in Ethiopian universities after accounting for the control factors?

2. Materials and Methods

2.1. Study Design

2.2. Theoretical Framework and Empirical Background

2.3. Participants of the Study

2.4. Data Collection Instrument and Validity Evidence

2.5. Data Analysis Methods

2.6. Preliminary Analyses

3. Results

3.1. Demographic Information and Descriptive Analyses Results

Demographic Information about the Student Participants

3.2. Results of Descriptive Statistics for the SRL Components and Perceived Learning Gains

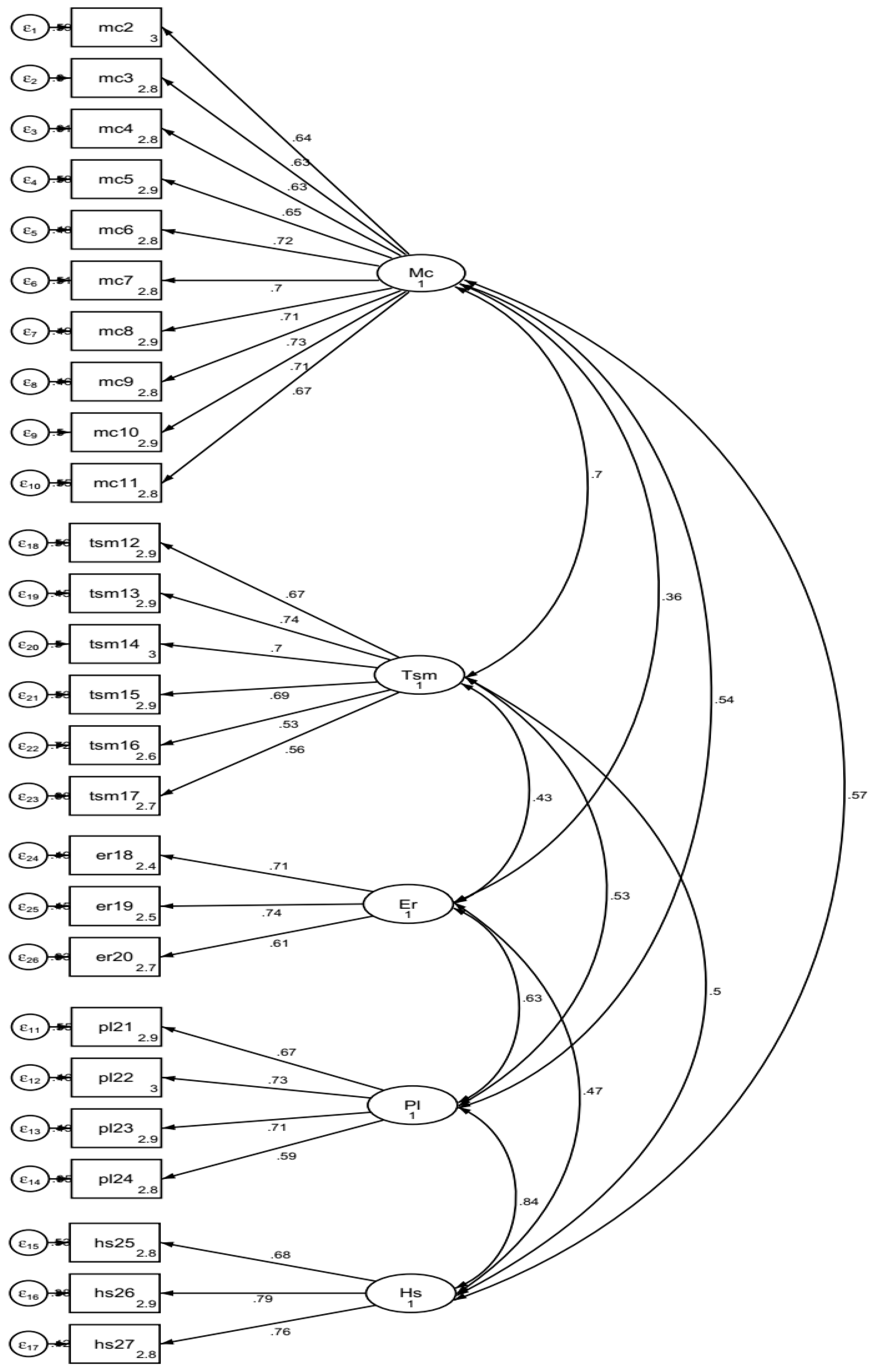

3.3. Results of the SRLS’s Factor Structure and Model Fit Using SEM

3.4. Results of Hierarchical Multiple Regression Analyses

4. Discussion

Study Limitations

5. Conclusions and Implications

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Independent Variables | Gains in Personal and Social Development: Parr Corr. | Gains in Personal and Social Development: p-Value | Gains in General Education: Parr Corr. | Gains in General Education: p-Value | Gains in Practical Competence: Parr Corr. | Gains in Practical Competence: p-Value |
|---|---|---|---|---|---|---|
| University | −0.027 | 0.368 | −0.035 | 0.238 | −0.039 | 0.195 |
| Major Field | −0.042 | 0.162 | −0.058 | 0.051 | −0.056 | 0.060 |
| Gender | −0.021 | 0.471 | −0.028 | 0.344 | −0.042 | 0.159 |
| Age | 0.007 | 0.811 | 0.015 | 0.609 | 0.015 | 0.619 |
| Resident | −0.070 | 0.018 * | −0.074 | 0.012 * | −0.075 | 0.012 * |
| EPSCE | 0.010 | 0.739 | 0.007 | 0.824 | 0.013 | 0.669 |
| Class Year | −0.083 | 0.005 * | −0.085 | 0.004 * | −0.080 | 0.007 * |
| Attendance | 0.092 | 0.002 * | 0.079 | 0.008 * | 0.065 | 0.030 * |
| Study hours | 0.013 | 0.669 | 0.020 | 0.494 | 0.009 | 0.761 |
| Academic preparation | 0.129 | 0.000 * | 0.132 | 0.000 * | 0.116 | 0.000 * |

| Characteristic | Category | Frequency | Percent |
|---|---|---|---|
| University | Jimma | 595 | 52.1 |
| | Mizan-Tipe | 325 | 28.5 |
| | Metu | 222 | 19.4 |
| College attended | Engineering | 638 | 55.9 |
| | BECO | 504 | 44.1 |
| Gender | Female | 442 | 38.7 |
| | Male | 700 | 61.3 |
| Age | 16–19 | 147 | 12.9 |
| | 20–22 | 601 | 52.6 |
| | 23–24 | 228 | 20.0 |
| | 25–39 | 166 | 14.5 |
| Resident status | Living on campus | 321 (female); 589 (male) | 73% (female); 84% (male) |
| | Living off campus | 121 (female); 111 (male) | 27% (female); 16% (male) |
| Class year | 1st Year | 310 | 27.1 |
| | 2nd Year | 238 | 20.8 |
| | 3rd Year | 426 | 37.3 |
| | 4th and 5th Year ¹ | 168 | 14.7 |
| Student attendance rate | Less than 50% | 106 | 9.28 |
| | 51–74% | 249 | 21.80 |
| | 75–94% | 326 | 28.55 |
| | 95–100% | 461 | 40.37 |
| Academic preparation | Not prepared | 189 | 16.55 |
| | Somewhat prepared | 502 | 43.96 |
| | Prepared | 451 | 39.49 |

| Variable | Observations | Mean | Standard Deviation | Items in Component | Cronbach’s α |
|---|---|---|---|---|---|
| Metacognition | 1142 | 2.60 | 0.66 | 10 | 0.90 |
| Time and study management | 1142 | 2.62 | 0.67 | 6 | 0.81 |
| Effort regulation | 1142 | 2.38 | 0.75 | 3 | 0.72 |
| Peer learning | 1142 | 2.56 | 0.68 | 4 | 0.76 |
| Help seeking | 1142 | 2.60 | 0.78 | 3 | 0.78 |
| Total (SRL components) | | | | 26 | 0.92 |
| Gains in personal and social development | 1142 | 2.78 | 0.72 | 6 | 0.86 |
| Gains in general education | 1142 | 2.78 | 0.79 | 3 | 0.82 |
| Gains in practical competence | 1142 | 2.72 | 0.77 | 4 | 0.83 |
| Total (perceived learning gains) | | | | 13 | 0.93 |
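The reliability column in the table above reports Cronbach's α for each scale. As a reminder of how such a coefficient is obtained, the following is a minimal Python sketch using the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the summed score). It is not the authors' analysis code: the function name `cronbach_alpha` and the `toy_responses` data are hypothetical and serve only as illustration.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_variances = [variance([row[j] for row in responses]) for j in range(k)]
    # Sample variance of each respondent's summed scale score.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical answers of five respondents to a three-item scale (ratings 1-4).
# These numbers are invented for illustration and are not study data.
toy_responses = [
    [1, 2, 1],
    [2, 2, 3],
    [3, 3, 3],
    [4, 3, 4],
    [2, 1, 2],
]
alpha = cronbach_alpha(toy_responses)
print(f"alpha = {alpha:.2f}")
```

Applied to the real item-level data, the same calculation would be run once per component (for example, on the 10 metacognition items) and once on all items of a scale to obtain the totals.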

| Predictor | General Education: B | General Education: β | Personal and Social Development: B | Personal and Social Development: β | Practical Competence: B | Practical Competence: β |
|---|---|---|---|---|---|---|
| Step One | | | | | | |
| Constant | −0.18 | | −0.17 | | −0.09 | |
| Resident | −0.12 | −0.08 ** | −0.13 | −0.08 ** | −0.12 | −0.08 ** |
| Class year | −0.06 | −0.10 ** | −0.06 | −0.09 ** | −0.05 | −0.09 ** |
| Attendance rate | 0.07 | 0.11 *** | 0.06 | 0.10 ** | 0.04 | 0.08 * |
| Academic preparation | 0.11 | 0.13 *** | 0.12 | 0.13 *** | 0.10 | 0.12 *** |
| Adjusted R² | 0.06 | | 0.06 | | 0.05 | |
| F | 200.33 | | 190.65 | | 140.87 | |
| Step Two | | | | | | |
| Constant | −0.04 | | −0.01 | | 0.06 | |
| Resident | −0.07 | −0.04 | −0.08 | −0.05 * | −0.07 | −0.05 * |
| Class year | −0.02 | −0.03 | −0.01 | −0.02 | −0.01 | −0.02 |
| Attendance rate | 0.01 | 0.01 | −0.00 | −0.00 | −0.02 | −0.03 |
| Academic preparation | 0.06 | 0.07 ** | 0.06 | 0.07 ** | 0.04 | 0.05 * |
| Metacognition | 0.03 | 0.03 | 0.00 | 0.00 | 0.00 | 0.01 |
| Time and study management | 0.11 | 0.10 ** | 0.14 | 0.12 ** | 0.15 | 0.14 *** |
| Effort regulation | 0.05 | 0.04 | 0.01 | 0.01 | 0.01 | 0.01 |
| Peer learning | 0.07 | 0.06 | 0.21 | 0.18 * | 0.21 | 0.20 * |
| Help seeking | 0.51 | 0.47 *** | 0.43 | 0.39 *** | 0.34 | 0.35 *** |
| Adjusted R² | 0.44 | | 0.45 | | 0.43 | |
| ΔR² | 0.38 | | 0.39 | | 0.38 | |
| ΔF | 780.98 | | 860.19 | | 790.83 | |
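The regression table above summarises each step with an adjusted R², and the second step with a change in R² and a change in F. For readers unfamiliar with these quantities, here is a minimal Python sketch of the textbook formulas for hierarchical (nested) regression models. It is an illustration only, not the authors' procedure: the function names and all input values are hypothetical, and the sketch works from unadjusted R² values, which the table does not report.

```python
def adjusted_r2(r2, n, k):
    """Adjusted R-squared for a model with k predictors fitted to n cases."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def f_change(r2_reduced, r2_full, n, k_reduced, k_full):
    """F statistic for the R-squared gain when predictors are added to a model.

    r2_reduced / k_reduced describe the earlier step (control variables only);
    r2_full / k_full describe the later step (controls plus added predictors).
    """
    added = k_full - k_reduced      # numerator degrees of freedom
    df_residual = n - k_full - 1    # denominator degrees of freedom
    return ((r2_full - r2_reduced) / added) / ((1 - r2_full) / df_residual)


# Hypothetical example: 100 cases, 2 control variables in step one,
# 2 further predictors added in step two. Values are invented for illustration.
step_one_r2, step_two_r2 = 0.10, 0.30
delta_r2 = step_two_r2 - step_one_r2
delta_f = f_change(step_one_r2, step_two_r2, n=100, k_reduced=2, k_full=4)
print(f"delta R2 = {delta_r2:.2f}, delta F = {delta_f:.2f}")
print(f"adjusted R2 (step two) = {adjusted_r2(step_two_r2, n=100, k=4):.3f}")
```

The change in F tests whether the block of predictors entered at the second step explains variance beyond what the control variables already account for.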

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tadesse, T.; Asmamaw, A.; Getachew, K.; Ferede, B.; Melese, W.; Siebeck, M.; Fischer, M.R. Self-Regulated Learning Strategies as Predictors of Perceived Learning Gains among Undergraduate Students in Ethiopian Universities. Educ. Sci. 2022, 12, 468. https://doi.org/10.3390/educsci12070468
