Investigating Pre-Service Biology Teachers’ Diagnostic Competences: Relationships between Professional Knowledge, Diagnostic Activities, and Diagnostic Accuracy

Abstract

1. Introduction

1.1. Diagnostic Competences as a Specification of Professional Competence

1.2. Three Components of Diagnostic Competence

1.2.1. Professional Knowledge

1.2.2. Diagnostic Activities

1.2.3. Diagnostic Accuracy

1.3. Empirical Evidence on the Relationships between the Components of Diagnostic Competences

1.4. Video-Based Assessment of Diagnostic Competences

1.5. Summary

2. Aims and Hypotheses

How do the different knowledge facets PCK, CK, and PK relate to diagnostic activities and diagnostic accuracy?

3. Materials and Methods

3.1. Design and Sample

3.2. Measurements

3.2.1. Professional Knowledge Tests

3.2.2. Video-Based Assessment Tool DiKoBi Assess

Measuring Diagnostic Activities

- (1) level of students’ cognitive activities and creation of situational interest,
- (2) dealing with (specific) student ideas and errors,
- (3) use of technical language,
- (4) use of experiments,
- (5) use of models,
- (6) conceptual instruction.

Calculating Diagnostic Accuracy

3.3. Data Analysis

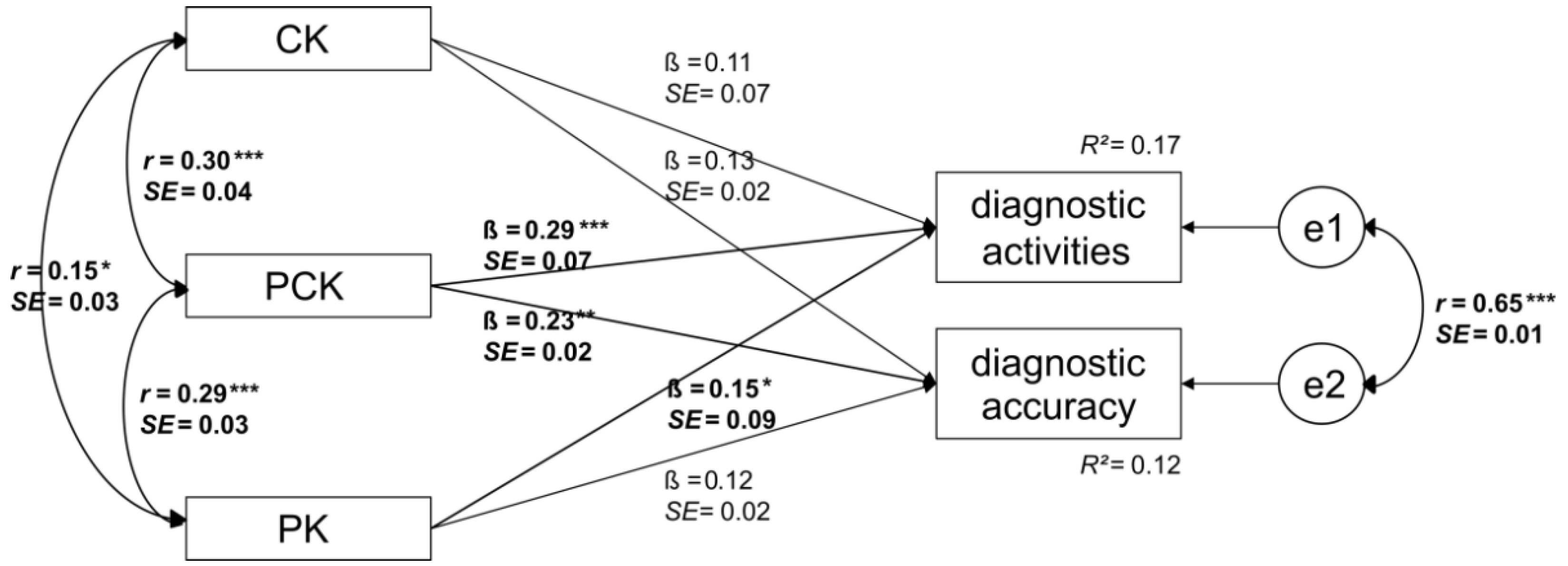

4. Results

5. Discussion

6. Implications and Further Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Coding Procedure for Diagnostic Activities

| Task | Code | Label | Description | Example |
|---|---|---|---|---|
| Describe | −99 | Missing | No answer was given. | - |
| | 0 | Not accurate | The given description is not accurate. | Introduction with media. |
| | 1 | Unsystematic, incomplete description | Non-specific, superficial description of the identified event; weak/no focus on specific details | Repetition very short. |
| | 2 | Systematic, complete description | Several details of the identified event are described with attributes; a strong focus on specific details (visible in the video) | The students should briefly repeat what was discussed last week, but only superficial, general terms are discussed. |
| Explain | −99 | Missing | No answer was given. | - |
| | 0 | Not accurate | The given explanation is not accurate. | Presence of the teacher is minimized -less activating and motivating -room use is almost not given. |
| | 1 | Empty phrase | The statement is more of an everyday phrase than an explanation, partly meaningless. | It doesn’t really make sense to say we repeat the last lesson and then stop after two aspects. |
| | 2 | Simple reference to concepts/theories | Appropriate to or based on the corresponding description, the subject-specific pedagogical theory is named as a keyword or embedded as a phrase in a sentence. | The teachers’ questions do not allow for cognitive activation. |
| | 3 | Comprehensive explanation | Observation and theory are related to each other. | Calling one student is not enough, The teacher neither asked for explanations nor did she engage the students to recognize or call on conceptual connections. The activation of prior knowledge could be extended to activate the students more deeply. |
| Alternative Strategy | −99 | Missing | No answer was given. | - |
| | 0 | Not accurate | An alternative strategy is missing or the statement contains a suggested strategy that does not make sense with regard to the context. | To handle the whiteboard; best if the room is empty try it out and see how it moves. |
| | 1 | Just one alternative (described non-specific) | One alternative strategy is described. The description is rather general. Concrete examples/references are missing. | Introducing the lesson with a close-up of the skin that arouses interest. |
| | 2 | Several alternatives (described in detail and with examples) | Several appropriate suggestions for improvement are described with concrete examples and references; it is explained to what extent the alternative strategy improves what has been criticized before. | Promoting the motivation of the students by using different skin types (elephant skin, crocodile skin), activating prior knowledge (e.g., describing similarities/differences of the different skin types), asking for students’ prior experiences (e.g., experiments on the sense of feeling/touch). |
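The coding scheme in the table above can also be written down as a small lookup structure, which may help when checking coded responses for values outside the scheme. The following is a minimal sketch in Python and not the authors' procedure: the names `CODE_LABELS`, `validate_code`, and `label_for` are hypothetical, and mapping the missing code −99 to `None` (rather than scoring it as 0) is an assumption made only for illustration.

```python
from typing import Optional

MISSING = -99  # code listed in the table for "No answer was given."

# Non-missing codes and their labels per task, copied from the coding table.
CODE_LABELS = {
    "describe": {
        0: "Not accurate",
        1: "Unsystematic, incomplete description",
        2: "Systematic, complete description",
    },
    "explain": {
        0: "Not accurate",
        1: "Empty phrase",
        2: "Simple reference to concepts/theories",
        3: "Comprehensive explanation",
    },
    "alternative_strategy": {
        0: "Not accurate",
        1: "Just one alternative (described non-specific)",
        2: "Several alternatives (described in detail and with examples)",
    },
}


def validate_code(task: str, code: int) -> Optional[int]:
    """Return the code if it belongs to the scheme for this task.

    The missing marker is returned as None (an assumption of this sketch);
    any other value outside the scheme raises ValueError.
    """
    if code == MISSING:
        return None
    if code not in CODE_LABELS[task]:
        raise ValueError(f"code {code} is not defined for task '{task}'")
    return code


def label_for(task: str, code: int) -> str:
    """Return the label that the coding table assigns to a code."""
    if validate_code(task, code) is None:
        return "Missing"
    return CODE_LABELS[task][code]
```

For example, `label_for("explain", 3)` returns the label of the highest explanation code, while `validate_code("describe", 3)` raises an error because the Describe task only runs from 0 to 2.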

References

- Blömeke, S.; Kaiser, G.; Clarke, D. Preface for the Special Issue on “Video-Based Research on Teacher Expertise”. Int. J. Sci. Math. Educ. 2015, 13, 257–266.

- Kaiser, G.; Busse, A.; Hoth, J.; König, J.; Blömeke, S. About the Complexities of Video-Based Assessments: Theoretical and Methodological Approaches to Overcoming Shortcomings of Research on Teachers’ Competence. Int. J. Sci. Math. Educ. 2015, 13, 369–387.

- Klug, J.; Bruder, S.; Kelava, A.; Spiel, C.; Schmitz, B. Diagnostic competence of teachers: A process model that accounts for diagnosing learning behavior tested by means of a case scenario. Teach. Teach. Educ. 2013, 30, 38–46.

- Heitzmann, N.; Seidel, T.; Opitz, A.; Hetmanek, A.; Wecker, C.; Fischer, M.R.; Ufer, S.; Schmidmaier, R.; Neuhaus, B.J.; Siebeck, M.; et al. Facilitating Diagnostic Competences in Simulations: A Conceptual Framework and a Research Agenda for Medical and Teacher Education. Frontline Learn. Res. 2019, 7, 1–24.

- Brunner, M.; Anders, Y.; Hachfeld, A.; Krauss, S. The Diagnostic Skills of Mathematics Teachers. In Cognitive Activation in the Mathematics Classroom and Professional Competence of Teachers: Results from the COACTIV Project; Kunter, M., Baumert, J., Blum, W., Klusmann, U., Krauss, S., Neubrand, M., Eds.; Springer: New York, NY, USA, 2013; pp. 229–248. ISBN 978-1-4614-5148-8.

- Hoth, J.; Döhrmann, M.; Kaiser, G.; Busse, A.; König, J.; Blömeke, S. Diagnostic competence of primary school mathematics teachers during classroom situations. ZDM Math. Educ. 2016, 48, 41–53.

- Klug, J.; Bruder, S.; Schmitz, B. Which variables predict teachers diagnostic competence when diagnosing students’ learning behavior at different stages of a teacher’s career? Teach. Teach. 2016, 22, 461–484.

- Sekretariat der Ständigen Konferenz der Kultusminister der Länder in der Bundesrepublik Deutschland (KMK). Ländergemeinsame Inhaltliche Anforderungen für die Fachwissenschaften und Fachdidaktiken in der Lehrerbildung [Common State Requirements for the Professional Disciplines and Subject Didactics in Teacher Education]. 2008. Available online: https://www.kmk.org/fileadmin/veroeffentlichungen_beschluesse/2008/2008_10_16-Fachprofile-Lehrerbildung.pdf (accessed on 23 February 2021).

- Herppich, S.; Praetorius, A.-K.; Förster, N.; Glogger-Frey, I.; Karst, K.; Leutner, D.; Behrmann, L.; Böhmer, M.; Ufer, S.; Klug, J.; et al. Teachers’ assessment competence: Integrating knowledge-, process-, and product-oriented approaches into a competence-oriented conceptual model. Teach. Teach. Educ. 2018, 76, 181–193.

- Karst, K.; Schoreit, E.; Lipowsky, F. Diagnostische Kompetenzen von Mathematiklehrern und ihr Vorhersagewert für die Lernentwicklung von Grundschulkindern [Diagnostic Competencies of Math Teachers and Their Impact on Learning Development of Elementary School Children]. Z. Für Pädagogische Psychol. 2014, 28, 237–248.

- Steffensky, M.; Gold, B.; Holdynski, M.; Möller, K. Professional Vision of Classroom Management and Learning Support in Science Classrooms—Does Professional Vision Differ Across General and Content-Specific Classroom Interactions? Int. J. Sci. Math. Educ. 2015, 13, 351–368.

- Tolsdorf, Y.; Markic, S. Exploring Chemistry Student Teachers’ Diagnostic Competence—A Qualitative Cross-Level Study. Educ. Sci. 2017, 7, 86.

- Ohle, A.; McElvany, N.; Horz, H.; Ullrich, M. Text-picture integration—Teachers’ attitudes, motivation and self-related cognitions in diagnostics. J. Educ. Res. Online 2015, 7, 11–33.

- Schrader, F.-W. Diagnostische Kompetenz von Lehrpersonen [Teacher Diagnosis and Diagnostic Competence]. Beiträge Zur Lehr. 2013, 31, 154–165.

- Artelt, C.; Rausch, T. Accuracy of teacher judgments: When and for What Reasons? In Teacher’s Professional Development: Assessment, Training, and Learning. The Future of Education Research; Krolak-Schwerdt, S., Glock, S., Böhmer, M., Eds.; Sense Publishers: Rotterdam, The Netherlands, 2014; pp. 27–43.

- Südkamp, A.; Kaiser, J.; Möller, J. Accuracy of teachers’ judgments of students’ academic achievement: A meta-analysis. J. Educ. Psychol. 2012, 104, 743–762.

- Wildgans-Lang, A.; Scheuerer, S.; Obersteiner, A.; Fischer, F.; Reiss, K. Analyzing prospective mathematics teachers’ diagnostic processes in a simulated environment. ZDM Math. Educ. 2020, 57, 175.

- Bromme, R. Kompetenzen, Funktionen und unterrichtliches Handeln des Lehrers [Competencies, functions and instructional behavior of the teacher]. In Psychologie des Unterrichts und der Schule [Psychology of Teaching and School]; Weinert, F.E., Ed.; Hogrefe: Göttingen, Germany, 1997; pp. 177–212.

- Kaiser, G.; Blömeke, S.; König, J.; Busse, A.; Döhrmann, M.; Hoth, J. Professional competencies of (prospective) mathematics teachers—Cognitive versus situated approaches. Educ. Stud. Math. 2017, 94, 161–184.

- Depaepe, F.; Verschaffel, L.; Kelchtermans, G. Pedagogical content knowledge: A systematic review of the way in which the concept has pervaded mathematics educational research. Teach. Teach. Educ. 2013, 34, 12–25.

- Hoth, J.; Kaiser, G.; Döhrmann, M.; König, J.; Blömeke, S. A Situated Approach to Assess Teachers’ Professional Competencies Using Classroom Videos. In Mathematics Teachers Engaging with Representations of Practice: A Dynamically Evolving Field; Buchbinder, O., Kuntze, S., Eds.; ICME-13 Monographs; Springer: Berlin/Heidelberg, Germany, 2018; pp. 23–45.

- Kersting, N.B.; Givvin, K.B.; Thompson, B.J.; Santagata, R.; Stigler, J.W. Measuring Usable Knowledge: Teachers’ Analyses of Mathematics Classroom Videos Predict Teaching Quality and Student Learning. Am. Educ. Res. J. 2012, 49, 568–589.

- Shulman, L.S. Those who understand: Knowledge growth in teaching. Educ. Res. 1986, 15, 4–14.

- Shulman, L.S. Knowledge and teaching: Foundations of the new reform. Harvard Educ. Rev. 1987, 57, 1–22.

- König, J.; Blömeke, S.; Klein, P.; Suhl, U.; Busse, A.; Kaiser, G. Is teachers’ general pedagogical knowledge a premise for noticing and interpreting classroom situations? A video-based assessment approach. Teach. Teach. Educ. 2014, 38, 76–88.

- Voss, T.; Kunter, M.; Baumert, J. Assessing teacher candidates’ general pedagogical/psychological knowledge: Test construction and validation. J. Educ. Psychol. 2011, 103, 952–969.

- Ball, D.L.; Thames, M.H.; Phelps, G. Content Knowledge for Teaching: What Makes It Special? J. Teach. Educ. 2008, 59, 389–407.

- Gess-Newsome, J. A model of professional knowledge and skill including PCK: Results of the thinking from the PCK Summit. In Re-Examining Pedagogical Content Knowledge in Science Education; Berry, A., Friedrichsen, P., Loughran, J., Eds.; Routledge: New York, NY, USA, 2015; pp. 28–42.

- Schmelzing, S.; Van Driel, J.; Jüttner, M.; Brandenbusch, S.; Sandmann, A.; Neuhaus, B.J. Development, evaluation, and validation of a paper-and-pencil test for measuring two components of biology teachers’ pedagogical content knowledge concerning the ‘cardiovascular system’. Int. J. Sci. Math. Educ. 2013, 11, 1369–1390.

- Fischer, H.E.; Borowski, A.; Tepner, O. Professional Knowledge of Science Teachers. In Second International Handbook of Science Education; Fraser, B.J., Tobin, K., McRobbie, C.J., Eds.; Springer: Dordrecht, The Netherlands, 2012; pp. 435–448. ISBN 978-1-4020-9040-0.

- Baumert, J.; Kunter, M. The Effect of Content Knowledge and Pedagogical Content Knowledge on Instructional Quality and Student Achievement. In Cognitive Activation in the Mathematics Classroom and Professional Competence of Teachers: Results from the COACTIV Project; Kunter, M., Baumert, J., Blum, W., Klusmann, U., Krauss, S., Neubrand, M., Eds.; Springer: New York, NY, USA, 2013; pp. 175–205. ISBN 978-1-4614-5148-8.

- Hill, H.C.; Schilling, S.G.; Ball, D.L. Developing Measures of Teachers’ Mathematics Knowledge for Teaching. Elem. Sch. J. 2004, 105, 11–30.

- Baumert, J.; Kunter, M.; Blum, W.; Brunner, M.; Voss, T.; Jordan, A.; Klusmann, U.; Krauss, S.; Neubrand, M.; Tsai, Y.-M. Teachers’ Mathematical Knowledge, Cognitive Activation in the Classroom, and Student Progress. Am. Educ. Res. J. 2010, 47, 133–180.

- Förtsch, C.; Werner, S.; von Kotzebue, L.; Neuhaus, B.J. Effects of biology teachers’ professional knowledge and cognitive activation on students’ achievement. Int. J. Sci. Educ. 2016, 38, 2642–2666.

- Kulgemeyer, C.; Riese, J. From professional knowledge to professional performance: The impact of CK and PCK on teaching quality in explaining situations. J. Res. Sci. Teach. 2018, 55, 1393–1418.

- Kleickmann, T.; Tröbst, S.; Heinze, A.; Bernholt, A.; Rink, R.; Kunter, M. Teacher Knowledge Experiment: Conditions of the Development of Pedagogical Content Knowledge. In Competence Assessment in Education: Research, Models and Instruments; Leutner, D., Fleischer, J., Grünkorn, J., Klieme, E., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 111–129. ISBN 978-3-319-50028-7.

- Alonzo, A.C.; Kim, J. Affordances of video-based professional development for supporting physics teachers’ judgments about evidence of student thinking. Teach. Teach. Educ. 2018, 76, 283–297.

- Meschede, N.; Fiebranz, A.; Möller, K.; Steffensky, M. Teachers’ professional vision, pedagogical content knowledge and beliefs: On its relation and differences between pre-service and in-service teachers. Teach. Teach. Educ. 2017, 66, 158–170.

- Sorge, S.; Stender, A.; Neumann, K. The Development of Science Teachers’ Professional Competence. In Repositioning Pedagogical Content Knowledge in Teachers’ Knowledge for Teaching Science; Hume, A., Cooper, R., Borowski, A., Eds.; Springer: Singapore, 2019; pp. 149–164.

- Codreanu, E.; Sommerhoff, D.; Huber, S.; Ufer, S.; Seidel, T. Between authenticity and cognitive demand: Finding a balance in designing a video-based simulation in the context of mathematics teacher education. Teach. Teach. Educ. 2020, 95.

- Seidel, T.; Stürmer, K. Modeling and measuring the structure of professional vision in preservice teachers. Am. Educ. Res. J. 2014, 51, 739–771.

- Sherin, M.G.; van Es, E.A. Effects of Video Club Participation on Teachers’ Professional Vision. J. Teach. Educ. 2009, 60, 20–37.

- van Es, E.A.; Sherin, M.G. Learning to notice: Scaffolding new teachers’ interpretations of classroom interactions. J. Technol. Teach. 2002, 10, 571–596.

- Ingenkamp, K.; Lissmann, U. Lehrbuch der Pädagogischen Diagnostik [Textbook of Pedagogical Diagnostics], 6th ed.; Beltz: Weinheim, Germany, 2008; ISBN 3407255039.

- Fischer, F.; Kollar, I.; Ufer, S.; Sodian, B.; Hussmann, H.; Pekrun, R.; Neuhaus, B.J.; Dorner, B.; Pankofer, S.; Fischer, M.R.; et al. Scientific reasoning and argumentation: Advancing an interdisciplinary research agenda in education. Frontline Learn. Res. 2014, 5, 28–45.

- Lai, M.K.; Schildkamp, K. In-service Teacher Professional Learning: Use of Assessment in Data-based Decision-making. In Handbook of Human and Social Conditions in Assessment; Brown, G., Ed.; Routledge: Abingdon, UK, 2016; pp. 77–94. ISBN 9781315749136.

- Barendsen, E.; Henze, I. Relating Teacher PCK and Teacher Practice Using Classroom Observation. Res. Sci. Educ. 2019, 49, 1141–1175.

- Lehane, L.; Bertram, A. Getting to the CoRe of it: A review of a specific PCK conceptual lens in science educational research. Educ. Química 2016, 27, 52–58.

- Sherin, M.G.; Russ, R.S.; Sherin, B.L.; Colestock, A. Professional Vision in Action: An Exploratory Study. Issues Teach. Educ. 2008, 17, 27–46.

- McElvany, N.; Schroeder, S.; Hachfeld, A.; Baumert, J.; Richter, T.; Schnotz, W.; Horz, H.; Ullrich, M. Diagnostische Fähigkeiten von Lehrkräften [Diagnostic skills of teachers]. Z. Für Pädagogische Psychol. 2009, 23, 223–235.

- Helmke, A.; Schrader, F.-W. Interactional effects of instructional quality and teacher judgment accuracy on achievement. Teach. Teach. Educ. 1987, 3, 91–98.

- Carter, K.; Cushing, K.; Sabers, D.; Stein, P.; Berliner, D.C. Expert-Novice Differences in Perceiving and Processing Visual Classroom Information. J. Teach. Educ. 1988, 39, 25–31.

- Barth, V.L.; Piwowar, V.; Kumschick, I.R.; Ophardt, D.; Thiel, F. The impact of direct instruction in a problem-based learning setting. Effects of a video-based training program to foster preservice teachers’ professional vision of critical incidents in the classroom. Int. J. Educ. Res. 2019, 95, 1–12.

- Kramer, M.; Förtsch, C.; Stürmer, J.; Förtsch, S.; Seidel, T.; Neuhaus, B.J. Measuring biology teachers’ professional vision: Development and validation of a video-based assessment tool. Cogent Educ. 2020, 7.

- Todorova, M.; Sunder, C.; Steffensky, M.; Möller, K. Pre-service teachers’ professional vision of instructional support in primary science classes: How content-specific is this skill and which learning opportunities in initial teacher education are relevant for its acquisition? Teach. Teach. Educ. 2017, 68, 275–288.

- Blömeke, S.; Busse, A.; Kaiser, G.; König, J.; Suhl, U. The relation between content-specific and general teacher knowledge and skills. Teach. Teach. Educ. 2016, 56, 35–46.

- Kersting, N.B.; Givvin, K.B.; Sotelo, F.L.; Stigler, J.W. Teachers’ Analyses of Classroom Video Predict Student Learning of Mathematics: Further Explorations of a Novel Measure of Teacher Knowledge. J. Teach. Educ. 2010, 61, 172–181.

- Kaiser, J.; Helm, F.; Retelsdorf, J.; Südkamp, A.; Möller, J. Zum Zusammenhang von Intelligenz und Urteilsgenauigkeit bei der Beurteilung von Schülerleistungen im Simulierten Klassenraum [On the Relation of Intelligence and Judgment Accuracy in the Process of Assessing Student Achievement in the Simulated Classroom]. Z. Für Pädagogische Psychol. 2012, 26, 251–261.

- Machts, N.; Kaiser, J.; Schmidt, F.T.C.; Möller, J. Accuracy of teachers’ judgments of students’ cognitive abilities: A meta-analysis. Educ. Res. Rev. 2016, 19, 85–103.

- de Bruin, A.B.; Schmidt, H.G.; Rikers, R.M.J.P. The role of basic science knowledge and clinical knowledge in diagnostic reasoning: A structural equation modeling approach. Acad. Med. 2005, 80, 765–773.

- Braun, L.T.; Zottmann, J.M.; Adolf, C.; Lottspeich, C.; Then, C.; Wirth, S.; Fischer, M.R.; Schmidmaier, R. Representation scaffolds improve diagnostic efficiency in medical students. Med. Educ. 2017, 51, 1118–1126.

- Berliner, D.C. Learning about and learning from expert teachers. Int. J. Educ. Res. 2001, 35, 463–482.

- Beck, E.; Baer, M.; Guldimann, T.; Bischoff, S.; Brühwiler, C.; Müller, P.; Niedermann, R.; Rogalla, M.; Vogt, F. Adaptive Lehrkompetenz. Analyse und Struktur, Veränderbarkeit und Wirkung Handlungssteuernden Lehrerwissens [Adaptive Teaching Competence. Analysis and Structure, Changeability and Effect of Action-Guiding Teacher Knowledge]; Waxmann: Münster, Germany, 2008.

- Brühwiler, C. Adaptive Lehrkompetenz und Schulisches Lernen. Effekte handlungssteuernder Kognitionen von Lehrpersonen auf Unterrichtsprozesse und Lernergebnisse der Schülerinnen und Schüler [Adaptive Teaching Competence and School-Based Learning. Effects of Teachers’ Action-Guiding Cognitions on Instructional Processes and Student Learning Outcomes]; Waxmann: Münster, Germany, 2014.

- Behling, F.; Förtsch, C.; Neuhaus, B.J. Sprachsensibler Biologieunterricht–Förderung professioneller Handlungskompetenz und professioneller Wahrnehmung durch videogestützte live-Unterrichtsbeobachtung. Eine Projektbeschreibung [Language-sensitive Biology Instruction–Fostering Professional Competence and Professional Vision Through Video-based Live Lesson Observation. A Project Description]. Z. Didakt. Naturwissenschaften 2019, 42, 271.

- Goldman, E.; Barron, L. Using Hypermedia to Improve the Preparation of Elementary Teachers. J. Teach. Educ. 1990, 41, 21–31.

- Grossman, P.; Compton, C.; Igra, D.; Ronfeldt, M.; Shahan, E.; Williamson, P.W. Teaching Practice: A Cross-Professional Perspective. Teach. Coll. Rec. 2009, 111, 2055–2100.

- Wedel, A.; Müller, C.R.; Pfetsch, J.; Ittel, A. Training teachers’ diagnostic competence with problem-based learning: A pilot and replication study. Teach. Teach. Educ. 2019, 86, 1–14.

- Wiens, P.D.; Beck, J.S.; Lunsmann, C.J. Assessing teacher pedagogical knowledge: The Video Assessment of Teacher Knowledge (VATK). Educ. Stud. 2020, 1–17.

- Ohle, A.; McElvany, N. Teacher diagnostic competences and their practical relevance. Special issue editorial. J. Educ. Res. Online 2015, 7, 5–10.

- Spector, P.E. Do Not Cross Me: Optimizing the Use of Cross-Sectional Designs. J. Bus. Psychol. 2019, 34, 125–137.

- Südkamp, A.; Praetorius, A.-K. (Eds.) Diagnostische Kompetenz von Lehrkräften; Waxmann: Münster, Germany, 2017.

- Cortina, K.S.; Thames, M.H. Teacher Education in Germany. In Cognitive Activation in the Mathematics Classroom and Professional Competence of Teachers: Results from the COACTIV Project; Kunter, M., Baumert, J., Blum, W., Klusmann, U., Krauss, S., Neubrand, M., Eds.; Springer: New York, NY, USA, 2013; pp. 49–62. ISBN 978-1-4614-5148-8.

- Campbell, D.E. How to write good multiple-choice questions. J. Paediatr. Child Health 2011, 47, 322–325.

- Jüttner, M.; Neuhaus, B.J. Validation of a Paper-and-Pencil Test Instrument Measuring Biology Teachers’ Pedagogical Content Knowledge by Using Think-Aloud Interviews. J. Educ. Train. Stud. 2013, 1, 113–125.

- Jüttner, M.; Boone, W.J.; Park, S.; Neuhaus, B.J. Development and use of a test instrument to measure biology teachers’ content knowledge (CK) and pedagogical content knowledge (PCK). Educ. Assess. Eval. Acc. 2013, 25, 45–67.

- Förtsch, C.; Sommerhoff, D.; Fischer, F.; Fischer, M.R.; Girwidz, R.; Obersteiner, A.; Reiss, K.; Stürmer, K.; Siebeck, M.; Schmidmaier, R.; et al. Systematizing Professional Knowledge of Medical Doctors and Teachers: Development of an Interdisciplinary Framework in the Context of Diagnostic Competences. Educ. Sci. 2018, 8, 207.

- Tepner, O.; Borowski, A.; Dollny, S.; Fischer, H.E.; Jüttner, M.; Kirschner, S.; Leutner, D.; Neuhaus, B.J.; Sandmann, A.; Sumfleth, E.; et al. Modell zur Entwicklung von Testitems zur Erfassung des Professionswissens von Lehrkräften in den Naturwissenschaften [Item Development Model for Assessing Professional Knowledge of Science Teachers]. ZfDN 2012, 18, 7–28.

- Wirtz, M.A.; Caspar, F. Beurteilerübereinstimmung und Beurteilerreliabilität. Methoden zur Bestimmung und Verbesserung der Zuverlässigkeit von Einschätzungen mittels Kategoriensystemen und Ratingskalen [Interrater Agreement and Interrater Reliability. Methods for Determining and Improving the Reliability of Assessments via Category Systems and Rating Scales]; Hogrefe: Göttingen, Germany, 2002; ISBN 3801716465.

- Kunina-Habenicht, O.; Maurer, C.; Wolf, K.; Kunter, M.; Holzberger, D.; Schmidt, M.; Seidel, T.; Dicke, T.; Teuber, Z.; Koc-Januchta, M.; et al. Der BilWiss-2.0-Test: Ein revidierter Test zur Erfassung des bildungswissenschaftlichen Wissens von (angehenden) Lehrkräften [The BilWiss-2.0 Test: A Revised Instrument for the Assessment of Teachers’ Educational Knowledge]. Diagnostica 2020, 66, 80–92.

- Kunter, M.; Leutner, D.; Terhart, E.; Baumert, J. Bildungswissenschaftliches Wissen und der Erwerb professioneller Kompetenz in der Lehramtsausbildung (BilWiss) [Broad Pedagogical Knowledge and the Development of Professional Competence in Teacher Education (BilWiss)] (Version 5) [Data Set]. Available online: https://www.iqb.hu-berlin.de/fdz/studies/BilWiss (accessed on 23 February 2021).

- Klieme, E.; Schümer, G.; Knoll, S. Mathematikunterricht in der Sekundarstufe I: Aufgabenkultur und Unterrichtsgestaltung [Mathematics Instruction in Secondary Education: Task Culture and Instructional Processes]. In TIMSS—Impulse für Schule und Unterricht [TIMSS—Impetus for School and Teaching]; Bundesministerium für Bildung und Forschung, Ed.; Bundesministerium für Bildung und Forschung (BMBF): Bonn, Germany, 2001; pp. 43–57.

- Lipowsky, F.; Rakoczy, K.; Pauli, C.; Drollinger-Vetter, B.; Klieme, E.; Reusser, K. Quality of geometry instruction and its short-term impact on students’ understanding of the Pythagorean Theorem. Learn. Instr. 2009, 19, 527–537.

- Bond, T.G.; Fox, C.M.F. Applying the Rasch Model: Fundamental Measurement in the Human Sciences, 2nd ed.; Lawrence Erlbaum: Mahwah, NJ, USA, 2007.

- Boone, W.J.; Staver, J.; Yale, M. Rasch Analysis in the Human Sciences; Springer: Dordrecht, The Netherlands, 2014; ISBN 9789400768574.

- Linacre, J.M. A User’s Guide to Winsteps® Ministeps Rasch-Model Computer Programs. Program Manual 4.8.0. Available online: https://www.winsteps.com/a/Winsteps-Manual.pdf (accessed on 23 February 2021).

- Boone, W.J.; Staver, J. Advances in Rasch Analyses in the Human Sciences; Springer: Dordrecht, The Netherlands, 2020.

- Wright, B.D.; Linacre, J.M. Reasonable mean-square fit values. Rasch Meas. Trans. 1994, 8, 370.

- Questback GmbH. EFS Survey; Questback GmbH: Köln, Germany, 2018.

- Dorfner, T.; Förtsch, C.; Neuhaus, B.J. Die methodische und inhaltliche Ausrichtung quantitativer Videostudien zur Unterrichtsqualität im mathematisch-naturwissenschaftlichen Unterricht: Ein Review [The methodical and content-related orientation of quantitative video studies on instructional quality in mathematics and science education]. ZfDN 2017, 23, 261–285.

- Kramer, M.; Förtsch, C.; Seidel, T.; Neuhaus, B.J. Comparing two constructs for describing and analyzing teachers’ diagnostic processes. Studies Educ. Eval. 2021, 28.

- Scheiner, T. Teacher noticing: Enlightening or blinding? ZDM Math. Educ. 2016, 48, 227–238.

- Vogt, F.; Schmiemann, P. Assessing Biology Pre-Service Teachers’ Professional Vision of Teaching Scientific Inquiry. Educ. Sci. 2020, 10, 332.

- Kaiser, G.; Blömeke, S.; Busse, A.; Döhrmann, M.; König, J. Professional knowledge of (prospective) Mathematics teachers—Its structure and development. In Proceedings of the Joint Meeting of PME 38 and PME-NA 36; Liljedahl, P., Nicol, C., Oesterle, S., Allan, D., Eds.; PME: Vancouver, BC, Canada, 2014; pp. 35–50.

- Arbuckle, J.L. Amos (Version 26.0); SPSS: Chicago, IL, USA, 2019.

- Hu, L.-T.; Bentler, P.M. Fit indices in covariance structure modeling: Sensitivity to underparameterized model misspecification. Psychol. Methods 1998, 3, 424–453.

- Finger, R.P.; Fenwick, E.; Pesudovs, K.; Marella, M.; Lamoureux, E.L.; Holz, F.G. Rasch analysis reveals problems with multiplicative scoring in the macular disease quality of life questionnaire. Ophthalmology 2012, 119, 2351–2357.

| Diagnostic Activity | Description |
|---|---|
| Identifying problems | A noteworthy event that may influence student learning is noticed by the teacher. |
| Questioning | The teacher asks questions to find out more about the identified problematic incident or its cause. |
| Generating hypothesis | The teacher generates a hypothesis about possible sources of the identified problem. |
| Constructing or redesigning artefacts | The teacher creates content-specific tasks suitable for identifying underlying instructional problems or detecting students’ misconceptions. |
| Generating evidence | Evidence is generated either by the use of a constructed test or a created task or through systematic observation and description of the problematic incident. |
| Evaluating evidence | The teacher assesses the generated evidence regarding its support for a claim or theory. He/she interprets the data, thus making sense of the generated evidence with regard to his/her belief, knowledge, and expertise (cf. [46]). |
| Drawing conclusions | As a result of evaluating evidence, the teacher predicts consequences regarding student learning or makes suggestions for alternative instructional strategies. |
| Communicating the process/results | The teacher communicates and scrutinizes diagnostic results with colleagues, students, or parents. |

| Item Label | Topic | PCK-Dimension |
|---|---|---|
| PCK-1a ° | Causes of a specific student error | Student errors |
| PCK-1b ° | Dealing with specific student errors | |
| PCK-1c ° | Further topic-specific student errors | |
| PCK-2a ° | Advantages and disadvantages of a specific model on the structure of the skin | Use of models |
| PCK-2b ° | Critical reflection of the model | |
| PCK-2c ° | Setting goals for elaborated use of models | |
| PCK-3a * | Assessment of an experiment (cold protection through fat) regarding subject-specific aspects (e.g., steps of scientific inquiry) | Use of experiments |
| PCK-3b ° | Alternative instructional implementation of the experiment | |
| PCK-3c ° | Further student experiments on the topic skin | |

| Item Label | Topic |
|---|---|
| CK-1 * | Control and regulation of skin functions |
| CK-2 ° | Regulation of body temperature |
| CK-3 ° | Structure and function I |
| CK-4 * | Structure and function II |
| CK-5 * | Human skin color |
| CK-6 ° | Transfer of biological concepts/principles |

| Item Label | Topic | Item Label | Topic |
|---|---|---|---|
| PK-1 * | Structuring feedback to students | PK-9 * | Supportive climate/motivating learning environment |
| PK-2 ° | Discourse management and question formats | PK-10 * | Participation of the students (working with weekly schedules) |
| PK-3 ° | Methodological organization, social forms, research on teaching methods | PK-11 * | Support of learning processes (role of students’ prior knowledge) |
| PK-4 * | Preparation of variable/differentiated teaching progressions | PK-12 * | Cognitive activating learning goals according to Bloom |
| PK-5 * | Methodological forms of teaching (advantages of project work) | PK-13 * | Transparency of goals and requirements |
| PK-6 ° | General didactics: constructivist models | PK-14 ° | Models of teacher-student interaction |
| PK-7 * | General didactics: theoretical models of teaching and learning | PK-15 ° | Classroom management |
| PK-8 * | Constructive handling of errors/analysis of student errors | | |

| | N | M | SD | PCK | CK | PK | Diagnostic Activities | Diagnostic Accuracy |
|---|---|---|---|---|---|---|---|---|
| PCK a | 186 | −1.21 | 0.72 | - | | | | |
| CK a | 186 | −0.88 | 0.71 | 0.30 ** | - | | | |
| PK a | 186 | 0.47 | 0.50 | 0.29 ** | 0.15 * | - | | |
| Diagnostic activities a | 186 | −1.53 | 0.67 | 0.36 ** | 0.22 ** | 0.25 ** | - | |
| Diagnostic accuracy | 186 | 0.28 | 0.15 | 0.30 ** | 0.22 ** | 0.21 ** | 0.70 ** | - |
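As a rough plausibility check on the asterisks in the correlation table above, the coefficients can be converted to approximate two-tailed p-values for N = 186. The sketch below makes several assumptions that the table itself does not state: that the coefficients are Pearson correlations, that `*` marks p < 0.05 and `**` marks p < 0.01, and that the Fisher z-transformation is an acceptable approximation. It is not the test the authors report, and the function names are hypothetical.

```python
import math
from statistics import NormalDist

N = 186  # sample size listed in the table


def fisher_z_p_value(r: float, n: int = N) -> float:
    """Approximate two-tailed p-value for H0: rho = 0 using Fisher's z."""
    # atanh(r) is approximately normal with standard error 1 / sqrt(n - 3).
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def stars(p: float) -> str:
    """Map a p-value to the marker convention ASSUMED for the table."""
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""


# Selected coefficients copied from the table.
examples = [
    ("CK with PK", 0.15),
    ("PK with diagnostic accuracy", 0.21),
    ("Diagnostic activities with diagnostic accuracy", 0.70),
]

for label, r in examples:
    p = fisher_z_p_value(r)
    print(f"{label}: r = {r:.2f}, approx. p = {p:.4f} {stars(p)}")
```

Under these assumptions, the smallest coefficient in the table (0.15) falls between the two thresholds and the remaining examples fall below the stricter one, which matches the markers shown.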

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kramer, M.; Förtsch, C.; Boone, W.J.; Seidel, T.; Neuhaus, B.J. Investigating Pre-Service Biology Teachers’ Diagnostic Competences: Relationships between Professional Knowledge, Diagnostic Activities, and Diagnostic Accuracy. Educ. Sci. 2021, 11, 89. https://doi.org/10.3390/educsci11030089
