1. Introduction

From the second half of the 20th century onward, the world has gone through technological developments that have transformed several areas of knowledge. Since the appearance of the first computers, data processing capacity and speed have increased exponentially, leading people and society to new behaviors. Education in general has changed, and so has engineering education [1,2].

As a result of the transformations of recent decades, today's students were born surrounded by technological resources. With almost all information available on their mobile phones, knowing how to make sense of it becomes increasingly important.

Engineering schools are following a global trend of adapting their programs to the reality of the 21st century. Several movements, such as the CDIO initiative [3], are attempting to modernize programs and teaching practices. This initiative “provides students with an education stressing engineering fundamentals set in the context of Conceiving—Designing—Implementing—Operating (CDIO) real-world systems and products” [4]. Additionally, the accreditation criteria for engineering programs in the USA established by the Accreditation Board for Engineering and Technology (ABET), known as EC2000 [5], have changed. These criteria require US engineering departments to demonstrate that, in addition to having solid knowledge of science, math, and engineering fundamentals, their graduates have communication skills, multidisciplinary teamwork capabilities, lifelong learning skills, and awareness of the social and ethical considerations associated with the engineering profession [6]. Finally, entirely new engineering colleges are being created with proposals that differ markedly from the traditional 20th-century model, such as Olin College [7] and Aalborg University [8].

A common topic among the engineering modernization movements is the importance of placing the student at the center of the learning process, as highlighted in the learning outcomes of EC2000 (Criterion 3.i: “a recognition of the need for, and an ability to engage in life-long learning”) [9] and in Standard 8 of CDIO (“Active Learning”) (p. 153, [3]). Putting the student at the center of the learning process, along with increasing student engagement, is arguably achieved through Active Learning [10,11,12,13,14,15,16,17,18,19,20,21,22].

Active Learning still lacks a single definitive definition, but three definitions stand out as the most popular. Prince defines it as “any instructional method [used in the classroom] that engages students in the learning process” [23]; Roehl, as “an umbrella term for pedagogies focusing on student activity and student engagement in the learning process” [24]; and Barkley, as “an umbrella term that now refers to several models of instruction, including cooperative and collaborative learning, discovery learning, experiential learning, problem-based learning, and inquiry-based learning” [14]. Hartikainen [10] identifies 66 definitions of Active Learning, grouped into three main categories: (1) defined and viewed as an instructional approach; (2) not defined but viewed as an instructional approach; and (3) not defined but viewed as a learning approach.

Among the main Active Learning techniques, the following stand out: Problem-Based Learning (PBL) [8,23,25,26,27,28,29], Cooperative and Collaborative Learning [13,23,30,31,32,33,34,35], and the Flipped Classroom [20,36,37,38,39].

Furthermore, the pedagogical results and effectiveness of Active Learning are widely documented [19,23,40,41,42,43,44,45]. Hartikainen [10] reported positive effects on the development of subject-related knowledge, professional skills, social skills, communication skills, and meta-competences.

However, there are problems in both the research and the implementation of Active Learning. Prince [23] points out that comprehensive assessment of Active Learning is difficult due to the limited range of learning outcomes and the different possible interpretations of these outcomes. Streveler [46] notes that “active learning is not a panacea that is a blanket remedy for all instructional inadequacies. Instead, it is a collective term for a group of instructional strategies that produce different results and require differing degrees of time to design, implement, and assess”. Fernandes [47] reported that “students identify the heavy workload which the project entails as one of the main constraints of PBL approach”. There are also less researched, but frequently mentioned, barriers: resistance to novelty on the part of lecturers and students [43,48,49,50,51,52].

Although Active Learning has already been validated as an effective way to influence student learning and is increasingly being incorporated into the classroom, there is no way to qualify and evaluate the use of Active Learning techniques by faculty members [40]. There are four maturity models in the field of education, but none of them specifically allows the assessment of Active Learning implementation in a course [53,54,55,56]. In addition to the difficulty of measuring Active Learning usage in the classroom, there is no way to assess the maturity level of Active Learning implementations in a course or a program of a Higher Education Institution (HEI), engineering schools included. This gap blurs the diagnosis of the status of a given implementation and consequently leads to less assertive decision making, reducing the effectiveness of changes and of Active Learning as a whole.

Maturity models can bridge this gap. They enable practitioners to assess organizational performance, support management, and allow improvements [57]. Maturity modeling is a generic approach that describes the development of an organization over time, through ideal levels, toward a final state [58]. In addition, maturity models are instruments to assess organizational elements and select appropriate actions that lead to higher levels of maturity and better performance [59].

Therefore, this work proposes a conceptual maturity model for evaluating Active Learning implementations at the level of a specific course. The model targets the incremental enhancement of courses and is a logical first step towards a more general and comprehensive framework that could extend its reach to evaluating institutions as a whole.

2. Methodology

Based on the research objectives, the broad keyword “active learning” was used in Scopus and Web of Science databases to search for abstracts of peer-reviewed journal articles. Additional keywords related to “success factors” and “engineering education” were used to refine the search. Ultimately, 31 studies were selected for review.

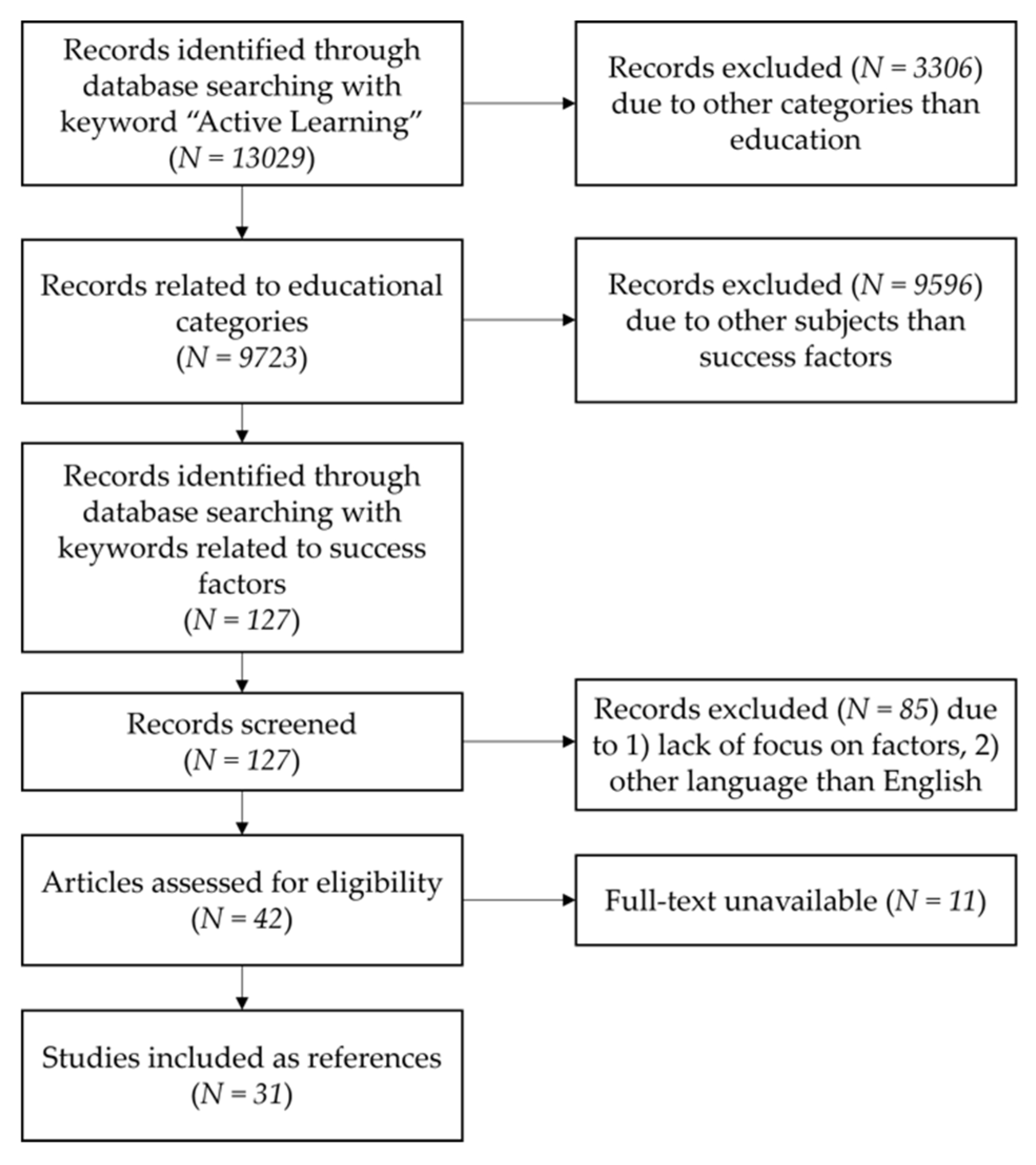

Figure 1 uses the PRISMA model [60,61] to describe the literature review process.

The initial search returned a total of 13,029 articles. Approximately 25% (3306) of the records were excluded because they belonged to categories other than education. This criterion aimed to exclude articles that used “active learning” with a different meaning.

With the sample reduced to 75% of the original size (9723), filters were applied in the databases to match keywords related to success factors: “critic* factor*”, “key factor*”, and “success factor*”. This step led to the reduction of the sample to 127 articles.

The abstracts of these 127 articles were judged against the following inclusion criteria: (1) reported research on key factors and (2) written in English. These criteria were intended to eliminate articles that had some keywords related to success factors but that did not actually address them. This step resulted in the reduction of the sample to 42 articles, whose full texts were searched. Of these, 11 full texts were not available for download, which resulted in the selection of 31 references that were included in the literature review. After the literature selection stage, references were read to identify the Key Success Factors (KSF) for the implementation of Active Learning.
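The screening arithmetic can be checked with a short Python sketch. The stage labels paraphrase the text and the counts are the ones reported above. The helper name `funnel` is hypothetical and used only for illustration.

```python
# Counts reported at each stage of the literature screening (Figure 1).
stages = [
    ("Records identified (Scopus, Web of Science)", 13029),
    ("Education categories only", 9723),
    ("Success-factor keyword filter", 127),
    ("Abstracts meeting inclusion criteria", 42),
    ("Full texts available (included)", 31),
]

def funnel(stages):
    """Return (label, count, removed_at_stage, share_of_initial) for each stage."""
    initial = stages[0][1]
    previous = initial
    rows = []
    for label, count in stages:
        rows.append((label, count, previous - count, count / initial))
        previous = count
    return rows

for label, count, removed, share in funnel(stages):
    print(f"{label:<45} {count:>6} removed={removed:>5} share={share:.2%}")
```

The second row shows 3306 records removed and a retained share of about 74.6%. This agrees with the rounded 25% and 75% figures quoted in the text.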

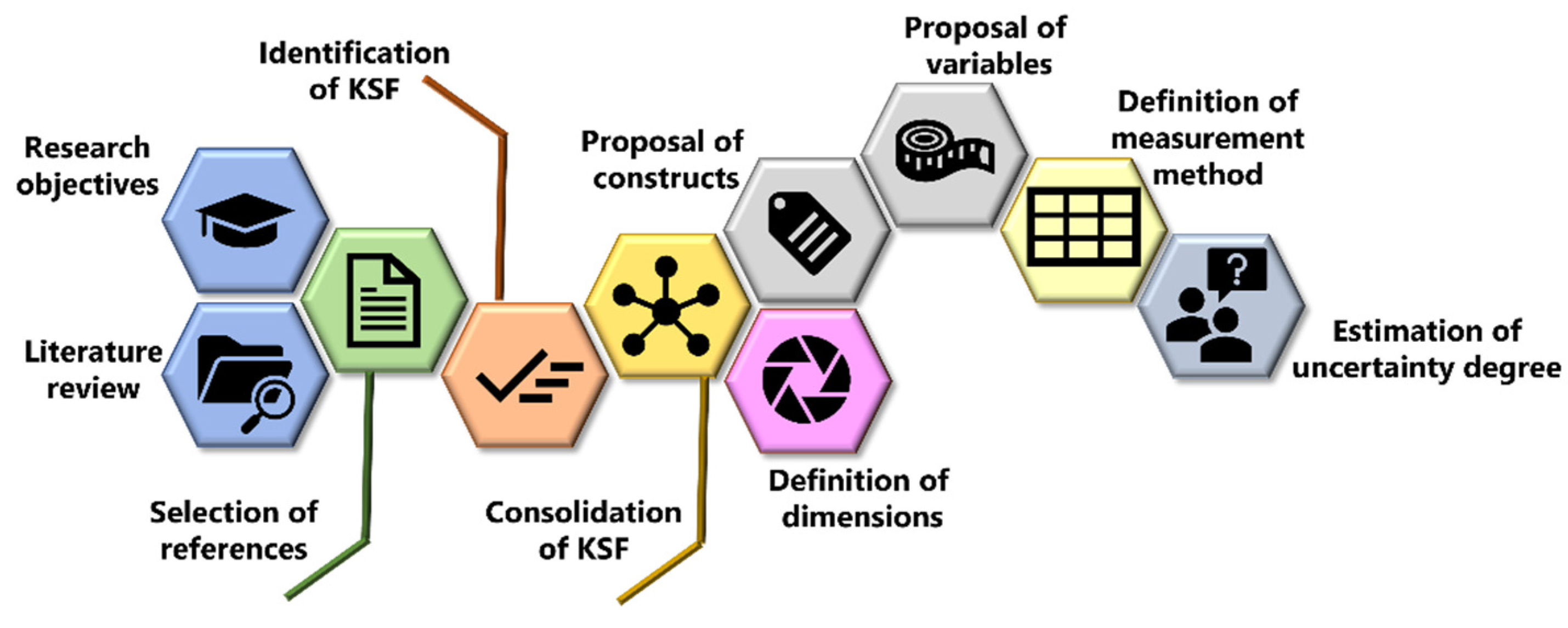

The software MaxQDA® was used to extract and accumulate text snippets that represented key success factors. Similar snippets were then combined into single KSFs to avoid duplication. Next, a definition based on the literature was attributed to each factor. The following step was to define the relevant constructs for each factor and, for each construct, the variables that would be used for measurement.

Finally, each variable had a measurement method proposed and an uncertainty degree estimated.

The research method is presented in Figure 2.

3. Results

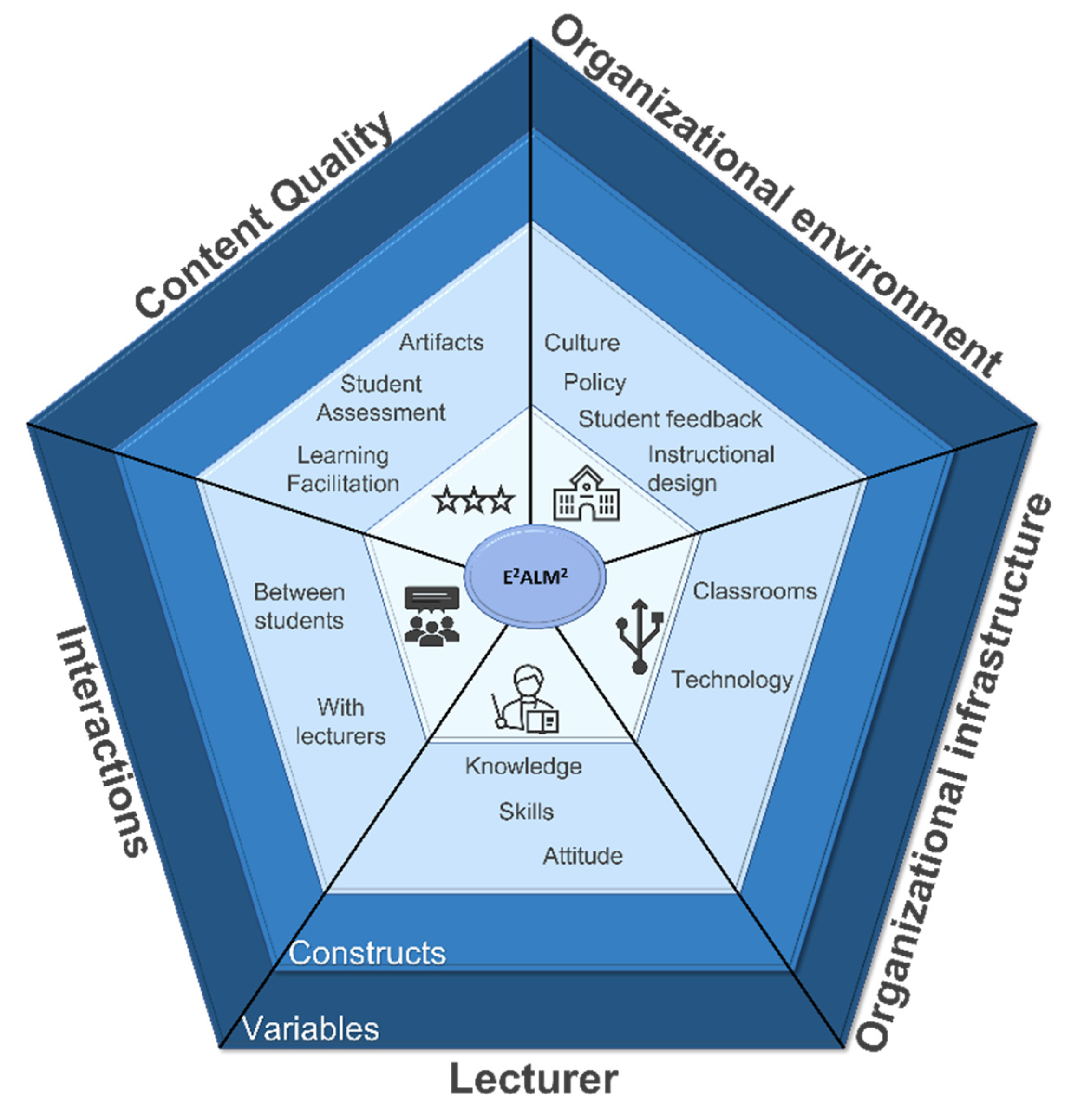

The 31 sources included for the literature review provided 14 key success factors, grouped into five dimensions according to their similarity and relatedness to a specific aspect of the educational environment.

Table 1 shows the dimensions and their related KSF.

After the dimensions were created, the 14 KSF were detailed into a total of 41 constructs. The constructs were in turn detailed into 90 variables that operationalize objective measurements to assess the maturity of a given implementation. A measurement method was then proposed for each variable, and an uncertainty degree was estimated based on that method. Three measurement methods were proposed:

A questionnaire to be answered by the faculty member in charge of the course (Lecturer Questionnaire, LQ),

A questionnaire directed to students (Student Questionnaire, SQ), and

An external evaluation by a third party not directly involved in the course (External Evaluation, EE).

As a result, Figure 3 shows the Engineering Education Active Learning Maturity Model (E2ALM2) with four levels.

All dimensions and their KSF are defined in the following sections. Each KSF is detailed with its constructs and variables, and each variable has a suggested measurement method (MM) and uncertainty degree (UD).
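The hierarchy described above maps naturally onto nested records. It runs from dimension to KSF to construct to variable, with a measurement method and an uncertainty degree attached to each variable. The Python sketch below is one possible representation, not the authors' implementation. The class names, the `count_variables` helper, the low/medium/high uncertainty labels, and the example construct and variable are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum

class Method(Enum):
    """The three measurement methods proposed for E2ALM2 variables."""
    LQ = "Lecturer Questionnaire"
    SQ = "Student Questionnaire"
    EE = "External Evaluation"

@dataclass
class Variable:
    name: str
    methods: list[Method]   # some variables are asked to both lecturers and students
    uncertainty: str        # uncertainty degree; "low"/"medium"/"high" labels are assumed

@dataclass
class Construct:
    name: str
    variables: list[Variable] = field(default_factory=list)

@dataclass
class KSF:
    name: str
    constructs: list[Construct] = field(default_factory=list)

@dataclass
class Dimension:
    name: str
    ksfs: list[KSF] = field(default_factory=list)

def count_variables(dimensions: list[Dimension]) -> int:
    """Total number of variables across all dimensions (90 in the full model)."""
    return sum(len(c.variables) for d in dimensions for k in d.ksfs for c in k.constructs)

# HYPOTHETICAL construct and variable, loosely based on the Course Artifacts requisites.
artifacts = KSF("Course Artifacts", [
    Construct("Real-life problems", [
        Variable("Share of activities based on real-life problems",
                 [Method.LQ, Method.SQ], "medium"),
    ]),
])
content_quality = Dimension("Content Quality", [artifacts])
print(count_variables([content_quality]))
```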

3.1. Content Quality

This dimension groups the factors related to the core of the learning process. These include the quality of the problems, projects, or cases studied (artifacts), the level of difficulty required of the students, whether the activities facilitate learning, and whether the evaluation criteria are clear and consistent. Its three KSF are detailed below.

3.1.1. Course Artifacts

Course artifacts (problems, projects, or cases studied) should:

Engage students with real-life problems and active experiences [62];

Provide students with a variety of additional instructional resources, such as simulations, case studies, videos, and demonstrations [62];

Be suitable for achieving different targets, including supporting the students’ learning process and establishing learning outcome requirements [53];

Be clearly written, of the right length, useful, and flexible, and provide an appropriate degree of breadth [63];

Offer a suitable intellectual challenge [16,17,18,42,64]; and

Begin with an explanation of their purpose [49,65,66].

Table 2 describes the KSF “Course Artifacts” in more detail. Its constructs were derived from the list of requisites presented above. Variables were proposed to measure each construct, together with the most suitable measurement method (MM) and the uncertainty degree of each measurement.

3.1.2. Student Assessment

Student assessment needs to be clear, concise, and consistent. This involves instructions, assignments, assessments, due dates, course pages, and office hours [62]. Furthermore, criteria for success must be communicated clearly and monitored [18,34,42,67,68,69,70].

Table 3 details the KSF “Student Assessment”.

3.1.3. Learning Facilitation

Learning facilitation covers two things. The first is preparing students to carry out the required activities and tasks. The second is the set of activities through which the facilitator guides the students’ learning process [53]. It also involves giving students regular opportunities for formative feedback from the lecturer [17,18,42,67,70,71].

Table 4 details this KSF.

3.2. Organizational Environment

The factors of this dimension represent abstract aspects of the institution, such as culture, policy, and the practice of collecting feedback from students.

3.2.1. Culture

Organizational culture is a set of value systems that the members of an organization follow as guidelines for behavior and for solving the problems that occur in the organization [72]. Accordingly, the behavior of an organization and its members should be aligned, and the organization should have guidelines for solving problems.

Table 5 details this KSF.

3.2.2. Policy

Organizational policy is a set of program plans, activities, and actions that makes it possible to predict how the organization works and how a problem would be solved [72]. Because preparing activities takes time, teachers must be given time to implement something new in their classes [19].

Table 6 describes this KSF in more detail.

3.2.3. Student Feedback

Organizations are expected to collect feedback from students [25,49,73] and provide the support needed to successfully complete the activity [49].

Thus, three requirements can be identified for organizations to carry out this process successfully: having a suitable feedback collection process, using the feedback appropriately, and having an adequate student feedback process.

The KSF “Student Feedback” is detailed in Table 7.

3.2.4. Instructional Design

Brophy [74] and Paechter et al. [75] highlight the importance of the structure and coherence of the curriculum and the learning materials. Thus, it is possible to identify two requirements for this KSF:

Table 8 details this KSF.

3.3. Organizational Infrastructure

This dimension contains factors that represent the infrastructure available for course activities.

3.3.1. Classrooms

Classrooms designed to improve the Active Learning experience [76] and equipped with technology can enhance student learning and support teaching innovation [77,78,79,80,81]. Thus, two different requirements emerge for this KSF:

Table 9 describes this KSF in more detail.

3.3.2. Technology

The school should provide equipment and technological infrastructure [19,42]. This involves the availability, reliability, accessibility, and usability of devices and internet access (Wi-Fi). It also involves learning support and an inclusive learning environment [16,42,70,82].

Table 10 shows the details of KSF “Technology”.

3.4. Lecturer

The lecturer is the single most important actor in a successful implementation of Active Learning. This dimension groups the factors that represent the lecturer’s knowledge, skills, and attitude needed to carry out educational innovation.

3.4.1. Knowledge

Knowledge is a combination of framed experience, values, and contextual information that provides an environment for evaluating and incorporating new experiences [72]. DeMonbrun et al. highlighted the relevance of lecturer experience [49]. Therefore, the lecturer should have suitable experience as a faculty member and information about Active Learning.

3.4.2. Skills

Skills are the ability to use reason, thoughts, ideas, and creativity in doing, changing, or making things more meaningful so as to produce value from the results of the work [72]. The lecturer should have skills related to educational innovation in general and to Active Learning specifically.

Table 12 shows this KSF in detail.

3.4.3. Attitude

Attitude encompasses a very broad range of activities, including how people walk, talk, act, think, perceive, and feel [72]. Hegarty and Thompson [42] highlight the relevance of lecturer attributes and teaching methods. Examples include being approachable, supportive, and enthusiastic, and delivering content in an interesting way.

Table 13 details this KSF.

3.5. Interactions

Placing students at the center of the learning process requires them to step out of the role of recipients of information and become active agents. Interaction among students, and between students and lecturers, allows this transition to happen.

3.5.1. Between Students

Opportunities for students to work together and obtain peer feedback should be included in the learning design [42]. Chen, Bastedo, and Howard [62] emphasize that the course should provide online and face-to-face opportunities for students to collaborate with others.

3.5.2. With Lecturers

Interaction between students and the lecturer supports knowledge construction, motivation, and the establishment of a social relationship [75]. Furthermore, constructive and enriching feedback from the lecturer leads to increased academic success and feelings of support [42].

Table 15 details this KSF.

3.6. Measurement Scales

Most E2ALM2 variables are related to the perceptions of students and teachers. They can be measured on a five-point Likert scale [83], coded as 5: strongly agree; 4: agree; 3: neither agree nor disagree; 2: disagree; and 1: strongly disagree.

The model also involves numerical variables, such as the percentage of activities that clearly define what is expected of the student or the percentage of activities whose purpose is explained to students. These variables can also use a five-point scale, but with coding based on frequency or ranges, such as 5: always; 4: often; 3: occasionally; 2: rarely; and 1: never.
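Before a numerical variable can be combined with other variables, it has to be mapped onto the frequency scale. An example is the percentage of activities whose purpose is explained to students. The sketch below uses hypothetical cut-off points: 100% is "always", at least 66% is "often", at least 33% is "occasionally", above 0% is "rarely", and 0% is "never". The model itself does not fix these ranges, and `frequency_code` is an illustrative name.

```python
def frequency_code(percentage: float) -> tuple[int, str]:
    """Convert the share of activities meeting a criterion (0-100) to a 1-5 code.

    The thresholds are HYPOTHETICAL and only illustrate range-based coding.
    """
    if not 0.0 <= percentage <= 100.0:
        raise ValueError("percentage must be between 0 and 100")
    if percentage == 100.0:
        return 5, "always"
    if percentage >= 66.0:
        return 4, "often"
    if percentage >= 33.0:
        return 3, "occasionally"
    if percentage > 0.0:
        return 2, "rarely"
    return 1, "never"

for share in (100, 80, 50, 10, 0):
    print(share, frequency_code(share))
```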

Finally, there are binary variables, e.g., whether assessment methods are defined in advance.

3.7. KSF Weights

In the proposed model, each dimension has a score independent of the others, so no weights need to be defined for the dimensions. However, the weight of each KSF in the score of its dimension must be defined. Two approaches are possible: (i) a uniform distribution within the dimension and (ii) a distribution according to relative relevance, based on the number of references that support each KSF.

Table 16 presents KSF weights under two criteria.
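The two weighting approaches can be sketched in Python as follows. The sketch also computes a dimension score as the weighted mean of its KSF scores. That aggregation is an assumption. The reference counts and KSF scores are hypothetical placeholders, not the values reported in Table 16.

```python
# Approach (i): every KSF in a dimension receives the same weight.
def uniform_weights(ksf_names):
    return {name: 1 / len(ksf_names) for name in ksf_names}

# Approach (ii): weight proportional to the number of references supporting each KSF.
def reference_weights(reference_counts):
    total = sum(reference_counts.values())
    return {name: count / total for name, count in reference_counts.items()}

# Assumed aggregation: dimension score = weighted mean of its KSF scores.
def dimension_score(ksf_scores, weights):
    return sum(weights[name] * score for name, score in ksf_scores.items())

# HYPOTHETICAL reference counts and KSF scores (1-5) for the Content Quality dimension.
refs = {"Course Artifacts": 10, "Student Assessment": 6, "Learning Facilitation": 4}
scores = {"Course Artifacts": 4.0, "Student Assessment": 3.0, "Learning Facilitation": 2.5}

uniform = dimension_score(scores, uniform_weights(list(refs)))
by_refs = dimension_score(scores, reference_weights(refs))
print(f"uniform: {uniform:.2f}, reference-based: {by_refs:.2f}")
```

The weights within each dimension sum to 1, so each dimension score stays on the same 1 to 5 scale as its KSF scores. This lets the dimensions be reported side by side without weights between them.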

4. Discussion

As explained in the introduction, there is a lack of instruments that can help engineering schools and lecturers assess Active Learning implementations. The use of maturity models can support them in this task.

According to Bruin et al. [84], maturity assessment can be descriptive, prescriptive, or comparative in nature. A purely descriptive model can be applied for an as-is diagnosis, with no provision for improving maturity or relating it to performance. A prescriptive model emphasizes the relationships between the variables and final performance and indicates how to approach maturity improvement so as to positively affect the outcome. It therefore allows the development of a roadmap for improvement. A comparative model allows benchmarking across sectors or regions. This makes it possible to compare similar practices between organizations and assess maturity in different sectors.

E2ALM2 is a descriptive maturity model (according to Bruin et al.’s classification). It can be understood as the first step in a life cycle that will allow evolution to a prescriptive model. This evolution requires more knowledge about the impact of actions and the identification of replicable actions that support advancement in maturity level. This difficulty is especially important given the differences in results obtained in education when different contexts and conditions are compared [43].

Although there are four other maturity models in the field of education, they have a different focus from E2ALM2. These models focus on Project-Based Learning (PBLCMM) [53], Student Engagement (SESR-MM) [54], Curriculum Design (CDMM) [55], and e-Learning [56]. Besides the difference in focus, none of these models assesses the same requirements or has the scope of E2ALM2. In addition to these four models, there is an extremely simple scale with a similar objective of assessing the use of Active Learning [85]. However, it is neither a theoretical model grounded in scientific references nor peer-reviewed.

The E2ALM2 model allows diagnosis of the current stage of Active Learning implementation in a course, based on the objective measurement of 90 variables. For most variables, the suggested measurement method is a questionnaire for the lecturer, for the students, or for both. This choice aims to make the model easy to apply in real cases. It reduces the need for an external evaluator to observe the activities throughout the entire period before issuing a report.

Collecting impressions through questionnaires naturally introduces the possibility of bias from both teachers and students. Therefore, response validation techniques will be necessary when creating the questionnaires. Because of this possibility of bias, an uncertainty degree was estimated for every variable. Where the uncertainty degree is high, the statistical validation of answers will need to be stricter. To reduce possible contamination of the results by bias, some variables are measured through questions asked to both the lecturer and the students.
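Questions asked to both the lecturer and the students allow a simple cross-check. The lecturer's answer is compared with the mean student answer, and large gaps are flagged. A tighter tolerance applies when the uncertainty degree is higher. The sketch below is hypothetical throughout: the tolerance values, the low/medium/high labels, and the `cross_check` name are assumptions. A flagged variable would call for closer review rather than automatic rejection.

```python
from statistics import mean

# HYPOTHETICAL tolerances (in Likert points): the higher the uncertainty degree,
# the smaller the gap accepted between lecturer and student answers.
TOLERANCE = {"low": 1.0, "medium": 0.75, "high": 0.5}

def cross_check(lecturer_code, student_codes, uncertainty):
    """Compare one lecturer answer with the mean student answer for the same variable.

    Returns (consistent, gap); a gap above the tolerance flags the variable for review.
    """
    gap = abs(lecturer_code - mean(student_codes))
    return gap <= TOLERANCE[uncertainty], gap

print(cross_check(5, [3, 4, 3, 2], "low"))    # lecturer rates far above students
print(cross_check(4, [4, 4, 3, 5], "high"))   # answers agree
```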

The use of Active Learning has several positive effects, as explained in the introduction, but it also has difficulties and limitations. Streveler states that Active Learning is not a solution for all instructional inadequacies [46]. Active Learning implementations must overcome several challenges. These include the increasing workload for lecturers [52,86] and students [47], resistance to change [43,48,49,50,51], and the need to align curriculum and course activities [86,87].

Furthermore, E2ALM2 does not aim to assess the overall quality of an engineering program. It assesses the maturity level of Active Learning implementation, which the main modernization movements in engineering education around the world recommend. Courses and schools can still be of high quality even when they follow a more traditional approach to engineering education. The point here is that anyone who wants to modernize their approach to engineering education will struggle to implement Active Learning as a pedagogical and cultural element. E2ALM2 can guide managers and lecturers through the messy times of change, infrastructural adaptation, and resistance from students and faculty members.

As future work, we recommend: (i) further studies to test the scale of each variable; (ii) empirical testing of the weight of each KSF in its respective dimension; (iii) testing the questionnaires used to measure all variables; (iv) validating the framework in different cultural settings, for instance with an international panel of experts; and (v) applying the framework to evaluate the maturity of real cases, which will allow qualitative and quantitative analyses.

5. Conclusions

This study proposed a framework to evaluate the maturity of Active Learning adoption in a specific course. The variables described here can serve as a checklist for lecturers adopting Active Learning and as a metric to evaluate the comprehensiveness and quality of existing initiatives.

The proposed model is descriptive, because it allows evaluating the current situation, but it can be understood as a first step towards the construction of a prescriptive model, which can indicate good practices and replicable actions to increase the level of maturity.

E2ALM2 was designed to be easy to apply. It is centered on questionnaires for lecturers and students and does not require long periods of external observation, which would increase costs and prevent scalability.

E2ALM2 allows faculty members to assess the current state of Active Learning implementations and therefore compare states before and after planned interventions with specific objectives.

Although the model focuses on a single course, a program or an engineering school can be diagnosed by composing the evaluations of the courses that make it up. This also supports managerial actions.
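One way to compose course evaluations into a program diagnosis is a credit-weighted mean of course-level dimension scores, sketched below. Credit weighting, the `program_profile` name, and the course names, credits, and scores are all hypothetical. An unweighted mean or the per-dimension minimum would be equally plausible compositions, and the model leaves this choice open.

```python
def program_profile(course_scores, credit_hours):
    """Credit-weighted mean of course-level dimension scores (hypothetical composition).

    course_scores: {course: {dimension: score}}
    credit_hours:  {course: credit hours}
    Assumes every course was scored on the same set of dimensions.
    """
    total_credits = sum(credit_hours[course] for course in course_scores)
    dimensions = sorted(next(iter(course_scores.values())))
    return {
        dim: sum(credit_hours[c] * scores[dim] for c, scores in course_scores.items())
        / total_credits
        for dim in dimensions
    }

# HYPOTHETICAL courses, credit hours, and dimension scores on a 1-5 scale.
courses = {
    "Statics":  {"Content Quality": 4.0, "Lecturer": 3.0},
    "Circuits": {"Content Quality": 1.0, "Lecturer": 4.5},
}
course_credits = {"Statics": 4, "Circuits": 2}
print(program_profile(courses, course_credits))
```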