Developing a Task-Based Dialogue System for English Language Learning

Abstract

1. Introduction

- Information-gap activity: Learners exchange information to fill an information gap, communicating with each other in the target language to ask questions and solve problems.

- Opinion-gap activity: Learners express their feelings, ideas, and personal preferences to complete the task. Beyond peer interaction, teachers can add personal tasks related to the theme to draw on learners’ potential.

- Reasoning-gap activity: Learners derive new information by reasoning from the information they already have, for example, inferring the topic of a dialogue or an association implied in a sentence as the dialogue proceeds.

- Development of a task-based dialogue system that conducts task-oriented conversations and evaluates students’ performance afterward;

- Comparison of the proposed system with traditional teaching methods;

- Evaluation of the effectiveness of the proposed system.

2. Methodology

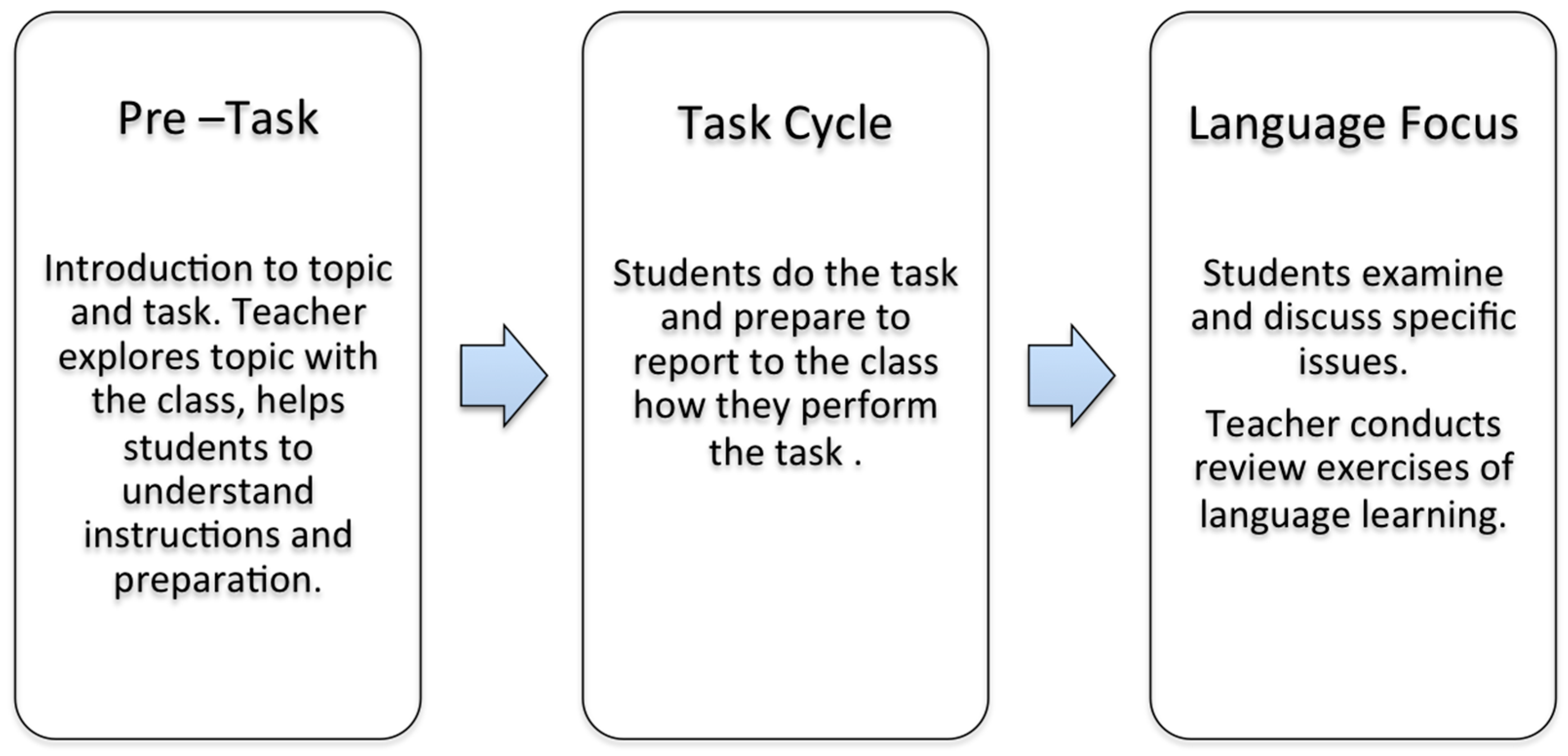

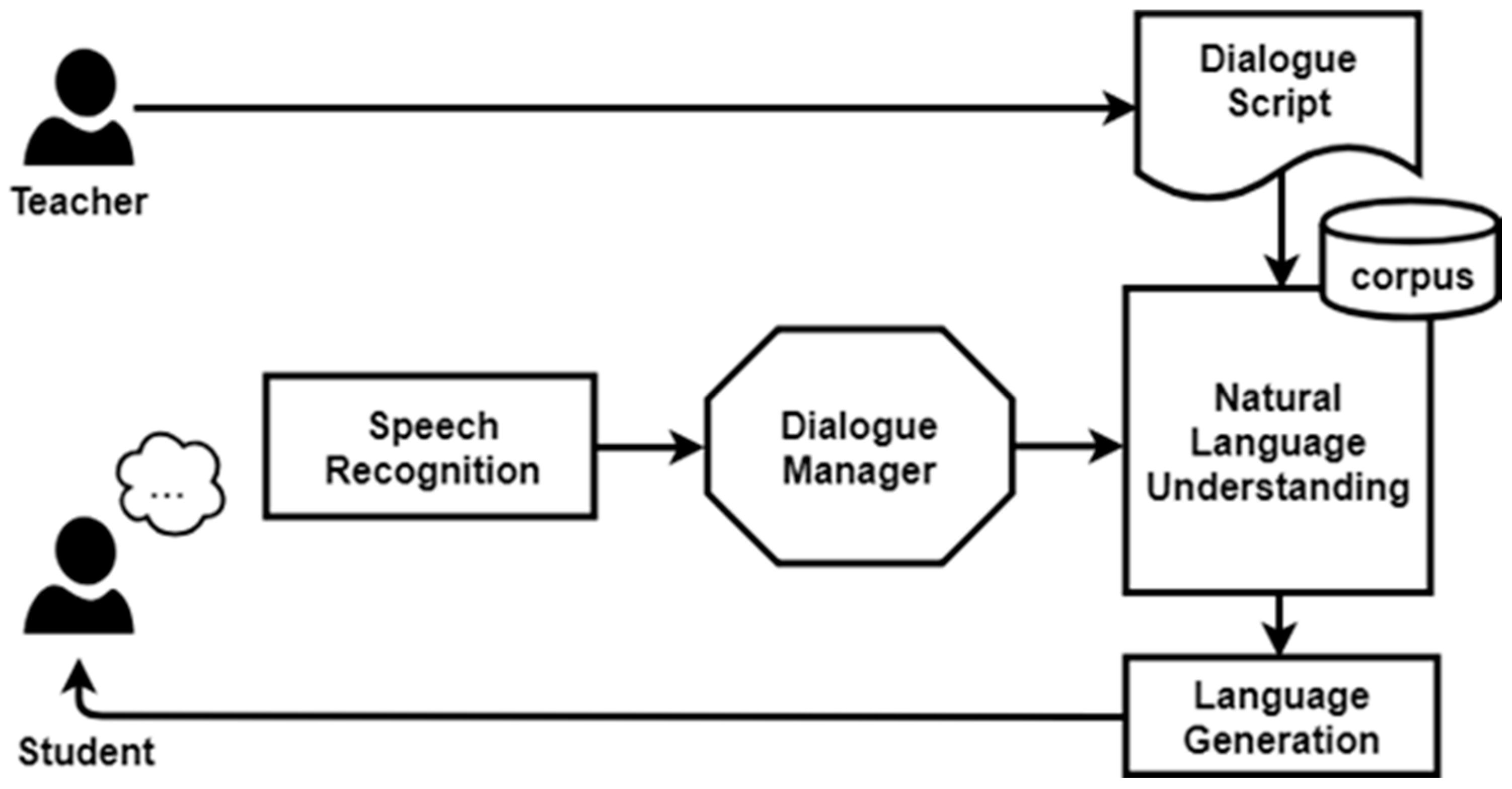

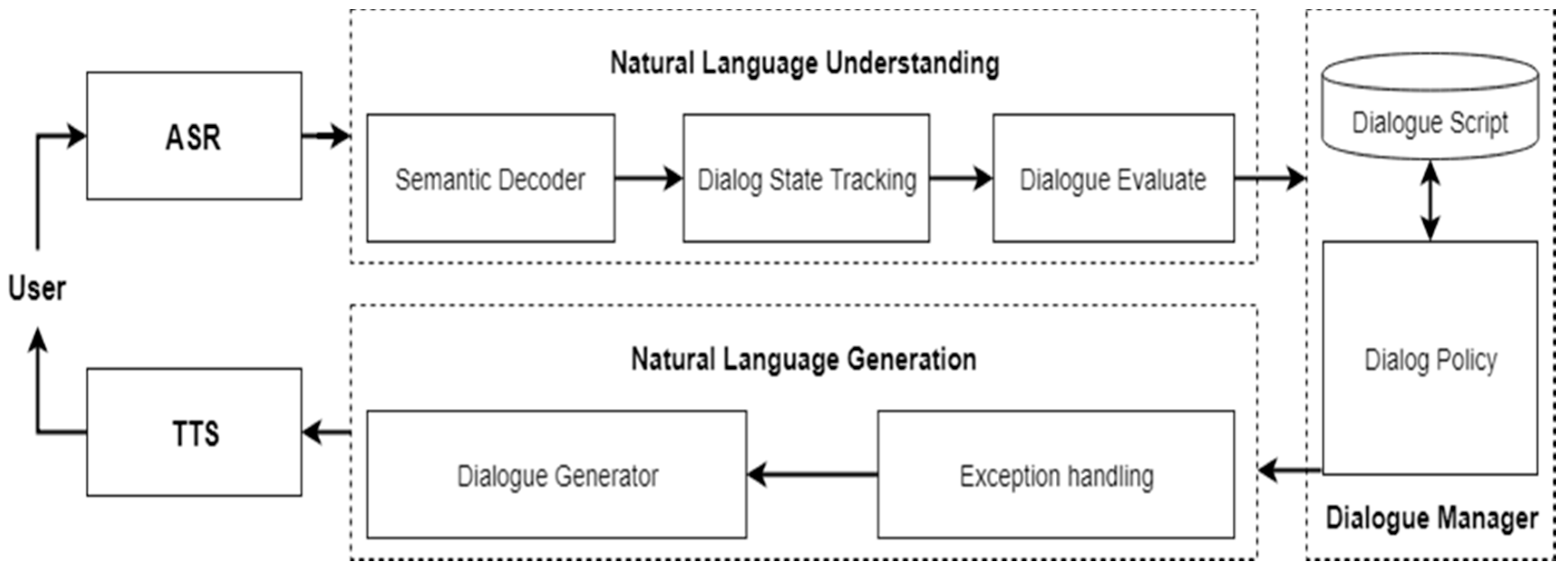

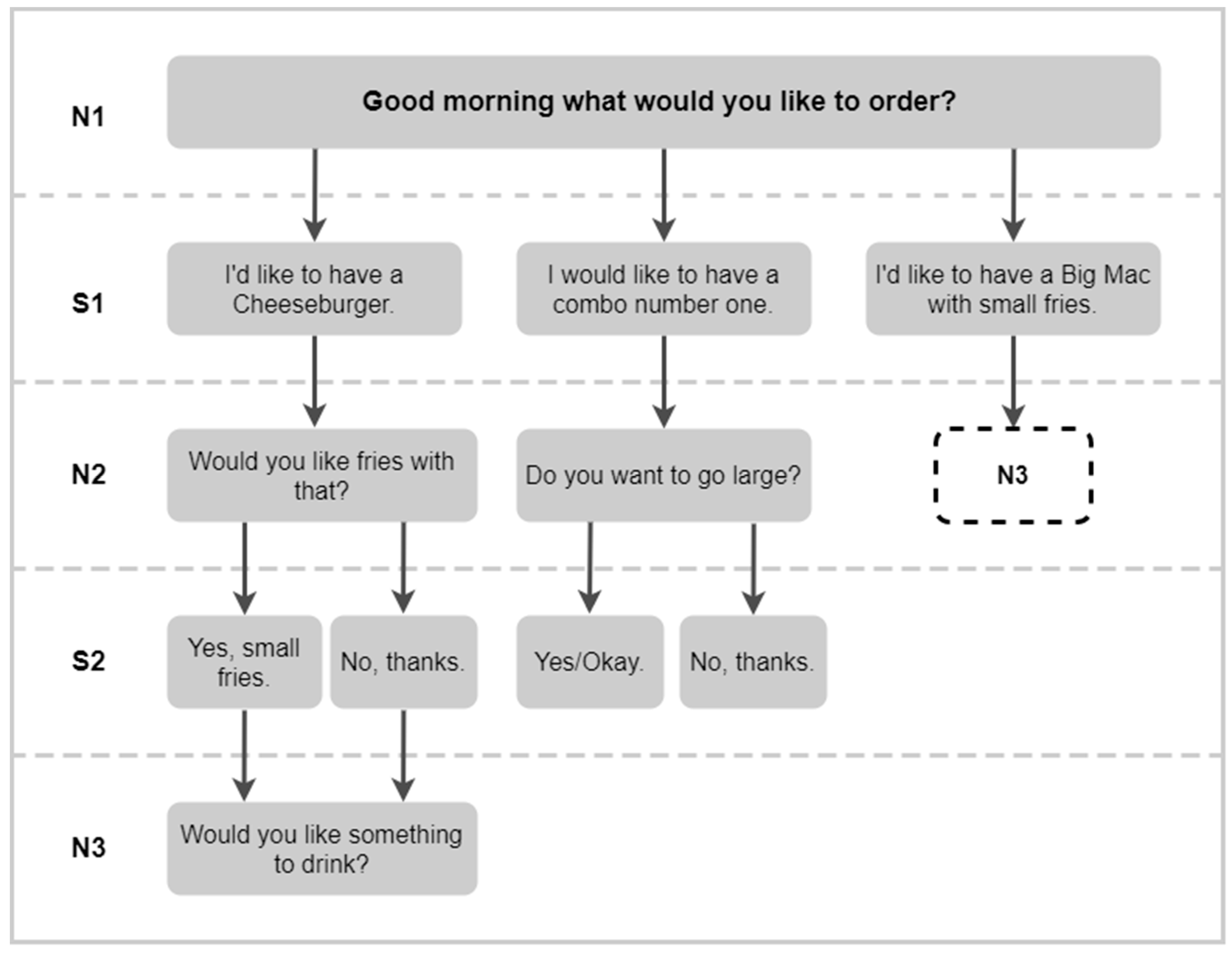

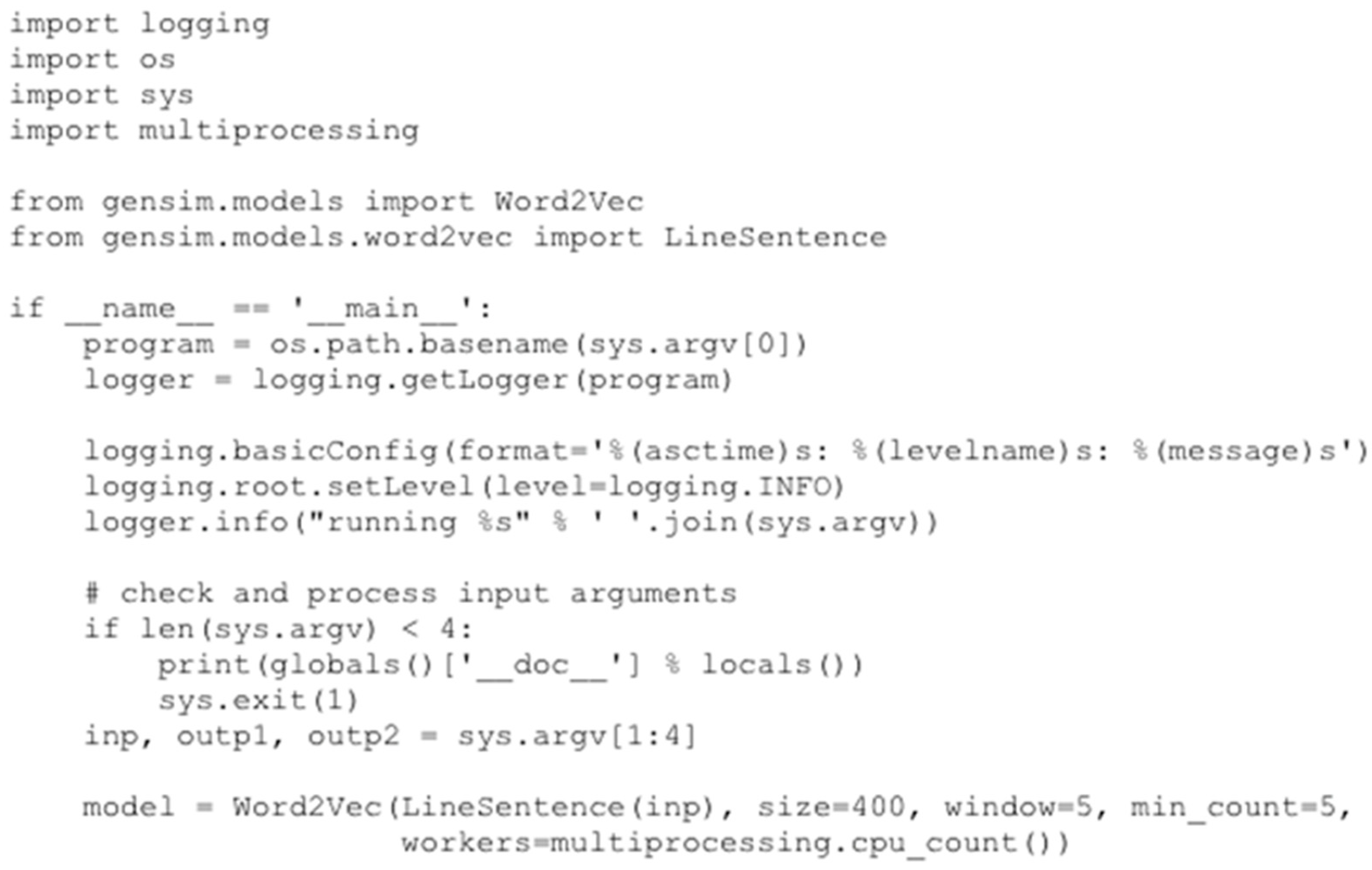

2.1. Proposed Task-Based Dialogue-Learning Model

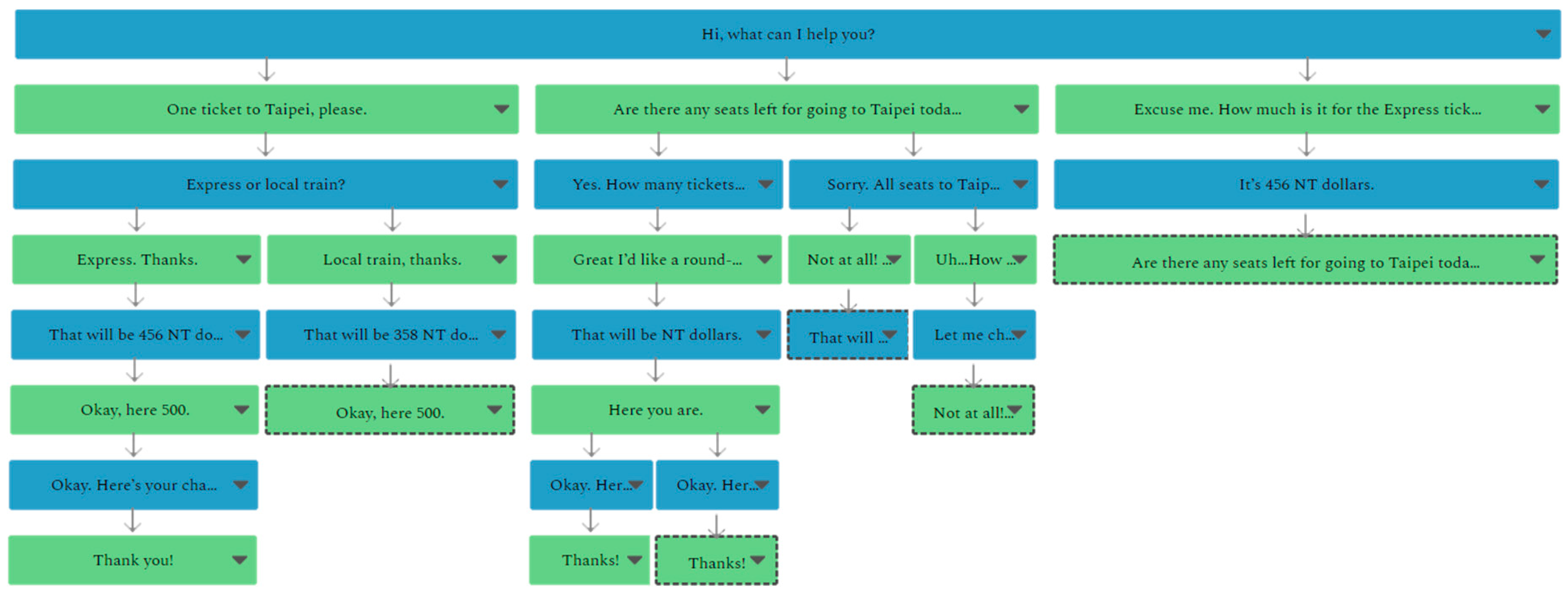

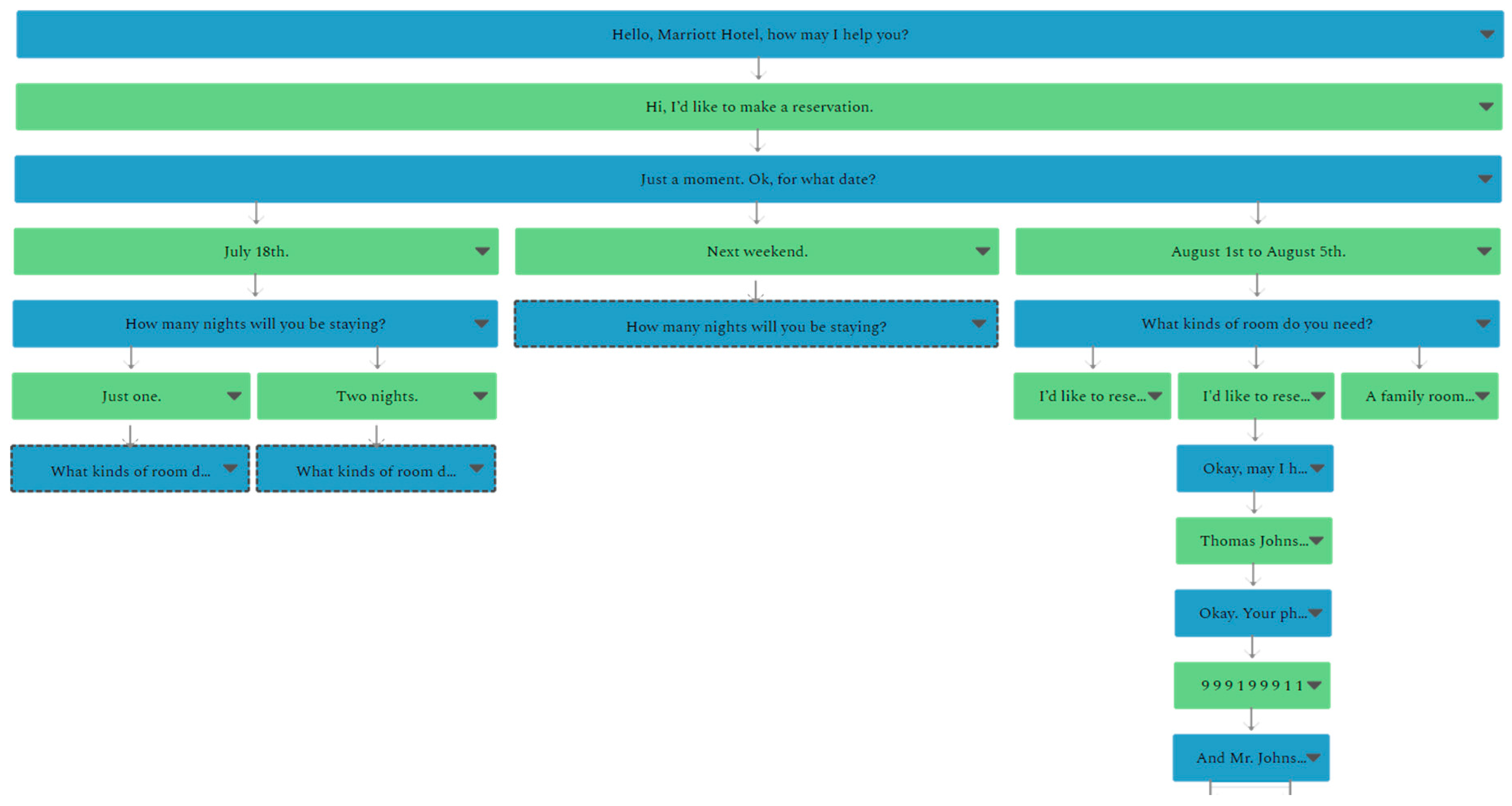

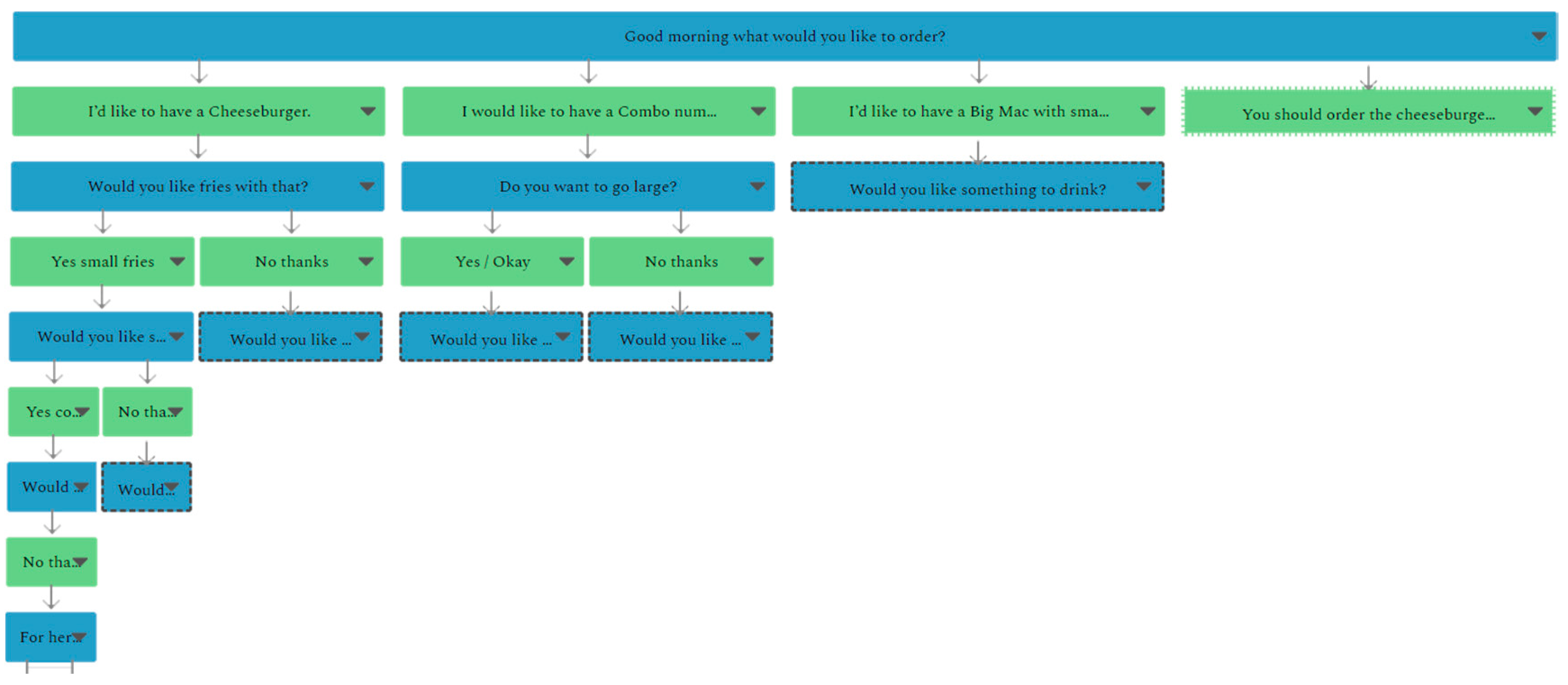

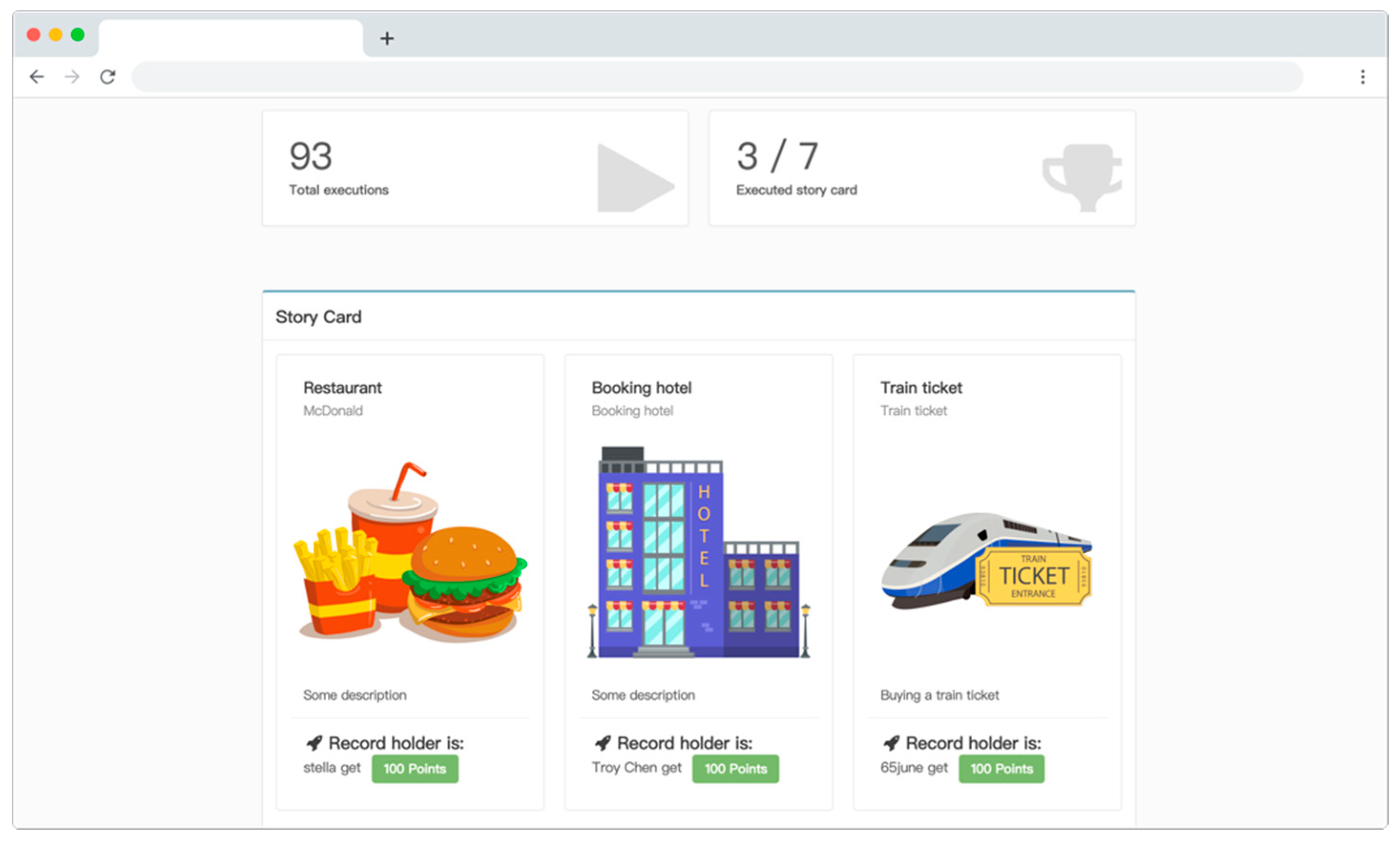

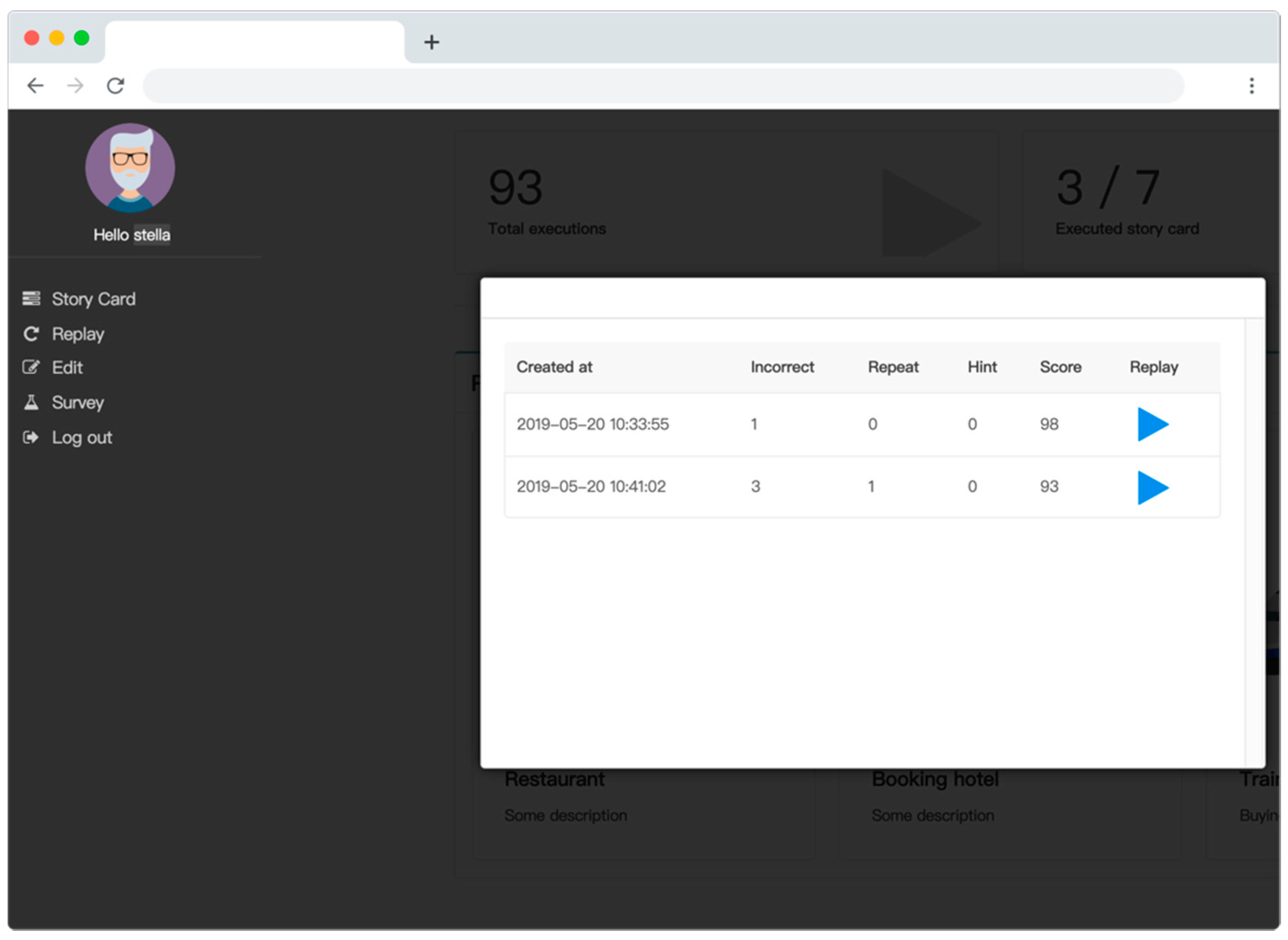

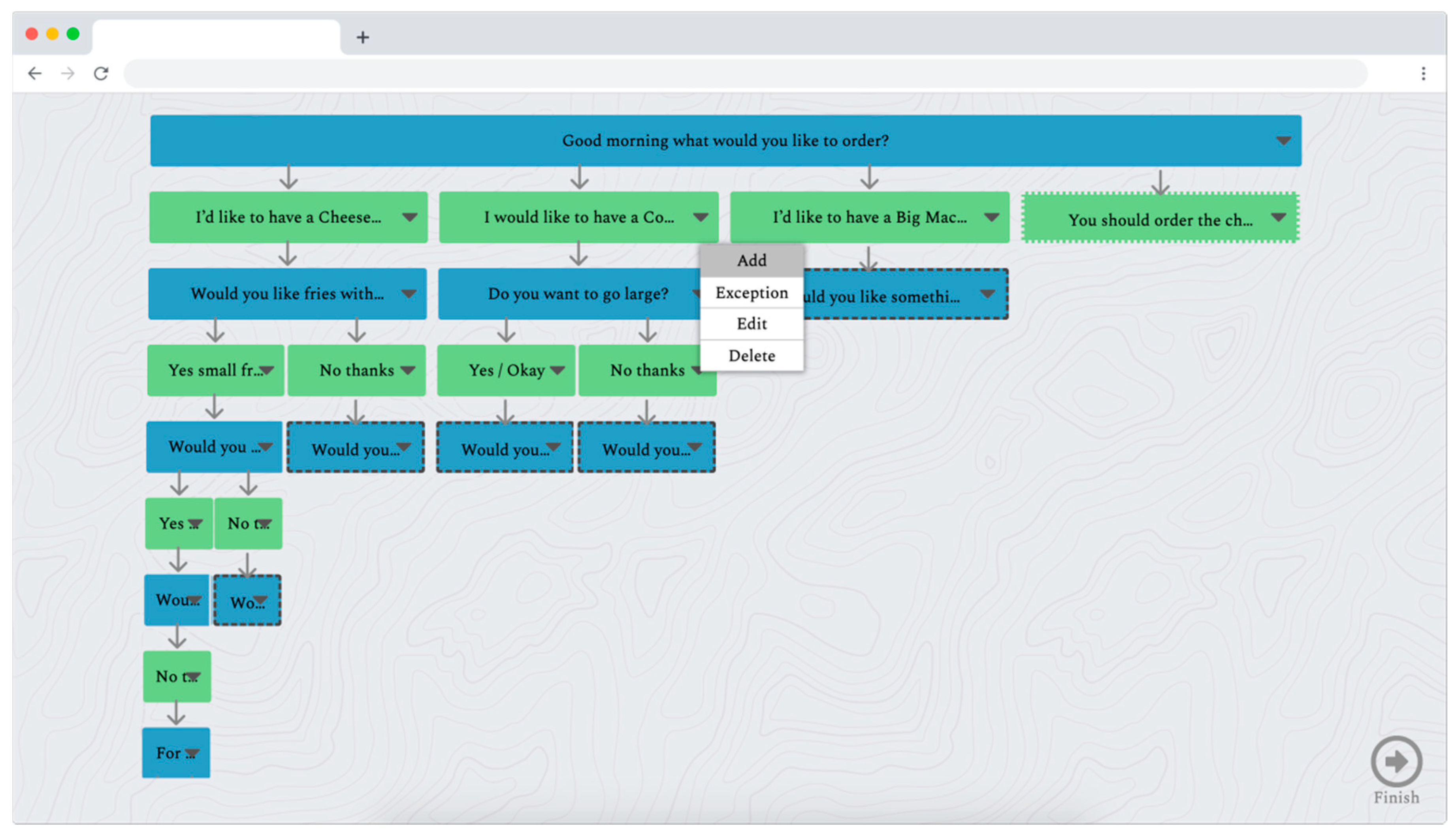

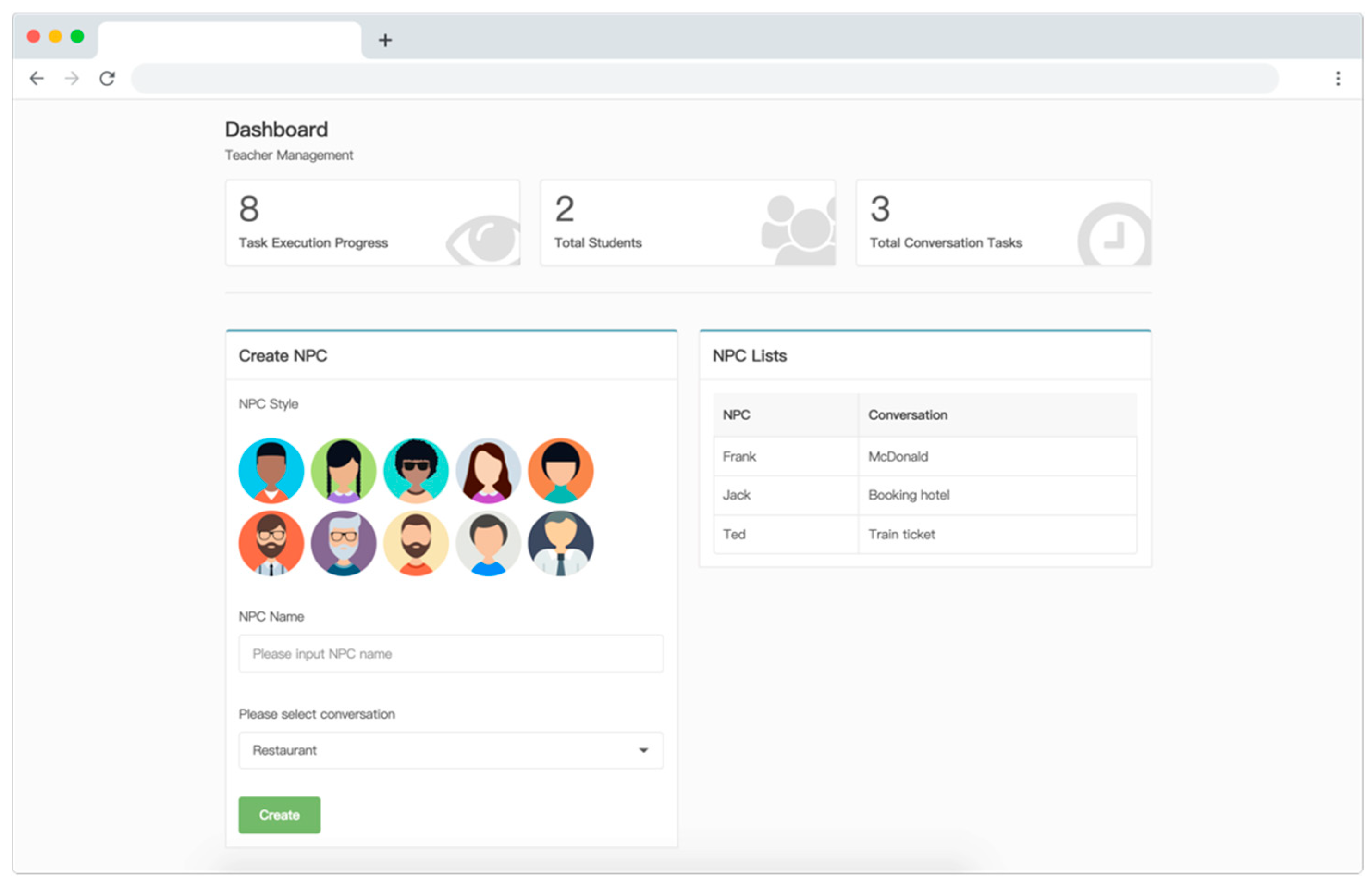

2.2. Experiment Procedure and System

3. Results

4. Conclusions

5. Limitations and Future Works

Author Contributions

Funding

Conflicts of Interest

Appendix A

Appendix B

- Q1. The user interface is simple and easy to use.

- Q2. The dialogue guidance is helpful for completing the task.

- Q3. The task flow is smooth and easy to follow.

- Q4. The voice recognition in the system is accurate.

- Q5. Compared to traditional classroom teaching, the content in the system is easier to access.

- Q6. Compared to traditional classroom teaching, the language-learning content in the system is easier to learn.

- Q7. Compared to traditional classroom teaching, I am better motivated to learn by using this system.

- Q8. Compared to traditional classroom teaching, I perform better by using this system.

- Q9. The scoring board and social interactive functions keep me motivated.

- Q10. Overall, this system is helpful in English speaking practice.

- Q11. Overall, I am satisfied with the experience of using this system.

- Q12. I would like to continue to use this system.

Appendix C

| id | Q1 | Q2 | Q3 | Q4 | Q5 | Q6 | Q7 | Q8 | Q9 | Q10 | Q11 | Q12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 4 |
| 2 | 5 | 1 | 2 | 4 | 4 | 3 | 3 | 3 | 4 | 4 | 4 | 3 |
| 3 | 4 | 4 | 3 | 3 | 3 | 4 | 3 | 3 | 4 | 4 | 4 | 3 |
| 4 | 4 | 4 | 2 | 3 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 |
| 5 | 4 | 3 | 2 | 2 | 4 | 3 | 4 | 3 | 3 | 3 | 3 | 2 |
| 6 | 3 | 3 | 3 | 2 | 4 | 4 | 5 | 4 | 4 | 3 | 3 | 3 |
| 7 | 4 | 3 | 4 | 3 | 4 | 4 | 3 | 3 | 4 | 3 | 3 | 3 |
| 8 | 5 | 5 | 4 | 3 | 4 | 4 | 3 | 4 | 5 | 3 | 4 | 4 |
| 9 | 4 | 4 | 3 | 3 | 4 | 4 | 4 | 4 | 4 | 3 | 3 | 4 |
| 10 | 4 | 3 | 4 | 3 | 4 | 3 | 3 | 3 | 4 | 4 | 4 | 3 |
| 11 | 4 | 2 | 2 | 3 | 4 | 4 | 3 | 4 | 3 | 3 | 3 | 2 |
| 12 | 4 | 3 | 4 | 2 | 4 | 3 | 4 | 3 | 4 | 4 | 4 | 3 |
| 13 | 4 | 4 | 4 | 4 | 3 | 3 | 2 | 2 | 3 | 4 | 4 | 3 |
| 14 | 3 | 3 | 2 | 2 | 4 | 4 | 3 | 3 | 3 | 3 | 3 | 3 |
| 15 | 5 | 4 | 3 | 3 | 4 | 4 | 5 | 4 | 5 | 5 | 5 | 4 |
| 16 | 4 | 4 | 4 | 3 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 |
| 17 | 4 | 3 | 4 | 3 | 3 | 4 | 4 | 3 | 3 | 4 | 4 | 4 |
| 18 | 3 | 2 | 2 | 3 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 3 |
| 19 | 3 | 3 | 2 | 2 | 3 | 3 | 3 | 3 | 3 | 3 | 4 | 3 |
| 20 | 3 | 2 | 3 | 3 | 4 | 4 | 4 | 2 | 3 | 3 | 3 | 3 |
| 21 | 5 | 2 | 1 | 1 | 2 | 4 | 4 | 4 | 2 | 4 | 3 | 2 |
| 22 | 2 | 3 | 2 | 2 | 3 | 3 | 4 | 3 | 4 | 3 | 4 | 4 |
| AVG | 3.125 (Q1–Q4) | | | | 3.545 (Q5–Q8) | | | | 3.511 (Q9–Q12) | | | |
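Each group average pools the responses to four questions. With 22 participants, every group holds 88 responses. The group totals are 275, 312, and 309, which give 3.125, 3.545, and 3.511. The following Python sketch recomputes these averages from the responses above. The names `RESPONSES`, `GROUPS`, and `group_average` are illustrative and do not come from the paper.

```python
# Likert responses from Appendix C: one row per participant (id 1-22), columns Q1..Q12.
RESPONSES = [
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 4],
    [5, 1, 2, 4, 4, 3, 3, 3, 4, 4, 4, 3],
    [4, 4, 3, 3, 3, 4, 3, 3, 4, 4, 4, 3],
    [4, 4, 2, 3, 4, 4, 4, 4, 4, 4, 4, 4],
    [4, 3, 2, 2, 4, 3, 4, 3, 3, 3, 3, 2],
    [3, 3, 3, 2, 4, 4, 5, 4, 4, 3, 3, 3],
    [4, 3, 4, 3, 4, 4, 3, 3, 4, 3, 3, 3],
    [5, 5, 4, 3, 4, 4, 3, 4, 5, 3, 4, 4],
    [4, 4, 3, 3, 4, 4, 4, 4, 4, 3, 3, 4],
    [4, 3, 4, 3, 4, 3, 3, 3, 4, 4, 4, 3],
    [4, 2, 2, 3, 4, 4, 3, 4, 3, 3, 3, 2],
    [4, 3, 4, 2, 4, 3, 4, 3, 4, 4, 4, 3],
    [4, 4, 4, 4, 3, 3, 2, 2, 3, 4, 4, 3],
    [3, 3, 2, 2, 4, 4, 3, 3, 3, 3, 3, 3],
    [5, 4, 3, 3, 4, 4, 5, 4, 5, 5, 5, 4],
    [4, 4, 4, 3, 4, 4, 4, 4, 4, 4, 4, 4],
    [4, 3, 4, 3, 3, 4, 4, 3, 3, 4, 4, 4],
    [3, 2, 2, 3, 4, 4, 4, 4, 4, 4, 4, 3],
    [3, 3, 2, 2, 3, 3, 3, 3, 3, 3, 4, 3],
    [3, 2, 3, 3, 4, 4, 4, 2, 3, 3, 3, 3],
    [5, 2, 1, 1, 2, 4, 4, 4, 2, 4, 3, 2],
    [2, 3, 2, 2, 3, 3, 4, 3, 4, 3, 4, 4],
]

# Zero-based column indices for each question group in the survey summary.
GROUPS = {
    "Q1-Q4": range(0, 4),
    "Q5-Q8": range(4, 8),
    "Q9-Q12": range(8, 12),
}


def group_average(responses, columns):
    """Mean of all responses in the given columns, pooled over every participant."""
    values = [row[c] for row in responses for c in columns]
    return sum(values) / len(values)


if __name__ == "__main__":
    for name, cols in GROUPS.items():
        print(f"{name}: {group_average(RESPONSES, cols):.3f}")
```

This sketch uses the pooled mean of all 88 responses. Because every participant answered every item, the pooled mean equals the mean of the four per-question averages.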

Appendix D

| System Rating | Teacher Rating | Machine Learning Prediction (Three Features) | Machine Learning Prediction (Five Features) |
|---|---|---|---|
| 98 | 80 | 90.35 | 87.74 |
| 89 | 90 | 84.70 | 89.53 |
| 89 | 85 | 84.70 | 85.96 |
| 100 | 99 | 91.76 | 92.34 |
| 80 | 80 | 77.65 | 82.01 |
| 96 | 1 | 91.49 | 90.07 |
| 80 | 80 | 86.70 | 77.77 |
| 94 | 80 | 89.89 | 89.91 |
| 89 | 89 | 86.70 | 87.40 |
| 97 | 97 | 94.68 | 91.83 |
| 74 | 74 | 76.49 | 77.36 |
| 85 | 83 | 83.39 | 83.38 |
| 83 | 83 | 84.77 | 83.91 |
| 94 | 90 | 87.53 | 91.11 |
| 88 | 88 | 83.39 | 86.25 |
| 94 | 93 | 86.98 | 92.01 |
| 63 | 65 | 69.15 | 68.21 |
| 100 | 98 | 90.81 | 94.86 |
| 93 | 92 | 86.98 | 90.72 |
| 98 | 98 | 89.53 | 92.93 |
| 98 | 95 | 89.92 | 95.78 |
| 91 | 88 | 87.19 | 90.86 |
| 78 | 80 | 76.29 | 73.75 |
| 100 | 100 | 91.28 | 93.91 |
| 96 | 90 | 89.92 | 94.49 |
| 88 | 85 | 82.91 | 86.55 |
| 100 | 98 | 90.88 | 95.36 |
| 94 | 94 | 86.89 | 87.82 |
| 98 | 98 | 89.55 | 92.15 |
| 92 | 92 | 85.56 | 89.11 |
| 88 | 88 | 83.42 | 88.79 |
| 92 | 90 | 86.26 | 90.06 |
| 98 | 80 | 90.54 | 87.27 |
| 65 | 70 | 72.02 | 71.44 |
| 78 | 78 | 76.30 | 73.46 |
| 98 | 80 | 90.79 | 79.43 |
| 96 | 96 | 89.33 | 91.61 |
| 94 | 94 | 87.88 | 89.74 |
| 100 | 80 | 92.24 | 94.21 |
| 88 | 88 | 87.88 | 89.75 |
| 92 | 92 | 85.81 | 80.17 |
| 98 | 98 | 89.88 | 85.90 |
| 100 | 80 | 91.24 | 88.02 |
| 100 | 100 | 91.24 | 90.28 |
| 96 | 96 | 88.53 | 83.70 |
| 92 | 92 | 85.91 | 88.12 |
| 92 | 88 | 85.91 | 88.33 |
| 55 | 60 | 63.72 | 63.18 |
| 94 | 94 | 87.21 | 89.65 |
| 70 | 70 | 72.85 | 71.37 |
| 88 | 88 | 83.30 | 84.08 |

| Target Sentence | Input Sentence | Similarity |
|---|---|---|
| I’d like to have a cheeseburger. | Hello can I have a hamburger please. | 0.915 |
| One ticket to Taipei, please. | I want to buy a ticket. | 0.831 |
| Yes, small fries. | Yes, I would like some fries. | 0.840 |
| Hi, I’d like to make a reservation. | How are you today. | 0.760 |
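The measure behind these similarity scores is not specified. For comparison only, the following Python sketch scores the same sentence pairs with bag-of-words cosine similarity. This is a deliberately simple substitute and is not the measure that produced the reported values. The function names are hypothetical. The last pair shares no words, so the sketch gives it 0.0, while the table reports 0.760. That gap suggests the reported scores come from a semantic model (for example, one based on embeddings) rather than from word overlap.

```python
import math
import re
from collections import Counter


def tokenize(sentence: str) -> list[str]:
    """Lowercase, normalise curly apostrophes, and split into word tokens."""
    return re.findall(r"[a-z']+", sentence.lower().replace("’", "'"))


def cosine_similarity(a: str, b: str) -> float:
    """Bag-of-words cosine similarity between two sentences (0.0 to 1.0)."""
    va, vb = Counter(tokenize(a)), Counter(tokenize(b))
    # Dot product over the tokens the two sentences share.
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(
        sum(c * c for c in vb.values())
    )
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    pairs = [
        ("I’d like to have a cheeseburger.", "Hello can I have a hamburger please."),
        ("One ticket to Taipei, please.", "I want to buy a ticket."),
        ("Yes, small fries.", "Yes, I would like some fries."),
        ("Hi, I’d like to make a reservation.", "How are you today."),
    ]
    for target, answer in pairs:
        print(f"{cosine_similarity(target, answer):.3f}  {target!r} vs {answer!r}")
```

Word overlap cannot tell that "cheeseburger" and "hamburger" are related. It also rewards shared function words such as "to", which is why a semantic measure suits free learner input better.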

| Theme | Task | Task Description | Level |
|---|---|---|---|
| Accommodation | Hotel Reservation | Reserve a room for two adults, two nights from 8th August. | 2 |
| Transportation | Buying Train Tickets | Buy a train ticket to Taipei with a 500 bill. | 1 |
| Restaurant | Ordering Food in a Restaurant | Order a burger and a coke. | 1 |
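Each task in the table asks the learner to convey a fixed set of information, such as the number of guests or the items ordered. The following Python sketch models this with a hypothetical `Task` class whose slots list accepted phrases. A slot is filled when one of its phrases appears in a learner utterance, and the task is complete once every slot is filled. The slot names, phrases, and naive substring matching are illustrative assumptions, not the paper's design.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """Hypothetical task: each slot maps to phrases that count as filling it."""
    theme: str
    name: str
    level: int
    slots: dict[str, list[str]]
    filled: dict[str, str] = field(default_factory=dict)

    def update(self, utterance: str) -> None:
        """Fill any still-empty slot whose phrase appears in the utterance."""
        text = utterance.lower().replace("’", "'")
        for slot, phrases in self.slots.items():
            if slot in self.filled:
                continue
            for phrase in phrases:
                if phrase in text:  # naive substring match (assumption)
                    self.filled[slot] = phrase
                    break

    def missing(self) -> list[str]:
        """Slots the learner has not mentioned yet."""
        return [slot for slot in self.slots if slot not in self.filled]

    def is_complete(self) -> bool:
        return not self.missing()


def make_tasks() -> list[Task]:
    """Build fresh task objects mirroring the three rows of the task table."""
    return [
        Task("Accommodation", "Hotel Reservation", 2, {
            "guests": ["two adults", "2 adults"],
            "nights": ["two nights", "2 nights"],
            "check_in": ["8th august", "august 8", "8 august"],
        }),
        Task("Transportation", "Buying Train Tickets", 1, {
            "destination": ["taipei"],
            "payment": ["500"],
        }),
        Task("Restaurant", "Ordering Food in a Restaurant", 1, {
            "food": ["burger"],
            "drink": ["coke"],
        }),
    ]


if __name__ == "__main__":
    order = make_tasks()[2]
    order.update("Hello can I have a hamburger please.")
    print(order.missing())
    order.update("And a coke, please.")
    print(order.is_complete())
```

Keyword matching like this is brittle. For example, "burger" also matches "hamburger". A real system would more likely combine slot tracking with a similarity or language-understanding model.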

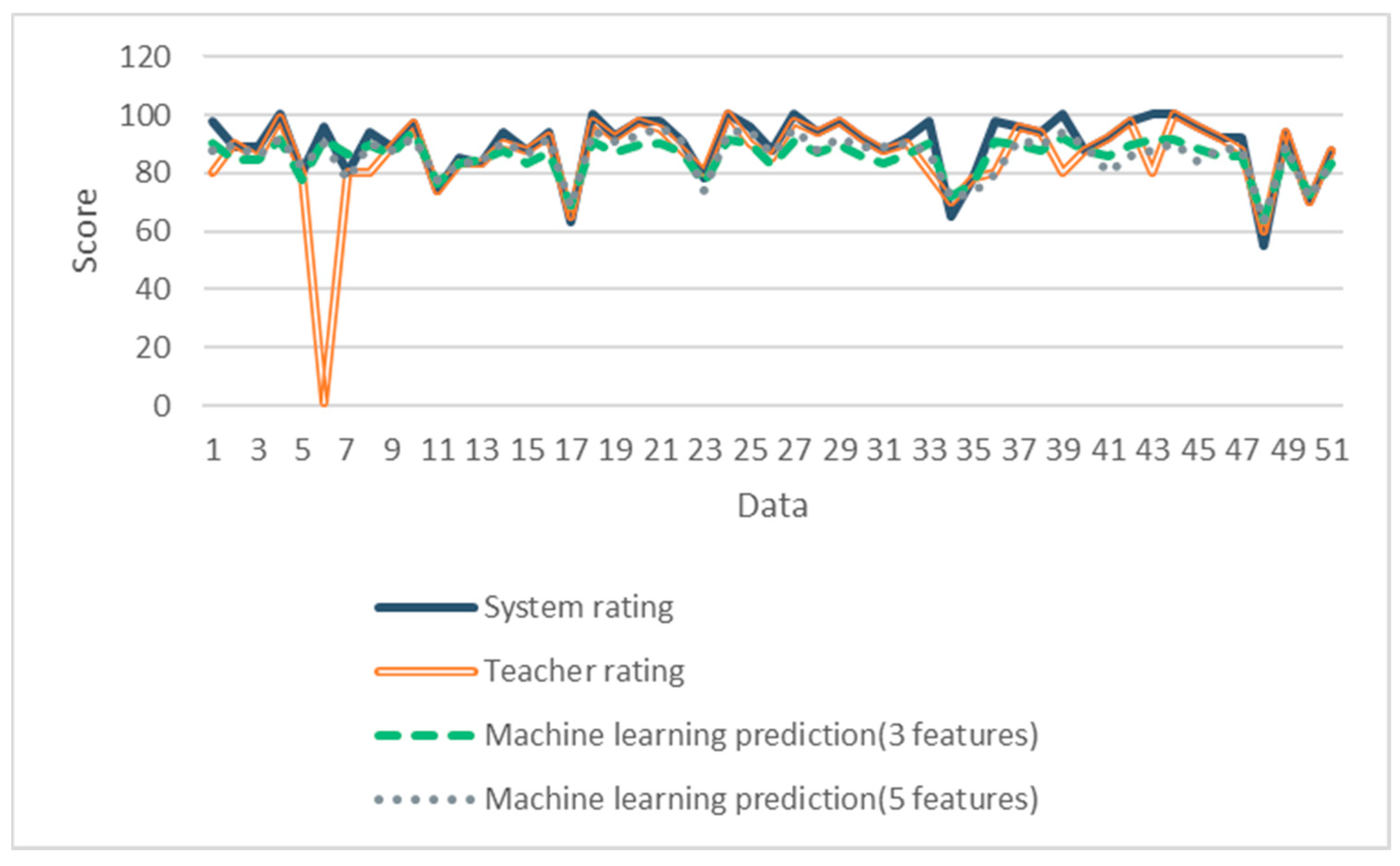

| Methods | Root Mean Squared Error | Mean Absolute Error |
|---|---|---|
| System rating | 14.81 | 5.02 |
| ML prediction (three features) | 14.08 | 6.98 |
| ML prediction (five features) | 13.59 | 5.82 |
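The System rating row can be reproduced from the Appendix D data by treating the teacher rating t_i as the reference for the system rating s_i. Then RMSE = sqrt((1/n) Σ (s_i − t_i)²) and MAE = (1/n) Σ |s_i − t_i|, where n = 51. The following Python sketch does this and returns RMSE ≈ 14.81 and MAE ≈ 5.02. The function names are illustrative. The same functions could be applied to the two prediction columns.

```python
import math

# System and teacher ratings transcribed row by row from Appendix D (n = 51).
SYSTEM = [98, 89, 89, 100, 80, 96, 80, 94, 89, 97, 74, 85, 83, 94, 88, 94, 63,
          100, 93, 98, 98, 91, 78, 100, 96, 88, 100, 94, 98, 92, 88, 92, 98, 65,
          78, 98, 96, 94, 100, 88, 92, 98, 100, 100, 96, 92, 92, 55, 94, 70, 88]
TEACHER = [80, 90, 85, 99, 80, 1, 80, 80, 89, 97, 74, 83, 83, 90, 88, 93, 65,
           98, 92, 98, 95, 88, 80, 100, 90, 85, 98, 94, 98, 92, 88, 90, 80, 70,
           78, 80, 96, 94, 80, 88, 92, 98, 80, 100, 96, 92, 88, 60, 94, 70, 88]


def rmse(pred, ref):
    """Root mean squared error of pred against the reference ratings."""
    assert len(pred) == len(ref)
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref))


def mae(pred, ref):
    """Mean absolute error of pred against the reference ratings."""
    assert len(pred) == len(ref)
    return sum(abs(p - r) for p, r in zip(pred, ref)) / len(ref)


if __name__ == "__main__":
    print(f"RMSE = {rmse(SYSTEM, TEACHER):.2f}, MAE = {mae(SYSTEM, TEACHER):.2f}")
```

Most of the gap between the two metrics comes from a single row, where the system rating is 96 and the teacher rating is 1. Its squared error of 9025 makes up most of the total squared error of 11182. MAE weights that row only linearly (95 out of a total absolute error of 256).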

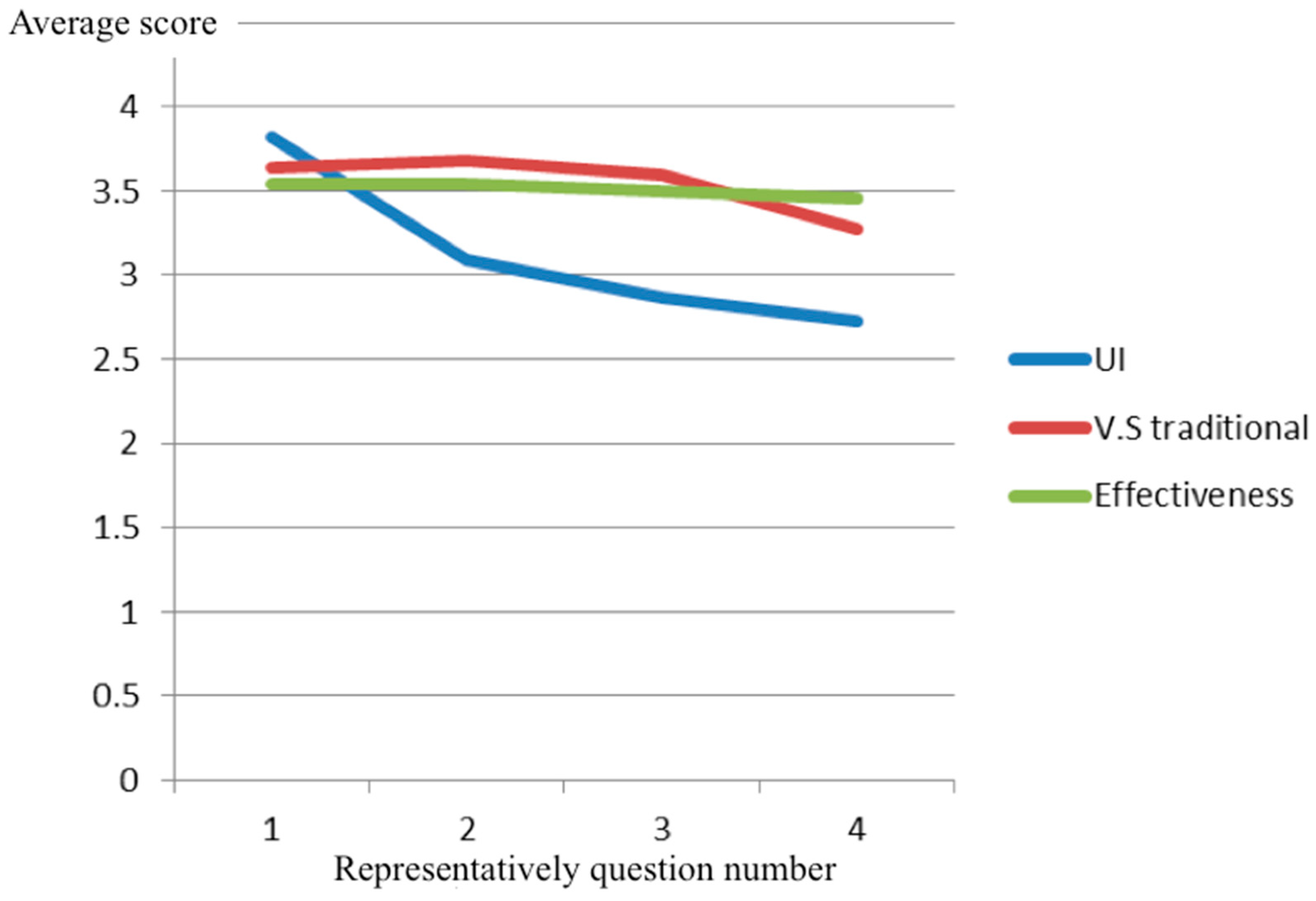

| Survey Topics | AVG |
|---|---|
| Participants’ perception of the user interface (Q1–Q4) | 3.125 |
| Participants’ perception of the chatting process compared to traditional instruction (Q5–Q8) | 3.545 |
| Participants’ perception of the overall effectiveness of the system (Q9–Q12) | 3.511 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, K.-C.; Chang, M.; Wu, K.-H. Developing a Task-Based Dialogue System for English Language Learning. Educ. Sci. 2020, 10, 306. https://doi.org/10.3390/educsci10110306