2. Literature Review

Artificial intelligence (AI) is not only regarded as a technological breakthrough but also as a macroeconomic shock with important implications. It is reshaping productivity spaces, altering labor markets, further widening inequalities in incomes, and presenting new challenges in inflation targeting as well as in macroeconomic governance. However, even as the scholarly literature labels AI as transformative, the systematic linking of its diffusion to broader macroeconomic frameworks is still lacking; this is the case in Europe in particular. That is exactly where the present study intervenes.

Abrardi et al. (

2019) pave the way by posing AI as a general-purpose technology with spillovers in the broader economy, as well as institutions and capital barriers that are able to delay diffusion.

Acemoglu (

2025) complements such a viewpoint through formalizing the macroeconomic automation-driven substitution–productivity improvement trade-off in such a way that AI’s long-term economic effects are conditional on institutions.

Autor (

2022) supports the latter in presenting AI employment dynamics as biased in favoring high-skill employees, which causes skill-biased technological change and amplifies wage polarization.

However, much such research remains macro-theoretical and not empirically supported in diverse institutional contexts.

Agrawal et al. (

2019) describe AI as a “prediction engine”, central to industrial productivity, but their work is not based on an empirical connection to national-level enablers like access to credit or public investment in innovation.

Albanesi et al. (

2023), for Europe, advise caution even when high-tech aspirations are in place; this is because traditional kinds of employment, shed as a result of automation, are not well compensated in new ICT jobs—the suggestion being that the aggregate gains coming from AI are not well shared and are structurally conditioned.

Aldasoro et al. (

2024) provide some monetary policy ideas, such as using AI to stimulate output while constraining inflation, but the cross-country difference in such an effect is underexplored. Moreover, in developmental contexts,

Aromolaran et al. (

2024) prove that AI investment reduces poverty when diffusion is inclusive and broad-based. That conditionality illuminates the role of diffusion mechanisms, which are typically excluded in texts focusing on AI’s payoff. At the firm level,

Babina et al. (

2024) document that AI can spur innovation, but market concentration threatens small firms that are lacking the absorption capacity to transform.

Bickley et al. (

2022) mention that AI is revolutionizing the economics practice itself, but the lack of a harmonized technique inhibits generalizability in such claims.

Such dissimilar works, though thematically substantial, point to a crucial omission: not many reconcile macroeconomic configuration with the empirical modeling of AI diffusion. As aptly commented on by

Hoffmann and Nurski (

2021) and

Gualandri and Kuzior (

2024), the firm-level focus in the prevailing scholarship overlooks the role that macro-level policy and national institutions play in shaping diffusion patterns, not least in heterogeneous European economies. The omission is considerable because European countries significantly differ in their digital preparedness, infrastructure, credit markets, and labor market inflexibility.

Bonab et al. (

2021) make a normative case for “anticipatory regulation” for the avoidance of AI-promoted growth in inequality. Moreover,

Bonfiglioli et al. (

2023) link AI adoption in U.S. commuting regions to polarization in cognitive labor, which includes regional disparity—an element perhaps evident in Europe.

Brynjolfsson and Unger (

2023) present AI as structurally transformative, but

Brynjolfsson et al. (

2018) note a “productivity paradox,” in which potential benefits are not realized due to under-measurement and late diffusion.

Brynjolfsson et al. (

2023) note that generative AI is expected to raise productivity for low-skilled labor, but its diffusion remains patchy. The suggestion is that policy and institutional settings are important mediators between AI’s macroeconomic effects. On the behavioral front,

Camerer (

2018) puts forward the possibilities that algorithmic choice could transform macroeconomic behavior itself. Such results are, however, largely conceptual and lack empirical support.

Chen et al. (

2024) project various global effects for AI, highlighting infrastructure and institutional absorptive capacity differentials.

Cockburn et al. (

2018) indicate that AI’s returns in R&D would become even more concentrated, perpetuating innovation inequality. Such warnings, however, lack country-level adoption profiles and therefore provide little guidance for policy.

Thus, the current body of research is thematically well-grounded but structurally incomplete. Although theory recognizes AI’s macro-level implications, no systematic, cross-country empirical research linking AI adoption rates to macroeconomic fundamentals such as trade openness, access to domestic credit, or health spending—the very essence of economic resilience and innovation preparedness—bridges the gap. The current study tries to bridge this fundamental empirical gap.

By means of a dual-method approach—econometric modeling (fixed and random effects) and machine learning algorithms (e.g., K-Nearest Neighbors, Clustering)—the present article explores the national-level macroeconomic characteristics and institutional configurations that can be used in explaining AI adoption in large European firms. With such an endeavor, it responds to demands for methodological innovation and policy-driven empirical scholarship, such as those raised by the following authors:

Ruiz-Real et al. (

2021);

Szczepanski (

2019);

Trabelsi (

2024);

Varian (

2018);

Wagner (

2020);

L. Wang et al. (

2021);

X. Wang et al. (

2025);

Webb (

2019);

Wolff et al. (

2020) and

Zekos (

2021).

Such, for example, is the case for AI-driven nonlinear macro-behavior requiring high institutional capacity. The idea that AI can exacerbate the offshoring of thinking work, endangering middle-skill occupations, is shown by

Webb (

2019). The idea that AI’s productivity advantage in healthcare is contingent on public belief and governmental capacities—with the latter being a macro-institutional variable—is related by

Wolff et al. (

2020). The idea that setting AI’s net social benefits against future public damages requires global cooperation is pointed out by

Zekos (

2021). Yet, such comments are made in isolation and are seldom embedded in consistent, comparative analyses.

Our study makes a typological contribution in the Cluster analysis of European countries, showing that structurally similar economies can exhibit different AI adoption paths due to institutional mismatch. The paper further provides predictive modeling for estimating diffusion in alternative policy futures—a factor that is not present in the previous scholarship. Finally, in our study, we are not merely descriptive but rather diagnostic and prescriptive, which not only aids theory building but also policy guidance. Lastly, the worth of such research lies in connecting theory and empirics, micro and macro, and even technology and policy. The paper bridges a significant gap in AI scholarship in portraying an institutionally grounded, cross-country research on European adoption dynamics, in advancing knowledge on the European economic, structural, and policy drivers behind digital change.

A synthesis of the literature review according to macro-themes is presented in the following

Table 1.

Artificial intelligence is not just a technological innovation but a transformative macroeconomic force subject to national institutions, policy, and structural conditions. While existing work hints at AI’s productivity value and risk factors—in such areas as inequality and labor polarization—the bulk of the work is theoretical, firm-level, or U.S.-centric. Understudied in AI adoption are crucial macroeconomic factors like trade openness, credit access, and expenditure on health. Our study fills the gap in the inclusion of panel econometrics and machine learning for an AI diffusion study in European countries. Our study determines structural and institutional factors that provide a novel, evidence-based typology for inclusive and adaptive AI policy formation.

4. A Methodologically Integrated Approach to Analyzing AI Adoption: Panel Econometrics Meets Machine Learning

For systematically de-linking the structural and institutional determinants of European AI adoption, this study employs an integrated empirical framework. It complements panel econometric approaches with supervised machine learning processes along with unsupervised Clustering algorithms in pursuit of explanatory robustness, predictive accuracy, and typological differentiation. These paths work in synergistic combination in order to produce a multidimensional view of AI diffusion across European member states. The variable chosen for investigating the adoption of artificial intelligence focuses on the adoption of AI in large European companies. This choice is based on both practical reasons and theoretical and economic policy issues. In theory, such enterprises possess refined organizational structures, significant economic means, and refined management capacities—the primary requirements for absorbing and utilizing refined technologies such as artificial intelligence (AI). Large enterprises possess significantly greater chances of possessing a qualified workforce, refined infrastructures of technology, as well as economic means of efficiently utilizing AI in production and control systems (

Ardito et al., 2024;

Oldemeyer et al., 2025). SMEs possess structural disadvantages of limited digitalization, limited financial means, as well as technical and strategic capacity shortages, due to which they possess limited potential for utilizing AI in systematic and scale-based manners (

Zavodna et al., 2024;

Kukreja, 2025). Large enterprises, in any case, not only possess larger potential for innovative capacities, they also possess specific potential for clear productivity as well as capacity gains in operations; additionally, they show gains in improving their commercial offering to customers and stakeholders. Apart from this, adoption in such enterprises possesses multiplier gains across value chains, with indirect impulses towards SMEs occurring towards innovations as well (

Ardito et al., 2024).

Panel Econometric Models: Given the multi-country, multi-year nature of the dataset (2018–2023, 28 European countries), panel data models attempt to account for both the cross-sectional as well as time series dimensions, thereby capturing dynamic heterogeneity as well as unobservable country-level impacts. Fixed-effect models perform exceptionally well in controlling unobserved, time-invariant heterogeneity across countries, such as those that occur due to variations in institutional environments, regulation, or cultural perceptions of technological innovations, thereby allowing a more sophisticated causal interpretation of AI adoption’s macroeconomic determinants. In contrast, random-effects models assume orthogonality between the regressors as well as unobserved country-specific impacts, resulting in more efficient estimates, if this assumption holds. Choice between these two designs is aided by the Hausman test, which investigates the consistency of estimators, with ancillary evidence coming in specification diagnostics such as the Breusch–Pagan test and F-tests (

Popović et al., 2025). Application of these models determines statistically significant and robust associations between AI adoption (ALOAI) and a variety of macroeconomic indicators, with positive associations with health expenditure, GDP per head, openness of trade, and inflation. At the same time, it identifies, in some instances, unexpectedly adverse associations with domestic credit extended towards the private sector as well as with gross fixed capital formation, indicative of potential inefficiencies or structural mismatches in financial as well as capital resources being deployed in the context of some countries.

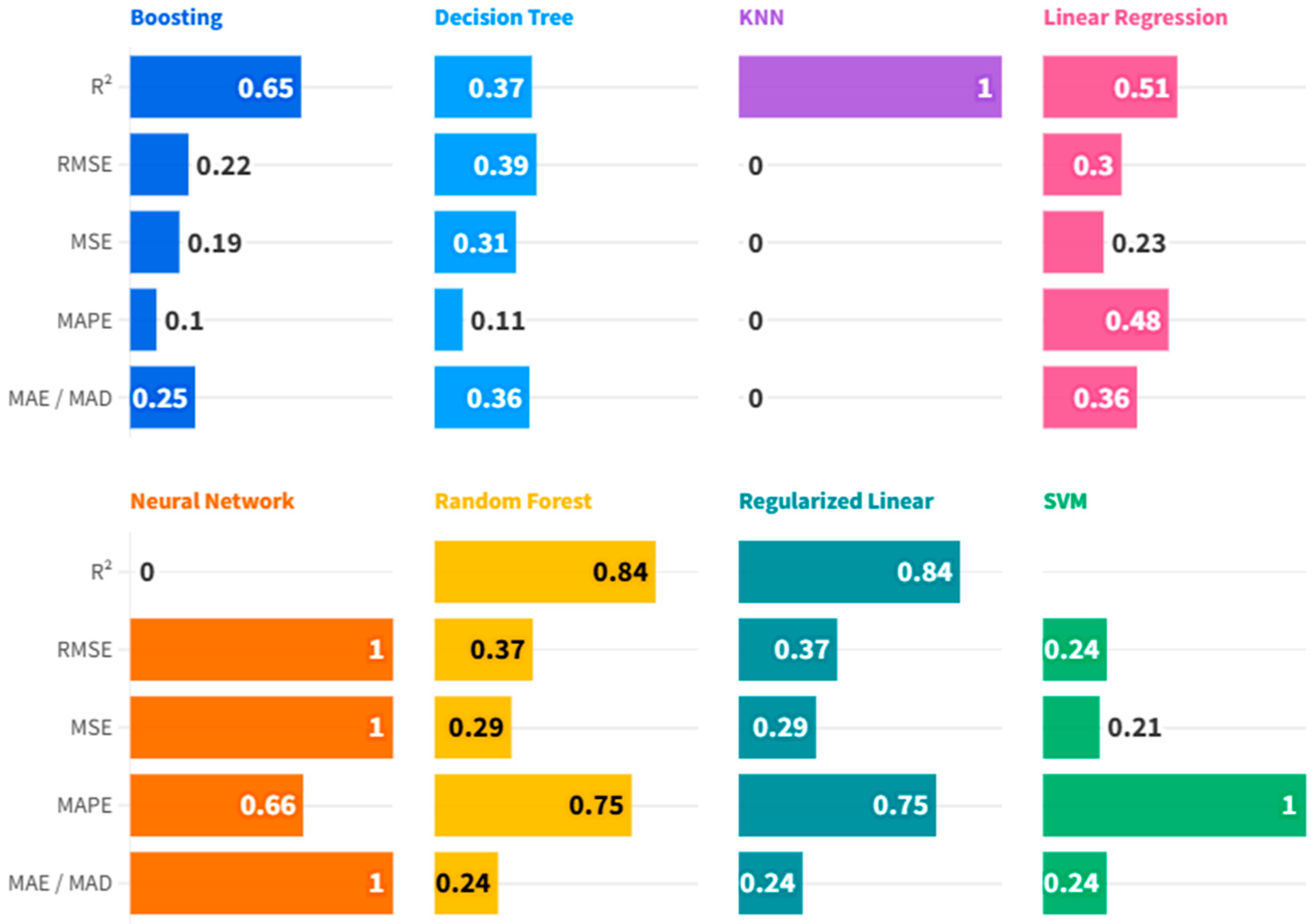

Supervised Machine Learning Algorithms: To complement the econometric analysis, we conducted a technical and scientific comparison of eight supervised machine learning algorithms: Boosting, Decision Tree, K-Nearest Neighbors (KNNs), Linear Regression, Neural Networks, Random Forest, Regularized Linear Regression, and Support Vector Machines (SVMs). These models have been trained using normalized data and tested using standard indicators of predictive performance, including MSE, RMSE, MAE, MAPE, and R2 (

Tapeh & Naser, 2023;

Ozkan-Okay et al., 2024). Among them, KNN emerged as the best-performing algorithm with near-zero prediction error as well as full explanatory power (R2 = 1.000). In order to determine the robustness of these results and reduce concerns of overfitting, a cross-validation exercise has been conducted as detailed in

Appendix A. Moreover, dropout analysis using KNN as a framework revealed domestic credit towards the private sector, as well as GDP per capita, and expenditure on health, as the most significant AI adoption drivers, revealing significant roles of internal financial perspectives as well as institutional capacity vis à vis external trade openness in determining the diffusion of tech.

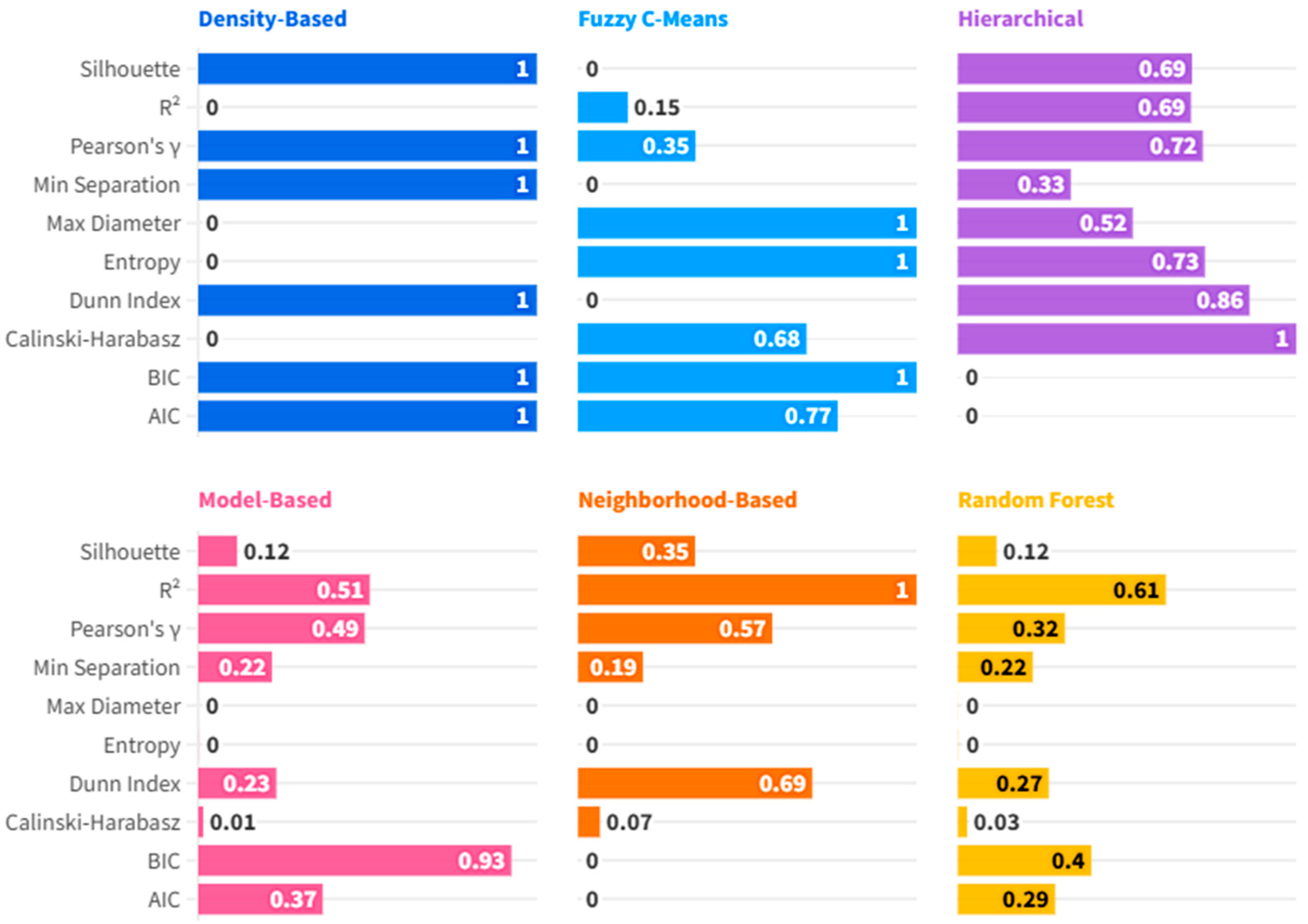

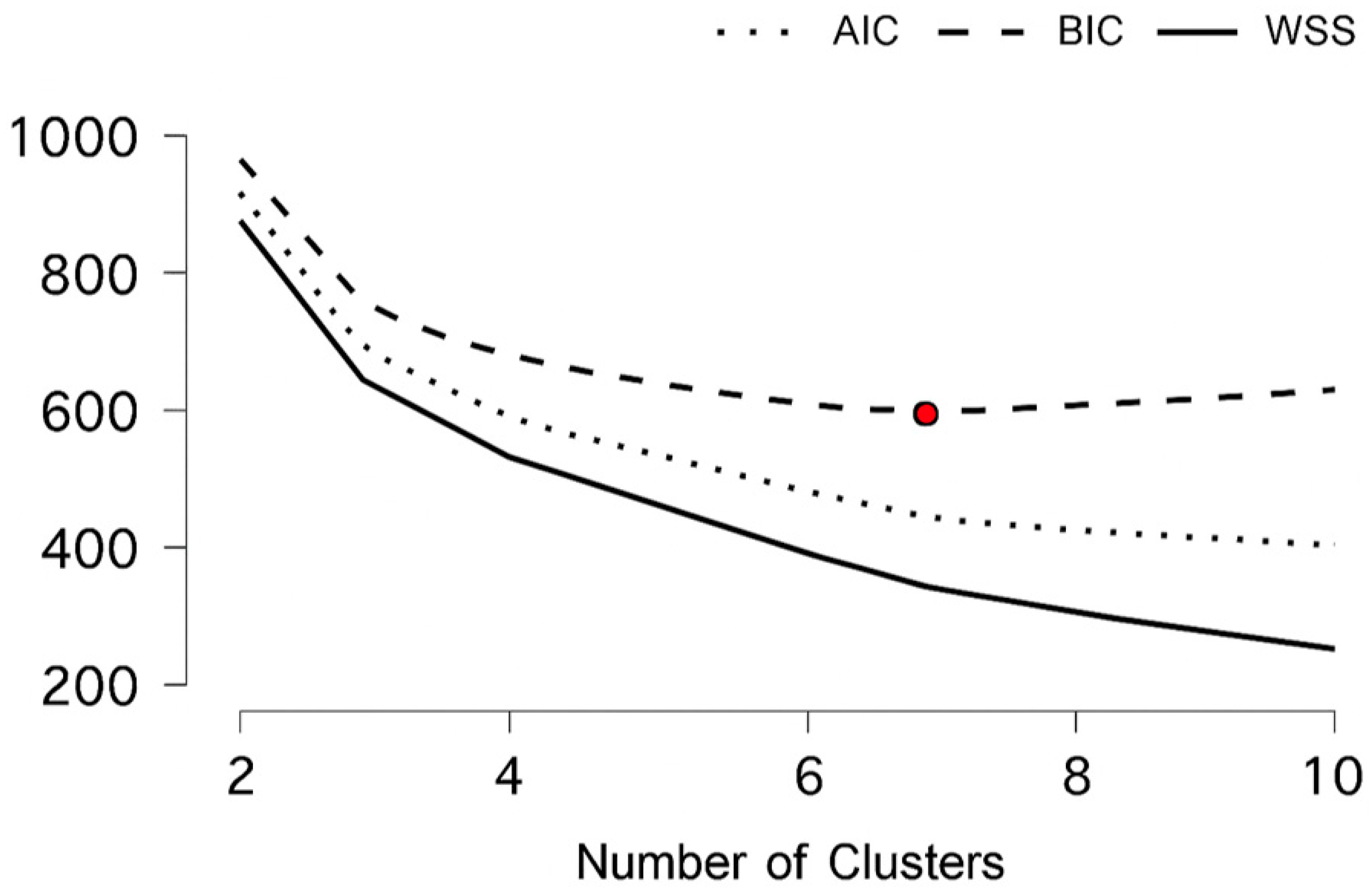

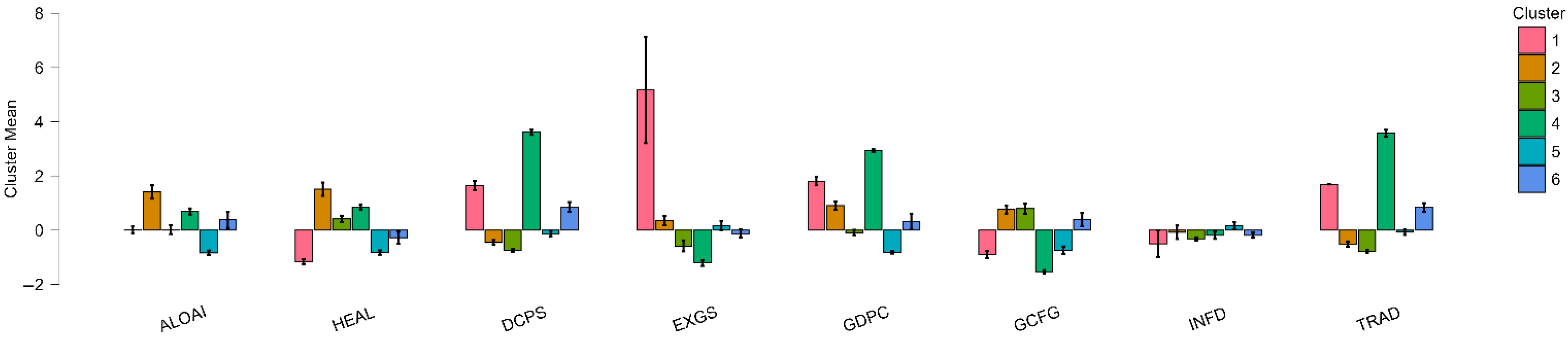

Unsupervised Clustering Analysis: In order to explore latent typologies of AI diffusion in EU nations, a whole range of unsupervised learning schemes was employed, including Density-Based, Fuzzy C-Means, Hierarchical, Model-Based, Neighborhood-Based, and Random Forest Clustering protocols. The analysis applied these procedures to macroeconomic and institutional indicators to uncover structural similarities among countries, revealing distinct patterns in their AI adoption trajectories (

Shokouhifar et al., 2024). Inferences derived from such Clusters yield meaningful structures in countries’ responses towards macroeconomic pressures—the likes of inflation shocks (

Czeczeli et al., 2024), fiscal policy changes (

Andrejovská & Andrejkovičova, 2024), as well as digital labor preparedness gaps (

Iuga & Socol, 2024)—thereby enabling further specialized comparative research as well as evidence-based policy design. Such structural convergence research utilizing such a Clustering-based method reinforces multi-method research complementarity, merging causal inference employing panel data models, predictive power employing machine learning, as well as typological insight utilizing Clustering. Such juxtaposition is particularly relevant in spheres such as financial institutions as well as cybersecurity, in which thematic segmentation as well as strategic differentiation signify most (

Olasiuk et al., 2023), as well as being in alignment with prevailing research needs in multisectoral empirical underpinnings in AI policy research (

Popescu et al., 2024).

A combined methodological design of panel data econometric models, supervised machine learning models, and unsupervised Clustering algorithms has some notable strengths in examining AI adoption in EU countries. Firstly, econometric panel data offer stringent causal interpretability with control of both cross-sectional and temporal variations, and can control unobservable, time-invariant, country-specific factors such as institutional quality or cultural innovation. It enhances internal validity and isolates macroeconomic determinants of AI diffusion with methodological refinement. Secondly, complementation of econometric models with supervised machine learning models such as Boosting, KNN, SVM, and Random Forest, enables the modeling of complex, nonlinear associations overlooked by traditional linear models. With standardized performance indicators such as MSE, RMSE, MAE, MAPE, and R2, it offers a sound comparison of algorithmic performance. KNN emerged as the top-performing model, with validity tested using cross-validation (

Appendix A), resulting in greater confidence in inferences with control of overfitting. Thirdly, dropout analysis in the KNN framework provided insights into variable importance, including domestic credit, GDP per head, expenditure on health, and most internal drivers of AI adoption—highlighting financial and institutional preparedness at the expense of external trade-based drivers. Fourthly, unsupervised Clustering algorithms such as Density-Based, Fuzzy C-Means, Hierarchical, Model-Based, and Neighborhood-Based algorithms allow AI diffusion typology identification of latent structures in AI diffusion using structural agglomeration of countries with similar structures with diverging adoption patterns. These Clusters act as rich sources of inputs towards focused policy interventions, highlighting the response of countries at this level towards inflationary pressures, fiscal duress, and labor market shortages. Overall, this multi-methodology is tractable with causal description, prediction modeling, and structural classification under one empirical structure. It is especially insightful as a beacon towards evidence-informed digital as well as innovation policy and is in alignment with current research calls towards multi-methods in AI governance scholarship research (

Shokouhifar et al., 2024;

Popescu et al., 2024;

Olasiuk et al., 2023).

We have used the variables shown in

Table 2.

Some specifications on the definition of the ALOAI variable and on the exclusion of some industrial sectors: Exclusion of agriculture, mining, and as finance industry units from the ALOAI indicator is methodologically and substantively defensible. These units have technologically and structurally distinct profiles, significantly differing from those of units in manufacturing and services. Agriculture, as well as mining, for example, have capital-intensive modes of production with limited digitalization. Although AI applications persist in them—such as precision agriculture or predictive maintenance—the applications are comparatively niche-based and do not pervasively stretch across large enterprises (

Hasteer et al., 2024). Finance is, on the other hand, a digital outlier industry with early and sophisticated AI adoption in fields such as fraud detection, algorithmic trading, and customer analytics (

Hassan, 2024). The inclusion of this industry would risk muddying cross-industry comparability due to its extremely high degree of digital maturity (

Lopez-Garcia & Rojas, 2024). In addition, this industry is subject to a distinct set of regulation schemes influencing AI adoption in ways inapplicable in other industry contexts, thus introducing regulation variable confounders at variance with study goals of establishing the macroeconomic and labor market determinants of AI adoption (

Kumari et al., 2022). The inclusion of such structurally distinct industry units would allow us to work towards reducing industry comparability as well as ensuring the coherence of the ALOAI indicator. In addition, the agriculture and mining industry units typically have irregular data coverage in EUROSTAT, mainly due to firm size distributions and confidentiality restrictions (

Hasteer et al., 2024). The inclusion of such industry units would compromise the statistical soundness of the study. Exclusion of such units thus ensures a sounder, comparable, and policy-relevant depiction of AI adoption among large enterprises in Europe.

Data Preprocessing and Gap Filling—Implementing Piecewise Linear Interpolation for Missing Values: The treatment of missing data was achieved through the formalization of a piecewise linear interpolatory method across the dataset. It was chosen in conformity with foregoing methodological demands, i.e., its empirical simplicity, low risk of distortion, as well as conformity with the structure of panel data. With such an approach, one can create believable, continuous series within empirical boundaries while preserving comparability across sections. Computational implementation of the method follows and steadfastly the linear formula below, which captures the assumption of a uniform rate of change between known annual observations with the avoidance of overfitting or spurious curvature in interpolated estimates. Specifically, the following formula has been applied:

where

is the interpolated value at year t, and

are the known values at the bounding years. In Belgium—looking at the period between 2021 (41.44) and 2023 (47.86), for instance—we can see that, in 2022, the interpolated value is precisely the arithmetic mean (44.65), as would be the case in a simple linear interpolating function. In those cases in which only one initial data value is available (e.g., in 2020 or 2021)—as in Germany’s or Croatia’s cases—prior years can be backward-extrapolated along a fixed inclination; meanwhile, successive years are forward-extrapolated until the next available value is reached. Moreover, because no fluctuations or advanced curvatures are demonstrated in such interpolated series, this prevents any adoption of polynomial- or spline-type interpolating methods. Adopted in this way, such an approach ensures temporal homogeneity and continuity over partially completed time series, in addition to offering a smooth, plausible course of history. From a validity perspective, linear interpolation is a well-respected approach in economic research in cases wherein believable endpoint values exist, as well as in cases wherein missing values have to be approximated without injecting artificial deformation. In this case, it allows one to formulate a harmonious dataset that is susceptible to panel and econometric investigations, with stable, interpretable estimates in the succeeding modelling stages (

Table 3).

The scientific rationale of applying piecewise linear interpolation in recreating missing annual values of AI adoption is grounded in sound methodological bases of time series work as well as numerical approximation, as illustrated by

Dezhbakhsh and Levy (

2022). Piecewise linear—in its premise—comprises the idea that, between two empirically observed points, the most objective and the least assumption-laden estimate is the one that progresses at a constant clip. This makes it particularly desirable in economic indicators such as AI adoption, wherein transitions at the annual frequency will tend towards a slow and policy- or wave-dictated progression rather than a spasmodic or unstable progression; this feature is stressed by

Niedzielski and Halicki (

2023). The theoretical validity of the method lies in its parsimoniousness: it introduces no inflection points, no curvatures, and no extraneous assumptions concerning its functions. By holding observed trends in place in their monotonicity as well as their directionality, it keeps interpolated values mathematically valid; moreover, the values remain intuitively believable and behaviorally consonant in longitudes of adoption data that are forthcoming in economics work (

Kwon et al., 2020).

Notably, this method avoids the overfitting tendency of otherwise more detailed alternatives such as spline smoothing or polynomial interpolation. Polynomial interpolation, especially when applied with sparse or irregularly spaced data, is notoriously liable to introduce high-order wiggles unsupported in underlying empirical processes—a condition known as Runge’s phenomenon. Such phantom oscillations, mathematically correct as they stand, can belie true-world interpretations and render the data analytically misleading. Spline smoothing, being less liable to this vice, nonetheless introduces smoothness assumptions at the possible cost of artificial continuity or curvature between points, veiling structural shifts or shocks of economic substance, as

Asanjan et al. (

2020) illustrated. Moreover, both polynomial-based and spline-based procedures require a dense series of data in order to be reliable; linear interpolation, in contrast, is still reliable with widely spaced observations, as in this instance of missing annual observations in this AI adoption dataset. Also, linear interpolation is accurate to the boundary conditions—not pushing interpolated values outside of the minimum or maximum of bounding data points, a desirable quality in empirical economics, where values above observed values without argument can damage credibility.

Furthermore, linear interpolation satisfies several demands of temporal as well as cross-sectional coherence. Using an identical method across all countries ensures methodological homogeneity, thereby preserving comparability in panel data applications. Applying different interpolation methods across countries—such as splines in some cases and linear approximations in others—introduces systematic variation, potentially confounding subsequent statistical inferences and compromising cross-national comparability. In contrast, the piecewise linear approach provides a neutral, reproducible, and transparent base for cross-country comparison. Methodological transparency further allows replication, auditability, and conformity with expectations of peer-reviewed economic research. Thirdly, in practice, linear interpolation is feasible with downstream processes such as fixed-effects panel regression, time-differencing, and Clustering analyses, which do not require further corrections of models or tuning of parameters. It is compliant with the principle of parsimony in epistemology—embracing simplicity as a sufficient explanation in scenarios in which data does not justify added complexity. Overall, piecewise linear interpolation utilized in this case is not only technically a sufficient choice with regard to the structure of data but is scientifically defendable and it is balanced with analytical rigor and empirical realism (

Shi et al., 2023). A synthesis is represented in

Table 4.

Finally, piecewise linear interpolation as employed herein is a methodologically valid and empirically meaningful solution in reconstructing missing annual values in AI adoption levels between countries. Its merits include its simplicity, its computationally transparent handling of values, and its allowance of both temporal and cross-sectional comparability assumptions in panel data applications. In contrast with such polynomial-based solutions as would impose unrealistic curvature or hide substantial economic changes, linear interpolation ensures directionality homogeneity without generating trends. It ensures interpolated values move strictly between known values of points with integrity, as well as the plausibility of the reconstructed series, as illustrated by the data for Belgium in 2021–2023. Moreover, as a procedure, it accommodates sparse or irregularly distributed values well without risking overfitting or artificial smoothing with elaborate schemes. Applying a uniform procedure across countries ensures methodological homogeneity in the research process, thereby preventing non-random noise or distortions in subsequent econometric modeling. It also enables replicability, auditability, and adherence to the expectations of standards of economic research. Overall, this approach achieves the research objective of generating a harmonized, continuous dataset enabling a robust, interpretable, and policy-informed longitudinal research analysis, without sacrificing empirical realism.

5. Understanding AI Diffusion in EU Enterprises: Evidence from Fixed- and Random-Effects Models

To understand the macroeconomic determinants that lead large European enterprises to embrace artificial intelligence (AI) technologies, our study employs a metric-driven panel data approach with fixed-effects and random-effects estimates. The dependent variable, ALOAI, represents a percentage of enterprises with 250 or more employees having a minimum AI technology form, based on Eurostat data and excluding agriculture, mining, and financial sectors. The study examines a panel of 28 European nations observed in 2018–2023. Our study objective remains to estimate the effect of a macroeconomic indicator on key areas, including health expenditure, domestic credit, exports, GDP per capita, capital formation, inflation, and trade openness, impacting AI diffusion across nations and over time. Based on a comparison between performance and coefficient values between fixed-effects and generalized least squares (GLS) random-effects models, our study intends to establish statistically significant AI adoption predictors and assess their relative contributions.

We have estimated the following equation:

where i = 28

1 and t = [2018; 2023].

The econometric results are shown in

Table 5.

The panel data analysis of the adoption of artificial intelligence (AI) by large European enterprises provides important evidence on the macroeconomic drivers of technological diffusion among firms. This analysis employs fixed-effects and random-effects (GLS) econometric models, utilizing 151 observations and a comprehensive set of macroeconomic indicators, including current health expenditure (HEAL), domestic credit to the private sector (DCPS), exports of goods and services (EXGS), gross domestic product per capita (GDPC), gross fixed capital formation (GFCF), inflation as measured by the GDP deflator (INFD), and trade openness (TRAD). The results demonstrate the strong influence of these economic variables. Empirical research by

Doran et al. (

2025) supports this methodological approach, analyzing automation systems across European industries and confirming that the structure of economic sectors plays a crucial role in technology adoption. Similarly,

Buglea et al. (

2025) apply panel data to study Central and Eastern European countries, affirming that both structural and macroeconomic factors significantly shape technology adoption. The fixed-effects estimation, which accounts for unobserved heterogeneity among countries, identifies several variables with statistically significant impacts on AI adoption. Notably, health expenditure has a highly significant and positive impact (coefficient = 3.969,

p < 0.01); this suggests that increased public spending on health may enhance institutional capabilities or support personnel investment, both of which could facilitate the deployment of AI. This positive significance is consistently observed in the random-effects estimations (coefficient = 3.690), reinforcing the robustness of the results across different estimation techniques. The version of the analysis based on the fixed-effects and random-effects (GLS) econometric models, with 151 observations and on the complete range of macroeconomic indicators available (current health expenditure, HEAL; domestic credit to the private sector, DCPS; exports of goods and services, EXGS; gross domestic product (GDP) per capita, GDPC; gross fixed capital formation, GFCF; inflation as captured by the GDP deflator, INFD; and trade openness, TRAD), provides strong evidence for the economic variables’ influence. Empirical work by

Doran et al. (

2025) provides support for the methodological approach. These authors analyze European industry automation systems and confirm the key role of economic sector structures in dictating technology take-up.

Buglea et al. (

2025) apply panel data on Central and Eastern European countries to analyze the adoption of digital transformation and confirm the role of structural and macro-variables in shaping technology adoption. The fixed-effects estimation, accounting for unobserved heterogeneity of countries, identifies various variables with statistically significant impacts on the adoption of AI. Health expenditure has a highly significant and positive impact (coefficient = 3.969,

p < 0.01), implying that increased public spending on health might reflect both wider institutional capabilities or investment in personnel, not independently contributing to the potential deployment of AI. The exact significance and positive impact are replicated in the random-effects estimations (coefficient = 3.690), establishing the robustness of the results to different estimation techniques.

Another significant finding is the statistically significant and negative impact of domestic credit to the private sector (DCPS), with a coefficient of −0.286 in fixed effects and −0.159 in random effects, which are significant results at standard levels. This unexpected finding suggests that, in some economies, financial richness does not necessarily imply innovation support or digital transition; rather, it can imply capital utilization inefficiencies.

Wagan and Sidra (

2024) overcome a similar complexity by highlighting how—with massive investments in AI—countries can differ in their efficiency in using venture capital. Goods and services exports (EXGSs) also produce a stable and significant negative link with AI adoption in both models, with coefficients of −2.152 and −1.726, respectively. This finding may suggest that, with their overwhelming orientation into classical export-led development models, traditional economies tend to lag in digital innovations, possibly owing to path dependency associated with low-tech or labor-intensive formation patterns, or structural inelasticities blocking disruptive technology adoption. The finding corresponds with

Abdelaal (

2024), who indicates that conventional structure-dominated economy types lag in their reallocation of resources to the high-tech sectors of AI implementation. GDP per capita (GDP) makes a small but statistically significant positive contribution, suggesting that wealthier economies, as theorized, are more likely to adopt AI technologies.

Meanwhile, magnitude remains small (0.000579 in fixed effects and 0.000753 in random effects), suggesting that, by itself, GDP’s problem remains not dominant but a constituent factor in the broader set of facilitating factors. The finding corresponds with those by

Žarković et al. (

2025), who report that GDP per capita’s contribution to economic modernization shows significant heterogeneity between old and new joining EU nations. Their study upholds a presumption from a theoretical perspective that additional structural determinants shape divergent development patterns and technology dissemination processes.

Gross fixed capital formation (GFCF), quantifying investments in infrastructure and productive assets, unexpectedly produces a negative and significant coefficient in both specifications. We have raised critical questions regarding the allocation of investment flows—specifically, whether investors predominantly direct capital toward conventional physical assets rather than intangible or digital infrastructures, essential for effective AI integration. The result is consistent with evidence from

Giannini and Martini (

2024) on enduring regional heterogeneity in economic structure and innovation preparedness throughout the European Union, many of which are likely to bias the efficiency of traditional spending. Inflation (INFD), quantified by the GDP deflator, has a positive and significant impact on the adoption of AI; this perhaps captures the instance of moderate inflation accompanying vigorous investment environments or policies with the aim of expansion that support digital innovation. Last, trade openness (TRAD) exerts a strong positive and highly significant influence in both specifications (estimates of 1.058 and 0.855), affirming that access to world markets is a stimulus for the adoption of AI. Such mechanisms as exposure to foreign competition, technology transfer, and integration into foreign-led global value chains likely drive this outcome. Empirical evidence from

Nguyen and Santarelli (

2024) supports this interpretation, showing that open European economies benefit substantially from AI-related spillovers due to their higher degree of global integration. Statistically, the fixed-effects (FE) specifications demonstrate significant explanatory power, with an R-squared value of 0.924 and a significantly high F-statistic (F = 41.706); these values indicate that the considered regressors explain a large proportion of variance in the dependent variable. Both random-effects (RE) models yield statistically significant estimates as well (Chi2 = 75.88,

p < 0.00001). There does not exist any systematic distinction identified between the two models’ estimators; however, as per the Hausman test (Chi

2 = 8.057,

p = 0.328), this observation suggests inconsistent RE estimates as being valid under test assumptions. It is necessary to clarify, though, that the non-rejection of the null in the Hausman test does not imply that RE is superior, nor does it invalidate FE as a specification. It only suggests there is no statistical distinction in coefficient estimates between the two methodological approaches. FE selection cannot thus simply base itself on the Hausman result but on a broader statistical and theory-based appraisal. In this regard, whilst in no way questioned in this research study, there is substantial diagnostic evidence in favor of FE. Firstly, there is conclusive evidence of unobserved heterogeneity with both the F-test of group intercepts F = 17.36,

p ≈ 0.00, as well as of substantial heteroskedasticity using the Breusch–Pagan test of heteroscedasticity Chi

2 = 158.842,

p ≈ 0.00. Both of these findings suggest support for FE due to its superior adaptability in controlling unobserved, time-invariant characteristics at the country level, such as institutional environments, structural features, and long-term socio-economic changes—these, unless controlled, can lead to biased inferences. Despite the Durbin–Watson statistic (~0.59) hinting at moderate autocorrelation, the estimates remain valid in terms of significance and stability. Both theory-based arguments and diagnostic evidence thus suggest support for using the fixed-effects approach. There shall be no uncertainty in the final text that such a choice is not one of test results individually, but one of an integrated appraisal by data structure as well as research objectives.

Ultimately, these results affirm a multidimensional and sometimes non-monotonic correlation between macroeconomic markers and the adoption of AI. Structural drivers such as spending on health, financial stability, and integration into trade are available to underpin digital innovation. In contrast, variables traditionally associated with development, such as capital and the formation of credit, are not necessarily positively correlated in all cases.

Tiutiunyk et al. (

2021) argue that, although macroeconomic stability in European economies is positively correlated with digital transformation, its interaction with traditional growth variables such as credit and capital is more complicated and varies depending on the circumstances. Increasing levels of access to investment or credit will be insufficient. They must direct support toward activities that foster innovation and are backed by institutional preparation. For example,

Iuga and Socol (

2024) demonstrate how institutional variables significantly influence the readiness of European member states to adopt AI, and complacency in bridging these gaps will leave brain drain exposed, especially in the less-developed European regions.

Furthermore, the goodness of fit of the models reinforces the importance of macroeconomic policy in shaping the digital competitiveness of European economies. With rising salience placed on the adoption of AI as a driver of industrial modernity and economic resilience, such a macro-booster to adoption can feed into more targeted and effective interventions, both in member states and in the European Union. For example, spurring adoption of AI is not about more investment of assets but strategic coordination of finance systems, trade policy, health infrastructure, and digital plans to provide the canvas onto which innovation can seize. Such holistic strategic coordination is consistent with evidence by

Challoumis (

2024), who argues that AI is remapping economic fundamentals and calling on fiscal and innovation policies to make space in turn to accommodate a new finance paradigm. The macroeconometric robustness of the models, in particular the large R-squared of the fixed-effects formulation and

p-value convergence between estimators, reinforces the importance of such inferences. Notably, the conclusions push policy to be strategic and dimensional, balancing macroeconomic planning and digital innovation ambitions.

This is not just about enhancing health systems and participating in international trade; it is about aligning lending and investment channels to facilitate capabilities entirely digitally. Meanwhile, among the significant sources of economic competitiveness and resilience—especially in Europe’s wider digital and green transformations—this question confirms policymakers’ need to underpin the macroeconomic foundations that can lead to the success of AI technologies in business.

From Job Quality to Tech Readiness—Labor Market Determinants of AI Diffusion: In recent years, the adoption of artificial intelligence (AI) technologies by firms has been the target of wide-ranging debates about their implications for the work market. On the one hand, AI has the potential to boost productivity, streamline business processes, and create new economic opportunities. On the contrary, it generates mounting concerns about the replacement of human work—particularly the work that is repetitive and requires low skills—due to automation and cognitive systems. Such a tension between the virtue of technological inventiveness and the disruptive force of work raises questions about how the nature of the work market affects the adoption of AI in a variety of national contexts. The model includes six explanatory variables which encompass the salient aspects of the work market: the share of employers among total employment (EMPL), work in services (SERV), the share of self-employed workers (SELF), the unemployment rate (UNEM), the share of workers with vulnerable employment (VEMP), and the share of waged and salaried workers (WAGE). Such a model aims to clarify the way the structure and the quality of the work market shape the capacity and the will of firms to adopt AI technologies. Understandings of such inter-relations are, indeed, of central importance, not only as a means of interpreting the current trends in AI diffusion, but also as a guide for the design of public policy which promotes the digital transformation of the economy as well as inclusive work market evolution. We directly estimate the following relationship. We applied three different econometric approaches—panel data with fixed effects, panel data with random effects, and dynamic panel data models—to capture this relationship. By utilizing a multi-method design, we guarantee the robustness of the estimates and better capture the way in which labor market circumstances influence the adoption of AI over time and across countries. Our empirical coverage extends to 28 European countries over the 2018–2023 interval, providing an exhaustive overview of AI diffusion as it applies to labor market behavior in different national settings.

We estimated the following equation:

where i = 28 and t = [2018; 2023].

The presented model is designed with the aim of analyzing the determinants of artificial intelligence (AI) adoption by large European enterprises. The dependent variable, ALOAI, represents the percentage of firms with at least 250 employees that use at least one AI technology, such as machine learning or image recognition. The regression equation includes six explanatory variables, all related to the structure and quality of the labor market: the share of employers in total employment (EMPL), employment in the service sector (SERV), the share of self-employed workers (SELF), the unemployment rate (UNEM), the percentage of workers in vulnerable employment (VEMP), and the proportion of waged and salaried workers (WAGE). The dataset consists of 28 cross-sectional units observed over six years, totaling 168 observations. We used three different estimation techniques: a random-effects model (GLS), a fixed-effects model, and a dynamic panel model that includes the lagged dependent variable, ALOAI. The figures suggest strong consistency between the different specifications. EMPL is statistically significant and harmful for the fixed- and random-effects models. The notion that the share of the labor market that is occupied by employer-established firms does not need to encourage AI adoption comes as a surprise. However, this finding may reflect the nature of these family-centric, relatively small business firms; they are often reluctant to use financial resources, and prefer a classic business model (

Hoffmann & Nurski, 2021). For both the fixed- and random-effects models, the coefficient comes up as −20,000, which reveals a qualitatively significant influence.

In contrast, service sector work (SERV) exerts a considerable and significant influence in a positive direction on AI adoption. This result aligns with the ongoing European economic digital transformation, whereby the service sector accounts for the leading provider of technological innovation (

Gualandri & Kuzior, 2024). Sector segments like information technology, health, education, and financial services are becoming highly integrated with AI-related applications. The considerable positive relationship reflects the larger need and implementation capability for AI technologies for such segments. Such a constructive influence holds for all models, which vary the coefficient between about 1.1 and 2.9 with the specification.

Self (SELF) is another variable that shows a significant positive correlation with ALOAI, as the variable accounts for the percentage of self-employed individuals. The result shows that, the greater the proportion of self-employed individuals, the greater the AI adoption of big firms. That might reflect a dynamic and innovation-oriented entrepreneurial environment. An environment conducive to business startup, specifically the tech sector, might similarly have a positive effect on big firms through the diffusion of the innovations generated by startups and freelancers (

Spagnuolo et al., 2025). Also, greater self-employment might reflect greater use of information and communication technologies, which in turn makes the adoption of AI technologies easier.

Another significant variable exhibiting a negative and statistically significant association with AI adoption for all the models is the unemployment rate (UNEM). This result bolsters the proposition that, for the countries undergoing labor market difficulties, firms have a weaker capacity to innovate and adopt new technologies. Unemployment tends to go hand-in-hand with undesirable macroeconomic conditions, fewer funds available to firms, and a lower need for firms to compete, all of which may discourage the adoption of AI solutions (

Dave, 2024).

Similarly, the variable VEMP—which depicts the share of workers engaged in vulnerable employment—reveals a negative and significant coefficient for two of the three models. The economic and social relevance of the variable becomes important: significant labor market vulnerabilities often translate into informal work, precarious contracts, and inadequate social protection. In such a situation, companies are likely to have fewer formalized units and are reluctant to incur the substantial first costs of such advanced technologies as AI. They are likely to lack the skilled workforce required for the effective adoption of such instruments (

Du, 2024). Moreover, decentralized and precarious labor markets could reflect the wider structural vulnerabilities of the economy that hinder its innovative capabilities.

In contrast, the coefficient of WAGE, the proportion of waged and salaried workers, is positive and statistically significant in all models. That means that, the wider the coverage of formal, secure work, the higher the probability that firms will employ AI technologies. This finding stresses the importance of a well-structured labor market as a precondition for technological innovation. Salaried work also reflects the fact that firms are larger and better structured, with access to the financial resources required for long-term investment in technology. Those firms have, as a general rule, formal procedures along with a workforce enjoying the benefits of labor protection, which are helpful when it comes to the adoption and implementation of AI systems.

In the dynamic model, the lagged dependent variable ALOAI(t−1) enters with a significant and very high coefficient (0.87), indicating strong temporal persistence of AI adoption. Firms adopt AI during a year with a very high likelihood of continuing and broadening it during subsequent years. The dynamic reflects a cumulative process, such that initial adoption produces subsequent learning, adaptation, and cumulative consolidation as time progresses. The significant dynamic effect highlights the importance of public policy instruments that compel firms to venture into such a cumulative process, as it seems self-sustaining.

More broadly, the analysis reveals that the labor market’s structure and quality are central drivers of the adoption of AI technologies by firms. Economies with formal, stable, and service-oriented labor forces are better equipped for AI adoption. By contrast, conditions with widespread unemployment, precarious employment, and a greater prevalence of less formal types of employment have lower AI-diffusion rates. Among the policy lessons, these have significant implications for the articulation of public policy design. Supporting labor formalization, investment in the services sector, enabling innovative entrepreneurship, and combating unemployment are all policy approaches that simultaneously strengthen the labor market and encourage the digital transformation of the economy.

Also, the strong dynamic effect obtained for the panel model shows that policy should not just try to stimulate first AI adoption; it should also stimulate firms through their whole process of technological integration. Policies of this kind include the offering of training programs, technical services, and improved access to funds for investment in digitization. By shaping the right innovation context and reducing the first adoption barriers, public policy can have a decisive influence in hastening the diffusion of AI to the main areas of the European economy (

Table 6).

Statistical results and diagnostic tests of the three estimated models—random-effects (GLS), fixed-effects (within estimator), and the one-step dynamic panel—provide a complete picture of the robustness and validity of the relationship between the labor market variables and the adoption of AI by major European enterprises. The dependent variable for all models is ALOAI, which sets the percentage of major enterprises adopting at least one AI technology (

Gualandri & Kuzior, 2024). Let us start with the random-effects model. We have a total of 168 observations from 28 cross-sectional units over six years. Log-likelihood of the model is −658.48, while the Akaike and Schwarz information criteria are 1330.96 and 1352.83, respectively. The sum of the squared residuals is high (around 24,963), and the standard error of the regression is quite sizable at 12.41. They indicate that, while the model explains a part of the variation in AI adoption, the explanatory power of the model is mainly about the remaining variation (

Popović et al., 2025). Between-group variance is 119.87, while the within-group variance is 32.31, revealing much heterogeneity both across and over the periods. The estimated rho value, which shows the proportionate amount of variation as a result of individual-specific effects, is about 0.38. That means 38% of the total variation in the ALOAI is a result of differences rather than the periods, that is, the countries. The estimated results are better as per the explanatory power of the model. The model produces an R-squared value of 0.3376, while the LSDV R-squared (least squares dummy variable) is remarkably high at 0.9031, indicating that the model explains more than 90% of the total variation in AI adoption when it controls for all country-specific effects (

Wagan & Sidra, 2024). The related F-statistic (F(33,134) = 37.82) is highly significant with a

p-value nearly equal to zero, which reveals that the joint impact of the regressors and fixed effects is statistically significant. Log-likelihood improves considerably while shifting to the fixed-effects model, going up to −511.33, while the Akaike and Schwarz criteria improve to 1090.67 and 1196.88, respectively.

These improvements imply better model fit than the random-effects specification. The standard error of regression is also reduced to 5.68, implying improved precision. However, the Durbin–Watson statistic remains low, at 0.911 for both models, indicating the possibility of the existence of serial correlation in the residuals. For the dynamic panel model, which includes a lagged dependent variable, the sample size reduces to 112 observations as the first period is lost during dynamic estimation. We have a very high coefficient for the lagged dependent regressor ALOAI(-1) of 0.87, which is statistically significant at the 1% level. This result confirms the existence of strong path dependency for AI adoption: the history of past AI adoption of a country strongly predicts the country’s continuing AI adoption in the following years (

Spagnuolo et al., 2025). We obtained a Sargan over-identification test Chi-square statistic of 60.60 with a

p-value of 0.0000, which rejects the null hypothesis of valid over-identifying restraints. This result could imply the existence of possible problems affecting the instruments used during the model. However, the results of the Arellano–Bond tests for serial correlation are reassuring. We have the AR(1) test significant (z = −2.60,

p = 0.0092), as expected when first differences are used.

In contrast, the AR(2) test yields no significant results (z = −0.08,

p = 0.9357), supporting the validity of the dynamic model, as there is no evidence of a second-order effect. Several specifications and robustness tests similarly inform the model choice. We have the decisive rejection of the null hypothesis of zero variance for the random effects by the Breusch–Pagan test, with a Chi-square of 208.93 and a

p-value effectively set as zero. We have proved the existence of significant unobserved heterogeneity across countries (

Atajanov & Yi, 2023). However, the Hausman test gives a decisive result arguing for the fixed-effects model choice. We have the consistency of the GLS random-effects estimator tested as compared to the fixed effects, which comes out with a Chi-square statistic of 23.51, a

p-value of 0.0006, and the rejection of the null hypothesis that the random-effects estimator becomes consistent (

Yum, 2022). We have the regressors violating the primary assumption of the random-effects model, correlating with the individual-specific effects.

The joint tests for the regressors also confirm the relevance of the model specifications accordingly. For the fixed-effects estimation, the F-test of all the regressors (F(6, 134) = 11.38) appears significant at a very high level, while the

p-value comes to about 2.92 × 10

−10. For the Wald test in the case of the dynamic model, similarly, the joint significance of the regressors included appears to hold, while the Chi-square statistic comes to 228.35, as the

p-value remains well below 0.01. These values certainly create no doubt about the explanatory power of the used regressors (

Bustani et al., 2024). Additionally, the test of differing group intercepts in the case of the fixed-effects model strongly rejects the null of a common intercept common to all the included countries. An F-statistic of 20.00 with a

p-value effectively at zero reveals that country-specific factors are indeed significant and cannot go unnoticed, further confirming the validity of the applicability of the fixed-effects model.

On the whole, the collectively indicated set of statistical tests and diagnostics tends towards the fixed-effects model as the best-specifying model for the characterization of AI adoption among large European firms. It fits better, has higher explanatory power, and satisfies the several assumptions that are violated by the random-effects model. The dynamic panel model similarly gives significant insights, primarily about the persistence of the adoption of AI over time. However, we have exercised some caution regarding the validity of the instruments used. Collectively, the estimates are statistically robust and have significant policy implications about the role played by the characteristics of the labor market while shaping the adoption of technology.

The econometric investigation of 28 European nations during the 2018–2023 period sheds light on how the structural features of the labor market have a substantial impact on the adoption of artificial intelligence (AI) technologies by significant numbers of enterprises. Outcomes, robust for fixed-effects, random-effects, and dynamic panels, reflect several statistically significant associations. A higher share of employers relative to total employment reveals a negative association with AI adoption, which posits that economies with a predominance of scarce, frequently family-managed firms are less likely to adopt highly advanced technologies. Employment in the services sector, conversely, reveals a robust positive association, which reflects the significant role played by services as drivers of the digital innovation impulse. Analogously, a higher percentage of self-employed people reveals a positive association with AI adoption, possibly indicating a dynamic environment of entrepreneurship that optimally benefits the process of technological diffusion. Elevated percentages of unemployment and higher percentages of staff occupied in precarious work, conversely, indicate a negative association with AI uptake, which signals the limitations imposed by the volatility of the labor market upon innovation. A higher percentage of waged and salaried workers reveals a positive association with AI adoption, which reflects the notion that the highly formalized and structured labor markets are optimally better suited to the process of technological investment. The dynamic panel model, finally, reveals robust persistence of AI use throughout the observation period, as testified by the existence of a significant and higher coefficient of the lagged dependent variable, revealing that, when initiated, AI adoption widens and perseveres, possibly as a consequence of the investment undertaken by the enterprise. Such a finding has policy implications. For the enhancement of the formalization of the labor market, combating the rates of unemployment, facilitating innovative initiatives within the services sector, and boosting entrepreneurship can permit a healthier framework that prefers AI adoption. Additionally, public policy must not merely catalyze the entry of adoption; instead, it must stimulate enterprises through the complete process of their absorption of technology, through the provision of information programs, as well as the provision of better access to funds. Generally, the discussion reveals that the effective diffusion of AI depends upon both technological readiness and inclusive and stable forms of labor market institutions.

6. Decoding AI Adoption in Europe: A Comparative Evaluation of Predictive Models and Macroeconomic Drivers

To complement inferences derived from panel data regression estimates, the subsequent analysis shifts the focus to a machine learning orientation. Econometric techniques interpret causality based on exogenously specified assumptions and the average effects of macroeconomic and labor market variables on AI uptake. However, they sometimes fail to model complex, nonlinear relationships between predictors. Machine learning algorithms, in contrast, seek maximal prediction accuracy and can discover latent structures in data without subjecting them to strong parametric constraints. The use of supervised learning algorithms such as K-Nearest Neighbors (KNNs), Random Forest, and Support Vector Machines (SVMs) provides a robustness check against the econometric findings, as well as alternative insights about which variables matter and about generalizability. The shift toward machine learning methods then complements the empirical strategy, as well as making for both methodological triangulation and further findings pertinent to policy.

This section performs a comparative analysis on eight regression models, i.e., Boosting, Decision Tree, K-Nearest Neighbors (KNNs), Linear Regression, Neural Networks, Random Forest, Regularized Linear Regression, and Support Vector Machines (SVMs). We evaluated the models using standardized performance metrics, such as the root mean square error (MSE), root mean square error (RMSE), mean absolute error (MAE/MAD), mean absolute percentage error (MAPE), and coefficient of determination (R²). They normalized all input data prior to evaluation to ensure unbiased and consistent comparisons across models. Our selection of machine learning algorithms in this work—K-Nearest Neighbors (KNNs), Random Forest, Boosting, Support Vector Machines (SVMs), Decision Tree, Linear Regression, Regularized Linear Regression, and Neural Networks—was an intentional attempt at ensuring methodological diversity, predictive power, and adherence with the dataset’s characteristics, in addition to the study’s objectives. These models have been selected in this work because each of them has specific achievements in handling nonlinearities, high-dimensional data, and varied levels of interpretability (

Assis et al., 2025;

Khan et al., 2020;

Sutanto et al., 2024;

Walters et al., 2022). KNN was a controversial selection because of its non-parametric method of viewing local patterns of complex, high-dimensional data. KNNs have a propensity for being selection-sensitive as well as prone to possible overfitting; thus, we counterbalanced such drawbacks with procedures of cross-validation in Annex A. Random Forest was a controversial selection because of its propensity for ensemble learning, in addition to its reduction in variance (because of the pooling of hundreds of Decision Trees); moreover, it shows increased predictive accuracy, irrespective of the examples of multicollinear or noisy data, though this comes at the cost of interpretability in some way (

Sutanto et al., 2024;

Assis et al., 2025). A Gradient Boosting algorithm was utilized because of its continuous focus on the correction of the errors of preceding models, with the possibility of attaining high precision and sensitivity in the differentiation of subtle data patterns. However, this comes with the requirement of subtle fine-tuning in order to not be affected by overfitting or computational expenses (

Walters et al., 2022). SVMs have been utilized in high-dimensional spaces, and in applications where resistance to the presence of outliers is important (

Assis et al., 2025;

Khan et al., 2020). Decision Trees constitute a transparent baseline model that means it is simple enough to visualize and interpret the data of the model (

Sutanto et al., 2024). We have employed Linear Regression and Regularized Linear Regression models (e.g., Ridge, Lasso) as baselines to evaluate the performances of more complex models. They were used to apply regularization procedures to prevent overfitting and address multicollinearity (

Khan et al., 2020). Neural Networks raised some interest in order to explore the probable capacities of modeling extremely nonlinear associations; however, they are computationally intensive and opaque (

Khan et al., 2020;

Walters et al., 2022). This heterogeneous set, as a whole, enables the comparability of performance trade-offs between simplicity and complexity and between interpretability and accuracy; thus, we have been able to facilitate a richer, more robust and policy-relevant modeling of AI-adoption determinants. The objective here is to explore each model’s predictive capacity and generalizability in predicting AI uptake in large firms in Europe. Mainly accompanied by model benchmarking, this section also includes a study on KNN-based feature importance using mean dropout loss for ranking macroeconomic factors according to their contribution toward AI uptake. Such analyses offer both methodological insights and policy-relevant evidence on the structural economic variables that condition AI diffusion in different national contexts.

Figure 1 presents the comparative results of the regression models.

In comparing the performances of eight regression models—Boosting, Decision Tree, K-Nearest Neighbors (KNNs), Linear Regression, Neural Networks, Random Forest, Regularized Linear Regression, and Support Vector Machines (SVMs)—our consideration is on the same five basic statistical measures of mean square error (MSE), root mean square error (RMSE), mean absolute error/mean absolute deviation (MAE/MAD), mean absolute percentage error (MAPE), and coefficient of determination (R2). These measures are essential indicators of how well the models fit, are stable, and generalize to unseen data. Lower values in MSE, RMSE, MAE/MAD, and MAPE indicate better prediction accuracy, model stability, and generalization to unseen data. Of all the models tested, KNN shines with near-perfection in all the measures of evaluation with MSE, RMSE, MAE/MAD, and MAPE of 0.000 and R2 of 1.000. The outcome denotes a perfect match between predicted and observed values, resulting in zero error. Though such kinds of performances are scarce in actual practical use and might be suggestive of overfitting, data leakage, or data with too-low complexity, the results as they are set put KNN in the list of best performers and the top algorithm in this comparison. The application of KNN in environmental disciplines—as demonstrated by

Raj and Gopikrishnan (

2024) in vegetation dynamics modeling—highlights the algorithm’s effectiveness with highly ordered, feature-rich data. The second-best is Boosting, which performs well with an MSE of 0.187, an RMSE of 0.222, an MAE/MAD of 0.247, an MAPE of 0.100, and an R2 of 0.650. These results indicate that Boosting provides an excellent balance of low deviation and decent explanation of variance, making it well-suited for practical use, especially in complicated or more noisy environments. SVMs perform reasonably well based on mean deviation with an MAE/MAD of 0.241, which is better than those obtained using Boosting and Random Forest. However, it has the worst MAPE of 1.000 and thus significantly loses credibility in matters of percent-based precision, such as financial prediction or health prediction. In addition, the R2 of 0.248 is relatively low and represents little power to explain the dependent variable’s variance. Such volatility in SVMs is also witnessed in education analytics, where

Kumah et al. (

2024) observed such shortcomings in identifying nonlinear behavior in the prediction of students’ performance, especially with the involvement of categorical variables or in the case of badly scaled variables.

Conversely, Regularized Linear Regression and Random Forest have almost identical MSE values of 0.293, RMSEs of 0.374, MAE/MADs of 0.242, and R2s of 0.841. However, both models have significant errors in the form of MAPE (0.750), with poor relative prediction precision. Despite that, their big R2, though not always linked with low MSE, reveals they are useful in instances when capturing the general trend—not specific values—is the objective.

Chandra et al. (

2024) also demonstrate such a balance between error-based measures and variance explanation when comparing different machine learning models used to predict production processes. They commend Random Forest for its ability to match trends but criticize it for its sensitivity to outliers. Decision Tree is no better on most measures. Its MSE and RMSE (0.310 and 0.388, respectively) are among the largest, with their MAE/MAD (0.361) and R2 (0.370) values also being among the largest. Decision Tree performs decently only on the MAPE measure (0.107), showing slightly better relative error than Random Forest and SVMs.

Vijayalakshmi et al. (

2023) also illustrate these frailties in their prediction of medical insurance prices, where they found that regression models provided more stable relative performances across both absolute and percentage metrics. Linear Regression produces slightly better results, yielding an MSE of 0.230, an RMSE of 0.298, an MAE/MAD of 0.357, and an R² of 0.510. These outcomes are considered average and reflect a respectable balance between model complexity and generalizability, though they do not excel in any particular metric. Last—and best of all the models (though still very poor)—is the Neural Network, with the greatest possible MSE, RMSE, and MAE/MAD (all equal to 1.000) values and the lowest possible R2 (0.000); the results suggest that it is not able to learn any functional mapping of the features to the target. Its MAPE of 0.658 supports this. Such a low performance can be due to either the poor optimization of the architecture, insufficient training data, or a network that is too deep to be processed by the dataset.

Elnaeem Balila and Shabri (

2024) evidence the same weakness in the model in a property price prediction application, with deep models performing poorly with simpler models, owing to overfitting and data-poor generalization.

Among all the examined models, K-Nearest Neighbors (KNNs) achieved the finest all-around performance, with minimal error rates and retaining all the target variable’s variance. However, such ideal results must, of course, give us pause for concern regarding overfitting and poor generalizability, in the event the model has memorized cases rather than having identified powerful patterns. In correction, we employed hold-out testing and cross-validation before even considering the KNN for release. With consistency of performance for the portions of the data, KNN is the optimal solution for accuracy-driven scenarios. When transferability and robustness are prioritized, Boosting is a more trustworthy solution. It will always yield a better error reduction vs. the generalizability of the model trade-off. Following this are Regularized Linear Regression and Random Forest, which have the same explanatory power but have relatively higher residual errors. The Support Vector Machines (SVMs), despite their low MAE, produced high MAPE values, and so they are not favored in applications in which proportional accuracy is most critical. These results support the caveat made in

Elnaeem Balila and Shabri (

2024) not to use very sophisticated models, such as deep neural nets, in situations for which less sophisticated methods will suffice. The authors’ illustration of the use of the time-honored machine learning approach for predicting realty prices in Dubai is a good illustration of the principle that we often prefer reliability and interpretability over algorithmic novelty. Aside from predictive accuracy, practical usability in the real world also considers computational cost, scalability, interpretability, and noise sensitivity. KNN, being accurate, is, however, computationally expensive, and feature set scaling is highly sensitive—these are flaws that decrease its scalability. Boosting and Random Forest are more robust and applicable in larger datasets, but they are computationally expensive. Linear models, which are less accurate, are also most often required in highly regulated fields such as healthcare and finance, where interpretability is a hard requirement.

Zeleke et al. (

2023), for instance, employed the use of Gradient Boosting in the prediction of prolonged in-hospital stay, in effect illustrating the applicability of the method in high-risk, high-complexity uses. Likewise,

Kaliappan et al. (

2021) illustrated the value of generalizability in public health predictions, most significantly in the modeling of COVID-19 reproduction rates, thus also establishing the practical versatility of Boosting. Ultimately, the algorithmic choice must satisfy the target application’s objectives and constraints. In performance-obsessed scenarios, KNN is unbeatable in all the metrics considered. Boosting comes close, in turn sacrificing accuracy for stability. Random Forest and Regularized Linear Regression are in the second tier, being best in variance explanation but with relative error trade-offs. SVM and Decision Tree models deliver mediocre results, while Linear Regression, despite its effectiveness, lacks strong arguments. Neural Networks, considering the results thus far, would need drastic re-optimization before being considered. These results establish the basis for disciplined model selection, hyperparameter tuning, and thoughtful advancement in predictive modeling.

The level of mean dropout loss is presented in

Figure 2.

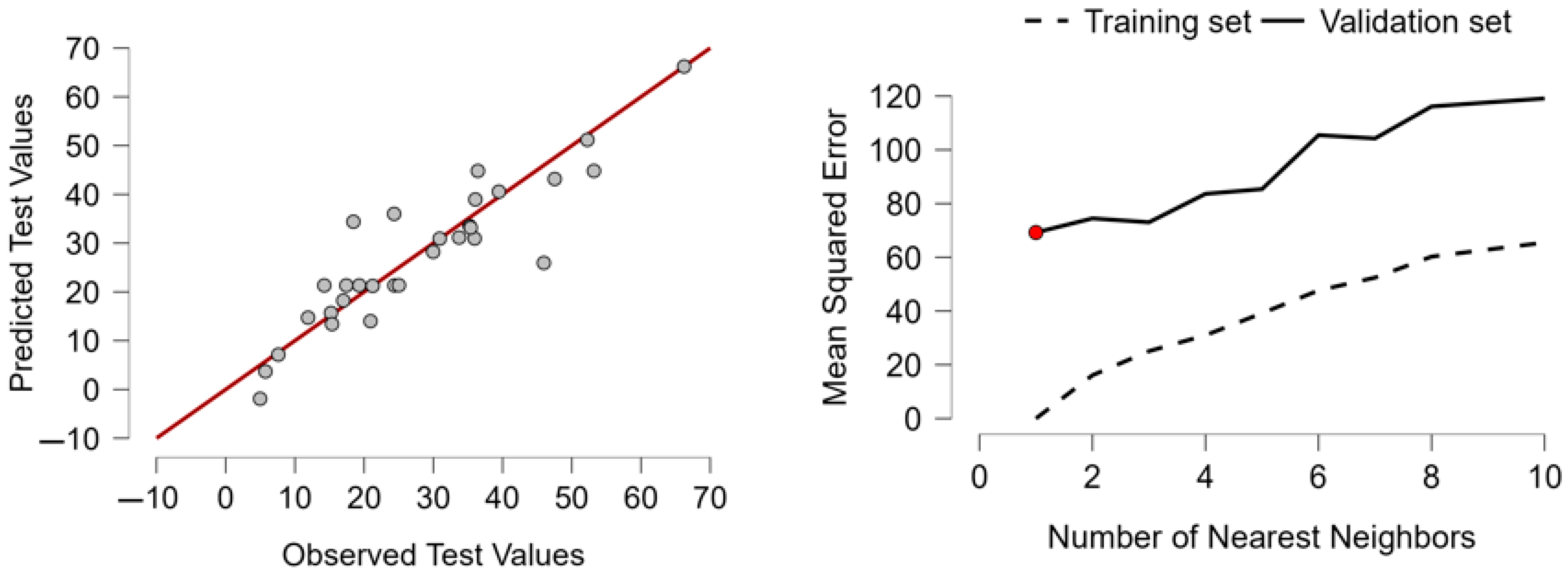

We applied K-Nearest Neighbors (KNNs) predictive models to investigate the large-scale adoption of artificial intelligence (AI) technologies in Europe, defined as the proportion of firms with 250 or more employees that employ at least one AI system. Our analysis was grounded in a standardized matrix of national-level indicators. These are health expenditure (HEAL), domestic credit to the private sector (DCPS), exports (EXGS), GDP per capita (GDPC), gross fixed capital formation (GFCF), inflation (INFD), and trade openness (TRAD), all of which cover the major structural and financial features of European economies. To estimate the predictive importance of each variable, we employed mean dropout loss, the averaged root mean squared error of 50 random permutations. We estimate the loss of model accuracy resulting from the removal of each feature, thereby determining its relative importance for prediction. Among all the predictors, the most significant mean dropout loss of 12.451 was realized in the domestic credit to the private sector (DCPS), thus qualifying as the most contributing variable. The above demonstrates the fundamental importance of access to finance in enabling investment and technological change driven by artificial intelligence in the large firm. The finding further underscores the broader importance of liquid financial market conditions that enable risk-taking and support capital-intensive technological change. We base this interpretation on the findings of

Kotrachai et al. (

2023), who identified financial variables as key predictors in credit card fraud detection models and demonstrated the predictive significance of internal financial dynamics. With that contrast, trade openness (TRAD) and goods and services export (EXGS) recorded relatively low dropout losses of 6.106 and 6.239, respectively, suggesting that external economic activity is relatively insignificant in specifications AI adoption. The contrast suggests that internal developmental and financial capability is more significant than exposure to external trade in preparing for the integration of AI. The health sector also records the same trend, such that

Sehgal et al. (

2024) depicted that internal clinical terms prevailed over external behavioral terms significantly in early-stage predication of diabetes driven by AI. Overall, the results show that structural conditions and internal financial conditions are the most significant enablers of the diffusion of AI in large organizations. They also show that policy interventions that try to spur adoption of AI should pay more emphasis on establishing a robust domestic financial infrastructure than trade liberalization in the external sector.

The K-Nearest Neighbors algorithm also does well when identifying structured adoption patterns of technology, for example, in

Chaurasia et al. (

2022), when analyzing the adoption of mobile technology among individuals who have dementia. According to their findings, the models of proximity can discover delicate but recurrent patterns of adoption in various socio-economic segments. Among the variables examined, health expenditure (HEAL) and GDP per capita (GDPC) recorded the same dropout loss of 9.269 and therefore ranked them in the intermediate level of predictor importance. This convergence shows that institutional and human capital capabilities—such as economic affluence and investment in public health—jointly shape the economic environment that enables AI adoption.

Siddik et al. (

2025) concur similarly on this argument, noting that institutional readiness in health and education infrastructure, among others, is pivotal in catalyzing technology-intensive and sustainable sectoral and macroeconomic growth. Gross fixed capital formation (GFCF) then showed a relatively low dropout loss of 8.077, which is indicative of the less critical nature of infrastructural investment in the population’s preparation for the use of AI compared to credit access or institutional capacity. Inflation (INFD) then showed a loss of 6.682, which is consistent with its intermediate status. Though moderate levels of inflation are viable for a dynamic economy, high or uncertain levels of inflation erode confidence in long-term tech investments. The result is consistent with

Gonzalez (

2025), who demonstrated that machine learning models of inflation prediction increasingly pay greater relative weight to the interlinkages of macroeconomic volatility and tech investment decisions.