User Experience Evaluation in Intelligent Environments: A Comprehensive Framework

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

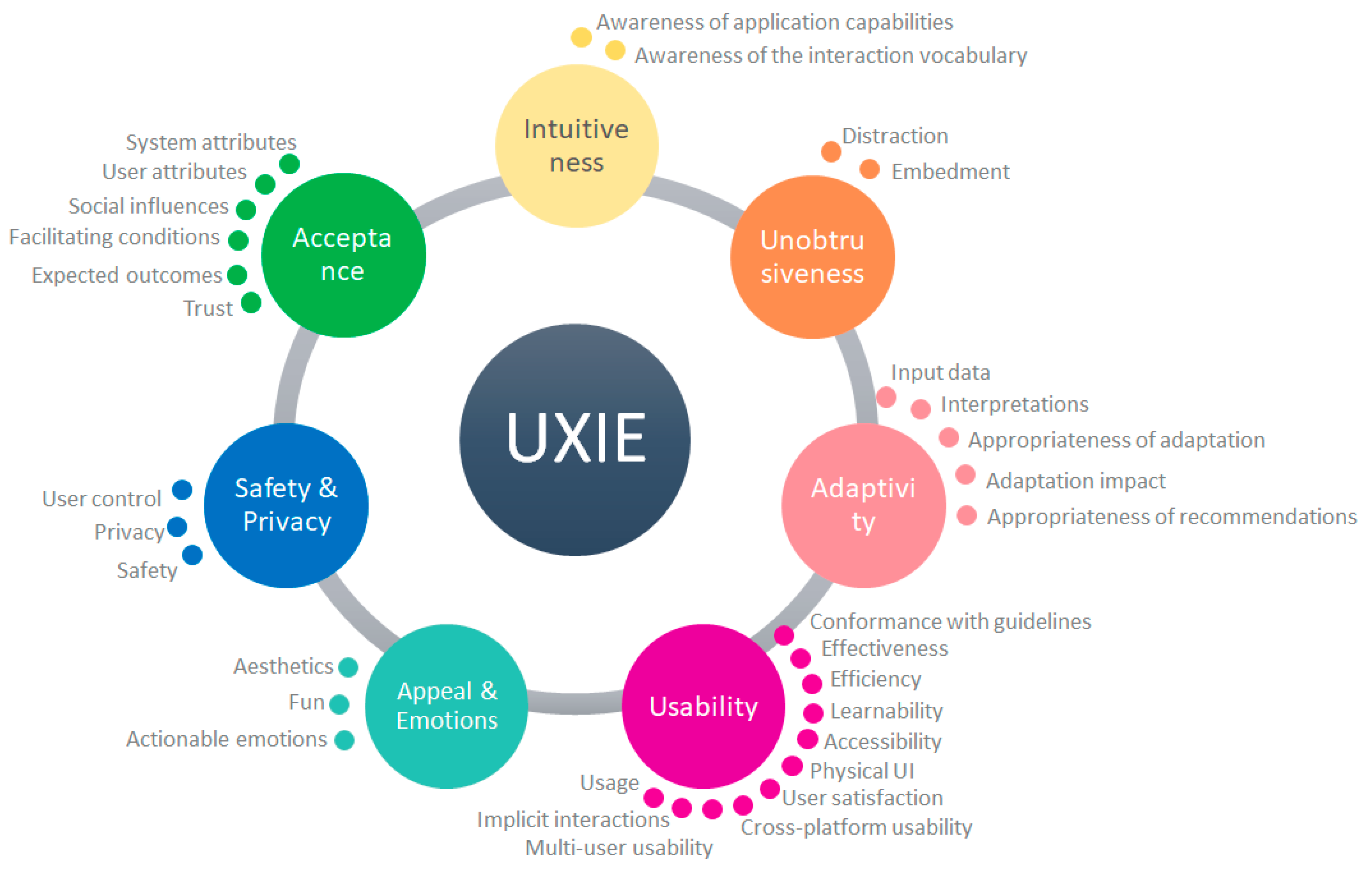

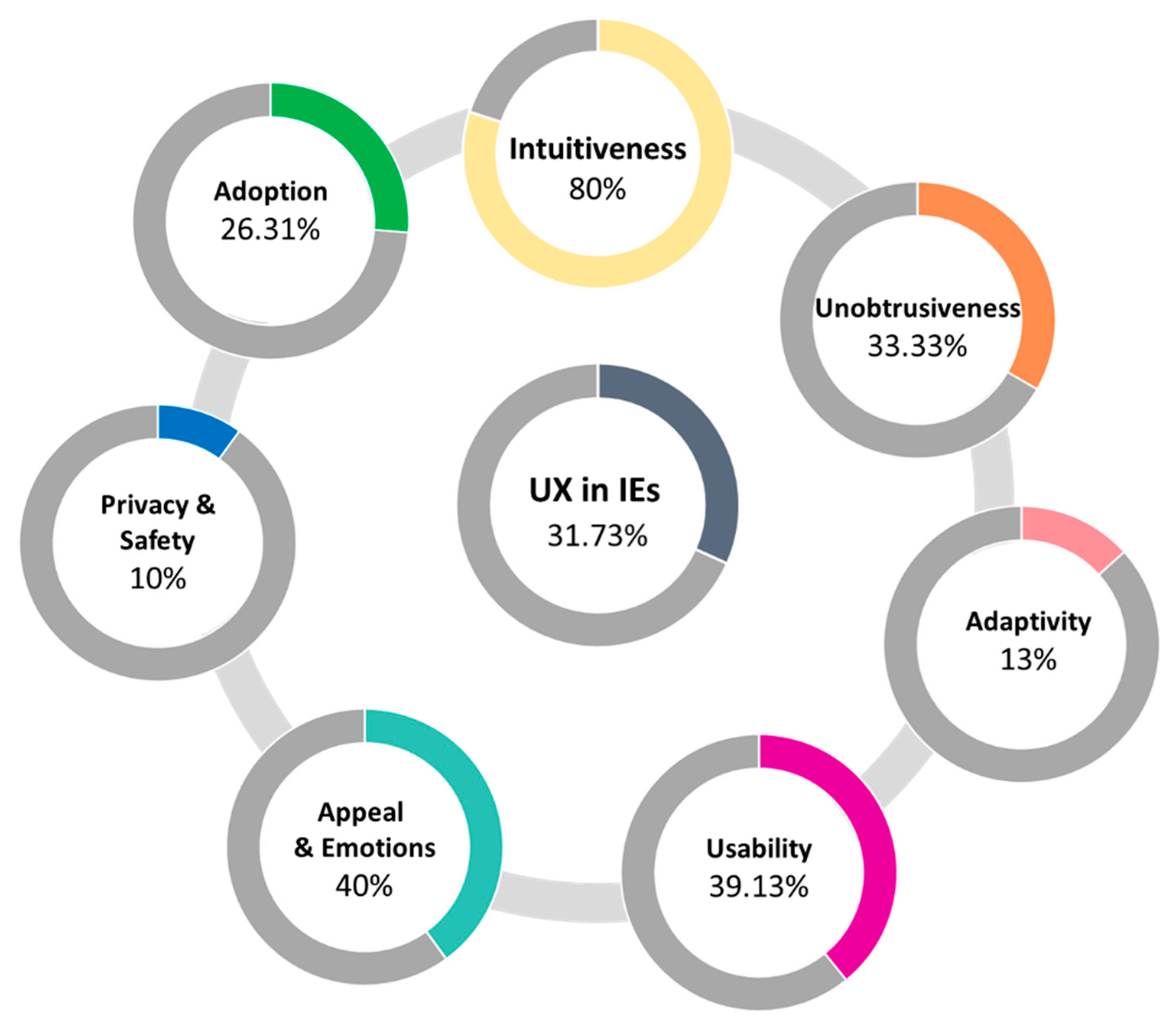

3.1. Attributes of Intelligent Environments—Conceptual Overview

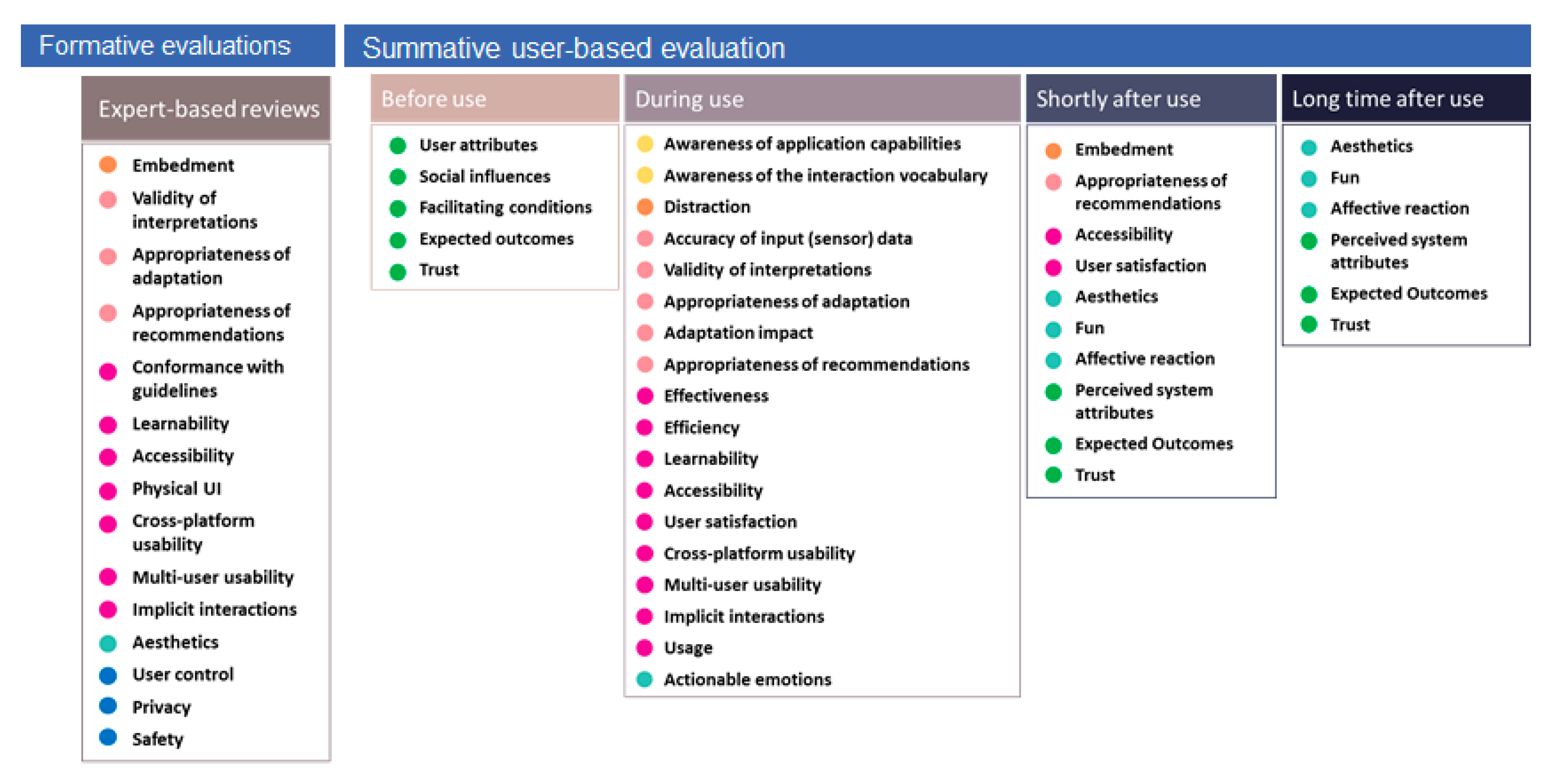

3.2. Evaluation Approach—Methodological Overview

4. The UXIE Evaluation Framework

- Whether automation is possible, indicated as “automated measurement” when the metric can be fully automated, or as “automation support” when full automation is not possible but the evaluator can be assisted with calculations and the recording of observations. In general, fully automated measurements rely on the architecture of IEs and the typical information flow in such environments, whereby interactors (e.g., people) perform their tasks, some of these tasks trigger sensors, and the sensors in turn activate the reasoning system [29]. Therefore, interaction with a system in the IE is not a “black box”; instead, it passes through sensors and agents residing in the environment, so the environment has knowledge of the interactions taking place. A more detailed analysis of how the architecture of IEs can be used to implement such automated measurements is provided in [39].

- Whether the metric should be acquired before the actual system usage (B), during it (D), shortly after it (sA), or long after it (lA).

- Whether the metric pertains to a task-based experiment (Task-based), or whether it should be applied only in the context of real systems’ usage (e.g., in situ or field studies).

- Whether the metric is to be acquired through a specific question in the questionnaire that the user fills in after interacting with the system, or as a discussion point in the follow-up interview.
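The four criteria above can be recorded for every metric in a small data structure, for example to generate method annotations of the form used in the framework's tables, such as “User testing [D] (Task-based): Automated measurement”. The following Python sketch is illustrative only: the class, field, and method names (`MetricDescriptor`, `Phase`, `Automation`, `Instrument`, `annotation`) are hypothetical and are not defined by UXIE.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Automation(Enum):
    """Degree to which a metric can be automated (hypothetical encoding)."""
    NONE = "none"
    AUTOMATED_MEASUREMENT = "automated measurement"  # fully automated
    AUTOMATION_SUPPORT = "automation support"        # evaluator is assisted


class Phase(Enum):
    """When a metric is acquired relative to actual system usage."""
    BEFORE = "B"
    DURING = "D"
    SHORTLY_AFTER = "sA"
    LONG_AFTER = "lA"


class Instrument(Enum):
    """Self-reporting instrument used to acquire the metric, if any."""
    QUESTIONNAIRE = "questionnaire"
    INTERVIEW = "interview"


# Chronological order used when rendering several phases for one metric.
PHASE_ORDER = (Phase.BEFORE, Phase.DURING, Phase.SHORTLY_AFTER, Phase.LONG_AFTER)


@dataclass(frozen=True)
class MetricDescriptor:
    """Hypothetical record of how and when a single metric is acquired."""
    name: str
    method: str                          # e.g. "User testing", "Expert-based review"
    phases: Tuple[Phase, ...] = ()
    automation: Automation = Automation.NONE
    task_based: bool = False
    instruments: Tuple[Instrument, ...] = ()

    def annotation(self) -> str:
        """Render the method annotation, e.g. 'User testing [D]: Automated measurement'."""
        text = self.method
        if self.phases:
            ordered = sorted(self.phases, key=PHASE_ORDER.index)
            text += " " + " ".join(f"[{p.value}]" for p in ordered)
        if self.task_based:
            text += " (Task-based)"
        details = []
        if self.automation is not Automation.NONE:
            details.append(self.automation.value.capitalize())
        details.extend(i.value.capitalize() for i in self.instruments)
        if details:
            text += ": " + ", ".join(details)
        return text


if __name__ == "__main__":
    task_time = MetricDescriptor(
        "Task time", "User testing", phases=(Phase.DURING,),
        automation=Automation.AUTOMATED_MEASUREMENT, task_based=True,
    )
    print(task_time.annotation())  # User testing [D] (Task-based): Automated measurement
```

A descriptor without phases, automation, or instruments, such as one for an expert-based review metric, renders simply as the bare method name.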

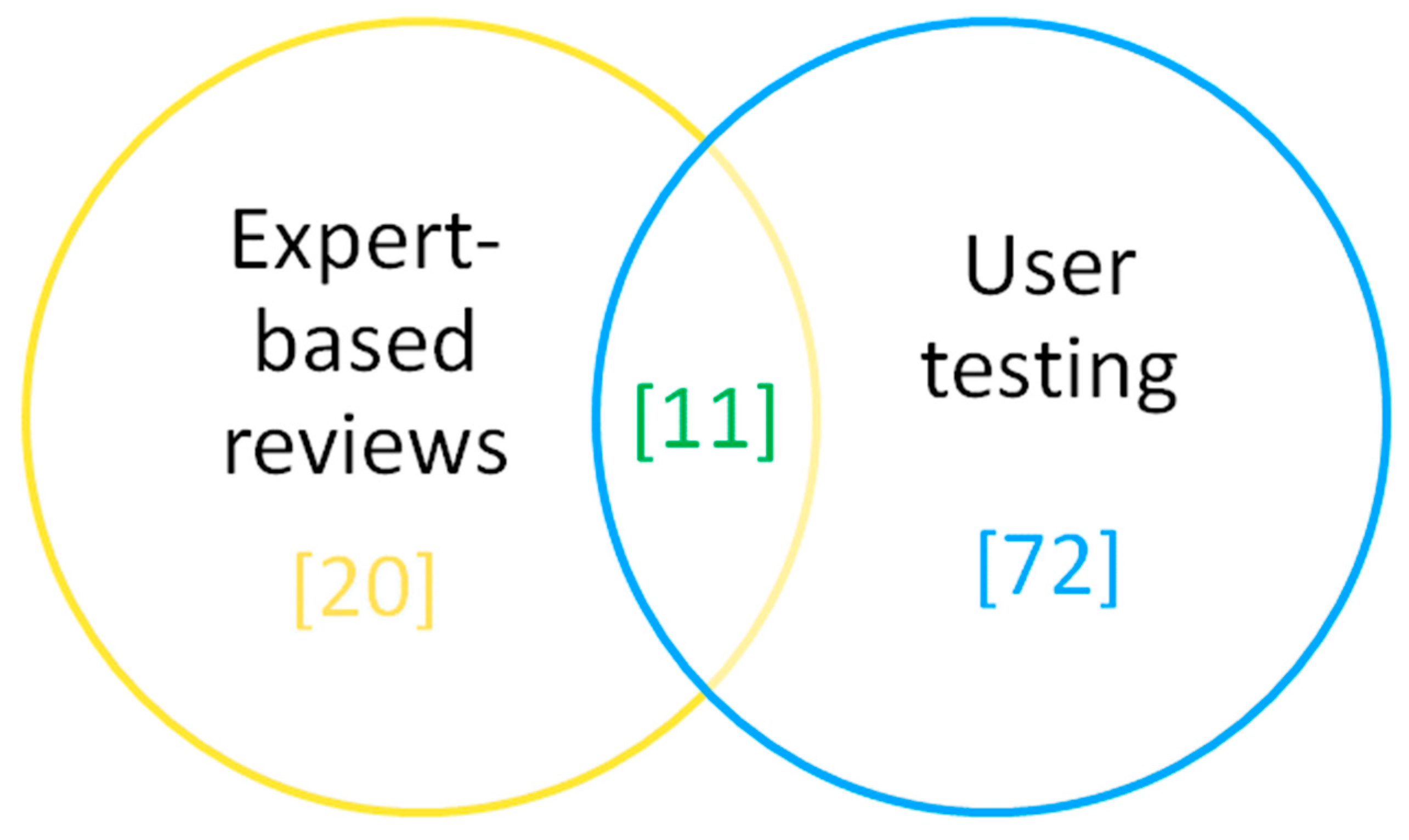

5. Evaluation of the UXIE Framework

5.1. Method and Participants

5.2. Procedure

- What is your overall impression of the UXIE framework?

- Would you consider using it? Why?

- Was the language clear and understandable?

- What was omitted that should have been included?

- What could be improved?

- Would it be helpful in the context of carrying out evaluations in intelligent environments in comparison to existing approaches you are aware of?

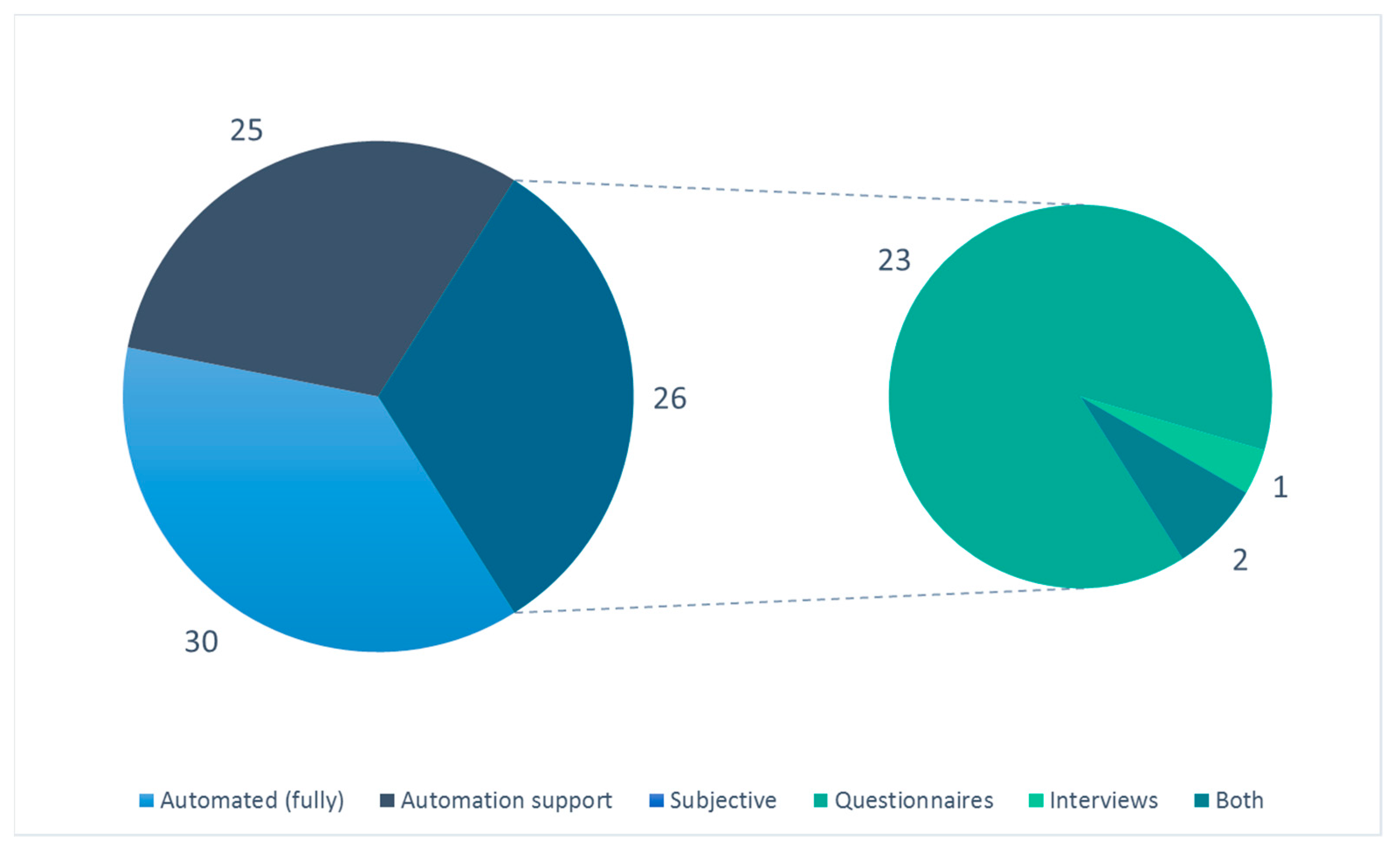

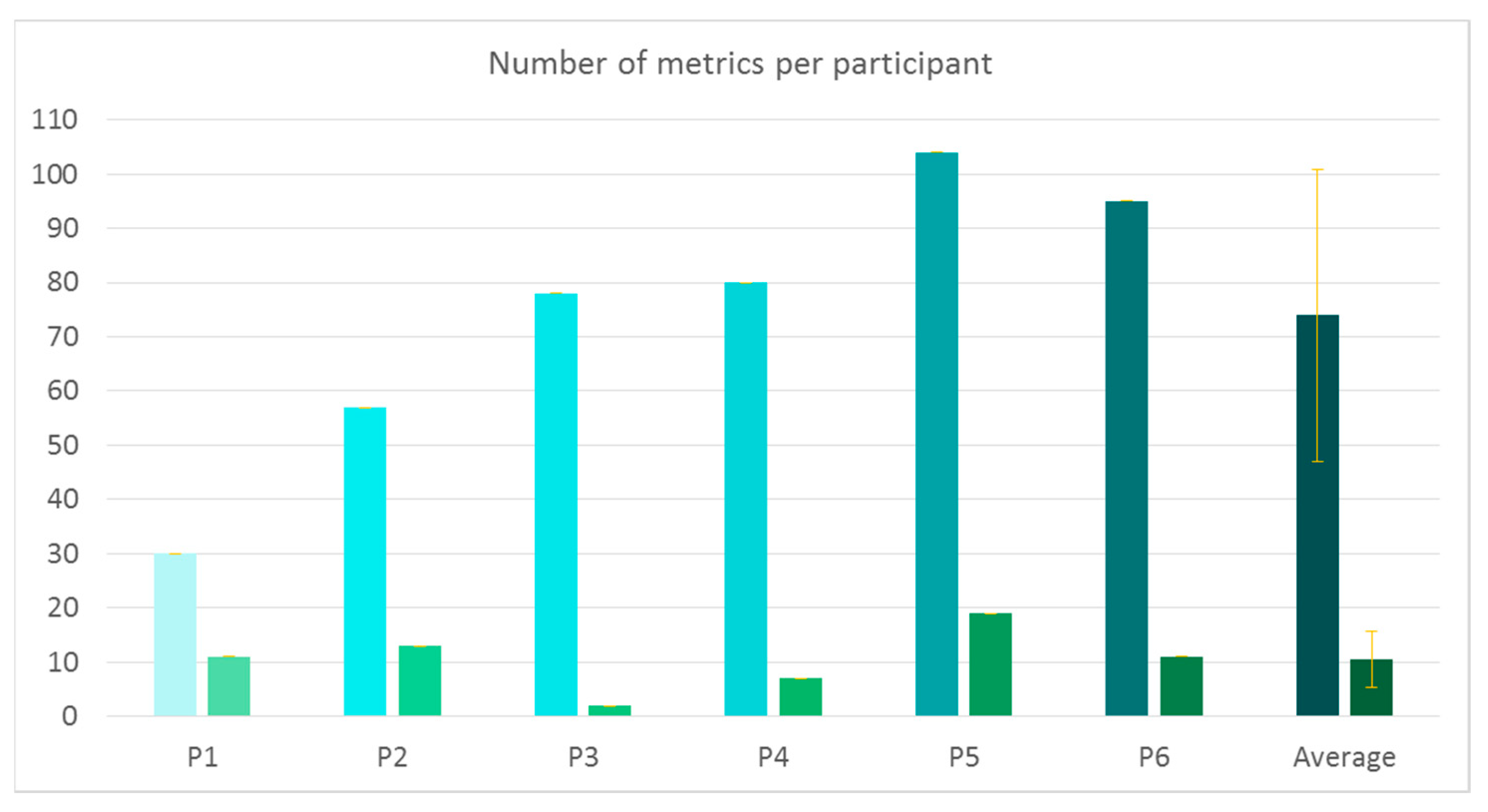

5.3. Results

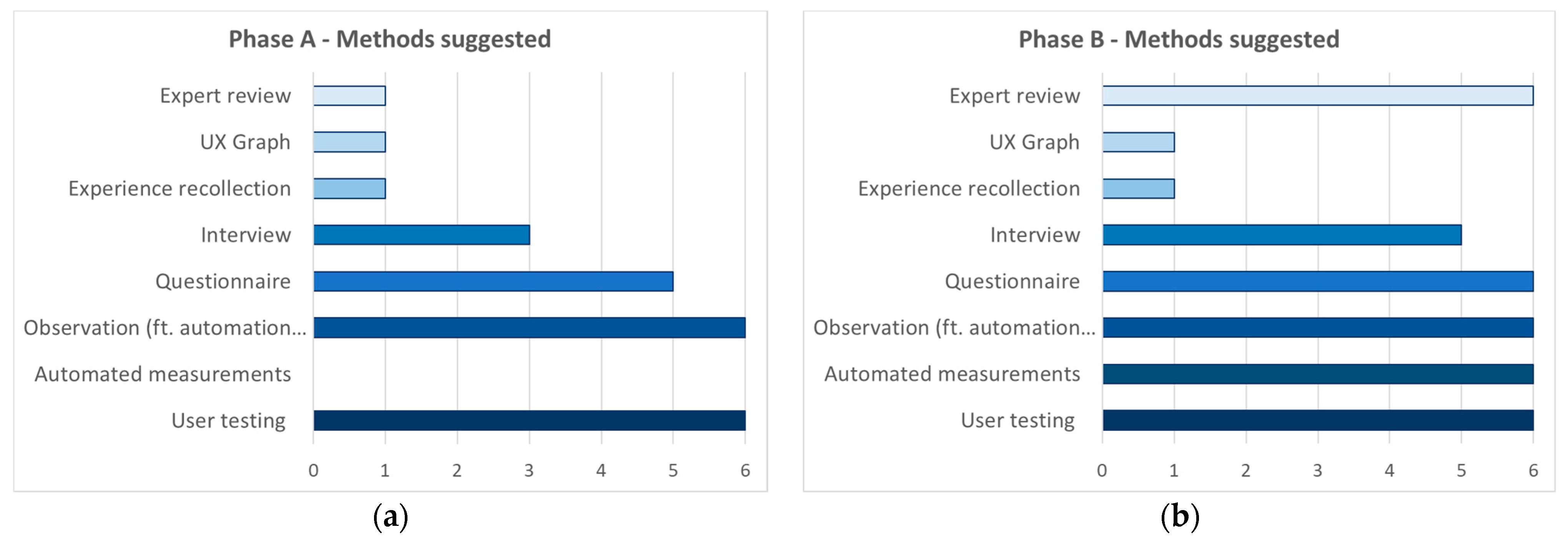

- User testing: suggested by all six participants (100%).

- Expert-based reviews: suggested by one participant only (16.67%).

- Although in phase A only one participant selected expert-based reviews as a method to be employed, in phase B all six participants selected it, embracing the multimethod approach advocated by the framework.

- Interviews were selected by two more participants in phase B.

- Automated measurements were selected by all the participants in phase B.

- Observations through automation support were selected by all the participants in phase B.

- Observation
  - Time to complete a task
  - Number of times that an interaction modality is used
  - Interaction modality changes for a given task
  - Number of errors
  - Input errors
  - Interaction errors
  - Time spent recovering from errors
  - Number of help requests
  - Number of times that the user “undoes” automatic changes
  - Interaction modality accuracy
  - Interaction modality selected first
  - Task success
  - Number of tries to achieve a task
  - Unexpected actions or movements
  - User confidence with interaction modalities
- Think aloud user statements
  - Input modalities that the user wanted to use but did not remember how to
  - Number of times the user expresses frustration
  - Number of times the user expresses joy
  - If the user understands the changes happening in the environment
- Questionnaires
  - Age
  - Gender
  - Computer attitude
  - Preferable interaction technique
  - User satisfaction (questionnaire)
  - How well did the system manage multiple users?
  - Correctness of system adaptations
  - Level of fatigue
  - Users’ experience of the intelligence
  - How intrusive did they find the environment?
  - Effectiveness (questionnaire)
  - Efficiency (questionnaire)
  - User feelings
  - Learnability
  - System innovativeness
  - System responsiveness
  - System predictability
  - Comfortability with gestures
  - Promptness of system adaptations to user emotions
  - Comfortability with tracking and monitoring of activities
- Interview
  - User feedback for each modality
  - Likes
  - Dislikes
  - Additional functionality desired
- Experience Recollection Method (ERM)
  - User experience
- UX Graph
  - User satisfaction from the overall user experience
- Expert-based review
  - Functionality provided for setting preferences

6. Discussion

- Level of fatigue: an important consideration for the evaluation of systems supporting gestures. Although this metric is context-specific, gestures are expected to be a fundamental interaction modality in IEs, so it will be included in future versions of the UXIE framework, along with other metrics examining the most fundamental interaction modalities. In addition, such specific concerns are expected to be studied by expert evaluators as well.

- System responsiveness: a system characteristic that clearly affects the overall user experience and should always be examined during software testing. Future versions of the framework will consider adding this variable to the expert-based measurements and simulations, though not as a user-reported metric.

- Comfortability with tracking and monitoring of activities: a fundamental concern in IEs is whether users accept that the environment collects information about their activities. UXIE already includes attributes regarding safety, privacy, and the user’s control over the behavior of the IE. In addition, the trust metric in the acceptance and adoption category aims to capture how much users trust the IE. Future versions of the framework will explore whether a specific question on activity monitoring should be included as well.

- Perceived overall user experience: this metric could be included as an indication that additional methods estimating user experience as perceived by the users can be employed (e.g., how satisfied they are with the system during the various phases of using it).

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

| Attribute | Metric |
|---|---|
| Unobtrusiveness | |
| Embedment | The system and its components are appropriately embedded in the surrounding architecture |
| Adaptability and adaptivity | |
| Interpretations | Validity of system interpretations |
| Appropriateness of adaptation | Interaction modalities are appropriately adapted according to the user profile and context of use |
| | System output is appropriately adapted according to the user profile and context of use |
| | Content is appropriately adapted according to the user profile and context of use |
| Appropriateness of recommendations | The system adequately explains any recommendations |
| | The system provides an adequate way for users to express and revise their preferences |
| | Recommendations are appropriate for the specific user and context of use |
| Usability | |
| Conformance with guidelines | The user interfaces of the systems comprising the IE conform to relevant guidelines |
| Learnability | Users can easily understand and use the system |
| Accessibility | The system conforms to accessibility guidelines |
| | The systems of the IE are electronically accessible |
| | The IE is physically accessible |
| Physical UI | The system does not violate any ergonomic guidelines |
| | The size and position of the system are appropriate for its manipulation by the target user groups |
| Cross-platform usability | Consistency among the user interfaces of the individual systems |
| | Content is appropriately synchronized for cross-platform tasks |
| | Available actions are appropriately synchronized for cross-platform tasks |
| Multiuser usability | Social etiquette is followed by the system |
| Implicit interactions | Appropriateness of system responses to implicit interactions |
| Appeal and emotions | |
| Aesthetics | The systems follow principles of aesthetic design |
| Safety and privacy | |
| User control | User has control over the data collected |
| | User has control over the dissemination of information |
| | The user can customize the level of control that the IE has: high (acts on behalf of the person), medium (gives advice), low (executes a person’s commands) |
| Privacy | Availability of the user’s information to other users of the system or third parties |
| | Availability of explanations to a user about the potential use of recorded data |
| | Comprehensibility of the security (privacy) policy |
| Safety | The IE is safe for its operators |
| | The IE is safe in terms of public health |
| | The IE does not cause environmental harm |
| | The IE will not cause harm to commercial property, operations or reputation in the intended contexts of use |
| Technology acceptance and adoption | |
| User attributes | Self-efficacy |
| | Computer attitude |
| | Age |
| | Gender |
| | Personal innovativeness |
| Social influences | Subjective norm |
| | Voluntariness |
| Facilitating conditions | Visibility |
| Expected outcomes | Perceived benefit |
| | Long-term consequences of use |
| Trust | User trust towards the system |

| Attribute | Metric |
|---|---|
| Intuitiveness | |
| Awareness of application capabilities | Functionalities that have been used for each system |
| | Undiscovered functionalities of each system |
| Awareness of the interaction vocabulary | Percentage of input modalities used |
| | Erroneous user inputs (inputs that have not been recognized by the system) for each supported input modality |
| | Percentage of erroneous user inputs per input modality |
| Adaptability and adaptivity | |
| Adaptation impact | Number of erroneous user inputs (i.e., incorrect use of input commands) once an adaptation has been applied |
| Appropriateness of recommendations | Percentage of accepted system recommendations |
| Usability | |
| Effectiveness | Number of input errors |
| | Number of system failures |
| Efficiency | Task time |
| Learnability | Number of interaction errors over time |
| | Number of input errors over time |
| | Number of help requests over time |
| Cross-platform usability | After switching device: number of interaction errors until task completion |
| | Help requests after switching devices |
| | Cross-platform task time compared to the task time when the task is carried out on a single device (per device) |
| Multiuser usability | Number of collisions with activities of others |
| | Percentage of conflicts resolved by the system |
| Implicit interactions | Implicit interactions carried out by the user |
| | Number of implicit interactions carried out by the user |
| | Percentage of implicit interactions per implicit interaction type |
| Usage | Global interaction heat map: number of usages per hour on a daily, weekly and monthly basis for the entire IE |
| | Systems’ interaction heat map: number of usages for each system in the IE per hour on a daily, weekly and monthly basis |
| | Applications’ interaction heat map: number of usages for each application in the IE per hour on a daily, weekly and monthly basis |
| | Time duration of users’ interaction with the entire IE |
| | Time duration of users’ interaction with each system of the IE |
| | Time duration of users’ interaction with each application of the IE |
| | Analysis (percentage) of applications used per system (for systems with more than one application) |
| | Percentage of systems to which a pervasive application has been deployed, per application |
| Appeal and emotions | |
| Actionable emotions | Detection of users’ emotional strain through physiological measures, such as heart rate, skin resistance, blood volume pressure, gradient of the skin resistance and speed of the aggregated changes in all variables’ incoming data |

| Attribute | Metric |
|---|---|
| Unobtrusiveness | |
| Distraction | Number of times that the user has deviated from the primary task |
| | Time elapsed from a task deviation until the user returns to the primary task |
| Adaptability and adaptivity | |
| Input (sensor) data | Accuracy of input (sensor) data perceived by the system |
| Interpretations | Validity of system interpretations |
| Appropriateness of adaptation | Interaction modalities are appropriately adapted according to the user profile and context of use |
| | System output is appropriately adapted according to the user profile and context of use |
| | Content is appropriately adapted according to the user profile and context of use |
| | Adaptations that have been manually overridden by the user |
| Adaptation impact | Number of erroneous user interactions once an adaptation has been applied |
| | Percentage of adaptations that have been manually overridden by the user |
| Appropriateness of recommendations | The system adequately explains any recommendations |
| | Recommendations are appropriate for the specific user and context of use |
| | Recommendations that have not been accepted by the user |
| Usability | |
| Effectiveness | Task success |
| | Number of interaction errors |
| Efficiency | Number of help requests |
| | Time spent on errors |
| User satisfaction | Percent of favorable user comments/unfavorable user comments |
| | Number of times that users express frustration |
| | Number of times that users express clear joy |
| Cross-platform usability | After switching device: time spent to continue the task from where it was left off |
| | Cross-platform task success compared to the task success when the task is carried out on a single device (per device) |
| Multiuser usability | Correctness of system’s conflict resolution |
| | Percentage of conflicts resolved by the user(s) |
| Implicit interactions | Appropriateness of system responses to implicit interactions |

| Attribute | Metric |
|---|---|
| Unobtrusiveness | |
| Embedment | The system and its components are appropriately embedded in the surrounding architecture |
| Adaptability and adaptivity | |
| Appropriateness of recommendations | User satisfaction with system recommendations (appropriateness, helpfulness/accuracy) |
| Usability | |
| User satisfaction | Users believe that the system is pleasant to use |
| Appeal and emotions | |
| Aesthetics | The IE and its systems are aesthetically pleasing for the user |
| Fun | Interacting with the IE is fun |
| Actionable emotions | Users’ affective reactions to the system |
| Technology acceptance and adoption | |
| System attributes | Perceived usefulness |
| | Perceived ease of use |
| | Trialability |
| | Relative advantage |
| | Cost (installation, maintenance) |
| Facilitating conditions | End-user support |
| Expected outcomes | Perceived benefit |
| | Long-term consequences of use |
| | Observability |
| | Image |
| Trust | User trust towards the system |

| Attribute | Metric |
|---|---|
| Unobtrusiveness | |
| Embedment | The system and its components are appropriately embedded in the surrounding architecture |
| Adaptability and adaptivity | |
| Appropriateness of recommendations | User satisfaction with system recommendations (appropriateness, helpfulness/accuracy) |
| Usability | |
| Accessibility | The IE is physically accessible |
| User satisfaction | Users believe that the system is pleasant to use |
| Appeal and emotions | |
| Aesthetics | The IE and its systems are aesthetically pleasing for the user |
| Fun | Interacting with the IE is fun |
| Actionable emotions | Users’ affective reactions to the system |
| Technology acceptance and adoption | |
| System attributes | Perceived usefulness |
| | Perceived ease of use |
| | Relative advantage |
| Facilitating conditions | End-user support |
| Expected outcomes | Perceived benefit |
| | Long-term consequences of use |
| | Observability |
| | Image |
| Trust | User trust towards the system |
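Many of the automated measurements listed in the tables above can be derived from a log of interaction events recorded by the IE, in line with the information flow described in Section 4, where interactors trigger sensors that in turn activate the reasoning system. The following minimal Python sketch assumes a hypothetical log format (`InputEvent`, holding a timestamp, the system that received the input, the input modality, and whether the input was recognized) and hypothetical function names; UXIE does not prescribe this format, and a real IE would obtain such events from its own sensors and middleware.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable, Optional, Sequence


@dataclass(frozen=True)
class InputEvent:
    """One user input as observed by the IE (hypothetical log format)."""
    timestamp: datetime
    system: str        # system of the IE that received the input
    modality: str      # e.g. "touch", "gesture", "speech"
    recognized: bool   # False if the system could not recognize the input


def percentage_of_modalities_used(events: Iterable[InputEvent],
                                  supported: Sequence[str]) -> float:
    """Awareness of the interaction vocabulary: share of supported modalities used at least once."""
    supported_set = set(supported)
    if not supported_set:
        raise ValueError("at least one supported modality is required")
    used = {e.modality for e in events} & supported_set
    return 100.0 * len(used) / len(supported_set)


def erroneous_inputs_per_modality(events: Iterable[InputEvent]) -> Counter:
    """Number of unrecognized inputs for each input modality."""
    return Counter(e.modality for e in events if not e.recognized)


def percentage_erroneous_per_modality(events: Sequence[InputEvent]) -> Dict[str, float]:
    """Unrecognized inputs as a percentage of all inputs, per modality."""
    totals = Counter(e.modality for e in events)
    errors = erroneous_inputs_per_modality(events)
    return {m: 100.0 * errors[m] / n for m, n in totals.items()}


def hourly_heat_map(events: Iterable[InputEvent],
                    system: Optional[str] = None) -> Counter:
    """Number of usages per (day, hour), optionally restricted to one system.

    Weekly or monthly views can be obtained by summing these cells over
    the corresponding days.
    """
    heat: Counter = Counter()
    for e in events:
        if system is None or e.system == system:
            heat[(e.timestamp.date(), e.timestamp.hour)] += 1
    return heat


def percentage_accepted(recommendation_outcomes: Sequence[bool]) -> float:
    """Percentage of accepted system recommendations (True means accepted)."""
    if not recommendation_outcomes:
        return 0.0
    return 100.0 * sum(recommendation_outcomes) / len(recommendation_outcomes)


if __name__ == "__main__":
    log = [
        InputEvent(datetime(2021, 3, 1, 9, 15), "table", "touch", True),
        InputEvent(datetime(2021, 3, 1, 9, 40), "table", "touch", False),
        InputEvent(datetime(2021, 3, 1, 9, 55), "table", "touch", True),
        InputEvent(datetime(2021, 3, 1, 10, 5), "wall", "gesture", False),
    ]
    print(percentage_of_modalities_used(log, ["touch", "gesture", "speech"]))
    print(percentage_erroneous_per_modality(log))
    print(hourly_heat_map(log, system="table"))
```

Keeping each metric as a separate function over the same event log mirrors the tables, where each metric is listed individually, and makes it straightforward to compute only the subset selected for a given evaluation.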

References

- Stephanidis, C.; Antona, M.; Ntoa, S. Human factors in ambient intelligence environments. In Handbook of Human Factors and Ergonomics, 5th ed.; Salvendy, G., Karwowski, W., Eds.; John Wiley & Sons: Hoboken, NJ, USA, 2021.

- Stephanidis, C.C.; Salvendy, G.; Antona, M.; Chen, J.Y.C.; Dong, J.; Duffy, V.G.; Fang, X.; Fidopiastis, C.; Fragomeni, G.; Fu, L.P.; et al. Seven HCI Grand Challenges. Int. J. Hum. Comput. Interact. 2019, 35, 1229–1269.

- Augusto, J.C.; Nakashima, H.; Aghajan, H. Ambient Intelligence and Smart Environments: A State of the Art. In Handbook of Ambient Intelligence and Smart Environments; Nakashima, H., Aghajan, H., Augusto, J.C., Eds.; Springer: Boston, MA, USA, 2010; pp. 3–31.

- Davis, F.D. A Technology Acceptance Model for Empirically Testing New End-User Information Systems: Theory and Results. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1986.

- Ntoa, S.; Antona, M.; Stephanidis, C. Towards Technology Acceptance Assessment in Ambient Intelligence Environments. In Proceedings of the AMBIENT 2017, the Seventh International Conference on Ambient Computing, Applications, Services and Technologies, Barcelona, Spain, 12–16 November 2017; pp. 38–47.

- Petrie, H.; Bevan, N. The Evaluation of Accessibility, Usability, and User Experience. In The Universal Access Handbook; Stephanidis, C., Ed.; CRC Press: Boca Raton, FL, USA, 2009.

- Paz, F.; Pow-Sang, J.A. Current Trends in Usability Evaluation Methods: A Systematic Review. In Proceedings of the 7th International Conference on Advanced Software Engineering and Its Applications, Hainan Island, China, 20–23 December 2014; pp. 11–15.

- Hornbæk, K.; Law, E.L.-C. Meta-Analysis of Correlations Among Usability Measures; ACM Press: San Jose, CA, USA, 2007; pp. 617–626.

- ISO 9241-210:2019. Ergonomics of Human-System Interaction—Part 210: Human-Centered Design for Interactive Systems, 2nd ed.; ISO: Geneva, Switzerland, 2019.

- Andrade, R.M.C.; Carvalho, R.M.; de Araújo, I.L.; Oliveira, K.M.; Maia, M.E.F. What Changes from Ubiquitous Computing to Internet of Things in Interaction Evaluation? In Distributed, Ambient and Pervasive Interactions; Streitz, N., Markopoulos, P., Eds.; Springer: Cham, Switzerland, 2017; pp. 3–21.

- Hellweger, S.; Wang, X. What Is User Experience Really: Towards a UX Conceptual Framework. arXiv 2015, arXiv:1503.01850.

- Miki, H. User Experience Evaluation Framework for Human-Centered Design. In Human Interface and the Management of Information: Information and Knowledge Design and Evaluation; Yamamoto, S., Ed.; Springer: Cham, Switzerland, 2014; pp. 602–612.

- Lachner, F.; Naegelein, P.; Kowalski, R.; Spann, M.; Butz, A. Quantified UX: Towards a Common Organizational Understanding of User Experience. In Proceedings of the 9th Nordic Conference on Human-Computer Interaction, Gothenburg, Sweden, 23–27 October 2016; pp. 1–10.

- Zarour, M.; Alharbi, M. User experience framework that combines aspects, dimensions, and measurement methods. Cogent Eng. 2017, 4, 1421006.

- Weiser, M. The computer for the 21st century. ACM SIGMOBILE Mob. Comput. Commun. Rev. 1999, 3, 3–11.

- Want, R. An Introduction to Ubiquitous Computing. In Ubiquitous Computing Fundamentals; Krumm, J., Ed.; Chapman & Hall/CRC Press: Boca Raton, FL, USA, 2010.

- Connelly, K. On developing a technology acceptance model for pervasive computing. In Proceedings of the 9th International Conference on Ubiquitous Computing (UBICOMP)-Workshop of Ubiquitous System Evaluation (USE), Innsbruck, Austria, 16–19 September 2007.

- Scholtz, J.; Consolvo, S. Toward a framework for evaluating ubiquitous computing applications. IEEE Pervasive Comput. 2004, 3, 82–88.

- Carvalho, R.M.; Andrade, R.M.D.C.; de Oliveira, K.M.; Santos, I.D.S.; Bezerra, C.I.M. Quality characteristics and measures for human–computer interaction evaluation in ubiquitous systems. Softw. Qual. J. 2017, 25, 743–795.

- Díaz-Oreiro, I.; López, G.; Quesada, L.; Guerrero, L.A. UX Evaluation with Standardized Questionnaires in Ubiquitous Computing and Ambient Intelligence: A Systematic Literature Review. Adv. Hum. Comput. Interact. 2021, 2021, 1–22.

- Almeida, R.L.A.; Andrade, R.M.C.; Darin, T.G.R.; Paiva, J.O.V. CHASE: Checklist to assess user experience in IoT environments. In Proceedings of the ACM/IEEE 42nd International Conference on Software Engineering: New Ideas and Emerging Results, Seoul, Korea, 27 June–19 July 2020; pp. 41–44.

- Dhouib, A.; Trabelsi, A.; Kolski, C.; Neji, M. An approach for the selection of evaluation methods for interactive adaptive systems using analytic hierarchy process. In Proceedings of the IEEE 10th International Conference on Research Challenges in Information Science (RCIS), Grenoble, France, 1–3 June 2016; pp. 1–10.

- Raibulet, C.; Fontana, F.A.; Capilla, R.; Carrillo, C. An Overview on Quality Evaluation of Self-Adaptive Systems. In Managing Trade-Offs in Adaptable Software Architectures; Elsevier: Amsterdam, The Netherlands, 2017; pp. 325–352.

- De Ruyter, B.; Aarts, E. Experience Research: A Methodology for Developing Human-centered Interfaces. In Handbook of Ambient Intelligence and Smart Environments; Nakashima, H., Aghajan, H., Augusto, J.C., Eds.; Springer: Boston, MA, USA, 2010; pp. 1039–1067.

- Aarts, E.E.; de Ruyter, B.B. New research perspectives on Ambient Intelligence. J. Ambient. Intell. Smart Environ. 2009, 1, 5–14.

- Pavlovic, M.; Kotsopoulos, S.; Lim, Y.; Penman, S.; Colombo, S.; Casalegno, F. Determining a Framework for the Generation and Evaluation of Ambient Intelligent Agent System Designs; Springer: Cham, Switzerland, 2020; pp. 318–333.

- De Carolis, B.; Ferilli, S.; Novielli, N. Recognizing the User Social Attitude in Multimodal Interaction in Smart Environments. In Ambient Intelligence; Paternò, F., de Ruyter, B., Markopoulos, P., Santoro, C., van Loenen, E., Luyten, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 240–255.

- Remagnino, P.; Foresti, G.L. Ambient Intelligence: A New Multidisciplinary Paradigm. IEEE Trans. Syst. Man Cybern. 2005, 35, 1–6.

- Augusto, J.; Mccullagh, P. Ambient Intelligence: Concepts and applications. Comput. Sci. Inf. Syst. 2007, 4, 1–27.

- Aarts, E.; Wichert, R. Ambient intelligence. In Technology Guide; Bullinger, H.-J., Ed.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 244–249.

- Cook, D.J.; Augusto, J.C.; Jakkula, V.R. Ambient intelligence: Technologies, applications, and opportunities. Pervasive Mob. Comput. 2009, 5, 277–298.

- Sadri, F. Ambient intelligence. ACM Comput. Surv. 2011, 43, 1–66.

- Turner, P. Towards an account of intuitiveness. Behav. Inf. Technol. 2008, 27, 475–482.

- Paramythis, A.; Weibelzahl, S.; Masthoff, J. Layered evaluation of interactive adaptive systems: Framework and formative methods. User Model. User Adapt. Interact. 2010, 20, 383–453.

- Ryu, H.; Hong, G.Y.; James, H. Quality assessment technique for ubiquitous software and middleware. Res. Lett. Inf. Math. Sci. 2006, 9, 13–87.

- Margetis, G.; Ntoa, S.; Antona, M.; Stephanidis, C. Augmenting natural interaction with physical paper in ambient intelligence environments. Multimed. Tools Appl. 2019, 78, 13387–13433.

- Nielsen, J. Usability Engineering; Morgan Kaufmann: Amsterdam, The Netherlands, 2010.

- Martins, A.I.; Queirós, A.; Silva, A.G.; Rocha, N.P. Usability Evaluation Methods: A Systematic Review. In Human Factors in Software Development and Design; Saeed, S., Bajwa, I.S., Mahmood, Z., Eds.; IGI Global: Hershey, PA, USA, 2015.

- Ntoa, S.; Margetis, G.; Antona, M.; Stephanidis, C. UXAmI Observer: An Automated User Experience Evaluation Tool for Ambient Intelligence Environments; Arai, K., Kapoor, S., Bhatia, R., Eds.; Springer: Cham, Switzerland, 2019; pp. 1350–1370.

- Margetis, G.; Antona, M.; Ntoa, S.; Stephanidis, C. Towards Accessibility in Ambient Intelligence Environments. In Ambient Intelligence; Paternò, F., de Ruyter, B., Markopoulos, P., Santoro, C., van Loenen, E., Luyten, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 328–337.

- Schmidt, A. Implicit human computer interaction through context. Pers. Ubiquitous Comput. 2000, 4, 191–199.

- Heo, J.; Ham, D.-H.; Park, S.; Song, C.; Yoon, W.C. A framework for evaluating the usability of mobile phones based on multi-level, hierarchical model of usability factors. Interact. Comput. 2009, 21, 263–275.

- Sommerville, I.; Dewsbury, G. Dependable domestic systems design: A socio-technical approach. Interact. Comput. 2007, 19, 438–456.

- Kurosu, M. Theory of User Engineering; CRC Press: Boca Raton, FL, USA, 2017.

- Cook, D.J.; Das, S.K. Smart Environments: Technologies, Protocols, and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2005.

| Attribute | Metric | Method |
|---|---|---|
| Intuitiveness | | |
| Awareness of application capabilities | Functionalities that have been used for each system * | User testing [D]: Automated measurement |
| | Undiscovered functionalities of each system * | User testing [D]: Automated measurement |
| Awareness of the interaction vocabulary | Percentage of input modalities used * | User testing [D]: Automated measurement |
| | Erroneous user inputs (inputs that have not been recognized by the system) for each supported input modality * | User testing [D]: Automated measurement |
| | Percentage of erroneous user inputs per input modality * | User testing [D]: Automated measurement |
| Unobtrusiveness | | |
| Distraction | Number of times that the user has deviated from the primary task * | User testing [D] [Task-based, or Think Aloud]: Automation support |
| | Time elapsed from a task deviation until the user returns to the primary task | User testing [D] [Task-based, or Think Aloud]: Automation support |
| Embedment | The system and its components are appropriately embedded in the surrounding architecture | Expert-based review; User testing [sA]: Questionnaire, Interview |
| Adaptability and adaptivity | | |
| Input (sensor) data | Accuracy of input (sensor) data perceived by the system | User testing [D]: Automation support |
| Interpretations | Validity of system interpretations | Expert-based review |
| Appropriateness of adaptation | Interaction modalities are appropriately adapted according to the user profile and context of use * | Expert-based review; User testing [D]: Automation support |
| | System output is appropriately adapted according to the user profile and context of use * | Expert-based review; User testing [D]: Automation support |
| | Content is appropriately adapted according to the user profile and context of use * | Expert-based review; User testing [D]: Automation support |
| | Adaptations that have been manually overridden by the user * | User testing [D]: Automation support |
| Adaptation impact | Number of erroneous user inputs (i.e., incorrect use of input commands) once an adaptation has been applied * | User testing [D]: Automated measurement |
| | Number of erroneous user interactions once an adaptation has been applied * | User testing [D]: Automation support |
| | Percentage of adaptations that have been manually overridden by the user * | User testing [D]: Automation support |
| Appropriateness of recommendations | The system adequately explains any recommendations | Expert-based review; User testing [D]: Automation support |
| | The system provides an adequate way for users to express and revise their preferences | Expert-based review |
| | Recommendations are appropriate for the specific user and context of use * | Expert-based review; User testing [D]: Automation support |
| | Recommendations that have not been accepted by the user * | User testing [D]: Automation support |
| | Percentage of accepted system recommendations * | User testing [D]: Automated measurement |
| | User satisfaction with system recommendations (appropriateness, helpfulness/accuracy) | User testing [sA]: Questionnaire, Interview |
| Usability | | |
| Conformance with guidelines | The user interfaces of the systems comprising the IE conform to relevant guidelines | Expert-based review |
| Effectiveness | Task success | User testing [D] (Task-based): Automation support |
| | Number of interaction errors * | User testing [D]: Automation support |
| | Number of input errors * | User testing [D]: Automated measurement |
| | Number of system failures | User testing [D]: Automated measurement |
| Efficiency | Task time | User testing [D] (Task-based): Automated measurement |
| | Number of help requests | User testing [D]: Automation support |
| | Time spent on errors | User testing [D]: Automation support |
| Learnability | Users can easily understand and use the system | Expert-based review (cognitive walkthrough) |
| | Number of interaction errors over time * | User testing [D]: Automated measurement |
| | Number of input errors over time * | User testing [D]: Automated measurement |
| | Number of help requests over time * | User testing [D]: Automated measurement |
| Accessibility | The system conforms to accessibility guidelines | Expert-based review; Semi-automated accessibility evaluation tools |
| | The systems of the IE are electronically accessible | Expert-based review; User testing [D] |
| | The IE is physically accessible | Expert-based review; User testing [D]; User testing [sA]: Interview |
| Physical UI | The system does not violate any ergonomic guidelines | Expert-based review |
| | The size and position of the system are appropriate for its manipulation by the target user groups | Expert-based review; User testing [D] |
| User satisfaction | Users believe that the system is pleasant to use | User testing [sA] [lA]: Questionnaire |
| | Percent of favorable user comments/unfavorable user comments | User testing [D]: Automation support |
| | Number of times that users express frustration | User testing [D]: Automation support |
| | Number of times that users express clear joy | User testing [D]: Automation support |
| Cross-platform usability | After switching device: time spent to continue the task from where it was left off * | User testing [D]: Automation support |
| | After switching device: number of interaction errors until task completion * | User testing [D]: Automated measurement |
| | Consistency among the user interfaces of the individual systems | Expert-based review |
| | Content is appropriately synchronized for cross-platform tasks | Expert-based review |
| | Available actions are appropriately synchronized for cross-platform tasks | Expert-based review |
| | Help requests after switching devices * | User testing [D]: Automated measurement |
| | Cross-platform task success compared to the task success when the task is carried out on a single device (per device) * | User testing [D] (Task-based): Automation support |
| | Cross-platform task time compared to the task time when the task is carried out on a single device (per device) * | User testing [D] (Task-based): Automated measurement |
| Multiuser usability | Number of collisions with activities of others | User testing [D]: Automated measurement |
| | Correctness of system’s conflict resolution * | User testing [D]: Automation support |
| | Percentage of conflicts resolved by the system | User testing [D]: Automated measurement |
| | Percentage of conflicts resolved by the user(s) * | User testing [D]: Automation support |
| | Social etiquette is followed by the system | Expert-based review |
| Implicit interactions | Implicit interactions carried out by the user * | User testing [D]: Automated measurement |

| Number of implicit interactions carried out by the user * | User testing [ D]: Automated measurement D]: Automated measurement | |

| Percentages of implicit interactions per implicit interaction type * | User testing [ D]: Automated measurement D]: Automated measurement | |

| Appropriateness of system responses to implicit interactions * | Expert-based review User testing [  D]: Automation support D]: Automation support | |

| Usage | Global interaction heat map: number of usages per hour on a daily, weekly and monthly basis for the entire IE * | User testing [ D]: Automated measurement D]: Automated measurement |

| Systems’ interaction heat map: number of usages for IE each system per hour on a daily, weekly and monthly basis * | User testing [ D]: Automated measurement D]: Automated measurement | |

| Applications’ interaction heat map: number of usages for each IE application per hour on a daily, weekly and monthly basis * | User testing [ D]: Automated measurement D]: Automated measurement | |

| Time duration of users’ interaction with the entire IE * | User testing [ D]: Automated measurement D]: Automated measurement | |

| Time duration of users’ interaction with each system of the IE * | User testing [ D]: Automated measurement D]: Automated measurement | |

| Time duration of users’ interaction with each application of the IE * | User testing [ D]: Automated measurement D]: Automated measurement | |

| Analysis (percentage) of applications used per system (for systems with more than one application) * | User testing [ D]: Automated measurement D]: Automated measurement | |

| Percentage of systems to which a pervasive application has been deployed, per application * | User testing [ D]: Automated measurement D]: Automated measurement | |

| Appeal and Emotions | | |
| Aesthetics | The systems follow principles of aesthetic design | Expert-based review |
| | The IE and its systems are aesthetically pleasing for the user | User testing [sA] [lA]: Questionnaire |
| Fun | Interacting with the IE is fun | User testing [sA] [lA]: Questionnaire |
| Actionable emotions | Detection of users’ emotional strain through physiological measures, such as heart rate, skin resistance, blood volume pressure, gradient of the skin resistance and speed of the aggregated changes in all variables’ incoming data | User testing [D]: Automated measurement |
| | Users’ affective reaction to the system | User testing [sA] [lA]: Questionnaire |
| Safety and Privacy | | |
| User control | User has control over the data collected | Expert-based review |
| | User has control over the dissemination of information | Expert-based review |
| | The user can customize the level of control that the IE has: high (acts on behalf of the person), medium (gives advice), low (executes a person’s commands) * | Expert-based review |
| Privacy | Availability of the user’s information to other users of the system or third parties | Expert-based review |
| | Availability of explanations to a user about the potential use of recorded data | Expert-based review |
| | Comprehensibility of the security (privacy) policy | Expert-based review |
| Safety | The IE is safe for its operators | Expert-based review |
| | The IE is safe in terms of public health | Expert-based review |
| | The IE does not cause environmental harm | Expert-based review |
| | The IE will not cause harm to commercial property, operations or reputation in the intended contexts of use | Expert-based review |
| Technology Acceptance and Adoption | | |
| System attributes | Perceived usefulness | User testing [sA] [lA]: Questionnaire |
| | Perceived ease of use | User testing [sA] [lA]: Questionnaire |
| | Trialability | Field study/In situ evaluation [sA]: Questionnaire |
| | Relative advantage | User testing [sA] [lA]: Questionnaire |
| | Cost (installation, maintenance) | Field study/In situ evaluation [sA]: Questionnaire |
| User attributes | Self-efficacy | User testing [B]: Questionnaire |
| | Computer attitude | User testing [B]: Questionnaire |
| | Age | User testing [B]: Questionnaire |
| | Gender | User testing [B]: Questionnaire |
| | Personal innovativeness | User testing [B]: Questionnaire |
| Social influences | Subjective norm | User testing [B]: Questionnaire |
| | Voluntariness | User testing [B]: Questionnaire |
| Facilitating conditions | End-user support | Field study/In situ evaluation [sA] [lA]: Questionnaire |
| | Visibility | Field study/In situ evaluation [B]: Questionnaire |
| Expected outcomes | Perceived benefit | User testing [B] [sA] [lA]: Questionnaire |
| | Long-term consequences of use | User testing [B] [sA] [lA]: Questionnaire |
| | Observability | User testing [sA] [lA]: Questionnaire |
| | Image | User testing [sA] [lA]: Questionnaire |
| Trust | User trust towards the system | User testing [B] [sA] [lA]: Questionnaire |
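Several Usage, Implicit interactions and Multiuser usability metrics above are marked as automated measurements, which the framework grounds in the fact that interactions in an IE pass through the environment's sensors and agents, so the environment itself knows about them. The following Python sketch shows how a few of these metrics could be derived from such knowledge, assuming a hypothetical interaction log in which every event records a timestamp, the system and application involved and, for implicit interactions, an interaction type. The `InteractionEvent` schema, the function names and the "system"/"user" conflict labels are illustrative assumptions, not part of UXIE.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Hashable, List, Optional


@dataclass
class InteractionEvent:
    """One logged interaction (hypothetical schema, not defined by UXIE)."""
    timestamp: datetime
    system: str                          # e.g. "living_room_tv"
    application: str                     # e.g. "news"
    implicit_type: Optional[str] = None  # None marks an explicit interaction


def period_key(ts: datetime, basis: str) -> Hashable:
    """Bucket a timestamp by day, ISO week or month."""
    if basis == "daily":
        return ts.date()
    if basis == "weekly":
        iso = ts.isocalendar()
        return (iso[0], iso[1])  # (ISO year, ISO week number)
    if basis == "monthly":
        return (ts.year, ts.month)
    raise ValueError(f"unknown basis: {basis}")


def usage_heat_map(events: List[InteractionEvent], basis: str,
                   system: Optional[str] = None) -> Counter:
    """Number of usages per (period, hour), for the whole IE or for one system."""
    counts: Counter = Counter()
    for e in events:
        if system is None or e.system == system:
            counts[(period_key(e.timestamp, basis), e.timestamp.hour)] += 1
    return counts


def implicit_type_percentages(events: List[InteractionEvent]) -> Dict[str, float]:
    """Percentage of implicit interactions that fall under each implicit type."""
    types = Counter(e.implicit_type for e in events if e.implicit_type is not None)
    total = sum(types.values())
    return {t: 100 * n / total for t, n in types.items()} if total else {}


def application_share_per_system(
        events: List[InteractionEvent]) -> Dict[str, Dict[str, float]]:
    """Percentage of each system's usages that went to each of its applications."""
    per_system: Dict[str, Counter] = defaultdict(Counter)
    for e in events:
        per_system[e.system][e.application] += 1
    return {
        s: {a: 100 * n / sum(apps.values()) for a, n in apps.items()}
        for s, apps in per_system.items()
    }


def percent_resolved_by_system(resolvers: List[str]) -> float:
    """resolvers: one label per detected conflict, 'system' or 'user' (assumed encoding)."""
    if not resolvers:
        return 0.0
    return 100 * resolvers.count("system") / len(resolvers)
```

For example, `usage_heat_map(log, "weekly", system="living_room_tv")` would give the weekly heat map for a single system, while omitting `system` gives the global heat map; per-application heat maps or interaction durations could be computed with the same grouping pattern.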

| Age | N | Usability/UX Expertise | N | Evaluation in IEs | N |
|---|---|---|---|---|---|
| 20–30 | 2 | Expert | 3 | Expert | 1 |
| 30–40 | 2 | Knowledgeable | 3 | Knowledgeable | 2 |
| 40–50 | 2 | | | Familiar | 3 |

| Living Room TV (Interaction: Gestures, Speech, and Remote Control) | |
|---|---|
| Scenario 1 | Jenny arrives home after a long day at work. On her way home, she heard on the radio about an earthquake on her home island. Worried, she turns on the TV with the remote control. She switches to her favorite news channel with the remote control and turns up the volume with a gesture, raising her palm so that it faces the ceiling. The news channel is currently showing statements by the Prime Minister on a hot political topic. While listening to the news, she does some household chores and prepares dinner. While she is cooking, she hears that a report about the earthquake is being presented and returns to the TV area. It turns out that the earthquake was minor after all and no damage has been reported. |
| Scenario 3 | Peter has returned home from work and is reading the news on the living room TV. While reading, he receives a message from Jenny saying that she is on her way home and that he should start the dishwasher. Peter heads towards the kitchen (the lights are turned on), selects a dishwasher program, starts it, and returns to the living room (the kitchen lights are automatically turned off). After some time, Jenny arrives home and unlocks the front door. Since Jenny’s preferred lighting mode is full bright, whereas Peter has dimmed the lights, a message is displayed on the active home display, the living room TV, asking whether the lighting should change to full bright. Peter authorizes the environment to change the lighting mode, welcomes Jenny, and they both sit on the couch to read the news. Peter tells Jenny about an interesting article on an automobile company and the recent emissions scandal and opens the article for her to read. Having read the article, Jenny recalls something interesting she read at work about a new car model from that company and how it uses IT to detect driver fatigue. She returns to the news categories, selects the IT news category, and they both look for that article. Peter reads it, and they continue selecting interesting news articles together. After some time, since they have to wait for Arthur, their 15-year-old son, to come back from the cinema, they decide to watch a movie. The system recommends movies based on their common interests and preferences. Peter selects the movie and Jenny raises the volume, while the environment dims the lights to the preset mode for watching TV. Some time later, as the movie is nearing its end, Arthur comes home. As soon as he unlocks the door and enters, the lights are set to full bright and the movie stops, since it is rated as inappropriate for persons younger than 16 years old. Jenny and Peter welcome their son and then resume the movie, as they consider it acceptable for Arthur anyway, and it is about to end. The movie ends and Jenny heads to the kitchen to serve dinner. Arthur and Peter browse through their favorite radio stations and select one to listen to. Dinner is served, the family gathers in the kitchen, and the music follows along, as it is automatically transferred to the kitchen speaker. |

| | P1 | P2 | P3 | P4 | P5 | P6 | Avg. |
|---|---|---|---|---|---|---|---|
| Phase A | 0 | 0 | 0 | 0 | 0 | 1 | 0.16 |
| Phase B | 3 | 22 | 25 | 16 | 30 | 29 | 20.83 |
| UXIE adoption | 10% | 73.33% | 83.33% | 53.33% | 100% | 96.66% | 69.44% |
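Each UXIE adoption percentage in the table equals the participant's Phase B value divided by 30 (for example, 3/30 = 10% and 30/30 = 100%). The denominator of 30 is inferred from these numbers rather than stated in this section, and the printed values (0.16, 96.66) are consistent with truncation to two decimals rather than rounding. The following Python sketch reproduces the row under those two assumptions; the constant and function names are illustrative.

```python
import math

# Per-participant values (P1..P6), copied from the table above.
PHASE_A = [0, 0, 0, 0, 0, 1]
PHASE_B = [3, 22, 25, 16, 30, 29]
# Assumption: adoption = Phase B value / 30. The denominator is inferred
# from the printed percentages, not stated in this section.
DENOMINATOR = 30


def truncate2(x: float) -> float:
    """Truncate (not round) a non-negative value to two decimals."""
    return math.floor(x * 100) / 100


def adoption(counts, denominator=DENOMINATOR):
    """Adoption percentage per participant."""
    return [truncate2(100 * c / denominator) for c in counts]


def overall_adoption(counts, denominator=DENOMINATOR):
    """Average adoption percentage across participants."""
    return truncate2(100 * sum(counts) / (denominator * len(counts)))


def average(values):
    """Truncated arithmetic mean, as in the Avg. column."""
    return truncate2(sum(values) / len(values))
```

Computing the overall adoption from the raw totals (125 of 180) rather than from the already truncated percentages avoids compounding truncation error, although both give 69.44% here.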

| Participant | Observation | User Statements | Questionnaire | Interview | ERM | UX Graph | Expert | Total |
|---|---|---|---|---|---|---|---|---|
| P1 | 7 | 4 | 0 | 0 | 0 | 0 | 0 | 11 |
| P2 | 3 | 0 | 8 | 0 | 1 | 1 | 0 | 13 |
| P3 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 2 |
| P4 | 4 | 0 | 1 | 2 | 0 | 0 | 0 | 7 |
| P5 | 5 | 0 | 10 | 4 | 0 | 0 | 0 | 19 |
| P6 | 2 | 0 | 7 | 1 | 0 | 0 | 1 | 11 |
| Total | 22 | 4 | 26 | 8 | 1 | 1 | 1 | 63 |
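The Total column and the Total row are plain row and column sums of the per-participant counts. As a consistency check, the following Python sketch recomputes both from the cell values; the variable and function names are illustrative only.

```python
# Per-participant counts, in the column order of the table above.
METHODS = ["Observation", "User Statements", "Questionnaire", "Interview",
           "ERM", "UX Graph", "Expert"]
COUNTS = {
    "P1": [7, 4, 0, 0, 0, 0, 0],
    "P2": [3, 0, 8, 0, 1, 1, 0],
    "P3": [1, 0, 0, 1, 0, 0, 0],
    "P4": [4, 0, 1, 2, 0, 0, 0],
    "P5": [5, 0, 10, 4, 0, 0, 0],
    "P6": [2, 0, 7, 1, 0, 0, 1],
}


def row_totals(counts):
    """Total per participant (the Total column)."""
    return {p: sum(row) for p, row in counts.items()}


def column_totals(counts):
    """Total per method (the Total row), obtained by transposing the rows."""
    return {m: sum(col) for m, col in zip(METHODS, zip(*counts.values()))}
```

Both sets of totals sum to the same grand total, which is what the bottom-right cell of the table reports.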


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ntoa, S.; Margetis, G.; Antona, M.; Stephanidis, C. User Experience Evaluation in Intelligent Environments: A Comprehensive Framework. Technologies 2021, 9, 41. https://doi.org/10.3390/technologies9020041
