1. Introduction

Vocational training consists of instructional programs and courses that train a workforce. It focuses on equipping people with the skills required for a particular job function or trade. Manufacturing training is a subset of vocational training in which workers receive on-the-job training to acquire or improve the skills required to do their jobs. Gennrich et al. [1] state that technical and vocational education and training (TVET) uses formal, non-formal and informal learning methods to provide the knowledge and skills required for a trade.

Manufacturing industries have changed drastically over the past few years. Historically, the manufacture of goods has evolved from craftsmanship, to highly organized mass-production factories, to the highly customized production of Industry 4.0. Consequently, the skills the workforce needs to adapt to these rapid changes have increased. Global competition now drives the market for manufactured goods, and skills, processes and production must adapt quickly to meet the demands of transforming markets.

Productivity has increased due to rapid advances in manufacturing technologies. Hence, there is a need to ensure worker engagement, performance and wellness. Skills are needed in both cognitive and physical areas, and skill demands have increased significantly as the manufacturing sector evolves. For example, Industry 4.0, which includes the Internet of Things (IoT), the Industrial Internet of Things (IIoT), cloud-based manufacturing and smart manufacturing, makes the manufacturing process digitized and intelligent, and, as described by Ero et al. [2], has increased the demand for cognitive skills. With the close integration of technology, robots, automated factory lines and intelligent manufacturing, workers must use cognitive skills to work efficiently. At the same time, the cognitive load on workers is higher than ever, and there is a need to assess, monitor and improve cognitive performance.

Newly required skills include, but are not limited to, understanding the complete manufacturing process from order to delivery of the product, working with smart devices on the factory floor, learning to use technically advanced machinery and tools, communicating with and handling robots, reading, understanding and conveying data in real time, learning to program firm software, and working with data mining and cloud infrastructure. With the rise of robotic technologies, the integration of smart connected robotics and smart maintenance has become common in Industry 4.0 [3].

TVET systems need to prepare workers with the skills of the future for global connectivity and smart technologies in the manufacturing sector. The World Economic Forum's Future of Jobs reports from 2015 list the top ten skills relevant to Industry 4.0. These are cross-functional skills, also known as soft or interpersonal skills. Even though these skills are not job-specific and are common to every vocation, training workers in them improves their cognitive performance and strongly affects the overall outcome of the job.

In manufacturing, skills are applied to produce marketable goods and products. Workers acquire the skills needed to help produce these goods and products, and the process by which they develop these abilities is considered training. Manufacturing companies currently train their workers in different ways. One popular method is to assign a senior member of the workforce to a new worker; this senior member acts as a mentor and teaches every skill needed to do the job. Another common method is to enroll workers in a time-based curriculum in which they are taught both the theory and the practice of the skills. Both methods are forms of on-the-job training. Manufacturing training is also offered outside companies, in trade schools or vocational training programs. Standardization or consistency across these methods of training is very rare. As a result, workers learn skills specific to their own company and acquire few transferable cognitive skills. After training, workers may or may not take a test to assess how much they have learned over the training period. Moreover, these methods of training do not evolve as quickly as technology in the manufacturing sector.

It is very important that workers in manufacturing environments are trained and retrained to meet evolving market needs. This training should reflect the demands placed on their skills, since workers' skills are directly related to their performance. The purpose of this review paper is to focus on the need for worker training with advanced immersive technologies that provide better global training, which in turn improves workers' cognitive performance in the manufacturing industry. We performed a comprehensive study by reviewing articles published in academic databases, and we present the state of these technologies and their current applications to manufacturing training.

Need for Extended Reality (XR) in Manufacturing Training

Extended reality (XR) refers to all combined real and virtual environments in which human–machine interaction is generated by computer technology and hardware. XR technologies comprise virtual reality (VR), mixed reality (MR) and augmented reality (AR).

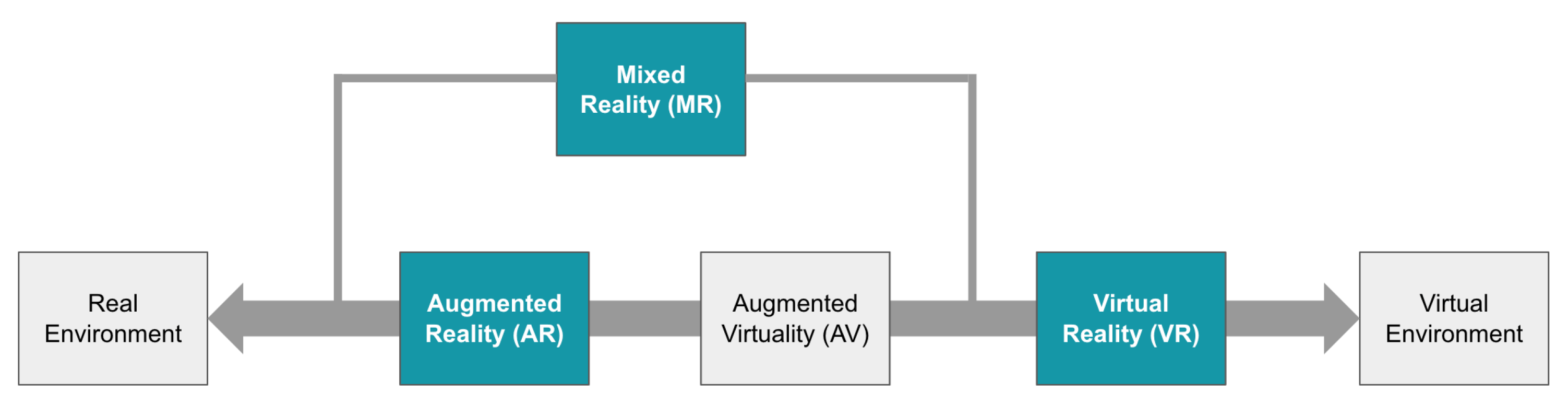

Figure 1 shows the reality–virtuality continuum adapted from Milgram et al. [4], which gives an overview of the XR ecosystem and how VR, AR and MR relate to the real and virtual environments.

Whether introducing XR as the sole or a complementary training method is viable depends on an array of factors: training needs, available resources, health and safety risks, privacy concerns, etc. Companies and corporate groups should therefore analyze these factors thoroughly to determine whether XR training can yield fruitful outcomes.

For companies to hire employees who meet the required skill standards, they need to find new ways to recruit and prepare candidates to join the workforce [5]. There is no universally applicable solution; each company needs to match its various needs to the resources available. Nevertheless, given the constant need to address the recurring complexities of modern manufacturing, building consistent worker training programs is indispensable in modern manufacturing organizations [6]. Contemporary technologies (XR, sensors, robots, etc.) have redefined the boundaries of safety, retention improvement, production optimization, and the speed and cost of training processes. Hence, employees can use XR as a powerful and compelling training tool to become familiar with demanding real-world working conditions.

As noted by Fast-Berglund et al. [7], the ability to blend and exploit the assets of the digital, cyber or virtual world and the physical world can produce significant time savings in various areas of manufacturing (design, logistics, maintenance, etc.). Contemporary technologies such as XR could therefore act as the missing link that bridges these worlds, while also introducing new features such as greater flexibility in time and space.

2. Research Process

2.1. Research Questions

Our research aims to review the state of the art in the use of XR technologies for training personnel in the manufacturing industry. This paper addresses the following questions. Q1: Which tasks are currently supported by XR technologies for training in each manufacturing phase? Q2: What are the applications of XR technology in other training domains? Q3: Which areas of XR technology and which manufacturing phases need more attention from researchers, and why?

2.2. Research Methodology

We conducted a comprehensive review of all published, peer-reviewed articles on the use of XR technologies for training personnel in the manufacturing industry. Our search queries consisted of two main parts: a primary phrase describing one of the forms of XR technology, combined with a secondary phrase describing a type of training in manufacturing. For the secondary phrases, we used terms such as worker training, manufacturing training, training and industrial training.

These terms were searched in databases such as ScienceDirect, IEEE Xplore, Springer, the ACM Digital Library and Google Scholar, covering the period from 2001 to 2020. All search results were examined against our predefined inclusion criteria. The initial search identified 127 articles, which were then examined closely to determine their fit for our research; 52 of these articles are reviewed in detail in this manuscript.
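The two-part query design can be illustrated with a short Python sketch that pairs every primary XR phrase with every secondary training phrase. The exact search strings used in this review are not reported here, so the primary phrase list, the quoted `AND` syntax and the helper name `build_queries` are illustrative assumptions. Each database also has its own query syntax.

```python
from itertools import product

# Illustrative primary phrases: forms of XR (assumed wording, not the reported list).
PRIMARY = ["virtual reality", "augmented reality", "mixed reality", "extended reality"]
# Secondary phrases: training types named in Section 2.2.
SECONDARY = ["worker training", "manufacturing training", "training", "industrial training"]


def build_queries(primary, secondary, start_year=2001, end_year=2020):
    """Pair every primary phrase with every secondary phrase as a quoted AND query."""
    return [
        {"query": f'"{p}" AND "{s}"', "years": (start_year, end_year)}
        for p, s in product(primary, secondary)
    ]


if __name__ == "__main__":
    queries = build_queries(PRIMARY, SECONDARY)
    print(len(queries))  # 4 primary x 4 secondary = 16 combinations
    for q in queries[:2]:
        print(q["query"], q["years"])
```

Generating the full cross product keeps the search systematic. Every XR form is paired with every training term, so no combination is silently skipped.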

2.3. Inclusion Criteria

Research articles met our inclusion criteria if they, at a minimum, presented and evaluated a training interface for any type of training in a manufacturing environment. We considered articles valid for our research if they were published as part of a thesis or dissertation, in a peer-reviewed journal, in conference proceedings or in a peer-reviewed open access database. We excluded articles that evaluated outcomes other than post-training performance, such as engagement or enjoyment, because we specifically wanted to evaluate the contribution of XR technologies to imparting and evaluating training. If two articles explored the same training interface with different numbers of users, we chose the article that studied the larger group. If an article focused on training using XR in a domain other than manufacturing, we did not reject it outright; instead, we categorized such articles by domain, ranked them by number of citations, and included only the top five articles from each domain. We categorized all articles by the type of XR used for training (AR, VR or MR).
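The inclusion rules amount to a sequence of filters. The Python sketch below expresses them under stated assumptions. The `Article` record and its fields, the venue labels, and the reading of the exclusion rule as "the article must report post-training performance" are all hypothetical. In the review itself, articles were examined manually rather than by code.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical labels for the accepted publication types.
ACCEPTED_VENUES = {"thesis", "journal", "conference", "open_access"}


@dataclass
class Article:
    title: str
    venue: str                  # publication type label
    domain: str                 # "manufacturing" or another training domain
    xr_type: str                # "AR", "VR" or "MR"
    interface: str              # identifier of the training interface evaluated
    measures_performance: bool  # reports performance after training
    participants: int
    citations: int


def screen(articles, top_k=5):
    """Apply the inclusion rules and group the surviving articles by XR type."""
    # Rules 1-2: evaluated post-training performance and an accepted venue.
    eligible = [a for a in articles
                if a.measures_performance and a.venue in ACCEPTED_VENUES]

    # Rule 3: for each training interface keep only the largest study.
    by_interface = {}
    for a in eligible:
        best = by_interface.get(a.interface)
        if best is None or a.participants > best.participants:
            by_interface[a.interface] = a

    # Rule 4: keep all manufacturing articles; other domains keep top_k by citations.
    kept, others = [], defaultdict(list)
    for a in by_interface.values():
        if a.domain == "manufacturing":
            kept.append(a)
        else:
            others[a.domain].append(a)
    for group in others.values():
        kept.extend(sorted(group, key=lambda a: a.citations, reverse=True)[:top_k])

    # Finally, categorize by the type of XR used for training.
    by_xr = defaultdict(list)
    for a in kept:
        by_xr[a.xr_type].append(a)
    return dict(by_xr)
```

The deduplication step runs before the per-domain ranking. As a result, a heavily cited but smaller study of an already-covered interface cannot take one of the top five slots.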

3. XR in Manufacturing Training

3.1. Overview and Capabilities

As mentioned above, XR, also known as extended reality or X reality, is an umbrella term in which X represents any current or future spatial computing technology, such as augmented reality (AR), mixed reality (MR), virtual reality (VR) and the areas interpolated among them. According to Pavlik et al. [8], XR is a form of "mixed reality environment that comes from the fusion [of] ubiquitous sensor/actuator networks and shared online virtual worlds". XR refers to all combined real-and-virtual environments and human–computer interactions generated by computer technology and wearable sensors. XR is a superset that spans the entire reality–virtuality continuum introduced by Milgram et al., as shown in Figure 1. As described by Milgram et al. [9], this continuum is a continuous scale from "the complete real" to "the complete virtual", covering all possible variations and compositions of real and virtual objects.

Table 1 gives an overview of different capabilities of each XR technology.

MR—Mixed reality spans the range between the two extremes, where the real and virtual worlds are mixed. MR combines real-world and digital elements, and the user usually wears a head-mounted display (HMD). In mixed reality, the user can interact with and manipulate both physical and virtual items and environments using next-generation sensing and imaging technologies. Mixed reality lets users immerse themselves in a combination of real and virtual worlds and interact using their own hands, without ever removing the headset. It gives the user one foot (or hand) in the real world and the other in an imaginary place, breaking down the basic distinction between the real and the imaginary.

AR—In augmented reality, virtual objects and environments are mixed with the real world. The user views the real environment along with computer-generated perceptual information. AR overlays digital information on real-world elements and enhances the real-world experience with additional digital details. In doing so, it adds a new layer of perception and supplements the user's reality while keeping the real world central.

VR—Virtual reality is a computer-simulated experience that completely replaces the user's perception of the real world with a similar or entirely different virtual world. VR is the most widely known of these technologies. It tricks the user's senses into perceiving a different environment, an effect called the sense of presence. Using a head-mounted display (HMD) or headset, the user experiences a computer-generated world of imagery and sounds. Within it, the user can manipulate objects and move around using haptic controllers, often while tethered to a console or PC.

3.1.1. Virtual Reality—VR

The use of virtual reality for manufacturing training is an emerging and active research area. Various works currently focus on implementing VR in the manufacturing and industrial sectors for learning and training purposes. Ref. [11] applied VR to the training of maintenance operations. The authors created a simulated environment in which maintenance training is performed with virtual hands, controlled through devices such as a mouse, a keyboard and a Leap Motion controller. Ref. [12] used VR to create a virtual environment containing a set of components and a layout plan with instructions for assembling a product in simulation. The authors tested the environment with both beginners and industrial professionals to check whether it was effective as a learning tool.

Winther et al. [13] developed a virtual simulation focused on pump maintenance, intended for training beginners. To study its effectiveness, the simulation was tested alongside pairwise training and video training. Murcia et al. [14] conducted a series of lab experiments to test the effectiveness of VR systems in various environments. In one task, participants entered a room through different entry points to collect an object, and then performed the same task in a virtual recreation of that room. In another, they solved puzzles in both the real and the virtual environment.

Velaz et al. [15] focused on the effectiveness of VR as a learning tool, using an assembly task performed in a simulated environment. The environment was tested with various modes of interaction, such as a mouse, a haptic device and motion capture. Pan et al. [16] focused on a different dimension of VR: the effects of various types of avatars on participants' cognitive performance. They ran experiments with various avatar scenarios and compared the results with performance of the task in the real world.

Salah et al. [17] emphasized that training innovation has not kept pace with manufacturing innovation and that new rational and logical training methods, such as VR, are crucial. They developed a VR teaching method based on VLS to train students on a reconfigurable manufacturing system (RMS) through a case study. The majority of students were satisfied with its effectiveness. User understanding, satisfaction, number of errors and completion time were all better with VR-based training than with conventional training methods.

3.1.2. Human–Robot Collaboration Training in VR

Smith et al. [18] developed a VR training platform that allowed an instructor to record their 3D movements and voice in a 3D environment. A trainee could then take on the role of another 3D character and follow along while watching the trainer's recording in VR. This approach can reduce training costs while still providing valuable experience to the trainee. It led to a longer instruction time but a statistically similar assembly time. Most participants preferred the "hands-on" style of learning that VR offers.

Perez et al. [19] proposed using VR environments as a robot controller. This allows the operator to be trained while the robot is tested simultaneously in a safe environment. The authors describe an architecture for recreating a real robot in VR and specify how the virtual controller should function.

Matsas et al. [20] used a Microsoft Kinect sensor for skeletal tracking of a user wearing an HMD to create a virtual environment for human–robot collaboration (HRC) training. Working in close proximity to robots raises many safety concerns, whereas a virtual environment provides a safe learning experience. Multiple tasks were created for the user to complete in a virtual factory environment. The researchers tested these tasks successfully but pointed out limitations of the immersive device.

Gammieri et al. [21] coupled a redundant manipulator with a VR environment to test and investigate HRC. This allows a real robot to serve as an input to a VR scene while also making it possible to control the robot from within the scene. The coupling helps to enhance and optimize human–robot cooperation.

3.1.3. Augmented Reality—AR

Augmented reality (AR) enhances interaction with the real-world environment using computer-generated perceptual information across multiple sensory modalities, such as visual, auditory and haptic. Several AR technologies have been developed to assist workers with manufacturing and assembly tasks. Werrlich et al. [22] evaluated the efficacy of AR head-mounted displays (HMDs) for training transfer in assembly tasks. The authors conducted a user study with two groups of 30 participants. The first group completed a tutorial with multiple levels, while the second group received an additional quiz level. The authors argue that combining the real and virtual assembly phases strengthened training transfer, which explains why the second group made 79% fewer mistakes.

Another study, by Hoover et al. [23], used the Microsoft HoloLens head-mounted display (HMD) to train workers on a mock wing assembly task. The authors recorded measures such as completion time, error count, net promoter score and quantitative feedback. They compared these with previously published data for desktop model-based instructions (MBI), tablet MBI and tablet AR. The study concluded that the HoloLens AR condition resulted in better overall human performance on the assembly task than both non-AR conditions.

Apart from assembly tasks, AR can also provide effective assistance in maintenance work. Aromaa et al. [24] considered human factors and ergonomics (HFE) and safety issues when working in harsh maintenance environments. The authors focus on the postures users adopt when using a tablet-based AR system during a maintenance task. Participants adopted various postures depending on their view of the augmented information and the target object. Their work experience and profession also seemed to influence their posture. The findings of this study could be used to improve HFE in industrial maintenance settings.

Heinz et al. [25] developed an AR system to give users a better understanding of complex automated systems, motivated by the increasing complexity of these systems and the continuing decline in expert availability. The resulting AR tablet application gives the user information about internal processes, sensor states, settings and hidden parts of the system without the need for an expert. The application was prototyped on an industrial folding machine, but more work is needed to assess the feasibility of such systems.

Sirakaya et al. [26] aimed to increase student efficacy and motivation in vocational education and training through the use of AR. They developed HardwareAR, an AR system that provides information about hardware components, ports and the assembly of components. Students assembled a motherboard using the system. The differences were statistically significant: students who used the AR application were more successful than those who used the text manual.

Murauer et al. [27] used AR to create language-independent order-picking instructions, since instructions are often not written in workers' native language. Textual feedback was collected from users who followed either the AR instructions or instructions in a foreign language. Workers using the language-independent visual AR instructions completed the task faster than those using the foreign-language instructions. Discomfort caused by the HoloLens was discussed as a limitation of the system.

3.1.4. Mixed Reality—MR

In mixed reality (MR), physical and digital objects co-exist and interact in real time, encompassing both augmented reality and augmented virtuality via immersive technology. MR is an emerging technology that allows more user interaction with the real world than similar technologies do, and it has shown considerable promise in manufacturing training. Gonzalez-Franco et al. [28] implemented an MR setup with see-through cameras attached to a head-mounted display (HMD). They tested the system on a manufacturing procedure for an aircraft maintenance door. The authors conducted a knowledge retention test after training to evaluate its effectiveness. The knowledge levels acquired in the MR setup and in conventional face-to-face training were not significantly different. Moreover, a trend toward equivalence between the retention results of the two conditions was found with 93% confidence. This suggests that MR scenarios can potentially provide a successful metaphor for collaborative training.

Similarly, Reyes et al. [29] developed a mixed reality guidance system (MRGS) for motherboard assembly. Its purpose was to analyze the usability and viability of MR and tangible augmented reality (TAR) for simulating hands-on manual assembly tasks. The authors evaluated the system with 25 participants (10 experienced and 15 naive) in two usability studies. In the first, participants rated the proposed interaction technique. In the second, they partially assembled the motherboard using MRGS. Participants using MRGS performed better at orienting and positioning the parts of the motherboard than those who had access only to the physical setting. Naive and experienced users rated the system similarly, with moderate reliability, although experienced users found the system easier to use than naive users. The study suggests that the system's usability may favor users with a background in augmented reality.

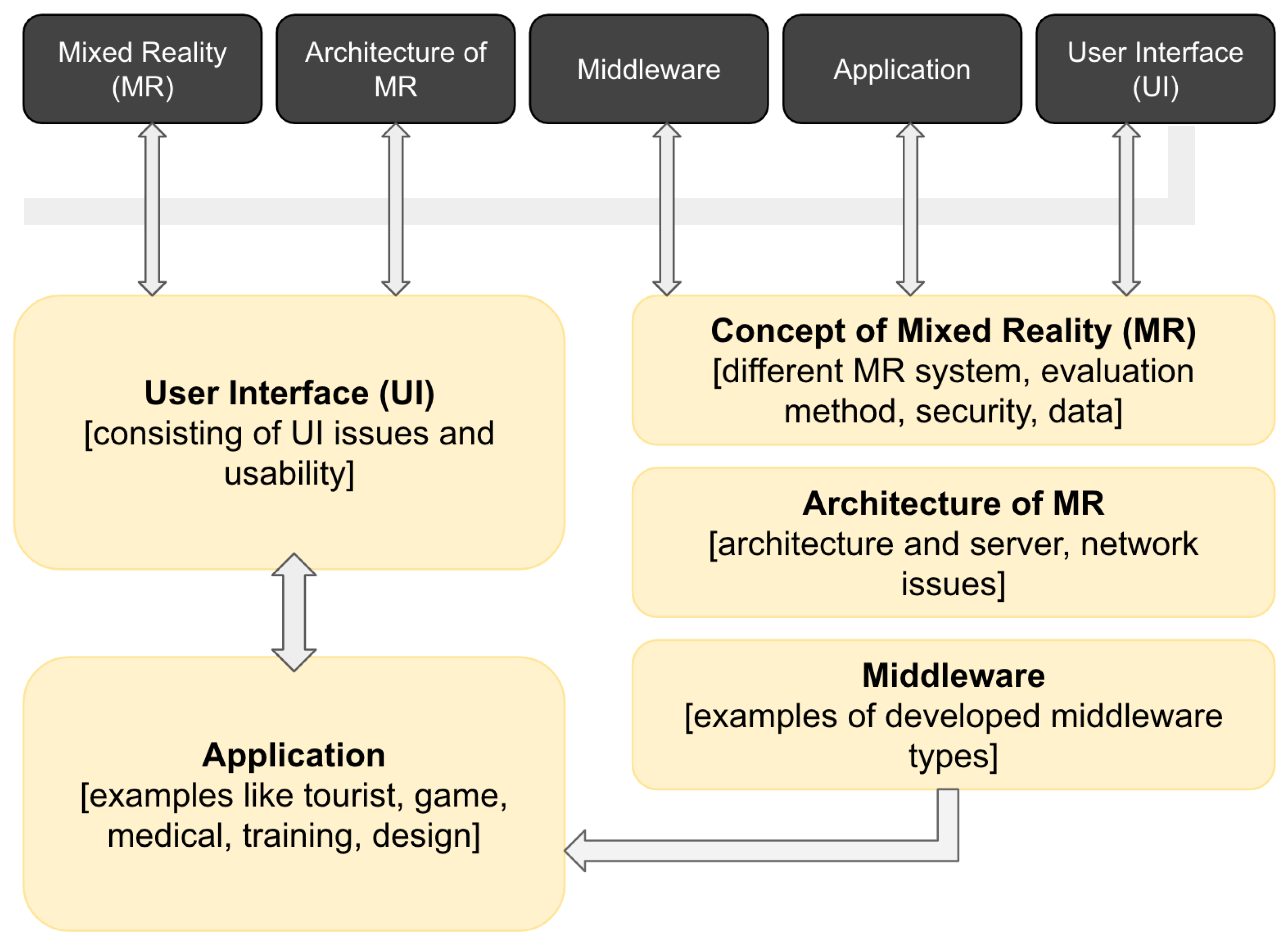

Developing an MR application is complicated, and a key issue in designing one is the increasing level of user interaction. Rokhsaritalemi et al. [30] review this area and propose a comprehensive framework of the components necessary for MR applications, as shown in Figure 2. This review can help researchers develop better MR systems for manufacturing training that aid industry workers in severe environments.

3.2. XR Supporting Cognitive Performance

Classic cognitive training tasks train specific aspects of cognition (e.g., processing speed or memory) using guided practice on standardized tasks. Neuropsychological software programs (e.g., NeuroPsychological Training, Colorado Neuropsychological Test) are designed to enhance multiple cognitive domains using a variety of tasks. They can provide instant performance feedback and are mostly self-guided, allowing participants to progress through tasks at their own pace. Kueider et al. [31] note that video games include electronic or computerized games in which the player manipulates images on a screen to achieve a goal.

Domain-specific cognitive tasks are measured in terms of, and aim to improve, speed of processing, memory, attention, perception, task completion time, reaction time, executive function and the management of cognitive load in general, which also increases engagement and enjoyment during the task.

The cognitive performance and wellness of workers are important. In the Industry 4.0 era, automation has enlarged individuals' work routines, and higher cognitive levels are in demand because tasks must be flexible and adaptable. A study of job characteristics and computer anxiety in the production industry [2] discusses this higher level of cognition. It is needed under high workload, increased work pressure and diminished job control, and for training and the use of new technologies.

Imran et al. [32] designed a VR application to measure cognitive performance in the presence of various background noises. Specifically, VR allowed the real-time auralization of speech in an office environment. The study examined whether reduced speech intelligibility, caused by frequency-specific insulation between office spaces, affected cognitive performance. The authors concluded that the VR setting had no significant effect on cognitive task results compared with an analogous study in a real-world environment. The study showed promising results for achieving generalized predictions of human cognitive performance.

Kotranza et al. [33] designed a mixed environment (ME) simulation that trains and evaluates both psychomotor and cognitive skills. Multiple sensors in the physical environment provide the user with real-time virtual visualization of, and feedback on, their performance in a physical task. The approach was applied in an ME for learning clinical breast exams, and various feedback methods were detailed.

Papakostas et al. [34] propose a vocational tool for cognitive assessment and training (v-CAT), whose goal is to provide personalized, autonomous training options for each user. The user completes various tasks while immersed in a simulated virtual workplace or factory environment. Meanwhile, the tool gathers data from multiple sensors to assess the user's physio-cognitive state. The tasks in the tool rely on VR, computer vision and sensors. The results can then be combined with machine learning, deep learning and reinforcement learning to prioritize the areas of training that are most needed.

Augstein et al. [35] designed a tabletop-based system to enhance cognitive performance in complex tasks. The system was designed to aid neuro-rehabilitation by taking over many time-consuming tasks normally left to professionals. It supports several interaction modalities, including touch, tangibles and a pen, to accommodate patients' varying ranges of motion. Training sessions are recorded and analyzed later. The researchers found high acceptance of the system among the three participants chosen.

Sharma et al. [36] use physiological features, such as heart rate, along with facial features to estimate a person's cognitive performance. The researchers examined the feasibility of this approach across distinct tasks that often require different cognitive processes. Features were gathered from a game of Pac-Man, an adaptive assessment task, a code-debugging task and a gaze-based game.

Costa et al. [37] present a smartwatch application that aims to regulate emotions and reduce anxiety in order to improve cognitive function. The application gives the user haptic feedback that simulates a heartbeat. The researchers gave math exams to 72 participants. Half of the participants wore watches that simulated a slow heartbeat and the other half wore watches that simulated a fast heartbeat. Simulating a slow heart rate led to positive cognitive, physiological and behavioral changes.

3.3. Benefits of Using XR in Manufacturing Training

There has been extensive research on virtual reality (VR), augmented reality (AR) and mixed reality (MR). Whatever their implementation and experimental approach, most of these works aim to determine whether the extended reality (XR) systems are effective in the activities for which they are used. For XR-based training in particular, many studies have compared the effectiveness of these training methods with that of traditional training methods.

Several works have identified benefits of using XR in manufacturing training. Kaplan et al. [38] stated that XR-based training and traditional training produce similar results for various tasks. They added that a key benefit of XR-based training is its use in environments that are very difficult or dangerous for traditional training. Supporting this point, Farra et al. [39] developed a VR simulation for disaster training. Participants showed good progress both in an immediate post-training assessment and in an assessment two months later, indicating that learning was retained even in disaster training.

Werrlich et al. [40] showed that AR systems outperformed traditional methods, such as video training and printed text instructions, in both immediate and long-term recall after training. Mental workload has also been reported to be lower with AR-based training than with traditional training. Another benefit of XR-based training is that the system can be extended with various interactive devices, such as a mouse, motion capture and haptic devices. Velaz et al. [15] reported that haptic devices, motion capture and a mouse all showed positive performance in transferring knowledge from the virtual to the real task.

3.4. Other Technologies along with XR in Manufacturing Training

3.4.1. Robotics

Incorporating robotic functionalities into XR simulations has attracted considerable research interest. Makhataeva et al. [41] describe various AR applications that aim to improve real-world perception by combining the assets of a virtual environment with images from cameras mounted on robots. Robotic fields such as industrial robotics, medical robotics, human–robot interaction, multi-agent systems, and control and trajectory planning exhibit a large amount of AR research. As employees faced the need to perform tasks requiring strenuous effort, it became apparent that methods and techniques for successful training were needed. Hernoux et al. [42] propose a framework that detects collisions using bounding boxes and the Kinect device. Their system consists of a real-world setup and a virtual-world setup. The real-world setup includes a robot with a Kinect 2 mounted on it, and the virtual-world setup includes virtual equivalents of the robot and the Kinect. The robot learns the trajectories demonstrated by a user who was previously exposed to a training task in VR. Hence, in situ tasks are taught while ensuring that there are no collisions between the operator and the robot.

There has also been considerable debate on whether virtual training can outperform physical training. In [43], Murcia-Lopez and Steed examined a bimanual assembly task from the perspective of training effectiveness: participants were asked to complete a 3D puzzle comprising both virtual and physical elements, and those who were trained virtually exhibited a higher response. Such findings are encouraging, especially where users have to interact with robotic manipulators in assembly tasks.

Zhang et al. [44] developed a VR-based simulator for training users to steer a robot using eye tracking. In their experiment, a user wearing a head-mounted display (HMD) navigates a wheeled virtual robot by gazing in the desired direction of motion. However, their experiments with 32 participants did not show a convincing difference between training in VR and training with the real robot.

Another example of XR training working in conjunction with various sensors to facilitate and accelerate training can be found in [45], where Stănică et al. design a framework for neurorehabilitation. The system consists of two subsystems: (1) one that covers the patients' rehabilitation process (including virtual reality exercises for motor rehabilitation and speech and communication therapy, based on gamification principles), and (2) one for training medical staff, to increase their awareness of the appropriate techniques for each patient's neurorehabilitation. Neurorehabilitation is closely linked to robotics, since the number of robotic devices used for such tasks has increased significantly in recent years.

3.4.2. Wearable and Non-Wearable Sensors

Multiple studies have used sensors to improve immersion in VR environments. For example, Caserman et al. [46] tried to improve step detection using the sensors integrated in a VR headset. They used an Oculus Rift Development Kit 2 with an integrated inertial measurement unit (IMU), from which they extracted the acceleration signal along the three axes (X, Y and Z). From this signal, they derived velocity and position data and developed algorithms to detect steps from these data. The position data gave the best step-detection accuracy (greater than 90%).
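Position-based step detection can be sketched as follows, assuming the vertical head position is already available (for example by integrating the vertical acceleration twice, which in practice drifts and needs filtering that is omitted here). The local-minimum rule, the thresholds and the function names are hypothetical illustrations, not the algorithm of Caserman et al.

```python
from __future__ import annotations

import math


def integrate(signal: list[float], dt: float) -> list[float]:
    """Cumulative trapezoidal integration of a uniformly sampled signal, starting at 0."""
    out = [0.0]
    for prev, cur in zip(signal, signal[1:]):
        out.append(out[-1] + 0.5 * (prev + cur) * dt)
    return out


def detect_steps(height: list[float], dt: float,
                 min_drop: float = 0.01, min_interval: float = 0.3) -> list[int]:
    """Return sample indices at which a step is detected in a head-height signal.

    A step is a local minimum lying at least `min_drop` metres below the signal
    mean and at least `min_interval` seconds after the previously detected step.
    Both thresholds are hypothetical defaults chosen for illustration.
    """
    mean = sum(height) / len(height)
    steps: list[int] = []
    for i in range(1, len(height) - 1):
        is_minimum = height[i] < height[i - 1] and height[i] <= height[i + 1]
        deep_enough = mean - height[i] >= min_drop
        spaced = not steps or (i - steps[-1]) * dt >= min_interval
        if is_minimum and deep_enough and spaced:
            steps.append(i)
    return steps


if __name__ == "__main__":
    # Synthetic walk: head height bobs 2 cm around 1.7 m at two steps per second,
    # sampled at 100 Hz for 3 s.
    dt = 0.01
    height = [1.7 + 0.02 * math.cos(2 * math.pi * 2.0 * i * dt) for i in range(300)]
    print(detect_steps(height, dt))  # [25, 75, 125, 175, 225, 275]
```

The minimum-spacing rule is a simple way to suppress double counts from sensor noise; a deployed detector would usually low-pass filter the signal first.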

Hunt et al. [47] created a framework designed for use during rehabilitation. They modeled the trajectory of the hand in augmented and virtual reality using kinematic information collected by three IMUs attached to the upper arm, forearm and torso. Features from the IMU signals were used to identify the trajectory, which was then used to define dynamic movement primitives. These were in turn used to define the trajectory in an augmented reality environment in which users performed a series of pick-and-place tasks.
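Dynamic movement primitives (DMPs) encode a demonstrated trajectory as a critically damped spring pulled towards a goal, plus a learned forcing term that fades out with a phase variable, so the same motion can be replayed towards a new target. The sketch below follows the common discrete formulation of Ijspeert and Schaal in one dimension; it is an assumption for illustration, not the model of Hunt et al., and the gains, the number of basis functions and the minimum-jerk demonstration are hypothetical choices.

```python
from __future__ import annotations

import math


def _derivative(values: list[float], dt: float) -> list[float]:
    """Finite-difference derivative: central differences inside, one-sided at the ends."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        out.append((values[hi] - values[lo]) / ((hi - lo) * dt))
    return out


class DMP1D:
    """Minimal one-dimensional discrete dynamic movement primitive (illustrative sketch).

    Transformation system: tau*dz = alpha_z*(beta_z*(g - y) - z) + f(x), tau*dy = z
    Canonical system:      tau*dx = -alpha_x*x
    """

    def __init__(self, n_basis: int = 20, alpha_z: float = 25.0, alpha_x: float = 4.0):
        # beta_z = alpha_z / 4 makes the spring-damper critically damped.
        self.alpha_z, self.beta_z, self.alpha_x = alpha_z, alpha_z / 4.0, alpha_x
        # Gaussian basis functions spread evenly in time over the decaying phase x.
        self.centres = [math.exp(-alpha_x * i / (n_basis - 1)) for i in range(n_basis)]
        self.widths = [n_basis ** 1.5 / c / alpha_x for c in self.centres]
        self.weights = [0.0] * n_basis
        self.y0, self.goal, self.tau = 0.0, 1.0, 1.0

    def _forcing(self, x: float, goal: float) -> float:
        psi = [math.exp(-h * (x - c) ** 2) for h, c in zip(self.widths, self.centres)]
        mix = sum(p * w for p, w in zip(psi, self.weights)) / (sum(psi) + 1e-10)
        return mix * x * (goal - self.y0)

    def fit(self, demo: list[float], dt: float) -> None:
        """Learn one weight per basis function from a single demonstration."""
        self.y0, self.goal, self.tau = demo[0], demo[-1], (len(demo) - 1) * dt
        vel = _derivative(demo, dt)
        acc = _derivative(vel, dt)
        xs = [math.exp(-self.alpha_x * i * dt / self.tau) for i in range(len(demo))]
        # Forcing term that would make the spring-damper reproduce the demonstration.
        f_target = [
            self.tau ** 2 * a - self.alpha_z * (self.beta_z * (self.goal - y) - self.tau * v)
            for y, v, a in zip(demo, vel, acc)
        ]
        scale = self.goal - self.y0
        for k, (h, c) in enumerate(zip(self.widths, self.centres)):
            # Locally weighted regression for basis k.
            psi = [math.exp(-h * (x - c) ** 2) for x in xs]
            num = sum(p * x * scale * f for p, x, f in zip(psi, xs, f_target))
            den = sum(p * (x * scale) ** 2 for p, x in zip(psi, xs))
            self.weights[k] = num / (den + 1e-10)

    def rollout(self, goal: float | None = None, dt: float = 0.01,
                duration: float | None = None) -> list[float]:
        """Euler-integrate the DMP from y0, optionally towards a new goal."""
        goal = self.goal if goal is None else goal
        duration = 1.5 * self.tau if duration is None else duration  # extra time to settle
        y, z, x = self.y0, 0.0, 1.0
        path = [y]
        for _ in range(int(round(duration / dt))):
            f = self._forcing(x, goal)
            dz = (self.alpha_z * (self.beta_z * (goal - y) - z) + f) / self.tau
            dy = z / self.tau
            dx = -self.alpha_x * x / self.tau
            y, z, x = y + dy * dt, z + dz * dt, x + dx * dt
            path.append(y)
        return path


if __name__ == "__main__":
    # Hypothetical demonstration: a minimum-jerk reach from 0 to 1 over 1 s.
    dt = 0.01
    demo = [10 * s**3 - 15 * s**4 + 6 * s**5 for s in (i / 100 for i in range(101))]
    dmp = DMP1D()
    dmp.fit(demo, dt)
    print(round(dmp.rollout()[-1], 3), round(dmp.rollout(goal=2.0)[-1], 3))
```

Because the forcing term is scaled by the distance between start and goal, `rollout(goal=2.0)` replays the same movement shape stretched towards the new target; this kind of re-targeting is what makes DMPs attractive for replaying a recorded reach to a new object position.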

Betella et al. [48] conducted a study combining healthcare and VR, aiming to create a method that allows behavioral studies in a virtual environment. They used a system they had created, the experience induction machine (XIM), an immersive space for conducting human behavior experiments. Electrocardiogram (ECG) and electrodermal response (EDR) signals recorded from a sensor shirt and glove were used to sense the user's arousal state, which represents the intensity of the user's emotional response to a perceived situation or piece of information. Features extracted from the signals were fed into multiple machine learning models, among which a linear discriminant classifier achieved the highest accuracy.
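To show what a linear discriminant classifier does with such physiological features, here is a minimal two-class Fisher linear discriminant in plain Python. It is a sketch under stated assumptions: two features only, equal class priors and a shared covariance; the feature choice (mean heart rate and skin conductance level) and all data values are hypothetical and synthetic, not taken from [48].

```python
from __future__ import annotations


def _mean(rows: list[list[float]]) -> list[float]:
    return [sum(r[j] for r in rows) / len(rows) for j in range(2)]


def fit_lda(class0: list[list[float]], class1: list[list[float]]) -> tuple[list[float], float]:
    """Fit a two-class Fisher linear discriminant on 2-D feature vectors.

    Returns (w, threshold); a sample x is assigned to class 1 when w . x > threshold.
    Assumes equal class priors and a covariance matrix shared by both classes.
    """
    m0, m1 = _mean(class0), _mean(class1)
    # Pooled within-class scatter: S = sum of (x - m)(x - m)^T over both classes.
    s = [[0.0, 0.0], [0.0, 0.0]]
    for rows, m in ((class0, m0), (class1, m1)):
        for r in rows:
            d = (r[0] - m[0], r[1] - m[1])
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    if abs(det) < 1e-12:
        raise ValueError("within-class scatter matrix is singular")
    inv = [[s[1][1] / det, -s[0][1] / det], [-s[1][0] / det, s[0][0] / det]]
    diff = (m1[0] - m0[0], m1[1] - m0[1])
    # Fisher direction w = S^-1 (m1 - m0); threshold at the projected class midpoint.
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1], inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    mid = ((m0[0] + m1[0]) / 2, (m0[1] + m1[1]) / 2)
    return w, w[0] * mid[0] + w[1] * mid[1]


def predict(w: list[float], threshold: float, x: list[float]) -> int:
    """Return 1 (e.g. high arousal) if the projection exceeds the threshold, else 0."""
    return 1 if w[0] * x[0] + w[1] * x[1] > threshold else 0


if __name__ == "__main__":
    # Synthetic, hypothetical features: [mean heart rate (bpm), skin conductance level (uS)].
    low = [[70, 2.0], [72, 2.5], [68, 1.8], [71, 2.2]]
    high = [[85, 5.0], [88, 5.5], [83, 4.8], [86, 5.2]]
    w, t = fit_lda(low, high)
    print(predict(w, t, [69, 2.1]), predict(w, t, [87, 5.1]))  # 0 1
```

Because the within-class scatter accounts for correlation between features, the learned weights need not have the intuitive sign for every feature; only the combined projection separates the classes.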

Marín-Morales et al. [49] created a system to detect the affective state of users in an immersive environment using ECG and electroencephalography (EEG) sensors. They measured the affective state in terms of arousal and valence, where valence represents the quality of the emotion perceived by the user, i.e., positive or negative. Features were extracted from the processed signals, and a nonlinear support vector machine (SVM) classified arousal and valence as high (positive) or low (negative). The classification accuracy was 75% for arousal and 71.21% for valence.
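Training an SVM means solving a quadratic program, which is beyond a short example, but the way a trained nonlinear SVM labels a new feature vector is compact: it sums kernel similarities to its support vectors. The sketch below assumes a Gaussian (RBF) kernel, a common choice for nonlinear SVMs; the support vectors, coefficients, bias and `gamma` are hypothetical placeholders, not values from [49].

```python
from __future__ import annotations

import math


def rbf_kernel(a: list[float], b: list[float], gamma: float) -> float:
    """Gaussian (RBF) kernel K(a, b) = exp(-gamma * ||a - b||^2)."""
    return math.exp(-gamma * sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def svm_decision(x: list[float], support_vectors: list[list[float]],
                 dual_coefs: list[float], bias: float, gamma: float) -> float:
    """Decision value of a trained kernel SVM: sum_i (alpha_i * y_i) * K(s_i, x) + b.

    `dual_coefs` holds the products alpha_i * y_i, so their sign encodes the class.
    """
    return sum(c * rbf_kernel(s, x, gamma) for s, c in zip(support_vectors, dual_coefs)) + bias


def classify(x: list[float], support_vectors: list[list[float]],
             dual_coefs: list[float], bias: float, gamma: float) -> str:
    """Return 'high' for a non-negative decision value, otherwise 'low'."""
    value = svm_decision(x, support_vectors, dual_coefs, bias, gamma)
    return "high" if value >= 0 else "low"


if __name__ == "__main__":
    # Hypothetical trained model on two normalized features.
    support_vectors = [[0.0, 0.0], [1.0, 1.0]]
    dual_coefs = [-1.0, 1.0]  # negative -> "low" class, positive -> "high" class
    print(classify([0.1, 0.0], support_vectors, dual_coefs, bias=0.0, gamma=1.0))  # low
    print(classify([0.9, 1.0], support_vectors, dual_coefs, bias=0.0, gamma=1.0))  # high
```

The kernel is what makes the decision boundary nonlinear in the original feature space; with a linear kernel the same formula reduces to a linear classifier.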

Limbu et al. [50] defined a framework for work training in augmented reality. The framework captures the trainer's performance using wearable sensors, and these data are then used together with AR to train workers. The authors investigate multiple ways of teaching a trainee in an AR environment, which they call “Transfer Mechanisms”. The framework is based on Wearable Experience for Knowledge Intensive Training (WEKIT) and uses the Four Component Instructional Design (4C/ID) model, which defines a method of teaching in complex environments.

4. Other Vocational Training Domains Using XR to Improve Cognitive Performance

Much can be learned from how XR technologies are applied in other vocational training domains. The main objective of training is to equip workers with the cognitive and physical skills needed for the job; in this paper, we focus only on the cognitive aspects of training. In the manufacturing sector, the need to become more flexible, adaptable, productive, cost efficient, schedule efficient and quality driven has been noted in studies of the effect of cognitive demands and perceived quality of work life on human performance in manufacturing environments. Ultimately, every industry wants to remain profitable in this ever-changing environment, and to achieve this, companies try to maximize both human and system performance. Optimizing human cognitive performance should therefore contribute to optimized overall system performance (as discussed in work on work compatibility as an integrated diagnostic tool for evaluating musculoskeletal responses to work and stress outcomes). In this section, we discuss a few popular vocational training domains that use XR technologies for the training, education and assessment of their workforce.

4.1. Safety Training

Immersive XR applications have proven to be safe, engaging and effective for vocational training. They significantly reduce the risk of harm and provide a training platform that can be used repeatedly without concern for the cost, availability, risk and complexity of the equipment or system. Among the three XR technologies, VR has proven to be the best immersive medium for safety training systems. Lerner et al. [51] created the EPICSAVE (Enhanced Paramedic Vocational Training with Serious Games and Virtual Environments) project, a room-scale VR environment for emergency simulation training. They wanted to investigate how effectively training and media can influence learning and training in VR. They used a virtual simulation of a 5-year-old girl with grade III anaphylaxis, with shock and swelling of the upper and lower respiratory tract. Participants found the VR experience more engaging and rated it as an effective educational approach.

Pedram et al. [52] used VR for the safety training of mine rescuers. They used structural equation modelling to create a systematic framework that captures the key features of user learning experiences in immersive VR. The results of the 45 VR training sessions were multifold: learning was enhanced by trainee engagement with the scenario, the perceived fidelity of the simulation, a sense of co-presence with other trainees, usability and an overall positive attitude towards technology, indicating that VR provides a safe and effective training medium.

Along with safety training, immersive XR platforms are also used for equipment training in high-risk vocations. For example, Park et al. [53] developed an immersive vehicle simulator to support the vocational training of airport ramp agents. It trained agents in the job process and allowed them to master ground handling services before using the actual equipment at the airport. The training system consisted of three parts: a hardware component, simulator software and the vocational training content. The training proved to be safe and very engaging.

4.2. Education and Military Training

After entertainment, the military was one of the first industries to invest in the development of virtual reality applications for training in a risk-free environment. VR is used in almost every kind of military training, from firing exercises to full-scale combat mission simulations. Ahir et al. [54] rightly state that “Virtual reality is emerging freshly in the field of interdisciplinary research”. Although all kinds of training come under the umbrella of ‘education’, military training and education are particularly closely linked, as their methods and goals overlap. For example, Gonzalez et al. [28] laid out the steps for creating an immersive scenario to improve naval skills. They built a prototype naval training scenario around the necessary skills, such as critical thinking and problem solving, and the experiences needed for such a training environment. The scenario covered the main aspects of naval training, such as the maintenance and design of a ship and how real-world problems in ship management can be solved. The scope of the study was twofold: first, to engage educators and students and promote their use of VR, and second, to gather their feedback for building a VR training system later. A multi-user collaborative response training system was proposed.

Gac et al. [55] designed a tool to assist virtual reality learners in a vocational training context. Their work was part of a global project aiming to create, design and assess new VR tools and methodologies for educational training, in which teachers can provide training on good conditions regarding safety, logistics and financial resources. The authors found that the usability of an immersive tool depends on the clarity of its user interface design. The minimalist design was welcomed by young students, who enjoyed the VR training.

Gupta et al. [56] created a VR environment for learning Swedish. They conducted two user studies one week apart and tested learning performance and the retention and recall of Swedish words, comparing VR and traditional environments. The results showed a higher retention rate for VR than for the traditional method, and participants rated the VR system as more enjoyable and effective for learning.

4.3. Rehabilitation and Medical Training

Bozgeyikli et al. [57] used immersive VR for the vocational rehabilitation of individuals with severe disabilities. They also used a physical and a virtual assistive robot for control skills training. A study with 15 participants showed promising results for VR as a rehabilitation training tool. Soomal et al. [58] developed three games for multiple sclerosis (MS) rehabilitation training: a piano game, a recycling game and a tidy-up game. The VR games were designed to evaluate user motivation, engagement level, usability and sense of presence in VR, and the results and user feedback were positive.

Augmented reality is gradually proving effective, and its capabilities will grow as the technology advances. Gorman et al. [59] summarized eighteen studies in which AR was used for upper or lower limb rehabilitation training following a stroke. They found that AR technology is in its nascent stages and needs further investigation.

Gerup et al. [60] summarize the current research on AR and MR and the applications developed for healthcare education beyond surgery, covering twenty-six application-based studies. The review concluded that further studies are still needed: although AR and MR learning approaches have applications in medical training, there is scope to address the limited validity of study conclusions, the heterogeneity of research designs and the challenges in transferring the findings of these studies.

The coronavirus disease 2019 (COVID-19) pandemic has disrupted in-hospital medical training. De et al. [61] discuss the experience of 122 medical students who attended online training sessions, including 21 online patient-based cases on a platform with simulated clinical scenarios, along with virtual patient-based training. Most students were satisfied with their interruption-free virtual medical training and rated its perceived quality positively. During the pandemic, many sectors besides medicine have used one or more immersive online training platforms.

Javaid et al. [62] provide a survey of VR in the medical field. Many medical professionals use XR technologies for training, diagnosis and virtual treatment in emergency situations. The authors also found VR to be very effective for surgical training. Especially in complicated medical scenarios, VR provides a safe, non-intrusive way to study a case and run training simulations.

5. Discussion

In the manufacturing industry, people need training for three types of tasks: assembly line tasks, job shop tasks and discrete tasks. These include monitoring assembly lines, sorting, picking, keeping, assembling, installation, inspection, packing, cleaning routines (process, shovel, sweep, clean work areas) and using hand tools, power tools and machinery. Based on our review of state-of-the-art XR technologies for manufacturing training and on the different qualities and capabilities of VR, AR and MR, these tasks can be grouped into manufacturing phases, and each XR technology can be mapped to the tasks where it can be beneficial, as shown in Table 2.

As evident in Table 2, VR is very useful in the introductory and learning phases. Its biggest advantage in these phases is that it adds a virtual layer to training, which protects worker wellness and keeps workers safe from workplace risks and from the consequences of errors made while learning. VR is also helpful for planning, visualizing and designing new training tasks.

As seen in the reality-virtuality continuum, MR is the most flexible technology and can be used in almost every phase. In the manufacturing scenario, MR can support training in every phase because it is not completely immersive like VR: it lets users view their real-world surroundings along with digital information. This is helpful in the operational phase, which includes tasks performed daily; the tangent phase, which includes rare tasks that are needed only occasionally; and the end phase, which includes daily job shop tasks such as cleaning and inspection.

Clearly, AR technology needs further development before it becomes useful in all manufacturing phases. Although it can help in a few phases, it is presently not as effective as VR and MR. However, in situ projections can be considered an AR technology, and these have proven helpful in the learning, tangent and end phases.

5.1. Measuring Success

Current research measures the success of manufacturing training by performance, task completion time, precision, accuracy, awareness, reaction time and error rate, together with a few qualitative metrics such as engagement, enjoyability and user feedback. Although these are appropriate metrics, they depend heavily on the capabilities and performance of the XR training platform. As XR technologies are still evolving, there is much scope for improvement, so metrics such as reaction time and awareness may reveal different details about training capabilities and worker performance as XR training platforms gain new hardware and software features. The next section discusses hardware and software capabilities and how they affect the overall consumer adoption of XR technologies for manufacturing training.
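Most of the quantitative metrics listed above reduce to simple aggregates over logged trials. The sketch below assumes a hypothetical per-trial log (`Trial`) with completion time, attempted steps, errors and cue reaction times; real studies define these quantities in task-specific ways, so the field names and formulas are illustrative only.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    """One trainee attempt at a task (hypothetical log format)."""
    completion_time_s: float        # time taken to finish the task
    steps: int                      # number of task steps attempted
    errors: int                     # steps performed incorrectly
    reaction_times_s: list[float]   # latency to respond to each cue


def summarize(trials: list[Trial]) -> dict[str, float]:
    """Aggregate per-trial logs into common training success metrics."""
    total_steps = sum(t.steps for t in trials)
    if total_steps == 0:
        raise ValueError("no task steps were logged")
    total_errors = sum(t.errors for t in trials)
    error_rate = total_errors / total_steps
    return {
        "mean_completion_time_s": mean(t.completion_time_s for t in trials),
        "error_rate": error_rate,
        "accuracy": 1 - error_rate,
        "mean_reaction_time_s": mean(rt for t in trials for rt in t.reaction_times_s),
    }


if __name__ == "__main__":
    trials = [Trial(120.0, 10, 1, [0.5, 0.7]), Trial(100.0, 10, 3, [0.6])]
    print(summarize(trials))
```

Computing the same summary for an XR-trained group and a traditionally trained group gives one straightforward basis for the comparisons such studies report.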

5.2. Hardware and Software

XR technologies are here to stay. VR has been around since the 1950s, but we have recently seen a rise in VR applications due to advances in its hardware. Similarly, AR and MR hardware development has picked up speed. Although hardware is developing quickly, it is not developing fast enough to solve all user experience problems. The power of XR lies in creating a sense of presence that can be designed to suit any training requirement. VR, AR and MR training platforms are only as good as their hardware, and although very good XR headsets are on the market, there is still room for improvement on many fronts, such as haptic input/output, latency and headset bulk.

XR training technologies are not only hardware devices; the capabilities and quality of XR training platforms also depend on software. Good-quality software is needed both to run on the hardware and to create XR content. Major VR manufacturers and platforms (Oculus Rift, Google Cardboard, HTC, Sony, Samsung) often create their own XR content authoring tools, but third-party companies offering VR development software have recently emerged. The gap between the growth rates of software and hardware also creates challenges for creators, who must constantly learn these new technologies and build applications with new features and capabilities. XR application creators need professional skills in content generation, full immersion, interactivity, programming and implementation.

Interaction plays a huge role in the success of any training platform, and the demand for better interaction hardware and software is higher than ever. Better haptic gloves and wearable sensors would narrow the sensory gap between the real and virtual worlds, making the experience as realistic as possible. Until these domains mature, research in the field will remain too disparate to determine which factors contribute to better training transfer from VR/AR.

5.3. Consumer Adoption

The shift to portable head-worn XR devices faces major obstacles in terms of consumer adoption. Unlike other emerging technologies, it requires major changes in consumer behaviour, since head-mounted displays (HMDs) are a groundbreaking idea. Past new technologies such as television, computers and mobile phones were adopted by consumers much faster than XR technologies. Among XR technologies, those that depend most heavily on HMDs will see the slowest consumer adoption because of the slow pace of hardware development. For these reasons, commercial manufacturing sectors have been slow to adopt XR technologies for mass workplace training, and these technologies remain largely confined to research. In contrast, consumer adoption of mobile-based AR is significantly higher than that of HMDs because of its low hardware barriers. AR training applications that are non-wearable, low cost, mobile-based or projection-based therefore have a higher chance of mass adoption in the near future.

6. Conclusions

Immersive XR technologies are a powerful tool for manufacturing training. As our discussion shows, augmented reality still lags behind in major areas of manufacturing training, whereas virtual reality applications for the later phases of manufacturing remain largely unexplored. We also discussed how XR technologies are effective in other vocational training domains and can be used for training in manufacturing settings, especially because in safety-critical vocations, real-world training is often too complicated, expensive or risky. In summary, (1) there are multiple paths by which VR, AR and MR training can have a positive impact on manufacturing training, and (2) immersive XR training helps enhance worker performance and increases engagement. Automation, robots and other new technologies will not replace the need for skilled trainers; rather, they will augment the overall performance of the manufacturing industry. XR applications in training focus on teaching and educating workers, and their outcomes are generally compared with performance after training in a traditional setting. For future work, we conclude that the application of augmented reality in the later phases of manufacturing training and the addition of interaction to the training interface still need to be explored to better understand their impact on training.

Author Contributions

Conceptualization, S.D.; methodology, S.D., and F.M.; investigation, resources, data curation, writing—original draft preparation, writing—review and editing: S.D., C.W., V.K., C.S., A.J., H.N. and F.M.; visualization: S.D. and A.J.; supervision: S.D. and F.M.; project administration, S.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science Foundation (NSF) under award number NSF-PFI 1719031.

Acknowledgments

The authors would like to thank Maria Kyrarini for proofreading the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gennrich, R. TVET: Towards Industrial Revolution 4.0. In Proceedings of the Technical and Vocational Education and Training International Conference (TVETIC 2018), Johor Bahru, Malaysia, 26–27 November 2018; Routledge: Abingdon-on-Thames, UK, 2019; p. 1. [Google Scholar]

- Erol, S.; Jäger, A.; Hold, P.; Ott, K.; Sihn, W. Tangible Industry 4.0: A scenario-based approach to learning for the future of production. Procedia CIRP 2016, 54, 13–18. [Google Scholar] [CrossRef]

- Lu, Y. Industry 4.0: A survey on technologies, applications and open research issues. J. Ind. Inf. Integr. 2017, 6, 1–10. [Google Scholar] [CrossRef]

- Milgram, P.; Kishino, F. A taxonomy of mixed reality visual displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329. [Google Scholar]

- Rushmeier, H.; Madathil, K.C.; Hodgins, J.; Mynatt, B.; Derose, T.; Macintyre, B. Content Generation for Workforce Training. arXiv 2019, arXiv:1912.05606. [Google Scholar]

- Mital, A.; Pennathur, A.; Huston, R.; Thompson, D.; Pittman, M.; Markle, G.; Kaber, D.; Crumpton, L.; Bishu, R.; Rajurkar, K.; et al. The need for worker training in advanced manufacturing technology (AMT) environments: A white paper. Int. J. Ind. Ergon. 1999, 24, 173–184. [Google Scholar] [CrossRef]

- Fast-Berglund, Å.; Gong, L.; Li, D. Testing and validating Extended Reality (xR) technologies in manufacturing. Procedia Manuf. 2018, 25, 31–38. [Google Scholar] [CrossRef]

- Pavlik, J.V. Experiential media and transforming storytelling: A theoretical analysis. J. Creat. Ind. Cult. Stud.—JOCIS 2018, 046–067. [Google Scholar]

- Milgram, P.; Takemura, H.; Utsumi, A.; Kishino, F. Augmented reality: A class of displays on the reality-virtuality continuum. In Telemanipulator and Telepresence Technologies; International Society for Optics and Photonics: Bellingham, WA, USA, 1995; Volume 2351, pp. 282–292. [Google Scholar]

- McMillan, K.; Flood, K.; Glaeser, R. Virtual reality, augmented reality, mixed reality, and the marine conservation movement. Aquat. Conserv. Mar. Freshw. Ecosyst. 2017, 27, 162–168. [Google Scholar] [CrossRef]

- Numfu, M.; Riel, A.; Noël, F. Virtual Reality Based Digital Chain for Maintenance Training. Procedia CIRP 2019, 84, 1069–1074. [Google Scholar] [CrossRef]

- Hirt, C.; Holzwarth, V.; Gisler, J.; Schneider, J.; Kunz, A. Virtual Learning Environment for an Industrial Assembly Task. In Proceedings of the 2019 IEEE 9th International Conference on Consumer Electronics (ICCE-Berlin), Berlin, Germany, 8–11 September 2019; pp. 337–342. [Google Scholar]

- Winther, F.; Ravindran, L.; Svendsen, K.P.; Feuchtner, T. Design and Evaluation of a VR Training Simulation for Pump Maintenance. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–8. [Google Scholar]

- Murcia López, M. The Effectiveness of Training in Virtual Environments. Ph.D. Thesis, UCL (University College London), London, UK, 2018. [Google Scholar]

- Vélaz, Y.; Rodríguez Arce, J.; Gutiérrez, T.; Lozano-Rodero, A.; Suescun, A. The influence of interaction technology on the learning of assembly tasks using virtual reality. J. Comput. Inf. Sci. Eng. 2014, 14. [Google Scholar] [CrossRef]

- Pan, Y.; Steed, A. Avatar type affects performance of cognitive tasks in virtual reality. In Proceedings of the 25th ACM Symposium on Virtual Reality Software and Technology, Sydney, Australia, 12–15 November 2019; pp. 1–4. [Google Scholar]

- Salah, B.; Abidi, M.H.; Mian, S.H.; Krid, M.; Alkhalefah, H.; Abdo, A. Virtual reality-based engineering education to enhance manufacturing sustainability in industry 4.0. Sustainability 2019, 11, 1477. [Google Scholar] [CrossRef]

- Smith, J.W.; Salmon, J.L. Development and Analysis of Virtual Reality Technician-Training Platform and Methods. In Proceedings of the Interservice/Industry Training, Simulation, and Education Conference (I/ITSEC), Orlando, FL, USA, 27 November–1 December 2017. [Google Scholar]

- Pérez, L.; Diez, E.; Usamentiaga, R.; García, D.F. Industrial robot control and operator training using virtual reality interfaces. Comput. Ind. 2019, 109, 114–120. [Google Scholar] [CrossRef]

- Matsas, E.; Vosniakos, G.C.; Batras, D. Modelling simple human-robot collaborative manufacturing tasks in interactive virtual environments. In Proceedings of the 2016 Virtual Reality International Conference, Laval, France, 23–25 March 2016; pp. 1–4. [Google Scholar]

- Gammieri, L.; Schumann, M.; Pelliccia, L.; Di Gironimo, G.; Klimant, P. Coupling of a redundant manipulator with a virtual reality environment to enhance human-robot cooperation. Procedia CIRP 2017, 62, 618–623. [Google Scholar] [CrossRef]

- Werrlich, S.; Nguyen, P.A.; Notni, G. Evaluating the training transfer of Head-Mounted Display based training for assembly tasks. In Proceedings of the 11th PErvasive Technologies Related to Assistive Environments Conference, Corfu, Greece, 26–29 June 2018; pp. 297–302. [Google Scholar]

- Hoover, M. An Evaluation of the Microsoft HoloLens for a Manufacturing-Guided Assembly Task. Master’s Thesis, Iowa State University, Ames, IA, USA, 2018. [Google Scholar]

- Aromaa, S.; Väätänen, A.; Kaasinen, E.; Uimonen, M.; Siltanen, S. Human Factors and Ergonomics Evaluation of a Tablet Based Augmented Reality System in Maintenance Work. In Proceedings of the 22nd International Academic Mindtrek Conference, Tampere, Finland, 10–11 October 2018; pp. 118–125. [Google Scholar]

- Heinz, M.; Büttner, S.; Röcker, C. Exploring training modes for industrial augmented reality learning. In Proceedings of the 12th ACM International Conference on PErvasive Technologies Related to Assistive Environments, Rhodes, Greece, 5–7 June 2019; pp. 398–401. [Google Scholar]

- Sirakaya, M.; Kilic Cakmak, E. Effects of augmented reality on student achievement and self-efficacy in vocational education and training. Int. J. Res. Vocat. Educ. Train. 2018, 5, 1–18. [Google Scholar] [CrossRef]

- Murauer, N.; Müller, F.; Günther, S.; Schön, D.; Pflanz, N.; Funk, M. An analysis of language impact on augmented reality order picking training. In Proceedings of the 11th PErvasive Technologies Related to Assistive Environments Conference, Corfu, Greece, 26–29 June 2018; pp. 351–357. [Google Scholar]

- Gonzalez-Franco, M.; Pizarro, R.; Cermeron, J.; Li, K.; Thorn, J.; Hutabarat, W.; Tiwari, A.; Bermell-Garcia, P. Immersive mixed reality for manufacturing training. Front. Robot. AI 2017, 4, 3. [Google Scholar] [CrossRef]

- Reyes, A.C.C.; Del Gallego, N.P.A.; Deja, J.A.P. Mixed Reality Guidance System for Motherboard Assembly Using Tangible Augmented Reality. In Proceedings of the 2020 4th International Conference on Virtual and Augmented Reality Simulations, Sydney, Australia, 14–16 February 2020; pp. 1–6. [Google Scholar]

- Rokhsaritalemi, S.; Sadeghi-Niaraki, A.; Choi, S.M. A Review on Mixed Reality: Current Trends, Challenges and Prospects. Appl. Sci. 2020, 10, 636. [Google Scholar] [CrossRef]

- Kueider, A.M.; Parisi, J.M.; Gross, A.L.; Rebok, G.W. Computerized cognitive training with older adults: A systematic review. PLoS ONE 2012, 7, e40588. [Google Scholar] [CrossRef]

- Imran, M.; Heimes, A.; Vorländer, M. Real-time building acoustics noise auralization and evaluation of human cognitive performance in virtual reality. In Proceedings of the (DAGA 2019)—45th Jahrestagung für Akustik, Rostock, Germany, 19–21 March 2019; pp. 18–21. [Google Scholar]

- Kotranza, A.; Lind, D.S.; Pugh, C.M.; Lok, B. Real-time in-situ visual feedback of task performance in mixed environments for learning joint psychomotor-cognitive tasks. In Proceedings of the 2009 8th IEEE International Symposium on Mixed and Augmented Reality, Orlando, FL, USA, 19–22 October 2009; pp. 125–134. [Google Scholar]

- Papakostas, M.; Tsiakas, K.; Abujelala, M.; Bell, M.; Makedon, F. v-cat: A cyberlearning framework for personalized cognitive skill assessment and training. In Proceedings of the 11th PErvasive Technologies Related to Assistive Environments Conference, Corfu, Greece, 26–29 June 2018; pp. 570–574. [Google Scholar]

- Augstein, M.; Neumayr, T.; Karlhuber, I.; Dielacher, S.; Öhlinger, S.; Altmann, J. Training of cognitive performance in complex tasks with a tabletop-based rehabilitation system. In Proceedings of the 2015 International Conference on Interactive Tabletops & Surfaces, Funchal, Portugal, 15–18 November 2015; pp. 15–24. [Google Scholar]

- Sharma, K.; Niforatos, E.; Giannakos, M.; Kostakos, V. Assessing Cognitive Performance Using Physiological and Facial Features: Generalizing Across Contexts. Available online: https://dl.acm.org/doi/abs/10.1145/3411811 (accessed on 20 October 2020).

- Costa, J.; Guimbretière, F.; Jung, M.F.; Choudhury, T. Boostmeup: Improving cognitive performance in the moment by unobtrusively regulating emotions with a smartwatch. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, London, UK, 9–14 September 2019; Volume 3, pp. 1–23. [Google Scholar]

- Kaplan, A.D.; Cruit, J.; Endsley, M.; Beers, S.M.; Sawyer, B.D.; Hancock, P. The Effects of Virtual Reality, Augmented Reality, and Mixed Reality as Training Enhancement Methods: A Meta-Analysis. Hum. Factors 2020, 0018720820904229. [Google Scholar] [CrossRef]

- Farra, S.; Miller, E.; Timm, N.; Schafer, J. Improved training for disasters using 3-D virtual reality simulation. West. J. Nurs. Res. 2013, 35, 655–671. [Google Scholar] [CrossRef]

- Werrlich, S.; Eichstetter, E.; Nitsche, K.; Notni, G. An overview of evaluations using augmented reality for assembly training tasks. Int. J. Comput. Inf. Eng. 2017, 11, 1068–1074. [Google Scholar]

- Makhataeva, Z.; Varol, H.A. Augmented Reality for Robotics: A Review. Robotics 2020, 9, 21. [Google Scholar] [CrossRef]

- Hernoux, F.; Nyiri, E.; Gibaru, O. Virtual reality for improving safety and collaborative control of industrial robots. In Proceedings of the 2015 Virtual Reality International Conference, Laval, France, 8–10 April 2015; pp. 1–6. [Google Scholar]

- Murcia-Lopez, M.; Steed, A. A comparison of virtual and physical training transfer of bimanual assembly tasks. IEEE Trans. Vis. Comput. Graph. 2018, 24, 1574–1583.

- Zhang, G.; Hansen, J.P. A Virtual Reality Simulator for Training Gaze Control of Wheeled Tele-Robots. In Proceedings of the 25th ACM Symposium on Virtual Reality Software and Technology, Sydney, Australia, 12–15 November 2019; pp. 1–2.

- Stănică, I.C.; Moldoveanu, F.; Dascălu, M.I.; Moldoveanu, A.; Portelli, G.P.; Bodea, C.N. VR System for Neurorehabilitation: Where Technology Meets Medicine for Empowering Patients and Therapists in the Rehabilitation Process. In Proceedings of the 6th Conference on the Engineering of Computer Based Systems, Bucharest, Romania, 2–3 September 2019; pp. 1–7.

- Caserman, P.; Krabbe, P.; Wojtusch, J.; von Stryk, O. Real-time step detection using the integrated sensors of a head-mounted display. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 3510–3515.

- Hunt, C.L.; Sharma, A.; Osborn, L.E.; Kaliki, R.R.; Thakor, N.V. Predictive trajectory estimation during rehabilitative tasks in augmented reality using inertial sensors. In Proceedings of the 2018 IEEE Biomedical Circuits and Systems Conference (BioCAS), Cleveland, OH, USA, 17–19 October 2018; pp. 1–4.

- Betella, A.; Pacheco, D.; Zucca, R.; Arsiwalla, X.D.; Omedas, P.; Lanatà, A.; Mazzei, D.; Tognetti, A.; Greco, A.; Carbonaro, N.; et al. Interpreting psychophysiological states using unobtrusive wearable sensors in virtual reality. In Proceedings of the 7th International Conference on Advances in Computer-Human Interactions (ACHI 2014), Barcelona, Spain, 23–27 March 2014; pp. 331–336.

- Marín-Morales, J.; Higuera-Trujillo, J.L.; Greco, A.; Guixeres, J.; Llinares, C.; Scilingo, E.P.; Alcañiz, M.; Valenza, G. Affective computing in virtual reality: Emotion recognition from brain and heartbeat dynamics using wearable sensors. Sci. Rep. 2018, 8, 1–15.

- Limbu, B.; Fominykh, M.; Klemke, R.; Specht, M.; Wild, F. Supporting training of expertise with wearable technologies: The WEKIT reference framework. In Mobile and Ubiquitous Learning; Springer: Berlin, Germany, 2018; pp. 157–175.

- Lerner, D.; Mohr, S.; Schild, J.; Göring, M.; Luiz, T. An Immersive Multi-User Virtual Reality for Emergency Simulation Training: Usability Study. JMIR Serious Games 2020, 8, e18822.

- Pedram, S.; Palmisano, S.; Skarbez, R.; Perez, P.; Farrelly, M. Investigating the process of mine rescuers’ safety training with immersive virtual reality: A structural equation modelling approach. Comput. Educ. 2020, 153, 103891.

- Park, Y.; Park, Y.; Kim, H. Development of Immersive Vehicle Simulator for Aircraft Ground Support Equipment Training as a Vocational Training Program. In Proceedings of the International Conference on Human-Computer Interaction, Orlando, FL, USA, 26–31 July 2019; Springer: Berlin, Germany, 2019; pp. 225–234.

- Ahir, K.; Govani, K.; Gajera, R.; Shah, M. Application on virtual reality for enhanced education learning, military training and sports. Augment. Hum. Res. 2020, 5, 7.

- Gac, P.; Richard, P.; Papouin, Y.; George, S.; Richard, E. Virtual Interactive Tablet to Support Vocational Training in Immersive Environment. In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Prague, Czech Republic, 25–27 February 2019; pp. 145–152.

- Ebert, D.; Gupta, S.; Makedon, F. Ogma: A Virtual Reality Language Acquisition System. In Proceedings of the 9th ACM International Conference on PErvasive Technologies Related to Assistive Environments (PETRA’16), Corfu, Greece, 29 June–1 July 2016; Association for Computing Machinery: New York, NY, USA, 2016.

- Bozgeyikli, E.; Aguirrezabal, A.; Alqasemi, R.; Raij, A.; Sundarrao, S.; Dubey, R. Using Immersive Virtual Reality Serious Games for Vocational Rehabilitation of Individuals with Physical Disabilities. In Proceedings of the International Conference on Universal Access in Human-Computer Interaction, Las Vegas, NV, USA, 15–20 July 2018; Springer: Cham, Switzerland, 2018; pp. 48–57.

- Soomal, H.K.; Poyade, M.; Rea, P.M.; Paul, L. Enabling More Accessible MS Rehabilitation Training Using Virtual Reality. In Biomedical Visualisation; Springer: Cham, Switzerland, 2020; pp. 95–114.

- Gorman, C.; Gustafsson, L. The use of augmented reality for rehabilitation after stroke: A narrative review. Disabil. Rehabil. Assist. Technol. 2020, 1–9.

- Gerup, J.; Soerensen, C.B.; Dieckmann, P. Augmented reality and mixed reality for healthcare education beyond surgery: An integrative review. Int. J. Med. Educ. 2020, 11, 1.

- De Ponti, R.; Marazzato, J.; Maresca, A.M.; Rovera, F.; Carcano, G.; Ferrario, M.M. Pre-graduation medical training including virtual reality during COVID-19 pandemic: A report on students’ perception. BMC Med. Educ. 2020, 20, 1–7.

- Javaid, M.; Haleem, A. Virtual reality applications toward medical field. Clin. Epidemiol. Glob. Health 2020, 8, 600–605.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).