Technology-Enhanced Musical Practice Using Brain–Computer Interfaces: A Topical Review

Abstract

1. Introduction

2. Background

2.1. Brain–Computer Interfaces

2.2. Technology-Enhanced Musical Practice

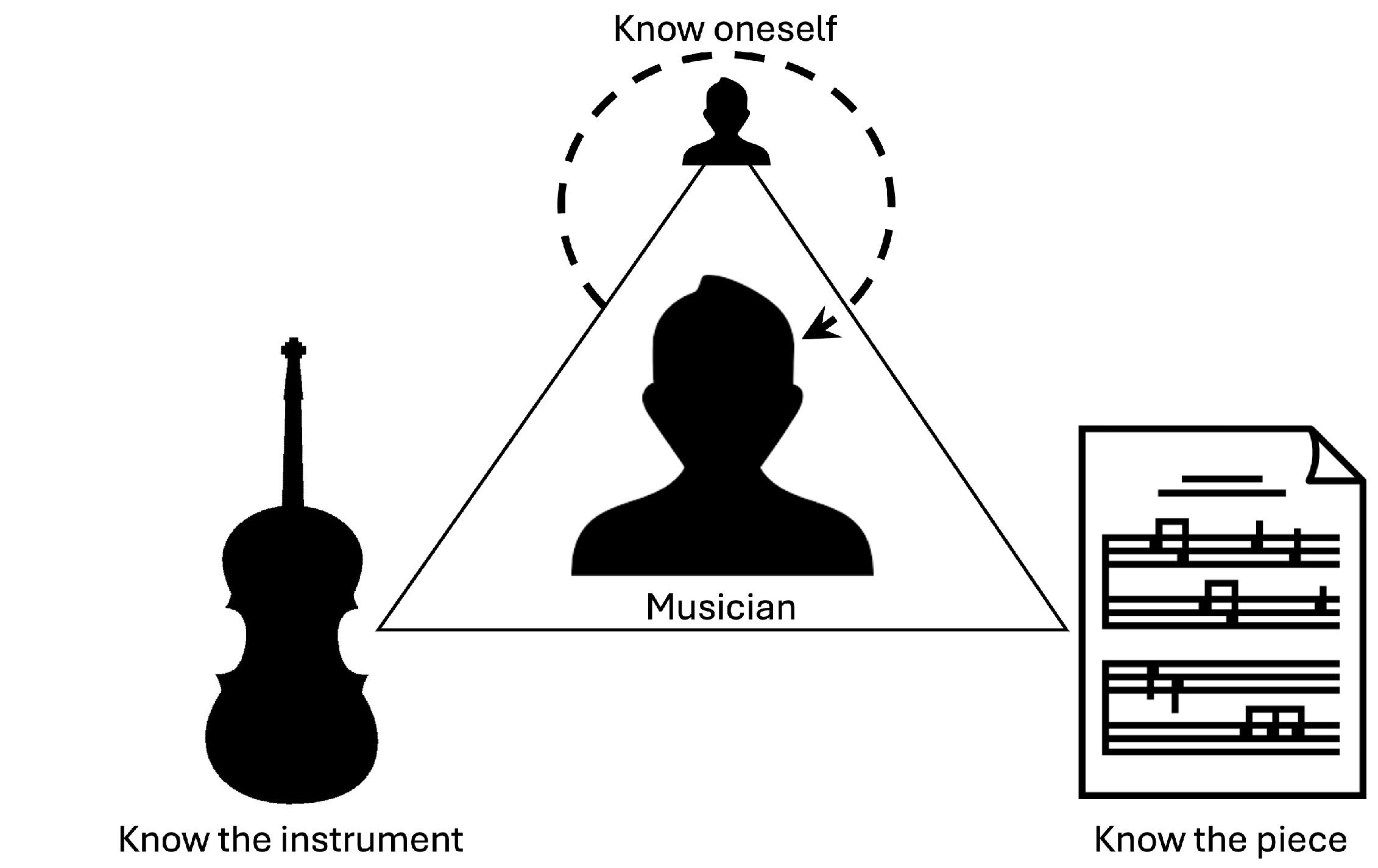

3. Conceptual TEMP Framework

3.1. Biomechanical Awareness

- Posture and Balance: This is the monitoring of overall playing posture, alignment, and weight distribution.

- Movement and Muscle Activity: This is the real-time monitoring of muscle tension and relaxation patterns, especially in key areas such as forearms, hands, neck, shoulders, and lower back.

- Fine Motor and Dexterity: This is the capture of detailed finger, hand, wrist, arm, and facial muscle movements.

- Breathing Control: For wind instrumentalists and vocalists, diaphragm engagement and respiratory patterns are key parameters of technique.

- Head and Facial Movement: This is the monitoring of facial tension and head alignment to identify strain or compensatory patterns that may indicate suboptimal technique. These capabilities are particularly valuable in identifying inefficiencies or compensatory behaviors that may not be easily perceived from a first-person perspective.

- Movement Intention: A core functionality of the TEMP framework would be the ability to distinguish intentional, goal-directed movement from involuntary or reflexive motion. This distinction is essential in helping musicians identify habits such as unwanted tension, tremors, or unintentional shifts in posture. By separating these movement types, the system can provide feedback that distinguishes between technical errors and unconscious physical responses, enhancing the performer’s body awareness and self-regulation during practice.

- Coordination and Movement Fluidity: This is the evaluation of coordination and movement fluidity during transitions and articulations.

3.2. Tempo Processing

3.3. Pitch Recognition

3.4. Cognitive Engagement

4. Review Strategy and Scope

- Posture and Balance;

- Movement and Muscle Activity;

- Fine Motor Movement and Dexterity;

- Breathing Control;

- Head and Facial Movement;

- Movement Intention;

- Coordination and Movement Fluidity;

- Tempo Processing;

- Pitch Recognition;

- Cognitive Engagement.

4.1. Literature Search Approach

4.2. Search Scope and Selection Parameters

4.3. Objectives of the Review

4.4. Search and Screening Results

5. Results

5.1. Biomechanical Awareness

5.1.1. Posture and Balance

5.1.2. Movement and Muscular Activity

5.1.3. Fine Motor and Dexterity

5.1.4. Breathing Control

5.1.5. Head and Facial Movement

5.1.6. Movement Intention

5.1.7. Coordination and Movement Fluidity

5.2. Tempo and Rhythm

5.3. Pitch Recognition

5.4. Cognitive Engagement

6. Discussion

7. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Utilized Search Queries

| TEMP Feature | Search Query |

|---|---|

| Posture and Balance | (“EEG” OR “brain-computer interface” OR “brain computer interface” OR “BCI”) AND (“posture” OR “alignment” OR “weight distribution” OR “proprioception” OR “kinesthesia” OR “balance”) |

| Movement and Muscle Activity | (“EEG” OR “brain-computer interface” OR “brain computer interface” OR “BCI”) AND (“muscle activity” OR “motor detection” OR “motor execution” OR “movement detection” OR “movement”) |

| Fine Motor and Dexterity | (“EEG” OR “brain-computer interface” OR “brain computer interface” OR “BCI”) AND (“fine motor control” OR “fine motor skills” OR “finger movement” OR “motor dexterity” OR “manual dexterity” OR “finger tapping” OR “precise movement” OR “precision motor tasks” OR “finger control” OR “force” OR “pressure” OR “finger identification”) |

| Breathing Control | (“EEG” OR “brain-computer interface” OR “brain computer interface” OR “BCI”) AND (“breathing” OR “respiration” OR “respiratory control” OR “diaphragm” OR “respiratory effort” OR “respiratory patterns” OR “breath regulation” OR “inhalation” OR “exhalation”) |

| Head and Facial Movement | (“EEG” OR “brain-computer interface” OR “brain computer interface” OR “BCI”) AND (“facial movement” OR “facial muscle activity” OR “facial tension” OR “facial expression” OR “head movement” OR “head posture” OR “head position” OR “head tracking” OR “cranial muscle activity” OR “facial motor control”) |

| Movement Intention | (“EEG” OR “brain-computer interface” OR “brain computer interface” OR “BCI”) AND (“voluntary movement” OR “involuntary movement” OR “motor intention” OR “movement intention” OR “intent detection” OR “reflex movement” OR “automatic motor response” OR “conscious movement” OR “unconscious movement” OR “motor inhibition” OR “motor control” OR “volitional” OR “reflexive movement” OR “intentional movement” OR “purposeful movement” OR “spasmodic movement”) |

| Coordination and Movement Fluidity | (“EEG” OR “brain-computer interface” OR “brain computer interface” OR “BCI”) AND (“motor coordination” OR “movement fluidity”) |

| Tempo Processing | (“EEG” OR “brain-computer interface” OR “brain computer interface” OR “BCI”) AND (“tempo perception” OR “tempo tracking” OR “internal tempo” OR “imagined tempo” OR “motor imagery tempo” OR “rhythm perception” OR “timing perception” OR “sensorimotor timing” OR “mental tempo” OR “temporal processing” OR “beat perception” OR “rhythm processing” OR “timing accuracy”) |

| Pitch Recognition | (“EEG” OR “brain-computer interface” OR “brain computer interface” OR “BCI”) AND (“pitch perception” OR “pitch tracking” OR “pitch recognition” OR “internal pitch” OR “imagined pitch” OR “pitch imagination” OR “pitch imagery” OR “auditory imagery” OR “sensorimotor pitch” OR “mental pitch” OR “pitch processing” OR “melody perception” OR “pitch accuracy”) |

| Cognitive Engagement | (“EEG” OR “brain-computer interface” OR “brain computer interface” OR “BCI”) AND (“flow” OR “musical flow” OR “musical performance” OR “music performance” OR “movement focus” OR “active movement control” OR “automatic performance” OR “performance engagement”) |
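Every query in the table above shares one structure: a fixed EEG/BCI clause joined by AND to a feature-specific clause of OR-ed phrases. The following is a minimal sketch of that pattern, not the authors' tooling. The names `BCI_TERMS`, `FEATURE_TERMS`, `or_clause`, and `build_query` are hypothetical, only two rows are reproduced for brevity, and the use of straight double quotes for phrase matching is an assumption that may need adjusting for a given database.

```python
# Shared EEG/BCI clause that opens every query in Appendix A.
BCI_TERMS = ["EEG", "brain-computer interface", "brain computer interface", "BCI"]

# Feature-specific terms copied from two rows of the table (hypothetical
# container name); the remaining rows would be added in the same way.
FEATURE_TERMS = {
    "Posture and Balance": [
        "posture", "alignment", "weight distribution",
        "proprioception", "kinesthesia", "balance",
    ],
    "Coordination and Movement Fluidity": [
        "motor coordination", "movement fluidity",
    ],
}


def or_clause(terms):
    """Quote each term and join the terms with OR inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(feature):
    """Combine the shared BCI clause with the clause for one TEMP feature."""
    return f"{or_clause(BCI_TERMS)} AND {or_clause(FEATURE_TERMS[feature])}"


if __name__ == "__main__":
    for feature in FEATURE_TERMS:
        print(f"{feature}: {build_query(feature)}")
```

Keeping the shared clause in one place means a change to the EEG/BCI terms propagates to all ten queries, which makes the search easier to reproduce or extend.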

Appendix B. Included Papers’ Highlights

| Citation Number | Objectives | BCI Task | Results |

|---|---|---|---|

| 14 | Quantify the reliability of perturbation-evoked N1 as a biomarker of balance. | Repeated EEG recordings during surface translations in young and older adults across days and one year. | N1 amplitude and latency showed excellent within-session and test-retest reliability (ICC > 0.9), supporting clinical feasibility. |

| 15 | Examine age-related changes in cortical connectivity during dynamic balance. | EEG during sway-referenced posturography in young vs. late-middle/older adults. | Older adults displayed weaker PCC-to-PMC connectivity that correlated with poorer COP entropy, indicating a shift toward cognitive control of balance. |

| 16 | Test whether perturbation N1 reflects cognitive error processing. | Whole-body translations with expected vs. opposite directions while EEG recorded. | Error trials elicited Pe and ERAS, whereas early N1 remained unchanged, evidencing distinct neural generators for perturbation and error signals. |

| 17 | Assess a head-weight support traction workstation’s effect on resting brain activity in heavy digital users. | Compare supported traction vs. conventional traction during 5-min seated rest; EEG + EDA. | Supported traction increased global alpha power and comfort without elevating high-frequency activity, sustaining alertness. |

| 18 | Investigate cortical reorganization after short-term visual-feedback balance training in older adults. | Three-day stabilometer program with pre/post EEG and postural metrics. | Training reduced RMS sway, increased ventral-pathway alpha power, altered alpha MST topology, and these changes correlated with balance gains. |

| 19 | Determine if EEG rhythms encode direction-specific postural perturbations. | HD-EEG during four translation directions; CSP-based single-trial classification. | Low-frequency (3–10 Hz) features enabled above-chance classification in both healthy and stroke groups, implicating theta in directional encoding. |

| 20 | Evaluate passive-BCI workload decoding across posture and VR display contexts. | Sparkles mental-arithmetic paradigm while sitting/standing with screen or HMD-VR. | Within-context accuracy was stable; adding VR or standing minimally affected SNR, but cross-context transfer reduced classifier performance. |

| 21 | Explore auditory-stimulus effects on functional connectivity during balance. | Stabilometry + EEG with visual feedback alone vs. with music. | Music reduced whole-brain FC (delta–gamma) and decreased balance-quality indices, suggesting cognitive load diversion. |

| 22 | Probe posture-dependent modulation of interoceptive processing via HEPs. | EEG-HEP during sitting, stable standing, and unstable standing. | HEP amplitudes over central sites were lower in standing and further reduced on an unstable surface, independent of cardiac or power-spectrum changes, implying attentional reallocation from interoception to balance control. |

| 23 | Develop an interpretable CNN that can decode 2-D hand kinematics from EEG and test within-, cross- and transfer-learning strategies to shorten calibration time | Continuous trajectory decoding during a pursuit-tracking task | ICNN out-performed previous decoders while keeping model size/training time low; transfer-learning further improved prediction quality |

| 24 | Propose an enhanced Regularized Correlation-based Common Spatio-Spectral Patterns (RCCSSP) framework to better separate ipsilateral upper-limb movements in EEG | 3-class movement execution classification (right arm, right thumb, rest) | Mean accuracy = 88.94%, an 11.66% gain over the best prior method |

| 25 | Introduce a Motion Trajectory Reconstruction Transformer (MTRT) that uses joint-geometry constraints to recover full 3-D shoulder–elbow–wrist trajectories | Continuous multi-joint trajectory reconstruction (sign-language data) | Mean Pearson across 6 joints = 0.94—highest among all baseline models; NRMSE = 0.159 |

| 26 | Present a multi-class Filter-Bank Task-Related Component Analysis (mFBTRCA) pipeline for upper-limb movement execution | 5- to 7-class movement classification | Accuracy = 0.419 ± 0.078 (7 classes) and 0.403 ± 0.071 (5 classes), improving on earlier methods |

| 27 | Explore Multilayer Brain Networks (MBNs) combined with MRCP features to decode natural grasp types and kinematic parameters | 4-class natural grasp type + binary grasp-taxonomy | Four-class grasp accuracy = 60.56%; binary grasp-type and finger-number accuracies ≈ 79% |

| 28 | Design a pseudo-online pipeline (ensemble of SVM, EEGNet, Riemannian-SVM) for real-time detection and classification of wrist/finger extensions | Movement-vs.-rest detection + contra/ipsi classification | Detection: TPR = 79.6 ± 8.8%, 3.1 ± 1.2 FP min−1, 75 ms mean latency; contra/ipsi classification ≈ 67% |

| 29 | Investigate whether EEG can distinguish slow vs. fast speeds for eight upper-limb movements using FBCSP and CNN | Subject-independent speed classification | FBCSP-CNN reached 90% accuracy (κ = 0.80) for shoulder flexion/extension, the best across all movements tested |

| 30 | Create a neuro-physiologically interpretable 3-D CNN with topography-preserving inputs to decode reaction-time, mode (active/passive), and 4-direction movements | Multi-label movement-component classification | Leave-one-subject-out accuracies: RT 79.81%, Active/Passive 81.23%, Direction 82.00%; beats 2-D CNN and LSTM baselines |

| 31 | Provide the first comprehensive review of EEG-based motor BCIs for upper-limb movement, covering paradigms, decoding methods, artifact handling and future directions | Narrative literature review (no experimental task) | Synthesizes state-of-the-art techniques, identifies gaps and proposes research road-map |

| 32 | Propose a state-based decoder: CSP-SVM identifies movement axis, followed by axis-specific Gaussian Process Regression for trajectory reconstruction | Hybrid discrete (axis) + continuous hand-trajectory decoding | Axis classifier accuracy = 97.1%; mean correlation actual-vs-predicted trajectories = 0.54 (orthogonal targets) and 0.37 (random) |

| 33 | Demonstrate that an off-the-shelf mobile EEG (Emotiv EPOC) can decode hand-movement speed and position outside the lab and propose a tailored signal-processing pipeline | Executed left/right-ward reaches at fast vs. slow pace; classify speed and reconstruct continuous x-axis kinematics | Speed-class accuracies: 73.36 ± 11.95% (overall); position and speed reconstruction = 0.22–0.57/0.26–0.58, validating commercial EEG for real-world BCIs |

| 34 | Replace the classic centre-out task with an improved paradigm and create an adaptive decoder-ensemble to boost continuous hand-kinematic decoding | Continuous 2-D reaches; decode six parameters (px, py, vx, vy, path length p, speed v) from low- EEG | Ensemble raised Pearson r by ≈75% for directional parameters and ≈10% for non-directional ones versus the classic setup (e.g., px: 0.21 vs. 0.14) |

| 35 | Quantify how a 2-back cognitive distraction degrades decoding and present a Riemannian Manifold–Gaussian NB (RM-GNBC) that is more distraction-robust | 3-D hand-movement direction classification with and without distraction | RM-GNBC outperformed TSLDA by 6% (p = 0.026); Riemannian approaches showed the smallest accuracy drop |

| 36 | Explore steady-state movement-related rhythms (SSMRRs) and test whether limb (hand) and movement frequency can be decoded from EEG | Rhythmic finger tapping at two frequencies with both hands; four-class (hand × frequency) classification | 4-class accuracy = 73.14 ± 15.86% (externally paced) and 66.30 ± 17.26% (self-paced) |

| 37 | Introduce a diffusion-adaptation framework with orthogonal Bessel functions to recognize prolonged, continuous gestures from EEG even with small datasets | Continuous upper-limb movements segmented into up to 10 sub-gestures; inter-subject gesture classification | Leave-one-subject-out accuracy ≈ 70% for ten sub-gestures while keeping computational cost low |

| 38 | Propose a hierarchical model combining Attention-State Detection (ASD) and Motion-Intention Recognition (MIR) to keep upper-limb intention decoding reliable when subjects are distracted | Binary right-arm movement-intention (move/no-move) under attended vs. distracted conditions | Hierarchical model accuracy = 75.99 ± 5.31% (6% higher than a conventional model) |

| 39 | Provide the first topical overview of EEG-BCIs for lower-limb motor-task identification, cataloging paradigms, pre-processing, features and classifiers | Literature survey contrasts active, imagined and assisted lower-limb tasks and associated BCI pipelines | Maps 22 key studies; highlights Butterworth 0.1–30 Hz filtering, power/correlation features and LDA/SVM as most recurrent, achieving >90% in several benchmarks |

| 40 | Investigate raw low-frequency EEG and build a CNN-BiLSTM to recognise hand movements and force/trajectory parameters with near-perfect accuracy | Classify four executed hand gestures, picking vs. pushing forces, and four-direction displacements | Accuracies: 4 gestures = 99.14 ± 0.49%, picking = 99.29 ± 0.11%, pushing = 99.23 ± 0.60%, 4-direction displacement = 98.11 ± 0.23% |

| 41 | Disentangle cortical networks for movement initiation vs. directional processing using delta-band EEG during center-out reaches | Four-direction center-out task; direction classifiers cue-aligned vs. movement-aligned | Windowed delta-band classifier peaked at 55.9 ± 8.6% (cue-aligned) vs. 50.6 ± 7.5% (movement-aligned); parieto-occipital sources dominated direction coding |

| 42 | Map the topography of delta and theta oscillations during visually guided finger-tapping with high-density EEG | Self-paced finger tapping; spectral analysis rather than online decoding | Theta showed contralateral parietal + fronto-central double activation, while delta was confined to central contralateral sites, revealing distinct spatial roles in movement execution |

| 43 | Develop an embedding-manifold decoder whose neural representation of movement direction is invariant to cognitive distraction | Binary 4-direction center-out reaching; classify direction from 1–4 Hz MRCPs while subjects are attentive vs. performing a 2-back distraction task | Mixed-state model reached 76.9 ± 10.6% (attentive)/76.3 ± 9.7% (distracted) accuracy, outperforming a PCA baseline and eliminating the need to detect the user’s attentional state |

| 44 | Perform the first meta-analysis of EEG decoding of continuous upper-limb kinematics, pooling 11 studies to assess overall feasibility and key moderators | Narrative review (executed + imagined continuous trajectories); compares linear vs. non-linear decoders | Overall random-effects mean effect size r = 0.46 (95% CI 0.32–0.61); synergy-based decoding stood out (best study r ≈ 0.80), and non-linear models outperformed linear ones |

| 45 | Examine whether EEG + force-tracking signals can separate four force-modulation tasks and tell apart fine-motor experts from novices. | Multi-class task-type classification and expert vs. novice group classification. | Task-type could be decoded with high accuracy (≈ 88–95%) in both groups, whereas group membership stayed at chance, revealing very individual EEG patterns in experts. |

| 46 | Present a spatio-temporal CSSD + LDA algorithm to discriminate single-trial EEG of voluntary left vs. right finger movement. | Binary executed finger-movement classification (L vs. R index). | Mean accuracy 92.1% on five subjects without trial rejection. |

| 47 | Evaluate SVM and deep MLP networks for decoding UHD-EEG during separate finger extensions; visualize salient time-channels. | Pairwise binary classification for every finger pair (5 fingers → 10 pairs). | MLP reached 65.68% average accuracy, improving on SVM (60.4%); saliency maps highlighted flexion and relaxation phases. |

| 48 | Test whether scalp-EEG phase features outperform amplitude for thumb vs. index movement recognition using deep learning. | Binary executed finger movement (thumb ↔ index). | Phase-based CNN achieved 70.0% accuracy versus 62.3% for amplitude features. |

| 49 | Build an ultra-high-density (UHD) EEG system to decode individual finger motions on one hand and benchmark against lower-density montages. | 2- to 5-class-executed single-finger classification. | UHD EEG accuracies: 80.86% (2-class), 66.19% (3-class), 50.18% (4-class), 41.57% (5-class)—all significantly above low-density setups. |

| 50 | Map broadband and ERD/ERS signatures of nine single/coordinated finger flex-ext actions to gauge EEG decodability. | Detection (movement vs. rest) + pairwise discrimination among 9 actions. | Combined low-freq amplitude + alpha/beta ERD features gave > 80% movement detection; thumb vs. other actions exceeded 60% LOSO accuracy. |

| 51 | Propose Autonomous Deep Learning (ADL)—a streaming, self-structuring network—for subject-independent five-finger decoding. | 5-class executed finger-movement classification with online adaptation. | ADL scored ≈ 77% (5-fold CV) across four subjects, beating CNN (72%) and RF (53%) and remaining stable in leave-one-subject-out tests. |

| 52 | Decode four graded effort levels of finger extension in stroke and control participants using EEG, EMG, and their fusion. | Four-class effort-level classification (low, medium, high, none). | Controls: EEG + EMG 71% (vs. chance 25%); stroke paretic hand: EEG alone 65%, whereas EMG alone failed (41%). |

| 53 | Detect intrinsic flow/engagement fluctuations during a VR fine-finger task from EEG using ML. | Binary high-flow vs. low-flow state decoding. | Spectral-coherence classifier exceeded 80% cross-validated accuracy; high-frequency bands contributed most. |

| 54 | Identify EEG markers of optimal vs. sub-optimal sustained attention during visuo-haptic multi-finger force control. | Compare EEG in low-RT-variability (optimal) vs. high-variability (sub-optimal) trials. | Optimal state showed 20–40 ms frontal-central haptics-potential drop and widespread alpha suppression—proposed biomarkers for closed-loop attention BCIs. |

| 55 | Determine whether movement of the index finger and foot elicits distinct movement-related power changes over sensorimotor cortex (EEG) and cerebellum (ECeG) | Executed left and right finger-extension and foot-dorsiflexion while recording 104-channel EEG + 10% cerebellar extension montage | Robust movement-related -band desynchronisation over Cz, / synchronisation, and a premovement high- power decrease over the cerebellum, demonstrating opposing low-frequency dynamics between the cortex and cerebellum |

| 56 | Explore how auditory cues modulate motor timing and test if deep-learning models can decode pacing vs. continuation phases of finger tapping from single-trial EEG | Right-hand finger-tapping task with four conditions (synchronized/syncopated × pacing/continuation) | CNN achieved 70% mean accuracy (stimulus-locked) and 66% (response-locked) in the 2-class pacing-vs-continuation problem—20% and 16% above chance, respectively |

| 57 | Compare dynamic position-control (PC) versus isometric force-control (FC) wrist tasks and examine how each type of motor practice alters cortico- and inter-muscular coherence | 40-trial wrist-flexion “ramp-and-hold” tasks with pre- and post-practice blocks for PC or FC training | -band CM coherence rose significantly after PC practice and was accompanied by a stronger descending (cortex → muscle) component; FC practice showed no coherence change |

| 58 | Examine how EEG functional connectivity (FC) changes across inhale, inhale-hold, exhale, and exhale-hold during ultra-slow breathing (2 cpm). | Multi-class EEG classifier that labels the four respiratory phases from FC features. | Random-committee classifier reached 95.1% accuracy with 403 theta-band connections, showing that the theta-band connectome is a reliable phase “signature”. |

| 59 | Probe neural dynamics during slow-symmetric breathing (10, 6, 4 cpm) with and without breath-holds. | Within-subject comparison of EEG metrics (coherence, phase-amplitude coupling, modulation index) across breathing patterns. | Slow-symmetric breathing increased coherence and phase-amplitude coupling; alpha/beta power highest during breath-holds, but adding holds did not change the overall EEG-breathing coupling. |

| 60 | Test whether respiration-entrained oscillations can be detected with high-density scalp EEG in humans. | Coherence analysis between respiration signal and EEG channels during quiet breathing. | Significant respiration–EEG coherence was found in most participants, confirming that scalp EEG can capture respiration-locked brain rhythms. |

| 61 | Determine the one-week test–retest reliability of respiratory-related evoked potentials (RREPs) under unloaded and resistive-loaded breathing. | Repeated RREP acquisition; reliability quantified with intraclass-correlation coefficients (ICCs). | Reliability ranged from moderate to excellent (ICC 0.57–0.92) for all RREP components in both conditions, supporting RREP use in longitudinal BCI studies. |

| 62 | Investigate how 30-s breath-hold cycles and the resulting CO2/O2 swings modulate regional EEG power over time. | Cross-correlation of end-tidal gases with EEG global and regional field power (delta, alpha bands). | Apnea termination raised delta and lowered alpha power; CO2 positively, O2 negatively correlated with EEG, with area-specific time lags, indicating heterogeneous cortical chemoreflex pathways. |

| 63 | Identify scalp-EEG “signatures” of voluntary (intentional) respiration for future conscious-breathing BCIs. | Compute 0–2 Hz power, EEG–respiration phase-lock value (PLV) and sample-entropy while subjects vary breathing effort. | Voluntary breathing enhanced low-frequency power frontally and right-parietally, increased PLV, and lowered entropy—evidence of a strong EEG marker set for detecting respiratory intention. |

| 64 | Introduce cycle-frequency (C-F) analysis as an alternative to conventional time–frequency EEG analysis for rhythmic breathing tasks. | Compare C-F versus standard T–F on synthetic and real EEG during spontaneous vs. loaded breathing. | C-F gave sharper time and frequency localization and required fewer trials, improving assessment of cycle-locked cortical activity. |

| 65 | Assess whether adding head-accelerometer data boosts EEG-based detection of respiratory-related cortical activity during inspiratory loading. | Covariance-based EEG classifier alone vs. “Fusion” (EEG + accelerometer); performance via ROC/AUC. | Fusion plus 50-s smoothing raised detection (AUC) above EEG-only; head motion information allows fewer EEG channels while preserving accuracy. |

| 66 | Review and demonstrate mechanisms that couple brain, breathing, and external rhythmic stimuli. | Entrain localized EEG (Cz) and breathing to variable-interval auditory tones; analyze synchronization and “dynamic attunement”. | Both breathing and Cz power spectra attuned to stimulus timing, increasing brain–breath synchronization during inter-trial intervals, supporting long-timescale alignment mechanisms. |

| 67 | Show that scalp EEG recorded before movement can reliably decode four tongue directions (left, right, up, down) and explore which features/classifiers work best for a multi-class tongue BCI. | Offline detection of movement-related cortical potentials and classification of tongue movements (2-, 3-, 4-class scenarios) from single-trial pre-movement EEG. | LDA gave 92–95% movement-vs-idle accuracy; 4-class accuracy, 62.6%; 3-class, 75.6%; 2-class, 87.7%. Temporal + template features were most informative. |

| 68 | Build and validate a neural-network model that links occipital + central EEG to head yaw (left/right) rotations elicited by a light cue, aiming at hands-free HCI for assistive robotics. | System-identification BCI: predict continuous head-position signal from sliding windows of EEG; evaluation within- and across-subjects. | Within-subject testing reached up to r = 0.98, MSE = 0.02, while cross-subject generalization was poor, showing that the model works but needs user-specific calibration. |

| 69 | Assess whether a low-cost, 3-channel around-ear EEG setup can detect hand- and tongue-movement intentions. | Binary classification of single-trial ear-EEG (movement vs. idle) in three scenarios: hand-rehab, hand-control, tongue-control. | Mean accuracies: 70% (hand-rehab), 73% (hand-control), 83% (tongue-control)—all above chance, indicating that practical ear-EEG BCIs are feasible. |

| 70 | Add rhythmic temporal prediction to a motor-BCI so that “time + movement” can be encoded together and movement detection is easier | Visual finger-tapping task: left vs. right taps under 0 ms, 1000 ms or 1500 ms prediction; 4-class “time × side” decoding | 1000 ms prediction yielded 97.30% left-right accuracy and 88.51% 4-class accuracy – both significantly above the no-prediction baseline |

| 71 | Test whether putting subjects into a preparatory movement state before the action strengthens pre-movement EEG and boosts decoding | Two-button task; “prepared” vs. “spontaneous” pre-movement; MRCP + ERD features fused with CSP | Preparation raised pre-movement decoding from 78.92% to 83.59% and produced earlier/larger MRCP and ERD signatures |

| 72 | In a real-world setting (dyadic dance), disentangle four simultaneous neural processes. | Mobile EEG + mTRF modelling during spontaneous paired dance | mTRF isolated all four processes and revealed a new occipital EEG marker that specifically tracks social coordination better than self- or partner-kinematics alone |

| 73 | Ask if pre-movement ERPs (RP/LRP) and MVPA encode action-outcome prediction | Active vs. passive finger presses that trigger visual/auditory feedback; MVPA decoding | Decoding accuracy ramps from ≈800 ms and peaks ≈ 85% at press time; late RP more negative when an action will cause feedback, confirming RP carries sensory-prediction info |

| 74 | Propose a time-series shapelet algorithm for asynchronous movement-intention detection aimed at stroke rehab | Self-paced ankle-dorsiflexion; classification + pseudo-online detection | Best F1 = 0.82; pseudo-online: 69% TPR, 8 false-positives/min, 44 ms latency – outperforming six other methods |

| 75 | Show that 20–60 Hz power can simultaneously encode time (500 vs. 1000 ms) and movement (left/right) after timing-prediction training | Time-movement synchronization task, before (BT) and after (AT) training; 4-class decoding | After training, high- ERD gave 73.27% mean 4-class accuracy (best subject = 93.81%) while keeping movement-only accuracy near 98% |

| 76 | Convert 1-D EEG to 2-D meshes and feed to CNN + RNN for cross-subject, multiclass intention recognition | PhysioNet dataset (108 subjects, 5 gestures) + real-world brain-typing prototype | 98.3% accuracy on the large dataset; 93% accuracy for 5-command brain-typing; test-time inference ≈ 10 ms |

| 77 | Determine whether EEG (RP) adds value over kinematics for telling intentional vs. spontaneous eye-blinks | Blink while resting (spontaneous) vs. instructed fast/slow blinks; logistic-regression models with EEG + EOG | EEG cumulative amplitude (RP) alone classified intentional vs. spontaneous blinks with 87.9% accuracy and boosted full-model AUC to 0.88; model generalized to 3 severely injured patients |

| 78 | Determine whether individual upper-limb motor ability (dexterity, grip strength, MI vividness) explains the inter-subject variability of motor-imagery EEG features. | Motor-imagery EEG recording; correlate relative ERD patterns ( bands) with behavioral and psychological scores. | Alpha-band rERD magnitude tracks hand-dexterity (Purdue Pegboard) and imagery ability |

| 79 | Develop a task-oriented bimanual-reaching BCI and test deep-learning models for decoding movement direction. | Classify three coordinated directions (left, mid, right) from movement-related cortical potentials with a hybrid CNN + BiLSTM network. | CNN-BiLSTM decodes three-direction bimanual reach from EEG at 73% peak accuracy |

| 80 | Systematically review recent progress in bimanual motor coordination BCIs, covering sensors, paradigms and algorithms. | Literature review (36 studies, 2010–2024) spanning motor-execution, imagery, attempt and action-observation BCIs. | Bilateral beta/alpha ERD plus larger MRCP peaks emerge as core EEG markers of bimanual coordination |

| 81 | Compare neural encoding of simultaneous vs. sequential bimanual reaching and evaluate if EEG can distinguish them before movement. | 3-class manifold-learning decoder (LSDA + LDA) applied to pre-movement and execution-period EEG. | Low-frequency MRCP/ERD patterns allow pre-movement separation of sequential vs. simultaneous bimanual reaches |

| 82 | Introduce an eigenvector-based dynamical network analysis to reveal meta-stable EEG connectivity states during visual-motor tracking. | Track functional-connectivity transitions while participants follow a moving target vs. observe/idle. | Eigenvector-based dynamics expose meta-stable alpha/gamma networks that differentiate visual-motor task states |

| 83 | Isolate the neural mechanisms that distinguish self-paced finger tapping from beat-synchronization tapping using steady-state evoked potentials (SSEPs). | EEG while participants (i) tapped at their own pace, (ii) synchronized taps to a musical beat, or (iii) only listened. | Synchronization recruited the left inferior frontal gyrus, whereas self-paced tapping engaged bilateral inferior parietal lobule—indicating functionally different beat-production networks. |

| 84 | Test whether neural entrainment to rhythmic patterns, working-memory capacity, and musical background predict sensorimotor synchronization skill. | SS-EPs recorded during passive listening to syncopated/unsyncopated rhythms; separate finger-tapping and counting-span tasks. | Stronger entrainment to unsyncopated rhythms surprisingly predicted worse tapping accuracy; working memory (not musical training) was the positive predictor of tapping consistency. |

| 85 | Use a coupled-oscillator model to explain tempo-matching bias and test whether 2 Hz tACS can modulate that bias. | Dual-tempo-matching task with simultaneous rhythms; EEG entrainment measured; fronto-central 2 Hz tACS vs. sham. | Listeners biased matches toward the starting tempo; tACS reduced both under- and over-estimation biases, validating model predictions about strengthened coupling. |

| 86 | Determine if SSEPs track subjective beat perception rather than stimulus acoustics. | Constant rhythm with context → ambiguous → probe phases; listeners judged beat-match; EEG SSEPs analyzed. | During the ambiguous phase, spectral amplitude rose at the beat frequency cued by the prior context, showing SSEPs mirror conscious beat perception. |

| 87 | Ask whether the predicted sharpness of upcoming sound envelopes is encoded in beta-band activity and influences temporal judgements. | Probabilistic cues signalled envelope sharpness in a timing-judgement task; EEG beta (15–25 Hz) analyzed. | Pre-target beta power scaled with expected envelope sharpness and correlated with individual timing precision, linking beta modulation to beat-edge prediction. |

| 88 | Compare motor-cortex entrainment to isochronous auditory vs. visual rhythms. | Auditory or flashing-visual rhythms, with tapping or passive attention; motor-component EEG isolated via ICA. | Motor entrainment at the beat frequency was stronger for visual than auditory rhythms and strongest during tapping; -power rose for both modalities, suggesting modality-specific use of motor timing signals. |

| 89 | Examine whether ballroom dancers show superior neural resonance and behavior during audiovisual beat synchronization. | Finger-tapping to 400 ms vs. 800 ms beat intervals; EEG resonance metrics compared between dancers and non-dancers. | Dancers exhibited stronger neural resonance but no behavioral advantage; the 800 ms tempo impaired both groups and demanded more attentional resources. |

| 90 | Clarify how mu-rhythms behave during passive music listening without overt movement. | 32-ch EEG during silence, foot/hand tapping, and music listening while movement was suppressed. | Music listening produced mu-rhythm enhancement over sensorimotor cortex—similar to effector-specific inhibition—supporting covert motor suppression during beat perception. |

| 91 | Introduce an autocorrelation-based extension of frequency-tagging to measure beat periodicity as self-similarity in empirical signals. | Applied to adult and infant EEG, finger-taps and other datasets. | The new method accurately recovered beat-periodicity across data types and resolved specificity issues of classic magnitude-spectrum tagging, broadening tools for beat-BCI research. |

| 92 | Predict which dominant beat frequency a listener is tracking by classifying short EEG segments with machine learning. | EEG from 20 participants hearing 12 pop songs; band-power features fed to kNN, RF, SVM. | Dense spatial filtering reached 70% binary and 56% ternary accuracy—≈20% above chance—showing beat-frequency decoding from just 5 s of EEG. |

| 93 | Test the motor-auditory hypothesis for hierarchical meter imagination: does the motor system create and feed timing information back to the auditory cortex when we “feel” binary or ternary meter in silence? | High-density EEG + ICA isolated motor vs. auditory sources while participants listened, imagined, or tapped rhythms. | Bidirectional coupling appeared in all tasks, but motor-to-auditory flow became marginally stronger during pure imagination, showing a top-down drive from motor areas even without movement. |

| 94 | Determine whether imagined, non-isochronous beat trains can be detected from EEG with lightweight deep models. | Binary beat-present/absent classification during 17 subjects’ silent imagery of two beat patterns. | EEGNet + Focal-Loss yielded the best performance, using <3% of the CNN’s weights and showing robustness to label imbalance—making it the preferred model for imagined-rhythm BCIs. |

| 95 | Ask how pitch predictability, enjoyment and musical expertise modulate cortical and behavioral tracking of musical rhythm. | Passive listening to tonal vs. atonal piano pieces while EEG mutual-information tracked envelope and pitch-surprisal; finger-tapping to short excerpts measured beat following. | Envelope tracking was stronger for atonal music; tapping was more consistent for tonal music; in tonal pieces, stronger envelope tracking predicted better tapping; envelope tracking rose with both expertise and enjoyment. |

| 96 | Test whether whole melodies can be identified from EEG not only while listening but also while imagining them, and introduce a maximum-correlation (maxCorr) decoding framework. | 4-melody classification (Bach chorales) from single-subject, single-bar EEG segments during listening and imagery. | Melodies were decoded well above chance in every participant; maxCorr out-performed bTRF, and low-frequency (<1 Hz) EEG carried additional pitch information, demonstrating practical imagined-melody BCIs. |

| 97 | Evaluate the feasibility of decoding individual imagined pitches (C4–B4) from scalp EEG and determine the best feature/classifier combination. | Random imagery of seven pitches; features = multiband spectral power per channel. | 7-class SVM with IC features achieved 35.7 ± 7.5% mean accuracy (chance ≈ 14%), max = 50%, ITR = 0.37 bit/s, confirming the first-ever pitch-decoding BCI. |

| 98 | Disentangle how prediction uncertainty (entropy) and prediction error (surprisal) for note onset and pitch are encoded across EEG frequency bands, and whether musical expertise modulates this encoding. | Multivariate temporal-response-function (TRF) models reconstruct narrow-band EEG (, , , , <30 Hz) while listeners hear Bach melodies; compare acoustic-only vs. acoustic + melodic-expectation regressors. | Adding melodic-expectation metrics improved EEG reconstruction in all sub-30 Hz bands; entropy contributed more than surprisal, with - and -band activity encoding temporal entropy before note onset. Musicians showed extra -band gains, non-musicians showed -band gains, highlighting that frequency-specific predictive codes are useful for rhythm-pitch BCIs. |

| 99 | Determine whether the centro-parietal-positivity (CPP) build-up rate reflects confidence rather than merely accuracy/RT in a new auditory pitch-identification task. | 2-AFC pitch-label selection (24 tones) with 4-level confidence rating; simultaneous 32-ch EEG to quantify CPP slope. | Confidence varied with tonal distance, yet CPP slope tracked accuracy and reaction time, not confidence, indicating that CPP is a first-order evidence-accumulation signal in audition. The task also offers a novel paradigm for probing confidence-aware auditory BCIs. |

| 100 | Systematically review the neural correlates of the flow state across EEG, fMRI, fNIRS and tDCS studies. | Literature synthesis (25 studies, 471 participants). | Converging evidence implicates anterior attention/executive and reward circuits, but findings remain sparse and inconsistent, underscoring methodological gaps and small-sample bias. |

| 101 | Build an automatic flow-vs-non-flow detector from multimodal wearable signals and test emotion-based transfer learning. | Arithmetic and reading tasks recorded with EEG (Emotiv Epoc X), wrist PPG/GSR, and Respiban; ML classifiers with and without transfer learning from the DEAP emotion dataset. | EEG alone: 64.97% accuracy; sensor fusion: 73.63%; emotion-transfer model boosted accuracy to 75.10% (AF1 = 74.92%), showing that emotion data can enhance flow recognition. |

| 102 | Demonstrate on-body detection of flow and anti-flow (boredom, anxiety) while gaming. | Tetris at graded difficulty; EEG, HR, SpO2, GSR, head/hand motion analyzed. | Flow episodes showed fronto-central alpha/ dominance, U-shaped HRV, inverse-U SpO2, and minimal motion, confirming that lightweight wearables can monitor flow in real time. |

| 103 | Test whether motor corollary-discharge attenuation is domain-general across speech and music. | Stereo-EEG in a professional musician during self-produced vs. external speech and music. | Self-produced sounds evoked widespread 4–8 Hz suppression and 8–80 Hz enhancement in the auditory cortex, with reduced acoustic-feature encoding for both domains, supporting domain-general predictive signals. |

| 104 | Examine if brain-network flexibility predicts skilled musical performance. | Pre-performance resting EEG; sliding-window graph community analysis vs. piano-timbre precision. | Higher whole-brain flexibility just before playing predicted finer timbre control when feedback was required, highlighting flexibility as a biomarker of expert sensorimotor skill. |

| 105 | Relate game difficulty, EEG engagement indices and self-reported flow; build a high/low engagement classifier. | “Stunt-plane” video game (easy/optimal/hard) with , , 1/ indices; ML classification. | Self-rated flow peaked at optimal difficulty; combining three indices yielded F1 = 89% (within-subject)/81% (cross-subject); older-adult model F1 = 85%. |

| 106 | Investigate how technological interactivity level (LTI) plus balance-of-challenge (BCS) and sense-of-control (SC) shape EEG-defined flow. | 9th-grade game-based learning with low/mid/high LTI; chi-square, decision-tree, and logistic models on EEG flow states. | High LTI + high short-term SC + high BCS increased odds of flow 8-fold, confirming interface interactivity as a key flow driver. |

| 107 | Identify EEG signatures of flow across multiple task types with a single prefrontal channel. | Mindfulness, drawing, free-recall, and three Tetris levels; correlate delta// power with flow scores. | Flow scores correlated positively with , , and power peaking ∼2 min after onset (max R² = 0.163), showing that portable one-channel EEG can index flow in naturalistic settings. |

| 108 | Test whether cognitive control can modulate steady-state movement-related potentials, challenging “no-free-will” views. | Repetitive finger tapping; participants voluntarily reduce pattern predictability; EEG SSEPs analyzed. | Participants successfully de-automatized tapping; SSEPs over motor areas were modulated by control, supporting a role for higher-order volition in motor preparation. |
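
Several rows above report classification accuracy against a chance level, and row 97 additionally reports an information transfer rate (ITR). As a rough aid for comparing such figures, the following minimal sketch computes the chance level of a balanced N-class problem and the bits per selection under Wolpaw's commonly used ITR definition. The table does not state which ITR formula or trial duration the cited study used, so the choice of formula, the function names, and the `trial_seconds` parameter are illustrative assumptions, and the output is not expected to reproduce the reported 0.37 bit/s.

```python
import math


def chance_level(n_classes: int) -> float:
    """Chance accuracy for a balanced problem with n_classes classes."""
    if n_classes < 2:
        raise ValueError("n_classes must be at least 2")
    return 1.0 / n_classes


def wolpaw_bits_per_selection(accuracy: float, n_classes: int) -> float:
    """Bits per selection under Wolpaw's ITR definition.

    Assumes equiprobable classes and errors spread evenly over the
    remaining classes (an assumption of the formula, not of the table).
    """
    if n_classes < 2:
        raise ValueError("n_classes must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    p, n = accuracy, n_classes
    bits = math.log2(n)
    if p > 0.0:
        bits += p * math.log2(p)
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return bits


def itr_bits_per_second(accuracy: float, n_classes: int, trial_seconds: float) -> float:
    """ITR in bit/s; trial_seconds is a hypothetical per-selection duration."""
    if trial_seconds <= 0.0:
        raise ValueError("trial_seconds must be positive")
    return wolpaw_bits_per_selection(accuracy, n_classes) / trial_seconds


if __name__ == "__main__":
    # Seven imagined pitches (row 97): chance is 1/7, i.e. about 14%.
    print(f"chance (7 classes): {chance_level(7):.3f}")
    print(f"bits/selection at 35.7%: {wolpaw_bits_per_selection(0.357, 7):.3f}")
    # Binary and ternary beat-frequency decoding (row 92).
    print(f"margin over chance, binary 70%: {0.70 - chance_level(2):.3f}")
    print(f"margin over chance, ternary 56%: {0.56 - chance_level(3):.3f}")
```

Under these assumptions, a 7-class accuracy of 35.7% corresponds to roughly 0.2 bits per selection, and the measure is exactly zero at the chance level, which is why the accuracies in the table are best read against 1/N rather than in absolute terms.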

References

- Barrett, K.C.; Ashley, R.; Strait, D.L.; Kraus, N. Art and science: How musical training shapes the brain. Front. Psychol. 2013, 4, 713.

- Williamon, A. Musical Excellence: Strategies and Techniques to Enhance Performance; Oxford University Press: Oxford, UK, 2004.

- Bazanova, O.; Kondratenko, A.; Kondratenko, O.; Mernaya, E.; Zhimulev, E. New computer-based technology to teach peak performance in musicians. In Proceedings of the 2007 29th International Conference on Information Technology Interfaces, Cavtat, Croatia, 25–28 June 2007; pp. 39–44.

- Pop-Jordanova, N.; Bazanova, O.; Kondratenko, A.; Kondratenko, O.; Markovska-Simoska, S.; Mernaya, J. Simultaneous EEG and EMG biofeedback for peak performance in musicians. In Proceedings of the Inaugural Meeting of EPE Society of Applied Neuroscience (SAN) in Association with the EU Cooperation in Science and Technology (COST) B27; 2006; p. 23.

- Riquelme-Ros, J.V.; Rodríguez-Bermúdez, G.; Rodríguez-Rodríguez, I.; Rodríguez, J.V.; Molina-García-Pardo, J.M. On the better performance of pianists with motor imagery-based brain-computer interface systems. Sensors 2020, 20, 4452.

- Bhavsar, P.; Shah, P.; Sinha, S.; Kumar, D. Musical Neurofeedback Advancements, Feedback Modalities, and Applications: A Systematic Review. Appl. Psychophysiol. Biofeedback 2024, 49, 347–363.

- Sayal, A.; Direito, B.; Sousa, T.; Singer, N.; Castelo-Branco, M. Music in the loop: A systematic review of current neurofeedback methodologies using music. Front. Neurosci. 2025, 19, 1515377.

- Kawala-Sterniuk, A.; Browarska, N.; Al-Bakri, A.; Pelc, M.; Zygarlicki, J.; Sidikova, M.; Martinek, R.; Gorzelanczyk, E.J. Summary of over fifty years with brain-computer interfaces—A review. Brain Sci. 2021, 11, 43.

- Nicolas-Alonso, L.F.; Gomez-Gil, J. Brain computer interfaces, a review. Sensors 2012, 12, 1211–1279.

- Acquilino, A.; Scavone, G. Current state and future directions of technologies for music instrument pedagogy. Front. Psychol. 2022, 13, 835609.

- MakeMusic, Inc. MakeMusic. 2025. Available online: https://www.makemusic.com/ (accessed on 29 May 2025).

- Yousician Ltd. Yousician. 2025. Available online: https://yousician.com (accessed on 29 May 2025).

- Folgieri, R.; Lucchiari, C.; Gričar, S.; Baldigara, T.; Gil, M. Exploring the potential of BCI in education: An experiment in musical training. Information 2025, 16, 261.

- Mirdamadi, J.L.; Poorman, A.; Munter, G.; Jones, K.; Ting, L.H.; Borich, M.R.; Payne, A.M. Excellent test-retest reliability of perturbation-evoked cortical responses supports feasibility of the balance N1 as a clinical biomarker. J. Neurophysiol. 2025, 133, 987–1001.

- Dadfar, M.; Kukkar, K.K.; Parikh, P.J. Reduced parietal to frontal functional connectivity for dynamic balance in late middle-to-older adults. Exp. Brain Res. 2025, 243, 111.

- Jalilpour, S.; Müller-Putz, G. Balance perturbation and error processing elicit distinct brain dynamics. J. Neural Eng. 2023, 20, 026026.

- Jung, J.Y.; Kang, C.K.; Kim, Y.B. Postural supporting cervical traction workstation to improve resting state brain activity in digital device users: EEG study. Digit. Health 2024, 10, 20552076241282244.

- Chen, Y.C.; Tsai, Y.Y.; Huang, W.M.; Zhao, C.G.; Hwang, I.S. Cortical adaptations in regional activity and backbone network following short-term postural training with visual feedback for older adults. GeroScience 2025, 1–14.

- Solis-Escalante, T.; De Kam, D.; Weerdesteyn, V. Classification of rhythmic cortical activity elicited by whole-body balance perturbations suggests the cortical representation of direction-specific changes in postural stability. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 2566–2574.

- Gherman, D.E.; Klug, M.; Krol, L.R.; Zander, T.O. An investigation of a passive BCI’s performance for different body postures and presentation modalities. Biomed. Phys. Eng. Express 2025, 11, 025052.

- Oknina, L.; Strelnikova, E.; Lin, L.F.; Kashirina, M.; Slezkin, A.; Zakharov, V. Alterations in functional connectivity of the brain during postural balance maintenance with auditory stimuli: A stabilometry and electroencephalogram study. Biomed. Phys. Eng. Express 2025, 11, 035006.

- Dohata, M.; Kaneko, N.; Takahashi, R.; Suzuki, Y.; Nakazawa, K. Posture-Dependent Modulation of Interoceptive Processing in Young Male Participants: A Heartbeat-Evoked Potential Study. Eur. J. Neurosci. 2025, 61, e70021.

- Borra, D.; Mondini, V.; Magosso, E.; Müller-Putz, G.R. Decoding movement kinematics from EEG using an interpretable convolutional neural network. Comput. Biol. Med. 2023, 165, 107323.

- Besharat, A.; Samadzadehaghdam, N. Improving Upper Limb Movement Classification from EEG Signals Using Enhanced Regularized Correlation-Based Common Spatio-Spectral Patterns. IEEE Access 2025, 13, 71432–71446.

- Wang, P.; Li, Z.; Gong, P.; Zhou, Y.; Chen, F.; Zhang, D. MTRT: Motion trajectory reconstruction transformer for EEG-based BCI decoding. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 2349–2358.

- Jia, H.; Feng, F.; Caiafa, C.F.; Duan, F.; Zhang, Y.; Sun, Z.; Solé-Casals, J. Multi-class classification of upper limb movements with filter bank task-related component analysis. IEEE J. Biomed. Health Inform. 2023, 27, 3867–3877.

- Gao, Z.; Xu, B.; Wang, X.; Zhang, W.; Ping, J.; Li, H.; Song, A. Multilayer Brain Networks for Enhanced Decoding of Natural Hand Movements and Kinematic Parameters. IEEE Trans. Biomed. Eng. 2024, 72, 1708–1719.

- Niu, J.; Jiang, N. Pseudo-online detection and classification for upper-limb movements. J. Neural Eng. 2022, 19, 036042.

- Zolfaghari, S.; Rezaii, T.Y.; Meshgini, S.; Farzamnia, A.; Fan, L.C. Speed classification of upper limb movements through EEG signal for BCI application. IEEE Access 2021, 9, 114564–114573.

- Kumar, N.; Michmizos, K.P. A neurophysiologically interpretable deep neural network predicts complex movement components from brain activity. Sci. Rep. 2022, 12, 1101.

- Wang, J.; Bi, L.; Fei, W. EEG-based motor BCIs for upper limb movement: Current techniques and future insights. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 4413–4427.

- Hosseini, S.M.; Shalchyan, V. State-based decoding of continuous hand movements using EEG signals. IEEE Access 2023, 11, 42764–42778.

- Robinson, N.; Chester, T.W.J. Use of mobile EEG in decoding hand movement speed and position. IEEE Trans. Hum.-Mach. Syst. 2021, 51, 120–129.

- Wang, J.; Bi, L.; Fei, W.; Tian, K. EEG-based continuous hand movement decoding using improved center-out paradigm. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 2845–2855.

- Fei, W.; Bi, L.; Wang, J.; Xia, S.; Fan, X.; Guan, C. Effects of cognitive distraction on upper limb movement decoding from EEG signals. IEEE Trans. Biomed. Eng. 2022, 70, 166–174.

- Wei, Y.; Wang, X.; Luo, R.; Mai, X.; Li, S.; Meng, J. Decoding movement frequencies and limbs based on steady-state movement-related rhythms from noninvasive EEG. J. Neural Eng. 2023, 20, 066019.

- Falcon-Caro, A.; Ferreira, J.F.; Sanei, S. Cooperative Identification of Prolonged Motor Movement from EEG for BCI without Feedback. IEEE Access 2025, 13, 11765–11777.

- Bi, L.; Xia, S.; Fei, W. Hierarchical decoding model of upper limb movement intention from EEG signals based on attention state estimation. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 2008–2016.

- Asanza, V.; Peláez, E.; Loayza, F.; Lorente-Leyva, L.L.; Peluffo-Ordóñez, D.H. Identification of lower-limb motor tasks via brain–computer interfaces: A topical overview. Sensors 2022, 22, 2028.

- Yan, Y.; Li, J.; Yin, M. EEG-based recognition of hand movement and its parameter. J. Neural Eng. 2025, 22, 026006.

- Kobler, R.J.; Kolesnichenko, E.; Sburlea, A.I.; Müller-Putz, G.R. Distinct cortical networks for hand movement initiation and directional processing: An EEG study. NeuroImage 2020, 220, 117076.

- Körmendi, J.; Ferentzi, E.; Weiss, B.; Nagy, Z. Topography of movement-related delta and theta brain oscillations. Brain Topogr. 2021, 34, 608–617.

- Peng, B.; Bi, L.; Wang, Z.; Feleke, A.G.; Fei, W. Robust decoding of upper-limb movement direction under cognitive distraction with invariant patterns in embedding manifold. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 1344–1354.

- Khaliq Fard, M.; Fallah, A.; Maleki, A. Neural decoding of continuous upper limb movements: A meta-analysis. Disabil. Rehabil. Assist. Technol. 2022, 17, 731–737.

- Gaidai, R.; Goelz, C.; Mora, K.; Rudisch, J.; Reuter, E.M.; Godde, B.; Reinsberger, C.; Voelcker-Rehage, C.; Vieluf, S. Classification characteristics of fine motor experts based on electroencephalographic and force tracking data. Brain Res. 2022, 1792, 148001.

- Li, Y.; Gao, X.; Liu, H.; Gao, S. Classification of single-trial electroencephalogram during finger movement. IEEE Trans. Biomed. Eng. 2004, 51, 1019–1025.

- Nemes, Á.G.; Eigner, G.; Shi, P. Application of Deep Learning to Enhance Finger Movement Classification Accuracy from UHD-EEG Signals. IEEE Access 2024, 12, 139937–139945.

- Wenhao, H.; Lei, M.; Hashimoto, K.; Fukami, T. Classification of finger movement based on EEG phase using deep learning. In Proceedings of the 2022 Joint 12th International Conference on Soft Computing and Intelligent Systems and 23rd International Symposium on Advanced Intelligent Systems (SCIS&ISIS), Ise, Japan, 29 November–2 December 2022; pp. 1–4.

- Ma, Z.; Xu, M.; Wang, K.; Ming, D. Decoding of individual finger movement on one hand using ultra high-density EEG. In Proceedings of the 2022 16th ICME International Conference on Complex Medical Engineering (CME), Zhongshan, China, 4–6 November 2022; pp. 332–335.

- Sun, Q.; Merino, E.C.; Yang, L.; Van Hulle, M.M. Unraveling EEG correlates of unimanual finger movements: Insights from non-repetitive flexion and extension tasks. J. NeuroEng. Rehabil. 2024, 21, 228.

- Anam, K.; Bukhori, S.; Hanggara, F.; Pratama, M. Subject-independent Classification on Brain-Computer Interface using Autonomous Deep Learning for finger movement recognition. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Montreal, QC, Canada, 20–24 July 2020; pp. 447–450.

- Haddix, C.; Bates, M.; Garcia Pava, S.; Salmon Powell, E.; Sawaki, L.; Sunderam, S. Electroencephalogram Features Reflect Effort Corresponding to Graded Finger Extension: Implications for Hemiparetic Stroke. Biomed. Phys. Eng. Express 2025, 11, 025022.

- Tian, B.; Zhang, S.; Xue, D.; Chen, S.; Zhang, Y.; Peng, K.; Wang, D. Decoding intrinsic fluctuations of engagement from EEG signals during fingertip motor tasks. IEEE Trans. Neural Syst. Rehabil. Eng. 2025, 33, 1271–1283.

- Peng, C.; Peng, W.; Feng, W.; Zhang, Y.; Xiao, J.; Wang, D. EEG correlates of sustained attention variability during discrete multi-finger force control tasks. IEEE Trans. Haptics 2021, 14, 526–537.

- Todd, N.P.; Govender, S.; Hochstrasser, D.; Keller, P.E.; Colebatch, J.G. Distinct movement related changes in EEG and ECeG power during finger and foot movement. Neurosci. Lett. 2025, 853, 138207.

- Jounghani, A.R.; Backer, K.C.; Vahid, A.; Comstock, D.C.; Zamani, J.; Hosseini, H.; Balasubramaniam, R.; Bortfeld, H. Investigating the role of auditory cues in modulating motor timing: Insights from EEG and deep learning. Cereb. Cortex 2024, 34, bhae427.

- Nielsen, A.L.; Norup, M.; Bjørndal, J.R.; Wiegel, P.; Spedden, M.E.; Lundbye-Jensen, J. Increased functional and directed corticomuscular connectivity after dynamic motor practice but not isometric motor practice. J. Neurophysiol. 2025, 133, 930–943.

- Anusha, A.S.; Kumar G., P.; Ramakrishnan, A. Brain-scale theta band functional connectome as signature of slow breathing and breath-hold phases. Comput. Biol. Med. 2025, 184, 109435.

- Kumar, P.; Adarsh, A. Modulation of EEG by Slow-Symmetric Breathing incorporating Breath-Hold. IEEE Trans. Biomed. Eng. 2024, 72, 1387–1396.

- Watanabe, T.; Itagaki, A.; Hashizume, A.; Takahashi, A.; Ishizaka, R.; Ozaki, I. Observation of respiration-entrained brain oscillations with scalp EEG. Neurosci. Lett. 2023, 797, 137079.

- Herzog, M.; Sucec, J.; Jelinčić, V.; Van Diest, I.; Van den Bergh, O.; Chan, P.Y.S.; Davenport, P.; von Leupoldt, A. The test-retest reliability of the respiratory-related evoked potential. Biol. Psychol. 2021, 163, 108133.

- Morelli, M.S.; Vanello, N.; Callara, A.L.; Hartwig, V.; Maestri, M.; Bonanni, E.; Emdin, M.; Passino, C.; Giannoni, A. Breath-hold task induces temporal heterogeneity in electroencephalographic regional field power in healthy subjects. J. Appl. Physiol. 2021, 130, 298–307.

- Wang, Y.; Zhang, Y.; Zhang, Y.; Wang, Z.; Guo, W.; Zhang, Y.; Wang, Y.; Ge, Q.; Wang, D. Voluntary Respiration Control: Signature Analysis by EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 4624–4634.

- Navarro-Sune, X.; Raux, M.; Hudson, A.L.; Similowski, T.; Chavez, M. Cycle-frequency content EEG analysis improves the assessment of respiratory-related cortical activity. Physiol. Meas. 2024, 45, 095003.

- Hudson, A.L.; Wattiez, N.; Navarro-Sune, X.; Chavez, M.; Similowski, T. Combined head accelerometry and EEG improves the detection of respiratory-related cortical activity during inspiratory loading in healthy participants. Physiol. Rep. 2022, 10, e15383.

- Goheen, J.; Wolman, A.; Angeletti, L.L.; Wolff, A.; Anderson, J.A.; Northoff, G. Dynamic mechanisms that couple the brain and breathing to the external environment. Commun. Biol. 2024, 7, 938.

- Kæseler, R.L.; Johansson, T.W.; Struijk, L.N.A.; Jochumsen, M. Feature and classification analysis for detection and classification of tongue movements from single-trial pre-movement EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 678–687.

- Zero, E.; Bersani, C.; Sacile, R. Identification of brain electrical activity related to head yaw rotations. Sensors 2021, 21, 3345.

- Gulyás, D.; Jochumsen, M. Detection of Movement-Related Brain Activity Associated with Hand and Tongue Movements from Single-Trial Around-Ear EEG. Sensors 2024, 24, 6004.

- Meng, J.; Zhao, Y.; Wang, K.; Sun, J.; Yi, W.; Xu, F.; Xu, M.; Ming, D. Rhythmic temporal prediction enhances neural representations of movement intention for brain–computer interface. J. Neural Eng. 2023, 20, 066004.

- Zhang, Y.; Li, M.; Wang, H.; Zhang, M.; Xu, G. Preparatory movement state enhances premovement EEG representations for brain–computer interfaces. J. Neural Eng. 2024, 21, 036044.

- Bigand, F.; Bianco, R.; Abalde, S.F.; Nguyen, T.; Novembre, G. EEG of the Dancing Brain: Decoding Sensory, Motor, and Social Processes during Dyadic Dance. J. Neurosci. 2025, 45, e2372242025.

- Ody, E.; Kircher, T.; Straube, B.; He, Y. Pre-movement event-related potentials and multivariate pattern of EEG encode action outcome prediction. Hum. Brain Mapp. 2023, 44, 6198–6213.

- Janyalikit, T.; Ratanamahatana, C.A. Time series shapelet-based movement intention detection toward asynchronous BCI for stroke rehabilitation. IEEE Access 2022, 10, 41693–41707.

- Meng, J.; Li, X.; Li, S.; Fan, X.; Xu, M.; Ming, D. High-Frequency Power Reflects Dual Intentions of Time and Movement for Active Brain-Computer Interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2025, 33, 630–639.

- Zhang, D.; Yao, L.; Chen, K.; Wang, S.; Chang, X.; Liu, Y. Making sense of spatio-temporal preserving representations for EEG-based human intention recognition. IEEE Trans. Cybern. 2019, 50, 3033–3044.

- Derchi, C.; Mikulan, E.; Mazza, A.; Casarotto, S.; Comanducci, A.; Fecchio, M.; Navarro, J.; Devalle, G.; Massimini, M.; Sinigaglia, C. Distinguishing intentional from nonintentional actions through EEG and kinematic markers. Sci. Rep. 2023, 13, 8496.

- Gu, B.; Wang, K.; Chen, L.; He, J.; Zhang, D.; Xu, M.; Wang, Z.; Ming, D. Study of the correlation between the motor ability of the individual upper limbs and motor imagery induced neural activities. Neuroscience 2023, 530, 56–65.

- Zhang, M.; Wu, J.; Song, J.; Fu, R.; Ma, R.; Jiang, Y.C.; Chen, Y.F. Decoding coordinated directions of bimanual movements from EEG signals. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 31, 248–259.

- Tantawanich, P.; Phunruangsakao, C.; Izumi, S.I.; Hayashibe, M. A Systematic Review of Bimanual Motor Coordination in Brain-Computer Interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 33, 266–285.

- Wang, J.; Bi, L.; Fei, W.; Xu, X.; Liu, A.; Mo, L.; Feleke, A.G. Neural correlate and movement decoding of simultaneous-and-sequential bimanual movements using EEG signals. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 2087–2095.

- Li, X.; Mota, B.; Kondo, T.; Nasuto, S.; Hayashi, Y. EEG dynamical network analysis method reveals the neural signature of visual-motor coordination. PLoS ONE 2020, 15, e0231767.

- De Pretto, M.; Deiber, M.P.; James, C.E. Steady-state evoked potentials distinguish brain mechanisms of self-paced versus synchronization finger tapping. Hum. Mov. Sci. 2018, 61, 151–166.

- Noboa, M.d.L.; Kertész, C.; Honbolygó, F. Neural entrainment to the beat and working memory predict sensorimotor synchronization skills. Sci. Rep. 2025, 15, 10466.

- Mondok, C.; Wiener, M. A coupled oscillator model predicts the effect of neuromodulation and a novel human tempo matching bias. J. Neurophysiol. 2025, 133, 1607–1617.

- Nave, K.M.; Hannon, E.E.; Snyder, J.S. Steady state-evoked potentials of subjective beat perception in musical rhythms. Psychophysiology 2022, 59, e13963.

- Leske, S.; Endestad, T.; Volehaugen, V.; Foldal, M.D.; Blenkmann, A.O.; Solbakk, A.K.; Danielsen, A. Beta oscillations predict the envelope sharpness in a rhythmic beat sequence. Sci. Rep. 2025, 15, 3510.

- Comstock, D.C.; Balasubramaniam, R. Differential motor system entrainment to auditory and visual rhythms. J. Neurophysiol. 2022, 128, 326–335.

- Wang, X.; Zhou, C.; Jin, X. Resonance and beat perception of ballroom dancers: An EEG study. PLoS ONE 2024, 19, e0312302.

- Ross, J.M.; Comstock, D.C.; Iversen, J.R.; Makeig, S.; Balasubramaniam, R. Cortical mu rhythms during action and passive music listening. J. Neurophysiol. 2022, 127, 213–224.

- Lenc, T.; Lenoir, C.; Keller, P.E.; Polak, R.; Mulders, D.; Nozaradan, S. Measuring self-similarity in empirical signals to understand musical beat perception. Eur. J. Neurosci. 2025, 61, e16637.

- Pandey, P.; Ahmad, N.; Miyapuram, K.P.; Lomas, D. Predicting dominant beat frequency from brain responses while listening to music. In Proceedings of the 2021 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Houston, TX, USA, 9–12 December 2021; pp. 3058–3064.

- Cheng, T.H.Z.; Creel, S.C.; Iversen, J.R. How do you feel the rhythm: Dynamic motor-auditory interactions are involved in the imagination of hierarchical timing. J. Neurosci. 2022, 42, 500–512.

- Yoshimura, N.; Tanaka, T.; Inaba, Y. Estimation of Imagined Rhythms from EEG by Spatiotemporal Convolutional Neural Networks. In Proceedings of the 2023 IEEE Statistical Signal Processing Workshop (SSP), Hanoi, Vietnam, 2–5 July 2023; pp. 690–694.

- Keitel, A.; Pelofi, C.; Guan, X.; Watson, E.; Wight, L.; Allen, S.; Mencke, I.; Keitel, C.; Rimmele, J. Cortical and behavioral tracking of rhythm in music: Effects of pitch predictability, enjoyment, and expertise. Ann. N. Y. Acad. Sci. 2025, 1546, 120–135.

- Di Liberto, G.M.; Marion, G.; Shamma, S.A. Accurate decoding of imagined and heard melodies. Front. Neurosci. 2021, 15, 673401.

- Chung, M.; Kim, T.; Jeong, E.; Chung, C.K.; Kim, J.S.; Kwon, O.S.; Kim, S.P. Decoding Imagined Musical Pitch From Human Scalp Electroencephalograms. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 2154–2163.

- Galeano-Otálvaro, J.D.; Martorell, J.; Meyer, L.; Titone, L. Neural encoding of melodic expectations in music across EEG frequency bands. Eur. J. Neurosci. 2024, 60, 6734–6749. [Google Scholar] [CrossRef]

- Tang, T.; Samaha, J.; Peters, M.A. Behavioral and neural measures of confidence using a novel auditory pitch identification task. PLoS ONE 2024, 19, e0299784. [Google Scholar] [CrossRef]

- Alameda, C.; Sanabria, D.; Ciria, L.F. The brain in flow: A systematic review on the neural basis of the flow state. Cortex 2022, 154, 348–364. [Google Scholar] [CrossRef]

- Irshad, M.T.; Li, F.; Nisar, M.A.; Huang, X.; Buss, M.; Kloep, L.; Peifer, C.; Kozusznik, B.; Pollak, A.; Pyszka, A.; et al. Wearable-based human flow experience recognition enhanced by transfer learning methods using emotion data. Comput. Biol. Med. 2023, 166, 107489. [Google Scholar] [CrossRef]

- Rácz, M.; Becske, M.; Magyaródi, T.; Kitta, G.; Szuromi, M.; Márton, G. Physiological assessment of the psychological flow state using wearable devices. Sci. Rep. 2025, 15, 11839. [Google Scholar] [CrossRef]

- Lorenz, A.; Mercier, M.; Trébuchon, A.; Bartolomei, F.; Schön, D.; Morillon, B. Corollary discharge signals during production are domain general: An intracerebral EEG case study with a professional musician. Cortex 2025, 186, 11–23. [Google Scholar] [CrossRef]

- Uehara, K.; Yasuhara, M.; Koguchi, J.; Oku, T.; Shiotani, S.; Morise, M.; Furuya, S. Brain network flexibility as a predictor of skilled musical performance. Cereb. Cortex 2023, 33, 10492–10503. [Google Scholar] [CrossRef]

- Ahmed, Y.; Ferguson-Pell, M.; Adams, K.; Ríos Rincón, A. EEG-Based Engagement Monitoring in Cognitive Games. Sensors 2025, 25, 2072. [Google Scholar] [CrossRef]

- Wu, S.F.; Lu, Y.L.; Lien, C.J. Measuring effects of technological interactivity levels on flow with electroencephalogram. IEEE Access 2021, 9, 85813–85822. [Google Scholar] [CrossRef]

- Hang, Y.; Unenbat, B.; Tang, S.; Wang, F.; Lin, B.; Zhang, D. Exploring the neural correlates of Flow experience with multifaceted tasks and a single-Channel Prefrontal EEG Recording. Sensors 2024, 24, 1894. [Google Scholar] [CrossRef] [PubMed]

- van Schie, H.T.; Iotchev, I.B.; Compen, F.R. Free will strikes back: Steady-state movement-related cortical potentials are modulated by cognitive control. Conscious. Cogn. 2022, 104, 103382. [Google Scholar] [CrossRef] [PubMed]

| Scope Inclusion Parameters | Exclusion Conditions |
|---|---|
| Journal articles. | Uses invasive or non-EEG extracranial neuroimaging technology, such as functional near-infrared spectroscopy (fNIRS) or functional magnetic resonance imaging (fMRI). |
| Published in 2020 or later. | Uses external stimulus-dependent passive BCI strategies, such as P300 oddball or visually evoked potentials. |
| Reports on non-invasive EEG-based BCI systems. | Analyzes only motor imagery tasks, in which users perform a mental action without executing the corresponding physical motion. |
| Presents experimental results with human participants (). | Studies facial expression or emotion recognition (a criterion specific to the facial movements search). |
| Evaluates a parameter that is relevant to at least one TEMP feature. | Does not report empirical results, or was not tested with human-generated datasets. |
| Includes technical performance metrics (e.g., latency, accuracy, detection reliability). | |

| Category | PubMed Results | IEEE Xplore Results | Included in Survey |
|---|---|---|---|
| Posture and Balance | 92 | 202 | 4 |
| Movement and Muscle Activity | 327 | 2564 | 22 |
| Fine Motor Movement and Dexterity | 1195 | 320 | 13 |
| Breathing Control | 35 | 258 | 9 |
| Head and Facial Movement | 143 | 243 | 3 |
| Movement Intention | 535 | 547 | 8 |
| Coordination and Movement Fluidity | 352 | 36 | 7 |
| Tempo Processing | 85 | 41 | 3 |
| Pitch Recognition | 15 | 20 | 4 |
| Cognitive Engagement | 597 | 534 | 9 |
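
The screening funnel in the table above can be summarized with a few lines of arithmetic. The following is a minimal sketch that sums the raw search hits and the included studies per category; the variable names are illustrative, and the totals are plain column sums, so they do not account for records that may appear in both databases or in more than one category.

```python
# Rows copied from the table: category -> (PubMed hits, IEEE Xplore hits, included studies).
search_results = {
    "Posture and Balance": (92, 202, 4),
    "Movement and Muscle Activity": (327, 2564, 22),
    "Fine Motor Movement and Dexterity": (1195, 320, 13),
    "Breathing Control": (35, 258, 9),
    "Head and Facial Movement": (143, 243, 3),
    "Movement Intention": (535, 547, 8),
    "Coordination and Movement Fluidity": (352, 36, 7),
    "Tempo Processing": (85, 41, 3),
    "Pitch Recognition": (15, 20, 4),
    "Cognitive Engagement": (597, 534, 9),
}

# Raw column sums (not deduplicated across databases or categories).
total_pubmed = sum(row[0] for row in search_results.values())
total_ieee = sum(row[1] for row in search_results.values())
total_hits = total_pubmed + total_ieee
total_included = sum(row[2] for row in search_results.values())

# Share of raw hits retained per category, in percent.
inclusion_rate = {
    name: 100.0 * included / (pubmed + ieee)
    for name, (pubmed, ieee, included) in search_results.items()
}

for name, rate in sorted(inclusion_rate.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {rate:.2f}% of raw hits included")
print(f"Total: {total_included} included out of {total_hits} raw hits")
```

Read this way, the table shows how strongly the inclusion criteria filter each search: categories with few raw hits, such as Pitch Recognition, retain a much larger share than broad categories such as Movement and Muscle Activity.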

| TEMP Feature | Feasibility Tier | Evidence-Grounded Prototyping Strategy | Key Bottlenecks |
|---|---|---|---|
| Posture and balance | (i) Technically viable | 8–32 ch wearable EEG targeting perturbation-evoked N1 and fronto/parietal modulations | Movement-related EEG artifacts; reliable calibration in standing/playing positions |
| Gross arm/hand trajectory | (i) Technically viable | CSP → CNN on ERD + MRCPs | ∼150 ms latency still perceptible; angular, not mm precision |
| Finger individuation and force | (ii) Within experimental reach | Ultra-high-density EEG (256+) or ear-EEG; SVM/Riemann classifier flags wrong-finger presses | UHD caps cumbersome; overlap of finger maps lowers SNR |
| Breathing control | (ii) Within experimental reach | 32-ch EEG -band connectivity distinguishes inhale/exhale/hold after resistive-load calibration | Wind-instrument mouthpiece artifacts; elusive fine pressure gradations |
| Bimanual coordination | (ii) Within experimental reach | ERD and reconfigurations of alpha- and gamma-band visual–motor networks | Requires context-appropriate research |
| Tempo processing | (i) Technically viable | Beat-locked SSEPs (1–5 Hz) + SMA ERD track internal vs. external tempo | Sub-20 ms micro-timing below EEG resolution; expressive rubato confounds error metric |
| Movement intention | (iii) Aspirational | Detect BP/MRCP 150–300 ms pre-onset; dual threshold for unplanned twitches | Requires large labeled datasets; day-to-day variability |
| Facial/head muscle tension | (iii) Aspirational | Requires further research | Strong EMG/blink contamination; no robust decoding of subtle embouchure |
| Pitch recognition | (ii) Within experimental reach | Left–right hemispheric differences in the beta and low-gamma range | No study combining with actual instrument playing |
| Engagement and flow state | (ii) Within experimental reach | Wearable frontal EEG → small CNN fine-tuned via transfer learning; uses moderate plus high coherence | Signatures idiosyncratic; single-channel headsets give only coarse signal |
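
To make the tempo-processing row more concrete, the sketch below illustrates the general idea behind reading out a beat-locked steady-state response in the 1–5 Hz range: measure the spectral amplitude at a set of candidate beat frequencies and pick the strongest one. It is only an illustration under stated assumptions, not the method of any reviewed study. The signal is synthetic (a 2 Hz sinusoid buried in Gaussian noise standing in for a single EEG channel), and the function names, sampling rate, and candidate grid are all hypothetical choices.

```python
import math
import random


def amplitude_at(signal, fs, freq):
    """Single-bin DFT amplitude of `signal` at `freq` Hz, given sampling rate `fs` in Hz."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    return 2 * math.hypot(re, im) / n


def dominant_beat_frequency(signal, fs, candidates):
    """Return the candidate frequency with the largest spectral amplitude."""
    return max(candidates, key=lambda f: amplitude_at(signal, fs, f))


# Synthetic stand-in for one EEG channel (assumption): a weak 2 Hz beat-locked
# component plus stronger Gaussian noise, 20 s sampled at 100 Hz.
random.seed(0)
FS = 100          # sampling rate in Hz (hypothetical)
DURATION_S = 20   # recording length in seconds (hypothetical)
BEAT_HZ = 2.0     # simulated beat frequency, i.e., 120 beats per minute
signal = [
    0.5 * math.sin(2 * math.pi * BEAT_HZ * i / FS) + random.gauss(0.0, 1.0)
    for i in range(FS * DURATION_S)
]

# Candidate beat frequencies from 1 Hz to 5 Hz in 0.25 Hz steps.
candidates = [1.0 + 0.25 * k for k in range(17)]
estimated = dominant_beat_frequency(signal, FS, candidates)
print(f"Estimated beat frequency: {estimated} Hz ({estimated * 60:.0f} BPM)")
```

A frequency-domain readout like this only resolves the tempo over a window of several seconds, which is consistent with the bottleneck noted in the table: sub-20 ms micro-timing deviations are not accessible through this kind of measure.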

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Perrotta, A.; Estima, J.; Cardoso, J.C.S.; Roque, L.; Pais-Vieira, M.; Pais-Vieira, C. Technology-Enhanced Musical Practice Using Brain–Computer Interfaces: A Topical Review. Technologies 2025, 13, 365. https://doi.org/10.3390/technologies13080365
