A Design-Based Research Approach to Streamline the Integration of High-Tech Assistive Technologies in Speech and Language Therapy

Abstract

1. Introduction

2. Related Works

2.1. Technical, Ethical and Cultural Issues That Hinder the Acceptance and Adoption of High-Tech ATs by Speech and Language Therapists

- Technical expertise—therapists may lack the skills needed to program robots, raising concerns about their use in therapy;

- Software complexity—programming tools for SARs are often too complex or overly limited;

- Robot behavior—the robot must adapt to children’s abilities, requiring customization based on individual needs;

- User-friendliness—the ease of setup and interface design are critical for adoption in clinical settings.

- Accessible training in AI basics—many therapists lack the background in AI or programming needed to effectively utilize or troubleshoot ConvAI systems. Continuous technical support and directions on how to use ChatGPT for speech therapy are essential to facilitate this transition [39].

- Time constraints—according to [12], 32.4% of professionals expressed interest in adopting new AI tools but mentioned limited time as a barrier. Some therapists (e.g., participants S27, S41) openly admitted that they tend to avoid using technology.

- Data privacy and compliance—therapists expressed concerns about compliance with privacy laws such as HIPAA and FERPA, especially when using AI tools for transcription or data processing (e.g., participant S10 in [12]).

- Rapid technological change—the speed at which AI technologies evolve makes it difficult for practitioners to stay up-to-date. One participant (S17) cited “keeping up with technology” as a top challenge.

- System compatibility and latency—the integration of AI systems into existing platforms can be technically demanding. Authors in [40] describe in detail the limitations of integrating ChatGPT into the Pepper robot. Compatibility issues (Pepper uses Python 2.7; ChatGPT APIs require Python 3.x) and dependency on stable internet connections often lead to latency, which disrupts smooth and real-time interaction.

2.2. Findings from Case Studies Demonstrating How High-Tech ATs Have Been Integrated into SLT

2.3. Existing Visual-Based Programming Platforms That Explore Similar Design Solutions for Intuitive and Modular AT Interfaces

2.4. Identified Issues and Areas for Improvement

- There is a need for an ergonomic platform that supports therapists in using various ATs. This platform should serve as a general hub, allowing therapists to seamlessly integrate ATs.

- The platform should offer a GUI that allows therapists to personalize the robot’s receptive and expressive language, as well as interactions, to align with each child’s therapeutic goals and developmental stage.

- The platform should provide secure data management, including storing and analyzing progress through quantitative performance metrics and session summaries.

- The platform should be tailored to SLT, which would save time for therapists in their intervention preparation. Visual programming platforms are generally universal in design and do not typically focus on specific therapies. They often lack pre-designed content, such as a content library with therapeutic interactive scenarios enhanced by ATs.

- There is a need for robot cooperation, which arises when a robot lacks certain functionalities, such as QR code reading or Automatic Speech Recognition (ASR). This calls for shared repositories and direct or message-based input/output channels to facilitate communication between robots and support such cooperation.

- There is a need for a remote mode in the platform for monitoring and controlling robots for children receiving therapy at home.

- There is a need for providing technical support for therapists, and training on how to integrate ATs through tutorials and video lessons. Therapists also need special training to use block-based GUIs, even though they are often intuitive.

3. Materials and Methods

3.1. Methodology

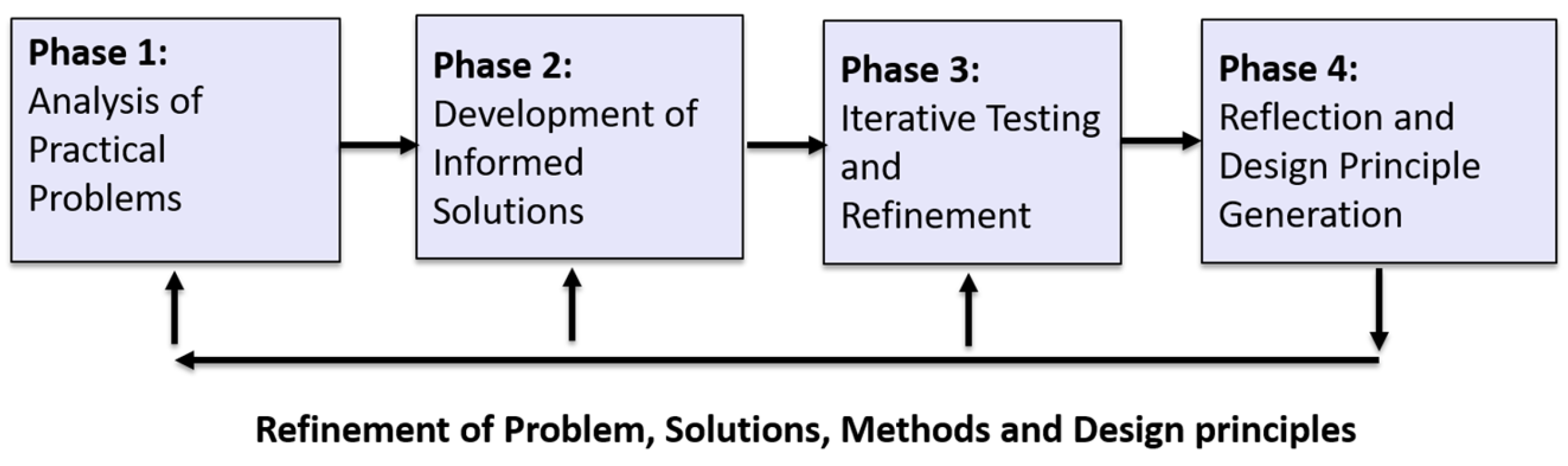

3.1.1. Developing and Accessing ATLog Therapy Solutions Using a Cyclical Approach

3.1.2. Participants and Setup for the Qualitative Assessment of ATLog

3.1.3. Instruments for the Qualitative Assessment of ATLog

| Questionnaire | Cronbach’s Alpha | N of Items | Interpretation |

|---|---|---|---|

| SUS | 0.798 | 10 | Reliable |

| Design structure (communications and effectiveness in creating interactive scenarios in ATLog) | 0.849 | 10 | Reliable |

| PU subscale | 0.906 | 6 | Reliable |

| PEU subscale | 0.922 | 6 | Reliable |
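
For readers less familiar with the reliability coefficient reported in the table above, the following minimal sketch shows how Cronbach’s alpha is computed from a respondents-by-items score matrix, using alpha = k/(k − 1) · (1 − Σ item variances / variance of total scores). The function name `cronbach_alpha` and the `demo` responses are hypothetical illustrations; they are not the study data and not necessarily the tooling used by the authors.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point Likert answers (4 respondents x 3 items), NOT study data.
demo = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
]
alpha = cronbach_alpha(demo)
print(round(alpha, 3))
```

Values close to 1 indicate that the items vary together, which is the sense in which the questionnaires above are labelled reliable.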

| No. | Question | Mean | SD | Interpretation |

|---|---|---|---|---|

| 1 | I think that I would like to use this system frequently. | 4.1 | 0.8 | Agree |

| 2 | I found the system unnecessarily complex. | 1.4 | 0.7 | Disagree |

| 3 | I thought the system was easy to use. | 4.3 | 0.8 | Agree |

| 4 | I think that I would need the support of a technical person to be able to use this system. | 2.4 | 1.2 | Disagree |

| 5 | I found the various functions in this system were well integrated. | 4.3 | 0.7 | Agree |

| 6 | I thought there was too much inconsistency in this system. | 1.4 | 0.8 | Disagree |

| 7 | I would imagine that most people would learn how to use this system very quickly. | 4.4 | 0.6 | Agree |

| 8 | I found the system very cumbersome to use. | 1.4 | 0.8 | Disagree |

| 9 | I felt very confident using the system. | 3.6 | 1.3 | Agree |

| 10 | I needed to learn a lot of things before I could get going with this system. | 2.8 | 1.2 | Disagree |
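
As an illustration of how the ten item ratings above combine into a single usability score, the sketch below applies the standard SUS scoring rule: odd-numbered items contribute (score − 1), even-numbered items contribute (5 − score), and the sum is multiplied by 2.5. Because the rule is linear, applying it to the item means from the table gives an approximate mean SUS score of about 78; this is derived from the rounded means only and may differ slightly from a score computed per participant. The function name `sus_score` is an assumption made for this example.

```python
def sus_score(item_scores):
    """Standard SUS scoring for ten items rated 1-5 (item 1 first)."""
    if len(item_scores) != 10:
        raise ValueError("SUS needs exactly ten item scores")
    total = 0.0
    for number, score in enumerate(item_scores, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (score - 1) if number % 2 == 1 else (5 - score)
    return total * 2.5


# Item means copied from the table above (items 1 to 10).
item_means = [4.1, 1.4, 4.3, 2.4, 4.3, 1.4, 4.4, 1.4, 3.6, 2.8]
overall = sus_score(item_means)
print(round(overall, 2))
```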

| No. | Question | Mean | SD | Interpretation |

|---|---|---|---|---|

| 11 | I would use ATLog if I had access to video tutorials and a methodological guide. | 4.2 | 1.1 | Agree |

| 12 | The platform is adequately structured for creating interactive scenarios with AT. | 4.4 | 0.7 | Agree |

| 13 | Creating scenarios in the Platform saves time. | 4.3 | 0.8 | Agree |

| 14 | The platform is suitable for managing SAR in real conditions. | 4.3 | 0.8 | Agree |

| 15 | The platform allows the child to take initiative (through QR code, text, voice). | 4.8 | 0.4 | Agree |

| 16 | The therapist has control over the AT in the platform during the intervention (stop button, voice command, and touch sensor). | 4.9 | 0.4 | Agree |

| 17 | The delay in responses from the text-generative AI is acceptable. | 3.7 | 0.8 | Agree |

| 18 | Each of the graphic blocks in the platform contains information about its therapeutic application. | 4.6 | 0.6 | Agree |

| 19 | The graphic blocks in the platform are informative enough for their use. | 4.4 | 0.9 | Agree |

| 20 | The platform supports the creation of personalized interactive scenarios with AT. | 4.7 | 0.6 | Agree |

3.2. Materials

3.2.1. Hardware

Social Robots

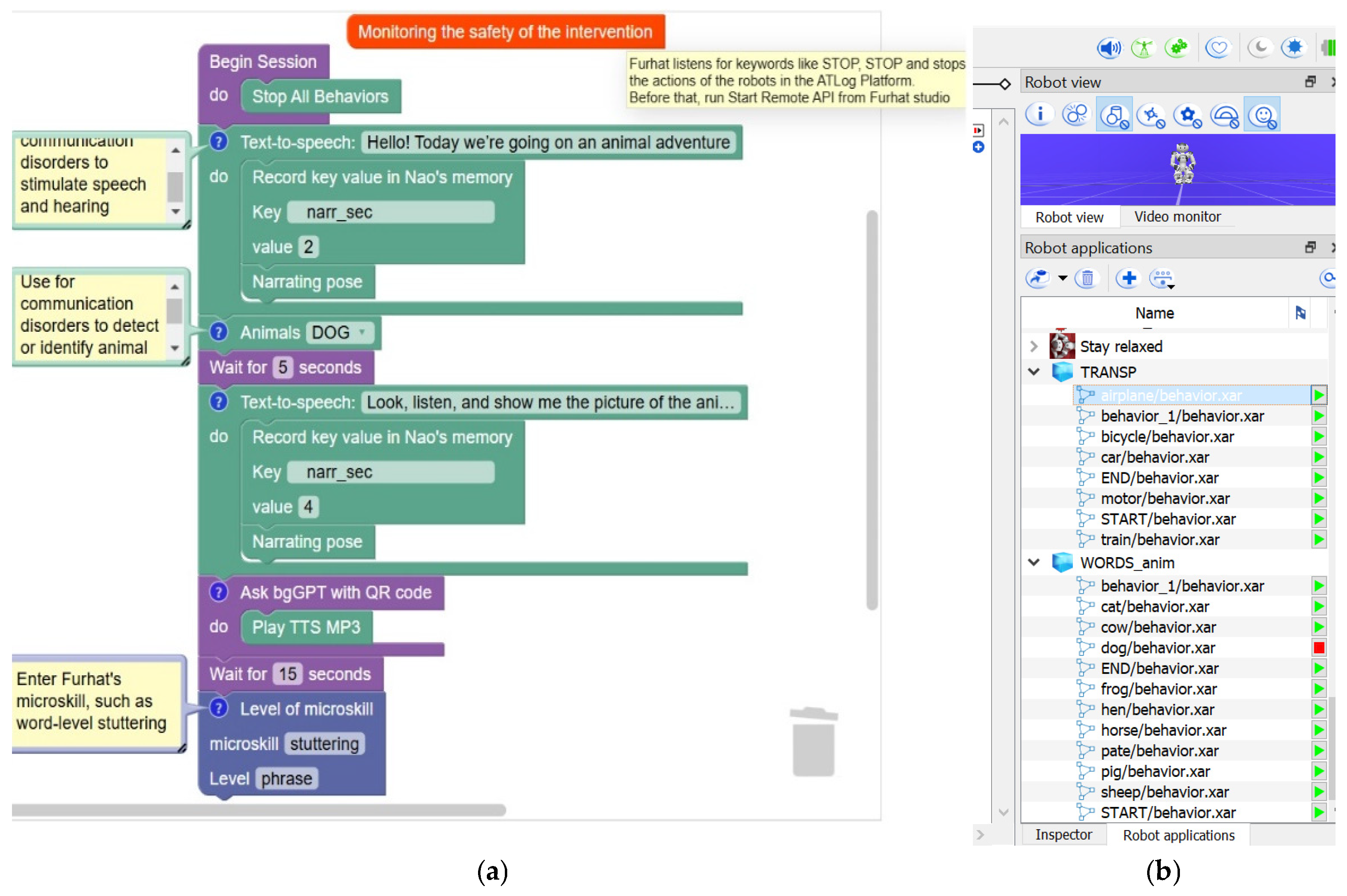

- Nao [83]: A small humanoid robot for multimodal interaction through speech, body language, barcode reading and tactile sensors. Nao is one of the most widely used SARs in therapy for individuals with developmental, language and communication disorders [47]. For that reason, the ATLog platform contains more predefined Blockly blocks for Nao compared to other ATs.

- Furhat [84]: One of the most advanced conversational social robots currently available, with an expressive face. Its human presence tracking, eye contact via an eye-tracking camera, intuitive face-to-face interaction, and customizable characters and voices contribute to human-like interactions. The touchscreen interface, enhanced with images and text, further enriches communication.

- Emo, the second version (v2) of our emotionally expressive robot (v1 is described in [85]): The robot has a 25 cm head that displays emotional states using emoticon-like facial features, with a dynamically changing mouth, eyes and eyebrows. Emo has six degrees of freedom to replicate human head movements. It can track a person in front of it to detect attendance, read QR codes, play audio files, and access cloud services for NLP and NLG. These capabilities make Emo suitable for emotional teaching and multi-robot cooperation in SLT.

- Double3 [86]: A telepresence robot designed to provide remote interaction with a dynamic presence. It is also considered a social robot because it enables assistive interactions with both the therapists and other ATs during telepractice. Double3 has a high-definition camera, two-way audio and video capabilities, the ability to navigate physical spaces, and an interactive display to present text, images and videos. Double3 can scan remote QR codes, thus acting as a mediator in communications during telepractice.

VR Devices

- Headsets: Meta Quest 2, 3, 3S and Pro. Headsets run on the “Meta Quest OS” operating system, which is based on “Android” and can be used independently or connected to a computer via the “Meta Quest Link” program. The Meta Quest Pro headset was used during the experiments due to its eye-tracking.

- Camera for 360° Virtual Tours: XPhase Pro S2 with 25 sensors, each with 8 megapixels, providing a total resolution of 200 megapixels. It supports 360° tours, hot spots and side-by-side view, with 16-bit lossless format output.

Computing Devices

3.2.2. Software

- Choregraphe [87]: A multi-platform desktop tool that allows users to create animations, behaviors and dialogs for the Nao robot. Its block-based visual interface is used to create and customize personalized behaviors, which are then uploaded to the robot for subsequent use through the ATLog frontend.

- NAOqi 2.8 [88]: NAOqi is the operating system for the Nao robot. Through its remote APIs, developers can interact with the robot from external IDEs over a network. These APIs allow users to access features such as speech recognition, motion control, vision processing and sensor data. Customized Python scripts in the ATLog backend enable these functionalities for subsequent use through the ATLog frontend.

- Furhat Kotlin SDK [89]: A software development kit designed for building interactive applications for the Furhat robot using Kotlin. This SDK is used to program the robot’s real-time interactions, including speech, gestures, and dialog, as well as a tablet-based interface to Furhat.

- Furhat Remote APIs [90]: The remote APIs enable developers to interact with the robot from external IDEs over a network. Using these APIs, the robot’s movements, facial expressions and voice can be controlled remotely through the ATLog frontend.

- Double3 Developer SDK [91]: The D3 APIs, commands and events enable developers to use Double3 in developer mode, in which Python scripts run directly on the Double3 operating system. The D3 software includes a built-in publish/subscribe system, allowing Double3 to send event messages or respond according to its programmed skills.

- Emo software: The robot’s control system is based on two Raspberry Pi 5 units and several Arduino-type microcontrollers. Running on Linux, it supports any Linux-compatible programming language.

- VR software for interactive scenarios in a web environment: HTML, CSS and JavaScript are used for structuring the content, styling and layout. To enable virtual reality within the browser, WebXR technology [92] is used to manage VR devices and handle controller inputs through an API that triggers events and interactions within the VR environment. Additionally, the Three.js library, built on top of WebGL [93], accesses the underlying graphics hardware to render 3D content directly in the browser.

- 3DVista [94]: Software for 360° Virtual Tours enables the creation of interactive environments through integrated elements such as clickable hotspots, navigation arrows, and objects that are activated based on predefined therapeutic goals and outcomes. The virtual tours include components designed to support the development of spatial awareness, orientation and logical thinking. Specific interactive features for children are included to facilitate navigation within environments and engage them through age-appropriate, game-based scenarios tailored to their individual needs.

- Express.js server [98]: A Node.js web application framework for developing the client–server model. Express.js is used to build the backend in ATLog, handling logic, communication and data processing.

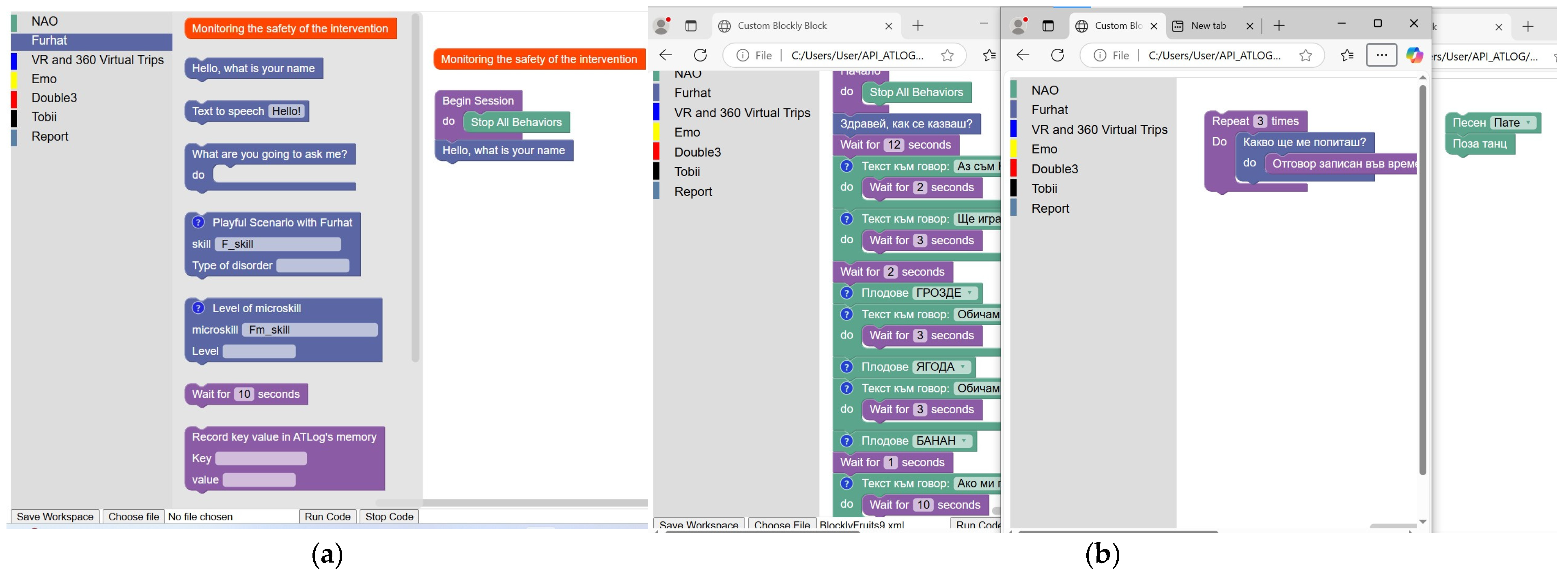

- Visual programming interface, built on Google Blockly [99]: A block-based programming interface that allows users to design graphical flows of blocks without programming skills.

- The internal memory, built on a Node.js object repository, stores and retrieves key-value pairs and acts as a temporary data storage for short-term operations.

- SQLite database [100]: persistent data storage for long-term data management to track and access the progress of the individual SLT interventions.
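
The division of labor between the short-term internal memory and the persistent SQLite database can be illustrated with a minimal sketch. ATLog implements both on Node.js; the Python version below only mirrors the idea, and every name in it (`Repository`, `open_progress_db`, `log_session`, `progress_for`, the `session_log` table and its columns) is a hypothetical choice for this example rather than the platform’s actual schema.

```python
import sqlite3


class Repository:
    """Short-lived key-value store, e.g. for the latest 'answer' or 'QR' value."""

    def __init__(self):
        self._data = {}

    def save(self, key, value):
        self._data[key] = value

    def read(self, key, default=None):
        return self._data.get(key, default)


def open_progress_db(path=":memory:"):
    """Open (or create) the persistent store; ':memory:' keeps the demo self-contained."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS session_log ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "child_code TEXT NOT NULL, "
        "scenario TEXT NOT NULL, "
        "score REAL, "
        "created_at TEXT DEFAULT CURRENT_TIMESTAMP)"
    )
    return conn


def log_session(conn, child_code, scenario, score):
    # Parameterized query: values are never interpolated into the SQL text.
    with conn:
        conn.execute(
            "INSERT INTO session_log (child_code, scenario, score) VALUES (?, ?, ?)",
            (child_code, scenario, score),
        )


def progress_for(conn, child_code):
    rows = conn.execute(
        "SELECT scenario, score FROM session_log WHERE child_code = ? ORDER BY id",
        (child_code,),
    )
    return rows.fetchall()


repo = Repository()
repo.save("answer", "The apple is red.")
conn = open_progress_db()
log_session(conn, "C01", "FruitsBlock", 0.8)
print(repo.read("answer"), progress_for(conn, "C01"))
```

Values in the repository disappear when the process ends, whereas rows written to the database survive across sessions, which is what makes longitudinal progress tracking possible.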

3.3. ATLog Platform: Design and Features

3.3.1. Platform Architecture

3.3.2. Communication Protocols

- In Express server: standard HTTP protocols, along with child processes to access skills of SARs and VR scenarios. RESTful HTTP requests (GET, POST) are sent to cloud services for NLP and NLG. WebSocket protocol enables bidirectional communications in VR scenarios.

- In Blockly: HTTP GET and POST requests are used to send commands to Express server or external web resources.

- For file transfers: Files are securely transferred between the platform and the Nao robot via SCP (Secure Copy Protocol) over the LAN, using SSH (Secure Shell) for encryption and authentication.

- For remote script execution on Nao and Furhat: TCP sockets are used for communication from the Express backend to NAOqi (on Nao) over IP, while for Furhat we use the Python SDK wrapper, which connects to the Furhat API via WebSocket.

- For remote script execution on Double3: Python scripts are executed remotely through an SSH client (PuTTY). Developer mode and additional administrative rights are required to activate the camera and subscribe to Double3 events, such as reading QR codes (DRCamera.tags) using the command “camera.tagDetector.enable”.

- For MQTT connections to the Emo robot: Scripts on the Emo robot are controlled and executed remotely using the MQTT protocol, within a PY 3.x virtual environment with the paho-mqtt library. On the Raspberry Pi, a script runs as a communication bridge between the Raspberry Pi and the ATLog server.

- For database writes: SQL statements are executed via the SQLite3 package for Node.js.
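
To make the HTTP GET channel between the frontend and the Express server concrete, the sketch below assembles request URLs following the patterns listed in Appendix A (for example, the VolumeBlock and FurhatTTS rows). In ATLog the requests are issued from JavaScript inside the Blockly blocks; this Python version is only an illustration. The helper name `build_request_url` and the IP addresses are hypothetical, while the endpoint names, parameter names and port 3000 are taken from the appendix.

```python
from urllib.parse import quote, urlencode


def build_request_url(server, endpoint, params, port=3000):
    """Build a GET URL for an Express endpoint, percent-encoding all values."""
    query = urlencode(params, quote_via=quote)
    return f"http://{server}:{port}/{endpoint.lstrip('/')}?{query}"


# Hypothetical addresses; the parameter layout follows the VolumeBlock row.
volume_url = build_request_url(
    "192.168.0.10",
    "/nao1",
    {"IP": "192.168.0.20", "volume": 60, "pyScript": "volume"},
)

# Free text must be encoded so that spaces do not break the query string.
tts_url = build_request_url(
    "192.168.0.10",
    "/furhatRem",
    {"IP": "192.168.0.30", "scriptName": "fur_tts.py", "text": "Hello there"},
)
print(volume_url)
print(tts_url)
```

Encoding the values centrally avoids malformed requests when a therapist types punctuation or non-Latin characters into a text field.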

3.3.3. Network Solutions for the Integration of Block-Based Visual Programming with Express Server

- On the frontend (Blockly): ATLog sends HTTP GET and POST requests to the server to initiate the skills of ATs, retrieve data or send data to the internal ATLog repository. To ensure the correct execution of the next block in the scenario flow, specific handling of the backend response is mandatory for certain blocks.

- On the backend (Express server): ATLog uses standard TCP or HTTP protocols, along with child processes and several middleware, to access the skills of SARs and VR scenarios. It sends RESTful HTTP requests (GET, POST) to cloud services for NLP and NLG. Backend endpoints return responses to the frontend as needed.

- The VR endpoints on the backend run on a separate port, enabling bidirectional communication between the client and server using the socket.io library. Socket.io builds on the WebSocket protocol and organizes communication into separate spaces (namespaces and rooms).

- VR streaming is provided through the Meta Quest casting service [101], allowing the VR headset screen to be shared on an external monitor for observation by the therapist or other children.

3.3.4. Cooperation of ATs

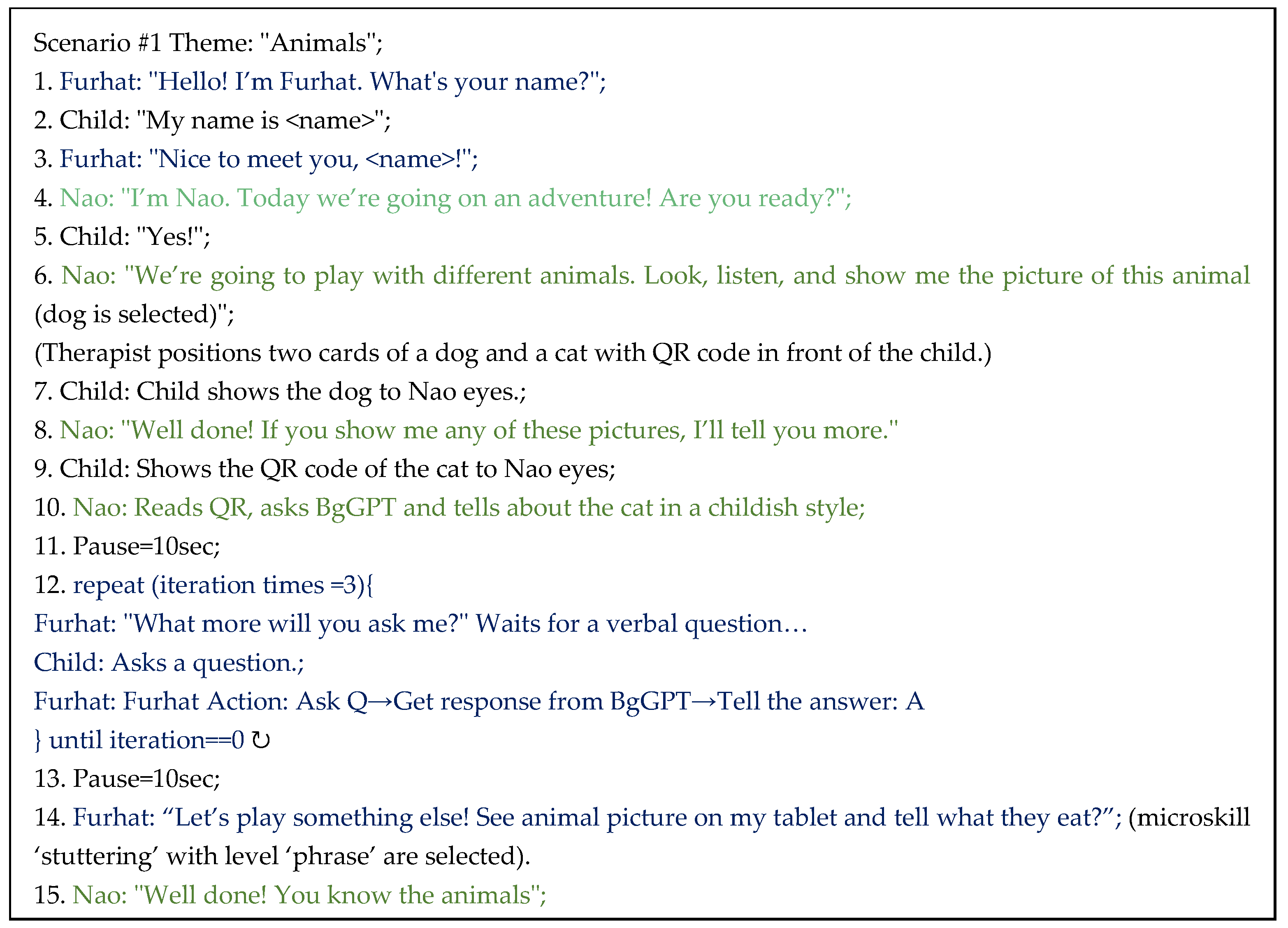

3.4. Design Process of Interactive Scenario

3.5. Ethics

4. Implementation and Results

4.1. Implementation of ATLog Platform

4.2. Technical Challenges

- Because different Python versions are in use, a virtual environment must be activated through the ‘venv’ endpoint: the child processes for text translation, text generation or speech synthesis require PY 3.x.

- We faced challenges with consecutive block execution in Blockly. While a child process was still running, the block’s JavaScript run code had already exited, causing the next block to start early. The solution was to use an async function with await new Promise(resolve), which either waits for a server response indicating that the child process has exited with code 0, or repeatedly checks the status of the child process by sending requests to a /monitor endpoint. The second approach works better for blocks with more complex run code. When Nao blocks need to wait for Furhat child processes to finish, an async function repeatedly checks the status of the Python process by sending requests to the /monitor/pyFurhat endpoint. The server response indicates whether the Python process is still running or has exited. Once the process is no longer running, the function exits the loop and the Nao blocks can start. Similarly, the /monitor/pyNao1 and /monitor/pyNao2 endpoints allow client-side functions to determine the status of the Nao child processes when Furhat blocks wait for them to exit.

- Another challenge was that, by default, Express sets a request body size limit of 100 KB when using the express.json() middleware. To handle larger payloads, such as saving responses from ChatGPT in KEY=answer, the limit had to be increased to at least 500 KB by configuring the middleware as express.json({limit: '500kb'}).

- We faced challenges with delays in the API response time of BgGPT. To address this, we experimented with response streaming using Server-Sent Events (SSEs) over an HTTP connection. We improved responsiveness by delivering tokens in real time through an Express endpoint, which requests a streamed response from the BgGPT API and pipes the received chunks directly to the SARs’ TTS services.

- From the pilot experiment, in which we tested interactive scenarios for stuttering using Furhat, we found that Furhat’s embedded ML-based speech recognition occasionally misinterpreted stuttered speech, sometimes recognizing it as fluent and at other times returning ‘NOMATCH’ (not fluent). The current solution relies on the SLT practitioner verbally instructing Furhat by saying “fluent” or “not fluent” to classify the stuttered speech correctly.

- We faced challenges with object selection and tracking the direction of the child’s hand in VR Bingo (Figure 2b). In the previous DBR iteration, the objects (fruits) were placed on a round table (Figure 5a) and the children had difficulty changing perspective to select objects hidden behind others. The solution was to stretch the round table along the X-axis into an ellipse and rearrange the objects to prevent overlap from the initial perspective (Figure 5b). Additionally, the invisible boxes representing the “hitbox” were initially too small and were adjusted to match the height of the objects (fruits), ensuring easier selection. The white ray used to track the direction of the child’s hand was replaced with a longer and thicker red cylinder, significantly improving tracking accuracy.
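
The polling solution described in the list above (waiting until a child process has exited before the next block starts) can be sketched as follows. The platform implements it in JavaScript with an async function and await new Promise(resolve); the Python asyncio version below only mirrors the control flow. The names `wait_until_finished` and `make_fake_monitor`, as well as the interval and timeout values, are assumptions for illustration, and the simulated monitor stands in for a real request to an endpoint such as /monitor/pyFurhat.

```python
import asyncio


async def wait_until_finished(check_running, interval=0.5, timeout=60.0):
    """Poll check_running() until it reports False or the timeout expires.

    check_running is a hypothetical async callable standing in for a request
    to a monitor endpoint; it returns True while the child process is running.
    Returns the number of polls that were needed.
    """
    loop = asyncio.get_running_loop()
    deadline = loop.time() + timeout
    polls = 0
    while True:
        polls += 1
        if not await check_running():
            return polls  # the process has exited, the next block may start
        if loop.time() >= deadline:
            raise TimeoutError("child process still running after timeout")
        await asyncio.sleep(interval)  # yield instead of busy-waiting


def make_fake_monitor(busy_polls):
    """Simulated monitor: reports 'running' for the first busy_polls calls."""
    state = {"left": busy_polls}

    async def check_running():
        if state["left"] > 0:
            state["left"] -= 1
            return True
        return False

    return check_running


polls_needed = asyncio.run(
    wait_until_finished(make_fake_monitor(3), interval=0.01)
)
print(polls_needed)
```

A timeout is included here as a defensive design choice so that a crashed or hung child process cannot block the scenario indefinitely; whether the platform uses one is not stated in the text.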

4.3. Example Scenario, Development Steps and Runtime

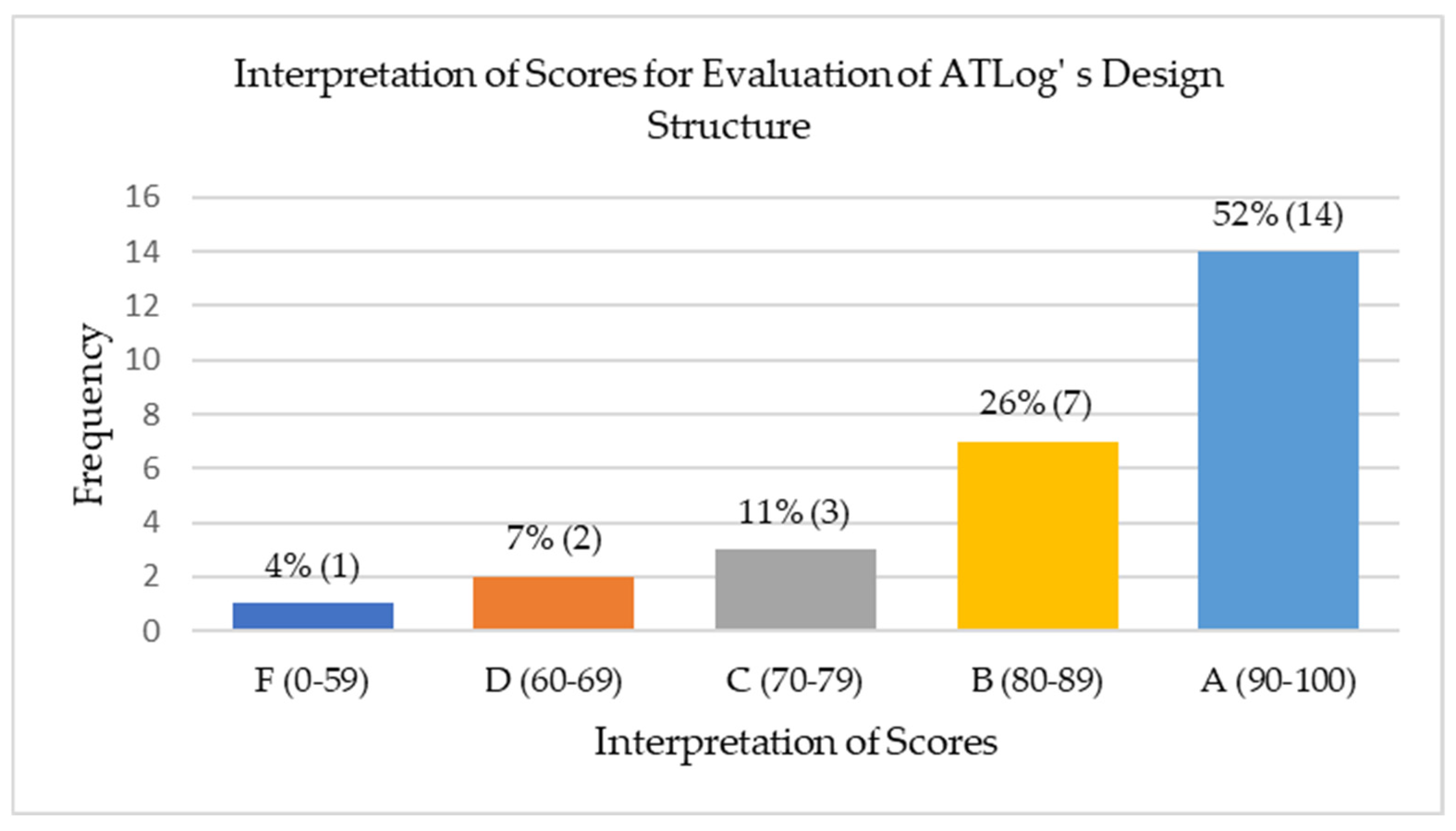

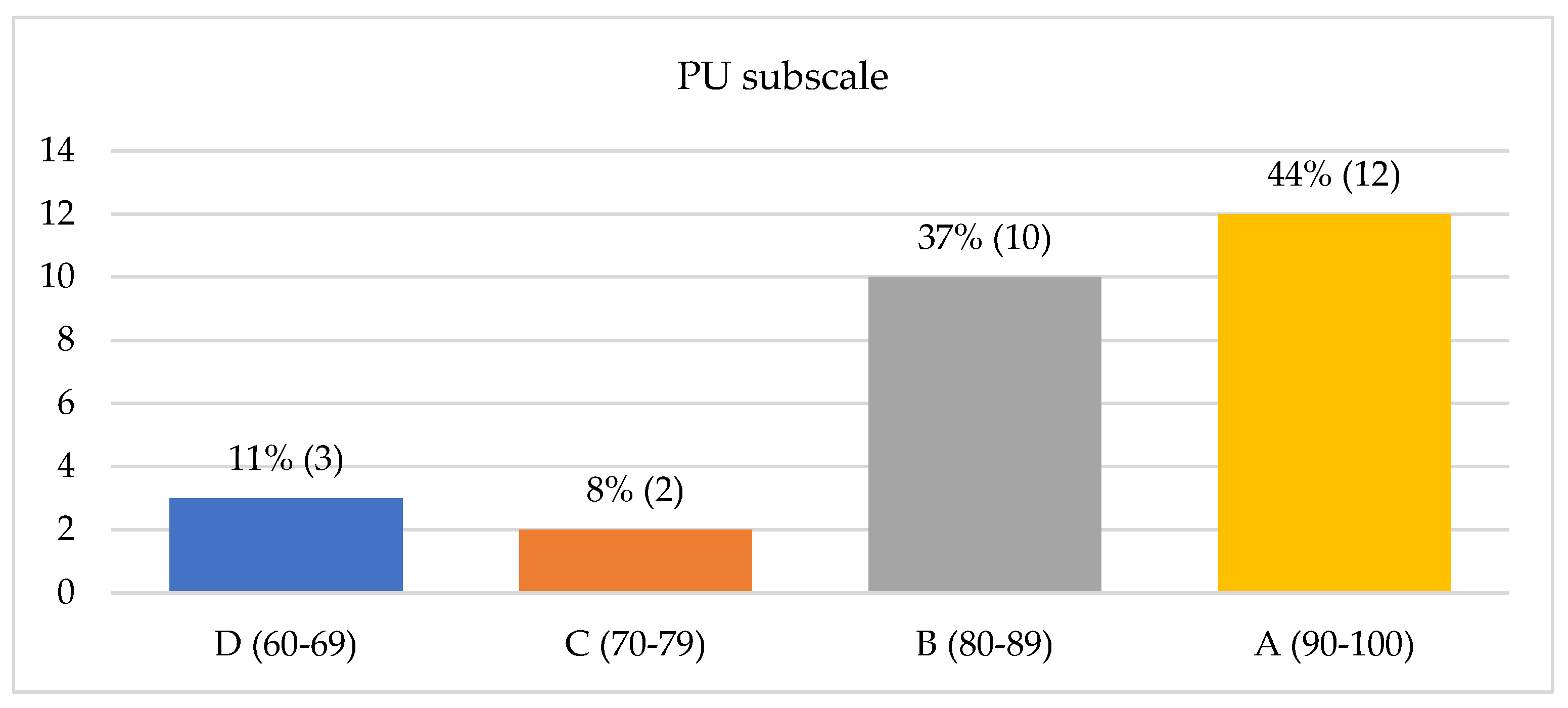

4.4. Results from the TAM and the SUS

5. Discussion

5.1. Technological Innovation of ATLog Platform

5.2. Human–Computer Interaction (HCI)

5.3. Social and Economic Impact

5.4. User Behavior Analysis

5.4.1. SUS Q1–10

5.4.2. Q11–20

5.4.3. TAM Q1–12

5.4.4. Correlation Analysis Between the SUS, the Design Structure Evaluation of the ATLog Platform, and the TAM

6. Conclusions

6.1. Limitations

6.2. Future Directions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AAC | Augmentative and Alternative Communication |

| ATs | Assistive Technologies |

| CDs | Communication Disorders |

| ConvAI | Conversational AI |

| DBR | Design-Based Research |

| GPT | Generative Pre-trained Transformer |

| IDE | Integrated Development Environment |

| LLMs | Large Language Models |

| LRMs | Large Reasoning Models |

| NLG | Natural Language Generation |

| NLP | Natural Language Processing |

| PEU | Perceived Ease of Use |

| PU | Perceived Usefulness |

| PY | Python |

| Q&A | AI questions and answers |

| RQ | Research Question |

| SARs | Socially Assistive Robots |

| SCP | Secure Copy Protocol |

| SLT | Speech and Language Therapy |

| SUS | System Usability Scale |

| TAM | Technology Acceptance Model |

| VR | Virtual Reality |

Appendix A

| Frontend Block Name | Description | Microskill/File Uploaded on the Robot | Input Parameters | Backend Endpoint | Fetching Endpoint(s) with Parameters |

|---|---|---|---|---|---|

| StartBlock | Initializes the session. Connected endpoints in doCode of the block: StopAll_BehaviorsBlock and AutonomousModeBlock. | No | N/A | N/A | N/A |

| Autonomous ModeBlock | Enables or disables autonomous behavior on Nao robot. | No | ipNao, STATE (FieldCheckbox: TRUE, FALSE) | /nao1 | http://serverIP:3000/nao1?IP=${ipNao}&state=${STATE}&pyScript=auton |

| StopAll_BehaviorsBlock | Stops ongoing behaviors on Nao robot. | No | ipNao | /nao1 | http://serverIP:3000/nao1?IP=${ipNao}&pyScript=stopBehav2 |

| VolumeBlock | By entering an integer from 0 to 100, the Nao robot’s volume level can be adjusted. | No | ipNao, VOLUME | /nao1 | http://serverIP:3000/nao1?IP=${ipNao}&volume=${VOLUME}&pyScript=volume |

| HelloBlock | Nao animation—greeting while in a seated position. | Yes | ipNao | /nao2 | http://ipServer:3000/${endpoint}?IP=${ipNao}&BN=User/hello-6f0483/behavior_1 |

| Read_qr_code Block | Initiates QR code reading in front of Nao’s eyes. Reads from Nao’s memory. Saves in ATLog repository with a key = QR. Connected endpoints in doCode of the block: NaoMemoryRead and RepositorySaveBlock. | Yes | ipNao KEY | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=User/qr-19f134/behavior_1 Connected endpoints: http://serverIP:3000/monitor/pyNao2 |

| LingSounds Block | Nao says the Ling sound, defined by the input parameter, and waits to recognize the QR code shown to it. | Yes | ipNao, LING_SOUND: A, I, U, M, S, Sh | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=ling-2b453a/${LING_SOUND} |

| TransportationBlock | Nao says the name of a means of transport, plays its sound, and waits to recognize the QR code shown to it. | Yes | ipNao, TRANSPORT: Bicycle, Car, Train, Motor, Airplane, etc. | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=transports-c868b7/${TRANSPORT} |

| AnimalsBlock | Nao says the animal’s name, plays its sound, and waits to recognize the QR code shown to it. | Yes | ipNao, ANIMAL: Cat, Dog, Cow, Sheep, Frog, Horse, Monkey, Pate, Pig, etc. | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=animals-486c82/${ANIMAL} |

| FruitsBlock | Nao says the fruit’s name and waits to recognize the QR code shown to it. | Yes | ipNao, FRUIT: strawberry, bannana, apple, etc. | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=fruits-f0887f/${FRUIT} |

| Vegetable Block | Nao says the vegetable’s name and waits to recognize the QR code shown to it. | Yes | ipNao, VEGETABLE: cucumber, cabbage, etc. | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=vegetables-7e6223/${VEGETABLE} |

| ShapesBlock | Nao says the shape’s name and waits to recognize the QR code shown to it. | Yes | ipNao, SHAPE: Triangle, Rectangle, Circle, Square, Star, Moon, etc. | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=shapes-cc46c2/${SHAPE} |

| ColorsBlock | Nao says the color’s name and waits to recognize the QR code shown to it. | Yes | ipNao, COLOR: Green, Orange, Red, Blue, Pink, White, Yellow, etc. | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=colors-dd3988/${COLOR} |

| MusicalInstr Block | Nao plays music with animation for an instrument (defined by the input parameter). | Yes | ipNao, INSTRUMENT: Trumpet, Guitar, Maracas, Piano, Drums, Violin, etc. | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=instruments-78257e/${INSTRUMENT} |

| EmotionsBlock | Nao performs an animation for the emotion defined by the input parameter. | Yes | ipNao, EMOTION: sad, happy, scared, tired, neutral | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=emotions-f6ef56/${EMOTION} |

| FairytaleBlock | Nao narrates a fairy tale, defined by the input parameter, and its mp3 is uploaded on Nao folder: home/nao/fairs/. Connected in sequence blocks: NarratingBlock. | Yes | ipNao, FAIRY_TALE_NAME: MuriBird, CatMum, 3cats, smallCat, 3catsMum, etc. | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=story-afb24d/${FAIRY_TALE_NAME} |

| StoryBlock | Nao tells a story whose scenes are ordered in time, using three or more flashcards (the story is defined by the input parameter). | Yes | ipNao, STORY_NAME: tomato, cake, sleep, cat, etc. | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=[story_nina-97b2d5,story_emi-e0be5f,story_cat-7f9f13, story_mimisleep-afcea3] |

| SongBlock | Nao sings a song (defined by the input parameter), and its mp3 is uploaded on Nao folder: home/nao/songs/. Connected in sequence blocks: DanceBlock. | Yes | ipNao, SONG_NAME: song1, song2, song3, song4, song5, etc. | /nao1 | http://serverIP:3000/${endpoint}?IP=${ipNao}&filepath=${filepath}&filename=${SONG_NAME}&pyScript=play_mp3 |

| MarketPlay Block | Nao simulates market-related interactions with the child using 3D objects embedded with barcodes (real or toys), such as toothbrush, cash desk, payment card, etc. | Yes | ipNao, MarketItem: Juice, Salad, Bathroom, Teeth, etc. | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=market-6f6441/${MarketItem} |

| TouchSensor Block | Nao detects a touch on its tactile sensors and plays the corresponding mp3 for the touched body_part, uploaded on the robot in folder home/nao/body/. | Yes | ipNao, SENSOR: Head_Front, RArm, LArm, LFoot/Bumper, RFoot/Bumper | /nao1 | http://serverIP:3000/${endpoint}?IP=${ipNao}&touch=${SENSOR}&pyScript=touchBodyPart |

| Head_tactile_sensorBlock | Activates the tactile sensor on Nao’s head to stop any currently running microskill. Connected in sequence block: StopAll_BehaviorsBlock. | No | ipNao, SENSOR: Head_Front, Head_Middle, Head_Rear | /nao1 | http://serverIP:3000/${endpoint}?IP=${ipNao}&bodyPart=${SENSOR}&pyScript=touchHead |

| NaoMemory ReadBlock | Reads a value from Nao’s memory key. | No | ipNao, KEY | /nao1 | http://serverIP:3000/${endpoint}?IP=${ipNao}&key=${KEY}&pyScript=ALmemory_get |

| NaoMemory SaveBlock | Writes a {key: value} to Nao’s memory. | No | ipNao, KEY, VALUE | /nao1 | http://serverIP:3000/${endpoint}?IP=${ipNao}&key=${KEY}&value=${VALUE}&pyScript=ALmemory_set |

| DeclareEvent Block | Declares an event name in Nao’s memory. | No | ipNao, EVENT | /nao1 | http://serverIP:3000/${endpoint}?IP=${ipNao}&name=${EVENT}&pyScript=ALmemory_decl |

| RaiseEvent Block | Raises a declared event in Nao’s memory. | No | ipNao, EVENT | /nao1 | http://serverIP:3000/${endpoint}?IP=${ipNao}&name=${EVENT}&pyScript=ALmemory_raise |

| PlayAudio Block | Plays audio files uploaded to the robot in Nao folder home/nao/audio/. | Yes | ipNao, path, file_name | /nao1 | http://serverIP:3000/nao1?IP=${robot_ip}&filepath=${path}&filename=${file_name}&pyScript=play_mp3 |

| UploadFile Block | Uploads a file to the Nao robot in folder /home/nao/tts/ with the name tts.mp3. | No | ipNao, passwordNao, file_path, file_name | /naoUpload | http://serverIP:3000/naoUpload?IP=${ipNao}&password=${passwordNao}${file_path}${file_name} |

| Text_to_speechBlock | Nao plays the input text as an audio file. The text is converted to speech by GoogleTTS or MSAzureTTS. The generated tts.mp3 file is uploaded to the Nao folder /home/nao/tts/. | No | ipNao, passwordNao, text, filename: tts.mp3, filepath on Nao: /home/nao/tts/ | /nao3 | http://serverIP:3000/${endpoint}?IP=${ipNao}&filepath=${path}&filename=${file_name}?TEXT=${text} Connected endpoints: http://serverIP:3000/venv; http://serverIP:3000/monitor/pyNao1 http://serverIP:3000/naoUpload?IP=${ipNao}&password=${passwordNao}${file_path}${file_name} http://serverIP:3000/nao1?IP=${robot_ip}&filepath=${path}&filename=${file_name}&pyScript=play_mp3 |

| PlaytextBlock | Nao plays the current answer in the ATLog repository as an audio file. The answer is converted to tts.mp3 and uploaded to the Nao directory /home/nao/tts/. | No | ipNao, passwordNao, file_name, file_path, KEY=answer | /nao3 | http://serverIP:3000/${endpoint}?IP=${ipNao}&filepath=${path}&filename=${file_name}?TEXT=${answer} Connected endpoints: http://serverIP:3000/repository/answer http://serverIP:3000/venv; http://serverIP:3000/monitor/pyNao1 http://serverIP:3000/naoUpload?IP=${ipNao}&password=${passwordNao}${file_path}${file_name} http://serverIP:3000/nao1?IP=${robot_ip}&filepath=${path}&filename=${file_name}&pyScript=play_mp3 |

| QRquestion Block | Nao waits for a QR code to be shown, decodes it to generate a text-based question, sends the question to bgGPT, and stores the received answer in the repository. It then plays the answer as an audio file. Connected endpoints in doCode of the block: PlaytextBlock. | No | ipNao, Question, Context, KEY: answer, VALUE | /nao1 /nao2 /nao3 /bgGPT /repository /monitor/pyNao1 /monitor/pyNao2 | Connected endpoints: http://serverIP:3000/nao2?IP=${ipNao}&BN=qr-19f134 http://serverIP:3000/monitor/pyNao2 http://serverIP:3000/nao1?IP=${ipNao}&key=QR&pyScript=ALmemory_get2 http://serverIP:3000/venv; http://serverIP:3000/monitor/pyNao1 http://serverIP:3000/bgGPT?question=${question}&context=${context} http://serverIP:3000/repository?key=${KEY}&value=${VALUE} |

| TextQuestion Block | Retrieves the questions and context entered in the input fields, sends them to bgGPT, stores the generated answer in the repository, and Nao plays the current response. Connected endpoints in doCode of the block: PlaytextBlock. | No | ipNao, Question, Context, KEY: answer, VALUE | /nao1 /nao3 /bgGPT /repository | Connected endpoints: http://serverIP:3000/venv http://serverIP:3000/bgGPT?question=${question}&context=${context} http://serverIP:3000/monitor/pyNao1 http://serverIP:3000/repository?key=${KEY}&value=${VALUE} |

| Narrating Block | Nao animation—for narrating pose. Connected in sequence blocks: NaoMemorySave with {key= narr_sec, value= VALUE}. | Yes | ipNao VALUE (Seconds for narrating) | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=User/slow_narrating-0de14c/behavior_1 |

| Thinking Block | Nao animation—thinking pose in a seated position. | Yes | ipNao | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=User/thinking-b54157/behavior_1 |

| Narrating_sit Block | Nao animation—pose for storytelling in a seated position. | Yes | ipNao | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=User/narr_sit-a1f157/behavior_1 |

| Dance Block | Nao animation—performing dance movements. | Yes | ipNao | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&BN=User/dancing-e1h145/behavior_1 |

| CustomBehavior Block | Runs a custom skill prepared in Choregraphe and uploaded to Nao. | Yes | ipNao, BEHAVIOR_NAME (Unique Behavior ID on Nao) | /nao2 | http://serverIP:3000/${endpoint}?IP=${ipNao}&?id=${BEHAVIOR_NAME} |

| CustomRemote Block | Runs a custom python script by remote NAOqi. | No | ipNao, PY_SCRIPT (Unique py script name) | /nao1 | http://serverIP:3000/${endpoint}?IP=${ipNao}&${endpoint}id=${PY_SCRIPT} |

| Frontend Block Name | Description | Microskill/File Uploaded on the Robot | Input Parameters | Backend Endpoint | Fetching Endpoint(s) with Parameters |

|---|---|---|---|---|---|

| Monitoring SafetyBlock | Furhat listens for keywords like ‘STOP’, and stops the actions of the integrated ATs in the current session. | No | ipFurhat | /furhatRem | http://serverIP:3000/furhatRem?IP=${ipFurhat}&scriptName=fur_stop.py Connected endpoints: http://serverIP:3000/venv http://serverIP:3000/furhat?IP=${ipFurhat}&?id=RemoteAPI http://serverIP:3000/stop-server |

| CustomSkill Block | Runs a custom skill prepared in KotlinSSDK and uploaded to Furhat. | Yes | ipFurhat, SKILL_NAME | /furhat | http://serverIP:3000/${endpoint}?IP=${ipFurhat}&?id=${SKILL_NAME} |

| FurhatTTS Block | Specifies the text to vocalize (TTS). | No | ipFurhat, Text to Vocalize | /furhatRem | http://serverIP:3000/${endpoint}?IP=${ipFurhat}&scriptName=fur_tts.py&text=${text} Connected endpoints: http://serverIP:3000/venv http://serverIP:3000/${endpoint}?IP=${ipFurhat}&?id=RemoteAPI |

| FurhatAsk NameBlock | Asks the user their name and saves the response in the internal repository. | No | ipFurhat, KEY: name | /furhatRem | http://serverIP:3000/${endpoint}?IP=${ipFurhat}&scriptName=fur_name.py Connected endpoints: http://serverIP:3000/venv; http://serverIP:3000/monitor/pyFurhat http://serverIP:3000/${endpoint}?IP=${ipFurhat}&?id=RemoteAPI http://serverIP:3000/repository?key=${KEY} |

| FurhatSpeak AnswerBlock | Furhat speaks the answer stored in the internal repository. | No | ipFurhat, KEY: answer | /furhatRem | http://serverIP:3000/${endpoint}?IP=${ipFurhat}&scriptName=furhat_rem_textFur.py Connected endpoints: http://serverIP:3000/venv http://serverIP:3000/${endpoint}?IP=${ipFurhat}&?id=RemoteAPI http://serverIP:3000/repository?key=${KEY} |

| FurhatQuestionBlock | Listens to a question, sends the question to bgGPT, and stores the answer in the repository. Connected endpoints in doCode of the block: FurhatSpeakAnswerBlock. | No | ipFurhat, Question, Context, KEY: answer, VALUE | /furhatRem | http://serverIP:3000/${endpoint}?IP=${ipFurhat}&scriptName=furhat_rem_question.py Connected endpoints: http://serverIP:3000/venv; http://serverIP:3000/monitor/pyFurhat http://serverIP:3000/${endpoint}?IP=${ipFurhat}&?id=RemoteAPI http://serverIP:3000/bgGPT?question=${question}&context=${context} http://serverIP:3000/repository?key=${KEY}&value=${VALUE} |

| FurhatRepeat Block | Listens to a spoken phrase and attempts to repeat it, unless a ‘NOMATCH’ response is returned. | No | ipFurhat, text_ intent | /furhatRem | http://serverIP:3000/${endpoint}?IP=${ipFurhat}&scriptName=fur_understand.py Connected endpoints: http://serverIP:3000/venv http://serverIP:3000/${endpoint}?IP=${ipFurhat}&scriptName=fur_tts.py&text=${text} |

| Frontend Block Name | Description | Microskill/File Uploaded on the Robot | Input Parameters | Backend Endpoint | Fetching Endpoint(s) with Parameters |

|---|---|---|---|---|---|

| Start_EMO_mqtt_session1 | Establishes MQTT session1 (via Python paho-mqtt bridge). | remote_paho_mqtt.py | ipEmo, Port, Username, Password (PUP) | /emo1 | http://serverIP:3000//${endpoint}?IP=${ipEmo}&PUP=${PUP} Connected endpoints: http://serverIP:3000/venv |

| Start_EMO_mqtt_session2 | Establishes an MQTT session2 (via MQTT net library). | Yes | ipEmo, Port, Username, (PU), TOPIC, file_name | /emo2 | http://serverIP:3000//${endpoint}?IP=${ipEmo}&PU=${PU}&topic=${TOPIC}&file=${file_name} |

| ReadQRcode | Initiates QR code reading in front of EMO. Saves in ATLog repository with a key = QR. Connected in sequence blocks: RepositorySaveBlock. | Yes | ipEmo, Port, Username, Password (PUP), KEY | /emo1 | http://serverIP:3000/${endpoint}?IP=${ipEmo}&PUP=${PUP}&script=qr_reader_atlog.py Connected endpoints: http://serverIP:3000/venv |

| UploadFile Block | Uploads a file after serialization to Emo robot with topic = ‘upload’. | | ipEmo, Port, Username, (PU), TOPIC, data | /emoUpload | http://serverIP:3000/${endpoint}?IP=${ipEmo}&PU=${PU}&topic=${TOPIC}&serialization=${data} Connected endpoints: http://serverIP:3000/emo2 |

| EmoPlay AudioBlock | Emo plays an audio file uploaded to the Emo folder /home/emo/audio/; topic = ‘audios’. | Yes | ipEmo, Port, Username, (PU), TOPIC, filename | /emoPlay | http://serverIP:3000/${endpoint}?IP=${ipEmo}&PU=${PU}&topic=${TOPIC}&filename=${name} Connected endpoints: http://serverIP:3000/emo2 |

| EmoChat TextBlock | Emo plays the current BgGPT answer stored in the internal repository as an audio file. The answer is converted to tts.mp3 and uploaded to the Emo folder /home/emo/tts/; the topic is ‘answer’. | Yes | ipEmo, Port, Username, (PU), TOPIC, filename /home/emo/tts/, KEY | /emoChat | http://serverIP:3000/${endpoint}?IP=${ipEmo}&PU=${PU}&topic=${TOPIC}&filename=${file_name}?TEXT=${answer} Connected endpoints: http://serverIP:3000/repository/answer http://serverIP:3000/venv http://serverIP:3000/emoUpload; http://serverIP:3000/emoPlay |

| EmoQRquestionBlock | Waits for a QR code, decodes it to prepare a question, sends the question to bgGPT, and stores the answer in the repository. Emo plays the current answer. Connected endpoints in doCode of the block: EmoPlayAudioBlock | No | ipEmo, Question, Context, KEY: answer, VALUE | /qrEmo | http://serverIP:3000/${endpoint}?IP=${ipEmo}&PUP=${PUP} Connected endpoints: http://serverIP:3000/emo1 http://serverIP:3000/venv http://serverIP:3000/emo1?IP=${ipEmo}&pyScript=qr_reader_atlog.py http://serverIP:3000/bgGPT?question=${question}&context=${context} http://serverIP:3000/repository?key=${KEY}&value=${VALUE} http://serverIP:3000/emo2/emoUpload http://serverIP:3000/emo2/emoPlay |

| Start_D3_session | Opens an SSH session to a Double3 (D3) robot and runs PY scripts that interact with the Double3 Developer SDK and D3 API. | Yes | ipD3, Port, Username, Password (PUP), script_name | /double3 | http://serverIP:3000/double3?IP=${ipD3}&PUP=${PUP}&script=alive.py |

| D3QRBlock | Opens an SSH session to D3 and runs a PY script that reads QR codes via the D3 robot. | Yes | ipD3, Port, Username, Password (PUP), script_name | /double3 | http://serverIP:3000/double3?IP=${ipD3}&PUP=${PUP}&script=readQR.py |

| D3 ControlBlock | Opens an SSH session to a D3 and runs a PY script with parameters. | Yes | ipD3, Port, Username, Password (PUP), script_name, PARAM | /double3 | http://serverIP:3000/double3?IP=${ipD3}&PUP=${PUP}&script=${script_name}&param=${PARAM} |

| Frontend Block Name | Description | Microskill/File Uploaded on the Robot | Input Parameters | Backend Endpoint | Fetching Endpoint(s) with Parameters |

|---|---|---|---|---|---|

| Stop Code (button) | Sends a request to shut down the server by terminating all active child processes and closing the database connections | No | No | /stop-server | http://serverIP:3000/stop-server |

| Repository ReadBlock | Reads a value in ATLog’s repository with a specified key | N/A | KEY | /repository | http://serverIP:3000//${endpoint}?&key=${ KEY} |

| Repository SaveBlock | Saves a value in ATLog’s repository with the specified key | N/A | KEY, VALUE | /repository2 | http://serverIP:3000//${endpoint}?&key=${ KEY}&value=${VALUE} |

| DB_save Block | Saves session observations or assessments in ATLog’s database | No | postData: ID, VALUE (childID, observations, date and time recorded automatically) | /save-child-notes | http://serverIP:3000/${endpoint} with JSON: { method: ‘POST’, headers: { ‘Content-Type’: ‘application/json; charset=utf-8’ }, body: JSON.stringify(${JSON.stringify(postData)}) } |

| BG_GPT Block | Sends questions and context entered in the input fields to the BgGPT APIs (Bulgarian ChatGPT) | No | QUESTION, CONTEXT | /bgGPT | http://serverIP:3000/bgGPT?question=${QUESTION}&context=${CONTEXT} |

| QABlock | Sends questions and context to NLPcloud | No | QUESTION, CONTEXT | /QA | http://serverIP:3000/QA?question=${QUESTION}&context=${CONTEXT} |

| WaitBlock | Makes the robot wait for a specified number of seconds | No | SECONDS | N/A | Blockly local run await new Promise(resolve => setTimeout(resolve, ${ SECONDS })); |

| RepeatBlock | Wraps one or more blocks in a loop to execute them multiple times, based on a variable number of iterations. In doCode of the block: any block(s) | No | ITERATIONS | N/A | Blockly local run loop to repeat execution ${ITERATIONS} times Connected endpoints = some_of ([ http://serverIP:3000/monitor/pyNao1, http://serverIP:3000/monitor/pyNao2, http://serverIP:3000/monitor/pyFurhat ]) |
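
The Repository ReadBlock and Repository SaveBlock rows describe a key-value store addressed through query parameters. The sketch below illustrates that behavior under stated assumptions: it uses the empty `repository` object from Appendix B, but the function `handleRepositoryRequest`, the status codes, and the merging of reading and saving into one handler are hypothetical (the table lists separate `/repository` and `/repository2` endpoints).

```javascript
// In-memory key-value store, initialized as in Appendix B.
const repository = {};

// Hypothetical handler: saves when a value is supplied, otherwise reads.
function handleRepositoryRequest(requestUrl) {
  // The base address is only needed to parse a relative request path.
  const { searchParams } = new URL(requestUrl, "http://localhost:3000");
  const key = searchParams.get("key");
  if (!key) {
    return { status: 400, body: { error: "Missing key" } };
  }
  if (searchParams.has("value")) {
    repository[key] = searchParams.get("value");
    return { status: 200, body: { key, value: repository[key] } };
  }
  // Own-property check, so inherited names such as "toString" are not found.
  if (!Object.prototype.hasOwnProperty.call(repository, key)) {
    return { status: 404, body: { error: `No value stored for ${key}` } };
  }
  return { status: 200, body: { key, value: repository[key] } };
}

// Example: store an answer, then read it back.
handleRepositoryRequest("/repository?key=answer&value=Hello");
console.log(handleRepositoryRequest("/repository?key=answer").body.value);
```

Because the store lives in process memory, its contents are lost when the server stops; persistent session notes go through the DB_save Block instead.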

| Frontend Block Name | Description | Microskill/File Uploaded on the Server | Input Parameters | Backend Endpoint | Fetching Endpoint(s) with Parameters |

|---|---|---|---|---|---|

| Setup_VR_Session_Block | Sets up Express server, WebSocket communication, assigns unique session IDs for the therapists and the child. | Socket.io, JavaScript ES6 Modules | VRsceneID | /startVR | http://serverIP:3005/${VRsceneID}/startVR |

| Setup_VR_Scene_Block | Initializes a VR scene in Three.js with camera, VR controllers, renderer, WebXR support and 3D model loading. Delivers static files (frontend code, images, styles) from the “public” folder. Dynamically generates HTML pages. | JavaScript ES6 Modules | sessionID, BINGO SIZE: 3x3, 4x4, 5x5, SCENARIO: animals, fruits, vegetables, transports. | /sessions/:id/setup | http://serverIP:3005/sessions/${sessionID}/setup with JSON: { method: ‘POST’, headers: { ‘Content-Type’: ‘application/json’ }, body: JSON.stringify (${JSON.stringify({ “bingoSize”: BINGO_SIZE, “scenario”: SCENARIO })}) } |

| StartVRbutton_Block | Starts VR button in the immersive mode of the browser. | Socket.io, Three.js, WebXR, JavaScript ES6 Modules | N/A | /startVRbutton | http://serverIP:3005/startVRbutton |

| 360_Virtual_Tour | An interactive 360° virtual tour on a touchscreen where children explore common places, as well as educational spaces. The tours are hosted on the ATLog project website. | N/A | SPACE: house, hypermarket, museum | N/A | Blockly local runtime for fetch request https://atlog.ir.bas.bg/images/tours/${SPACE}/index.htm |

| 360_Virtual_Game | An interactive 360° virtual game on a touchscreen, where children search for and identify objects from a preassigned list. The games are hosted on the ATLog project website. | N/A | SCENARIO: animals, fruits, vegetables, transports | N/A | Blockly local runtime for fetch request https://atlog.ir.bas.bg/images/tours/${SCENARIO}/index.htm |
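
The Setup_VR_Scene_Block row describes a POST request carrying the bingo size and scenario as JSON. A minimal sketch of how such a request could be assembled and validated before sending is given below. The function `buildVrSetupRequest`, the validation step, and the example values are assumptions for illustration; only the URL shape, the port 3005, and the allowed values come from the table.

```javascript
// Allowed values, as listed in the Input Parameters column.
const ALLOWED_BINGO_SIZES = ["3x3", "4x4", "5x5"];
const ALLOWED_SCENARIOS = ["animals", "fruits", "vegetables", "transports"];

// Hypothetical helper: validates the inputs and builds the fetch arguments.
function buildVrSetupRequest(serverIP, sessionID, bingoSize, scenario) {
  if (!ALLOWED_BINGO_SIZES.includes(bingoSize)) {
    throw new RangeError(`Unsupported bingo size: ${bingoSize}`);
  }
  if (!ALLOWED_SCENARIOS.includes(scenario)) {
    throw new RangeError(`Unsupported scenario: ${scenario}`);
  }
  return {
    url: `http://${serverIP}:3005/sessions/${encodeURIComponent(sessionID)}/setup`,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ bingoSize, scenario }),
    },
  };
}

// Example with placeholder values; in a browser or Node.js 18+ the result
// could be sent with fetch(setupRequest.url, setupRequest.options).
const setupRequest = buildVrSetupRequest("192.168.0.10", "abc123", "4x4", "animals");
console.log(setupRequest.url);
```

Validating on the client side keeps an unsupported grid size or scenario from reaching the VR server, although the server would still need its own checks.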

Appendix B

| Dependencies that Need to be Installed by Npm | Dynamic Imports Used for: |

|---|---|

| const express = require(‘express’); | Express framework to create a web server. |

| const app = express(); | Creating an instance of the Express application. |

| const {spawn } = require(‘child_process’); | Running child processes (e.g., executing PY scripts). |

| const {Client } = require(‘ssh2’); | SSH2 client module to establish SSH connections. |

| const {exec } = require(‘child_process’); | Exec to run shell commands asynchronously. |

| const fetch = require(‘node-fetch’); | Node-fetch for making HTTP requests. |

| const repository = {}; | Initializing an empty object to store key-value pairs. |

| const Database = require(‘better-sqlite3’); | SQLite database interactions (better-sqlite3). |

| const fs = require(‘fs’); | File System (fs) module to handle file operations. |

| const path = require(‘path’); | Path module to manipulate file paths. |

| const http = require(‘http’); | HTTP module to create an HTTP server for VR session. |

| const socketIo = require(‘socket.io’); | Socket.IO library for real-time communication in VR. |

| const server = http.createServer(app); | Create an HTTP server using the Express app. |

| const io = socketIo(server); | Initialize Socket.IO by passing the HTTP server. |

| const os = require(‘os’); | OS module to interact with the operating system. |

| Middleware (built into Express) | Establishing a middleware to: |

| app.use(express.json()); | Parse JSON request bodies. |

| app.use(express.urlencoded({extended: true })); | Parse URL-encoded request bodies. |

| app.use((req, res, next) => {res.setHeader(‘Content-Type’, ‘application/json; charset=utf-8’); next(); }); | Set the response header to JSON format with UTF-8 encoding. |

| app.use(express.static(‘public’)); | Deliver static files from the public directory. |

| app.use(morgan(‘dev’)); | Log HTTP requests in “dev” format. |

| const corsOptions = { origin: function (origin, callback) {…} }; app.use(cors(corsOptions)); | Handle requests only for LAN devices, based on the request’s origin or IP address. |

| app.use(express.static(__dirname + ‘/public’)); | Listen for HTTP requests with a path starting with /public. Deliver static files (e.g., HTML, CSS, JS). |

| app.use(express.urlencoded({ extended: true })); | Parse URL-encoded request bodies (e.g., form data). |

| paths = [“/geometries”, “/textures”, “/sounds”, “/styles”]; paths.forEach((path) => { app.use(path, express.static(__dirname + path));}); | Deliver static files from directories corresponding to the paths: /geometries; /textures; /sounds; /styles. |

| app.use(‘/build/’,express.static(path.join(__dirname, ‘node_modules/three/build’))); | Deliver Three.js build files from the build directory under the “/build” route. |

| app.use(‘/jsm/’,express.static(path.join(__dirname, ‘node_modules/three/examples/jsm’))); | Deliver Three.js modules from the “jsm” directory under the “/jsm” route. |
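
The body of the `origin` function in `corsOptions` is elided in the table. The sketch below shows one way a LAN-only check could be written; it is an assumption, not the ATLog implementation. The helper `isLanHost`, the choice of private IPv4 ranges, and the decision to accept requests that carry no `Origin` header are all hypothetical. The `(origin, callback)` signature follows the convention of the `cors` package, which is not loaded here so that the example runs on its own.

```javascript
// Hypothetical helper: true for localhost and private IPv4 addresses.
function isLanHost(hostname) {
  if (hostname === "localhost") return true;
  if (!/^\d{1,3}(\.\d{1,3}){3}$/.test(hostname)) return false;
  const octets = hostname.split(".").map(Number);
  if (octets.some((n) => n > 255)) return false;
  const [a, b] = octets;
  return (
    a === 10 ||
    a === 127 ||
    (a === 172 && b >= 16 && b <= 31) ||
    (a === 192 && b === 168)
  );
}

const corsOptions = {
  origin(origin, callback) {
    // Assumption: requests without an Origin header (e.g., server-side
    // scripts on the same machine) are accepted.
    if (!origin) return callback(null, true);
    let hostname;
    try {
      hostname = new URL(origin).hostname;
    } catch {
      return callback(new Error("Invalid origin"));
    }
    if (isLanHost(hostname)) return callback(null, true);
    return callback(new Error("Origin not allowed by CORS"));
  },
};

// With the cors package installed, this would be registered as:
// app.use(cors(corsOptions));
corsOptions.origin("http://192.168.1.5:8080", (err, allowed) => {
  console.log(err === null && allowed === true);
});
```

An origin check of this kind only restricts browser-based cross-origin requests; it does not replace network-level controls such as a firewall.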

References

- Reeves, T. Design Research from a Technology Perspective. In Educational Design Research; Routledge: London, UK, 2006; pp. 64–78. [Google Scholar]

- Kouroupa, A.; Laws, K.R.; Irvine, K.; Mengoni, S.E.; Baird, A.; Sharma, S. The Use of Social Robots with Children and Young People on the Autism Spectrum: A Systematic Review and Meta-Analysis. PLoS ONE 2022, 17, e0269800. [Google Scholar] [CrossRef]

- Mills, J.; Duffy, O. Speech and Language Therapists’ Perspectives of Virtual Reality as a Clinical Tool for Autism: Cross-Sectional Survey. JMIR Rehabil. Assist. Technol. 2025, 12, e63235. [Google Scholar] [CrossRef]

- Peng, L.; Nuchged, B.; Gao, Y. Spoken Language Intelligence of Large Language Models for Language Learning. arXiv 2025, arXiv:2308.14536. [Google Scholar]

- Austin, J.; Benas, K.; Caicedo, S.; Imiolek, E.; Piekutowski, A.; Ghanim, I. Perceptions of Artificial Intelligence and ChatGPT by Speech-Language Pathologists and Students. Am. J. Speech-Lang. Pathol. 2025, 34, 174–200. [Google Scholar] [CrossRef] [PubMed]

- Leinweber, J.; Alber, B.; Barthel, M.; Whillier, A.S.; Wittmar, S.; Borgetto, B.; Starke, A. Technology Use in Speech and Language Therapy: Digital Participation Succeeds through Acceptance and Use of Technology. Front. Commun. 2023, 8, 1176827. [Google Scholar] [CrossRef]

- Szabó, B.; Dirks, S.; Scherger, A.-L. Apps and Digital Resources in Speech and Language Therapy—Which Factors Influence Therapists’ Acceptance? In Universal Access in Human–Computer Interaction. Novel Design Approaches and Technologies. HCII 2022; Antona, M., Stephanidis, C., Eds.; Springer: Cham, Switzerland, 2022; Volume 13308, pp. 379–391. [Google Scholar] [CrossRef]

- Hastall, M.R.; Dockweiler, C.; Mühlhaus, J. Achieving End User Acceptance: Building Blocks for an Evidence-Based User-Centered Framework for Health Technology Development and Assessment. In Universal Access in Human–Computer Interaction. Human and Technological Environments; Antona, M., Stephanidis, C., Eds.; Springer: Cham, Switzerland, 2017; pp. 13–25. [Google Scholar] [CrossRef]

- Spitale, M.; Silleresi, S.; Garzotto, F.; Mataric, M. Using Socially Assistive Robots in Speech-Language Therapy for Children with Language Impairments. Int. J. Soc. Robot. 2023, 15, 1525–1542. [Google Scholar] [CrossRef]

- Rupp, R.; Wirz, M. Implementation of Robots into Rehabilitation Programs: Meeting the Requirements and Expectations of Professional and End Users. In Neurorehabilitation Technology, 2nd ed.; Reinkensmeyer, D.J., Juneal-Crespo, L., Dietz, V., Eds.; Springer: Cham, Switzerland, 2022; pp. 263–288. [Google Scholar]

- Lampropoulos, G.; Keramopoulos, E.; Diamantaras, K.; Evangelidis, G. Augmented Reality and Virtual Reality in Education: Public Perspectives, Sentiments, Attitudes, and Discourses. Educ. Sci. 2022, 12, 798. [Google Scholar] [CrossRef]

- Suh, H.; Dangol, A.; Meadan, H.; Miller, C.A.; Kientz, J.A. Opportunities and Challenges for AI-Based Support for Speech-Language Pathologists. In Proceedings of the 3rd Annual Symposium on Human—Computer Interaction for Work (CHIWORK 2024), New York, NY, USA, 9–11 June 2024; pp. 1–14. [Google Scholar] [CrossRef]

- Du, Y.; Juefei-Xu, F. Generative AI for Therapy? Opportunities and Barriers for ChatGPT in Speech-Language Therapy. Unpubl. Work 2023. Available online: https://openreview.net/forum?id=cRZSr6Tpr1S (accessed on 20 June 2025).

- Fu, B.; Hadid, A.; Damer, N. Generative AI in the Context of Assistive Technologies: Trends, Limitations and Future Directions. Image Vis. Comput. 2025, 154, 105347. [Google Scholar] [CrossRef]

- Santos, L.; Annunziata, S.; Geminiani, A.; Ivani, A.; Giubergia, A.; Garofalo, D.; Caglio, A.; Brazzoli, E.; Lipari, R.; Carrozza, M.C.; et al. Applications of Robotics for Autism Spectrum Disorder: A Scoping Review. Rev. J. Autism Dev. Disord. 2023. [Google Scholar] [CrossRef]

- Sáez-López, J.M.; del Olmo-Muñoz, J.; González-Calero, J.A.; Cózar-Gutiérrez, R. Exploring the Effect of Training in Visual Block Programming for Preservice Teachers. Multimodal Technol. Interact. 2020, 4, 65. [Google Scholar] [CrossRef]

- Rees Lewis, D.; Carlson, S.; Riesbeck, C.; Lu, K.; Gerber, E.; Easterday, M. The Logic of Effective Iteration in Design-Based Research. In Proceedings of the 14th International Conference of the Learning Sciences: The Interdisciplinarity of the Learning Sciences (ICLS 2020), Nashville, TN, USA, 19–23 June 2020; Gresalfi, M., Horn, I.S., Eds.; International Society of the Learning Sciences: Bloomington, IN, USA; Volume 2, pp. 1149–1156. [Google Scholar]

- ATLog Project. Available online: https://atlog.ir.bas.bg/en (accessed on 20 June 2025).

- Lekova, A.; Tsvetkova, P.; Andreeva, A.; Simonska, M.; Kremenska, A. System Software Architecture for Advancing Human-Robot Interaction by Cloud Services and Multi-Robot Cooperation. Int. J. Inf. Technol. Secur. 2024, 16, 65–76. [Google Scholar] [CrossRef]

- Andreeva, A.; Lekova, A.; Tsvetkova, P.; Simonska, M. Expanding the Capabilities of Robot NAO to Enable Human-Like Communication with Children with Speech and Language Disorders. In Proceedings of the 25th International Conference on Computer Systems and Technologies (CompSysTech 2024), Sofia, Bulgaria, 28–29 June 2024; pp. 63–68. [Google Scholar] [CrossRef]

- Lekova, A.; Tsvetkova, P.; Andreeva, A. System Software Architecture for Enhancing Human-Robot Interaction by Conversational AI. In Proceedings of the 2023 International Conference on Information Technologies (InfoTech), Varna, Bulgaria, 19–20 October 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Lekova, A.; Vitanova, D. Design-Based Research for Streamlining the Integration of Text-Generative AI into Socially-Assistive Robots. In Proceedings of the 2024 International Conference “ROBOTICS & MECHATRONICS”, Sofia, Bulgaria, 29–30 October 2024. [Google Scholar]

- Kolev, M.; Trenchev, I.; Traykov, M.; Mavreski, R.; Ivanov, I. The Impact of Virtual and Augmented Reality on the Development of Motor Skills and Coordination in Children with Special Educational Needs. In Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering: Computer Science and Education in Computer Science; Springer Nature Switzerland: Cham, Switzerland, 2023; Volume 514, pp. 171–181. [Google Scholar] [CrossRef]

- Brooke, J. SUS—A Quick and Dirty Usability Scale. In Usability Evaluation in Industry; CRC Press: Boca Raton, FL, USA, 1996; pp. 189–194. [Google Scholar]

- Davis, F. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340. [Google Scholar] [CrossRef]

- Cheah, W.; Jusoh, N.; Aung, M.; Ab Ghani, A.; Rebuan, H.M.A. Mobile technology in medicine: Development and validation of an adapted system usability scale (SUS) questionnaire and modified technology acceptance model (TAM) to evaluate user experience and acceptability of a mobile application in MRI safety screening. Indian J. Radiol. Imaging 2023, 33, 36–45. [Google Scholar] [CrossRef] [PubMed]

- Hariri, W. Unlocking the Potential of ChatGPT: A Comprehensive Exploration of Its Applications, Advantages, Limitations, and Future Directions in Natural Language Processing. arXiv 2023, arXiv:2304.02017. [Google Scholar]

- Vaezipour, A.; Aldridge, D.; Koenig, S.; Theodoros, D.; Russell, T. “It’s Really Exciting to Think Where It Could Go”: A Mixed-Method Investigation of Clinician Acceptance, Barriers and Enablers of Virtual Reality Technology in Communication Rehabilitation. Disabil. Rehabil. 2022, 44, 3946–3958. [Google Scholar] [CrossRef]

- Hashim, H.U.; Md Yunus, M.; Norman, H. Augmented Reality Mobile Application for Children with Autism: Stakeholders’ Acceptance and Thoughts. Arab World Engl. J. 2021, 12, 130–146. [Google Scholar] [CrossRef]

- Rasheva-Yordanova, K.; Kostadinova, I.; Georgieva-Tsaneva, G.; Andreeva, A.; Tsvetkova, P.; Lekova, A.; Stancheva-Popkostandinova, V.; Dimitrov, G. A Comprehensive Review and Analysis of Virtual Reality Scenarios in Speech and Language Therapy. TEM J. 2025, 14, 1895–1907. [Google Scholar] [CrossRef]

- Vanderborght, B.; Simut, R.; Saldien, J.; Pop, C.; Rusu, A.S.; Pintea, S.; Lefeber, D.; David, D.O. Using the Social Robot Probo as a Social Story Telling Agent for Children with ASD. Interact. Stud. 2012, 13, 348–372. [Google Scholar] [CrossRef]

- LuxAI. QTrobot for Schools & Therapy Centers. Available online: https://luxai.com/robot-for-teaching-children-with-autism-at-home/ (accessed on 20 June 2025).

- Furhat Robotics. Furhat AI. Available online: https://www.furhatrobotics.com/furhat-ai (accessed on 20 June 2025).

- PAL Robotics. ARI. Available online: https://pal-robotics.com/robot/ari/ (accessed on 20 June 2025).

- RoboKind Milo Robot. Available online: https://www.robokind.com/?hsCtaTracking=2433ccbc-0af8-4ade-ae0b-4642cb0ecba4%7Cb0814dc8-76e1-447d-aba7-101e89845fb7 (accessed on 20 June 2025).

- She, T.; Ren, F. Enhance the Language Ability of Humanoid Robot NAO through Deep Learning to Interact with Autistic Children. Electronics 2021, 10, 2393. [Google Scholar] [CrossRef]

- Cherakara, N.; Varghese, F.; Shabana, S.; Nelson, N.; Karukayil, A.; Kulothungan, R.; Farhan, M.; Nesset, B.; Moujahid, M.; Dinkar, T.; et al. FurChat: An Embodied Conversational Agent Using LLMs, Combining Open and Closed-Domain Dialogue with Facial Expressions. In Proceedings of the 24th Annual Meeting of the Special Interest Group on Discourse and Dialogue (SIGDIAL 2023), Prague, Czech Republic, 11–13 September 2023; pp. 588–592. [Google Scholar] [CrossRef]

- Belda-Medina, J.; Calvo-Ferrer, J.R. Using Chatbots as AI Conversational Partners in Language Learning. Appl. Sci. 2022, 12, 8427. [Google Scholar] [CrossRef]

- ChatGPT Prompting for SLPs. Available online: https://eatspeakthink.com/chatgpt-for-speech-therapy-2025/ (accessed on 20 June 2025).

- Bertacchini, F.; Demarco, F.; Scuro, C.; Pantano, P.; Bilotta, E. A Social Robot Connected with ChatGPT to Improve Cognitive Functioning in ASD Subjects. Front. Psychol. 2023, 14, 1232177. [Google Scholar] [CrossRef] [PubMed]

- Salhi, I.; Qbadou, M.; Gouraguine, S.; Mansouri, K.; Lytridis, C.; Kaburlasos, V. Towards Robot-Assisted Therapy for Children with Autism—The Ontological Knowledge Models and Reinforcement Learning-Based Algorithms. Front. Robot. AI 2022, 9, 713964. [Google Scholar] [CrossRef] [PubMed]

- Naneva, S.; Sarda Gou, M.; Webb, T.L.; Prescott, T.J. A Systematic Review of Attitudes, Anxiety, Acceptance, and Trust Towards Social Robots. Int. J. Soc. Robot. 2020, 12, 1179–1201. [Google Scholar] [CrossRef]

- Liu, Z.; Li, H.; Chen, A.; Zhang, R.; Lee, Y.C. Understanding Public Perceptions of AI Conversational Agents. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems (CHI 2024), Honolulu, HI, USA, 11–16 May 2024; pp. 1–17. [Google Scholar] [CrossRef]

- Gerlich, M. Perceptions and Acceptance of Artificial Intelligence: A Multi-Dimensional Study. Soc. Sci. 2023, 12, 502. [Google Scholar] [CrossRef]

- Severson, R.; Peter, J.; Kanda, T.; Kaufman, J.; Scassellati, B. Social Robots and Children’s Development: Promises and Implications. In Handbook of Children and Screens; Christakis, D.A., Hale, L., Eds.; Springer: Cham, Switzerland, 2024; pp. 627–633. [Google Scholar] [CrossRef]

- Youssef, K.; Said, S.; Alkork, S.; Beyrouthy, T. A Survey on Recent Advances in Social Robotics. Robotics 2022, 11, 75. [Google Scholar] [CrossRef]

- Georgieva-Tsaneva, G.; Andreeva, A.; Tsvetkova, P.; Lekova, A.; Simonska, M.; Stancheva-Popkostadinova, V.; Dimitrov, G.; Rasheva-Yordanova, K.; Kostadinova, I. Exploring the Potential of Social Robots for Speech and Language Therapy: A Review and Analysis of Interactive Scenarios. Machines 2023, 11, 693. [Google Scholar] [CrossRef]

- Estévez, D.; Terrón-López, M.-J.; Velasco-Quintana, P.J.; Rodríguez-Jiménez, R.-M.; Álvarez-Manzano, V. A Case Study of a Robot-Assisted Speech Therapy for Children with Language Disorders. Sustainability 2021, 13, 2771. [Google Scholar] [CrossRef]

- Ioannou, A.; Andreva, A. Play and Learn with an Intelligent Robot: Enhancing the Therapy of Hearing-Impaired Children. In Human–Computer Interaction—INTERACT 2019; Lamas, D., Loizides, F., Nacke, L., Petrie, H., Winckler, M., Zaphiris, P., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2019; Volume 11747, pp. 436–452. [Google Scholar] [CrossRef]

- Lee, H.; Hyun, E. The Intelligent Robot Contents for Children with Speech-Language Disorder. J. Educ. Technol. Soc. 2015, 18, 100–113. [Google Scholar]

- Lekova, A.; Andreeva, A.; Simonska, M.; Tanev, T.; Kostova, S. A System for Speech and Language Therapy with a Potential to Work in the IoT. In Proceedings of the 2022 International Conference on Computer Systems and Technologies (CompSysTech 2022), Ruse, Bulgaria, 16–17 June 2022; pp. 119–124. [Google Scholar] [CrossRef]

- QTrobot for Special Needs Education. Available online: https://luxai.com/assistive-tech-robot-for-special-needs-education/ (accessed on 20 June 2025).

- Vukliš, D.; Krasnik, R.; Mikov, A.; Zvekić Svorcan, J.; Janković, T.; Kovačević, M. Parental Attitudes Towards the Use of Humanoid Robots in Pediatric (Re)Habilitation. Med. Pregl. 2019, 72, 302–306. [Google Scholar] [CrossRef]

- Szymona, B.; Maciejewski, M.; Karpiński, R.; Jonak, K.; Radzikowska-Büchner, E.; Niderla, K.; Prokopiak, A. Robot-Assisted Autism Therapy (RAAT): Criteria and Types of Experiments Using Anthropomorphic and Zoomorphic Robots. Sensors 2021, 21, 3720. [Google Scholar] [CrossRef]

- Nicolae, G.; Vlădeanu, C.; Saru, L.-M.; Burileanu, C.; Grozăvescu, R.; Crăciun, G.; Drugă, S.; Hatiș, M. Programming the NAO Humanoid Robot for Behavioral Therapy in Romania. Rom. J. Child Adolesc. Psychiatry 2019, 7, 23–30. [Google Scholar]

- Gupta, G.; Chandra, S.; Dautenhahn, K.; Loucks, T. Stuttering Treatment Approaches from the Past Two Decades: Comprehensive Survey and Review. J. Stud. Res. 2022, 11. [Google Scholar] [CrossRef]

- Chandra, S.; Gupta, G.; Loucks, T.; Dautenhahn, K. Opportunities for Social Robots in the Stuttering Clinic: A Review and Proposed Scenarios. Paladyn J. Behav. Robot. 2022, 13, 23–44. [Google Scholar] [CrossRef]

- Bonarini, A.; Clasadonte, F.; Garzotto, F.; Gelsomini, M.; Romero, M.E. Playful Interaction with Teo, a Mobile Robot for Children with Neurodevelopmental Disorders. In Proceedings of the 7th International Conference on Software Development and Technologies for Enhancing Accessibility and Fighting Info-exclusion (DSAI 2016), Vila Real, Portugal, 1–3 December 2016; pp. 223–231. [Google Scholar] [CrossRef]

- Kose, H.; Yorganci, R. Tale of a Robot: Humanoid Robot Assisted Sign Language Tutoring. In Proceedings of the 11th IEEE-RAS International Conference on Humanoid Robots, Bled, Slovenia, 26–28 October 2011; pp. 105–111. [Google Scholar] [CrossRef]

- Fung, K.Y.; Lee, L.H.; Sin, K.F.; Song, S.; Qu, H. Humanoid Robot-Empowered Language Learning Based on Self-Determination Theory. Educ. Inf. Technol. 2024, 29, 18927–18957. [Google Scholar] [CrossRef]

- Cappadona, I.; Ielo, A.; La Fauci, M.; Tresoldi, M.; Settimo, C.; De Cola, M.C.; Muratore, R.; De Domenico, C.; Di Cara, M.; Corallo, F.; et al. Feasibility and Effectiveness of Speech Intervention Implemented with a Virtual Reality System in Children with Developmental Language Disorders: A Pilot Randomized Control Trial. Children 2023, 10, 1336. [Google Scholar] [CrossRef]

- Purpura, G.; Di Giusto, V.; Zorzi, C.F.; Figliano, G.; Randazzo, M.; Volpicelli, V.; Blonda, R.; Brazzoli, E.; Reina, T.; Rezzonico, S.; et al. Use of Virtual Reality in School-Aged Children with Developmental Coordination Disorder: A Novel Approach. Sensors 2024, 24, 5578. [Google Scholar] [CrossRef]

- Maresca, G.; Corallo, F.; De Cola, M.C.; Formica, C.; Giliberto, S.; Rao, G.; Crupi, M.F.; Quartarone, A.; Pidalà, A. Effectiveness of the Use of Virtual Reality Rehabilitation in Children with Dyslexia: Follow-Up after One Year. Brain Sci. 2024, 14, 655. [Google Scholar] [CrossRef] [PubMed]

- Mangani, G.; Barzacchi, V.; Bombonato, C.; Barsotti, J.; Beani, E.; Menici, V.; Ragoni, C.; Sgandurra, G.; Del Lucchese, B. Feasibility of a Virtual Reality System in Speech Therapy: From Assessment to Tele-Rehabilitation in Children with Cerebral Palsy. Children 2024, 11, 1327. [Google Scholar] [CrossRef] [PubMed]

- Macdonald, C. Improving Virtual Reality Exposure Therapy with Open Access and Overexposure: A Single 30-Minute Session of Overexposure Therapy Reduces Public Speaking Anxiety. Front. Virtual Real. 2024, 5, 1506938. [Google Scholar] [CrossRef]

- Pergantis, P.; Bamicha, V.; Doulou, A.; Christou, A.I.; Bardis, N.; Skianis, C.; Drigas, A. Assistive and Emerging Technologies to Detect and Reduce Neurophysiological Stress and Anxiety in Children and Adolescents with Autism and Sensory Processing Disorders: A Systematic Review. Technologies 2025, 13, 144. [Google Scholar] [CrossRef]

- Tobii Dynavox Global: Assistive Technology for Communication. Available online: https://www.tobiidynavox.com/ (accessed on 20 June 2025).

- Klavina, A.; Pérez-Fuster, P.; Daems, J.; Lyhne, C.N.; Dervishi, E.; Pajalic, Z.; Øderud, T.; Fuglerud, K.S.; Markovska-Simoska, S.; Przybyla, T.; et al. The Use of Assistive Technology to Promote Practical Skills in Persons with Autism Spectrum Disorder and Intellectual Disabilities: A Systematic Review. Digit. Health 2024, 10, 20552076241281260. [Google Scholar] [CrossRef]

- Ask NAO. Available online: https://www.asknao-tablet.com/en/home/ (accessed on 20 June 2025).

- Furhat Blockly. Available online: https://docs.furhat.io/blockly/ (accessed on 20 June 2025).

- Vittascience Interface for NAO v6. Available online: https://en.vittascience.com/nao/?mode=mixed&console=bottom&toolbox=vittascience (accessed on 20 June 2025).

- LEKA. Available online: https://leka.io (accessed on 20 June 2025).

- Platform for VR Public Speaking. Available online: https://www.virtualrealitypublicspeaking.com/platform (accessed on 20 June 2025).

- ThingLink. Available online: https://www.thinglink.com/ (accessed on 20 June 2025).

- Kurai, R.; Hiraki, T.; Hiroi, Y.; Hirao, Y.; Perusquía-Hernández, M.; Uchiyama, H.; Kiyokawa, K. MagicItem: Dynamic Behavior Design of Virtual Objects with Large Language Models in a Commercial Metaverse Platform. IEEE Access 2025, 13, 19132–19143. [Google Scholar] [CrossRef]

- Therapy withVR. Available online: https://therapy.withvr.app/ (accessed on 20 June 2025).

- Grassi, L.; Recchiuto, C.T.; Sgorbissa, A. Sustainable Cloud Services for Verbal Interaction with Embodied Agents. Intell. Serv. Robot. 2023, 16, 599–618. [Google Scholar] [CrossRef]

- LuxAI. Complete Guide to Build a Conversational Social Robot QTrobot with ChatGPT. Available online: https://luxai.com/blog/complete-guide-to-build-conversational-social-robot-qtrobot-chatgpt/ (accessed on 20 June 2025).

- Lewis, J.R. The system usability scale: Past, present, and future. Int. J. Hum. Comput. Interact. 2018, 34, 577–590. [Google Scholar] [CrossRef]

- Mulia, A.P.; Piri, P.R.; Tho, C. Usability analysis of text generation by ChatGPT OpenAI using system usability scale method. Procedia Comput. Sci. 2023, 227, 381–388.

- Bangor, A.; Kortum, P.T.; Miller, J.T. An empirical evaluation of the system usability scale. Int. J. Hum. Comput. Interact. 2008, 24, 574–594.

- Lewis, J.R. Comparison of Four TAM Item Formats: Effect of Response Option Labels and Order. J. Usability Stud. 2019, 14, 224–236.

- Aldebaran. NAO Robot. Available online: https://aldebaran.com/en/nao6/ (accessed on 20 June 2025).

- Furhat Robotics. The Furhat Robot. Available online: https://www.furhatrobotics.com/furhat-robot (accessed on 20 June 2025).

- Tanev, T.K.; Lekova, A. Implementation of Actors’ Emotional Talent into Social Robots Through Capture of Human Head’s Motion and Basic Expression. Int. J. Soc. Robot. 2022, 14, 1749–1766.

- Double Robotics. Double3 Robot for Telepresence. Available online: https://www.doublerobotics.com/double3.html (accessed on 20 June 2025).

- Aldebaran. Choregraphe Software Overview. Available online: http://doc.aldebaran.com/2-8/software/choregraphe/choregraphe_overview.html (accessed on 20 June 2025).

- Aldebaran. NAOqi API and SDK 2.8. Available online: http://doc.aldebaran.com/2-8/news/2.8/naoqi_api_rn2.8.html?highlight=naoqi (accessed on 20 June 2025).

- Furhat Robotics. Furhat SDK Documentation. Available online: https://docs.furhat.io/getting_started/ (accessed on 20 June 2025).

- Furhat Robotics. Furhat Remote API. Available online: https://docs.furhat.io/remote-api/ (accessed on 20 June 2025).

- Double Robotics. Double3 Developer SDK. Available online: https://github.com/doublerobotics/d3-sdk (accessed on 20 June 2025).

- W3C. WebXR Device API. Available online: https://www.w3.org/TR/webxr/ (accessed on 20 June 2025).

- Dirksen, J. Learning Three.js—The JavaScript 3D Library for WebGL, 2nd ed.; Packt Publishing: Birmingham, UK, 2015; ISBN 978-1-78439-221-5.

- 3DVista Virtual Tour Software. Available online: https://www.3dvista.com/en/ (accessed on 20 June 2025).

- NLP Cloud API Platform. Available online: https://nlpcloud.com/ (accessed on 20 June 2025).

- OpenAI. ChatGPT-4 Research Overview. Available online: https://openai.com/index/gpt-4-research/ (accessed on 20 June 2025).

- INSAIT. BgGPT Language Model. Available online: https://bggpt.ai/ (accessed on 20 June 2025).

- Express.js. Node.js Web Application Framework. Available online: https://expressjs.com/ (accessed on 20 June 2025).

- Google Developers. Blockly for Developers. Available online: https://developers.google.com/blockly (accessed on 20 June 2025).

- Node.js. SQLite Module Documentation. Available online: https://nodejs.org/api/sqlite.html (accessed on 20 June 2025).

- Meta. Casting from Meta Quest 3 to Computer. Available online: https://www.oculus.com/casting/ (accessed on 20 June 2025).

- ATLog Ethical Codex. Available online: https://atlog.ir.bas.bg/en/results/ethical-codex (accessed on 20 June 2025).

- Vlachogianni, P.; Tselios, N. Perceived Usability Evaluation of Educational Technology Using the Post-Study System Usability Questionnaire (PSSUQ): A Systematic Review. Sustainability 2023, 15, 12954.

- Lah, U.; Lewis, J.R.; Šumak, B. Perceived usability and the modified technology acceptance model. Int. J. Hum. Comput. Interact. 2020, 36, 1216–1230.

| Construct | No. | Question | Mean | SD | Interpretation |
|---|---|---|---|---|---|
| PU | 1 | Using the ATLog platform would improve my performance at work. | 6.1 | 0.7 | Strongly agree |
| PU | 2 | Using the ATLog platform would increase my productivity. | 6.1 | 0.7 | Strongly agree |
| PU | 3 | Using the ATLog platform would increase my efficiency at work. | 6.3 | 0.7 | Strongly agree |
| PU | 4 | Using the ATLog platform would make my work easier. | 6.4 | 0.7 | Strongly agree |
| PU | 5 | Using the ATLog platform would be useful for my work. | 6.4 | 0.7 | Strongly agree |
| PU | 6 | Using the ATLog platform in my work would allow me to complete my therapeutic tasks faster. | 5.9 | 1.0 | Strongly agree |
| PEU | 7 | Learning how to use the ATLog platform would be easy for me. | 6.4 | 0.7 | Strongly agree |
| PEU | 8 | I could easily use the ATLog platform for therapy purposes. | 6.4 | 0.8 | Strongly agree |
| PEU | 9 | The user interface to the ATLog platform is clear and understandable. | 6.2 | 0.9 | Strongly agree |
| PEU | 10 | It would be easy for me to learn to work with the ATLog platform. | 6.5 | 0.7 | Strongly agree |
| PEU | 11 | I think the ATLog platform is easy to use. | 6.3 | 0.8 | Strongly agree |
| PEU | 12 | I think it will be easy for me to learn to use the ATLog platform. | 6.4 | 0.7 | Strongly agree |

| Correlations | SUS | Design Structure of ATLog Platform | PU | PEU |
|---|---|---|---|---|
| SUS | 1 | | | |
| Design Structure of ATLog Platform | 0.450 * | 1 | | |
| PU | 0.262 | 0.095 | 1 | |
| PEU | 0.243 | −0.022 | 0.879 ** | 1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lekova, A.; Tsvetkova, P.; Andreeva, A.; Dimitrov, G.; Tanev, T.; Simonska, M.; Stefanov, T.; Stancheva-Popkostadinova, V.; Padareva, G.; Rasheva, K.; et al. A Design-Based Research Approach to Streamline the Integration of High-Tech Assistive Technologies in Speech and Language Therapy. Technologies 2025, 13, 306. https://doi.org/10.3390/technologies13070306

APA Style: Lekova, A., Tsvetkova, P., Andreeva, A., Dimitrov, G., Tanev, T., Simonska, M., Stefanov, T., Stancheva-Popkostadinova, V., Padareva, G., Rasheva, K., Kremenska, A., & Vitanova, D. (2025). A Design-Based Research Approach to Streamline the Integration of High-Tech Assistive Technologies in Speech and Language Therapy. Technologies, 13(7), 306. https://doi.org/10.3390/technologies13070306