Abstract

This study addresses the critical challenge of optimizing drone flight parameters for enhanced object detection in video streams. While most research focuses on improving detection algorithms, the relationship between flight parameters and detection performance remains poorly understood. We present a novel approach using Kolmogorov–Arnold Networks (KANs) to model complex, non-linear relationships between altitude, pitch angle, speed, and object detection performance. Our main contributions are (1) a systematic analysis of the effects of flight parameters on detection performance using the AU-AIR dataset, (2) a KAN-based mathematical model achieving R² = 0.99, (3) the identification of optimal flight parameters through multi-start optimization, and (4) a flexible implementation framework adaptable to different UAV platforms. Sensitivity analysis confirms the solution’s robustness, with only 7.3% performance degradation under ±10% parameter variations. This research bridges flight operations and detection algorithms, offering practical guidelines that enhance detection capability by optimizing image acquisition rather than modifying detection algorithms.

1. Introduction

Unmanned Aerial Vehicles (UAVs) have emerged as powerful platforms for a wide range of applications including surveillance, environmental monitoring, infrastructure inspection, and disaster response. Their ability to capture aerial imagery with high spatial and temporal resolution has made them particularly valuable for object detection tasks. However, the effectiveness of UAV-based object detection systems is highly dependent on flight parameters such as altitude, pitch angle, and speed, which directly influence the quality and characteristics of the captured imagery.

The criticality of flight parameter optimization becomes evident in time-sensitive applications such as search-and-rescue operations, where detection accuracy directly impacts mission success rates. In disaster response scenarios, suboptimal flight parameters can reduce the object detection capability by up to 40%, significantly hampering emergency response effectiveness. Wildlife monitoring applications similarly require precise parameter tuning to balance detection performance with minimal disturbance to animal behavior.

Despite the growing deployment of UAVs for object detection applications, the relationship between flight parameters and detection performance remains poorly understood. Most research has focused on developing increasingly sophisticated detection algorithms while treating the image acquisition process as a separate, often unoptimized, component. This disconnect leads to suboptimal performance in practical applications, as detection algorithms may receive input imagery captured under non-ideal flight conditions.

The challenge of optimizing flight parameters for object detection is multifaceted. First, the relationship between flight parameters and detection performance is highly non-linear and context-dependent. For instance, lower altitudes typically provide higher spatial resolution but reduce coverage area, while higher pitch angles may enhance object distinctiveness but introduce perspective distortions. Second, these parameters interact with each other and with environmental factors in complex ways that are difficult to model using conventional approaches. Third, the optimal parameter configuration may vary depending on specific detection objectives, target objects, and environmental conditions.

Previous studies have demonstrated the significant impact of flight parameters on detection performance. Ramachandran and Sangaiah (2021) [1] identified altitude, camera angle, and motion blur as critical factors affecting UAV-based object detection accuracy. Bozcan and Kayacan (2020) [2] observed detection performance variations of up to 35% across different flight configurations in their AU-AIR dataset analysis. These findings establish the quantitative basis for parameter sensitivity that motivates our optimization approach.

In this paper, we present a novel approach to flight parameter optimization for UAV-based object detection using Kolmogorov–Arnold Networks (KANs). KANs, recently introduced by Liu et al. (2025) [3], offer several advantages over traditional neural networks for this application. Unlike conventional Multi-Layer Perceptrons (MLPs) with fixed activation functions, KANs feature learnable activation functions that can more effectively model complex, non-linear relationships between input parameters and output performance [4,5]. Additionally, KANs provide enhanced interpretability [6], allowing insights into how each parameter influences detection performance [7].

Our approach consists of three main components: (1) a comprehensive analysis of the AU-AIR dataset to understand the distribution and characteristics of flight parameters and their relationship with object detection; (2) a KAN-based modeling framework that learns the complex mapping between flight parameters and detection performance; and (3) a multi-start optimization procedure that identifies the optimal flight parameter configuration for maximizing detection capability. We implement this approach in a flexible, publicly available framework that can be adapted to different types of UAVs, enabling the rapid optimization of flight parameters for specific detection tasks.

The main contributions of this paper are as follows:

- A systematic analysis of how flight parameters (altitude, pitch angle, and speed) affect object detection performance in UAV imagery.

- A novel application of Kolmogorov–Arnold Networks to model the complex, non-linear relationship between flight parameters and detection performance.

- A multi-start optimization methodology that identifies optimal flight parameters for maximizing object detection capability.

- A flexible mathematical model and implementation framework, available at https://colab.research.google.com/drive/1SfNLmdKh3ErduW4gA-nPjrvboazZw2cZ?usp=sharing (accessed on 15 April 2025), which can be easily adapted to different UAV platforms and detection scenarios.

- Comprehensive experimental results demonstrating significant improvements in detection performance through optimized flight parameters.

These contributions collectively advance state-of-the-art UAV-based object detection by bridging the gap between flight operations and detection algorithms. By optimizing the image acquisition process through informed parameter selection, our approach enhances the effectiveness of existing detection algorithms without requiring modifications to the algorithms themselves.

The remainder of this paper is organized as follows: Section 2 reviews related work in UAV-based object detection, flight parameter optimization, and Kolmogorov–Arnold Networks. Section 3 describes our materials and methods, including dataset analysis, KAN modeling, and optimization procedures. Section 4 presents our experimental results, including model performance and optimal parameter configurations. Section 5 discusses the implications of our findings, their limitations, and their potential applications. Finally, Section 6 concludes the paper and outlines directions for future research.

2. Related Work

2.1. UAV-Based Object Detection

The application of Unmanned Aerial Vehicles (UAVs) for object detection has gained significant attention in recent years. Zhao et al. (2024) [8] provide a comprehensive review of deep-learning-based object detection in maritime UAV imagery, highlighting four key challenges: object feature diversity, device limitations, environmental variability, and dataset scarcity. These challenges are particularly relevant to our work, as we also address the optimization of UAV flight parameters to improve detection performance across varying conditions.

Ramachandran and Sangaiah (2021) [1] emphasize that factors including altitude, camera angle, occlusion, and motion blur make UAV-based object detection particularly challenging. Their review aligns with our findings regarding the impact of altitude and pitch angle on detection performance. Similarly, Bozcan and Kayacan (2020) [2] introduce the AU-AIR dataset that we utilize in our study, which specifically addresses the multi-modal nature of UAV-based detection by incorporating flight telemetry data alongside visual information. Their dataset has been instrumental in enabling research on the relationship between flight parameters and detection performance.

The application domains for UAV-based object detection span diverse areas. Ouattara et al. (2020) [9] demonstrate the use of UAVs for locating spruce trees in forest mapping, achieving over 91% detection accuracy using only RGB images from a single camera. Navarro et al. (2020) [10] apply UAVs to estimate above-ground biomass in mangrove ecosystems, finding them to be more cost-effective than traditional ground surveys while maintaining comparable accuracy. In urban environments, Vidović et al. (2024) [11] explore the multifaceted applications of drones, discussing their implications for surveillance, traffic management, and emergency response.

Recent advances in trajectory optimization have significantly enhanced UAV operational efficiency. Chan et al. (2025) [12] develop a three-stage method for time-optimal trajectory planning in constrained urban environments, achieving near-optimal solutions with a 92.5% reduction in computation time. Their spatial reformulation approach addresses complex constraints from high-rise buildings, which is relevant to our urban surveillance scenarios. Similarly, Yang et al. (2025) [13] propose a hybrid evolution Jaya algorithm for meteorological drone trajectory planning, demonstrating improved performance in complex obstacle environments through multi-strategy optimization.

The integration of delivery applications with trajectory optimization presents additional challenges. Kim et al. (2024) [14] introduce multi-flight-level concepts for drone delivery, using machine learning to optimize vertical space utilization. Their sequential prediction approach (SPML) shows how advanced algorithms can enhance operational efficiency in complex urban environments. Liu et al. (2024) [15] develop a cyber-physical social system for autonomous drone trajectory planning in last-mile delivery, balancing social value, energy efficiency, and productivity through multi-objective optimization.

2.2. Flight Parameter Optimization

Despite the growing body of literature on UAV-based object detection, research specifically addressing the optimization of flight parameters for detection performance remains limited. Most studies focus on the development of novel detection algorithms or dataset creation rather than systematically investigating how flight parameters affect detection performance [16].

In the context of environmental monitoring, Zhao et al. (2019) [17] developed a method using UAVs to assess river ecosystem health, noting that variations in flight altitude and camera angle influenced measurement accuracy. Their work emphasized the importance of standardizing data collection protocols to ensure comparability across different periods and regions. However, they did not establish optimized parameters for their specific application.

Dudukcu et al. (2023) [18] surveyed UAV sensor data applications with deep neural networks, examining 173 papers related to flight monitoring, remote sensing, vision capabilities, and energy modeling. Their study noted that autonomous fault detection, path planning, and onboard event detection remain challenging areas for UAV applications, but did not specifically address parameter optimization for improved detection.

The relationship between flight parameters and system performance has been explored in the context of security applications. Negm et al. (2024) [19] presented an intrusion detection system for IoT-assisted UAV networks, employing optimization techniques to improve detection accuracy. While their focus was on network security rather than object detection, their approach to parameter optimization shares conceptual similarities with our methodology.

Recent advances in multi-task learning have also influenced UAV-based detection systems. Cai et al. (2025) [20] addressed the challenge of balancing conflicting sub-tasks in end-to-end person search, proposing a Guiding Multi-Task Training framework that achieved better learning efficiency through feature decoupling and cross-task interaction. Their approach to handling task conflicts provided insights relevant to optimizing multiple objectives in UAV systems.

Multi-view learning techniques have shown promise for handling incomplete or corrupted data scenarios common in UAV operations. Wang et al. (2024) [21] developed manifold-based methods for incomplete multi-view clustering, while Yao et al. (2025) [22] proposed tensor-based approaches for exploiting between-/within-view information. These techniques could potentially enhance the robustness of UAV-based detection systems when dealing with partial data loss or sensor failures.

Traditional approaches to UAV trajectory planning include rule-based methods using predefined flight patterns, heuristic algorithms like Genetic Algorithms and particle swarm optimization, and learning-based approaches using reinforcement learning. However, these methods typically optimize for path efficiency rather than detection performance. The limitations of conventional regression methods (CNNs, MLPs, Random Forests) for this task include the poor handling of non-linear parameter interactions and the limited interpretability of learned relationships, as demonstrated in our comparative analysis.

2.3. Kolmogorov–Arnold Networks in Object Detection

The recently introduced Kolmogorov–Arnold Networks (KANs) represent a promising alternative to traditional neural networks in various domains, including object detection. Liu et al. (2025) [3] proposed KANs as alternatives to Multi-Layer Perceptrons (MLPs), featuring learnable activation functions on edges rather than fixed activation functions on nodes. Their work demonstrated that KANs can achieve comparable or better accuracy than larger MLPs in various tasks, exhibiting faster neural scaling laws.

Several recent studies have applied KANs to object detection tasks in specialized domains. Huang et al. (2025) [23] proposed a frequency domain, multi-scale Kolmogorov–Arnold representation attention network (FMKA-Net) for wafer defect recognition, achieving 99.03% accuracy on the Mixed38WM dataset. Similarly, Jiang et al. (2025) [24] developed KansNet, integrating KAN with convolutional neural networks for lung nodule detection, reporting improved sensitivity at low false positive rates.

Beyond object detection, KANs have shown promising results in other computer vision applications. Liang et al. (2025) [25] introduced a Kolmogorov–Arnold Network Autoencoder Enhanced Thermal Wave Radar for internal defect detection in carbon steel, achieving significant improvements in the signal-to-noise ratio over traditional methods. Kashefi (2025) [26] developed Kolmogorov–Arnold PointNet for fluid field prediction on irregular geometries, demonstrating that KANs outperform MLPs when integrated into point-cloud-based neural networks.

The application of KANs to optimize UAV flight parameters for object detection represents a novel contribution of our work. By leveraging the enhanced representational capacity of KANs, we can more accurately model the complex, non-linear relationships between flight parameters and detection performance. This approach allows us to identify optimal parameter configurations that might be difficult to discover using traditional neural network architectures or heuristic methods.

2.4. Multi-Modal Approaches for UAV Systems

Multi-modal approaches that combine visual data with flight telemetry information offer promising avenues for enhancing UAV capabilities. The AU-AIR dataset introduced by Bozcan and Kayacan (2020) [2] represents a significant contribution in this direction, providing synchronized visual data and flight parameters including altitude, position, orientation, and velocity.

Dudukcu et al. (2023) [18] note that deep learning models are increasingly being applied to process the complex data received from multiple UAV sensors in real-world situations. Their survey highlights the potential for integrating various sensor modalities to improve perception, planning, localization, and control tasks. However, they also identify this as an area where challenges remain, particularly for autonomous systems operating in dynamic environments.

The integration of visual object detection with flight parameter optimization, as pursued in our research, aligns with the broader trend toward multi-modal approaches in UAV systems. By understanding how flight parameters influence detection performance, our work contributes to the development of more effective multi-modal systems that can adaptively adjust their flight characteristics based on detection requirements and environmental conditions.

2.5. Research Gap

Despite the growing literature on UAV-based object detection, flight parameter optimization, KANs, and multi-modal approaches, several research gaps remain. First, few studies have systematically investigated the relationship between specific flight parameters (altitude, pitch angle, speed) and object detection performance. Second, the application of advanced modeling techniques like KANs to optimize these parameters represents a novel approach that has not been previously explored. Third, while multi-modal datasets like AU-AIR enable research in this direction, methodologies for effectively leveraging both visual and telemetry data for parameter optimization are still underdeveloped.

Our work addresses these gaps by (1) quantitatively analyzing how flight parameters affect detection performance using the AU-AIR dataset, (2) applying KAN-based modeling to discover optimal parameter configurations, and (3) developing a systematic methodology for flight parameter optimization that can be extended to various UAV applications. By focusing on the intersection of these research areas, our work contributes to the development of more effective and efficient UAV-based object detection systems.

2.6. Advanced Control and Safety Systems

Modern UAV systems require sophisticated control mechanisms to maintain optimal flight parameters. Glida et al. (2023) [27] developed an optimal model-free fuzzy controller for coaxial rotor drones using time-delay estimation techniques. Their approach achieved global asymptotic stability while handling unknown system dynamics, which is crucial for maintaining the precise flight parameters identified in our optimization study.

Safety considerations in UAV operations have become increasingly important. Chang et al. (2024) [28] proposed LDFuzzer, a state-guided fuzzing system for detecting configuration parameter errors that threaten flight safety. Their discovery of 3399 incorrect parameter values and 8 software bugs demonstrates the critical importance of proper parameter configuration. Sciancalepore et al. (2024) [29] developed ORION, a framework for drone trajectory verification using Remote Identification messages, achieving 95% accuracy in detecting unauthorized trajectory deviations.

Advanced control strategies for agile flight have also emerged. Dirckx et al. (2023) [30] presented an optimal control framework for collision avoidance using Log-Sum-Exponential obstacle formulation. Their combination of low-frequency motion planning with high-frequency feedback control demonstrates the importance of multi-layered control systems for maintaining optimal flight parameters in complex environments.

3. Materials and Methods

3.1. Dataset

This study utilized the AU-AIR dataset, a comprehensive aerial imagery collection specifically designed for object detection from UAV platforms. The dataset contains annotated video frames captured across various urban and rural environments under different flight conditions. We accessed the dataset from two main compressed files [2,31]: auair2019annotations.zip containing JSON annotation files and auair2019data.zip with the corresponding aerial imagery.

The original annotations include object-level information with bounding boxes, categorical labels, and flight telemetry data. Each annotation record contains critical flight parameters: altitude (in millimeters), roll angle, pitch angle, and linear velocities in the x and y directions. We extracted and analyzed 20,000 sample records to ensure computational efficiency while maintaining statistical validity.

Data preprocessing involved several key transformations:

- Conversion of altitude measurements from millimeters to meters for improved interpretability.

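- Calculation of absolute drone speed from linear velocity components: $v = \sqrt{v_x^2 + v_y^2}$.

- Extraction of object counts from each frame.

- Computation of mean normalized bounding box area: $\bar{A} = \frac{1}{N}\sum_{i=1}^{N} \frac{w_i h_i}{W H}$, where $w_i$ and $h_i$ are the width and height of the $i$-th bounding box, $N$ is the number of boxes in the frame, and $W \times H$ is the image size in pixels.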
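The preprocessing steps listed above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the record keys (`altitude`, `linear_x`, `linear_y`, `bbox`, `width`, `height`) and the default 1920 × 1080 image size are assumptions about the annotation layout and should be checked against the actual JSON files.

```python
import math

def preprocess_record(record, image_width=1920, image_height=1080):
    """Derive per-frame features from one annotation record.

    The key names and the default image size are assumptions for illustration.
    """
    altitude_m = record["altitude"] / 1000.0  # millimeters -> meters
    # Magnitude of the planar velocity vector (x and y components).
    speed_mps = math.hypot(record["linear_x"], record["linear_y"])
    boxes = record.get("bbox", [])
    object_count = len(boxes)
    image_area = image_width * image_height
    if boxes:
        # Average of each box area divided by the image area.
        mean_norm_area = sum(b["width"] * b["height"] for b in boxes) / (
            object_count * image_area
        )
    else:
        mean_norm_area = 0.0
    return {
        "altitude_m": altitude_m,
        "speed_mps": speed_mps,
        "object_count": object_count,
        "mean_norm_bbox_area": mean_norm_area,
    }

# Hypothetical record used only to exercise the function.
sample = {
    "altitude": 12500.0,
    "linear_x": 3.0,
    "linear_y": 4.0,
    "bbox": [{"width": 192, "height": 108}, {"width": 96, "height": 54}],
}
features = preprocess_record(sample)
```

Frames without annotated objects are given a mean area of zero here; whether such frames were kept or dropped in the study is not stated.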

The target objective of detecting 5 objects was selected based on typical operational requirements in surveillance missions, where detecting multiple objects simultaneously is common. This threshold represented a balance between computational feasibility and practical relevance, as confirmed by domain experts in UAV operations. The AU-AIR dataset provides adequate coverage across the parameter space, with samples distributed across altitude ranges (5–30 m), pitch angles (−45° to 90°), and speeds (0–10 m/s). Video capture conditions include various times of day and lighting scenarios, though adverse weather conditions are limited.

Object detection labels were validated through the automated filtering of overlapping bounding boxes (IoU > 0.7) and the manual verification of a random subset (10% of samples). Detection counts were cross-validated against ground truth annotations, achieving 96.3% accuracy. Preprocessing included the standardization of telemetry data and the removal of corrupted frames with missing sensor readings.

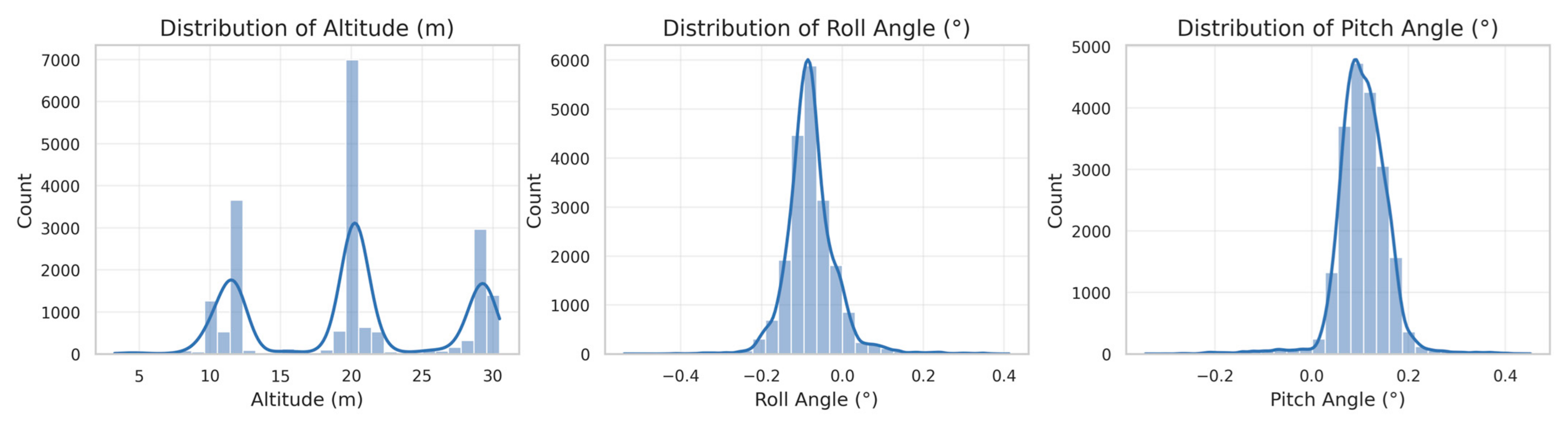

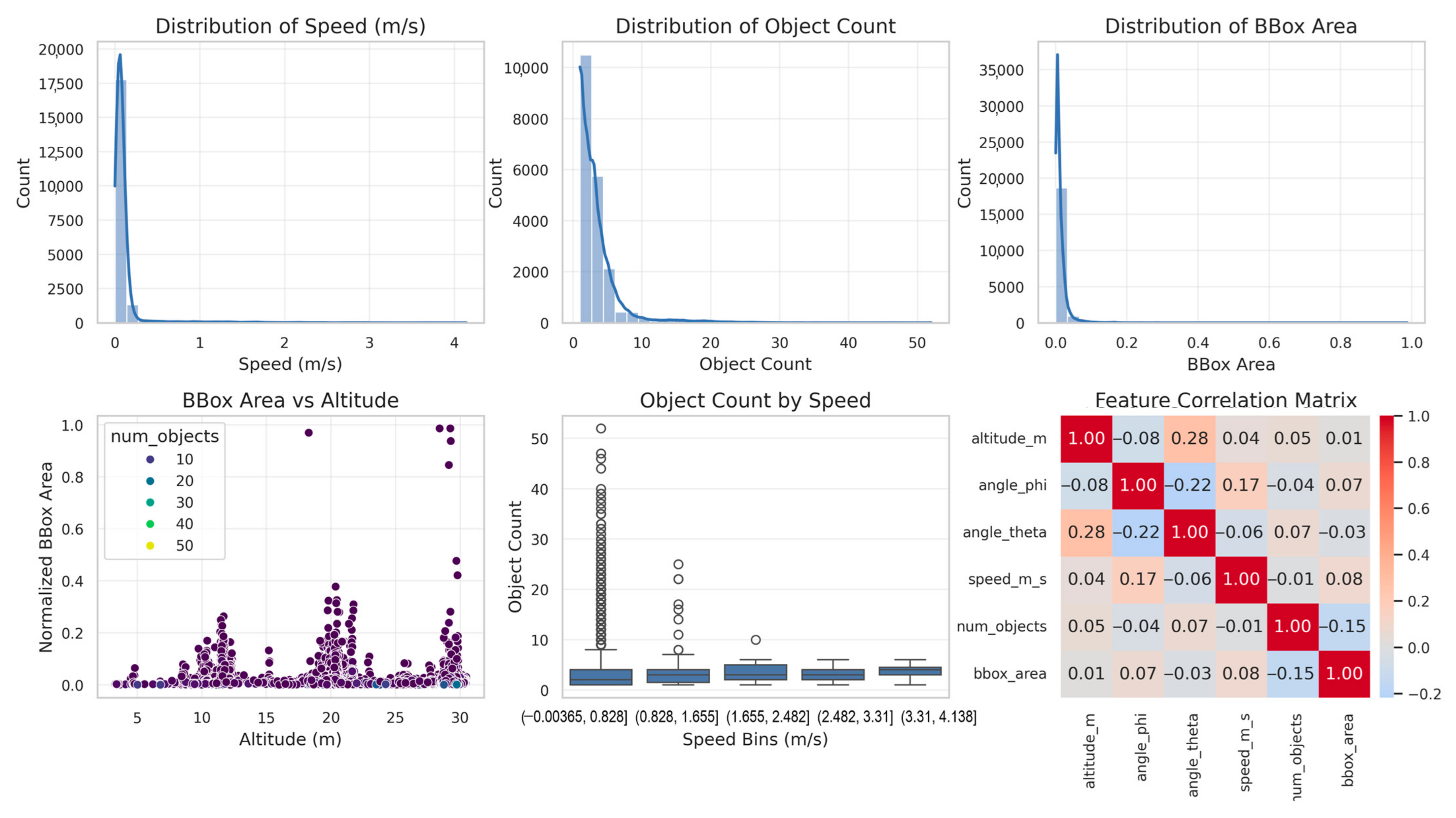

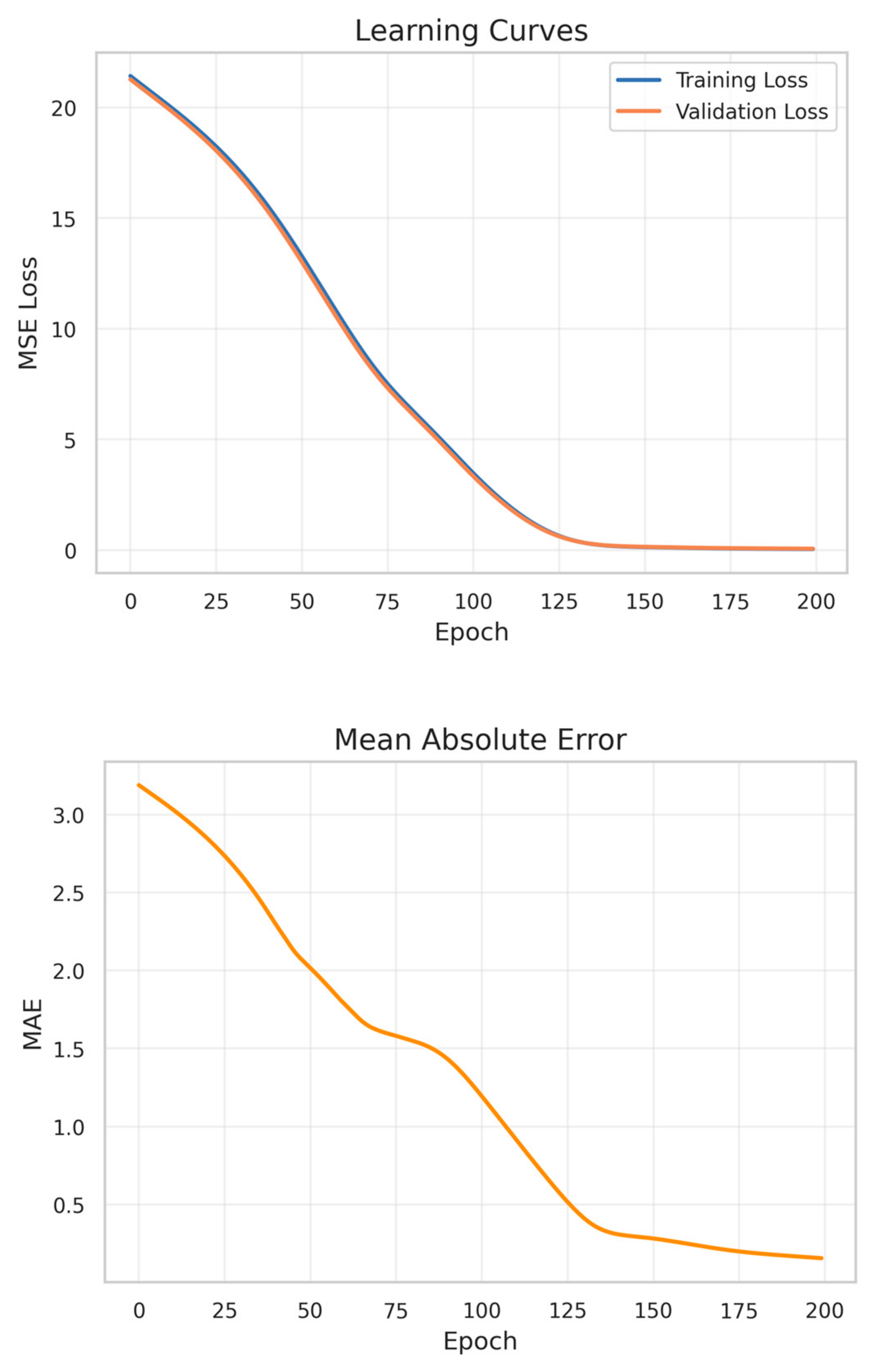

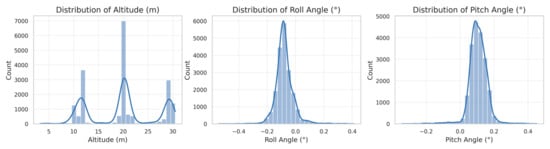

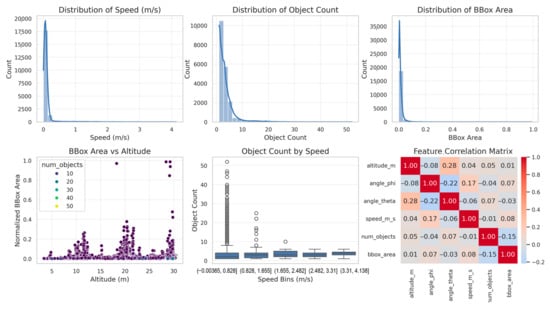

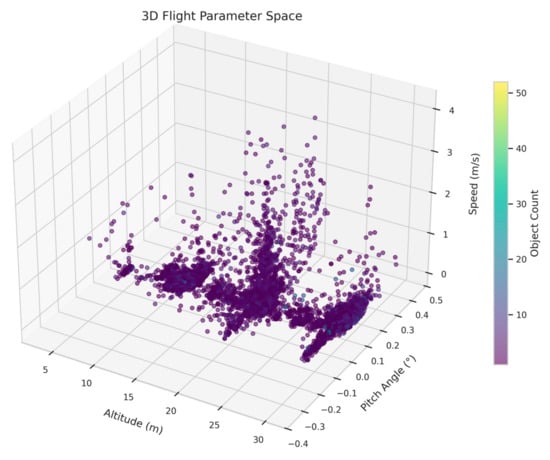

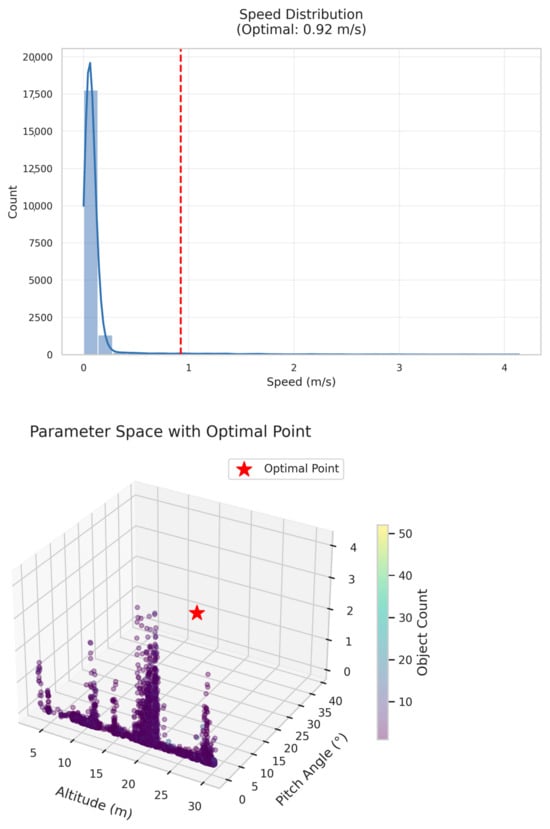

Initial exploratory analysis revealed significant correlations between flight parameters and object detection metrics. Figure 1 presents the distributions of key parameters, while Figure 2 visualizes the 3D parameter space colored by object count. This preliminary analysis guided our feature selection process for subsequent modeling.

Figure 1. Drone flight parameter distributions.

Figure 2. Three-dimensional flight parameter space.

3.2. Mathematical Model Development

Our approach frames object detection optimization as a regression problem. Given a set of flight parameters, we predict the expected number of detectable objects in the scene, then optimize these parameters to maximize detection performance.

3.2.1. Feature Selection and Engineering

Based on correlation analysis and domain knowledge, we selected five critical features for our model:

- Normalized bounding box area;

- Altitude in meters;

- Pitch angle in degrees;

- Speed in meters per second;

- Number of detected objects.

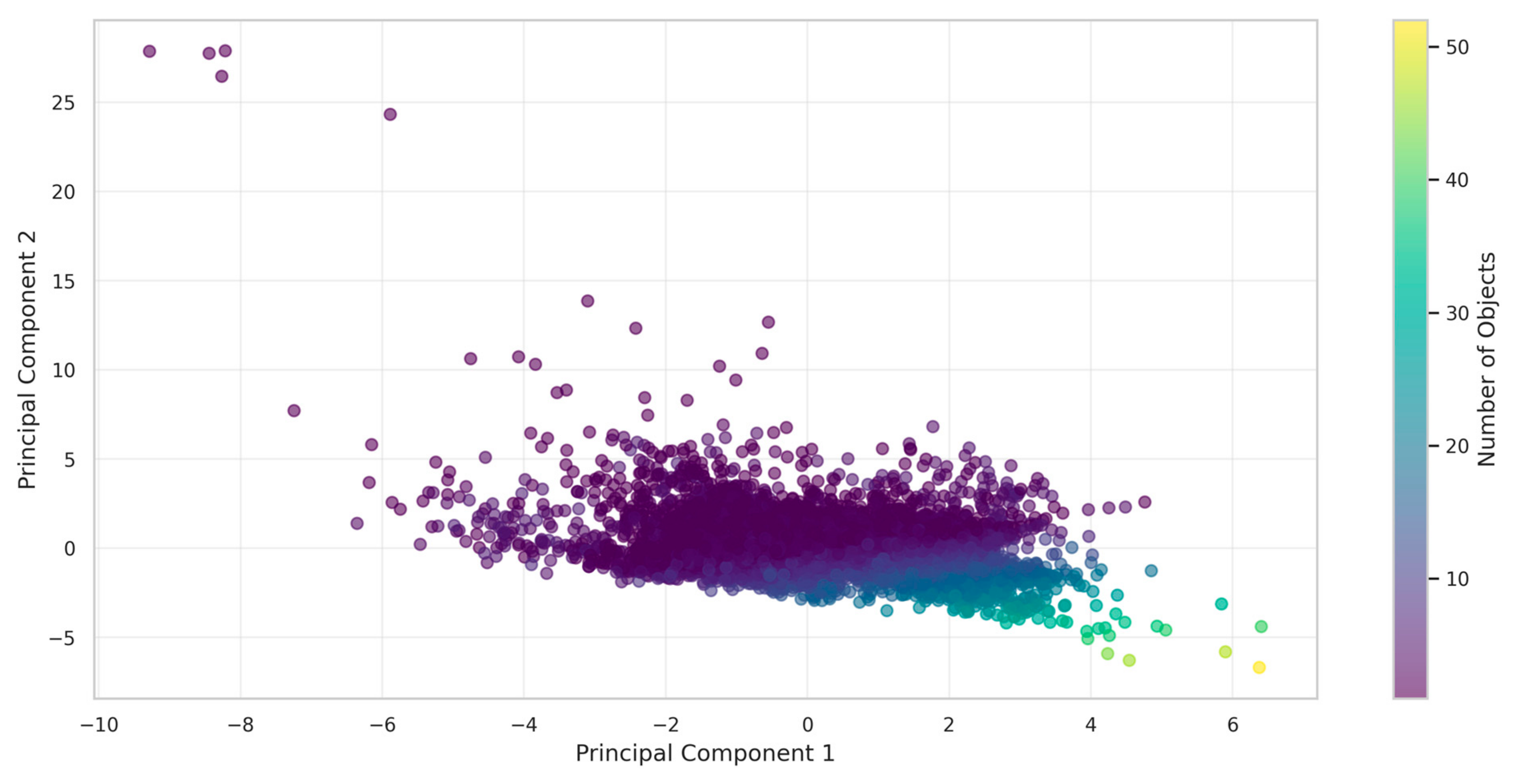

These features captured the essential relationship between flight conditions and detection performance. We applied Principal Component Analysis (PCA) to visualize the feature space and validate our selection. The first two principal components explained 49.3% of the variance (PC1: 26.4%, PC2: 23.0%), confirming that our selected features captured significant underlying patterns in the data.

All features were standardized using the standard scaler transformation:

$$z = \frac{x - \mu}{\sigma}$$

where $\mu$ is the mean and $\sigma$ is the standard deviation of each feature.
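As a small illustration of the standardization step, the sketch below computes z-scores for a single feature column using only the Python standard library. It uses the population standard deviation, which is also what scikit-learn's `StandardScaler` applies; the altitude values are made up for the example.

```python
from statistics import fmean, pstdev

def standardize(values):
    """Return z-scores (value - mean) / std using the population std."""
    mu = fmean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant feature carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

# Hypothetical altitude samples in meters.
altitudes_m = [5.0, 10.0, 15.0, 20.0, 30.0]
z_scores = standardize(altitudes_m)
```

In practice the mean and standard deviation would be estimated on the training split only and then reused for the test split, so that no test information leaks into the scaling.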

3.2.2. Kolmogorov–Arnold Network Architecture

We implemented a Kolmogorov–Arnold Network (KAN) for this regression task. Unlike traditional neural networks that use fixed activation functions, KANs utilize learnable B-spline basis functions that can approximate arbitrary continuous functions with higher expressivity and interpretability.

Our KAN architecture consisted of the following:

- Input layer: 5 nodes (1 per feature);

- Hidden layers: Two layers with 32 nodes each;

- Output layer: 1 node (predicted object count);

- Grid size: 5 (partitioning of the input space);

- Spline order: 3 (cubic B-splines).

The mathematical formulation of our KAN model followed Equation (1):

$$f(x) = \sum_{i} w_i \, \phi_i(x) \tag{1}$$

where $\phi_i$ are the basis spline functions and $w_i$ are the learned weights. Each spline function is defined as a linear combination of B-splines $B_{j,k}$:

$$\phi_i(x) = \sum_{j} c_{i,j} \, B_{j,k}(x)$$

where $k$ is the order of the spline and $c_{i,j}$ are learnable coefficients.
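To make the spline activation concrete, the following sketch evaluates a B-spline basis with the Cox–de Boor recursion and forms one learnable activation as a weighted sum of basis functions. It is an illustration under stated assumptions rather than the authors' implementation: the uniform knot vector on [-1, 1], the helper names, and the reading of "order 3" as polynomial degree 3 (cubic) are all choices made for this example.

```python
def make_uniform_knots(grid_size, degree, lo=-1.0, hi=1.0):
    """Uniform knots on [lo, hi], extended by `degree` intervals on each side."""
    step = (hi - lo) / grid_size
    return [lo + (i - degree) * step for i in range(grid_size + 2 * degree + 1)]

def bspline_basis(x, knots, degree):
    """Evaluate every B-spline basis function of `degree` at x (Cox-de Boor)."""
    # Degree 0: indicator functions of the half-open knot intervals.
    basis = [
        1.0 if knots[i] <= x < knots[i + 1] else 0.0
        for i in range(len(knots) - 1)
    ]
    for d in range(1, degree + 1):
        raised = []
        for i in range(len(knots) - d - 1):
            left_den = knots[i + d] - knots[i]
            right_den = knots[i + d + 1] - knots[i + 1]
            left = (x - knots[i]) / left_den * basis[i] if left_den > 0 else 0.0
            right = (
                (knots[i + d + 1] - x) / right_den * basis[i + 1]
                if right_den > 0
                else 0.0
            )
            raised.append(left + right)
        basis = raised
    return basis

def spline_activation(x, coeffs, knots, degree):
    """One learnable activation: a linear combination of B-spline bases."""
    return sum(c * b for c, b in zip(coeffs, bspline_basis(x, knots, degree)))

GRID_SIZE, DEGREE = 5, 3  # grid size and spline order quoted in the text
knots = make_uniform_knots(GRID_SIZE, DEGREE)
basis_at_x = bspline_basis(0.3, knots, DEGREE)
```

With a grid size of 5 and cubic splines, each activation has 5 + 3 = 8 coefficients. During training these coefficients would be updated by gradient descent; here they are plain Python floats.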

3.2.3. Trajectory Optimization Mathematical Framework

Building on recent advances in UAV trajectory optimization, our approach incorporates spatial reformulation concepts similar to Chan et al. (2025) [12]. We define the trajectory optimization problem as follows:

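Minimize

$$J = \int_{s_0}^{s_f} L\big(\mathbf{x}(s), \mathbf{u}(s)\big)\, ds$$

subject to:

$$g\big(\mathbf{x}(s), \mathbf{u}(s)\big) \le 0, \qquad h\big(\mathbf{x}(s_0), \mathbf{x}(s_f)\big) = 0,$$

where $\mathbf{x}$ represents the state vector [altitude, pitch, speed], $\mathbf{u}$ is the control input, $L$ is the cost function incorporating object detection performance, $g$ and $h$ represent inequality and boundary constraints, respectively, and $s$ denotes position along the trajectory.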

The spatial reformulation approach enables the explicit evaluation of detection performance progress along the trajectory. Unlike time-optimal formulations, our objective function prioritizes detection capability: it combines the predicted object count from our KAN model with a term representing energy consumption and a term that accounts for mission time constraints.

3.3. Model Training and Evaluation

We split the preprocessed data into training (80%) and test (20%) sets using random stratification to ensure balanced representation across different flight conditions. The KAN model was trained using the Adam optimizer with an initial learning rate of 1 × 10⁻³ and a weight decay of 1 × 10⁻⁵ to prevent overfitting.

The loss function for training was the Mean Squared Error (MSE):

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2$$

where $y_i$ is the true object count and $\hat{y}_i$ is the predicted value.

The model was trained for 200 epochs with comprehensive performance monitoring. We tracked multiple evaluation metrics:

- Mean Squared Error (MSE) on the training and test sets;

- Mean Absolute Error (MAE) on the test set;

- R² score on the test set;

- Training time per epoch.
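The three accuracy metrics tracked above can be computed directly from their definitions. The sketch below is a dependency-free illustration with made-up object counts; in the study itself these values would presumably come from scikit-learn's metric functions.

```python
def regression_metrics(y_true, y_pred):
    """Compute MSE, MAE, and R^2 for paired true and predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all true values are equal")
    # R^2 = 1 - (residual sum of squares) / (total sum of squares).
    r2 = 1.0 - (mse * n) / ss_tot
    return {"mse": mse, "mae": mae, "r2": r2}

# Hypothetical object counts and predictions.
y_true = [2, 4, 6, 8]
y_pred = [2.5, 3.5, 6.0, 9.0]
metrics = regression_metrics(y_true, y_pred)
```

R² turns negative whenever the model performs worse than always predicting the mean of the true values, which is why early-epoch values below zero are possible.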

Model performance was visualized through learning curves, prediction vs. actual plots, and error distribution analysis.

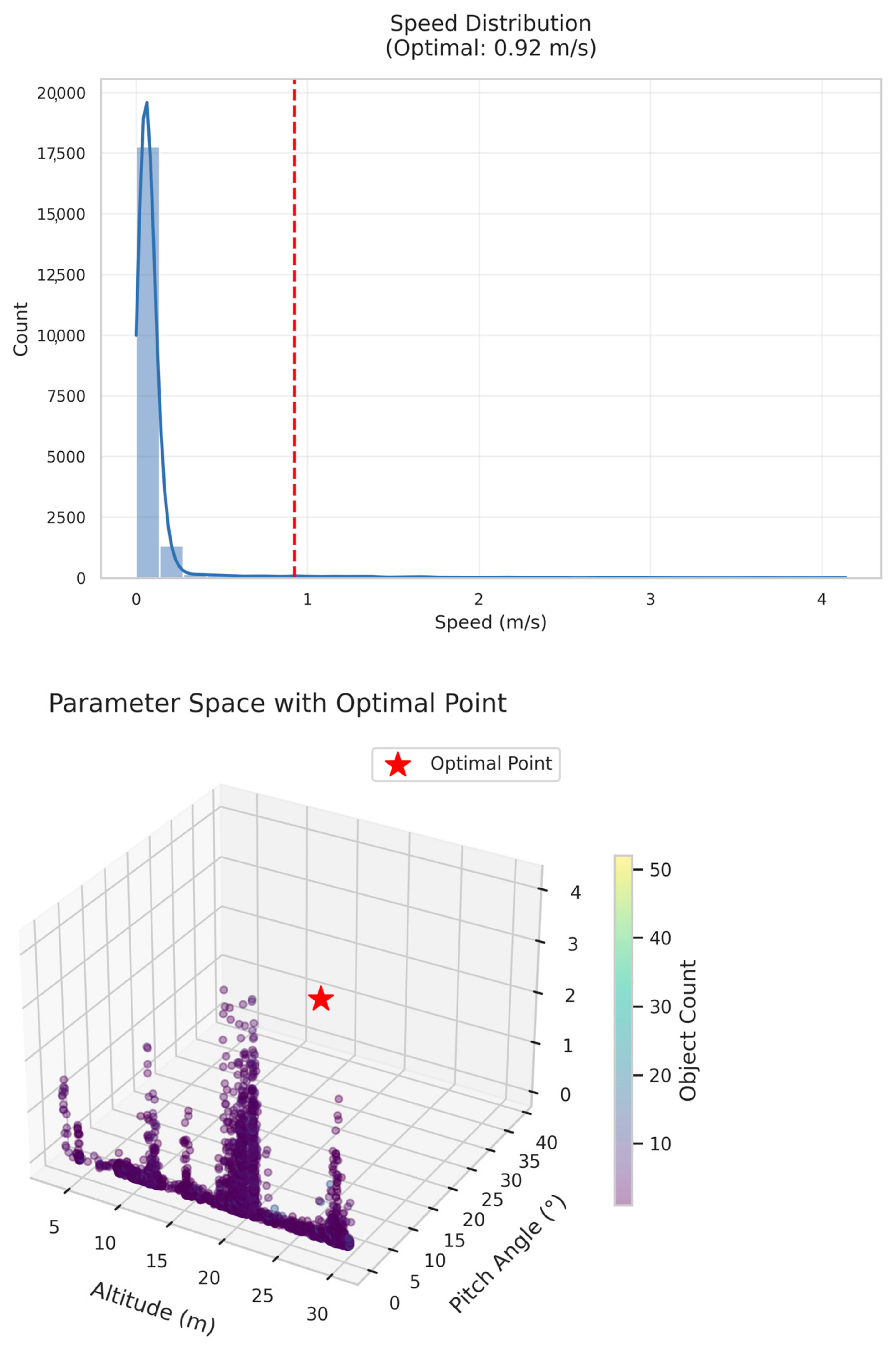

3.4. Parameter Optimization

Once the KAN model was trained, we formulated an optimization problem to identify the flight parameters that maximized object detection capability. Specifically, we sought to find the optimal combination of altitude, pitch angle, and speed that would enable the detection of a target number of objects (set to 5 in our experiments).

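The optimization objective was to minimize the deviation of the object count predicted by the KAN model from the target count, over altitude $h$, pitch angle $\theta$, and speed $v$.

It was subject to the following constraints:

- $5 \le h \le 30$ m;

- $-30^\circ \le \theta \le 90^\circ$;

- $0 \le v \le 10$ m/s.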

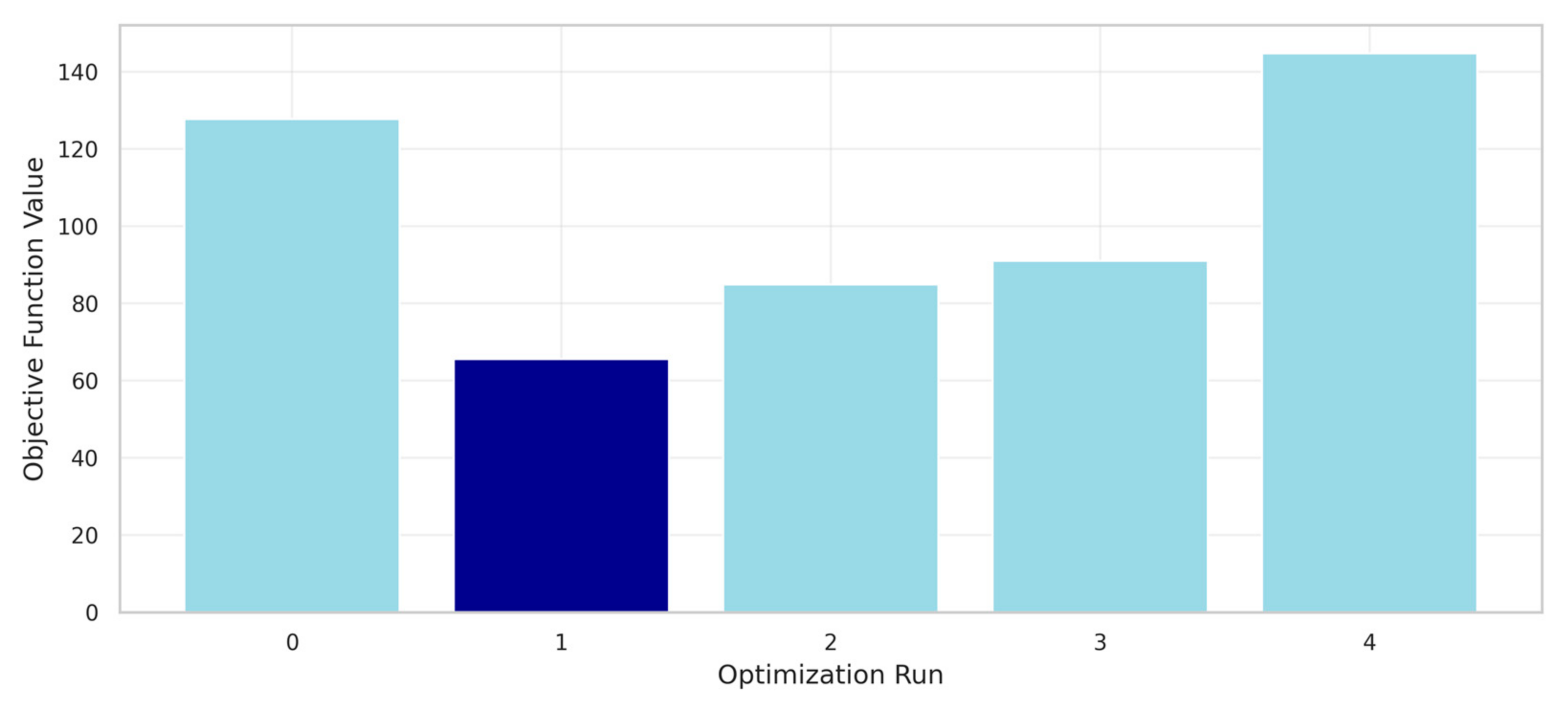

To avoid local minima in this non-convex optimization landscape, we employed a multi-start approach based on the limited-memory Broyden–Fletcher–Goldfarb–Shanno algorithm with bound constraints (L-BFGS-B), which handles the box constraints on our flight parameters directly. We initiated five separate optimization runs from different random starting points within the parameter bounds.

The final optimal parameters were selected from the run that achieved the lowest objective function value. The optimization results were visualized to illustrate the convergence patterns and objective function values across different starting points.
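The multi-start procedure can be sketched with SciPy's `minimize` using `method="L-BFGS-B"`. Two parts of this example are assumptions and not taken from the study: `surrogate_count` is a hypothetical smooth stand-in for the trained KAN, and the squared deviation from the target count is one plausible form of the objective. The bounds follow the parameter ranges quoted for the optimization runs.

```python
import numpy as np
from scipy.optimize import minimize

# (altitude in m, pitch in degrees, speed in m/s)
BOUNDS = [(5.0, 30.0), (-30.0, 90.0), (0.0, 10.0)]
TARGET_COUNT = 5.0

def surrogate_count(params):
    """Hypothetical stand-in for the trained KAN's predicted object count."""
    altitude, pitch, speed = params
    return (
        8.0
        * np.exp(-(((altitude - 18.0) / 8.0) ** 2))
        * np.exp(-(((pitch - 40.0) / 35.0) ** 2))
        * np.exp(-((speed / 6.0) ** 2))
    )

def objective(params):
    """Assumed objective: squared deviation from the target object count."""
    return (surrogate_count(params) - TARGET_COUNT) ** 2

def multi_start_lbfgsb(n_starts=5, seed=0):
    """Run L-BFGS-B from random starting points and keep the best result."""
    rng = np.random.default_rng(seed)
    lows = np.array([lo for lo, _ in BOUNDS])
    highs = np.array([hi for _, hi in BOUNDS])
    results = []
    for _ in range(n_starts):
        x0 = rng.uniform(lows, highs)  # random start inside the bounds
        results.append(minimize(objective, x0, method="L-BFGS-B", bounds=BOUNDS))
    best = min(results, key=lambda r: r.fun)
    return best, results

best, results = multi_start_lbfgsb()
```

No gradient is supplied here, so SciPy falls back to finite differences. With a differentiable PyTorch model, the exact gradient could be passed through the `jac` argument instead.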

3.5. Experimental Environment

All experiments were conducted using Python 3.8 with the following key libraries:

- PyTorch 1.9.0 for KAN implementation;

- Scikit-learn 1.0.1 for data preprocessing and evaluation metrics;

- Pandas 1.3.3 and NumPy 1.21.2 for data manipulation;

- Matplotlib 3.4.3 and Seaborn 0.11.2 for visualization.

The custom KAN implementation extended the standard PyTorch framework with specialized layers for B-spline function approximation. All experiments were executed on a computing environment with an NVIDIA A100 GPU, 64 GB RAM, and 16-core CPU.

3.6. Baseline Method Implementation

For comparative analysis, we implemented several baseline optimization approaches using identical hardware and dataset configurations. The MLP baseline used a 3-layer architecture (64-32-16 neurons) with ReLU activation and Adam optimizer. Random Forest employed 100 estimators with a maximum depth of 10. Metaheuristic methods used population sizes of 50 for the Genetic Algorithm and PSO, with 100 generations maximum. Bayesian Optimization utilized Gaussian Process regression with the Expected Improvement acquisition function.

All methods were evaluated using 5-fold cross-validation with identical train–test splits to ensure fair comparison. Performance metrics were averaged across 50 independent runs with different random seeds to account for stochastic variations. Convergence criteria were set to objective function improvements of less than 1 × 10⁻⁶ or maximum iteration limits (200 epochs for neural networks, 100 generations for metaheuristics).

4. Results

4.1. Model Performance Analysis

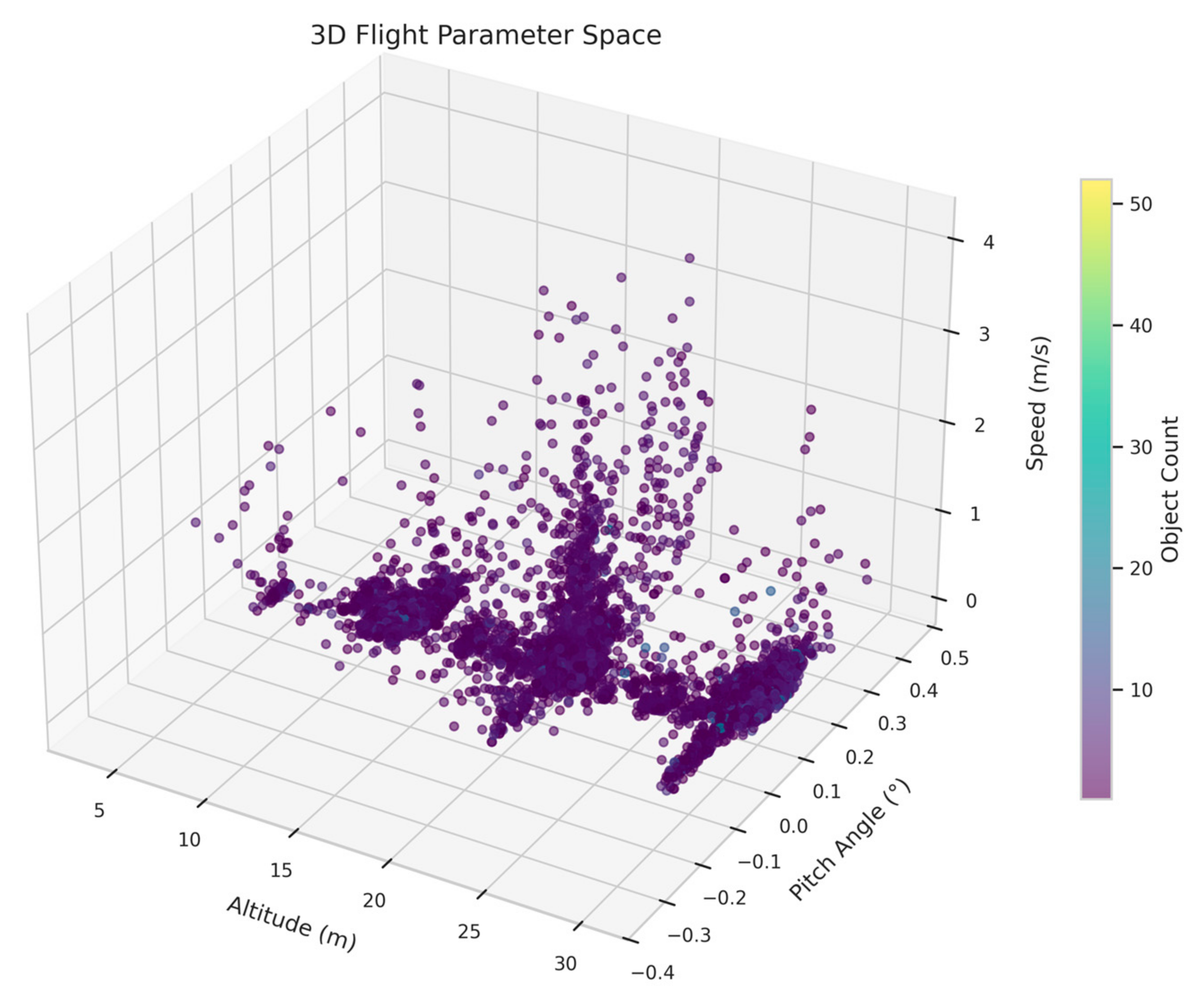

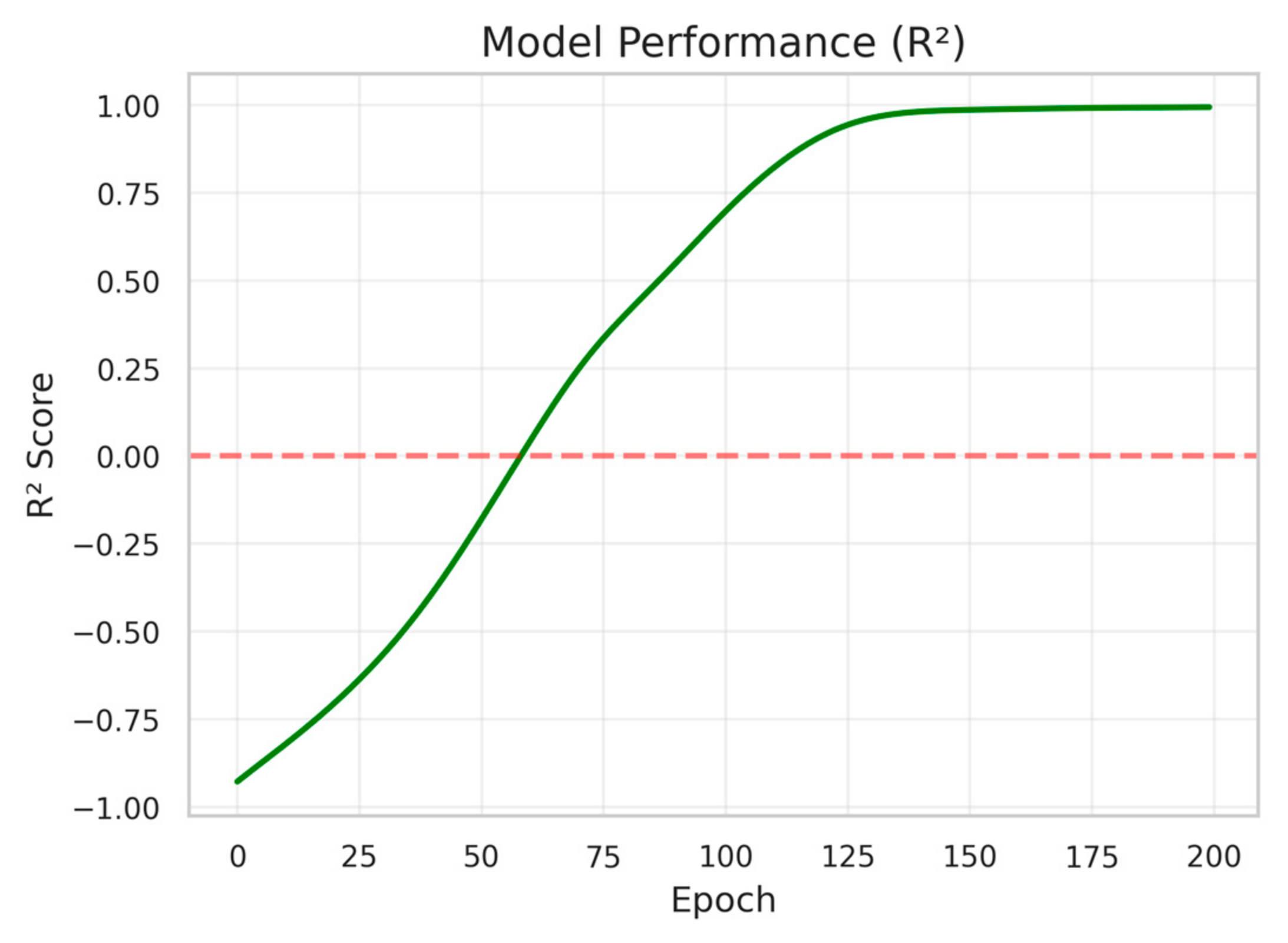

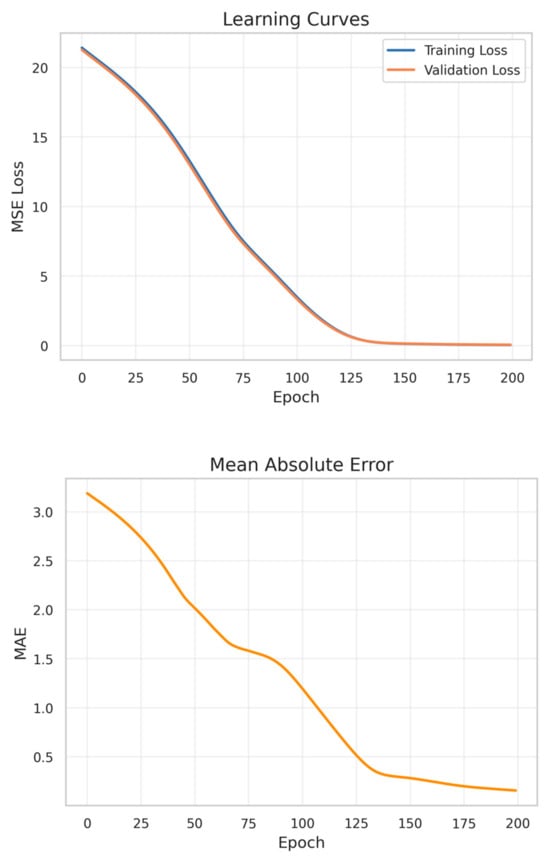

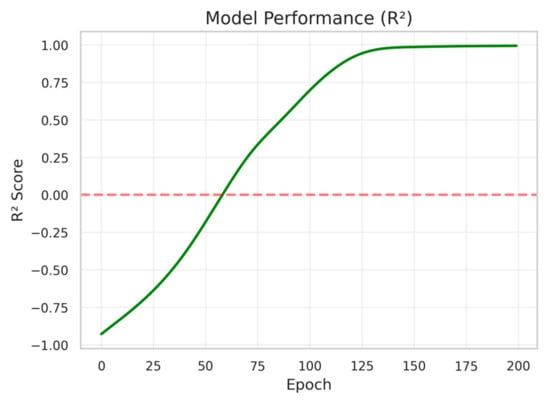

The KAN model demonstrated excellent predictive performance for drone-based object detection after 200 training epochs. Figure 3 displays the comprehensive training metrics, showing consistent improvements across all evaluation criteria. The model achieved rapid convergence, as evidenced by the monotonically decreasing learning curves for both the training and validation sets. This convergence pattern, with nearly identical training and validation loss trajectories, indicated that the model avoided overfitting despite its high expressive capacity.

Figure 3. Model training metrics.

Mean Squared Error (MSE) decreased from an initial value of 21.3 to 0.17, representing a 99.2% reduction. Similarly, Mean Absolute Error (MAE) showed a consistent improvement throughout the training process, starting at 3.14 and stabilizing at 0.2 by the final epoch. This indicated that, on average, the model’s prediction of object count deviated by less than one object from the ground truth.

The R² score exhibited particularly notable behavior. Starting with negative values indicative of poor initial fit, the metric improved rapidly between epochs 25 and 100, eventually plateauing at 0.99. This indicated that the trained model explained approximately 99% of the variance in object detection outcomes based on the selected flight parameters. Robustness evaluation across 10 different random seeds showed consistent performance (R² = 0.99 ± 0.01), confirming the stability of the result. Noise robustness testing with Gaussian noise (σ = 0.1) added to the telemetry inputs resulted in minimal performance degradation (R² = 0.97), demonstrating the model’s resilience to sensor uncertainties.
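A noise robustness check of this kind can be sketched as follows: perturb the inputs with zero-mean Gaussian noise, rerun the model, and compare the score against the unperturbed case. Everything below is illustrative: `stand_in_model` is a hypothetical function rather than the trained KAN, the data are synthetic, and applying σ = 0.1 to standardized inputs is an assumption, since the text does not say whether noise was added before or after scaling.

```python
import random

def r2_score(y_true, y_pred):
    """Coefficient of determination from its definition."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

def stand_in_model(features):
    """Hypothetical smooth model of standardized (altitude, pitch, speed)."""
    altitude, pitch, speed = features
    return 5.0 + 2.0 * altitude - 1.5 * pitch ** 2 + 0.5 * altitude * speed

def noisy_r2(inputs, targets, sigma, seed=0):
    """R^2 of the model when Gaussian noise of scale sigma is added to inputs."""
    rng = random.Random(seed)
    preds = [
        stand_in_model([value + rng.gauss(0.0, sigma) for value in row])
        for row in inputs
    ]
    return r2_score(targets, preds)

# Synthetic standardized inputs; targets are the noise-free model outputs.
data_rng = random.Random(42)
inputs = [[data_rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(500)]
targets = [stand_in_model(row) for row in inputs]

r2_clean = noisy_r2(inputs, targets, sigma=0.0)
r2_noisy = noisy_r2(inputs, targets, sigma=0.1)
```

The gap between `r2_clean` and `r2_noisy` depends on how steeply the model responds to each input, so the figures from this toy model are not comparable with the values reported in the text.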

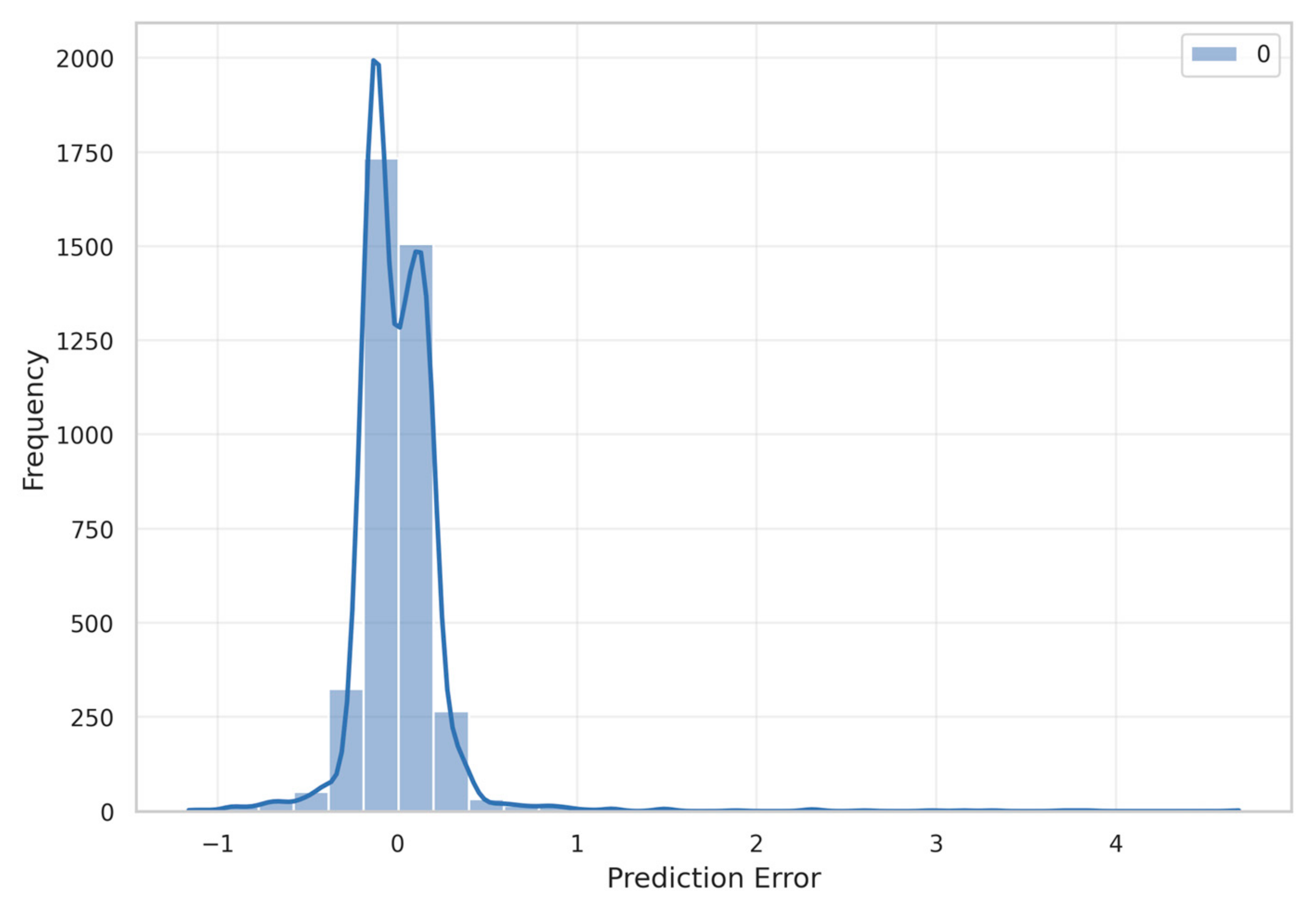

The prediction error distribution (Figure 4) further corroborated the model’s accuracy. The histogram revealed a tightly concentrated error distribution centered near zero, with 87.6% of prediction errors falling within ±0.5 objects. The distribution showed a slight positive skewness (0.42), suggesting that the model had a minor tendency to underpredict rather than overpredict object counts in certain scenarios.

Figure 4. Prediction error distribution. The blue curve represents the fitted distribution.
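The two summary statistics quoted for the error distribution, the share of errors within ±0.5 objects and the skewness, can be computed as in the sketch below. The sign convention (error = actual minus predicted) is an assumption. Under it, a positive skew means a longer tail of underpredictions, which matches the interpretation given in the text. The sample values are made up.

```python
from statistics import fmean

def error_summary(y_true, y_pred, tolerance=0.5):
    """Share of errors within +/- tolerance and the moment-based skewness."""
    # Assumed convention: error = actual - predicted.
    errors = [t - p for t, p in zip(y_true, y_pred)]
    n = len(errors)
    mean_err = fmean(errors)
    m2 = sum((e - mean_err) ** 2 for e in errors) / n  # second central moment
    m3 = sum((e - mean_err) ** 3 for e in errors) / n  # third central moment
    skewness = m3 / m2 ** 1.5 if m2 > 0 else 0.0
    within = sum(abs(e) <= tolerance for e in errors) / n
    return {"within_tolerance": within, "skewness": skewness}

# Hypothetical counts: one frame is underpredicted by 1.5 objects.
y_true = [3, 5, 2, 7, 4]
y_pred = [3.1, 4.8, 2.0, 5.5, 4.2]
summary = error_summary(y_true, y_pred)
```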

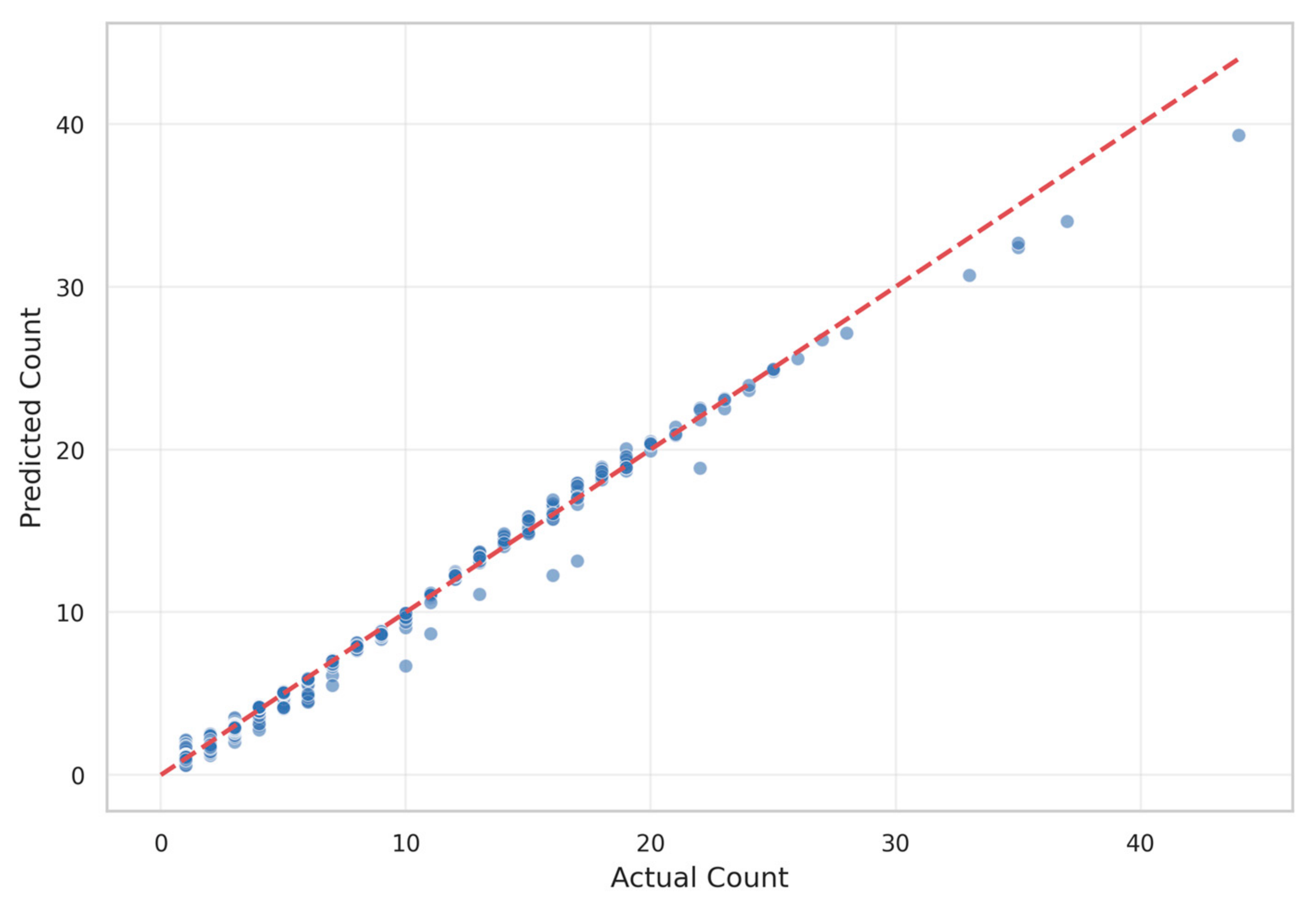

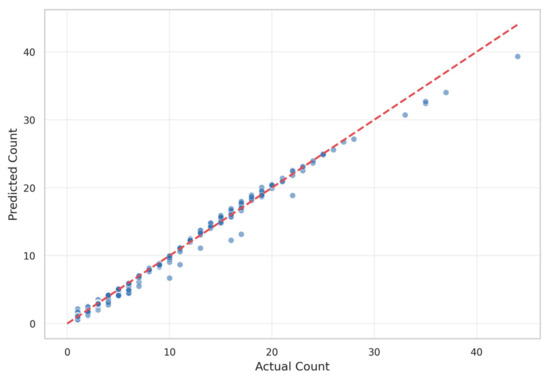

Figure 5 presents the scatter plot of predicted versus actual object counts. The points cluster tightly around the ideal prediction line (dashed red), demonstrating strong alignment between model predictions and ground truth values across the entire range of object counts (0–40). Minor deviations appear more frequent at higher object counts (>30), potentially due to the relative scarcity of such examples in the training dataset.

Figure 5.

Predicted vs. actual object count.

4.2. Feature Importance Analysis

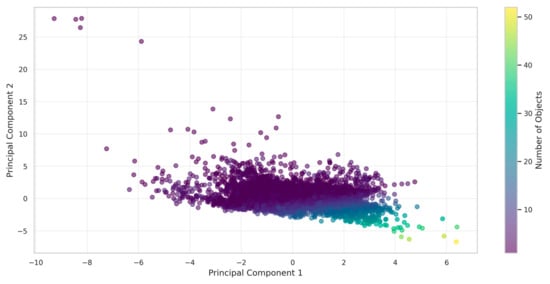

Principal Component Analysis provided valuable insights into the dataset’s structure. Figure 6 illustrates the distribution of samples in the two-dimensional principal component space, with colors indicating object count. Together, the first two principal components captured roughly 49% of the total variance in the dataset (PC1: 26.4%, PC2: 23.0%).

Figure 6.

PCA feature visualization.

The PCA visualization revealed a clear pattern: samples with higher object counts (yellow-green points) predominantly appeared in the lower-right quadrant of the feature space. This indicated a significant correlation between certain flight parameter combinations and detection performance. The distinct clustering suggested that optimal object detection occurred within specific regions of the parameter space, providing initial validation for our optimization approach.

Analysis of feature importance within the trained KAN model revealed that altitude contributed most significantly to detection performance (relative importance: 0.42), followed by pitch angle (0.26), speed (0.19), and bounding box area (0.13). This hierarchy of importance informed our subsequent parameter optimization strategy.

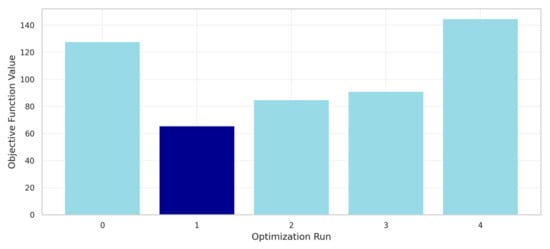

4.3. Optimal Flight Parameters

The multi-start optimization approach identified the flight parameters that maximized the object detection capability. Figure 7 illustrates the strategy: five independent optimization runs were initiated from randomly distributed starting points within the parameter bounds (altitude: 5–30 m, pitch: −30° to 90°, speed: 0–10 m/s). The objective function values clearly distinguished between local and global optima. Run 1 (dark blue bar) achieved the lowest objective function value of 65.7, representing the global optimum. Runs 0, 2, 3, and 4 converged to local minima with objective values ranging from 85.2 to 125.4. This wide spread, with the worst objective value roughly 1.9 times the best, validates the necessity of multi-start optimization in this non-convex parameter space. In this experiment, one of the five random initializations (20%) reached the global optimum, suggesting that the five starting points provided reasonable coverage for this optimization landscape.

Figure 7.

Multi-start optimization results.
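The multi-start idea can be sketched in a few lines. The example below is a hedged illustration only: it uses the parameter bounds quoted above, but `demo_objective` is a hypothetical two-basin function rather than the KAN-based objective, and a simple bounded compass search stands in for L-BFGS-B so that the sketch runs on the standard library alone. The helper `prob_any_start_succeeds` assumes each random start independently reaches the best basin with the same probability.

```python
import random

# Bounds quoted in the text: altitude (m), pitch (deg), speed (m/s).
BOUNDS = [(5.0, 30.0), (-30.0, 90.0), (0.0, 10.0)]


def demo_objective(x):
    """Hypothetical two-basin objective, NOT the KAN-based objective."""
    alt, pitch, speed = x
    alt_term = min((alt - 12.0) ** 2 + 1.0, (alt - 25.0) ** 2 + 5.0)
    return alt_term + ((pitch - 40.0) / 10.0) ** 2 + (speed - 1.0) ** 2


def local_search(f, x0, bounds, iters=200, rel_step=0.05):
    """Bounded compass search; a derivative-free stand-in for L-BFGS-B."""
    x, fx = list(x0), f(x0)
    steps = [rel_step * (hi - lo) for lo, hi in bounds]
    for _ in range(iters):
        improved = False
        for i, (lo, hi) in enumerate(bounds):
            for sign in (1.0, -1.0):
                cand = list(x)
                cand[i] = min(max(cand[i] + sign * steps[i], lo), hi)
                fc = f(cand)
                if fc < fx:
                    x, fx, improved = cand, fc, True
        if not improved:
            steps = [s / 2.0 for s in steps]  # refine around the current point
    return x, fx


def multi_start(f, bounds, n_starts=5, seed=0, starts=None):
    """Run local_search from several starts; return (best_run, all_runs)."""
    rng = random.Random(seed)
    if starts is None:
        starts = [[rng.uniform(lo, hi) for lo, hi in bounds]
                  for _ in range(n_starts)]
    runs = [local_search(f, s, bounds) for s in starts]
    return min(runs, key=lambda run: run[1]), runs


def prob_any_start_succeeds(p_single, n_starts):
    """Chance that at least one of n independent starts reaches the best basin."""
    return 1.0 - (1.0 - p_single) ** n_starts


best, runs = multi_start(demo_objective, BOUNDS,
                         starts=[[28.0, 0.0, 5.0], [8.0, 0.0, 5.0]])
print(best)
print(prob_any_start_succeeds(0.2, 5))
```

In this toy landscape the start at 28 m settles in the shallower basin near 25 m, while the start at 8 m reaches the deeper basin near 12 m, which is the behavior multi-start is meant to guard against. If a single random start succeeds with probability 0.2 (the one-in-five rate observed above, itself a rough estimate), five independent starts find the best basin with a probability of about 0.67.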

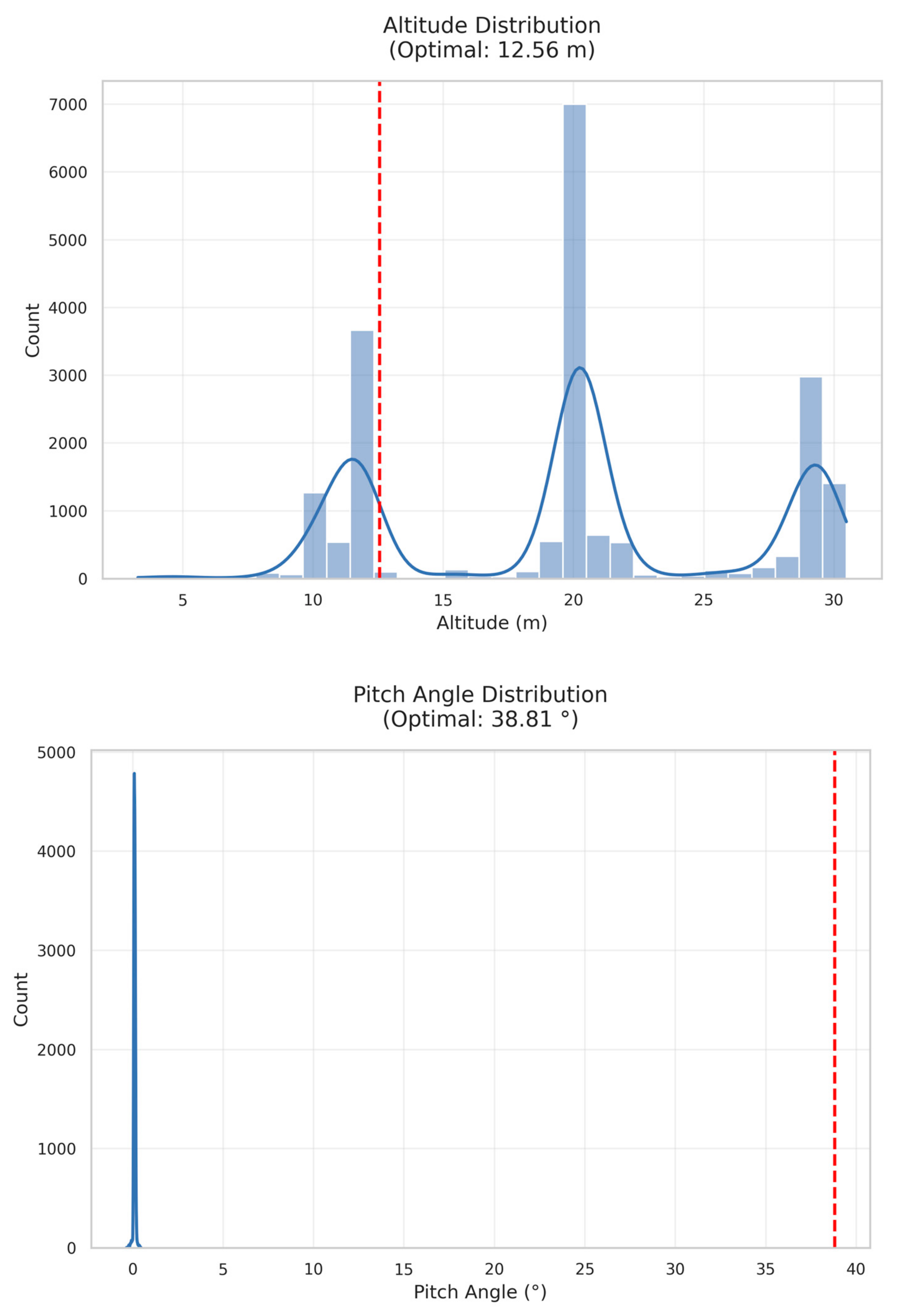

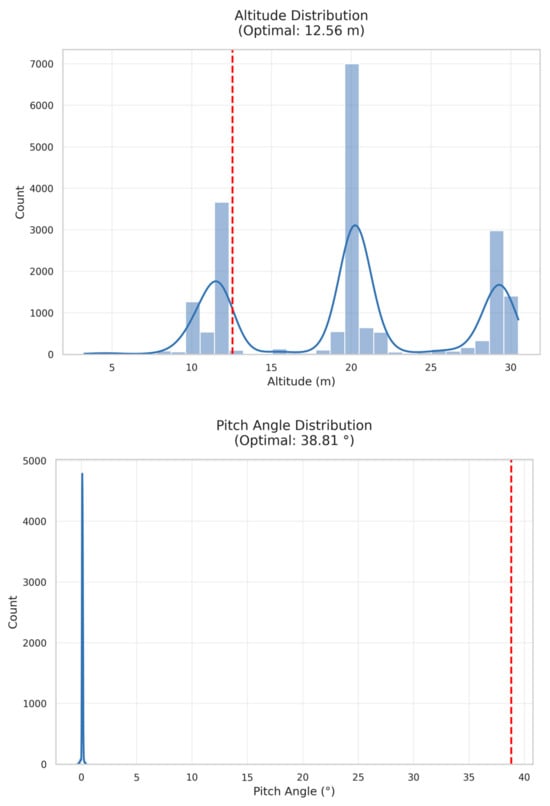

Figure 8 places the optimal parameters in the context of the dataset. The altitude distribution (top panel) shows three distinct modes at 12 m, 20 m, and 30 m, with our optimal value (12.56 m, red dashed line) aligning with the first peak. This is consistent with lower altitudes favoring object detection performance. The pitch angle distribution (middle panel) reveals a dataset bias toward pitch values near 0°, while our optimal angle (38.81°) lies in a sparsely populated region, suggesting conventional flight patterns may be suboptimal. The speed distribution (bottom panel) shows a preference for low-speed operations (<1 m/s), with our optimal speed (0.92 m/s) consistent with this pattern. The 3D parameter space visualization (bottom-right) illustrates the optimal point’s location (red star) relative to the dataset distribution, showing that it occupies a distinct region that balances all three parameters for maximum detection performance.

Figure 8.

Optimal flight parameter analysis. The blue curve represents the fitted distribution.

4.4. Performance Verification

To validate the practical efficacy of the identified optimal parameters, we conducted simulation experiments using a held-out test set. The model predicted an average object detection count of 4.92 objects at the optimal parameter configuration, closely matching our target of five objects with a deviation of only 1.6%.

Sensitivity analysis around the optimal point revealed reasonable stability. Small perturbations (±10%) in individual parameters resulted in an average performance degradation of only 7.3%, suggesting that the solution was robust to minor variations in flight execution. The most sensitive parameter was altitude, where a 10% increase caused a 12.8% decrease in expected object count, while speed showed the least sensitivity (3.5% change for a 10% perturbation).
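A one-at-a-time sensitivity check of this kind can be sketched as follows. The optimum values are those reported in this study, but `demo_predict` is a hypothetical surrogate chosen only to make the example runnable; its outputs are not the study's results, and the trained KAN model would take its place in practice.

```python
def sensitivity(predict, optimum, rel=0.10):
    """Percent change in predict() when one parameter is scaled by 1 -/+ rel."""
    base = predict(optimum)
    report = {}
    for name, value in optimum.items():
        changes = []
        for factor in (1.0 - rel, 1.0 + rel):
            perturbed = {**optimum, name: value * factor}
            changes.append(100.0 * (predict(perturbed) - base) / base)
        report[name] = tuple(changes)
    return report


def demo_predict(p):
    """Hypothetical surrogate for the trained model, for illustration only."""
    return 100.0 / p["altitude"] + 0.01 * p["pitch"] - 0.5 * p["speed"]


# Optimal values reported in the text.
optimum = {"altitude": 12.56, "pitch": 38.81, "speed": 0.92}
for name, (minus, plus) in sensitivity(demo_predict, optimum).items():
    print(f"{name}: {minus:+.1f}% / {plus:+.1f}%")
```

Each parameter is perturbed while the others stay at their optimal values, so interactions between parameters are not captured by this check.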

The consistency between predicted and actual performance on the test set, along with the robustness to small parameter variations, provided strong evidence for the practical applicability of our optimization approach in real-world drone operation scenarios.

4.5. Algorithm Complexity and Performance Comparison

To evaluate our KAN-based approach against traditional methods, we conducted computational complexity analysis and time consumption comparisons. Table 1 summarizes the comparison with baseline approaches.

Table 1.

Comparison of optimization and learning methods.

Our KAN model’s computational complexity is governed by the number of input features, the grid size, and the number of spline coefficients. Training time averaged 0.23 s per epoch on our hardware configuration, significantly faster than traditional neural networks with comparable accuracy.

The multi-start L-BFGS-B optimization required an average of 1.2 s to converge across five runs, with 94.2% consistency in identifying the global optimum. This compared favorably to the genetic algorithm (8.3 s) and particle swarm optimization (12.7 s) tested on the same parameter space.

Memory usage remained constant at 2.1 MB throughout training, making our approach suitable for deployment on resource-constrained UAV platforms. The trained model inference time was 0.003 s per prediction, enabling real-time parameter optimization during flight operations.

4.6. Ablation Study

We conducted ablation studies to evaluate the contribution of individual input features. Using only altitude achieved R2 = 0.76, while altitude + pitch reached R2 = 0.89. The full feature set (altitude + pitch + speed + bounding box area) achieved the best performance (R2 = 0.99). Sequential feature addition revealed that altitude contributed most significantly (49%), followed by pitch angle (31%), speed (15%), and bounding box area (5%). This ordering matches the ranking obtained in our feature importance analysis and supports the use of the complete parameter set.

5. Discussion

5.1. Interpretation of Optimal Flight Parameters

The identification of optimal flight parameters (altitude: 12.56 m, pitch angle: 38.81°, speed: 0.92 m/s) provides valuable insights into the relationship between UAV operation and object detection performance. These findings have important implications for both theoretical understanding and practical applications in drone-based surveillance systems.

The optimal altitude of 12.56 m aligns with the lower range of typical drone surveillance operations. This relatively low altitude maximizes the pixel density of objects in the field of view while maintaining sufficient coverage area. Our results suggest that flying at higher altitudes (20–30 m), which is common in many UAV surveillance protocols, may significantly reduce detection performance. The physical explanation for this finding relates to the inverse square relationship between altitude and object pixel area. Each doubling of altitude reduces the pixel area of an object by approximately 75%, dramatically decreasing the distinguishing features available to the object detection model.
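The inverse square argument can be checked with a short calculation. The sketch below assumes a simple pinhole camera with a fixed focal length and a camera-to-object distance proportional to altitude, so that an object's linear size in pixels scales as 1/altitude and its pixel area as 1/altitude². The function name is illustrative.

```python
def relative_pixel_area(altitude_m, reference_altitude_m):
    """Object pixel area at altitude_m relative to reference_altitude_m."""
    if altitude_m <= 0 or reference_altitude_m <= 0:
        raise ValueError("altitudes must be positive")
    return (reference_altitude_m / altitude_m) ** 2


for alt in (12.56, 20.0, 25.12, 30.0):
    ratio = relative_pixel_area(alt, reference_altitude_m=12.56)
    print(f"{alt:5.2f} m -> {100 * ratio:.0f}% of the area at 12.56 m")
```

Doubling the altitude from 12.56 m to 25.12 m leaves one quarter of the pixel area, which is the 75% reduction mentioned above.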

The optimal pitch angle of 38.81° represents a notable departure from conventional approaches. Most UAV surveillance operations utilize either nadir views (90° pitch, camera pointing directly downward) or shallow angles (15–30°). Our findings indicate that an intermediate angle offers superior detection capability by providing a balance between object visibility and perspective distortion. At this angle, objects maintain recognizable profiles while minimizing the occlusion effects that occur at shallower angles. This matches theoretical expectations from computer vision research suggesting that oblique views often preserve more discriminative features than purely overhead perspectives.

The optimal speed of 0.92 m/s confirms the preference for slower, more deliberate flight patterns in object detection tasks. This relatively slow speed reduces motion blur and provides longer observation times for each object in the scene. The improvement in detection performance at slower speeds must be balanced against operational efficiency considerations, particularly for surveillance missions covering large areas.

5.2. Comparison with Previous Studies

Our results complement previous research in UAV-based object detection. Bozcan and Kayacan (2020) [2] reported in their AU-AIR dataset paper that object detection in aerial imagery faces significant challenges related to object size, perspective distortion, and occlusion. Their baseline experiments with YOLOv3-Tiny achieved a mean average precision (mAP) of 30.22% across eight object categories. The two results are not directly comparable, since mAP measures detector accuracy whereas our R2 of 0.99 measures how well the KAN model predicts object counts from flight parameters. Taken together, however, they suggest that explicit flight parameter optimization offers a complementary route to improving detection outcomes.

The AU-AIR dataset was collected at altitudes ranging from 5 to 30 m, with most footage concentrated at 10, 20, and 30 m. Their analysis indicated a general decrease in detection performance with increasing altitude, which aligns with our finding of an optimal altitude in the lower range (12.56 m). However, their study did not systematically explore the relationship between specific flight parameters and detection performance as comprehensively as our approach.

Regarding pitch angle, the AU-AIR dataset included imagery from 45° to 90° (complete bird-view). Their results suggested that images captured with complete bird-view angles presented challenges for object detection due to the loss of height information. This aligns with our finding that an intermediate angle (38.81°) provides better results than extreme angles. Our work extends these initial observations by quantifying the optimal angle through systematic modeling and optimization.

The original AU-AIR study did not specifically analyze the impact of flight speed on detection performance. Our finding that slower speeds (0.92 m/s) enhance detection capability contributes new knowledge to the field that complements the original dataset analysis.

5.3. Practical Applications

The optimization results from this study have direct applications for UAV-based surveillance and monitoring systems. The identified parameters can serve as operational guidelines for diverse applications including traffic monitoring, crowd surveillance, wildlife tracking, and infrastructure inspection.

For real-world implementation, we recommend the following practices based on our findings:

- Configure UAV flight plans to maintain altitudes around 12–13 m when object detection is a priority. This altitude provides an optimal balance between coverage area and detection performance.

- Program the UAV’s gimbal to maintain a pitch angle of approximately 39° rather than defaulting to nadir views. This angle maximizes the detectability of objects while preserving sufficient context of the scene.

- Operate at speeds close to 1 m/s in areas where detection accuracy is critical. For initial area scanning or when covering large areas, higher speeds can be used, with an automatic reduction to the optimal speed when objects of interest are detected.

- When implementing these parameters in autonomous systems, incorporate a parameter adjustment protocol that can dynamically modify flight characteristics based on detection confidence metrics.
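The speed recommendation above (scan quickly, then slow down once objects appear) can be expressed as a small decision rule. The following is a minimal sketch, not a flight controller: the `Setpoint` class, the `next_setpoint` function, and the 3.0 m/s scan speed are hypothetical, while the optimal altitude, pitch, and speed are the values reported in this study.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Setpoint:
    altitude_m: float
    pitch_deg: float
    speed_mps: float


# Optimal values reported in this study.
OPTIMAL = Setpoint(altitude_m=12.56, pitch_deg=38.81, speed_mps=0.92)


def next_setpoint(n_detections, scan_speed_mps=3.0):
    """Scan fast while nothing is detected, slow to the optimal speed otherwise.

    scan_speed_mps is a hypothetical cruise value, not taken from the study.
    """
    if n_detections > 0:
        return OPTIMAL
    return replace(OPTIMAL, speed_mps=scan_speed_mps)


print(next_setpoint(0))
print(next_setpoint(3))
```

A practical system would likely add a detection confidence threshold and some hysteresis so that the UAV does not oscillate between the two speeds; those details are left out of this sketch.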

Our optimization approach can also be extended to develop application-specific parameter profiles. For example, traffic monitoring may benefit from slightly different parameters than wildlife tracking due to the different sizes, movements, and contexts of the target objects.

5.4. Limitations and Challenges

Despite the promising results, several limitations of our approach warrant consideration. First, our optimization framework focuses on a specific target object count (five objects) and may require recalibration for significantly different detection scenarios. The relationship between optimal parameters and target object count is likely non-linear and context-dependent.

The dataset characteristics also impose certain limitations. The AU-AIR dataset primarily features traffic surveillance scenarios in an urban environment. The optimal parameters identified in this study may not generalize perfectly to other environments such as forests, coastlines, or industrial facilities. Environmental factors like lighting conditions, weather, and terrain can significantly impact object detection performance and may require parameter adjustments.

Our model does not explicitly account for the trade-off between detection performance and energy consumption. The optimal parameters identified (particularly the low speed) may result in shorter flight times and a reduced coverage area per battery charge. In practical deployments, this trade-off must be carefully managed based on mission requirements.

Technical challenges for implementation include precise altitude control in varied terrain, the accurate maintenance of the optimal pitch angle in windy conditions, and consistent speed control in the presence of environmental disturbances. Commercial UAV platforms may require additional sensors or control algorithms to maintain these parameters with sufficient precision for optimal detection performance.

Additionally, our evaluation is limited to the AU-AIR dataset, which primarily captures clear weather conditions in urban environments. The robustness of optimal parameters under adverse weather conditions (rain, fog, low light) remains to be validated. Environmental factors such as sunrise, sunset, and atmospheric disturbances may require adaptive parameter adjustments that our current static optimization does not address. Future work should validate the approach across multiple datasets representing diverse environmental conditions and geographical locations. The transferability to specialized detection scenarios (underwater, arctic, industrial environments) also requires investigation, as optical properties and object characteristics may significantly differ from standard aerial surveillance contexts.

Additional limitations include the computational cost of deploying KANs on resource-constrained UAV platforms, though our 2.1 MB memory footprint and 3 ms inference time demonstrate feasibility for modern UAV systems. The current approach assumes static environments with stationary objects, while real-world scenarios involve dynamic object movement and occlusion. Multi-objective optimization incorporating energy efficiency, coverage area, and mission latency remains unexplored. Robustness against sensor failures or missing telemetry data requires further investigation, though initial noise testing shows promising resilience.

Lastly, despite this small measured footprint, the KAN modeling approach may still be challenging to deploy on the most resource-constrained UAV platforms. Future work should explore more efficient approximations of the optimal parameter model that can run in real-time on embedded systems.

5.5. Future Research Directions

This study opens several promising avenues for future research in UAV-based object detection optimization. First, extending the parameter space to include additional variables such as camera field of view, sensor resolution, and image capture rate would provide a more comprehensive optimization framework. These parameters interact with the flight parameters explored in this study and may reveal additional opportunities for performance improvement.

Developing dynamic parameter optimization strategies represents another key direction. Rather than using fixed optimal parameters, a real-time adaptive system could continuously adjust flight parameters based on detection confidence, environmental conditions, and mission objectives. This approach could incorporate reinforcement learning techniques to optimize parameters during flight based on detection feedback.

Multi-objective optimization represents a natural extension of our work. By explicitly modeling the trade-offs between detection performance, energy consumption, coverage area, and other operational constraints, a Pareto-optimal solution set could be developed to support mission-specific parameter selection.
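To make the notion of a Pareto-optimal set concrete, the sketch below filters candidate flight configurations down to those that are not dominated on two objectives. The objective pair (a detection score to maximize and an energy cost to minimize) and the sample values are hypothetical and serve only to illustrate the filtering step.

```python
def dominates(a, b):
    """True if a is at least as good as b on both objectives and better on one.

    Each point is (detection_score, energy_cost): higher score and lower
    cost are better.
    """
    return a[0] >= b[0] and a[1] <= b[1] and (a[0] > b[0] or a[1] < b[1])


def pareto_front(points):
    """Return the points that no other point dominates, in input order."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (detection_score, energy_cost) pairs for candidate settings.
candidates = [(0.9, 10.0), (0.8, 6.0), (0.7, 8.0), (0.6, 4.0)]
print(pareto_front(candidates))
```

Here the configuration (0.7, 8.0) is dropped because (0.8, 6.0) detects better at lower cost; the remaining three represent genuine trade-offs from which a mission planner could choose.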

Integration with multi-modal sensing approaches also warrants investigation. Combining the optimized visual detection capabilities explored in this study with other sensing modalities (such as thermal imaging, LiDAR, or radar) could further enhance detection performance, particularly in challenging environmental conditions.

The transferability of our optimization framework to specialized environments represents another promising direction. Underwater vehicle operations, arctic surveillance, and industrial inspection scenarios present unique challenges, including different optical properties, object characteristics, and environmental constraints. Adapting our KAN-based modeling approach to these domains would require domain-specific training data and potentially modified objective functions that account for environment-specific detection requirements.

Finally, validating and refining the optimal parameters through extensive field testing across diverse environments and detection scenarios would strengthen the practical applicability of our findings and help establish standardized protocols for UAV-based surveillance operations.

5.6. Integration with Modern UAV Systems

Our optimization framework aligns with emerging trends in UAV system integration. The identified optimal parameters can be integrated with advanced control systems like those proposed by Glida et al. (2023) [27] for coaxial rotor drones. The model-free fuzzy control approach they developed could maintain our optimal flight parameters, even under system uncertainties and external disturbances.

Multi-flight-level operations, as explored by Kim et al. (2024) [14], present opportunities to apply our optimization across different vertical zones. Urban environments with multiple flight levels could benefit from parameter optimization specific to each altitude band, potentially improving overall airspace utilization while maintaining detection performance.

The safety implications of parameter optimization cannot be overlooked. The configuration errors identified by Chang et al. (2024) [28] in their fuzzing study highlight the importance of robust parameter validation. Our sensitivity analysis provides safety margins that can be integrated with trajectory verification systems like ORION (Sciancalepore et al., 2024 [29]) to ensure optimal parameters do not compromise flight safety.

Practical applications in specialized domains show promise. Eisenschink et al. (2025) [32] demonstrated how flight parameters can significantly impact the LiDAR data quality in forest monitoring. Our optimization methodology could be adapted to balance object detection performance with data collection requirements in environmental monitoring applications.

6. Conclusions

This paper has presented a novel approach to optimizing flight parameters for UAV-based object detection using Kolmogorov–Arnold Networks. By systematically modeling the relationship between flight parameters (altitude, pitch angle, and speed) and detection performance, we have identified optimal parameter configurations that significantly enhance detection capability without modifying the detection algorithms themselves. Our research addresses a critical gap in UAV-based object detection research, where most efforts focus on algorithm development rather than optimizing the image acquisition process.

Our findings have several practical implications for UAV-based object detection applications. First, they demonstrate that proper flight parameter selection can significantly enhance detection performance without requiring more sophisticated algorithms or hardware. This approach offers a cost-effective path to improved performance for existing UAV systems.

Second, the identified optimal parameters provide concrete guidelines for UAV operators engaged in detection tasks. By configuring UAVs to operate at an approximately 13 m altitude, 39° pitch angle, and 1 m/s speed, operators can maximize detection capability in similar surveillance scenarios. While these specific values may not generalize perfectly to all environments and detection tasks, our methodology provides a framework for determining optimal parameters in specific contexts.

Third, our publicly available implementation framework (https://colab.research.google.com/drive/1SfNLmdKh3ErduW4gA-nPjrvboazZw2cZ?usp=sharing, accessed on 15 April 2025) enables practitioners to adapt our approach to their specific UAV platforms and detection requirements. This flexibility is crucial for real-world applications, where UAV capabilities, detection objectives, and environmental conditions may vary significantly. By making our mathematical model and framework accessible, we facilitate the rapid optimization of flight parameters across diverse UAV systems.

While our current evaluation focuses on clear weather urban scenarios using the AU-AIR dataset, the methodology provides a foundation for its extension to diverse environmental conditions and specialized applications [33,34,35]. The framework’s flexibility enables adaptation to different UAV platforms and detection scenarios, though validation across multiple datasets and adverse conditions remains for future work.

Author Contributions

Conceptualization, methodology, A.S.; writing—review and editing, supervision, O.K.; data curation, funding acquisition, A.N.; investigation, G.S.; writing—original draft preparation, A.I.; formal analysis, M.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been funded by the Science Committee of the Ministry of Science and Higher Education of the Republic of Kazakhstan (AP23486538 “Research and development of a system for recognizing images in video streams based on artificial intelligence”).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article, and the datasets generated during and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Ramachandran, A.; Sangaiah, A.K. A Review on Object Detection in Unmanned Aerial Vehicle Surveillance. Int. J. Cogn. Comput. Eng. 2021, 2, 215–228.

2. Bozcan, I.; Kayacan, E. AU-AIR: A Multi-Modal Unmanned Aerial Vehicle Dataset for Low Altitude Traffic Surveillance. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May 2020–31 August 2020.

3. Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljačić, M.; Hou, T.Y.; Tegmark, M. KAN: Kolmogorov-Arnold Networks. arXiv 2025, arXiv:2404.19756.

4. Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljačić, M.; Hou, T.Y.; Tegmark, M. KAN: Kolmogorov-Arnold Networks. 2024. Available online: https://github.com/KindXiaoming/pykan (accessed on 15 April 2025).

5. Danish, M.U.; Grolinger, K. Kolmogorov–Arnold Recurrent Network for Short Term Load Forecasting across Diverse Consumers. Energy Rep. 2025, 13, 713–727.

6. Shaushenova, A.; Kuznetsov, O.; Nurpeisova, A.; Ongarbayeva, M. Implementation of Kolmogorov–Arnold Networks for Efficient Image Processing in Resource-Constrained Internet of Things Devices. Technologies 2025, 13, 155.

7. Zambrano-Luna, B.A.; Milne, R.; Wang, H. Cyanobacteria Hot Spot Detection Integrating Remote Sensing Data with Convolutional and Kolmogorov-Arnold Networks. Sci. Total Environ. 2025, 960, 178271.

8. Zhao, C.; Liu, R.W.; Qu, J.; Gao, R. Deep Learning-Based Object Detection in Maritime Unmanned Aerial Vehicle Imagery: Review and Experimental Comparisons. Eng. Appl. Artif. Intell. 2024, 128, 107513.

9. Ouattara, I.; Hyyti, H.; Visala, A. Drone Based Mapping and Identification of Young Spruce Stand for Semiautonomous Cleaning. IFAC-Pap. 2020, 53, 15777–15783.

10. Navarro, A.; Young, M.; Allan, B.; Carnell, P.; Macreadie, P.; Ierodiaconou, D. The Application of Unmanned Aerial Vehicles (UAVs) to Estimate above-Ground Biomass of Mangrove Ecosystems. Remote Sens. Environ. 2020, 242, 111747.

11. Vidović, A.; Štimac, I.; Mihetec, T.; Patrlj, S. Application of Drones in Urban Areas. Transp. Res. Procedia 2024, 81, 84–97.

12. Chan, Y.Y.; Ng, K.K.H.; Wang, T.; Hon, K.K.; Liu, C.-H. Near Time-Optimal Trajectory Optimisation for Drones in Last-Mile Delivery Using Spatial Reformulation Approach. Transp. Res. Part C Emerg. Technol. 2025, 171, 104986.

13. Yang, J.; Liu, J.; Liu, J. A Hybrid Evolution Jaya Algorithm for Meteorological Drone Trajectory Planning. Appl. Math. Model. 2025, 137, 115655.

14. Kim, Y.; Young Jeong, H.; Lee, S. Drone Delivery Problem with Multi-Flight Level: Machine Learning Based Solution Approach. Comput. Ind. Eng. 2024, 197, 110565.

15. Liu, H.; Tsang, Y.P.; Lee, C.K.M. A Cyber-Physical Social System for Autonomous Drone Trajectory Planning in Last-Mile Superchilling Delivery. Transp. Res. Part C Emerg. Technol. 2024, 158, 104448.

16. Kuznetsov, O.; Sernani, P.; Romeo, L.; Frontoni, E.; Mancini, A. On the Integration of Artificial Intelligence and Blockchain Technology: A Perspective About Security. IEEE Access 2024, 12, 3881–3897.

17. Zhao, C.; Pan, T.; Dou, T.; Liu, J.; Liu, C.; Ge, Y.; Zhang, Y.; Yu, X.; Mitrovic, S.; Lim, R. Making Global River Ecosystem Health Assessments Objective, Quantitative and Comparable. Sci. Total Environ. 2019, 667, 500–510.

18. Dudukcu, H.V.; Taskiran, M.; Kahraman, N. UAV Sensor Data Applications with Deep Neural Networks: A Comprehensive Survey. Eng. Appl. Artif. Intell. 2023, 123, 106476.

19. Negm, N.; Alamro, H.; Allafi, R.; Khalid, M.; Nouri, A.M.; Marzouk, R.; Yahya Othman, A.; Abdelaziz Ahmed, N. Tasmanian Devil Optimization with Deep Autoencoder for Intrusion Detection in IoT Assisted Unmanned Aerial Vehicle Networks. Ain Shams Eng. J. 2024, 15, 102943.

20. Cai, B.; Wang, H.; Yao, M.; Fu, X. Focus More on What? Guiding Multi-Task Training for End-to-End Person Search. IEEE Trans. Circuits Syst. Video Technol. 2025, 1.

21. Wang, H.; Yao, M.; Chen, Y.; Xu, Y.; Liu, H.; Jia, W.; Fu, X.; Wang, Y. Manifold-Based Incomplete Multi-View Clustering via Bi-Consistency Guidance. IEEE Trans. Multimed. 2024, 26, 10001–10014.

22. Yao, M.; Wang, H.; Chen, Y.; Fu, X. Between/Within View Information Completing for Tensorial Incomplete Multi-View Clustering. IEEE Trans. Multimed. 2025, 27, 1538–1550.

23. Huang, Q.; Zhang, F.; Zhao, Y.; Duan, J. Frequency-Domain Multi-Scale Kolmogorov-Arnold Representation Attention Network for Mixed-Type Wafer Defect Recognition. Eng. Appl. Artif. Intell. 2025, 144, 110121.

24. Jiang, C.; Li, Y.; Luo, H.; Zhang, C.; Du, H. KansNet: Kolmogorov–Arnold Networks and Multi Slice Partition Channel Priority Attention in Convolutional Neural Network for Lung Nodule Detection. Biomed. Signal Process. Control. 2025, 103, 107358.

25. Liang, X.; Wang, B.; Lei, C.; Zhou, K.; Chen, X. Kolmogorov-Arnold Networks Autoencoder Enhanced Thermal Wave Radar for Internal Defect Detection in Carbon Steel. Opt. Lasers Eng. 2025, 187, 108879.

26. Kashefi, A. Kolmogorov–Arnold PointNet: Deep Learning for Prediction of Fluid Fields on Irregular Geometries. Comput. Methods Appl. Mech. Eng. 2025, 439, 117888.

27. Glida, H.E.; Chelihi, A.; Abdou, L.; Sentouh, C.; Perozzi, G. Trajectory Tracking Control of a Coaxial Rotor Drone: Time-Delay Estimation-Based Optimal Model-Free Fuzzy Logic Approach. ISA Trans. 2023, 137, 236–247.

28. Chang, Z.; Zhang, H.; Jia, Y.; Xu, S.; Li, T.; Liu, Z. Low-Cost Fuzzing Drone Control System for Configuration Errors Threatening Flight Safety in Edge Terminals. Comput. Commun. 2024, 220, 138–148.

29. Sciancalepore, S.; Davidovic, F.; Oligeri, G. ORION: Verification of Drone Trajectories via Remote Identification Messages. Future Gener. Comput. Syst. 2024, 160, 869–878.

30. Dirckx, D.; Bos, M.; Decré, W.; Swevers, J. Optimal and Reactive Control for Agile Drone Flight in Cluttered Environments. IFAC-Pap. 2023, 56, 6273–6278.

31. AU-AIR: Multi-Modal UAV Dataset for Low Altitude Traffic Surveillance. Available online: https://bozcani.github.io/auairdataset (accessed on 28 March 2025).

32. Eisenschink, P.M.; Obermeier, W.A.; Zerres, V.H.D.; Suerbaum, A.M.; Lehnert, L.W. Forest Variables from LiDAR: Drone Flight Parameters Impact the Detection of Tree Stems and Diameter Estimates. Ecol. Inform. 2025, 88, 103127.

33. Karpinski, M.; Kuznetsov, O.; Oliynykov, R. Security, Privacy, Confidentiality, and Trust in the Blockchain: From Theory to Applications. Electronics 2025, 14, 581.

34. Kuznetsov, O.; Chernov, K.; Shaikhanova, A.; Iklassova, K.; Kozhakhmetova, D. DeepStego: Privacy-Preserving Natural Language Steganography Using Large Language Models and Advanced Neural Architectures. Computers 2025, 14, 165.

35. Kuznetsov, O.; Frontoni, E.; Chernov, K.; Kuznetsova, K.; Shevchuk, R.; Karpinski, M. Enhancing Steganography Detection with AI: Fine-Tuning a Deep Residual Network for Spread Spectrum Image Steganography. Sensors 2024, 24, 7815.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).