New Approach to Dominant and Prominent Color Extraction in Images with a Wide Range of Hues

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

3.1. Image Database

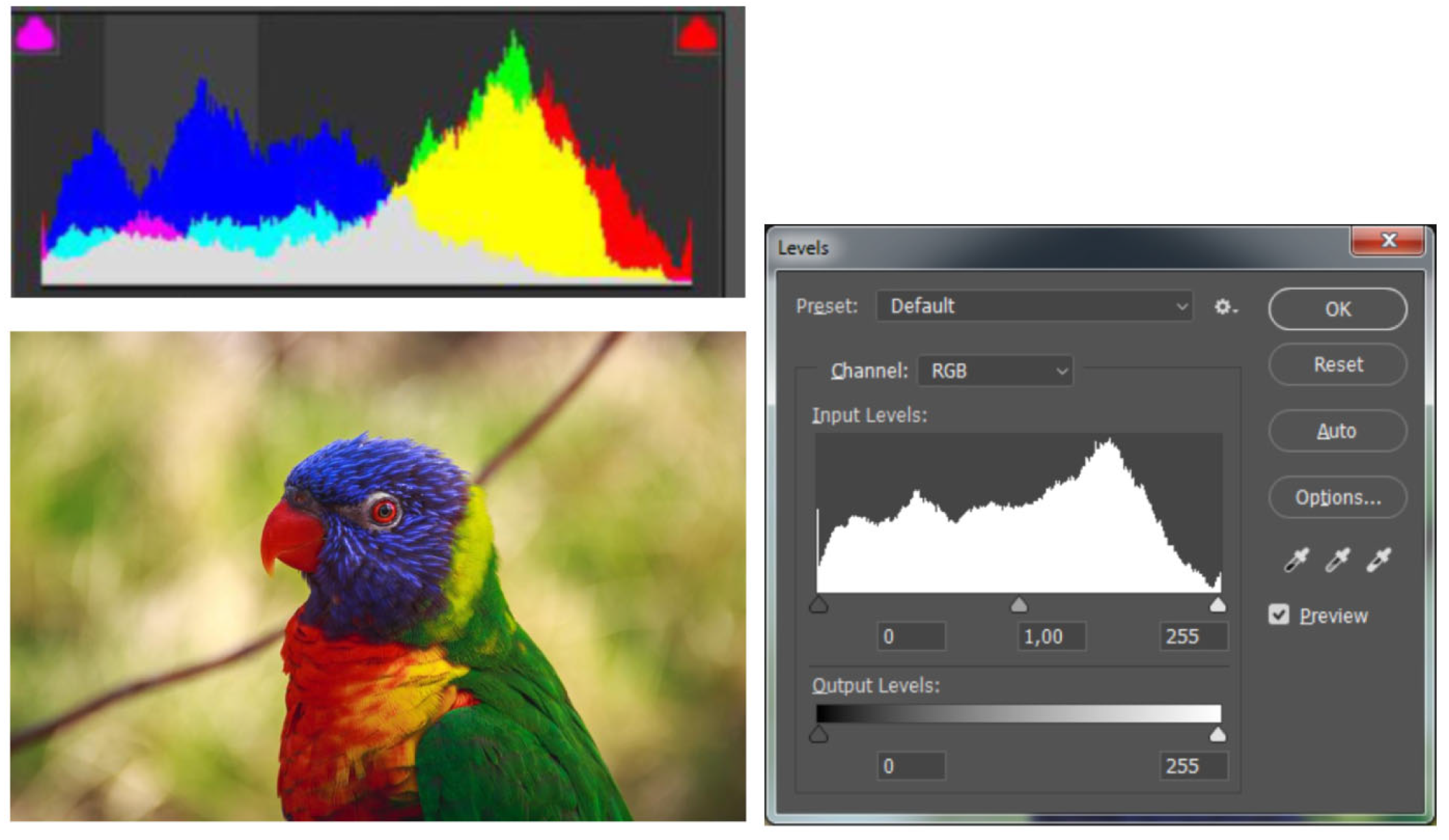

3.2. Color Gamut Analysis of an Image in the CIE L*a*b* Color Space

3.3. Image Processing Using Orthogonal ICaS Color Space

3.4. Proposed Method

- In most cases, the number of dominant colors varies from 4 to 10;

- The palette usually includes the color that occupies the largest area in the image, regardless of its visual expressiveness;

- The palette is usually based on the colors of the background, not the object of the image;

- Despite the wide range of colors, there is a limited variety of shades.

- Use of the visual salience model, which takes into account the contrast of the color relative to the surrounding background;

- Achromatic color filtering;

- Performing color segmentation in the orthogonal ICaS color space;

- Performing clustering in the ICaS color space, using the KM method to identify the most common color groups in the image;

- Performing the final selection of the dominant colors.
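The achromatic filtering and hue computation steps listed above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the rotation matrix in `rgb_to_ics` is an assumed generic orthonormal intensity/opponent basis standing in for the ICaS transform, whose exact coefficients are not given in this text, and the function names are hypothetical. The chroma threshold of 0.1 is the value used in Algorithm 1; the intensity bounds of that step are omitted here.

```python
import math

SQRT2, SQRT3, SQRT6 = math.sqrt(2), math.sqrt(3), math.sqrt(6)


def rgb_to_ics(r, g, b):
    """Rotate normalized RGB (0..1) into an orthonormal basis.

    Assumption: this generic matrix stands in for the ICaS transform.
    """
    i = (r + g + b) / SQRT3      # achromatic axis along the gray diagonal
    c = (2 * r - g - b) / SQRT6  # first chromatic axis
    s = (g - b) / SQRT2          # second chromatic axis
    return i, c, s


def chroma_and_hue(c, s):
    """Return the chroma radius and the hue angle in degrees, [0, 360)."""
    chroma = math.hypot(c, s)
    # atan2 resolves the quadrant, which a plain arctangent of S/C cannot.
    hue = math.degrees(math.atan2(s, c)) % 360.0
    return chroma, hue


def is_chromatic(r, g, b, min_chroma=0.1):
    """Achromatic filter: keep a pixel only if its chroma exceeds min_chroma."""
    _, c, s = rgb_to_ics(r, g, b)
    chroma, _ = chroma_and_hue(c, s)
    return chroma > min_chroma
```

With this scaling, a mid-gray pixel (0.5, 0.5, 0.5) has an intensity of about 0.866 and zero chroma, so the filter rejects it, while the pure primaries fall at hue angles 120° apart.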

- Cluster size, which reflects the number of pixels of a particular color;

- Color saturation, which correlates with the probability of inclusion in the final palette;

- Contrast with the environment, which favors more visually expressive colors.
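One way to combine the three criteria above into a ranking is a weighted sum, sketched below. The `Candidate` fields, the equal default weights, and the normalization to the range 0..1 are all assumptions made for illustration; the text does not specify how the criteria are weighted or combined.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    rgb: tuple          # centroid color, 0..255 per channel
    pixel_share: float  # cluster size as a fraction of analysed pixels, 0..1
    saturation: float   # normalized chroma of the centroid, 0..1
    contrast: float     # normalized contrast with the surroundings, 0..1


def score(cand, w_size=1.0, w_sat=1.0, w_contrast=1.0):
    """Hypothetical weighted mean of the three selection criteria."""
    total = w_size + w_sat + w_contrast
    weighted = (w_size * cand.pixel_share
                + w_sat * cand.saturation
                + w_contrast * cand.contrast)
    return weighted / total


def select_palette(candidates, n_colors):
    """Return the n_colors highest-scoring candidates, best first."""
    return sorted(candidates, key=score, reverse=True)[:n_colors]
```

Under equal weights, a small but saturated and contrasting cluster can outrank a large, dull background cluster, which is the behavior the criteria are meant to encourage.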

4. Results

4.1. Developed Algorithm and Experimental Environment

| Algorithm 1. Determining Dominant and Prominent Colors of an Image Using the Orthogonal ICaS Color Space |
|---|
| Require: input_image (input image) |
| Ensure: dominant_colors (dominant colors in each color sector) |
| 1: image_rgb ← load and convert input_image to RGB |
| 2: normalize image_rgb to [0, 1] |
| 3: compute I, C, S from RGB channels |
| 4: Cr ← sqrt(C² + S²) |
| 5: Hi ← quadrant-aware arctangent atan2(S, C) in degrees, range [0, 360) |
| 6: valid_pixels ← (Cr > 0.1) ∧ ((I > 1.15) ∨ (I < 0.2)) |
| 7: saliency_map ← compute spectral residual saliency from image |
| 8: binary_map ← threshold saliency_map at 24 to get salient areas |
| 9: salient_mask ← (binary_map = 255) ∧ valid_pixels |
| 10: define hue_sectors as named angle ranges |
| 11: initialize sector_colors ← empty list for each sector |
| 12: for each pixel in salient_mask do |
| 13: hi ← Hi at pixel |
| 14: assign pixel color to matching hue_sector by hi |
| 15: end for |
| 16: dominant_colors ← empty dict |
| 17: for each sector in hue_sectors do |
| 18: if sector_colors[sector] is not empty then |
| 19: apply k-means (k = 1) to the sector's pixel colors |
| 20: center ← cluster centroid |
| 21: store rounded center as RGB in dominant_colors |
| 22: end if |
| 23: end for |
| 24: visualize dominant_colors as a horizontal palette |
| 25: return dominant_colors |
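Steps 10 to 23 of Algorithm 1 (grouping salient pixels by hue sector and taking one centroid per sector) can be sketched as follows. The six equal 60° sectors and the function names are assumptions; the algorithm only says that sectors are "named angle ranges". The input is assumed to be already filtered to salient, chromatic pixels, each given as a hue angle and an RGB triple. Because k-means with k = 1 converges to the arithmetic mean of the points, the centroid is computed directly.

```python
def hue_sector(hue_deg, n_sectors=6):
    """Index of the equal-width sector containing hue_deg (assumed layout)."""
    width = 360.0 / n_sectors
    # The final modulo guards against an index of n_sectors from float rounding.
    return int((hue_deg % 360.0) // width) % n_sectors


def dominant_colors(pixels, n_sectors=6):
    """Return {sector_index: (r, g, b)} with the rounded mean color per sector.

    pixels: iterable of (hue_deg, (r, g, b)) for salient chromatic pixels.
    Sectors that receive no pixels are omitted from the result.
    """
    buckets = {}
    for hue_deg, rgb in pixels:
        buckets.setdefault(hue_sector(hue_deg, n_sectors), []).append(rgb)

    result = {}
    for sector, colors in sorted(buckets.items()):
        n = len(colors)
        # k-means with k = 1 reduces to the per-channel arithmetic mean.
        result[sector] = tuple(round(sum(ch) / n) for ch in zip(*colors))
    return result
```

For example, two reddish pixels at hues 10° and 20° fall into sector 0 and are averaged into one color, while a green pixel at 130° yields a separate entry for sector 2.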

4.2. Image Gamut Volume Calculation

4.3. Determination of Dominant and Prominent Colors

4.4. Evaluating the Color Diversity in Palettes

4.5. Visual Assessment of the Quality of Generated Palettes

4.6. Comparison of the Performance of the Developed Method with Other Methods

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| RGB | Red–green–blue |
| CMYK | Cyan–magenta–yellow–black |
| SR | Spectral residual saliency |
| CC0 | Creative Commons Zero |
| KM | K-means |
| KML | K-means in the CIE L*a*b* color space |
| FCM | Fuzzy C-means |
| OSM | Orthogonal saliency mean |
| MS | Mean shift |


| No. | Fixed I (ICaS) | Number of sRGB Colors on CS Plane | Fixed L (CIE L*a*b*) | Number of sRGB Colors on ab Plane |
|---|---|---|---|---|
| 1 | 1.299038 | 49,966 | 77.93 | 39,455 |
| 2 | 0.866025 | 136,829 | 53.77 | 60,053 |
| 3 | 0.433013 | 49,980 | 25.97 | 25,955 |

| Clustering Metrics | CaS-Plane of Constant Brightness (I = 0.866) in ICaS Color Space | a*b*-Plane of Constant Brightness (L = 53.77) in CIE L*a*b* Color Space |
|---|---|---|
| Silhouette Score | 0.342 | 0.378 |
| Davies–Bouldin Index | 0.872 | 0.835 |
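For readers unfamiliar with the two metrics in the table above, the sketch below computes them from their standard definitions using Euclidean distance. It is an illustrative pure-Python version with hypothetical function names, not the code used to produce the reported values; each cluster is assumed to be a non-empty list of coordinate tuples, for instance (C, S) or (a*, b*) pairs.

```python
import math


def _mean_dist(p, points):
    """Average Euclidean distance from point p to every point in points."""
    return sum(math.dist(p, q) for q in points) / len(points)


def centroid(points):
    """Per-axis arithmetic mean of a list of points."""
    return tuple(sum(axis) / len(points) for axis in zip(*points))


def davies_bouldin(clusters):
    """Davies-Bouldin index for a list of clusters; lower is better."""
    cents = [centroid(c) for c in clusters]
    scatter = [_mean_dist(m, c) for m, c in zip(cents, clusters)]
    k = len(clusters)
    total = 0.0
    for i in range(k):
        # Worst-case similarity of cluster i to any other cluster.
        total += max((scatter[i] + scatter[j]) / math.dist(cents[i], cents[j])
                     for j in range(k) if j != i)
    return total / k


def silhouette(clusters):
    """Mean silhouette coefficient in [-1, 1]; higher is better."""
    scores = []
    for i, cluster in enumerate(clusters):
        for idx, p in enumerate(cluster):
            if len(cluster) == 1:
                scores.append(0.0)  # usual convention for singleton clusters
                continue
            others = cluster[:idx] + cluster[idx + 1:]
            a = _mean_dist(p, others)  # cohesion within the own cluster
            b = min(_mean_dist(p, c)   # separation from the nearest other cluster
                    for j, c in enumerate(clusters) if j != i)
            denom = max(a, b)
            scores.append((b - a) / denom if denom else 0.0)
    return sum(scores) / len(scores)
```

A higher silhouette score and a lower Davies–Bouldin index both indicate more compact, better separated clusters, which is how the two columns of the table should be read.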

| Image Category | Average Value of Gamut Volume, Cubic CIE L*a*b* Units | Maximum Value of Gamut Volume, Cubic CIE L*a*b* Units |
|---|---|---|
| Birds | 378,161.05 | 562,533.38 |
| Fish | 538,639.22 | 694,086.66 |
| Flowers | 514,948.65 | 671,420.25 |
| Landscape | 323,726.40 | 538,613.75 |
| Buildings | 589,373.96 | 729,224.01 |

| Method | ΔE00 (CIE L*a*b*), Min | ΔE00 (CIE L*a*b*), Mean | ΔE00 (CIE L*a*b*), Max |
|---|---|---|---|
| KM | 15.8 | 36.6 | 64.4 |
| KML | 15.4 | 37.2 | 58.9 |
| FCM | 14.3 | 36.1 | 67.1 |
| MS | 14.8 | 37.5 | 65.4 |
| OSM | 15.3 | 36.4 | 56.6 |

| Method | CIE L Value, Min | CIE L Value, Mean | CIE L Value, Max |
|---|---|---|---|
| KM | 12 | 54.3 | 88 |
| KML | 11 | 53.6 | 83 |
| FCM | 6 | 54.2 | 89 |
| MS | 7 | 55.3 | 87 |
| OSM | 32 | 52.3 | 73 |

| Method | Harmony, Total Points | Appeal, Total Points | Relevance, Total Points |
|---|---|---|---|
| KM | 279 | 274 | 283 |
| KML | 272 | 269 | 286 |
| FCM | 276 | 278 | 251 |
| OSM | 286 | 287 | 287 |
| SM | 272 | 272 | 262 |

| Image Number | KM | KML | FCM | OSM | SM |
|---|---|---|---|---|---|
| 1 | 1.74 | 2 | 29.25 | 0.62 | 1.94 |
| 2 | 1.31 | 1.02 | 80.29 | 0.5 | 2.36 |
| 3 | 1.64 | 1.87 | 79.71 | 0.54 | 2.22 |
| 4 | 0.95 | 0.65 | 30.58 | 0.45 | 1.49 |
| 5 | 0.86 | 0.98 | 24.31 | 0.31 | 2.46 |
| 6 | 1.33 | 2 | 115.63 | 0.47 | 2.08 |
| 7 | 2.03 | 1.12 | 119.27 | 0.9 | 4.13 |
| 8 | 1.57 | 1.46 | 67.23 | 0.46 | 9.96 |
| 9 | 4.23 | 2.09 | 94.95 | 0.49 | 2.46 |
| 10 | 2.43 | 1.52 | 75.32 | 0.47 | 6.24 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kynash, Y.; Semeniv, M. New Approach to Dominant and Prominent Color Extraction in Images with a Wide Range of Hues. Technologies 2025, 13, 230. https://doi.org/10.3390/technologies13060230
