1. Introduction

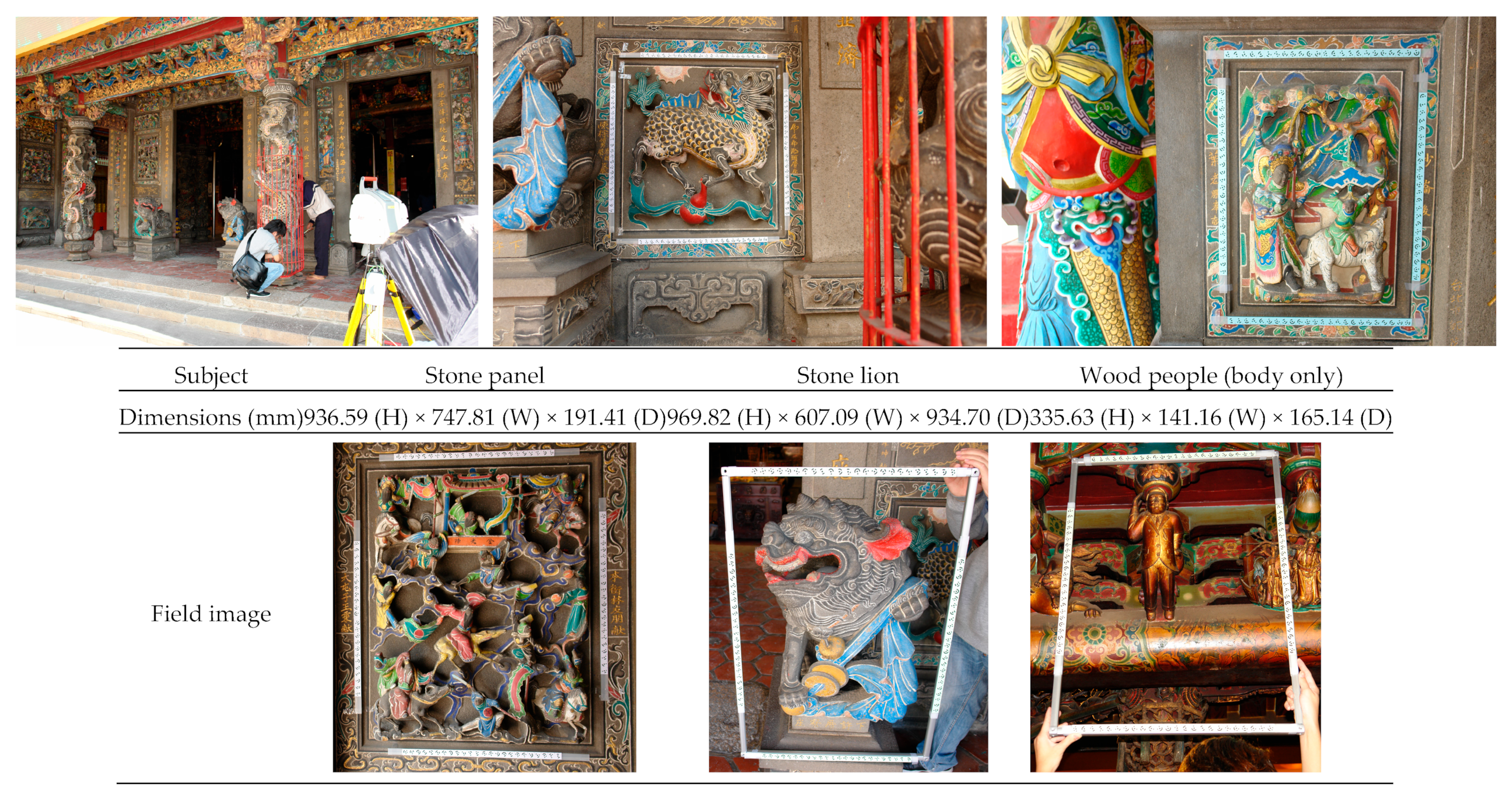

Gongfan Temple, or Gongfan Palace, is located in Mailiao Township, Yunlin County, Taiwan. Its name refers to the peace and prosperity of all living beings in the area. It has been dedicated to the worship of the Six Mazus since 1685. With a history dating back 340 years, it is the earliest temple in Taiwan dedicated to Mazu. In 2006, the Yunlin County Government designated it a county-level historic site in acknowledgement of its tangible and intangible heritage (Figure 1).

The temple is richly decorated with 3D structures depicting ancient stories, which serve an educational purpose. These traditional decorations are designed by craftsmen to display intricate protagonists, expressions, body shapes, and scenes in 3D or 2.5D. Visitors can distinguish the narratives or identities created by different craftsmen from the tension in the characters’ bodies or the wrinkles in their clothes. These narrative 3D scenes bring old stories to life.

1.1. Previous Studies

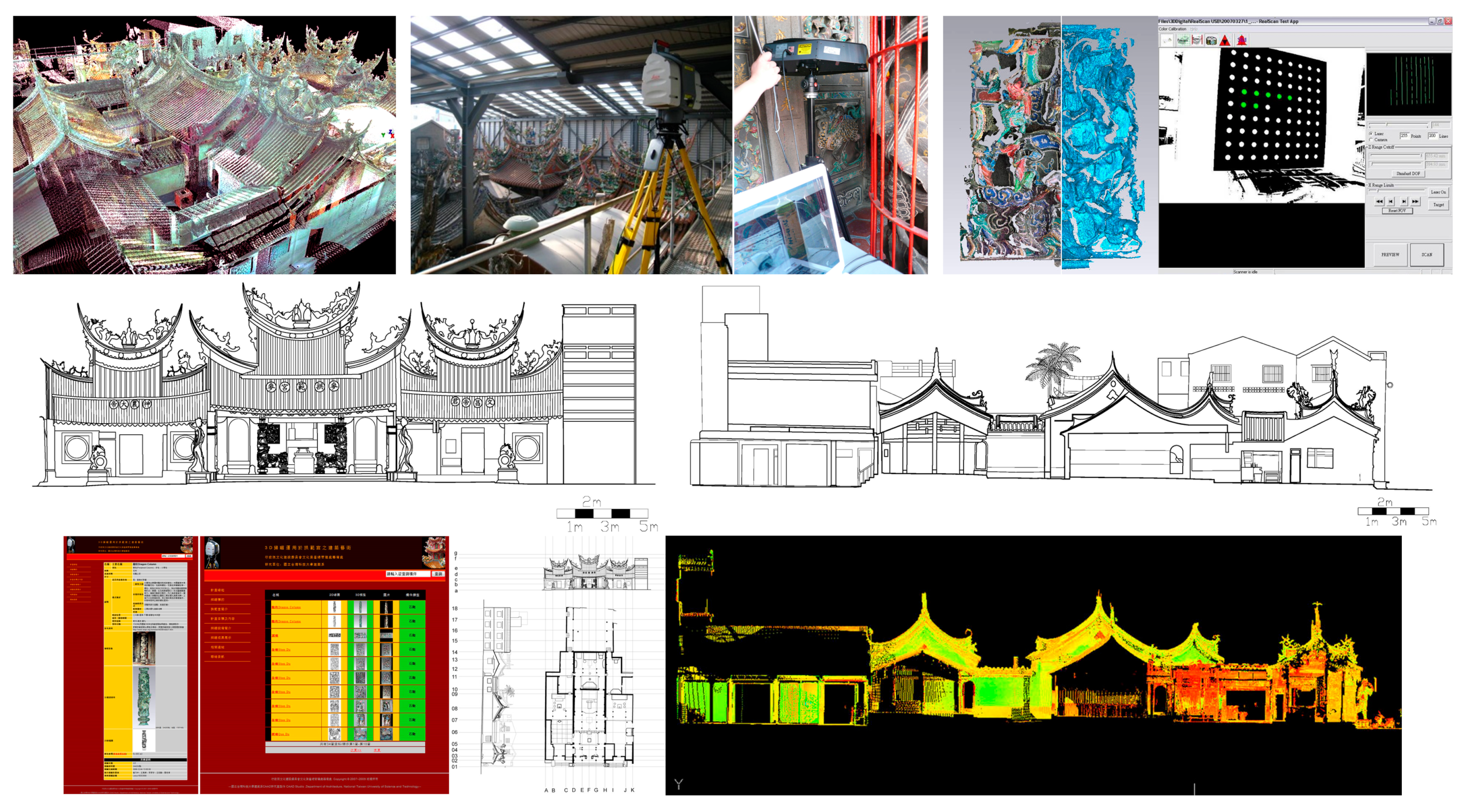

The preservation of cultural assets, such as the exquisite carvings installed inside and outside the temple, has been achieved with 3D scanning technologies (Figure 2). The initial preservation campaign took place between August 2008 and March 2009. A Leica HDS 3000® laser scanner (Leica Geosystems HDS LLC, San Ramon, CA, USA) and Cyclone® software (Leica Geosystems HDS LLC, San Ramon, CA, USA, V 3.1) were used for long-range scans, and Optix 400 RealScan USB® (3D Digital Corp., Sandy Hook, CT, USA) and Color_Calibration® (3D Digital Corp., Sandy Hook, CT, USA) software were used for detailed scans [1]. With the former combination, a total of 612 scans and 74,584,968 points were captured from 33 scanning locations at ground level and at height. With the latter combination, 29 components were captured in 1023 scans. For wood carvings, 34 objects, 154 million polygons, and 1029 scans were captured in the first attempt.

The main temple was under renovation and covered by a weather canopy, which partially obstructed the long-range scans. The near-range scanner had to be calibrated before first use, and near-range scanning was constrained by limited access to the space and by interference from light sources. After the field scanning was completed, the different scans had to be registered, sampled, noise-filtered, and color-corrected. Small areas often could not be recorded.

The temple has numerous small components of the same category with similar configurations. To avoid misidentification, a customized coordinate system for vertical, horizontal, and elevation positioning was deployed, based on the existing partitioning modules. The final results were integrated with metadata definitions from web pages.

1.2. Research Goal

This research aims to connect AI and conservation through a seamless 3D reconstruction of heritage from images taken 17 years ago. The as-built physical data and the digital data influence each other in the sustainable preservation of cultural heritage. The temple was digitally preserved using 3D laser scanning technology in 2008 [1], and many photographs were taken during that campaign. How can these 17-year-old photos help renewed preservation efforts? AI has changed how data can be generated sustainably, but how it can connect to the past in a novel manner remains to be explored in the field of conservation.

With the development of photogrammetry and AI, the efficiency of 3D reconstruction has improved. Images from the past can be used to reconstruct the temple and its decorative art, such as wood carvings, stone carvings, clay sculptures, cut-outs, murals, tablets, plaques, and other building components, as they existed at that time.

1.3. Related Studies

The effective preservation of these assets using existing resources is one of the current priorities of cultural policy. The literature shows that 3D Gaussian splatting (3DGS), which represents a paradigm shift in neural rendering, has the potential to become a mainstream method for 3D reconstruction. It transforms multi-view images into highly detailed 3D Gaussian reconstructions that can be rendered in real time [2]. Related approaches can estimate camera poses for arbitrarily long video sequences [3]. In the surface reconstruction of large-scale scenes captured by unmanned aerial vehicles (UAVs), high surface quality comes at a high computational cost [4]. High-precision, real-time rendering reconstruction has also been applied to cultural relics and buildings in large scenes [5], peach orchards [6], and historic architecture using 360° capture [7]. In addition to the large-scale 3D digitization of the remains of the Notre-Dame de Paris fire [8], neural rendering has been applied to leaf structure reconstruction [9], the analysis and promotion of dance heritage [10], and an ethical framework for cultural heritage and creative industries [11]. Special renderers have been developed for the effective visualization of point clouds and meshes [12]. RODIN® applies a diffusion-based generative model to sculpt 3D digital avatars, using a computationally efficient 3D NeRF representation [13].

Three-dimensional content generation has benefited from advanced neural representations and generative models [14]. For a relatively small object or space, a standard smartphone can capture tangible cultural heritage easily and affordably using only RGB images [15]. Scaled 3D models of crane hooks created with iPhones and commercial structure-from-motion (SfM) applications were accurate enough to meet the relevant inspection requirements [16]. Generative AI has also been used to create detailed accessories for a virtual costume [17].

In the medical field, where detail and accuracy requirements are demanding, photogrammetry with mobile phone apps has been used to construct 3D didactic materials that, compared with traditional simulation [20], are accessible, user-friendly, and suitable for virtual reality (VR) and augmented reality (AR) integration [18,19]. Photogrammetry provides comparable scanning capabilities at a significantly lower cost for medical applications such as intraoral scans [21]. KIRI Engine® has been used for image capture in designing patient-specific helmets [22] and acquiring 3D meshes of cleft palate models [23]. The KIRI® program enables more accurate and detailed capture, is faster, and requires neither a high-specification computer nor time-consuming processing [24]. Although the accuracy of these models may vary, their accessibility and practicality via smartphones have proven to be significant advantages [25].

If smartphone scanning applications can be used for intraoral scans and costume details, it should also be feasible to use them to document architectural cultural heritage. UAVs with 1-inch camera sensors can capture images for the to-scale reconstruction of large sites, and a smartphone camera with a resolution of 200 megapixels should be adequate for the detailed reconstruction of small-scale cultural heritage.

2. Materials and Methods

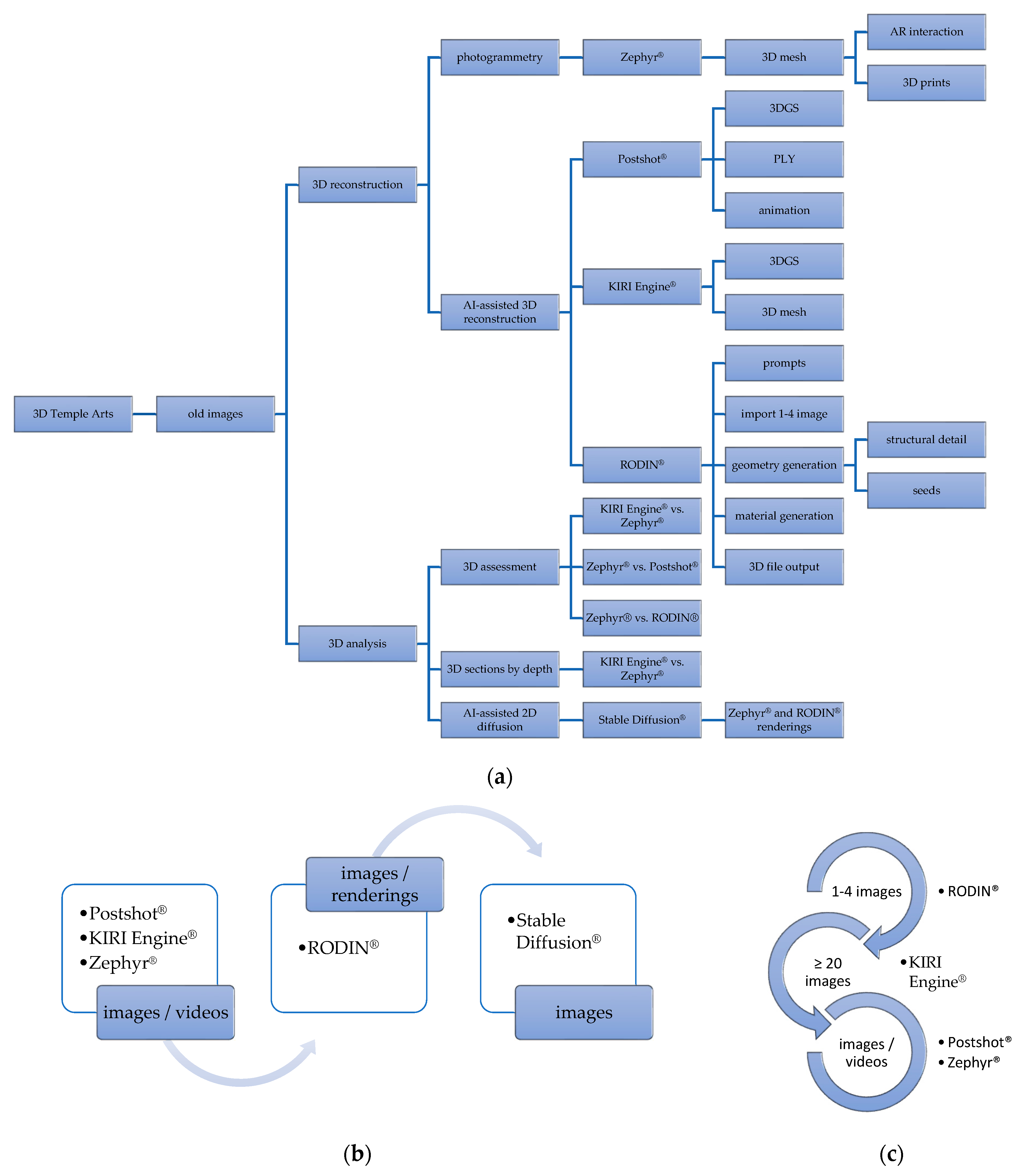

Continuously updating the temple’s heritage data is the most direct form of conservation, and this effort began 17 years ago. The preservation consists of a series of 3D reconstruction processes using scans, photogrammetry, and AI (Figure 3a). AI-assisted software and cloud computing were applied, namely RODIN® (Deemos Technologies Inc., Los Angeles, CA, USA, Gen1.5, RLHF V 1.3) and KIRI Engine® (Hong Kong, V 3.13) (Figure 3b). For desktop computing, Postshot® (Jawset, Munich, Germany, V 0.6) was used to create models locally, addressing data privacy and sovereignty, on a basic setup with an Nvidia® RTX 2060 GPU (Santa Clara, CA, USA). The 3DGS format is capable of rendering reflections and semi-transparent surfaces. KIRI Engine® can convert 3DGS into a mesh, which enables a connection to other 3D applications. RODIN®, which can provide 3D models with a strong resemblance to the original scene from a limited number of images (Figure 3c), can be used for initial modeling and can incorporate additional imagery when available.

The working platform produces 3D models that differ from traditional ones and allows a wider range of downstream platforms to be used, for example, game engines (Unreal Engine® or UE® (Epic Games, Cary, NC, USA, V 5)) and multimedia software (After Effects® or AE® (Adobe, San Jose, CA, USA, V 25.2.2)). The constraints of the original photography determine the selection of subsequent applications, such as Postshot®, RODIN® (1–4 images, as specified by the software), KIRI Engine® (at least 20 images, as specified by the software) [25], or Zephyr® (3DFlow, Verona, Italy, V 8.001) (Figure 4). AI has advanced 3D reconstruction; notably, 3DGS has substantially improved in terms of realism and output rate.

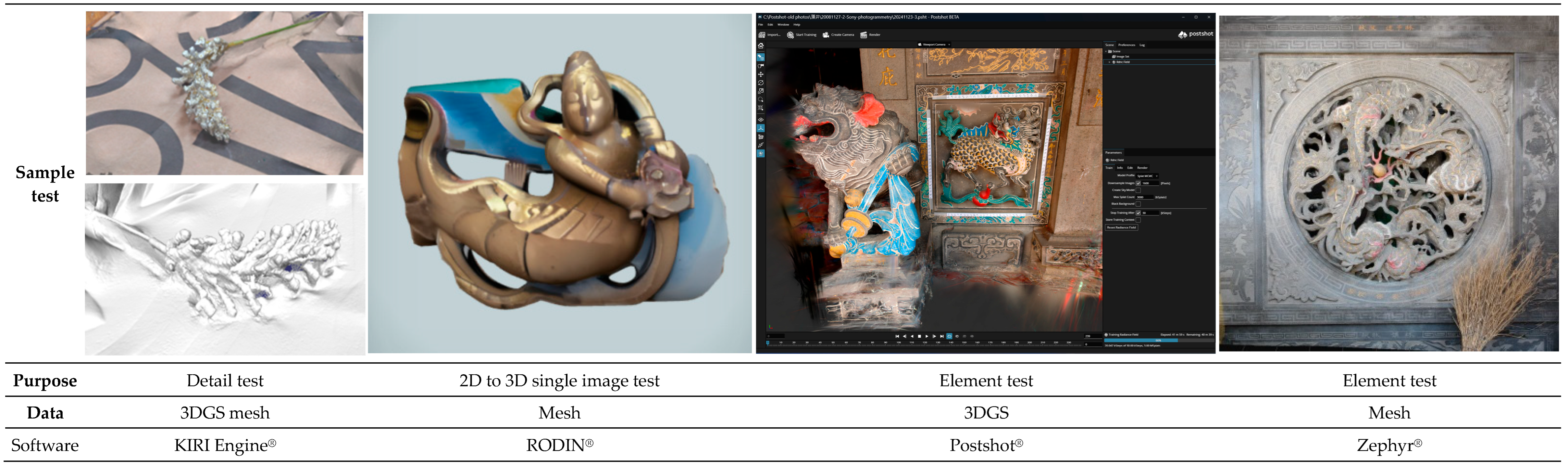

The details of the AI-assisted reconstructions were verified across the different 3D platforms. The point cloud and mesh created using Zephyr® served as the reference and were compared with the 3DGS and polygon models generated using Postshot®, RODIN®, and KIRI Engine® according to the following criteria:

Availability of sufficient imagery data: Reconstruction may fail when there are too few images or the image sequences are disordered.

Comparison of cross-program reconstruction results at the same level of detail: Thin elements must present roughly the same level of detail, and should not disappear, be incorrectly created, or merge with adjacent parts.

Implication of missing model parts: In RODIN®, insufficient image coverage may be compensated for by extending or interpolating the surface curvature into invisible parts of the subject, or by differentiating the subject from its background and surroundings instead of creating a flat background surface or merging into it. In Postshot®, parts of a scene can be missing when the images are discrete and cannot be interpolated.

Image segmentation: Unclear images may produce unexpected 3D results. For example, the dark horns of a cow were not generated because they resembled the dark background. In contrast, a protruding nose was generated despite a similarly colored background.

Options supporting the generation of polygon models: Polygon or mesh models were additionally required for follow-up study and visualization using shaded/PBR (physically based rendering), MTL (material file), and AR, based on OBJ and 3DGS (with or without mesh).

Three-dimensional program accessibility: Desktop and mobile configurations, as well as subscription plans, vary in polygon count, original image size, and texture resolution.

Seed (or gain) implementation: The seed value could be recorded to document training and to reproduce or extend generation results.
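Why recording the seed matters can be shown with a minimal sketch. It assumes, as is typical of diffusion-style generators, that the seed fixes the starting random noise; how RODIN® handles seeds internally is not described here, so `initial_noise` and the fields in `record` are hypothetical stand-ins built on Python’s standard `random.Random`.

```python
import random

def initial_noise(seed: int, n: int = 8) -> list:
    """Starting noise for a diffusion-style generator (hypothetical stand-in).

    Real tools seed their own samplers; this only shows why storing the
    seed makes a generation run reproducible.
    """
    rng = random.Random(seed)  # independent generator, unaffected by global state
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

# Hypothetical generation record kept alongside the model (field names are illustrative)
record = {"seed": 1234, "cfg_scale": 7.5, "steps": 30}

first = initial_noise(record["seed"])
replay = initial_noise(record["seed"])
other = initial_noise(record["seed"] + 1)

print(first == replay)  # True: same seed, identical starting noise
print(first == other)   # False: a new seed explores a different result
```

Storing the seed with the other generation settings is what allows a result to be regenerated exactly or varied deliberately later.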

The limitations of this study include the incompleteness of the original images. About 1500 original photos (Figure 5) were taken from radial and centripetal perspectives and served as working records, environmental observations, image mapping for the 3D scanner, and references for tracing vector drawings. Reconstruction was constrained by the number of photos, how they were taken, and their quality. Many pictures included striped survey markers placed temporarily next to the target to enable alignment. These markers interfered with the 3D reconstruction, but most of them could be removed either automatically or manually.

Most of these images were taken using a SONY® DSC-R1 (3888 × 2592 pixels, 24 bit, 72 dpi), and some were taken using a Panasonic® DMC-FX100 (4000 × 3000 pixels, 24 bit, 72 dpi). Reconstruction success was measured by the proportion of available photos that produced 3D models, or conversely by the number of failures. The 3D output rate was about 80%, and the rate of satisfactory results was about 50%; nevertheless, the level of detail remained useful for the long-term sustainability of the digital data.
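The two rates can be made explicit with a short sketch. It assumes that each reconstruction attempt is logged with two flags: whether any 3D model was produced and whether that model was judged satisfactory. The class, field names, and the ten-attempt log below are hypothetical, chosen only to mirror the reported proportions. They are not the study’s data.

```python
from dataclasses import dataclass

@dataclass
class ReconstructionAttempt:
    subject: str
    produced_model: bool  # did the software output any 3D model?
    satisfactory: bool    # did the model pass visual inspection?

def summarize(attempts):
    """Output rate = models produced / attempts; success rate = satisfactory / attempts."""
    n = len(attempts)
    if n == 0:
        raise ValueError("no attempts to summarize")
    produced = sum(a.produced_model for a in attempts)
    good = sum(a.produced_model and a.satisfactory for a in attempts)
    return {"output_rate": produced / n, "success_rate": good / n}

# Hypothetical log of ten attempts (NOT the study's data)
attempts = [
    ReconstructionAttempt(f"set_{i}", produced_model=i < 8, satisfactory=i < 5)
    for i in range(10)
]
print(summarize(attempts))  # {'output_rate': 0.8, 'success_rate': 0.5}
```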

3. Results

Three-dimensional models were reconstructed using Postshot®, KIRI Engine®, RODIN®, and Zephyr®, and the results were compared, assessed, and animated. To demonstrate that the tools can satisfy preservation requirements in any circumstance, the software should be applicable to every object and space, including the interior and exterior of the building.

3.1. Five Types of AI-Assisted Modeling

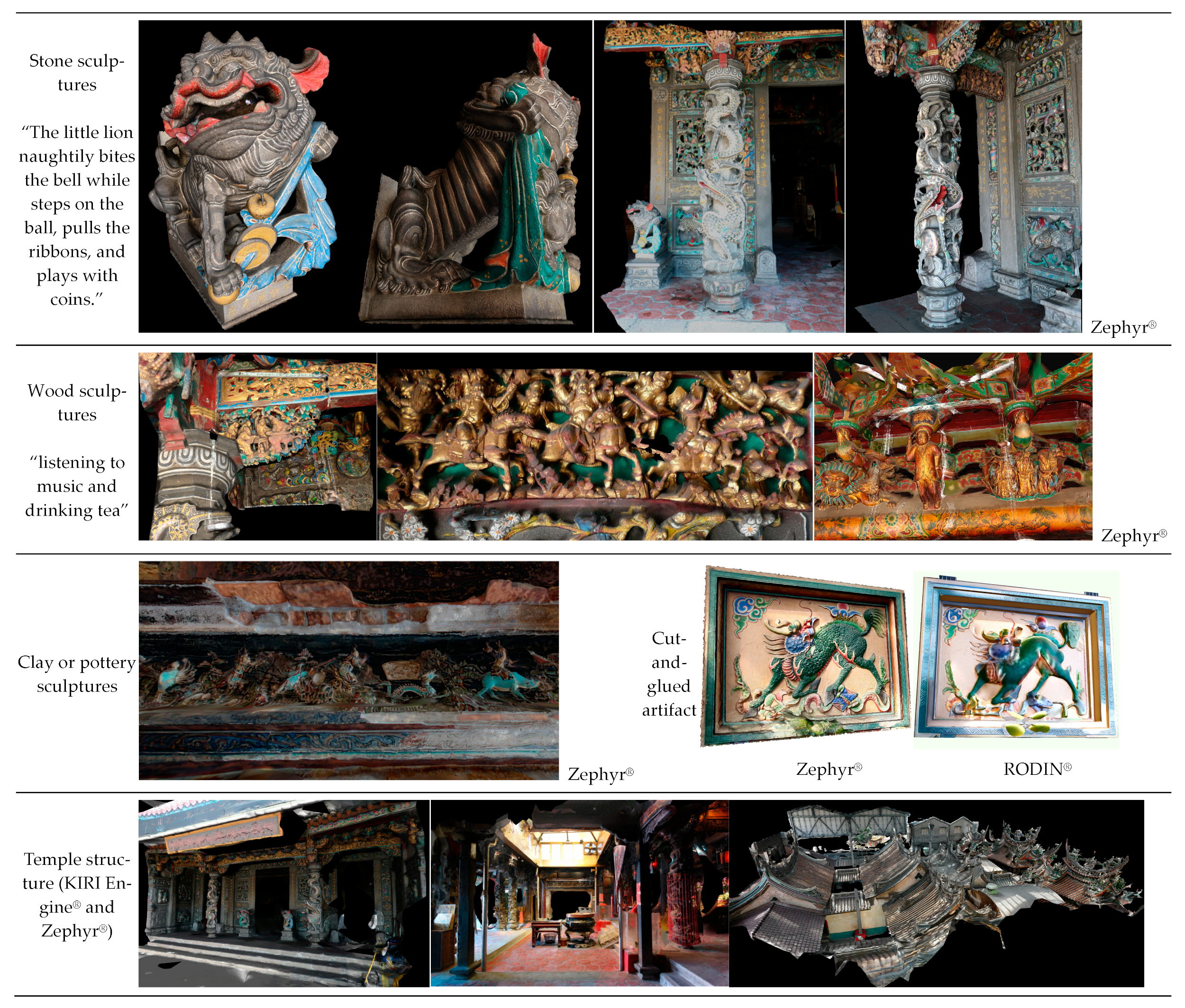

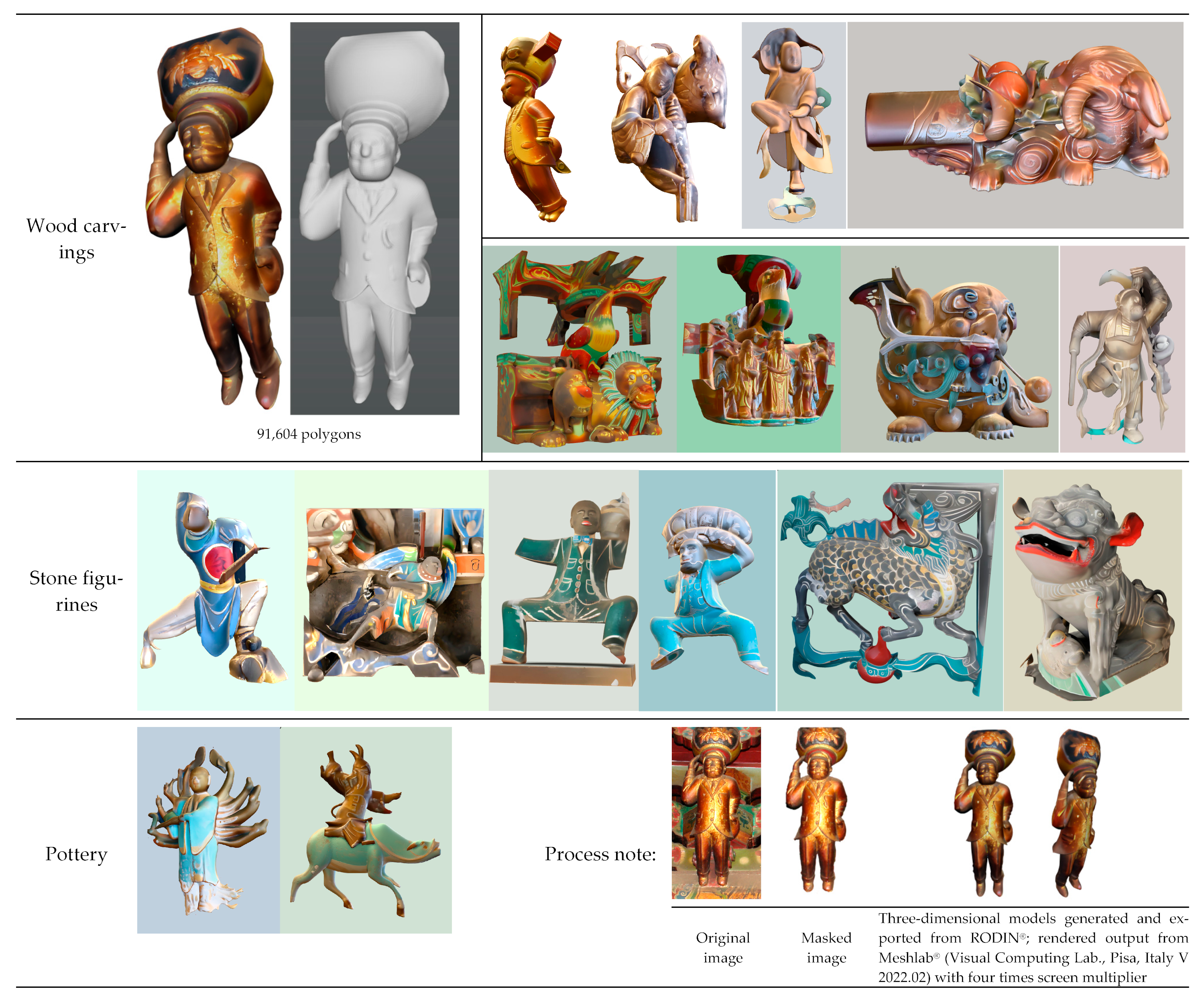

The results are categorized into five types of components (Figure 6).

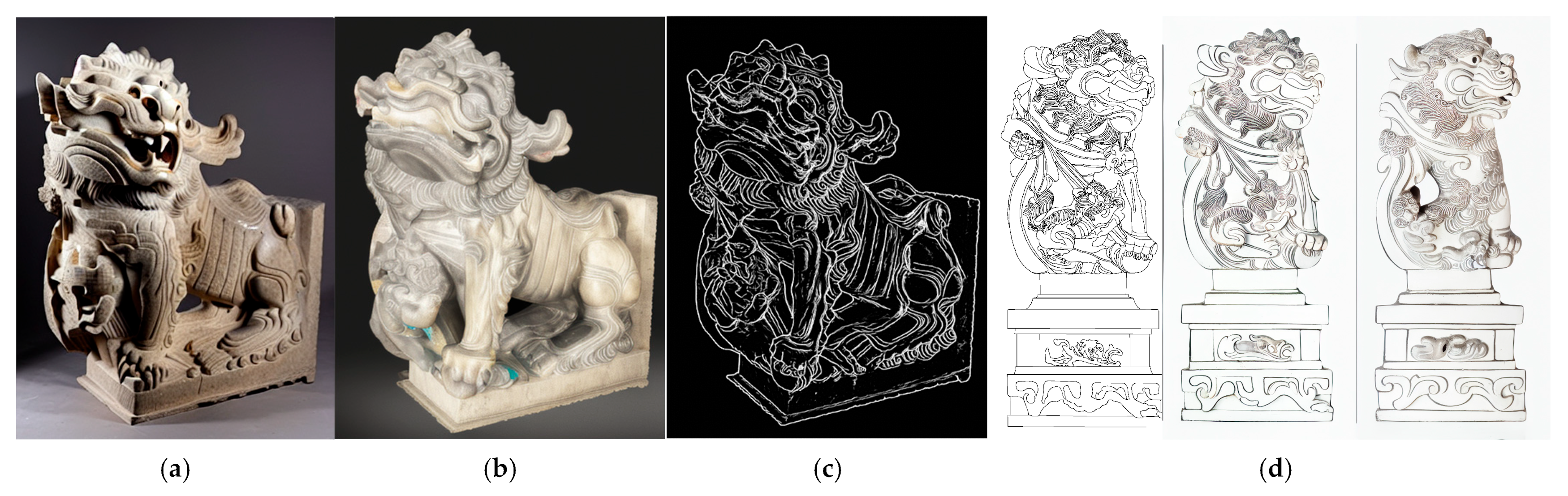

Stone carvings: The main stone carvings were dragon pillars, stone lions, and wall decorations, including the top (top block), body (torso block), and skirt (apron block) sections. The carving methods included openwork, relief, and round carving. Postshot® and Zephyr® were mainly used, while specific components were modeled and compared using all of the applications. The colors, which came from the old photos, were not calibrated with a color card or image editing software.

Wood carvings: The wood carvings included brackets, queti, drop beams, and hanging canisters. The selection was based on the iconic characters presented on the old official web page. Zephyr® was mainly used to reconstruct the models.

Clay or pottery sculptures: Only one case is illustrated. Most of the clay components are located on the wall below the roof ridge. To avoid damaging the building components or endangering workers, the components that could be accessed most safely were selected. Limited access to the backs of the sculptures prevented a thorough scan. RODIN® was applied to generate a model from a single image.

Cut-and-paste crafts: Only one case is illustrated. Colored bowls were broken into pieces and then pasted to form 3D shapes. RODIN® was applied because very few images were available for reconstructing sufficient detail.

Temple structures: This category included five models of Sanchuan Hall, the roof, and the interior. The original photos recorded during the 3D scanning process were taken from the front-right and left-rear corners before the renovations were complete. Zephyr® and Postshot® were used to reconstruct the site from image sets rearranged into an order different from that of the original shooting sequence. At the front of the temple, the red iron fence was removed from the dragon pillar. Although the view was free of obstructions, the model’s resolution was lower than expected, since the images were taken from a distance and fewer than five images were available.

Figure 6. The five types of components and models.


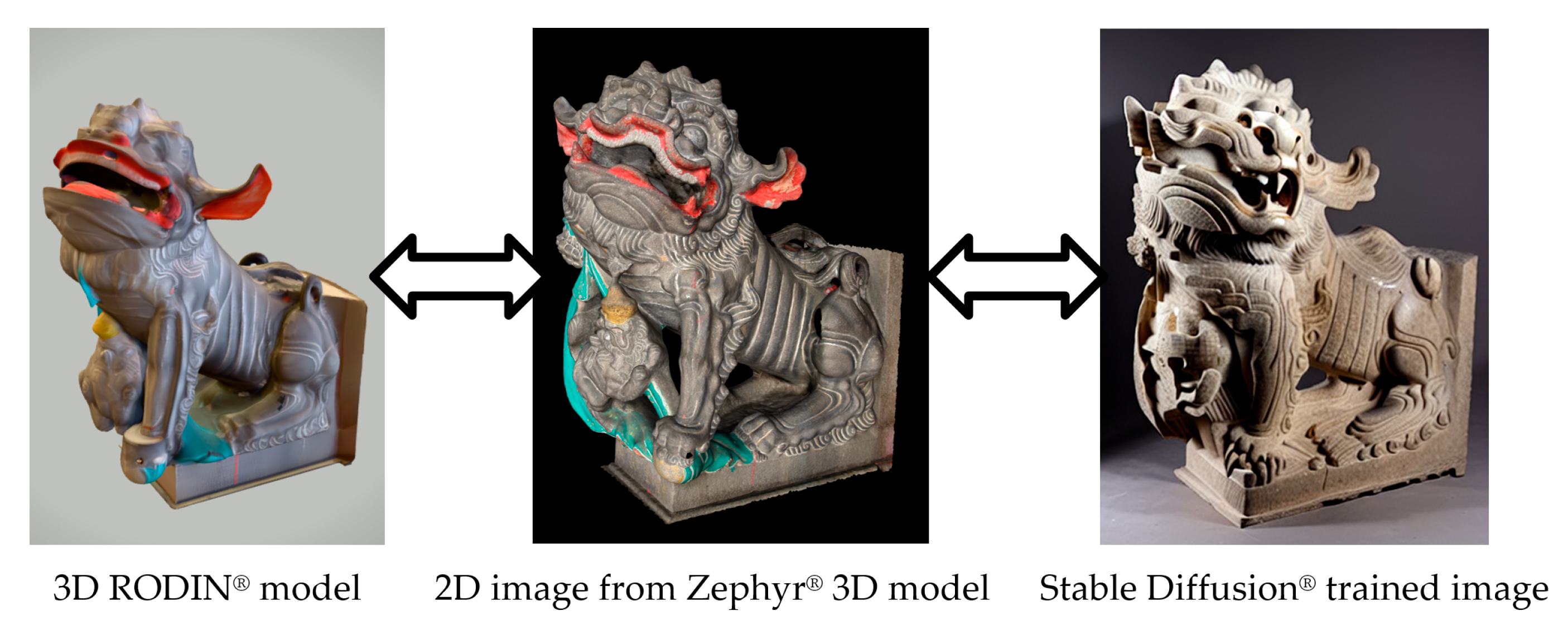

3.2. RODIN® Reconstruction

RODIN® can reconstruct a subject from a single image or from up to four images. It played an important role in creating models with a strong resemblance from limited source images, since access to the front or side views of some sculptures was very limited. Technically, it generates a secondary creation that closely resembles a figurine or the original object (Figure 7). The structural details show how ancient masters of their craft conveyed the characteristics of a subject. The details are reconstructed by tuning the generation parametrically, using seeds to configure variables and map references. Through the seed, the classifier-free guidance scale (CFGS), and the number of iterations, which work much as they do in Stable Diffusion®, it reinterprets details and characters as the old artists did.
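For reference, this is the standard formulation of classifier-free guidance used by Stable Diffusion-style samplers. Whether RODIN® applies exactly this form is not stated here, so it should be treated as background. At each denoising iteration $t$, the model predicts the noise in the latent $\mathbf{z}_t$ once with the conditioning $c$ (here, the input image) and once with a null condition $\varnothing$, and the two predictions are combined using the guidance scale $s$:

$$
\hat{\epsilon}_\theta(\mathbf{z}_t, c) = \epsilon_\theta(\mathbf{z}_t, \varnothing) + s\,\bigl(\epsilon_\theta(\mathbf{z}_t, c) - \epsilon_\theta(\mathbf{z}_t, \varnothing)\bigr)
$$

With $s = 1$, this reduces to the purely conditional prediction. Larger $s$ pushes the result closer to the input at the cost of variety. The number of iterations is the number of such denoising steps, and the seed fixes the initial noise from which they start.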

RODIN®’s reconstruction of these structures encouraged further inspection of wood details, for example, the joints connecting figures to frames or the elephant details at the ends of beams. The result was not necessarily accurate, since the secondary generation relied on symbolic representations of the parametric variables.

The quality of the reconstruction depends on the quality of the image. In the picture of the interior (Figure 8), the left and right sides of the photo are focused differently. The blue-green pottery on the right is sharper and, after image enhancement, produces a better result, namely a 3D model closer to the one expected. The pottery depicting a female character on the left is not sharp, but a 3D result is still generated.

This generation was enabled using vector drawings, 3D renderings, and field images (Figure 8). The traditional plans, elevations, and sections are less convincing than their 3D versions in various ways. Although the 3D models reconstructed from the 3D scans were projected orthogonally so that the vector drawings could be traced correctly, a vector drawing was also used for 3D reconstruction, as was the rendering of the Zephyr®-reconstructed 3D model.

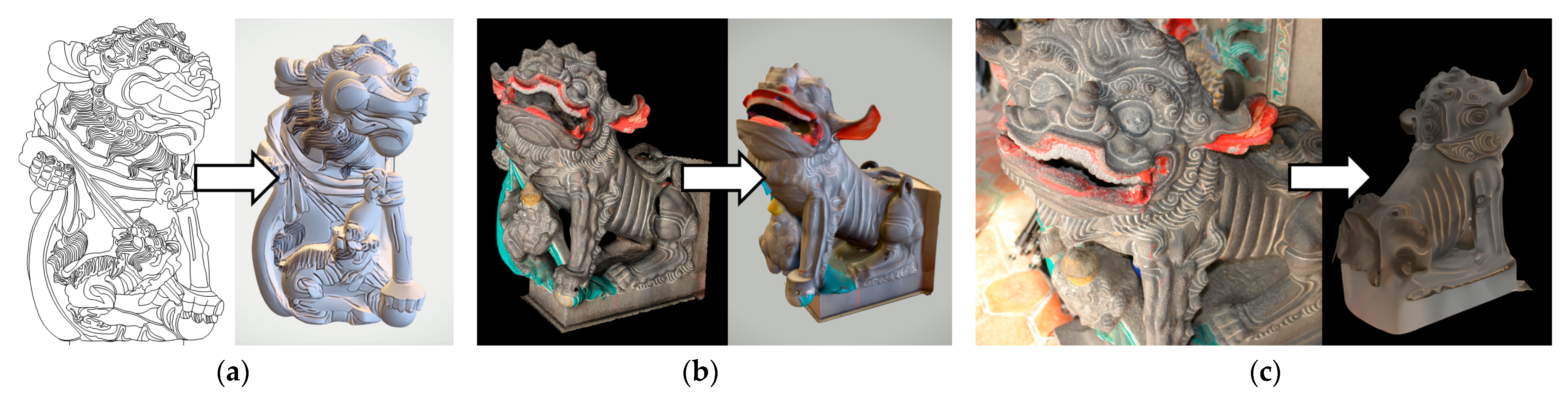

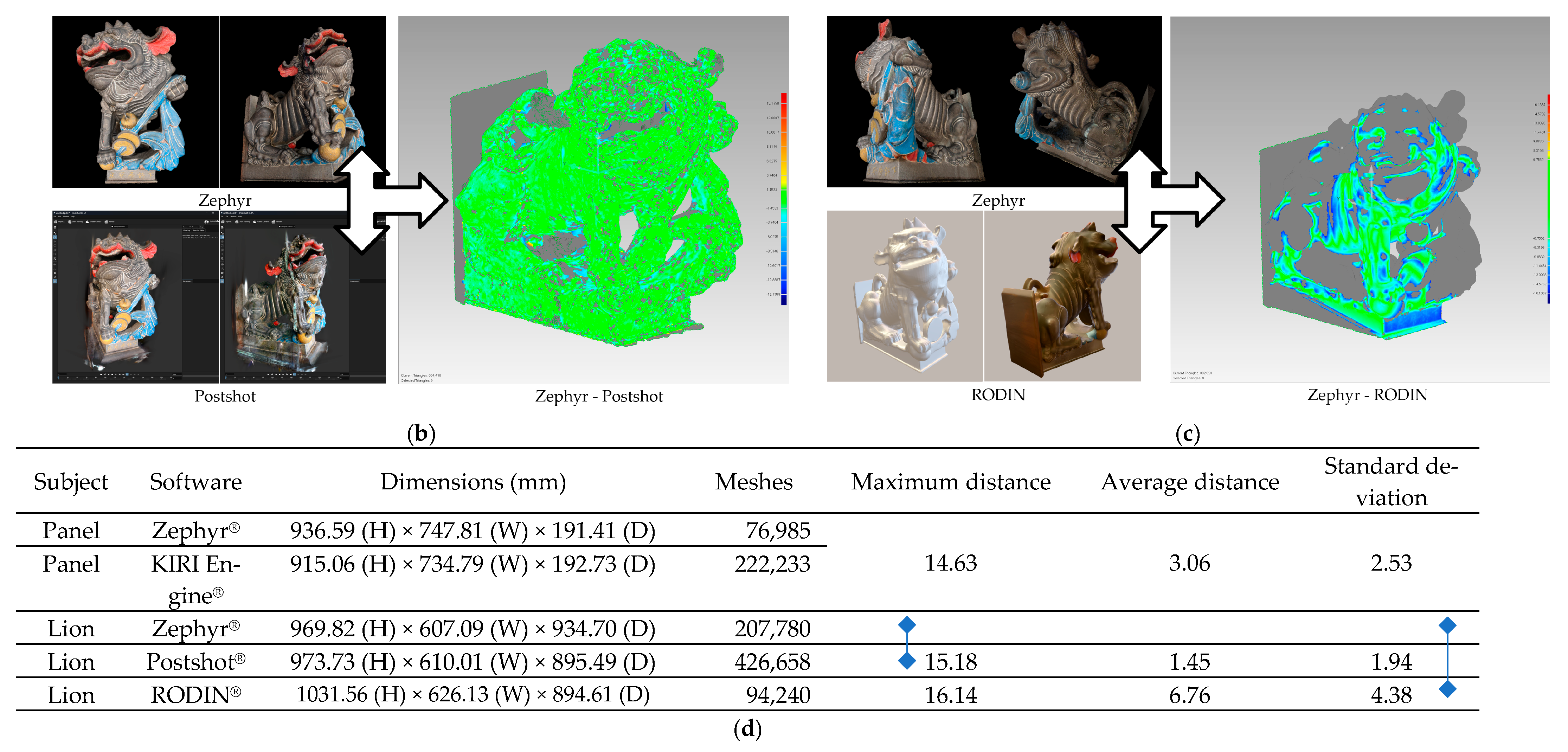

3.3. Three-Dimensional Assessments

The three-dimensional models made by KIRI Engine®, Postshot®, RODIN®, and Zephyr® were cross-assessed. Although all of the models presented a certain level of fidelity, larger tolerances were encountered in the following three cases. The standard deviation was lower in the Postshot®–Zephyr® paired set (Figure 9b) than in the KIRI Engine®–Zephyr® (Figure 9a) and Postshot®–RODIN® (Figure 9c) paired assessments. Instead of the 3DGS scene, a PLY file was exported from Postshot®, converted into 3D polygons in Geomagic Studio®, and globally registered with the model created by Zephyr®. The PLY model displayed unevenly distributed splats, and the RODIN® model was deformed.

Each comparison was made by registering two overlapping 3D models and then evaluating the standard deviation, maximum distance, and average distance between them (Figure 9d). Manual registration was first performed on the two models at their original size, followed by global registration within a modified boundary around the subject of interest.
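The registration-then-measure workflow can be sketched in NumPy. The sketch assumes two simplifications that are not Geomagic Studio®’s actual algorithms. First, manual registration is modeled as a least-squares rigid fit (the Kabsch/SVD method) to a few hand-picked corresponding points. Second, the comparison uses brute-force nearest-neighbor distances from each test point to the reference cloud, from which the average, maximum, and standard deviation are taken. The point clouds are synthetic, and all function names are hypothetical.

```python
import numpy as np

def kabsch(src: np.ndarray, dst: np.ndarray):
    """Least-squares rigid transform (R, t) such that dst ≈ src @ R.T + t.

    src, dst: (N, 3) arrays of corresponding points (N >= 3, not collinear),
    e.g. feature points picked by hand on both models.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)       # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))   # -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

def cloud_distances(test: np.ndarray, reference: np.ndarray) -> dict:
    """Average, maximum, and standard deviation of nearest-neighbour distances
    from each test point to the reference cloud (brute force, O(N*M) memory)."""
    diff = test[:, None, :] - reference[None, :, :]
    nearest = np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)
    return {"average": nearest.mean(), "max": nearest.max(), "std": nearest.std()}

# Synthetic stand-ins: "reference" plays the Zephyr model, "test" a misaligned,
# slightly noisy reconstruction of the same surface points.
rng = np.random.default_rng(0)
reference = rng.uniform(-1.0, 1.0, size=(500, 3))
angle = np.deg2rad(10.0)
Rz = np.array([[np.cos(angle), -np.sin(angle), 0.0],
               [np.sin(angle),  np.cos(angle), 0.0],
               [0.0,            0.0,           1.0]])
shift = np.array([0.5, -0.2, 0.1])
test = reference @ Rz.T + shift + rng.normal(scale=0.01, size=reference.shape)

# Manual-style registration from five corresponding points
idx = [0, 50, 100, 150, 200]
R, t = kabsch(test[idx], reference[idx])
aligned = test @ R.T + t
stats = cloud_distances(aligned, reference)
print({k: round(float(v), 4) for k, v in stats.items()})
```

A global step would normally follow, for example iterative closest point (ICP) restricted to the trimmed region of interest. For real scans, a k-d tree would replace the brute-force distance search.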

Among the reconstructed 3D models, the sculpture panel attached to a wall gave worse results than the isolated lion sculpture. The panel could be viewed mainly from the front, whereas the lion can be viewed from almost 360 degrees. The availability of photos was another critical factor, with more images of the lion available.

In the comparison, RODIN® ultimately produced the worst result. Although the final model contained surprising detail, close inspection revealed differences despite the apparent similarity of many sub-parts. One of the most significant dissimilarities was that the back base and the wall were not oriented orthogonally at 90 degrees. RODIN® also had the most troublesome registration, since its large tolerance made it difficult to establish a registration reference. The front part was still compared, and near-perfect results could be achieved only after many combinations of adjustments were tested. Nevertheless, both the correct and the dissimilar aspects of the modeling results enabled further inspection of the heritage.

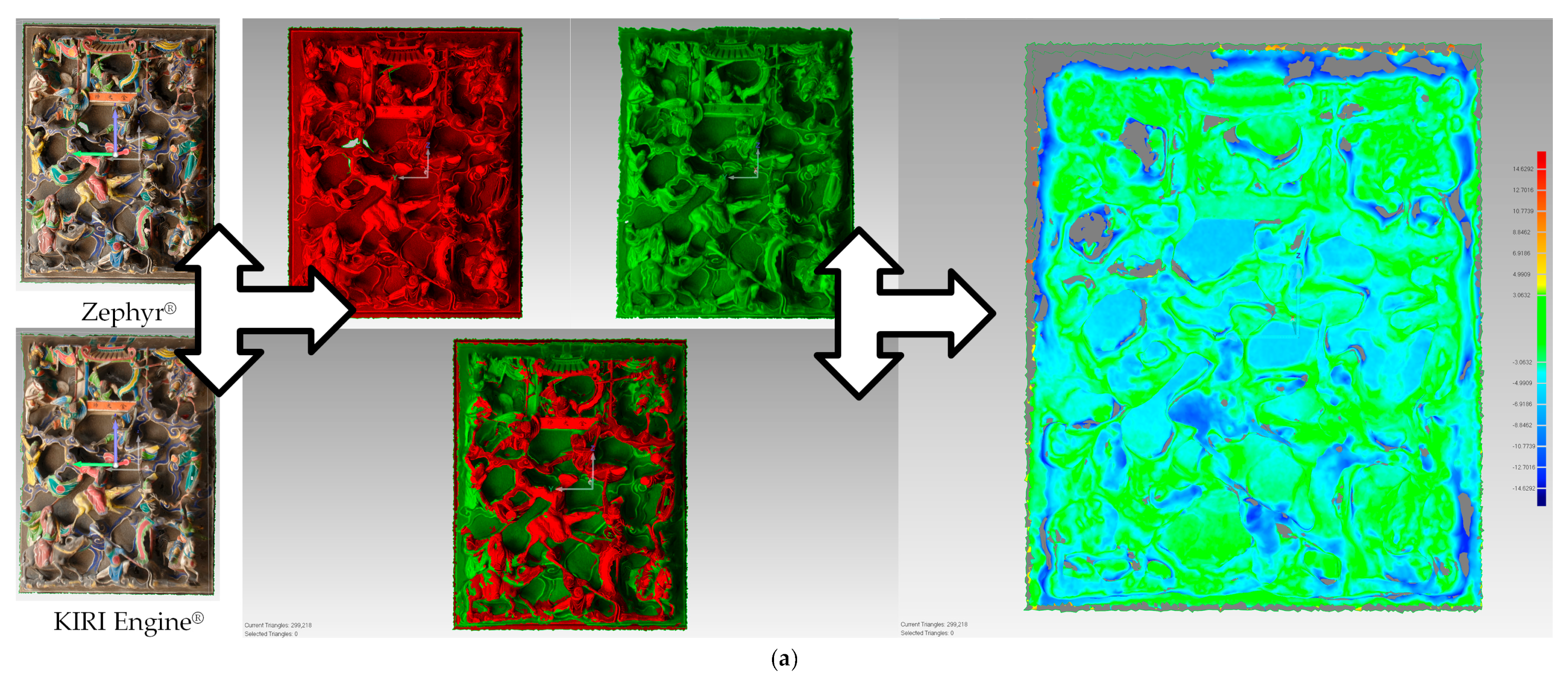

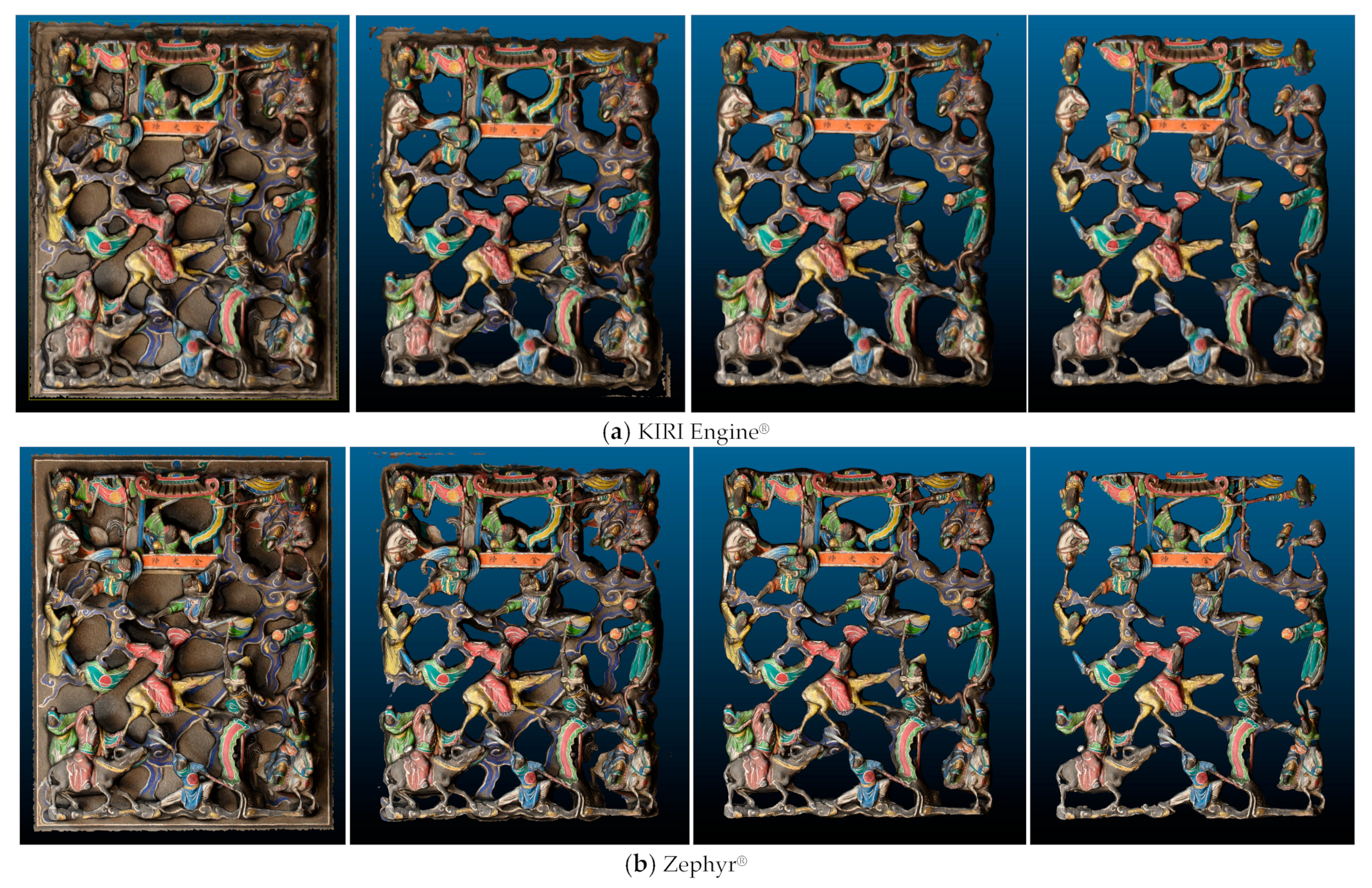

3.4. Three-Dimensional Composition Analysis by Section

To verify the accuracy of the openwork reconstruction, sections parallel to the wall surface were taken to check whether the thin elements were completely distinct from the wall background. Figure 10 shows one of the most notable stone sculptures, which creates flow and tension between interlaced layers of characters and subjects within a shallow depth. The two test sets were reconstructed using KIRI Engine® and Zephyr®, and the latter presented more detail than the former. The depth-based slices, made in CloudCompare, deconstructed the sculpture’s composition layer by layer. The composition is an interconnected complex that includes the title at the top center; the main cluster of characters fighting in the center and lower left; another character on the center right fighting with two mirrors in its hands; and clouds. It links the characters’ body features, the animals they ride, and weapons, flags, and clouds. The clouds have four hierarchical layers, which appear as elevated curves extruding from the background toward the characters. The most elevated layer is located at the top left, with most parts of the characters merged into the background and behind the clouds.
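The idea behind the depth-based slices can be illustrated with a NumPy sketch. It does not reproduce CloudCompare’s own slicing tool. The sketch assumes that the wall normal is known, projects each point onto that normal, and bins the resulting depths into slabs of fixed thickness. The synthetic “relief” is hypothetical, and its wall, figure, and cloud layers sit at made-up depths.

```python
import numpy as np

def depth_slices(points: np.ndarray, normal, thickness: float):
    """Split a point cloud into slabs parallel to a wall plane.

    points: (N, 3) array; normal: wall normal pointing out of the wall
    (any length); thickness: slab thickness in the cloud's units.
    Returns [(slab_start_depth, slab_points), ...] ordered from the wall outward.
    """
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    depth = points @ n                  # projection onto the wall normal
    depth = depth - depth.min()         # 0 = deepest point (the wall)
    layer = np.floor(depth / thickness).astype(int)
    return [(k * thickness, points[layer == k]) for k in range(layer.max() + 1)]

# Synthetic relief: a wall, a figure 0.025 units proud of it, a cloud at 0.055
rng = np.random.default_rng(1)
wall = np.column_stack([rng.uniform(0.0, 1.0, (200, 2)), np.zeros(200)])
figure = np.column_stack([rng.uniform(0.3, 0.6, (50, 2)), np.full(50, 0.025)])
cloud = np.column_stack([rng.uniform(0.1, 0.2, (20, 2)), np.full(20, 0.055)])
relief = np.vstack([wall, figure, cloud])

slices = depth_slices(relief, normal=(0.0, 0.0, 1.0), thickness=0.01)
for start, slab in slices:
    print(f"depth {start:.2f}-{start + 0.01:.2f}: {len(slab)} points")
```

Empty slabs are kept on purpose. A gap between populated slabs is what shows that a thin element stands clear of the wall rather than merging into it.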

3.5. Data Post-Processing

Three-dimensional models are created not only for illustration, but also for AR interaction, animation, and 2D image training as an extended heritage preservation effort.

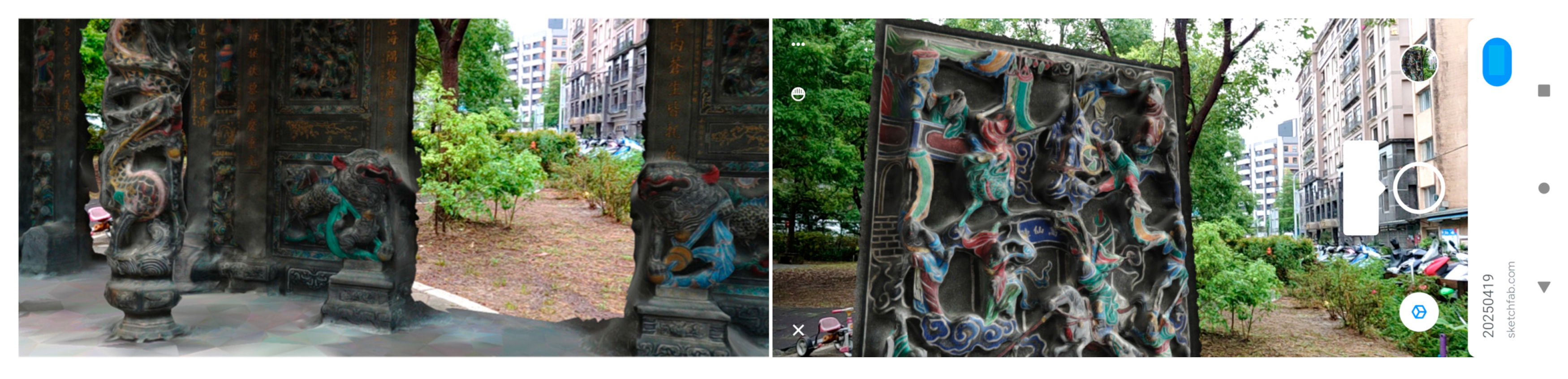

3.5.1. Augmented Reality

AR on Augment® or Sketchfab® (Figure 11), which allows cloud access from a smartphone, enables a sculpture to be inserted into a scene using a portable device and can be used for research or education. Two models were created in Zephyr®. Although their detail was limited by the quality of the original images, the 3D models could be rotated, scaled, and duplicated as needed on a smartphone.

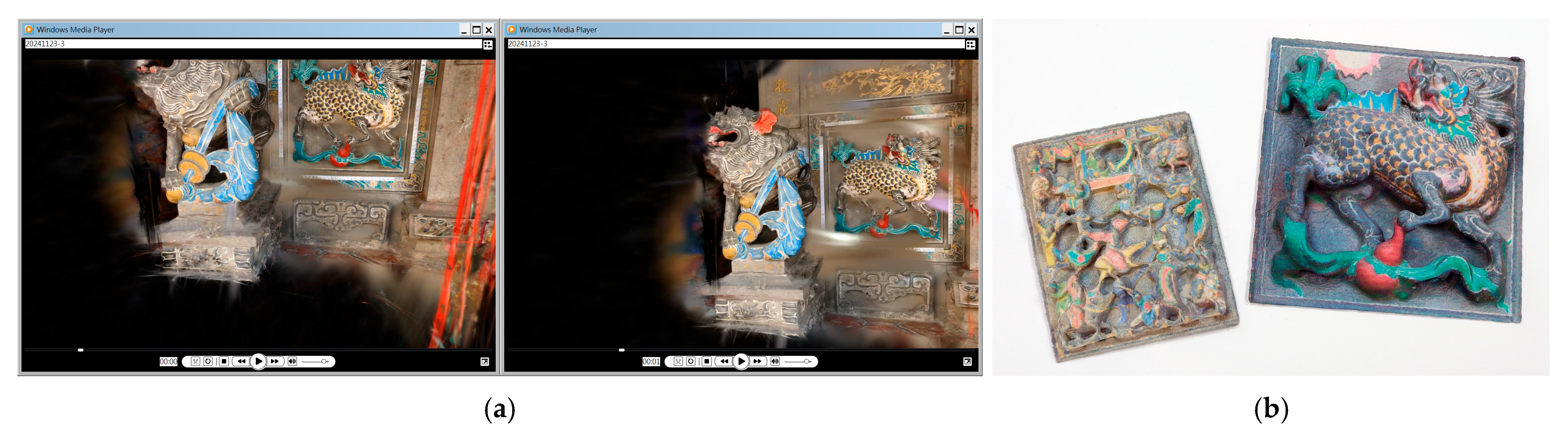

3.5.2. Postshot® Animation and 3D Prints

AI-assisted modeling, conducted using off-the-shelf applications, also supported animation in Postshot® and smartphone AR in Augment® or Sketchfab®. The 3D model of Gongfan Palace was scanned as a point model using reverse engineering technology. For such models, polygon-count reduction is usually applied as a trade-off between computing speed and level of detail. Postshot®, by contrast, uses the trained model directly for animation (Figure 12a), regardless of polygon count. It creates realistic scenes on a basic hardware setup and is supported by game engines (Unreal Engine®) and multimedia timelines (After Effects®).

Three-dimensional printing creates effective physical representations of data. A ComeTrue® T10 printer (1200 × 556 dpi) was used to print 3D color models. Inkjet dyes were printed onto layers of gypsum-like powder to replicate the texture and geometric attributes of the different parts (Figure 12b). The detail of the original reconstruction was enhanced by increasing the model’s resolution so that visual and structural details would be self-explanatory.
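As a rough guide to what the quoted print resolution means physically, the nominal dot pitch can be obtained from 25.4 mm per inch. This sketch assumes that the two dpi figures refer to the two in-plane printing axes. The layer thickness is not given, so the vertical resolution is not covered.

```python
MM_PER_INCH = 25.4

def dot_pitch_mm(dpi: float) -> float:
    """Centre-to-centre spacing of printed dots, in millimetres."""
    return MM_PER_INCH / dpi

# Nominal ComeTrue T10 resolution quoted in the text; which physical axis
# each figure belongs to is an assumption here.
x_pitch = dot_pitch_mm(1200)
y_pitch = dot_pitch_mm(556)
print(f"{x_pitch:.4f} mm x {y_pitch:.4f} mm")  # 0.0212 mm x 0.0457 mm

# A 1 mm detail (e.g. a thin ribbon edge) is spanned by roughly this many dots
print(f"{1 / x_pitch:.1f} x {1 / y_pitch:.1f} dots per mm")
```

On these nominal figures, sub-millimeter carved features remain printable. The effective detail is likely to be limited by the reconstruction and by the powder process rather than by dot spacing.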

3.5.3. Stable Diffusion® Training

Stable Diffusion® was used to reinterpret the original drawings and 3D renderings of the sculptures as alternative representations [26]. The results included images regenerated from Zephyr® renderings using Canny edge conditioning, a simulation of raw stone texture with the paint removed, and more realistic forms obtained via Lineart conditioning (Figure 13).

The novel combined 3D–2D process used the original images to reconstruct new 3D models in Zephyr® and applied new rendering methods to explore new styles with reference to the original object’s position and details. The bottom-up process, from ControlNet to img2img in Stable Diffusion®, drew on vector drawings via Zephyr® renderings and vice versa. The differentiated nature of the original data enabled the original sculpture to be reconstructed synergistically.

4. Discussions

The AI-assisted generation workflow culminated in a recursive reconstruction involving 3D models and 2D images, specifically for the conservation of temple art. This approach can supplement 3D modeling with rich structural and visual detail. The trained 3D models can be assessed, and the analysis can be extended to composition analysis by section.

4.1. Consistent and Inconsistent Reconstruction

AI produces both consistent and inconsistent parts in trained results. Consistent parts can be evaluated against the visual and structural details present in the source data. Inconsistent parts, which may deviate in appearance from what is expected, need auxiliary data to complete the reconstruction. Although inconsistency means that useful outcomes cannot be achieved through training alone, careful inspection or adjustment of the variables usually contributes to a more thorough differentiation and classification of parts considered to be controllable or deconstructive. A seed (or gain), which represents a meta-collection of variables, not only connects to an object’s details but also documents possible styles for future data maintenance. If consistent results deliver answers directly, inconsistent ones prompt us to rethink why the answers are not satisfactory. Their contributions are equally important.

Consistency and inconsistency are not mutually exclusive. In this study’s preservation practice, consistent results came from Postshot®, KIRI Engine®, and Zephyr®, whereas inconsistent results came from RODIN®, which generates results from a limited number of images of a subject. In the RODIN® test, four images rendered from the Zephyr® model were used as input, and the generated results were inconsistent with the original images. Revisiting the site to examine hidden parts or to photograph the back of the object is certainly helpful. When this is not possible, the inconsistent outcome clarifies which region needs attention, helping to elucidate details for future photography plans.

Technological evolution is achieved through adaptive reuse and the acknowledgement of cultural heritage. In other words, it is a continuous process that develops preserved artifacts into inspired outcomes. For example, an image rendered from the 3D model reconstructed by Zephyr® was used for further reconstruction by RODIN® in a new style recently developed via Stable Diffusion® (Figure 14). This reverse-study process simulates how traditional craftsmen interpreted the human figure. It has inspired the reinterpretation and verification of gray areas in the imaginative space created by craftsmanship, and it predicted the unscanned parts of objects. The connection to the existing framework sparks curiosity and overcomes the limits of a formal approach.

4.2. New Structure and Management of Resources, Formats, and Interfaces

Structure-from-motion photogrammetry enables the fast documentation of archaeological features [27], and the digital transition of geological fieldwork is well established, even involving the use of smartphones [28]. A well-documented set of images can serve as a reference for collaborative applications. In this study, the level of detail was restricted by the viewing angles available in the original images. The traditional photogrammetry approach, here Zephyr®, not only produced a more favorable appearance but also rendered images for RODIN® models in a recursive way. In other words, all 3D data were mutually referenced.

Although this method used old imagery, AI-based assistance contributed to a new data structure with more feature-oriented management. The structure also led to a more diversified interface, enabling situated application and delivery, such as 3D printing and AR.

The adaptive reuse of old photos with AI enabled new interpretations in RODIN® with seeds, as well as 2D-oriented training and interpretation using Stable Diffusion®. Compared to large-scale, computationally expensive 3D scanning systems, image-based modeling is an efficient reconstruction alternative. In contrast to traditional VR and animation, polygon-free manipulation was made possible by game engines and multimedia platforms. The 3D data were managed through a 3D cloud platform for AR interaction.

5. Conclusions

Preservation requires constant maintenance, since the demand for it, its format and nature, and the level of interference may change over time. Different approaches not only create novel types of data but also provide opportunities to use new interfaces and platforms. Three-dimensional content, which used to be a collated presentation of heterogeneous data, is now enriched by AI-assisted generation.

This study has emphasized and exemplified the utility of photography-based and AI-assisted reconstructions in the preservation of tangible cultural heritage. Photographs taken 17 years ago provide images of the as-built scenes of the past, but the image-taking process should be enhanced with the more efficient and effective tools and processes currently available.

AI-trained models can and should be assessed. High fidelity is an advantage shared by all of the software tested. Postshot® not only raises the bar regarding fidelity but also creates 3DGS scenes capable of showing reflections and semi-transparent surfaces, which Zephyr® handles less adeptly. KIRI Engine® can convert 3DGS into a mesh, enabling a connection to other 3D applications. RODIN®, which can provide 3D models with a strong resemblance to the original scene from a limited number of images, can be used for initial modeling and can incorporate additional imagery when available.

The AI-assisted 3D reconstruction process supports heritage examination, whether the object is an openwork carving or a 2D image made from a vector drawing of curved thin elements such as cuffs and ribbons, and it can be used to reinterpret the results of RODIN® and Stable Diffusion®. The working process also broadens the range of formats (e.g., 3DGS) applicable to heritage metadata.

Future studies will extend the use of emerging AI cloud computing apps and hand-held devices, such as smartphones, to the ubiquitous management of 3D resources, formats, and interfaces for heritage documentation, inspection, and interaction.