Abstract

Artificial Intelligence (AI) security research is a promising and highly valuable area in the current decade, and deep neural network (DNN) security in particular is receiving increasing attention. DNNs have recently emerged as a prominent tool for addressing complex challenges across various machine learning (ML) tasks, and they are among the most widely employed models in both research and industry. However, DNNs are vulnerable to adversarial attacks, in which slight but intentional perturbations can deceive a DNN model. Consequently, many studies have shown that DNNs are exposed to new kinds of attacks. Given the increasing prevalence of these attacks, researchers need to explore countermeasures that mitigate the associated risks and make DNNs more reliable for critical applications, and a variety of defense mechanisms have been proposed to protect DNNs against adversarial attacks. Our primary focus is the DNN as a foundational technology across ML tasks. In this work, we comprehensively survey the latest research on DNN security across various ML tasks, highlighting the adversarial attacks that cause DNNs to fail and the defense strategies that protect them. We review, explore, and explain the operational mechanisms of prevailing adversarial attacks and defense mechanisms applicable to ML tasks that use DNNs. Our review presents a detailed taxonomy of attacker and defender problems and covers most state-of-the-art attacks and defenses from recent years. Additionally, we systematically examine the measures used to evaluate the success of attack and defense methods. Finally, we address current challenges, open issues, and future research directions in this field.

1. Introduction

In recent years, DNNs have achieved excellent success in an extensive range of real-world applications, including image classification, speech recognition, and self-driving cars [1,2]. Deep learning (DL) comprises two primary stages: transforming input data into features and then transforming features into the desired output. An architecture is required to connect these stages. Different architectures, such as DNNs and AutoML [3], are used to build DL models. DNNs, which are commonly used in this field, have been explored more extensively than AutoML, a promising solution that is beginning to be applied in various research areas [4,5]. However, with the proliferation of DNN-based applications, security is becoming a significant concern. Several studies have shown that adversarial examples, a type of polluted input, can easily deceive a DNN [6,7]. ML algorithms have been known to be vulnerable to adversarial attacks during training or testing for more than a decade [8,9]. Following the first attacks on linear classifiers, proposed in 2004 [10,11], Biggio et al. [12,13] demonstrated for the first time that gradient-based optimization attacks can mislead nonlinear ML algorithms, including support vector machines (SVMs) and neural networks [8]. Although such vulnerabilities are not unique to DL, they gained widespread attention after Szegedy et al. [6,14] demonstrated that even DL algorithms with superhuman performance on image classification tasks suffer from them. A DNN can be induced to misclassify an input image even when only a few pixels are manipulated. As a result, adversarial examples have become an increasingly popular research topic [6,9,13]. As an illustration of an attack during the testing phase, consider a classification task.
In adversarial attacks, subtle perturbations are typically crafted using an optimization algorithm and injected into a legitimate image, creating what is commonly referred to as an adversarial example. When such an adversarial image is classified, it often induces convolutional neural networks (CNNs) to make predictions that deviate from the expected outcome, with high confidence in the incorrect prediction. Although imperceptible to the human eye, these adversarial perturbations can significantly misguide the decisions of a targeted DNN model. In the context of autonomous vehicles, this could result in misidentifying road signs, for example, recognizing a stop sign as a different sign, leading to severe consequences [15]. In the realm of media integrity, particularly deepfake detection, adversarial attacks in digital environments highlight another case: deepfake videos can deceive individuals and systems by generating hyper-realistic yet fabricated content. These manipulations pose significant challenges, including eroding public trust, spreading misinformation, and exploiting cognitive biases [16]. Similar attacks can be executed against other ML tasks, such as policy learning and representation learning.
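To make the idea of an optimization-crafted perturbation concrete, the following is a minimal sketch of the fast gradient sign method (FGSM) [14], applied here to a toy logistic-regression classifier rather than a CNN. The weights, bias, input, and perturbation budget `eps` are hypothetical values chosen for illustration. For a linear model with binary cross-entropy loss, the input gradient has the closed form (p − y)·w, so no autodiff library is needed.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """One FGSM step: x_adv = x + eps * sign(grad_x L(x, y)).

    For binary cross-entropy with a logistic model, grad_x L = (p - y) * w.
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]

# Hypothetical model and input with true label y = 1.
w, b = [2.0, -1.5, 0.5], 0.1
x, y = [0.3, 0.1, 0.2], 1
x_adv = fgsm(w, b, x, y, eps=0.25)

print(f"clean: p(y=1) = {predict(w, b, x):.3f}")
print(f"adv:   p(y=1) = {predict(w, b, x_adv):.3f}")
```

With these values the clean input is assigned to class 1 (p ≈ 0.66), while the perturbed input, which differs from it by at most 0.25 in each feature, drops to p ≈ 0.41 and is misclassified. Real attacks on images usually also clip `x_adv` to the valid pixel range, which this sketch omits.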

In 2013, researchers began investigating DNN security vulnerabilities by adding perturbations to images to induce misclassification. Szegedy et al. [6] introduced pioneering research that identified vulnerabilities in DNNs and introduced adversarial examples. In 2014, Goodfellow et al. [14] introduced the fast gradient sign method (FGSM) as an approach to generating adversarial examples. The field advanced further in 2017 when Carlini and Wagner developed more sophisticated attacks, known as C&W attacks [17], which exposed vulnerabilities in existing defenses and set a new standard for evaluating model robustness. In 2017, Madry et al. [18] introduced a theoretical framework for robust optimization to mitigate such attacks. However, in 2018, Athalye et al. [19] used adaptive attacks to circumvent several defense strategies, opening a line of research on rigorously evaluating defenses for DNNs. In 2020, the focus shifted to backdoor attacks, such as BadNet [20], which exploit training data to embed triggers that evade traditional adversarial defenses [21]. Recent advances between 2021 and 2024 have introduced novel defense mechanisms that leverage explainability and feature-level analysis. These mechanisms also use digital forensics to trace poisoned data in order to address sophisticated threats, including backdoor and clean-label attacks, and they set the stage for further progress in reducing the risks associated with backdoor attacks in ML systems. These advancements reflect the field’s continuous efforts to enhance neural network security. Attacks and defenses on DNNs also develop rapidly according to the stage of the DNN life cycle they target, for example, training-phase or testing-phase attacks. In early work on training-phase attacks, Biggio et al. [12] proposed data poisoning. Gu et al. [20] introduced a backdoor attack that demonstrated how to embed hidden triggers in DNNs during training. In 2020, with the rise of federated learning, Bagdasaryan et al. [22] demonstrated how malicious clients could poison the global model by sending manipulated updates. On the defense side, in 2018, Madry et al. [18] proposed adversarial training, a defense applied during the training phase. In the testing phase, Szegedy et al. [6] proposed adversarial examples. Researchers then proposed further attacks, such as the model extraction attacks of Tramèr et al. [23] in 2016 and the membership inference attacks of Shokri et al. [24] in 2017. Researchers rapidly proposed both reactive and proactive defenses, and in 2022, Shan et al. [25] were the first to apply digital forensics to DNN security, tracing poisoning attacks back to their source.

In 2022, Khamaiseh and Bagagem [15] reviewed security vulnerabilities and open challenges in DNNs. Although their work provides a sound initial review of security problems in image classification, it does not adequately cover the security of adversarial DNNs in other applications built on the major DNN-based tasks (classification, policy learning, and representation learning), nor the corresponding defense mechanisms. We aim to address these gaps by providing a more comprehensive survey of attacks and defense mechanisms and by discussing future research directions in DNN security.

Our review covers recent publications indexed in the most reputable databases, i.e., IEEE, ScienceDirect, and Web of Science (WOS), on adversarial attacks and defense mechanisms for DNNs across various ML approaches and tasks, e.g., classification, regression, and policy learning. The result is a detailed taxonomy of the adversarial attack life cycle, of defense mechanisms and strategies, and of the evaluation measures used to assess each strategy.

1.1. Motivation and Contribution

This paper presents an overview of the most powerful attacks and defenses on DNNs for various ML tasks. Making recent advancements in this field easily accessible helps readers quickly engage with the rapidly growing field of DNN security. It is also crucial to examine the current status and upcoming trends in DNN security and to analyze future research in this area. Several recent articles have reviewed research studies in this field [15,26,27,28,29,30]. A recent study highlights the critical need for more research on DNN security tailored to ML tasks in different applications, pointing out that most adversarial attack research has focused mainly on image classification. This survey differs from previous surveys in several ways: it analyzes adversarial DL attacks and defense mechanisms across various DNN-based ML tasks, provides a precise formulation of both attacker and defender problems, and gives a clear overview of attack strategies and of defense mechanisms against adversarial attacks.

The primary contributions of this study can be summarized as follows:

- We provide an extensive study of state-of-the-art adversarial attack and defense methods across DNN-based ML tasks, e.g., classification, regression, and policy learning, helping researchers understand DNN security for each task.

- We provide a precise formulation of both attacker and defender problems, making them easy to understand for readers with limited knowledge of DNN security.

- We provide a systematic and comprehensive review of the measures used to evaluate the success of attack and defense methods, so that researchers entering the field of DNN security can learn how the success of an attack on, or a defense of, a DNN is evaluated.

- We identify and discuss various open issues and potential future research directions in DNN security, aimed at developing new methods to secure DNN models.

Our study is motivated by the need to address the security challenges in DNNs, a critical area that remains inadequately explored and fragmented in the current AI security literature.

1.2. Paper Organization

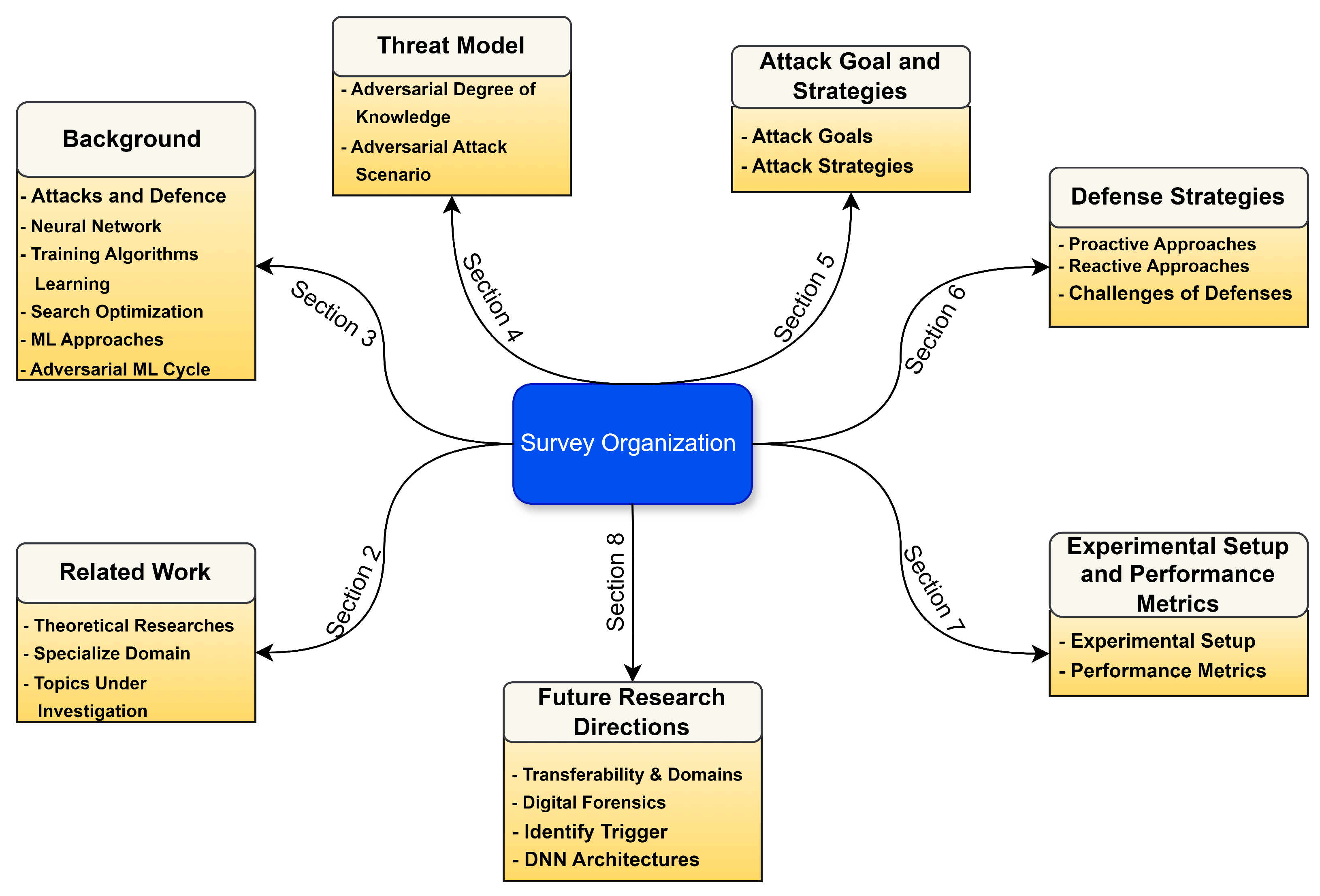

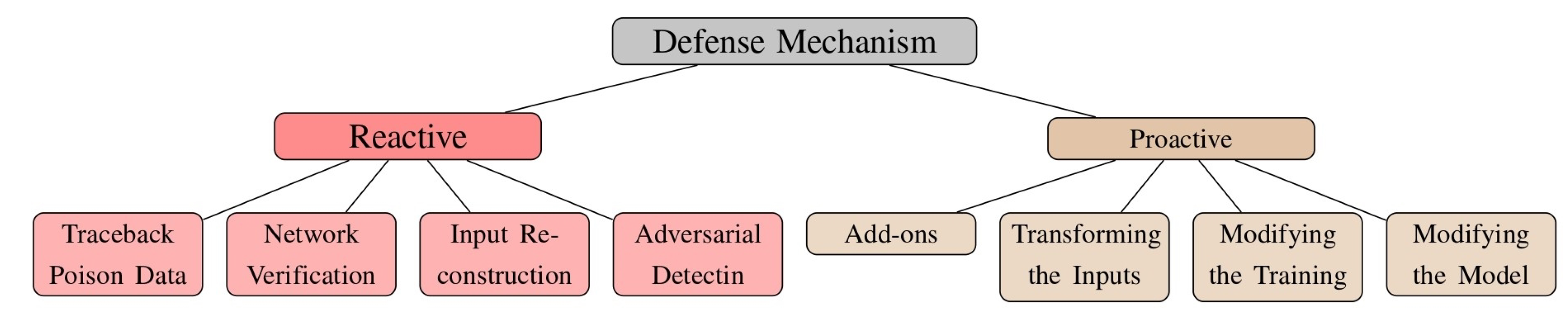

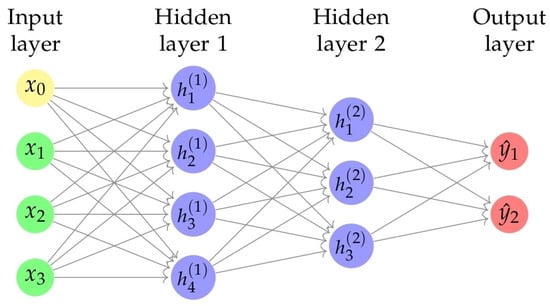

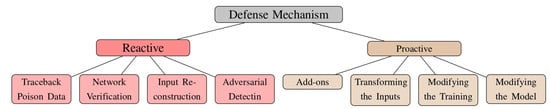

The paper is organized as follows: Section 1 explains the rationale for a detailed review of adversarial threats and countermeasures in the context of DNNs and summarizes the key contributions and layout of the paper. Section 2 investigates the historical development of DNNs, covering their structural designs, training processes, various artificial neural network models, and the tactics used by attackers. Section 3 outlines the strategies adversaries employ, considering their level of knowledge, the attack scenarios they can initiate, the range of adversarial samples that can be created, and the eventual consequences of such attacks. Section 4 examines the objectives of attacks in various ML applications. Section 5 examines contemporary adversarial attacks on DNNs, organizing them into four categories: white-box, extraction, poisoning, and black-box attacks, and discusses their respective challenges, computational techniques, and contexts. Section 6 evaluates current protective measures against adversarial exploits targeting DNNs, classifying them into proactive and reactive categories, and scrutinizes each strategy’s efficacy, limitations, and associated defense techniques. Section 7 summarizes the metrics for assessing the effectiveness of adversarial attacks and their countermeasures. Section 8 summarizes ongoing research and potential ways to address research gaps. Section 9 presents the conclusions and proposes avenues for future research on this topic. Figure 1 shows the organization of the review.

Figure 1.

Organization of the review paper.

2. Related Work

A series of papers [15,27] delve into the theoretical frameworks of adversarial DL within cybersecurity. Further investigations [28,29,31,32] examine its application in particular domains, including computer vision, medical imaging, and NLP. Additional scholarly efforts focus on attacks against unsupervised and reinforcement learning (RL) methods. Table 1 presents a summary of existing reviews.

Table 1.

Summary of existing surveys.

2.1. Theoretical Research

The research presented in [15] extensively reviewed the latest developments in adversarial attack and defense strategies, focusing on CNN-based models for general image classification. The study organizes the fundamental principles, starting with the attacker’s knowledge and objectives, the adversarial scenarios, and the different types of adversarial instances. It provides a comprehensive summary of the methods used in adversarial attacks and defense mechanisms. In addition, it discusses the challenges and applications of adversarial DL and covers the most sophisticated techniques for creating and detecting adversarial examples. In [27], the authors presented a detailed classification system that clearly defines ML applications and adversarial models, enabling other researchers to replicate experiments and advance efforts to make ML applications more sophisticated and resilient. The framework begins with the Adversarial ML Cycle and provides a detailed breakdown of the dataset characteristics, ML architectures, the adversary’s knowledge, capabilities, objectives, and strategies, the defensive reactions, and how these elements interconnect. The paper also assesses and contrasts recent scholarly contributions, uncovering unaddressed issues and obstacles within the domain.

2.2. Specialized Domain

The research in [31] offered an in-depth analysis of adversarial tactics against CNNs for medical imaging, detailing the latest methods for generating and countering adversarial examples and assessing the efficacy and limitations of defense strategies. Similarly, the studies in [31,32] provided a detailed examination of adversarial approaches targeting CNNs in computer vision, covering the foundational principles and advanced techniques for attack and defense. In natural language processing (NLP), ref. [33] explained various adversarial methods and their implications for tasks such as sentiment analysis and machine translation, addressing various attack strategies and defense solutions. Meanwhile, the study in [34] examined the resilience of DL models in computer vision against adversarial threats, reviewing practical evaluations of defenses. Lastly, ref. [35] focused on the evolving landscape of adversarial attacks and defenses in image recognition, providing insights into basic concepts and emerging trends. These surveys underscore the critical importance of advancing cybersecurity measures in DL applications across domains. In ref. [36], the survey extensively examined the current state of adversarial attacks and the corresponding defensive strategies for DL applied to cybersecurity. It explored the complexities of, and potential solutions for, strengthening the robustness and ensuring the security of DL-enabled systems. The study addressed a spectrum of adversarial threats, encompassing various threat models, and evaluated the latest advances in countermeasures designed to thwart such attacks. In addition, it shed light on unresolved issues and considered prospective avenues for future research to enhance the resilience and reliability of DL frameworks in the face of adversarial threats.

2.3. Topics Under Investigation

The study in [29] thoroughly reviewed the literature on adversarial robustness in unsupervised ML, examining the attack and defense mechanisms associated with this paradigm. The research delved into the difficulties, techniques, and practical applications of unsupervised learning under adversarial conditions, covering topics such as privacy preservation, clustering, anomaly detection, and representation learning. In addition, the paper introduced a framework for characterizing attacks on unsupervised learning and pointed out existing research gaps and future research avenues. In ref. [28], the article offered an in-depth analysis of deep reinforcement learning (DRL)-based strategies for smart systems, focusing on improvements in efficiency, robustness, and security. It explored novel attacks targeting DRL and possible defensive actions to counteract them. Moreover, it underscored the unresolved issues and research challenges in devising strategies to counteract attacks on DRL-powered intelligent systems.

3. Background

In this section, we lay the groundwork by introducing critical elements of the research landscape. We begin with the essential concepts underlying adversarial attack and defense methods. We then cover neural network architectures, training and learning algorithms, and the search optimization methods crucial for robust model training, followed by an overview of the main ML approaches and their characteristics. This background is the foundation for the discussions and analyses in the rest of our study.

3.1. Attacks and Defense Methods

Adversarial ML attacks and defense methods build on several mathematical concepts. This subsection summarizes the essential concepts needed to understand how they work.

3.1.1. Gradient Descent

Optimization is the process of minimizing or maximizing an objective function. In ML, optimization seeks the most suitable parameter values, typically by minimizing a cost function. Various algorithms can be used for optimization; gradient descent is one of the most widely used methods for finding optimal parameters in a wide variety of ML algorithms [17]. Gradient descent is a fundamental first-order optimization technique that uses the function’s gradient at the current position to chart a course through the search space.

The fundamental gradient descent algorithm comprises the following steps: (1) computing the gradient of the objective function, $\nabla_\theta J(\theta)$; (2) moving in the direction opposite to the gradient, $-\nabla_\theta J(\theta)$, which is the direction of steepest descent and therefore leads toward a (possibly local) minimum; and (3) choosing the learning rate $\eta$, which dictates the step size towards the minimum. Combining these steps gives the update rule

$$\theta \leftarrow \theta - \eta \, \nabla_\theta J(\theta).$$

Fine-tuning the learning rate is crucial, as it is one of the most significant hyperparameters for achieving high performance in a DNN model.

A higher value of $\eta$ generally makes the ML model learn faster. However, it can substantially degrade model performance, as the algorithm may overshoot the minimum and end up on the other side of the valley. Conversely, a smaller $\eta$ lets the model converge gradually to a local minimum over many iterations, which, unsurprisingly, extends the runtime. This highlights a trade-off between the accuracy of the result and the time needed for parameter updates.
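The update rule θ ← θ − η∇J(θ) and the learning-rate trade-off can be illustrated with a minimal sketch on a one-dimensional quadratic, J(θ) = (θ − 3)², chosen here purely for illustration and not taken from any cited work. Its gradient is 2(θ − 3), so the minimum lies at θ = 3.

```python
def gradient_descent(grad, theta0, lr, steps):
    """Plain gradient descent: theta <- theta - lr * grad(theta)."""
    theta = theta0
    for _ in range(steps):
        theta = theta - lr * grad(theta)
    return theta

def grad_J(theta):
    """Gradient of the illustrative objective J(theta) = (theta - 3)^2."""
    return 2.0 * (theta - 3.0)

for lr in (0.01, 0.1, 1.1):
    final = gradient_descent(grad_J, theta0=0.0, lr=lr, steps=50)
    print(f"lr={lr:<5} theta after 50 steps = {final:.4f}")
```

Each step multiplies the distance to the minimum by |1 − 2η|. With η = 0.01 the iterate is still far from 3 after 50 steps (slow convergence), with η = 0.1 it essentially reaches 3, and with η = 1.1 every step overshoots to the other side of the valley by a growing amount, so the iterates diverge.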

3.1.2. Distance Metric

Distance metrics are integral to quantifying the separation between two points, typically represented as vectors. In essence, these metrics gauge the degree of similarity between two vectors, with a distance of zero indicating complete equivalence under a specific metric. To compute the distance between two vectors, one typically evaluates a norm of their difference. Formally, the $L_p$ norm of a vector $x \in \mathbb{R}^n$ can be defined as follows:

$$\|x\|_p = \left( \sum_{i=1}^{n} |x_i|^p \right)^{1/p}, \qquad p \in \mathbb{R}, \; p \geq 1,$$

where $\mathbb{R}$ represents the set of real numbers. In this context, $p \in \mathbb{R}$ means that $p$ is a real number, and the condition $p \geq 1$ specifies that $p$ must be greater than or equal to 1. The norm $\|x\|_p$ quantifies the distance of the point $x$ from the origin, and the distance between two vectors $x$ and $x'$ is $\|x - x'\|_p$.

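These distance metrics play a pivotal role in generating adversarial attacks, as they quantify how similar an adversarial example is to the original input. In particular, state-of-the-art adversarial attack algorithms frequently rely on the $L_0$, $L_1$, $L_2$, and $L_\infty$ distance metrics [3,11,17,41]. Note that $L_0$, which counts the number of nonzero entries (i.e., the number of changed features), is not a true norm, since it falls outside the definition above with $p \geq 1$; $L_\infty$ is the limit $p \to \infty$, i.e., the largest absolute entry.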
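As a minimal sketch of how these metrics measure the size of a perturbation, the function below computes the distance between an original input and a hypothetical perturbed copy under L0, L1, L2, and L∞. The vectors are made-up values, and the function name `lp_distance` is illustrative rather than taken from any library.

```python
import math

def lp_distance(x, x_adv, p):
    """Distance ||x - x_adv||_p between two equal-length vectors.

    p = 0 counts changed entries (not a true norm); p = math.inf is the max-abs entry.
    """
    diff = [a - b for a, b in zip(x, x_adv)]
    if p == 0:
        return sum(1 for d in diff if d != 0)
    if p == math.inf:
        return max(abs(d) for d in diff)
    return sum(abs(d) ** p for d in diff) ** (1.0 / p)

x     = [0.2, 0.5, 0.9, 0.4]
x_adv = [0.2, 0.8, 0.5, 0.4]   # hypothetical perturbed input: two entries changed

for p in (0, 1, 2, math.inf):
    print(f"L{p}: {lp_distance(x, x_adv, p):.4f}")
```

Here the perturbation changes two features (L0 = 2), its total absolute change is 0.7 (L1), its Euclidean size is 0.5 (L2), and no single feature moves by more than 0.4 (L∞). Attacks constrained in L0 therefore favor sparse changes, while L∞-bounded attacks such as FGSM spread small changes across many features.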

3.2. Neural Network

In this subsection, we systematically explore fundamental neural network concepts within ML, namely ANNs, DNNs, CNNs, and RNNs. By providing a concise yet comprehensive overview of these paradigms, the subsection lays the groundwork for understanding the architectures that form the backbone of modern ML research.

3.2.1. ANNs

ANNs are networks of interconnected neurons inspired by biological neural networks. The first foundational mathematical model of an ANN was the single-layer perceptron, pioneered by Rosenblatt [42], which was later extended into multi-layer perceptrons (MLPs), as described by Nazzal et al. [43]. ANNs were developed as generalized mathematical models of biological neural networks to emulate the learning mechanisms of the human brain [26]. The fundamental architecture of an ANN typically has at least three layers: (1) an input layer, (2) one or more hidden layers, and (3) an output layer. Each layer comprises nodes, the fundamental units of computation. Each node receives input from other nodes or external sources, computes an output, and transmits it to the next layer.

The choice of neural network type depends on the specific problem conditions, with different neural network architectures tailored to various tasks. For instance, a text generation problem might require a recurrent neural network (RNN) for optimal performance.

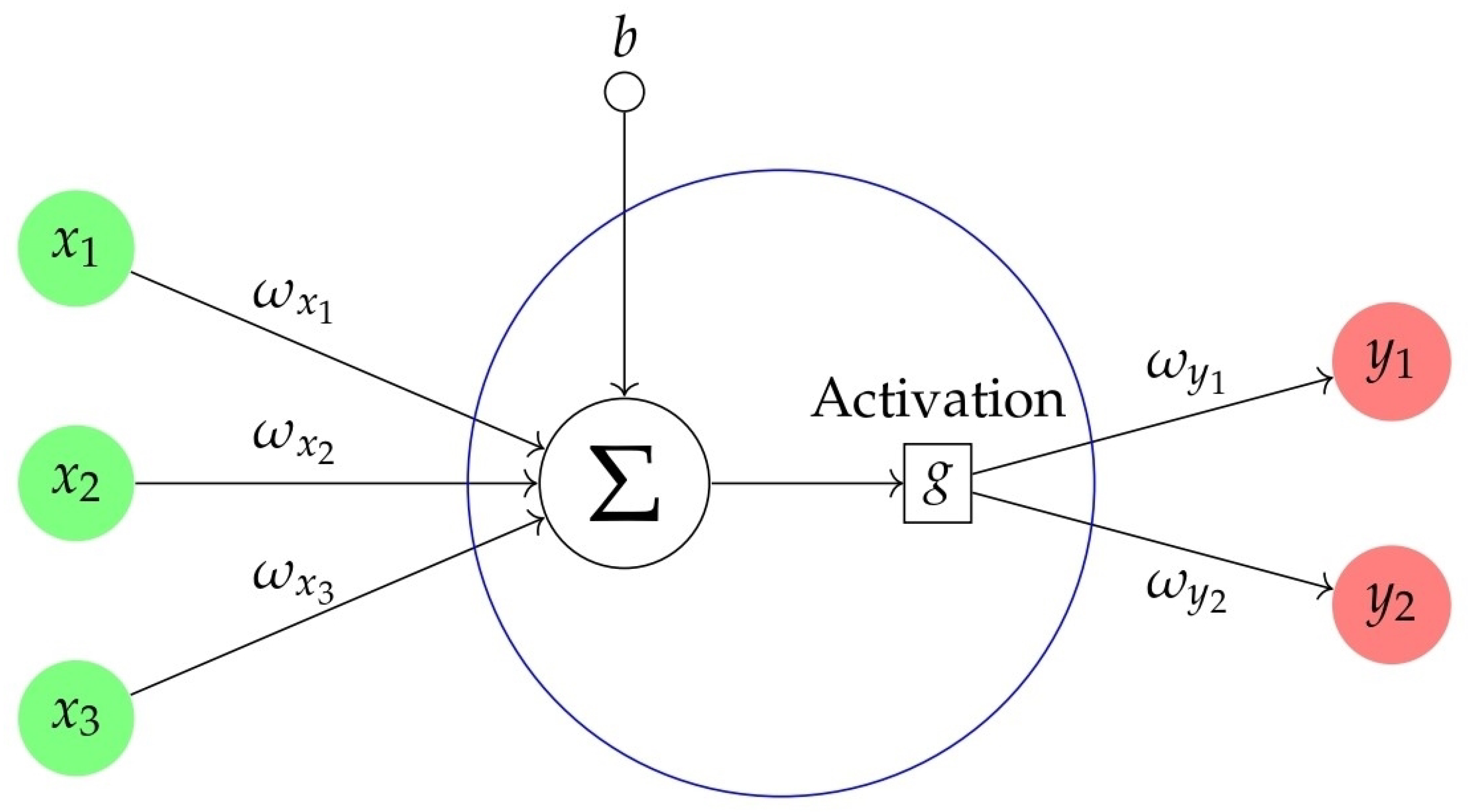

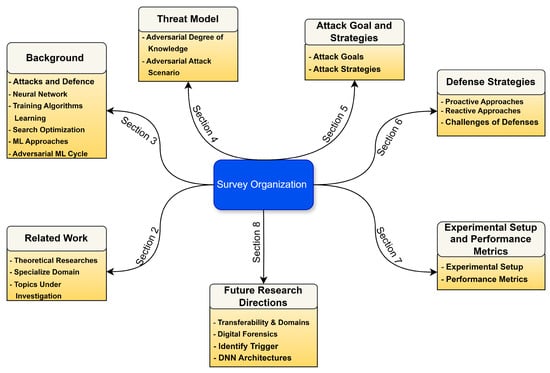

Figure 2 illustrates the basic structure of an artificial neuron. Each neuron receives inputs $x_1, \dots, x_n$, weighted by corresponding coefficients $w_1, \dots, w_n$, along with a bias term $b$. The weighted inputs are combined through a summation, $z = \sum_{i=1}^{n} w_i x_i + b$, followed by an activation function $g$, resulting in the intermediate sum $z$ and the output $y = g(z)$.

Figure 2.

A representation model of ANNs.

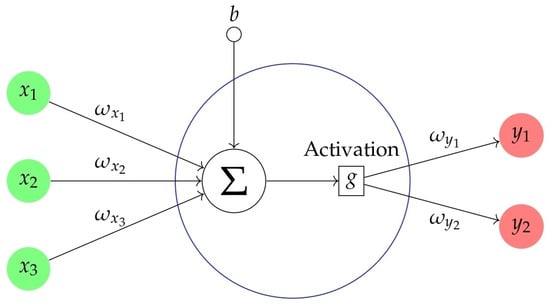
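The neuron computation z = Σ wᵢxᵢ + b followed by y = g(z) can be sketched in a few lines. This assumes a sigmoid activation for g (the neuron model itself does not fix a particular activation), and the inputs, weights, and bias are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x, w, b, g=sigmoid):
    """Single artificial neuron: z = sum_i w_i * x_i + b, output y = g(z)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return z, g(z)

# Hypothetical inputs, weights, and bias.
z, y = neuron(x=[1.0, 2.0, -1.0], w=[0.5, -0.25, 1.0], b=0.5)
print(z, y)
```

Passing a different function as `g` (for example, a ReLU) changes only the activation step; the weighted summation is unchanged.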

3.2.2. DNN

A DL model, often called a DNN, comprises numerous layers with nonlinear activation functions capable of expressing intricate transformations between input data and the desired output.

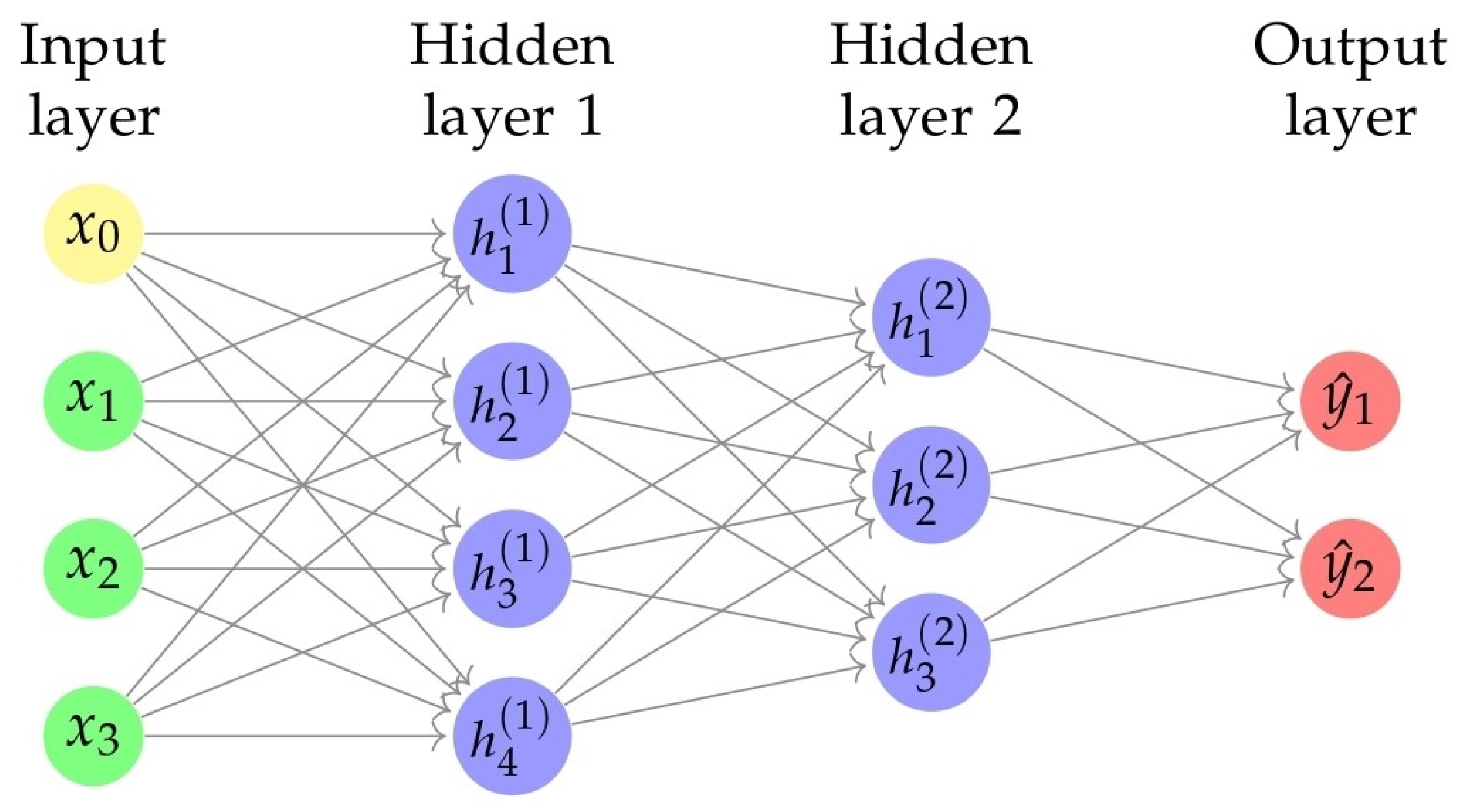

Figure 3 shows a neural network architecture commonly used in DL applications. The network consists of an input layer, two hidden layers, and an output layer. Each layer is made up of nodes, and the connections (or links) between nodes represent the flow of information during the network’s computation. The input layer, labeled “Input Layer”, receives the input signals, denoted as x values. The two hidden layers, labeled “Hidden Layer 1” and “Hidden Layer 2”, contain nodes that represent intermediate features learned by the network. The output layer, labeled “Output Layer”, produces the network’s final output values. The weights on the connections between layers are adjusted during training so that the network learns to make predictions. The diagram helps in understanding the structure of such networks for tasks such as classification or regression.

Figure 3.

DNN with multiple hidden layers.
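A forward pass through a network shaped like the one in Figure 3 can be sketched as repeated fully connected layers. This sketch assumes ReLU activations in the hidden layers and a linear output layer; the layer sizes (2 → 3 → 2 → 1) and all weights are hypothetical.

```python
def relu(v):
    return [max(0.0, a) for a in v]

def dense(W, b, v):
    """Fully connected layer: returns W @ v + b for a list-of-rows matrix W."""
    return [sum(wij * vj for wij, vj in zip(row, v)) + bi for row, bi in zip(W, b)]

def forward(x, layers):
    """Forward pass: ReLU after each hidden layer, identity on the output layer."""
    h = x
    for i, (W, b) in enumerate(layers):
        h = dense(W, b, h)
        if i < len(layers) - 1:
            h = relu(h)
    return h

# Hypothetical weights: 2 inputs -> 3 hidden -> 2 hidden -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, -1.0]),  # Hidden Layer 1
    ([[1.0, 0.0, 1.0], [0.0, 1.0, -1.0]], [0.0, 0.5]),           # Hidden Layer 2
    ([[2.0, -1.0]], [0.1]),                                       # Output Layer
]
print(forward([1.0, 2.0], layers))
```

Training would adjust the entries of `layers`; the forward computation itself stays the same.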

3.2.3. Convolutional Neural Network (CNN)

In 1998, LeCun introduced a deep CNN [44], which has become one of the most widely used models in DL. This model automatically learns features from input data, eliminating the need for manual feature extraction. It is used extensively in image tasks, including object identification, detection, and classification.

A CNN is typically trained with supervised learning, which requires a substantial volume of labeled data. Its design is inspired by the visual cortex of animals. The convolutional layer applies a set of filters to the input image to generate feature maps. Each feature map results from convolving local patches of the input with a weight vector, called a filter, so feature maps are collections of localized weighted sums [45]. Because the same filter is applied repeatedly across all positions of the input (weight sharing), the number of parameters to learn is reduced, which improves training efficiency.

Pooling layers subsample non-overlapping regions of the feature maps, typically using maximum or average pooling, so that subsequent layers can capture more complex features [45]. Finally, fully connected layers function as a conventional neural network, mapping the features to the predicted outputs in the final phase of learning. Deep CNNs typically comprise convolutional layers, subsampling layers, dense layers, and a final softmax layer [45].
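The convolution and pooling steps can be sketched without any framework. Like most DL libraries, this sketch actually computes cross-correlation (the filter is not flipped) and calls it convolution. The 5 × 5 image and the 2 × 2 vertical-edge filter are hypothetical, and there is no padding, a stride of 1 for convolution, and 2 × 2 non-overlapping max pooling.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation, as in most DL libraries), stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[a][c] * image[i + a][j + c] for a in range(kh) for c in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling with a size x size window (stride = size)."""
    return [
        [
            max(fmap[i + a][j + c] for a in range(size) for c in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]

# Hypothetical 5x5 image with a vertical edge, and a vertical-edge filter.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]
fmap = conv2d(image, kernel)   # 4x4 feature map; the same 4 weights are reused everywhere
print(fmap)
print(max_pool(fmap))          # 2x2 pooled map
```

The feature map responds only where the edge lies, and pooling keeps that response while halving each spatial dimension. The single 2 × 2 filter contributes just four parameters regardless of image size, which is the parameter saving that weight sharing provides.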

Researchers have proposed and implemented numerous CNN-based models. Major CNN architectures, including GoogLeNet, VGGNet, and ResNet, are widely regarded for their exceptional performance on various image classification and object recognition benchmarks.

CNNs are often used for image classification and video object detection tasks, as their convolutional filters excel at capturing local spatial information. Network traffic data, on the other hand, does not inherently possess local spatial relationships, so for such data CNNs are better suited to hybrid systems that combine them with RNNs [46,47].

3.2.4. RNNs

RNNs are a category of DL models distinguished by an internal memory that allows them to capture sequential dependencies. In contrast to CNNs, which treat inputs as isolated entities, RNNs take the temporal order of inputs into account, which makes them well suited to tasks involving sequential data [48]. Through a recurrent loop, an RNN applies the same operation to every element of a sequence, so the present computation depends not only on the current input but also on the results of previous computations [49].

RNNs model context dependencies effectively and are therefore vital for a wide variety of sequence-based applications, including NLP, video classification, and speech recognition. In language modeling, for instance, accurately predicting the next word in a sentence requires knowledge of the preceding words. The recurrent structure of RNNs enables them to model these sequential relationships efficiently, yielding more robust representations of temporal and contextual dependencies [50,51].

However, basic RNNs struggle with short-term memory limitations, which restrict their ability to retain information across long sequences [52]. To tackle this limitation, researchers have developed more advanced RNN variants, including LSTM [53], bidirectional LSTM [54], Bayesian RNN [55], GRU [56], and bidirectional GRU [57].
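The recurrent loop can be sketched with a minimal Elman-style RNN that has a scalar hidden state. The update h_t = tanh(w_x·x_t + w_h·h_{t−1} + b) is one standard form of a basic RNN, and the weight values below are hypothetical.

```python
import math

def rnn_forward(xs, w_x, w_h, b, h0=0.0):
    """Elman-style RNN with scalar state: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).

    The same weights (w_x, w_h, b) are reused at every time step.
    """
    h, states = h0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Hypothetical weights; both sequences contain the same values in a different order.
params = dict(w_x=1.0, w_h=0.8, b=0.0)
early = rnn_forward([1.0, 0.0, 0.0], **params)
late = rnn_forward([0.0, 0.0, 1.0], **params)
print(early)
print(late)
```

The two sequences contain the same values, yet they end in different final states, so the order of the inputs matters. In `early`, the influence of the first input shrinks at every subsequent step, a small-scale view of the short-term memory limitation that gated variants such as LSTM and GRU are designed to ease.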

3.3. Training and Learning Algorithms

The training algorithm aims to find network parameters that minimize the difference between the network’s predictions on the training inputs and the ground-truth labels.

3.3.1. Backpropagation

In backpropagation, input values are fed forward through the ANN, and the resulting errors are calculated and propagated backward through the network. The ANN’s weights and biases are iteratively updated to minimize the cost function: the model computes each parameter update by taking the gradient of the loss function with respect to that parameter, obtained by applying the chain rule layer by layer. This forward and backward cycle repeats until the cost function is minimized or stops improving [58].
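The forward and backward cycle can be sketched for a tiny network with one input, three tanh hidden units, and one linear output, trained on mean squared error. The architecture, the toy regression target sin(3t), the learning rate, and the epoch count are all illustrative assumptions. The gradients are derived by hand with the chain rule, which is what backpropagation automates in larger networks.

```python
import math
import random

def forward(params, x):
    """Forward pass: one hidden layer of tanh units and a linear output unit."""
    w, b, v, c = params
    h = [math.tanh(wj * x + bj) for wj, bj in zip(w, b)]
    return h, sum(vj * hj for vj, hj in zip(v, h)) + c

def loss_and_grads(params, data):
    """Mean squared error and its gradients, computed by backpropagation (chain rule)."""
    w, b, v, c = params
    n = len(data)
    gw, gb, gv, gc = [0.0] * len(w), [0.0] * len(b), [0.0] * len(v), 0.0
    loss = 0.0
    for x, y in data:
        h, y_hat = forward(params, x)                  # 1) feed the input forward
        err = y_hat - y
        loss += err ** 2 / n
        d_out = 2.0 * err / n                          # 2) dL/dy_hat at the output
        gc += d_out
        for j in range(len(w)):
            gv[j] += d_out * h[j]                      # 3) propagate the error backward...
            d_pre = d_out * v[j] * (1.0 - h[j] ** 2)   # ...through tanh' = 1 - tanh^2
            gw[j] += d_pre * x
            gb[j] += d_pre
    return loss, (gw, gb, gv, gc)

def train(params, data, lr=0.1, epochs=500):
    """Iteratively move every weight and bias against its gradient."""
    for _ in range(epochs):
        _, (gw, gb, gv, gc) = loss_and_grads(params, data)
        w, b, v, c = params
        params = (
            [p - lr * g for p, g in zip(w, gw)],
            [p - lr * g for p, g in zip(b, gb)],
            [p - lr * g for p, g in zip(v, gv)],
            c - lr * gc,
        )
    return params

rng = random.Random(0)
data = [(t, math.sin(3.0 * t)) for t in (i / 10.0 for i in range(-10, 11))]  # toy regression set
init = (
    [rng.uniform(-1, 1) for _ in range(3)],   # w: input-to-hidden weights
    [rng.uniform(-1, 1) for _ in range(3)],   # b: hidden biases
    [rng.uniform(-1, 1) for _ in range(3)],   # v: hidden-to-output weights
    0.0,                                      # c: output bias
)
before, _ = loss_and_grads(init, data)
after, _ = loss_and_grads(train(init, data), data)
print(f"MSE before training: {before:.4f}, after: {after:.4f}")
```

A common sanity check for a hand-written backward pass is to compare it against a finite-difference estimate of the same derivative. The two should agree to many decimal places.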

3.3.2. Self-Organizing Map

Self-organizing maps (SOMs) are unsupervised learning algorithms that construct spatial representations of multidimensional data, typically on two-dimensional grids. This method preserves the topological and metric relationships between data points by organizing them on a grid. The SOM algorithm uses a neighborhood function to map similar data samples to nearby units on the grid. In response to input vectors, the network weights are adjusted iteratively to reveal the underlying structure of the data. Through this competitive learning process, the algorithm clusters similar data points while keeping dissimilar ones apart, until the map effectively represents the input space [59].
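The competitive learning loop can be sketched as follows. For brevity, this sketch assumes a one-dimensional grid of 4 units rather than a two-dimensional one, a Gaussian neighborhood function, and linearly decaying learning rate and neighborhood radius. These choices, the toy two-cluster data, and the helper names are illustrative assumptions.

```python
import math
import random

def train_som(data, n_units=4, epochs=50, lr0=0.5, sigma0=1.5, seed=0):
    """Minimal 1-D self-organizing map for 2-D inputs.

    For each input, the best-matching unit (BMU) and its grid neighbors
    (weighted by a Gaussian neighborhood function) move toward the input.
    """
    rng = random.Random(seed)
    weights = [[rng.random(), rng.random()] for _ in range(n_units)]
    for epoch in range(epochs):
        frac = epoch / epochs
        lr = lr0 * (1.0 - frac)                   # learning rate decays linearly
        sigma = max(sigma0 * (1.0 - frac), 0.1)   # neighborhood radius shrinks
        for x in rng.sample(data, len(data)):     # visit inputs in random order
            bmu = min(range(n_units), key=lambda k: math.dist(weights[k], x))
            for k in range(n_units):
                h = math.exp(-((k - bmu) ** 2) / (2.0 * sigma ** 2))
                weights[k] = [w + lr * h * (xi - w) for w, xi in zip(weights[k], x)]
    return weights

def quantization_error(weights, data):
    """Mean distance from each input to its best-matching unit."""
    return sum(min(math.dist(w, x) for w in weights) for x in data) / len(data)

# Hypothetical data: two tight clusters near (0.1, 0.1) and (0.9, 0.9).
data_rng = random.Random(1)
data = [[0.1 + 0.05 * data_rng.random(), 0.1 + 0.05 * data_rng.random()] for _ in range(20)]
data += [[0.9 + 0.05 * data_rng.random(), 0.9 + 0.05 * data_rng.random()] for _ in range(20)]

initial = train_som(data, epochs=0)   # same seed, so these are the starting weights
trained = train_som(data)
print(f"quantization error: {quantization_error(initial, data):.3f} -> "
      f"{quantization_error(trained, data):.3f}")
```

The drop in quantization error shows the units moving onto the data. The shrinking neighborhood makes early updates broad (organizing the map) and late updates local (fine-tuning individual units).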

3.3.3. Autoencoder

An autoencoder’s architecture encodes input data into a compressed representation in a lower-dimensional space and then reconstructs the input data from this representation as accurately as possible. Training an autoencoder is unsupervised, meaning it does not require labeled data. The network trains to minimize the reconstruction error, representing the difference between the original input and its reconstruction. This process involves backpropagation to adjust the network weights, similar to other neural networks [60].
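A minimal sketch of this unsupervised training loop, assuming a linear autoencoder with a 2 → 1 → 2 architecture (encoder weights `e`, decoder weights `d`) and hypothetical 2-D data lying on the line x₂ = 2x₁, so that a one-dimensional code can in principle reconstruct it exactly. No labels appear anywhere: the target of each reconstruction is the input itself.

```python
import random

def encode_decode(e, d, x):
    """Linear autoencoder 2 -> 1 -> 2: code h = e . x, reconstruction x_hat = d * h."""
    h = e[0] * x[0] + e[1] * x[1]
    return h, [d[0] * h, d[1] * h]

def reconstruction_error(e, d, data):
    """Mean squared difference between each input and its reconstruction."""
    total = 0.0
    for x in data:
        _, x_hat = encode_decode(e, d, x)
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(data)

def train_autoencoder(data, lr=0.05, epochs=2000, seed=0):
    """Unsupervised training: gradient descent on the reconstruction error (no labels)."""
    rng = random.Random(seed)
    e = [rng.uniform(-0.5, 0.5) for _ in range(2)]   # encoder weights
    d = [rng.uniform(-0.5, 0.5) for _ in range(2)]   # decoder weights
    n = len(data)
    for _ in range(epochs):
        ge, gd = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            h, x_hat = encode_decode(e, d, x)
            r = [x_hat[0] - x[0], x_hat[1] - x[1]]           # residual
            dh = 2.0 * (r[0] * d[0] + r[1] * d[1]) / n        # backpropagate into the code
            for i in range(2):
                gd[i] += 2.0 * r[i] * h / n
                ge[i] += dh * x[i]
        e = [ei - lr * g for ei, g in zip(e, ge)]
        d = [di - lr * g for di, g in zip(d, gd)]
    return e, d

# Hypothetical 2-D data on the line x2 = 2 * x1, so a 1-D code can capture it.
data = [(t, 2.0 * t) for t in (i / 10.0 for i in range(-10, 11))]
e0, d0 = train_autoencoder(data, epochs=0)   # untrained weights (same seed)
e, d = train_autoencoder(data)
print(f"reconstruction error: {reconstruction_error(e0, d0, data):.4f} -> "
      f"{reconstruction_error(e, d, data):.6f}")
```

Practical autoencoders add nonlinear activations and more layers, but the training signal is the same: the network is penalized for any difference between its input and its reconstruction.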

3.4. Search Optimization Methods

Optimization methods are critical to solving complex problems in various disciplines. There are several optimization methods, each with its strengths and suitable application scenarios. We will delve into some of these methods, including iterative, heuristic, greedy, and metaheuristic methods.

3.4.1. Iterative Methods

Iterative methods are algorithms that converge to a solution through repeated approximation. They typically start with an initial guess of the solution and progressively improve it by repeatedly applying an update step. This approach is common in numerical analysis and can be used for various optimization problems. The key idea is that each iteration brings the solution closer to the optimum, and the process continues until a desired level of accuracy is reached or the improvements become negligible [61].
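The pattern of an initial guess, a repeated update, and a stopping rule can be sketched generically. As an illustrative example, not one drawn from [61], the update below is Newton's iteration for √2, x ← (x + 2/x)/2. The tolerance `tol` and the cap `max_iter` are assumed stopping parameters.

```python
def iterate_until_converged(update, x0, tol=1e-10, max_iter=100):
    """Generic iterative method: repeat x <- update(x) until the change is below tol."""
    x = x0
    for k in range(1, max_iter + 1):
        x_new = update(x)
        if abs(x_new - x) < tol:    # improvement has become negligible
            return x_new, k
        x = x_new
    return x, max_iter              # give up after max_iter updates

# Example: Newton's (Heron's) iteration for sqrt(2), starting from the guess 1.0.
root, iters = iterate_until_converged(lambda x: 0.5 * (x + 2.0 / x), x0=1.0)
print(root, iters)
```

Starting from 1.0, this iteration reaches full double precision in only a handful of updates, because each step roughly doubles the number of correct digits.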

3.4.2. Heuristic Methods

Heuristics are problem-solving methods that take a practical approach to finding satisfactory solutions when optimal solutions are impractical to obtain. Rather than being exhaustive, they focus on speed and efficiency. Heuristic methods are typically used when researchers face a large and complex search space that makes an exhaustive search impractical. They provide suitable solutions that may not be the best possible but are obtained relatively quickly [62].

3.4.3. Greedy Methods

Greedy algorithms follow the problem-solving heuristic of making the locally optimal choice at each stage, assuming that a global optimum can be reached by choosing a local optimum at each step. These algorithms are known for their simplicity and are used for optimization problems whose solution involves making a series of choices. However, greedy methods do not always produce the optimal solution, especially when a globally optimal solution cannot be built from locally optimal choices [62].
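A classic way to see both the appeal and the failure mode of greedy methods is coin change. The sketch below, using hypothetical coin denominations, compares a greedy strategy that always takes the largest coin that fits with an exact dynamic-programming solution.

```python
def greedy_coin_change(amount, coins):
    """Greedy: repeatedly take the largest coin that still fits (locally optimal choice)."""
    used = []
    for c in sorted(coins, reverse=True):
        while amount >= c:
            amount -= c
            used.append(c)
    return used if amount == 0 else None

def optimal_coin_count(amount, coins):
    """Exact minimum number of coins via dynamic programming, for comparison."""
    best = [0] + [float("inf")] * amount
    for a in range(1, amount + 1):
        for c in coins:
            if c <= a and best[a - c] + 1 < best[a]:
                best[a] = best[a - c] + 1
    return best[amount]

print(greedy_coin_change(30, [1, 10, 25]))   # greedy commits to 25 first
print(optimal_coin_count(30, [1, 10, 25]))
```

With coins {1, 10, 25}, the greedy choice of 25 forces five 1-coins (six coins in total), while three 10-coins are optimal. The locally best first step rules out the global optimum. With coins {1, 5, 10, 25}, the same greedy rule happens to be optimal, which is why greedy methods work well on some problems and fail on others.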

3.4.4. Metaheuristic Methods

Metaheuristics are high-level frameworks or strategies that guide lower-level heuristics in searching for solutions to optimization problems. They are designed to escape local optima and to search the solution space robustly. Researchers often apply nature-inspired metaheuristic methods to a wide range of problems; examples include simulated annealing, particle swarm optimization, and genetic algorithms. They are particularly useful for complex problems where other methods fail to find satisfactory solutions in a reasonable time. Each of these methods has its place among optimization algorithms, and the choice of method typically depends on the problem’s specifics, the required precision, the complexity of the solution space, and the available computational resources [63].
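As a minimal sketch of how a metaheuristic escapes local optima, the following implements simulated annealing with the Metropolis acceptance rule and geometric cooling. It minimizes a one-dimensional Rastrigin function, a standard multimodal test function with a local minimum near every integer and a global minimum at x = 0. The step size, starting temperature, cooling factor, and iteration count are illustrative assumptions.

```python
import math
import random

def acceptance_probability(delta, temperature):
    """Metropolis criterion: always accept improvements, accept worse moves with prob. exp(-delta/T)."""
    if delta <= 0:
        return 1.0
    return math.exp(-delta / temperature)

def simulated_annealing(f, x0, step=0.5, t0=10.0, cooling=0.995, iters=5000, seed=0):
    """Minimize f over the reals with simulated annealing and geometric cooling."""
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best, f_best = x, fx
    t = t0
    for _ in range(iters):
        cand = x + rng.gauss(0.0, step)            # random neighbor of the current solution
        f_cand = f(cand)
        if rng.random() < acceptance_probability(f_cand - fx, t):
            x, fx = cand, f_cand                   # may move uphill to escape a local optimum
            if fx < f_best:
                best, f_best = x, fx
        t = max(t * cooling, 1e-8)                 # cool down: uphill moves become rarer
    return best, f_best

def rastrigin_1d(x):
    """Multimodal test function: local minima near every integer, global minimum f(0) = 0."""
    return x * x - 10.0 * math.cos(2.0 * math.pi * x) + 10.0

best, f_best = simulated_annealing(rastrigin_1d, x0=4.0)
print(f"best x = {best:.3f}, f(best) = {f_best:.4f}")
```

A pure downhill search started at x = 4 would stay in the local minimum near 4. Early on, the high temperature lets the search accept uphill moves and cross the barriers between minima, and as the temperature falls it settles into a low-lying basin.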

3.5. ML Approaches

In this subsection, we delve into foundational concepts encompassing common ML approaches, specifically focusing on three prominent paradigms: supervised, unsupervised, and RL.

3.5.1. Supervised

It is the most prevalent form of ML, whether deep or not, and it predominantly uses labeled data. Consider a scenario in which we aim to build a system that classifies images into categories such as houses, cars, people, or pets. We first assemble a large dataset of such images, each labeled with its category. During training, the machine is shown an image and produces an output vector of scores, one per category. The objective is for the correct category to receive the highest score, a goal that is usually achieved through training [26].

We compute an objective function that measures the machine’s performance by quantifying the error, or distance, between the output scores and the desired pattern of scores. The machine then adjusts its internal tunable parameters, often called ‘weights’, to reduce this error. The weights are real-valued parameters that act as adjustable controls determining how the machine maps inputs to outputs. A typical DL system may have hundreds of millions of tunable weights and be trained on hundreds of millions of labeled examples [26].
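The training loop just described can be sketched with a linear softmax classifier trained by gradient descent on cross-entropy. The loop computes scores, measures the error against the desired score pattern with an objective function, and then adjusts the weights. The toy three-class data, the learning rate, and the helper names are assumptions chosen for illustration. A deep network follows the same loop with many more parameters.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_step(W, b, X, y, lr=0.1):
    """One gradient-descent step on the mean cross-entropy; updates W and b in place."""
    n, k = X.shape[0], W.shape[1]
    probs = softmax(X @ W + b)                 # one score vector per example
    target = np.eye(k)[y]                      # desired score pattern (one-hot)
    loss = -np.mean(np.log(probs[np.arange(n), y] + 1e-12))
    grad_scores = (probs - target) / n         # d(loss)/d(scores)
    W -= lr * X.T @ grad_scores                # adjust the tunable weights
    b -= lr * grad_scores.sum(axis=0)
    return loss


rng = np.random.default_rng(0)
centres = np.array([[0.0, 3.0], [3.0, 0.0], [-3.0, -3.0]])   # toy 3-class data (an assumption)
y = rng.integers(0, 3, size=300)
X = centres[y] + rng.normal(scale=0.7, size=(300, 2))

W, b = np.zeros((2, 3)), np.zeros(3)
losses = [train_step(W, b, X, y) for _ in range(200)]
accuracy = np.mean((X @ W + b).argmax(axis=1) == y)
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, training accuracy {accuracy:.2f}")
```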

3.5.2. Unsupervised

Unsupervised ML differs from supervised ML primarily in the absence of a labeled training set. In addition, although most clustering algorithms optimize a well-defined criterion, they do not guarantee that the globally optimal solution is reached. In principle, all possible partitions of the data would have to be examined, but even moderate sample sizes make this impractical, so a heuristic approach is used instead. In this case, it is advisable to rerun the algorithm from different starting parameters where possible [26].
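To illustrate the restart advice, the sketch below assumes k-means (Lloyd’s heuristic) as the clustering algorithm; [26] does not specify one here. Each run may converge to a different local optimum of the within-cluster sum of squares (inertia). The sketch therefore runs k-means from several random starting centroids and keeps the best result. The data and function names are hypothetical.

```python
import numpy as np


def assign(X, centroids):
    """Index of the nearest centroid for every point."""
    d = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)


def kmeans(X, k, rng, n_iter=100):
    """Lloyd's heuristic: alternate assignment and centroid updates from a random start."""
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        labels = assign(X, centroids)
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                        else centroids[j] for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    labels = assign(X, centroids)
    inertia = float(((X - centroids[labels]) ** 2).sum())   # within-cluster sum of squares
    return labels, centroids, inertia


def kmeans_restarts(X, k, n_restarts=10, seed=0):
    """Run from several starting points and keep the lowest-inertia local optimum."""
    rng = np.random.default_rng(seed)
    runs = [kmeans(X, k, rng) for _ in range(n_restarts)]
    return min(runs, key=lambda run: run[2])


# Hypothetical data: three Gaussian blobs.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(c, 0.5, size=(50, 2)) for c in [(0, 0), (5, 5), (0, 5)]])
labels, centroids, inertia = kmeans_restarts(X, k=3)
print(centroids.round(2), round(inertia, 2))
```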

3.5.3. RL

RL is a learning method in which an agent determines the best strategies by interacting with its environment. RL agents learn differently from supervised and unsupervised learners because they do not require a pre-collected training dataset. Instead, they learn from observations made during real-time interactions with the environment. Robotics, control, and many other real-world problems can be solved through RL using a trial-and-error approach. The main drawbacks of RL algorithms in practical applications are their slow learning speed and their difficulty learning in complex settings.

An RL agent acquires knowledge by interacting with its dynamic environment and refining its behavior through trial and error [64,65]. The agent receives a single reward signal that evaluates its performance in the given situation. This type of feedback offers less detailed guidance than supervised learning, where the learner is explicitly given the correct actions to perform [66]. However, it provides more guidance than unsupervised learning, where the learner must discover structure on its own without any explicit performance feedback [67].
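The simplest RL setting, a multi-armed bandit with an ε-greedy policy, shows this reward-driven, trial-and-error loop. The example is an assumed toy and not an algorithm from [64,65,66,67]. The agent never sees the correct action, only a noisy scalar reward, and it updates its value estimates incrementally.

```python
import numpy as np


def epsilon_greedy_bandit(true_means, n_steps=5000, epsilon=0.1, seed=0):
    """Learn action values from scalar rewards alone, by trial and error."""
    rng = np.random.default_rng(seed)
    k = len(true_means)
    q = np.zeros(k)        # current estimate of each action's value
    counts = np.zeros(k)   # how many times each action has been tried
    total = 0.0
    for _ in range(n_steps):
        if rng.random() < epsilon:
            a = int(rng.integers(k))          # explore: pick a random action
        else:
            a = int(np.argmax(q))             # exploit: best action so far
        r = rng.normal(true_means[a], 1.0)    # the environment returns only a noisy reward
        counts[a] += 1
        q[a] += (r - q[a]) / counts[a]        # incremental sample-average update
        total += r
    return q, total / n_steps


# Hypothetical arm means; the agent does not know them.
q, avg_reward = epsilon_greedy_bandit([0.2, 1.0, 0.5])
print(q.round(2), round(avg_reward, 2))
```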

3.6. Adversarial ML Cycle

Research on the security aspects of ML, focusing on adversarial knowledge, was initially introduced in [68]. Subsequently, researchers proposed a holistic approach to incorporate both the goal and capabilities of adversaries into the model, as documented in [69]. More recently, Biggio et al. argued for the construction of a well-defined adversarial model, which encompasses four critical dimensions: goal, knowledge, capability, and attacking strategy [70].

To elaborate, the adversary’s goal can be defined by the expected impact of the attack (the type of security violation) and by its specificity. For example, an attacker’s goal might be a non-selective integrity attack that causes high false positive and false negative rates in a classifier. Alternatively, the goal could be a targeted privacy violation that illicitly acquires sensitive data about a specific user.

Adversarial knowledge falls into two groups. Depending on whether the attacker has access to the training data, features, learning algorithm, decision-making process, classifier settings, or feedback information, attacks are classified as either partial-knowledge or full-knowledge scenarios. The attacker’s ability to launch adversarial attacks depends on how much influence they have over the training and testing datasets. This capability can be evaluated qualitatively from three angles: (1) whether the security threat has a causative or exploratory impact, (2) the proportion of training and testing data under the attacker’s control, and (3) the extent to which features and parameters are known to the attacker.

Lastly, the attacking strategy encompasses an adversary’s specific actions to effectively manipulate training and testing data to achieve their objectives. It may include decisions about data manipulation, category label modifications, and feature tampering.

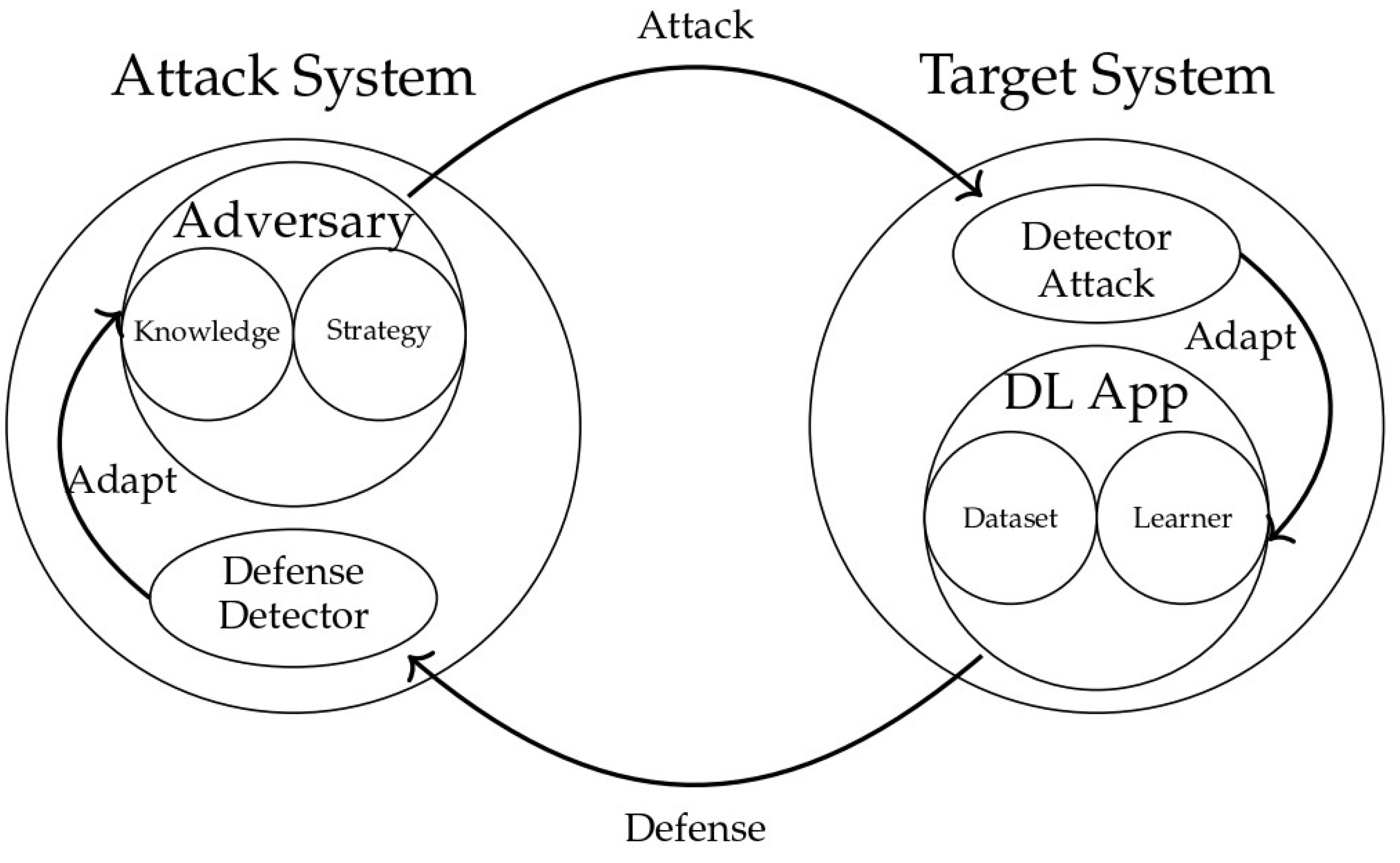

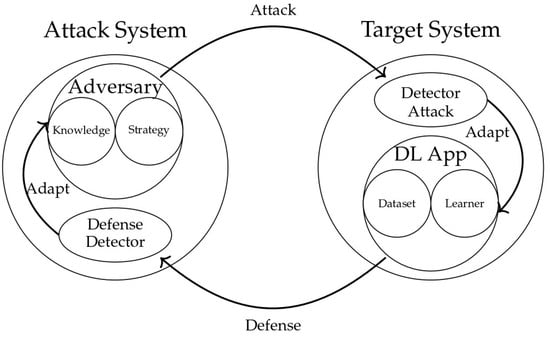

Figure 4 illustrates the life cycle of adversarial attacks on DL systems. The diagram has two main components: the “Attack System” on the left and the “Target System” on the right. In the Attack System, the adversary draws on “Knowledge” and “Strategy” to develop attacks. These attacks target the Target System, which contains a “DL App” (DL application). The life cycle involves a continuous interplay between the Attack and Target Systems, with arrows indicating the flow of attacks and the corresponding defenses. Figure 4 also places “Defense Detectors” in both the Attack and Target Systems, emphasizing the dynamic nature of adversarial interactions. The overarching theme is the cyclical adaptation of attack and defense strategies. This reflects the continual evolution of adversarial techniques and the need for responsive defense mechanisms in DL applications.

Figure 4.

Adversarial DL cycle.

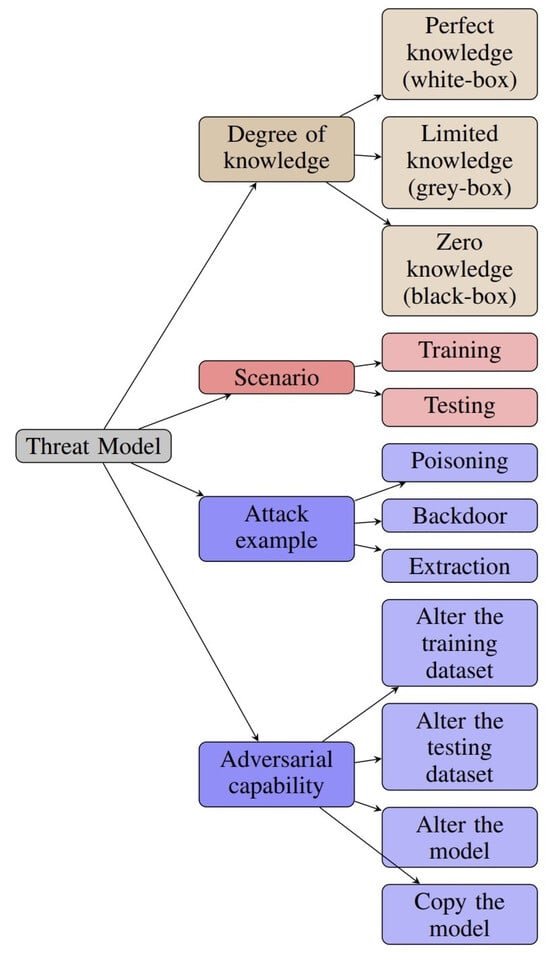

4. Threat Model

A threat is a prospective factor that could lead to the compromise of assets and organizations. Illustrative categories of threats include impersonation of user identity, data manipulation, repudiation, exposure to unauthorized information, service disruption, and unauthorized privilege escalation [71].

In adversarial attacks on classification tasks, the threat model described in [72] involves a malicious attacker who tries to deceive a DNN-based image classifier with adversarial examples. The attacker repeatedly perturbs the pixels of an image until the DNN misclassifies it, that is, assigns it to another class. The attacker is assumed to have full access to the internal details of the DNN-based image classifier, effectively treating it as a white box.

Consequently, the attacker can generate perturbations that cause the classifier to misclassify. This is feasible in part because the neural network architectures used in top-performing image classifiers, such as ResNet models [73], are widely known. Even if the attacker does not know the classifier’s parameters, they can build a reliable surrogate model of the classifier by sending queries and collecting the responses [74].
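The query-based surrogate idea [74] can be sketched as follows. The oracle here is a hypothetical linear model that returns only hard labels. The attacker samples inputs, records the oracle’s answers, and fits a logistic-regression surrogate to them. Real attacks use richer query strategies and models, so this only illustrates the principle.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical black-box oracle: the attacker can call it but sees only hard labels.
_SECRET_W, _SECRET_B = np.array([2.0, -1.0, 0.5]), -0.3


def oracle(X):
    return (X @ _SECRET_W + _SECRET_B > 0).astype(int)


def train_surrogate(X, y, lr=0.5, n_iter=2000):
    """Fit a logistic-regression surrogate to (query, response) pairs by gradient descent."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
        w -= lr * X.T @ (p - y) / len(y)
        b -= lr * np.mean(p - y)
    return w, b


queries = rng.normal(size=(500, 3))     # inputs chosen by the attacker
responses = oracle(queries)             # labels collected from the oracle
w_s, b_s = train_surrogate(queries, responses)

fresh = rng.normal(size=(2000, 3))
agreement = np.mean((fresh @ w_s + b_s > 0).astype(int) == oracle(fresh))
print(f"surrogate agrees with the oracle on {agreement:.1%} of fresh inputs")
```

Once the surrogate agrees closely with the oracle, white-box attacks can be run against the surrogate and their outputs transferred to the real model.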

In the common case, the adversary does not know the defense system and treats the detector as a black box. Nevertheless, we can also consider the rare scenario in which the intruder obtains the defense system and its gradients, for instance through corrupt employees or server bugs. In this case, the DNN classifier must be evaluated against white-box attacks [75].

In real-world scenarios, adversaries typically lack access to the model’s internal details, which service providers usually protect well. Furthermore, researchers have emphasized the importance of assessing model robustness against the transferability of adversarial examples [26,76]. Otherwise, attackers could use a more vulnerable model as a substitute to breach the defenses of the target model.

In some situations, the model’s internal details and defensive mechanisms may be inadvertently disclosed to the attacker, leading to a grey-box scenario. However, the adversary remains unaware of the defense’s specific parameters. In the extreme white-box scenario, the adversary knows both the oracle model and its defense mechanisms. This puts the attacker in an exceedingly powerful position, because attacks launched with such comprehensive knowledge of the system are very difficult to defend against.

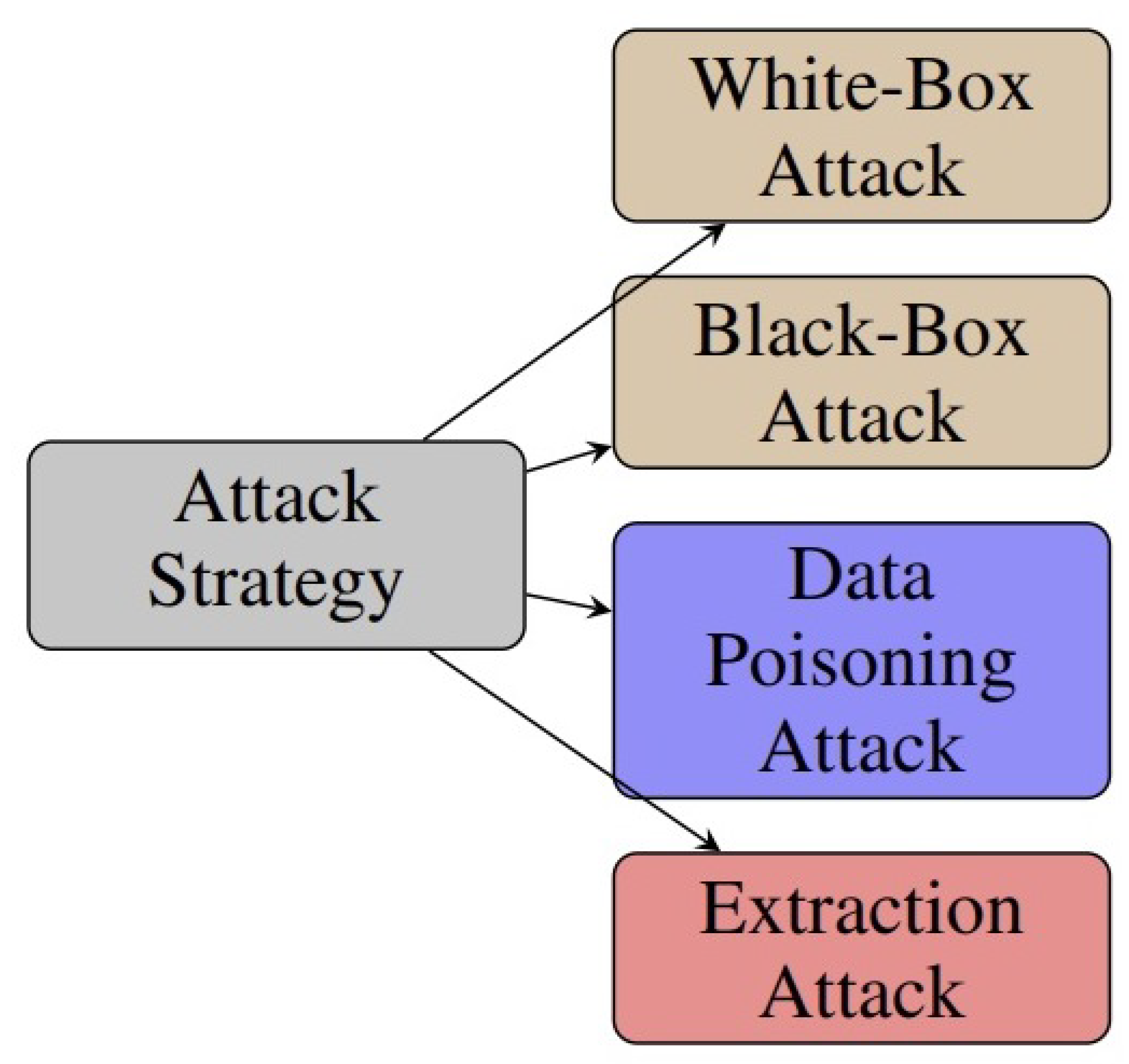

Figure 5 presents a taxonomy of adversarial attacks against DNNs. The attacks are grouped into four top-level branches: white-box, black-box, data poisoning, and extraction attacks. This taxonomy gives an organized summary of the attack types and helps researchers and practitioners understand the range of threats to DNN security.

Figure 5.

Taxonomy of the adversarial threat model.

4.1. Adversarial Degree of Knowledge

Knowledge is a fundamental aspect of any scientific endeavor. It plays a pivotal role in identifying vulnerabilities, developing defenses, and advancing the field of DNN security. Understanding whether an attacker can access a model’s internal details, such as its parameters or architecture, is crucial in assessing and mitigating potential threats.

4.1.1. White Box

The white-box threat model assumes a significantly more powerful attacker than the black-box scenario. In this setting, the attacker has extensive access to information, including input/output data and the target model’s internal details, and crafts attacks methodically by leveraging those details. Because of this unrestricted access to model information, white-box attacks are considerably harder to defend against. In our literature review, the Carlini and Wagner (C&W) attack [17] and the projected gradient descent (PGD) attack [73] emerge as particularly influential. Following the threat model of bit-flip-based adversarial weight attacks on quantized networks [77,78,79], we consider a white-box scenario in which the attacker has access to the model’s weights, gradients, and a portion of the test data. This threat model remains realistic: previous research has shown that attackers can obtain comparable information through side-channel attacks, such as the number of layers, weight sizes, and parameters [80,81,82,83]. However, the attacker is denied access to training-related information, including the training dataset and hyperparameters.
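A minimal sketch of PGD [73] under an L∞ constraint shows how white-box access is used. Each step follows the sign of the loss gradient with respect to the input. The result is then projected back onto the ε-ball around the original input and onto the valid pixel range. The toy logistic-regression model, ε, step size, and step count are assumptions for illustration. Against a DNN, `grad_fn` would come from automatic differentiation.

```python
import numpy as np


def pgd_linf(x, y, grad_fn, eps=0.1, alpha=0.02, steps=20):
    """Projected gradient ascent on the loss inside an L-inf ball of radius eps.

    grad_fn(x, y) returns the gradient of the loss w.r.t. the input, which is
    exactly the information white-box access provides.
    """
    x_adv = x.copy()
    for _ in range(steps):
        x_adv = x_adv + alpha * np.sign(grad_fn(x_adv, y))   # increase the loss
        x_adv = np.clip(x_adv, x - eps, x + eps)              # project onto the eps-ball
        x_adv = np.clip(x_adv, 0.0, 1.0)                      # stay in the valid pixel range
    return x_adv


# Toy white-box model (an assumption): logistic regression p(y=1 | x) = sigmoid(w.x + b).
rng = np.random.default_rng(0)
w, b = rng.normal(size=64), 0.0


def loss_grad(x, y):
    p = 1.0 / (1.0 + np.exp(-(x @ w + b)))
    return (p - y) * w            # gradient of binary cross-entropy w.r.t. x


x = rng.uniform(0.2, 0.8, size=64)
y = int(x @ w + b > 0)            # attack the model's own prediction
x_adv = pgd_linf(x, y, loss_grad)
print("logit before:", x @ w + b, "after:", x_adv @ w + b)
```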

4.1.2. Black Box

In this threat model, the attacker lacks knowledge of the target model’s internal details. However, the attacker can train an easily exploitable substitute model, craft adversarial examples against it, and then transfer these examples to the target classifier, also known as the oracle. The attacker possesses a training dataset with a distribution similar to that used to train the oracle. Training the substitute and the oracle on the same dataset emulates a worst-case scenario that could occur in real-world situations. However, the attacker knows neither the defensive mechanisms nor the precise architecture and parameters of the oracle.

In this threat scenario, the adversary can submit queries to the target model and analyze the resulting predictions. In other words, the attacker can observe the inputs and outputs of the target model but has no access to its internal components, including its architecture, gradients, or hyperparameters. The HopSkipJump (HSJ) attack [84] is one of the latest and most potent attacks in the black-box category. HSJ is an iterative method that relies exclusively on the outputs of the target model and needs no gradient information from the model itself. It estimates the direction of the model’s gradient from binary decisions near the decision boundary, which makes it particularly efficient in a black-box setting.
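HSJ [84] combines several components. One of them is a binary search along the segment between the original input and a known adversarial input, which locates the decision boundary using only hard-label decisions. The sketch below shows that single step under a hypothetical toy decision rule. It is not the full HSJ attack, which also estimates the gradient direction at the boundary and searches for a step size.

```python
import numpy as np


def boundary_binary_search(decide, x_orig, x_adv, tol=1e-6):
    """Locate the decision boundary on the segment from x_orig to x_adv.

    decide(x) -> True if x is classified adversarially; that hard label is the only
    feedback used. Returns a point just on the adversarial side, plus the query count.
    """
    lo, hi = 0.0, 1.0   # blend factor: 0 is the original input, 1 the adversarial one
    n_queries = 0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        n_queries += 1
        if decide((1 - mid) * x_orig + mid * x_adv):
            hi = mid    # still adversarial: move towards the original input
        else:
            lo = mid
    return (1 - hi) * x_orig + hi * x_adv, n_queries


def decide(x):
    """Toy hard-label model (an assumption): adversarial iff the first feature exceeds 0.5."""
    return bool(x[0] > 0.5)


x0 = np.array([0.0, 0.3])
x1 = np.array([1.0, 0.9])
x_boundary, n_queries = boundary_binary_search(decide, x0, x1)
print(x_boundary, n_queries)
```

Each query halves the interval, so the boundary is located to within `tol` in about log2(1/tol) queries.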

4.1.3. Grey Box

In this scenario, the attacker has access to some internal details of the model: the model’s parameters, architecture, and defense mechanism may be leaked to the attacker. As a result, the attacker can craft adversarial examples against the oracle itself without needing a substitute model. However, the specific parameters of the defensive mechanism remain undisclosed, so the effectiveness of the defense may still be preserved [31].

4.2. Adversarial Attack Scenarios

4.2.1. Attacks During Training Phase

An attacker can undermine an ML system by targeting its training dataset during training. In this strategy, referred to as a poisoning attack, the attacker tampers with the training dataset to alter the statistical characteristics of the training data. The attacker can mount a poisoning attack in the following ways:

- Data injection and modification: The attacker intentionally incorporates malicious samples into the training dataset or alters existing data points in a harmful way. These detrimental examples may be produced using the label noise method [85]. Once the training dataset is compromised, the resulting DNN model produces erroneous outputs [86].

- Logical corruption: The adversary disrupts the learning process of the DNN model, hindering its ability to learn accurately.
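The simplest form of label-noise poisoning is random label flipping. The sketch below is an assumption for illustration and not the specific method of [85]. It reassigns a chosen fraction of training labels to a different, randomly chosen class before training.

```python
import numpy as np


def flip_labels(y, fraction, n_classes, rng):
    """Label-flipping poisoning: move a fraction of training labels to a wrong class."""
    y_poisoned = y.copy()
    n_flip = int(round(fraction * len(y)))
    idx = rng.choice(len(y), size=n_flip, replace=False)
    # A random non-zero offset (mod n_classes) guarantees every chosen label changes.
    offsets = rng.integers(1, n_classes, size=n_flip)
    y_poisoned[idx] = (y[idx] + offsets) % n_classes
    return y_poisoned, idx


rng = np.random.default_rng(0)
y_clean = rng.integers(0, 10, size=1000)   # hypothetical 10-class labels
y_poisoned, flipped = flip_labels(y_clean, fraction=0.2, n_classes=10, rng=rng)
print((y_poisoned != y_clean).sum())   # 200
```

A model trained on `y_poisoned` instead of `y_clean` learns from corrupted supervision.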

4.2.2. Attacks During Testing Phase

In certain circumstances, an adversary may exploit features of the underlying classes that can be altered without affecting the input’s true classification [87]. During the testing phase, an attacker can execute two primary attack scenarios:

- Evasion attack: The adversary attempts to evade the model by crafting a malicious input sample that the ML model misclassifies. Most proposed adversarial attacks are of this type [88].

- Exploratory attack: During testing, the adversary gathers data about the learning system to gain insight into its model’s internal details or training data by creating adversarial samples, similar to side-channel attacks.

To train the attack model, the attacker is assumed to possess an auxiliary dataset that includes both authentic and fake images. Although many ready-made DeepFake image datasets and models are available, the attacker’s access to data is restricted to avoid an unrealistic worst-case assumption:

- Limited Dataset Size: the attacker lacks the resources to gather much public data, resulting in a relatively small dataset.

- Out-of-Distribution DeepFake: the attacker can only gather fake images produced by certain DeepFake techniques, so not all types of DeepFakes will be in the auxiliary dataset.

5. Attack Goal and Strategies

This section systematically explores the attack goals and strategies for DNNs used in various ML tasks. We discuss the attacker’s goal (privacy, integrity, or availability) and describe the strategies attackers follow to attack DNN models.

5.1. Attack Goal

Integrity attacks aim to introduce new features, alter existing ones, or bypass current features. Privacy attacks aim to extract private information from the ML model. Attackers carry out availability attacks to disrupt the intended operation of the system. Depending on the ML task (e.g., classification, policy learning, representation learning, or synthetic data generation), adversarial goals take the following forms:

5.1.1. Classification Task

- Untargeted Misclassification: The adversary aims to increase the DNN model’s misclassification rate by feeding it adversarial examples produced by an untargeted adversarial attack. Simply put, the attacker tries to make the model assign adversarial examples to any incorrect class.

- Source/Target Misclassification: The adversary aims to make the model classify inputs from a source class as a specific target class, which is achieved through targeted adversarial attacks. For example, by adding a trigger associated with a chosen target label to an input, the adversary misleads the victim DNN into predicting that target label instead of the correct one.
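The difference between the two goals appears directly in the sign of a single FGSM step [14]. This sketch assumes a toy linear softmax model with weights `W` and bias `b`. The untargeted step increases the loss of the true label. The targeted step decreases the loss of the chosen target label.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())   # subtract the max for numerical stability
    return e / e.sum()


def input_grad(W, b, x, label):
    """Gradient w.r.t. x of the cross-entropy loss for `label` (linear softmax model)."""
    p = softmax(W @ x + b)
    p[label] -= 1.0            # dLoss/dscores = softmax - one_hot(label)
    return W.T @ p


def fgsm_untargeted(W, b, x, true_label, eps):
    # Step up the loss of the true label: push the input away from the correct class.
    return x + eps * np.sign(input_grad(W, b, x, true_label))


def fgsm_targeted(W, b, x, target_label, eps):
    # Step down the loss of the chosen target label: pull the input towards that class.
    return x - eps * np.sign(input_grad(W, b, x, target_label))


rng = np.random.default_rng(0)
W, b = rng.normal(size=(5, 20)), np.zeros(5)   # toy 5-class linear model (an assumption)
x = rng.normal(size=20)
true = int(np.argmax(W @ x + b))
target = (true + 1) % 5
x_u = fgsm_untargeted(W, b, x, true, eps=0.5)
x_t = fgsm_targeted(W, b, x, target, eps=0.5)
print(softmax(W @ x + b)[true], softmax(W @ x_u + b)[true])
```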

5.1.2. Confidence Reduction

The adversary attempts to reduce the confidence of the DNN model’s predictions by increasing their ambiguity, even if the predicted class remains correct. This attack manipulates inputs to decrease the model’s certainty about its output. That degrades performance in practical applications where high-confidence predictions are critical, such as autonomous driving or medical diagnostics. Several researchers have explored confidence reduction and its connection with adversarial examples. For instance, adversarial attacks such as FGSM [14] and DeepFool [41] introduce perturbations that shrink the margin to the decision boundary, lowering the confidence of the model’s outputs.

5.1.3. Policy Learning Task

An adversary’s goal is to change an agent’s behavior so that it serves the adversary’s targeted objective.

Alternatively, the adversary’s goal may be to infer details about the model, the reward function, or other components of the deep RL (DRL) system. Adversaries can use these details to replicate the model or to attack it. The adversary’s ability to change the environment may also be limited to the parts of the environment they can modify.

5.1.4. Representation Learning Task

In representation learning tasks, the adversary aims to disrupt the learned latent representation. A model disruption attack introduces new samples into the training set, a manipulation attack changes existing samples, and a logic corruption attack adds a backdoor to the model. Representation learning also faces a broader threat from adversarial examples, that is, perturbed inputs crafted to mislead models during task learning.

5.1.5. Generate Synthetic Data Task

Attacks on the synthetic data generation task use adversarial methods against models that rely on synthetic data for training or testing. ML researchers often generate synthetic data to augment datasets, protect privacy, or compensate for unavailable real-world data. Adversaries may generate synthetic data that embeds adversarial patterns, or they may target models trained on synthetic data, ultimately degrading those models’ performance [89].

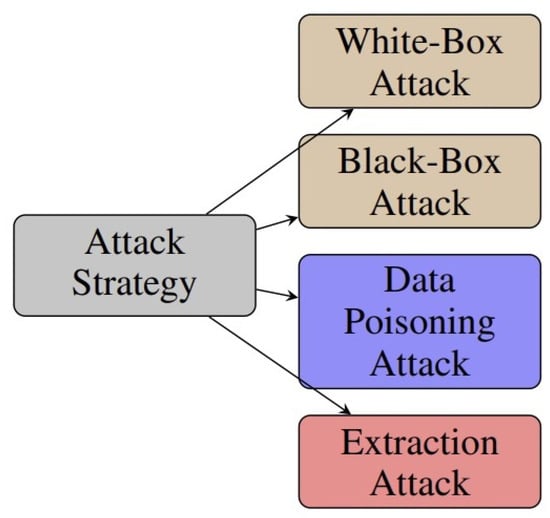

5.2. Attack Strategies

This part examines the latest adversarial attacks on DNNs in various ML tasks. Attack strategies can be divided into four types: white-box attacks, black-box attacks, poisoning attacks, and extraction attacks, as illustrated in Figure 6. Our main focus is threefold: to explain the attack scenario and how the attack problem is formulated, to give an overview of the algorithms attackers use to solve it, and to explain how each attack applies to different ML tasks.

Figure 6.

Taxonomy of attack strategies.

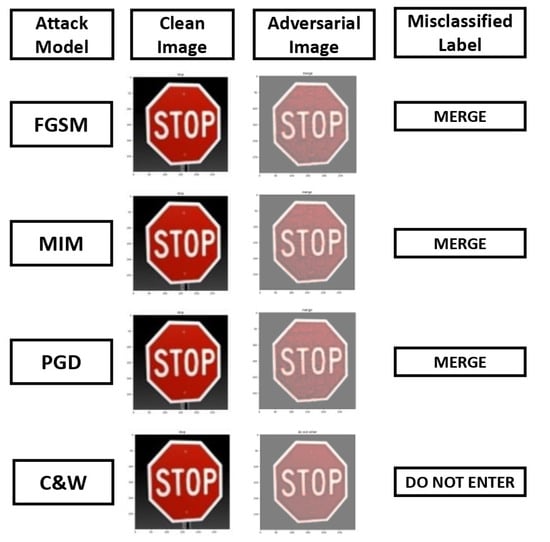

5.2.1. White-Box Attack

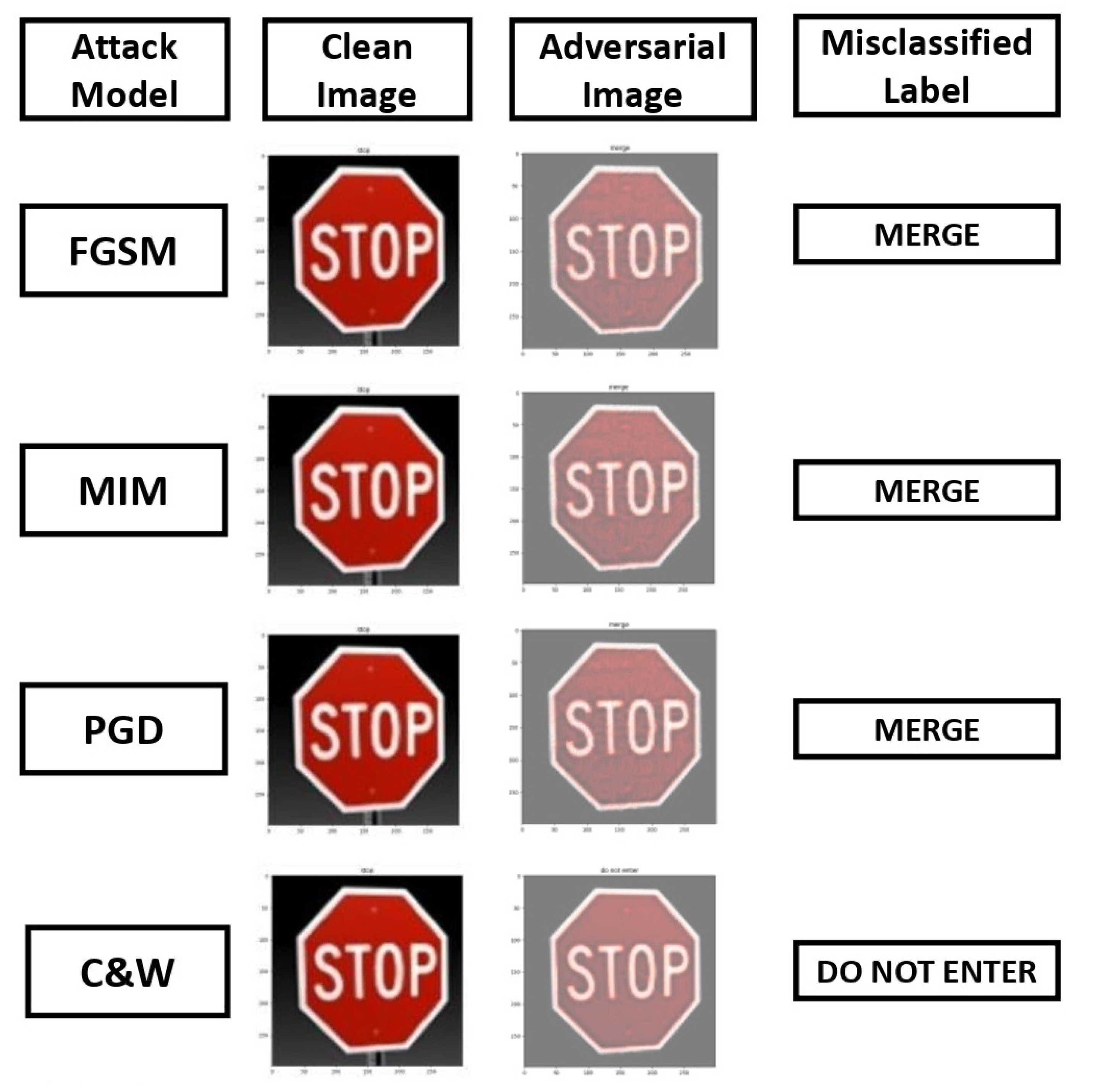

In white-box attacks, attackers can access the internal details of the target model, its training data, and its loss function. Szegedy et al. [6] demonstrated that DNNs are susceptible to adversarial attacks. An adversarial example can be created by adding a small perturbation to an image, which can deceive a DNN and reduce its accuracy. Adversarial examples crafted in this way are white-box adversarial attacks against DNNs. The perturbations are found by optimizing the input to maximize the prediction error. The attack solves the optimization problem formulated by Szegedy et al. [6] using the values of the model’s input and output layers. The attack can be applied to classification and policy learning tasks [82]. However, it cannot be applied to unsupervised learning tasks, as no testing phase is required. For classification, the attack tries to change the output label. For instance, slightly altering a benign traffic sign image can fool a self-driving vehicle’s traffic-sign recognition system into misclassifying one sign as another, such as a “Stop” sign as a “Yield” sign, as shown in Figure 7. For policy learning, the attack tries to change the best learned action.

Figure 7.

The impact of various attack models and image transformations on a sample traffic sign image, specifically a stop sign [90].

Researchers have proposed different algorithms to solve the attack problem, using various optimization techniques based on similarity measures between original and adversarial examples. Some of these algorithms adapt the original problem proposed in [6].
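For reference, the problem these algorithms adapt can be written as in Szegedy et al. [6]. Here x ∈ [0,1]^m is the input image, r the perturbation, f the classifier, and l a target label different from f(x). The exact problem is hard, so [6] approximates it with the second, box-constrained form. That form is solved with L-BFGS, using a line search over the penalty constant c > 0 to find the smallest c whose minimizer satisfies f(x + r) = l:

```latex
\begin{aligned}
&\min_{r}\ \|r\|_2 && \text{s.t. } f(x + r) = l,\ \ x + r \in [0,1]^m \\
&\min_{r}\ c\,|r| + \operatorname{loss}_f(x + r,\, l) && \text{s.t. } x + r \in [0,1]^m
\end{aligned}
```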

- Elastic-Net Attacks to DNNs (EADs): This work adds elastic-net regularization, which combines L1 and L2 penalty functions, to the optimization problem of finding the minimal perturbation that causes a DNN to misclassify an image as a target class [91]. It shows that EAD generalizes the L2-based C&W attack and crafts more effective L1-oriented adversarial examples, with a focus on targeted attacks. It also demonstrates that EAD achieves similar or better attack performance than C&W and other attacks in breaking undefended and defensively distilled DNNs. EAD creates adversarial examples using the iterative shrinkage-thresholding algorithm [92].

- Targeted Universal Adversarial Perturbations: This research proposes a simple iterative method to generate targeted universal adversarial perturbations (UAPs) for image classification tasks. It combines the straightforward iterative approach for producing non-targeted UAPs with the fast gradient sign method for creating targeted adversarial perturbations for a given input. UAPs are imperceptible perturbations that cause a DNN to misclassify most input images into a specific target class. The results demonstrate that the technique produces almost imperceptible UAPs that achieve a high ASR on both known and unknown images [93].

- Brendel and Bethge Attack: This attack partitions the input space into regions based on the estimated decision boundary and uses an Lp norm as the similarity distance. An iterative algorithm then refines the perturbation additively, computing the best step in each iteration by solving a quadratic trust-region optimization problem. This attack improves the performance of generating adversarial examples [94].

- Shadow Attack: This attack imposes additional constraints on the standard problem and achieves a high success rate across a set of datasets. By applying a range of penalties, the shadow attack promotes the imperceptibility of the resulting perturbation to the human eye while limiting the perturbation size [95].

- White-Box Bit-Flip-Based Weight Attack: The authors propose an attack paradigm that modifies the weight bits of a DNN in the deployment stage [96]. They formulate a general optimization problem that balances effectiveness and stealthiness objectives under a constraint on the number of flipped bits. They also present two instances of this formulation with different perturbation purposes. A single-sample attack misclassifies a specific sample into a target class by flipping a few bits in memory. A triggered-samples attack misclassifies samples embedded with a particular trigger by flipping a few bits and learning the trigger. They use the alternating direction method of multipliers (ADMM) to solve the optimization problems and demonstrate the superiority of their methods in attacking DNNs.

- Adversarial Distribution Searching-Driven Attack (ADSAttack): Wang et al. [97] proposed ADSAttack, which generates adversarial examples by searching for adversarial distributions in a hidden space learned without supervision. The technique trains an autoencoder to capture the underlying features of the input data, identifies the distribution that maximizes the misclassification of the target DNN, and finally uses the decoder to generate adversarial examples from this distribution. The algorithm can produce adversarial examples that are more diverse and effective than existing ones and that transfer to other models and defense mechanisms. It may also have applications in studying the security, privacy, and robustness of DNNs.

- Targeted Bit-Flip Adversarial Weight Attack (T-BFA): T-BFA is a novel method for attacking DNNs by altering a small number of weight bits stored in computer memory. The paper proposed three types of T-BFA attacks: N-to-1 attack, which forces all inputs from N source classes to one target class; 1-to-1 attack, which misclassifies inputs from one source class to one target class; and 1-to-1 stealth attack, which achieves the same objective as 1-to-1 attack while preserving the accuracy of other classes. The paper also shows the practical feasibility of T-BFA attacks in a real computer system by exploiting existing memory fault injection techniques, such as the row-hammer attack, to flip the identified vulnerable weight bits in dynamic random access memory (DRAM). The paper discusses the limitations and challenges of T-BFA attacks, such as the dependency on the bit-flip profile, the trade-off between attack stealthiness and strength, and the resistance of some defense techniques, such as weight quantization, binarization, and clustering [98].

- Invisible Adversarial Attack: Wang et al. [99] introduced the invisible adversarial attack, an adaptive method that exploits properties of the human visual system (HVS) to create realistic adversarial examples. The technique comprises two adaptive attacks: coarse-grained and fine-grained. The coarse-grained attack imposes a spatial constraint on the perturbations, adding them only to cluttered regions of an image where the HVS is less sensitive. The fine-grained attack uses a metric called just noticeable distortion (JND), which measures the noticeable distortion of each pixel. JND better simulates the perceptual redundancy of the HVS and sets pixel-wise penalty policies for the perturbations. The algorithm can be applied to adversarial attack methods that manipulate pixel values, such as FGSM [14], the Basic Iterative Method (BIM) [100], and C&W [17].

- Type I Adversarial Attack: Tang et al. [101] introduced a method to create Type I adversarial examples, in which the appearance of an input image changes significantly while the classifier’s original prediction is maintained. By using a Gaussian prior and incorporating label information into the latent space, the method learns identifiable features. In this latent space, the classifier is attacked by modifying the latent variables through gradient descent, and a decoder maps the revised latent variables back to images. A discriminator also assesses the distribution of the manifold in the latent space, enabling successful Type I attacks. The method produces adversarial images that resemble the target images but are still classified with the original labels.

- Generative Adversarial Attack (GAA): He et al. [102] proposed the GAA, a novel Type I attack method that uses a generative adversarial network (GAN) to exploit the distribution mapping from the source domain of multiple classes to the target domain of a single class. The GAA algorithm operates under the assumption that DL models are susceptible to similar features, which means that examples with different appearances may exhibit similar features in the resulting feature space of the model. This algorithm aims to generate adversarial examples that closely resemble the target domain while evoking the original predictions of the target model. GAA updates the GAN generator and the discriminator during each iteration using a custom loss function comprising three terms: adversarial loss, feature loss, and latent loss. The adversarial loss aims to deceive the discriminator with the generated instances, the feature loss maintains the similarity between the original and created features, and the latent loss adjusts the latent vector to diversify the generated examples. GAA has generated adversarial images that resemble the target images.

- Average Gradient-Based Adversarial Attack: Proposed by Zhang et al. [103], this attack uses the gradients of the loss function over a dynamic set of adversarial examples. Existing gradient-based adversarial attacks use only the gradient of the current example; this attack instead aims for higher success rates and better transferability. In each iteration, the algorithm builds a dynamic set of adversarial examples from the gradients of previous iterations. It then computes the average gradient of the loss function with respect to this set and the current example. This average gradient determines the perturbation added to the original example. Compared with competing methods, the attack produced more effective and diverse adversarial examples and outperformed them on various datasets and models. This makes it a practical and robust method for evaluating the robustness of DNNs.

- Enhanced Ensemble of Identical Independent Evaluators (EIIE) Method: The enhanced EIIE approach, introduced by Zhang et al. [104], builds on the EIIE topology by incorporating information on the best bids and asks for portfolio management. The core idea of the EIIE topology is a neural network that assesses the potential growth of each asset in the portfolio based on that asset’s data. The enhanced method adds two inputs for each asset: the best bid price and the best ask price. These inputs provide precise market information that helps the network make better portfolio allocation decisions. This work demonstrated the vulnerability of an RL agent in portfolio management. An attack could collapse the trading strategy, creating an opportunity for the attacker to profit.

- Transformed Gradient (TG) Method: This white-box approach generates adversarial perturbations by transforming the gradient of the loss function with a predefined kernel. The TG technique aims to reduce the sensitivity of adversarial examples to specific regions of the target model and thereby improve their transferability to other models. It is compatible with any gradient-based attack method, such as FGSM or PGD. TG generates adversarial examples that transfer to other models more easily than baseline approaches, especially when the targets are defended models. This makes it an efficient way to improve the transferability of adversarial examples [105].
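EAD’s use of the iterative shrinkage-thresholding algorithm can be sketched as follows. The sketch assumes a generic differentiable attack loss supplied as a gradient function, here a hypothetical toy linear loss. It uses the standard ISTA form, in which the L1 threshold is scaled by the step size; the exact step-size and threshold schedule in [91] may differ. The projected soft-thresholding step keeps many pixels exactly at their original values, which is the source of EAD’s L1-sparse perturbations.

```python
import numpy as np


def projected_shrinkage(z, x0, thresh):
    """Soft-threshold (z - x0) elementwise, then project the result onto [0, 1]."""
    diff = z - x0
    return np.where(diff > thresh, np.minimum(z - thresh, 1.0),
                    np.where(diff < -thresh, np.maximum(z + thresh, 0.0), x0))


def ead_ista(x0, attack_loss_grad, c=1.0, beta=0.01, step=0.01, n_iter=100):
    """ISTA for min c*f(x) + ||x - x0||_2^2 + beta*||x - x0||_1 over the box [0, 1]^p."""
    x = x0.copy()
    for _ in range(n_iter):
        grad = c * attack_loss_grad(x) + 2.0 * (x - x0)   # gradient of the smooth terms
        x = projected_shrinkage(x - step * grad, x0, step * beta)
    return x


# Hypothetical attack loss f(x) = -w.x (pushing w.x up), whose gradient is -w.
rng = np.random.default_rng(0)
x0 = rng.uniform(0.2, 0.8, size=50)
w = rng.normal(size=50)
x_adv = ead_ista(x0, lambda x: -w, c=1.0, beta=0.5, step=0.05, n_iter=200)
print(np.count_nonzero(x_adv == x0), "of", x0.size, "pixels left unchanged")
```

Pixels whose loss gradient is too weak to exceed the L1 threshold never leave their original values, while the remaining pixels move in the direction that helps the attack.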

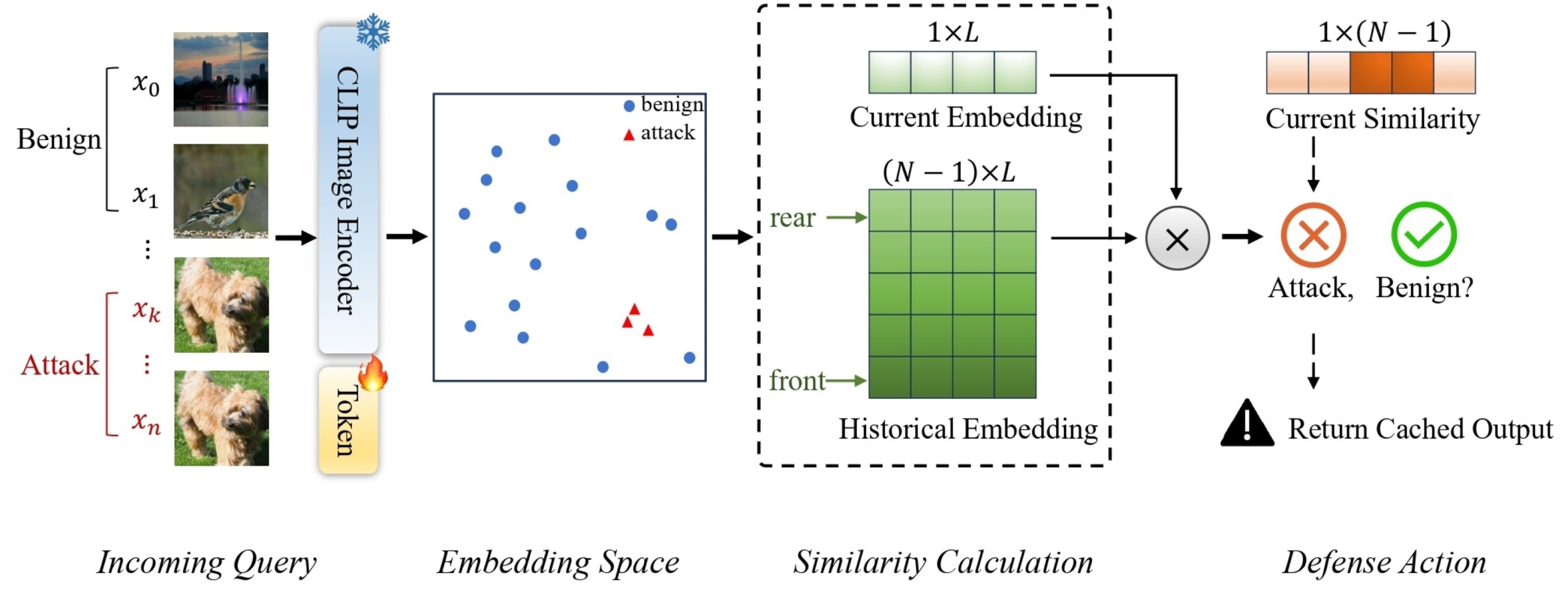

5.2.2. Black-Box Attack

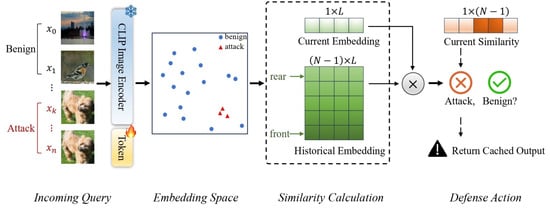

In a black-box attack, attackers cannot access the internal details of the target model. The attacker can interact with the model only by querying it and observing its output (e.g., predictions or confidence scores). Despite this limited access, the attacker can still craft adversarial examples using techniques such as transferability or query-based methods. In a query-based attack, for example, the adversary sends multiple inputs to a deployed model (e.g., an ML API) and observes the outputs to infer decision boundaries, as shown in Figure 8. The adversary then uses these observations to craft adversarial examples. Black-box and white-box adversarial attacks against DNNs share the same objective, formulated by Szegedy et al. [6], and compromise the same tasks, but they solve the problem with different approaches.

Figure 8.

Proposed AdvQDet framework [106].

In the white-box approach, the attacker modifies the image pixels, observes the DNN's output, and uses gradient information together with a similarity (distance) metric to keep the modification of the image small.

In a black-box attack, by contrast, the attacker has no gradient access and instead steers the modification of the image through iterative changes that maximize or minimize an objective function computed from the model's outputs.
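Such output-only iterative optimization can be sketched with a zeroth-order (finite-difference) gradient estimate. This is a minimal illustration, assuming a hypothetical score oracle `predict_proba` backed by a toy two-class logistic model; symmetric coordinate-wise differences are one common zeroth-order estimator, not a method taken from the works cited in this section.

```python
import math
from typing import Callable, List

Vector = List[float]


def predict_proba(x: Vector) -> List[float]:
    """Hypothetical black-box model: a two-class logistic scorer with hidden weights."""
    w, b = [2.0, -1.0, 0.5], 0.1
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p1 = 1.0 / (1.0 + math.exp(-z))
    return [1.0 - p1, p1]


def attack_objective(x: Vector, true_label: int) -> float:
    """Quantity to maximize: negative log-probability of the true class."""
    return -math.log(max(predict_proba(x)[true_label], 1e-12))


def estimate_gradient(f: Callable[[Vector], float], x: Vector, h: float = 1e-4) -> Vector:
    """Symmetric finite differences: two queries per input coordinate."""
    grad = []
    for i in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[i] += h
        x_minus[i] -= h
        grad.append((f(x_plus) - f(x_minus)) / (2 * h))
    return grad


def zeroth_order_attack(x: Vector, true_label: int, eps: float,
                        step: float, iters: int) -> Vector:
    """Signed ascent on the estimated gradient, projected onto the L-inf ball of radius eps."""
    def f(v: Vector) -> float:
        return attack_objective(v, true_label)

    x_adv = list(x)
    for _ in range(iters):
        g = estimate_gradient(f, x_adv)
        x_adv = [xa + step * ((gi > 0) - (gi < 0)) for xa, gi in zip(x_adv, g)]
        x_adv = [min(xo + eps, max(xo - eps, xa)) for xo, xa in zip(x, x_adv)]
    return x_adv


x = [0.2, 0.1, 0.0]
x_adv = zeroth_order_attack(x, true_label=1, eps=0.3, step=0.1, iters=5)
```

Each iteration costs 2d queries for a d-dimensional input, which is why practical score-based attacks replace exhaustive coordinate estimates with random directions or evolutionary search.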

- Square Attack: This black-box adversarial attack operates under both the $\ell_\infty$ and $\ell_2$ norms and does not depend on local gradient information, which makes it resistant to gradient masking. It relies on random search, a classic iterative optimization approach proposed by Rastrigin in 1963 [107]. In particular, square attack results can provide more accurate assessments of the robustness of models that exhibit gradient masking than traditional white-box attacks do [108].

- One-Pixel Attack: Each candidate perturbation encodes the location of a single pixel together with its new value. The attack starts from a population of such candidates, generates new candidates by recombining the components of existing ones, and uses the differential evolution optimization algorithm to keep the candidates that best meet the attack target (e.g., misclassification into a chosen class) [109].

- Multilabel Adversarial Examples–Differential Evolution (MLAE-DE): Jiang et al. [110] introduced MLAE-DE, an iterative method that uses differential evolution (DE), a popular population-based optimization algorithm, to create multilabel adversarial examples capable of deceiving DNNs. The goal is to make a DNN misclassify inputs from one or more source classes into a target class. The approach improves on standard DE by reducing the number of required fitness evaluations while enhancing attack performance. MLAE-DE is a black-box approach that generates adversarial examples using only model outputs, without accessing model parameters. It effectively creates adversarial images that resemble the target images while eliciting the original prediction of the targeted model. MLAE-DE has proven to be a valuable and efficient technique for generating multiple adversarial examples on large-scale image datasets.

- Black-box Attack Method Based on Successor Representation: Cai et al. [111] introduced SR-CRPA, a black-box strategy that manipulates the rewards a deep RL agent receives from the environment during training. Its developers assumed that the adversary has access to the state, action, and reward information of both the agent and the environment. The approach uses a pre-trained neural network to learn the successor representation (SR) of each state, which indicates the expected future visitation frequency of states. At each time step, SR-CRPA uses the SR value of the current state-action pair to decide whether to attack and how to subtly perturb the reward. With only a few attacks, SR-CRPA prevents the agent from learning the optimal policy. Overall, SR-CRPA is a discreet and effective algorithm for poisoning the rewards of the target agent.

- Dual-Stage Network Erosion (DSNE): Duan et al. [112] introduced DSNE, a black-box attack that applies network erosion to a source model to create adversarial samples. The method assumes that the adversary has access to the internal components of the source model but not to the target model. It modifies the source model's features to generate diverse virtual models, which are combined through a longitudinal ensemble across iterations. In residual networks, DSNE biases the output of each residual block towards the skip connection to reveal more transferable information. This enhances the transferability of adversarial examples at comparable computational cost. The results of the DSNE attack indicate that DNN structures remain vulnerable and that network security can be improved through better structural design.

- Serial Minigroup Ensemble Attack (SMGEA): The SMGEA attack, developed by Z et al. [113], is a transfer-based black-box technique that generates adversarial examples against a set of source models and then transfers them to various target models. The method assumes that the attacker can access multiple pre-trained white-box source models but not the target models. It divides the source models into several “minigroups” and uses three new ensemble techniques to improve transferability within each group. SMGEA recursively accumulates “long-term” gradient memories from the previous minigroup and transfers them to the next one, which preserves the adversarial information already acquired and enhances intergroup transfer.

- CMA-ES-Based Adversarial Attack on Black-Box DNNs: Kuang et al. [114] presented a black-box attack based on the covariance matrix adaptation evolution strategy (CMA-ES) that creates adversarial samples for image classification. The attacker has access only to the input and output of the DNN model, namely the top-K labels and their confidence scores. The attack models the adversarial perturbation with a Gaussian distribution and updates the mean and covariance of this distribution according to the quality of the sampled perturbations. In every iteration, it generates a set of perturbed images and uses the most successful ones to update the distribution.

- Subspace Activation Evolution Strategy (SA-ES): Li et al. [115] introduced SA-ES, an algorithm that creates adversarial samples for DNNs using zeroth-order optimization. It assumes that the adversary can only query the model for the softmax probabilities of an input, without direct access to the model. SA-ES uses a subspace activation technique and a block-inactivation technique to detect sensitive regions of the input image that could alter the model's output. In every iteration, it uses an evolution strategy to move the distribution parameters of the perturbations towards the optimal direction. SA-ES can efficiently find high-quality adversarial examples with a limited number of queries, making it a reliable and effective approach for attacking models in both digital and physical settings.

- Adversarial Attributes: Wei et al. [116] proposed the adversarial attributes attack, which creates adversarial examples for image classifiers by changing semantic attributes of the input image. The technique employs a pre-existing attribute predictor to analyze the visual attributes of each image, such as hair color. In every round, the attack identifies the most impactful attributes and merges them with the original image to generate the perturbation. By using evolutionary computation to solve a constrained optimization problem, adversarial attribute attacks can achieve a high success rate and remain undetected with only a few queries.

- Artificial Bee Colony Attack (ABCAttack): Cao et al. [117] presented ABCAttack, which creates adversarial examples for image classifiers using the artificial bee colony (ABC) optimization algorithm. It assumes that the attacker can only query the model for the softmax probabilities of an input, without access to the model itself. In each iteration, ABCAttack produces a set of modified images and keeps the best ones to guide the next round of search. It can identify the changes that most effectively cause the target model to misclassify input images while staying within a fixed query budget. ABCAttack has proven effective at fooling black-box image classifiers while remaining efficient in its use of queries.

- Sample-Based Fast Adversarial Attack Method: Wang et al. [118] introduced a sample-based fast adversarial attack that generates adversarial examples for image classifiers from data samples in a black-box setting. The technique assumes that the adversary can obtain only data samples, not the model parameters or gradients. Principal component analysis is used to identify the main differences between categories and to compute a discrepancy vector. A targeted adversarial sample with minor changes is then created by a bisection line search along the vector connecting the current class to the target class.

- Attack on Attention (AoA): Chen et al. [119] developed AoA, a black-box technique that generates adversarial examples for DNNs by manipulating the attention heatmaps of input images. The attack assumes that the attacker can only query the model for the softmax probabilities of an input, without access to the model itself. It uses a pre-trained attention predictor to learn an attention heatmap for each image, indicating the regions on which the model focuses. In each iteration, AoA modifies these heatmaps by adding or subtracting pixel values to create the perturbation.
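The random-search principle behind the Square Attack [108] can be illustrated with a simplified score-based loop. This sketch assumes a hypothetical toy linear scorer over a small single-channel grid and an $\ell_\infty$ budget `eps`; each step proposes setting a randomly placed square window of the perturbation to +eps or -eps and keeps the proposal only if the margin of the true class decreases. The window-size schedule, initialization, multi-channel handling, and [0, 1] pixel clipping of the original method are omitted.

```python
import random
from typing import Callable, List, Tuple

Grid = List[List[float]]


def linear_margin(weights: Grid, x: Grid, bias: float) -> float:
    """Hypothetical black-box score: a positive value means the true class is still predicted."""
    return sum(w * v for w_row, x_row in zip(weights, x) for w, v in zip(w_row, x_row)) + bias


def add(x: Grid, delta: Grid) -> Grid:
    return [[xv + dv for xv, dv in zip(xr, dr)] for xr, dr in zip(x, delta)]


def square_random_search(query: Callable[[Grid], float], x: Grid, eps: float,
                         size: int, iters: int, seed: int = 0) -> Tuple[Grid, float]:
    """Simplified L-inf random search with square-shaped updates, using only score queries."""
    rng = random.Random(seed)
    h, w = len(x), len(x[0])
    delta = [[0.0] * w for _ in range(h)]  # every entry stays in {-eps, 0, +eps}
    best = query(x)
    for _ in range(iters):
        if best <= 0:  # true class no longer predicted: stop querying
            break
        r, c = rng.randrange(h - size + 1), rng.randrange(w - size + 1)
        s = rng.choice([-1.0, 1.0])
        cand = [row[:] for row in delta]
        for i in range(r, r + size):
            for j in range(c, c + size):
                cand[i][j] = s * eps
        score = query(add(x, cand))
        if score < best:  # greedy acceptance, as in random search
            best, delta = score, cand
    return add(x, delta), best


W = [[1.0] * 4 for _ in range(4)]
X = [[0.5] * 4 for _ in range(4)]
x_adv, final_margin = square_random_search(lambda g: linear_margin(W, g, -7.0),
                                           X, eps=0.1, size=2, iters=500)
```

Because every accepted proposal only overwrites entries with plus or minus eps, the perturbation satisfies the $\ell_\infty$ constraint by construction, and no gradient is ever requested.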

5.2.3. Poisoning Attack

Typically, an attacker can only contaminate a defender's dataset, without modifying the existing training data ($\mathcal{D}_c$). The attacker can introduce poisoned data, actively or passively, to manipulate the model during retraining so that it misclassifies specific test data. This situation may arise when the defender collects data from various sources, some of which may be untrustworthy (e.g., data scraped from the public Internet) [120]. When the attacker can also manipulate test inputs by inserting a trigger that causes misclassification, the attack is known as a backdoor attack. Such attacks apply to all ML tasks that learn from data sources: in classification tasks, the attacker's goal is to cause test data to be misclassified [121]; in policy learning attacks, the aim is to modify agent behavior [122]; and in synthetic data generation attacks, the attacker aims to manipulate the latent code [123].

Figure 9 shows clean traffic signs alongside backdoored versions altered with various triggers, such as a yellow square, a bomb sticker, or a flower.

Figure 9.

Examples of backdoored traffic signs and their impact on classification accuracy [20].
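The backdoor setting described above (poisoned samples added to the training set, plus a trigger applied at test time) can be sketched as follows. This is a minimal illustration, assuming small grayscale images stored as nested lists, a hypothetical 2×2 bright patch as the trigger, and a hypothetical target label; it adds new poisoned copies rather than editing existing samples, matching the assumption that the attacker cannot modify the existing training data.

```python
import random
from typing import List, Tuple

Image = List[List[float]]
Sample = Tuple[Image, int]


def apply_trigger(img: Image, value: float = 1.0, size: int = 2) -> Image:
    """Return a copy of img with a bright square stamped in the bottom-right corner (hypothetical trigger)."""
    out = [row[:] for row in img]
    h, w = len(out), len(out[0])
    for i in range(h - size, h):
        for j in range(w - size, w):
            out[i][j] = value
    return out


def poison_dataset(clean: List[Sample], target: int, n_poison: int, seed: int = 0) -> List[Sample]:
    """Append n_poison triggered copies relabeled as `target`; existing samples are left untouched."""
    rng = random.Random(seed)
    picks = rng.sample(range(len(clean)), n_poison)
    poison = [(apply_trigger(clean[i][0]), target) for i in picks]
    return list(clean) + poison


img = [[0.0] * 4 for _ in range(4)]
clean = [(img, 0)] * 10
mixed = poison_dataset(clean, target=7, n_poison=3)
```

At test time the attacker calls `apply_trigger` on an arbitrary input; a model trained on `mixed` is intended to associate the patch with the target label while behaving normally on clean inputs.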

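The goal of the attacker can be presented as:

$$\text{find } \mathcal{D}_p \ \text{ such that } \ \theta^{*} = \arg\min_{\theta} \sum_{(x, y) \in \mathcal{D}_c \cup \mathcal{D}_p} \mathcal{L}\big(f_{\theta}(x), y\big)$$

where $\theta^{*}$ is the attacker's desired model parameter, which realizes the attacker's objectives; $\mathcal{L}$ is the loss function; $f_{\theta}$ is the model with parameters $\theta$; $\mathcal{D}_c$ is the defender's clean training data; and $\mathcal{D}_p$ is the poison data injected by the attacker. To achieve this objective, the following algorithms infer the poison data $\mathcal{D}_p$ from the defender's clean data: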

- Feature Collision Attack: Feature collision attacks are a form of targeted data poisoning. The aim is to modify the training dataset so that the model misclassifies a specific target instance from the test set into the base class. Feature collision techniques achieve this by perturbing training images from the base class so that their representations in feature space move closer to that of the target [124].

- Label Flipping: Label-flipping attacks change the labels assigned to training samples while leaving the inputs themselves unchanged. Although they are not clean-label attacks, they leave no easily detectable artifacts in the inputs that the victim could recognize [125].

- Influence Functions: Influence functions estimate how a slight modification of the training data affects the model parameters learned during training. Attackers can use them to craft poisoning examples by approximating solutions to the bilevel formulation of poisoning [126].

- TensorClog: Shen et al. [127] introduced TensorClog, a black-box attack that inserts perturbations into the input data of DNNs to reduce their effectiveness and thereby protect user privacy. TensorClog assumes that the attacker can access a substantial amount of user data but not the model parameters or gradients. The approach uses a pre-trained frequency predictor to analyze the frequency domain of each input and capture its spectral features. On each iteration, TensorClog modifies the frequency domain of the input by adding or subtracting pixel values to create the perturbation. The attack can find perturbations that make the target model converge to a higher loss and lower accuracy using fewer queries, making it a covert and efficient technique for corrupting the input data of DNNs.

- Text Classification: Zhang et al. [128] presented a method for identifying vulnerabilities in NLP models with limited information and showed that universal attack triggers exist. Their approach has two stages. First, in a black-box scenario, they extract harmful terms from adversarial samples to build a knowledge base. Second, they create universal attack triggers that alter the predicted outcomes for a set of samples. Inserting a generated trigger into any clean input can significantly decrease the prediction accuracy of the NLP model, potentially to nearly zero.
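The feature-collision item above can be written as the objective $\min_x \lVert f(x) - f(t)\rVert_2^2 + \beta \lVert x - b\rVert_2^2$, where $t$ is the target test input, $b$ is a base-class image, $f$ is the feature extractor, and $\beta$ trades off feature closeness against visual similarity to the base. The sketch below minimizes it by plain gradient descent, assuming a hypothetical linear feature extractor $f(x) = Wx$ so the gradient has a closed form; it is a simplification, not the optimization procedure of any specific cited work.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def features(W: Matrix, x: Vector) -> Vector:
    """Hypothetical linear feature extractor f(x) = W x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def sq_dist(a: Vector, b: Vector) -> float:
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def feature_collision(W: Matrix, target: Vector, base: Vector,
                      beta: float = 0.1, lr: float = 0.05, steps: int = 200) -> Vector:
    """Gradient descent on ||f(x) - f(t)||^2 + beta * ||x - b||^2, starting from the base image."""
    f_t = features(W, target)
    x = list(base)
    for _ in range(steps):
        diff = [a - b for a, b in zip(features(W, x), f_t)]  # f(x) - f(t)
        grad = [
            2 * sum(W[i][j] * diff[i] for i in range(len(W)))  # 2 W^T (f(x) - f(t))
            + 2 * beta * (x[j] - base[j])                       # 2 beta (x - b)
            for j in range(len(x))
        ]
        x = [x_j - lr * g_j for x_j, g_j in zip(x, grad)]
    return x


W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
t, b = [1.0, 1.0, 0.0], [0.0, 0.0, 1.0]
poison = feature_collision(W, t, b)
```

The resulting `poison` keeps the base label, so it looks like a correctly labeled training sample, yet its features sit close to the target's; the coordinate that the feature extractor ignores stays at its base value because only the $\beta$ term acts on it.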

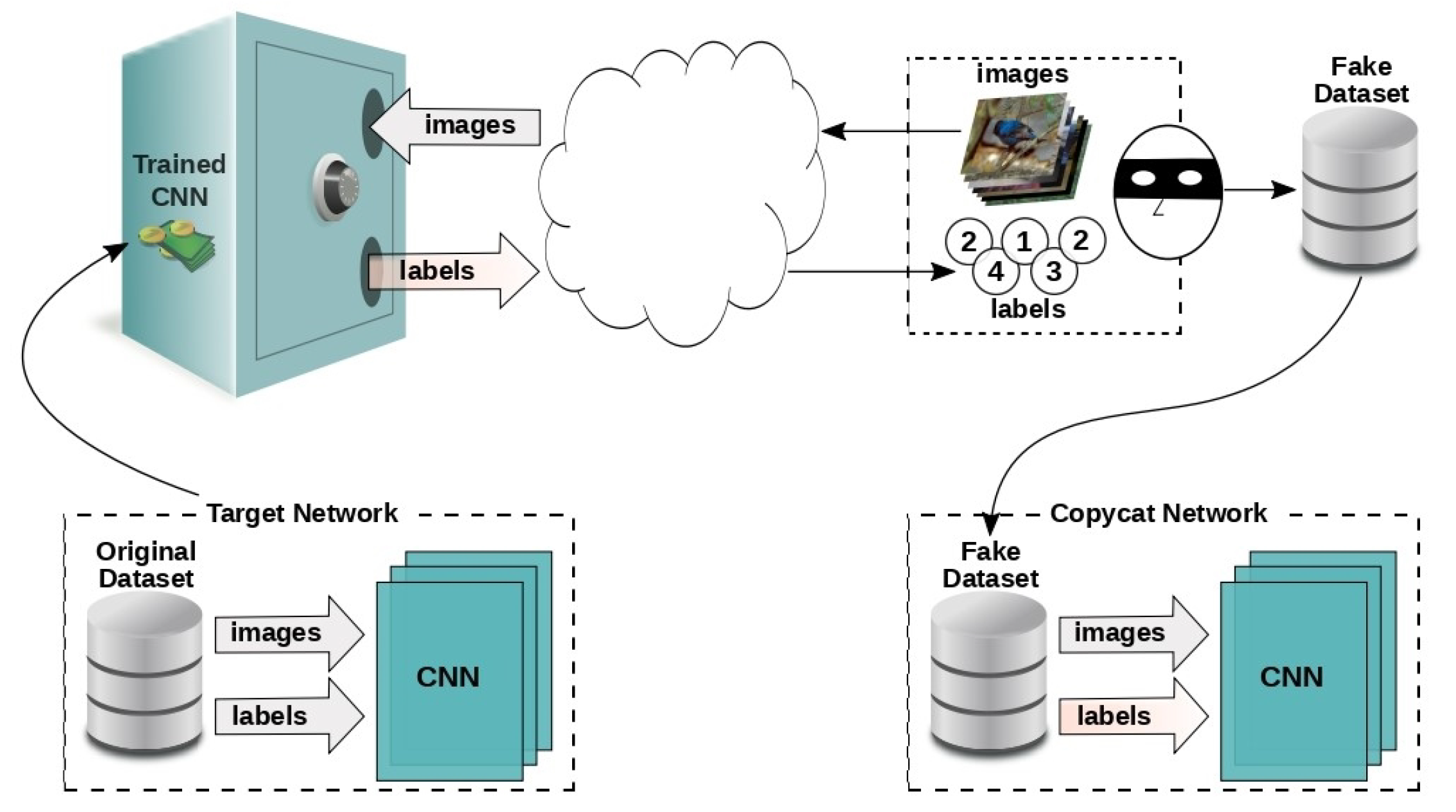

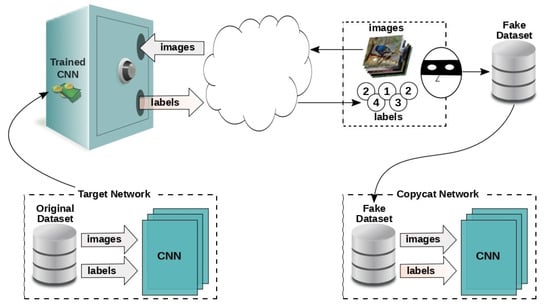

5.2.4. Extraction Attacks

Correia-Silva et al. [129] proposed replicating a target network using only clean images as queries. The method involves two main steps: creating a fake dataset and training the copycat network. The adversary assembles the fake dataset from multiple images, drawn either from the target network's problem domain or from a different image collection, depending on their access to the specific model. The original labels of these images are irrelevant and are discarded. In this stage, the attacker aims to learn how the target network classifies images: the new dataset is fed into the target model, and the labels it assigns to the new images, called stolen labels, are recorded. The adversary then exploits these outputs, including the classifier's minor vulnerabilities, to train another network on the same images so that its results resemble those of the target model.

Figure 10 illustrates the workflow of a model-stealing attack conducted on a black-box neural network (referred to as the “target network”). The target network is initially trained on a confidential dataset and is deployed as a public API. This API allows users to submit input images and receive corresponding class labels as output.

Figure 10.

Model stealing process [90].
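The two steps of the copycat procedure (labeling a fake dataset through queries, then training a substitute on the stolen labels) can be sketched as follows. This is a minimal illustration, assuming a hypothetical hard-label `target_api` (a hidden 2-D linear classifier), uniformly sampled points standing in for the fake dataset, and a perceptron as the copycat; the original work trains a deep network on images, which this toy does not attempt to reproduce.

```python
import random
from typing import Callable, List, Tuple

Point = Tuple[float, float]


def target_api(p: Point) -> int:
    """Hypothetical deployed model: the attacker only ever sees the returned label."""
    return int(1.5 * p[0] - p[1] + 0.2 > 0)


def build_fake_dataset(n: int, seed: int = 0) -> List[Point]:
    """Step 1a: unlabeled query points; any labels they originally had would be discarded."""
    rng = random.Random(seed)
    return [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n)]


def steal_labels(api: Callable[[Point], int], data: List[Point]) -> List[Tuple[Point, int]]:
    """Step 1b: query the target once per point and keep the returned 'stolen' label."""
    return [(p, api(p)) for p in data]


def train_copycat(labeled: List[Tuple[Point, int]], epochs: int = 50) -> Callable[[Point], int]:
    """Step 2: train a perceptron copycat on the stolen labels."""
    w0 = w1 = b = 0.0
    for _ in range(epochs):
        for (x0, x1), y in labeled:
            err = y - int(w0 * x0 + w1 * x1 + b > 0)  # -1, 0, or +1
            w0 += err * x0
            w1 += err * x1
            b += err
    return lambda p: int(w0 * p[0] + w1 * p[1] + b > 0)


copycat = train_copycat(steal_labels(target_api, build_fake_dataset(500)))
fresh = build_fake_dataset(1000, seed=1)
agreement = sum(copycat(p) == target_api(p) for p in fresh) / len(fresh)
```

Agreement with the target on fresh inputs, rather than accuracy on ground-truth labels, is the natural success measure here, since the attacker only ever observes the target's outputs.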