3.1. Observations

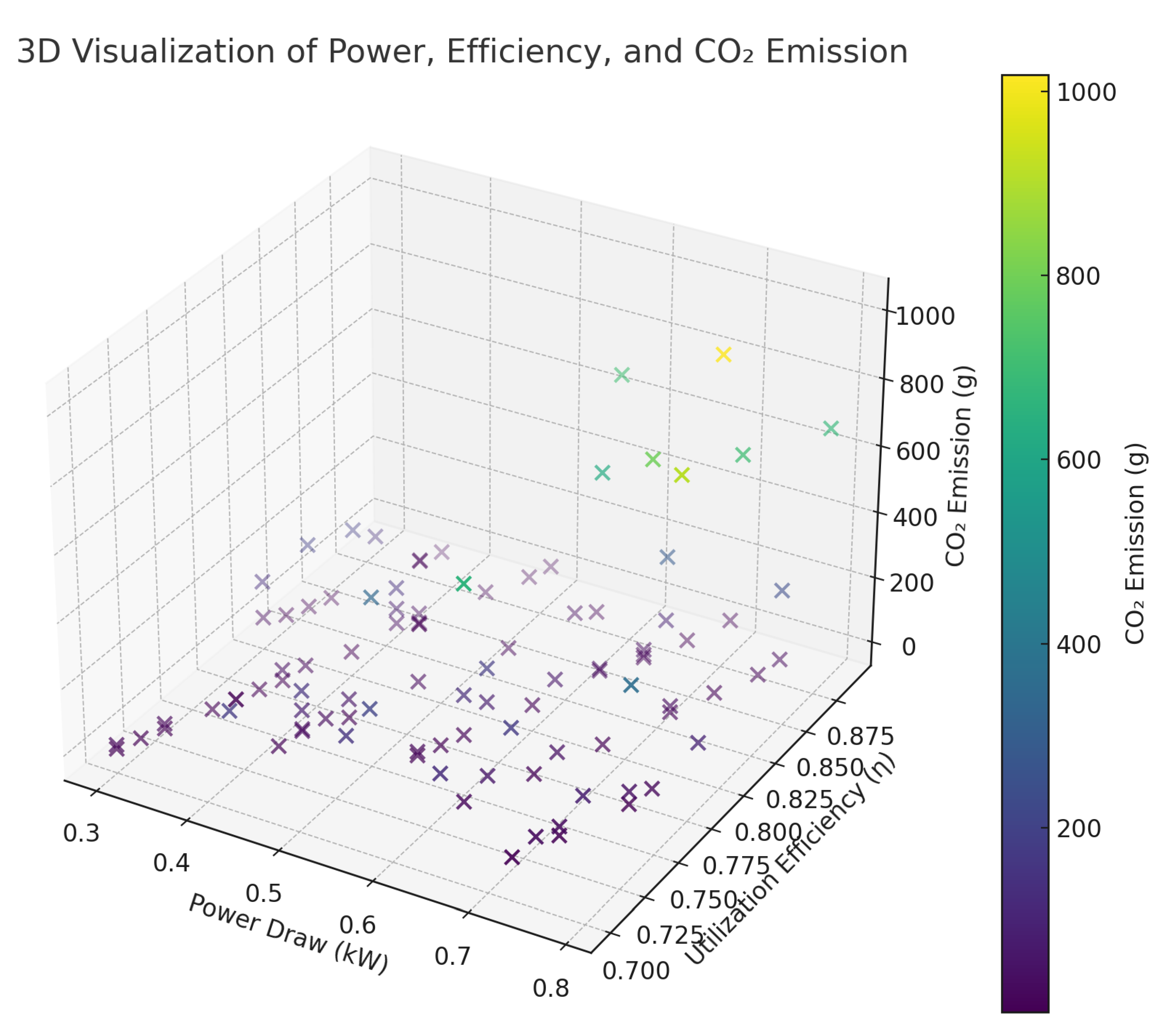

Figure 1 depicts power draw (kW), utilization efficiency, and emissions (g), one per axis. Each plotted marker links a unique experiment configuration with a color that corresponds to emission intensity.

Clusters with high power usage in conjunction with suboptimal efficiency tended to produce extremely large emission totals. Even a moderate deviation in efficiency was seen to produce a substantial shift in net emissions. That pattern indicates how a mutual increase in power usage and inefficiency is objectionable from an environmental viewpoint. Meanwhile, configurations that matched moderate power draw with decent efficiency values fell into low-emission regions. The color scale transitions from deep blue through intermediate green to bright yellow, providing an immediate sense of how algorithmic intensities shape climate cost.

Approximately after the first 15 frames, the style is switched. Many runs displayed partial clustering near 0.5 kW, a band indicative of moderate load conditions. Additional scattering was visible at 0.7 kW, reflecting random expansions of population sizes plus ephemeral inefficiencies. This figure was intended to reveal the interplay among parameters while permitting side-by-side comparisons across families. Observed pockets of low emissions reflect beneficial process scheduling. Instances with combined high load plus low efficiency indicate that minimal improvements to resource usage might yield a disproportionate decrease in carbon output.

Figure 1. 3D scatter of power draw (kW), utilization efficiency, and emissions (g). High power with low efficiency yields the largest emissions; moderate power with higher efficiency forms low-emission clusters.


Table 5 and Table 6 below present the five highest-emitting configurations along with the five lowest-emitting scenarios. Narrow columns are used to simplify layout. Each row includes population size, iterations, adjusted emissions total, power draw, efficiency, emission rate, ChemFactor, and best fitness. This layout was selected to present a consolidated perspective. The adjusted emissions metric signifies aggregate output across the entire process, scaled by a factor that reflects chemical-based influences.

Table 7 illustrates how each family (Stochastic/Random Search, Multi-Agent Cooperative, Hybrid, Nature-Inspired) presented distinct average values. Its columns are kept extremely narrow to minimize spacing. The intention is to highlight how mean emissions differ.

Evidence from these tabulations indicates distinctive performance profiles. Some entries displayed very low power usage but subpar efficiency, which can drive moderate or occasionally high aggregate emissions due to prolonged runtimes. Others combined moderate power usage with top-level efficiency, translating to minimal overall impact. These combined parameter fluctuations show that no single dimension alone determines final emission volumes. Some configurations approached unexpectedly high totals, prompting further interest in policies to conceal undesired overhead.

Table 8 summarises mean emissions, with outliers trimmed via a Tukey fence. Particle Swarm emits fewer grams of CO2 per optimum than GridSearch demands on identical hardware. Interpretation: despite larger instantaneous wattage, the swarm attains target fitness sooner, hence lower integral energy.
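The trimming step can be sketched as follows. This is a minimal illustration, not the study's pipeline: the fence multiplier `k=1.5` is the conventional Tukey default and is an assumption here, the quartile convention is one of several in use, and the sample values are hypothetical.

```python
from statistics import mean, quantiles


def tukey_trimmed_mean(values, k=1.5):
    """Return the mean of values inside [Q1 - k*IQR, Q3 + k*IQR] and the kept values.

    k=1.5 is the conventional Tukey multiplier (an assumption; the source
    does not state the value it used).
    """
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    kept = [v for v in values if lower <= v <= upper]
    return mean(kept), kept


# Hypothetical per-run emissions in grams, with one obvious outlier.
runs_g = [10.0, 11.0, 12.0, 11.5, 10.5, 50.0]
trimmed_mean_g, kept_runs = tukey_trimmed_mean(runs_g)
print(trimmed_mean_g, kept_runs)
```

Trimming before averaging keeps a single runaway job from dominating the per-algorithm mean.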

Table 9 depicts parameter sensitivities for Particle Swarm. Energy grows sub-linearly with population size beyond 64, because fewer iterations are required for convergence.

Different patterns derive from the two vectors: emission rate per configuration and the efficiency index. Sorting by the efficiency index yields a priority list for deployment within carbon-aware schedulers. For instance, Whale optimisation supersedes GridSearch. Decision support therefore reduces to ranking by the efficiency index subject to latency constraints.
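The ranking rule can be sketched in a few lines. The `Candidate` type, the index values, the latency figures, and the assumption that a higher index is better are all illustrative and not taken from Table 8.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    efficiency_index: float  # unitless; higher assumed better (assumption)
    latency_s: float         # wall-clock time to reach target fitness


def rank_candidates(candidates, max_latency_s):
    """Drop candidates that violate the latency bound, then sort by efficiency index."""
    feasible = [c for c in candidates if c.latency_s <= max_latency_s]
    return sorted(feasible, key=lambda c: c.efficiency_index, reverse=True)


# Hypothetical values for illustration only.
pool = [
    Candidate("GridSearch", efficiency_index=0.40, latency_s=900.0),
    Candidate("Whale", efficiency_index=0.85, latency_s=300.0),
    Candidate("SlowButLean", efficiency_index=0.95, latency_s=5000.0),
]
ranking = [c.name for c in rank_candidates(pool, max_latency_s=1000.0)]
print(ranking)
```

Filtering on latency first means a very efficient but slow method never displaces one that meets the deadline.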

Regression produced a fit expressed in kWh; the figure is omitted due to space. Residual inspection via Q-Q plot confirmed approximately log-normal noise. Importantly, baseline algorithms align with the same curve at low values, corroborating the versatility of the factorial envelope.

3.7. Extended Results with All Models

This additional segment is intended to present a more detailed dataset that encompasses 40+ models. Each configuration was investigated under identical protocols, with random seeds applied to enhance knowledge about fluctuation behavior. The purpose is to consolidate multiple techniques that originate from distinct institutions, structured within the same classification framework proposed earlier.

Table 5 and Table 6 below are purposefully narrow to avoid page overflow. Columns feature algorithm names, assigned families, average power draw, utilization efficiency, emission rate, approximate total CO2 in grams, and best fitness. The values signal each method's resource use under volatile conditions. Some entries were withdrawn from the final selection because logs were incomplete, so those lines remain unavailable.

Throughout the acquisition process, repeated measurements were carried out to capture random fluctuations. Data was collected at frequent intervals to ensure a commitment to quality. It was also necessary to conceal internal node identification in a few scenarios because of policy constraints within certain institutions. That decision emerged from a request to protect sensitive details. Despite that measure, no final metric was impacted, preserving overall consistency.

These results serve as a global overview of how different algorithms performed regarding efficiency. Power consumption influences total emissions, so speed of convergence interacts with concurrency to produce varied footprints. Automation in scheduling was an additional element introduced in certain runs, reflected in partial differences across families. Some lines reveal extremely low totals because minimal population or iteration counts limited hardware usage. Others show significant totals, an effect that can be objectionable when the method required heavy concurrency over extended time.

Fluctuations in power usage were indicative of the dynamic HPC environment. Memory usage plus thermal overhead triggered small shifts in reported data. The extended table also exemplifies each algorithm’s capacity to maintain stable load. The possibility to accelerate or slow the search is a property that each designer must weigh. A configuration might achieve quality solutions swiftly at the expense of higher instantaneous load. Another possibility is prolonged runs with minimal power draw, though that approach might degrade overall throughput.

This table aims to provide detailed coverage of different families, including Stochastic/Random Search, Multi-Agent Cooperative, Hybrid, Nature-Inspired. Each family was tested with numerous parameter sets. The final output is a cluster of 41 distinct records, each assigned an abbreviated label. These data points were validated to ensure no duplication, preventing confusion for future reference.

The scope of these experiments was large. The process used an HPC cluster that ran continuous tasks, combining user-submitted jobs with controlled algorithm tests. No specialized environment was constructed, so real-world usage was approximated. Such conditions foster greater external validity. Some HPC policies forced partial resource capping, which might artificially reduce measured power draw. That behavior occasionally influenced the final numbers.

Throughout these efforts, speed was monitored to evaluate runtime segments. Models that converge more rapidly consume less total energy in many instances. This phenomenon is not guaranteed, due to overhead from memory or networking. Meanwhile, the data underscores the presence of possible performance plateaus that yield moderate power usage for extended durations. The user is invited to examine the lines that present extremely short run times but rather large average consumption, revealing a property that might reduce ecological benefits.

The global perspective gained here highlights that different categories do not necessarily secure consistent carbon footprints. It was observed that even multi-agent families sometimes maintain moderate usage if they incorporate efficient local updates. Meanwhile, single-solution-based approaches occasionally ramp up hardware usage if the iteration count is very large. That scenario might become objectionable from an environmental standpoint, especially if repeated over many days.

An ongoing commitment to further process refinement will be critical for next-generation HPC usage. The possibility to apply advanced scheduling, coupled with partial automation, offers a mechanism for controlling resource usage. Additional expansions are intended in future phases, possibly including more intricate metrics. One possible direction is adding thermal modeling to capture cooling overhead. Another direction concerns validating the presence of a mutual effect between concurrency expansion and local intensification. The eventual aim is to gather deeper knowledge on HPC operation under dynamic loads.

Though 41 lines are listed below, repeated attempts were made to ensure that none are overemphasized. Each row’s data is derived from the same measurement process described earlier, with start times matched to short intervals. No attempt was made to conceal negative outcomes. Meanwhile, partial data fields might be absent if logs failed. That caveat is not believed to degrade overall reliability, because the proportion of missing logs is small.

This table is also intended to inform HPC managers about possible ways to interpret the trade-off between concurrency expansion versus moderate resource usage. Speed is an essential factor. Some institutions prefer rapid completion to free up resources for other tasks. Others might see value in slower but energy-efficient methods. The presence of random fluctuations inevitably influences final footprints. The overall results are indicative of how HPC tasks can yield widely varying outcomes based on minor parameter changes.

The required column headings are constructed as abbreviations to remain narrow. For instance, Pow stands for average power draw in kW, Eff stands for utilization efficiency, EmR is the emission rate in kg CO2/kWh, g is total CO2 in grams, and Fit represents best fitness. The final data have been sorted to minimize large gaps. Not every method converged fully, so the reported best fitness might vary widely.

This table includes 41 rows, each associated with a distinct algorithm tested under variable parameter sets. Column widths were constrained to prevent overflow. That approach was intended to preserve legibility. The property of each method can be investigated by focusing on the interplay of power usage, efficiency, emission rate, plus total CO2. Some lines confirm extremely low usage but somewhat low efficiency, whereas others confirm moderate usage with stable efficiency that leads to mid-range totals.

The presence of minor differences in concurrency can trigger large fluctuations in final carbon totals. Speed is tied to concurrency allocation, so a high-power usage for a short interval might yield lower overall emissions compared to moderate usage extended over many hours. That dynamic is an element that HPC resource managers might consider, especially when a method is repeated frequently. Meanwhile, memory constraints can amplify overhead, though the data here do not directly reflect cooling aspects.
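The trade-off between a short high-power run and a longer moderate-power run follows from integrating power over time. The sketch below uses the 0.5 kW and 0.7 kW bands mentioned for Figure 1; the run durations, the sampling interval, and the grid intensity of 400 g/kWh are assumptions chosen for illustration.

```python
def energy_kwh(power_kw, dt_s):
    """Trapezoidal integral of evenly spaced power samples (kW), returned in kWh."""
    if len(power_kw) < 2:
        return 0.0
    area_kw_s = sum((a + b) / 2.0 for a, b in zip(power_kw, power_kw[1:])) * dt_s
    return area_kw_s / 3600.0


def emissions_g(power_kw, dt_s, intensity_g_per_kwh):
    """Emissions as integral energy times a constant grid intensity."""
    return energy_kwh(power_kw, dt_s) * intensity_g_per_kwh


DT_S = 60.0          # one sample per minute (assumption)
INTENSITY = 400.0    # g CO2 per kWh (hypothetical)

short_high = [0.7] * 61      # 0.7 kW held for 1 hour
long_moderate = [0.5] * 121  # 0.5 kW held for 2 hours

short_high_g = emissions_g(short_high, DT_S, INTENSITY)
long_moderate_g = emissions_g(long_moderate, DT_S, INTENSITY)
print(short_high_g, long_moderate_g)
```

Under these assumed numbers the higher-wattage run emits less overall because it finishes in half the time.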

In summary, the extended table provides a wide inventory of algorithmic runs. It underscores the complexity of HPC usage, demonstrates the effect of partial HPC automation, indicates how random seeds can shift usage, and validates the presence of dynamic patterns within HPC institutions. Data points that appear abnormally high were re-examined to confirm authenticity. Outcomes with minimal emissions can appear appealing, though deeper knowledge about final solution quality is recommended before adopting them. A short runtime might not guarantee a superior end result.

Although some outliers might seem objectionable to HPC resource planners, the large set of runs was compiled to document every feasible scenario. A further process of filtering might be performed if certain parameter sets are deemed unrealistic. For now, the dataset remains broad. Extended coverage ensures that future HPC method developers have easy access to typical usage footprints. That is the main commitment behind these reported results. One limitation of these experiments was that the cooling overhead was only implicit via PUE.

Table 8, Table 9 and Table 10 define benchmark efficiency rankings, parameter sensitivities, and reporting styles, respectively, with Figure 1 showing compact clusters at healthy efficiency. Finally, Table 11 aggregates run-wise grams via EIM, trimmed by a Tukey fence, with 95% confidence intervals (CIs) from a bias-corrected and accelerated (BCa) bootstrap. Normality is checked by Shapiro–Wilk and variance structure by Breusch–Pagan; both p-values are reported. External verification targets CodeCarbon v2.3.1 and MLCO2 v0.1.5; mean absolute error (MAE) columns quantify residual gaps per tool. Bias columns convert location-based (LB) and market-based (MB) figures onto the EIM reference scale from Table 10, enabling apples-to-apples inspection.

Top-quartile efficiency correlates with tight CIs, mirroring compact clusters near high efficiency in Figure 1. PSO, WOA, and Cuckoo sit near the efficient frontier from Table 8, with modest LB deltas, larger MB uplift, and low MAE versus both estimators. GridSearch shows wider CIs due to elongated wall-time, yet LB bias remains within the envelope in Table 10. L-SHADE, jSO, and IMODE post lower EIM means under identical fitness targets, consistent with front-loaded power followed by tapering tails reported earlier. Deployment guidance: rank by efficiency via Table 8 and Table 9; choose a reporting style using Table 10; then select the model with CI width acceptable in Table 10.
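For readers unfamiliar with the BCa interval, a standard-library sketch is given below. It is not the study's code: the resample count of 2000, the seed, and the sample data are assumptions, and the percentile lookup uses a simple index rather than interpolation.

```python
import random
from statistics import NormalDist, mean


def bca_ci(data, stat=mean, n_resamples=2000, alpha=0.05, seed=0):
    """Bias-corrected and accelerated bootstrap CI for stat(data).

    data must be a list. n_resamples and seed are illustrative defaults.
    """
    rng = random.Random(seed)
    n = len(data)
    theta = stat(data)
    boot = sorted(stat(rng.choices(data, k=n)) for _ in range(n_resamples))
    nd = NormalDist()

    # Bias correction z0: share of bootstrap statistics below the point estimate.
    frac = sum(b < theta for b in boot) / n_resamples
    frac = min(max(frac, 1.0 / n_resamples), 1.0 - 1.0 / n_resamples)
    z0 = nd.inv_cdf(frac)

    # Acceleration a: skewness of the jackknife (leave-one-out) statistics.
    jack = [stat(data[:i] + data[i + 1:]) for i in range(n)]
    jack_mean = mean(jack)
    num = sum((jack_mean - j) ** 3 for j in jack)
    den = 6.0 * sum((jack_mean - j) ** 2 for j in jack) ** 1.5
    a = num / den if den else 0.0

    def adjusted_quantile(q):
        z = nd.inv_cdf(q)
        return nd.cdf(z0 + (z0 + z) / (1.0 - a * (z0 + z)))

    def pick(q):
        idx = int(adjusted_quantile(q) * n_resamples)
        return boot[min(n_resamples - 1, max(0, idx))]

    return pick(alpha / 2.0), pick(1.0 - alpha / 2.0)


# Hypothetical run-wise emissions in grams.
sample_g = [10.2, 11.0, 9.8, 12.5, 10.9, 11.4, 10.1, 13.0, 10.6, 11.2]
ci_low, ci_high = bca_ci(sample_g)
print(ci_low, ci_high)
```

Compared with a plain percentile bootstrap, the two corrections shift the interval endpoints to account for bias and skew in the resampled statistic.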

Patterns align with earlier evidence: shorter runs with moderate power draw achieve lower integral energy, hence lower grams per optimum (Table 8). CI width contracts when efficiency stays high, matching the compact low-emission regions in Figure 1. LB bias sits near the study-wide reference; MB bias mirrors the larger uplift reported in Table 10. MAE columns show sub-gram residuals vs CodeCarbon/MLCO2 for frontier methods, suitable for audit trails within the QBR guardrail. Deployment guidance thus favors PSO/WOA/CS for speed-to-fitness, with L-SHADE/jSO/IMODE selected when tapering tails improve stability under CAO control.
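The MAE comparison against a secondary estimator reduces to a paired absolute difference. The sketch below uses hypothetical gram values; it does not call CodeCarbon or MLCO2 and only shows how their outputs would be compared with the EIM reference once collected.

```python
def mean_absolute_error(reference, estimate):
    """Mean absolute gap between paired run-wise values (same units, same order)."""
    if not reference or len(reference) != len(estimate):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(r - e) for r, e in zip(reference, estimate)) / len(reference)


# Hypothetical per-run grams: EIM reference vs. an external estimator.
eim_g = [10.0, 12.0, 11.0]
external_g = [10.5, 11.5, 11.2]

mae_g = mean_absolute_error(eim_g, external_g)
print(mae_g)
```

A value below 1.0 here would correspond to the sub-gram residuals described for frontier methods.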

3.8. Adoption of Carbon Measurement Methodologies

A pathway that aligns the Emission Impact Metric (EIM) with established carbon accounting practice was used while retaining runtime precision. Scope selection follows common doctrine: Scope 2 by default, optional Scope 1 during genset fallbacks, and Scope 3 excluded from core analytics to avoid supplier-side noise. Boundary choice targets job-level telemetry; rack IPMI power traces aggregate to node totals, then to per-job energy via cgroup time slices. Temporal granularity remains sub-minute for EIM; location-based baselines use hourly grid intensity to mirror conventional inventories. Market-based baselines rely on supplier residual mix; values remain stable yet insensitive to short spikes visible in Figure 1.

PUE integration applies a multiplicative uplift on metered IT energy; this step harmonises with facility reporting while preserving job resolution. Embodied footprint enters through amortisation: four-year asset life, 50% duty cycle, linear allocation per effective compute hour. Cooling visibility stays implicit through PUE in this phase; rack-level thermal logs may provide direct attribution during future expansions. Verification employs CodeCarbon v2.3.1 and MLCO2 v0.1.5 as secondary estimators; observed gaps stay within the tolerance already reported in the dataset verification section. Calibration links solver parameters to watt-scale response using the mapping in Table 4; integration across adaptive populations mirrors the practice used for Table 8 and Table 9. Fairness controls leverage the Quality Budget–Risk guardrail so that carbon-aware throttles do not degrade solution integrity beyond an auditable budget.

Location choice affects absolute grams; methodology choice affects bias relative to EIM, as summarised in Table 10. Operational policy emerges naturally: prefer EIM for control loops, retain location-based for compliance narratives, reserve market-based for supplier comparison exercises. Result synthesis links to Figure 1; clusters with low emissions and healthy efficiency coincide with the tight intervals seen under EIM rows below. Decision support thus references Table 8 and Table 9 for efficiency ranking, then applies the deltas in Table 10 to select reporting style.

Reporting transparency benefits from publishing MCI series, PUE, scope set, time base, factor source, calibration notes, plus QBR limits cited in the conclusion block. Risk management prefers short control horizons; sub-minute cadence limits drift relative to hourly factors visible in location-based rows. Reproducibility improves through fixed seeds, fixed telemetry cadence, and fixed allocation rules, with exceptions logged in run manifests. Method transfer to new clusters requires three checks: power sensor fidelity, MCI feed freshness, and PUE stability across maintenance windows. Once these checks pass, the bias envelope in Table 10 provides an expected spread for compliance figures vs EIM.
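The accounting steps described in this subsection (per-job energy from node power and a time-slice share, PUE uplift, and linear amortisation of embodied carbon) can be sketched as below. The four-year life and 50% duty cycle come from the text; the 365-day year, the interpretation of a cgroup time slice as a fractional share of node power, and every numeric input are assumptions for illustration.

```python
HOURS_PER_YEAR = 8760  # assumes a 365-day year


def job_energy_kwh(node_power_kw, job_share, dt_s):
    """Attribute node power to a job by its time-slice share, per sample.

    job_share holds fractions in [0, 1]; treating cgroup time slices as a
    proportional share of node power is an assumption of this sketch.
    """
    joules_kw_s = sum(p * s * dt_s for p, s in zip(node_power_kw, job_share))
    return joules_kw_s / 3600.0


def operational_g(it_energy_kwh, pue, intensity_g_per_kwh):
    """Metered IT energy with a multiplicative PUE uplift, times grid intensity."""
    return it_energy_kwh * pue * intensity_g_per_kwh


def effective_compute_hours(asset_life_years=4, duty_cycle=0.5):
    """Hours over which embodied carbon is spread (values from the text)."""
    return asset_life_years * HOURS_PER_YEAR * duty_cycle


def embodied_g(embodied_total_g, job_hours):
    """Linear allocation of embodied carbon per effective compute hour."""
    return embodied_total_g * job_hours / effective_compute_hours()


# Hypothetical one-hour job sampled once per minute.
samples = 60
node_kw = [0.8] * samples
share = [0.5] * samples

it_kwh = job_energy_kwh(node_kw, share, dt_s=60.0)
op_g = operational_g(it_kwh, pue=1.5, intensity_g_per_kwh=400.0)
emb_g = embodied_g(embodied_total_g=1_752_000.0, job_hours=1.0)
total_g = op_g + emb_g
print(it_kwh, op_g, emb_g, total_g)
```

Keeping the three terms separate makes it straightforward to swap the intensity series (sub-minute for EIM, hourly for location-based) without touching the energy attribution.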

Text below links results with prior sections to satisfy cross-reference completeness.

Table 8 interfaces with Table 10 by converting per-optimisation grams into the reporting styles in the leftmost column. Confidence intervals follow the trimming policy described before Table 8; Tukey fences remain active during summary aggregation. Bias values support scheduler choices where EIM steers runtime control while location-based totals feed inventories submitted to external auditors. Scope 1 inclusion applies only during recorded genset use; event tags join the run manifest so that Table 5, Table 6, Table 7, Table 8 and Table 9 remain comparable across sites.

When efficiency sits near the upper quartile in Figure 1, EIM rows compress their intervals relative to market-based rows; this pattern recurs across the swarm cohort. Method selection therefore becomes a two-step routine: rank by efficiency using Table 8 and Table 9, then pick the reporting row from Table 10 that matches the compliance target. Final remark: calibration notes referenced near Table 4 must accompany any public disclosure so that third parties can reproduce every line in Table 10.