Abstract

Machine vision, an interdisciplinary field that aims to replicate human visual perception in computers, has experienced rapid progress and significant contributions. This paper traces the origins of machine vision, from early image processing algorithms to its convergence with computer science, mathematics, and robotics, resulting in a distinct branch of artificial intelligence. The integration of machine learning techniques, particularly deep learning, has driven its growth and adoption in everyday devices. This study focuses on the objectives of computer vision systems: replicating human visual capabilities including recognition, comprehension, and interpretation. Notably, image classification, object detection, and image segmentation are crucial tasks requiring robust mathematical foundations. Despite the advancements, challenges persist, such as clarifying terminology related to artificial intelligence, machine learning, and deep learning. Precise definitions and interpretations are vital for establishing a solid research foundation. The evolution of machine vision reflects an ambitious journey to emulate human visual perception. Interdisciplinary collaboration and the integration of deep learning techniques have propelled remarkable advancements in emulating human behavior and perception. Through this research, the field of machine vision continues to shape the future of computer systems and artificial intelligence applications.

1. Introduction

Computer vision, through digital image processing, empowers machines to map surroundings, identify obstacles, and determine their positions with high precision [1,2]. This multidisciplinary field integrates computer science, artificial intelligence, and image analysis to extract meaningful insights from the physical world, empowering computers to make informed decisions [3]. Real-time vision algorithms, applied in domains like robotics and mobile devices, have yielded significant results, leaving a lasting impact on the scientific community [4].

The study of computer vision presents numerous complex challenges and inherent limitations. Developing algorithms for tasks such as image classification, object detection, and image segmentation requires a deep understanding of the underlying mathematics. However, it is important to acknowledge that each computer vision task requires a unique approach, which adds complexity to the study itself. Therefore, a combination of theoretical knowledge and practical skills is crucial in this field, as it leads to advancements in artificial intelligence and the creation of impactful real-world applications.

The field of computer vision has been greatly influenced by earlier research efforts. In the 1980s, significant advancements were made in digital image processing and the analysis of algorithms related to image understanding. Prior to these breakthroughs, researchers worked on mathematical models to replicate human vision and explored the possibilities of integrating vision into autonomous robots. Initially, the term “machine vision” was primarily associated with electrical engineering and industrial robotics. However, over time, it merged with computer vision, giving rise to a unified scientific discipline. This convergence of machine vision and computer vision has led to remarkable growth, with machine learning techniques playing a pivotal role in accelerating progress. Today, real-time vision algorithms have become ubiquitous, seamlessly integrated into everyday devices like mobile phones equipped with cameras. This integration has transformed how we perceive and interact with technology [4].

Machine vision has revolutionized computer systems, empowering them with advanced artificial intelligence techniques that surpass human capabilities in various specific tasks. Through computer vision systems, computers have gained the ability to perceive and comprehend the visual world [3].

The overarching goals of computer vision are to enable computers to see, recognize, and comprehend the visual world in a manner analogous to human vision. Researchers in machine vision have dedicated their efforts to developing algorithms that facilitate these visual perception functions. These functions include image classification, which determines the presence of specific objects in image data; object detection, which identifies instances of semantic objects within predefined categories; and image segmentation, which breaks down images into distinct segments for analysis. The complexity of each computer vision task, coupled with the diverse mathematical foundations involved, poses significant challenges to their study. However, understanding and addressing these challenges holds great theoretical and practical importance in the field of computer vision.

The contribution of this work is a presentation of the literature that showcases the current state of research of machine learning and deep learning methods for object detection, semantic segmentation, and human action recognition in machine and robotic vision. In this paper, we present a comprehensive overview of the key elements that constitute machine vision and the technologies that enhance its performance. We discuss innovative scientific methods extensively utilized in the broad field of machine and deep learning in recent years, along with their advantages and limitations. This review not only adds new insights into machine learning and deep learning methods in machine/robotic vision but also features real-world applications of object detection, semantic segmentation, and human action recognition. Additionally, it includes a critical discussion aimed at advancing the field.

This paper is organized as follows. Section 2 offers an overview of machine learning/deep learning algorithms and methods. Section 3 covers object detection, image segmentation, and semantic segmentation algorithms and methods, with a specific focus on human action recognition methods. Section 4 introduces detailed notions regarding robotic vision. Section 5 presents Hubel and Wiesel’s electrophysiological insights, Van Essen’s map of the brain, and their impact on machine/robotic vision. Section 6 discusses the aforementioned topics. Lastly, Section 7 addresses the current challenges and future trends in the field.

2. Machine Learning/Deep Learning Algorithms

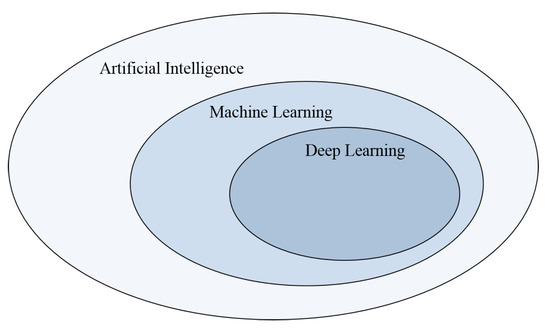

Various AI algorithms facilitate pattern recognition in machine vision and can be broadly categorized into supervised and unsupervised types. Supervised algorithms, which use labeled data to train models that predict the class of input images, can be further divided into parametric methods (which assume a particular form for the data distribution) and non-parametric methods. Examples include k-nearest neighbors, support vector machines, and neural networks. Unsupervised algorithms, which work without labeled data, uncover patterns or structures and can be categorized into clustering and dimensionality reduction methods. Comparative analyses assess these technologies using metrics such as accuracy and scalability, and the best choice depends on the specific problem and the available resources. Public perception of AI, initially marked by skepticism, has shifted positively over time. Artificial intelligence aims to replicate human intelligence, of which vision is a crucial aspect. AI comprises machine learning and deep learning as nested subsets, and this relationship is essential for understanding the progress of machine vision (see Figure 1).

Figure 1.

Relationship between artificial intelligence, machine learning, and deep learning.

The terms artificial intelligence, machine learning, and deep learning are often mistakenly used interchangeably. To grasp their relationship, it is helpful to envision them as concentric circles. The outermost circle represents artificial intelligence, which was the initial concept. Machine learning, which emerged later, forms a smaller circle that is encompassed by artificial intelligence. Deep learning, the driving force behind the ongoing evolution of artificial intelligence, is represented by the smallest circle nested within the other two.

2.1. Machine Learning

Human nature is marked by the innate ability to learn and progress through experiences. Similarly, machines possess the capacity for improvement through data acquisition, a concept known as machine learning (ML). ML, a subset of artificial intelligence, empowers computers to autonomously detect patterns and make decisions with minimal human intervention. Algorithms undergo training through exposure to diverse situations, refining understanding with more data, leading to enhanced accuracy. Organizations adopt ML for automated, efficient operations. Computer vision applications, like facial recognition and image detection, showcase ML’s impact. Image analysis identifies facial features for applications such as smartphone unlocking and security systems. In autonomous vehicles, image detection recognizes objects in real time, enabling informed decisions. ML embraces supervised learning, making inferences based on past data, and unsupervised learning, identifying patterns without labeled guidance, offering versatility in various domains.
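The distinction between supervised and unsupervised learning can be illustrated with a minimal sketch. It assumes toy two-dimensional feature vectors (hypothetical data, not taken from any dataset discussed here) and uses k-nearest neighbors as the supervised example and k-means (Lloyd's algorithm) as the unsupervised one; the function names `knn_predict` and `kmeans` are illustrative only.

```python
import math
from collections import Counter


def knn_predict(train, labels, query, k=3):
    """Supervised: majority vote among the k labeled points closest to the query."""
    order = sorted(range(len(train)), key=lambda i: math.dist(train[i], query))
    votes = Counter(labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def kmeans(points, centers, iterations=10):
    """Unsupervised: alternate point assignment and centroid update, no labels used."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda c: math.dist(p, centers[c]))
            clusters[nearest].append(p)
        # Keep the old center if a cluster received no points
        centers = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else center
            for cluster, center in zip(clusters, centers)
        ]
    return centers


# Hypothetical 2-D feature vectors forming two well-separated groups
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (5.0, 5.0), (5.0, 6.0), (6.0, 5.0)]
labels = ["dark", "dark", "dark", "bright", "bright", "bright"]

predicted = knn_predict(points, labels, (0.5, 0.5))          # uses the labels
centers = kmeans(points, centers=[(0.0, 0.0), (6.0, 6.0)])   # ignores the labels
```

The supervised routine needs `labels` to answer a query, whereas the unsupervised routine recovers the two groups from the geometry of the points alone.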

2.2. Deep Learning

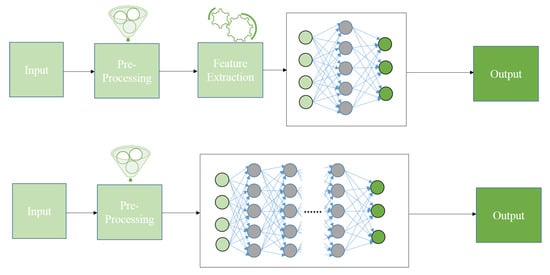

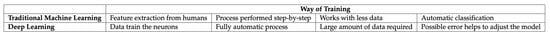

Deep learning, an evolution of machine learning, extends shallow neural networks by stacking many layers of learned transformations. The resulting deep neural networks are loosely inspired by the structure of the human brain and draw conclusions by analyzing data. Unlike traditional machine learning, which relies on manually extracted features, deep learning operates on an end-to-end learning framework, minimizing human intervention. The architecture of deep neural networks consists of multiple interconnected layers with non-linearities, which increases their capacity to learn complex patterns. In contrast, traditional machine learning, represented here by shallow neural networks, involves step-by-step feature extraction and model construction with human-designed features. Traditional computer vision pipelines rely on such “manual features” to identify structures within images, a process distinct from the automatic feature learning of deep neural networks. The comparison presented in Figure 2 and Figure 3 underscores the automatic nature of deep learning, driven by data and minimal user involvement, whereas traditional machine learning relies on human-crafted features and a more manual, stepwise process.

Figure 2.

Comparison between a shallow neural network (first image above) and deep learning (second image below).

Figure 3.

Supervised machine learning vs. supervised deep learning.
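The idea of "multiple interconnected layers with non-linearity" can be made concrete with a small forward pass. The sketch below is only illustrative: the weights are hand-picked rather than learned, ReLU is assumed as the non-linearity, and `dense`, `relu`, and `forward` are hypothetical helper names.

```python
def relu(vector):
    """Element-wise non-linearity: negative activations are set to zero."""
    return [max(0.0, v) for v in vector]


def dense(weights, bias, inputs):
    """One fully connected layer; weights holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, bias)]


def forward(layers, inputs):
    """Apply ReLU after every layer except the last (output) layer."""
    activation = inputs
    for index, (weights, bias) in enumerate(layers):
        activation = dense(weights, bias, activation)
        if index < len(layers) - 1:
            activation = relu(activation)
    return activation


# Shallow network: one hidden layer followed by the output layer
shallow = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]

# Deeper network: the same building blocks, stacked one more time
deep = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 1.0], [1.0, -1.0]], [0.0, 0.0]),
    ([[1.0, 0.0]], [0.0]),
]

shallow_out = forward(shallow, [2.0, 0.5])
deep_out = forward(deep, [2.0, 0.5])
```

With these weights the shallow network computes the absolute difference of its two inputs, a function that no purely linear model can represent; the non-linearity between layers is what makes this possible. A deep network differs only in how many such layers are composed.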

2.3. Vision Applications Using Deep Learning Methods

Although the term “deep learning” initially referred to the depth of the neural network (number of hidden layers), it has evolved to encompass a broader class of machine learning techniques that utilize neural networks with multiple layers to model and solve complex problems. The relevance of deep learning spans various domains and applications. Here are some of the most relevant problems and applications where deep learning has demonstrated a significant impact:

- Image Recognition and Classification: Deep learning, especially convolutional neural networks (CNNs), excels in tasks like image classification, object recognition, facial recognition, and medical image analysis [4].

- Autonomous Vehicles: Deep learning, particularly CNNs, plays a crucial role in perception tasks for autonomous vehicles, enabling object detection, segmentation, and recognition [5].

- Medical Image Analysis: Deep learning, especially CNNs, is applied in tasks such as tumor detection, pathology recognition, and organ segmentation in medical image analysis [6].

- Generative Modeling: Generative models like GANs and VAEs are used for image synthesis, style transfer, and the generation of realistic data samples [7].

- Reinforcement Learning: Deep reinforcement learning successfully trains agents for game playing, robotic control, and optimizing complex systems through interaction with the environment [8].

- Human Activity Recognition: Deep learning models, especially RNNs and 3D CNNs, recognize and classify human activities from video or sensor data, with applications in healthcare, surveillance, and sports analytics [9].

These are just a few examples, and the versatility of deep learning continues to expand as researchers and practitioners explore new applications and architectures. The success of deep learning in these domains is attributed to its ability to automatically learn hierarchical representations from data, capturing complex patterns and relationships.

3. Object Detection, Semantic Segmentation, and Human Action Recognition Methods

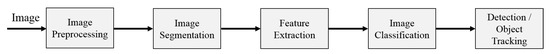

Digital image processing algorithms have been transformative in machine vision and computer vision, reshaping visual perception and enabling machines to comprehend and analyze images [10,11]. Originating from image processing, these algorithms have driven progress in pattern recognition, object detection, and image classification, ushering in a paradigm shift. Machine vision leverages intricate techniques and mathematical models, bridging the gap between human visual systems and machine intelligence. By extracting meaning from visual stimuli, computer vision has transformed our understanding of artificial intelligence’s visual realms. Images convey diverse information, including colors, shapes, and recognizable objects, analogous to how the human brain interprets emotions and states. In machine vision, algorithms analyze digital images to extract information based on user-defined criteria. Object detection, face detection, and color recognition are some examples, illustrating the system’s dependence on specific patterns for information extraction [12]. The process involves detecting patterns representing objects, with the detailed steps outlined in Figure 4.

Figure 4.

Steps in machine vision.

3.1. Image Preprocessing

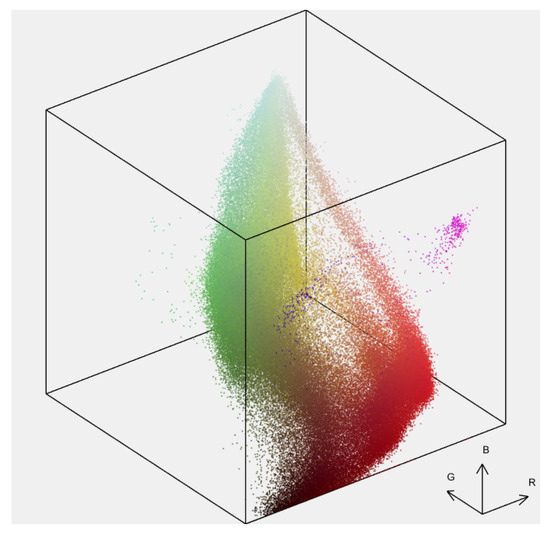

Image preprocessing plays a vital role in refining images before applying pattern recognition algorithms, aiming to enhance quality, reduce noise, correct illumination, and extract relevant features [13]. Common techniques include filtering, histogram equalization, edge detection, and morphological operations. A robust mathematical foundation is essential for effective image analysis, laying the groundwork for the subsequent steps. This foundation determines the color space and model, representing colors mathematically. Color models like RGB, HSI, and HSV define colors precisely using variables, forming color spaces. The RGB model is composed of red, green, and blue components. It combines the intensity levels of these components to create colors. The full strength of all three yields white, whereas their absence results in black [14,15]. The process ensures a comprehensive understanding of image content and sets the stage for employing algorithms in image analysis. Figure 5 and Figure 6 illustrate the RGB color model’s primary colors, their combinations, and a sample RGB model color space.
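Histogram equalization, one of the preprocessing techniques mentioned above, can be sketched in a few lines. The example assumes a grayscale image flattened into a list of integer gray levels and uses the common cumulative-distribution remapping; the function name `equalize_histogram` and the sample values are illustrative, and a production system would typically rely on an image processing library instead.

```python
def equalize_histogram(pixels, levels=256):
    """Spread the gray levels of a flat list of integers in [0, levels)."""
    histogram = [0] * levels
    for value in pixels:
        histogram[value] += 1

    # Cumulative distribution function (CDF) of the gray levels
    cdf, running = [], 0
    for count in histogram:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:  # constant image: there is no contrast to stretch
        return list(pixels)

    # Map each level so that the CDF becomes approximately linear
    scale = (levels - 1) / (total - cdf_min)
    lookup = [max(0, round((c - cdf_min) * scale)) for c in cdf]
    return [lookup[value] for value in pixels]


# Hypothetical low-contrast patch whose values occupy only four adjacent levels
low_contrast = [100, 100, 101, 101, 102, 102, 103, 103]
equalized = equalize_histogram(low_contrast)
```

After remapping, the four adjacent gray levels are spread over the full 0 to 255 range, which is the contrast enhancement that later pattern recognition steps benefit from.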

Figure 5.

An RGB image and its red, green, and blue components [15].

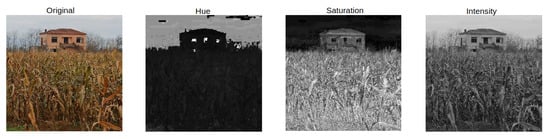

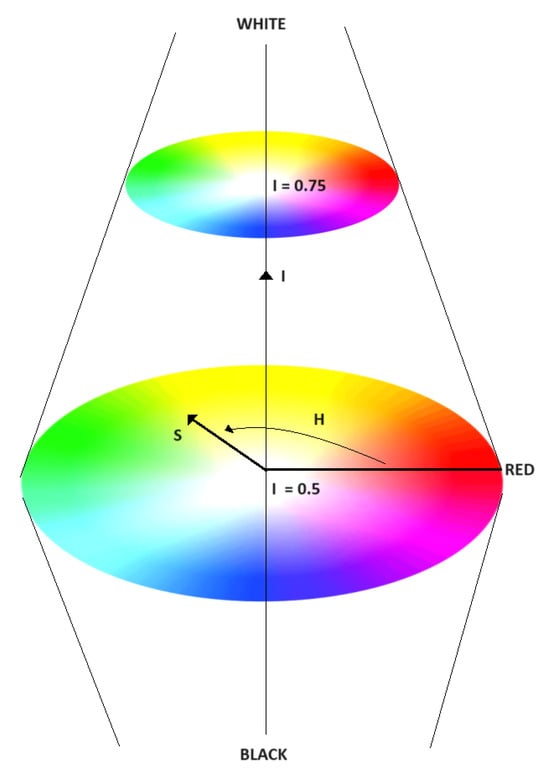

The HSI and HSV models aim to approximate human perception by considering characteristics such as hue (H), saturation (S), intensity (I), brightness (B), and value (V). In the HSI model, the hue component ranges from 0° to 360° and determines the color’s hue, whereas saturation (S) expresses the degree to which a pure color is mixed with white (Figure 7). The intensity (I) component denotes light intensity without conveying color information [16]. The HSI model, depicted as a double cone, exhibits upper and lower peaks corresponding to white (I = 1) and black (I = 0), with maximum purity (S = 1) at I = 0.5 (Figure 8).

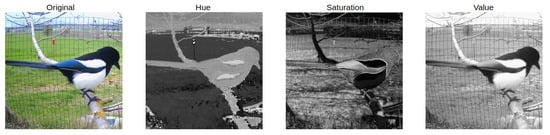

Figure 9 showcases the HSI model in a real photo, depicting HSV channels as grayscale images, revealing color saturation and modified color intensity for a clearer representation. The HSV model calculates the brightness component differently from HSI, primarily managing hue and chromatic clarity components for digital tasks like histogram balancing.

The HSV color space is depicted as an inverted cone, with black at the apex (V = 0) and white at the center of the base (V = 1). The hue (H) of red is 0°, and complementary colors differ in hue by 180°. Saturation (S) is determined by the distance from the cone’s central axis, which simplifies color representation and extraction in object detection compared to the RGB color space [14,17].
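Two of the properties described above can be checked numerically: complementary colors lie 180° apart in hue, and the brightness component is computed differently in HSI and HSV. The sketch below uses Python's standard `colorsys` module for HSV and assumes the common definitions of HSI intensity (mean of the three channels) and HSV value (maximum of the three channels); the helper names are illustrative.

```python
import colorsys


def hue_degrees(r, g, b):
    """Hue of an RGB color (components in [0, 1]) expressed in degrees."""
    hue, _, _ = colorsys.rgb_to_hsv(r, g, b)
    return hue * 360.0


def hsi_intensity(r, g, b):
    """Intensity as commonly defined for HSI: the mean of the channels."""
    return (r + g + b) / 3.0


def hsv_value(r, g, b):
    """Value as defined for HSV: the largest channel."""
    return max(r, g, b)


red = (1.0, 0.0, 0.0)
cyan = (0.0, 1.0, 1.0)  # complement of red

hue_gap = hue_degrees(*cyan) - hue_degrees(*red)
brightness_pair = (hsi_intensity(*red), hsv_value(*red))
```

For pure red, the HSV value is 1 while the HSI intensity is one third, which illustrates why the same image can look different when its brightness channel is rendered in each model.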

Figure 6.

RGB model’s color space in a pepper image.

Figure 7.

Color components of the HSI model on a face: hue, saturation, and intensity.

Figure 8.

HSI model’s color space [18].

Figure 9.

Color components of the HSI model.

3.2. Image Segmentation

Image segmentation is the pivotal process of partitioning an image into segments or regions with similar characteristics, aiding in foreground-background separation, object grouping, boundary location, and more. The mathematical methods usually used in image segmentation are given in Table 1. Techniques such as thresholding, clustering, region growing, and watershed are common for this purpose. The segmentation’s primary aim is to simplify image information, thereby facilitating subsequent analyses and reducing complexity. Successful segmentation, crucial for efficient image analysis, involves dividing the image into homogeneous regions, ideally corresponding to objects like faces. It plays a vital role in object identification and boundary delineation, assigning labels to pixels with common visual characteristics. The algorithms for image segmentation fall into two groups: boundary-based (edge and object detection) and region-based (thresholding, expansion, division, merging, watershed) algorithms (see Table 2 for main algorithms). Robust mathematical foundations, incorporating clustering, edge detection, and graph-based algorithms, are imperative for successful image segmentation, object detection, and image classification [19].

Table 1.

Mathematical methods used in image segmentation.

Table 2.

Image segmentation algorithms.

Image segmentation, crucial for analyzing objects through mathematical models, in its simplest form results in a binary image based on features like texture and color. The process can use color or color intensities, and the histogram-based method, which constructs a histogram from all pixels, helps identify the most frequent pixel values in the image. In medical applications, such as chest X-rays, histogram-based segmentation is prevalent [37]. When segmenting based on color, a single one-dimensional histogram is obtained for monochrome images, whereas color images require three histograms, one per channel. Peaks and valleys in the histogram assist in separating objects from the background. For multicolor images, the individual RGB histograms are processed and their results are combined to select a robust segmentation hypothesis. Segmentation based on pixel intensity is less complex, as evidenced in black-and-white images, where relatively large objects create pixel distributions around their average intensity values [38].
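A histogram-based binary segmentation can be sketched as follows. The text does not name a specific threshold selection rule, so the example assumes Otsu's method, which places the threshold in the valley between two histogram peaks by maximizing the between-class variance; the function names and the sample intensities are hypothetical.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    Pixels with value <= t form one class; pixels with value > t form the other.
    """
    histogram = [0] * levels
    for value in pixels:
        histogram[value] += 1

    total = len(pixels)
    total_sum = sum(level * count for level, count in enumerate(histogram))

    best_threshold, best_variance = 0, -1.0
    weight_low, sum_low = 0, 0
    for level, count in enumerate(histogram):
        weight_low += count
        sum_low += level * count
        weight_high = total - weight_low
        if weight_low == 0 or weight_high == 0:
            continue  # one class is empty: not a valid split
        mean_low = sum_low / weight_low
        mean_high = (total_sum - sum_low) / weight_high
        variance = weight_low * weight_high * (mean_low - mean_high) ** 2
        if variance > best_variance:
            best_threshold, best_variance = level, variance
    return best_threshold


def segment(pixels, threshold):
    """Binary image: 1 for pixels brighter than the threshold, 0 otherwise."""
    return [1 if value > threshold else 0 for value in pixels]


# Hypothetical intensities: a dark background with three bright object pixels
image = [12, 10, 11, 200, 205, 13, 198, 11]
threshold = otsu_threshold(image)
mask = segment(image, threshold)
```

For a color image, the same routine could be applied to each of the three channel histograms, with the per-channel masks then combined into a single segmentation hypothesis.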

3.2.1. Feature Extraction

In machine learning, pattern recognition, and image processing, feature extraction is vital. Following image segmentation, this process transforms a large input dataset into a reduced set of features or a feature vector. Distinct image components, like lines and shapes, are identified, each of which is assigned a normalized value (e.g., perimeter, pixel coverage). Algorithms consider that each pixel has an 8-bit value, reducing information in a 640 × 320 image to a focused feature vector. Feature extraction facilitates generalized learning by isolating relevant information and describing the image in a structured manner (Figure 10) [39,40].

Figure 10.

Original image (left); representation of the boundaries of the regions on the original image (top-right image); segmentation result, where each uniform region is described by an integer and all pixels in the region have this value (bottom-right image).

Some algorithms commonly used for feature extraction include:

- Histogram of Oriented Gradients (HOG);

- Scale-Invariant Feature Transform (SIFT);

- Speeded-Up Robust Features (SURF) [39,41].
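The reduction of a segmented region to a short vector of normalized values, such as pixel coverage and perimeter, can be sketched as below. This is a simplified illustration rather than an implementation of HOG, SIFT, or SURF; the normalization constants and the name `region_features` are assumptions made for the example.

```python
def region_features(mask):
    """Return [coverage, normalized_perimeter] for a binary mask (rows of 0/1)."""
    height, width = len(mask), len(mask[0])
    area = sum(sum(row) for row in mask)

    # Perimeter: count foreground pixel edges that face background or the border
    perimeter = 0
    for y in range(height):
        for x in range(width):
            if not mask[y][x]:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                outside = ny < 0 or ny >= height or nx < 0 or nx >= width
                if outside or not mask[ny][nx]:
                    perimeter += 1

    coverage = area / (height * width)                       # fraction of pixels covered
    normalized_perimeter = perimeter / (2 * (height + width))  # relative to image border
    return [coverage, normalized_perimeter]


# Hypothetical segmentation result: a 2 x 2 object inside a 4 x 4 image
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
features = region_features(mask)
```

Sixteen pixel values are reduced here to two numbers; a classifier then operates on such vectors instead of on raw pixels.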

3.2.2. Image Classification

Humans effortlessly perceive the three-dimensional world, distinguishing objects and recognizing emotions. In computer vision, recognition tasks involve feature extraction, such as identifying a cat. However, distinguishing between a cat and a dog requires a database and specific classification mechanisms. Learning is crucial, with algorithms like Learning and Classification Algorithms (LCAs) being employed based on the application domain. Image classification categorizes images into predefined classes by utilizing mathematical techniques and neural networks. Challenges persist in emulating human visual system complexities, and LCAs can adapt to factors like class linearity. Image classification relies on a multidisciplinary approach, integrating feature extraction, data representation, and model training (see Table 3).

Some commonly used classification algorithms include [42]:

- Naive Bayes classifier;

- Decision trees;

- Random forests;

- Neural networks;

- Nearest neighbor (k-nearest neighbors).

Table 3.

Mathematical methods used in image classification.

| Mathematical Method | Application in Image Classification |
|---|---|
| Linear algebra [43] | Vectors and matrices: represent images and perform matrix operations for pixel manipulation. |
| | Eigenvalue decomposition: dimensionality reduction (e.g., PCA, SVD). |
| Statistics [44] | Probability and statistics: model feature distributions, probabilistic models (e.g., Naive Bayes, Gaussian Mixture Models). |
| Calculus [45] | Gradient descent: optimization during machine learning model training. |
| Machine learning algorithms [46] | Support vector machine (SVM): finds hyperplanes in feature space. |
| | Decision trees and random forests: use mathematical decision rules for classification. |
| Neural networks [47] | Backpropagation: updates weights during neural network training. |
| | Activation functions: introduce non-linearity in neural networks. |
| Signal processing [48] | Fourier transform: extracts features in the frequency domain. |
| | Wavelet transform: captures high- and low-frequency components. |
| Distance metrics [49] | Euclidean distance: measures similarity between feature vectors. |
| | Mahalanobis distance: considers correlations between features. |
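To illustrate how gradient descent (the calculus row of Table 3) trains a classifier, the following sketch fits a logistic regression model to two hypothetical image features. The feature values, class meanings, learning rate, and number of epochs are all assumptions chosen for the example, and logistic regression stands in for the more elaborate models listed above.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(features, targets, learning_rate=0.5, epochs=2000):
    """Batch gradient descent on the mean logistic (cross-entropy) loss."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(features, targets):
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            error = sigmoid(z) - y  # derivative of the loss with respect to z
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        # Step against the average gradient
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


def predict(weights, bias, x):
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if sigmoid(z) >= 0.5 else 0


# Hypothetical features per image: (mean brightness, edge density), both in [0, 1]
features = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.3), (0.8, 0.9), (0.9, 0.7), (0.7, 0.8)]
targets = [0, 0, 0, 1, 1, 1]

weights, bias = train_logistic(features, targets)
predictions = [predict(weights, bias, x) for x in features]
```

The same update rule, applied layer by layer through backpropagation, is what trains the neural networks listed in the table.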

3.2.3. Object Detection

Object detection in computer vision identifies and labels objects in images or videos, using algorithms that extract and process the information relevant to the objects of interest. This technique enables the counting, tracking, and precise labeling of objects. Specific instances, such as ‘face detection’ or ‘car detection’, focus on extracting information related to faces or cars. The mathematical concepts essential for object detection (also termed ‘object recognition’) include convolutions, spatial transformations, and machine learning algorithms such as support vector machines and decision trees (see Table 4 and Figure 11) [39,50].

Table 4.

Mathematical methods used in object detection.

Figure 11.

Example of detecting objects in an image [51].
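Convolution, named above as a core operation in object detection, can be sketched as a sliding-window sum of products. The example assumes a small grayscale image stored as a list of rows and applies a horizontal Sobel kernel without flipping it, which is the cross-correlation form that convolutional layers typically compute; the function name `correlate2d` and the image values are illustrative.

```python
def correlate2d(image, kernel):
    """'Valid' 2-D cross-correlation: slide the kernel over every full overlap."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


# Sobel kernel that responds to left-to-right intensity increases
sobel_x = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Hypothetical image: dark region on the left, bright object on the right
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

response = correlate2d(image, sobel_x)
```

The response is non-zero only where the window straddles the object boundary, which is the kind of localized evidence a detector aggregates into object positions.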

3.2.4. Object Tracking

Following object detection, visual object tracking involves recognizing and estimating the states of moving objects within a visual scene. It plays a vital role in machine vision applications, tracking entities like people and faces. Despite recent advancements, challenges persist due to factors like environmental conditions, object characteristics, and non-linear motion. Challenges include variations in background, lighting conditions, rigid object parts, and interactions with other objects. Effectively predicting future trajectories requires assessing and determining the most suitable tracking algorithms (see Figure 12) [59,60].

Figure 12.

Example of tracking objects in an image.
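A very simple tracking step, predicting where each object will be and matching the prediction to new detections, can be sketched as follows. The example assumes a constant-velocity motion model and greedy nearest-neighbor association, which are deliberately basic choices; practical trackers must also cope with the non-linear motion and occlusions discussed above. All names and coordinates are hypothetical.

```python
import math


def predict_next(track):
    """Constant-velocity guess: extrapolate from the last two observed centroids."""
    (x0, y0), (x1, y1) = track[-2], track[-1]
    return (2 * x1 - x0, 2 * y1 - y0)


def associate(tracks, detections, max_distance=20.0):
    """Greedily match each track to the closest unused detection near its prediction."""
    assignments, used = {}, set()
    for track_id, history in tracks.items():
        predicted = predict_next(history)
        candidates = [
            (math.dist(predicted, det), index)
            for index, det in enumerate(detections)
            if index not in used
        ]
        if not candidates:
            continue
        distance, index = min(candidates)
        if distance <= max_distance:  # otherwise treat the object as lost this frame
            assignments[track_id] = index
            used.add(index)
    return assignments


# Hypothetical centroid histories (two frames each) and detections in the next frame
tracks = {"person": [(10, 10), (14, 12)], "car": [(100, 50), (90, 50)]}
detections = [(81, 51), (18, 13)]

matches = associate(tracks, detections)
```

Each matched detection would then be appended to the corresponding history, and the procedure repeated on the next frame.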

3.3. Human Action Recognition

Human action recognition in computer vision, crucial for surveillance, human–computer interaction, and sports analysis, benefits from advanced deep learning techniques. By leveraging CNNs and RNNs like LSTM, these models can effectively capture spatial and temporal information from video sequences. By extracting features representing motion and appearance patterns, they can enhance accuracy. The fusion of modalities like RGB and depth information further refines recognition. Recent strides in attention mechanisms and metaheuristic algorithms have optimized network architectures, emphasizing relevant regions for improved performance [9,61,62,63,64,65,66,67,68,69,70,71,72].

Various other approaches to human action recognition (HAR) have also been proposed.

In [73], a two-stream attention-based LSTM network was proposed for deep learning models, enhancing feature distinction determination. The model integrates a spatiotemporal saliency-based multi-stream network with an attention-aware LSTM, utilizing a saliency-based approach to extract crucial features and incorporating an attention mechanism to prioritize relevance. By introducing an LSTM network, temporal information and long-term dependencies are captured, improving accuracy in distinguishing features and enhancing action differentiation. In [74], a hybrid deep learning model for human action recognition was introduced, achieving 96.3% accuracy on the KTH dataset. The model focuses on precise classification through robust feature extraction and effective learning, leveraging the success of deep learning in various contexts. The authors of [75] proposed a framework for action recognition, utilizing multiple models to capture both global and local motion features. A 3D CNN captures overall body motion, whereas a 2D CNN focuses on individual body parts, enhancing recognition by incorporating both global and local motion information. Furthermore, [76] drew inspiration from deep learning achievements, proposing a CNN-Bi-LSTM model for human activity recognition. Through end-to-end training, the model refines pre-trained CNN features, demonstrating exceptional accuracy in recognizing single- and multiple-person activities on RGB-D datasets. In [77], a novel hybrid architecture for human action recognition was introduced, combining four pre-trained network models through an optimized metaheuristic algorithm. The architecture involves dataset creation, a deep neural network (DNN) design, training optimization, and performance evaluation. The results demonstrate its superiority over existing architectures in accurately predicting human actions. The authors of [78] presented a key contribution with temporal-spatial mapping, capturing video frame evolution. 
The proposed temporal attention model within a convolutional neural network surpassed a competing baseline method by 4.2% in accuracy on the challenging HMDB51 dataset. In [79], the authors tackled still-image-based human action recognition using transfer learning, data augmentation, and fine-tuned CNN architectures. The proposed model outperformed prior benchmarks on the Stanford 40 and PPMI datasets. Finally, [80] introduced the cooperative genetic algorithm (CGA) for feature selection, a cooperative approach reported to enhance accuracy, reduce overfitting, and improve resilience to noise and outliers across various domains.

The main human action recognition methods are presented in Table 5, while their characteristics, including their advantages, disadvantages/limitations, and complexities, are given in Appendix A.

Table 5.

Human action recognition methods.
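Several of the methods above weight video frames by their relevance before classifying the action. The sketch below shows a generic form of this idea, softmax attention pooling over per-frame feature vectors. It is not the architecture of any cited work: the frame features would normally come from a CNN and the query vector would be learned, whereas here both are hypothetical hand-written values.

```python
import math


def softmax(scores):
    """Numerically stable softmax: non-negative weights that sum to one."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(frame_features, query):
    """Weight each frame by its similarity to the query, then average the frames."""
    scores = [sum(f * q for f, q in zip(frame, query)) for frame in frame_features]
    weights = softmax(scores)
    dim = len(frame_features[0])
    pooled = [
        sum(w * frame[d] for w, frame in zip(weights, frame_features))
        for d in range(dim)
    ]
    return pooled, weights


# Hypothetical 2-D features for three frames; the middle frame shows the most motion
frames = [[0.1, 0.0], [0.9, 0.2], [0.2, 0.1]]
query = [2.0, 0.0]  # assumed relevance direction (would be learned in practice)

clip_feature, frame_weights = attention_pool(frames, query)
```

The pooled vector summarizes the clip while emphasizing the frames that the attention weights mark as relevant; a recurrent model such as an LSTM could consume the weighted frames in sequence instead of averaging them.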

3.4. Semantic Segmentation

In computer vision, the fundamental challenges are image classification, object detection, and segmentation, each escalating in complexity [12,81,82,83,84,85]. Object detection involves labeling objects and determining their locations, whereas image segmentation delves deeper, precisely delineating object boundaries. Image segmentation can be classified into two techniques: semantic segmentation, which assigns each pixel to a specific label, and instance segmentation, which uniquely labels each instance of an object. Semantic segmentation plays a vital role in perceiving and interpreting images, crucial for applications like autonomous driving and medical imaging. Convolutional neural networks, especially in deep learning, have significantly advanced semantic segmentation, providing high-resolution mapping for various applications, including YouTube stories and scene understanding [86,87,88,89,90,91,92]. This technique finds applications in diverse areas, such as document analysis, virtual makeup, self-driving cars, and background manipulation in images, showcasing its versatility and importance. Semantic segmentation architectures typically involve an encoder network, which utilizes pre-trained networks like VGG or ResNet, and a decoder network, which projects learned features onto the pixel space, enabling dense pixel-level classification [86,87,88,89,90,91,92].

The three main approaches are:

1. Region-Based Semantic Segmentation

Typically, region-based approaches use the “segmentation using recognition” pipeline. In this method, free-form regions are extracted from an image and described before being subjected to region-based classification. The region-based predictions are transformed into pixel predictions during testing by giving each pixel a label based on the region with the highest score to which it belongs [86,87,93,94,95,96].

2. Fully Convolutional Network-Based Semantic Segmentation

The original Fully Convolutional Network (FCN) does not require region proposals because it learns a mapping from pixels to pixels. Traditional CNNs end in fully connected layers, which fix the input size, whereas FCNs use only convolutional and pooling layers. This extends the capabilities of a conventional CNN by allowing predictions on inputs of any size [92,97,98,99,100].

3. Weakly Supervised Semantic Segmentation

Many semantic segmentation methods depend on pixel-wise segmentation masks, which are laborious and costly to annotate. To address this challenge, weakly supervised methods have emerged. These approaches leverage cheaper annotations, such as bounding boxes, to achieve semantic segmentation, providing a more efficient and cost-effective solution [50,63,90,91,92,101,102,103,104,105,106,107].
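The conversion from region-level predictions to pixel-level labels, described for the region-based approach, can be sketched as follows. The example assumes that each region is given as a set of pixel coordinates together with a class label and a classifier score; the function name, the labels, and the scores are hypothetical.

```python
def regions_to_pixel_labels(height, width, regions, background="background"):
    """Assign each pixel the label of the highest-scoring region that contains it.

    regions is a list of (pixel_set, label, score) tuples, with pixels as (row, col).
    """
    labels = [[background] * width for _ in range(height)]
    best = [[float("-inf")] * width for _ in range(height)]
    for pixels, label, score in regions:
        for y, x in pixels:
            if score > best[y][x]:
                best[y][x] = score
                labels[y][x] = label
    return labels


# Two hypothetical overlapping regions on a 2 x 3 image
regions = [
    ({(0, 0), (0, 1), (1, 0), (1, 1)}, "road", 0.6),
    ({(1, 1), (1, 2)}, "car", 0.9),
]

label_map = regions_to_pixel_labels(2, 3, regions)
```

The pixel shared by both regions receives the label of the region with the higher score, and pixels covered by no region keep the background label. The result is a dense label map, the same kind of output that an FCN produces directly without region proposals.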

Some other approaches are discussed below.

In [108], the authors discussed the application of deep learning for the semantic segmentation of medical images. They outlined crucial steps for constructing an effective model and addressing challenges in medical image analysis. Deep convolutional neural networks (DCNNs) in semantic segmentation were explored in [109], where models like UNet, DeepUNet, ResUNet, DenseNet, and RefineNet were reviewed. DCNNs proved effective in semantic segmentation, following a three-phase procedure: preprocessing, processing, and output generation. Ref. [110] introduced CGBNet, a segmentation network that enhanced performance through context encoding and multi-path decoding. The network intelligently selects relevant score maps and introduces a boundary delineation module for competitive scene segmentation results.

The main semantic segmentation methods are presented in Table 6, while their characteristics, including their advantages, disadvantages/limitations, and complexities, are given in Appendix B.

Table 6.

Semantic segmentation methods.

3.5. Automatic Feature Extraction

Automatic feature extraction methods play a crucial role in robotic vision by helping neural networks (NNs) effectively process and understand visual information. The following is an overview of how these methods are used in conjunction with neural networks:

- Preprocessing: Image Enhancement. Methods like histogram equalization and noise reduction improve image quality, aiding neural networks in extracting meaningful features.

- Feature Extraction:

- Traditional Techniques: Edge and corner detection and texture analysis extract relevant features, capturing crucial visual information.

- Deep Learning-Based Techniques: Convolutional neural networks (CNNs) learn hierarchical features directly from raw pixel data, covering both low-level and high-level features.

- Data Augmentation: Automatic feature extraction is integrated into data augmentation, applying techniques like rotation and scaling to diversify the training dataset.

- Hybrid Models: Hybrid models combine traditional computer vision methods with neural networks, leveraging the strengths of both for feature extraction and classification.

- Transfer Learning: Pre-trained neural networks, especially in computer vision tasks, can be fine-tuned for specific robotic vision tasks, saving training time and resources.

- Object Detection and Recognition: Automatic feature extraction contributes to object detection, as seen in region-based CNNs (R-CNNs), where region proposals (generated by a region proposal network in Faster R-CNN) are followed by feature extraction for classification.

- Semantic Segmentation: In tasks like semantic segmentation, automatic feature extraction aids the neural network in understanding context and spatial relationships within an image.

By integrating automatic feature extraction methods with neural networks, robotic vision systems can efficiently process visual information, understand complex scenes, and perform tasks such as object recognition, localization, and navigation. This combination of techniques allows for more robust and accurate vision-based applications in robotics.
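
As a hedged illustration of the preprocessing step listed above, the following minimal Python sketch applies histogram equalization to a small grayscale image. The function name `equalize_histogram`, the nested-list image representation, and the 8-bit gray-level range are assumptions made for illustration and are not taken from any of the surveyed works.

```python
def equalize_histogram(image, levels=256):
    """Histogram-equalize a grayscale image (list of rows of ints in [0, levels - 1])."""
    pixels = [p for row in image for p in row]

    # Histogram of gray levels.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Unnormalized cumulative distribution function of the gray levels.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    total = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:
        # Constant image: there is no contrast to spread out.
        return [row[:] for row in image]

    # Map each gray level so that the output CDF is approximately linear.
    mapping = [
        max(0, round((c - cdf_min) * (levels - 1) / (total - cdf_min)))
        for c in cdf
    ]
    return [[mapping[p] for p in row] for row in image]


# A low-contrast 2 x 2 image whose values occupy only the range 100..103.
low_contrast = [[100, 101], [102, 103]]
equalized = equalize_histogram(low_contrast)
print(equalized)
```

In this toy case the narrow input range is stretched over the full 0 to 255 range, which is the kind of contrast improvement that can make subsequent feature extraction easier.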

4. Robotic Vision Methods

Robotic vision algorithms serve three primary functions in visual perception. In this section, we explore and examine examples of each of these functions [3].

4.1. Pattern Recognition—Object Classification

Pattern recognition in machine vision is the process of identifying and classifying objects or patterns in images or videos using machine learning algorithms. Pattern recognition can be used for various applications, such as object detection, face recognition, optical character recognition, biometric authentication, etc. [111,112]. Pattern recognition can also be used for image preprocessing and image segmentation, which are essential steps for many computer vision tasks [113,114,115].

Robotic vision is based on pattern recognition. The data must be classified into different categories so that appropriate algorithms can be applied to make the right decisions.

Originally, two approaches were established for implementing a pattern recognition system. The first, statistical (or decision-theoretic) pattern recognition, is based on underlying statistical models that describe the patterns and their classes. In the second approach, the classes are represented by formal structures such as grammars and strings. This approach is called syntactic pattern recognition, also known as the structural approach. A third approach was developed later and has experienced rapid development in recent years. It is called neural pattern recognition. In this approach, the classifier is depicted as a network of small autonomous units that perform a small number of specific actions, i.e., “cells” that mimic the neurons of the human brain.

Object classification mirrors a biological capacity of the human visual system. It is a very important function in the field of computer vision, aiming to automatically classify images into predefined categories. For decades, researchers have developed advanced techniques to improve the quality of classification. Traditionally, classification models could only perform well on small datasets, such as CIFAR-10 [116] and MNIST [117]. The biggest leap forward in the development of image classification occurred when the large-scale image dataset “ImageNet” was created by Fei-Fei Li and colleagues in 2009 [106].

An equally important and challenging task in computer vision is object detection, which involves identifying and localizing objects, either from a large number of predefined categories in natural images or of one specific category. Object detection and image classification face a similar technological challenge: both need to handle a wide variety of objects. However, object detection is more challenging than image classification because it requires locating each target object in addition to identifying it [19]. Most research efforts have focused on detecting a single class of objects, such as pedestrians or faces, by designing a set of suitable features. In these studies, objects are detected using a set of predefined patterns, where the features correspond to a location in the image or a feature pyramid.

Object classification identifies the objects present in the visual scene, whereas object detection reveals their locations. Object segmentation is the pixel-level categorization of an image, aiming to divide it into significant regions by assigning each pixel to a specific class. In classical object segmentation, unsupervised merging and region segmentation have been extensively investigated, based on clustering, general feature optimization, or user intervention. Object segmentation is divided into two primary branches. In the first branch, semantic segmentation, each pixel is assigned a semantic object class. In the second branch, instance segmentation, different object instances receive different labels, as a further refinement of semantic segmentation [19].

In [72], the authors presented a comprehensive survey of the literature on human action recognition, with a specific focus on the fusion of vision and inertial sensing modalities. The surveyed papers were categorized based on fusion approaches, features, classifiers, and multimodality datasets. The authors also addressed challenges in real-world deployment and proposed future research directions. The work contributed a thorough overview, categorization, and insightful discussions of the fusion-based approach for human action recognition.

The authors of [118] evaluated some Kinect-based algorithms for human action recognition using multiple benchmark datasets. Their findings revealed that most methods excelled in cross-subject action recognition compared to cross-view action recognition. Additionally, skeleton-based features exhibited greater resilience in cross-view recognition, while deep learning features were well-suited for large datasets.

The authors of [119] offered a comprehensive review of recent advancements in human action recognition systems. They introduced hand-crafted representation-based methods, as well as deep learning-based approaches, for this task. A thorough analysis and a comparison of these methods and datasets used in human action recognition were presented. Furthermore, the authors suggested potential future research directions in the field.

In [120], a comprehensive review of recent progress made in semantic segmentation was presented. The authors specifically examined and compared three categories of methods: those relying on hand-engineered features, those leveraging learned features, and those utilizing weakly supervised learning. The authors presented the descriptions, as well as a comparison, of prominent datasets used in semantic segmentation. Furthermore, they conducted a series of comparisons between various semantic segmentation models to showcase their respective strengths and limitations.

In [121], a comprehensive examination of semantic segmentation techniques employing deep neural networks was presented. The authors analyzed the leading approaches in this field in depth and summarized their strengths, weaknesses, and key challenges. They concluded that deep convolutional neural networks have demonstrated remarkable effectiveness in semantic segmentation, which has played a vital role in enhancing and expanding our understanding of visual data, providing valuable insights for various computer vision applications.

The authors of [122] presented a comprehensive review of deep learning-based methods for semantic segmentation. They explored the common challenges faced in current research and highlighted emerging areas of interest in this field. Deep learning techniques have played a pivotal role in enhancing the performance of semantic segmentation tasks. Research on semantic segmentation can be categorized based on the level of supervision, namely fully supervised, weakly supervised, and semi-supervised approaches. The current research faces challenges such as limited data availability and class imbalance, which necessitate further exploration and innovation.

In [123], the latest advancements in semantic image segmentation were explored. The authors conducted a comparative analysis of different models and concluded by discussing the model that exhibited the best performance. They suggested that semantic image segmentation is a rapidly evolving field that has involved the development and application of numerous models across various domains. The performance of each semantic image segmentation model was evaluated using the Intersection-over-Union (IoU) metric, and the IoU results were used to compare the different models.
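
For readers unfamiliar with the Intersection-over-Union measure mentioned above, the following minimal Python sketch computes the per-class IoU between a predicted and a reference label map. The function name, the nested-list representation, and the convention of returning NaN for a class absent from both maps are assumptions for illustration. This is not the evaluation code used in [123].

```python
def intersection_over_union(pred, target, cls):
    """IoU of class `cls` between two label maps given as lists of rows."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            in_pred = p == cls
            in_target = t == cls
            intersection += in_pred and in_target  # pixel labeled cls in both maps
            union += in_pred or in_target          # pixel labeled cls in at least one map
    # Assumed convention: a class that appears in neither map has undefined IoU.
    return intersection / union if union else float("nan")


predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]

iou_per_class = {c: intersection_over_union(predicted, reference, c) for c in (0, 1)}
mean_iou = sum(iou_per_class.values()) / len(iou_per_class)
print(iou_per_class, mean_iou)
```

Averaging the per-class values, as in the last lines, gives the mean IoU that is commonly reported when segmentation models are compared.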

Key deep learning architectures in robotic vision include convolutional neural networks (CNNs), recurrent neural networks (RNNs), and Generative Adversarial Networks (GANs). These innovations have wide-ranging applications in robotic vision, encompassing tasks such as object detection, pose estimation, and semantic segmentation. CNNs play a central role in tasks like object detection, image classification, and scene segmentation. They excel in extracting intricate features from raw image data, enabling precise object identification and tracking. In situations demanding temporal insights, RNNs, especially Long Short-Term Memory (LSTM) networks, are essential. They excel in tracking moving objects and predicting future actions based on historical data. Some deep learning applications in robotic vision include object grasping and pick-and-place operations [124]. Offline reinforcement learning algorithms have also emerged, facilitating continuous learning in robots without erasing previous knowledge [125]. In flower removal and pollination, a 3D perception module rooted in deep learning has been developed, improving detection and positioning precision for robotic systems [126]. Additionally, deep learning has found utility in fastener detection within computer vision-based robotic disassembly and servicing, excelling in performance and generalization [127]. Neural networks have had a pivotal influence on robot vision, making strides in image segmentation, drug detection, and military applications. Deep learning methods, as demonstrated in [128], stand as versatile and potent tools for augmenting robotic vision capabilities.

Semantic segmentation entails delineating specific objects or regions within an image. This task finds applications in diverse industries, including filmmaking and augmented reality. In the era of deep learning, the convolutional neural network (CNN) has emerged as the main method for semantic segmentation. Rather than attempting to discern object boundaries through traditional visual cues like contrast and sharpness, deep CNNs reframe the challenge as a classification problem. By assigning a class to each pixel in the image, the network inherently identifies object boundaries. This transformation involves adapting the final layers of a conventional CNN classification network to produce H × W values, one class per pixel, in lieu of a single value representing the entire image’s class. The DeepLab series, following the FCN paradigm, spans four iterations: V1, V2, V3, and V3+, developed from 2015 to 2018. DeepLab V1 laid the foundation, while subsequent versions introduced incremental improvements. These iterations harnessed innovations from recent image classification advancements to enhance semantic segmentation, thereby serving as a catalyst for research endeavors in the field [92,129,130].
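
The reframing of segmentation as per-pixel classification can be sketched in a few lines of Python. The sketch assumes that a network has already produced one score map per class (a C × H × W array, given here as nested lists). The function name `label_map` and the example scores are hypothetical. Only the final step, choosing the highest-scoring class for every pixel, is shown.

```python
def label_map(scores):
    """Turn per-class score maps (C x H x W nested lists) into an H x W map of class indices."""
    num_classes = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    return [
        [max(range(num_classes), key=lambda c: scores[c][y][x]) for x in range(width)]
        for y in range(height)
    ]


# Hypothetical scores for two classes on a 2 x 3 image.
scores = [
    [[0.9, 0.2, 0.1],   # class 0 (e.g., background)
     [0.8, 0.6, 0.3]],
    [[0.1, 0.8, 0.9],   # class 1 (e.g., object)
     [0.2, 0.4, 0.7]],
]
labels = label_map(scores)
print(labels)
```

The boundary between the two regions in `labels` is never detected explicitly. It emerges wherever neighboring pixels receive different classes.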

4.2. Mathematical Foundations of Deep Learning Methods in Robotic Vision

Deep learning in robotic vision reveals a plethora of promising approaches, each with its own unique strengths and characteristics. To utilize the full potential of this technology, it is crucial to identify the most promising methods and consider several combinations to tackle specific challenges.

Convolutional neural networks (CNNs): Among the most promising approaches are CNNs, which excel in image recognition tasks. They have revolutionized object detection, image segmentation, and scene understanding in robotic vision. Their ability to automatically learn hierarchical features from raw pixel data is a game-changer.

Recurrent neural networks (RNNs): RNNs are vital for tasks requiring temporal understanding. They are used in applications like video analysis, human motion tracking, and gesture recognition. Combining CNNs and RNNs can address complex tasks by leveraging spatial and temporal information.

Reinforcement learning (RL): RL in robotic vision involves algorithms that allow robots to learn and make decisions through interaction with their environment, formalized within a Markov Decision Process (MDP) framework. RL algorithms like Deep Q-Networks (DQN) and Proximal Policy Optimization (PPO) use neural networks to approximate mappings between states, actions, and rewards, improving robots’ understanding and navigation. The integration of RL and robotic vision is promising for applications like autonomous navigation and human–robot collaboration, which rely on well-designed reward functions.

Generative Adversarial Networks (GANs): GANs offer transformative potential in generating synthetic data and enhancing data augmentation. This is especially valuable when dealing with limited real-world data. Their combination with other models can enhance training robustness.

Transfer Learning: Using pre-trained models is a promising strategy. By fine-tuning models on robotic vision data, we can benefit from knowledge transfer and accelerate model convergence. This approach is particularly useful when data are scarce.

Multi-Modal Fusion: Combining information from various sensors, such as cameras, LiDARs, and depth sensors, is crucial for comprehensive perception. Sensor fusion techniques that combine vision with LiDAR or radar data are increasingly promising.

The convergence of these approaches holds tremendous potential. For instance, combining CNNs, RNNs, and GANs for real-time video analysis or fusing multi-modal data with transfer learning can address complex robotic vision challenges. The promise lies in the thoughtful integration of these approaches to create holistic solutions that can empower robots to effectively perceive, understand, and interact with their environments.

The main deep learning methods are presented in Table 7.

Table 7.

Deep learning methods in robotic vision.

4.2.1. Convolutional Neural Networks (CNNs)

- Convolution Operation: The convolution operation in CNNs involves the element-wise multiplication of a filter (kernel) with a portion of the input image, followed by summing the results to produce an output feature map. Mathematically, it can be represented as:

$$(I * K)(x, y) = \sum_{i} \sum_{j} I(x + i, y + j)\, K(i, j)$$

where $I$ is the input image, $K$ is the convolutional kernel, $(x, y)$ represents the spatial position in the output feature map, and $(i, j)$ iterates over the kernel dimensions.

- Activation Functions: Activation functions, such as the Rectified Linear Unit (ReLU), introduce non-linearity into the network. The ReLU function is defined as:

$$f(x) = \max(0, x)$$

and is applied to the output of the convolutional and fully connected layers.

- Pooling: Pooling layers reduce the spatial dimensions of feature maps. Max pooling, for example, retains the maximum value in a specified window. Mathematically, it can be represented as:

$$P(x, y) = \max_{(i, j) \in W} I(x + i, y + j)$$

where $P$ is the pooled output, $I$ is the input, and $W$ is the set of offsets covered by the pooling window.

- Fully Connected Layers: In the final layers of a CNN, fully connected layers perform traditional neural network operations. A fully connected layer computes the weighted sum of all inputs and passes it through an activation function, often a softmax, for classification tasks.

- Backpropagation: The training of CNNs relies on backpropagation, a mathematical process for adjusting network weights and biases to minimize a loss function. This process involves the chain rule to compute gradients and update model parameters.
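
The first three building blocks above can be illustrated with a minimal, dependency-free Python sketch. It is a toy forward pass under stated assumptions and not an efficient implementation: the function names are hypothetical, the convolution uses no padding and unit stride, and pooling uses non-overlapping windows (stride equal to the window size). The convolution follows the sum over I(x + i, y + j) K(i, j), which is the form deep learning libraries usually call convolution even though it does not flip the kernel.

```python
def conv2d(image, kernel):
    """out[x][y] = sum over (i, j) of image[x + i][y + j] * kernel[i][j], no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[x + i][y + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for y in range(out_w)
        ]
        for x in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU: max(0, v)."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Max pooling over non-overlapping size x size windows (assumed stride = size)."""
    return [
        [
            max(feature_map[x + i][y + j] for i in range(size) for j in range(size))
            for y in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for x in range(0, len(feature_map) - size + 1, size)
    ]


# A 5 x 5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# A 2 x 2 kernel that responds to left-to-right intensity increases.
kernel = [[-1, 1],
          [-1, 1]]

feature_map = relu(conv2d(image, kernel))  # 4 x 4 feature map
pooled = max_pool(feature_map)             # 2 x 2 pooled map
print(feature_map)
print(pooled)
```

The feature map is non-zero only where the kernel straddles the edge, and pooling keeps that response while halving each spatial dimension.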

4.2.2. Recurrent Neural Networks (RNNs)

RNNs are a type of neural network designed for processing sequences of data. They have a dynamic and recurrent structure that allows them to maintain hidden states and process sequential information. The core mathematical components of RNNs include:

1. Hidden State Update: At each time step $t$, the hidden state is updated using the current input and the previous hidden state through a set of weights and activation functions. Mathematically, this can be expressed as:

$$h_t = f(W_{hh} h_{t-1} + W_{xh} x_t + b_h)$$

where $h_t$ is the hidden state at time step $t$; $x_t$ is the input at time step $t$; $f$ is the activation function, typically the hyperbolic tangent (tanh) or sigmoid; $W_{hh}$ and $W_{xh}$ are the weight matrices; and $b_h$ is the bias term.

2. Output Calculation: The output at each time step can be computed based on the current hidden state. For regression tasks, the output is often calculated as:

$$y_t = W_{hy} h_t + b_y$$

where $y_t$ is the output at time step $t$, $W_{hy}$ is the weight matrix for the output, and $b_y$ is the bias term.

3. Backpropagation Through Time (BPTT): RNNs are trained using the backpropagation through time (BPTT) algorithm, which is an extension of backpropagation. BPTT calculates gradients for each time step and updates the network’s weights and biases accordingly.

RNNs are well-suited for sequence data, time-series analysis, and natural language processing tasks. They can capture dependencies and contexts in sequential information, making them a valuable tool in machine learning and deep learning.
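
The recurrence described above can be sketched as a short forward pass in plain Python. The sketch assumes a tanh activation, a zero initial hidden state, and a linear output layer. The weight names (`W_xh`, `W_hh`, `W_hy`, `b_h`, `b_y`) and the toy values are assumptions chosen for illustration. Training with BPTT is not shown.

```python
import math


def matvec(matrix, vector):
    """Matrix-vector product for nested lists."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def rnn_forward(inputs, W_xh, W_hh, W_hy, b_h, b_y):
    """Run a simple RNN over a sequence and return all outputs and the final hidden state."""
    hidden = [0.0] * len(b_h)  # assumed initial hidden state h_0 = 0
    outputs = []
    for x_t in inputs:
        # h_t = tanh(W_hh h_{t-1} + W_xh x_t + b_h)
        pre_activation = [
            a + b + c
            for a, b, c in zip(matvec(W_hh, hidden), matvec(W_xh, x_t), b_h)
        ]
        hidden = [math.tanh(p) for p in pre_activation]
        # y_t = W_hy h_t + b_y
        outputs.append([a + b for a, b in zip(matvec(W_hy, hidden), b_y)])
    return outputs, hidden


# Toy parameters: 1 input feature, 2 hidden units, 1 output.
W_xh = [[1.0], [0.0]]            # only hidden unit 0 sees the input
W_hh = [[0.0, 0.0], [1.0, 0.0]]  # hidden unit 1 copies (through tanh) the previous unit 0
W_hy = [[1.0, 1.0]]
b_h = [0.0, 0.0]
b_y = [0.5]

outputs, final_hidden = rnn_forward([[1.0], [0.0]], W_xh, W_hh, W_hy, b_h, b_y)
print(outputs)
```

Although the second input is zero, the second output differs from the bias because the hidden state carries information from the first time step. This is the memory effect that distinguishes RNNs from feed-forward networks.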

4.2.3. Reinforcement Learning (RL)

Reinforcement learning (RL) is a machine learning paradigm focused on training agents to make sequential decisions in an environment to maximize cumulative rewards. The fundamental mathematical components of RL include:

1. Markov Decision Process (MDP): RL problems are often formalized as MDPs. An MDP consists of a tuple $(S, A, P, R)$, where $S$ is the state space representing the possible environmental states; $A$ is the action space consisting of the possible actions the agent can take; $P$ is the transition probability function, defining the probability of transitioning from one state to another after taking a specific action; and $R$ is the reward function, which provides a scalar reward signal to the agent for each state-action pair.

2. Policy ($\pi$): A policy defines the agent’s strategy for selecting actions in different states. It can be deterministic or stochastic. Mathematically, a policy maps states to actions: $\pi: S \rightarrow A$.

3. Value Functions: Value functions evaluate the desirability of states or state-action pairs. The most common value functions are:

- State-Value Function (V): $V^{\pi}(s)$ estimates the expected cumulative reward when starting from a state $s$ and following policy $\pi$.

- Action-Value Function (Q): $Q^{\pi}(s, a)$ estimates the expected cumulative reward when starting from a state $s$, taking action $a$, and following policy $\pi$.

4. Bellman Equations: The Bellman equations express the relationship between the value of a state or state-action pair and the values of the possible successor states. They are crucial for updating the value functions during RL training.

5. Optimality: RL aims to find an optimal policy that maximizes the expected cumulative reward. This can be achieved by maximizing the value functions:

$$V^{*}(s) = \max_{\pi} V^{\pi}(s), \qquad Q^{*}(s, a) = \max_{\pi} Q^{\pi}(s, a)$$

Reinforcement learning algorithms, such as Q-learning, SARSA, and various policy gradient methods, use these mathematical foundations to train agents in a wide range of applications, from game playing to robotics and autonomous systems.
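
To make the roles of the MDP, the value function, and the Bellman update concrete, the following Python sketch runs value iteration on a toy four-cell corridor. Everything specific in it is an assumption for illustration: the corridor environment, the deterministic transitions, the reward of 1 for reaching the goal, and the discount factor `GAMMA`, which the description above does not fix. Value iteration is used here because it is deterministic and short. It is not one of the sample-based algorithms (Q-learning, SARSA) named in the text.

```python
GAMMA = 0.9                           # assumed discount factor
STATES = [0, 1, 2, 3]                 # S: cells of a corridor
ACTIONS = {"left": -1, "right": +1}   # A: move one cell left or right
GOAL = 3                              # terminal state


def step(state, action):
    """Deterministic transition (P) and reward (R) of the toy corridor."""
    next_state = min(max(state + ACTIONS[action], 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def q_value(values, state, action):
    """One-step lookahead: immediate reward plus discounted value of the successor state."""
    next_state, reward = step(state, action)
    return reward + GAMMA * values[next_state]


def value_iteration(tolerance=1e-9):
    """Apply the Bellman optimality update until the values stop changing."""
    values = {s: 0.0 for s in STATES}
    while True:
        delta = 0.0
        for s in STATES:
            if s == GOAL:
                continue  # the terminal state keeps value 0
            best = max(q_value(values, s, a) for a in ACTIONS)
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tolerance:
            return values


def greedy_policy(values):
    """Policy that picks the action with the highest one-step lookahead value."""
    return {
        s: max(ACTIONS, key=lambda a: q_value(values, s, a))
        for s in STATES
        if s != GOAL
    }


optimal_values = value_iteration()
policy = greedy_policy(optimal_values)
print(optimal_values)
print(policy)
```

The resulting values decay geometrically with distance from the goal, and the greedy policy moves right in every non-terminal state.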

4.2.4. Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are a class of deep learning models that consist of two neural networks: a generator (G) and a discriminator (D). The mathematical foundations of GANs include:

1. Generator (G): The generator maps random noise $z$ from a prior distribution ($p_z(z)$) to generate data samples. This process can be represented as $G(z)$.

2. Discriminator (D): The discriminator evaluates whether a given data sample is real ($x$) or generated by the generator ($G(z)$). It produces a scalar value representing the probability that the input is real ($D(x)$).

3. Objective Function: GANs are trained using a minimax game between G and D. The objective function to be minimized by G and maximized by D is defined as:

$$\min_{G} \max_{D} V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]$$

where $p_{\text{data}}(x)$ is the real data distribution, $p_z(z)$ is the prior distribution of noise, and $\mathbb{E}$ represents the expectation.

4. Optimal Generator: At optimality, the generator produces samples that are indistinguishable from real data, meaning $p_g = p_{\text{data}}$, where $p_g$ is the distribution of the generated samples. This occurs when the objective function reaches its global minimum.

5. Training: GANs are trained using techniques like stochastic gradient descent. The generator updates its parameters to minimize the objective function, whereas the discriminator updates its parameters to maximize it.

6. Generated Data: The generator produces synthetic data samples that closely resemble real data.

GANs are widely used in various applications, including image generation, style transfer, and data augmentation.
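
The minimax objective can be examined numerically without training any network. The Python sketch below estimates V(D, G) = E[log D(x)] + E[log(1 − D(G(z)))] from two lists of discriminator outputs. The function name `gan_value` and the example probabilities are hypothetical. Real GAN training would compute these outputs with neural networks and update both players by gradient steps.

```python
import math


def gan_value(d_real, d_fake):
    """Sample estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on real samples.
    d_fake: discriminator outputs D(G(z)) on generated samples.
    """
    real_term = sum(math.log(d) for d in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - d) for d in d_fake) / len(d_fake)
    return real_term + fake_term


# A discriminator that separates real from generated samples well.
confident = gan_value(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])

# A discriminator that cannot tell the two apart and outputs 0.5 everywhere,
# as happens when the generated distribution matches the data distribution.
fooled = gan_value(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])

print(confident, fooled, -math.log(4))
```

The discriminator prefers the larger value, and the generator pushes toward the smaller one. When the discriminator is reduced to outputting 0.5, the value equals −log 4, the known value of the objective at the equilibrium of the original GAN formulation.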

4.2.5. Long Short-Term Memory (LSTM)

Long Short-Term Memory (LSTM) [137] is a type of recurrent neural network (RNN) architecture designed to overcome the vanishing gradient problem and capture long-range dependencies in sequential data. The key equations and components of LSTM include:

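1. Gates: LSTMs have three gates: the forget gate ($f_t$), the input gate ($i_t$), and the output gate ($o_t$). These gates regulate the flow of information within a cell.

2. Cell State ($c_t$): LSTMs maintain a cell state, which serves as a memory unit. The cell state is updated using the following equations:

$$\begin{aligned}
f_t &= \sigma(W_f [h_{t-1}, x_t] + b_f) \\
i_t &= \sigma(W_i [h_{t-1}, x_t] + b_i) \\
\tilde{c}_t &= \tanh(W_c [h_{t-1}, x_t] + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t
\end{aligned}$$

where $\sigma$ is the sigmoid activation function, ⊙ represents the element-wise multiplication, and $[h_{t-1}, x_t]$ denotes the concatenation of the previous hidden state and the current input.

3. Hidden State ($h_t$): The hidden state is derived from the cell state and is updated using the output gate:

$$\begin{aligned}
o_t &= \sigma(W_o [h_{t-1}, x_t] + b_o) \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}$$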

4. Training: LSTMs are trained using the backpropagation through time (BPTT) and gradient descent algorithms. The gradients are computed with respect to the cell state, hidden state, and parameters.

LSTMs are known for their ability to capture long-term dependencies and are widely used in natural language processing, speech recognition, and various sequential data tasks.
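
A single LSTM time step can be written out directly from the standard gate equations. The following Python sketch assumes the common formulation in which each gate applies a weight matrix to the concatenation of the previous hidden state and the current input. The parameter names (`W_f`, `b_f`, and so on) and the all-zero toy parameters are assumptions for illustration, and training is not shown.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def affine(W, b, vec):
    """Compute W vec + b for nested-list W and list b."""
    return [sum(w * v for w, v in zip(row, vec)) + bias for row, bias in zip(W, b)]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step; returns the new hidden state and cell state."""
    concat = h_prev + x_t  # list concatenation: [h_{t-1}, x_t]
    f_t = [sigmoid(v) for v in affine(params["W_f"], params["b_f"], concat)]  # forget gate
    i_t = [sigmoid(v) for v in affine(params["W_i"], params["b_i"], concat)]  # input gate
    o_t = [sigmoid(v) for v in affine(params["W_o"], params["b_o"], concat)]  # output gate
    c_tilde = [math.tanh(v) for v in affine(params["W_c"], params["b_c"], concat)]  # candidate
    # c_t = f_t * c_{t-1} + i_t * c_tilde (element-wise)
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_tilde)]
    # h_t = o_t * tanh(c_t) (element-wise)
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t


# Toy setting: 1 hidden unit, 1 input feature, all parameters zero,
# so every gate equals sigmoid(0) = 0.5 and the candidate equals tanh(0) = 0.
zero_params = {name: [[0.0, 0.0]] for name in ("W_f", "W_i", "W_o", "W_c")}
zero_params.update({name: [0.0] for name in ("b_f", "b_i", "b_o", "b_c")})

h_t, c_t = lstm_step(x_t=[1.0], h_prev=[0.0], c_prev=[2.0], params=zero_params)
print(h_t, c_t)
```

With these parameters the forget gate keeps half of the previous cell state. A forget gate close to 1 would preserve it almost unchanged, which is how LSTMs carry information across many time steps.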

In Table 8, we can see a detailed comparison of deep learning algorithms and methods and their integration in robotic vision.

Table 8.

Comparison of deep learning algorithms and methods in robotic vision.

4.3. Combining Approaches for Robotic Vision

To address the complexity of robotic vision tasks, a combination of these neural network architectures can be powerful. For instance:

- Using CNNs for initial image feature extraction to identify objects and their positions.

- Integrating RNNs to process temporal data and track object movement and trajectories over time.

- Implementing GANs to generate synthetic data for training in various environments and conditions.

- Employing LSTMs to remember past states and recognize long-term patterns in robot actions and sensor data.

By combining these approaches, robotic vision systems can leverage the strengths of each architecture to improve object detection, tracking, and the understanding of complex visual scenes in dynamic environments (see Table 9).

Table 9.

Combined approaches in robotic vision.

4.4. Big Data, Federated Learning, and Vision

Big data and federated learning play significant roles in advancing the field of computer vision. Big data provides a wealth of diverse visual information, which is essential for training deep learning models that power computer vision applications. These datasets enable more accurate object recognition, image segmentation, and scene understanding.

Federated learning, on the other hand, enhances privacy and efficiency. It allows multiple devices to collaboratively train models without sharing sensitive data. In computer vision, this means that the collective intelligence of various sources can be used while preserving data privacy, making it a game-changer for applications like surveillance, healthcare, and autonomous vehicles or drones.

4.4.1. Big Data

Big data refers to vast and complex datasets arising from diverse origins and applications, such as social media, sensors, and cameras. Within machine vision, big data proves invaluable for pattern recognition, offering a wealth of information such as images, videos, texts, and audio.

The advantages of big data are numerous: it can facilitate the creation of more accurate and resilient pattern recognition models by supplying ample samples and variations; it can reveal latent patterns and insights inaccessible to smaller datasets; and it can support pattern recognition tasks necessitating multiple modalities or domains. However, big data also has certain drawbacks: it can present challenges in data collection, storage, processing, analysis, and visualization; it can raise ethical and legal concerns surrounding data privacy, security, ownership, and quality; and it can introduce noise, bias, or inconsistency that may impede the performance and reliability of pattern recognition models.

Big data and machine vision have many joint applications. In athlete training, they aid behavior recognition: by combining machine vision with big data, the actions of athletes captured by cameras can be analyzed, providing valuable information for training and performance improvements [143].

In image classification, spatial pyramids can enhance the bag-of-words approach. For category-level image classification, the use of spatial pyramids based on 3D scene geometry has been proposed to improve classification accuracy [144]. Machine vision-driven big data analysis can improve speed and precision in microimage surface defect detection or be used to create intelligent guidance systems in large exhibition halls, enhancing the visitor experience. Big data and AI have been used to optimize communication and navigation within robotic swarms in complex environments. Data fusion with redundant sensors and Bayesian fusion approaches has also been applied on robotic platforms for navigation and object tracking [145]. Additionally, the combination of big data analysis and robotic vision has been used to develop intelligent calculation methods and devices for human health assessment and monitoring [146].

4.4.2. Federated Learning

Federated learning, a distributed machine learning technique, facilitates the collaborative training of a shared model among multiple devices or clients while preserving the confidentiality of their raw data. In the context of machine vision, federated learning proves advantageous when dealing with sensitive or dispersed data across various domains or locations. Federated learning offers several benefits: it can safeguard client data privacy and security by keeping data local; it can reduce communication and computation costs by aggregating only model updates; and it can harness the diversity and heterogeneity of client data to enhance model generalization. Nonetheless, federated learning entails certain drawbacks: it may encounter challenges pertaining to coordination, synchronization, aggregation, and evaluation of model updates; it may be subject to communication delays or failures induced by network bandwidth limitations or connectivity issues; and it may confront obstacles in model selection, optimization, or regularization due to non-IID or imbalanced data. Here, “IID” stands for “independent and identically distributed”, the statistical assumption that all samples are drawn independently from the same distribution. Data held by different clients often violate this assumption.

Federated learning can be used to improve the accuracy of machine vision models. It enables training a machine learning model in a distributed manner using local data collected by client devices, without exchanging raw data among clients [147]. Selecting relevant data helps further: only a subset of each client’s data is likely to be relevant to the learning task, whereas the rest may have a negative impact on model training. When each client uses only its highly relevant subset in the federated learning process, model accuracy improves compared to training with all data [148]. Additionally, federated learning can handle real-time data generated from the edge without consuming valuable network transmission resources, making it suitable for various real-world embedded systems [149].

LEAF is a benchmarking framework for learning in federated settings. It includes open-source federated datasets, an evaluation framework, and reference implementations. The goal of LEAF is to provide realistic benchmarks for developments in federated learning, meta-learning, and multi-task learning. It aims to capture the challenges and intricacies of practical federated environments [150].

Federated learning (FL) offers several potential benefits for machine vision applications. Firstly, FL allows multiple actors to collaborate on the development of a single machine learning model without sharing data, addressing concerns such as data privacy and security [151]. Secondly, FL enables the training of algorithms without transferring data samples across decentralized edge devices or servers, reducing the burden on edge devices and improving computational efficiency [152]. Additionally, FL can be used to train vision transformers (ViTs) through a federated knowledge distillation training algorithm called FedVKD, which reduces the edge-computing load and improves performance in vision tasks [153].

Finally, FL algorithms like FedAvg and SCAFFOLD can be enhanced using momentum, leading to improved convergence rates and performance, even with varying data heterogeneity and partial client participation [154]. The authors of [155] introduced personalized federated learning (pFL) and demonstrated its application in tailoring models for diverse users within a decentralized system. Additionally, they introduced the Context Optimization (CoOp) method for fine-tuning pre-trained vision-language models.
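
The aggregation step at the heart of FedAvg can be sketched in a few lines of Python. The sketch assumes that each client has already trained locally and sends back a flat list of parameters together with its number of local samples. The function name `federated_average` and the toy values are hypothetical, and local training, client sampling, and the momentum variants of [154] are not shown.

```python
def federated_average(client_weights, client_sizes):
    """Average client parameter vectors, weighted by local dataset size.

    client_weights: one flat list of parameters per client (all the same length).
    client_sizes:   number of local training samples held by each client.
    """
    total = sum(client_sizes)
    num_params = len(client_weights[0])
    return [
        sum(weights[k] * size for weights, size in zip(client_weights, client_sizes)) / total
        for k in range(num_params)
    ]


# Two hypothetical clients; the second holds three times as much data.
client_weights = [[1.0, 2.0],
                  [3.0, 4.0]]
client_sizes = [1, 3]

global_weights = federated_average(client_weights, client_sizes)
print(global_weights)
```

Only the parameter lists and sample counts cross the network in this scheme. The raw images stay on the clients, which is the privacy property emphasized above.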

5. Hubel and Wiesel’s Electrophysiological Insights, Van Essen’s Map of the Brain, and Their Impact on Robotic Vision

5.1. Hubel and Wiesel’s Contribution

Deep learning’s impact on robotic vision connects insights from neuroscience and computer science. Hubel and Wiesel’s electrophysiological research revealed the fundamental mechanisms of human visual perception, laying the foundation for understanding how neural networks process visual information in deep learning. Similarly, Van Essen’s brain map serves as a critical reference for comprehending neural pathways and functions, elucidating connections within the visual cortex for developing deep learning algorithms. The synergy between neuroscientific revelations and computer science has redefined robotic vision. Deep learning algorithms, inspired by the neural architectures discovered by Hubel and Wiesel and refined through insights from Van Essen’s map, have empowered robots to decipher visual data with precision. This fusion of understanding and innovation has accelerated the development of autonomous robots capable of perceiving, interpreting, and reacting to their surroundings. By embracing the neural foundations of visual perception, deep learning has surpassed human abilities, allowing robots to navigate, interact, and make knowledgeable decisions.

Hubel and Wiesel’s groundbreaking electrophysiological studies linked neuroscience, artificial neural networks (ANNs), and computer vision, helping to shape the foundation of modern AI. Their exploration of the cat and monkey visual systems yielded fundamental insights into sensory processing, establishing vital connections between biological mechanisms and computational paradigms. Their characterization of the receptive fields of cells in the cat’s striate cortex shed light on how the brain processes visual input. The authors of [156] enriched the understanding of the visual pathway from the retina to the cortex and its influence on perception. Notably, moving stimuli trigger robust responses, suggesting that motion plays a key role in cortical activation. This insight has led to advances in fields like computer vision and robotics, particularly in motion detection. The finding that cortical cells are activated by specific shapes, sizes, and orientations has influenced experimental design. Moreover, intricate properties of striate cortex units hint at deeper complexities that require further exploration. Such insights contribute to a holistic understanding of the brain’s visual processing mechanisms. Studies of the cat’s visual cortex revealed receptive fields that are more complex than those found at lower levels of the visual pathway, including binocular interactions. Recording from individual cells with micro-electrodes and correlating their responses with cell location overcame the limitations of slow-wave recording and enhanced the understanding of functional anatomy in small cortical areas [157,158,159].

Hubel and Wiesel’s pioneering discovery of “feature detectors” is another cornerstone that resonates within ANNs and computer vision. These specialized neurons, which respond to distinct visual attributes such as edge orientation, resemble the artificial neurons at the core of ANN architectures. Just as Hubel and Wiesel described successive stages of neurons that process features like edges, ANNs use a similar hierarchy to capture progressively more complex patterns, enriching our understanding of both brain and machine vision. Moreover, their discovery of “ocular dominance columns” and “orientation columns” showed that the cortex is organized into structured, repeating units, an arrangement echoed in the structured layers that ANNs use for pattern recognition. The layer-wise organization they elucidated inspired the multi-layer architecture of ANNs, which underpins their capacity to decipher complex data patterns. Hubel and Wiesel’s legacy also extends to computer vision, infusing it with a deeper understanding of visual processing. Their identification of critical periods in visual development has a loose analogue in the iterative “training” stages of ANNs. Building on these discoveries, ANNs can learn to recognize complex patterns in images, which has transformed tasks such as image classification, object detection, and facial recognition.
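The orientation selectivity of such feature detectors can be illustrated with a Gabor filter, a function commonly used in computational models of simple-cell receptive fields and closely related to the first-layer filters that convolutional networks tend to learn. The sketch below is an assumption made for illustration rather than Hubel and Wiesel’s own formulation; the helper names `gabor_kernel`, `grating`, and `response` and the parameter values are hypothetical. Here `theta` is the direction along which the pattern varies, so the stripes themselves run perpendicular to it.

```python
import math
from typing import List

Image = List[List[float]]


def gabor_kernel(size: int, theta: float, wavelength: float = 6.0,
                 sigma: float = 3.0) -> Image:
    """Model receptive field: a Gaussian envelope times a cosine carrier varying along theta."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            along = x * math.cos(theta) + y * math.sin(theta)
            envelope = math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
            row.append(envelope * math.cos(2.0 * math.pi * along / wavelength))
        kernel.append(row)
    return kernel


def grating(size: int, theta: float, wavelength: float = 6.0) -> Image:
    """Stimulus: a sinusoidal grating whose intensity varies along direction theta."""
    half = size // 2
    return [[math.cos(2.0 * math.pi *
                      (x * math.cos(theta) + y * math.sin(theta)) / wavelength)
             for x in range(-half, half + 1)]
            for y in range(-half, half + 1)]


def response(kernel: Image, patch: Image) -> float:
    """Linear response of the model cell: inner product of receptive field and stimulus."""
    return sum(k * p for k_row, p_row in zip(kernel, patch)
               for k, p in zip(k_row, p_row))


if __name__ == "__main__":
    cell = gabor_kernel(15, theta=0.0)
    for degrees in (0, 30, 60, 90, 120, 150):
        r = response(cell, grating(15, math.radians(degrees)))
        print(f"grating at {degrees:3d} deg -> response {r:8.3f}")
```

Running the script prints a tuning curve: the model cell responds most strongly when the grating matches its own orientation and only weakly to the orthogonal grating.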

Many research papers have built upon the contributions of Hubel and Wiesel; a few of them are examined here. The authors of [160] presented a VLSI binocular vision system that emulates the disparity computation of the primary visual cortex for robotics and computer vision. It employs silicon retinas, orientation chips, and an FPGA, enabling real-time disparity calculation with minimal hardware. Its complex cell responses and disparity map assist in depth perception and 3D reconstruction, and its blend of analog and digital circuits ensures efficient computation. However, the authors addressed only the emulation of disparity computation in the primary visual cortex and did not cover other aspects of vision. In [161], the authors introduced a practical vergence eye control system for binocular robot vision. The system is rooted in the disparity computation of the primary visual cortex (V1) and comprises silicon retinas, simple cell chips, and an FPGA. The silicon retinas mimic vertebrate retinal receptive fields, while the simple cell chips emulate orientation-selective receptive fields in the manner of Hubel and Wiesel’s model. The system generates real-time complex cell outputs for five disparities, enabling reliable vergence movement even in complex scenarios. This development has paved the way for accurate eye control in binocular robot vision, with potential applications in robotics, computer vision, and AI. In [162], the authors introduced a hierarchical machine vision system based on the primate visual model to enhance pattern recognition in machines. It involves invariance transforms and an adaptive resonance theory network and focuses on luminance rather than color, motion, or depth. The system mirrors biological processes at the network level without simulating their biochemistry. It can enhance machine vision algorithms, aiding tasks like object recognition and image classification.
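How complex cell outputs at a handful of candidate disparities can yield a disparity estimate may be sketched with a one-dimensional disparity energy model, a standard computational account of binocular complex cells. This is a software illustration under stated assumptions, not the analog and digital circuitry of [160] or [161]: the function names, the circular (wrap-around) signals, the filter parameters, and the pooling over all positions are hypothetical choices made to keep the example short.

```python
import math
from typing import Dict, Iterable, List, Sequence, Tuple


def gabor_pair(half_width: int = 8, wavelength: float = 8.0,
               sigma: float = 3.0) -> Tuple[List[float], List[float]]:
    """Even and odd 1-D Gabor filters (a quadrature pair of model simple cells)."""
    xs = range(-half_width, half_width + 1)
    even = [math.exp(-x * x / (2 * sigma ** 2)) * math.cos(2 * math.pi * x / wavelength)
            for x in xs]
    odd = [math.exp(-x * x / (2 * sigma ** 2)) * math.sin(2 * math.pi * x / wavelength)
           for x in xs]
    return even, odd


def filter_circular(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Filter response at every position, wrapping around at the borders."""
    n, half = len(signal), len(kernel) // 2
    return [sum(k * signal[(i + j - half) % n] for j, k in enumerate(kernel))
            for i in range(n)]


def disparity_energy(left: Sequence[float], right: Sequence[float],
                     candidates: Iterable[int]) -> Dict[int, float]:
    """Pooled complex-cell energy for each candidate disparity d.

    A disparity d pairs left-eye position i with right-eye position i + d.
    """
    even, odd = gabor_pair()
    left_even, left_odd = filter_circular(left, even), filter_circular(left, odd)
    right_even, right_odd = filter_circular(right, even), filter_circular(right, odd)
    n = len(left)
    energy = {}
    for d in candidates:
        total = 0.0
        for i in range(n):
            j = (i + d) % n
            # Binocular simple cells sum the two eyes; the complex cell squares
            # and adds the even and odd (quadrature) responses.
            total += (left_even[i] + right_even[j]) ** 2 + (left_odd[i] + right_odd[j]) ** 2
        energy[d] = total
    return energy


def estimate_disparity(left: Sequence[float], right: Sequence[float],
                       candidates: Iterable[int]) -> int:
    """Pick the candidate disparity with the highest pooled energy."""
    energy = disparity_energy(left, right, candidates)
    return max(energy, key=energy.get)
```

For example, if `right` is a copy of `left` circularly shifted by two samples, `estimate_disparity(left, right, range(-4, 5))` selects the candidate that realigns the two views. Pooling over positions is a design choice here: it suppresses the false peaks that a single complex cell can show at shifts near one carrier wavelength.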

The authors of [163] studied visual mechanisms like automatic gain control and nonuniform processing. They suggested that these biological processes, if applied to machine vision, could reduce data and enhance computational efficiency, particularly in wide-view systems. Implementing these mechanisms could boost machine vision’s processing power and effectiveness. In [164], the growth of cognitive neuroscience and the merging of psychology and neurobiology were explored. In addition, the authors examined memory, perception, action, language, and awareness, bridging behavior and brain circuits. Cognitive psychologists emphasized information flow and internal representations. The authors also touched on the molecular aspects of memory, delving into storage and neural processes, and underscored the progress in memory research within cognitive neuroscience and the value of comprehending both behavioral and molecular memory facets. The authors of [165] explored how the human visual cortex processes complex visual stimuli. They discussed the event-related potentials (ERPs) generated when viewing faces, objects, and letters. Specific ERPs revealed different stages of face processing. The study revealed distinct regions used for the recognition of objects and letters, along with bilateral and right hemisphere-specific face activity. These findings have enhanced our understanding of the neural mechanisms involved in face perception and object recognition in the human brain.

Individuals with autism exhibit challenges in recognizing faces, often due to reduced attention to eyes and unusual processing methods [166]. Impairments start early, at around 3 years old, affecting both structural encoding and recognition memory stages. Electrophysiological studies have highlighted disruptions in the face-processing neural systems from an early age that persist into adulthood. Slower face processing has been linked to more severe social issues. Autism also impacts the brain’s specialization for face processing. These insights have deepened our comprehension of social cognition impairments in autism, aiding early identification and interventions. Other research papers on the use of machine learning methods for classifying autism include [167,168,169,170,171,172,173,174,175,176,177,178,179].

Table 10 shows the main articles discussing the above methods, while their characteristics, including their advantages, disadvantages/limitations, and complexities, are given in Appendix C.

Table 10. Methods related to Hubel and Wiesel’s main methods.

5.2. Van Essen’s Functional Mapping of the Brain

Van Essen’s work on brain mapping serves as a bridge between the brain’s complex networks and the deep neural networks (DNNs) of modern AI. His methodical approach to understanding how different brain regions work together during cognition and sensation resembles solving a puzzle, much as DNNs, with their layers of connected artificial neurons, learn structure from data. His study of the human connectome, which maps the brain’s pathways, has parallels in how DNNs are structured: both systems process information in stages, extracting patterns and refining them at each step. Combining Van Essen’s brain mapping with DNN architectures could therefore connect natural and artificial networks, leading to a better understanding of how we think and to improved AI. Looking ahead, this combination of neuroscience and AI could help create AI systems that work more like brains, deepening our understanding of cognition while extending what machines can do. It can be seen as a partnership between human creativity and machine learning, in which what we learn about the brain helps improve technology and which shows the potential of connecting natural thinking with the digital thinking we are building.

The new map of the human cerebral cortex from Van Essen’s studies has important practical implications for researchers and medical professionals. It helps researchers understand brain disorders like autism, schizophrenia, dementia, and epilepsy, potentially leading to better treatments. This map was created with precise boundaries and a well-designed algorithm, allowing researchers to more accurately compare results from different brain studies. It can also facilitate personalized brain maps for surgeries and treatments, which is especially helpful for neurosurgeons. Additionally, the map identifies specific brain areas for tasks like language processing and sensory perception, benefiting both cognitive neuroscience and interventions for people with impairments. Overall, the findings of Van Essen’s study connect brain research with practical applications in medicine and neuroscience. Specifically, the authors of [180] outlined the cortical areas tied to vision and other senses and presented a database of connectivity patterns. They analyzed the cortex’s hierarchy, focusing on visual and somatosensory/motor systems, and highlighted the interconnectedness and distinct processing streams. The study uncovered visual area functions and suggested that the organization allows for both divergence and convergence. The research deepened our knowledge of primate cortex hierarchy and connectivity, particularly in vision and somatosensory/motor functions.

The authors of [181] explored surface-based visualization for mapping the cerebral cortex’s functional specialization. They employed an atlas to show the link between specialized regions and topographic areas in the visual cortex. The surface-based warping enhanced data mapping, thereby reducing distortions. These methods advanced high-resolution brain mapping, improving our comprehension of cerebral cortex organization and function across species, especially in humans. The authors of [182] revealed that the brain’s activation–deactivation balance during tasks is naturally present, even at rest. Two networks, linked by correlations and anticorrelations, show ongoing brain organization. This intrinsic structure showcases the brain’s dynamic functionality and supports the understanding of coherent neural fluctuations’ impact on brain function. The authors of [183] presented a comprehensive map of the human cerebral cortex’s divisions, using magnetic resonance images and a neuroanatomical method. They identified 97 new areas, confirmed 83 previously known areas, and developed a machine learning classifier for automated identification. This tool enhanced our understanding of cortical structure and function, aiding research in diverse contexts.

Table 11 presents the main articles regarding Van Essen’s mapping, while their characteristics, including their advantages, disadvantages/limitations, and complexities, are given in Appendix D.

Table 11. Methods related to Van Essen’s functional mapping of the brain.

6. Discussion

Machine vision employs numerous contemporary pattern recognition technologies, each with its own merits and demerits. Several recent technologies are presented below, along with their respective advantages and disadvantages.

Deep learning leverages neural networks comprising multiple layers to extract intricate and high-level features from data. Remarkable achievements have been witnessed in diverse pattern recognition tasks through deep learning, such as image classification, object detection, face recognition, and semantic segmentation, among others. Deep learning possesses certain advantages: it can autonomously learn from extensive datasets without substantial human intervention or feature engineering; it can adeptly capture non-linear and hierarchical relationships within the data; and it can reap the benefits of hardware and software advancements like GPUs and frameworks. However, deep learning also entails certain drawbacks: it demands substantial computational resources and time for training and deployment; it may be susceptible to issues of overfitting or underfitting, hinging upon network architecture selection, hyperparameter tuning, regularization techniques, and more; it may lack interpretability and explainability concerning learned features and decisions; and it may prove vulnerable to adversarial attacks or data poisoning.

Challenges and Limitations

Deep learning techniques in robotic vision offer distinct advantages, enabling high-level tasks like image recognition and segmentation, vital for robust robot vision systems. Deep learning algorithms and neural networks find diverse applications, spanning domains such as drug detection and military applications. These methods facilitate the acquisition of data-driven representations, features, and classifiers, thereby enhancing the perceptual capabilities of robotic systems. However, inherent challenges exist in employing deep learning for robotic vision. The limitations in robot hardware and software pose efficiency challenges for vision systems, and deep learning alone may not resolve all issues in industrial robotics. Furthermore, designing deep learning-based vision systems necessitates specific methodologies and tools tailored to the field’s unique demands.