Abstract

With the aging of the population in Japan, the number of bedridden patients who need long-term care is increasing. The Japanese government has been promoting the creation of an environment that enables everyone, including bedridden patients, to enjoy travel, based on the principle of normalization. However, it is difficult for bedridden patients to enjoy the scenery of distant places and to talk with the local people, because traveling to distant places, even through travel agencies, requires support from helpers. Therefore, to enhance their quality of life (QOL), we developed a remote-controlled drone system that is operated using only the eyes. We believe that, with this system, bedridden patients can operate a drone in a distant place, easily view the scenery there through a camera installed on the drone, and talk with the local people. However, we had not previously evaluated whether actual bedridden patients can operate the drone in a distant place, see the scenery, and talk with the local people. In this paper, we present clinical experimental results that verify the effectiveness of this drone system. The findings show that not only subjects with relatively high levels of independence in activities of daily living, but also bedridden subjects, could operate the drone in a distant place with only their eyes and communicate with others.

1. Introduction

Recently, the aging of the population has been progressing in Japan. With this, the number of bedridden patients who need long-term care in hospitals is increasing [1,2]. The Japanese government has been promoting the creation of an environment that enables everyone, including bedridden patients, to enjoy travel [3,4,5], based on the principle of normalization [6,7]. There are already several travel agencies that support travel for all people, including bedridden patients [8,9,10], and there have been publicized cases fulfilling the requests of bedridden patients [11,12,13,14,15]. However, it is difficult for bedridden patients to enjoy the scenery of distant places and to talk with the local people because they need support from helpers to travel to distant places using travel agencies [16,17,18]. On the other hand, the Internet has been used in various fields recently [19,20,21,22,23]. If bedridden patients can operate remote-controlled robots using the Internet in distant places, enjoy the scenery of the distant places, and talk with the local people using the robots, their quality of life (QOL) can be expected to improve through enjoying the virtual trips [24].

Many robots that are controlled using a keyboard or joystick have been developed [25,26]. However, it is difficult for bedridden patients with limb disabilities to operate these robots. Therefore, it has been proposed to use electroencephalography (EEG), electromyography (EMG), respiration, voice, and gaze to control robots [27,28,29,30,31,32,33,34,35,36]. However, using EEG or EMG signals requires electrodes to be attached to the patient’s body, which poses a hygiene problem. Additionally, in the case of EEG, EMG contamination and artifacts can affect the EEG [37]. When respiration is used, a device for measuring respiration needs to be placed in the patient’s mouth, which also poses a hygiene problem. When a voice is used, there is a risk that the robot may malfunction during a conversation with a person. Furthermore, when using a wearable eye-tracking device, such as a head-mounted display with an eye tracker, there is a hygiene problem [38,39]. On the other hand, if a non-wearable eye-tracking device is used, there is no hygiene problem. In addition, there is no risk of the robot malfunctioning when talking with a person using a non-wearable eye-tracking device [40]. Based on the above, we believe that a remote-controlled robot system using a non-wearable eye-tracking device is effective for bedridden patients.

Wheeled or legged robots are often used in robotic systems [41,42]. However, patients may feel stressed when these robots cannot climb stairs or steps. Drones, on the other hand, can fly over stairs and steps, so using drones can be expected to reduce patient stress [43]. Consequently, a remote-controlled drone system using a non-wearable eye-tracking device may be effective [44].

Hansen et al. developed a drone system that uses a keyboard in combination with a non-wearable eye-tracking device [45]. However, patients with limb disabilities have difficulty operating a keyboard. Therefore, it is difficult for patients with limb disabilities to use this system.

Systems using non-wearable eye-tracking devices for patients with limb disabilities include text input systems for communication support and a system for operating an electric wheelchair [46,47,48]. These systems display a keyboard or gaze input buttons on the operation screen. The user can input text by looking at the keys of the keyboard and move the wheelchair by looking at the input buttons. However, while operating the keyboard or buttons, it is difficult for the user to look at the other parts of the screen. Therefore, drone systems that use an on-screen keyboard or input buttons have the following problem: when users want a better view of a target, they must take their eyes off the target, operate the drone to obtain a better view, and check the target again; if the target is still difficult to observe, they must operate the drone again. In these systems, users must repeatedly look at the target, look away from it, and operate the drone.

To solve the above problem, Kogawa et al. developed a remote-controlled drone system using a non-wearable eye-tracking device [43]. By using this drone system’s control screen, the drone moves so that the patient can observe the target near the center of the screen simply by continuously looking at the target the patient wants to observe. This system is expected to allow patients to operate the drone and observe targets more easily than the system using the above-mentioned keyboard or buttons. Furthermore, this system takes into account human eye movements (microsaccades) and operability to enable agile operation, assuming that there is a time lag dependent on the Internet environment. However, the effectiveness of the developed remote-controlled drone system has not been verified through experiments, such as whether bedridden patients can actually operate the system’s drone in a distant place using only their eyes, whether bedridden patients can enjoy the scenery of the distant place, and whether bedridden patients can communicate with the local people.

This paper aims to verify the effectiveness of the remote-controlled drone system (especially the control screen) by conducting clinical experiments with bedridden patients who had been hospitalized for a long period of time. We first briefly describe the remote-controlled drone system we propose. Next, we present the results of clinical experiments conducted with actual patients using the drone system. Finally, we verify the effectiveness of the remote-controlled drone system based on the clinical experimental results.

2. Materials and Methods

2.1. Drone System

Although a different drone is used, the drone system used in this study is essentially the same as the drone system proposed by Kogawa et al. [43]. In the following, we briefly explain the drone system used in this paper (for details, see [43]). Among the drone system’s components, the control screen and the joystick control device were originally developed for this research.

2.1.1. Overview

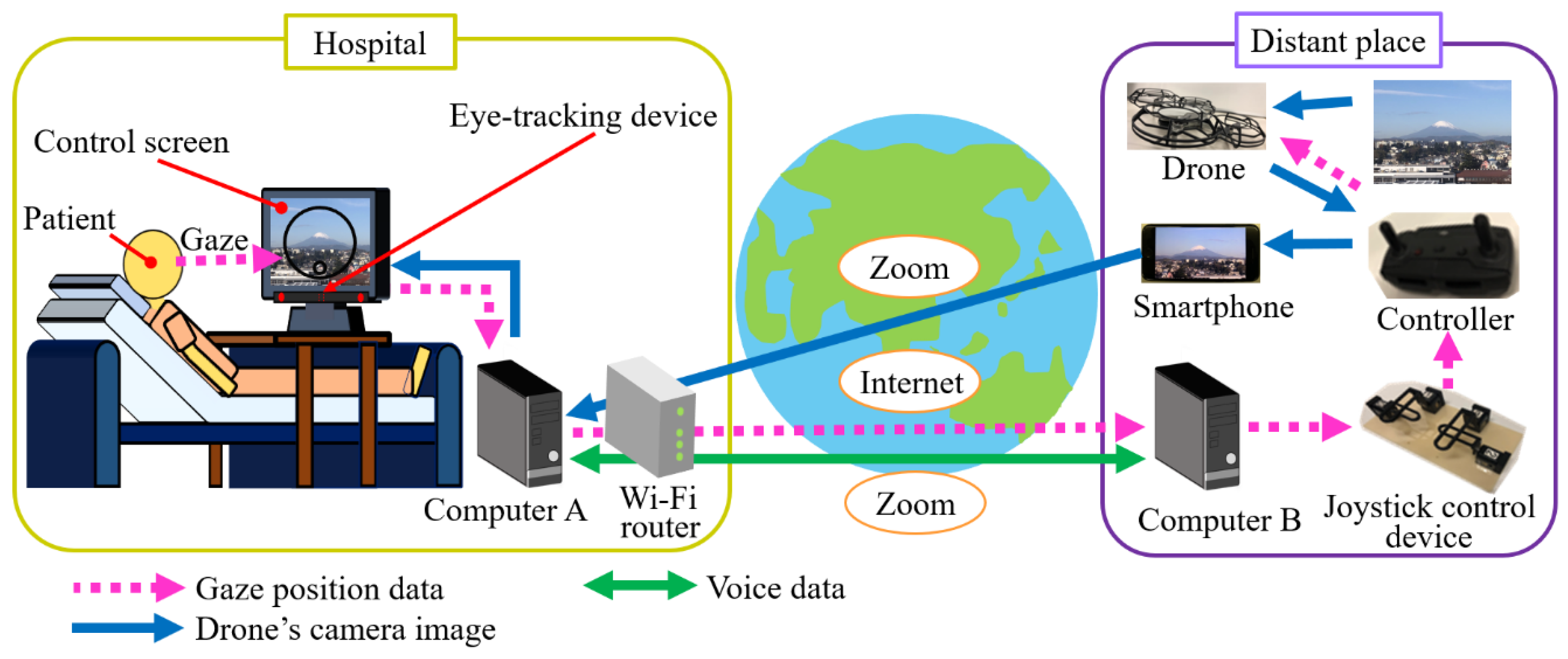

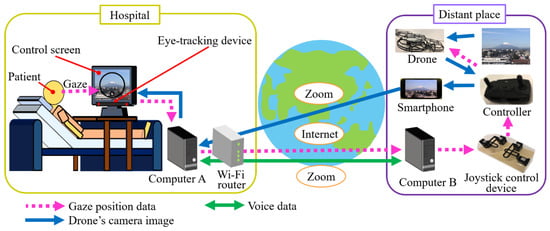

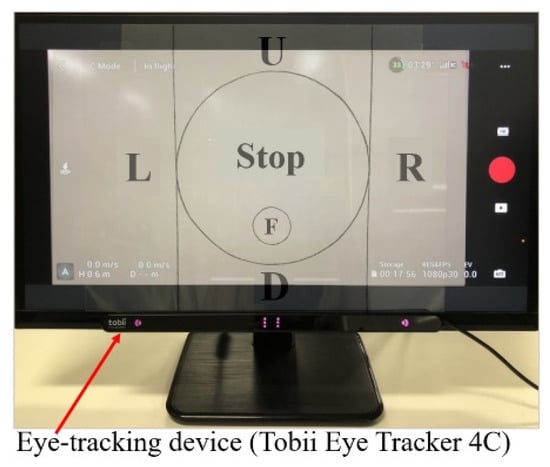

Figure 1 shows the drone system. The system is composed of an eye-tracking device, a control screen, Computer A, Computer B, a Wi-Fi router, the Internet, a joystick control device, a drone, a drone controller, a smartphone, and the web conferencing service Zoom. The eye-tracking device is attached to the control screen.

Figure 1.

Remote-controlled drone system.

Images from the drone’s onboard camera are displayed on the control screen via the drone controller, the smartphone, Zoom, the Wi-Fi router, and Computer A. The patient can view the scenery in a distant place through the camera images displayed on the control screen. The patient’s gaze position is detected by the eye-tracking device.

The gaze position data are sent to Computer B in the distant place via Computer A, the Wi-Fi router, and the Internet. Based on the gaze position data, Computer B sends operational commands to the joystick control device. The joystick control device physically moves the two joysticks of the drone controller according to the operational commands, so that the drone is operated based on the gaze position data. The joystick control device uses backdrivable motors so that the joysticks can be moved easily after the motors are switched off. Thus, even if Computer B or another component fails, a person near the drone controller can safely operate and land the drone by switching off the motors and operating the joysticks by hand.

The patient and the local people can talk via Zoom using a microphone and a speaker attached to Computer A and a microphone and a speaker attached to Computer B, respectively.

In order to reduce damage to the environment in case the drone crashes during experiments, we used the Mavic Mini (Da-Jiang Innovations Science and Technology Co., Ltd., Guangdong, China), which weighs 199 g and is lighter than the drone used by Kogawa et al. [43]. The joystick control device was fabricated by referring to [43]. We used the Tobii Eye Tracker 4C (Tobii AB, Stockholm, Sweden) as the eye-tracking device.

2.1.2. Operation Method and Control Screen

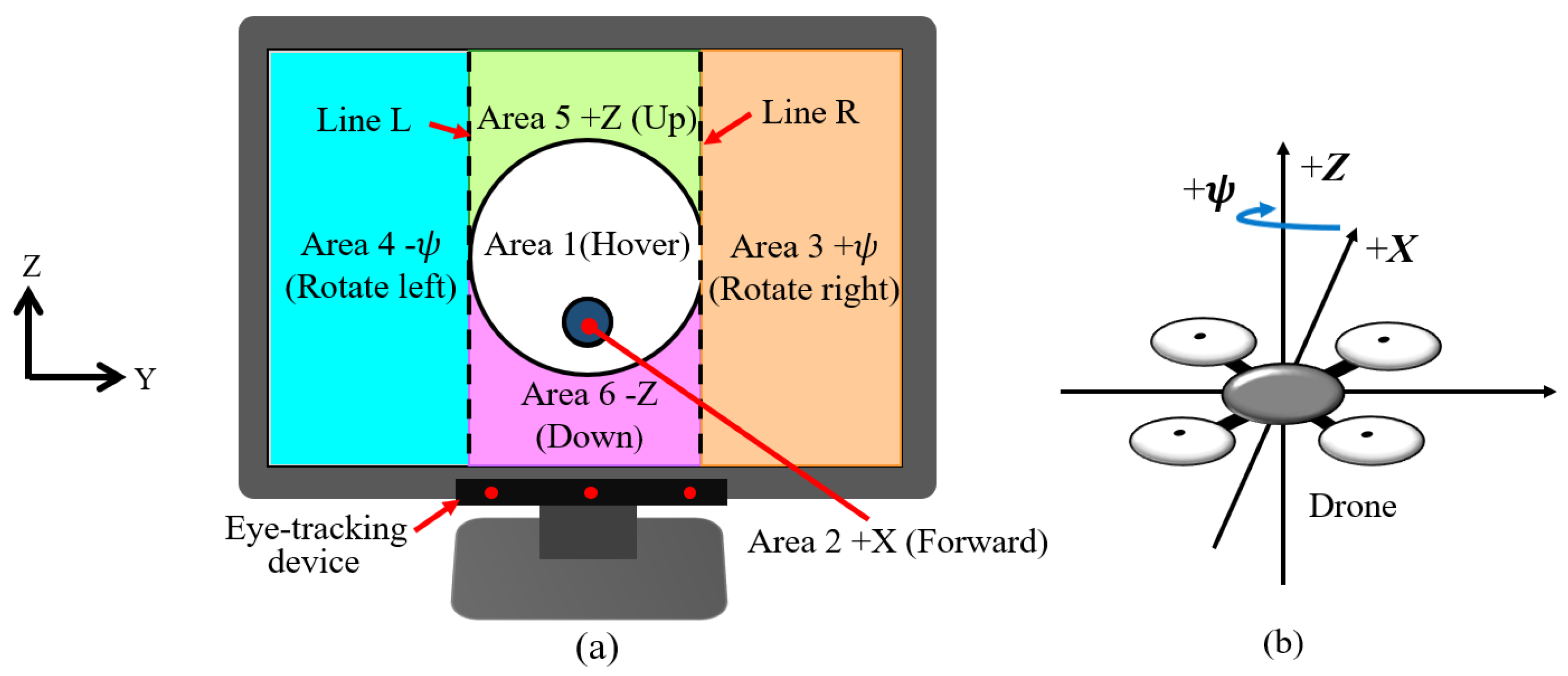

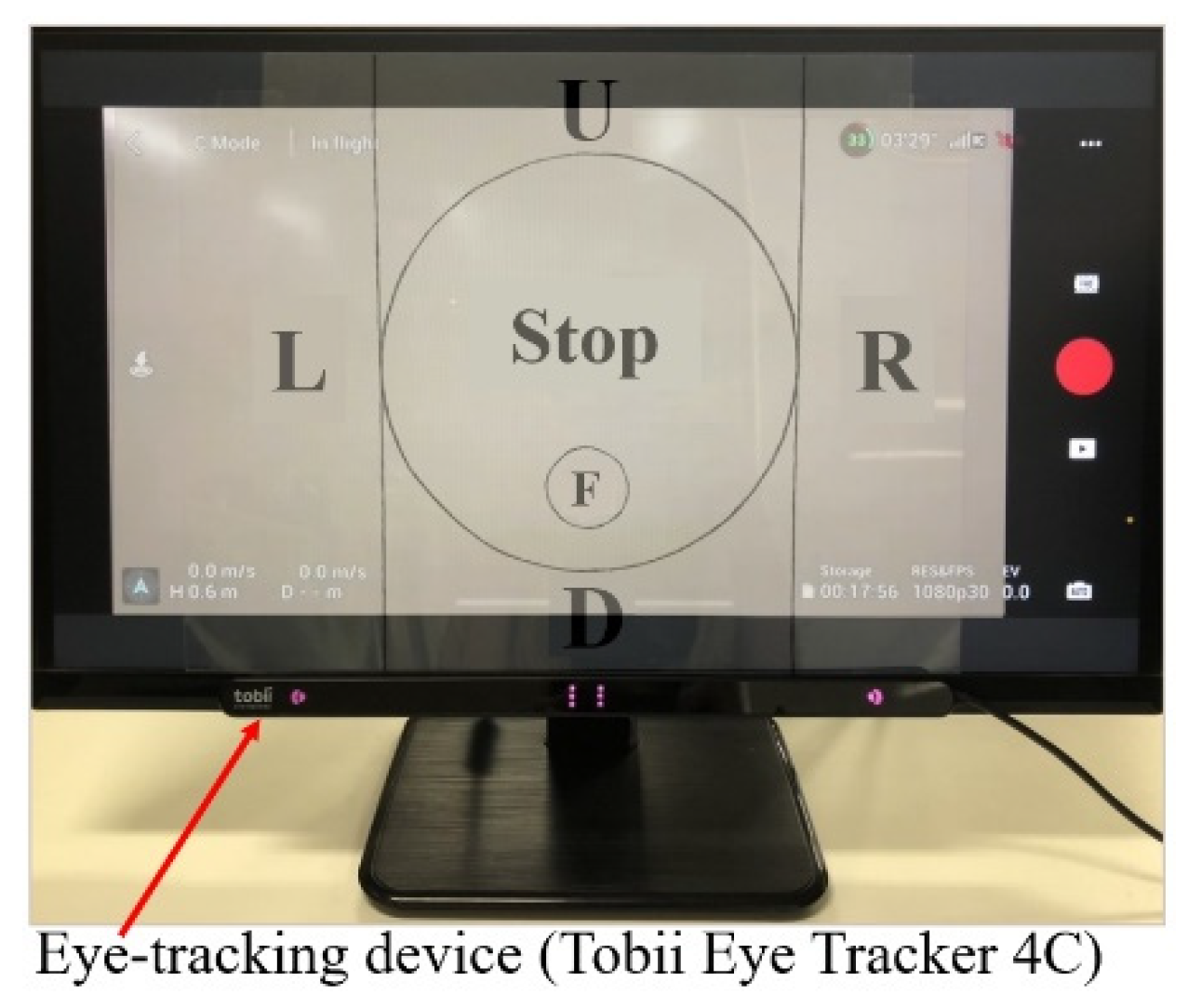

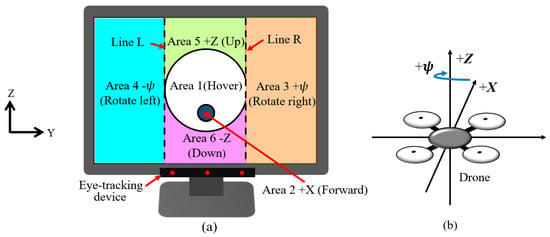

Figure 2 shows the relationship between the control screen used in the drone system and the coordinates of the drone in flight. The control screen was divided into six areas. Area 1 is a circular area located in the center of the control screen and excludes Area 2. The patient basically observes a target of interest within Area 1. Area 2 is a circular area located inside Area 1 and below the center of the control screen. The lines, L and R, are tangential to Area 1 in the Z direction. Area 3 is the area to the right of line R and Area 4 is the area to the left of line L. Area 5 is above Area 1 and Area 6 is below Area 1.

Figure 2.

Control screen and the coordinates around the drone. (a) Control screen; (b) Coordinate system.

The drone is operated as follows:

While the patient is looking at Area 1, the drone hovers.

While the patient is looking at Area 2, the drone moves forward at a preset constant translational velocity.

While the patient is looking at Area 3, the drone rotates right at a preset constant rotational velocity.

While the patient is looking at Area 4, the drone rotates left at a preset constant rotational velocity.

While the patient is looking at Area 5, the drone ascends at a preset constant translational velocity.

While the patient is looking at Area 6, the drone descends at a preset constant translational velocity.

If the patient keeps looking at a target on the control screen, the drone moves so that the target is closer to Area 1 and the patient can easily see the target in Area 1. For example, if the patient keeps looking at the target in the upper left of the screen, the drone first rotates left, then moves up, and, finally, the patient can see the target in Area 1.
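The area-based operation described above can be illustrated with a minimal sketch. It is not the authors’ Visual C++ implementation: the pixel dimensions, the radius of Area 1, and the names `ScreenLayout`, `classify_gaze`, and `command_for` are assumptions introduced for illustration, and the vertical position of Area 2 is assumed to be such that its bottom edge lies one Area 2 radius above the bottom of Area 1.

```python
import math
from dataclasses import dataclass

# Commands per area, following the operation list in Section 2.1.2.
COMMANDS = {1: "hover", 2: "forward", 3: "rotate_right",
            4: "rotate_left", 5: "ascend", 6: "descend"}


@dataclass(frozen=True)
class ScreenLayout:
    """Hypothetical screen geometry in pixels (y grows downward)."""
    width: float
    height: float
    r1: float  # radius of Area 1 (assumed value)
    r2: float  # radius of Area 2, i.e. the microsaccade radius r

    @property
    def center(self):
        return self.width / 2.0, self.height / 2.0


def classify_gaze(layout, x, y):
    """Return the area number (1-6) for a gaze point, or 0 if off screen."""
    if not (0 <= x <= layout.width and 0 <= y <= layout.height):
        return 0
    cx, cy = layout.center
    # Assumed placement: bottom edge of Area 2 lies r2 above the bottom of Area 1.
    area2_cy = cy + layout.r1 - 2.0 * layout.r2
    if math.hypot(x - cx, y - area2_cy) <= layout.r2:
        return 2
    if math.hypot(x - cx, y - cy) <= layout.r1:
        return 1
    if x > cx + layout.r1:   # right of tangent line R
        return 3
    if x < cx - layout.r1:   # left of tangent line L
        return 4
    return 5 if y < cy else 6  # between L and R, above or below Area 1


def command_for(layout, x, y):
    """Map a gaze point to a drone command; off-screen gaze means hover."""
    return COMMANDS.get(classify_gaze(layout, x, y), "hover")


# Hypothetical 1920 x 1080 screen used only for this illustration.
layout = ScreenLayout(width=1920, height=1080, r1=300, r2=60)
```

With this layout, a gaze point in the upper left of the screen falls in Area 4 (rotate left); once the target has moved between lines L and R but is still above Area 1, the same gaze falls in Area 5 (ascend), which reproduces the sequence in the example above.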

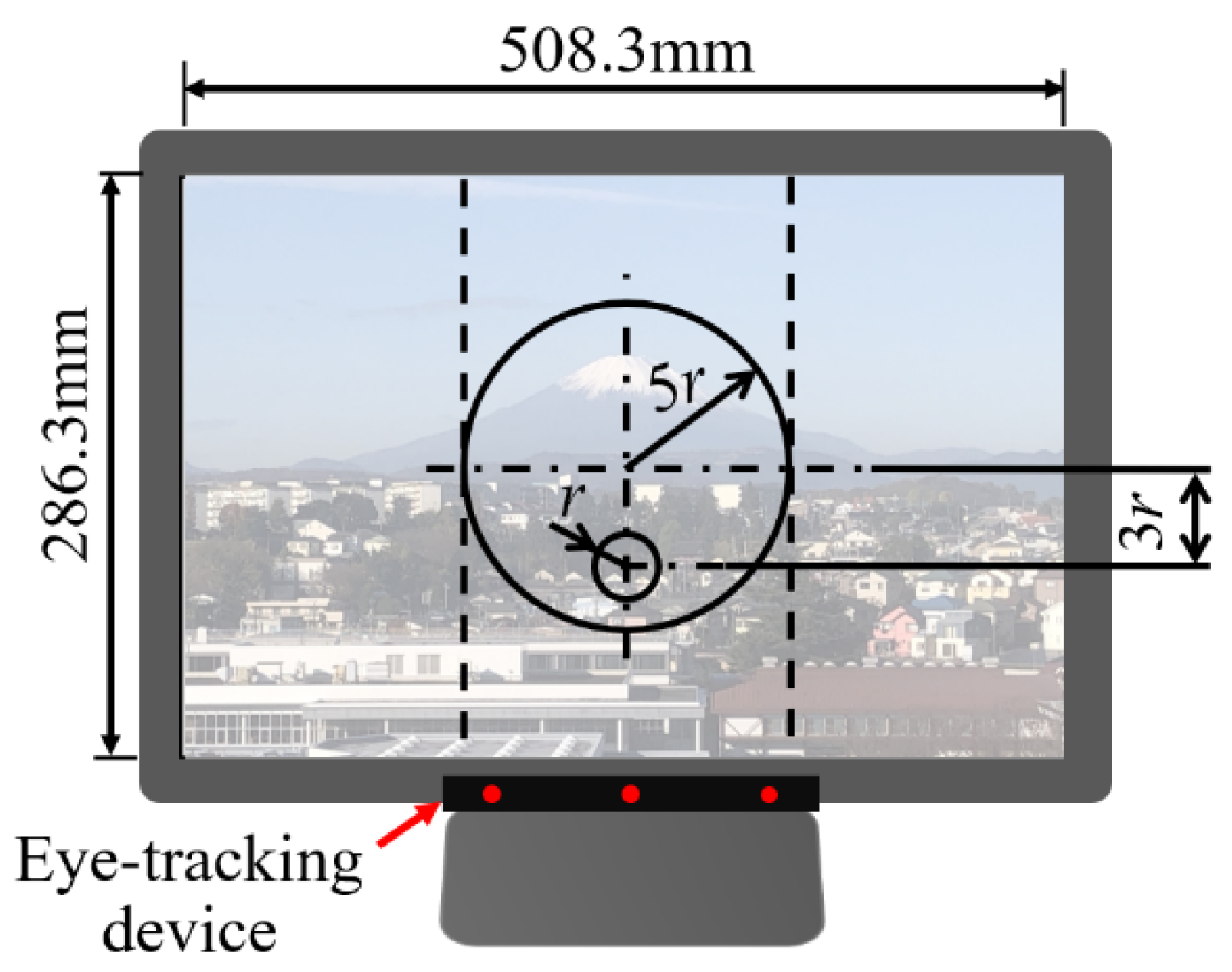

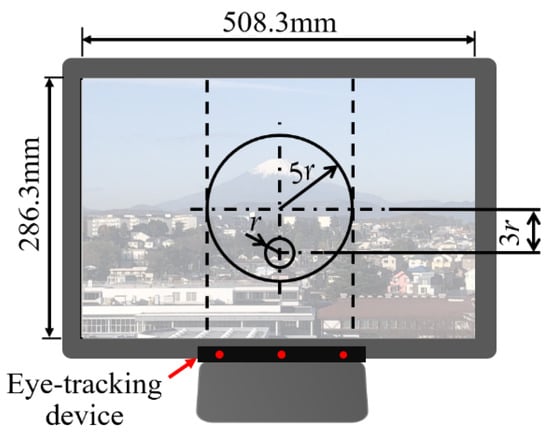

Kogawa et al. [43] proposed a method for designing the size of Areas 1 and 2 and the position of Area 2 in this control screen, taking into account human eye movements (microsaccades) and operability. The control screen designed, based on their design method, is shown in Figure 3.

Figure 3.

Control screen designed by Kogawa et al. [43].

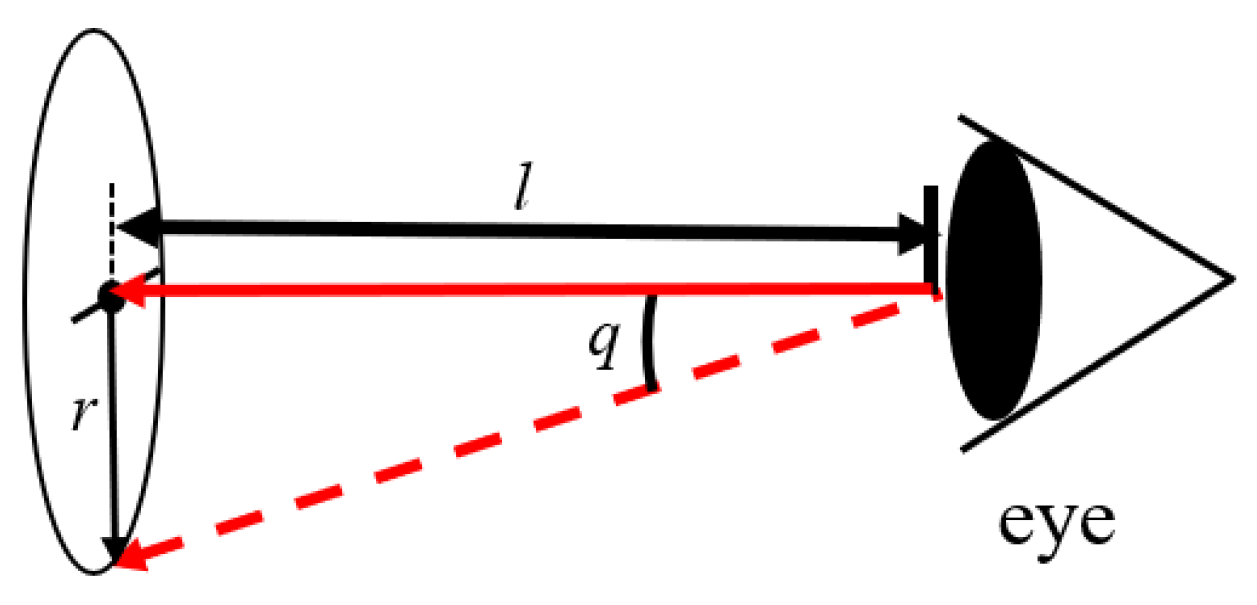

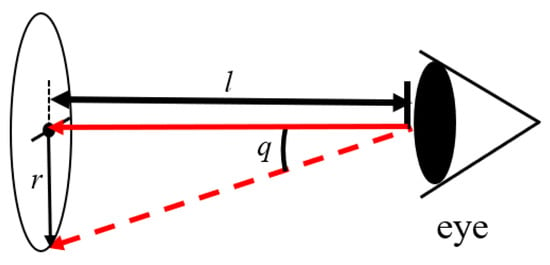

When humans look at one point, the eyeballs are always moving slightly. These eye movements are called microsaccades [49]. The amplitude of the eye movements is approximately 1 to 2 degrees (frequency: 1 to 3 Hz). Since microsaccades are involuntary movements, it is difficult for humans to consciously stop them. Therefore, as shown in Figure 4, when the patient looks at one point on the control screen, the patient’s gaze position may change within a circle of radius r because of microsaccades. The radius r is expressed by

$$r = l \tan q,$$

where l is the distance between the control screen and the patient’s eyes and q is the amplitude of the microsaccades.
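As a numerical illustration of the relation r = l tan q, the following sketch evaluates the radius for the two ends of the stated amplitude range. The viewing distance of 600 mm is an assumed value chosen for illustration, not one reported in this paper, and the function name is hypothetical.

```python
import math


def microsaccade_radius(distance_mm, amplitude_deg):
    """Radius r = l * tan(q) of the gaze scatter on the screen, in mm."""
    return distance_mm * math.tan(math.radians(amplitude_deg))


# Assumed viewing distance l = 600 mm; q = 1 and 2 degrees from the text.
r_small = microsaccade_radius(600.0, 1.0)  # about 10.5 mm
r_large = microsaccade_radius(600.0, 2.0)  # about 21.0 mm
```

Under this assumption, the gaze scatter would have a radius of roughly 1 to 2 cm on the screen, which indicates the order of magnitude that the radius of Area 2 has to cover.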

Figure 4.

Errors caused by microsaccades.

As shown in Figure 3, the radius of Area 2 (see Figure 2) is r. Therefore, if the patient gazes at the center of Area 2, the gaze position’s change caused by the microsaccades is within Area 2 and the drone moves forward. Even if the patient looks at the bottom edge of Area 2 to move the drone forward, the patient’s gaze does not enter Area 6 because the distance from the bottom edge of Area 2 to Area 6 is r. At this time, the patient’s gaze could enter Area 1. However, when the gaze enters Area 1, the drone simply hovers. Therefore, the patient can look back at the center of Area 2 to advance the drone again. In an environment where Internet time lags are expected, we must prevent the drone from malfunctioning due to microsaccades, because correcting the malfunction is difficult due to the time lags. Therefore, using this control screen designed to consider the microsaccades was important in this research.

Moreover, considering only the microsaccades, Area 1 could be made larger than Area 1 in Figure 3. However, the larger Area 1 becomes, the more the patients have to move their gaze to look at Areas 3 to 6 from the center of the control screen. Therefore, in order for the patients to maneuver the drone agilely, Area 1 should be as small as possible. In an environment where Internet time lags are expected, operating the drone agilely is also important. Therefore, the control screen shown in Figure 3 was designed to consider both the microsaccades and the drone’s operability.

In addition, because the areas for moving the drone are arranged in contact with Area 1 and the video images remain visible in every area of this control screen, the patients can compensate for Internet time lag: they can move their gaze from a movement area back to Area 1 early and wait there for the target to approach Area 1.

As shown in [43], functions that allow the patients to take off and land the drone could be added. However, in this paper, to focus on the effectiveness of the control screen when the drone is in flight, we used the control screen shown in Figure 3, and takeoff and landing were performed by experiment staff near the drone controller in the following experiments. Additionally, in the following experiments, the drone was set to hover if the Internet connection was lost for 50 ms or the patient looked outside the control screen.
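The hover fallback described above can be sketched as a small guard applied to each requested command. This is only an illustration under stated assumptions: the paper does not say where or how the check is implemented, so the function `safe_command`, its parameters, and the use of the time since the last received gaze packet as the disconnection criterion are all hypothetical.

```python
HOVER = "hover"
LINK_TIMEOUT_S = 0.050  # 50 ms, the disconnection threshold given in the text


def safe_command(requested, gaze_on_screen, last_packet_time_s, now_s,
                 timeout_s=LINK_TIMEOUT_S):
    """Return the requested command, or hover when a fallback condition holds.

    requested          -- command derived from the gaze area (e.g. "forward")
    gaze_on_screen     -- False when the gaze is outside the control screen
    last_packet_time_s -- arrival time of the last gaze packet (assumed signal)
    now_s              -- current time on the same clock, in seconds
    """
    if not gaze_on_screen:
        return HOVER
    if now_s - last_packet_time_s >= timeout_s:
        return HOVER
    return requested
```

Defaulting to hover in both cases keeps the drone stationary until a valid gaze command arrives again, which matches the behavior of Area 1.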

We programmed the drone control for the control screen shown in Figure 3 in Visual C++. If the drone only needs to rotate right and left for the patient to enjoy the scenery of a distant place at a constant height, Areas 2, 5, and 6 can be set to make the drone hover. In this case, the drone cannot move forward, up, or down, allowing the patient to concentrate more on enjoying the scenery of the distant place and the conversation with local people. In the following indoor experiment, we used a drone that could move forward, rotate right and left, ascend, and descend, to focus on whether the patient could operate the drone. In the following outdoor experiment, we used a drone that could only rotate right and left, to focus on whether the patient could rotate the drone to the right and the left, enjoy the scenery, such as Mt. Fuji, and enjoy talking with experiment staff in a distant place.
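The two configurations can be expressed as area-to-command tables, with the rotation-only configuration derived from the full one by overriding Areas 2, 5, and 6. The sketch below is in Python rather than the Visual C++ used by the authors, and the names `FULL_MODE` and `with_hover_areas` are hypothetical.

```python
# Full configuration (indoor experiment): every area has its own command.
FULL_MODE = {1: "hover", 2: "forward", 3: "rotate_right",
             4: "rotate_left", 5: "ascend", 6: "descend"}


def with_hover_areas(mapping, hover_areas):
    """Return a copy of the mapping in which the given areas command hover."""
    return {area: ("hover" if area in hover_areas else command)
            for area, command in mapping.items()}


# Rotation-only configuration (outdoor experiment): Areas 2, 5, 6 hover.
ROTATION_ONLY_MODE = with_hover_areas(FULL_MODE, {2, 5, 6})
```

Deriving one table from the other leaves the screen layout unchanged, so a patient who has learned the full layout sees the same areas in the rotation-only configuration.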

2.2. Clinical Experiment

In this section, we describe a clinical experiment to verify the effectiveness of the above-mentioned drone system. In the following, we call the experiment conducted with patients whose level of independence in activities of daily living (ADL) was higher than that of bedridden patients the “preliminary experiment,” and the experiment conducted with bedridden patients the “principal experiment.” Both the preliminary and the principal experiment comprised an indoor experiment and an outdoor experiment. In the indoor experiment, we verified whether the patients could make the drone in a distant place rotate left and right, ascend, descend, and move forward. In the outdoor experiment, we focused on whether patients could enjoy the scenery of a distant place and communicate with the experiment staff in the distant place. As described in the next section, the clinical experiment required intermediaries [24] to assist patients with disabilities in performing the experiment. Therefore, the following experiments were conducted in collaboration with intermediaries positioned at the patient’s side.

2.2.1. Subjects

All subjects who participated in the following preliminary and principal experiments were long-term hospitalized patients, operating the drone system for the first time. The details of the patients are described below.

- (1) Preliminary Experiment

The objectives of the preliminary experiment were the following: (i) to verify whether patients who were not bedridden, but could go out using their own legs or go out in a wheelchair, and were hospitalized for a long period of time, could operate the drone in a distant place with only their eyes before the principal experiment; and (ii) to verify whether these patients could enjoy the scenery and communicate with people in the distant place using the drone system.

Four patients (one female, three males, aged 64.8 ± 10.9 years) participated in this experiment. All patients had been hospitalized for more than 5 years.

The patients’ levels, based on the degree to which elderly disabled people can manage their daily lives as defined by the Ministry of Health, Labor and Welfare of Japan, were as follows [50]:

One patient’s level was J1—The patient had a disability, but could go out on his/her own or use public transportation.

Two patients were level J2—The patients had disabilities, but could go out on their own around the neighborhood, but could not use public transportation.

One patient’s level was B1—The patient required some assistance for indoor living and was mainly bedridden during the day, but could maintain a sitting position, transfer to a wheelchair, eat, and defecate away from the bed.

- (2) Principal Experiment

The objectives of the principal experiment were as follows: (i) to verify whether bedridden patients who had been in hospital for a long period of time could operate the drone in a distant place with only their eyes; and (ii) to verify whether these patients could enjoy the scenery and communicate with people in the distant place using the drone system.

Two female patients, aged 62 and 85 years, participated in this experiment. The patients’ levels, based on the degree to which elderly disabled people can manage their daily lives, were C1. That is, they were bedridden.

C1 meant that the patient spent all day in bed and required assistance with toileting, eating, and dressing.

They had also been hospitalized for more than 5 years.

Table 1 shows the characteristics of the above patients.

Table 1.

Characteristics of patients.

2.2.2. Methods of Indoor Experiment

In both the preliminary and principal experiments, the experiment staff side was located at Tokai University in Kanagawa Prefecture, and the patient side was located in a hospital in Kagawa Prefecture. From the hospital, the patients remotely controlled the drone in a gymnasium at Tokai University using only their eyes. The patients could talk with the experiment staff using a microphone and a speaker attached to Computer A. The patient side (hospital) connected Computer A to the Internet using DISM WiMAX2+ Package Education New 1 Year Version (Daiwabo Information System Co., Ltd., Osaka, Japan) as the Wi-Fi router shown in Figure 1. The drone’s preset rotational velocity and translational velocity were set to 7.5°/s and 0.1 m/s, respectively.

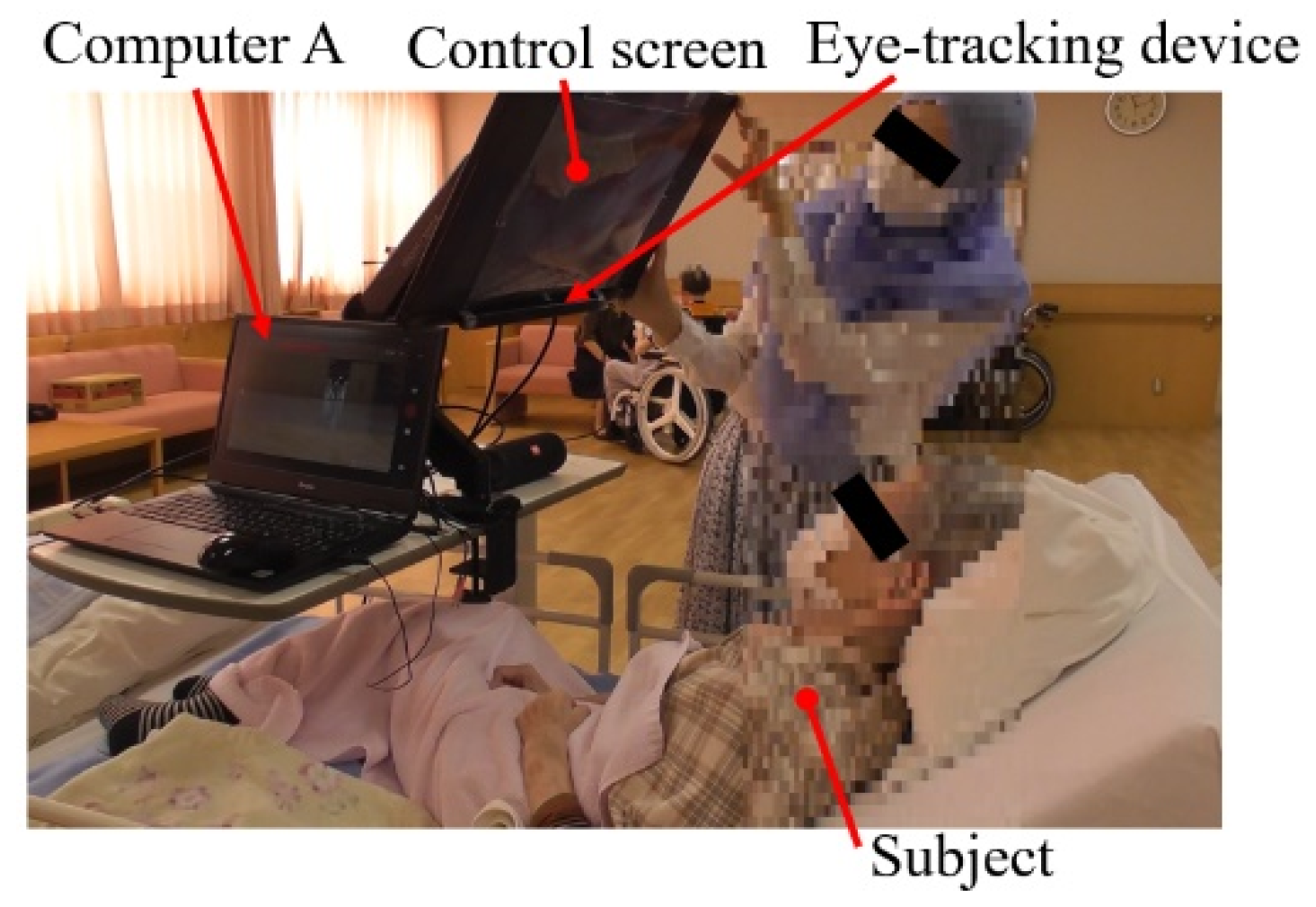

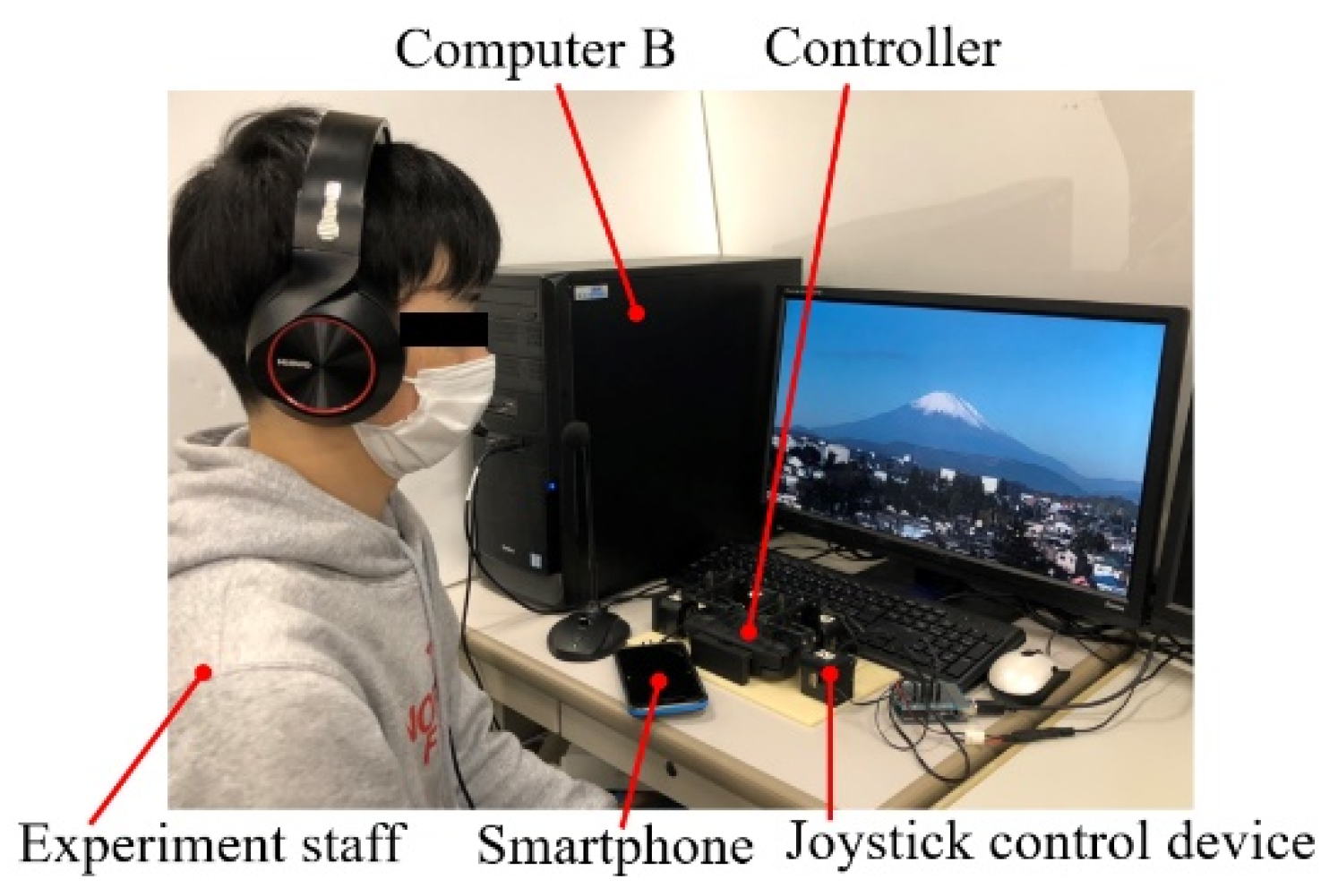

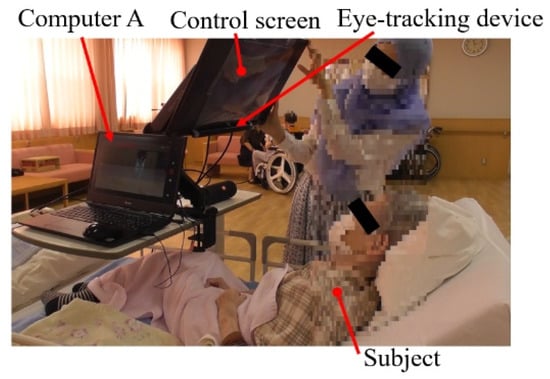

Figure 5 shows the patient’s side, Figure 6 shows the experiment staff’s side, and Figure 7 shows the control screen used. The control screen was marked with Japanese characters, such as “Stop,” in each area, to prevent the patients from forgetting the control method. In order to minimize the load on the system as much as possible, the boundaries of each area and the letters were written on a transparent film, which was attached to the control screen.

Figure 5.

Patient (Hospital).

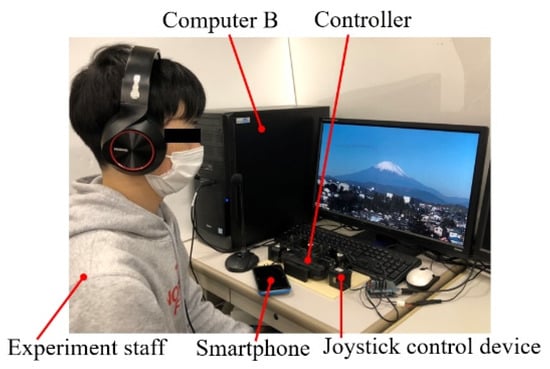

Figure 6.

Experiment staff (Tokai University).

Figure 7.

Control screen used in this experiment.

The experimental procedure was as follows:

First, we instructed the patients on the control method of the drone and the flight course. Next, the eye-tracking device was calibrated for each patient, and then we started the experiment to control the drone using only eye movements. After the experiment, the patients were asked to complete a questionnaire about their evaluation of the system.

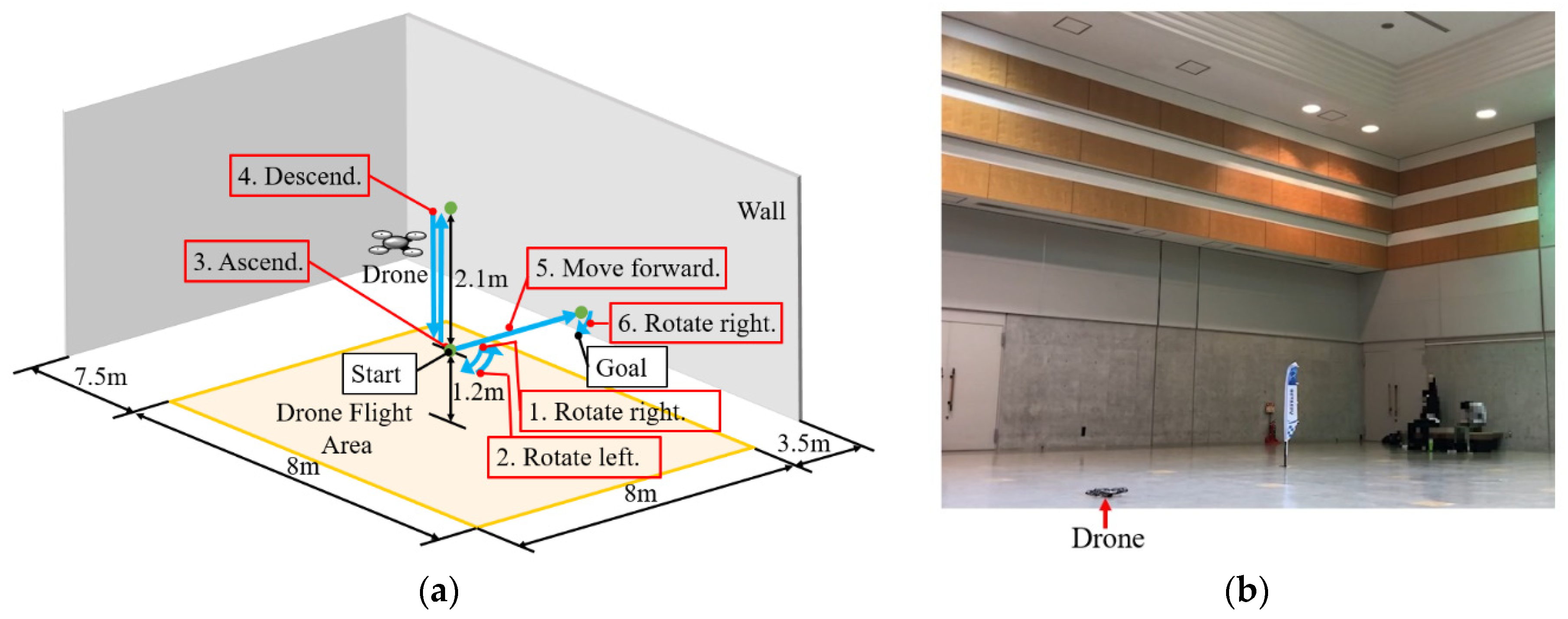

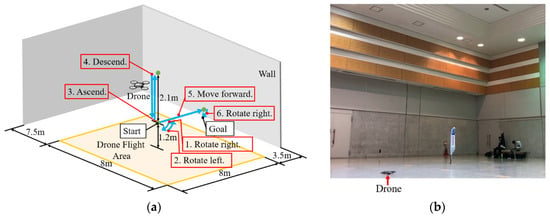

Figure 8 shows the flight course in this indoor experiment. The patients operated the drone according to the following procedure, based on instructions from the experiment staff:

Figure 8.

Flight course in the indoor experiment. (a) Flight course; (b) Real experimental set-up in a gymnasium at Tokai University.

- (i). The drone took off and rose to a height of 1.2 m, controlled by the experiment staff. After that, the patient looked at Area 3, according to the experiment staff’s instructions.

- (ii). After rotating the drone to the right at the preset rotational velocity, the patient looked at Area 4, according to the experiment staff’s instructions.

- (iii). After rotating the drone to the left at the preset rotational velocity, the patient looked at Area 5, according to the experiment staff’s instructions.

- (iv). After ascending the drone at the preset translational velocity, the patient looked at Area 6, according to the experiment staff’s instructions.

- (v). After descending the drone at the preset translational velocity, the patient looked at Area 2, according to the experiment staff’s instructions.

- (vi). After moving the drone forward at the preset translational velocity, the patient looked at Area 3, according to the experiment staff’s instructions.

- (vii). After the patient rotated the drone to the right and saw students holding flower bouquets, the patient looked at Area 1, according to the experiment staff’s instructions.

- (viii). After the patient hovered the drone and saw the students holding flower bouquets for a moment, the drone was landed by the experiment staff.

In the indoor experiment, for safety, the experiment staff and the students holding flower bouquets were kept away from the drone flight area to prevent contact between them and the drone.

2.2.3. Methods of Outdoor Experiment

In this experiment, as in the indoor experiment, the experiment staff side was located at Tokai University in Kanagawa Prefecture, and the patient side was located in the hospital in Kagawa Prefecture, in both the preliminary and principal experiments. From the hospital, the patients remotely controlled the drone on the roof of a Tokai University building using only their eyes. The patients could talk with the experiment staff using a microphone and a speaker attached to Computer A. As described in Section 2.1.2, the drone was set to hover when the subjects looked at Areas 2, 5, and 6. The patient side (hospital) connected Computer A to the Internet using SoftBank E5383 unlimited (Huawei Technologies Japan Co., Ltd., Tokyo, Japan) as the Wi-Fi router shown in Figure 1. The drone’s preset rotational velocity and translational velocity were the same as in the indoor experiment. The patient’s side, the experiment staff’s side, and the control screen used were as shown in Figure 5, Figure 6 and Figure 7, respectively.

The experimental procedure was the same as the indoor experiment. That is, first, the drone operation method and flight course were explained to the patients. Then, the eye-tracking device was calibrated for each patient, and the experiment began. After the experiment, the patients answered a questionnaire about the drone system.

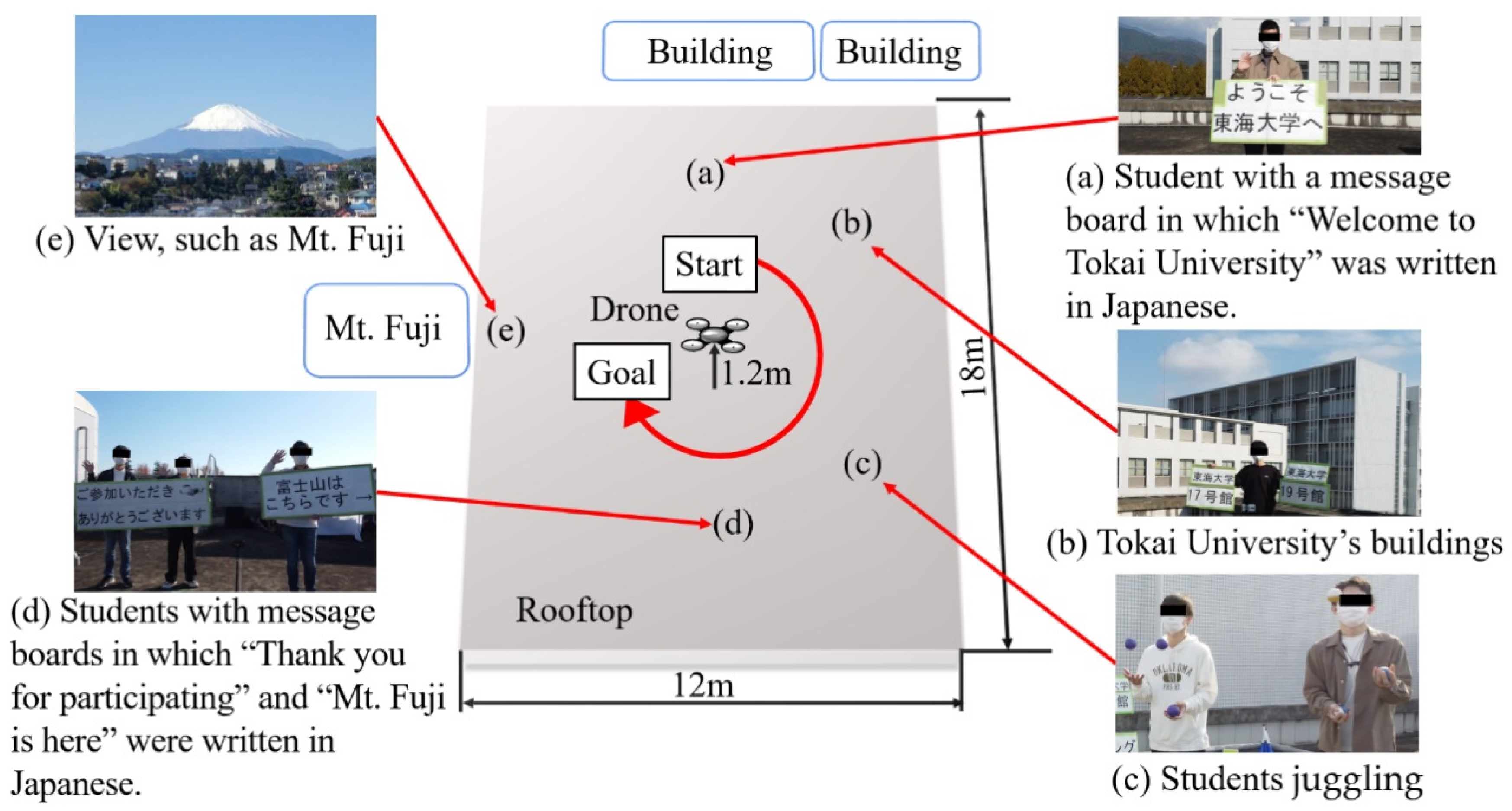

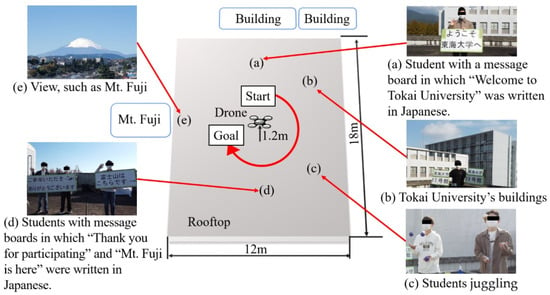

Figure 9 shows the flight course in the outdoor experiment. The patients operated the drone according to the following procedure:

Figure 9.

Flight course in the outdoor experiment.

- (i). After the drone took off and rose to a height of 1.2 m, controlled by the experiment staff, the patient saw a student with a message board on which “Welcome to Tokai University” was written in Japanese (a), and then the patient rotated the drone to the right, according to the experiment staff’s instructions.

- (ii). After this, the patient saw Tokai University’s buildings (b), students juggling (c), students holding message boards on which “Thank you for participating” and “Mt. Fuji is here” were written in Japanese (d), and students holding flowers and waving their hands, and the view of Mt. Fuji (e). Finally, the experiment staff landed the drone.

If there was anything the patients wanted to see, the patients could carefully observe their target by rotating the drone left or right or hovering it in place.

In this outdoor experiment, for safety, we attached a stainless-steel wire to the drone, and the students and the experiment staff were approximately 4 m or more away from the drone in order to prevent contact between them and the drone.

3. Results

All the patients successfully flew the drone in the indoor experiment and the outdoor experiment. The results are shown in this section.

3.1. Indoor Experiment

3.1.1. Preliminary Experiment

Table 2 shows the results of the questionnaire in the preliminary indoor experiment. In the questionnaire, questions (1) to (4) mainly covered the drone flight, while questions (5) and (6) mostly covered whether the patients enjoyed the experiment. The right side of Table 2 shows the patients’ answers to each question. Table 3 shows the patients’ flight times in the preliminary indoor experiment. Table 4 shows, for each patient’s flight, the maximum, average, and minimum values of the time lag between the moment the patient looked at an area and the moment the video on the control screen responded.
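The maximum, average, and minimum time lags reported per flight can be computed from paired timestamps as in the following sketch. The timestamps are made-up values for illustration only and are not data from this study; the function name `lag_summary` and the pairing of one gaze event with one video response are assumptions.

```python
from statistics import mean


def lag_summary(gaze_times_s, video_times_s):
    """Summarize time lags between gaze events and the video responses.

    gaze_times_s  -- times at which the patient started looking at an area
    video_times_s -- times at which the control screen video responded
    """
    if len(gaze_times_s) != len(video_times_s):
        raise ValueError("each gaze event needs exactly one video response")
    lags = [video - gaze for gaze, video in zip(gaze_times_s, video_times_s)]
    return {"max": max(lags), "mean": mean(lags), "min": min(lags)}


# Hypothetical timestamps in seconds (not measured values).
summary = lag_summary([0.0, 10.0, 25.0], [1.5, 12.0, 27.5])
```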

Table 2.

Questionnaire results in the preliminary indoor experiment.

Table 3.

Results of the patients’ flight times in the preliminary indoor experiment.

Table 4.

Results of time lags in the preliminary indoor experiment.

3.1.2. Principal Experiment

Similar to Table 2, Table 5 shows the results of the questionnaire in the principal indoor experiment. Table 6 shows the patients’ flight times in the principal indoor experiment. Similar to Table 4, Table 7 shows the maximum value, the average value, and the minimum value of the time lags in each patient’s flight.

Table 5.

Questionnaire results in the principal indoor experiment.

Table 6.

Results of the patients’ flight times in the principal indoor experiment.

Table 7.

Results of time lags in the principal indoor experiment.

3.2. Outdoor Experiment

3.2.1. Preliminary Experiment

Table 8 shows the results of the questionnaire in the preliminary outdoor experiment. In the outdoor experiment, we focused on whether the patients enjoyed the experiment. Therefore, in Table 8, we show the patient’s answers to questions (5) and (6) of Table 2. Table 9 shows the patients’ flight times in the preliminary outdoor experiment. Furthermore, similar to Table 4, Table 10 shows the maximum value, the average value, and the minimum value of the time lags in each patient’s flight. Moreover, Table 11 shows the communication between the patients and the experiment staff, which we confirmed, based on the video taken during this preliminary outdoor experiment.

Table 8.

Questionnaire results in the preliminary outdoor experiment.

Table 9.

Results of the patients’ flight times in the preliminary outdoor experiment.

Table 10.

Results of time lags in the preliminary outdoor experiment.

Table 11.

Communication between the experiment staff and the patients in the preliminary outdoor experiment.

3.2.2. Principal Experiment

Similar to Table 8, Table 12 shows the results of the questionnaire in the principal outdoor experiment. Table 13 shows the patients’ flight times in the principal outdoor experiment. Furthermore, similar to Table 10, Table 14 shows the maximum value, the average value, and the minimum value of the time lags in each patient’s flight. Additionally, similar to Table 11, Table 15 shows the communication between the patients and the experiment staff, which we confirmed, based on the video taken during this principal outdoor experiment.

Table 12.

Questionnaire results in the principal outdoor experiment.

Table 13.

Results of the patients’ flight times in the principal outdoor experiment.

Table 14.

Results of time lags in the principal outdoor experiment.

Table 15.

Communication between the experiment staff and the patients in the principal outdoor experiment.

4. Discussion

4.1. Could the Patients Operate the Drone Remotely?

As mentioned above, all patients successfully flew the drone, as instructed by the experiment staff, in the indoor and outdoor experiments. The patients’ flight times varied in both experiments, as shown in Table 3, Table 6, Table 9 and Table 13. No time limit was set for the indoor and outdoor experiments, because the aim was to verify whether the patients could enjoy the drone system, the scenery of a distant place, and the communication with the experiment staff in a distant place. However, the variations were not very large, and mainly depended on whether each patient took time to look at what he/she wanted to see.

In the preliminary indoor experiment, which included all up/down, left/right, and forward operations, as shown in Table 4, the maximum and minimum of the time lags between the patients’ gaze operation and the video response were 12.79 s and 0.84 s, respectively. Despite this condition, patients A to D succeeded in flying the drone. In Table 2, patient A answered “Yes” to “Did you feel any difficulty in the operation of the drone?” and “Were you tired from the operation of the drone?,” but the other patients answered “No” to the questions. Additionally, all patients answered “Yes” to “Were you able to fly the drone as desired?” and answered “Suitable” to “How was the drone’s speed?” in the preliminary indoor experiment. Therefore, we considered that, although one patient felt tired and experienced difficulty in the drone’s operation, all the patients could fly the drone as desired under the condition that the time lags’ maximum was 12.79 s.

On the other hand, in the principal indoor experiment, two bedridden patients succeeded in flying the drone, although the time lag was 5.92 s at maximum and 1.32 s at minimum (see Table 7). In Table 5, patient E answered “Neutral” to “Were you able to fly the drone as desired?,” “Did you feel any difficulty in the operation of the drone?,” “Were you tired from the operation of the drone?,” and “How was the drone’s speed?” Patient F answered “Yes” to “Were you able to fly the drone as desired?” and answered “No” to “Did you feel any difficulty in the operation of the drone?” and “Were you tired from the operation of the drone?” Table 5 also shows that patient F answered that the drone speed was slow. This suggests that preferences for the drone speed differ between individuals, and that the system should be improved in the future so that the speed can be adjusted to suit each individual. Since patient E did not give definite negative responses, we interpreted the results as indicating that patients E and F were basically able to fly the drone, did not have difficulty operating it, and were not very tired from the operation.

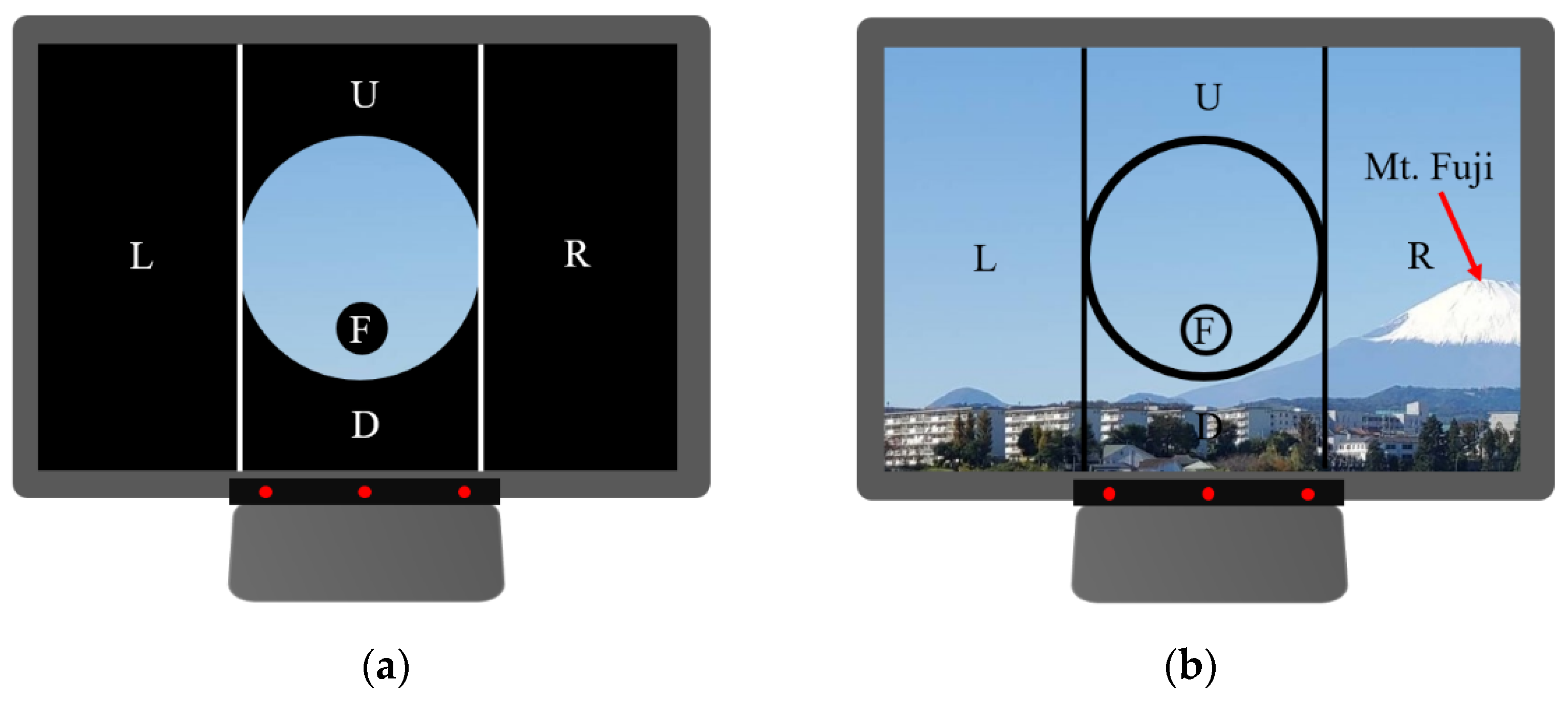

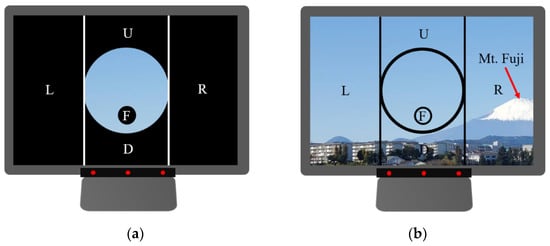

As described above, in both the preliminary and principal indoor experiments, the patients in Kagawa Prefecture were able to operate the drone in Kanagawa Prefecture, approximately 700 km away, despite the time lag. We attribute this to the control screen used in this drone system, which was designed to account for human eye movements (microsaccades) and to allow agile operation. Because the drone moved so as to bring a target closer to Area 1 for as long as the patient kept looking at that target on the control screen, we consider that the patients could control the drone intuitively. Indeed, the gaze position data showed that the patients’ gaze positions were within the area indicated by the experiment staff and that the patients kept looking at the target they wanted to see.

Additionally, on the control screen, the hovering area (Area 1) was adjacent to the other areas, and the video image remained visible in every area. The patients could therefore move their gaze to the next operational area early to compensate for the time lag, which we consider another factor in the success of their flights. Table 16 and Table 17 show the number of times each patient did so in the preliminary experiment and in the principal experiment, respectively. With a control screen in which the areas for moving the drone are painted black, as in Kim et al.’s control screen [51] (see Figure 10a), we consider that the patients could not have moved their gaze early because they could not have seen the approaching target. With our control screen (see Figure 10b), the approaching target is visible, so the patients could move their gaze to the next operational area ahead of time.

From the above, we concluded that the patients could operate the drone by using the drone system, despite the time lag in the indoor experiment.
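The benefit of moving the gaze early can be illustrated with a simple back-of-the-envelope model. The sketch below is not the authors' analysis: it assumes the drone rotates at a constant yaw rate and that a single lag covers the whole loop from gaze operation to video response. The yaw rate of 10 deg/s and the function names are hypothetical; only the lag values come from the experiments reported above.

```python
def overshoot_deg(yaw_rate_deg_s: float, lag_s: float, lead_deg: float = 0.0) -> float:
    """Angle by which the target ends up past the hovering area.

    lead_deg is how far short of the hovering area the target still appears
    on the screen at the moment the operator moves their gaze to hover.
    While the lag elapses, the drone keeps rotating by yaw_rate * lag.
    """
    return yaw_rate_deg_s * lag_s - lead_deg


def lead_needed_deg(yaw_rate_deg_s: float, lag_s: float) -> float:
    """Lead angle at which an early gaze shift cancels the overshoot."""
    return yaw_rate_deg_s * lag_s


# ASSUMPTION: hypothetical constant yaw rate, not a value from the paper.
YAW_RATE_DEG_S = 10.0

# Minimum and maximum time lags reported for the indoor experiments.
LAGS_S = {
    "preliminary min": 0.84,
    "preliminary max": 12.79,
    "principal min": 1.32,
    "principal max": 5.92,
}

if __name__ == "__main__":
    for label, lag in LAGS_S.items():
        late = overshoot_deg(YAW_RATE_DEG_S, lag)
        lead = lead_needed_deg(YAW_RATE_DEG_S, lag)
        print(f"{label}: overshoot without anticipation {late:.1f} deg, "
              f"lead needed {lead:.1f} deg")
```

Under these assumptions, an operator who waits until the target appears centered overshoots by the yaw rate multiplied by the lag, whereas one who can see the target approaching can shift their gaze earlier by that same angle. This anticipation is only possible if the video remains visible in the movement areas.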

Table 16. Results of eye movements considering time lags in the preliminary indoor experiment.

Table 17. Results of eye movements considering time lags in the principal indoor experiment.

Figure 10. Comparison between the control screen using Kim et al.’s method [51] and the control screen used in this paper. (a) Control screen using Kim et al.’s method; (b) Control screen used in this paper. In (b), the viewer can see that Mt. Fuji is approaching.

In the outdoor experiment, we changed the Wi-Fi router in the hospital, and the time lag was greatly reduced as a result (see Table 10 and Table 14). Additionally, we set Areas 2, 5, and 6 as hovering areas, which made the drone easier to operate than in the indoor experiment. We therefore considered that all the patients could operate the drone remotely.
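The difference between the indoor and outdoor settings can be sketched as a remapping of screen areas to drone commands. The following is a minimal illustration, not the system's actual implementation. The text states only that Area 1 is the hovering area and that Areas 2, 5, and 6 were set to hover outdoors, leaving right and left rotation. The total of six areas, the assignment of the remaining commands to area numbers, and all names in the code are assumptions made for illustration.

```python
from enum import Enum


class Command(Enum):
    HOVER = "hover"
    FORWARD = "forward"
    UP = "up"
    DOWN = "down"
    ROTATE_LEFT = "rotate_left"
    ROTATE_RIGHT = "rotate_right"


# ASSUMPTION: only "Area 1 = hover" is taken from the text. The numbering of
# the other areas is hypothetical and chosen so that Areas 3 and 4 are the
# rotation areas that stay active outdoors.
INDOOR_LAYOUT = {
    1: Command.HOVER,
    2: Command.FORWARD,
    3: Command.ROTATE_LEFT,
    4: Command.ROTATE_RIGHT,
    5: Command.UP,
    6: Command.DOWN,
}

# From the text: Areas 2, 5, and 6 were set to hover in the outdoor experiment.
OUTDOOR_HOVER_AREAS = {2, 5, 6}


def outdoor_layout(indoor: dict) -> dict:
    """Return a copy of the layout with the outdoor hover areas overridden."""
    return {
        area: (Command.HOVER if area in OUTDOOR_HOVER_AREAS else command)
        for area, command in indoor.items()
    }


def command_for_area(area: int, layout: dict) -> Command:
    """Look up the command for a gazed-at area.

    ASSUMPTION: an unknown area (e.g., gaze off the screen) falls back to
    hovering as a safe default.
    """
    return layout.get(area, Command.HOVER)


if __name__ == "__main__":
    outdoor = outdoor_layout(INDOOR_LAYOUT)
    for area in sorted(outdoor):
        print(area, command_for_area(area, outdoor).value)
```

With this kind of mapping, switching between the full-operation setting and the rotation-only setting changes a single table and leaves the gaze-tracking logic untouched.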

4.2. Could the Patients Enjoy Viewing the Scenery and Talking with the Experiment Staff in the Distant Place?

As mentioned above, we found that the patients could operate the drone by using this drone system even with a time lag of a few seconds in the indoor experiment. Therefore, in the outdoor experiment, we placed the drone on the roof of a Tokai University building to focus on whether the patients could enjoy the scenery and communication with the experiment staff. Patients D and E answered “Neutral” to “Did you have fun?” in the indoor experiment (see Table 2 and Table 5), but all the patients answered “Yes” to this question in the outdoor experiment (see Table 8 and Table 12). In both the indoor and outdoor experiments, all patients answered “Yes” to “Do you want to fly the drone again?” We therefore considered that the patients enjoyed the experiments, especially the outdoor experiment. The communication between the patients and the experiment staff during the outdoor experiment (see Table 11 and Table 15) showed that the patients enjoyed the experiment, smiling, talking with the experiment staff, waving to the students, and bowing to them. We consider one reason for their enjoyment to be that Mt. Fuji is very familiar to Japanese people, including all of the patients. Another is that the drone was set to only rotate right and left, which helped the patients concentrate on enjoying the scenery of the distant place and conversing with local people.

4.3. Significance of the Principal Experiment

The principal experiment revealed that two bedridden patients (C1) could remotely operate the drone with only their eyes. In the questionnaire administered after the experiment, to the optional question, “What kind of thing would you like to see using a drone?,” patient E answered “mountains and rivers” and patient F answered “foreign countries,” indicating their willingness to use this system.

Level C1 refers to those who spend all day in bed and require assistance with toileting, eating, and changing clothes. Such patients would therefore need special long-term rehabilitation training to operate a drone with a keyboard or joystick, and they would also need to be motivated to want to operate it.

Because this drone system can be operated with eye movements alone, the patients could operate the drone easily, as described above. During the experiment, they felt joy, smiled when they looked at the scenery, and communicated with the students. In this sense, we believe that operating the drone with only the eyes was useful and that we were able to provide the bedridden patients with a meaningful experience.

Moreover, both of the bedridden patients who participated in the principal experiment answered “Yes” to “Do you want to fly the drone again?,” which confirms that bedridden patients have a need for this drone system. Based on the normalization principle, if even one bedridden patient wants to use this system, we would like to provide it to fulfill that need.

4.4. Limitations and Future Scope

In the indoor and outdoor experiments, we took safety measures, such as keeping the experiment staff and the students away from the drone. When this drone system is put to use, further safety measures, such as flying the drone within safety fences or restricting the flight area by attaching a wire, will be necessary to prevent contact between the drone and people in distant places.

Moreover, in this drone system, if the drone is close to the microphone of Computer B, the patient may hear the drone noise, and the local people may have difficulty hearing the patient’s voice over it. In the future, it will therefore be necessary to reduce the drone noise, or to land the drone when the patient wants to concentrate on the conversation.

In the indoor experiment, which included all up/down, left/right, and forward operations, we confirmed that all the patients could operate the drone by using this drone system even with a time lag of a few seconds. In the outdoor experiment, the drone was set to only rotate right and left so that the patients could concentrate on enjoying the scenery of the distant place and the conversation with the local people, and the results indicated that they could enjoy both. We therefore consider that patients could use the rotation-only setting when they want to concentrate on the scenery and the conversation, and the setting that allows all up/down, left/right, and forward operations when they want to move the drone to various places. However, we did not use the latter setting in the outdoor experiment. In the future, we plan to conduct outdoor experiments to verify the effectiveness of the drone with all up/down, left/right, and forward operations enabled.

5. Conclusions

This paper verified the effectiveness of the proposed remote-controlled drone system through clinical experiments with bedridden patients who had been in a hospital for a long period of time. The findings showed that not only patients with relatively high levels of independence in ADL (J1, J2, B1) but also bedridden patients (C1) could operate the drone in a distant place with only their eyes and communicate with students.

In the future, we will verify the effectiveness of the drone system outdoors with the drone’s up-and-down and forward movements enabled. In addition, we plan to conduct experiments with patients with other conditions (e.g., amyotrophic lateral sclerosis) to verify the effectiveness of the drone system for them.

Author Contributions

Conceptualization, Y.K.; methodology, Y.K.; software, Y.K., Y.S., R.S. and A.K.; validation, Y.K., K.O., T.T. and Y.Z.; formal analysis, Y.K.; investigation, Y.K., K.O., Y.S., R.S., A.K., R.T. and T.T.; resources, Y.K.; data curation, Y.K., Y.S., R.S., A.K., R.T., K.O. and T.T.; writing – original draft preparation, Y.K., Y.S., R.S. and T.T.; writing – review and editing, Y.K., A.K., R.T., K.O., Y.Z. and T.T.; visualization, Y.K., Y.S. and R.S.; supervision, Y.K.; project administration, Y.K.; funding acquisition, Y.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Pfizer Health Research Foundation.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and was approved by the Ethics Committee of Tokai University (approval number: 21029) and the Mifune Hospital Clinical Research Ethics Review Committee (approval number: 20200408).

Informed Consent Statement

Informed consent was obtained from all participants involved in the study.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author. The data are not publicly available because of privacy and ethical restrictions.

Acknowledgments

The authors express their gratitude and appreciation to the participants. The authors thank Director Kazushi Mifune from the Mifune Hospital, as well as the entire staff of Mifune Hospital, for their assistance during the conduct of the research. Furthermore, this research was supported by the 2019 Pfizer Health Research Foundation. We would like to take this opportunity to express our deepest gratitude.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kato, H.; Hashimoto, R.; Ogawa, T.; Tagawa, A. Activity report on the occasion of the 10th anniversary of the Center for Intractable Neurological Diseases. J. Int. Univ. Health Welf. 2014, 19, 16–23. (In Japanese)

- Saito, A.; Kobayashi, A. The Study on Caregivers’ Subjective Burden in Caregiving of Amyotrophic Lateral Sclerosis Patients at Home. J. Jpn. Acad. Community Health Nurs. 2001, 3, 38–45. (In Japanese)

- Tourism Industry Division Japan Tourism Agency. Effectiveness Verification Report on the Promotion of Universal Tourism; Japan Tourism Agency: Tokyo, Japan, 2016; pp. 1–144. (In Japanese)

- Ministry of Land Infrastructure Transport and Tourism. Creating an Environment Where Everyone Can Enjoy Travel: Aiming for “Universal Design” of Tourism; Ministry of Land Infrastructure Transport and Tourism: Tokyo, Japan, 2008; pp. 1–7. (In Japanese)

- Cabinet Office. Survey on the Economic Lives of the Elderly; Cabinet Office: Tokyo, Japan, 2011; pp. 129–134. (In Japanese)

- Principle of Normalization in Human Services: Office of Justice Programs. Available online: https://www.ojp.gov/ncjrs/virtual-library/abstracts/principle-normalization-human-services (accessed on 29 November 2022).

- Bengt Nirje, “The Normalization Principle and Its Human Management Implications,” 1969: The Autism History Project. Available online: https://blogs.uoregon.edu/autismhistoryproject/archive/bengt-nirje-the-normalization-principle-and-its-human-management-implications-1969/ (accessed on 29 November 2022).

- Tourism Industry Division Japan Tourism Agency. Report on Promotion Project for Barrier-Free Travel Consultation Service; Japan Tourism Agency: Tokyo, Japan, 2020; pp. 4–48. (In Japanese)

- Tourism Agency. Practical Measures for Tour Guidance Corresponding to Universal Tourism; Japan Tourism Agency: Tokyo, Japan, 2017; pp. 2–34. (In Japanese)

- Kubota, M.; Suzuki, K. Considerations for the Development of Universal Tourism: Collaboration between travel agencies and local support organizations. Jpn. Found. Int. Tour. 2020, 27, 103–111. (In Japanese)

- What is Universal Tourism for Those Who Need Nursing Care or Are Bedridden? For Details, Application Process, and Inquiries. Available online: https://rakan-itoshima.com/universal_tourism_about (accessed on 29 November 2022). (In Japanese).

- Tourism Industry Division Japan Tourism Agency. Universal Tourism Promotion Services Report; Japan Tourism Agency: Tokyo, Japan, 2019; pp. 26–29. (In Japanese)

- Universal Tourism Promotion Project. Marketing Data Related to Universal Tourism; Japan Tourism Agency: Tokyo, Japan, 2014; pp. 2–58. (In Japanese)

- Ureshino Onsen Accommodation Universal Tourism, Travel for Those Who Need Nursing Care or Are Bedridden. Available online: https://rakan-itoshima.com/universal_tourism (accessed on 29 November 2022). (In Japanese).

- Chugoku Industrial Creation Center Foundation. Report on a Study of Tourism Promotion Policies Based on Universal Tourism in an Aging Society; Chugoku Industrial Creation Center Foundation: Hiroshima, Japan, 2015; pp. 93–94. (In Japanese)

- Tourism Industry Division Japan Tourism Agency. Report on “Demonstration Project for Strengthening Barrier-Free Travel Support System”; Japan Tourism Agency: Tokyo, Japan, 2021; pp. 4–15. (In Japanese)

- Takeuchi, T. A Study for Promoting Universal Tourism: Awareness Raising of Travel Agents and Its Practice. Jpn. Found. Int. Tour. 2019, 26, 23–31. (In Japanese)

- Ministry of Land Infrastructure Transport and Tourism. Universal Design for Tourism Guidance Manual: To Create an Environment in Which Everyone Can Enjoy Travel; Ministry of Land Infrastructure Transport and Tourism: Tokyo, Japan, 2008; pp. 34–50. (In Japanese)

- Duan, M.; Li, K.; Liao, X.; Li, K. A Parallel Multiclassification Algorithm for Big Data Using an Extreme Learning Machine. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 2337–2351.

- Pu, B.; Li, K.; Li, S.; Zhu, N. Automatic Fetal Ultrasound Standard Plane Recognition Based on Deep Learning and IIoT. IEEE Trans. Ind. Inform. 2021, 17, 7771–7780.

- Wang, J.; Yang, Y.; Wang, T.; Sherratt, R.; Zhang, J. Big Data Service Architecture: A Survey. J. Internet Technol. 2020, 21, 393–405.

- Li, H.; Ota, K.; Dong, M.; Guo, M. Learning Human Activities through Wi-Fi Channel State Information with Multiple Access Points. IEEE Commun. Mag. 2018, 56, 124–129.

- Zhang, J.; Zhong, S.; Wang, T.; Chao, H.-C.; Wang, J. Blockchain-Based Systems and Applications: A Survey. J. Internet Technol. 2020, 21, 1–14.

- Betriana, F.; Tanioka, R.; Kogawa, A.; Suzuki, R.; Seki, Y.; Osaka, K.; Zhao, Y.; Kai, Y.; Tanioka, T.; Locsin, R. Remote-Controlled Drone System through Eye Movements of Patients Who Need Long-Term Care: An Intermediary’s Role. Healthcare 2022, 10, 827.

- Jiang, H.; Wachs, J.P.; Pendergast, M.; Duerstock, B.S. 3D joystick for robotic arm control by individuals with high level spinal cord injuries. In Proceedings of the 2013 IEEE 13th International Conference on Rehabilitation Robotics (ICORR), Seattle, WA, USA, 24–26 June 2013; pp. 1–5.

- Lee, D.-H. Operator-centric joystick mapping for intuitive manual operation of differential drive robots. Comput. Electr. Eng. 2022, 104, 108427.

- Naveen, R.S.; Julian, A. Brain computing interface for wheel chair control. In Proceedings of the 2013 Fourth International Conference on Computing, Communications and Networking Technologies (ICCCNT), Tiruchengode, India, 4–6 July 2013; pp. 1–5.

- Liao, L.-Z.; Tseng, Y.-L.; Chiang, H.-H.; Wang, W.-Y. EMG-based Control Scheme with SVM Classifier for Assistive Robot Arm. In Proceedings of the 2018 International Automatic Control Conference (CACS), Taoyuan, Taiwan, 4–7 November 2018; pp. 1–5.

- Wang, W.; Zhang, Z.; Suga, Y.; Iwata, H.; Sugano, S. Intuitive operation of a wheelchair mounted robotic arm for the upper limb disabled: The mouth-only approach. In Proceedings of the 2012 IEEE International Conference on Robotics and Biomimetics (ROBIO), Guangzhou, China, 11–14 December 2012; pp. 1733–1740.

- Nagy, G.; Varkonyi-Koczy, A.R.; Toth, J. An Anytime Voice Controlled Ambient Assisted Living System for motion disabled persons. In Proceedings of the 2015 IEEE International Symposium on Medical Measurements and Applications (MeMeA) Proceedings, Turin, Italy, 7–9 May 2015; pp. 163–168.

- Espiritu, N.M.D.; Chen, S.A.C.; Blasa, T.A.C.; Munsayac, F.E.T.; Arenos, F.E.T.M.R.P.; Baldovino, R.G.; Bugtai, N.T.; Co, H.S. BCI-controlled Smart Wheelchair for Amyotrophic Lateral Sclerosis Patients. In Proceedings of the 2019 7th International Conference on Robot Intelligence Technology and Applications (RiTA), Daejeon, Korea, 1–3 November 2019; pp. 258–263.

- Mallikarachchi, S.; Chinthaka, D.; Sandaruwan, J.; Ruhunage, I.; Lalitharatne, T.D. Motor Imagery EEG-EOG Signals Based Brain Machine Interface (BMI) for a Mobile Robotic Assistant (MRA). In Proceedings of the 2019 IEEE 19th International Conference on Bioinformatics and Bioengineering (BIBE), Athens, Greece, 28–30 October 2019; pp. 812–816.

- Varghese, V.A.; Amudha, S. Dual mode appliance control system for people with severe disabilities. In Proceedings of the 2015 International Conference on Innovations in Information, Embedded and Communication Systems, Coimbatore, India, 19–20 March 2015; pp. 1–5.

- Inoue, Y.; Kai, Y.; Tanioka, T. Development of service dog robot. Kochi Univ. Technol. Res. Bull. 2004, 1, 52–56. (In Japanese)

- Yuan, L.; Reardon, C.; Warnell, G.; Loianno, G. Human Gaze-Driven Spatial Tasking of an Autonomous MAV. IEEE Robot. Autom. Lett. 2019, 4, 1343–1350.

- BN, P.K.; Balasubramanyam, A.; Patil, A.K.; Chai, Y.H. GazeGuide: An Eye-Gaze-Guided Active Immersive UAV Camera. Appl. Sci. 2020, 10, 1668.

- Maddirala, A.K.; Shaik, R.A. Removal of EMG artifacts from single channel EEG signal using singular spectrum analysis. In Proceedings of the 2015 IEEE International Circuits and Systems Symposium (ICSyS), Langkawi, Malaysia, 2–4 September 2015; pp. 111–115.

- Laura, L. Computer Hygiene. CAUT Health Saf. Fact Sheet 2008, 18, 1–3.

- Lutz, O.H.-M.; Burmeister, C.; Dos Santos, L.F.; Morkisch, N.; Dohle, C.; Krüger, J. Application of head-mounted devices with eye-tracking in virtual reality therapy. Curr. Dir. Biomed. Eng. 2017, 3, 53–56.

- Zhe, Z.; Sai, L.; Hao, C.; Hailong, L.; Yang, L.; Yu, F.; Felix, W.S. GaVe: A Webcam-Based Gaze Vending Interface Using One-Point Calibration. arXiv 2022, arXiv:2201.05533.

- Yamashita, A.; Asama, H.; Arai, T.; Ota, J.; Kaneko, T. A Survey on Trends of Mobile Robot Mechanisms. J. Robot. Soc. Jpn. 2003, 21, 282–292.

- Sato, Y.; Mishima, H.; Kai, Y.; Tanioka, T.; Yasuhara, Y.; Osaka, K.; Zhao, Y. Development of a Velocity-Based Mechanical Safety Brake for Wheeled Mobile Nursing Robots: Proposal of the Mechanism and Experiments. Int. J. Adv. Intell. 2021, 12, 69–81.

- Kogawa, A.; Onda, M.; Kai, Y. Development of a Remote-Controlled Drone System by Using Only Eye Movements: Design of a Control Screen Considering Operability and Microsaccades. J. Robot. Mechatron. 2021, 33, 301–312.

- Veling, W.; Lestestuiver, B.; Jongma, M.; Hoenders, H.J.R.; van Driel, C. Virtual Reality Relaxation for Patients With a Psychiatric Disorder: Crossover Randomized Controlled Trial. J. Med. Internet Res. 2021, 23, e17233.

- Hansen, J.P.; Alapetite, A.; MacKenzie, I.S.; Møllenbach, E. The use of gaze to control drones. In Proceedings of the Symposium on Eye Tracking Research and Applications, Safety Harbor, FL, USA, 26–28 March 2014.

- Hansen, J.P.; Lund, H.; Aoki, H.; Itoh, K. Gaze communication systems for people with ALS. In Proceedings of the ALS Workshop, in conjunction with the 17th International Symposium on ALS/MND, Yokohama, Japan, 30 November–2 December 2006; pp. 35–44.

- Itoh, K.; Sudoh, Y.; Yamamoto, T. Evaluation on eye gaze communication system. Inst. Image Inf. Telev. Eng. 1998, 22, 85–90. (In Japanese)

- Juhong, A.; Treebupachatsakul, T.; Pintavirooj, C. Smart eye-tracking system. In Proceedings of the 2018 International Workshop on Advanced Image Technology (IWAIT), Chiang Mai, Thailand, 7–9 January 2018; pp. 1–4.

- Martinez-Conde, S.; Macknik, S.; Hubel, D.H. The role of fixational eye movements in visual perception. Nat. Rev. Neurosci. 2004, 5, 229–240.

- Degree to Which Elderly Disabled People Can Manage Their Daily Lives (Bedridden Degree): Ministry of Health Labour and Welfare of Japan. Available online: https://www.mhlw.go.jp/file/06-Seisakujouhou-12300000-Roukenkyoku/0000077382.pdf (accessed on 22 November 2022). (In Japanese).

- Kim, B.H.; Kim, M.; Jo, S. Quadcopter flight control using a low-cost hybrid interface with EEG-based classification and eye tracking. Comput. Biol. Med. 2014, 51, 82–92.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).