Abstract

Based on educational considerations, the European Society for Paediatric Endocrinology (ESPE) e-learning portal has been developed, providing an interactive learning environment for up-to-date information in pediatric endocrinology. From March 2011 to January 2012, five small-scale pilot studies were completed to assess the usefulness of the structure and content by senior experts, fellows, residents and medical students. Altogether, 8 cases and 4 chapters were studied by a total of 71 individuals: 18 senior experts, 21 fellows, 10 medical students, 9 regional pediatricians and 13 residents, resulting in a total of 127 evaluations. Participants considered the portal content interesting and appreciated the way of learning compared to traditional learning from literature and textbooks. Special attention was paid to assess the personalized feedback given by experts to fellows and residents who completed the portal. Feedback from experts included both medical understanding and communication skills demonstrated by fellows and residents. Users highly appreciated the feedback of the medical experts, who brought perspectives from another clinic. This portal also offers educational opportunities for medical students and regional pediatricians and can be used to develop various CanMEDS competencies, e.g., medical expert, health advocate, and scholar.

1. Introduction

E-learning refers to the use of various kinds of electronic media and information and communication technologies (ICT) in education. The term “e-learning” includes all forms of educational technology that electronically or technologically support learning and teaching. Various examples of e-learning in medical education programs have recently been described in the literature. Daetwyler [1] offered an example of using e-learning to enhance physician-patient communication and to teach how to present bad news to a patient. Dyrbye [2] concluded that e-learning has become an important tool in physician education, enabling advanced curriculum development, instruction, assessment, evaluation, educational leadership, and education scholarship.

Feedback plays a central role in learning. It offers students insight into their learning progress and supports students’ skill development. Ideally, feedback is provided face-to-face and is concrete; it identifies strengths and weaknesses and offers improvement strategies [3]. Assessment methods provide two forms of feedback: summative and formative feedback. Feedback from the summative assessment plays a central role in certification. Feedback from the formative assessment provides primarily educational feedback [4,5,6]. Both summative and formative feedback play an important role in learning. Therefore, e-learning should provide both summative and formative assessments [7].

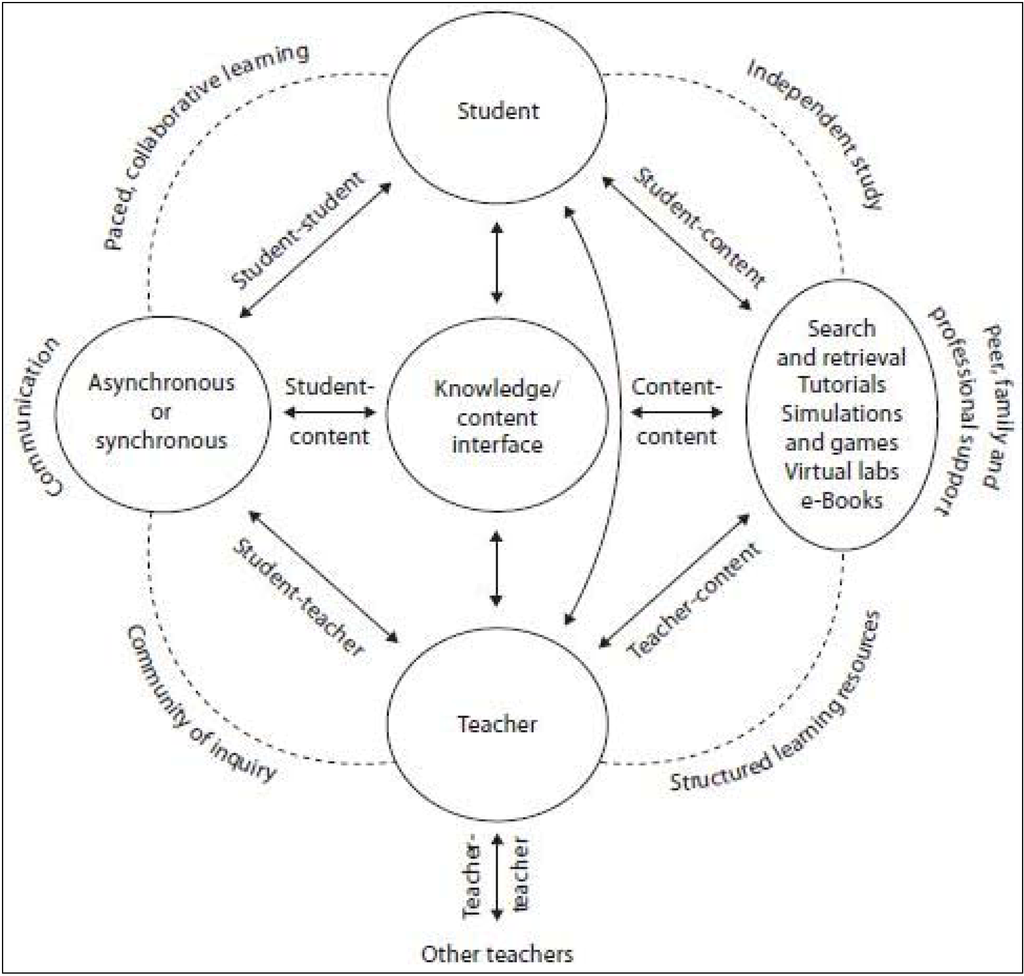

Feedback can be given in an e-learning portal, as illustrated by Anderson’s model of interactions in an online learning environment (see Figure 1). The figure shows the two major human actors, learners and teachers, and how they interact with each other and with the content. The left side of the figure shows the interaction between learners, teachers and the content, using a variety of synchronous or asynchronous activities such as video, audio, computer conferencing or virtual interactions. Here, feedback can be given personally. The right side illustrates the independent use of structured learning resources. Common tools used in this mode include computer-assisted tutorials, tests, drills and simulations [8]. Here, feedback is given automatically by means of predefined answers.

Not only knowledge but also competences count in medical education. Since the 1990s, Competency-Based Medical Education (CBME) has become a priority in many countries. A widely used framework for CBME is the CanMEDS model from the Royal College of Physicians and Surgeons of Canada. The name CanMEDS is derived from Canadian Medical Education Directives for Specialists. The model identifies and describes seven roles that lead to optimal health and health care outcomes: medical expert (central role), communicator, collaborator, manager, health advocate, scholar and professional [9,10,11,12,13,14].

Combining the instruction and formative assessment in learning and CBME, an interactive e-learning portal for Pediatric Endocrinology [15] was developed [16]. According to Deming’s Plan-Do-Check-Act cycle [17], several small-scale pilots were performed to check on the user experience, quality of the teaching content, navigation and interaction. Furthermore, learning by personalized feedback was evaluated. One of the outcomes of the pilot studies was the experts’ opinion that CanMEDS roles can be trained within the e-learning portal.

Figure 1.

To create online educational experiences and contexts it is important to further all types of interaction. Well known is the human interaction between student and teacher about learning content (vertical line). The student-teacher interaction can also take place using a variety of synchronous or asynchronous activities such as video-, audio-, computer conferencing or virtual interactions. In addition, there are structural learning tools associated with independent learning such as (computer assisted) tutorials, drills, and simulations (Anderson, T. 2004 [8]).

2. Experimental Section

The European Society for Paediatric Endocrinology (ESPE) sets standards for medical training and accreditation in EU member states in the field of Pediatric Endocrinology. In addition to conventional learning methods like daily clinical practice and textbooks, an e-learning portal “Paediatric Endocrinology” [15] was developed in 2010. The aim of developing the web portal was to provide an interactive learning environment for up-to-date pediatric endocrinology. The development of the portal is an on-going process, following Deming’s Plan-Do-Check-Act cycle.

2.1. Plan

The pedagogical design was based on psychological learning theories and recent research described in the study of Grijpink-van den Biggelaar [16]. The most important feature of this design was the integration of learning, instruction, and assessment. The formative assessment offered immediate feedback to the independent learner [8]. The portal also aimed to support the interaction between aspirant and qualified pediatric endocrinologists to help them acquire and maintain their professional competencies. These assessments were used to train various competencies as described by the CanMEDS model [9,10,11,12].

2.2. Do

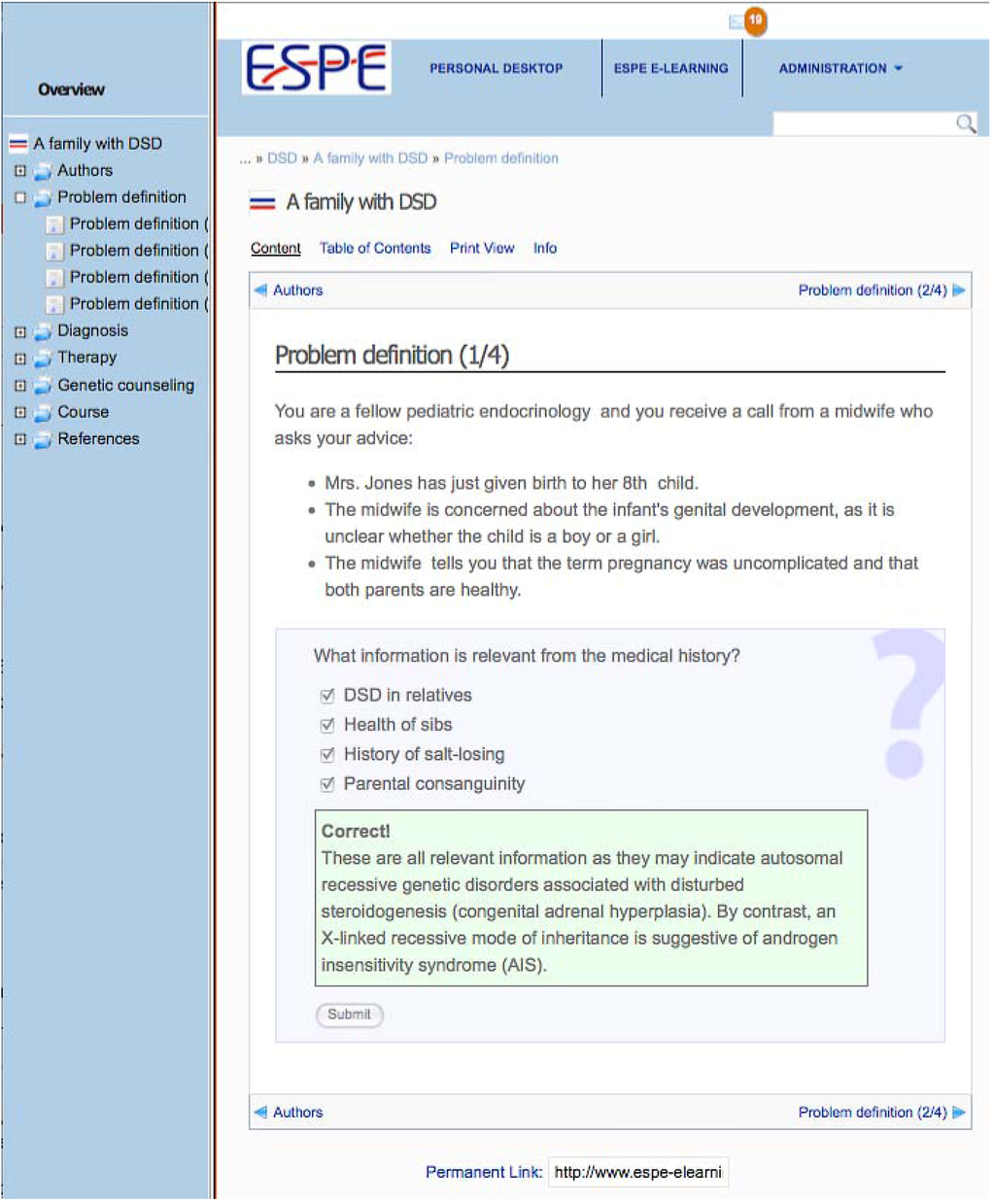

As part of the ESPE e-learning portal and of the EU project EURO-DSD (European Community’s Seventh Framework Program (FP7/2007–2013), grant agreement No. 201444), a special module on Disorders of Sex Development (DSD) was developed. Many specialists on DSD contributed to this module, resulting in various chapters concisely describing physiology, pathophysiology, and practical approaches to the management and treatment of DSD, such as the use of therapeutic agents. In addition, real-life cases were provided, inviting the student to solve diagnostic and management problems in a step-wise and interactive manner. The first version supported independent learning by providing formative assessments. Both multiple-choice and open questions with predefined answers were provided, written by experts on DSD. Examples of questions are provided in Table 1 and a visual illustration is provided in Figure 2. Based on the outcome of the first pilot, a second version of the portal was developed. This version contained two cases, each with three additional feedback questions that enabled personalized feedback. Fellows were requested to submit their answers to these questions to an expert, who subsequently provided personalized feedback electronically. An example of these feedback questions is also provided in Table 1.

Table 1.

Abbreviated example of an interactive case assessing various competencies.

| Competence | Question | Assessment |
|---|---|---|
| You are a fellow pediatric endocrinologist and you receive a call from a pediatrician who asks your advice: Mrs. Johnson has just given birth to her second child. The pediatrician is concerned about the infant’s genital development, as it is unclear whether the child is a boy or a girl. | | |
| Medical expert | What information do you need from the pediatrician? | Multiple-choice question |
| Medical expert | What information is relevant to provide to the pediatrician? | Open question, provide correct items |
| Collaborator | What is your advice to the pediatrician? | Open question, feedback provided by expert |
| Communicator | What is your advice to the pediatrician to tell the parents? What to tell family and friends? | Open question, feedback provided by expert |
| The genitalia of the otherwise healthy infant indeed look very ambiguous. | | |
| Medical expert | What information regarding the physical examination are you specifically interested in? What further information needs to be collected and what tests need to be performed after the initial physical examination? | Multiple-choice question |
| The karyotype is 46,XX; based on hormonal and ultrasound investigations you diagnose congenital adrenal hyperplasia. | | |
| Medical expert | Deficiency of which enzyme is most likely responsible? | Multiple-choice question |
| Both parents are very relieved that they have a girl and the mother says: “From the beginning, I had the feeling that I had a daughter!” | | |
| Communicator | You discuss this condition with the infant’s parents. What do you say? | Open question, feedback provided by expert |

Figure 2.

A screenshot from a multiple-choice question in the studied e-learning portal.

2.3. Check

From March 2011 to January 2012, five small-scale pilot tests were conducted in which senior experts, general pediatricians, fellows, residents and medical students evaluated the developed portals. Experts are defined as pediatricians specialized in endocrinology, fellows are pediatricians in specialty training in pediatric endocrinology, residents are in specialty training in pediatrics, and medical students are mostly in their 4th–6th year. An overview of the pilot studies and their main characteristics is provided in Table 2. Pilots 1 and 3–5 were designed to evaluate independent learning as provided in the first version of the portal. The second pilot was specifically conducted to evaluate personalized feedback in the second version. In all pilot studies, participants were asked to fill in a standard questionnaire on content, user experience, time spent, and the questions used in the e-learning portal. In the questionnaires of the second and fifth pilots, participants were also asked about interactivity and the ability of the portal to train competences described by the CanMEDS model. Pilots 3–5 focused on the use of the portal for specific user groups.

Table 2.

Main characteristics of five pilot studies.

| Pilot | Time period | Subject | Items studied | Participants |
|---|---|---|---|---|
| Pilot 1 | March 2011 | User experience and quality | 3 cases, 3 chapters | 9 experts, 11 fellows, 9 residents, 6 medical students, total 35 |
| Pilot 2 | August 2011 | Interaction | 2 cases | 3 experts, 8 fellows, 3 residents, total 14 |
| Pilot 3 | October 2011 | Use for medical students | 2 cases | 4 medical students |
| Pilot 4 | November 2011 | Use for regional pediatricians | 2 cases | 9 pediatricians, 1 fellow |
| Pilot 5 | January 2012 | Use for masterclass | 2 cases | 9 experts, 1 fellow |

2.3.1. Pilot 1

This pilot was conducted to evaluate user experience, the didactic quality of the content, and navigation in the e-learning environment. Three cases and three chapters were selected for evaluation. A total of 54 people worldwide were invited to participate, including senior experts, fellows, residents and medical students. Chapters and cases contained varying numbers of open and multiple-choice questions with standard answer models. All participants were asked to study one chapter and one case and subsequently answer a survey with questions such as: Does the content connect well to the level of knowledge of the different target groups? Are there sufficient questions in the cases, and are these questions adjusted to the needs of the different users? What do experts think about the content of the chapters and the cases?

2.3.2. Pilot 2

In order to test and evaluate the feedback offered in the second version of the portal, 4 experts and 24 fellows or senior residents were asked to participate. From 30 August to 22 September 2011, the fellows and residents, referred to as “students”, were asked to study 2 cases, each containing 3 feedback questions. The answers to these questions were sent to an expert assigned to the student, referred to as the “teacher”. The teacher gave personalized feedback on the response. An answer model with some suggestions was provided for the experts. The student received a notification and had the opportunity to engage in further discussion with the teacher. The experiences were evaluated with a questionnaire covering time spent, quality of feedback, the interaction, and the usability of the e-learning portal for training CanMEDS competences.

2.3.3. Pilot 3

The third pilot evaluated the use of the portal in the master phase of medical student education. The study was conducted at the Erasmus University in Rotterdam, the Netherlands. Twenty-four master’s students completing a minor in pediatrics were asked to study a chapter and a case describing a real-life situation. Afterwards they were asked to fill in a questionnaire to evaluate time spent as well as the quality and level of the content and the navigation.

2.3.4. Pilot 4

The fourth pilot study evaluated the contribution of the e-learning portal to the on-going education of regional pediatricians participating in post-graduate training for regional pediatricians on DSD in the Netherlands in the Rotterdam area. A group of 36 pediatricians and fellows were asked to study two cases in the portal and subsequently fill in the questionnaire.

2.3.5. Pilot 5

The fifth pilot study was conducted to evaluate the usability of the portal for on-going education for experts and fellows in DSD. The 29 participants in a masterclass were asked to study two cases and subsequently fill in the questionnaire.

3. Results and Discussion

Pilot 1 included 34 participants: 9 experts, 11 fellows, 9 residents and 5 medical students. For the second pilot, 4 experts and 24 fellows or senior residents were asked to participate. Owing to the short pilot period, which fell in the summer, only 3 experts, 8 fellows and 3 residents were able to participate. Of the 24 medical students asked to participate in the third pilot, only 4 replied. In the fourth pilot, 10 invitees responded. Of the 29 masterclass participants invited for the fifth pilot, 10 participated. See Table 2 for an overview of the participants in the pilot studies. Altogether, 8 cases and 4 chapters were studied by a total of 71 people: 18 senior experts, 21 fellows, 10 medical students, 9 regional pediatricians and 13 residents, corresponding to a participation rate of 42%. Some participants studied multiple cases or chapters, resulting in a total of 127 evaluations.
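The participation figures can be checked with a few lines of arithmetic. The following Python sketch recomputes the response rate per pilot and overall from the invitation and participation counts given in Sections 2.3 and 3. The names `PILOTS`, `UNIQUE_PARTICIPANTS` and `response_rate` are illustrative and not part of the study, and the overall rate is assumed to be the 71 individual participants divided by the sum of all invitations.

```python
# Invitation and participation counts as reported in the text.
# The dictionary layout and all names are illustrative assumptions.
PILOTS = {
    "Pilot 1": {"invited": 54, "participated": 34},
    "Pilot 2": {"invited": 28, "participated": 14},  # 4 experts + 24 fellows/residents invited
    "Pilot 3": {"invited": 24, "participated": 4},
    "Pilot 4": {"invited": 36, "participated": 10},
    "Pilot 5": {"invited": 29, "participated": 10},
}

# Number of distinct individuals reported across all pilots.
UNIQUE_PARTICIPANTS = 71


def response_rate(participated: int, invited: int) -> float:
    """Return the response rate as a percentage of those invited."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return 100.0 * participated / invited


per_pilot = {
    name: response_rate(counts["participated"], counts["invited"])
    for name, counts in PILOTS.items()
}
total_invited = sum(counts["invited"] for counts in PILOTS.values())

# Assumption: the overall rate relates distinct individuals to all invitations.
overall = response_rate(UNIQUE_PARTICIPANTS, total_invited)

for name, rate in per_pilot.items():
    print(f"{name}: {rate:.0f}%")
print(f"Overall: {overall:.0f}% of {total_invited} invitations")
```

Under these assumptions, the per-pilot rates range from about 17% (Pilot 3) to about 63% (Pilot 1), and the overall rate is about 42%.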

3.1. Evaluation of Usability and Time Spent

A standard survey was used in all pilot studies to evaluate the studied chapters and cases on content, illustrations, effectiveness of learning compared to other learning methods such as textbooks, and time spent to study a chapter or case. An overview of the results is given in Table 3 and is later discussed in more detail.

Table 3.

Results of all pilot studies (n = number of respondents, sd = standard deviation).

| Evaluation by subgroup | N | Content (scale 1–10), mean | Content (scale 1–10), sd | Illustrations (scale 1–10), mean | Illustrations (scale 1–10), sd | Effectiveness of learning, mean | Effectiveness of learning, sd | Time spent (min), mean | Time spent (min), sd |
|---|---|---|---|---|---|---|---|---|---|
| Expert | 30 | 8.3 | 1.7 | 7.1 | 2.2 | 8.2 | 1.0 | 41 | 37 |
| Fellow | 35 | 8.6 | 1.5 | 7.0 | 2.4 | 8.3 | 0.9 | 56 | 40 |
| Medical student | 20 | 8.0 | 0.9 | 7.2 | 1.5 | 7.8 | 1.2 | 37 | 18 |
| Regional pediatrician | 20 | 8.4 | 0.9 | 7.4 | 1.7 | 8.2 | 0.8 | 19 | 8 |
| Resident | 22 | 8.3 | 1.4 | 7.2 | 1.6 | 7.9 | 1.3 | 53 | 45 |
| Total | 127 | 8.4 | 1.4 | 7.1 | 1.9 | 8.1 | 1.1 | 43 | 36 |
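As a plausibility check on the “Total” row of Table 3, the subgroup means can be pooled by weighting each mean with its number of evaluations. The sketch below does this in Python; the names `TABLE_3`, `REPORTED_TOTAL`, `MEASURES` and `pooled_means` are illustrative assumptions. Because the subgroup means in the table are already rounded, the pooled values can differ slightly from the reported totals.

```python
# Rows of Table 3: subgroup -> (N, content, illustrations, effectiveness, time in min).
TABLE_3 = {
    "Expert": (30, 8.3, 7.1, 8.2, 41),
    "Fellow": (35, 8.6, 7.0, 8.3, 56),
    "Medical student": (20, 8.0, 7.2, 7.8, 37),
    "Regional pediatrician": (20, 8.4, 7.4, 8.2, 19),
    "Resident": (22, 8.3, 7.2, 7.9, 53),
}

# "Total" row as reported in Table 3, in the same column order.
REPORTED_TOTAL = (127, 8.4, 7.1, 8.1, 43)

MEASURES = ("content", "illustrations", "effectiveness", "time_min")


def pooled_means(rows):
    """Return (total N, {measure: N-weighted mean}) for the given subgroup rows."""
    total_n = sum(row[0] for row in rows.values())
    pooled = {}
    for index, measure in enumerate(MEASURES, start=1):
        weighted_sum = sum(row[0] * row[index] for row in rows.values())
        pooled[measure] = weighted_sum / total_n
    return total_n, pooled


total_n, pooled = pooled_means(TABLE_3)
print(f"Total evaluations: {total_n}")
for index, measure in enumerate(MEASURES, start=1):
    print(f"{measure}: pooled {pooled[measure]:.2f}, reported {REPORTED_TOTAL[index]}")
```

The standard deviations are not pooled here, because that would also require the spread between the subgroup means and cannot be done by simple weighting.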

Regarding the level of difficulty of the studied content, the opinions varied. In general, experts and fellows judged the content as appropriate, but that depended on whether a case or chapter was considered easy or difficult. Medical students however judged the content as appropriate to difficult.

The time spent depended on the length of the case or chapter studied and ranged from a mean of 17 min for the shortest case to a mean of 69 min for the longest. Furthermore, the time spent varied per subgroup. On average, residents and fellows spent the most time studying the content. The time spent was mostly judged as appropriate. Fellows indicated that they would like clear information about the time needed and the objectives before starting a case. Experts indicated that “lack of time was a great threat”, which prevented them from giving the content the appropriate time and attention.

Overall, the respondents were enthusiastic about the content of the studied sections. There were some suggestions to break the text into shorter paragraphs in order to make the information easier to digest. More illustrations, photos, animations and diagrams were desired. Also, a short take-home message at the end of a chapter was requested as potentially helpful for students.

On average, respondents were satisfied by the number and the quality of open and multiple-choice questions. Several students remarked that they found questions helpful in the learning process. There were several requests to add more questions to check the knowledge retained. For example: “I think a combination of multiple-choice and open questions is appropriate. Open questions stimulate to think more and take time. Multiple choice questions cost less time”.

In the first pilot, specific attention was paid to students’ wishes for personalized feedback on certain questions. This potential option received an average rating of 8 on a scale of 1–10. There was a suggestion for each page to have a “Feedback” section where users could leave feedback or ask questions on it. It was suggested that personalized feedback may be difficult to implement, as it will require resources. However, as one respondent commented, “I do not think that every open question should be given personal feedback. Some are easy to explain in general, some need help”.

3.2. Personalized Feedback

The second pilot was conducted specifically to evaluate personalized feedback given by experts to fellows and residents. Each studied case contained three feedback questions, for which the answer of the fellow or resident was sent to an expert for personalized feedback. On average, fellows spent 30–40 min answering and discussing the three questions in one case, while residents spent 40–45 min. Experts needed 15 min on average per case to give feedback. The mode of interaction was rated on a scale of 1 (worst) to 10 (best), with an average score of 8.3 for experts, 6.3 for fellows and 7.3 for residents. Several fellows preferred receiving feedback by direct e-mail, although the involved experts did not want to use e-mail. Overall, the mode of interaction was evaluated as acceptable. The quality of the interaction received a mean score of 8 on the same scale. Fellows and residents appreciated the opinion of another expert, who brought in another perspective. They also appreciated receiving feedback not only on purely medical aspects but also on communication skills. In the pilot, the answers of both students and teachers were anonymous, which was highly valued by several students and one teacher: “Anonymity guarantees that the student feels free to answer”.

3.3. Different Target Groups and Didactical Possibilities

One aspect the pilot studies tested was how applicable the e-learning portal was for different target groups. The low number of medical students who participated in the third pilot (4 out of 24) suggested little enthusiasm. Their comments mostly concerned portal navigation. The content was rated as interesting but difficult. The fourth pilot was set up with regional pediatricians. They evaluated the content as interesting (see Table 3) and the level of the material as adequate, and judged the time spent as appropriate to short. One respondent stated that the portal was not suited to a general pediatrician, but would be very useful for fellows in pediatric endocrinology or for endocrinologists as on-going learning.

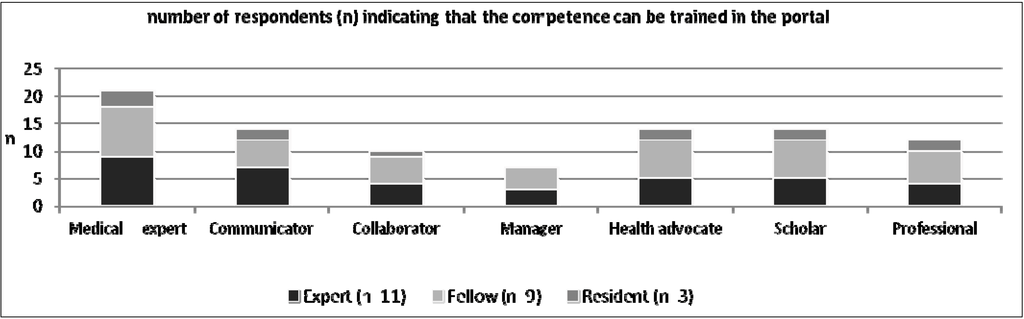

Furthermore, the second and fifth pilots examined whether the e-learning portal can be used for education and training in the CanMEDS competences. In the opinion of the respondents, the e-learning portal can be used to train all competences, in particular the role of Medical Expert (see Figure 3).

Figure 3.

This figure illustrates the opinion of the named subgroups about the suitability of the studied e-learning portal for training CanMEDS competences. The vertical axis shows the number of respondents indicating that the portal is suitable to train the specific competence.

4. Discussion

The ESPE e-learning portal [15] provides a rich source of information for medical students, residents, fellows, specialists, consultants and teachers around the world. The portal is based on educational learning theories and is continuously improved in order to fit the daily practice of the users. In order to evaluate the content and user experience of the current portal, pilot studies were conducted. The outcomes of the pilot studies are just a preliminary “check” in the plan-do-check-act cycle, evaluating the first versions of the e-learning portal based on educational principles. The results of these “checks” are used for the further development of the e-learning portal.

We noticed that the overall response rate of these pilot studies was 42%, varying per pilot from 17% to 63%. Comparable response rates are found in the literature; for example, a survey at a university in Cameroon [18] had a response rate of 51%. We do not know the reasons for non-participation, as we did not specifically investigate this. However, it might be due to the voluntary nature of participation and the time needed to study the content. In future studies, we intend to study in more detail what factors affect individual participation and usage.

Participants of the pilot studies stated that the content was interesting and that the level of the content was appropriate. Valuable suggestions for improvement were made to break the text in shorter paragraphs and provide short take home messages at the end of a chapter or case. These suggestions will be incorporated in future versions of the portal.

We were particularly interested to learn whether students wished for personalized feedback and whether the portal could feasibly provide it. We were also interested in how both students and teachers responded to this exchange of information. The quality of the feedback exchange was rated highly by both students and teachers. Several fellows preferred receiving feedback by direct e-mail, although the involved experts did not want to use e-mail. Not unexpectedly, time spent was a serious issue. Thus, in future studies, more details on the time needed to complete a section will be provided.

An additional aim of the pilot studies was to obtain initial insight into the use of the CanMEDS competencies, both by offering specific items related to CanMEDS roles in problem-solving cases and by evaluating these competencies. We think that personalized feedback is appropriate for training roles such as communicator and health advocate. Independent learning and questions with fixed answers seem appropriate for roles such as medical expert and scholar. Our preliminary data suggest that these competencies can be addressed, and we intend to further develop formative assessment modules.

Based on the insights obtained in these pilot studies, we proceeded to further develop the ESPE e-learning portal using an open source learning management system ILIAS [19] with major emphasis on formative assessment and option for summative assessment.

5. Conclusions

The ESPE e-learning portal [15] provides a rich source of information for medical students, residents, fellows and specialists around the world. The portal was based on educational principles and is continuously improved in order to fit into the daily practice of the users. In order to evaluate the content and user experience of the current portal, a number of pilot studies were conducted.

The portal offers possibilities for medical education, both for acquiring knowledge and for developing competences. It supports independent learning, which seems suited to training competencies such as medical expert and scholar. This was accomplished by providing chapters and real-life cases with formative assessment in the form of open and multiple-choice questions with standard answer models. Moreover, by providing personalized feedback, the portal offers possibilities to train other competencies such as communicator and health advocate.

It has to be mentioned that the pilot study outcomes were preliminary “checks” in the Plan-Do-Check-Act cycle, evaluating the first versions of the e-learning portal based on educational principles. The results of these “checks” were used to further develop the e-learning portal. Based on the insights from the pilot studies, we further developed the ESPE e-learning portal using the open source learning management system ILIAS [19], with major emphasis on formative assessment and with options for summative assessment.

Acknowledgment

We thank Conny de Vugt for her excellent contribution regarding the content management. We gratefully acknowledge the critical review of the manuscript by Miriam Muscarella, bachelor women studies, Harvard University, Boston, MA, USA.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Daetwyler, C.J.; Cohen, D.G.; Gracely, E.; Novack, D.H. Elearning to enhance physician patient communication: A pilot test of “doc.com” and “WebEncounter” in teaching bad news delivery. Med. Teach. 2010, 32, e381–e390.

2. Dyrbye, L.; Cumyn, A.; Day, H.; Heflin, M. A qualitative study of physicians’ experiences with online learning in a masters degree program: Benefits, challenges, and proposed solutions. Med. Teach. 2009, 31, e40–e46.

3. Orrell, J. Feedback on learning achievement: Rhetoric and reality. Teach. High. Educ. 2006, 11, 441–456.

4. Gibbs, G.; Simpson, C. Conditions under which assessment supports students’ learning. Learn. Teach. High. Educ. 2004, 5, 3–31.

5. Van der Vleuten, C.P.M.; Schuwirth, L.W.T. Assessing professional competency: From methods to programmes. Med. Educ. 2005, 39, 309–317.

6. Galbraith, M.; Hawkins, R.E.; Holmboe, E.S. Making self-assessment more effective. J. Contin. Educ. Health. 2008, 28, 20–24.

7. Schuwirth, L.W.T.; van der Vleuten, C.P.M. A plea for new psychometric models in educational assessment. Med. Educ. 2006, 40, 296–300.

8. Anderson, T. Toward a theory of online learning. Available online: http://epe.lac-bac.gc.ca/100/200/300/athabasca_univ/theory_and_practice/ch2.html (accessed on 6 August 2013).

9. Frank, J.R.; Danoff, D. The CanMEDS initiative: Implementing an outcomes-based framework of physician competencies. Med. Teach. 2007, 29, 642–647.

10. Frank, J.R.; Snell, L.S.; Cate, O.T.; Holmboe, E.S.; Carraccio, C.; Swing, S.R.; Harris, P.; Glasgow, N.J.; Campbell, C.; Dath, D.; et al. Competency-based medical education: Theory to practice. Med. Teach. 2010, 32, 638–645.

11. Wong, R. Defining content for a competency-based (CanMEDS) postgraduate curriculum in ambulatory care: A delphi study. Can. Med. Educ. J. 2012, 3. Article 1.

12. CanMEDS: Better standards, better physicians, better care. Available online: http://www.royalcollege.ca/portal/page/portal/rc/resources/aboutcanmeds (accessed on 6 August 2013).

13. Stutsky, B.J.; Singer, M.; Renaud, R. Determining the weighting and relative importance of CanMEDS roles and competencies. BMC Res. Notes 2012, 5.

14. Michels, N.R.M.; Denekens, J.; Driessen, E.W.; van Gaal, L.F.; Bossaert, L.L.; de Winter, B.Y. A Delphi study to construct a CanMEDS competence based inventory applicable for workplace assessment. BMC Med. Educ. 2012, 12.

15. ESPE e-learning portal. Available online: http://www.espe-elearning.org/ (accessed on 6 August 2013).

16. Grijpink-van den Biggelaar, K.; Drop, S.L.S.; Schuwirth, L. Development of an e-learning portal for pediatric endocrinology: Educational considerations. Horm. Res. Paediatr. 2010, 73, 223–230.

17. Deming, W.E. Out of the Crisis; MIT Center for Advanced Engineering Study: Cambridge, MA, USA, 1986.

18. Bediang, G.; Stoll, B.; Geissbuhler, A.; Klohn, A.M.; Stuckelberger, A.; Nko’o, S.; Chastonay, P. Computer literacy and e-learning perception in Cameroon: The case of Yaounde Faculty of Medicine and Biomedical Sciences. BMC Med. Educ. 2013, 13.

19. Open source e-learning. Available online: http://www.ilias.de/docu/goto.php?target=root_1 (accessed on 6 August 2013).

© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).