A Meta-Synthesis of Technology-Supported Peer Feedback in ESL/EFL Writing Classes Research: A Replication of Chen’s Study

Abstract

1. Introduction

- (1) What are the characteristics of technology-supported peer-feedback activities found in the primary studies from 2011 to 2022?

- (2) What are the technologies used in computer-mediated peer interactions, and what are their advantages and disadvantages? In what ways do these activities affect students’ perceptions and attitudes?

- (3) What are the main themes emerging from the grounded theory (GT) analysis, and what is the metatheory for the synthesis?

2. Literature Review

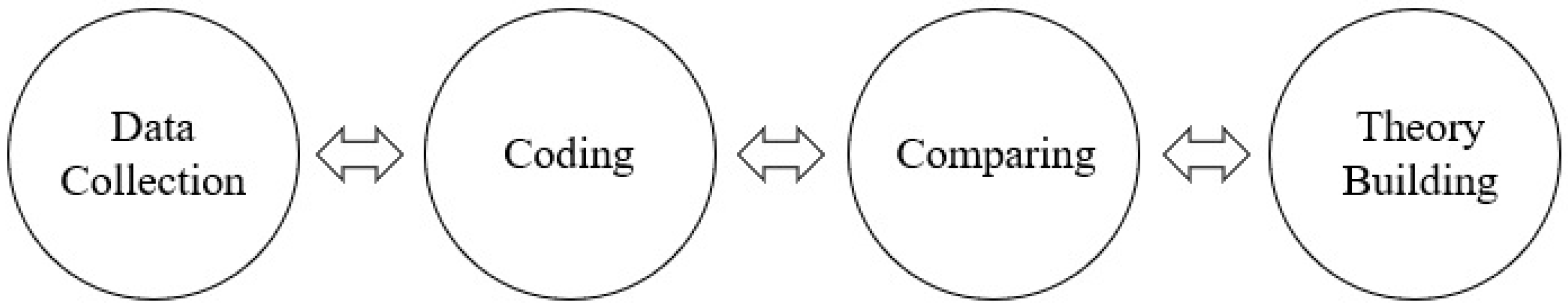

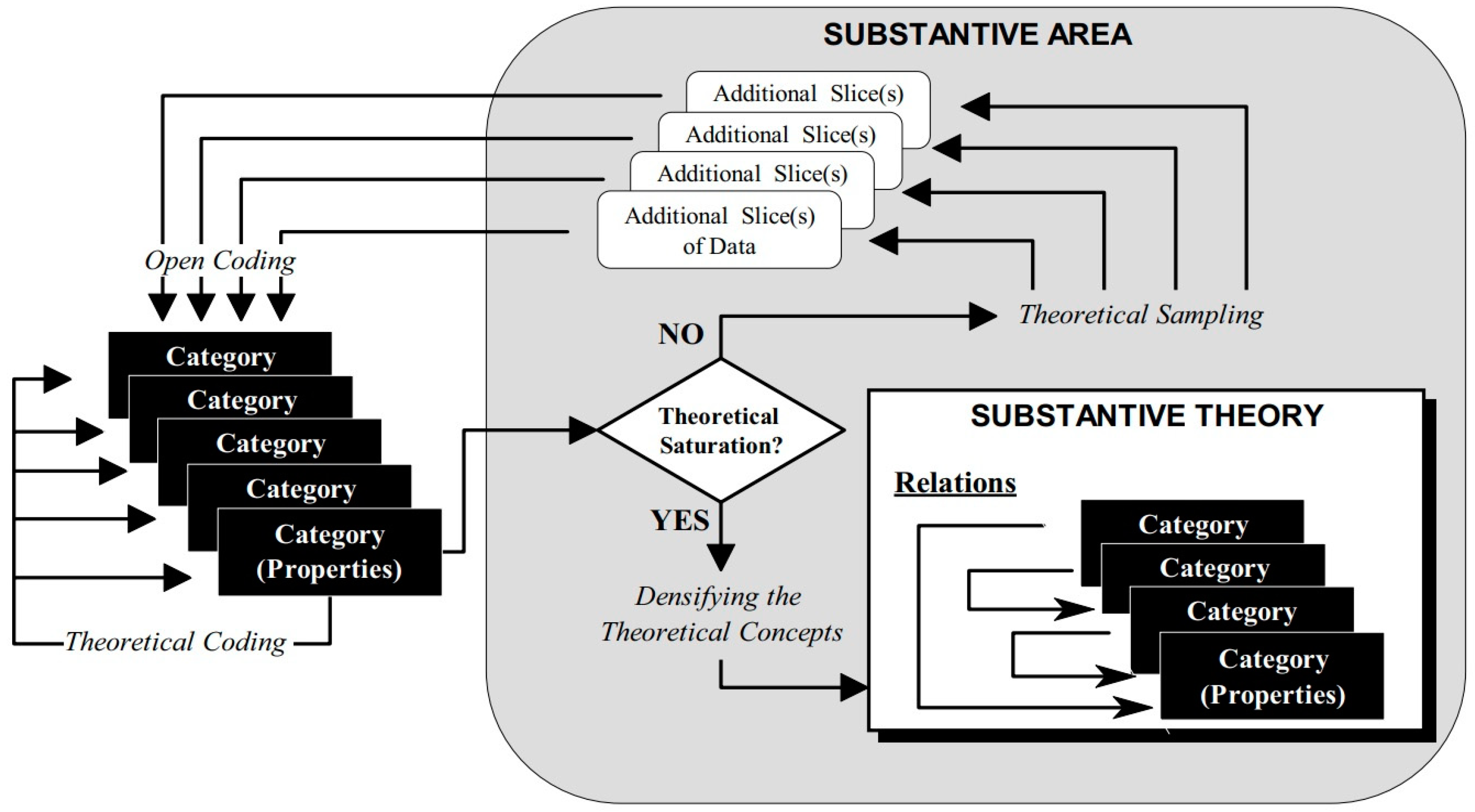

3. Methodology

3.1. The Data Collection Stage

3.2. Literature Searching Steps

- (1) Peer feedback/peer response/peer review/peer editing/peer interaction;

- (2) Peer feedback (and the interchangeable terms in item (1)) + ESL/EFL writing;

- (3) Peer cooperation + ESL/EFL writing;

- (4) Peer feedback (and the interchangeable terms in item (1)) + training in ESL/EFL writing;

- (5) Online (computer-mediated, technology-enhanced, synchronous, and asynchronous) + peer feedback (and the interchangeable terms in item (1)) + ESL/EFL writing.
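
The five search-term combinations above can be enumerated mechanically. The following is a minimal sketch, assuming that each "/"-separated or comma-separated alternative is searched as its own query and that "+" simply joins terms; the function and constant names are hypothetical, and the actual query syntax (for example, Boolean operators) differs between databases.

```python
from itertools import product

# Interchangeable peer-feedback terms from item (1).
PEER_TERMS = [
    "peer feedback",
    "peer response",
    "peer review",
    "peer editing",
    "peer interaction",
]

# Online-mode qualifiers from item (5).
ONLINE_TERMS = [
    "online",
    "computer-mediated",
    "technology-enhanced",
    "synchronous",
    "asynchronous",
]

CONTEXT = "ESL/EFL writing"


def build_queries():
    """Return one query string per combination in items (1) to (5).

    The " + " separator mirrors the notation of the list, not any
    particular database's search syntax (an assumption of this sketch).
    """
    queries = []
    # Item (1): each peer-feedback term on its own.
    queries.extend(PEER_TERMS)
    # Item (2): each peer-feedback term combined with the writing context.
    queries.extend(f"{term} + {CONTEXT}" for term in PEER_TERMS)
    # Item (3): peer cooperation combined with the writing context.
    queries.append(f"peer cooperation + {CONTEXT}")
    # Item (4): each peer-feedback term combined with training.
    queries.extend(f"{term} + training in {CONTEXT}" for term in PEER_TERMS)
    # Item (5): every online qualifier paired with every peer-feedback term.
    queries.extend(
        f"{mode} + {term} + {CONTEXT}"
        for mode, term in product(ONLINE_TERMS, PEER_TERMS)
    )
    return queries
```

Under these assumptions, `build_queries()` yields 41 query strings (5 + 5 + 1 + 5 + 25), each of which would then be run against every database.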

- (1) Educational Resource Information Center (ERIC);

- (2) Social Science Citation Index (SSCI);

- (3) Arts & Humanities Citation Index (AHCI);

- (4) Academic Search Premier (EBSCOhost);

- (5) Cambridge Core;

- (6) Project Muse;

- (7) JSTOR;

- (8) Directory of Open Access Journals.

3.3. The Data Evaluation Stage

- (1) The studies had to be published or completed between 2011 and 2022 and had to take place in ESL/EFL writing classrooms.

- (2) The instructors in the studies had to employ at least one technology-supported (computer-mediated) mode of peer-feedback activity in the writing class.

- (3) The studies had to use a qualitative research design or a mixed research design that included qualitative analysis.

- (4) Similar to Chen’s study, the qualitative data found in each article had to meet five criteria, adopted from the qualitative research guidelines of the journals Language Learning & Technology and TESOL Quarterly:

  - (a) The articles had to contain thorough descriptions of the research contexts and participants.

  - (b) The procedures of data collection and data analysis had to be fully described.

  - (c) The articles’ findings, limitations, and implications had to be described.

  - (d) The data analysis had to include a clear and salient organization of patterns.

  - (e) The findings had to be interpreted holistically, and the writers had to trace the meaning of patterns throughout all of the contexts in which they were entrenched.
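
The inclusion criteria above amount to a conjunction of checks applied to each candidate study. A minimal sketch of that screening logic follows; the `Study` record, its field names, and the idea of recording criteria (a) to (e) as reviewer-assigned booleans are all hypothetical conveniences for illustration, not part of the original procedure.

```python
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical screening record for one candidate study."""
    year: int                   # year published or completed
    esl_efl_writing: bool       # set in an ESL/EFL writing classroom
    uses_technology_mode: bool  # at least one computer-mediated peer-feedback mode
    design: str                 # "qualitative", "mixed", or "quantitative"
    quality_checks: dict        # reviewer judgments for criteria "a" to "e"


def meets_inclusion_criteria(study: Study) -> bool:
    """Apply criteria (1) to (4); a study must pass every one."""
    # Criterion (1): date window and classroom context.
    in_window = 2011 <= study.year <= 2022
    # Criterion (3): qualitative, or mixed with a qualitative component.
    design_ok = study.design in {"qualitative", "mixed"}
    # Criterion (4): all five qualitative-reporting sub-criteria satisfied.
    quality_ok = all(study.quality_checks.get(key, False) for key in "abcde")
    return (
        in_window
        and study.esl_efl_writing
        and study.uses_technology_mode  # criterion (2)
        and design_ok
        and quality_ok
    )


# Usage with made-up records (not data from the synthesis).
passing = Study(2015, True, True, "mixed", dict.fromkeys("abcde", True))
too_early = Study(2009, True, True, "qualitative", dict.fromkeys("abcde", True))
```

In this sketch, a missing sub-criterion counts as unmet, so a record is excluded unless a reviewer has explicitly confirmed each of (a) to (e).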

3.4. Coding the Literature

4. Coding Results

5. Discussion of Research Questions

- The way students’ learning, perceptions, and attitudes are affected by technology-supported feedback activities should be considered.

- The benefits and drawbacks of computer-mediated feedback (compared to face-to-face feedback) should be considered when it is applied in class.

- The instructors should provide proper training for the students (both in terms of giving helpful feedback and utilizing the online platforms).

- Considering contextual factors in peer feedback activities plays an important role in successfully integrating technology in giving feedback.

The extent to which technology-supported peer feedback activities succeed depends on understanding the students’ preferences, capabilities, and attitudes regarding the use of technology in learning; on the contextual factors; on the advantages and disadvantages of each online platform; and on the instructors’ ability to train students in giving proper feedback and using the technology.

6. Implications

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Abdullah, Mohamad Yahya, Supyan Hussin, and Mohanaad Shakir. 2018. The effect of peers’ and teacher’s e-feedback on writing anxiety level through CMC applications. International Journal of Emerging Technologies in Learning 13: 8448.

- Ahmed, Rashad, and Abdu Al-Kadi. 2021. Online and face-to-face peer review in academic writing: Frequency and preferences. Eurasian Journal of Applied Linguistics 7: 169–201.

- Anson, Chris M., Deanna P. Dannels, Johanne I. Laboy, and Larissa Carneiro. 2016. Students’ perceptions of oral screencast responses to their writing: Exploring digitally mediated identities. Journal of Business and Technical Communication 30: 378–411.

- Belén Díez-Bedmar, María, and Pascual Pérez-Paredes. 2012. The types and effects of peer native speakers’ feedback on CMC. Language Learning & Technology 16: 62–90.

- Chen, Kate Tzu-Ching. 2012. Blog-based peer reviewing in EFL writing classrooms for Chinese speakers. Computers and Composition-An International Journal for Teachers of Writing 29: 280.

- Chen, Tsuiping. 2016. Technology-supported peer feedback in ESL/EFL writing classes: A research synthesis. Computer Assisted Language Learning 29: 365–97.

- Chew, Esyin, Helena Snee, and Trevor Price. 2016. Enhancing international postgraduates’ learning experience with online peer assessment and feedback innovation. Innovations in Education and Teaching International 53: 247–59.

- Ciftci, Hatime, and Zeynep Kocoglu. 2012. Effects of peer e-feedback on Turkish EFL students’ writing performance. Journal of Educational Computing Research 46: 61–84.

- Colpitts, Bradley D. F., and Travis Hunter Past. 2019. A Comparison of Computer-Mediated Peer Corrective Feedback between High and Low-Proficiency Learners in a Japanese EFL Writing Classroom. JALT CALL Journal 15: 23–39.

- Cunningham, Kelly J. 2019. Student perceptions and use of technology-mediated text and screencast feedback in ESL writing. Computers and Composition 52: 222–41.

- Cuocci, Sophie. 2022. The Effect of Written and Audiovisual Interactivity on Teacher Candidates’ Application of Instructional Support Practices for English Learners in an Online TESOL Course. Doctoral dissertation, University of Central Florida, Orlando, FL, USA. Available online: https://stars.library.ucf.edu/etd2020/1369 (accessed on 28 December 2022).

- Cuocci, Sophie, and Padideh Fattahi Marnani. 2022. Technology in the classroom: The features language teachers should consider. Journal of English Learner Education 14. Available online: https://stars.library.ucf.edu/jele/vol14/iss2/4/ (accessed on 28 December 2022).

- Cuocci, Sophie, and Rebeca Arndt. 2020. SEL for culturally and linguistically diverse students. Journal of English Learner Education 10: 4.

- DiGiovanni, Elaine, and Girija Nagaswami. 2001. Online peer review: An alternative to face-to-face? ELT Journal 55: 263–72.

- Dochy, Filip J. R. C., Mien Segers, and Dominique Sluijsmans. 1999. The use of self-, peer and co-assessment in higher education: A review. Studies in Higher Education 24: 331–50.

- Er, Erkan, Yannis Dimitriadis, and Dragan Gašević. 2021. A collaborative learning approach to dialogic peer feedback: A theoretical framework. Assessment & Evaluation in Higher Education 46: 586–600.

- Fattahi Marnani, Padideh, and Sophie Cuocci. 2022. Foreign language anxiety: A review on theories, causes, consequences and implications for educators. Journal of English Learner Education 14. Available online: https://stars.library.ucf.edu/jele/vol14/iss2/2/?utm_source=stars.library.ucf.edu%2Fjele%2Fvol14%2Fiss2%2F2&utm_medium=PDF&utm_campaign=PDFCoverPages (accessed on 28 December 2022).

- Fernandez, Walter D., Hans Lehmann, and Alan Underwood. 2002. Rigour and relevance in studies of IS innovation: A grounded theory methodology approach. Paper presented at the 10th European Conference on Information Systems, Gdańsk, Poland, June 6–8; Information Systems and the Future of the Digital Economy. pp. 110–19.

- Ferris, Dana R., and John Hedgcock. 2013. Teaching L2 Composition: Purpose, Process, and Practice. New York: Routledge.

- Glaser, Barney G. 1998. Doing Grounded Theory: Issues and Discussions. Mill Valley: Sociology Press.

- Glaser, Barney G., and Anselm L. Strauss. 1967. The Discovery of Grounded Theory: Strategies for Qualitative Research. New York: Aldine Publishing Company.

- Hansen, Jette G., and Jun Liu. 2005. Guiding principles for effective peer response. ELT Journal 59: 31–38.

- Hedgcock, John S. 2005. Taking stock of research and pedagogy in L2 writing. In Handbook of Research in Second Language Teaching and Learning. Edited by E. Hinkel. Mahwah: Lawrence Erlbaum Publishers.

- Ho, Mei-Ching. 2012. The efficiency of electronic peer feedback: From Taiwanese EFL students’ perspectives. International Journal of Arts & Sciences 5: 423.

- Ho, Mei-Ching. 2015. The effects of face-to-face and computer-mediated peer review on EFL writers’ comments and revisions. Australasian Journal of Educational Technology 31.

- Huisman, Bart, Nadira Saab, Paul van den Broek, and Jan van Driel. 2019. The impact of formative peer feedback on higher education students’ academic writing: A Meta-Analysis. Assessment & Evaluation in Higher Education 44: 863–80.

- Hussin, Supyan, Mohamad Yahya Abdullah, Noriah Ismail, and Soo Kum Yoke. 2015. The Effects of CMC Applications on ESL Writing Anxiety among Postgraduate Students. English Language Teaching 8: 167–72.

- Hyland, Ken, and Fiona Hyland. 2019. Feedback in Second Language Writing: Contexts and Issues. Cambridge: Cambridge University Press.

- Kayacan, Ayten, and Salim Razı. 2017. Digital self-review and anonymous peer feedback in Turkish high school EFL writing. Journal of Language and Linguistic Studies 13: 561–77.

- Kelly, Alison, and Kimberly Safford. 2009. Does teaching complex sentences have to be complicated? Lessons from Children’s Online Writing. Literacy 43: 118–22.

- Kim, Victoria. 2018. Technology-Enhanced Feedback on Student Writing in the English-Medium Instruction Classroom. English Teaching 73: 29–53.

- Kitade, Keiko. 2000. L2 learners’ discourse and SLA theories in CMC: Collaborative interaction in Internet chat. Computer Assisted Language Learning 13: 143–66.

- Kitchakarn, Orachorn. 2013. Peer feedback through blogs: An effective tool for improving students’ writing abilities. Turkish Online Journal of Distance Education 14: 152–64.

- Ko, Myong-Hee. 2019. Students’ reactions to using smartphones and social media for vocabulary feedback. Computer Assisted Language Learning 32: 920–44.

- Kostopoulou, Stergiani, and Fergus O’Dwyer. 2021. “We learn from each other”: Peer review writing practices in English for Academic Purposes. Language Learning in Higher Education (Berlin, Germany) 11: 67–91.

- Lantolf, James P. 2000. Introducing sociocultural theory. Sociocultural Theory and Second Language Learning 1: 1–26.

- Lehmann, Hans P. 2001. A Grounded Theory of International Information Systems. Unpublished doctoral dissertation, University of Auckland, Auckland, New Zealand.

- Li, Mimi, and Jinrong Li. 2017. Online peer review using Turnitin in first-year writing classes. Computers and Composition 46: 21–38.

- Lin, Ming Huei. 2015. Learner-centered blogging: A preliminary investigation of EFL student writers’ experience. Journal of Educational Technology & Society 18: 446–58.

- Lin, Ming Huei, Nicholas Groom, and Chin-Ying Lin. 2013. Blog-assisted learning in the ESL writing classroom: A phenomenological analysis. Journal of Educational Technology & Society 16: 130–39.

- Liou, Hsien-Chin, and Zhong-Yan Peng. 2009. Training effects on computer-mediated peer review. System 37: 514–25.

- Liu, Jun, and Randall W. Sadler. 2003. The effect and affect of peer review in electronic versus traditional modes on L2 writing. Journal of English for Academic Purposes 2: 193–227.

- Noroozi, Omid, Harm Biemans, and Martin Mulder. 2016. Relations between scripted online peer feedback processes and quality of written argumentative essay. The Internet and Higher Education 31: 20–31.

- Noroozi, Omid, and Javad Hatami. 2018. The effects of online peer feedback and epistemic beliefs on students’ argumentation-based learning. Innovations in Education and Teaching International 56: 548–57.

- Novakovich, Jeanette. 2016. Fostering critical thinking and reflection through blog-mediated peer feedback. Journal of Computer Assisted Learning 32: 16–30.

- Nguyen, Phuong Thi Tuyet. 2012. Peer feedback on second language writing through blogs: The case of a Vietnamese EFL classroom. International Journal of Computer-Assisted Language Learning and Teaching (IJCALLT) 2: 13–23.

- Pappamihiel, Eleni N. 2002. English as a second language students and English language anxiety: Issues in the mainstream classroom. Research in the Teaching of English 36: 327–55.

- Pennington, Martha C. 1993. Exploring the potential of word processing for non-native writers. Computers and the Humanities 27: 149–63.

- Pham, Ha Thi Phuong. 2020. Computer-mediated and face-to-face peer feedback: Student feedback and revision in EFL writing. Computer Assisted Language Learning 35: 2112–47.

- Pham, V. P. Ho. 2021. The effects of lecturer’s model e-comments on graduate students’ peer e-comments and writing revision. Computer Assisted Language Learning 34: 324–57.

- Ravand, Hamdollah, and Abbas Eslami Rasekh. 2011. Feedback in ESL writing: Toward an interactional approach. Journal of Language Teaching and Research 2: 1136.

- Saeed, Murad Abdu, Kamila Ghazali, Sakina Suffian Sahuri, and Mohammed Abdulrab. 2018. Engaging EFL learners in online peer feedback on writing: What does it tell us? Journal of Information Technology Education: Research 17: 39–61.

- Saglamel, Hasan, and Sakire Erbay Çetinkaya. 2022. Students’ Perceptions towards Technology-Supported Collaborative Peer Feedback. Indonesian Journal of English Language Teaching and Applied Linguistics 6: 189–206.

- Schultz, Jean Marie. 2000. Computers and collaborative writing in the foreign language curriculum. In Network-Based Language Teaching: Concepts and Practice. Edited by M. Warschauer and R. Kern. Cambridge: Cambridge University Press.

- Storch, Neomi. 2018. Written corrective feedback from sociocultural theoretical perspectives: A research agenda. Language Teaching 51: 262–77.

- Tajabadi, Azar, Moussa Ahmadian, Hamidreza Dowlatabadi, and Hooshang Yazdani. 2020. EFL learners’ peer negotiated feedback, revision outcomes, and short-term writing development: The effect of patterns of interaction. Language Teaching Research.

- Thompson, Riki, and Meredith J. Lee. 2012. Talking with students through screencasting: Experimentations with video feedback to improve student learning. The Journal of Interactive Technology and Pedagogy 1: 1–16.

- Tolosa, Constanza, Martin East, and Helen Villers. 2013. Online peer feedback in beginners’ writing tasks: Lessons learned. IALLT Journal of Language Learning Technologies 43: 1–24.

- Tuzi, Frank. 2004. The impact of e-feedback on the revisions of L2 writers in an academic writing course. Computers and Composition 21: 217–35.

- Vaezi, Shahin, and Ehsan Abbaspour. 2015. Asynchronous online peer written corrective feedback: Effects and affects. In Handbook of Research on Individual Differences in Computer-Assisted Language Learning. Hershey: IGI Global, pp. 271–97.

- Van den Berg, Ineke, Wilfried Admiraal, and Albert Pilot. 2006. Designing student peer assessment in higher education: Analysis of written and oral peer feedback. Teaching in Higher Education 11: 135–47.

- Vygotsky, Lev Semenovich, and Michael Cole. 1978. Mind in Society: Development of Higher Psychological Processes. Cambridge: Harvard University Press.

- Warnock, Scott. 2008. Responding to Student Writing with Audio-Visual Feedback. In Writing and the iGeneration: Composition in the Computer-Mediated Classroom. Edited by T. Carter, M. A. Clayton, A. D. Smith and T. G. Smith. Southlake: Fountainhead Press.

- Warschauer, Mark. 2010. Invited commentary: New tools for teaching writing. Language Learning & Technology 14: 38.

- Woo, Matsuko Mukumoto, Samuel Kai Wah Chu, and Xuanxi Li. 2013. Peer-feedback and revision process in a wiki mediated collaborative writing. Educational Technology Research and Development 61: 279–309.

- Xu, Qi, and Shulin Yu. 2018. An action research on computer-mediated communication (CMC) peer feedback in EFL writing context. The Asia-Pacific Education Researcher 27: 207–16.

- Yang, Yu-Fen, and Shan-Pi Wu. 2011. A collective case study of online interaction patterns in text revisions. Journal of Educational Technology & Society 14: 1–15.

- Yu, S., and C. Liu. 2021. Improving student feedback literacy in academic writing: An evidence-based framework. Assessing Writing.

- Zamel, Vivian. 1982. Writing: The process of discovering meaning. TESOL Quarterly 16: 195–209.

| Type of Research Design | Studies |
|---|---|
| Qualitative research design | |
| Mixed-method research design | |

| Study No. | Author (Year) | Name of Platform | Function of the Platform |
|---|---|---|---|
| 1 | Yang and Wu (2011) | Diff Engine | Diff Engine highlights newly added words and crosses out newly deleted words in the system to show revisions. |
| 2 | Colpitts and Past (2019) | Google Docs | Google Docs allows easy access to writing critiques, editing, and sharing peer feedback. |
| 3 | Xu and Yu (2018) | Qzone | A popular blog platform in China used to post student blogs. |
| 4 | Lin et al. (2013) | BALL | Used for blog-assisted language learning. |
| 5 | Chen (2012) | Blogging platform | A blog-based platform for peer reviewing (no specific name given). |
| 6 | Pham (2020) | Google Docs | Google Docs allows easy access to writing critiques, editing, and sharing peer feedback. |
| 7 | Kayacan and Razı (2017) | Edmodo | Edmodo is an educational technology platform for K-12 schools and teachers. It enables teachers to share content and distribute quizzes and assignments. |
| 8 | Ciftci and Kocoglu (2012) | Blogger (http://www.blogger.com, accessed on 28 December 2022) | Blogger is an American online content management system founded in 1999 that enables multi-user blogs with time-stamped entries. |
| 9 | Saeed et al. (2018) | Facebook Groups | A social networking website that can be used to exchange feedback for text revisions and written reflections. |
| 10 | Lin (2015) | Lang8 | A free language blogging website, Lang8 allows users to create their own blogs. |
| 11 | Li and Li (2017) | Turnitin | Turnitin offers features such as PeerMark that give students the ability to browse, analyze, score, and assess the papers that their classmates have turned in. |
| 12 | Kitchakarn (2013) | Blogger (Blogger.com) | Blogger serves as a platform for peer feedback exercises. It is an American online content management system founded in 1999 that enables multi-user blogs with time-stamped entries. |
| 13 | Woo et al. (2013) | Wiki tool (PBworks) | A wiki application called PBworks enables students to work together in writing courses, co-create their writing on PBworks pages made specifically for each group, and exchange helpful criticism and comments on the platform. |
| 14 | Nguyen (2012) | Blog (www.opera.com, accessed on 28 December 2022) | A class blog hosted on a free blog service site, where users can post details on grammatical constructions, writing conventions, and reading texts. |
| 15 | Saglamel and Çetinkaya (2022) | Google Docs | Peer reviews can be written, edited, and shared using Google Docs. |
| 16 | Abdullah et al. (2018) | CMC (online blog forum) | An online platform (CMC blog) that allows peer feedback exercises. |
| 17 | Hussin et al. (2015) | Blog and Facebook | CMC applications (blog and Facebook) improve writing ability through peer involvement and interaction. |
| 18 | Ho (2015) | OnlineMeeting | Computer-mediated peer review (CMPR) involves OnlineMeeting, an interface simulating a split-screen protocol specifically designed for synchronous online peer review. |
| 19 | Ho (2012) | OnlineMeeting and Word | Word allows students to directly type comments on the computer, track changes, and highlight important features. The OnlineMeeting system automatically generates web links allowing users access to online chat rooms. |
| 20 | Belén Díez-Bedmar and Pérez-Paredes (2012) | Moodle | Moodle is a Course Management System (CMS) or learning platform designed to provide educators, administrators, and learners with a single robust, secure, and integrated system to create personalized learning environments. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cuocci, S.; Fattahi Marnani, P.; Khan, I.; Roberts, S. A Meta-Synthesis of Technology-Supported Peer Feedback in ESL/EFL Writing Classes Research: A Replication of Chen’s Study. Languages 2023, 8, 114. https://doi.org/10.3390/languages8020114
