Abstract

This study explores the production and perception of word-final devoicing in German in text-to-speech (TTS) voices, generated with technology used in common voice-AI “smart” speaker devices (specifically, voices from Apple and Amazon), and in naturally produced utterances. First, we compared the phonetic realization of word-final devoicing across TTS and naturally produced words. Acoustic analyses reveal that the cues to a word-final voicing contrast vary across speech types. Naturally produced words with phonologically voiced codas contain partial voicing, as well as longer vowels than words with voiceless codas. However, these distinctions are not present in TTS speech. Next, to examine the intelligibility consequences of these devoicing patterns, German listeners completed a forced-choice identification task in which they heard the words and categorized the coda consonant. Listeners identified the intended coda more often for naturally produced words than for TTS words. Moreover, listeners systematically misidentified voiced codas as voiceless in TTS words. Overall, this study extends previous literature on speech intelligibility at the intersection of speech synthesis and contrast neutralization. TTS voices tend to neutralize salient phonetic cues present in natural speech; consequently, listeners are less able to identify phonological distinctions in TTS. We also discuss how investigating which cues are most salient in natural speech can inform synthetic speech generation, making it more natural and easier to perceive.

1. Introduction

Today, people interact with synthesized speech in a variety of situations. For instance, the prevalence of voice-activated artificially intelligent devices, which use text-to-speech (TTS) generation to communicate with users, means that comprehending synthetic speech can be an everyday event for many people. Intelligibility of TTS speech is both a practical and a theoretical issue. From a practical perspective, as TTS methods evolve, understanding the ways in which comprehension of synthetic speech deviates from that of natural speech can contribute to advances in speech technology (e.g., Duffy and Pisoni 1992). Theoretically, comparing how intelligibility differs across naturally produced and TTS voices can inform our understanding of which properties of the signal allow listeners to better understand a speaker’s intended message.

The current study asks whether the production and perception of phonological variation differ across naturally produced and TTS speech. We focus on word-final devoicing. Word-final devoicing is a cross-linguistically common phonological process, observed in languages such as German and Polish, that commonly leads to neutralization of the contrast between voiced and voiceless obstruents in word-final (coda) position (Dinnsen and Charles-Luce 1984; Port and O’Dell 1985; Winter and Röttger 2011). However, speakers often produce secondary phonetic features that differentiate word-final voiced and voiceless stops, such as differences in preceding vowel duration, consonant closure duration, and burst duration across words with underlying voiced and voiceless obstruent codas (Fourakis and Iverson 1984; Charles-Luce 1985). Thus, in natural speech, speakers appear to show only incomplete neutralization in a phonological process that could otherwise eliminate the contrast. Moreover, listeners are highly sensitive to these secondary acoustic properties in identifying the underlying phonological contrast: for instance, German listeners are able to accurately categorize word-final voiced and voiceless obstruents in naturally produced speech (Janker and Piroth 1999).

The current study asks two questions. First, how does the acoustic realization of word-final devoicing differ across naturally produced and (concatenative) TTS speech? Specifically, we ask whether the secondary phonetic cues to word-final obstruent voicing observed in naturally produced speech are present in TTS speech of the kind used in common household smart speakers, such as Amazon’s Echo. We compare several acoustic features in the realization of word-final devoicing in German across TTS and naturally produced word productions in order to quantify how they differ. Second, we explore the implications of any phonetic differences across natural and TTS speech for intelligibility. Specifically, is there a difference in listeners’ ability to identify the contrast between word-final voiced and voiceless codas across natural and TTS speech? We compare native listeners’ categorizations of German words with final voiced and voiceless codas across natural and TTS productions. Understanding how phonological variation differs across natural and synthetic speech can further delineate the remaining gap in generating the most naturalistic TTS. Moreover, from a practical perspective, outlining how the properties of synthetic speech lead to reductions in intelligibility, relative to natural speech, can be used to improve TTS in the future.

1.1. German Word-Final Devoicing in Production

Phonological neutralization occurs when a phonological distinction disappears in a specific context in a language. Many different phonological processes can lead to neutralization, resulting in a loss of lexical contrast in certain contexts. A cross-linguistically well-documented phonological process that leads to contrast neutralization is the devoicing of historically voiced obstruents in word-final position. This process is exemplified, and well studied, in German; for instance, the members of the minimal pair Bund (“league”) and bunt (“bright”) are both produced as [bunt]. In morphologically related forms, these underlying voiced stops surface (e.g., Bünde is pronounced [by:ndə], retaining full voicing), indicating that German speakers have paradigmatic evidence for the phonological form of the neutralized words.

There is much prior work investigating whether this process is categorical, resulting in full neutralization of minimal pair contrasts, or more gradient, with phonetic cues to the underlying voiced-voiceless distinction still present in the speech signal. Numerous studies have shown that neutralization of word-final voiced stops is indeed ‘incomplete’ in German production (Dinnsen and Charles-Luce 1984; Port and O’Dell 1985; Winter and Röttger 2011; Kharlamov 2012). For example, Port and O’Dell (1985) examine word-final neutralization of German stop voicing and its realization in natural speech as a “semicontrast” (p. 455). They investigate the production of word-final /b/-/p/, /d/-/t/, and /g/-/k/ in real words and find that word-final voiceless stops and word-final devoiced stops are not acoustically identical. They observe that devoiced stops showed (1) longer duration of the preceding vowel, (2) longer consonant closure duration, (3) shorter burst duration, and (4) some voicing in the coda stop closure, relative to underlyingly voiceless stops. Similarly, Fourakis and Iverson (1984) also found a significant difference in vowel duration and consonant duration, with both being longer for devoiced stops than for voiceless stops. Charles-Luce (1985) also found vowels preceding devoiced stops to be significantly longer than those preceding voiceless stops. Overall, multiple phonetic differences appear to distinguish underlying voiced and voiceless stops in German even after phonological devoicing, with preceding vowel duration being the most consistent acoustic difference.

Thus, prior work has established that the phonological devoicing of stops in German is ‘incomplete’. Incomplete neutralization of word-final devoicing has also been found cross-linguistically. For example, Warner et al. (2004) found similar subphonemic durational differences across multiple dimensions (e.g., vowel duration, burst duration) in the production of Dutch, a language closely related to German. Additionally for Dutch, Ernestus and Baayen (2006) found that incomplete neutralization has some functional relevance as a cue in past-tense formation. In a study on incomplete neutralization in Polish, Slowiaczek and Dinnsen (1985) found vowels preceding a devoiced stop to be longer. In Catalan, devoiced stops show longer voicing into closure (Charles-Luce and Dinnsen 1987). This suggests that the features of incomplete neutralization found in German are common in natural speech in other languages as well.

The phonological and phonetic variation found in natural language potentially presents a challenge to generating intelligible and naturalistic text-to-speech (TTS) utterances. Although modern speech synthesis generates highly naturalistic speech, there are still many differences between the acoustic properties of speech generated using TTS and those of speech produced naturally by people. In recent years, people have been interacting with digital devices more and more on a daily basis (Ammari et al. 2019), and engineers have worked to improve synthesis techniques to generate speech that more closely resembles naturally produced speech. Because the methods used in TTS synthesis are rapidly evolving (van den Oord et al. 2016), the need to explore how subphonemic phonological variation is represented in TTS-generated speech has grown. Understanding the extent to which fine phonetic detail is both present in speech production and exploited by listeners in speech perception is an important aspect of speech science (e.g., Hawkins 2003), and how such fine details are produced and perceived in TTS is part of that question. Our first research question is whether concatenative TTS contains the same subphonemic cues to incomplete neutralization in German that are observed in naturally produced speech.

1.2. The Perception of Word-Final Stops in German

The presence of subphonemic cues to the voicing contrast in word-final position in German, and in other languages, suggests that the phonological process does not result in complete neutralization of the lexical contrast. Indeed, much work has examined whether listeners can still identify the underlying phonological contrasts from these phonetic cues. For example, Port et al. (1981), and also Port and O’Dell (1985), found that listeners are able to identify the voicing category of word-final stops above chance for real German words. Kleber et al. (2010) presented German listeners with stimuli manipulated along continua of vowel duration and stop closure duration, ranging from values taken from word-final voiced stops to those of voiceless stops. They found that while listeners can distinguish categorically between the continuum endpoints, their judgments shift gradiently as the phonetic differences change; this indicates that listeners show gradient sensitivity to subphonemic cues.
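The step values used by Kleber et al. (2010) are not given here, so the sketch below only illustrates, under stated assumptions, how a durational continuum between two endpoint values can be computed by linear interpolation. The endpoint values, the five-step resolution, and the choice to move both cues together along a single continuum are all hypothetical.

```python
def make_continuum(start_ms: float, end_ms: float, n_steps: int) -> list[float]:
    """Return n_steps equally spaced values from start_ms to end_ms, endpoints included."""
    if n_steps < 2:
        raise ValueError("a continuum needs at least two steps")
    step = (end_ms - start_ms) / (n_steps - 1)
    return [start_ms + i * step for i in range(n_steps)]


# Hypothetical endpoint values (ms): the first value stands in for a token with an
# underlyingly voiced coda, the last for a token with a voiceless coda.
vowel_steps = make_continuum(180.0, 140.0, 5)    # longer vowel -> shorter vowel
closure_steps = make_continuum(80.0, 60.0, 5)    # longer closure -> shorter closure

# Assumption: both cues move together, step by step; they could instead be
# crossed in a factorial design.
stimuli = list(zip(vowel_steps, closure_steps))
for vowel_ms, closure_ms in stimuli:
    print(f"vowel {vowel_ms:.0f} ms, closure {closure_ms:.0f} ms")
```

Gradient sensitivity of the kind described above would show up as identification responses that change progressively across the intermediate steps, rather than switching abruptly at one point.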

As mentioned earlier, an open question is how phonological variation should be implemented and perceived in TTS. The goal of synthetic speech is to produce natural and intelligible speech. Thus, TTS that contains phonetic variation across phonological conditions similar to that found in natural speech might better achieve that goal. Prior work has found that adding to synthetic speech the fine phonetic details present in naturally produced speech improves perception. For example, listeners have been shown to better identify synthesized speech segments if they contained the contextually appropriate coarticulation (i.e., F2 lowering before /r/ or /z/) (Hawkins and Slater 1994). This demonstrates the critical role of context-specific variation, a ubiquitous feature of speech, in supporting successful phoneme identification in synthetic speech, similar to what is found in naturally produced speech. In the current study, we extend this line of research on how subphonemic variation in synthetic speech influences listener perception to word-final devoicing in German.

Our second research question is how subphonemic cues to word-final devoicing in naturally produced speech vs. TTS influence listener perception. While prior studies have investigated the perception of word-final devoicing in German, no prior work, to our knowledge, has investigated this question in synthesized speech. We are specifically interested in comparing natural speech and TTS, as TTS may contain reduced versions of the acoustic cues present in natural speech.

Why might the perception of incomplete neutralization differ between TTS and naturally produced speech? One possibility is that the degree of neutralization may differ in synthetic speech: the secondary cues to obstruent voicing contrasts that remain in naturally produced speech may be present to a smaller degree, or not at all, in TTS-generated speech, or different acoustic cues might be emphasized than in natural speech. For example, if the system implements word-final devoicing only as a categorical rule (i.e., concatenating a phonological /t/ without secondary cues), it may be unable to generate acoustic differences between [at] and [ad̥]. Alternatively, the system might prioritize only one acoustic cue, such as vowel duration, but ignore others, such as closure duration or f0. Such fine-grained acoustic differences could lead to differences in listener accuracy in identifying neutralized word-final obstruents in TTS speech. Fine acoustic details have been shown to drive differences in perceptual patterns; for example, Zellou et al. (2021) found that fine-tuned acoustic differences across TTS methods led to distinct patterns in listeners’ perception of coarticulation, rather than in perceived roboticity.
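To make the categorical-rule scenario concrete, here is a minimal, hypothetical sketch of a TTS front-end that applies word-final devoicing as a symbol substitution before unit selection. It is not a description of how the Apple or Amazon voices work; the SAMPA-style symbols and the mapping table are assumptions for illustration only.

```python
# Hypothetical front-end rule: SAMPA-style phoneme symbols for German voiced
# obstruents mapped to their voiceless counterparts.
FINAL_DEVOICING = {"b": "p", "d": "t", "g": "k", "v": "f", "z": "s"}


def apply_final_devoicing(phonemes: list[str]) -> list[str]:
    """Replace a word-final voiced obstruent with its voiceless counterpart.

    The rule is categorical: the output symbol keeps no trace of the underlying
    voicing, so any unit later selected for it is an ordinary voiceless unit.
    """
    if phonemes and phonemes[-1] in FINAL_DEVOICING:
        return phonemes[:-1] + [FINAL_DEVOICING[phonemes[-1]]]
    return list(phonemes)


bund = apply_final_devoicing(["b", "U", "n", "d"])  # Bund 'league'
bunt = apply_final_devoicing(["b", "U", "n", "t"])  # bunt
print(bund, bunt, bund == bunt)  # ['b', 'U', 'n', 't'] ['b', 'U', 'n', 't'] True
```

Because both inputs reach the synthesis back end as the same symbol string, nothing downstream can reintroduce a vowel duration or closure-voicing difference unless a separate mechanism encodes the underlying voicing.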

Another possibility is that listeners’ perception of the secondary cues remaining after incomplete neutralization is influenced by aspects of synthesized speech other than contrast-specific acoustic patterns. The concatenative nature of some types of TTS can cause noticeable mismatches in f0 and spectral properties at phoneme boundaries (Ávila et al. 2018); this can cause listeners to perceive speech as more “telegraphic”, so that they may not look to adjacent segments for cues to an obstruent’s voicing. In both of these scenarios, TTS-generated speech may not be identified with the same ease or accuracy as naturally produced speech, even when secondary phonetic cues to the contrast (in this case, preceding vowel duration) are neutralized.

1.3. Current Study

The current study aims to: (1) compare the phonetic realization of word-final voiceless and devoiced German stops in naturally produced and TTS speech and (2) investigate the phonological categorization of word-final devoiced and voiceless codas in naturally produced and synthesized speech.

Experiment 1 is a phonetic analysis of word-final devoiced and voiceless stops in German across naturally produced speech and TTS speech generated by two widely used commercial TTS systems (Apple and Amazon Polly). We examine residual voicing in the coda closure and measure consonant closure duration and preceding vowel duration, the subphonemic features commonly found to vary across words containing phonologically voiced and voiceless codas. In Experiment 2, we employ a phoneme identification task to test listeners’ ability to correctly identify intended voiced and voiceless codas in naturally produced and TTS speech. We test German listeners’ phoneme identifications on two types of stimuli. First, listeners performed the task on unaltered productions, allowing us to assess their identification accuracy given the existing variation across naturally produced and synthetic speech. Second, listeners made phoneme identifications on items that had been modified to neutralize vowel duration cues. The change in listener performance between the two stimulus types indicates the degree to which listeners rely on vowel duration differences to identify the voicing category of word-final consonants in German across speech types.

2. Experiment 1: Acoustic Properties of German Word-Final Stops in Natural and TTS Speech

2.1. Methods

2.1.1. Target Words

The target words selected for this study consisted of six consonant-vowel-consonant (CVC) nonword minimal pairs differing in coda voicing. Members of each minimal pair were matched in onset consonant and in coda place of articulation. Table 1 provides the target word list. Words with velar final obstruents were excluded because of a /g/-spirantization process in German. We selected nonwords to avoid effects of lexical frequency or usage on the degree of incomplete neutralization, such as have been reported in prior work (e.g., Winter and Röttger 2011).

Table 1.

Word pairs used in the present study.

For the natural speech productions, the target words were produced by two female native German speakers from Recklinghausen (NRW region, age = 30) and Kassel (Hesse region, age = 28). Target words were read in two repetitions, with the second repetition chosen for inclusion in order to avoid first-mention hyperarticulation effects.

For the synthetic speech, the target words were generated by two female German TTS voices from two different voice-AI platforms (Apple’s Anna and Amazon Polly’s Marlene). Both systems offer concatenative TTS voices for German. Concatenative voices are generated by segmenting recordings into individual phonemes, which are then reconcatenated into new utterances. This differs from neural methods, which use neural networks to generate speech and are generally only available for larger language markets like English and Mandarin. For the TTS tokens, only one repetition was generated. We included voices from two systems to ensure that patterns found in these tokens were not specific to one system.

2.1.2. Acoustic Measurements

We looked at whether coda stops were fully or partially devoiced and, in the case of the latter, how much of the consonant closure was voiced. This was done with Praat (version 6.1.34; Boersma and Weenink 2020) by looking for the existence of periodic voicing in the waveform and confirming the presence of a voice bar in the spectrogram. Word, stop consonant closure, and vowel duration were measured for each token. Vowel duration was measured from the onset to cessation of periodic voicing and clear formant structure. Stop closure duration was measured from the cessation of periodic voicing and formant structure until the burst associated with the release of the closure within the stop.
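The duration definitions above reduce to differences between hand-placed landmarks. The sketch below assumes each token has been annotated with three time points (vowel onset, vowel offset, and burst); the class and field names are hypothetical and are not taken from the authors' Praat workflow.

```python
from dataclasses import dataclass


@dataclass
class TokenLandmarks:
    """Hand-placed time points (in seconds) for one CVC token; field names are hypothetical."""
    vowel_onset: float   # onset of periodic voicing and clear formant structure
    vowel_offset: float  # cessation of periodic voicing and formant structure
    burst: float         # release burst of the coda stop


def vowel_duration_ms(lm: TokenLandmarks) -> float:
    # Vowel: from voicing/formant onset to voicing/formant cessation.
    return (lm.vowel_offset - lm.vowel_onset) * 1000.0


def closure_duration_ms(lm: TokenLandmarks) -> float:
    # Closure: from the end of the vowel up to the release burst.
    return (lm.burst - lm.vowel_offset) * 1000.0


token = TokenLandmarks(vowel_onset=0.050, vowel_offset=0.210, burst=0.285)
print(round(vowel_duration_ms(token), 1), round(closure_duration_ms(token), 1))
```

Defining both measures from a shared landmark (vowel offset) ensures that the vowel and the closure abut without gaps or overlap.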

2.1.3. Statistical Analysis

Each acoustic measurement was modeled in a separate linear mixed-effects regression using the lmer() function in the lme4 package (version 1.1-26; Bates et al. 2015) in R. Each model included fixed effects for Speaker Type (Naturally produced, TTS), Coda Voicing (Voiced, Voiceless), and Word Duration, as well as the two-way interaction between Speaker Type and Coda Voicing. The random-effects structure included by-speaker random intercepts and by-speaker random slopes for Coda Voicing. All categorical variables were treatment-coded.
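As an illustration of what treatment coding does to the fixed effects listed above, the sketch below expands one observation into its predictor row. It assumes Natural and Voiced as the reference levels, which matches how the main effects are described in the results but is not stated explicitly in the text. The random effects are omitted, and fitting is left to lme4; `predict` is a hypothetical helper, not an lme4 function.

```python
def fixed_effects_row(speaker_type: str, coda_voicing: str, word_dur_ms: float) -> list[float]:
    """Treatment-coded predictors: [intercept, TTS, Voiceless, TTS x Voiceless, Word Duration]."""
    if speaker_type not in ("Natural", "TTS") or coda_voicing not in ("Voiced", "Voiceless"):
        raise ValueError("unexpected factor level")
    tts = 1.0 if speaker_type == "TTS" else 0.0               # assumed reference: Natural
    voiceless = 1.0 if coda_voicing == "Voiceless" else 0.0   # assumed reference: Voiced
    return [1.0, tts, voiceless, tts * voiceless, word_dur_ms]


def predict(coefs: list[float], row: list[float]) -> float:
    """Fixed-effects prediction only (random effects ignored): coefficients dotted with the row."""
    return sum(b * x for b, x in zip(coefs, row))


for st in ("Natural", "TTS"):
    for cv in ("Voiced", "Voiceless"):
        print(st, cv, fixed_effects_row(st, cv, 200.0))
```

Under this coding, the Speaker Type and Coda Voicing coefficients are simple effects at the other factor's reference level, and the interaction term is non-zero only for TTS tokens with voiceless codas.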

2.2. Results

2.2.1. Voicing

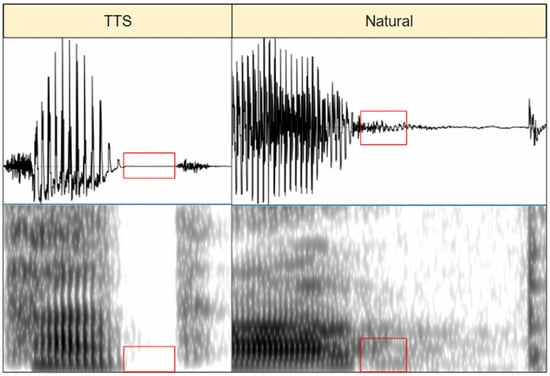

We analyzed whether coda consonants were completely devoiced or still contained partial voicing. This was done by measuring the duration of periodic voicing as indicated in the waveform and confirmed by the presence of a voice bar in the spectrogram (see Figure 1 for comparison). We then calculated the proportion of the consonant closure that was voiced; a higher percentage indicates more residual voicing. In naturally produced speech, we found varying degrees of residual voicing across tokens from both speakers. A total of 20 of the stops contained partial voicing in voiced codas. On average, 38.9% of the consonant closure duration contained residual voicing; this value ranged from 10% to 59% depending on the token. In TTS speech, by contrast, no voicing was found in consonant closures for either voice, indicating that these stops are fully devoiced.
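The voicing measure is the ratio of the voiced portion to the full closure. A minimal sketch follows; it assumes the reported mean and range are computed over tokens showing any residual voicing (the 10% minimum suggests fully devoiced tokens were not included), and the token values are invented.

```python
def voiced_proportion(voiced_ms: float, closure_ms: float) -> float:
    """Share of the stop closure showing periodic voicing (0.0 = fully devoiced)."""
    if closure_ms <= 0:
        raise ValueError("closure duration must be positive")
    if not 0.0 <= voiced_ms <= closure_ms:
        raise ValueError("voiced portion must lie within the closure")
    return voiced_ms / closure_ms


def summarize_partial_voicing(tokens: list[tuple[float, float]]) -> tuple[int, float, float, float]:
    """Count, mean, min and max voiced percentage over tokens with any residual voicing."""
    pcts = [100.0 * voiced_proportion(v, c) for v, c in tokens if v > 0]
    if not pcts:
        return 0, 0.0, 0.0, 0.0
    return len(pcts), sum(pcts) / len(pcts), min(pcts), max(pcts)


# Hypothetical (voiced ms, closure ms) pairs; the last token is fully devoiced.
tokens = [(6.0, 60.0), (30.0, 60.0), (0.0, 70.0)]
print(summarize_partial_voicing(tokens))
```

If fully devoiced tokens were included in the average instead, the mean would drop and the minimum would be 0%. This is why the inclusion criterion matters when comparing the figure across studies.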

Figure 1.

Waveform (top) and spectrogram (bottom) for TTS (left) and natural (right) productions of /pab/. The red square indicates where partial voicing occurs in natural speech but not in TTS speech.

2.2.2. Word Duration

Word duration across TTS and naturally produced words was measured. In natural speech, words ending in a voiced consonant had longer overall durations (270.6 ms) than words ending in a voiceless consonant (195.1 ms). In TTS speech, words ending in voiced consonants (155.7 ms) had similar durations to those ending in voiceless consonants (153.0 ms). Furthermore, naturally produced tokens were overall longer (232.9 ms) than TTS speech (154.4 ms).
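Each voice contributed one word with a voiced coda and one with a voiceless coda per minimal pair. Because the design is therefore balanced, the overall means are the unweighted averages of the two coda means, and the voicing-related difference can be read off the same values:

```latex
\begin{aligned}
\bar{d}_{\text{natural}} &= \tfrac{1}{2}(270.6 + 195.1) = 232.85 \approx 232.9~\text{ms}, &
\Delta_{\text{natural}} &= 270.6 - 195.1 = 75.5~\text{ms},\\
\bar{d}_{\text{TTS}} &= \tfrac{1}{2}(155.7 + 153.0) = 154.35 \approx 154.4~\text{ms}, &
\Delta_{\text{TTS}} &= 155.7 - 153.0 = 2.7~\text{ms}.
\end{aligned}
```

The voiced-voiceless word duration difference is thus roughly 28 times larger in natural speech than in TTS. This is one reason Word Duration is included as a covariate in the duration models.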

2.2.3. Consonant Closure Duration

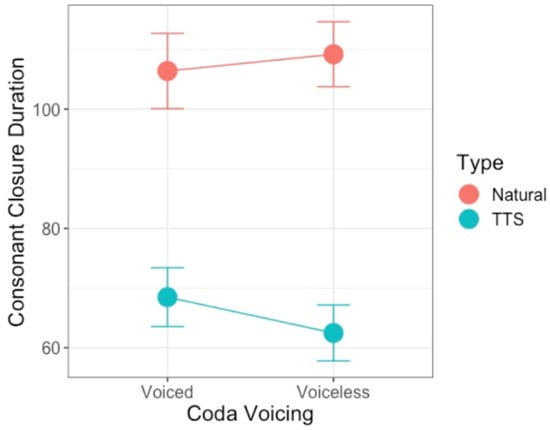

Figure 2 displays the consonant closure duration values for word-final voiced and voiceless stop codas across naturally produced and TTS productions. Table 2 provides the summary statistics of the model run on consonant closure duration. Word Duration was a significant predictor of consonant closure duration; as expected, words that were longer overall had longer consonant closure durations. The model computed a main effect of Speaker Type: as seen in Figure 2, the TTS productions had shorter consonant closure durations than naturally produced words overall. In addition, the model revealed a main effect of Coda Voicing, indicating that, overall, underlyingly voiceless stops had shorter closure durations than underlyingly voiced stops. Furthermore, there was an interaction between Speaker Type and Coda Voicing. Figure 2 also shows that while there appears to be a difference in closure duration between voiced and voiceless codas for TTS speech, there appears to be no such difference for naturally produced speech.

Figure 2.

Consonant closure duration (ms) by Speech Type (Natural, TTS) and Coda Voicing (Voiced, Voiceless).

Table 2.

Model output. Syntax: [Closure.Dur ~ Type × Voicing + Word.Dur + (1 + Voicing|Speaker)].

2.2.4. Preceding Vowel Duration

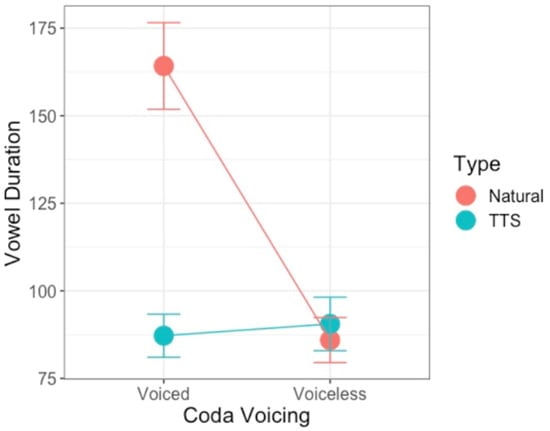

Figure 3 provides the mean durations for vowels preceding voiced and voiceless stops across Speaker Types, and a summary of statistics from the model run on preceding vowel duration is given in Table 3. Word Duration was a significant predictor of preceding vowel duration; as expected, words that were longer overall had longer preceding vowels. The model revealed a significant main effect of Coda Voicing, indicating that vowels before voiceless stops were systematically shorter than vowels before voiced stops. There was also a main effect of Speaker Type, with TTS voices overall having shorter vowel durations than naturally produced words. The model also computed a significant two-way interaction between Coda Voicing and Speaker Type: while vowels are longer before voiced codas than before voiceless codas in naturally produced speech, there was no difference between vowel durations before voiced and voiceless stops in TTS tokens.

Figure 3.

Preceding vowel duration (ms) by Speech Type (Natural, TTS) and Coda Voicing (Voiced, Voiceless).

Table 3.

Model output. Syntax: [Vowel.Dur ~ Type × Voicing + Word.Dur + (1+Voicing|Speaker)].

2.3. Interim Discussion

The acoustic analysis of nonwords containing voiced and voiceless stop codas, as produced by native German speakers and generated by German voice-AI assistant TTS voices, provides insight into how word-final codas are realized differently in these two speech types. First, we found partial voicing on 20 of 24 naturally produced coda stops, but no partial voicing in the TTS tokens. Thus, while underlyingly voiced stops are not fully devoiced in naturally produced speech, TTS speech contains full devoicing of underlyingly voiced stops. Overall, we also find that acoustic cues related to stop voicing remain in the speech signal after the phonological devoicing process has occurred. However, natural speech and TTS productions displayed different patterns of secondary cues to word-final voicing. While TTS contained only a final consonant closure duration difference across coda types (longer for voiced than for voiceless), naturally produced speech had only a vowel duration difference (longer vowels before word-final voiced stops).

3. Experiment 2: Perception of Word-Final Voicing

Experiment 1 revealed that the cues to word-final coda voicing in German were distinct across naturally produced and synthetic speech: specifically, natural speech contained distinct vowel duration patterns across words with phonologically voiced and voiceless codas, while TTS speech did not. The naturally produced words also contained only partial devoicing, whereas the TTS words were fully devoiced. Given these differences across naturally produced and TTS lexical items, we designed Experiment 2 to ask whether listeners use these distinctions in naturally produced speech to categorize word-final voiced and voiceless codas. If so, we also ask whether this leads to differences in the overall identifiability of voiced codas between speaker types. Prior work has found that listeners are sensitive to preceding vowel duration distinctions across phonologically voiced and voiceless codas in Germanic languages (Port and O’Dell 1985; Warner et al. 2004). Thus, we predict that listeners will be more accurate in categorizing coda voicing in naturally produced speech than in TTS speech, since only the former contains this secondary cue.

In the second part of the experiment, we neutralized vowel duration differences within pairs to test whether listeners can use cues on the coda consonant itself for identification. Consonant closure durations of voiced consonants have been found to be reliably longer than those of voiceless consonants (Stathopoulos and Weismer 1983), but in our materials this durational distinction was maintained only in TTS speech. While lacking this durational difference, natural speech did contain partial voicing during consonant closure. Therefore, we predict that listeners will still be able to reliably identify the phonological voicing status of coda consonants in natural speech.

3.1. Stimuli

The stimuli consisted of the 24 words produced by the four talkers from Experiment 1. Stimuli were normalized for intensity (60 dB), and naturally produced tokens were down-sampled from 44.1 kHz to 22.05 kHz to match the TTS voices. One set of stimuli remained as recorded (apart from intensity and sampling frequency normalization), and a second set was manipulated to create an altered stimulus set. For the altered stimuli, vowels were extracted from their original utterance. Vowels were then normalized for pitch (a 20% linear decrease across the vowel) and duration (the average duration within speaker and within pair) using the VocalToolkit plug-in (Corretge 2012) for Praat (version 6.1.34; Boersma and Weenink 2020). Normalizing these secondary cues allows us to test whether listeners are able to use acoustic cues remaining on the devoiced obstruent to correctly identify it as underlyingly voiced, in line with previous cue-weighting research (Clayards 2018). After the vowels were normalized, they were spliced back into their CVC contexts.
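The normalization targets can be expressed as simple arithmetic. The sketch below assumes, first, that "average within-speaker and within-pair duration" means both vowels of a speaker's minimal pair are set to their mean, and second, that "20% decrease linearly" means f0 falls in a straight line from its starting value to 80% of that value. Both readings are assumptions. The word labels and values are hypothetical, and the sketch does not reproduce VocalToolkit's own processing.

```python
def duration_scale_factors(pair_ms: dict[str, float]) -> dict[str, float]:
    """Scale factor per vowel so that each member of one speaker's pair reaches the pair mean."""
    target = sum(pair_ms.values()) / len(pair_ms)
    return {word: target / dur for word, dur in pair_ms.items()}


def falling_f0_contour(f0_start_hz: float, n_points: int, drop: float = 0.20) -> list[float]:
    """f0 falling linearly from f0_start_hz to (1 - drop) * f0_start_hz (assumed reading)."""
    if n_points < 2:
        raise ValueError("need at least two points")
    return [f0_start_hz * (1.0 - drop * i / (n_points - 1)) for i in range(n_points)]


# Hypothetical vowel durations (ms) for one speaker's /pab/-/pap/ pair.
factors = duration_scale_factors({"pab": 180.0, "pap": 140.0})
print({w: round(f, 3) for w, f in factors.items()})
print([round(f0, 1) for f0 in falling_f0_contour(220.0, 5)])
```

Since both members of a pair are mapped to the same target, the within-pair vowel duration difference is removed entirely. Any closure-internal cues, such as partial voicing, are left untouched.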

3.2. Participants and Procedure

Fifty-six listeners (47 female, 9 male; average age = 33.5 years, SD = 11.1) were recruited to participate remotely. All reported being native speakers of German, and none reported a history of hearing impairment. Before listeners began the experimental blocks, they were presented with the sentence “Ich habe einen Tisch” (“I have a table”), normalized to 60 dB, and were instructed to adjust their volume to a comfortable level and leave it there for the duration of the task. Stimuli were presented in two experimental blocks. In Block 1, trials contained the unaltered stimulus items with their original vowel duration and pitch contour. In Block 2, listeners were presented with the altered stimulus items, which contained vowels normalized within speaker and pair for vowel duration and pitch; pitch was normalized to eliminate potential effects it might have on perceived duration (Brigner 1988). On each trial, listeners were presented with a stimulus item and asked to identify the final segment of the word they heard by selecting it from two options; options were always orthographic depictions of the voicing contrast in German (e.g., “d” and “t” for pad). The order of voiced and voiceless options was randomized across trials. In addition to the experimental trials, a listening comprehension question was included between the blocks. For this question, listeners heard a sentence (“Wo ist die Katze”) and were asked to indicate what they heard from a list of three options (“Wo ist die Katze”, “Wo ist die Kuh”, and “Wo ist die Maus”; the order of presentation was randomized). Only data from participants who answered this question correctly were included in the analysis (all participants answered it correctly).

3.3. Results

Responses were coded for whether listeners identified the underlying coda voicing (=1) or not (=0). Responses were analyzed with a mixed-effects logistic regression model using the glmer() function in the lme4 package (version 1.1-26; Bates et al. 2015) in R. Fixed effects included Speaker Type (TTS, Naturally produced), Coda Voicing (Voiced, Voiceless), Log Word Duration, and Trial Type (Original Duration, Duration-Neutralized). We also included all possible two- and three-way interactions between Speaker Type, Coda Voicing, and Trial Type. Random effects included by-Listener random intercepts and by-Listener random slopes for Speaker Type and Trial Type (more complex random-effects structures led to convergence failures). To explore significant interactions, Tukey’s HSD pairwise comparisons were performed on the model using the emmeans() function in the emmeans R package (version 1.5.3; Lenth et al. 2021).
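The 1/0 coding compares the chosen letter's voicing category with the word's underlying coda voicing. Below is a minimal sketch that assumes the response options are limited to the labial and alveolar letters (velars were excluded from the stimuli); the mapping table and function name are hypothetical.

```python
# Hypothetical mapping from the orthographic response options to voicing categories
# (labial and alveolar stops only, since velars were excluded from the stimuli).
VOICING_OF_OPTION = {"b": "Voiced", "p": "Voiceless", "d": "Voiced", "t": "Voiceless"}


def code_response(underlying_voicing: str, chosen_option: str) -> int:
    """Return 1 if the chosen coda letter matches the word's underlying voicing, else 0."""
    return int(VOICING_OF_OPTION[chosen_option.lower()] == underlying_voicing)


# A voiced-coda item heard as "t" is coded 0; a voiceless-coda item heard as "t" is coded 1.
print(code_response("Voiced", "t"), code_response("Voiceless", "t"))
```

With this coding, a listener who always answers with the voiceless letter scores 1 on every voiceless-coda trial and 0 on every voiced-coda trial. A bias of this kind surfaces as a main effect of Coda Voicing, not as low accuracy across the board.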

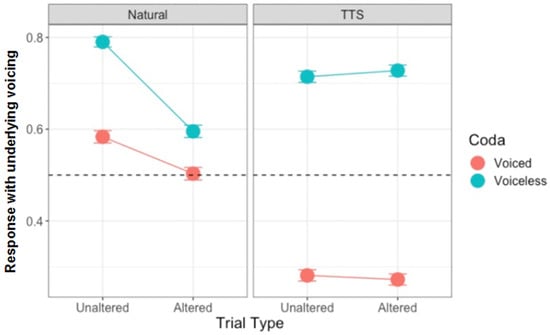

Table 4 provides the summary statistics from the model. Figure 4 displays participants’ aggregated responses to coda voicing identification for TTS-generated and natural speech across Coda Voicing and Trial Types. First, the model computed a significant main effect of Speaker Type: listeners responded with the underlying coda voicing less often for TTS-generated (49%) than for naturally produced (62%) lexical items, on average. Furthermore, there was a main effect of Coda Voicing: listeners responded with the underlying coda voicing less often for voiced codas (41%) than for voiceless codas (71%). The model also revealed a main effect of Trial Type, wherein listeners responded with the underlying coda voicing more often for unaltered lexical items containing original vowel durations (59%) than for duration-neutralized tokens (51%). Lastly, there was a main effect of Word Duration: listeners responded with the underlying coda voicing more often when the words were longer overall.
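The percentages above are raw proportions of 1-coded responses within each condition, not model estimates. The sketch below shows that aggregation on a handful of invented, already-coded trials. The field names are assumptions, and the output does not correspond to the study's data.

```python
from collections import defaultdict


def proportion_by(rows: list[dict], key: str) -> dict[str, float]:
    """Mean of the 0/1 'correct' field within each level of `key` (raw, not model-adjusted)."""
    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # level -> [n correct, n trials]
    for row in rows:
        tally = totals[row[key]]
        tally[0] += row["correct"]
        tally[1] += 1
    return {level: n_correct / n for level, (n_correct, n) in totals.items()}


# Hypothetical coded trials.
rows = [
    {"speaker_type": "TTS", "coda": "Voiced", "correct": 0},
    {"speaker_type": "TTS", "coda": "Voiceless", "correct": 1},
    {"speaker_type": "Natural", "coda": "Voiced", "correct": 1},
    {"speaker_type": "Natural", "coda": "Voiceless", "correct": 1},
]
print(proportion_by(rows, "speaker_type"))
print(proportion_by(rows, "coda"))
```

Because the logistic model also adjusts for Log Word Duration and by-listener variation, its estimates need not match these raw proportions exactly.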

Table 4.

Model output. Syntax: [ID ~ Type × Voicing × Trial + Word.Dur + (1+Type*Voicing*Trial|Speaker)].

Figure 4.

Aggregate listener responses by Trial Type (Unaltered, Altered) and Coda (Voiced, Voiceless); the left panel shows responses to natural speech and the right panel shows responses to TTS speech. Chance response pattern (binary choice) is 0.50, indicated with the dotted line.

The model also computed a significant interaction between Speaker Type and Trial Type. Tukey’s HSD pairwise comparisons revealed that listeners responded with the underlying coda voicing more in the unaltered trials than in the altered trials for the naturally produced speech (z = 10.86, p < 0.01), but there was no difference between Trial Types for TTS-generated speech (z = −0.18, p = 0.86). Listeners responded with the underlying coda voicing more for the natural speech in both the unaltered trials (z = 12.45, p < 0.01) and altered trials (z = 3.05, p = 0.002), but the difference was smaller in the altered trials.

The model also revealed a significant interaction between Speaker Type and Coda Voicing. Listeners identified voiceless codas as voiceless more often than they identified voiced codas as voiced, for both naturally produced (z = −11.70, p < 0.01) and TTS speech (z = −31.33, p < 0.01). While listeners responded with the underlying voicing more often for voiced codas in naturally produced than in TTS speech (z = 17.43, p < 0.01), identification of voiceless codas did not differ between Speaker Types (z = −1.36, p = 0.17). The interaction between Coda Voicing and Trial Type was also significant: listeners responded with the underlying coda voicing less often for voiced than for voiceless codas in both unaltered (z = −23.34, p < 0.01) and altered trials (z = −20.09, p < 0.01).

Finally, the model revealed a significant three-way interaction between Speaker Type, Trial Type, and Coda Voicing, illustrated in Figure 4. For naturally produced speech (left panel), listeners responded with the underlying coda voicing more often for voiceless than for voiced codas in both unaltered (z = −11.41, p < 0.01) and altered trials (z = −22.67, p < 0.01), but the difference between voiced and voiceless codas was larger in unaltered than in altered trials. For TTS-generated speech (right panel), listeners likewise responded with the underlying coda voicing more often for voiceless codas in both unaltered (z = −21.67, p < 0.01) and altered trials (z = −22.67, p < 0.01), and neither voiced nor voiceless codas differed between Trial Types.

4. General Discussion

The current study was designed to investigate the perception of word-final obstruent devoicing in German in concatenative TTS-generated and naturally produced speech. We first conducted an acoustic analysis of words produced by two female native speakers of German and generated by two female German TTS voices, to investigate the extent of neutralization in these voices. We found distinct acoustic realizations of word-final devoicing across voice types. First, whereas speakers produced only partial devoicing of underlyingly voiced stops in natural productions, TTS words contained full devoicing.

The realization of secondary acoustic cues also varied across voice types. In naturally produced speech, vowels were significantly longer before voiced (164 ms) than before voiceless (85 ms) stops, which is consistent with previous descriptions of word-final devoicing in German as an instance of incomplete neutralization (Port and O’Dell 1985; Charles-Luce 1985). In TTS speech, by contrast, vowel duration did not differ (87 ms before voiced; 85 ms before voiceless). Given that salient secondary acoustic cues can contribute to the robustness of a contrast for listeners (Stevens and Keyser 1989), a larger difference in the preceding vowel’s duration can be expected to help listeners.
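To put the reported group means on a common scale, the vowel-duration cue can be expressed both as an absolute difference and as a ratio. The short Python sketch below uses only the four means given in the paragraph above; it describes group averages, not per-item variability.

```python
def duration_contrast(before_voiced_ms, before_voiceless_ms):
    """Absolute (ms) and relative size of the vowel-duration voicing cue."""
    return before_voiced_ms - before_voiceless_ms, before_voiced_ms / before_voiceless_ms

# Group means reported above (ms): vowel before voiced coda, before voiceless coda
means = {"natural": (164, 85), "TTS": (87, 85)}
for voice, (voiced, voiceless) in means.items():
    diff, ratio = duration_contrast(voiced, voiceless)
    print(f"{voice}: difference = {diff} ms, ratio = {ratio:.2f}")
# natural: difference = 79 ms, ratio = 1.93
# TTS: difference = 2 ms, ratio = 1.02
```

In natural speech, vowels before voiced codas are nearly twice as long as those before voiceless codas, whereas in the TTS voices the ratio is essentially 1.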

Consonant closure duration, by contrast, differed between voiced and voiceless codas in TTS speech but not in naturally produced speech. Given that the TTS stops are completely devoiced, however, closure duration is perhaps a less robust cue to word-final voicing in German. While the difference in consonant closure duration may still be informative for listeners, it is not present in all stimuli and may therefore still pose problems for identifying voiced coda obstruents: in our stimuli, it appears that only alveolar consonants carry a closure-duration distinction in the TTS voices. This highlights a fundamental issue in the production of word-final devoicing in TTS speech: salient and informative secondary acoustic cues are excluded, leading to phonetic realizations that differ from those in natural speech. Taken together, the production data suggest that while neutralization of word-final stop voicing is incomplete in naturally produced German, it appears to be complete in TTS-generated speech, potentially causing difficulties for listeners interacting with these devices.

Experiment 2 examined the perceptual consequences of these word-final devoicing patterns across speech types. It consisted of a two-part forced-choice identification task in which listeners heard the words and categorized the coda consonant. Participants were presented with two types of trials: unaltered and altered. In the unaltered trials, participants heard the original productions of the wordlists, with only intensity and sampling rate normalized. The rate at which listeners identified the underlying coda voicing was higher overall for the naturally produced speech than for the TTS voices, for both voiced and voiceless codas. This is in line with our predictions, given that the naturally produced speech offered acoustic-phonetic distinctions along two dimensions while TTS speech offered only one. For natural voices, listeners identified underlyingly voiced codas at above-chance levels, but they did so only around 30% of the time for TTS voices; that is, listeners were reliably misidentifying voiced codas as voiceless rather than simply being unsure. This highlights the overall importance of the duration of the preceding vowel as an enhancing cue to contrastive stop voicing, as well as a possible overall bias toward identifying codas as voiceless.
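The distinction between "reliably misidentifying" and "simply being unsure" is a distinction between a rate reliably below 0.50 and a rate indistinguishable from 0.50. An exact two-sided binomial test against chance illustrates the logic. The counts below are hypothetical (the trial counts are not given in this section), and a simple binomial test ignores the repeated-measures structure across listeners and items that the mixed model in Table 4 accounts for, so this is an illustration only.

```python
from math import comb

def binom_two_sided_p(k, n, p=0.5):
    """Exact two-sided binomial test of k successes in n trials against rate p.

    Sums the probabilities of all outcomes that are no more likely than the
    observed one (the usual minimum-likelihood definition of a two-sided test).
    """
    probs = [comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    observed = probs[k]
    # small relative tolerance so tied outcomes are not lost to rounding error
    return min(1.0, sum(q for q in probs if q <= observed * (1 + 1e-7)))

# Hypothetical counts for illustration only (not the study's data):
print(binom_two_sided_p(30, 100))  # ~30% "voiced" responses: far below chance
print(binom_two_sided_p(50, 100))  # 50%: consistent with guessing
```

With 100 hypothetical trials, a 30% rate yields p well below 0.001, i.e., a systematic bias toward "voiceless", whereas a 50% rate yields p close to 1.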

In altered trials, vowel duration and pitch were normalized to remove vocalic information as an enhancing cue. Here, the rate of identification of underlyingly voiced codas dropped to chance level for the natural voices and stayed around 30% for the TTS voices, showing that listeners were no longer able to identify voicing in these positions. For the natural voices, identification of the underlying coda voicing dropped significantly between unaltered and altered trials for both voiced and voiceless codas, showing that neutralizing the secondary cue of vowel duration was particularly detrimental to the perception of coda voicing. Meanwhile, responses to TTS voices did not differ across trial types, suggesting that the preceding vowel was already uninformative for listeners before manipulation.
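The exact normalization procedure is described in the methods rather than here. As a hypothetical illustration of what "duration-neutralized" can mean, one simple scheme sets both vowels of a voiced/voiceless pair to the pair's mean duration and computes the time-scaling factor each vowel would need. The function name and the pair-mean target are assumptions for this sketch, not the study's procedure; the factors would then have to be applied with a duration-manipulation tool (e.g., in Praat), and pitch normalization is not shown.

```python
def duration_neutralize(voiced_ms, voiceless_ms):
    """Hypothetical scheme: scale both vowels of a minimal pair to their mean.

    Returns the common target duration (ms) and the time-scaling factor to
    apply to each vowel (>1 lengthens, <1 shortens).
    """
    target = (voiced_ms + voiceless_ms) / 2
    return target, target / voiced_ms, target / voiceless_ms

# Using the natural-speech group means reported in the production analysis
target, f_voiced, f_voiceless = duration_neutralize(164, 85)
print(f"target = {target} ms, voiced x{f_voiced:.2f}, voiceless x{f_voiceless:.2f}")
# target = 124.5 ms, voiced x0.76, voiceless x1.46
```

Because natural vowels differ by roughly a factor of two, neutralizing them requires substantial shortening of one member and lengthening of the other; for the TTS means (87 vs. 85 ms) the factors would be close to 1, in line with the absence of a Trial Type effect for TTS voices.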

There were a number of limitations to the current study that offer possible avenues for future research. First, our study had a relatively limited scope, looking only at monosyllabic non-words in German concatenative TTS voices. The patterns found in these voices might differ from those generated with neural methods, given the distinct methods of voice synthesis. Furthermore, our study used voices from only two systems: Apple and Amazon. Because independently developed speech synthesis systems could produce slightly different voice patterns, comparing across a wider range of TTS voices and systems is a promising area for future work.

Furthermore, the relatively low overall identification accuracy for voiced codas in the original natural-speech trials found here (60%) and in other studies (e.g., Port et al. 1981 found 60% accuracy in their listening test) raises the question of whether the cues found in incomplete neutralization are indeed useful in speech perception, although this rate is still high enough to argue for incomplete rather than complete neutralization. It has been argued that incomplete neutralization bears no functional relevance in German, as most lexical items undergoing it would never occur in the same contexts (Röttger et al. 2011). Of course, the results shown here could be an artifact of the experimental design, which forced participants to choose between two voicing options. However, they do show that listeners are able to exploit fine phonetic detail when faced with the task of disambiguating stops in a neutralizing context.

Overall, this study extends previous literature on speech perception at the intersection of contrast neutralization and synthesized speech. Our data show that concatenative TTS voices tend to neutralize salient phonetic cues present in natural speech, cues that listeners use to disambiguate phonemic contrasts in the absence of other contextual information. We also suggest that investigating which cues are most salient in the perception of natural speech can inform how fine phonetic detail is modeled in the architecture of TTS voices, helping to make them not only more natural but also easier to perceive, which could be especially useful in noisy environments and for listeners with hearing impairments.

5. Conclusions

We believe the data presented here offer evidence for the importance of including phonetic variation and nuance within voice-AI systems in order to both improve intelligibility for users and generate more natural sounding voices in the future. As our data demonstrate, excluding cues that are important in the perception of natural speech leads, at best, to lower accuracy and, at worst, to increased ambiguity.

Author Contributions

Conceptualization, A.B. and K.P.; methodology, A.B.; formal analysis, A.B.; data curation, A.B. and K.P.; writing—original draft preparation, A.B., K.P., and G.Z.; writing—review and editing, A.B. and G.Z.; visualization, A.B.; supervision, A.B. and G.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of the University of California, Davis (Protocol 1296810-1, approved 10 February 2018).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ammari, Tawfiq, Jofish Kaye, Janice Y. Tsai, and Frank Bentley. 2019. Music, Search, and IoT: How People (Really) Use Voice Assistants. ACM Transactions in Computer Human Interaction 26: 17-1–17-28.

- Ávila, Margarita Selene Salazar, Jose Antonio Trejo Carrillo, Fabian Navarrete Rocha, Naram Isai Hernandez Belmontes, J. R. Zamarrón, Javier Saldivar Pérez, Cesar Gamboa Rosales, Lorena Raquel Casanova Luna, Abubeker Gamboa Rosales, and Claudia Sifuentes Gallardo. 2018. Pitch marking study towards high quality in concatenative based speech synthesis. International Journal of Progress in Science Technology 10: 163–69.

- Bates, Douglas, Martin Maechler, Ben Bolker, S. Walker, R. H. B. Christensen, H. Singmann, and M. B. Bolker. 2015. Package ‘lme4’. Convergence 12: 437.

- Boersma, Paul, and David Weenink. 2020. Praat: Doing Phonetics by Computer: Version 6.1.34. Amsterdam: Instituut voor Fonetische Wetenschappen.

- Brigner, Willard L. 1988. Perceived duration as a function of pitch. Perceptual and Motor Skills 67: 301–2.

- Charles-Luce, Jan. 1985. Word-final devoicing in German: Effects of phonetic and sentential contexts. Journal of Phonetics 13: 309–24.

- Charles-Luce, Jan, and Daniel Dinnsen. 1987. A reanalysis of Catalan devoicing. Journal of Phonetics 15: 187–90.

- Clayards, Meghan. 2018. Differences in cue weights for speech perception are correlated for individuals within and across contrasts. The Journal of the Acoustical Society of America 144: EL172–77.

- Corretge, Ramon. 2012. Praat Vocal Toolkit. Available online: http://www.praatvocaltoolkit.com (accessed on 3 June 2018).

- Dinnsen, Daniel, and Jan Charles-Luce. 1984. Phonological neutralization, phonetic implementation and individual differences. Journal of Phonetics 12: 49–60.

- Duffy, Susan A., and David B. Pisoni. 1992. Comprehension of synthetic speech produced by rule: A review and theoretical interpretation. Language and Speech 35: 351–89.

- Ernestus, Mirjam, and R. Harald Baayen. 2006. The functionality of incomplete neutralization in Dutch: The case of past-tense formation. Laboratory Phonology 8: 27–49.

- Fourakis, Marios, and Gregory K. Iverson. 1984. On the “incomplete neutralization” of German final obstruents. Phonetica 41: 140–49.

- Hawkins, Sarah. 2003. Roles and representations of systematic fine phonetic detail in speech understanding. Journal of Phonetics 31: 373–405.

- Hawkins, Sarah, and Andrew Slater. 1994. Spread of CV and V-to-V coarticulation in British English: Implications for the intelligibility of synthetic speech. Proceedings of the International Conference of Spoken Language Processing 94: 57–60.

- Janker, Peter M., and Hans Georg Piroth. 1999. On the perception of voicing in word-final stops in German. Paper presented at the 14th International Congress on Phonetic Sciences (ICPhS), San Francisco, CA, USA, August 1–7; pp. 2219–22.

- Kharlamov, Viktor. 2012. Incomplete Neutralization and Task Effects in Experimentally-Elicited Speech: Evidence from the Production and Perception of Word-Final Devoicing in Russian. Doctoral dissertation, University of Ottawa, Ottawa, ON, Canada.

- Kleber, Felicitas, Tina John, and Jonathan Harrington. 2010. The implications for speech perception of incomplete neutralization of final devoicing in German. Journal of Phonetics 38: 185–96.

- Lenth, Russell, Maxime Herve, Jonathon Love, Hannes Riebl, and Henrik Singman. 2021. Package ‘Emmeans’ [Software Package]. Available online: https://github.com/rvlenth/emmeans (accessed on 2 January 2022).

- Port, Robert, and Michael O’Dell. 1985. Neutralization of syllable-final voicing in German. Journal of Phonetics 13: 455–71.

- Port, Robert, Fares Mitleb, and Michael O’Dell. 1981. Neutralization of obstruent voicing in German is incomplete. Journal of the Acoustical Society of America 70: S13.

- Röttger, Timo, Bodo Winter, and Sven Grawunder. 2011. The Robustness of Incomplete Neutralization in German. Paper presented at the 17th International Congress on Phonetic Science (ICPhS), Hong Kong, China, August 17–21; pp. 1722–25.

- Slowiaczek, Louisa M., and Daniel Dinnsen. 1985. On the neutralizing status of Polish word-final devoicing. Journal of Phonetics 13: 325–41.

- Stathopoulos, Elaine T., and Gary Weismer. 1983. Closure duration of stop consonants. Journal of Phonetics 11: 395–400.

- Stevens, Kenneth N., and Samuel Jay Keyser. 1989. Primary features and their enhancement in consonants. Language 65: 81–106.

- van den Oord, Aaron, Sander Dieleman, Heiga Zen, Karen Simonyan, Oriol Vinyals, Alex Graves, Nal Kalchbrenner, Andrew Senior, and Koray Kavukcuoglu. 2016. Wavenet: A generative model for raw audio. arXiv:1609.03499.

- Warner, Natasha, Allard Jongman, Joan Sereno, and Rachel Kemps. 2004. Incomplete neutralization and other sub-phonemic durational differences in production and perception: Evidence from Dutch. Journal of Phonetics 32: 251–76.

- Winter, Bodo, and Timo Röttger. 2011. The nature of incomplete neutralization in German: Implications for laboratory phonology. Grazer Linguistische Studien 76: 55–74.

- Zellou, Georgia, Michelle Cohn, and Aleese Block. 2021. Partial compensation for coarticulatory vowel nasalization across concatenative and neural text-to-speech. The Journal of the Acoustical Society of America 149: 3424–36.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).