Abstract

In this study, we present improvements in N-best rescoring of code-switched speech achieved by n-gram augmentation as well as optimised pretraining of long short-term memory (LSTM) language models with larger corpora of out-of-domain monolingual text. Our investigation specifically considers the impact of the way in which multiple monolingual datasets are interleaved prior to being presented as input to a language model. In addition, we consider the application of large pretrained transformer-based architectures, and present the first investigation employing these models in English-Bantu code-switched speech recognition. Our experimental evaluation is performed on an under-resourced corpus of code-switched speech comprising four bilingual code-switched sub-corpora, each containing a Bantu language (isiZulu, isiXhosa, Sesotho, or Setswana) and English. We find in our experiments that, by combining n-gram augmentation with the optimised pretraining strategy, speech recognition errors are reduced for each individual bilingual pair by 3.51% absolute on average over the four corpora. Importantly, we find that even speech recognition at language boundaries improves by 1.14% even though the additional data is monolingual. Utilising the augmented n-grams for lattice generation, we then contrast these improvements with those achieved after fine-tuning pretrained transformer-based models such as distilled GPT-2 and M-BERT. We find that, even though these language models have not been trained on any of our target languages, they can improve speech recognition performance even in zero-shot settings. After fine-tuning on in-domain data, these large architectures offer further improvements, achieving a 4.45% absolute decrease in overall speech recognition errors and a 3.52% improvement over language boundaries. 
Finally, a combination of the optimised LSTM and fine-tuned BERT models achieves a further gain of 0.47% absolute on average for three of the four language pairs compared to M-BERT. We conclude that the careful optimisation of the pretraining strategy used for neural network language models can offer worthwhile improvements in speech recognition accuracy even at language switches, and that much larger state-of-the-art architectures such as GPT-2 and M-BERT promise even further gains.

1. Introduction

Language modelling in under-resourced settings, such as those encountered for African languages, is challenging (Mesham et al. 2021; Wills et al. 2020). In comparison with the large datasets available for English and other highly resourced languages, very few large corpora are available for African languages, and those that are available cover a narrow domain. In addition to this, code-switching, which involves the use of multiple languages within or between utterances, is common in everyday speech in African countries. In the context of language modelling and speech recognition, code-switching results in high confusion and consequently increased errors around language switches, as shown in this work. Research notes that code-switches vary between speakers and across speech domains. In addition, data for modelling code-switching is scarce and therefore modelling the phenomenon statistically is challenging (Chang et al. 2019). As a consequence, code-switching is challenging to model within a single linguistic paradigm, such as matrix language frame theory (Myers-Scotton 1997) or equivalence constraint theory (Poplack 2000).

In this work we consider the inclusion of larger monolingual corpora in both related and unrelated languages for language model pretraining as a way of alleviating data scarcity. We optimise the way in which two large monolingual datasets (typically English and a respective Bantu language) are interleaved, either by shuffling the sets at the sequence level or by presenting only one language within a training batch, before being presented as input to the language model. To the best of our knowledge this aspect of code-switched language model pretraining has not been reported on before. We show the surprising result that, although the additional data is monolingual, its inclusion allows us to improve speech recognition accuracy, even across language switches. Additionally, we show that even when language models are pretrained on completely unrelated languages, it is still possible to achieve improvements in speech recognition, again even across language switches.

We present this work in two parts. Firstly, we optimise the pretraining of long short-term memory (LSTM) language models utilising available out-of-domain monolingual corpora in our target languages: isiZulu, isiXhosa, Sesotho, Setswana, and English. These language models are then fine-tuned on sets of bilingual soap opera data (van der Westhuizen and Niesler 2018) and used for N-best rescoring. Synthetic code-switched data generated in previous research is also incorporated into our pretraining data. We show that the neural language models improve substantially when pretrained using a curated dataset. This holds even when the vocabulary is closed on the types in the training, development and test sets for each respective sub-corpus in the under-resourced dataset, which results in high out-of-vocabulary rates (26.13–70.72%) during pretraining on the respective out-of-domain corpora.

In the second portion of this work we refrain from pretraining the neural language models ourselves and instead utilise a selection of publicly available models that have not been trained on any of the target languages considered in this work1, and that have not been applied to code-switched speech including African languages before. We find that, when applying these models during N-best rescoring after fine-tuning on the same in-domain data used in the LSTM experiments, we again see improved speech recognition even over language switches. In fact, even without fine-tuning (a zero-shot setting) small improvements are possible. We note that the application of bidirectional models in N-best rescoring has not been thoroughly investigated before, and this is the first study to present results for code-switched speech recognition.

The remainder of this document is organised as follows. Section 2 provides a description of the background and literature. Section 3 describes the corpora utilised in this work, and Section 4 presents our experimental setup, detailing how the corpora and model architectures are utilised in pretraining and augmentation experiments. The augmented n-gram and neural language models are then utilised for lattice generation and N-best rescoring, respectively. The results from these experiments are presented in Section 5. This section also presents the rescoring results utilising the publicly available pretrained models. Finally, Section 6 concludes.

2. Background

Research has found that pretraining on synthetic data generated by an adversarial network can improve code-switched language modelling (Garg et al. 2018). Additionally, an extensive investigation of time delay neural networks (TDNN), LSTM, transformer, and other neural language models found that, for the two African languages, isiZulu and Sepedi, the inclusion of training data from nine non-European South African languages improves language modelling performance (Mesham et al. 2021). Improvements in language modelling and speech recognition for two under-resourced South African languages—isiZulu and Setswana—are also achieved by using sub-word and multi-word tokenization techniques, respectively (Wills et al. 2020).

Utilising the same corpus of multilingual code-switched speech considered in this work, Biswas et al. (2022) investigate the impact of the inclusion of both acoustic and textual data on speech recognition accuracy. Specifically, four balanced bilingual corpora are used to train bilingual speech recognition systems. The subsequent inclusion of more in-domain speech, which leads to an imbalance in the training data, improves performance. Further improvements are afforded by including out-of-domain monolingual speech (Barnard et al. 2014) in each of the considered languages. Finally, speech recognition in the same languages can also be improved by incorporating additional out-of-domain monolingual text as well as synthetically generated code-switched bigrams to the language model (van der Westhuizen and Niesler 2019).

Typically the performance achieved by current state-of-the-art language models is attributed to their large training corpora, which are far larger even than our monolingual pretraining sets. However, research has shown that competitive multilingual language models can be trained utilising data from under-resourced (<1 GB training data) African languages (Ogueji et al. 2021), even when compared to large M-BERT (Devlin et al. 2019) models trained on much larger amounts (100 GB) of mostly non-African languages.

In Ralethe (2020), a monolingual Afrikaans BERT model was shown to outperform a fine-tuned multilingual BERT in named entity recognition and dependency parsing. Utilising an Afrikaans text corpus, a new sub-word encoded vocabulary was created. The monolingual Afrikaans BERT model was then initialised utilising the parameters of M-BERT. However, only the overlapping sub-word embeddings were utilised, while all new tokens in the vocabulary were assigned randomly initialised embeddings.

Research aimed specifically to improve code-switched language modelling and speech recognition has employed state-of-the-art architectures for text synthesis. Pretrained BERT models have been employed in adversarial fine-tuning frameworks to generate code-switched text (Gao et al. 2019), which was shown to improve speech recognition accuracy when the data is incorporated into the language model training data. State-of-the-art transformer models (Vaswani et al. 2017) have also been utilised to generate code-switched text, which, when used to train an LSTM model, reduced test set perplexities (Tarunesh et al. 2021).

In this work we demonstrate improvements in language modelling and speech recognition by optimising the pretraining strategy for an LSTM language model utilised in N-best rescoring experiments. We show the surprising result that although the pretraining data is monolingual, we observe improvements even across language switches. We also demonstrate that large publicly available architectures, such as multilingual BERT and distilled GPT-2, are able to improve the speech recognition accuracy for the same code-switched speech. Use of these architectures can be seen as a way of pretraining on very large out-of-domain and out-of-language datasets. To the best of our knowledge, this is the first study which investigates the impact of such bidirectional transformer models on code-switched speech recognition and particularly utilising African languages (Ortiz and Burud 2021; Shin et al. 2019).

3. Datasets

For experimentation, we utilise an under-resourced corpus of code-switched speech collected from South African soap operas (van der Westhuizen and Niesler 2018). We evaluate the performance of our pretraining and augmentation techniques using the development and test sets defined in this corpus. The dataset is split into four bilingual corpora, each consisting of a Bantu language and English, as shown in Table 1.

Table 1.

The soap opera corpus, showing the four bilingual corpora (English–isiZulu, English–isiXhosa, English–Sesotho, and English–Setswana). For each corpus, the total number of word tokens (Tok), word types (Uniq), and code switches (CS) is presented. We denote the number of code-switches from English to a Bantu language as CSEB, while CSBE indicates the number of switches from Bantu to English. The final column (Dur) presents the duration of each corpus in minutes (m) or hours (h). Adapted from van der Westhuizen and Niesler (2018).

For each of the languages in this corpus, a respective out-of-domain monolingual corpus is available, which has been collected from newspapers and web content (Biswas et al. 2022). For each of the sub-corpora we define a bilingual vocabulary closed on the types in the training, development, and test sets highlighted in Table 1. In Table 2 we present the out-of-vocabulary rates (OOV) when utilising these respective vocabularies on the Bantu and English monolingual corpora. We note that in general the out-of-vocabulary rates are high, in excess of 26%. It is also clear from the table that the out-of-vocabulary rates are always higher for the Bantu corpora than for English. This is especially true for the Nguni languages (isiZulu and isiXhosa) which are known to have large vocabularies due to their agglutinative orthographies.

Table 2.

Out-of-vocabulary rates for the monolingual corpora when a vocabulary is closed on the four respective bilingual datasets.
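The out-of-vocabulary rate described above can be illustrated with a minimal sketch. It assumes the rate is measured over running word tokens (the text does not say whether tokens or types are counted), and the function name `oov_rate` and the toy data are hypothetical.

```python
def oov_rate(tokens, vocabulary):
    """Percentage of running tokens that fall outside a closed vocabulary.

    Assumption: the rate is computed over tokens rather than word types.
    """
    if not tokens:
        return 0.0
    unknown = sum(1 for tok in tokens if tok not in vocabulary)
    return 100.0 * unknown / len(tokens)


# Hypothetical closed vocabulary drawn from a small bilingual corpus.
vocabulary = {"i", "want", "this", "mngani", "at", "least", "zama"}

# Hypothetical out-of-domain monolingual text.
monolingual_tokens = "i want this book at the very least".split()

rate = oov_rate(monolingual_tokens, vocabulary)
print(f"OOV rate: {rate:.2f}%")
```

In this toy case three of the eight tokens ("book", "the", "very") are not in the vocabulary.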

Additionally, we utilise four existing synthetic corpora of bilingual code-switched text, which were generated using an existing LSTM network (Jansen van Vueren and Niesler 2021). The token counts for the monolingual and the synthesized text are given in Table 3. Below are examples of synthetic English-isiZulu code-switched utterances. In each case, isiZulu is presented in italics, and dashes (-) denote the joining of an isiZulu prefix or suffix with an English word as part of an intra-word code-switch. The English translation is provided in parentheses below each example:

Table 3.

Token counts for the four available monolingual corpora, as well as for the synthetic code-switched corpus, which contains both English and the respective Bantu language.

- Alternational switches:
  - Yebo ntwana wow. (Yes child wow.)
  - At least zama. (At least try.)
  - I want this mngani. (I want this companion.)
- Intra-word switches:
  - Uphenyo ama-examples. (Browse through the examples.)
  - Ngithi u-relax. (I say relax.)
- Intra-word and insertional switches:
  - Uma lokho i-move-e out. (If that one moves out.)

4. Experimental Setup

In this section we present the experimental setup of our work. We begin, in Section 4.1, by outlining the evaluation metrics utilised in our language modelling and speech recognition experiments. In Section 4.2 we detail the experimental setup of our baseline speech recognition system, and specify the toolkits we employed to train our n-gram models and neural networks. Section 4.3 describes the architecture of the baseline and pretrained LSTM language models. Subsequently, Section 4.4 presents our LSTM pretraining optimisation strategy, Section 4.5 presents the n-gram optimisation strategy, and finally Section 4.6 presents the fine-tuning strategies for larger pretrained transformer architectures.

4.1. Code-Switched Perplexity (CPP) and Bigram Error Rate (CSBG)

We evaluate the performance of our language models utilising the perplexity calculated for the development and test sets as defined in Table 1. To analyse the model performance specifically over code-switches, we also calculate the average perplexity over only language switches, and refer to this as the code-switched perplexity (CPP). In a similar fashion, we evaluate speech recognition performance at language switches by specifically calculating the speech recognition error rate only at the point of language transition, and refer to this as the code-switched bigram error rate (CSBG).
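As a rough illustration of the code-switched perplexity, the sketch below restricts the usual perplexity computation to tokens whose language differs from that of the preceding token. The per-token language tags, the toy probabilities, and the function names are assumptions made for this example; the exact bookkeeping used in the experiments is not specified here. The code-switched bigram error rate would additionally require an alignment between hypothesis and reference and is not sketched.

```python
import math


def perplexity(log_probs):
    """Perplexity from a list of natural-log token probabilities."""
    return math.exp(-sum(log_probs) / len(log_probs))


def switch_positions(lang_tags):
    """Indices of tokens whose language differs from the previous token."""
    return [i for i in range(1, len(lang_tags)) if lang_tags[i] != lang_tags[i - 1]]


def code_switched_perplexity(log_probs, lang_tags):
    """Perplexity computed only over tokens at language switches."""
    idx = switch_positions(lang_tags)
    if not idx:
        raise ValueError("sequence contains no language switches")
    return perplexity([log_probs[i] for i in idx])


# Hypothetical utterance: E = English, Z = isiZulu.
lang_tags = ["E", "E", "Z", "Z", "E"]
token_probs = [0.5, 0.25, 0.125, 0.5, 0.25]  # toy model probabilities
log_probs = [math.log(p) for p in token_probs]

pp = perplexity(log_probs)
cpp = code_switched_perplexity(log_probs, lang_tags)
print(f"PP = {pp:.3f}, CPP = {cpp:.3f}")
```

In this toy case the two switch tokens (positions 2 and 4) receive lower probabilities than the rest, so the code-switched perplexity is higher than the overall perplexity.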

4.2. Speech Recognition

The speech recognition system utilised in our work is trained using the Kaldi toolkit (Povey et al. 2011). A CNN-TDNN-F acoustic model is pretrained on the pooled audio data and then fine-tuned on each bilingual corpus, as presented in Biswas et al. (2020). All n-gram language models are trained using the SRILM toolkit (Stolcke 2002), while the neural language models are trained using TensorFlow (Martín et al. 2015). The baseline speech recognition system is denoted as ASR-B in the subsequent sections. Our baseline n-gram language models are trigrams with modified Kneser–Ney smoothing, trained separately on each of the four bilingual soap opera training corpora. These are denoted by LMB.

4.3. Language Model Architecture

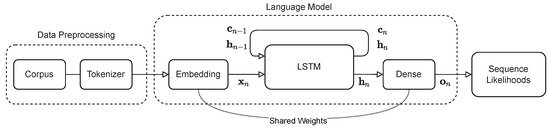

The neural language model shown in Figure 1 is an LSTM with 256-dimensional embeddings and 256-dimensional recurrent matrices. The embedding matrix weights are tied to the final matrix (Press and Wolf 2017). This means that the embedding matrix weights of dimension $(V, d)$, where $V$ is the vocabulary size and $d = 256$, are utilised to form the output likelihood vectors of dimensionality $V$ in addition to producing embedded vectors of dimensionality $d$ for each token in the vocabulary. The output likelihood vectors are calculated by applying the product between the output hidden state vectors of the LSTM ($h_t$), whose dimensionality is also $d$, and the embedding matrix. The resulting vector ($y_t$) has the dimensionality of the vocabulary ($V$). In addition, L2 weight regularisation is applied to the LSTM recurrent kernel (the trainable weights which manipulate the input hidden state vector $h_{t-1}$) and to the weight-tied embedding and dense matrix. These hyperparameters were chosen according to the best development set performance during preliminary experiments. We found that the incorporation of L2 regularisation improved performance, whilst weight-tying allowed us to greatly reduce the number of model parameters as well as improve language model performance.

Figure 1.

Structure of the LSTM language model. Each batch of the respective training corpus is first preprocessed and tokenized, and then presented as input to the embedding layer, which computes the embedded vector $x_t$. This embedded vector is presented as input to the LSTM network along with the hidden ($h_{t-1}$) and cell state ($c_{t-1}$) vectors. The updated hidden state vector $h_t$ is presented to the output dense layer, whose weights are shared with the embedding layer, to form a likelihood vector of the next possible words ($y_t$). This process is repeated for each token in the current sequence. L2 weight regularisation is specifically applied to the weight-tied embedding and dense layer, as well as the recurrent kernel in the LSTM.
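The weight tying described in this section can be sketched in a few lines. This is not the TensorFlow model used in the experiments: the recurrent network is omitted, the hidden state is a hand-written stand-in, and the tiny dimensions and all names are chosen for illustration only. The point is that a single matrix serves both as the embedding lookup and as the output projection.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def embed(embedding, token_id):
    """Embedding lookup: one row of the shared matrix."""
    return embedding[token_id]


def output_distribution(embedding, hidden):
    """Output layer tied to the embedding: one dot product per vocabulary row."""
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in embedding]
    return softmax(logits)


random.seed(0)
vocab_size, dim = 5, 4  # toy sizes; the model in the text uses dimension 256
embedding = [[random.uniform(-0.1, 0.1) for _ in range(dim)]
             for _ in range(vocab_size)]

x = embed(embedding, 2)             # input side of the shared matrix
hidden = [0.3, -0.2, 0.1, 0.05]     # stand-in for an LSTM hidden state
probs = output_distribution(embedding, hidden)  # output side of the same matrix
print(probs)
```

Because the same `embedding` matrix is used in both places, no separate output matrix of size vocabulary by hidden dimension is needed, which is where the parameter saving comes from.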

We train four separate baseline LSTM language models, denoted by N-LMB, on each of the bilingual soap opera training corpora (Table 1) until convergence on the development set. We utilise a batch size of 32 and the ADAM gradient descent algorithm (Kingma and Ba 2015).

4.4. Optimisation of LSTM Pretraining

In preliminary experiments we found that training the model on the monolingual corpora one after the other (for example, first on the English data and then on the respective Bantu data) did not afford improvements in perplexity once the pretrained model was fine-tuned. However, interleaving and sub-sampling the sequences from the different corpora resulted in consistent perplexity improvements. Therefore, we investigate different methods of sequence-level interleaving in order to determine empirically which affords the largest improvements in language modelling performance.

In our first experiment we interleave batches of the available sets (batch-level interleaving) by sub-sampling the largest corpus (typically English) at regular intervals until the number of sequences is the same as in the smallest set. The second strategy interleaves English and Bantu at the sequence level (sequence-level interleaving), again by sub-sampling the largest dataset at regular intervals until it is the same size as the Bantu set.
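The two interleaving strategies can be sketched as follows. This is an illustrative reading, not the experimental code: the sequence-level variant uses strict alternation as a deterministic stand-in for mixing sequences from the two languages, the batch-level variant assumes that monolingual batches simply alternate between languages, and all function names and the toy corpora are hypothetical.

```python
def subsample_regular(sequences, target_size):
    """Keep target_size sequences, taken at regular intervals."""
    if target_size >= len(sequences):
        return list(sequences)
    total = len(sequences)
    return [sequences[(i * total) // target_size] for i in range(target_size)]


def balance(corpus_a, corpus_b):
    """Sub-sample the larger corpus down to the size of the smaller one."""
    size = min(len(corpus_a), len(corpus_b))
    return subsample_regular(corpus_a, size), subsample_regular(corpus_b, size)


def interleave_sequences(english, bantu):
    """Sequence level: neighbouring sequences come from different languages."""
    english, bantu = balance(english, bantu)
    mixed = []
    for en_seq, bantu_seq in zip(english, bantu):
        mixed.extend([en_seq, bantu_seq])
    return mixed


def interleave_batches(english, bantu, batch_size):
    """Batch level: each batch is monolingual and batches alternate languages."""
    english, bantu = balance(english, bantu)
    batches = []
    for start in range(0, len(bantu), batch_size):
        batches.append(english[start:start + batch_size])
        batches.append(bantu[start:start + batch_size])
    return batches


# Hypothetical corpora: six English and three isiZulu sequences.
english = [f"en{i}" for i in range(6)]
bantu = [f"zu{i}" for i in range(3)]

sequence_level = interleave_sequences(english, bantu)
batch_level = interleave_batches(english, bantu, batch_size=2)
print(sequence_level)
print(batch_level)
```

In both cases the larger corpus contributes only as many sequences as the smaller one, so the two languages are seen equally often during pretraining.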

For each of the strategies we close the vocabulary of a word-based LSTM language model on all word types in the soap opera dataset. We then pretrain using a specific interleaving strategy (batch-level or sequence-level) on a subset of the datasets presented in Table 4, after which the model is fine-tuned using the code-switched data (Table 1) until convergence on the development set. These models are indicated as N-LM in Table 4. Specifically, as indicated in this table, we consider three pooled corpora for pretraining: firstly, utilising only the synthetic data (S), then combining the respective monolingual Bantu data as well as the monolingual English data (M), and finally utilising a combination of the synthetic, Bantu and English data (S+M).

Table 4.

Corpora considered for the different pretraining or augmentation strategies, where × indicates corpora included in the respective pool.

In Section 5.1, we begin by optimising the number of pretraining epochs over the range of one to five.

4.5. N-gram Optimisation

We would like to contrast the improvements in speech recognition when rescoring N-best lists with the pretrained language model to improvements seen when re-running the speech recognizer after augmenting the training sets of an n-gram model with the same data used for pretraining. If the improvements afforded by rescoring are comparable to those afforded by n-gram augmentation, the latter is preferable due to its reduced computational requirements and greater simplicity, especially in computationally-constrained settings. Additionally, we investigate whether the improvements afforded by n-gram augmentation and N-best rescoring are complementary.

The augmentation process is accomplished by training separate n-gram language models on each of the available corpora (soap opera, synthetic, and monolingual) and interpolating these three individual n-gram models according to the four different combinations of corpora (B, B+S, B+M, B+S+M) as indicated in Table 4. In all cases these interpolated n-gram language models, denoted by LM, are optimised according to the development set perplexity.
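The interpolation step can be illustrated with a small sketch of linear interpolation, in which mixture weights are re-estimated by expectation-maximisation to reduce development set perplexity. This is one standard way of choosing such weights and is shown as an assumption, since the exact optimisation procedure is not detailed here. The per-token probabilities below are invented toy values standing in for the outputs of the soap opera (B), synthetic (S), and monolingual (M) n-gram models.

```python
import math


def interpolate(probs, weights):
    """Linear interpolation of the component model probabilities for one token."""
    return sum(w * p for w, p in zip(weights, probs))


def perplexity(stream, weights):
    """Perplexity of the interpolated model over a stream of tokens."""
    log_sum = sum(math.log(interpolate(probs, weights)) for probs in stream)
    return math.exp(-log_sum / len(stream))


def estimate_weights(stream, n_models, iterations=50):
    """EM re-estimation of mixture weights, starting from uniform weights."""
    weights = [1.0 / n_models] * n_models
    for _ in range(iterations):
        counts = [0.0] * n_models
        for probs in stream:
            mix = interpolate(probs, weights)
            for k in range(n_models):
                # Posterior responsibility of model k for this token.
                counts[k] += weights[k] * probs[k] / mix
        weights = [c / len(stream) for c in counts]
    return weights


# Toy development set: each tuple holds the probability that the
# (B, S, M) component models assign to one development set token.
dev_stream = [
    (0.20, 0.05, 0.10),
    (0.10, 0.02, 0.30),
    (0.30, 0.10, 0.05),
    (0.05, 0.01, 0.20),
]

uniform = [1.0 / 3] * 3
best = estimate_weights(dev_stream, n_models=3)
pp_uniform = perplexity(dev_stream, uniform)
pp_best = perplexity(dev_stream, best)
print(best, pp_uniform, pp_best)
```

Each EM iteration cannot increase the development set perplexity, so the estimated weights are at least as good as the uniform starting point on this data.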

4.6. Large Pretrained Language Models

Large pretrained language models have become the de facto standard in the industry. These models are trained on billions of tokens and achieve the state-of-the-art in many language modelling tasks. Here we investigate the application of these models in N-best rescoring of code-switched speech in both zero-shot settings as well as after fine-tuning on the code-switched data.

We note that many of these models are bidirectional and are trained using the masked language modelling (MLM) objective as first presented in Devlin et al. (2019). This training strategy randomly selects 15% of the input sequence tokens, and either replaces them with a specific mask token [MSK], a random token, or the same token with 80%, 10%, and 10% probability, respectively. The average cross-entropy loss is calculated over the masked tokens and utilised to calculate gradients via backpropagation for model weight training.
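The masking procedure can be sketched as below. The sketch makes a simplifying assumption: each token is selected independently with probability 0.15, rather than selecting exactly 15% of the positions. The function name `mask_tokens` and the toy vocabulary are hypothetical, and the mask symbol follows the notation used in the text.

```python
import random

MASK = "[MSK]"  # mask symbol as written in the text


def mask_tokens(tokens, vocabulary, rng, select_prob=0.15):
    """Corrupt a token sequence for masked language modelling.

    Returns the corrupted sequence and a list of prediction targets,
    where None marks positions that do not contribute to the loss.
    """
    corrupted, targets = [], []
    for tok in tokens:
        if rng.random() < select_prob:
            targets.append(tok)
            draw = rng.random()
            if draw < 0.8:
                corrupted.append(MASK)                    # 80%: mask token
            elif draw < 0.9:
                corrupted.append(rng.choice(vocabulary))  # 10%: random token
            else:
                corrupted.append(tok)                     # 10%: unchanged
        else:
            targets.append(None)
            corrupted.append(tok)
    return corrupted, targets


rng = random.Random(0)
tokens = "ngithi u-relax at least zama".split()
vocabulary = sorted(set(tokens))
corrupted, targets = mask_tokens(tokens, vocabulary, rng)
print(corrupted, targets)
```

The cross-entropy loss would then be averaged only over the positions whose target is not `None`.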

During rescoring, an autoregressive model is typically employed to calculate the likelihood of the next token given a sequence of previous tokens. However, because bidirectional models make use of context from both the left and the right of the current token, a different strategy must be employed. Specifically, when applying these models, the negative log likelihood is calculated for each token in a sequence given the remainder of the sequence as a context. This is accomplished by applying the masking token [MSK] to the nth position in a sequence and calculating the negative log-likelihood of the target token at the same position. In order to generate a negative log-likelihood score for the sequence as a whole, this process is repeated for each position in the hypothesised sequence. The scores for each sequence are then interpolated with the respective n-gram and acoustic model scores, in order to rescore the development and test set N-best lists. To our knowledge, these are the first investigations applying bidirectional transformer language model architectures to code-switched speech recognition.
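The scoring procedure for bidirectional models can be sketched as follows. The masked model is replaced by a toy function that ignores context, and the way the neural, n-gram, and acoustic scores are combined (a weighted sum of costs with a hypothetical `lm_weight`) is an assumption, since the exact interpolation formula is not given in the text. All names and numbers are illustrative.

```python
import math

MASK = "[MSK]"  # mask symbol as written in the text


def pseudo_nll(tokens, masked_log_prob):
    """Sum of negative log-likelihoods, masking one position at a time."""
    total = 0.0
    for n, target in enumerate(tokens):
        masked = tokens[:n] + [MASK] + tokens[n + 1:]
        total -= masked_log_prob(masked, n, target)
    return total


def rescore(nbest, masked_log_prob, lm_weight=0.5):
    """Return the hypothesis with the lowest combined cost.

    nbest holds (tokens, acoustic_cost, ngram_cost) triples, lower is better.
    """
    scored = []
    for tokens, acoustic_cost, ngram_cost in nbest:
        neural_cost = pseudo_nll(tokens, masked_log_prob)
        lm_cost = (1.0 - lm_weight) * ngram_cost + lm_weight * neural_cost
        scored.append((acoustic_cost + lm_cost, tokens))
    return min(scored, key=lambda item: item[0])[1]


# Toy stand-in for a masked language model: context is ignored entirely.
TOY_PROBS = {"at": 0.2, "least": 0.2, "zama": 0.1, "summer": 0.01}


def toy_masked_log_prob(masked_tokens, position, target):
    assert masked_tokens[position] == MASK
    return math.log(TOY_PROBS.get(target, 1e-4))


# Hypothetical 2-best list with made-up acoustic and n-gram costs.
nbest = [
    ("at least summer".split(), 10.0, 6.0),
    ("at least zama".split(), 10.2, 6.5),
]
best = rescore(nbest, toy_masked_log_prob)
print(best)
```

In this toy example the first hypothesis has the lower acoustic and n-gram cost, but the neural score favours the second hypothesis strongly enough to change the ranking.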

Although the structures of the transformer and LSTM language models are not directly comparable (they differ, for example, in terms of pretraining data, architecture, and vocabulary size), we can nevertheless contrast their impact on speech recognition performance. Specifically, the LSTM models use a word-level, language-dependent tokenisation, while BERT utilises a sub-word encoding method known as word-piece encoding, and the distilled GPT-2 model utilises a sub-word encoding method known as byte-pair encoding. Preliminary experiments indicated that constraining the LSTM to use the same tokenisation used by BERT or GPT-2 led to deteriorated performance. As a result, we chose to compare the best-performing implementations of each architecture. The optimisation of the sub-word vocabulary nevertheless remains an aspect of our ongoing research.

We consider five different transformer architectures, four of which are bidirectional: a distilled multilingual BERT model (D-M-BERT) (Sanh et al. 2019), a multilingual BERT model (M-BERT) (Devlin et al. 2019), a BERT architecture trained on African languages (afriBERTa-S) (Ogueji et al. 2021), and a multilingual transformer model distilled from XLM-R (mMiniLMv2) (Wang et al. 2020). The fifth is a distilled GPT-2 model (GPT-2), which is an autoregressive transformer (Radford et al. 2019).

Each of the considered models is applied in the following three ways. Firstly, in a zero-shot setting, where the publicly available model is applied with no additional training. Secondly, after fine-tuning the model for between one and ten epochs on each of the four bilingual soap opera corpora separately. Finally, by pooling the four bilingual language pairs and again fine-tuning the model for between one and ten epochs. Each of these resulting models is then evaluated by means of N-best rescoring experiments.

5. Results

First, in Section 5.1, we present the language modelling and N-best list rescoring results achieved by the optimisation of the monolingual pretraining of the LSTM language models as described in Section 4.4. In Section 5.2, we show the improvements afforded by optimising the augmented n-gram language model, and additional performance afforded by utilising both augmented n-grams as well as the pretrained LSTM for N-best rescoring as described in Section 4.5. Finally in Section 5.3, the results afforded by applying fine-tuned publicly available pretrained models, as described in Section 4.6, in N-best rescoring experiments, are presented.

5.1. Optimisation of LSTM Pretraining

In this section we present the results of the experiments that investigate the best pretraining strategy for the LSTM language model presented in Section 4.4. These results are reported in terms of both perplexity and word error rate after N-best rescoring. We compare the performance of the pretrained model with the baseline LSTM language model (N-LMB), which is trained solely on the soap opera training data as described in Section 4.3. In each experiment, the LSTM is pretrained for between one and five epochs using the corpora listed in Table 4, interleaved at either the batch or the sequence level. Table 5 shows that, on average over all four language pairs and five training epochs, the model pretrained using only the out-of-domain monolingual data (N-LMM) affords the largest improvement in perplexity, 42.87% relative to the baseline (N-LMB). Additionally, we find that interleaving at the sequence level is better than at the batch level, affording a 45.4% average relative improvement in perplexity compared to a 40.33% average improvement, both relative to the baseline (N-LMB).

Table 5.

Development set perplexity (PP) and perplexity over code-switches (CPP) during the optimisation of the pretraining strategy for each of the respective language pairs: English–isiZulu (EZ), English–isiXhosa (EX), English–Sesotho (ES), and English–Setswana (ET). The epoch which afforded the lowest development set perplexity across the different strategies is denoted in bold.

When these same language models are applied in 50-best list rescoring, similar trends are apparent in terms of speech recognition performance. In Table 6, we find that interleaving the monolingual sets at the sequence level again affords the largest improvement, both in overall word error rate and in code-switched bigram error. On average over all four language pairs and over all the pretraining epochs, this strategy leads to absolute improvements in the average test set word error rate and code-switched bigram error of 1.65% and 1.26%, respectively, compared to the baseline (ASR-B). This outperforms batch-level interleaving, which afforded average improvements of 1.56% and 1.13%.

Table 6.

Test set word error rates (WER) and code-switched bigram error rates (CSBG) during the optimisation of the pretraining strategy for each of the respective language pairs: English–isiZulu (EZ), English–isiXhosa (EX), English–Sesotho (ES), and English–Setswana (ET). The epochs that afforded the lowest development set word error rate are highlighted. The best average performance over the five pretraining epochs across the different strategies on the test set is denoted in bold.

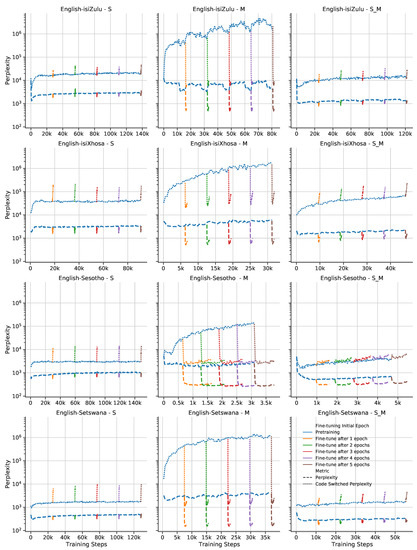

When pretraining on the synthetic code-switched data, it appears from the results in both Table 5 and Figure 2 that subsequent fine-tuning on the soap opera data is not successful. In fact, columns S and S_M of the figure make it clear that for all four language pairs the code-switched losses immediately diverge when fine-tuning begins. We believe this may be caused by over-fitting on the synthetic data. We therefore investigated pretraining for fewer training batches (1, 100, 500, and 1000). However, we found that models incorporating the synthetic data are still outperformed by those pretrained on the monolingual data. When pretraining on the monolingual data, it is clear that the performance over code-switches is initially poor. However, during fine-tuning, performance improves. In fact, these models (M) exhibit better performance over code-switches than the models that are exposed to synthetic code-switched data during pretraining (S and S_M).

Figure 2.

Development set perplexity and code-switched perplexity for each language pair (in rows: English–isiZulu, English–isiXhosa, English–Sesotho, and English–Setswana) and for each of the combinations of corpora used for pretraining as outlined in Table 4 (in columns: S: Synthesized, M: Monolingual, S_M: Synthesized and Monolingual). The red plot indicates the loss throughout pretraining, and each alternate colour corresponds to the loss when fine-tuning is started after pretraining for between one and five epochs.

We therefore conclude that the best pretraining strategy in terms of speech recognition performance is to mix the monolingual datasets at the sequence level, and pretrain for three or four epochs. Given this strategy, selecting the model with the best development set word error rate over the five pretraining epochs (highlighted in Table 6) affords test set absolute word error rate improvements compared to the baseline speech recognition system (ASR-B), as outlined in Section 4.2, of 3.17%, 0.42%, 1.16%, and 2.47% for isiZulu, isiXhosa, Sesotho, and Setswana, respectively. We note deterioration in speech recognition at code-switches (CSBG) for isiXhosa and Sesotho of 1.74% and 1.13%, respectively, while isiZulu and Setswana are improved by 4.03% and 3.32%.

5.2. N-gram Augmentation

In Table 7, we present both the language model perplexities and speech recognition word error rates when utilising the interpolated n-gram language models trained on the respective corpora outlined in Table 4. We find that the interpolated models that incorporate n-grams from the soap opera data, the synthetic code-switched data, and the monolingual data (LMB+S+M) on average afford the largest improvement in development set word error rate as well as perplexity, and improve the test set word error rate by between 1.97% and 3.24% absolute compared to the baseline (ASR-B). Additionally, absolute improvements in code-switched bigram error compared to the baseline of 1.23% and 3.19% are achieved for isiZulu and Setswana, respectively, while isiXhosa and Sesotho deteriorate by 0.29% and 0.75%, respectively. By incorporating the additional monolingual and synthetic data to train an interpolated n-gram model, we are able to reduce the word error rate for every language pair, by 2.53% absolute on average compared to the baseline model.

Table 7.

Development set perplexity (PP) and code-switched perplexity (CPP) of the augmented n-gram language models (LM) for each of the four respective language pairs: English–isiZulu (EZ), English–isiXhosa (EX), English–Sesotho (ES), and English–Setswana (ET). The test set speech recognition error rates (WER) and code-switched bigram error rates (CSBG) when utilising the augmented n-gram language models and additional rescoring by the pretrained neural language model (+N-LM) or multilingual language model (+GPT-2 () or +M-BERT ()) are also shown. The model that afforded the best average speech recognition performance on the development set is highlighted. The best performance achieved on the test set is denoted in bold.

Table 7 also shows the results of rescoring the N-best lists with the best performing pretraining strategy (N-LMM) identified in Section 5.1. Specifically, the table presents the test set results corresponding to the best development set word error rate from the five pretrained and fine-tuned models in Table 6. We rescore the N-best lists generated by both the n-gram models trained using the soap opera and monolingual data (LMB+M) and those that incorporated the soap opera, monolingual, and synthetic data (LMB+S+M). On average over the four language pairs, we find that rescoring the hypotheses generated using the n-gram incorporating the soap opera, monolingual, and synthetic data (LMB+S+M) leads to the largest improvements in the development set word error rate compared to the baseline (ASRB). These language models achieved corresponding improvements in the test set word error rate of 3.5% on average compared to the baseline, and 0.98% over the n-gram trained using the soap opera, synthetic, and monolingual data. We find that including the synthetic data improves both language modelling and speech recognition when it is used for n-gram augmentation. This is in contrast to the lack of improvement seen when the same data is used in LSTM pretraining. This outcome is consistent with our expectation, as the data was optimised specifically to improve speech recognition when utilised for n-gram augmentation.

It is also clear that the improvements afforded by rerunning speech recognition experiments after n-gram augmentation are similar to those afforded by the rescoring experiments. This suggests that, especially in computationally constrained settings, the augmentation of only the n-gram models for lattice generation should be favoured, since it is far less computationally expensive to implement. More specifically, when comparing the results achieved when the optimally pretrained model is used to rescore the baseline N-best hypotheses (ASRB + N-LMM in Table 7) to those achieved by utilising the same data for n-gram augmentation (LMB+M), we find that on average over the four language pairs, the n-gram augmentation outperforms the rescoring by 0.69% absolute in overall speech recognition accuracy. However, the rescoring method outperforms the n-gram augmentation in terms of code-switched recognition accuracy by 1.38% absolute on average. This suggests that the neural language models are better able to model the code-switching phenomenon, while the augmented n-grams improve the modelling of monolingual stretches of speech.

Overall, rescoring the N-best lists produced by the augmented n-gram LMB+S+M produces the lowest development set word error rate for isiZulu and Setswana, with corresponding test set improvements of 4.23% and 4.45% absolute compared to the baseline (ASRB). Additionally, we improve the speech recognition accuracy over code-switches for the same languages by 2.05% and 3.13% absolute compared to the baseline. The best development set word error rate for isiXhosa and Sesotho is achieved by rescoring the N-best lists produced by n-gram LMB+M, leading to test set improvements of 1.81% and 2.69% absolute compared to the baseline. We find, however, that speech recognition at code-switches is worse for both of these languages than the baseline. We conclude that utilising the additional data for both n-gram augmentation (LMB+S+M) and LSTM pretraining (N-LMM) for N-best rescoring offers the largest consistent improvements in speech recognition accuracy, and outperforms either strategy employed alone.
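The rescoring step discussed above can be sketched as follows. The sketch assumes that each N-best hypothesis carries a single log-domain score from the first recognition pass and that the rescoring language model returns a log-probability for the word sequence; the class, the function names, the toy language model, and all scores are hypothetical. In a real system the first-pass n-gram score would typically be separated from the acoustic score and the weights tuned on development data.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class Hypothesis:
    words: Tuple[str, ...]
    first_pass_score: float  # log-domain score from the recogniser (made up here)


def rescore_nbest(nbest: Sequence[Hypothesis],
                  lm_log_prob: Callable[[Tuple[str, ...]], float],
                  lm_weight: float = 1.0) -> List[Hypothesis]:
    """Re-rank an N-best list by first-pass score plus weighted LM log-probability."""
    def total(hyp: Hypothesis) -> float:
        return hyp.first_pass_score + lm_weight * lm_log_prob(hyp.words)
    return sorted(nbest, key=total, reverse=True)


# Toy stand-in for a neural language model: a unigram table of log-probabilities.
TOY_LOG_PROBS = {"ngiyabonga": -1.0, "thanks": -1.5, "tanks": -6.0}


def toy_lm(words: Tuple[str, ...]) -> float:
    return sum(TOY_LOG_PROBS.get(w, -10.0) for w in words)


if __name__ == "__main__":
    nbest = [
        Hypothesis(("ngiyabonga", "tanks"), -10.0),   # preferred by the first pass
        Hypothesis(("ngiyabonga", "thanks"), -10.5),
    ]
    best = rescore_nbest(nbest, toy_lm)[0]
    print(" ".join(best.words))  # prints "ngiyabonga thanks"
```

Because rescoring only re-ranks existing hypotheses, it cannot recover a word sequence that is absent from the N-best list, which is one reason why improving the n-gram model used for lattice generation and rescoring can be complementary.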

5.3. Large Pretrained Language Models

In Table 8 we present the overall test set speech recognition error rate (WER) as well as the speech recognition error rate specifically over code-switches (CSBG) for the five considered pretrained architectures, as discussed in Section 4.6. Each pretrained model is used in rescoring experiments in either a zero shot setting () or after fine-tuning for between one and ten epochs on either the respective bilingual corpus () or the pooled data from all four sub-corpora (). After fine-tuning, the model that afforded the largest development set word error rate improvements over the ten fine-tuning epochs is selected and used to rescore the corresponding test set N-best list. The resulting test set word error rate is listed in the table.
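The selection procedure described above amounts to choosing the fine-tuning epoch using only development set results and then reporting the test set result of that same epoch. A minimal sketch follows; the function name and the word error rates are hypothetical and are not taken from Table 8.

```python
from typing import Dict, Tuple


def select_by_dev_wer(dev_wer: Dict[int, float],
                      test_wer: Dict[int, float]) -> Tuple[int, float]:
    """Return (epoch, test WER) for the epoch with the lowest development set WER."""
    best_epoch = min(dev_wer, key=dev_wer.get)
    return best_epoch, test_wer[best_epoch]


if __name__ == "__main__":
    # Made-up word error rates (%) for three fine-tuning epochs.
    dev = {1: 50.0, 2: 48.5, 3: 49.0}
    test = {1: 51.0, 2: 49.9, 3: 49.5}
    epoch, reported = select_by_dev_wer(dev, test)
    print(epoch, reported)  # prints "2 49.9"
```

In this toy example epoch 3 has a lower test set error, but it is not selected because the test set plays no part in model selection.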

Table 8.

Test set word error rates (WER) and code-switched bigram error rates (CSBG) when utilising transformer models for N-best rescoring. Results are presented for each of the four respective language pairs: English–isiZulu (EZ), English–isiXhosa (EX), English–Sesotho (ES), and English–Setswana (ET). The model that afforded the best average speech recognition performance on the development set is highlighted. The best performance achieved on the test set amongst the multilingual models is presented in bold.

It is clear from Table 8 that marginal average speech recognition improvements in zero-shot rescoring () are possible for all the bidirectional models except GPT-2. On average over the four language pairs, an absolute improvement of 0.4% is achieved compared to the baseline (ASRB). While better results were achieved by rescoring with the LSTMs trained only on the in-domain data (N-LMB), it is nevertheless interesting that these models, even in zero-shot settings, are able to improve the recognition accuracy. We believe that this may be due to the language-agnostic sub-word encoding strategy used by all the large models in Table 8. These encodings allow the models to learn rich embeddings across languages. Unlike our own LSTM language model, which distinguishes between words in different languages by appended language tags, the sub-word encoding strategies used by the multilingual models do not make this distinction. We hypothesise that this allows the model to benefit from the additional training data in related target languages. We aim to explore this hypothesis in further research.

When fine-tuning the pretrained models on the pooled bilingual sets of in-domain data (), we find that absolute speech recognition accuracy is improved by 1.79% on average compared to the baseline over all the models and the four language pairs, while models fine-tuned on only the bilingual data afford improvements of 1.23% compared to the baseline.

Interestingly, we note that the BERT model trained on 11 African languages (afriBERTa-S) is outperformed by the BERT model (M-BERT) trained on much more text in unrelated languages. Preliminary experiments using the larger afriBERTa-base, which is comparable in model size to M-BERT, indicated even higher word error rates than those achieved with the smaller afriBERTa-S model.

Over all the large transformer models and language pairs, we find that distilled GPT-2 and multilingual BERT, both fine-tuned on the pooled () soap opera training data, afford the largest improvements in development set speech recognition, both overall and over language switches. In fact, the best fine-tuned GPT-2 model outperforms our own LSTM rescoring model pretrained on the out-of-domain bilingual corpora as outlined in Section 4 by an average of 0.60% absolute on the test set over the four language pairs. Similar trends are seen in terms of code-switched speech recognition error rate, where GPT-2 outperforms the LSTM model by 1.34% absolute on average over the four language pairs. These results are remarkable, given that the data on which GPT-2 was pretrained did not include any of the target languages considered in this work, and uses a vocabulary that is not optimised to represent those same languages.

Furthermore, the multilingual BERT model also affords consistent improvements over all four language pairs in both overall speech recognition and recognition across code-switches. On average over the four language pairs, this model improves test set word error rate by 2.18% and code-switched bigram error by 2.67% compared to the baseline system (ASRB).

When rescoring the N-best lists produced using the augmented n-grams (LMB+S+M) the performance of M-BERT surpasses the performance of GPT-2. As shown in Table 7, the multilingual BERT model affords, on average over the four language pairs, improvements of 4.66% and 4.45% in development and test set speech recognition accuracy, respectively. The improvement in code-switched performance achieved by the bidirectional model is further strengthened, improving the test set baseline recognition performance by 3.52%, outperforming the GPT-2 model by 1.41%.

We conclude that fine-tuning large transformer models pretrained on unrelated languages can improve speech recognition accuracy more effectively than carefully fine-tuned LSTM models pretrained on data in the target languages. In terms of effective use of computational resources this is an encouraging result, which shows that under-resourced languages can benefit from large models pretrained on well-resourced languages, even when the under-resourced languages are completely unrelated to those used to train the larger models.

5.4. LSTM + BERT Rescoring

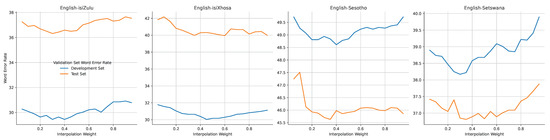

In a final set of experiments, we interpolated the N-best scores obtained by the best LSTM (Section 5.1) and best M-BERT (Section 5.3) models. We optimise the interpolation weight over the range 0.05 to 0.95, as shown in Figure 3. In the figure, interpolation weights closer to one assign more weight to the BERT scores, and conversely interpolation weights closer to zero assign more weight to the LSTM scores.

Figure 3.

Development and test set word error rates (WER) for each of the respective language pairs (English–isiZulu, English–isiXhosa, English–Sesotho, and English–Setswana) when optimising the interpolation weight between N-best scores produced by the optimal pre-trained LSTM (N-LMM from Section 5.1) and BERT (M-BERT () from Section 5.3).
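The optimisation of the interpolation weight can be sketched as a simple grid search. The sketch assumes that each N-best entry carries one total score from the LSTM and one from BERT, that the combined score is w × BERT + (1 − w) × LSTM, and that the grid uses steps of 0.05; the step size, the function names, and the toy data are assumptions made for illustration and do not reproduce the experimental code.

```python
from typing import List, Sequence, Tuple

# One N-best entry: (words, LSTM score, BERT score), scores in the log domain.
Entry = Tuple[List[str], float, float]


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Word-level Levenshtein distance (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (r != h)))  # substitution or match
        prev = cur
    return prev[-1]


def wer(refs: Sequence[Sequence[str]], hyps: Sequence[Sequence[str]]) -> float:
    """Word error rate in percent over a set of utterances."""
    errors = sum(edit_distance(r, h) for r, h in zip(refs, hyps))
    return 100.0 * errors / sum(len(r) for r in refs)


def pick_best(nbest: Sequence[Entry], weight: float) -> List[str]:
    """Select the hypothesis with the highest interpolated score.
    A weight near one favours BERT; a weight near zero favours the LSTM."""
    return max(nbest, key=lambda e: weight * e[2] + (1.0 - weight) * e[1])[0]


def sweep(nbest_lists: Sequence[Sequence[Entry]],
          refs: Sequence[Sequence[str]]) -> Tuple[float, float]:
    """Return (weight, development set WER) for the best weight on the grid."""
    grid = [round(0.05 * k, 2) for k in range(1, 20)]  # 0.05, 0.10, ..., 0.95
    results = [(w, wer(refs, [pick_best(nb, w) for nb in nbest_lists]))
               for w in grid]
    return min(results, key=lambda item: item[1])


if __name__ == "__main__":
    refs = [["a", "c"]]
    nbest_lists = [[(["a", "b"], -1.0, -3.0),    # favoured by the LSTM
                    (["a", "c"], -2.0, -1.0)]]   # favoured by BERT
    print(sweep(nbest_lists, refs))
```

The weight found on the development set would then be applied unchanged to the test set N-best lists.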

It is clear from Table 7 that utilising a combination of both architectures for rescoring is able to marginally improve (0.4% absolute) overall speech recognition performance for all language pairs except English–isiXhosa. However, recognition performance over code-switches is not improved. Additionally, utilising both models incurs the severe computational overhead of training both architectures, as well as requiring each model to rescore the N-best lists.

In future work, we aim to train a large multilingual transformer (comparable to M-BERT) using our South African language data, in order to better assess the performance of the fine-tuned transformer architectures explored here. The LSTM models we have considered receive word-level tokens with language-dependent and closed vocabularies, while the transformer models utilise language-agnostic sub-word encoding strategies. By closing this gap, we hope to achieve further benefits.

6. Conclusions

In this work we have presented and compared several strategies for pretraining a code-switched neural language model. We found that interleaving distinct pretraining corpora at the sequence level outperforms interleaving at the batch level. This aspect of pretraining, which had not previously been reported on, highlights the importance of carefully selecting and curating pretraining corpora. Additionally we found that incorporating synthetic data in the pretraining corpora did not afford improvements in speech recognition when the language models are employed in N-best rescoring. We presented the surprising result that, although the data utilised for pretraining our LSTM language model is monolingual, its inclusion allows us to improve speech recognition accuracies, even across language switches.

In contrast, we found that when augmenting n-gram models used for lattice generation with the monolingual and synthetic data we could achieve consistent and comparable improvements, at a fraction of the computational cost of training the neural language model. A combination of N-best rescoring and n-gram augmentation led to larger improvements in speech recognition accuracies than each approach individually, achieving gains of between 1.81% and 4.45% absolute compared to the baseline.

Finally, we contrasted the improvements afforded by our pretraining strategies to those achieved by fine-tuning large publicly available language models, such as M-BERT. We report the first experiments which employ such architectures to rescore N-best lists for speech recognition and that consider English–Bantu code-switched speech. We found that, even when not specifically trained on data that include the target languages, these models also improve both overall test set speech recognition (by between 4.07% and 4.84%) and recognition over code-switches (by between 2.02% and 4.78%) compared to the baseline. This result is encouraging, since it represents a means of taking advantage of huge out-of-domain datasets without the need for costly pretraining. Further marginal improvements in speech recognition were achieved by interpolating the N-best scores produced by the best pre-trained LSTM and BERT models. In future work we would like to investigate if these results can be improved upon by direct training of large transformer architectures utilising the available monolingual text in the target languages.

Author Contributions

Conceptualization, J.J.v.V. and T.N.; methodology, J.J.v.V. and T.N.; software, J.J.v.V.; validation, J.J.v.V. and T.N.; formal analysis, J.J.v.V.; investigation, J.J.v.V. and T.N.; resources, T.N.; data curation, J.J.v.V.; writing—original draft preparation, J.J.v.V. and T.N.; writing—review and editing, J.J.v.V. and T.N.; visualization, J.J.v.V.; supervision, T.N.; project administration, T.N.; funding acquisition, T.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analysed in this study. Data sharing is not applicable to this article.

Acknowledgments

We would like to thank the South African Centre for High Performance Computing (CHPC) for providing computational resources on their Lengau cluster for this research. We gratefully acknowledge the support of Telkom South Africa. We also are grateful to the SABC and Human Stark at Generations: The Legacy, the Department of Arts and Culture of the South African Government, as well as e.tv and Yula Quinn at Rhythm City, for assistance with data compilation.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| LSTM | Long short-term memory |
| WER | Word error rate |
| CSBG | Code-switched bigram error rate |
| OOV | Out of vocabulary |
| PP | Perplexity |
| CPP | Code-switched perplexity |
| BERT | Bidirectional encoder representations from transformers |
| GPT | Generative pretrained transformer |
| LM | Language model |
| N-LM | Neural language model |
| ASR | Automatic speech recognition |

Note

| 1 | huggingface.co (accessed on 20 December 2021). |


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).