The Radical Unacceptability Hypothesis: Accounting for Unacceptability without Universal Constraints

Abstract

1. Introduction

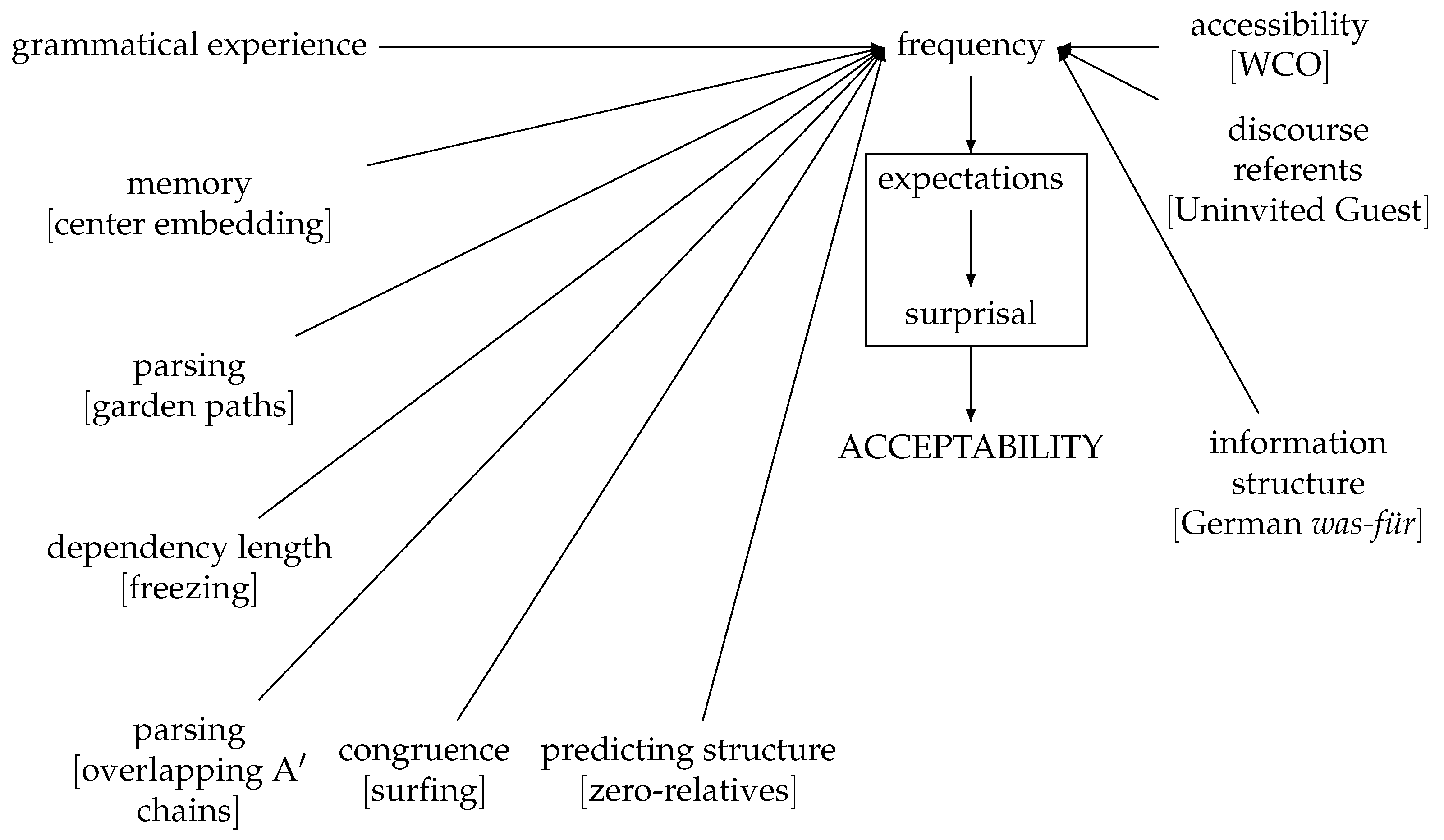

- (1)

- Sandy read [NP a book [S that deals with economic theory]].

- * What subject did Sandy read [NP a book [S that deals with ]]?

- (2)

- * What subject did Sandy read [NP a book [S that reveals [S that Kim worked on ]]]?

- * What subject did Sandy read [NP a book [S that reveals [S that Taylor knows ... [that Kim worked on ]]]]?

2. Sources of Unacceptability

- (3)

- * Sandy the beer drank;

- * Sandy relies about Kim;

- * Sandy are happy.

- (4)

- One swallow does not a summer make.

3. The Acceptability/Grammaticality Distinction and Standard Island Constraints

- (5)

- * Who did Taylor give a book?

- Who did Taylor give a book to ?

- (6)

- This is the kind of weather that there are [NP many people [S who like ]].

- Which diamond ring did you say that there was [NP nobody in the world [S who could buy ]]?

- There were several old rock songs that she and I were [NP the only ones [S who knew ]].

4. Processing A′ Chains

4.1. Freezing

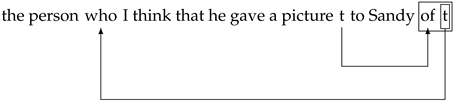

- (7)

- You saw [a picture tj] yesterday [PP of Thomas Jefferson]j.

- * Whoi did you see [a picture tj] yesterday [PP of ti]j?

- (8)

- If a prepositional phrase has been extraposed out of a noun phrase, neither that noun phrase nor any element of the extraposed prepositional phrase can be moved. (Ross 1967, p. 303)

- (9)

- * Whoi did you say that [friends of ti]j, you dislike tj? (subextraction from embedded topicalization)

- * Whoi did you say that [friends of ti]j tj dislike you? (subextraction from subject)

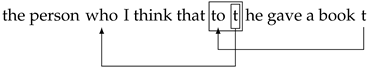

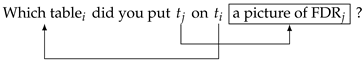

4.2. Overlapping A′ Chains

- (10)

- Right Surfing

- (11)

- You put [a picture of FDR]j on the table.

- You put tj on the table [a picture of FDR]j.

- * Whoi did you put tj on the table [a picture of ti]j?

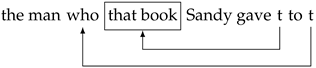

4.3. Topic Islands

- (12)

- * Whati does John think that Billj, Mary gave ti to tj?

- * This is the man whoi that bookj, Mary gave tj to ti.

- * Howi did you say [that the carj, Bill fixed tj ti]?

- * This booki, I know that Tomj, Mary gave ti to tj.

(Rochemont 1989, p. 147)

- (13)

- ? Whati does John think [that at the concert, Mary proposed to sing ti]?

- ? This is the man [whoi at the party, Mary insulted ti].

- ? Howi did you say [that when he came home, Bill was feeling ti]?

- ? This booki, I know [that if the Times recommends it, Mary will buy ti].

- (14)

- Nesting

- (15)

- Crossing

- (16)

- Left Surfing

4.4. Initial Non-Subjects in Zero-Relatives

- (17)

- War and Peace is

- a book which you should read.

- a book that you should read.

- a book ∅ you should read.

- (18)

- War and Peace is

- a book which if you have time you should read.

- a book that if you have time you should read.

- * a book ∅ if you have time you should read.

- (19)

- * He is a man libertyj, we could never grant tj to ti. (Cf. ?He is a man thati libertyj, we could never grant tj to ti.) (Baltin 1981)

- * He is a man under no circumstances would I give any money to ti. (Cf. He is a man thati under no circumstances would I give any money to ti.)

- * Detroit is a town in almost every garage can be found a car manufactured by GM. (Cf. Detroit is a town that in almost every garage can be found a car manufactured by GM.)

- (20)

- If you have time to read a book, War and Peace you should definitely read.

- (21)

- To Sandy, not a single dollar would I give!

- (22)

- Without her contributions failed to come in. (Pritchett 1988, p. 543)

5. Discourse and Information Structure

5.1. Weak Crossover

- (23)

- Whoi loves hisi dog?

- * Whoi does hisi dog love ?

- (24)

- * Whoi did hisi dean publicly denounce ti?

- ?? Which professori did hisi dean publicly denounce ti?

- ? [Which distinguished molecular biologist that I used to work with]i did hisi dean publicly denounce ti?

- (25)

- I plan to interview the professor whoi hisi dean publicly denounced ti.

- (26)

- I plan to interview Professor Smithi, whoi hisi dean publicly denounced ti.

- (27)

- ? Whoi does hisi mother love ti and Sandy dislike ti?

- Whoi does Sandy dislike ti and hisi mother love ti?

- ? a person whoi hisi mother loves ti but Sandy dislikes ti

- a person whoi Sandy dislikes ti but hisi mother loves ti

- (28)

- Charlie and Frank finished watching a movie. Charlie was the one who picked it out. He didn’t like it.

5.2. The Uninvited Guest

- (29)

- * a person who (not) shaking hands with t would really bother Sandy (gerund)

- * a person who us shaking hands with t would bother Sandy (gerund with pronominal subject)

- * a person who Terry shaking hands with t would bother Sandy (gerund with referential subject)

- * a person who Terry’s shaking hands with t would bother Sandy (gerund with possessive)

- * a person who that Terry shakes hands with t would bother everyone (that clause)

- * a person who to shake hands with t would bother Sandy (infinitive)

- * a person who for to shake hands with t would bother Sandy (for-to infinitive)

- * a person who offensive jokes about t would bother Sandy (NP)

- * a person who the fact that Sandy shakes hands with t would bother Terry (sentential complement of N like belief, claim)

- (30)

- a person who (not) shaking hands with pg would bother t

- ? a person who us shaking hands with pg would bother t

- * a person who Terry’s shaking hands with pg would bother t

- * a person who that Terry shakes hands with pg would bother t

- * a person who to shake hands with pg would bother t

- * a person who for to shake hands with pg would bother t

- * a person who the fact that Sandy shakes hands with pg would never bother t

- (31)

- ...with them—the people who love you and who you love, who you laugh with and who spending time with is enriching rather than exhausting.

- More than anything though, The Joker is a fascinating character who spending time with is a treat.

- There are some things which fighting against is not worth the effort. Concentrating on things which can create significant positive change is much more fruitful.

- That might be a good idea, the only way I could get her contact information would be through my SM though, which asking for would become a fiasco.

- (32)

- First person

- I’ve found people who spending time with isn’t an exhausting experience and actually gives me a boost.

- However, there have been girls who spending time with and going places [sic] because we love them have made us happy.

- (33)

- Second person

- In your head you’re able to let the mind wander to all sorts of corners, day dreaming about the happy things you hope might happen one day, the good times you’ve enjoyed, and the people who spending time with makes you feel good.

- there are some people who talking to gives you a sort of high

- ... Deathstroke, and some other important characters, such as Alfred (who talking to gives you more ...), James Gordon, and Barbara Gordon.

- The purpose of a relationship (in my mind) is to find someone who spending time with makes you happier than you would be on your own, this guy’s behaviour does not represent that in my opinion and it certainly doesnt sound like minor character traits that you may be able to change with time because it doesn’t sound like he’s at all willing to change.

- (34)

- Third person

- But even if that were so, it would seem that he had at least one person in his life who spending time with and whose love made him feel pure bliss.

- ... But there was one part of Tim which to describe as typical rather undersells him, although it is an aspect of his being to which we would all aspire, because Tim’s integrity—his sense of honour, his honesty, his deep sense of decency—was special and it was rare.

- Until Marinette, the shy classmate who tended to word-vomit in his vicinity and otherwise cease being able to function like a normal human for reasons he had yet to understand (and which asking about would get him sly looks from Alya and concerned looks from Nino), was there.

- (35)

- Common attribute

- Do you have vendors you work with that you truly enjoy? People who work hard for you, do a great job and who spending time with makes the day go by happily and productively?

- Today, there was this person who talking to would make my life exponentially more complicated and fucked up.

- (36)

- Sentence topic

- Definitely the most important advice is to join an orchestra. You will not only meet likeminded individuals who spending time with will improve your playing, but friends and connections for life.

- I desire that you accept of no offers of transportation from officials who deprived you of the very food, in some cases, which was necessary to supply your pressing wants, and who couple their offers of a free passage with conditions which to accept would cast a stain upon your patriotism as Irishmen and as free citizens, who are bound to sympathize with every struggling nationality.

- For purposes of Proof the important distinction lies solely between assertions capable of denial with a meaning, and those which to deny would contradict the postulated meaning.

5.3. Information Structure

- (37)

- Who ate the pizza?

- SANDY ate the pizza.

- # Sandy ate the PASTA.

- (38)

- * Wasi haben [DP ti für Bücher] [DP den FRITZ] beeindruckt?
  what have [DP t for books.nom] [DP the Fritz.acc] impressed
  ‘What kind of books impressed Fritz?’

- (39)

- Wasi haben [DP den Fritz]j [DP ti für BÜCHer] tj beeindruckt?
  what have [DP the Fritz.acc] [DP t for books.nom] t impressed
  ‘What kind of books impressed Fritz?’

6. Processing Factors and Problematic Cases

- (40)

- Coordinate Structure Constraint (Ross 1967, p. 89)
  In a coordinate structure, (a) no conjunct may be moved, (b) nor may any element contained in a conjunct be moved out of that conjunct unless the same element is moved out of both conjuncts.

- (41)

- * Whoi did you see [Joanne and ti] yesterday?

- * Which book did you say that [Amy wrote and Harry bought the magazine]?

- (42)

- I don’t know what happened to Taylor, but it’s been years since I heard from Sandy.

- (43)

- Left Branch Condition (LBC) (Ross 1967, p. 207)
  No NP which is the leftmost constituent of a larger NP can be reordered out of this NP by a transformational rule.

- (44)

- * Whose did you read [NP book]?

- * His, I don’t think you liked [NP food].

- * How much did she earn [NP money]?

- (45)

- * Howi is Sandy [AP ti tall]? (Cf. How tall is Sandy?)

- * [How big]i did you buy [AP ti a house]? (Cf. How big a house did you buy?)

- (46)

- Čuju ty čitaješ [NP knigu]?
  whose you read book
  ‘Whose book are you reading?’

- (47)

- Cuius legis [NP librum]?
  whose read.2sg book
  ‘Whose book are you reading?’

- (48)

- Whoi do you believe [S ti will win]?

- (49)

- * Whose did you read [NP some books of ]? (Cf. You read some books of Susan’s.)

- * Your wife’s, I met [NP an uncle of ]. (Cf. I met an uncle of your wife’s.)

7. Summary

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| 1 | Subsequent syntactic theories of islands have, of course, evolved well beyond Ross’s early efforts. The main thrust of the literature on islands after Ross, as far as we can see, is to derive the central results of Ross’s classical syntactic account in a more principled way, often with the goal of unifying various locality conditions (see Boeckx (2012) for an overview). However, this tradition inherits from Ross and other early work like Chomsky (1973) the idea that the patterns underlying island effects are syntactic in nature (Bošković 2015; Chomsky 2008; Phillips 2013a, 2013b; Sprouse 2007, 2012a, 2012b). Our discussion here—as well as the more lengthy arguments in Kluender (1991), Goldberg (2006), Hofmeister and Sag (2010), Chaves and Putnam (2020) and Kubota and Levine (2020)—targets this basic assumption, rather than the details of specific syntactic accounts. |

| 2 | Due to space considerations we are unable to survey every phenomenon that bears on this hypothesis. For research on a broad array of phenomena that appear to be consistent with the ERUH, see Francis (2022). Additionally, it appears plausible that the ERUH applies to other kinds of putative non-local constraints, such as Condition C and the binding of long-distance anaphors (Reinhart and Reuland 1991; Varaschin 2021; Varaschin et al. 2022). We also do not deal with weak islands such as wh-islands and negative islands, for which a range of both syntactic and semantic accounts have been proposed. For a review, see Szabolcsi and Lohndal (2017), who conclude that “it seems true beyond reasonable doubt that a substantial portion of this large [weak island—PWC, GV and SW] phenomenon is genuinely semantic in nature”, and Abrusán (2014). This work suggests that weak islands are consistent with the ERUH. See also Kroch (1998) for a pragmatic account of weak islands, and Gieselman et al. (2013) for experimental evidence that the unacceptability of extraction from negative islands arises from the interaction of various processing demands. |

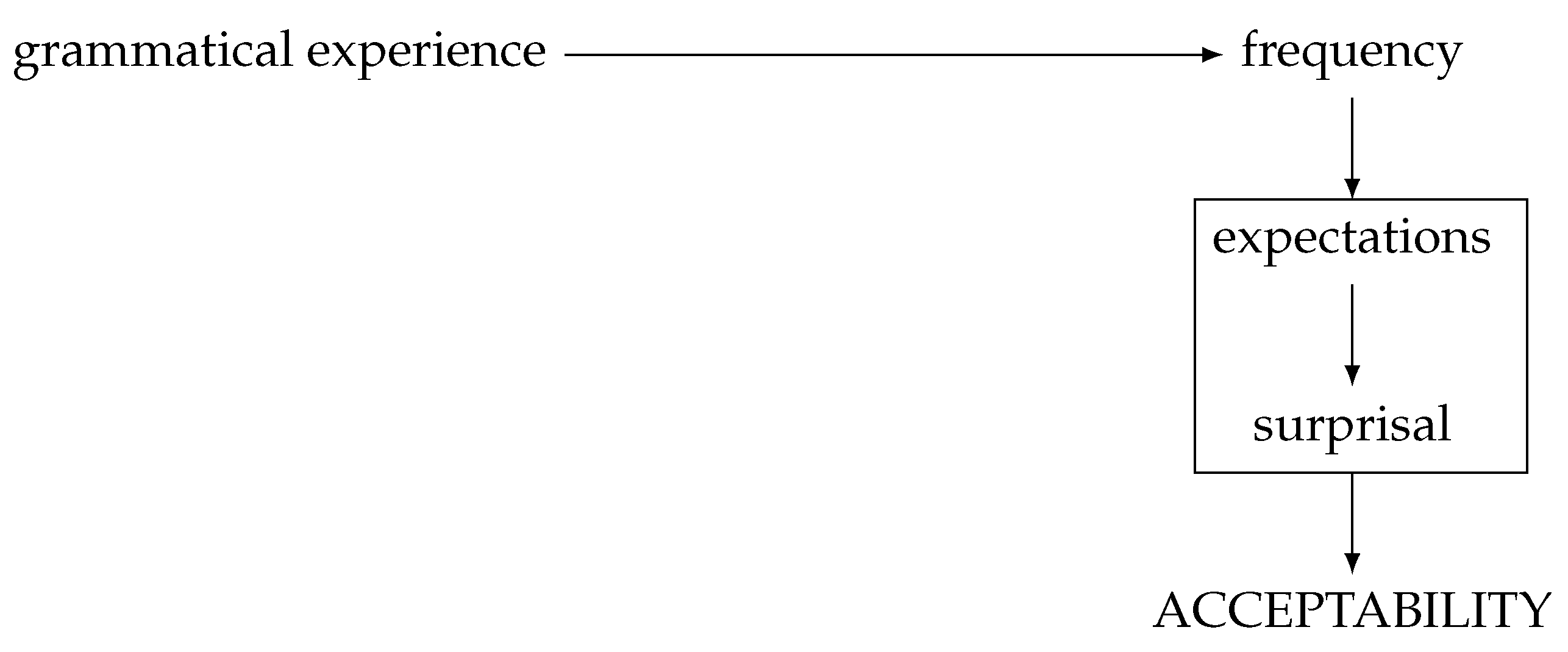

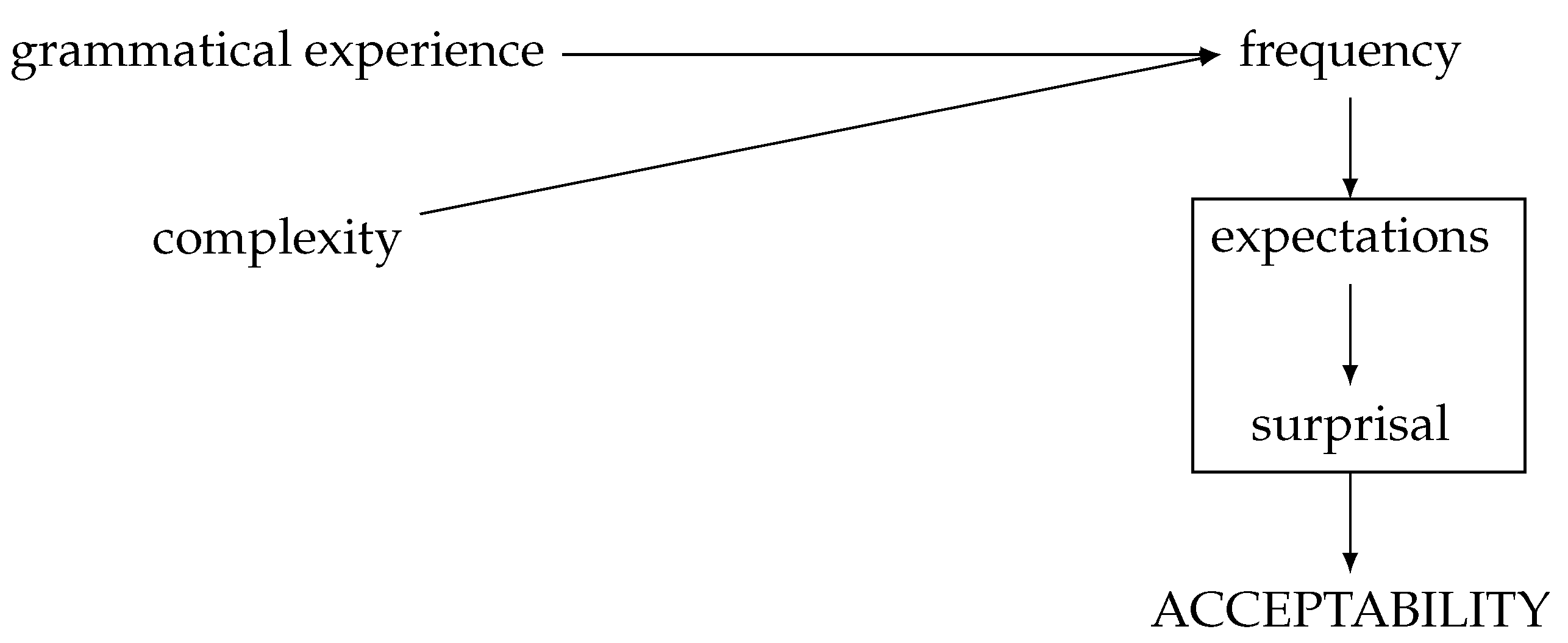

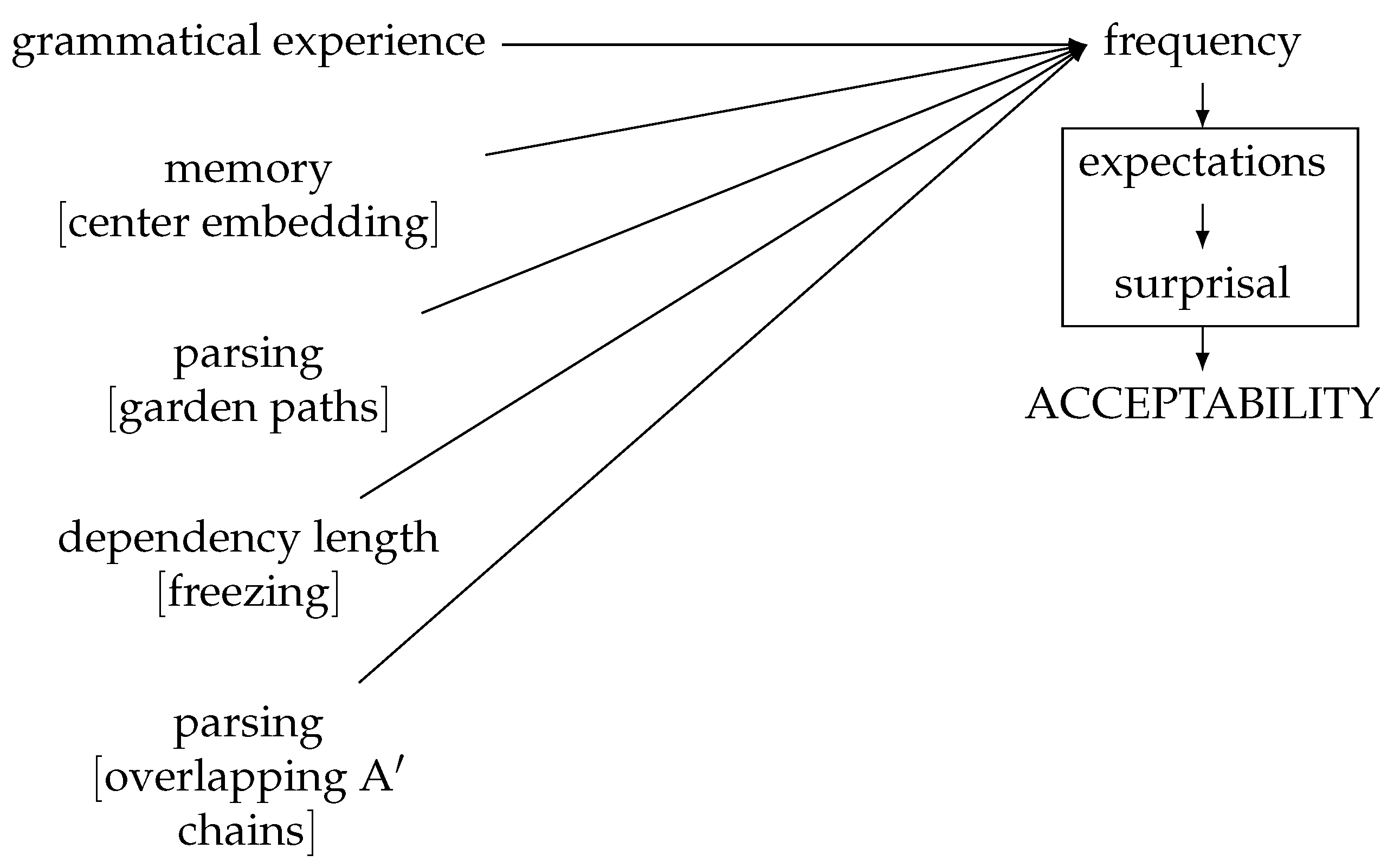

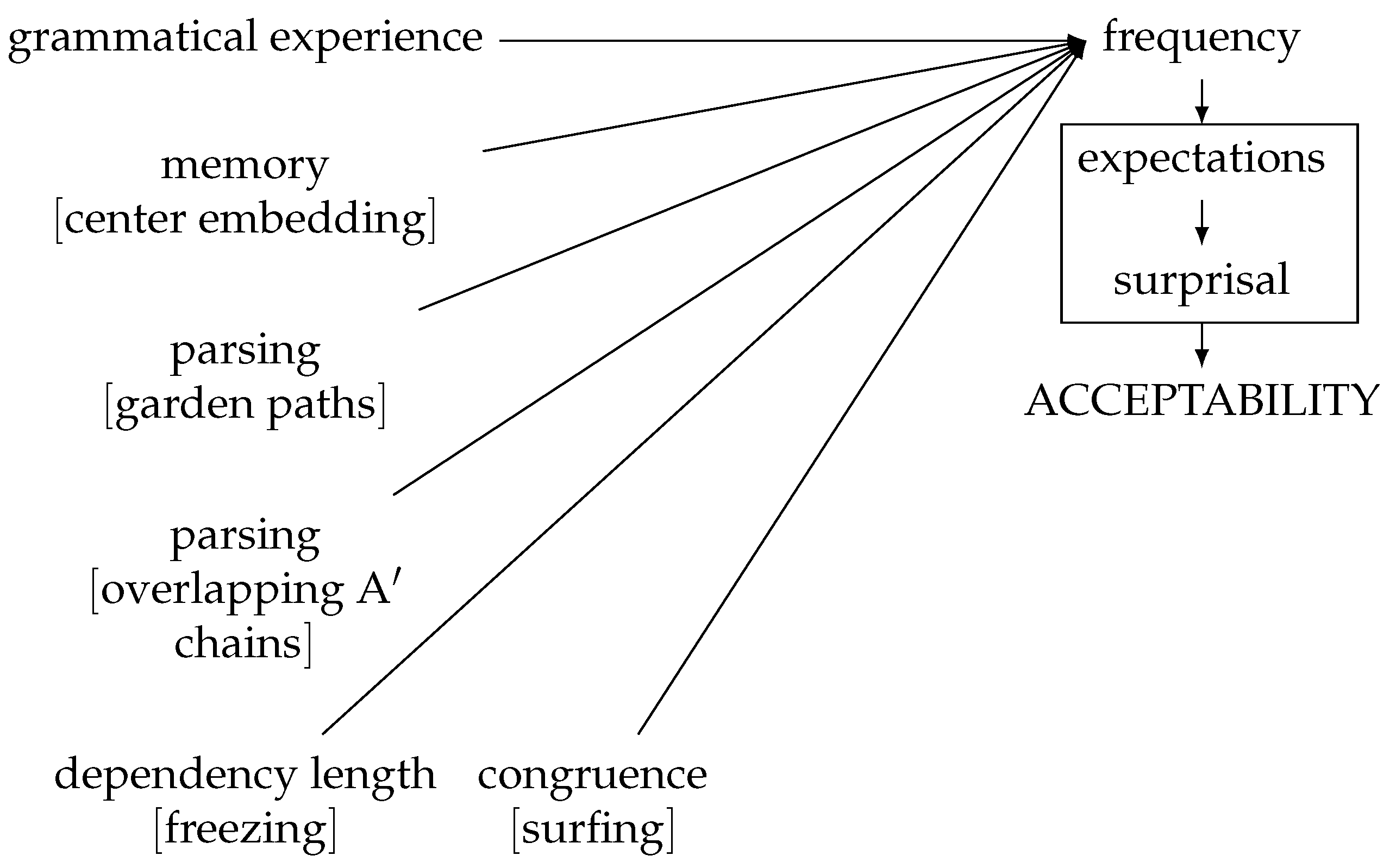

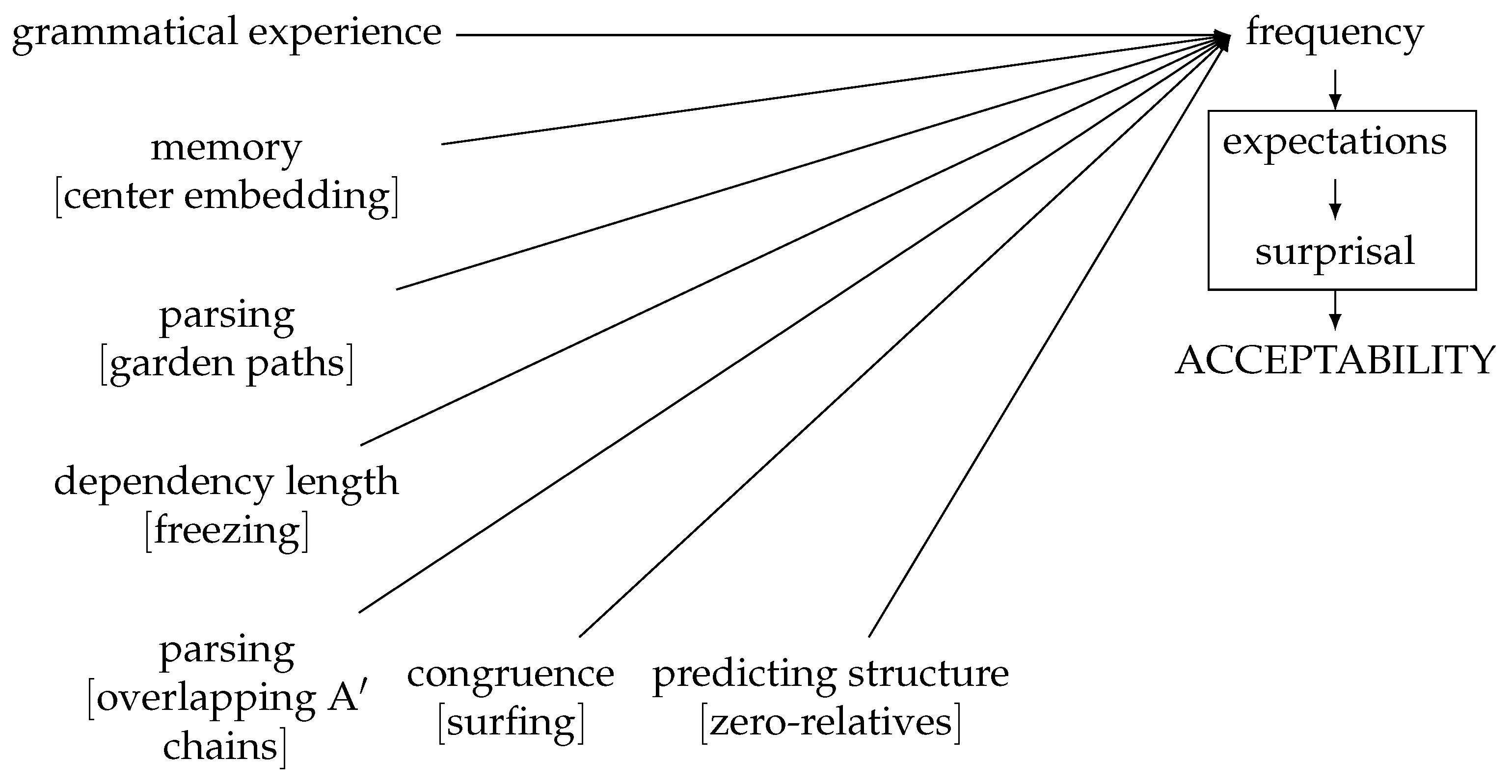

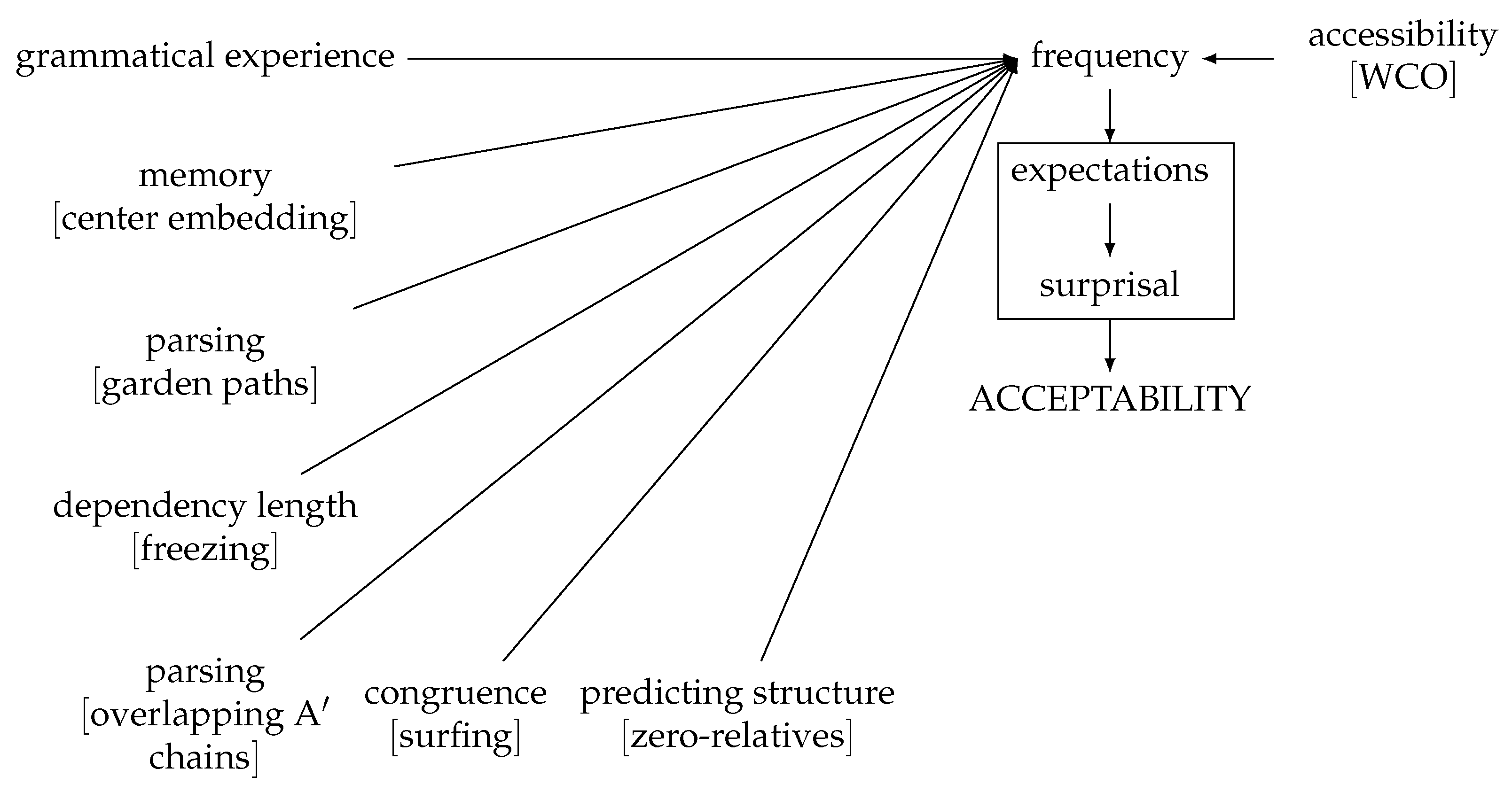

| 3 | More formally, Levy (2008) defines the surprisal associated with a given linguistic expression as its negative log probability conditional on all the previous expressions in the discourse and the relevant features of the extra-sentential context (written as context): $\mathrm{surprisal}(e_i) = -\log P(e_i \mid e_1, \ldots, e_{i-1}, \mathrm{context})$. Our use of surprisal is different in several respects from Levy’s. First, Levy (2008) defines surprisal relative to words. We are generalizing the notion to linguistic expressions in general, including words and phrases. Second, Levy documents the correlation between surprisal and performance measures such as reaction times, while we are focusing on the underlying processing and acceptability responses. In this respect we are following a line of research pursued by Park et al. (2021), who use surprisal to measure a deep learning language model’s knowledge of syntax. They explore the extent to which a language model’s surprisal score for pairs of sentences matches standard acceptability contrasts found in textbooks. They found that “the accuracy of BERT’s acceptability judgments [i.e., the correspondence between the surprisal value assigned by the language model, BERT, and the acceptability reported in textbooks] is fairly high” (Park et al. 2021, p. 420). |
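The definition of surprisal in note 3 can be made concrete with a small sketch. The code below is only an illustration under strong simplifying assumptions, not the procedure used by Levy (2008) or Park et al. (2021): the conditioning context is truncated to the single preceding word, probabilities come from an add-alpha smoothed bigram model, and the three-sentence corpus, the function name `bigram_surprisal` and the parameter `alpha` are all hypothetical.

```python
import math
from collections import Counter


def bigram_surprisal(corpus, sentence, alpha=1.0):
    """Per-word surprisal in bits under an add-alpha smoothed bigram model.

    Assumption: the context is only the previous word, whereas the
    definition in note 3 conditions on the whole preceding discourse.
    """
    vocab = {w for sent in corpus for w in sent} | set(sentence)
    context_counts = Counter()  # how often each word serves as left context
    bigram_counts = Counter()   # how often each (previous, current) pair occurs
    for sent in corpus:
        prev = "<s>"  # sentence-initial marker
        for word in sent:
            context_counts[prev] += 1
            bigram_counts[(prev, word)] += 1
            prev = word

    scores = []
    prev = "<s>"
    for word in sentence:
        prob = (bigram_counts[(prev, word)] + alpha) / (
            context_counts[prev] + alpha * len(vocab)
        )
        scores.append((word, -math.log2(prob)))  # surprisal = -log P
        prev = word
    return scores


# Hypothetical toy corpus; the scrambled order mirrors example (3).
toy_corpus = [
    s.split()
    for s in ("sandy drank the beer", "kim drank the wine", "sandy read the book")
]
canonical = sum(s for _, s in bigram_surprisal(toy_corpus, "sandy drank the beer".split()))
scrambled = sum(s for _, s in bigram_surprisal(toy_corpus, "sandy the beer drank".split()))
print(f"canonical: {canonical:.2f} bits, scrambled: {scrambled:.2f} bits")
```

On this toy corpus the word order of (3) receives a higher total surprisal than the canonical order, which is the direction of the correlation with acceptability that the note describes.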

| 4 | The frequency that determines expectations is not that of sequences of strings, but, rather, of linguistic expressions, minimally construed as correspondences of phonological, syntactic, and semantic information (Goldberg 1995, 2006; Jackendoff 2002; Michaelis 2012; Sag 2012). This caveat is necessary in order to avoid the objection Chomsky (1957, pp. 15–17) raised to statistical approaches. In the context I saw a fragile _, the strings bassoon and of may share an equal frequency in the past linguistic experience of an English speaker (≈0). However, since the speaker independently knows that bassoon is a noun and of is a preposition and the sequence fragile NP is much more frequent than fragile P, the expectation (and, therefore, the acceptability) for the former is much higher than for the latter. |
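The contrast drawn in note 4 can be sketched numerically. In the snippet below every count is invented for illustration, and the names `CATEGORY`, `STRING_COUNTS`, `CATEGORY_AFTER_ADJECTIVE` and `category_surprisal` are hypothetical. The point is only that two continuations with identical (zero) string frequency can still differ sharply in expectation once frequency is tallied over categories.

```python
import math

# Hypothetical lexicon: the speaker knows each word's syntactic category.
CATEGORY = {"bassoon": "N", "of": "P"}

# Invented string-level counts: neither continuation of "fragile" was ever heard.
STRING_COUNTS = {("fragile", "bassoon"): 0, ("fragile", "of"): 0}

# Invented category-level counts: what follows an adjective in past experience.
CATEGORY_AFTER_ADJECTIVE = {"N": 980, "P": 20}


def category_surprisal(word):
    """Surprisal in bits of the category of `word` right after an adjective."""
    total = sum(CATEGORY_AFTER_ADJECTIVE.values())
    prob = CATEGORY_AFTER_ADJECTIVE[CATEGORY[word]] / total
    return -math.log2(prob)


for candidate in ("bassoon", "of"):
    print(candidate, STRING_COUNTS[("fragile", candidate)], round(category_surprisal(candidate), 2))
```

A fuller model would combine category-level and word-level information; the sketch leaves that out to keep the comparison visible.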

| 5 | For instance, in order to state a syntactic restriction against multiple center-embeddings, we would need some way of counting the number of embedded clauses; in order to account for (5), we would need the syntactic constraint on A′ movement to be sensitive to the position of the gap in the linear order of the string (which contradicts the widespread assumption that transformations are structure-dependent). The very idea of syntactic constraints on unbounded dependencies also entails a non-trivial extension of the vocabulary of syntactic theory insofar as it requires ways of referring to chunks of syntactic representations of an indeterminate size, as discussed in connection to (2) above. |

| 6 | A reviewer correctly points out that in principle failure of a particular example to observe a proposed syntactic constraint could be a ‘grammatical illusion’ (Christensen 2016; de Dios-Flores 2019; Engelmann and Vasishth 2009; Phillips et al. 2011; Trotzke et al. 2013). Clearly, such a possibility always exists where there are differences in judgments of acceptability. However, in order to appeal to a grammatical illusion to account for the acceptability of an island violation it is important to show that doing so results in a simplification of the theory of grammar; otherwise, one can always appeal to a grammatical illusion in order to get around any counterexample to a proposed syntactic constraint. Quite the opposite appears to be the case for islands. As Phillips (2013a, p. 54) puts it, “[n]atural language grammars would probably be simpler if there were no island constraints”. The reasons relate to the point we made above about how syntactic accounts require extending the descriptive vocabulary of grammatical theory. |

| 7 | We note that evolutionary considerations are not incompatible per se with a syntactic approach to islands. On such a view, it would be necessary to show that island effects follow from an interaction of general architectural features of the syntactic part of language that could independently be justified on evolutionary grounds. We are not aware of such a demonstration. Hauser et al. (2002) suggest an alternative view, where island constraints arise automatically from solutions to the problem of optimizing the syntactic outputs constructed by the “narrow” faculty of language to the constraints imposed by the “broad” faculty of language – i.e., the cognitive systems that the syntax interacts with. If the latter are understood to include processing systems, Hauser et al.’s (2002) hypothesis can be seen as an instance of the RUH. |

| 8 | In fact, the experiments they report demonstrate that manipulation of frequency has an effect on acceptability judgments for island extractions. |

| 9 | Ross’s formulation of the constraint reflects the fact that it is not possible to extract from an extraposed relative clause, even though it is not in a configuration that would fall under the Complex NP Constraint. Thus we see right at the start the treatment of freezing as a special type of island phenomenon. |

| 10 | For other proposals that take chain interactions to result in ungrammaticality, see Chomsky’s (1977) discussion of the interaction of wh-movement and tough-movement and also Fodor (1978), and Pesetsky (1982). In contrast, Collins (2005) proposes an account of the English passive that requires movement of a sub-constituent from a larger, moved constituent. |

| 11 | It should also be noted that there are phenomena where greater distance between dependent elements appears to improve acceptability (see, for example, Vasishth and Lewis 2006). Such ‘anti-locality’ effects suggest that there are yet other factors at play, such as predictability related to selection (Levy and Keller 2013; Rajkumar et al. 2016). Moreover, research on the processing of relative clauses in languages such as Japanese and Korean suggests that there may be a preference of extraction of subjects over objects even though the gaps corresponding to the subjects are arguably further from the head (see, for example, Nakamura and Miyamoto 2013; Ueno and Garnsey 2008). These data favor the view that dependency length should be measured in terms of complexity of branching structure, given that in head-final languages the position of subject gaps is linearly farther but hierarchically closer to the position of the filler noun. |

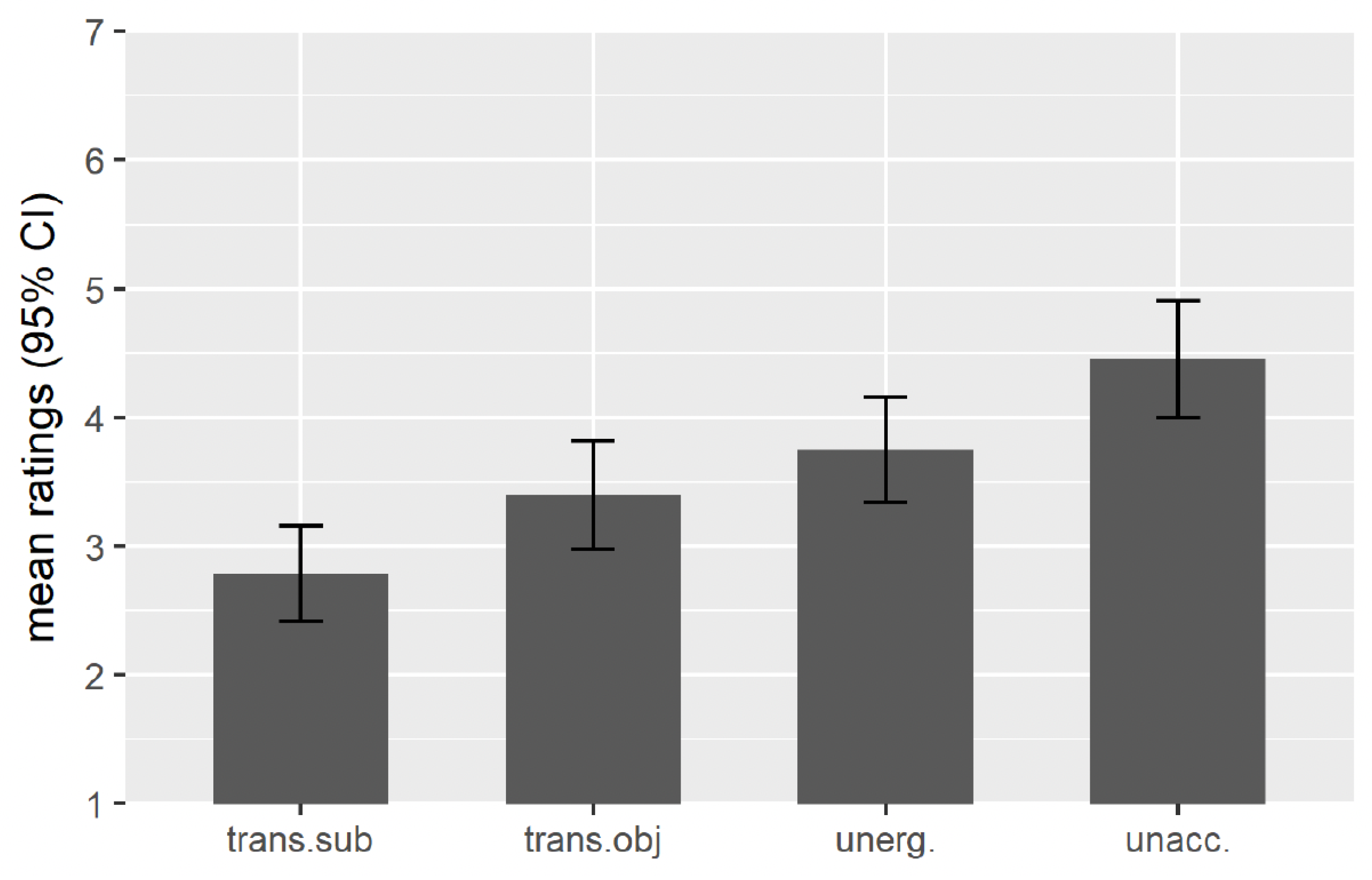

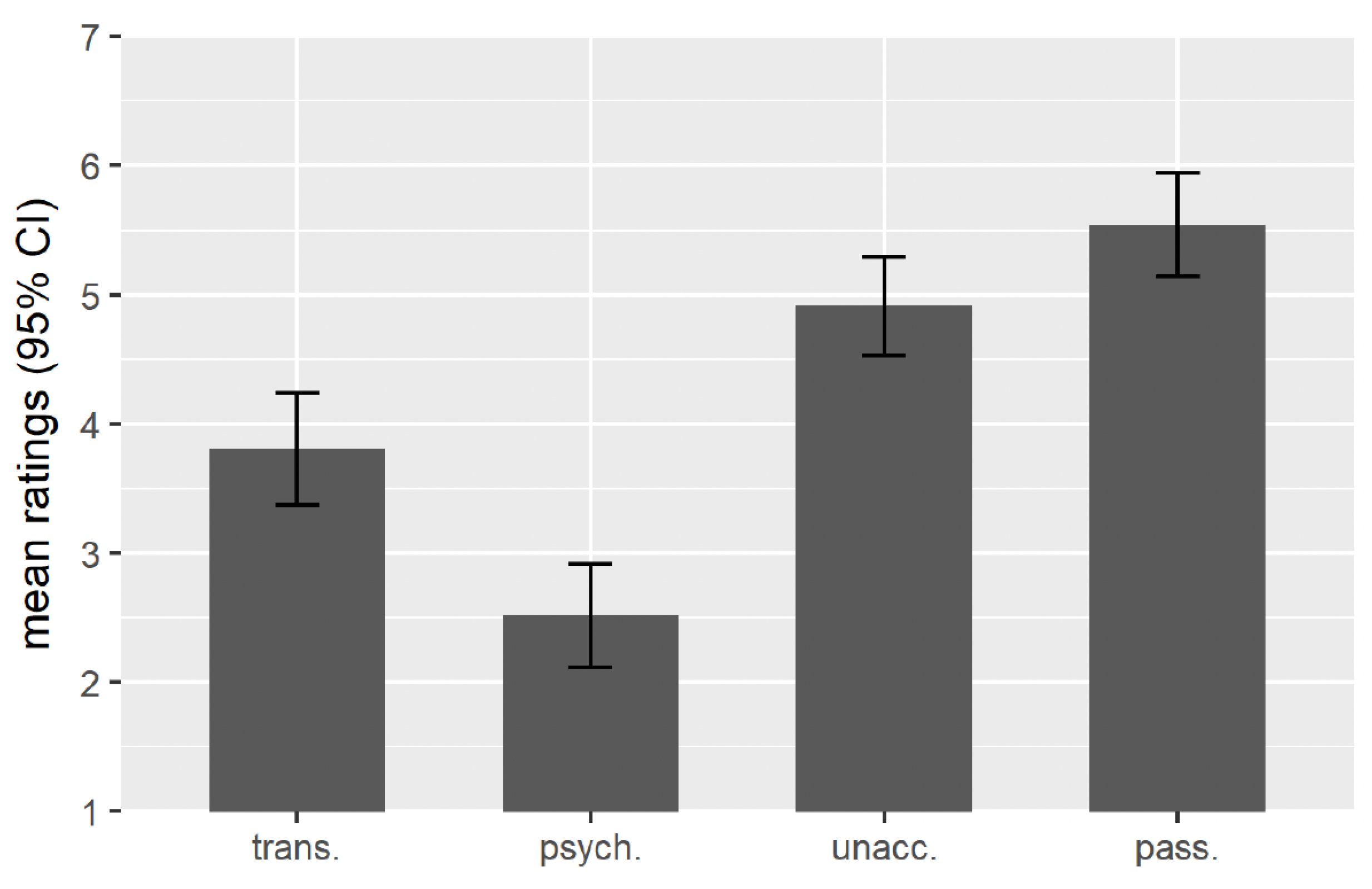

| 12 | |

| 13 | For completeness we note that there is a range of cases of purported freezing that do not immediately lend themselves to explanations in terms of non-syntactic factors. Among these are phenomena in German (Bayer 2018; Müller 2018), and Dutch (Corver 2018). These phenomena await a more extensive analysis than we can provide here. |

| 14 | Crossing is also seen in another type of example that fell under the freezing account of Wexler and Culicover (1980):

|

| 15 | The dependency length literature suggests that minimization of dependency length alone is not sufficient to account for structural preferences reflecting degree of congruence (Kuhlmann and Nivre 2006). Also relevant are the degree of adjacency of dependent constituents, measured by gap degree, which measures the number of discontinuities within a subtree, edge degree, which measures the number of intervening constituents spanned by a single edge, and the disjointness of constituents, measured by well-nestedness (Kuhlmann and Nivre 2006, p. 511). |
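Two of the measures mentioned in note 15, gap degree and well-nestedness, can be computed directly from a dependency tree. The sketch below follows the definitions as they are usually stated after Kuhlmann and Nivre (2006); the encoding is an assumption made here (0-based word positions, `heads[i]` giving the head of word `i`, `-1` for the root), the function names are hypothetical, and edge degree is left out.

```python
def yields(heads):
    """Map each node to the sorted positions of the subtree it roots."""
    result = {i: {i} for i in range(len(heads))}
    for i in range(len(heads)):
        h = heads[i]
        while h != -1:          # climb to the root, registering i with each ancestor
            result[h].add(i)
            h = heads[h]
    return {i: sorted(s) for i, s in result.items()}


def gap_degree(heads):
    """Largest number of discontinuities found in the yield of any node."""
    def gaps(positions):
        return sum(1 for a, b in zip(positions, positions[1:]) if b - a > 1)
    return max(gaps(y) for y in yields(heads).values())


def interleave(a, b):
    """True if some l1 < l2 < r1 < r2 exists with l1, r1 in a and l2, r2 in b."""
    pos = -1
    for source in (a, b, a, b):  # greedily pick the earliest admissible position
        later = [p for p in source if p > pos]
        if not later:
            return False
        pos = min(later)
    return True


def well_nested(heads):
    """True if no two disjoint subtrees interleave."""
    ys = yields(heads)
    nodes = list(ys)
    for i, u in enumerate(nodes):
        for v in nodes[i + 1:]:
            if set(ys[u]) & set(ys[v]):
                continue         # one subtree contains the other: not disjoint
            if interleave(ys[u], ys[v]) or interleave(ys[v], ys[u]):
                return False
    return True


projective = [1, -1, 1]          # both dependents attach to the middle word
crossing = [4, 4, 0, 1, -1]      # subtrees {0, 2} and {1, 3} interleave
print(gap_degree(projective), well_nested(projective))
print(gap_degree(crossing), well_nested(crossing))
```

The second tree shows why gap degree alone is not enough: its gap degree is only 1, yet its two discontinuous subtrees cross each other, so it is not well-nested.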

| 16 | |

| 17 | |

| 18 | |

| 19 | For a computational account of crossover effects in terms of linear order processing, see Shan and Barker (2006). |

| 20 | The lower accessibility of Frank would justify repeating the name Frank or using some other referential phrase carrying a higher degree of informativity. Repetition of Charlie in (28), in turn, would have been redundant and would, as a result, contribute to increased processing complexity (Gordon and Hendrick 1998). |

| 21 | We show below that the relative unacceptability of (30c–e) vs. (30a) is related to the Uninvited Guest in virtue of the presence of additional referring expressions as subjects as well as finite tense (cf. Kluender 1998). |

| 22 | Throughout most of the history of transformational grammar, the Coordinate Structure Constraint has resisted an integration into general syntactic theories of islands like the ones proposed by Chomsky (1973, 1977, 1986, 2008). However, it did play an important role in non-transformational theories like GPSG and HPSG (Gazdar 1981; Pollard and Sag 1994). More recently, minimalist accounts of both parts of (40) have been proposed which make critical use of non-local grammatical constraints, such as Chomsky’s (2000) Phase Impenetrability Condition and Rizzi’s (1990) Relativized Minimality (Bošković 2020; Oda 2021). Relativized minimality counts as a non-local constraint in our sense because, even in the absence of interveners, the distance between a target position and a movement trace can still be arbitrarily large. A similar observation applies to the size of the domain of a phase (i.e., the spell-out domain), from which extraction is ruled out by the Phase Impenetrability Condition (Chomsky 2000). |

| 23 | This is ultimately the strategy advocated by Chaves and Putnam (2020, pp. 102–3). |

References

- Abeillé, Anne, Barbara Hemforth, Elodie Winckel, and Edward Gibson. 2020. Extraction from subjects: Differences in acceptability depend on the discourse function of the construction. Cognition 204: 104293.

- Abrusán, Márta. 2014. Weak Island Semantics. Oxford: Oxford University Press.

- Almor, Amit. 1999. Noun-phrase anaphora and focus: The informational load hypothesis. Psychological Review 106: 748–65.

- Almor, Amit. 2000. Constraints and mechanisms in theories of anaphor processing. In Architectures and Mechanisms for Language Processing. Edited by Matthew W. Crocker, Martin J. Pickering and Charles Clifton. Cambridge, MA: MIT Press, pp. 341–54.

- Almor, Amit, and Veena A. Nair. 2007. The form of referential expressions in discourse. Language and Linguistics Compass 1: 84–99.

- Ariel, Mira. 1988. Referring and accessibility. Journal of Linguistics 24: 65–87.

- Ariel, Mira. 1990. Accessing Noun-Phrase Antecedents. London: Routledge.

- Ariel, Mira. 1991. The function of accessibility in a theory of grammar. Journal of Pragmatics 16: 443–63.

- Ariel, Mira. 1994. Interpreting anaphoric expressions: A cognitive versus a pragmatic approach. Journal of Linguistics 30: 3–42.

- Ariel, Mira. 2001. Accessibility theory: An overview. In Text Representation: Linguistic and Psycholinguistic Aspects. Edited by Ted J. M. Sanders, Joost Schilperoord and Wilbert Spooren. Amsterdam: John Benjamins Publishing Company, pp. 29–87.

- Ariel, Mira. 2004. Accessibility marking: Discourse functions, discourse profiles, and processing cues. Discourse Processes 37: 91–116.

- Arnold, Jennifer E., and Zenzi M. Griffin. 2007. The effect of additional characters on choice of referring expression: Everyone counts. Journal of Memory and Language 56: 521–36.

- Arnold, Jennifer E. 2010. How speakers refer: The role of accessibility. Language and Linguistics Compass 4: 187–203.

- Arnold, Jennifer E., and Michael K. Tanenhaus. 2011. Disfluency effects in comprehension: How new information can become accessible. In The Processing and Acquisition of Reference. Edited by Edward A. Gibson and Neal J. Pearlmutter. Cambridge, MA: MIT Press, pp. 197–217.

- Arnon, Inbal, Philip Hofmeister, T. Florian Jaeger, Ivan A. Sag, and Neal Snider. 2005. Rethinking superiority effects: A processing model. Paper presented at the 18th Annual CUNY Conference on Human Sentence Processing, University of Arizona, Tucson, AZ, March 2.

- Baltin, Mark. 1981. Strict bounding. In The Logical Problem of Language Acquisition. Edited by C. Leroy Baker and John McCarthy. Cambridge, MA: MIT Press.

- Bayer, Josef. 2004. Was beschränkt die Extraktion? Subjekt-Objekt vs. Topic-Fokus. In Deutsche Syntax: Empirie und Theorie. Edited by Franz-Josef D’Avis. Volume 46 of Acta Universitatis Gothoburgensis. Göteborg: Göteborger Germanistische Forschungen, pp. 233–57.

- Bayer, Josef. 2018. Criterial freezing in the syntax of particles. In Freezing: Theoretical Approaches and Empirical Domains. Edited by Jutta Hartmann, Marion Jäger, Andreas Konietzko and Susanne Winkler. Berlin: De Gruyter, pp. 224–63.

- Berwick, Robert C., and Noam Chomsky. 2016. Why Only Us: Language and Evolution. Cambridge, MA: MIT Press.

- Bever, Thomas. 1970. The cognitive basis for linguistic structures. In Cognition and the Development of Language. Edited by John R. Hayes. New York: John Wiley & Sons, pp. 279–362.

- Bloomfield, Leonard. 1933. Language. New York and London: Henry Holt and Co. and Allen and Unwin Ltd.

- Boeckx, Cedric. 2008. Islands. Language and Linguistics Compass 2: 151–67.

- Boeckx, Cedric. 2012. Syntactic Islands. Cambridge: Cambridge University Press.

- Borsley, Robert D. 2005. Against ConjP. Lingua 115: 461–82.

- Bošković, Željko. 2015. From the complex NP constraint to everything: On deep extractions across categories. The Linguistic Review 32: 603–69.

- Bošković, Željko. 2020. On the Coordinate Structure Constraint, across-the-board-movement, phases, and labeling. In Recent Developments in Phase Theory. Edited by Jeroen van Craenenbroeck, Cora Pots and Tanja Temmerman. Berlin: De Gruyter Mouton, pp. 133–82.

- Bouchard, Denis. 1984. On the Content of Empty Categories. Dordrecht: Foris.

- Bouma, Gosse, Robert Malouf, and Ivan Sag. 2001. Satisfying constraints on extraction and adjunction. Natural Language and Linguistic Theory 19: 1–65.

- Bybee, Joan. 2006. From usage to grammar: The mind’s response to repetition. Language 82: 711–33.

- Bybee, Joan. 2010. Language, Usage and Cognition. Cambridge: Cambridge University Press.

- Bybee, Joan L., and Paul J. Hopper. 2001. Frequency and the Emergence of Linguistic Structure. Amsterdam and Philadelphia: John Benjamins Publishing Company.

- Chaves, Rui P. 2007. Coordinate Structures: Constraint-Based Syntax-Semantics Processing. Ph.D. thesis, Universidade de Lisboa, Lisbon, Portugal.

- Chaves, Rui P. 2013. An expectation-based account of subject islands and parasitism. Journal of Linguistics 49: 285–327.

- Chaves, Rui P. 2020. Island phenomena and related matters. In Head-Driven Phrase Structure Grammar: The Handbook. Edited by Stefan Müller, Anne Abeillé, Robert D. Borsley and Jean-Pierre Koenig. Berlin: Language Science Press.

- Chaves, Rui P., and Jeruen E. Dery. 2014. Which subject islands will the acceptability of improve with repeated exposure? In Proceedings of the Thirty-First Meeting of the West Coast Conference on Formal Linguistics, Arizona State University, February 7–9, 2013. Edited by Robert E. Santana-LaBarge. Somerville, MA: Cascadilla Proceedings Project, pp. 96–106.

- Chaves, Rui P., and Jeruen E. Dery. 2019. Frequency effects in subject islands. Journal of Linguistics 55: 475–521.

- Chaves, Rui P., and Michael T. Putnam. 2020. Unbounded Dependency Constructions: Theoretical and Experimental Perspectives. Oxford: Oxford University Press.

- Chomsky, Noam. 1957. Syntactic Structures. The Hague: Mouton.

- Chomsky, Noam. 1965. Aspects of the Theory of Syntax. Cambridge, MA: MIT Press.

- Chomsky, Noam. 1973. Conditions on transformations. In A Festschrift for Morris Halle. Edited by Stephen Anderson and Paul Kiparsky. New York: Holt, Rinehart & Winston, pp. 232–86.

- Chomsky, Noam. 1977. On wh-movement. In Formal Syntax. Edited by Peter W. Culicover, Thomas Wasow and Adrian Akmajian. New York: Academic Press, pp. 71–132.

- Chomsky, Noam. 1986. Barriers. Cambridge, MA: MIT Press.

- Chomsky, Noam. 2000. Minimalist inquiries: The framework. In Step by Step: Essays on Minimalist Syntax in Honor of Howard Lasnik. Edited by Roger Martin, David Michaels and Juan Uriagereka. Cambridge, MA: MIT Press, pp. 89–156.

- Chomsky, Noam. 2001. Derivation by phase. In Ken Hale: A Life in Linguistics. Edited by Michael Kenstowicz. Cambridge, MA: MIT Press, pp. 1–52.

- Chomsky, Noam. 2008. On phases. In Foundational Issues in Linguistic Theory: Essays in Honor of Jean-Roger Vergnaud. Edited by Robert Freidin, Carlos P. Otero and Maria Luisa Zubizarreta. Cambridge, MA: MIT Press, pp. 133–66.

- Chomsky, Noam, Ángel J. Gallego, and Dennis Ott. 2019. Generative grammar and the faculty of language: Insights, questions, and challenges. Catalan Journal of Linguistics 18: 229–61.

- Christensen, Ken Ramshøj. 2016. The dead ends of language: The (mis)interpretation of a grammatical illusion. In Let Us Have Articles Betwixt Us: Papers in Historical and Comparative Linguistics in Honour of Johanna L. Wood. Edited by Sten Vikner, Henrik Jørgensen and Elly van Gelderen. Aarhus: Aarhus University, pp. 129–59. [Google Scholar]

- Citko, Barbara. 2014. Phase Theory: An Introduction. Cambridge: Cambridge University Press. [Google Scholar]

- Collins, Chris. 2005. A smuggling approach to the passive in English. Syntax 8: 81–120. [Google Scholar] [CrossRef]

- Corballis, Michael C. 2017. The evolution of language. In APA Handbook of Comparative Psychology: Basic Concepts, Methods, Neural Substrate, and Behavior. Edited by Josep Call, Gordon M. Burghardt, Irene M. Pepperberg, Charles T. Snowdon and Thomas R. Zentall. Washington, DC: American Psychological Association, pp. 273–97. [Google Scholar]

- Corver, Norbert. 2017. Freezing effects. In The Blackwell Companion to Syntax, 2nd ed. Edited by Martin Everaert and Henk van Riemsdijk. Malden: Blackwell Publishing. [Google Scholar]

- Corver, Norbert. 2018. The freezing points of the (Dutch) adjectival system. In Freezing: Theoretical Approaches and Empirical Domains. Edited by Jutta Hartmann, Marion Jäger, Andreas Konietzko and Susanne Winkler. Berlin: De Gruyter, pp. 143–94. [Google Scholar]

- Culicover, Peter W. 2005. Squinting at Dali’s Lincoln: On how to think about language. In Proceedings of the Annual Meeting of the Chicago Linguistic Society. Chicago: Chicago Linguistic Society, vol. 41, pp. 109–28. [Google Scholar]

- Culicover, Peter W. 2013a. English (zero-)relatives and the competence-performance distinction. International Review of Pragmatics 5: 253–70. [Google Scholar] [CrossRef] [Green Version]

- Culicover, Peter W. 2013b. Explaining Syntax. Oxford: Oxford University Press. [Google Scholar]

- Culicover, Peter W. 2013c. Grammar and Complexity: Language at the Intersection of Competence and Performance. Oxford: Oxford University Press. [Google Scholar]

- Culicover, Peter W. 2013d. The role of linear order in the computation of referential dependencies. Lingua 136: 125–44. [Google Scholar] [CrossRef]

- Culicover, Peter W. 2015. Simpler Syntax and the mind. In Structures in the Mind: Essays on Language, Music, and Cognition in Honor of Ray Jackendoff. Edited by Ida Toivonen, Piroska Csuri and Emile Van Der Zee. Cambridge, MA: MIT Press, pp. 3–20. [Google Scholar]

- Culicover, Peter W. 2021. Language Change, Variation and Universals—A Constructional Approach. Oxford: Oxford University Press. [Google Scholar]

- Culicover, Peter W., and Robert D. Levine. 2001. Stylistic inversion in English: A reconsideration. Natural Language & Linguistic Theory 19: 283–310. [Google Scholar]

- Culicover, Peter W., and Andrzej Nowak. 2002. Markedness, antisymmetry and complexity of constructions. Linguistic Variation Yearbook 2: 5–30. [Google Scholar] [CrossRef] [Green Version]

- Culicover, Peter W., and Andrzej Nowak. 2003. Dynamical Grammar: Minimalism, Acquisition and Change. Oxford: Oxford University Press. [Google Scholar]

- Culicover, Peter W., and Michael Rochemont. 1983. Stress and focus in English. Language 59: 123–65. [Google Scholar] [CrossRef]

- Culicover, Peter W., and Susanne Winkler. 2018. Freezing, between grammar and processing. In Freezing: Theoretical Approaches and Empirical Domains. Edited by Jutta Hartmann, Marion Jäger, Andreas Konietzko and Susanne Winkler. Berlin: De Gruyter, pp. 353–86. [Google Scholar]

- Culicover, Peter W., and Susanne Winkler. 2022. Parasitic gaps aren’t parasitic or, the Case of the Uninvited Guest. The Linguistic Review 39: 1–35. [Google Scholar] [CrossRef]

- de Dios-Flores, Iria. 2019. Processing sentences with multiple negations: Grammatical structures that are perceived as unacceptable. Frontiers in Psychology 10: 2346. [Google Scholar] [CrossRef] [PubMed]

- De Kuthy, Kordula, and Andreas Konietzko. 2019. Information structural constraints on PP topicalization from NPs. In Architecture of Topic. Edited by Valéria Molnár, Verner Egerland and Susanne Winkler. Berlin and New York: De Gruyter, pp. 203–22. [Google Scholar]

- Deane, Paul. 1991. Limits to attention: A cognitive theory of island phenomena. Cognitive Linguistics 2: 1–63. [Google Scholar] [CrossRef]

- Emonds, Joseph E. 1985. A Unified Theory of Syntactic Categories. Dordrecht: Foris. [Google Scholar]

- Engelmann, Felix, and Shravan Vasishth. 2009. Processing grammatical and ungrammatical center embeddings in English and German: A computational model. Paper presented at Ninth International Conference on Cognitive Modeling, Manchester, UK, July 24–26; pp. 240–45. [Google Scholar]

- Erteschik-Shir, Nomi. 1977. On the Nature of Island Constraints. Ph.D. thesis, MIT, Cambridge, MA, USA. [Google Scholar]

- Erteschik-Shir, Nomi. 2007. Information Structure: The Syntax-Discourse Interface. Oxford: Oxford University Press. [Google Scholar]

- Erteschik-Shir, Nomi, and Shalom Lappin. 1979. Dominance and the functional explanation of island constraints. Theoretical Linguistics 6: 43–84. [Google Scholar] [CrossRef]

- Fodor, Janet Dean. 1978. Parsing strategies and constraints on transformations. Linguistic Inquiry 9: 427–73. [Google Scholar]

- Fodor, Janet Dean. 1983. Phrase structure parsing and the island constraints. Linguistics and Philosophy 6: 163–223. [Google Scholar] [CrossRef]

- Frampton, John. 1990. Parasitic gaps and the theory of wh-chains. Linguistic Inquiry 21: 49–78. [Google Scholar]

- Francis, Elaine J. 2022. Gradient Acceptability and Linguistic Theory. Oxford: Oxford University Press. [Google Scholar]

- Frazier, Lyn, and Charles Clifton. 1989. Successive cyclicity in the grammar and the parser. Language and Cognitive Processes 4: 93–126. [Google Scholar] [CrossRef]

- Futrell, Richard, Kyle Mahowald, and Edward Gibson. 2015. Large-scale evidence of dependency length minimization in 37 languages. Proceedings of the National Academy of Sciences 112: 10336–41. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Gazdar, Gerald. 1980. A cross-categorial semantics for coordination. Linguistics and Philosophy 3: 407–9. [Google Scholar] [CrossRef]

- Gazdar, Gerald. 1981. Unbounded dependencies and coordinate structure. Linguistic Inquiry 12: 155–84. [Google Scholar]

- Gazdar, Gerald, Ewan Klein, Geoffrey Pullum, and Ivan A. Sag. 1985. Generalized Phrase Structure Grammar. Oxford and Cambridge: Blackwell Publishing and Harvard University Press. [Google Scholar]

- Gernsbacher, Morton. 1989. Mechanisms that improve referential access. Cognition 32: 99–156. [Google Scholar] [CrossRef] [Green Version]

- Gibson, Edward. 1998. Linguistic complexity: Locality of syntactic dependencies. Cognition 68: 1–76. [Google Scholar] [CrossRef]

- Gibson, Edward. 2000. The dependency locality theory: A distance-based theory of linguistic complexity. In Image, Language, Brain. Edited by Alec P. Marantz, Yasushi Miyashita and Wayne O’Neil. Cambridge, MA: MIT Press, pp. 95–126. [Google Scholar]

- Gieselman, Simone, Robert Kluender, Ivano Caponigro, Yelena Fainleib, Nicholas LaCara, and Yangsook Park. 2013. Isolating processing factors in negative island contexts. Proceedings of NELS 41: 233–46. [Google Scholar]

- Ginzburg, Jonathan, and Ivan A. Sag. 2000. Interrogative Investigations. Stanford: CSLI Publications. [Google Scholar]

- Givón, Talmy. 1983. Topic Continuity in Discourse: A Quantitative Cross-Language Study. Amsterdam: John Benjamins Publishing Company. [Google Scholar]

- Goldberg, Adele E. 1995. Constructions: A Construction Grammar Approach to Argument Structure. Chicago: University of Chicago Press. [Google Scholar]

- Goldberg, Adele E. 2006. Constructions at Work: The Nature of Generalization in Language. Oxford: Oxford University Press. [Google Scholar]

- Goldsmith, John. 1985. A principled exception to the Coordinate Structure Constraint. In Proceedings of the Chicago Linguistic Society 21. Edited by William H. Eilfort, Paul D. Kroeber and Karen L. Peterson. pp. 133–43. [Google Scholar]

- Gordon, Peter C., and Randall Hendrick. 1998. The representation and processing of coreference in discourse. Cognitive Science 22: 389–424. [Google Scholar] [CrossRef]

- Gould, Stephen Jay, and Richard C. Lewontin. 1979. The spandrels of San Marco and the Panglossian paradigm: A critique of the adaptationist programme. Proceedings of the Royal Society of London. Series B. Biological Sciences 205: 581–98. [Google Scholar]

- Grice, H. Paul. 1975. Logic and conversation. In Speech Acts. Edited by Peter Cole and Jerry L. Morgan. Volume 3 of Syntax and Semantics. New York: Academic Press, pp. 43–58. [Google Scholar]

- Grimshaw, Jane. 1997. Projection, heads, and optimality. Linguistic Inquiry 28: 373–422. [Google Scholar]

- Grosu, Alexander. 1973. On the nonunitary nature of the Coordinate Structure Constraint. Linguistic Inquiry 4: 88–92. [Google Scholar]

- Grosu, Alexander. 1974. On the nature of the Left Branch Condition. Linguistic Inquiry 5: 308–19. [Google Scholar]

- Gundel, Jeanette, Nancy Hedberg, and Ron Zacharski. 1993. Cognitive Status and the Form of Referring Expressions in Discourse. Language 69: 274–307. [Google Scholar] [CrossRef]

- Haider, Hubert, and Inger Rosengren. 2003. Scrambling: Nontriggered chain formation in OV languages. Journal of Germanic Linguistics 15: 203–67. [Google Scholar] [CrossRef] [Green Version]

- Hale, John. 2001. A probabilistic Earley parser as a psycholinguistic model. Paper presented at Second Meeting of the North American Chapter of the Association for Computational Linguistics, Morristown, NJ, USA, June 1–7; Stroudsburg, PA: Association for Computational Linguistics, pp. 1–8. [Google Scholar]

- Hale, John. 2003. The information conveyed by words in sentences. Journal of Psycholinguistic Research 32: 101–23. [Google Scholar] [CrossRef]

- Hauser, Marc D., Noam Chomsky, and W. Tecumseh Fitch. 2002. The faculty of language: What is it, who has it, and how did it evolve? Science 298: 1569–79. [Google Scholar] [CrossRef]

- Hawkins, John A. 1994. A Performance Theory of Order and Constituency. Cambridge: Cambridge University Press. [Google Scholar]

- Hawkins, John A. 2004. Efficiency and Complexity in Grammars. Oxford: Oxford University Press. [Google Scholar]

- Hawkins, John A. 2014. Cross-Linguistic Variation and Efficiency. Oxford: Oxford University Press. [Google Scholar]

- Hofmeister, Philip, Peter W. Culicover, and Susanne Winkler. 2015. Effects of processing on the acceptability of frozen extraposed constituents. Syntax 18: 464–83. [Google Scholar] [CrossRef] [Green Version]

- Hofmeister, Philip, T. Florian Jaeger, Inbal Arnon, Ivan Sag, and Neal Snider. 2013. The source ambiguity problem: Distinguishing the effects of grammar and processing on acceptability judgments. Language and Cognitive Processes 28: 48–87. [Google Scholar] [CrossRef] [Green Version]

- Hofmeister, Philip, T. Florian Jaeger, Ivan A. Sag, Inbal Arnon, and Neal Snider. 2007. Locality and accessibility in wh-questions. In Roots: Linguistics in Search of Its Evidential Base. Edited by Sam Featherston and Wolfgang Sternefeld. Berlin: Mouton de Gruyter, pp. 185–206. [Google Scholar]

- Hofmeister, Philip, and Ivan A. Sag. 2010. Cognitive constraints and island effects. Language 86: 366–415. [Google Scholar] [CrossRef] [Green Version]

- Hofmeister, Philip, Laura Staum Casasanto, and Ivan A. Sag. 2013. Islands in the grammar? Standards of evidence. In Experimental Syntax and the Islands Debate. Edited by Jon Sprouse and Norbert Hornstein. Cambridge: Cambridge University Press, pp. 42–63. [Google Scholar]

- Höhle, Tilman. 1982. Explikation für normale Betonung und normale Wortstellung. In Satzglieder im Deutschen. Edited by Werner Abraham. Tübingen: Gunter Narr Verlag, pp. 75–153. [Google Scholar]

- Jackendoff, Ray. 1977. X′ Syntax. Cambridge, MA: MIT Press. [Google Scholar]

- Jackendoff, Ray. 1999. Possible stages in the evolution of the language capacity. Trends in Cognitive Sciences 3: 272–79. [Google Scholar] [CrossRef]

- Jackendoff, Ray. 2002. Foundations of Language: Brain, Meaning, Grammar, Evolution. Oxford: Oxford University Press. [Google Scholar]

- Jackendoff, Ray, and Peter Culicover. 1972. A reconsideration of dative movement. Foundations of Language 7: 397–412. [Google Scholar]

- Jäger, Marion. 2018. An experimental study on freezing and topicalization in English. In Freezing: Theoretical Approaches and Empirical Domains. Edited by Jutta Hartmann, Marion Jäger, Andreas Konietzko and Susanne Winkler. Berlin: De Gruyter, pp. 430–50. [Google Scholar]

- Kaplan, Ronald M., and Annie Zaenen. 1995. Long-distance dependencies, constituent structure, and functional uncertainty. In Formal Issues in Lexical-Functional Grammar. Edited by Mary Dalrymple, Ronald M. Kaplan, John T. Maxwell III and Annie Zaenen. Stanford, CA: CSLI Publications, pp. 137–65. [Google Scholar]

- Kehler, Andrew. 1996. Coherence and the coordinate structure constraint. Annual Meeting of the Berkeley Linguistics Society 22: 220–31. [Google Scholar] [CrossRef] [Green Version]

- Kluender, Robert. 1991. Cognitive Constraints on Variables in Syntax. Ph.D. thesis, University of California, San Diego, La Jolla, CA, USA. [Google Scholar]

- Kluender, Robert. 1992. Deriving island constraints from principles of predication. In Island Constraints: Theory, Acquisition and Processing. Edited by Helen Goodluck and Michael Rochemont. Dordrecht: Kluwer, pp. 223–58. [Google Scholar]

- Kluender, Robert. 1998. On the distinction between strong and weak islands: A processing perspective. In Syntax and Semantics 29: The Limits of Syntax. Edited by Peter W. Culicover and Louise McNally. San Diego: Academic Press, pp. 241–79. [Google Scholar]

- Kluender, Robert. 2004. Are subject islands subject to a processing account? Paper presented at 23rd West Coast Conference on Formal Linguistics, University of California, Davis, CA, USA, April 23–25; Edited by Benjamin Schmeiser, Veneeta Chand, Ann Kelleher and Angelo J. Rodriguez. Somerville: Cascadilla Press, pp. 475–99. [Google Scholar]

- Kluender, Robert, and Marta Kutas. 1993a. Bridging the gap: Evidence from ERPs on the processing of unbounded dependencies. Journal of Cognitive Neuroscience 5: 196–214. [Google Scholar] [CrossRef]

- Kluender, Robert, and Marta Kutas. 1993b. Subjacency as a processing phenomenon. Language and Cognitive Processes 8: 573–633. [Google Scholar] [CrossRef]

- Konietzko, Andreas. forthcoming. PP extraction from subject islands in German. Glossa 7.

- Konietzko, Andreas, Janina Radó, and Susanne Winkler. 2019. Focus constraints on relative clause antecedents in sluicing. In Information Structure and Semantic Processing. Edited by Sam Featherston, Robin Hörnig, Sophie von Wietersheim and Susanne Winkler. Berlin and New York: Mouton de Gruyter, pp. 105–27. [Google Scholar]

- Konietzko, Andreas, Susanne Winkler, and Peter W. Culicover. 2018. Heavy NP shift does not cause freezing. Canadian Journal of Linguistics/Revue Canadienne de Linguistique 63: 454–64. [Google Scholar] [CrossRef]

- Koopman, Hilda, and Dominique Sportiche. 1983. Variables and the bijection principle. The Linguistic Review 2: 139–60. [Google Scholar]

- Kroch, Anthony. 1998. Amount quantification, referentiality, and long wh-movement. In Penn Working Papers in Linguistics, Vol. 5.2. Edited by Alexis Dimitriadis, Hikyoung Lee, Christine Moisset and Alexander Williams. Philadelphia: University of Pennsylvania. [Google Scholar]

- Kubota, Yusuke, and Jungmee Lee. 2015. The coordinate structure constraint as a discourse-oriented principle: Further evidence from Japanese and Korean. Language 91: 642–75. [Google Scholar] [CrossRef]

- Kubota, Yusuke, and Robert D. Levine. 2020. Type-Logical Syntax. Cambridge, MA: MIT Press. [Google Scholar]

- Kuhlmann, Marco, and Joakim Nivre. 2006. Mildly non-projective dependency structures. Paper presented at COLING/ACL 2006, Main Conference Poster Sessions, Sydney, Australia, July; pp. 507–14. [Google Scholar]

- Kuperberg, Gina R., and T. Florian Jaeger. 2016. What do we mean by prediction in language comprehension? Language, Cognition and Neuroscience 31: 32–59. [Google Scholar] [CrossRef] [Green Version]

- Kush, Dave, Terje Lohndal, and Jon Sprouse. 2017. Investigating variation in island effects. Natural Language & Linguistic Theory 36: 1–37. [Google Scholar] [CrossRef] [PubMed]

- Lakoff, George. 1986. Frame semantic control of the Coordinate Structure Constraint. In Proceedings of the Chicago Linguistic Society 22. Edited by Anne M. Farley, Peter T. Farley and Karl-Erik McCullough. Chicago: Chicago Linguistic Society, pp. 152–67. [Google Scholar]

- Lasnik, Howard, and Tim Stowell. 1991. Weakest crossover. Linguistic Inquiry 22: 687–720. [Google Scholar]

- Levine, Robert D. 2017. Syntactic Analysis: An HPSG-Based Approach. Cambridge: Cambridge University Press. [Google Scholar]

- Levine, Robert D., and Thomas Hukari. 2006. The Unity of Unbounded Dependency Constructions. Stanford: CSLI Publications. [Google Scholar]

- Levinson, Stephen C. 1987. Pragmatics and the grammar of anaphora: A partial pragmatic reduction of binding and control phenomena. Journal of Linguistics 23: 379–434. [Google Scholar] [CrossRef] [Green Version]

- Levinson, Stephen C. 1991. Pragmatic reduction of the binding conditions revisited. Journal of Linguistics 27: 107–61. [Google Scholar] [CrossRef] [Green Version]

- Levy, Roger. 2005. Probabilistic Models of Word Order and Syntactic Discontinuity. Ph.D. thesis, Stanford University, Stanford, CA, USA. [Google Scholar]

- Levy, Roger. 2008. Expectation-based syntactic comprehension. Cognition 106: 1126–77. [Google Scholar] [CrossRef] [Green Version]

- Levy, Roger. 2013. Memory and surprisal in human sentence comprehension. In Sentence Processing. London: Psychology Press, pp. 78–114. [Google Scholar]

- Levy, Roger, and T. Florian Jaeger. 2007. Speakers optimize information density through syntactic reduction. Advances in Neural Information Processing Systems 19: 849. [Google Scholar]

- Levy, Roger P., and Frank Keller. 2013. Expectation and locality effects in German verb-final structures. Journal of Memory and Language 68: 199–222. [Google Scholar] [CrossRef] [Green Version]

- Lewis, Richard. 1993. An architecturally-based theory of human sentence comprehension. Paper presented at 15th Annual Conference of the Cognitive Science Society, Boulder, CO, USA, June 18–21; Mahwah: Erlbaum, pp. 108–13. [Google Scholar]

- Lewis, Richard. 1996. Interference in short-term memory: The magical number two (or three) in sentence processing. Journal of Psycholinguistic Research 25: 93–115. [Google Scholar] [CrossRef]

- Lewis, Richard, and Shravan Vasishth. 2005. An activation-based model of sentence processing as skilled memory retrieval. Cognitive Science 29: 1–45. [Google Scholar] [CrossRef] [Green Version]

- Lewis, Richard, Shravan Vasishth, and Julie Van Dyke. 2006. Computational principles of working memory in sentence comprehension. Trends in Cognitive Sciences 10: 447–54. [Google Scholar] [CrossRef] [Green Version]

- Liu, Haitao. 2008. Dependency distance as a metric of language comprehension difficulty. Journal of Cognitive Science 9: 159–91. [Google Scholar]

- Liu, Haitao, Chunshan Xu, and Junying Liang. 2017. Dependency distance: A new perspective on syntactic patterns in natural languages. Physics of Life Reviews 21: 171–93. [Google Scholar] [CrossRef] [PubMed]

- Michaelis, Laura A. 2012. Making the case for construction grammar. In Sign-Based Construction Grammar. Edited by Hans C. Boas and Ivan A. Sag. Stanford: CSLI Publications, pp. 31–68. [Google Scholar]

- Miller, George, and Noam Chomsky. 1963. Finitary models of language users. In Handbook of Mathematical Psychology. Edited by R. Duncan Luce, Robert R. Bush and Eugene Galanter. New York: Wiley, vol. 2, pp. 419–92. [Google Scholar]

- Müller, Gereon. 2010. On deriving CED effects from the PIC. Linguistic Inquiry 41: 35–82. [Google Scholar] [CrossRef] [Green Version]

- Müller, Gereon. 2018. Freezing in complex pre-fields. In Freezing: Theoretical Approaches and Empirical Domains. Edited by Jutta Hartmann, Marion Jäger, Andreas Konietzko and Susanne Winkler. Berlin: De Gruyter, pp. 105–39. [Google Scholar]

- Nakamura, Michiko, and Edson T. Miyamoto. 2013. The object before subject bias and the processing of double-gap relative clauses in Japanese. Language and Cognitive Processes 28: 303–34. [Google Scholar] [CrossRef]

- Newmeyer, Frederick J. 2016. Nonsyntactic explanations of island constraints. Annual Review of Linguistics 2: 187–210. [Google Scholar] [CrossRef]

- Nunes, Jairo, and Juan Uriagereka. 2000. Cyclicity and extraction domains. Syntax 3: 20–43. [Google Scholar] [CrossRef]

- Oda, Hiromune. 2017. Two types of the Coordinate Structure Constraint and rescue by PF deletion. Proceedings of the North East Linguistic Society 47: 343–56. [Google Scholar]

- Oda, Hiromune. 2021. Decomposing and deducing the coordinate structure constraint. The Linguistic Review 38: 605–44. [Google Scholar] [CrossRef]

- O’Grady, William, Miseon Lee, and Miho Choo. 2003. A subject-object asymmetry in the acquisition of relative clauses in Korean as a second language. Studies in Second Language Acquisition 25: 433–48. [Google Scholar] [CrossRef]

- Park, Kwonsik, Myung-Kwan Park, and Sanghoun Song. 2021. Deep learning can contrast the minimal pairs of syntactic data. Linguistic Research 38: 395–424. [Google Scholar]

- Pesetsky, David. 1982. Paths and Categories. Ph.D. thesis, Massachusetts Institute of Technology, Cambridge, MA, USA. [Google Scholar]

- Pesetsky, David. 1987. Wh-in-situ: Movement and unselective binding. In The Representation of (In)definiteness. Edited by Eric J. Reuland and Alice G. B. ter Meulen. Cambridge, MA: MIT Press, pp. 98–129. [Google Scholar]

- Pesetsky, David. 1998. Some optimality principles of sentence pronunciation. In Is the Best Good Enough? Edited by Pilar Barbosa, Danny Fox, Paul Hagstrom, Martha McGinnis and David Pesetsky. Cambridge, MA: MIT Press, pp. 337–84. [Google Scholar]

- Pesetsky, David. 2000. Phrasal Movement and Its Kin. Cambridge, MA: MIT Press. [Google Scholar]

- Phillips, Colin. 2006. The real-time status of island phenomena. Language 82: 795–823. [Google Scholar] [CrossRef]

- Phillips, Colin. 2013a. On the nature of island constraints I: Language processing and reductionist accounts. In Experimental Syntax and Island Effects. Edited by Jon Sprouse and Norbert Hornstein. Cambridge: Cambridge University Press, pp. 64–108. [Google Scholar]

- Phillips, Colin. 2013b. Some arguments and non-arguments for reductionist accounts of syntactic phenomena. Language and Cognitive Processes 28: 156–87. [Google Scholar] [CrossRef]

- Phillips, Colin, Matthew W. Wagers, and Ellen F. Lau. 2011. Grammatical illusions and selective fallibility in real-time language comprehension. In Experiments at the Interfaces. Bingley: Emerald, vol. 37, pp. 147–80. [Google Scholar]

- Pickering, Martin, and Guy Barry. 1991. Sentence processing without empty categories. Language and Cognitive Processes 6: 229–59. [Google Scholar] [CrossRef]

- Pinker, Steven, and Paul Bloom. 1990. Natural language and natural selection. Behavioral and Brain Sciences 13: 707–27. [Google Scholar] [CrossRef] [Green Version]

- Polinsky, Maria, Carlos G. Gallo, Peter Graff, Ekaterina Kravtchenko, Adam Milton Morgan, and Anne Sturgeon. 2013. Subject islands are different. In Experimental Syntax and Island Effects. Edited by Jon Sprouse and Norbert Hornstein. Cambridge: Cambridge University Press, pp. 286–309. [Google Scholar]

- Pollard, Carl, and Ivan A. Sag. 1994. Head-Driven Phrase Structure Grammar. Chicago: University of Chicago Press and CSLI Publications. [Google Scholar]

- Postal, Paul. 1971. Crossover Phenomena. New York: Holt, Rinehart and Winston. [Google Scholar]

- Pritchett, Bradley L. 1988. Garden path phenomena and the grammatical basis of language processing. Language 64: 539–76. [Google Scholar] [CrossRef]

- Pritchett, Bradley L. 1992. Grammatical Competence and Parsing Performance. Chicago: University of Chicago Press. [Google Scholar]

- Progovac, Ljiljana. 2016. A gradualist scenario for language evolution: Precise linguistic reconstruction of early human (and neandertal) grammars. Frontiers in Psychology 7: 1714. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Pullum, Geoffrey K. 2019. What grammars are, or ought to be. Paper presented at 26th International Conference on Head-Driven Phrase Structure Grammar, București, Romania, July 25–26; Edited by Stefan Müller and Petya Osenova. Stanford: CSLI Publications, pp. 58–79. [Google Scholar]

- Rajkumar, Rajakrishnan, Marten van Schijndel, Michael White, and William Schuler. 2016. Investigating locality effects and surprisal in written English syntactic choice phenomena. Cognition 155: 204–32. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Reinhart, Tanya, and Eric Reuland. 1991. Anaphors and logophors: An argument structure perspective. In Long-Distance Anaphora. Edited by Jan Koster and Eric Reuland. Cambridge: Cambridge University Press, pp. 283–322. [Google Scholar]

- Reis, Marga. 1993. Wortstellung und Informationsstruktur. Berlin: Walter de Gruyter. [Google Scholar]

- Rizzi, Luigi. 1990. Relativized Minimality. Cambridge, MA: MIT Press. [Google Scholar]

- Roberts, Craige. 2012. Information structure: Towards an integrated formal theory of pragmatics. Semantics and Pragmatics 5 (6): 1–69. [Google Scholar] [CrossRef] [Green Version]

- Rochemont, Michael. 1989. Topic islands and the subjacency parameter. Canadian Journal of Linguistics/Revue Canadienne de Linguistique 34: 145–70. [Google Scholar] [CrossRef]

- Rochemont, Michael, and Peter W. Culicover. 1990. English Focus Constructions and the Theory of Grammar. Cambridge: Cambridge University Press. [Google Scholar]

- Ross, John R. 1967. Constraints on Variables in Syntax. Ph.D. thesis, MIT, Cambridge, MA, USA. [Google Scholar]

- Ross, John R. 1987. Islands and syntactic prototypes. Chicago Linguistic Society Papers 23: 309–20. [Google Scholar]

- Sabel, Joachim. 2002. A minimalist analysis of syntactic islands. Linguistic Review 19: 271–315. [Google Scholar] [CrossRef]

- Safir, Ken. 2017. Weak crossover. In The Wiley Blackwell Companion to Syntax. Edited by Martin Everaert and Henk van Riemsdijk. Hoboken, NJ: Wiley Online Library, pp. 1–40. [Google Scholar]

- Sag, Ivan A. 1997. English relative clause constructions. Journal of Linguistics 33: 431–84. [Google Scholar] [CrossRef] [Green Version]

- Sag, Ivan A. 2012. Sign-based construction grammar—A synopsis. In Sign-Based Construction Grammar. Edited by Hans C. Boas and Ivan A. Sag. Stanford: CSLI Publications, pp. 61–197. [Google Scholar]

- Sag, Ivan A., Inbal Arnon, Bruno Estigarribia, Philip Hofmeister, T. Florian Jaeger, Jeanette Pettibone, and Neal Snider. 2006. Processing Accounts for Superiority Effects. Stanford, CA: Stanford University, Unpublished ms. [Google Scholar]

- Sag, Ivan A., and Janet D. Fodor. 1994. Extraction without traces. In Proceedings of the Thirteenth West Coast Conference on Formal Linguistics, Stanford University. Edited by Raul Aranovich, William Byrne, Susanne Preuss and Martha Senturia. Stanford University: CSLI Publications, pp. 365–84. [Google Scholar]

- Sag, Ivan A., Philip Hofmeister, and Neal Snider. 2007. Processing complexity in Subjacency violations: The complex noun phrase constraint. Paper presented at 43rd Annual Meeting of the Chicago Linguistic Society, Chicago, IL, May 3–5; Chicago: University of Chicago. [Google Scholar]

- Sauerland, Uli. 1999. Erasability and interpretation. Syntax 2: 161–88. [Google Scholar] [CrossRef] [Green Version]

- Selkirk, Elisabeth. 2011. The syntax-phonology interface. In The Handbook of Phonological Theory. Edited by John A. Goldsmith, Jason Riggle and Alan C. L. Yu. Oxford: Wiley-Blackwell, vol. 2, pp. 435–83. [Google Scholar]

- Shain, Cory, Idan Asher Blank, Marten van Schijndel, William Schuler, and Evelina Fedorenko. 2020. fMRI reveals language-specific predictive coding during naturalistic sentence comprehension. Neuropsychologia 138: 107307. [Google Scholar] [CrossRef] [PubMed]

- Shan, Chung-Chieh, and Chris Barker. 2006. Explaining crossover and superiority as left-to-right evaluation. Linguistics and Philosophy 29: 91–134. [Google Scholar] [CrossRef]

- Sprouse, Jon. 2007. Continuous acceptability, categorical grammaticality, and experimental syntax. Biolinguistics 1: 118–29. [Google Scholar] [CrossRef]

- Sprouse, Jon, Matt Wagers, and Colin Phillips. 2012a. A test of the relation between working-memory capacity and syntactic island effects. Language 88: 82–123. [Google Scholar] [CrossRef]

- Sprouse, Jon, Matt Wagers, and Colin Phillips. 2012b. Working-memory capacity and island effects: A reminder of the issues and the facts. Language 88: 401–7. [Google Scholar] [CrossRef]

- Staum Casasanto, Laura, Philip Hofmeister, and Ivan A. Sag. 2010. Understanding acceptability judgments: Distinguishing the effects of grammar and processing on acceptability judgments. Paper presented at 32nd Annual Conference of the Cognitive Science Society, Portland, OR, USA, August 11–14; Edited by Stellan Ohlsson and Richard Catrambone. Austin: Cognitive Science Society, pp. 224–29. [Google Scholar]

- Szabolcsi, Anna, and Terje Lohndal. 2017. Strong vs. weak islands. In The Wiley Blackwell Companion to Syntax, 2nd ed. Edited by Martin Everaert and Henk van Riemsdijk. New York: John Wiley. [Google Scholar]

- Temperley, David. 2007. Minimization of dependency length in written English. Cognition 105: 300–33. [Google Scholar] [CrossRef]

- Trotzke, Andreas, Markus Bader, and Lyn Frazier. 2013. Third factors and the performance interface in language design. Biolinguistics 7: 1–34. [Google Scholar] [CrossRef]

- Truckenbrodt, Hubert. 1995. Phonological Phrases: Their Relation to Syntax, Focus, and Prominence. Ph.D. thesis, MIT, Cambridge, MA, USA. [Google Scholar]

- Ueno, Mieko, and Susan M. Garnsey. 2008. An ERP study of the processing of subject and object relative clauses in Japanese. Language and Cognitive Processes 23: 646–88. [Google Scholar] [CrossRef]

- van Dyke, Julie, and Richard Lewis. 2003. Distinguishing effects of structure and decay on attachment and repair: A cue-based parsing account of recovery from misanalyzed ambiguities. Journal of Memory and Language 49: 285–316. [Google Scholar] [CrossRef] [Green Version]

- van Schijndel, Marten, Andy Exley, and William Schuler. 2013. A model of language processing as hierarchic sequential prediction. Topics in Cognitive Science 5: 522–40. [Google Scholar] [CrossRef] [PubMed]

- Varaschin, Giuseppe. 2021. A Simpler Syntax of Anaphora. Ph.D. thesis, Universidade Federal de Santa Catarina, Florianópolis, Brazil. [Google Scholar]

- Varaschin, Giuseppe, Peter W. Culicover, and Susanne Winkler. 2022. In pursuit of condition C. In Information Structure and Discourse in Generative Grammar: Mechanisms and Processes. Edited by Andreas Konietzko and Susanne Winkler. Berlin: Walter de Gruyter, to appear. [Google Scholar]

- Vasishth, Shravan, and Richard L. Lewis. 2006. Argument-head distance and processing complexity: Explaining both locality and antilocality effects. Language 82: 767–94. [Google Scholar] [CrossRef]

- Vasishth, Shravan, Bruno Nicenboim, Felix Engelmann, and Frank Burchert. 2019. Computational models of retrieval processes in sentence processing. Trends in Cognitive Sciences 23: 968–82. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Villata, Sandra, Luigi Rizzi, and Julie Franck. 2016. Intervention effects and Relativized Minimality: New experimental evidence from graded judgments. Lingua 179: 76–96. [Google Scholar] [CrossRef] [Green Version]

- Warren, Tessa, and Edward Gibson. 2002. The influence of referential processing on sentence complexity. Cognition 85: 79–112. [Google Scholar] [CrossRef]

- Wasow, Thomas. 1979. Anaphora in Generative Grammar. Amsterdam: John Benjamins Publishing Company. [Google Scholar]

- Wexler, Kenneth, and Peter W. Culicover. 1980. Formal Principles of Language Acquisition. Cambridge, MA: MIT Press. [Google Scholar]

- Winkler, Susanne, Janina Radó, and Marian Gutscher. 2016. What determines ‘freezing’ effects in was-für split constructions? In Firm Foundations: Quantitative Approaches to Grammar and Grammatical Change. Edited by Sam Featherston and Yannick Versley. Boston and Berlin: Walter de Gruyter, pp. 207–31. [Google Scholar]

- Yadav, Himanshu, Samar Husain, and Richard Futrell. 2021. Do dependency lengths explain constraints on crossing dependencies? Linguistics Vanguard 7: 1–7. [Google Scholar] [CrossRef]

- Zwicky, Arnold M. 1986. The unaccented pronoun constraint in English. Ohio State University Working Papers in Linguistics 32: 100–13. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Culicover, P.W.; Varaschin, G.; Winkler, S. The Radical Unacceptability Hypothesis: Accounting for Unacceptability without Universal Constraints. Languages 2022, 7, 96. https://doi.org/10.3390/languages7020096
