Arousal States as a Key Source of Variability in Speech Perception and Learning

Abstract

1. Introduction

2. The Physiology of Arousal States

3. Emergence of Non-Native Speech Category Representations in Adulthood

4. Moment-to-Moment Variability in Speech Perception and Acquisition

4.1. Behavioral Evidence for Stimulus- and Task-Independent Variability

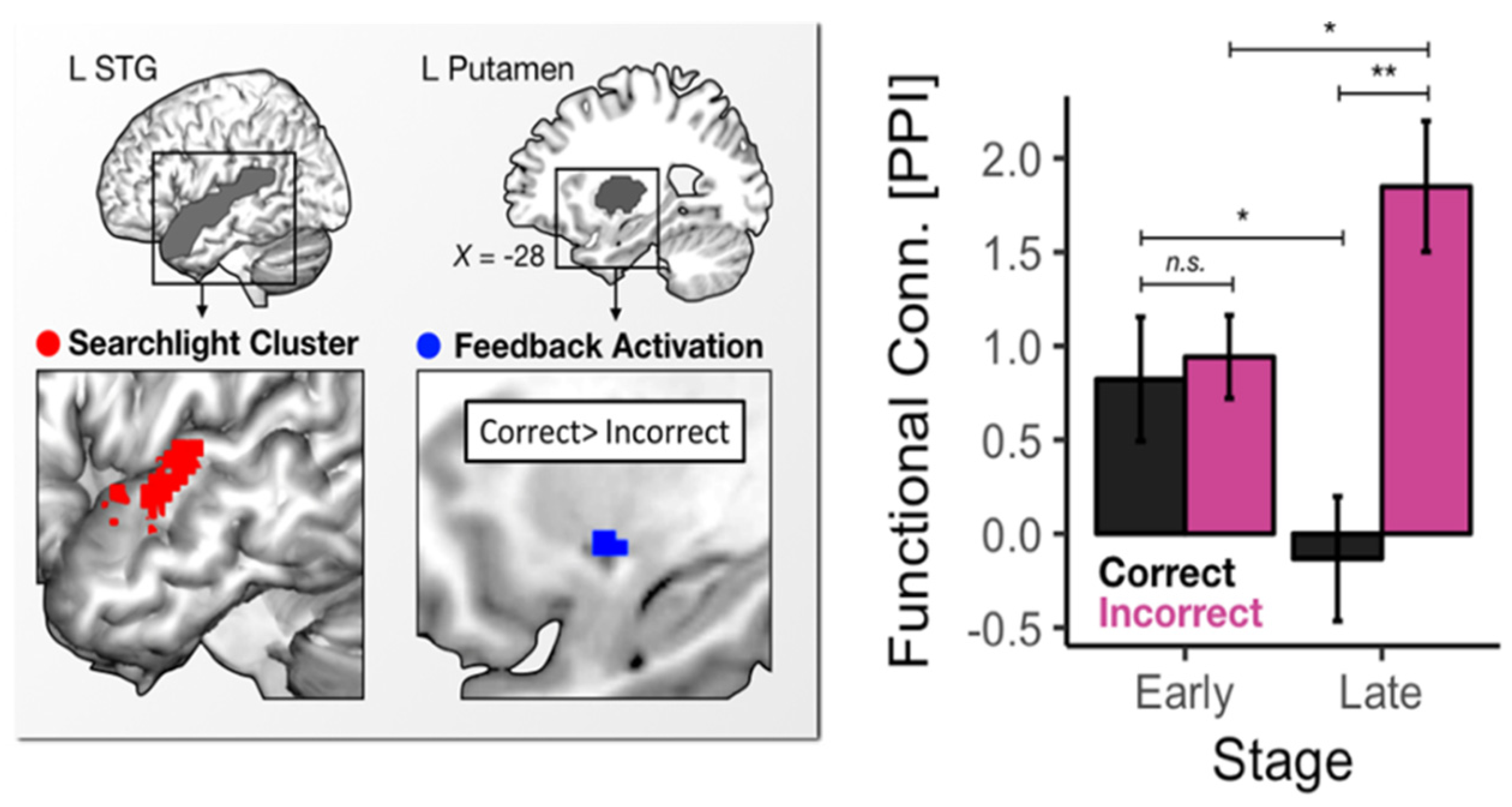

4.2. Neural Evidence for Arousal-Related Variability

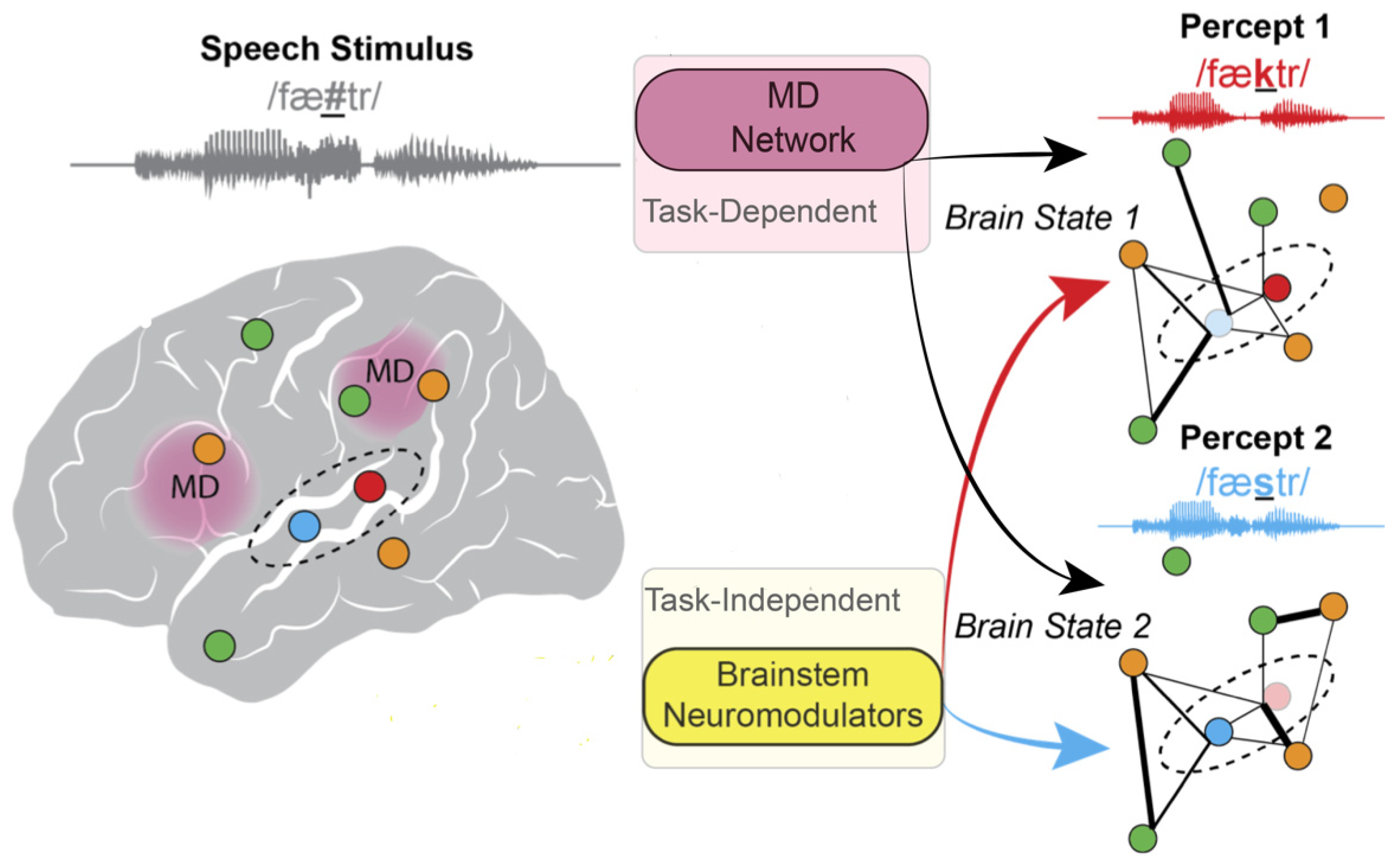

4.3. Cortical State-Dependent Perception and Behavior

5. Arousal States Modulate Brain States That Influence Perception

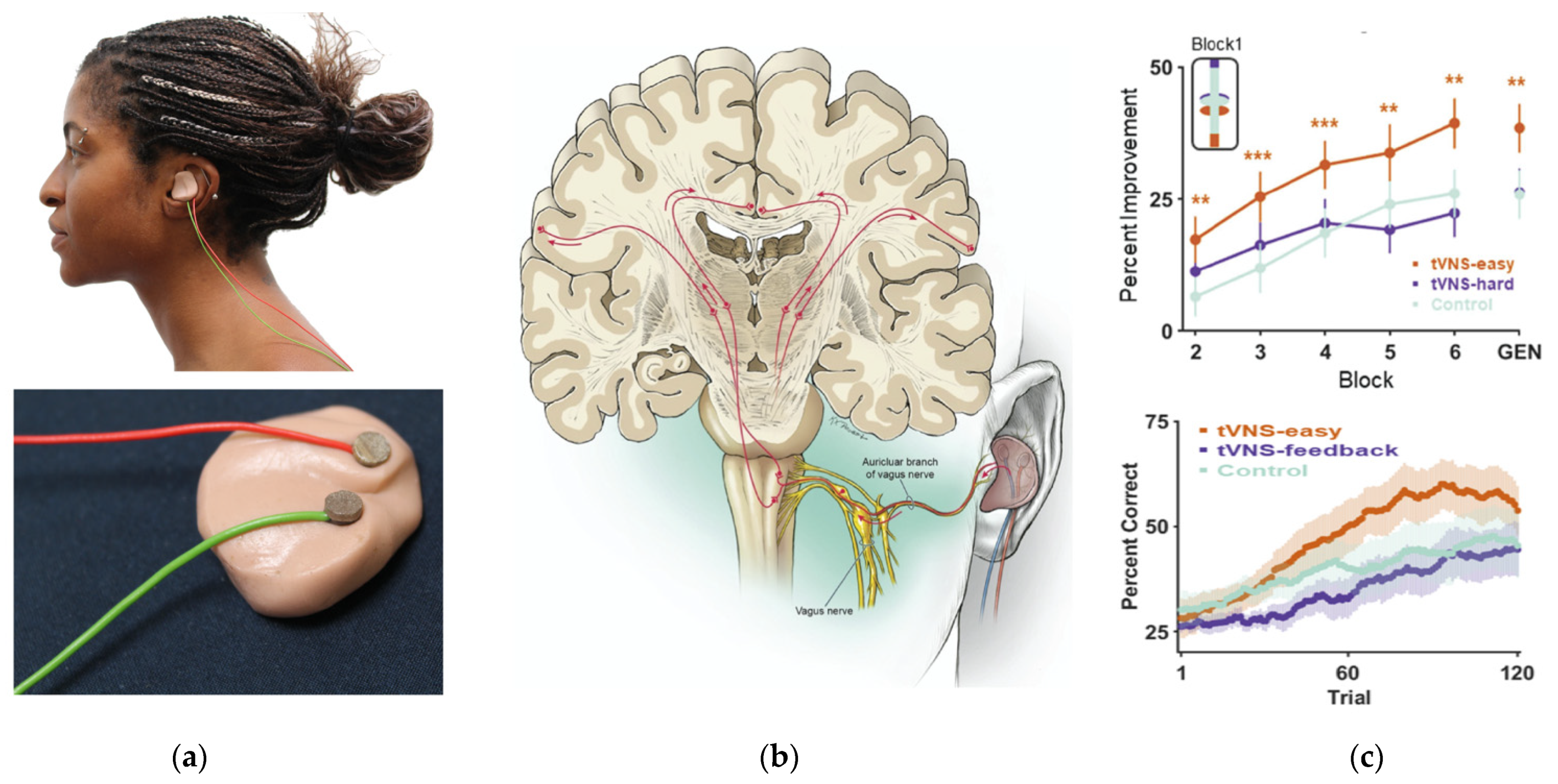

6. Using Non-Invasive Vagus Nerve Stimulation to Study the Effects of Arousal in Speech Perception and Learning

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Adair, Devin, Dennis Truong, Zeinab Esmaeilpour, Nigel Gebodh, Helen Borges, Libby Ho, and J. Douglas Bremner. 2020. Electrical stimulation of cranial nerves in cognition and disease. Brain Stimulation 13: 717–50.

- Aston-Jones, Gary, and Jonathan D. Cohen. 2005. An integrative theory of locus coeruleus-norepinephrine function: Adaptive gain and optimal performance. Annual Review of Neuroscience 28: 403–50.

- Badran, Bashar W., Oliver J. Mithoefer, Caroline E. Summer, Nicholas T. LaBate, Chloe E. Glusman, Alan W. Badran, and William H. DeVries. 2018. Short trains of transcutaneous auricular vagus nerve stimulation (taVNS) have parameter-specific effects on heart rate. Brain Stimulation 11: 699–708.

- Bari, Ausaf A., and Nader Pouratian. 2012. Brain imaging correlates of peripheral nerve stimulation. Surgical Neurology International 3: 260.

- Berridge, Craig W., and Barry D. Waterhouse. 2003. The locus coeruleus–noradrenergic system: Modulation of behavioral state and state-dependent cognitive processes. Brain Research Reviews 42: 33–84.

- Birdsong, David. 2018. Plasticity, variability and age in second language acquisition and bilingualism. Frontiers in Psychology 9: 81.

- Birdsong, David, and Jan Vanhove. 2016. Age of second language acquisition: Critical periods and social concerns. In Bilingualism across the Lifespan: Factors Moderating Language Proficiency. Language and the Human Lifespan Series; Washington, DC: American Psychological Association, pp. 163–81.

- Bradlow, Ann R. 2008. Training non-native language sound patterns: Lessons from training Japanese adults on the English /r/-/l/ contrast. In Phonology and Second Language Acquisition. Edited by Jette G. Hansen Edwards and Mary L. Zampini. Amsterdam: John Benjamins Publishing Company, pp. 287–308.

- Bradlow, Ann R., and Tessa Bent. 2008. Perceptual adaptation to non-native speech. Cognition 106: 707–29.

- Breton-Provencher, Vincent, and Mriganka Sur. 2019. Active control of arousal by a locus coeruleus GABAergic circuit. Nature Neuroscience 22: 218–28.

- Brodbeck, Christian, Alex Jiao, L. Elliot Hong, and Jonathan Z. Simon. 2020. Neural speech restoration at the cocktail party: Auditory cortex recovers masked speech of both attended and ignored speakers. PLOS Biology 18: e3000883.

- Brosseau-Lapré, Françoise, Susan Rvachew, Meghan Clayards, and Daniel Dickson. 2013. Stimulus variability and perceptual learning of nonnative vowel categories. Applied Psycholinguistics 34: 419–41.

- Brouwer, Susanne, Holger Mitterer, and Falk Huettig. 2013. Discourse context and the recognition of reduced and canonical spoken words. Applied Psycholinguistics 34: 519–39.

- Burger, Andreas Michael, Willem A. J. van der Does, Jos F. Brosschot, and Bart Verkuil. 2020. From ear to eye? No effect of transcutaneous vagus nerve stimulation on human pupil dilation: A report of three studies. Biological Psychology 152: 107863.

- Butt, Mohsin F., Ahmed Albusoda, Adam D. Farmer, and Qasim Aziz. 2019. The anatomical basis for transcutaneous auricular vagus nerve stimulation. Journal of Anatomy 236: 588–611.

- Cakmak, Yusuf Ozgur. 2019. Concerning auricular vagal nerve stimulation: Occult neural networks. Frontiers in Human Neuroscience 13: 421.

- Campbell, Ruth. 2008. The processing of audio-visual speech: Empirical and neural bases. Philosophical Transactions of the Royal Society B: Biological Sciences 363: 1001–10.

- Cao, Jiayue, Kun-Han Lu, Terry L. Powley, and Zhongming Liu. 2017. Vagal nerve stimulation triggers widespread responses and alters large-scale functional connectivity in the rat brain. PLoS ONE 12: e0189518.

- Cardin, Jessica A., and Marc F. Schmidt. 2003. Song system auditory responses are stable and highly tuned during sedation, rapidly modulated and unselective during wakefulness, and suppressed by arousal. Journal of Neurophysiology 90: 2884–99.

- Cardin, Jessica A., and Marc F. Schmidt. 2004. Noradrenergic inputs mediate state dependence of auditory responses in the avian song system. Journal of Neuroscience 24: 7745–53.

- Chandrasekaran, Bharath, Han-Gyol Yi, Kirsten E. Smayda, and W. Todd Maddox. 2016. Effect of explicit dimensional instruction on speech category learning. Attention, Perception, & Psychophysics 78: 566–82.

- Chandrasekaran, Bharath, Padma D. Sampath, and Patrick C. M. Wong. 2010. Individual variability in cue-weighting and lexical tone learning. The Journal of the Acoustical Society of America 128: 456–65.

- Chandrasekaran, Bharath, Seth R. Koslov, and W. Todd Maddox. 2014. Toward a dual-learning systems model of speech category learning. Frontiers in Psychology 5: 825.

- Chang, Edward F. 2015. Towards large-scale, human-based, mesoscopic neurotechnologies. Neuron 86: 68–78.

- Collins, Lindsay, Laura Boddington, Paul J. Steffan, and David McCormick. 2021. Vagus nerve stimulation induces widespread cortical and behavioral activation. Current Biology 31: 2088–98.

- Coull, Jennifer T. 1998. Neural correlates of attention and arousal: Insights from electrophysiology, functional neuroimaging and psychopharmacology. Progress in Neurobiology 55: 343–61.

- Coull, Jennifer T., Christian Büchel, Karl J. Friston, and Chris D. Frith. 1999. Noradrenergically mediated plasticity in a human attentional neuronal network. NeuroImage 10: 705–15.

- D’Agostini, Martina, Andreas M. Burger, Mathijs Franssen, Nathalie Claes, Mathias Weymar, Andreas von Leupoldt, and Ilse Van Diest. 2021. Effects of transcutaneous auricular vagus nerve stimulation on reversal learning, tonic pupil size, salivary alpha-amylase, and cortisol. Psychophysiology 58: e13885.

- Darrow, Michael J., Miranda Torres, Maria J. Sosa, Tanya T. Danaphongse, Zainab Haider, Robert L. Rennaker, Michael P. Kilgard, and Seth A. Hays. 2020. Vagus nerve stimulation paired with rehabilitative training enhances motor recovery after bilateral spinal cord injury to cervical forelimb motor pools. Neurorehabilitation and Neural Repair 34: 200–209.

- Davis, Matthew H., and Ingrid S. Johnsrude. 2007. Hearing speech sounds: Top-down influences on the interface between audition and speech perception. Hearing Research 229: 132–47.

- Davis, Matthew H., Ingrid S. Johnsrude, Alexis Hervais-Adelman, Karen Taylor, and Carolyn McGettigan. 2005. Lexical information drives perceptual learning of distorted speech: Evidence from the comprehension of noise-vocoded sentences. Journal of Experimental Psychology: General 134: 222–41.

- de Gee, Jan Willem, Konstantinos Tsetsos, Lars Schwabe, Anne E. Urai, David McCormick, Matthew J. McGinley, and Tobias H. Donner. 2020. Pupil-linked phasic arousal predicts a reduction of choice bias across species and decision domains. eLife 9: e54014.

- de Gee, Jan Willem, Olympia Colizoli, Niels A. Kloosterman, Tomas Knapen, Sander Nieuwenhuis, and Tobias H. Donner. 2017. Dynamic modulation of decision biases by brainstem arousal systems. Edited by Klaas Enno Stephan. eLife 6: e23232.

- Desbeaumes Jodoin, Véronique, Paul Lespérance, Dang K. Nguyen, Marie-Pierre Fournier-Gosselin, and Francois Richer. 2015. Effects of vagus nerve stimulation on pupillary function. International Journal of Psychophysiology 98: 455–59.

- Diachek, Evgeniia, Idan Blank, Matthew Siegelman, Josef Affourtit, and Evelina Fedorenko. 2020. The domain-general Multiple Demand (MD) network does not support core aspects of language comprehension: A large-scale fMRI investigation. Journal of Neuroscience 40: 4536–50.

- Ding, Nai, and Jonathan Z. Simon. 2012. Emergence of neural encoding of auditory objects while listening to competing speakers. Proceedings of the National Academy of Sciences of USA 109: 11854–59.

- Dugué, Laura, Philippe Marque, and Rufin VanRullen. 2011. The phase of ongoing oscillations mediates the causal relation between brain excitation and visual perception. Journal of Neuroscience 31: 11889–93.

- Duncan, John. 2010. The multiple-demand (MD) system of the primate brain: Mental programs for intelligent behaviour. Trends in Cognitive Sciences 14: 172–79.

- Engineer, Navzer D., Jonathan R. Riley, Jonathan D. Seale, Will A. Vrana, Jai A. Shetake, Sindhu P. Sudanagunta, Michael S. Borland, and Michael P. Kilgard. 2011. Reversing pathological neural activity using targeted plasticity. Nature 470: 101–4.

- Engineer, Crystal T., Navzer D. Engineer, Jonathan R. Riley, Jonathan D. Seale, and Michael P. Kilgard. 2015. Pairing speech sounds with vagus nerve stimulation drives stimulus-specific cortical plasticity. Brain Stimulation 8: 637–44.

- Engineer, Navzer D., Teresa J. Kimberley, Cecília N. Prudente, Jesse Dawson, W. Brent Tarver, and Seth A. Hays. 2019. Targeted vagus nerve stimulation for rehabilitation after stroke. Frontiers in Neuroscience 13: 280.

- Erb, Julia, Molly J. Henry, Frank Eisner, and Jonas Obleser. 2013. The brain dynamics of rapid perceptual adaptation to adverse listening conditions. Journal of Neuroscience 33: 10688–97.

- Evans, Samuel, and Matthew H. Davis. 2015. Hierarchical organization of auditory and motor representations in speech perception: Evidence from searchlight similarity analysis. Cerebral Cortex 25: 4772–88.

- Faisal, A. Aldo, Luc P. J. Selen, and Daniel M. Wolpert. 2008. Noise in the nervous system. Nature Reviews Neuroscience 9: 292–303.

- Fang, Jiliang, Peijing Rong, Yang Hong, Yangyang Fan, Jun Liu, Honghong Wang, and Guolei Zhang. 2016. Transcutaneous vagus nerve stimulation modulates default mode network in major depressive disorder. Biological Psychiatry 79: 266–73.

- Feng, Gangyi, Han Gyol Yi, and Bharath Chandrasekaran. 2019. The role of the human auditory corticostriatal network in speech learning. Cerebral Cortex 29: 4077–89.

- Feng, Gangyi, Zhenzhong Gan, Fernando Llanos, Danting Meng, Suiping Wang, Patrick C. M. Wong, and Bharath Chandrasekaran. 2021. A distributed dynamic brain network mediates linguistic tone representation and categorization. NeuroImage 224: 117410.

- Finn, Amy Sue, Carla L. Hudson Kam, Marc Ettlinger, Jason Vytlacil, and Mark D’Esposito. 2013. Learning language with the wrong neural scaffolding: The cost of neural commitment to sounds. Frontiers in Systems Neuroscience 7: 85.

- Fox, Michael D., Abraham Z. Snyder, Justin L. Vincent, and Marcus E. Raichle. 2007. Intrinsic fluctuations within cortical systems account for intertrial variability in human behavior. Neuron 56: 171–84.

- Frangos, Eleni, Jens Ellrich, and Barry R. Komisaruk. 2015. Non-invasive access to the vagus nerve central projections via electrical stimulation of the external ear: fMRI evidence in humans. Brain Stimulation 8: 624–36.

- Friederici, Angela D. 2012. The cortical language circuit: From auditory perception to sentence comprehension. Trends in Cognitive Sciences 16: 262–68.

- Ganong, William F. 1980. Phonetic categorization in auditory word perception. Journal of Experimental Psychology: Human Perception and Performance 6: 110–25.

- Gilzenrat, Mark S., Sander Nieuwenhuis, Marieke Jepma, and Jonathan D. Cohen. 2010. Pupil diameter tracks changes in control state predicted by the adaptive gain theory of locus coeruleus function. Cognitive, Affective, & Behavioral Neuroscience 10: 252–69.

- Ginn, Claire, Bipin Patel, and Robert Walker. 2019. Existing and emerging applications for the neuromodulation of nerve activity through targeted delivery of electric stimuli. International Journal of Neuroscience 129: 1013–23.

- Golestani, Narly, and Robert J. Zatorre. 2009. Individual differences in the acquisition of second language phonology. Brain and Language 109: 55–67.

- Guediche, Sara, Sheila Blumstein, Julie Fiez, and Lori Holt. 2014. Speech perception under adverse conditions: Insights from behavioral, computational, and neuroscience research. Frontiers in Systems Neuroscience 7: 126.

- Harris, Kenneth D., and Alexander Thiele. 2011. Cortical state and attention. Nature Reviews Neuroscience 12: 509–23.

- Hasson, Uri, Giovanna Egidi, Marco Marelli, and Roel M. Willems. 2018. Grounding the neurobiology of language in first principles: The necessity of non-language-centric explanations for language comprehension. Cognition 180: 135–57.

- Heald, Shannon, and Howard Charles Nusbaum. 2014. Speech perception as an active cognitive process. Frontiers in Systems Neuroscience 8: 1–15.

- Holdgraf, Christopher R., Wendy de Heer, Brian Pasley, Jochem Rieger, Nathan Crone, Jack J. Lin, Robert T. Knight, and Frédéric E. Theunissen. 2016. Rapid tuning shifts in human auditory cortex enhance speech intelligibility. Nature Communications 7: 13654.

- Hulsey, Daniel R., Jonathan R. Riley, Kristofer W. Loerwald, Robert L. Rennaker II, Michael P. Kilgard, and Seth A. Hays. 2017. Parametric characterization of neural activity in the locus coeruleus in response to vagus nerve stimulation. Experimental Neurology 289: 21–30.

- Hulsey, Daniel R., Seth A. Hays, Navid Khodaparast, Andrea Ruiz, Priyanka Das, Robert L. Rennaker II, and Michael P. Kilgard. 2016. Reorganization of motor cortex by vagus nerve stimulation requires cholinergic innervation. Brain Stimulation 9: 174–81.

- Huyck, Julia Jones, and Ingrid S. Johnsrude. 2012. Rapid perceptual learning of noise-vocoded speech requires attention. The Journal of the Acoustical Society of America 131: EL236–42.

- Joshi, Siddhartha, Yin Li, Rishi Kalwani, and Joshua I. Gold. 2016. Relationships between pupil diameter and neuronal activity in the locus coeruleus, colliculi, and cingulate cortex. Neuron 89: 221–34.

- Kahneman, Daniel, and Jackson Beatty. 1966. Pupil diameter and load on memory. Science 154: 1583–85.

- Kang, Shinae, Keith Johnson, and Gregory Finley. 2016. Effects of native language on compensation for coarticulation. Speech Communication 77: 84–100.

- Kaniusas, Eugenijus, Stefan Kampusch, Marc Tittgemeyer, Fivos Panetsos, Raquel Fernandez Gines, Michele Papa, Attila Kiss, Bruno Podesser, Antonino Mario Cassara, Emmeric Tanghe, and et al. 2019a. Current directions in the auricular vagus nerve stimulation I—A physiological perspective. Frontiers in Neuroscience 13: 1–23.

- Kaniusas, Eugenijus, Stefan Kampusch, Marc Tittgemeyer, Fivos Panetsos, Raquel Fernandez Gines, Michele Papa, Attila Kiss, Bruno Podesser, Antonino Mario Cassara, Emmeric Tanghe, and et al. 2019b. Current directions in the auricular vagus nerve stimulation II—An engineering perspective. Frontiers in Neuroscience 13: 1–16.

- Khoshkhoo, Sattar, Matthew K. Leonard, Nima Mesgarani, and Edward F. Chang. 2018. Neural correlates of sine-wave speech intelligibility in human frontal and temporal cortex. Brain and Language 187: 83–91.

- Kleinow, Jennifer, and Anne Smith. 2006. Potential interactions among linguistic, autonomic, and motor factors in speech. Developmental Psychobiology 48: 275–87.

- Kral, Andrej, Michael F. Dorman, and Blake S. Wilson. 2019. Neuronal development of hearing and language: Cochlear implants and critical periods. Annual Review of Neuroscience 42: 47–65.

- Kuhl, Patricia K. 2004. Early language acquisition: Cracking the speech code. Nature Reviews Neuroscience 5: 831–43.

- Kuhl, Patricia K. 2010. Brain mechanisms in early language acquisition. Neuron 67: 713–27.

- Kuhl, Patricia K., Barbara T. Conboy, Denise Padden, Tobey Nelson, and Jessica Pruitt. 2005. Early speech perception and later language development: Implications for the “critical period”. Language Learning and Development 1: 237–64.

- Kutas, Marta, and Kara D. Federmeier. 2000. Electrophysiology reveals semantic memory use in language comprehension. Trends in Cognitive Sciences 4: 463–70.

- Lai, Jesyin, and Stephen V. David. 2021. Short-term effects of vagus nerve stimulation on learning and evoked activity in auditory cortex. eNeuro 8.

- Larsen, Rylan S., and Jack Waters. 2018. Neuromodulatory correlates of pupil dilation. Frontiers in Neural Circuits 12: 21.

- Leonard, Matthew K., Maxime O. Baud, Matthias J. Sjerps, and Edward F. Chang. 2016. Perceptual restoration of masked speech in human cortex. Nature Communications 7: 13619.

- Lim, Sung-joo, and Lori L. Holt. 2011. Learning foreign sounds in an alien world: Videogame training improves non-native speech categorization. Cognitive Science 35: 1390–405.

- Lin, Pei-Ann, Samuel K. Asinof, Nicholas J. Edwards, and Jeffry S. Isaacson. 2019. Arousal regulates frequency tuning in primary auditory cortex. Proceedings of the National Academy of Sciences of USA 116: 25304–10.

- Liu, Yang, Charles Rodenkirch, Nicole Moskowitz, Brian Schriver, and Qi Wang. 2017. Dynamic lateralization of pupil dilation evoked by locus coeruleus activation results from sympathetic, not parasympathetic, contributions. Cell Reports 20: 3099–112.

- Llanos, Fernando, Jacie R. McHaney, William L. Schuerman, Han G. Yi, Matthew K. Leonard, and Bharath Chandrasekaran. 2020. Non-invasive peripheral nerve stimulation selectively enhances speech category learning in adults. NPJ Science of Learning 5: 1–11.

- Loerwald, Kristofer W., Elizabeth P. Buell, Michael S. Borland, Robert L. Rennaker, Seth A. Hays, and Michael P. Kilgard. 2018. Varying stimulation parameters to improve cortical plasticity generated by VNS-tone pairing. Neuroscience 388: 239–47.

- Luthra, Sahil, Giovanni Peraza-Santiago, Keiana Beeson, David Saltzman, Anne Marie Crinnion, and James S. Magnuson. 2021. Robust lexically mediated compensation for coarticulation: Christmash time is here again. Cognitive Science 45: e12962.

- Maddox, W. Todd, and Bharath Chandrasekaran. 2014. Tests of a dual-system model of speech category learning. Bilingualism: Language and Cognition 17: 709–28.

- Mai, Guangting, Tim Schoof, and Peter Howell. 2019. Modulation of phase-locked neural responses to speech during different arousal states is age-dependent. NeuroImage 189: 734–44.

- Mann, Virginia A., and Bruno H. Repp. 1980. Influence of vocalic context on perception of the [ʃ]-[s] distinction. Perception & Psychophysics 28: 213–28.

- Martins, Ana Raquel O., and Robert C. Froemke. 2015. Coordinated forms of noradrenergic plasticity in the locus coeruleus and primary auditory cortex. Nature Neuroscience 18: 1483–92.

- Marzo, Aude, Jing Bai, and Satoru Otani. 2009. Neuroplasticity regulation by noradrenaline in mammalian brain. Current Neuropharmacology 7: 286–95.

- Mattys, Sven L., F. Seymour, Angela S. Attwood, and Marcus R. Munafò. 2013. Effects of acute anxiety induction on speech perception: Are anxious listeners distracted listeners? Psychological Science 24: 1606–8.

- McBurney-Lin, Jim, Ju Lu, Yi Zuo, and Hongdian Yang. 2019. Locus coeruleus-norepinephrine modulation of sensory processing and perception: A focused review. Neuroscience & Biobehavioral Reviews 105: 190–99.

- McCormick, David A., and Hans-Christian Pape. 1990. Noradrenergic and serotonergic modulation of a hyperpolarization-activated cation current in thalamic relay neurones. The Journal of Physiology 431: 319–42.

- McGinley, Matthew J., Martin Vinck, Jacob Reimer, Renata Batista-Brito, Edward Zagha, Cathryn R. Cadwell, Andreas S. Tolias, Jessica A. Cardin, and David A. McCormick. 2015a. Waking state: Rapid variations modulate neural and behavioral responses. Neuron 87: 1143–61.

- McGinley, Matthew J., Stephen V. David, and David A. McCormick. 2015b. Cortical membrane potential signature of optimal states for sensory signal detection. Neuron 87: 179–92.

- McGurk, Harry, and John MacDonald. 1976. Hearing lips and seeing voices. Nature 264: 746–48.

- Menon, Vinod, and Sonia Crottaz-Herbette. 2005. Combined EEG and fMRI Studies of human brain function. In International Review of Neurobiology. Neuroimaging, Part A. Cambridge: Academic Press, vol. 66, pp. 291–321.

- Mesgarani, Nima, and Edward F. Chang. 2012. Selective cortical representation of attended speaker in multi-talker speech perception. Nature 485: 233–36.

- Mesgarani, Nima, Connie Cheung, Keith Johnson, and Edward F. Chang. 2014. Phonetic feature encoding in human superior temporal gyrus. Science 343: 1006–10.

- Miller, George A., and Stephen Isard. 1963. Some perceptual consequences of linguistic rules. Journal of Verbal Learning and Verbal Behavior 2: 217–28.

- Morrison, Robert A., Tanya T. Danaphongse, David T. Pruitt, Katherine S. Adcock, Jobin K. Mathew, Stephanie T. Abe, Dina M. Abdulla, Robert L. Rennaker, Michael P. Kilgard, and Seth A. Hays. 2020. A limited range of vagus nerve stimulation intensities produce motor cortex reorganization when delivered during training. Behavioural Brain Research 391: 112705.

- Mridha, Zakir, Jan Willem de Gee, Yanchen Shi, Rayan Alkashgari, Justin Williams, Aaron Suminski, Matthew P. Ward, Wenhao Zhang, and Matthew James McGinley. 2021. Graded recruitment of pupil-linked neuromodulation by parametric stimulation of the vagus nerve. Nature Communications 12: 1539.

- Myers, Emily B. 2014. Emergence of category-level sensitivities in non-native speech sound learning. Frontiers in Neuroscience 8: 1–11.

- Norris, Dennis, James M. McQueen, and Anne Cutler. 2003. Perceptual learning in speech. Cognitive Psychology 47: 204–38.

- Pandža, Nick B., Ian Phillips, Valerie P. Karuzis, Polly O’Rourke, and Stefanie E. Kuchinsky. 2020. Neurostimulation and pupillometry: New directions for learning and research in applied linguistics. Annual Review of Applied Linguistics 40: 56–77.

- Paulon, Giorgio, Fernando Llanos, Bharath Chandrasekaran, and Abhra Sarkar. 2020. Bayesian semiparametric longitudinal drift-diffusion mixed models for tone learning in adults. Journal of the American Statistical Association 116: 1114–27.

- Perrachione, Tyler K., Jiyeon Lee, Louise Y. Y. Ha, and Patrick C. M. Wong. 2011. Learning a novel phonological contrast depends on interactions between individual differences and training paradigm design. The Journal of the Acoustical Society of America 130: 461–72.

- Phillips, Ian, Regina C. Calloway, Valerie P. Karuzis, Nick B. Pandža, Polly O’Rourke, and Stefanie E. Kuchinsky. 2021. Transcutaneous auricular vagus nerve stimulation strengthens semantic representations of foreign language tone words during initial stages of learning. Journal of Cognitive Neuroscience 34: 127–52.

- Poe, Gina R., Stephen Foote, Oxana Eschenko, Joshua P. Johansen, Sebastien Bouret, Gary Aston-Jones, and Carolyn W. Harley. 2020. Locus coeruleus: A new look at the blue spot. Nature Reviews Neuroscience 21: 644–59.

- Pruitt, David T., Ariel N. Schmid, Lily J. Kim, Caroline M. Abe, Jenny L. Trieu, Connie Choua, Seth A. Hays, Michael P. Kilgard, and Robert L. Rennaker. 2016. Vagus nerve stimulation delivered with motor training enhances recovery of function after traumatic brain injury. Journal of Neurotrauma 33: 871–79.

- Quinkert, Amy Wells, Vivek Vimal, Zachary M. Weil, George N. Reeke, Nicholas D. Schiff, Jayanth R. Banavar, and Donald W. Pfaff. 2011. Quantitative descriptions of generalized arousal, an elementary function of the vertebrate brain. Proceedings of the National Academy of Sciences of USA 108: 15617–23.

- Ranjbar-Slamloo, Yadollah, and Zeinab Fazlali. 2020. Dopamine and noradrenaline in the brain; overlapping or dissociate functions? Frontiers in Molecular Neuroscience 12: 334.

- Raut, Ryan V., Abraham Z. Snyder, Anish Mitra, Dov Yellin, Naotaka Fujii, Rafael Malach, and Marcus E. Raichle. 2021. Global waves synchronize the brain’s functional systems with fluctuating arousal. Science Advances.

- Reetzke, Rachel, Zilong Xie, Fernando Llanos, and Bharath Chandrasekaran. 2018. Tracing the trajectory of sensory plasticity across different stages of speech learning in adulthood. Current Biology 28: 1419–27.e4.

- Reimer, Jacob, Matthew J. McGinley, Yang Liu, Charles Rodenkirch, Qi Wang, David A. McCormick, and Andreas S. Tolias. 2016. Pupil fluctuations track rapid changes in adrenergic and cholinergic activity in cortex. Nature Communications 7: 13289.

- Remez, Robert E., Philip E. Rubin, David B. Pisoni, and Thomas D. Carrell. 1981. Speech perception without traditional speech cues. Science 212: 947–49.

- Ruggiero, David A., Mark D. Underwood, Joseph John Mann, Muhammad Anwar, and Victoria Arango. 2000. The human nucleus of the solitary tract: Visceral pathways revealed with an “in vitro” postmortem tracing method. Journal of the Autonomic Nervous System 79: 181–90.

- Sadaghiani, Sepideh, and Andreas Kleinschmidt. 2013. Functional interactions between intrinsic brain activity and behavior. NeuroImage 80: 379–86.

- Sadakata, Makiko, and James M. McQueen. 2013. High stimulus variability in nonnative speech learning supports formation of abstract categories: Evidence from Japanese geminates. The Journal of the Acoustical Society of America 134: 1324–35.

- Samuel, Arthur G. 1981. Phonemic restoration: Insights from a new methodology. Journal of Experimental Psychology: General 110: 474–94.

- Sara, Susan J. 2009. The locus coeruleus and noradrenergic modulation of cognition. Nature Reviews Neuroscience 10: 211–23.

- Sara, Susan J., and Sebastien Bouret. 2012. Orienting and reorienting: The locus coeruleus mediates cognition through arousal. Neuron 76: 130–41.

- Sara, Susan J., Andrey Vankov, and Anne Hervé. 1994. Locus coeruleus-evoked responses in behaving rats: A clue to the role of noradrenaline in memory. Brain Research Bulletin 35: 457–65.

- Satpute, Ajay B., Philip A. Kragel, Lisa Feldman Barrett, Tor D. Wager, and Marta Bianciardi. 2019. Deconstructing arousal into wakeful, autonomic and affective varieties. Neuroscience Letters 693: 19–28.

- Scharinger, Mathias, Molly J. Henry, and Jonas Obleser. 2013. Prior experience with negative spectral correlations promotes information integration during auditory category learning. Memory & Cognition 41: 752–68.

- Schevernels, Hanne, Marlies E. van Bochove, Leen De Taeye, Klaas Bombeke, Kristl Vonck, Dirk Van Roost, Veerle De Herdt, Patrick Santens, Robrecht Raedt, and C. Nico Boehler. 2016. The effect of vagus nerve stimulation on response inhibition. Epilepsy & Behavior 64: 171–79.

- Schuerman, William L., Kirill V. Nourski, Ariane E. Rhone, Matthew A. Howard, Edward F. Chang, and Matthew K. Leonard. 2021. Human intracranial recordings reveal distinct cortical activity patterns during invasive and non-invasive vagus nerve stimulation. Scientific Reports 11: 1–14.

- Scott, Sophie K., and Carolyn McGettigan. 2013. The neural processing of masked speech. Hearing Research 303: 58–66.

- Sharon, Omer, Firas Fahoum, and Yuval Nir. 2021. Transcutaneous vagus nerve stimulation in humans induces pupil dilation and attenuates alpha oscillations. The Journal of Neuroscience 41: 320–30.

- Shetake, Jai A., Navzer D. Engineer, Will A. Vrana, Jordan T. Wolf, and Michael P. Kilgard. 2012. Pairing tone trains with vagus nerve stimulation induces temporal plasticity in auditory cortex. Experimental Neurology 233: 342–49.

- Sohoglu, Ediz, Jonathan E. Peelle, Robert P. Carlyon, and Matthew H. Davis. 2012. Predictive top-down integration of prior knowledge during speech perception. Journal of Neuroscience 32: 8443–53.

- Stanners, Robert F., Michelle Coulter, Allen W. Sweet, and Philip Murphy. 1979. The pupillary response as an indicator of arousal and cognition. Motivation and Emotion 3: 319–40.

- Stein, Richard B., E. Roderich Gossen, and Kelvin E. Jones. 2005. Neuronal variability: Noise or part of the signal? Nature Reviews Neuroscience 6: 389–97. [Google Scholar] [CrossRef]

- Steriade, Mircea, Igor Timofeev, and François Grenier. 2001. Natural waking and sleep states: A view from inside neocortical neurons. Journal of Neurophysiology 85: 1969–85. [Google Scholar] [CrossRef]

- Strauss, Antje, Molly J. Henry, Mathias Scharinger, and Jonas Obleser. 2015. Alpha phase determines successful lexical decision in noise. Journal of Neuroscience 35: 3256–62. [Google Scholar] [CrossRef] [PubMed]

- Symmes, David, and Kenneth V. Anderson. 1967. Reticular modulation of higher auditory centers in monkey. Experimental Neurology 18: 161–76. [Google Scholar] [CrossRef]

- Taghia, Jalil, Weidong Cai, Srikanth Ryali, John Kochalka, Jonathan Nicholas, Tianwen Chen, and Vinod Menon. 2018. Uncovering hidden brain state dynamics that regulate performance and decision-making during cognition. Nature Communications 9: 2505. [Google Scholar] [CrossRef]

- Unsworth, Nash, and Matthew K. Robison. 2017. A locus coeruleus-norepinephrine account of individual differences in working memory capacity and attention control. Psychonomic Bulletin & Review 24: 1282–311. [Google Scholar] [CrossRef]

- Urbin, Michael A., Charles W. Lafe, Tyler W. Simpson, George F. Wittenberg, Bharath Chandrasekaran, and Douglas J. Weber. 2021. Electrical stimulation of the external ear acutely activates noradrenergic mechanisms in humans. Brain Stimulation 14: 990–1001. [Google Scholar] [CrossRef]

- Van Berkum, Jos J. A., Colin M. Brown, Pienie Zwitserlood, Valesca Kooijman, and Peter Hagoort. 2005. Anticipating upcoming words in discourse: Evidence from ERPs and reading times. Journal of Experimental Psychology: Learning, Memory, and Cognition 31: 443–67. [Google Scholar] [CrossRef]

- Van Bockstaele, Elisabeth J., James Peoples, and Patti Telegan. 1999. Efferent projections of the nucleus of the solitary tract to peri-locus coeruleus dendrites in rat brain: Evidence for a monosynaptic pathway. Journal of Comparative Neurology 412: 410–28. [Google Scholar] [CrossRef]

- Van Lysebettens, Wouter, Kristl Vonck, Lars Emil Larsen, Latoya Stevens, Charlotte Bouckaert, Charlotte Germonpré, and Mathieu Sprengers. 2020. Identification of vagus nerve stimulation parameters affecting rat hippocampal electrophysiology without temperature effects. Brain Stimulation 13: 1198–206. [Google Scholar] [CrossRef] [PubMed]

- Ventureyra, Enrique C. G. 2000. Transcutaneous vagus nerve stimulation for partial onset seizure therapy. Child’s Nervous System 16: 101–12. [Google Scholar] [CrossRef] [PubMed]

- Vidaurre, Diego, Stephen M. Smith, and Mark W. Woolrich. 2017. Brain network dynamics are hierarchically organized in time. Proceedings of the National Academy of Sciences of the United States of America 114: 12827–32.

- Vonck, Kristl E. J., and Lars E. Larsen. 2018. Vagus Nerve Stimulation. In Neuromodulation. Amsterdam: Elsevier, pp. 211–20.

- Vonck, Kristl, Robrecht Raedt, Joke Naulaerts, Frederick De Vogelaere, Evert Thiery, Dirk Van Roost, Bert Aldenkamp, Marijke Miatton, and Paul Boon. 2014. Vagus nerve stimulation…25 years later! What do we know about the effects on cognition? Neuroscience & Biobehavioral Reviews 45: 63–71.

- Warren, Richard M. 1970. Perceptual restoration of missing speech sounds. Science 167: 392–93.

- Warren, Christopher M., Klodiana D. Tona, Lineke Ouwerkerk, Jeroen van Paridon, Fenna Poletiek, Henk van Steenbergen, Jos A. Bosch, and Sander Nieuwenhuis. 2019. The neuromodulatory and hormonal effects of transcutaneous vagus nerve stimulation as evidenced by salivary alpha amylase, salivary cortisol, pupil diameter, and the P3 event-related potential. Brain Stimulation 12: 635–42.

- Waschke, Leonhard, Sarah Tune, and Jonas Obleser. 2019. Local cortical desynchronization and pupil-linked arousal differentially shape brain states for optimal sensory performance. eLife 8: e51501.

- Werker, Janet F., and Takao K. Hensch. 2015. Critical periods in speech perception: New directions. Annual Review of Psychology 66: 173–96.

- Whyte, John. 1992. Attention and arousal: Basic science aspects. Archives of Physical Medicine and Rehabilitation 73: 940–49.

- Yakunina, Natalia, Sam Soo Kim, and Eui-Cheol Nam. 2017. Optimization of transcutaneous vagus nerve stimulation using functional MRI. Neuromodulation: Technology at the Neural Interface 20: 290–300.

- Yap, Jonathan Y. Y., Charlotte Keatch, Elisabeth Lambert, Will Woods, Paul R. Stoddart, and Tatiana Kameneva. 2020. Critical review of transcutaneous vagus nerve stimulation: Challenges for translation to clinical practice. Frontiers in Neuroscience 14: 284.

- Yi, Han Gyol, and Bharath Chandrasekaran. 2016. Auditory categories with separable decision boundaries are learned faster with full feedback than with minimal feedback. The Journal of the Acoustical Society of America 140: 1332–35.

- Yi, Han Gyol, Bharath Chandrasekaran, Kirill V. Nourski, Ariane E. Rhone, William L. Schuerman, Matthew A. Howard, Edward F. Chang, and Matthew K. Leonard. 2021. Learning nonnative speech sounds changes local encoding in the adult human cortex. Proceedings of the National Academy of Sciences of the United States of America 118: e2101777118.

- Yi, Han Gyol, Matthew K. Leonard, and Edward F. Chang. 2019. The encoding of speech sounds in the superior temporal gyrus. Neuron 102: 1096–110.

- Yi, Han Gyol, W. Todd Maddox, Jeanette A. Mumford, and Bharath Chandrasekaran. 2016. The role of corticostriatal systems in speech category learning. Cerebral Cortex 26: 1409–20.

- Young, Christina B., Gal Raz, Daphne Everaerd, Christian F. Beckmann, Indira Tendolkar, Talma Hendler, Guillén Fernández, and Erno J. Hermans. 2017. Dynamic shifts in large-scale brain network balance as a function of arousal. Journal of Neuroscience 37: 281–90.

- Yu, Luodi, and Yang Zhang. 2018. Testing native language neural commitment at the brainstem level: A cross-linguistic investigation of the association between frequency-following response and speech perception. Neuropsychologia 109: 140–48.

- Zekveld, Adriana A., Thomas Koelewijn, and Sophia E. Kramer. 2018. The pupil dilation response to auditory stimuli: Current state of knowledge. Trends in Hearing 22: 1–25.

- Zhang, Yang, Patricia K. Kuhl, Toshiaki Imada, Makoto Kotani, and Yoh’ichi Tohkura. 2005. Effects of language experience: Neural commitment to language-specific auditory patterns. NeuroImage 26: 703–20.

- Zhang, Yang, Patricia K. Kuhl, Toshiaki Imada, Paul Iverson, John Pruitt, Erica B. Stevens, Masaki Kawakatsu, Yoh’ichi Tohkura, and Iku Nemoto. 2009. Neural signatures of phonetic learning in adulthood: A magnetoencephalography study. NeuroImage 46: 226–40.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Schuerman, W.L.; Chandrasekaran, B.; Leonard, M.K. Arousal States as a Key Source of Variability in Speech Perception and Learning. Languages 2022, 7, 19. https://doi.org/10.3390/languages7010019

Schuerman WL, Chandrasekaran B, Leonard MK. Arousal States as a Key Source of Variability in Speech Perception and Learning. Languages. 2022; 7(1):19. https://doi.org/10.3390/languages7010019

Chicago/Turabian Style: Schuerman, William L., Bharath Chandrasekaran, and Matthew K. Leonard. 2022. "Arousal States as a Key Source of Variability in Speech Perception and Learning" Languages 7, no. 1: 19. https://doi.org/10.3390/languages7010019

APA Style: Schuerman, W. L., Chandrasekaran, B., & Leonard, M. K. (2022). Arousal States as a Key Source of Variability in Speech Perception and Learning. Languages, 7(1), 19. https://doi.org/10.3390/languages7010019