3.1. Corpus Data

This study relies on the written digital production collected from tweets. The use of written production data to study dialectal variation is motivated both by work which investigates the relationship between variation in written and in spoken registers (Grieve et al., 2019) and also by work which investigates the impact of exposure to written language on language change (Dąbrowska, 2021). These tweets are drawn from the Corpus of Global Language Use (CGLU) (Dunn, 2020, 2024b). Corpora representing individual cities are created by aggregating tweets into larger samples. The social media portion of the CGLU contains publicly accessible tweets collected from 10,000 cities around the world, where each city is a point with a 25 km collection radius. Here the number of cities is reduced to those with a sufficient amount of data (at least 25 samples, aggregated as described below) from either inner-circle or outer-circle countries (Kachru, 1990). This paper works only with English-language corpora; tweets are tagged for language using both the idNet model (Dunn, 2020) and the PacificLID model (Dunn & Nijhof, 2022); only tweets which both models predict to be English are included. An overview of this dataset is shown in Table 1.

A list of each city by country is available in Table A1 for inner-circle countries and in Table A2 for outer-circle countries, in Appendix A.

Our goal is to create comparable corpora representing each local population using geo-referenced tweets. The challenge is that social media data represents many topics and sub-registers, so that there is a possible confound presented by geographically structured variation in topic or sub-register. For instance, if tweets from Chicago are sports-related and tweets from Christchurch are business-related, then the observed variation is likely to be partially register-based as well as dialect-based. To control for topic, we create samples by aggregating tweets which contain the same set of keywords. First, we select 250 common words which are neither purely topical nor purely functional: for example, girl, know, music, and project. These keywords are available in Table A3 in Appendix B. Second, for each local metro area, we create samples containing one tweet for each keyword; each sample thus contains 250 individual tweets, for a total size of approximately 3900 words. Importantly, the distribution of keywords is uniform across all samples from all local areas. This allows us to control for variation in topic or sub-register which might otherwise lead to non-dialectal sources of variation. This corpus has been previously used for other studies of linguistic variation (Dunn, 2023; Dunn et al., 2024).
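The keyword-balanced sampling described above can be illustrated with a minimal sketch. The input format (a list of keyword and text pairs per city), the function name `build_samples`, and the random shuffling are assumptions made for illustration; the paper does not specify how tweets are assigned to samples.

```python
import random
from collections import defaultdict

def build_samples(tweets, keywords, seed=0):
    """Group tweets into samples holding exactly one tweet per keyword.

    tweets: list of (keyword, text) pairs for one city (hypothetical format).
    Returns a list of samples; each sample is a list of len(keywords) tweets.
    """
    rng = random.Random(seed)
    by_keyword = defaultdict(list)
    for keyword, text in tweets:
        if keyword in keywords:
            by_keyword[keyword].append(text)
    for texts in by_keyword.values():
        rng.shuffle(texts)
    # The scarcest keyword limits how many complete samples can be built.
    n_samples = min(len(by_keyword[k]) for k in keywords)
    return [[by_keyword[k][i] for k in keywords] for i in range(n_samples)]

# Toy input: four keywords instead of the 250 used in the paper.
keywords = ["girl", "know", "music", "project"]
tweets = [(k, f"tweet {i} about {k}") for k in keywords for i in range(3)]
tweets.append(("music", "an extra music tweet"))
samples = build_samples(tweets, keywords)
```

Because every sample draws one tweet per keyword, the keyword distribution is identical across samples by construction, which is the property the topic control depends on.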

To ensure a robust estimate of construction usage in each city, we include only cities with at least 25 unique samples (thus, a total corpus of approximately 100,000 words per city at minimum, divided into 25 comparable samples). This provides a total of 256 cities across 13 countries, as shown in Table 1. Only historically English-using countries are included. Among inner-circle countries, Canada has 4261 samples across 24 cities; the United States has 7070 samples across 21 cities; and the UK has 5071 samples across 25 cities.

3.2. Representing Constructions

A construction grammar is a network of form-meaning mappings at various levels of schematicity (Doumen et al., 2023, 2024; Nevens et al., 2022). Here, we use constructions as the locus of grammatical variation: dialects differ in their preference for specific constructions in specific contexts. In order to observe construction usage at the scale required, we rely on computational construction grammar (computational CxG), a paradigm of grammar induction. The grammar learning algorithm used in this paper is taken from previous work (Dunn, 2024a and the references therein), with the grammar learned using the same register as the dialectal data (tweets). This section provides an analysis of constructions within the grammar to illustrate the kinds of features used to model syntactic variation.

First, this approach to computational construction grammar views representations as sequences of slot-constraints, so that an instance of a construction in a corpus is defined as a string which satisfies all the slot-constraints in a construction. Because the slots are sequential, this requires the construction to have a specific linear order. Slot-constraints are defined as centroids within an embedding space; any sequence that falls within a given distance from that centroid (say, 0.90 cosine similarity) is considered to satisfy the constraint. The annotation method thus relies on sequences of constraints that are defined within embedding spaces: fastText skip-gram embeddings to capture semantic information and fastText cbow embeddings to capture syntactic information. These embeddings are learned as part of the grammar learning process (Dunn, 2024a).
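As a rough illustration of how a sequence of slot-constraints might be checked, the sketch below treats each slot as a centroid and accepts a token when its vector reaches a cosine-similarity threshold. The two-dimensional vectors, the single shared embedding table, and the function names are invented for illustration; the actual grammar uses separate learned fastText spaces for syntactic and semantic constraints, and lexical slots are item-specific rather than centroid-based.

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def satisfies(word, centroid, embeddings, threshold=0.90):
    """True if the word's vector is close enough to the slot centroid."""
    vector = embeddings.get(word)
    return vector is not None and cosine_similarity(vector, centroid) >= threshold

def matches(tokens, construction, embeddings):
    """True if each token satisfies the slot-constraint in the same position."""
    return len(tokens) == len(construction) and all(
        satisfies(tok, centroid, embeddings)
        for tok, centroid in zip(tokens, construction)
    )

# Toy 2-d vectors, invented for illustration only.
embeddings = {
    "tried": [0.9, 0.1], "refused": [1.0, 0.2],
    "to": [0.0, 1.0],
    "watch": [-1.0, 0.1], "play": [-0.9, 0.0],
}
construction = [[1.0, 0.15], [0.0, 1.0], [-1.0, 0.05]]  # three slot centroids

in_order = matches(["tried", "to", "watch"], construction, embeddings)
reversed_order = matches(["watch", "to", "tried"], construction, embeddings)
```

The reversed sequence fails because the constraints are positional: the same words in a different linear order do not instantiate the construction.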

Importantly, constructions with the same form can still be differentiated. For example, the three utterances in (1a) through (1c) all have the same structure but have different semantics; this makes them distinct constructions. A further discussion of semantics in computational CxG can be found in Dunn (2024a). The complete grammar together with examples is available in the supplementary material and the codebase is available as a Python package. In the context of variation, social meaning must be considered as a part of the meaning of constructions (Leclercq & Morin, 2023).

| (1a) | give me a pencil |

| (1b) | give me a hand |

| (1c) | give me a break |

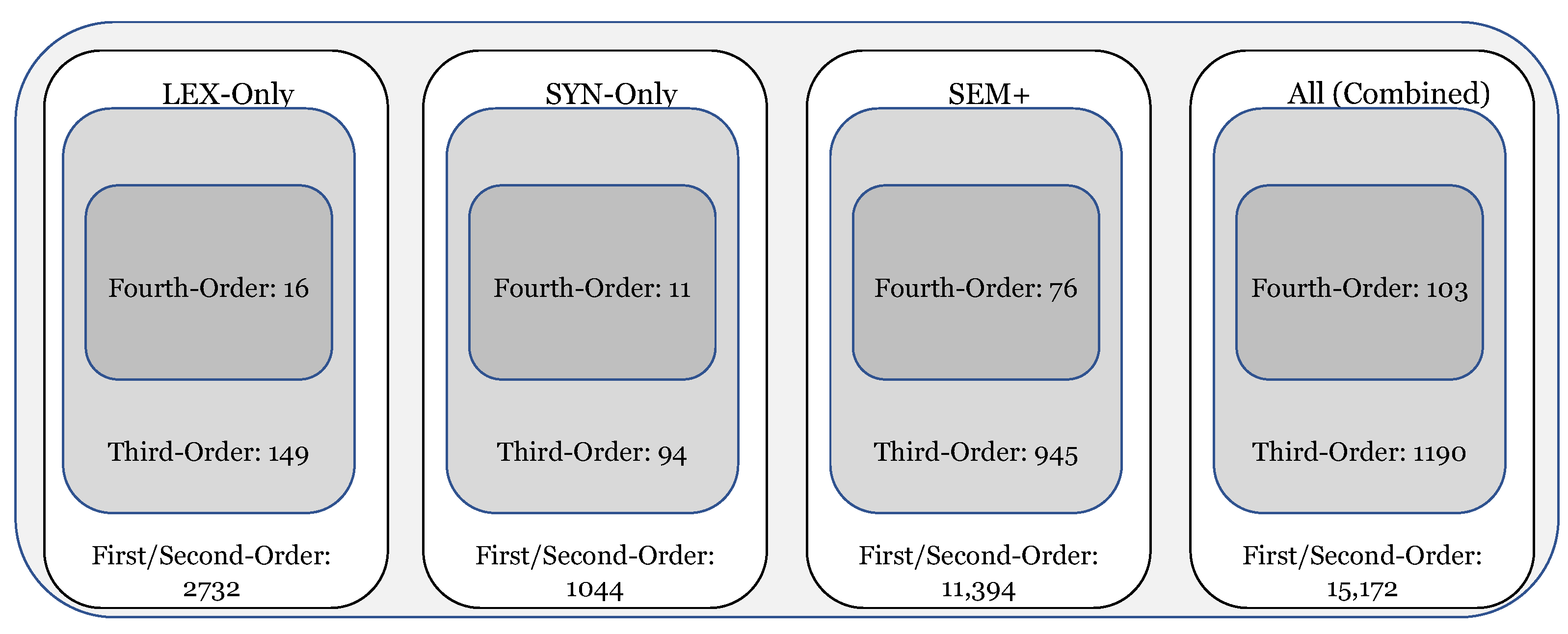

A break-down of the grammar used in the experiments is shown in Figure 1, containing a total of 15,215 individual constructions. Constructions are represented as a series of slot-constraints and the first distinction between constructions involves the types of constraints used. Computational CxG uses three types of slot-fillers: lexical (lex, for item-specific constraints), syntactic (syn, for form-based or local co-occurrence constraints), and semantic (sem, for meaning-based or long-distance co-occurrence constraints). As shown in (2), slots are separated by dashes in the notation used here. Thus, syn in (2) describes the type of constraint and determined–permitted provides its value using two central exemplars of that constraint. Examples or tokens of the construction from a test corpus of tweets are shown in (2a) through (2d).

| (2) | [ syn: | determined–permitted – syn: to – syn: pushover–backtrack ] |

| | (2a) | refused to play |

| | (2b) | tried to watch |

| | (2c) | trying to run |

| | (2d) | continue to drive |

Thus, the construction in (2) contains three slots, each defined using a syntactic constraint. These constraints are categories learned at the same time that the grammar itself is learned, formulated within an embedding space. An embedding that captures local co-occurrence information is used for formulating syntactic constraints (a continuous bag-of-words fastText model with a window size of 1) while an embedding which instead captures long-distance co-occurrence information is used for formulating semantic constraints (a skip-gram fastText model with a window size of 5). Constraints are then formulated as centroids within that embedding space. For the first slot-constraint, the name (determined–permitted) is derived from the lexical items closest to the centroid of the constraint. The prototype structure of categories is modeled using cosine distance as a measure of how well a particular slot-filler satisfies the constraint. In (3a) through (3d), for example, the lexical items “reluctant”, “ready”, “refusal”, and “willingness” appear as fillers sufficiently close to the centroid to satisfy this slot-constraint. The construction in (2) is a complex verb phrase in which the main verb encodes the agent’s attempts to carry out the event encoded in the infinitive verb. This can be contrasted semantically with the construction in (3), which has the same form but instead encodes the agent’s preparation for carrying out the social action encoded in the infinitive verb. The dialect experiments in this paper rely on type frequency, which means that each construction like (3) is a feature and each unique form like (3a) through (3d) contributes to the frequency of that feature. To describe a larger utterance, these constructions would be clipped together to form longer representations.

| (3) | [ syn: | determined–permitted – syn: to – syn: demonstrate–reiterate ] |

| | (3a) | reluctant to speak |

| | (3b) | ready to exercise |

| | (3c) | refusal to recognize |

| | (3d) | willingness to govern |
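The difference between token frequency and type frequency can be made concrete with a small sketch. The list of matched forms below is a toy example built from (3a) through (3d), with two forms repeated; it is not data from the study.

```python
def token_frequency(matched_forms):
    """Total number of matches for a construction, counting repeats."""
    return len(matched_forms)

def type_frequency(matched_forms):
    """Number of unique forms among the matches for a construction."""
    return len(set(matched_forms))

# Toy matches for the construction in (3) within a single sample.
matched = [
    "reluctant to speak",
    "ready to exercise",
    "reluctant to speak",
    "refusal to recognize",
    "ready to exercise",
    "willingness to govern",
]
n_tokens = token_frequency(matched)
n_types = type_frequency(matched)
```

Here the six matches reduce to four unique forms, so a repeated form adds to the token count but not to the type count that serves as the feature value.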

An important idea in CxG is that structure is learned gradually, starting with item-specific surface forms and moving to increasingly schematic and productive constructions. This is called scaffolded learning because the grammar has access to its own previous analysis for the purpose of building more complex constructions. In computational CxG, this is modeled by learning over iterations with different sets of constraints available. For example, the constructions in (2) and (3) are learned with only access to the syntactic constraints, while the constructions in (4) and (5) have access to lexical and semantic constraints as well. This allows grammars to become more complex while not assuming basic structures or categorizations until they have been learned. In the dialect experiments below, we distinguish between lexical (lex) grammars (which only contain lexical constraints), syntactic (syn) grammars (which contain only syntactic constraints), and sem+ grammars (which contain lexical, syntactic, and semantic constraints).

| (4) | [ lex: | “the” – sem: way – lex: “to” ] |

| | (4a) | the chance to |

| | (4b) | the way to |

| | (4c) | the path to |

| | (4d) | the steps to |

Constructions have different levels of abstractness or schematicity. For example, the construction in (4) functions as a modifier, as in the X position in the sentence “Tell me [X] bake yeast bread.” This construction is not purely item-specific because it has multiple types or examples. But, it is less productive than the location-based noun phrase construction in (5) which will have many more types in a corpus of the same size. CxG is a form of lexico-grammar in the sense that there is a continuum between item-specific and schematic constructions, exemplified here by (4) and (5), respectively. The existence of constructions at different levels of abstraction makes it especially important to view the grammar as a network with similar constructions arranged in local nodes within the grammar.

| (5) | [ lex: | “the” – sem: streets ] |

| | (5a) | the street |

| | (5b) | the sidewalk |

| | (5c) | the pavement |

| | (5d) | the avenues |

A grammar, or constructicon, is not simply a set of constructions but rather a network with both taxonomic and similarity relationships between constructions. In computational CxG, this is modeled by using pairwise similarity relationships between constructions at two levels: (i) representational similarity (or how similar the slot-constraints which define the constructions are) and (ii) token-based similarity (or how similar the examples or tokens of two constructions are, given a test corpus). Matrices of these two pairwise similarity measures are used to cluster constructions into smaller and then larger groups. For example, the phrasal verbs in (6) through (8) are members of a single cluster of phrasal verbs. Each individual construction has a specific meaning: in (6), focusing on the social attributes of a communication event; in (7), focusing on a horizontally situated motion event; in (8), focusing on a motion event interpreted as a social state. These constructions each have a unique meaning but a shared form. The point here is that, at a higher order of structure, there are a number of phrasal verb constructions which share the same schema. These constructions have sibling relationships with other phrasal verbs and a taxonomic relationship with the more schematic phrasal verb construction. These phrasal verbs are an example of the third-order constructions in the dialect experiments (cf. Dunn, 2024a).

| (6) | [ sem: | screaming–yelling – syn: through ] |

| | (6a) | stomping around |

| | (6b) | cackling on |

| | (6c) | shouting out |

| | (6d) | drooling over |

| (7) | [ sem: | rolled–turned – syn: through ] |

| | (7a) | rolling out |

| | (7b) | slid around |

| | (7c) | wiped out |

| | (7d) | swept through |

| (8) | [ sem: | sticking–hanging – syn: through ] |

| | (8a) | poking around |

| | (8b) | hanging out |

| | (8c) | stick around |

| | (8d) | hanging around |

An even larger structure within the grammar is based on groups of these third-order constructions, structures which we will call fourth-order constructions. A fourth-order construction is much larger because it contains many third-order constructions which themselves contain individual first-order (and second-order) constructions. An example of a fourth-order construction is given with five constructions in (9) through (13) which all belong to the same neighborhood of the grammar. The partial noun phrase in (9) points to a particular sub-set of some entity (as in, “parts of the recording”). The partial adpositional phrase in (10) points specifically to the end of some temporal entity (as in, “towards the end of the show”). In contrast, the partial noun phrase in (11) points to a particular sub-set of a spatial location (as in, “the edge of the sofa”). A more specific noun phrase in (12) points to a sub-set of a spatial location with a fixed level of granularity (i.e., at the level of a city or state). And, finally, in (13), an adpositional phrase points to a location within a spatial object. Each of these first-order constructions is a member of the same fourth-order construction. This level of abstraction interacts with dialectal variation in that variation and change can take place in reference to either the lower-level or higher-level structures.

| (9) | [ sem: | part – lex: “of” – syn: the ] |

| | (9a) | parts of the |

| | (9b) | portion of the |

| | (9c) | class of the |

| | (9d) | division of the |

| (10) | [ syn: | through – sem: which–whereas – lex: “end” – lex: “of” – syn: the ] |

| | (10a) | at the end of the |

| | (10b) | before the end of the |

| | (10c) | towards the end of the |

| (11) | [ sem: | which–whereas – sem: way – lex: “of” ] |

| | (11a) | the edge of |

| | (11b) | the side of |

| | (11c) | the corner of |

| | (11d) | the stretch of |

| (12) | [ sem: | which–whereas – syn: southside–northside – syn: chicagoland ] |

| | (12a) | in north Texas |

| | (12b) | of southern California |

| | (12c) | in downtown Dallas |

| | (12d) | the southside Chicago |

| (13) | [ lex: | “of” – syn: the – syn: courtyard–balcony ] |

| | (13a) | of the gorge |

| | (13b) | of the closet |

| | (13c) | of the room |

| | (13d) | of the palace |

The examples in this section have illustrated some of the fundamental properties of CxG and also provide a discussion of some of the features which are used in the dialect classification study. A break-down of the types of constructions found in the grammar is shown in Figure 1. The 15,215 total constructions are first divided into different scaffolds (lex, syn, sem+). As before, lex constructions allow only lexical constraints while sem+ constructions allow lexical and syntactic and semantic constraints. This grammar has a network structure and contains 2132 third-order constructions (e.g., the phrasal verbs discussed above). At an even higher level of structure, there are 97 fourth-order constructions or neighborhoods within the grammar (e.g., the sub-set referencing constructions discussed above). We can thus look at the variation across the entire grammar, across different types of slot-constraints, and across different levels of abstraction when measuring dialect similarity.

To what degree are these grammatical representations adequate across different dialects? This is partly a question of parsing accuracy: do we have more false positives or false negatives in one location (like American English) vs. another (like Indian English)? To evaluate this, we analyze the overall number of constructions found per sample in different countries in Table 2. The basic idea here is that each sample contains the same amount of usage from the same register on the same set of topics; thus, by default, we would expect the same number of constructions to be used. The choice of one construction over another is expected to vary; that is the difference between dialects. But, ideally, the grammar would contain all the constructions used in each dialect so that the total frequency of constructions would be consistent across dialects.

In practical terms, this is not the case because inner-circle varieties are more likely to be represented in the data used to learn the grammar (Dunn, 2024a), which means that more constructions from inner-circle varieties are likely contained in the grammar. To evaluate the overall parsing quality, we then estimate the total frequency of construction matches per sample per city; since we expect a certain amount of usage to contain a certain number of constructions, higher values mean a better fit with the grammar. Table 2 shows this comparison aggregated to the country level; the values here are standardized so that 1.0, for instance, indicates one standard deviation above the mean. This allows us to compare the different parts of the grammar which have different numbers of matches: first-order and second-order constructions, which are more concrete, and third- and fourth-order constructions, which are more abstract. These results show that the US and Canada have the best fit with the grammar, while India and Pakistan have the worst fit. This evaluation shows that the grammar tends to contain constructions that better represent some varieties; this implies that city-to-city similarity measures will work better in places like the US. The ranking of countries is largely consistent across levels of abstraction.

Since the grammar is a better fit for certain countries, to what degree is this feature space able to adequately represent variation across dialects on a global scale? In Table 3, we use a classification task to measure the ability to predict the country which each city belongs to. First, we estimate the mean type frequency for each construction across samples to provide a single best representation for each city. We then train a linear SVM classifier with different sub-sets of the grammar to predict which country a city belongs to and then evaluate the accuracy of these predictions on held-out cities. The results are divided by the level of abstraction (1st/2nd-, 3rd-, and 4th-order constructions) as well as by the type of representation (lex, syn, sem+). The high prediction accuracy (quantified here by the f-score) indicates that the features are able to adequately distinguish country-level dialects.

Thus, Table 3 shows the relative ability of each portion of the grammar to capture country-level variation. We see that less abstract lower-level constructions (first and second order) are most able to capture variation regardless of the type of representation. This is not surprising as grammatical variation is expected to be strongest at these lower levels of abstraction. Next, more abstract features (third order) work well with syn or sem+ representations, but less well with lex representations. And, finally, the most abstract features have the lowest f-scores overall, with sem+ retaining an f-score of 0.82. Again, this follows our expectation: more schematic structures are shared across dialects to a greater degree and thus subject to less variation.

Looking across countries, we see that classification errors are concentrated in outer-circle varieties from places like Indonesia, the Philippines, or Pakistan. Again, these are the varieties least well represented in the frequency evaluation above. Thus, we would expect that city-to-city similarities would be the least precise in these areas. Overall, however, this evaluation shows that even in most outer-circle areas, the prediction accuracy is high, especially with less abstract constructions.

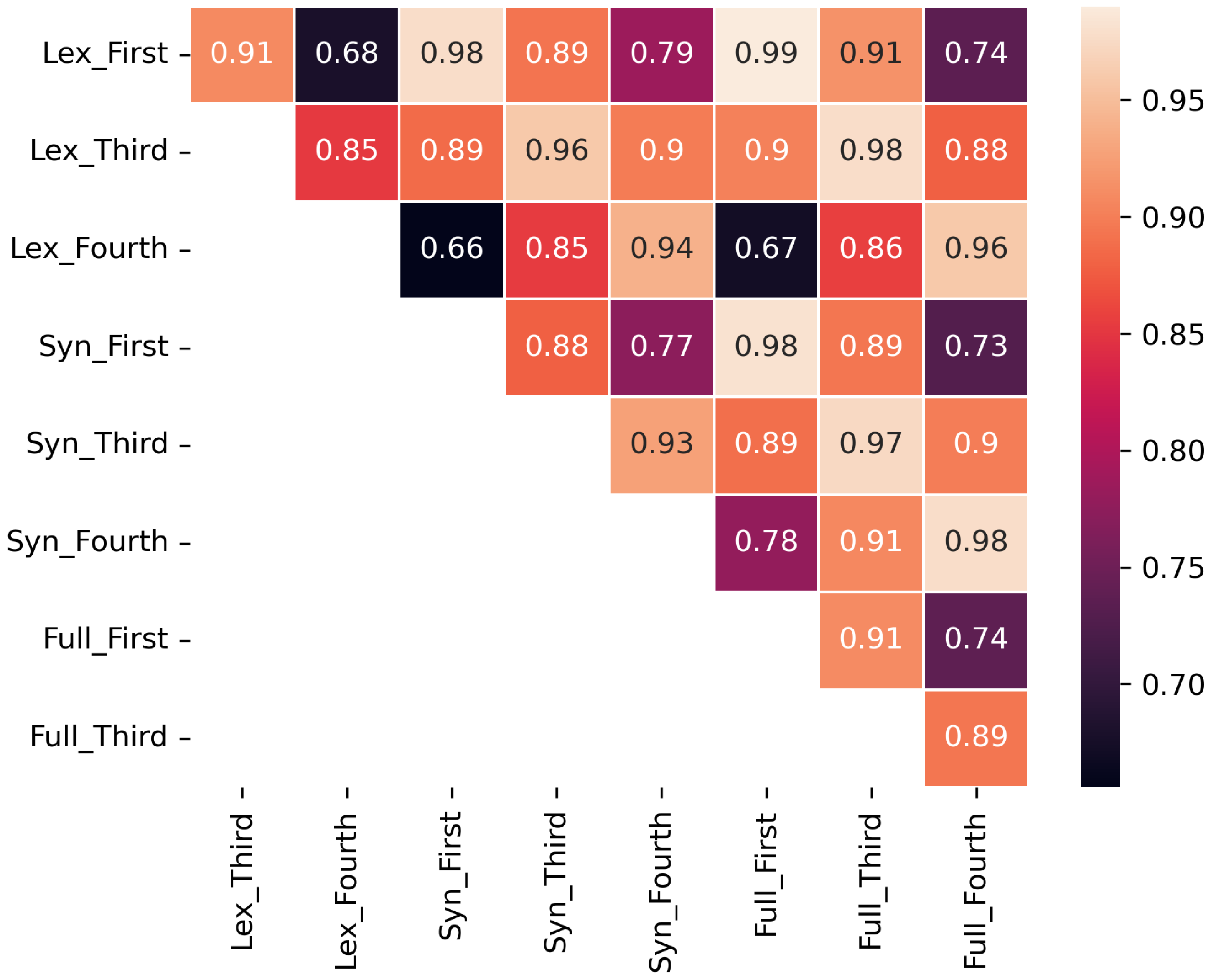

3.3. Measuring Linguistic Similarity

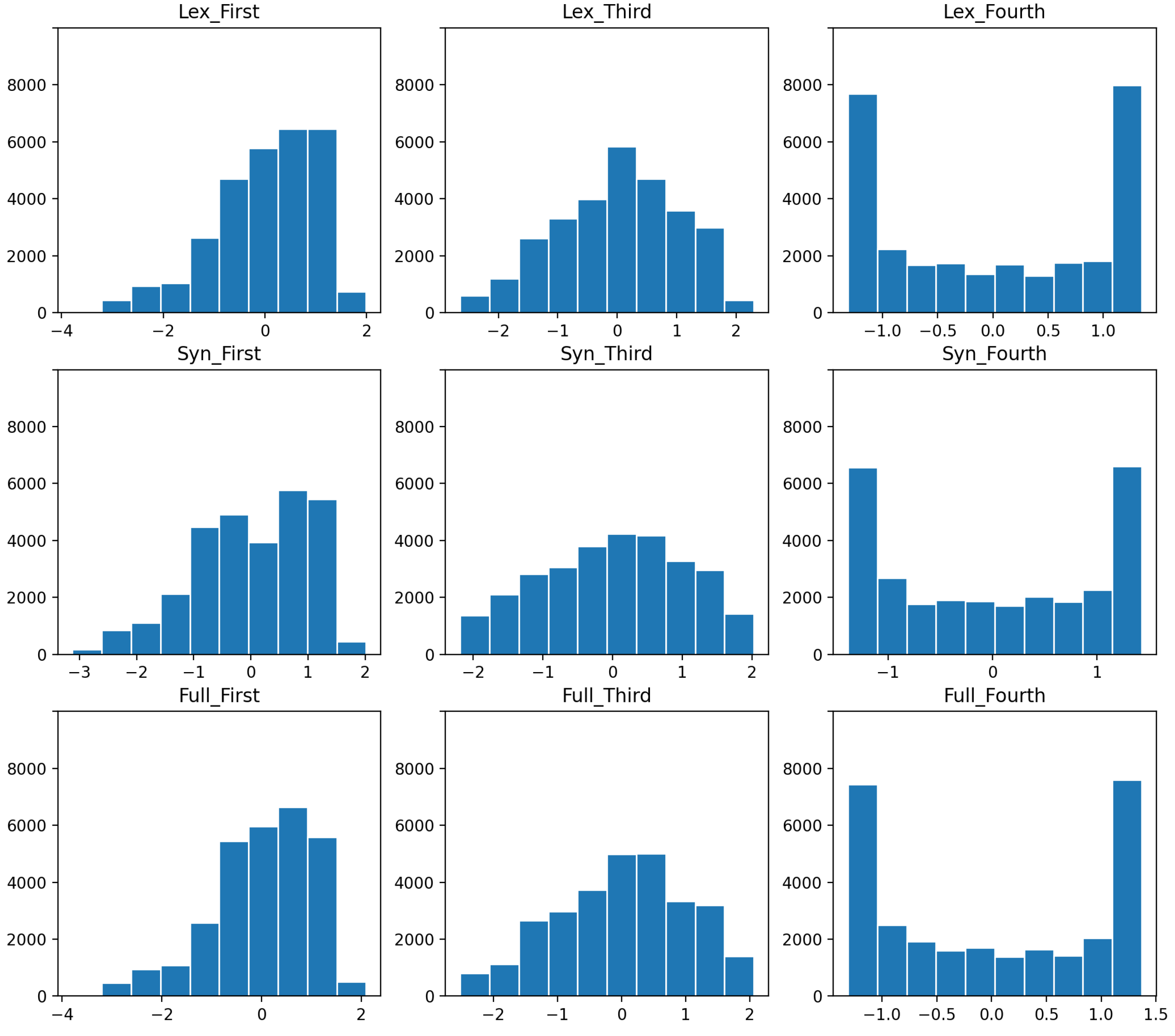

We now have a large number of comparable samples representing 256 cities in English-using countries which have been annotated for constructional features that have themselves been shown capable of distinguishing between country-level dialects. In this context, the annotations are a vector of type frequencies for each construction in the grammar for each sample in the data set. This section discusses the method of calculating pairwise similarities between cities for each of the nine distinct sub-sets of the grammar (i.e., three types of representation at three levels of abstraction).

Pairwise similarity between cities is calculated in three steps. First, we estimate the mean type frequency of each construction across samples for each city and then standardize these estimated frequencies across the entire corpus. Type frequency focuses on the productivity of each construction, that is, the number of unique forms it takes. This means that we have one expected type frequency per construction per city; values of 1 indicate that a construction is used one standard deviation above the mean across all cities. This standardization ensures that more frequent constructions do not dominate the comparison. Second, we take the cosine distance between these standardized type frequencies, using as weights the feature loadings from the linear SVM classifier discussed above for country-level dialects. These classification weights focus the cosine distance on those constructions which are subject to variation. Third, because we have no absolute interpretation for pairwise similarities between city-level dialects, we standardize these cosine distances, thus focusing on the relative ranking of similarity values. This produces a ranked list of pairs of cities, with the most similar city-level dialects at the top and the least similar at the bottom.
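The three steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: the weights are assumed to be non-negative (for instance, absolute values of the classifier loadings), the population standard deviation is used for standardization, and the city names, sample counts, and weights are toy values.

```python
import math
from itertools import combinations
from statistics import mean, pstdev

def zscores(values):
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma if sigma else 0.0 for v in values]

def city_profiles(samples_by_city):
    """Step 1: mean type frequency per construction, standardized across cities."""
    cities = sorted(samples_by_city)
    means = [[mean(col) for col in zip(*samples_by_city[c])] for c in cities]
    # Standardize each construction (column) across all cities.
    columns = [zscores(list(col)) for col in zip(*means)]
    return {c: [col[i] for col in columns] for i, c in enumerate(cities)}

def weighted_cosine_distance(u, v, weights):
    """Step 2: cosine distance with one non-negative weight per construction."""
    dot = sum(w * a * b for w, a, b in zip(weights, u, v))
    norm_u = math.sqrt(sum(w * a * a for w, a in zip(weights, u)))
    norm_v = math.sqrt(sum(w * b * b for w, b in zip(weights, v)))
    return 1.0 - dot / (norm_u * norm_v)

def ranked_pairs(samples_by_city, weights):
    """Step 3: standardize the distances and rank pairs, most similar first."""
    profiles = city_profiles(samples_by_city)
    pairs = list(combinations(sorted(profiles), 2))
    dists = zscores(
        [weighted_cosine_distance(profiles[a], profiles[b], weights) for a, b in pairs]
    )
    return sorted(zip(pairs, dists), key=lambda item: item[1])

# Toy data: rows are samples, columns are type frequencies for 3 constructions.
samples_by_city = {
    "city_a": [[4, 1, 2], [6, 1, 2]],
    "city_b": [[5, 1, 4], [7, 1, 2]],
    "city_c": [[0, 5, 8], [2, 3, 6]],
}
weights = [1.0, 0.5, 2.0]  # hypothetical non-negative feature weights
ranking = ranked_pairs(samples_by_city, weights)
```

In this toy input, `city_a` and `city_b` have similar usage profiles, so their pair appears first in `ranking`; only the relative order of the standardized distances is meant to be interpreted.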

The accuracy evaluation in Table 3 was focused on validating whether these constructional features are capable, upstream, of distinguishing between dialects in a supervised setting. We follow this with a second accuracy-based validation here in Table 4 which is focused on whether city-to-city similarity measures remain accurate in an unsupervised setting. We first calculate, for each city, the distance with all other cities. Secondly, we compare the distances of (i) within-country cities and (ii) out-of-country cities. An accurate prediction is when each city is closest to other cities within the same country. This measure of accuracy is shown in Table 4 across levels of abstraction (first/second-, third-, and fourth-order constructions) and across levels of representation (lex, syn, and sem+, which contains all three types of representation).

Our main concern is that these pairwise similarity measures are sufficiently accurate that the rankings can be taken as representing actual similarity between dialects within some portion of the grammar. As before, less abstract features (first/second- and third-order constructions) have higher accuracy because they are subject to more variation. Note that, by using type frequency as a means of comparison, we are looking at the relative productivity of each construction rather than differences in specific forms. Thus, the same construction could be equally productive in two dialects, while producing different forms in each. The lowest accuracy is found in the most abstract features (fourth-order constructions); to some degree, we expect that the most schematic constructions are subject to less variation. Within these features, the lowest performing areas are outer-circle varieties from Indonesia and Malaysia. It should be noted that predicting that a city is closest to another city in a neighboring country is not necessarily incorrect (i.e., in border areas); for our purposes, we would expect within-country similarity to be higher in most but not all cases.
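One possible reading of this unsupervised accuracy measure is sketched below: a city counts as correct when its mean distance to cities in the same country is smaller than its mean distance to cities in other countries. The use of means, the function name, and the toy distances are assumptions; the paper's exact criterion may differ (for example, it could be based on the nearest neighbor instead).

```python
from statistics import mean

def within_country_accuracy(distances, country_of):
    """Share of cities that are closer, on average, to same-country cities.

    distances: dict mapping frozenset({city_a, city_b}) to a distance.
    country_of: dict mapping city name to country label.
    """
    correct = 0
    scored = 0
    for city, country in country_of.items():
        same, other = [], []
        for peer, peer_country in country_of.items():
            if peer == city:
                continue
            d = distances[frozenset((city, peer))]
            (same if peer_country == country else other).append(d)
        if not same or not other:
            continue  # no basis for comparison for this city
        scored += 1
        correct += mean(same) < mean(other)
    return correct / scored

# Toy example with two hypothetical countries of two cities each.
country_of = {"x1": "X", "x2": "X", "y1": "Y", "y2": "Y"}
distances = {
    frozenset(("x1", "x2")): 0.1,
    frozenset(("y1", "y2")): 0.6,
    frozenset(("x1", "y1")): 0.5,
    frozenset(("x2", "y1")): 0.5,
    frozenset(("x1", "y2")): 0.9,
    frozenset(("x2", "y2")): 0.9,
}
accuracy = within_country_accuracy(distances, country_of)
```

In the toy input, `y1` is on average closer to the cities of country X than to `y2`, so three of the four cities count as correct.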

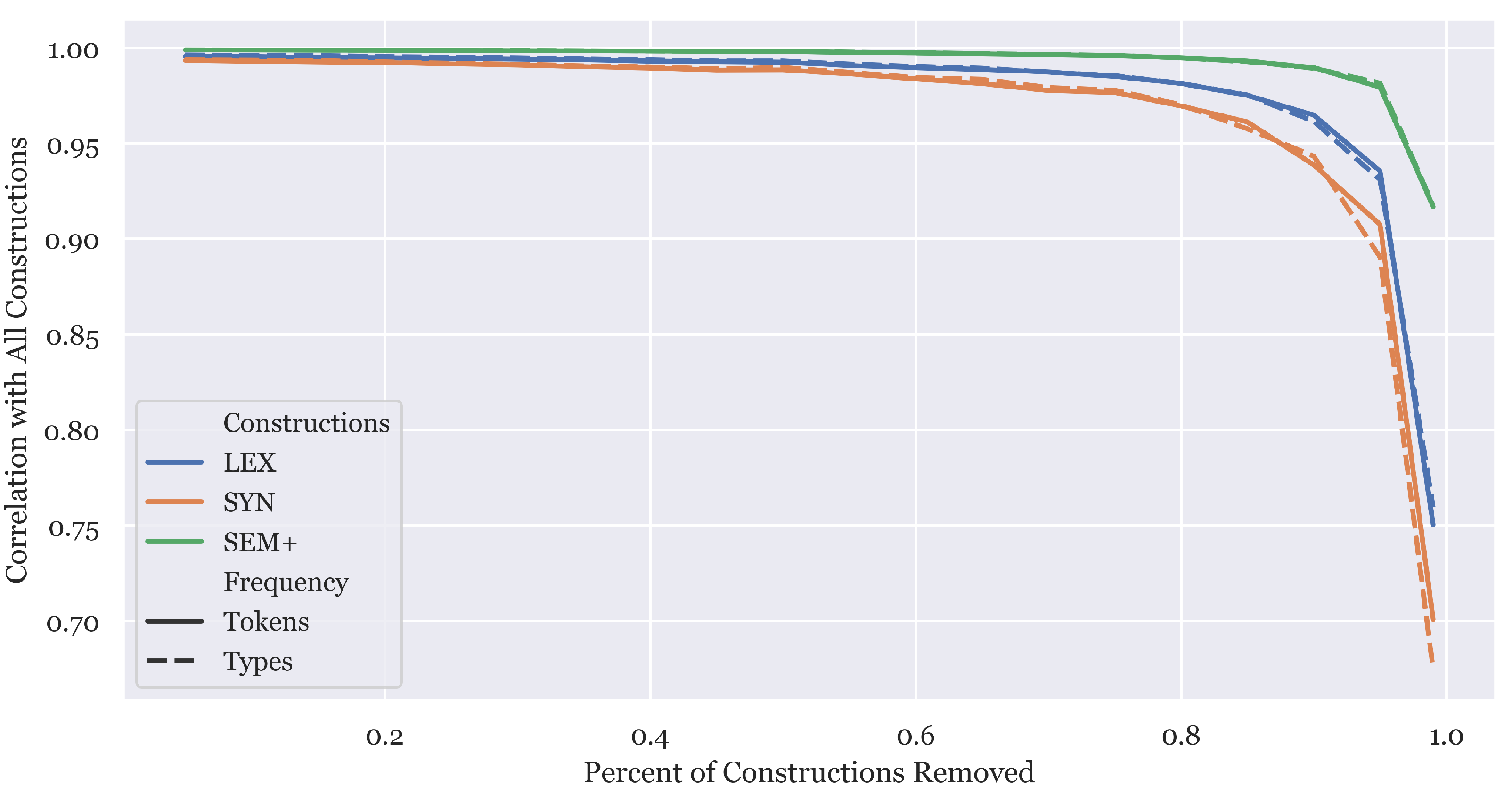

A final approach to evaluating the robustness of this operationalization of construction grammar is to measure the degree to which we would reach the same measures of city-level dialect similarity given random permutations of the grammar. The similarity measure outputs a ranking of over 30k pairs of cities, from most to least similar. In this evaluation, we randomly remove a certain percentage of the constructions and then recalculate this similarity ranking. This is shown in Figure 2, using the Pearson correlation to measure how similar two rankings are; high values indicate that a particular grammar is very similar to the full grammar in terms of how it ranks cities. Thus, high correlations show that the feature space is robust to random permutations. The x axis shows increasing percentages of permutations. For instance, at 0.2, we randomly remove 20% of the constructions and recalculate the distances between cities. Each level is computed 10 times and then averaged (a 10-fold cross-validation at each step).

The figure divides the grammar by the type of representation (lex, syn, sem+) and also by token frequency vs. type frequency for measuring the construction usage. This figure shows that up to 80% of the constructions can be removed before significant impacts are seen on the city-level similarity ranks. This indicates that the distance matrix used in these experiments is highly robust to random variations in the grammar induction component used to form the feature space. Thus, we should have high confidence in this operationalization of the grammar. This converges with the accuracy-based evaluations which also show that this feature space is able to identify the political boundaries which separate cities.
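The removal procedure can be sketched roughly as follows. The sketch uses unweighted cosine distance, correlates the distance values directly, and draws toy city profiles from a random generator; these are simplifying assumptions, and the names `robustness` and `pair_distances` are hypothetical.

```python
import math
import random

def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def cosine_distance(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm

def pair_distances(profiles, keep):
    """Distances for all city pairs, using only the constructions in keep."""
    cities = sorted(profiles)
    return [
        cosine_distance([profiles[a][i] for i in keep], [profiles[b][i] for i in keep])
        for x, a in enumerate(cities)
        for b in cities[x + 1:]
    ]

def robustness(profiles, fraction, repeats=10, seed=0):
    """Mean correlation between full-grammar and reduced-grammar distances."""
    rng = random.Random(seed)
    n = len(next(iter(profiles.values())))
    full = pair_distances(profiles, list(range(n)))
    scores = []
    for _ in range(repeats):
        # Randomly drop the requested share of constructions.
        keep = rng.sample(range(n), n - round(fraction * n))
        scores.append(pearson(full, pair_distances(profiles, keep)))
    return sum(scores) / repeats

# Toy profiles: 6 hypothetical cities with 50 standardized features each.
toy_rng = random.Random(1)
profiles = {f"city_{i}": [toy_rng.gauss(0, 1) for _ in range(50)] for i in range(6)}
score_20 = robustness(profiles, 0.2)
```

Calling `robustness` over a range of fractions yields one averaged correlation per removal level, which corresponds to one curve of the kind described for Figure 2.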

3.4. Social and Geographic Variables

We have now discussed comparable corpora across 256 cities which are annotated for constructional features in a way that supports accurate pairwise similarity measures between cities. These grammatical similarity measures tell us about dialectal variation in different portions of the grammar, about the patterns of diffusion for syntactic variants. The research question here is about the factors which cause or at least influence these similarity patterns: why are some dialects of English more similar than others? In this paper, we experiment with three factors: (1) the geographic distance between cities, (2) the amount of population contact between cities as measured by air travel, and (3) the language contact experienced within each city as measured by the linguistic landscape of each city. This section describes each of these three measures. This builds on previous work combining dialectology with social and environmental variables (Huisman et al., 2021; Wieling et al., 2011).

The simplest of these three measures is geographic distance; here, we use the geodesic distance measured in kilometers. This approach accounts for the shape of the Earth but not for intervening features like mountains or oceans. We exclude pairs of cities which are within 250 km of one another; for cities this close, data from air travel is likely to greatly underestimate the amount of contact between the two populations.
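The distance filter can be illustrated with a short sketch. Note that it substitutes the haversine (great-circle) formula on a spherical Earth for a true ellipsoidal geodesic, so values differ slightly from geodesic distances; the function names and the approximate city coordinates are supplied here for illustration only.

```python
import math

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius; a spherical approximation

def haversine_km(p, q):
    """Great-circle distance in km between two (latitude, longitude) points."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*p, *q))
    a = (
        math.sin((lat2 - lat1) / 2) ** 2
        + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))

def keep_pair(p, q, min_km=250.0):
    """Exclude pairs of cities that are closer than min_km to one another."""
    return haversine_km(p, q) >= min_km

# Approximate coordinates (latitude, longitude), for illustration.
chicago = (41.8781, -87.6298)
milwaukee = (43.0389, -87.9065)
christchurch = (-43.5321, 172.6362)

near_pair_kept = keep_pair(chicago, milwaukee)
far_pair_kept = keep_pair(chicago, christchurch)
```

Under the 250 km threshold, a nearby pair such as Chicago and Milwaukee would be dropped, while a long-distance pair such as Chicago and Christchurch would be retained.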

The second measure is the amount of population contact, for which we use as a proxy the number of airline passengers traveling from one city to another (Huang et al., 2013). This flight-based variable can be consistently calculated for all the cities in this study and provides a generic measure of the amount of travel between cities which is itself a proxy for the amount of mutual exposure between the two local populations. Especially for long-distance pairs, air travel is often the only practical method for such travel. To make the travel measure symmetric, we combine trips in both directions (e.g., Chicago to Christchurch and Christchurch to Chicago). To illustrate why we include both geographic distance and the amount of air travel as factors, there is a negative Pearson correlation of −0.147 between the two factors. This means that there is not a close relationship between the two, so that each retains unique information about the relationship between cities.
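Combining trips in both directions amounts to summing directed passenger counts over unordered city pairs, as in the sketch below. The passenger numbers are invented placeholders rather than values from the air travel dataset, and the function name is hypothetical.

```python
def symmetric_travel(passengers):
    """Sum directed passenger counts into one total per unordered city pair.

    passengers: dict mapping (origin, destination) to a passenger count.
    """
    totals = {}
    for (origin, destination), count in passengers.items():
        pair = frozenset((origin, destination))
        totals[pair] = totals.get(pair, 0) + count
    return totals

# Invented counts, for illustration only.
passengers = {
    ("Chicago", "Christchurch"): 120,
    ("Christchurch", "Chicago"): 80,
    ("Chicago", "Auckland"): 50,
}
totals = symmetric_travel(passengers)
```

Using an unordered pair as the key means the result is the same whichever city is treated as the origin, which keeps the travel measure aligned with the symmetric distance and similarity measures.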

The third measure captures differences in language contact based on the linguistic landscape in a city, motivated by work on the types of language contact involved in variation (Croft, 2020). We use tweet-based data for this, focusing on the digital landscape because we are observing digital language use on the same platform. We take all tweets within 200 km of a city which are closer to that city than to any other. Then, using the geographic GeoLID model (Dunn & Edwards-Brown, 2024), we find the relative frequency of up to 800 languages. The distance between two cities is then the cosine distance between this measure of the relative usage of languages. The more similar two cities are, the more they are experiencing the same types of language contact.
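The landscape distance can be sketched as a cosine distance between per-city language distributions. The tweet counts, city labels, and function names below are hypothetical; in the study the language labels come from the GeoLID model.

```python
import math

def relative_frequencies(counts):
    """Convert per-language tweet counts into relative frequencies."""
    total = sum(counts.values())
    return {lang: n / total for lang, n in counts.items()}

def landscape_distance(p, q):
    """Cosine distance between two language distributions (dicts by language)."""
    languages = set(p) | set(q)
    dot = sum(p.get(lang, 0.0) * q.get(lang, 0.0) for lang in languages)
    norm_p = math.sqrt(sum(v * v for v in p.values()))
    norm_q = math.sqrt(sum(v * v for v in q.values()))
    return 1.0 - dot / (norm_p * norm_q)

# Invented counts for three hypothetical cities.
city_a = relative_frequencies({"eng": 900, "fra": 100})
city_b = relative_frequencies({"eng": 550, "fra": 350, "spa": 100})
city_c = relative_frequencies({"ind": 700, "jav": 300})

d_ab = landscape_distance(city_a, city_b)
d_ac = landscape_distance(city_a, city_c)
```

Two cities with the same mix of languages have a distance near 0, while cities with no languages in common, like `city_a` and `city_c` here, have a distance of 1.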

Examples of three pairs of cities are shown in Table 5, with the distance between the linguistic landscapes together with the break-down of most-used languages. The most different landscapes are between Glasgow (UK) and Jakarta (ID), at 0.819. English is the majority language in Glasgow (90%) but only a minority language in Jakarta (11.8%). Beyond this, Jakarta has a high use of Austronesian languages while Glasgow has very little. The next pair is Auckland (NZ) and Montreal (CA). In both cases, English is the most common, but in Montreal it constitutes only 54.7%, with French contributing 35%. Auckland is mostly characterized, however, by relatively small amounts of usage from languages like Spanish or Portuguese or Indonesian. And, finally, there is no distance at all between Oklahoma City (US) and Regina (CA), both with a high majority of English usage. These examples show three positions on the continuum between similar or different linguistic landscapes using this measure. Populations with different linguistic landscapes have, as a result, experienced different types of language contact. For instance, English in Jakarta has had a high amount of contact with Indonesian while, in Montreal, English has had a high amount of contact with French.

These three external features, the geographic distance, population contact, and language contact, are used as possible factors that could explain pairwise grammatical similarities between English-using cities around the world. We might expect, for instance, that closer cities are more similar linguistically (with certain thresholds to control for settler nations like New Zealand that are far from Europe). And, we might expect that dialects where English is mixing with French, for instance, would be more similar to other French-influenced dialects. The following section undertakes this analysis.