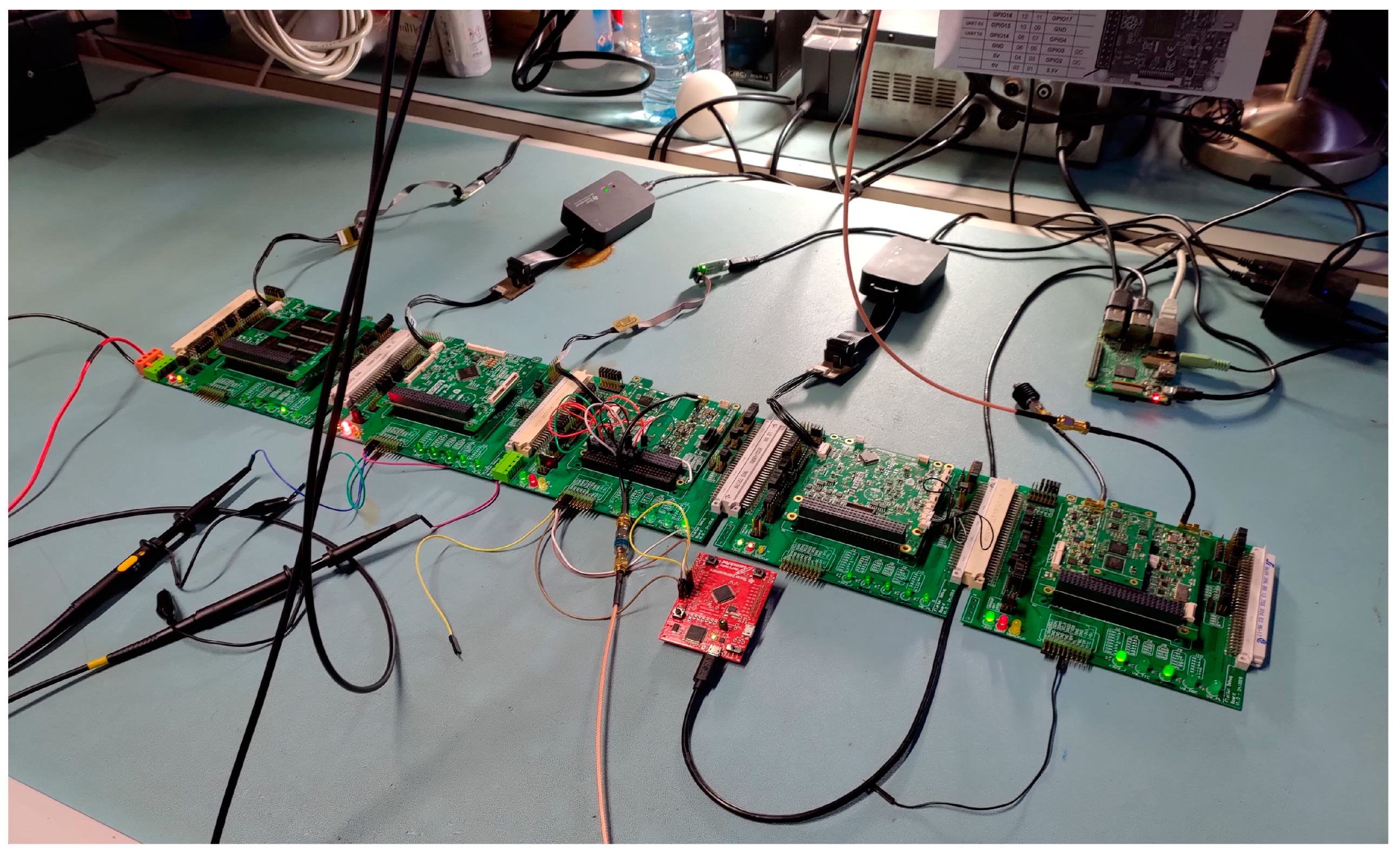

3.1. Flatsat Testing

The unit and integration tests are an ongoing effort, with no tangible results to be presented other than code coverage and test failure statistics. They are used more as a development tool than as a typical verification and validation tool. Nonetheless, the framework will be very useful for formal functional testing, during which the full functionality of the spacecraft will be tested.

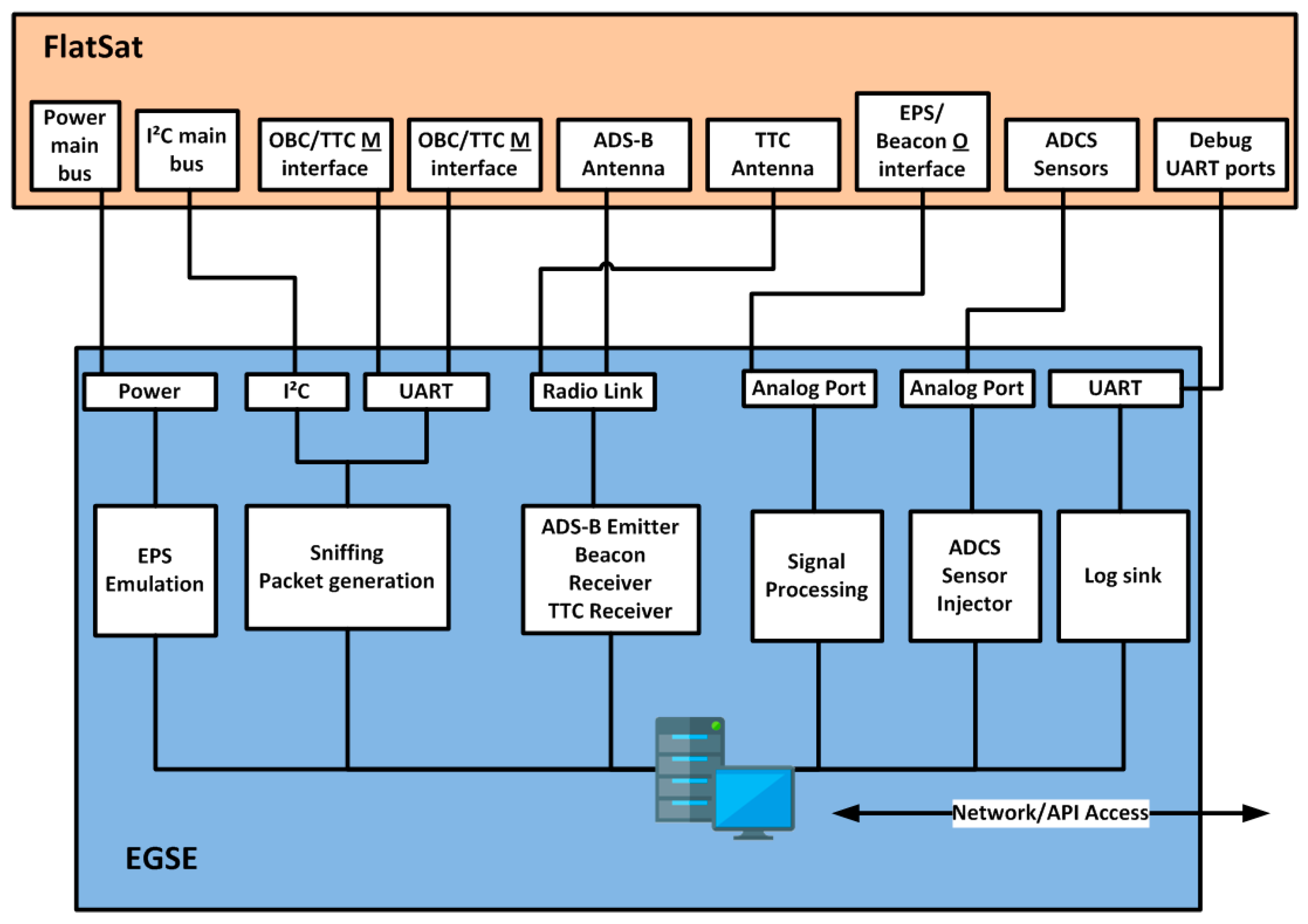

Regarding I²C interface characterization, some results can be presented. Due to the AX.25 maximum payload and upper layer protocols, an ISTNanosat Control Protocol (INCP) message has a maximum size of 245 bytes in the radio link. Since messages received from the ground can be forwarded from the OBC and COM to other subsystems, and vice versa, in order to avoid fragmentation, all interfaces and protocols need to support a payload of 245 bytes. As can be seen in Table 3, this is verified for every subsystem.

In ideal conditions, that is, when there is only one transmitter and one receiver, no message loss is expected. While characterizing the I²C interface, one test was to perform 100 pings, i.e., sending a message to a subsystem that triggers a one-message reply. Table 3 shows that no messages were lost under these conditions.

While a strict requirement regarding round-trip time (RTT) does not exist, a value above 25 ms is indicative of a problem. The RTTs for all subsystems fall below this soft limit, although there is a significant difference between them. For example, the EPS has an RTT that is almost four times larger than that of the TTC. This is explained by the combination of the different processing powers of the microprocessors and differences in the implementation of each subsystem.
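
The ping test and the 25 ms soft limit can be summarized with a few lines of Python. This is only a minimal sketch: the function name `summarize_pings`, the result fields, and the sample RTT values are illustrative assumptions and are not taken from the project's test framework or from Table 3.

```python
from statistics import mean

RTT_SOFT_LIMIT_MS = 25.0  # soft limit quoted in the text


def summarize_pings(rtts_ms, sent):
    """Summarize a ping test (hypothetical helper).

    rtts_ms: round-trip times, in ms, of the replies that were received.
    sent: number of ping messages sent.
    """
    received = len(rtts_ms)
    mean_rtt = mean(rtts_ms) if rtts_ms else None
    return {
        "sent": sent,
        "received": received,
        "lost": sent - received,
        "mean_rtt_ms": mean_rtt,
        # A mean RTT above the soft limit is treated as a warning sign.
        "within_soft_limit": mean_rtt is not None
        and mean_rtt <= RTT_SOFT_LIMIT_MS,
    }


# Made-up RTT samples for illustration only (not measured values).
example = summarize_pings([4.0, 5.0, 6.0], sent=3)
print(example)
```

In the campaign described here, `sent` would be 100 for each subsystem, and a subsystem passes when `lost` is zero and the RTT stays under the soft limit.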

Finally, a throughput test was performed. While receiving, all subsystems handled the 10 KB/s throughput (after considering protocol overheads) supported by the link. However, not all subsystems can produce and transmit messages fast enough to use the link’s full potential. In particular, there are systems such as the EPS and the TTC from which it was not possible to collect data leading to a result with statistical relevance.

Communications to and from the ground are ensured partly in the TTC and partly in the OBC. The result is a suite of tests to ensure that the TTC can send and receive data from the ground by confirming the transmission and reception of messages via the ground station; the ability to change parameters; and that the specified data that should be available through this interface is indeed available. As these tests have a go/no-go nature, there are no statistics concerning the protocol performance except for the number of requirements covered by these tests. Up to now, all the basic communication requirements are covered.

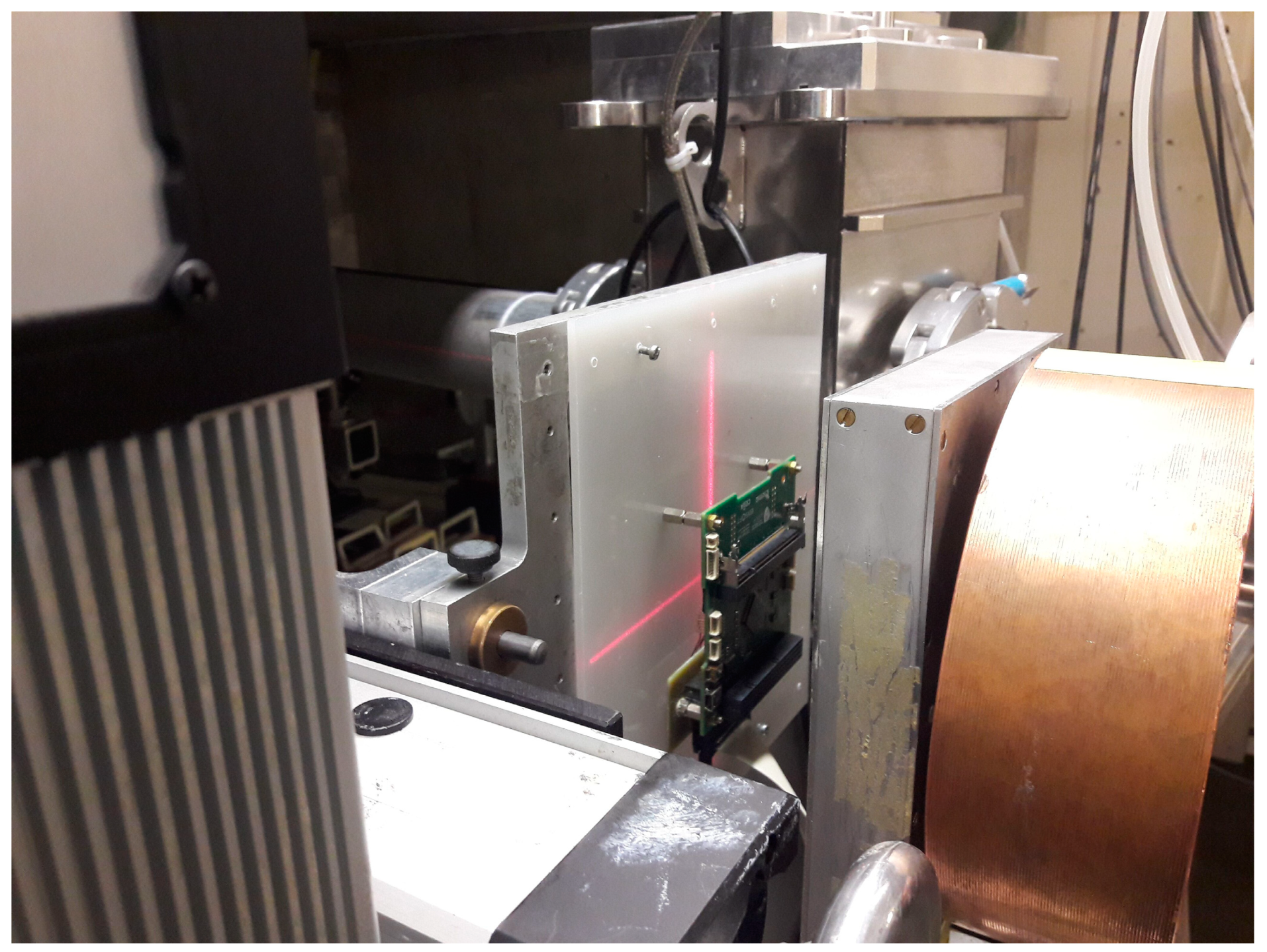

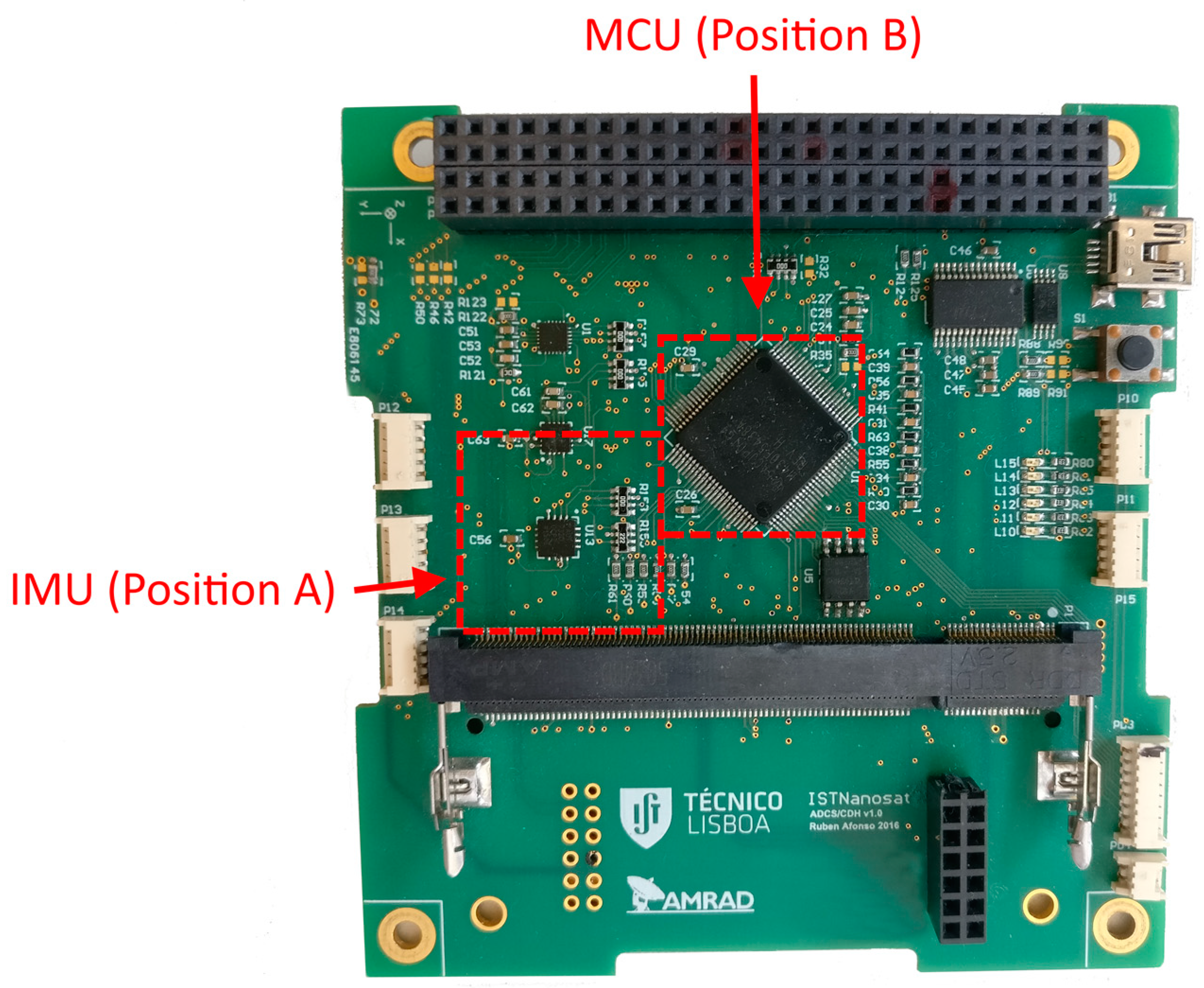

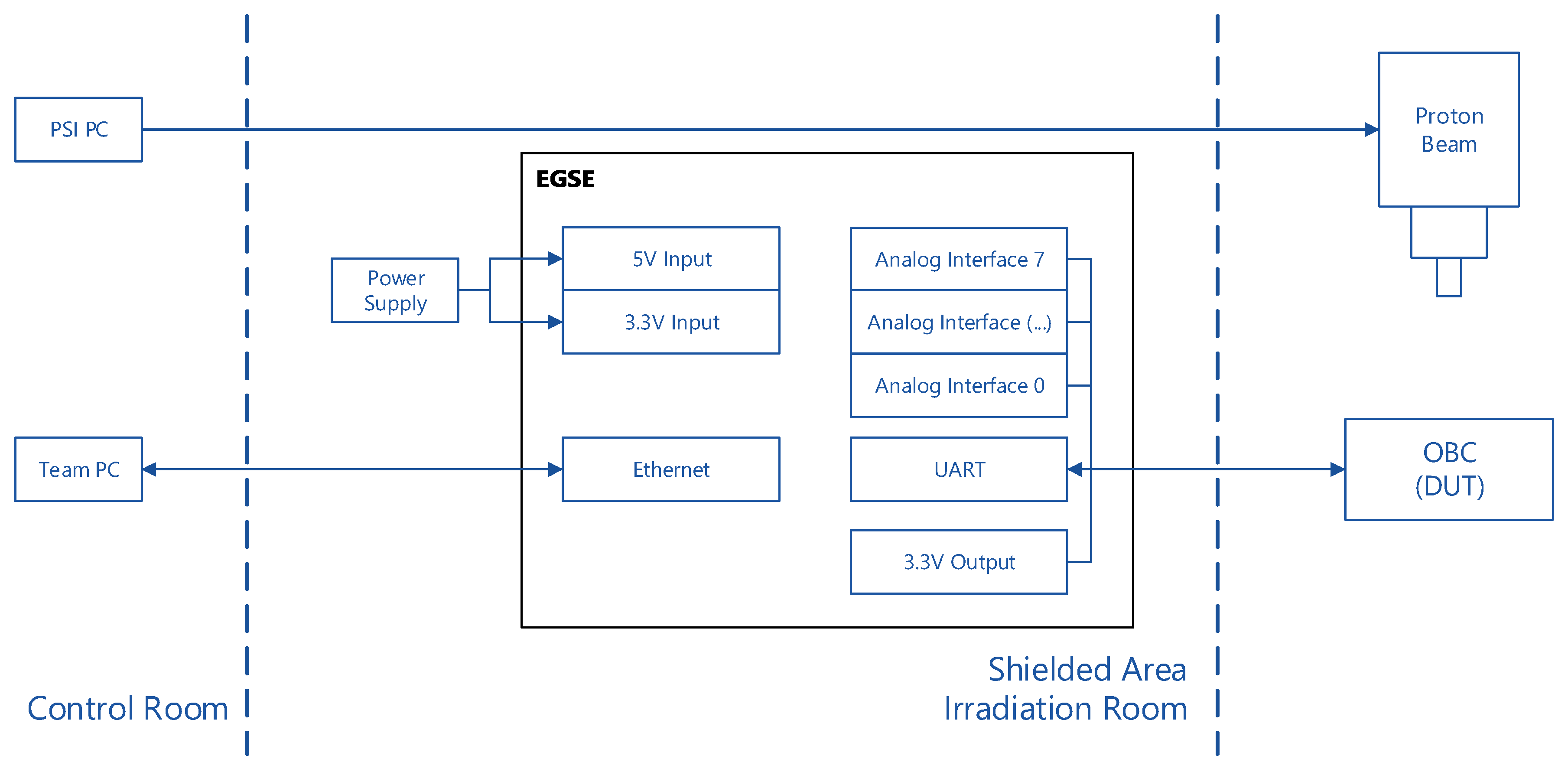

3.2. Ionizing Radiation Testing

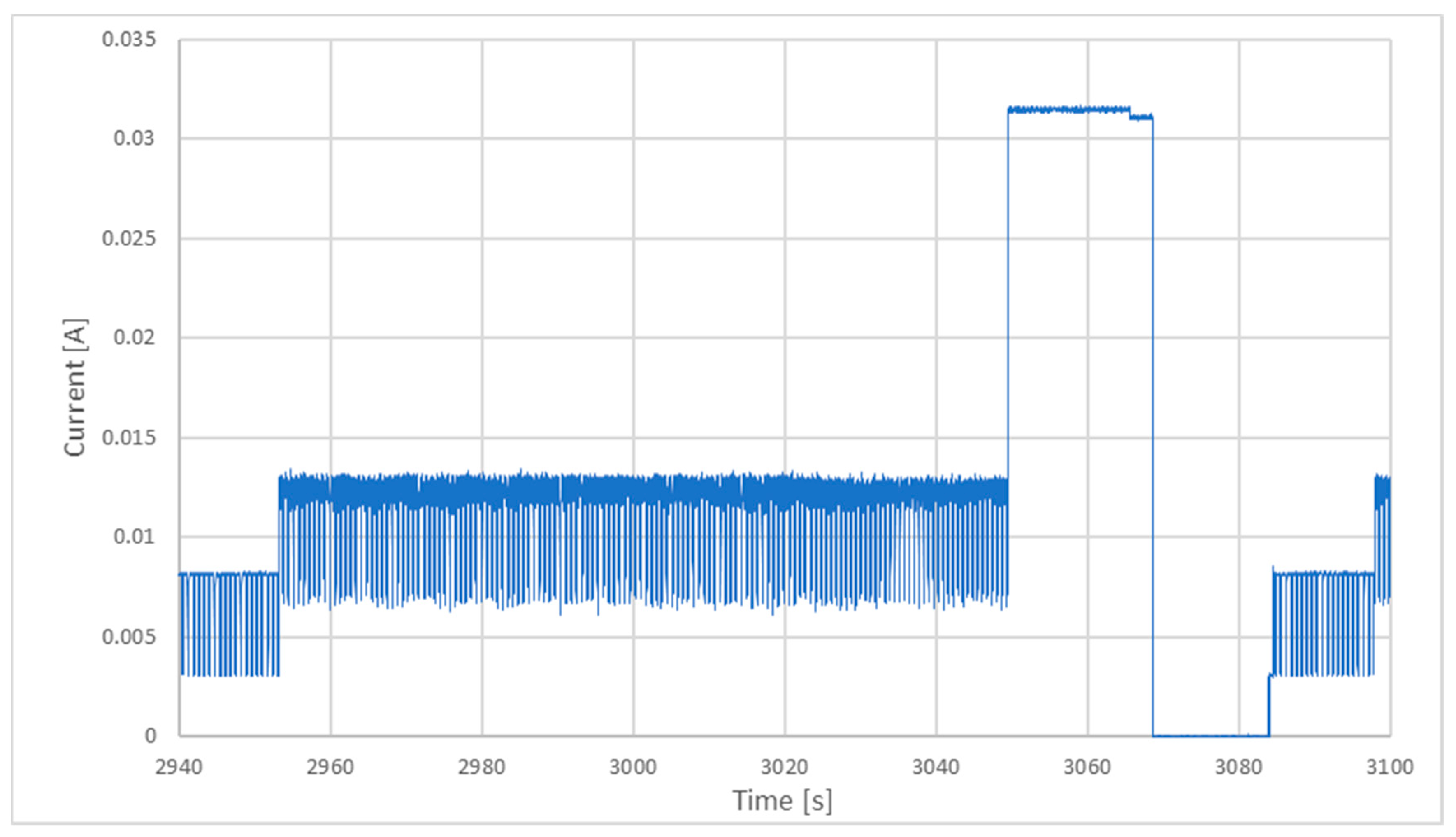

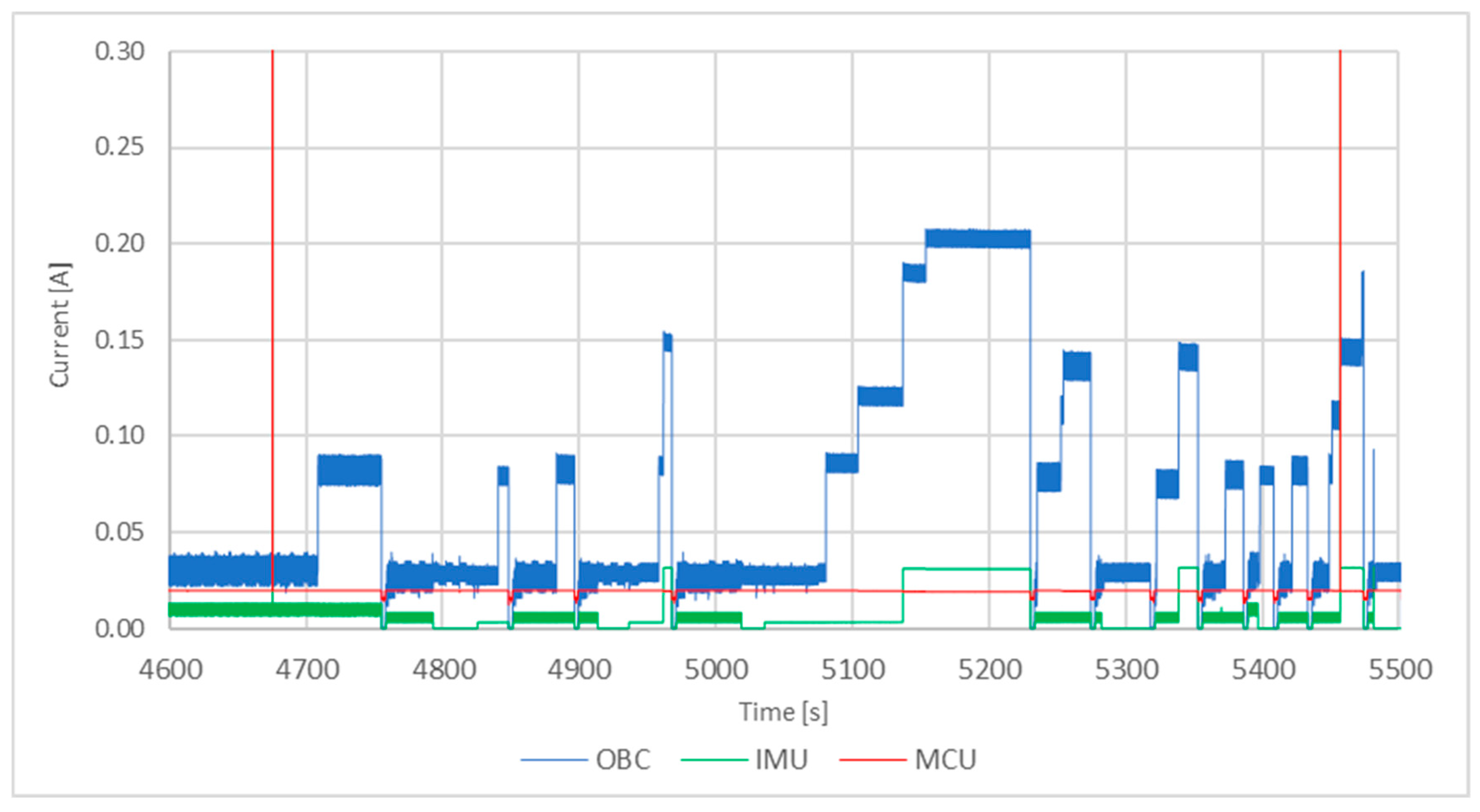

During RUN1, the observed anomalies were related to the sensor directly in the path of the beam, the IMU. The MCU detected four overcurrent events in its power rail, and each time, the software automatically disconnected it. The same anomaly occurred only once during RUN2 when the flux was increased. On RUN3, a small current draw increase on the IMU, from 8 mA to 13.4 mA, was detected at 2953 s (Figure 16). The MCU did not respond to this increase since it was still below the cutoff value. The current was maintained for 96 s until 3049 s, when the current reached 31 mA, which was a value above the threshold, and the MCU turned it off.

After restarting the sensor at 3084 s, it started working normally, but the current increased to the same level, 13 mA, after 14 s. In RUN6 (runs 4 and 5 were aborted due to a problem in the beam), during which the beam was not focused on the sensor, the current draw was 8 mA. It is probable that the incident radiation changes the behavior of the sensor, but it is not enough for it to stop working.

In RUN7, the EGSE stopped running after 60 s and could not be restarted from the control room. The beam was stopped, and the EGSE was power-cycled inside the chamber. The team's hypothesis was that, without the collimator to focus the beam, more protons were dispersed and probably interfered with the EGSE, blocking the MCU in a state that could not be recovered remotely.

In RUN8 (red spikes at 4675 s and 5457 s on Figure 17), the MCU’s current reached 315 mA, forcing the EGSE to cut off its power rails. There were situations in which the overall current increased, but the MCU current remained constant. The team’s interpretation was that some component on the board was affected, but the MCU was not directly affected. The MCU may lock up from a condition originated by a component or peripheral in an unexpected state.

At 5080 s, the OBC was left on to see what would happen if the current increased. The current increased four times but never reached the 300 mA threshold to force a shutdown. At 5352 s, when the flux increased, the time between failures decreased as expected. Finally, at 5449 s, with the maximum flux of 8 × 10−7 p/cm2/s, the same four steps happened, but faster, in only 12 s. After a reboot, and without the beam, the system recovered its normal operation. In total, more than 15 manual power cycles were executed to regain control of the system. In all of them, the MCU lost communication with the EGSE in less than 30 s.

The results show that, as predicted, the current draw from the individual components (sensors and MCU) greatly increases in the presence of high-energy particles, which build up charges on electronic devices when passing through them. The most affected devices are transistors (and any device that makes use of them), since their operation is based on changing charges. If the state of the transistor changes, it may allow current to flow, increasing the overall current consumption. At the levels used for the test, this effect is almost immediate, with the current level reaching the threshold in less than 30 s. The test showed that this effect does not permanently damage the sensors if appropriate action is taken and the system is powered off. Therefore, the test increased the confidence in the design's tolerance to radiation-related faults. The current overload detection mechanism was changed by implementing a time-averaged current draw threshold in addition to the already used instantaneous threshold. This way, the system can detect both a rapid current spike and a slow increase in current over time. Both methods were also implemented in the EPS subsystem.
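
The combination of an instantaneous threshold and a time-averaged threshold can be sketched as follows. This is an illustrative Python model, not the flight software: the class name `OvercurrentDetector`, the moving-average window, and the threshold values are assumptions chosen for the example. Only the sample currents (8 mA, 13.4 mA, 31 mA) echo the IMU readings reported for RUN3.

```python
from collections import deque


class OvercurrentDetector:
    """Trips on an instantaneous limit or on a moving-average limit.

    Hypothetical model: limits and window length are assumptions.
    """

    def __init__(self, instant_limit_ma, average_limit_ma, window):
        self.instant_limit_ma = instant_limit_ma
        self.average_limit_ma = average_limit_ma
        self.samples = deque(maxlen=window)  # keeps the last `window` samples

    def update(self, current_ma):
        """Add one current sample; return the trip reason or None."""
        self.samples.append(current_ma)
        # Fast path: a single sample above the hard limit (rapid spike).
        if current_ma > self.instant_limit_ma:
            return "instantaneous"
        # Slow path: the average over a full window exceeds a lower limit.
        window_full = len(self.samples) == self.samples.maxlen
        if window_full:
            average = sum(self.samples) / len(self.samples)
            if average > self.average_limit_ma:
                return "time-averaged"
        return None


# Assumed limits: 30 mA instantaneous, 10 mA averaged over 4 samples.
detector = OvercurrentDetector(30.0, 10.0, window=4)
for sample_ma in [8.0, 8.0, 8.0, 8.0, 13.4, 13.4]:
    reason = detector.update(sample_ma)
    print(sample_ma, reason)
```

With these assumed limits, a sustained rise from 8 mA to 13.4 mA trips the time-averaged check after two elevated samples, whereas an instantaneous-only check would stay silent until the current crossed 30 mA.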

As a direct consequence of the test, an external watchdog to the OBC MCU was added in order to force a restart in case of a latch-up. The software configuration was also changed to allow easily turning the internal Watchdog Timer (WDT) on/off during compilation using flags. These design changes are not foreseen to be tested again under the same environmental conditions unless a similar opportunity is offered. However, both changes are going to be tested as part of the functional test campaign, which will be run at ambient temperature and pressure. It is important to note that the addition of the external watchdog would likely have required the development of new flight hardware had the test only occurred after PFM integration.

3.3. Battery Qualification Campaign

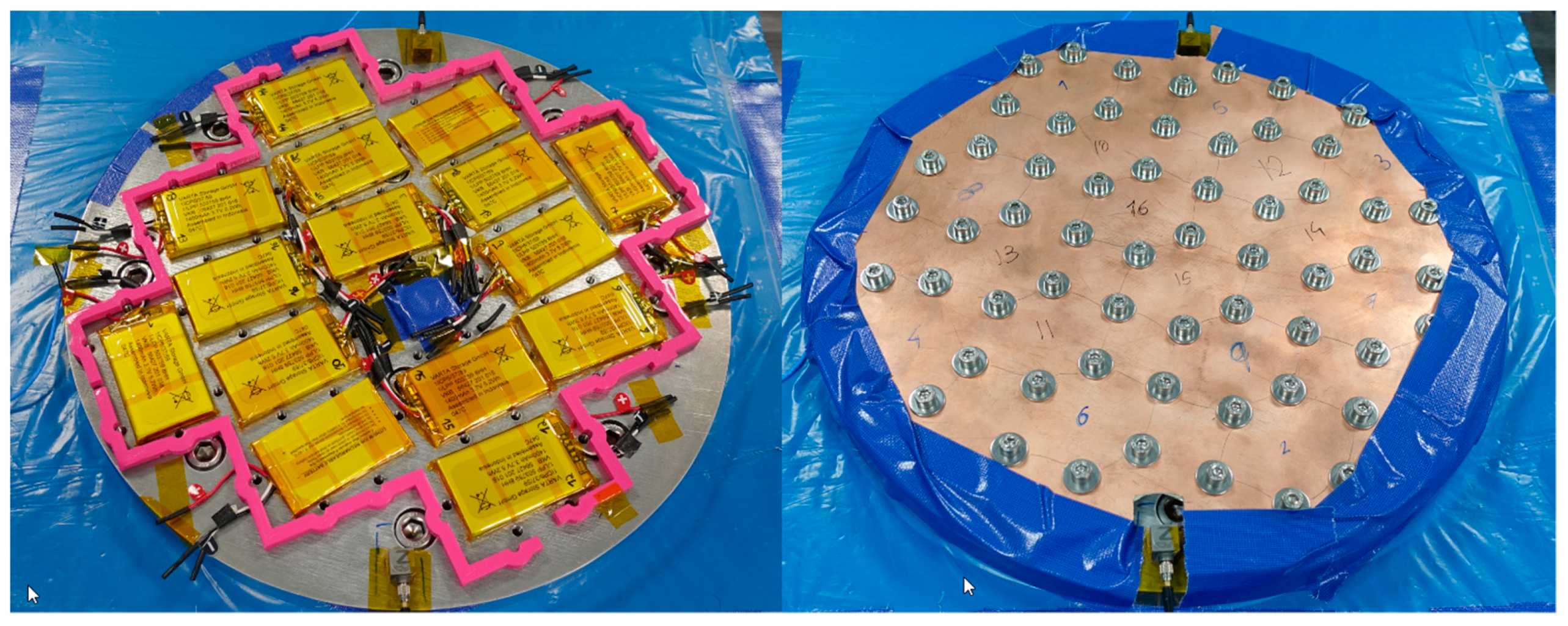

The physical and electrochemical characterization of the cells demonstrated that all 16 cells to undergo acceptance testing were compliant with the manufacturer datasheet. Cell discharge profiles were very similar for all cells.

After verifying their correct characteristics, all 16 cells endured the vibration test with no incidents. Each cell was vibrated in three orthogonal directions with a root mean square (RMS) acceleration of 9.65 g (the test logs demonstrate this but were excluded from this article due to size). Between each run, the cells were checked for both internal resistance and open circuit voltage. All health checks showed that the cells were not affected by the vibration tests.

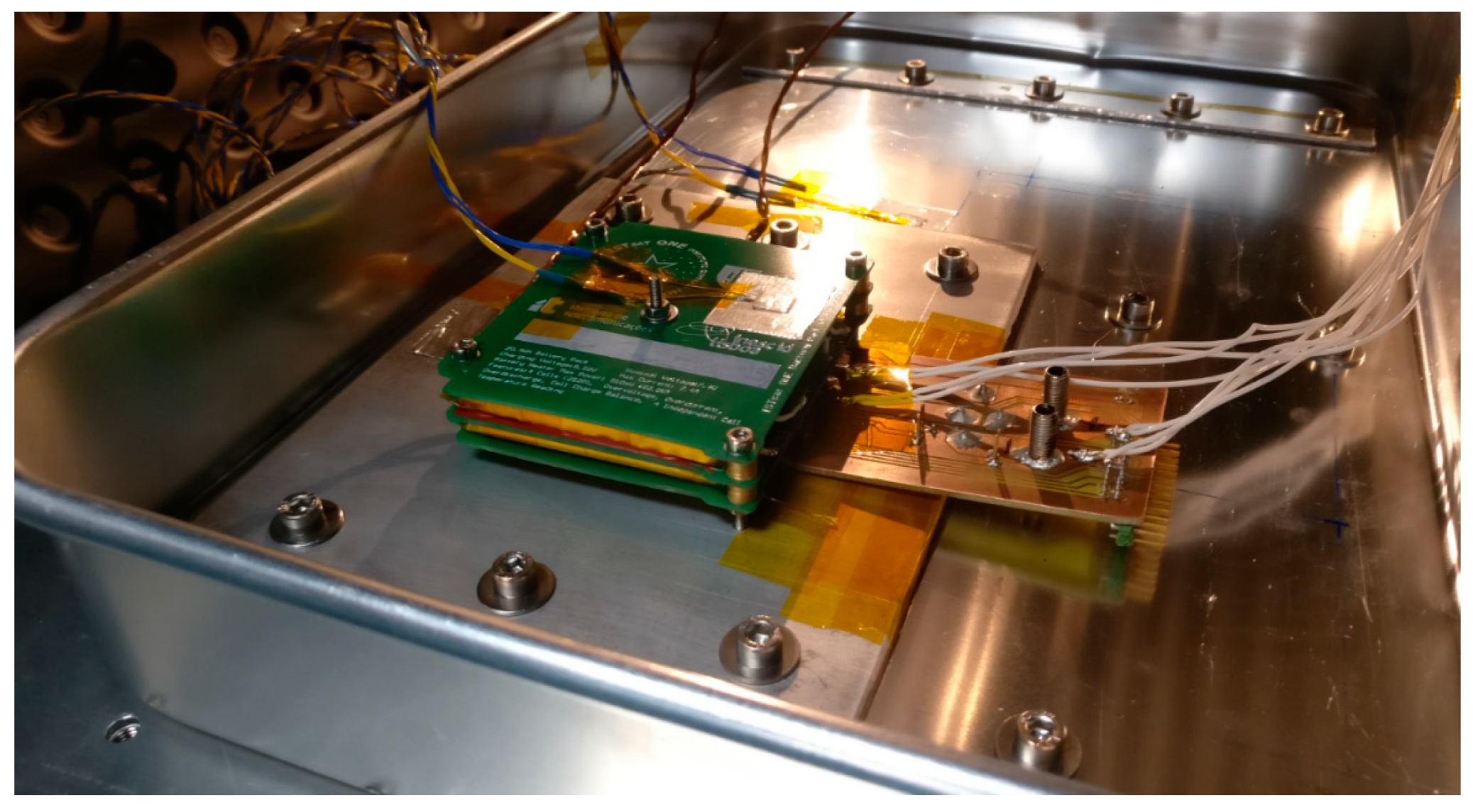

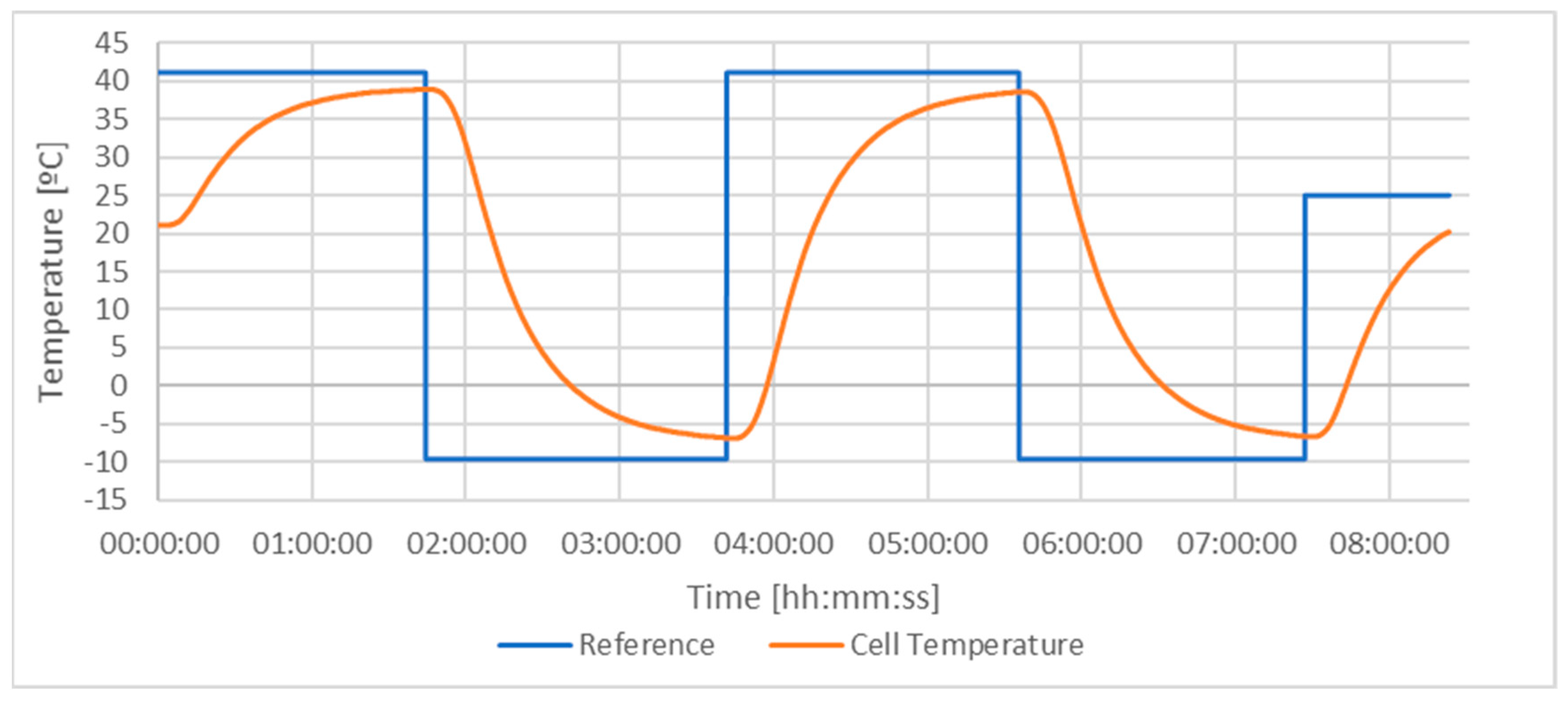

Regarding the TVAC test, all cells faced temperatures from roughly −9 °C to +39 °C under vacuum conditions (as seen in Figure 18) without any incidents. The cells were submitted to, and survived, more than 10 h at a pressure of less than 10⁻⁶ mbar. The tests did not harm the cells, as demonstrated by health check tests.

Reassessment of the cells’ physical and electrochemical characteristics demonstrated that all survived the tests. The mean relative discrepancy between pre-test and post-test internal resistance was +1.27%, with a standard deviation of 2.01%. The mean relative discrepancy between pre-test and post-test discharge capacity was +1.03%, with a standard deviation of 2.12%. In both cases, the deviation was positive, which is counterintuitive—capacity should decrease with every discharge cycle, particularly under stress conditions. The team attributes this to measurement error due to different environmental conditions between pre-test and post-test characterization, as cell internal resistance and capacity vary significantly with ambient temperature. Therefore, the team concluded that the cells were not damaged during acceptance testing.
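
As an illustration of how such statistics can be obtained, the sketch below computes the per-cell relative discrepancy and its mean and standard deviation. The function names and the input values are made up for the example, and the use of the sample standard deviation is an assumption, since the text does not state which estimator was used.

```python
from statistics import mean, stdev


def relative_discrepancies(pre, post):
    """Per-cell relative change (post - pre) / pre, in percent."""
    return [100.0 * (b - a) / a for a, b in zip(pre, post)]


def summarize(pre, post):
    """Mean and sample standard deviation of the relative changes."""
    changes = relative_discrepancies(pre, post)
    return mean(changes), stdev(changes)


# Made-up pre-test and post-test values for two cells (illustration only).
pre_test = [100.0, 200.0]
post_test = [101.0, 206.0]
mean_pct, std_pct = summarize(pre_test, post_test)
print(f"mean = {mean_pct:+.2f} %, std = {std_pct:.2f} %")
```

A positive mean that is smaller than the standard deviation, as reported for both internal resistance and capacity, is consistent with the measurement-scatter explanation given by the team.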

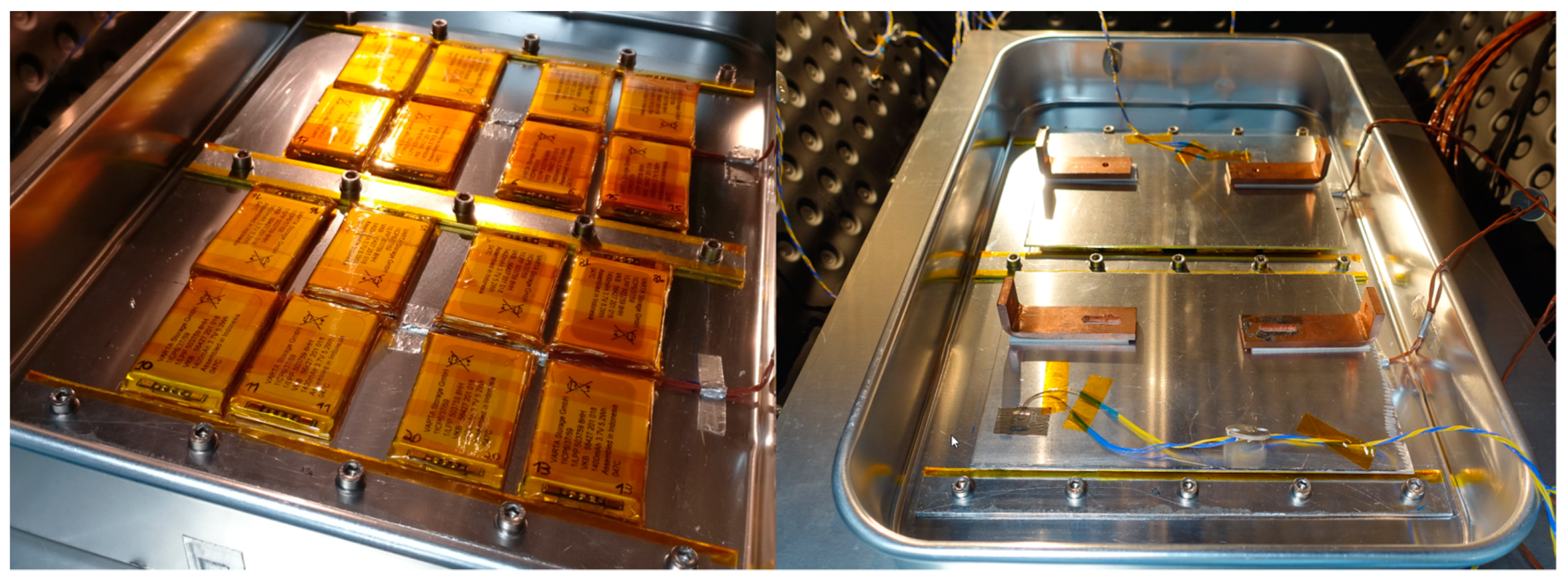

The qualification battery model was assembled, and characterization tests were performed. The battery tester was configured to discharge at a current of 800 mA (0.3 C) and let the battery discharge until the voltage dropped to 6.2 V or the current dropped below 700 mA. The battery pack was charged up to 8.3 V before starting the procedure. The total discharge capacity under these conditions was 2576 mAh.

Having confirmed the nominal operation of the battery pack, electrical stress tests were performed. The first was the undervoltage test, which was also performed at an increased discharge rate (1.5 A, or 0.6 C). The cell protection circuit cut power at a battery voltage of 4.57 V, with the first cell engaging the protection circuit at 2.285 V (the protection circuit acts at the individual cell level; the total battery voltage was 4.57 V due to the series association of two cells at roughly 2.28 V). The manufacturer datasheet states 2.3 V as the minimum voltage below which the protection circuit is engaged, which means that this test yielded slightly worse results than expected; however, they were well within the established 5% tolerance. Under these conditions, the total discharge capacity was 1634 mAh, which was 37% below the capacity under nominal conditions. This performance penalty was expected and is in line with the manufacturer datasheet.

Then, the battery was charged at a rate of 0.3 C, and the overcharge protection circuit was tested. The system engaged at a battery voltage of 8.572 V (about 4.286 V per cell, which is very close to the manufacturer specification of 4.275 V for overcharge protection engagement), thus proving that the battery was adequately protected from overcharge and undercharge.
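
The margins quoted for the two protection tests can be checked with simple arithmetic. The short Python sketch below uses only the figures given in the text; the helper name `relative_deviation_pct` is hypothetical, and applying the 5% tolerance to the overcharge threshold as well is an assumption, since the text states it only for the undervoltage case.

```python
def relative_deviation_pct(measured, specified):
    """Absolute deviation from the specified value, in percent."""
    return 100.0 * abs(measured - specified) / specified


TOLERANCE_PCT = 5.0  # tolerance stated for the undervoltage threshold

# Undervoltage: first cell tripped at 2.285 V, datasheet value 2.3 V.
undervoltage_dev = relative_deviation_pct(2.285, 2.3)

# Overcharge: 8.572 V at pack level, two cells in series, datasheet 4.275 V.
overcharge_dev = relative_deviation_pct(8.572 / 2, 4.275)

# Capacity penalty at 1.5 A (1634 mAh) versus 800 mA (2576 mAh).
capacity_loss_pct = 100.0 * (2576 - 1634) / 2576

print(f"undervoltage deviation: {undervoltage_dev:.2f} %")
print(f"overcharge deviation:   {overcharge_dev:.2f} %")
print(f"capacity penalty:       {capacity_loss_pct:.1f} %")
```

Both threshold deviations come out well under 1%, and the capacity penalty rounds to the 37% quoted above.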

The final electrical abuse test was an external short-circuit test. The positive and negative pads were shorted, and the protection circuit engaged after 5 ms when the peak current was 8.44 A. The test showed that even in the event of an external short circuit (for instance, a piece of debris shorting the battery terminals) or a software glitch that would allow the EPS to overcharge or undercharge the battery, the passive battery protection circuit is capable of preventing any situation that could lead to a defective lithium polymer cell.

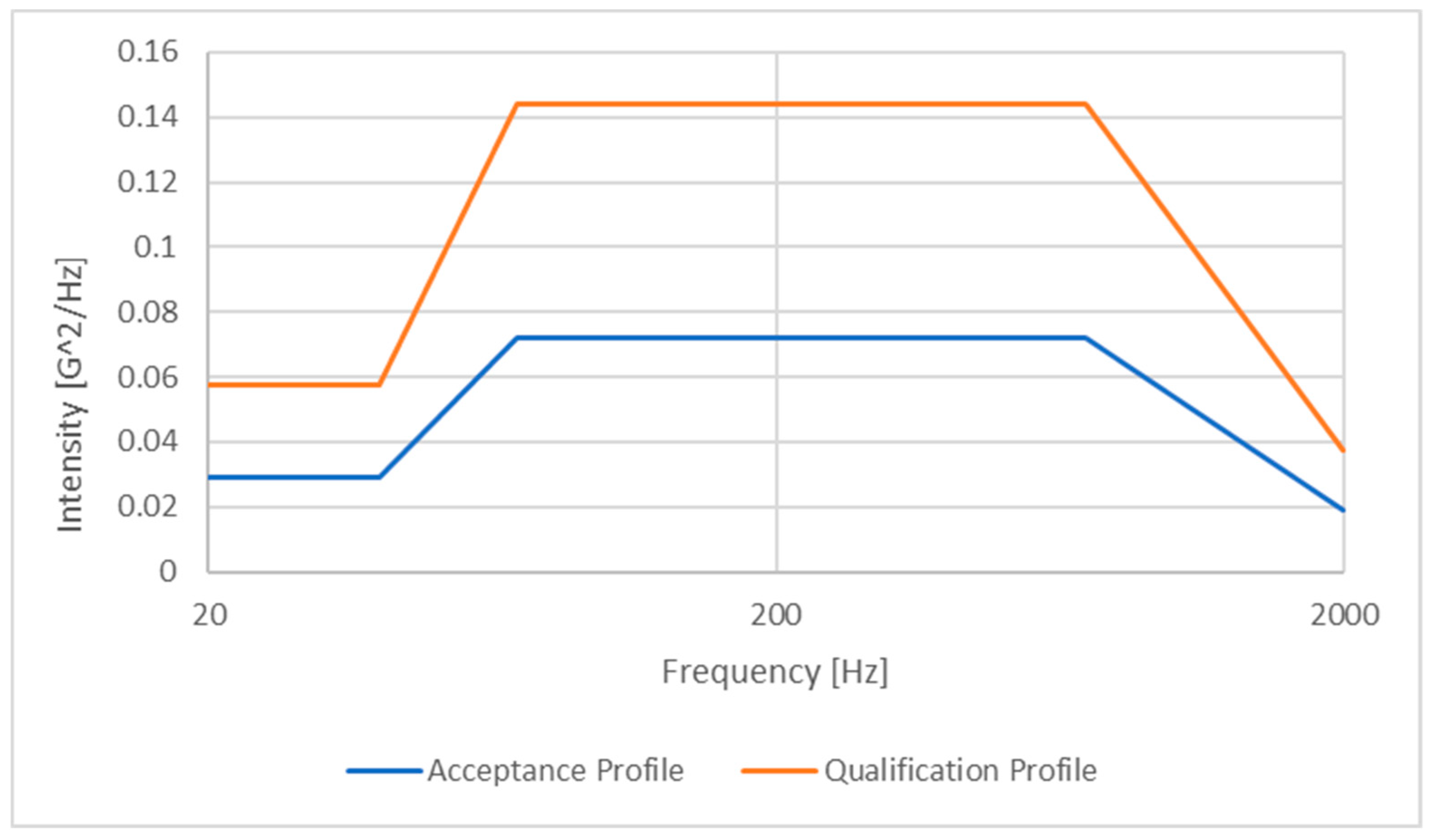

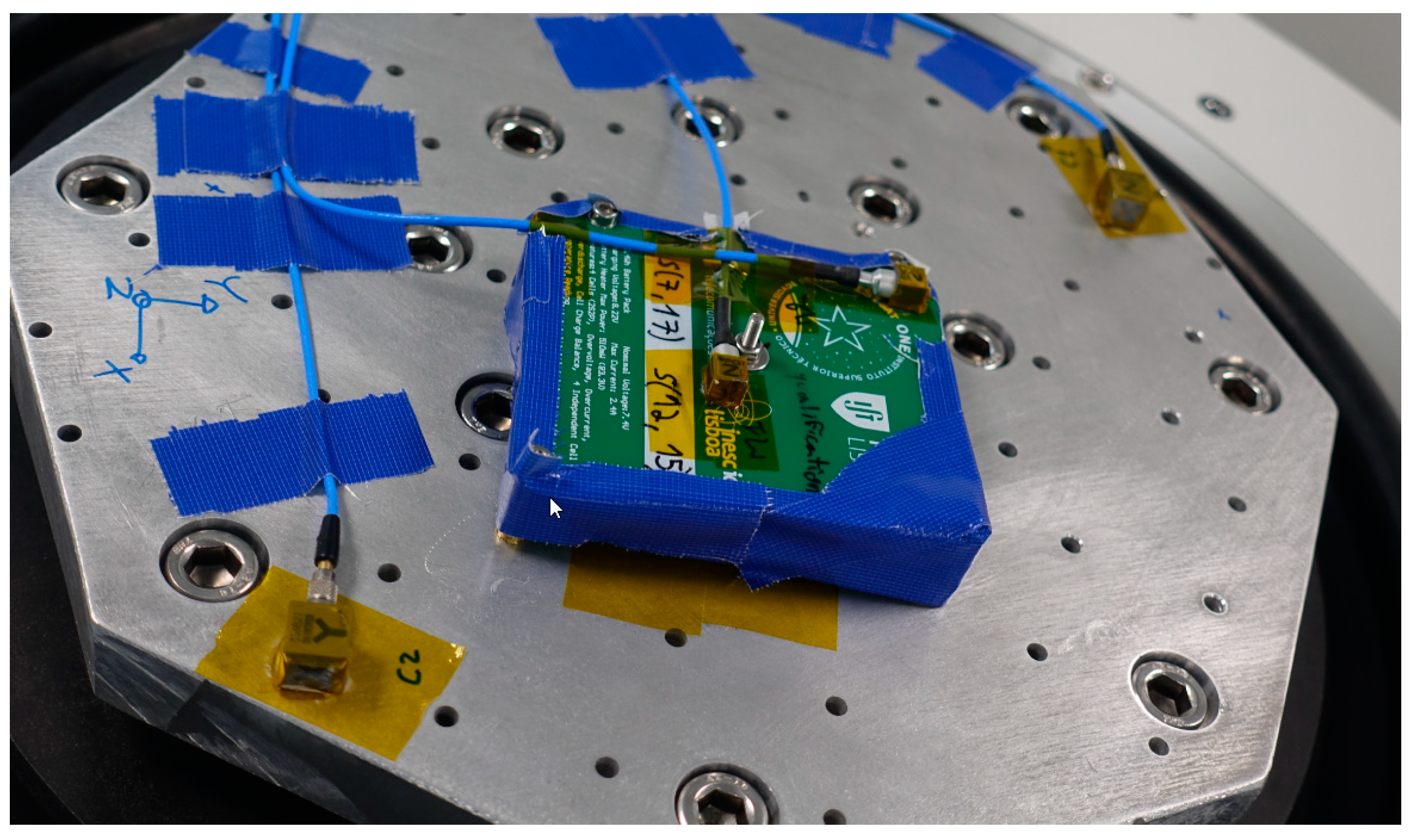

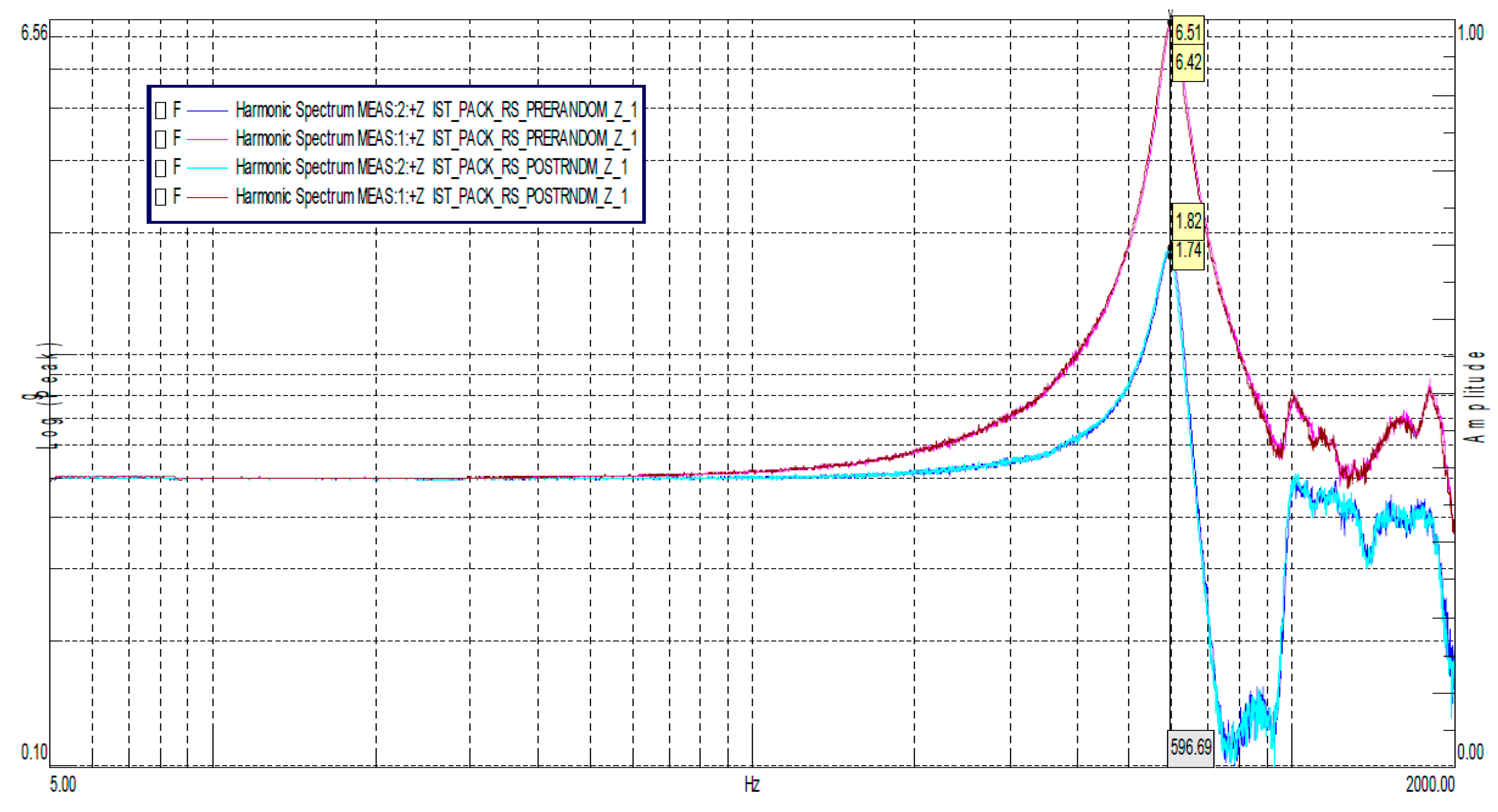

The team returned to the CSF in order to perform vibration and TVAC testing. The battery pack endured the expected random vibration profile (with a 13.65 g RMS acceleration) on all axes for a total duration of 5 min each. Before and after each random vibration test run, a low-level sine sweep was performed in order to assess the frequency response of the battery. In all cases, the frequency and amplitude shift in both accelerometer measurements were below 5%, demonstrating the adequate structural design of the battery pack. An example can be seen in Figure 19, which shows that the frequency response curves for the accelerometer near the edge (blue lines) and near the center (pink lines) before and after the tests are nearly overlapping, indicating that no structural degradation took place. Electrical characterization before and after the test also confirmed that the battery had suffered no performance degradation.
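
One simple way to quantify the shift between a pre-test and a post-test sine sweep is to compare the frequency and amplitude of the dominant resonance peak. The sketch below does this in Python. It assumes a peak-based comparison, which the text does not confirm as the method actually used, and the function names and sweep data are invented for illustration.

```python
def peak(freqs_hz, amps_g):
    """Return (frequency, amplitude) of the largest response in a sweep."""
    i = max(range(len(amps_g)), key=amps_g.__getitem__)
    return freqs_hz[i], amps_g[i]


def sweep_shift_pct(pre, post):
    """Relative shift (%) of peak frequency and peak amplitude."""
    f0, a0 = peak(*pre)
    f1, a1 = peak(*post)
    return 100.0 * abs(f1 - f0) / f0, 100.0 * abs(a1 - a0) / a0


def passes(pre, post, limit_pct=5.0):
    """True if both shifts stay below the limit (5% in the text)."""
    freq_shift, amp_shift = sweep_shift_pct(pre, post)
    return freq_shift < limit_pct and amp_shift < limit_pct


# Made-up sweep data: (frequencies in Hz, response amplitudes in g).
freqs = [100.0, 200.0, 300.0, 400.0]
pre_sweep = (freqs, [1.0, 4.0, 2.0, 1.0])
post_sweep = (freqs, [1.0, 3.9, 2.0, 1.0])
print(sweep_shift_pct(pre_sweep, post_sweep), passes(pre_sweep, post_sweep))
```

A structure that had loosened or cracked during the random vibration run would typically show its resonance moving to a different frequency, which this check would flag as a frequency shift above the limit.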

After the random vibration test, the TVAC test took place. The initial OCV was 8.111 V, and the goal was to discharge at 0.8 C until 70% depth of discharge (DoD) corresponding to an expected 1960 mAh discharge capacity. At 60 °C, the time elapsed was 1 h 46 m 21 s, reaching a final voltage of 6.382 V and a total discharge capacity of 2021 mAh. This increase is expected due to the reduction of the cells’ internal resistance at higher temperatures.

Charging took place at 40 °C and a 0.5 C charge rate. The initial voltage before charging was 7.491 V, the final voltage was 8.246 V, and the maximum charging current was 0.727 A. After 20 min, the voltage settled to 8.158 V.

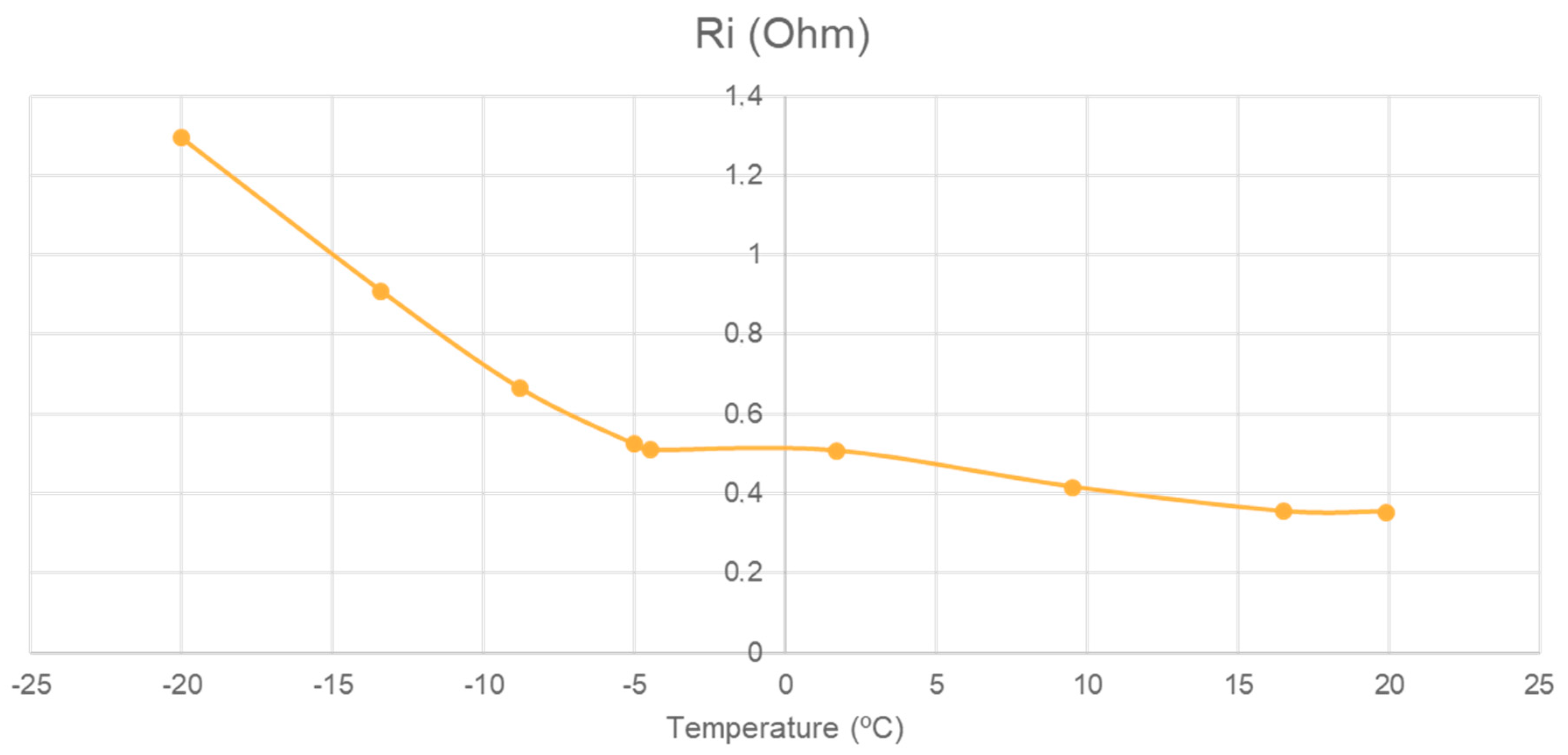

Then, the chamber was set for −20 °C and dwelled at this temperature for 2 h. The first attempt at discharging the battery was aborted, because the voltage drop under the nominal load was excessive at 700 mV, resulting in a 1.2 V decrease in less than one minute. Due to this unexpected behavior, the team decided to raise the temperature to −5 °C in order to understand if the damage was permanent or if it was induced by the low temperature. While the chamber temperature increased, the OCV and internal resistance were monitored in order to assess whether it was safe to perform another discharge. As shown in Figure 20, as the temperature increases, the internal resistance decreases. This test demonstrated that despite the manufacturer claiming operability at −20 °C, the internal resistance at this temperature is too high, and therefore the discharge capacity is too low.

The discharge test took place at −5 °C. As expected, the performance degraded when compared to the 60 °C discharge profile, with a total discharge capacity of 1503 mAh, a final voltage of 6.498 V, and a total time of 1 h 25 m 40 s.

The final charging of the battery took place at 0 °C, resulting in a charging capacity of only 1020 mAh, which was expected as the charge rate was higher in order to reduce the charging time (1 h 46 m 33 s).

Physical and electrochemical characterization of the battery pack at the end of the campaign showed that the overall performance of the battery pack degraded by 99 mAh—specifically to 2477 mAh, a 3.5% capacity loss, which is within the error margin. The internal resistance at 100% state-of-charge was the same, 152 mΩ. This means that the battery recovered from the thermal vacuum incident that occurred during the discharging process at negative temperatures, and that the qualification campaign, which featured abuse testing at levels quite above expected nominal conditions, did not degrade the battery. Overall, the conclusion was that the battery is safe and its protection circuitry is adequate, but that the team needed to reassess the mission profiles and the battery heater duty cycle in order to prevent discharging at negative temperatures.

3.4. Electromagnetic Compatibility Testing

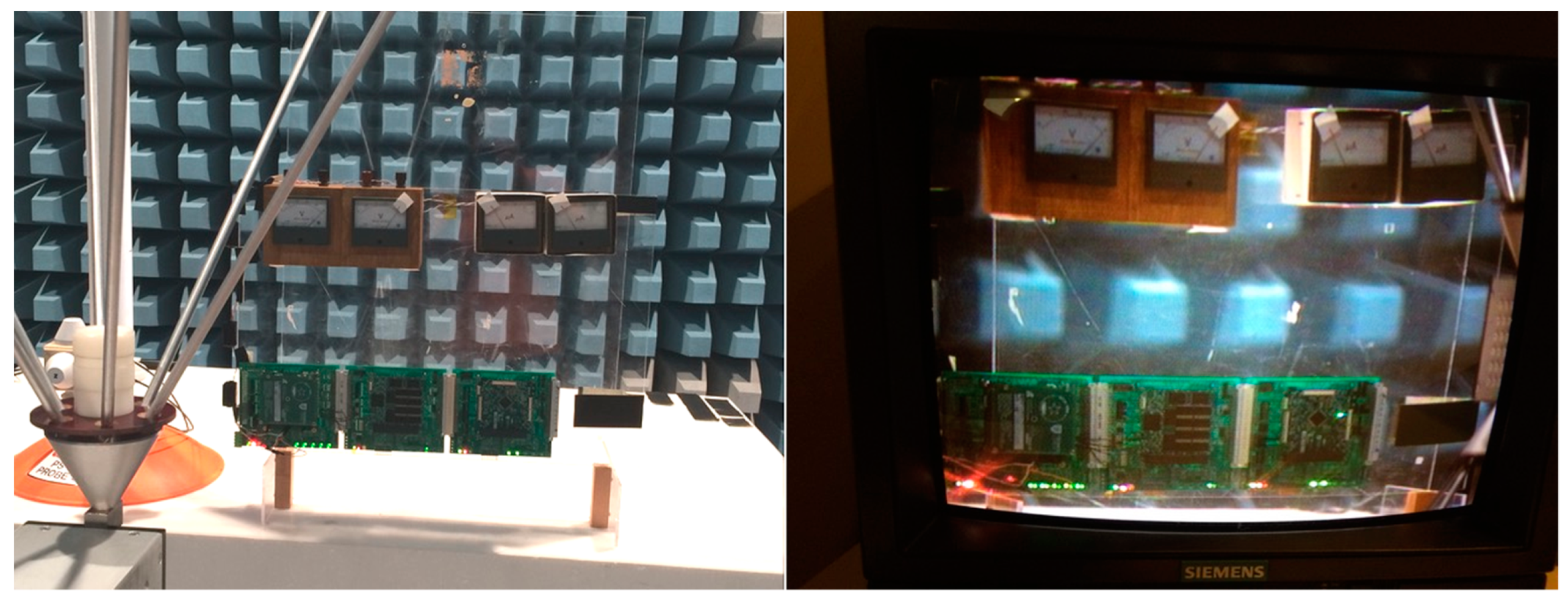

The first two immunity testing runs were executed without problems, but the third revealed a malfunction of the OBC, which occurred at 63 MHz with an illuminating field of 10 V/m. A quick diagnostic revealed that the RF radiation was being coupled into the unprotected cables connected to the voltmeters and ammeters and was consequently affecting the quality of the subsystems’ power lines. Due to this problem, the test procedure was interrupted so that the test setup could be improved. The cables were twisted more tightly and relocated on the supporting panel, and ferrite cores were added over the cables. Following this rearrangement of the panel wiring, run 3 was repeated. On all runs, the DUT did not malfunction, i.e., the current and voltage levels were constant, and the software ran with no issue. Three more runs were carried out with the boards in the stacked configuration. As before, the results were positive, without any change in the behavior of the DUT over the whole frequency range used.

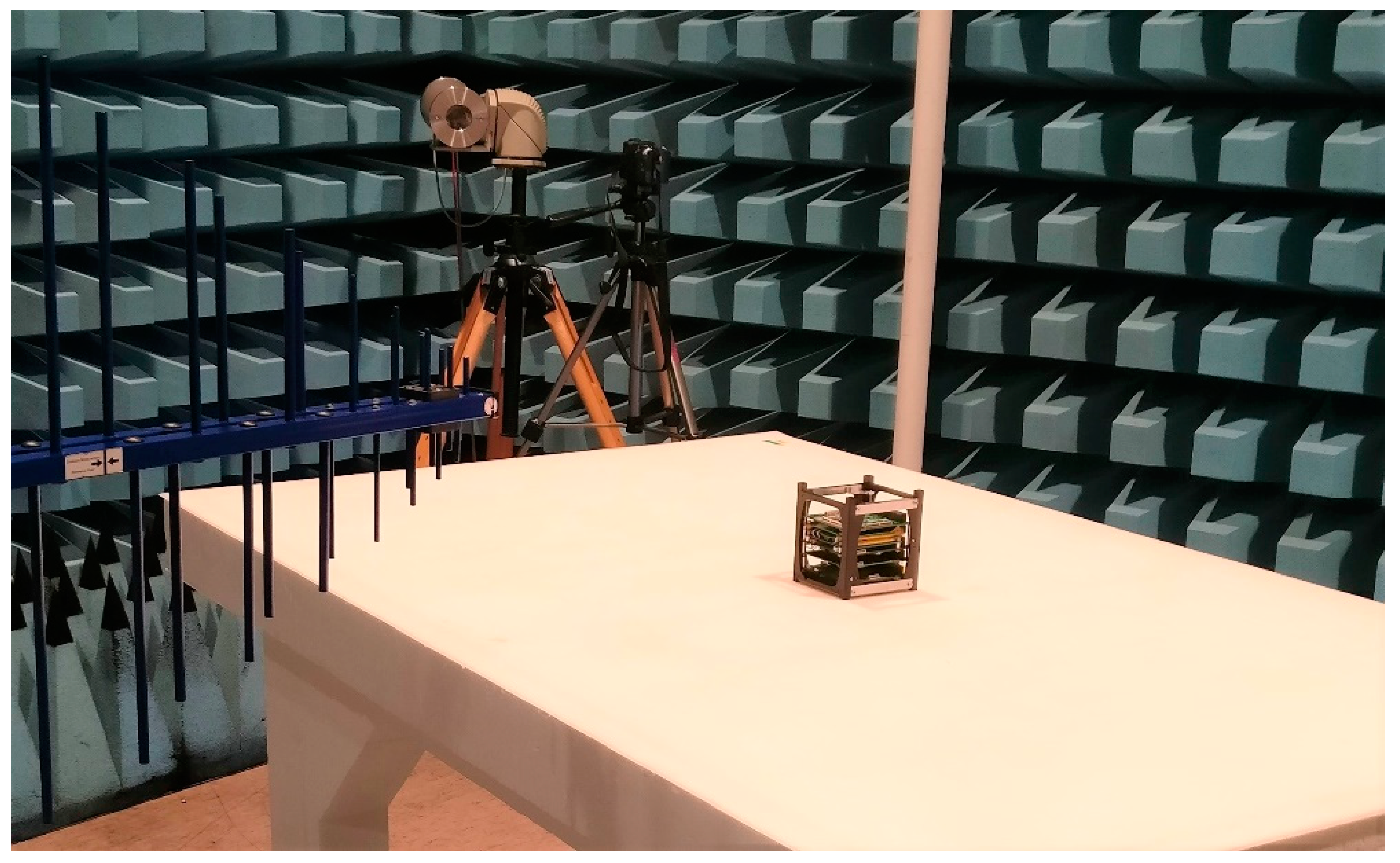

Regarding emission testing, in the first phase only the EPS, OBC, and COM were assembled in the structure. The intention was to check the amount of radiation generated essentially by the processors. Next, the payload was added, and the result compared with the previous values. A total of 12 runs were performed, six for each polarization, and covering the six faces of the S/C. The result of this first phase was very encouraging, as the maximum detected radiation peaks, mainly in the 80 MHz zone and corresponding essentially to the activity of the processors running an external memory test (activity on the processor external bus), were always below the limit set by the standard (receiving antenna 1 m from DUT), as seen in Figure 21. The contribution of the ADS-B board to the overall radio noise of the satellite was not significant.

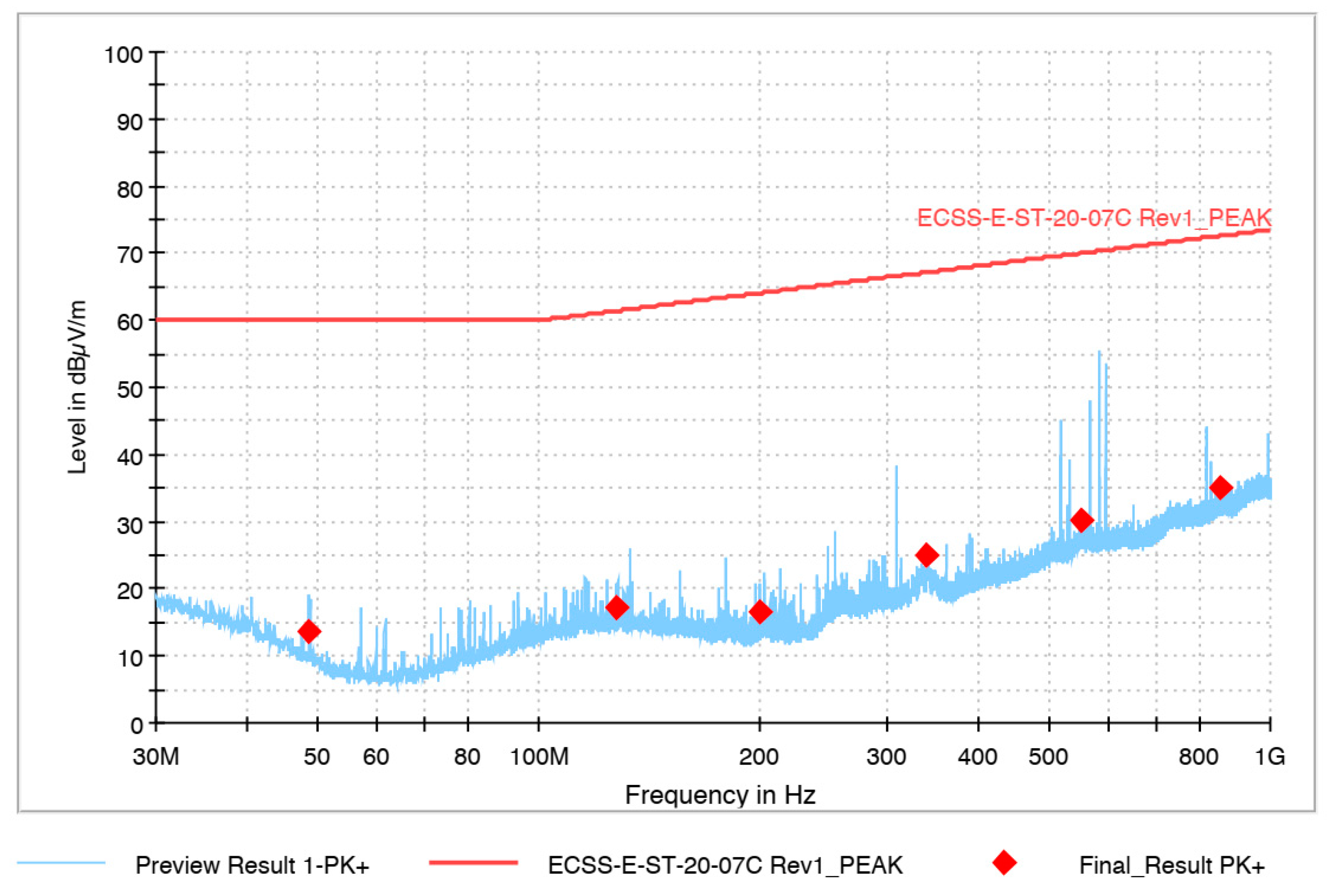

In the second phase, now with the satellite equipped with its five subsystems and the RF output of the TTC terminated in a 40 dB attenuator, the radiation emitted by the system was re-analyzed across the entire frequency band. In this test, the contribution of the TTC to the satellite-radiated field was evident, with some peaks at certain frequencies (harmonics of the emission frequency and signals generated internally), although the maximum values were still 20 dB below the limit defined in the standard (as seen in Figure 22). The TTC continuously emitted a test signal and, although its output was terminated, a small portion of that signal was still radiated outwards by the plugs and cables connected to the load.