Enhanced Test Data Management in Spacecraft Ground Testing: A Practical Approach for Centralized Storage and Automated Processing

Abstract

1. Introduction

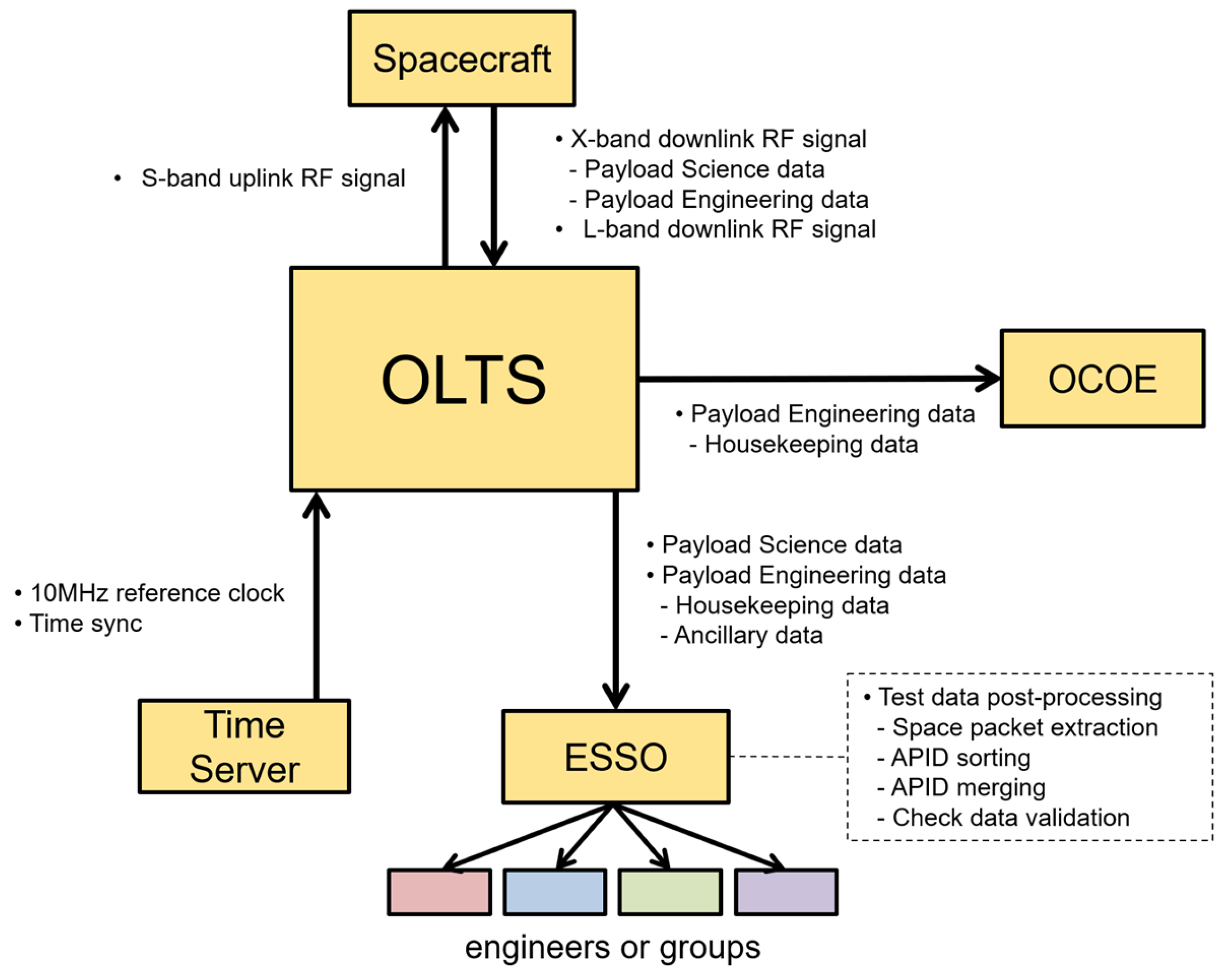

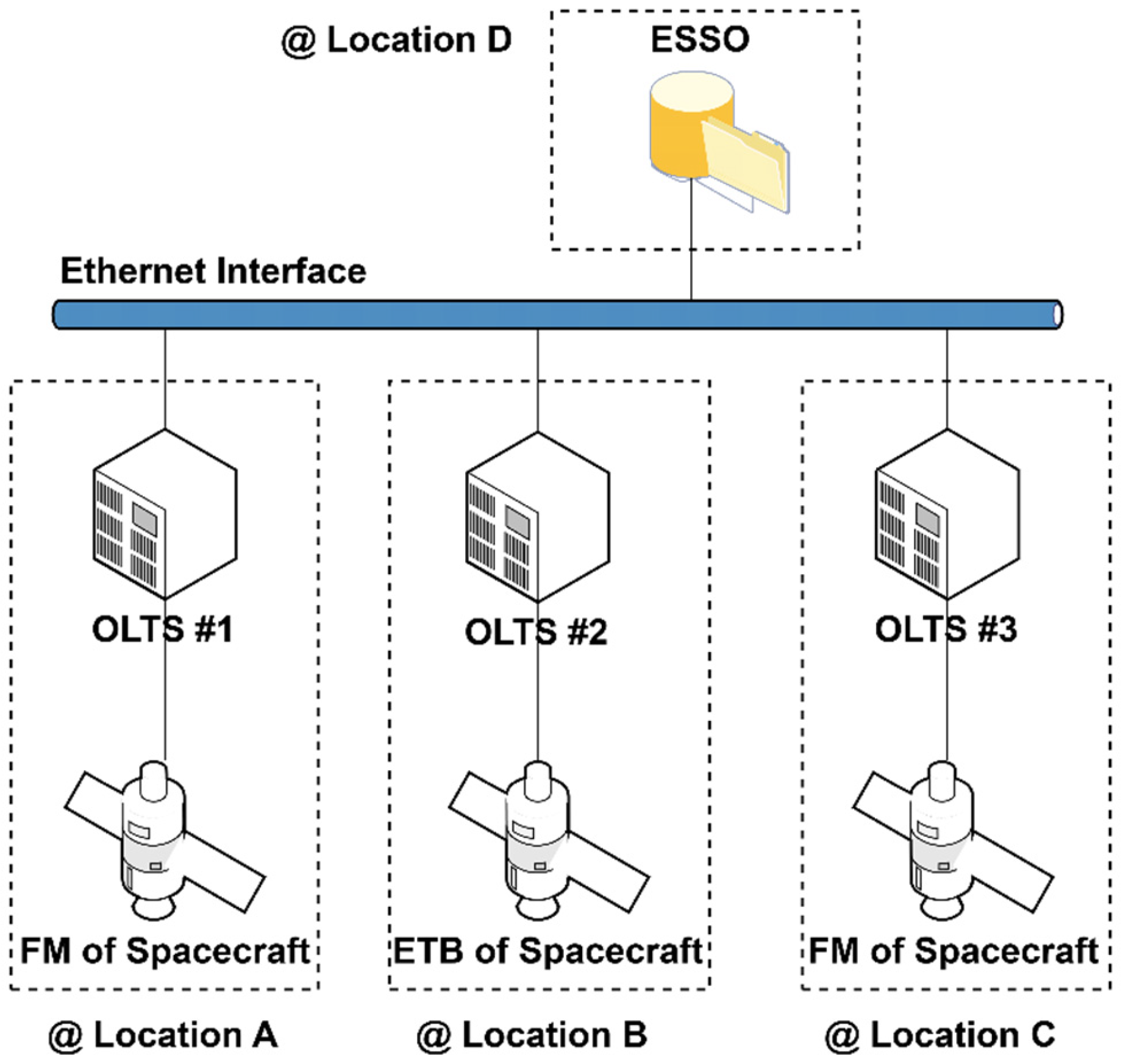

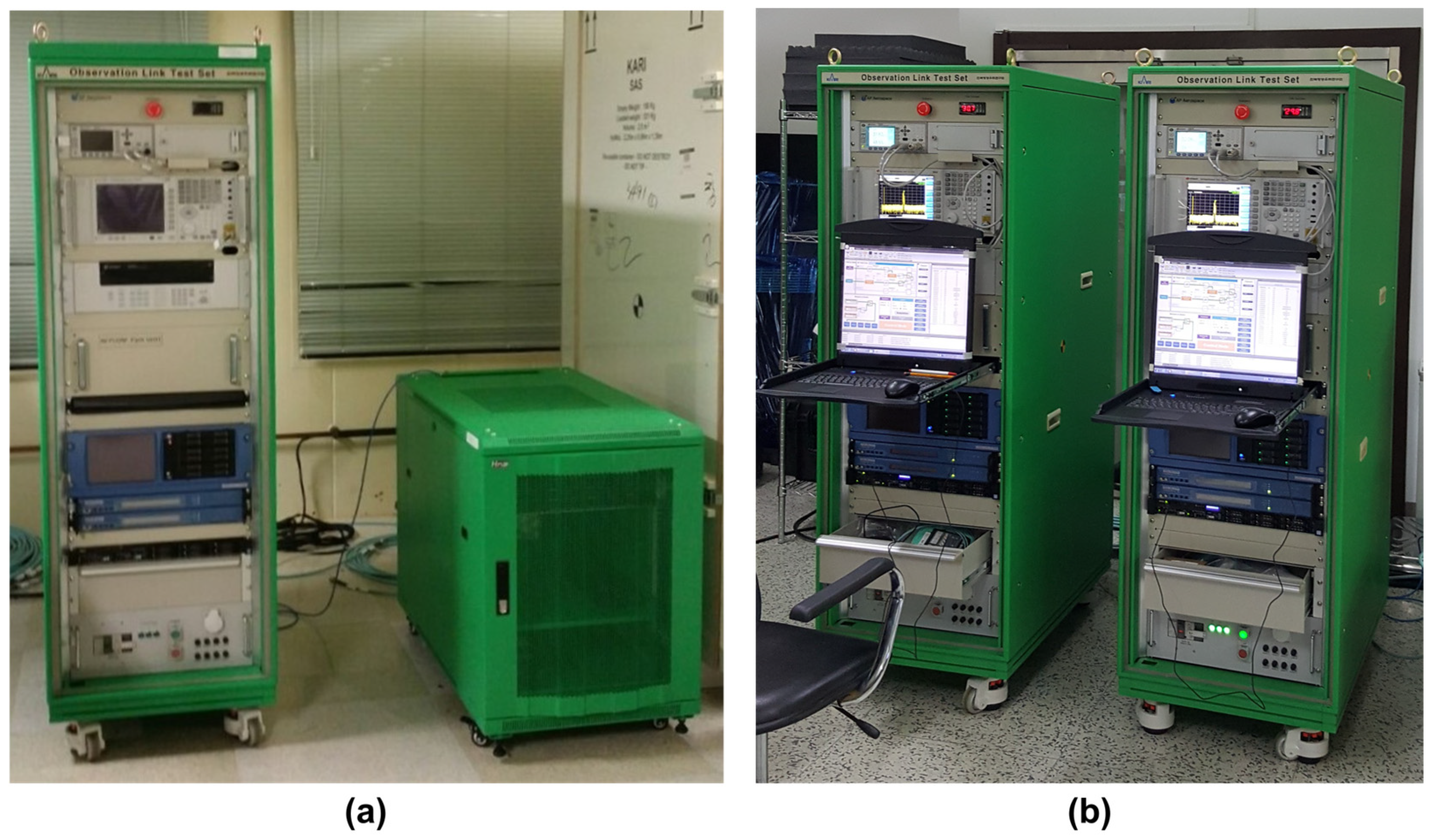

2. System Architecture and Operational Context of OLTS

2.1. System Architecture

2.1.1. Test Interfaces and Operational Modes

2.1.2. Multi-Site Deployment and Centralized Data Management

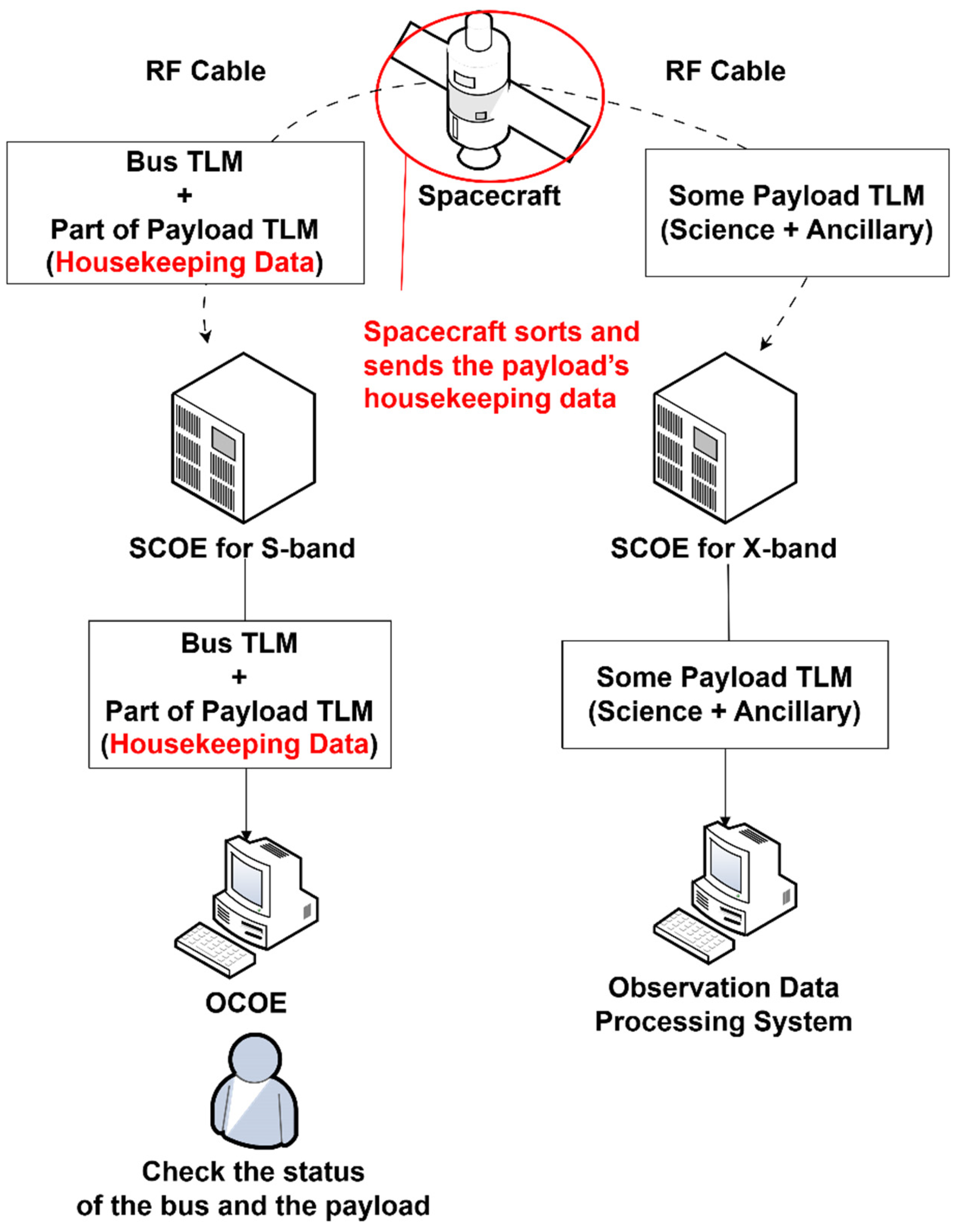

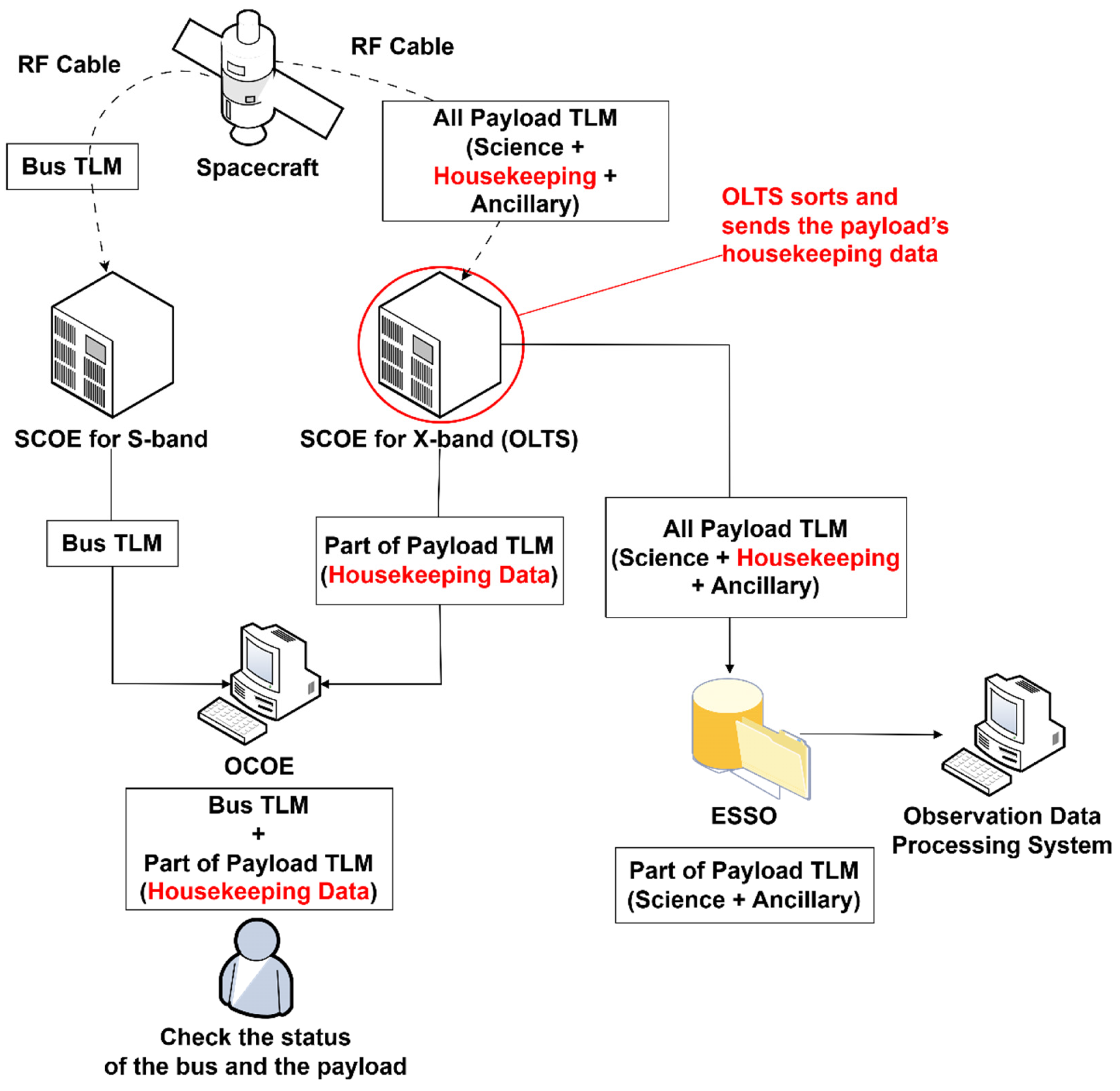

2.2. Payload Telemetry Categorization and Ground System Implications

2.2.1. Classification of Payload Telemetry

2.2.2. Impact on Ground System Architecture

3. Enhanced Data Management for Automated Spacecraft Ground Testing

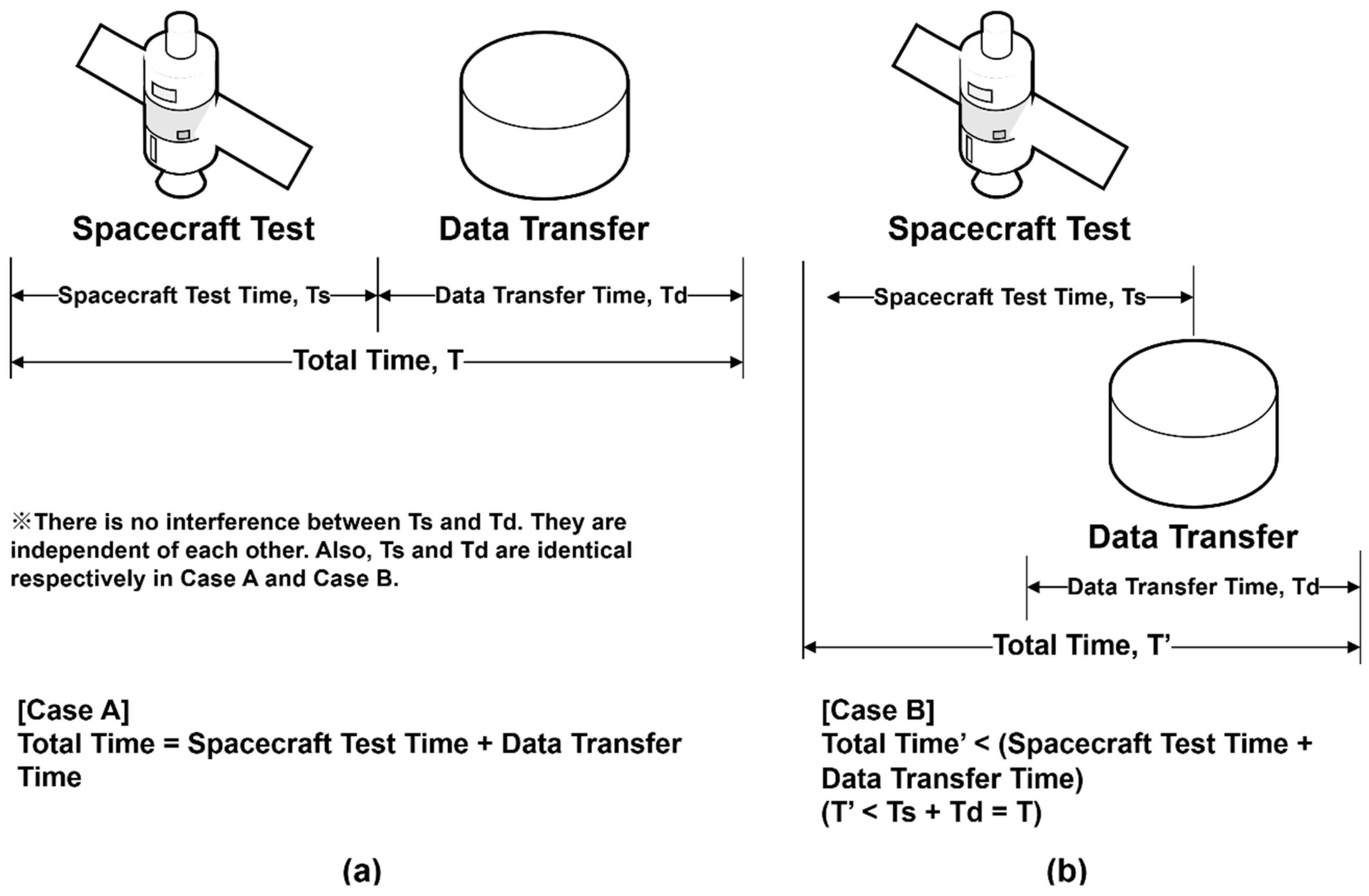

3.1. Test Data Transfer for Centralized Test Data Storage

3.1.1. Limitations of Manual Transfer in Legacy Systems

3.1.2. Design Principles for Automated File Transfer

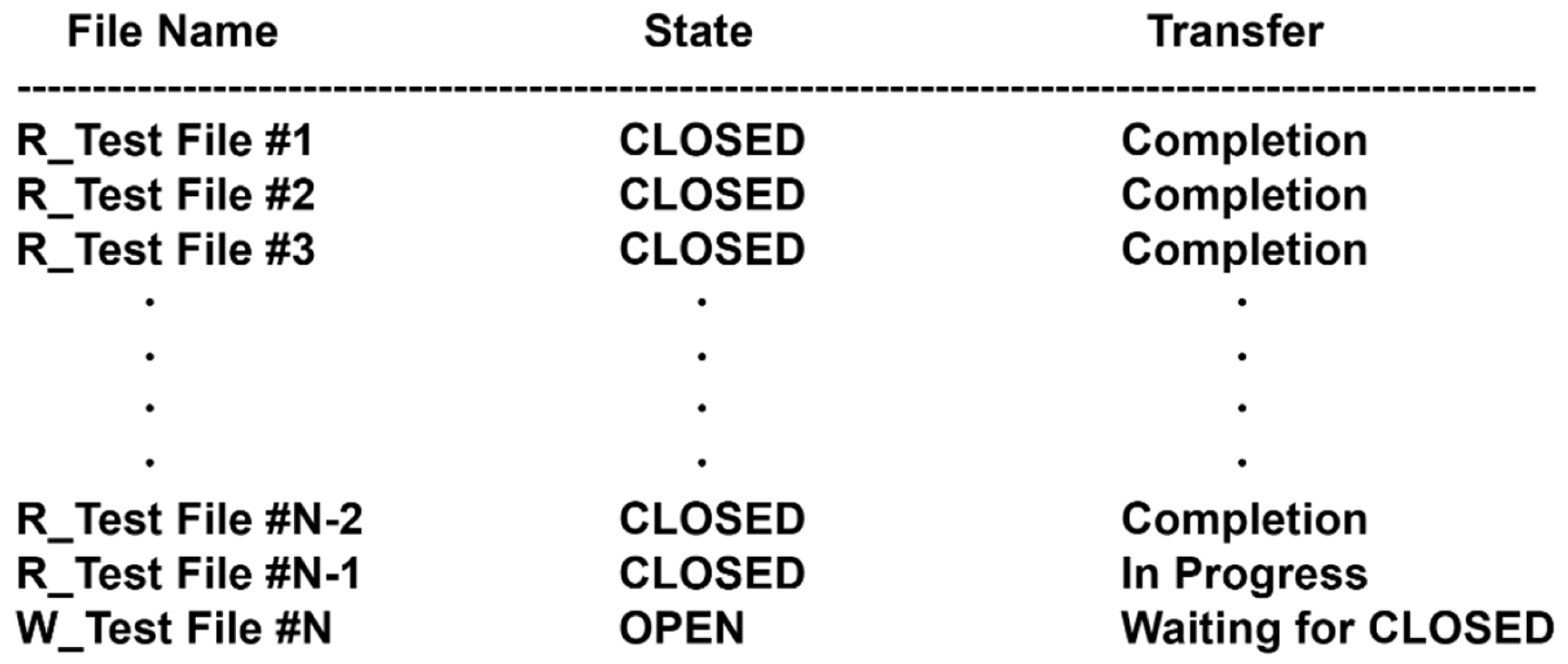

3.1.3. Transfer Automation Based on Prefix Algorithm

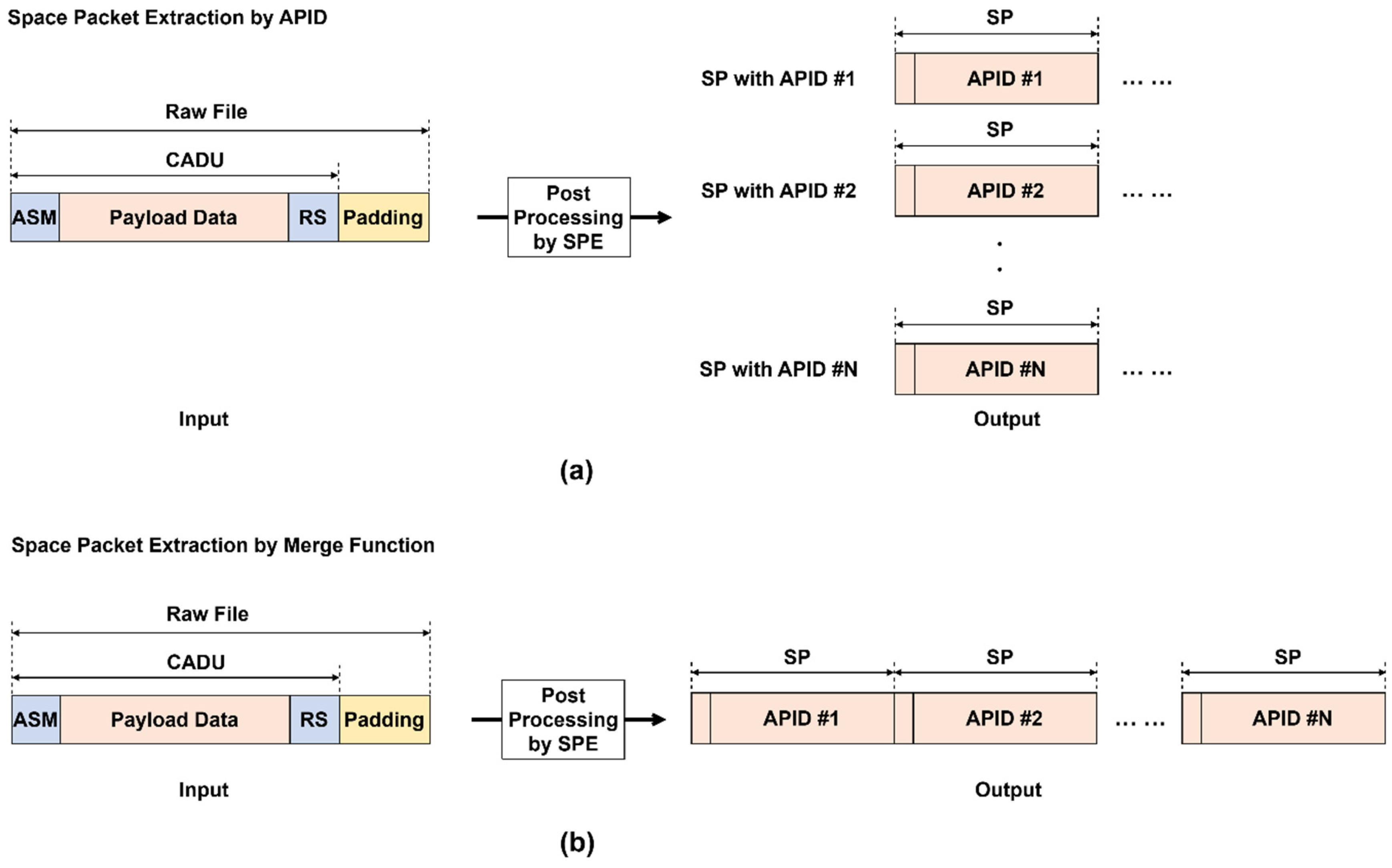

3.2. Space Packet Extractor for Post-Processing and Data Validation

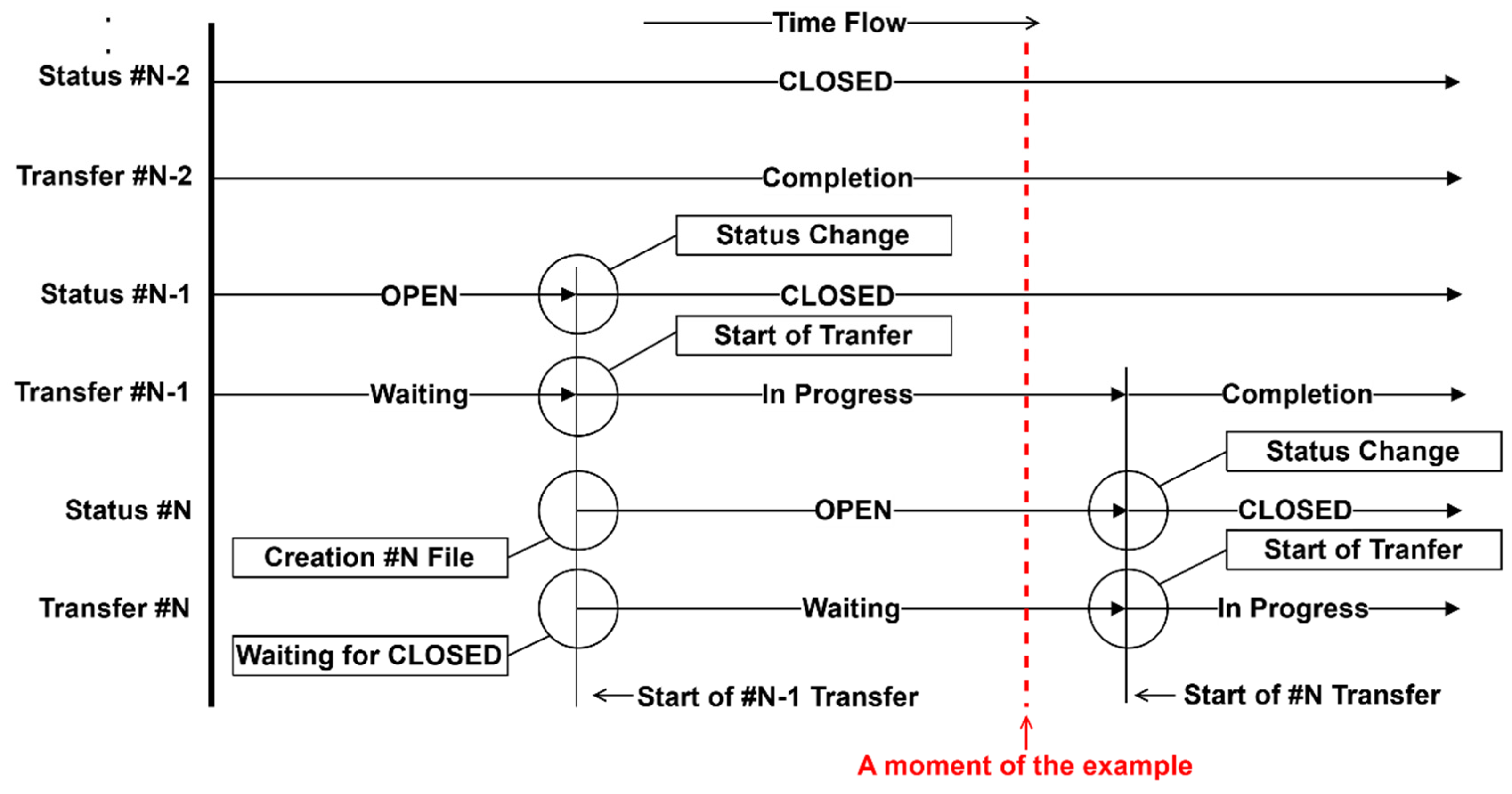

3.2.1. Input and Output Data Overview

3.2.2. CADU Extraction Options

3.2.3. Space Packet Extraction Options

3.2.4. Post-Processing Validation and Monitoring

4. Implementation and Results Analysis

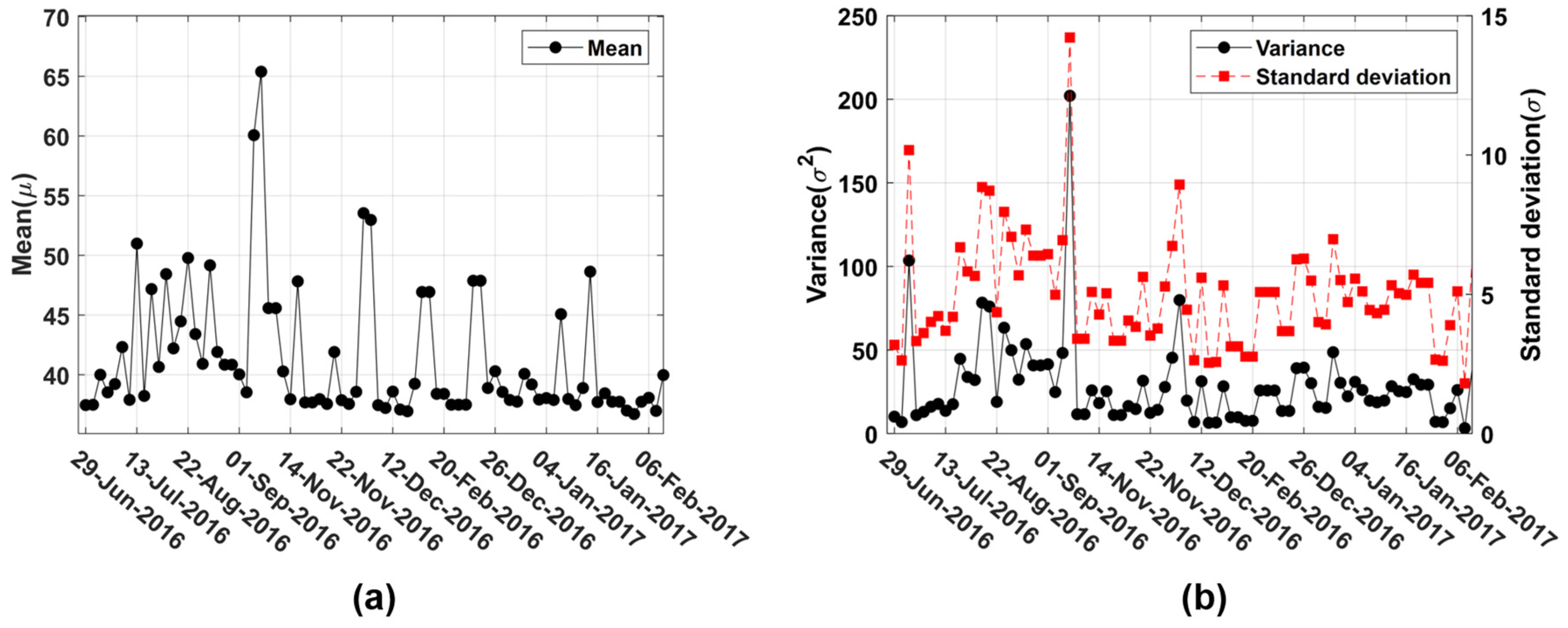

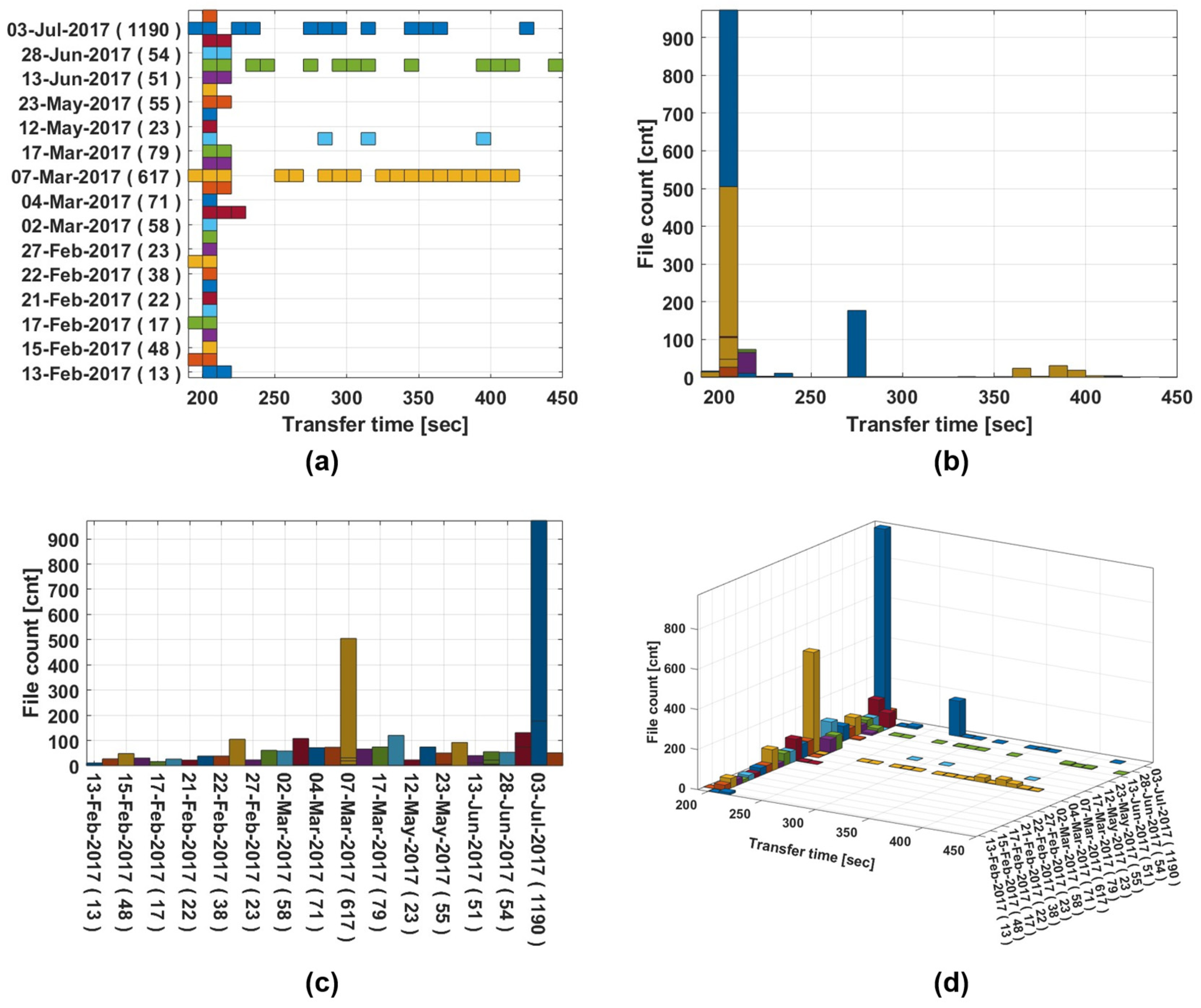

4.1. Analysis of TDT Processing Logs

4.1.1. Data Filtering and Preprocessing

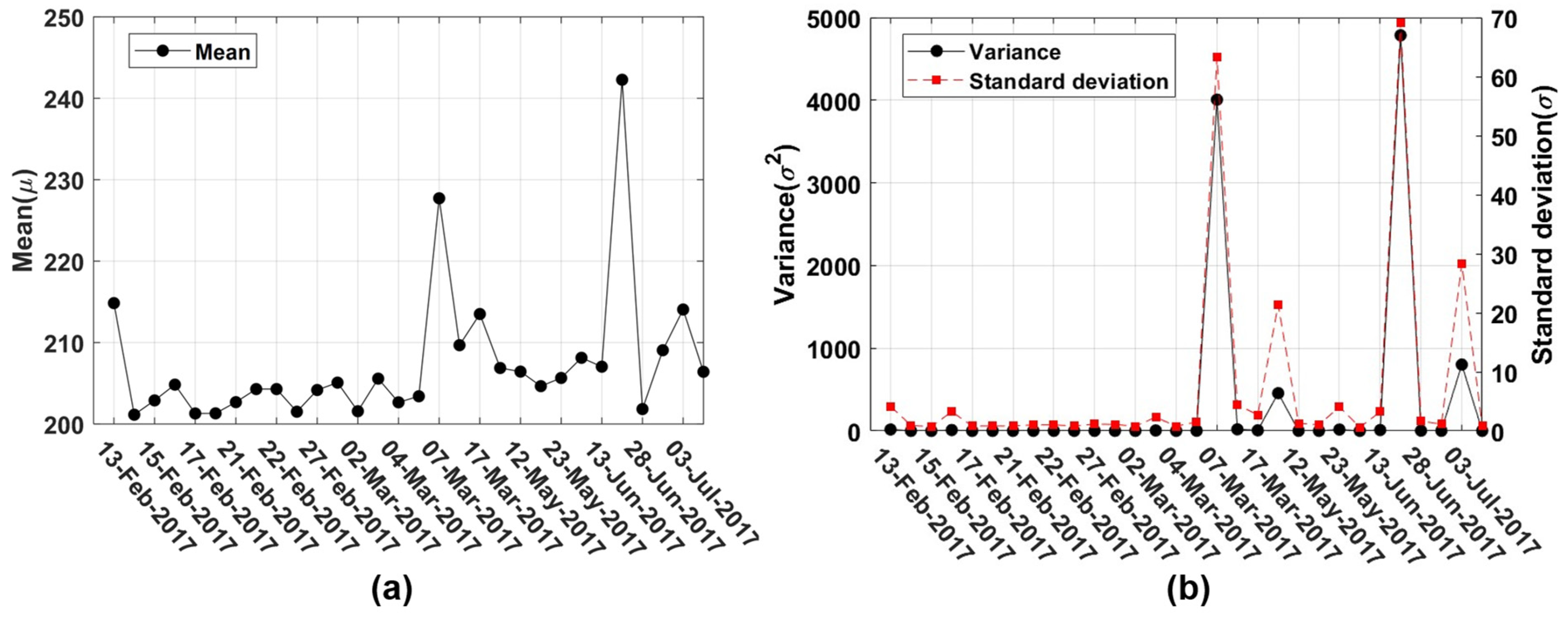

4.1.2. Statistical Analysis of Phase 1

4.1.3. Statistical Analysis of Phase 2

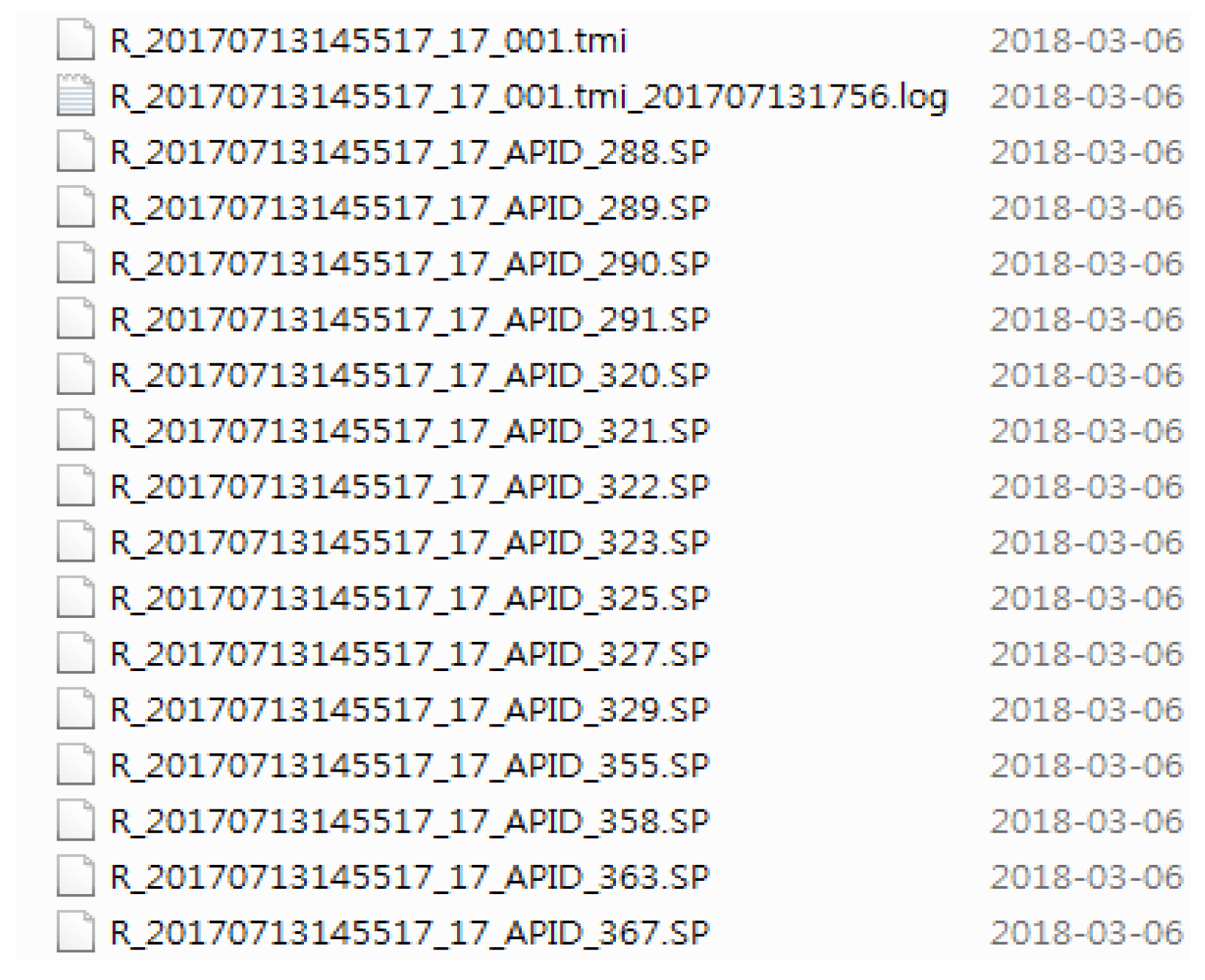

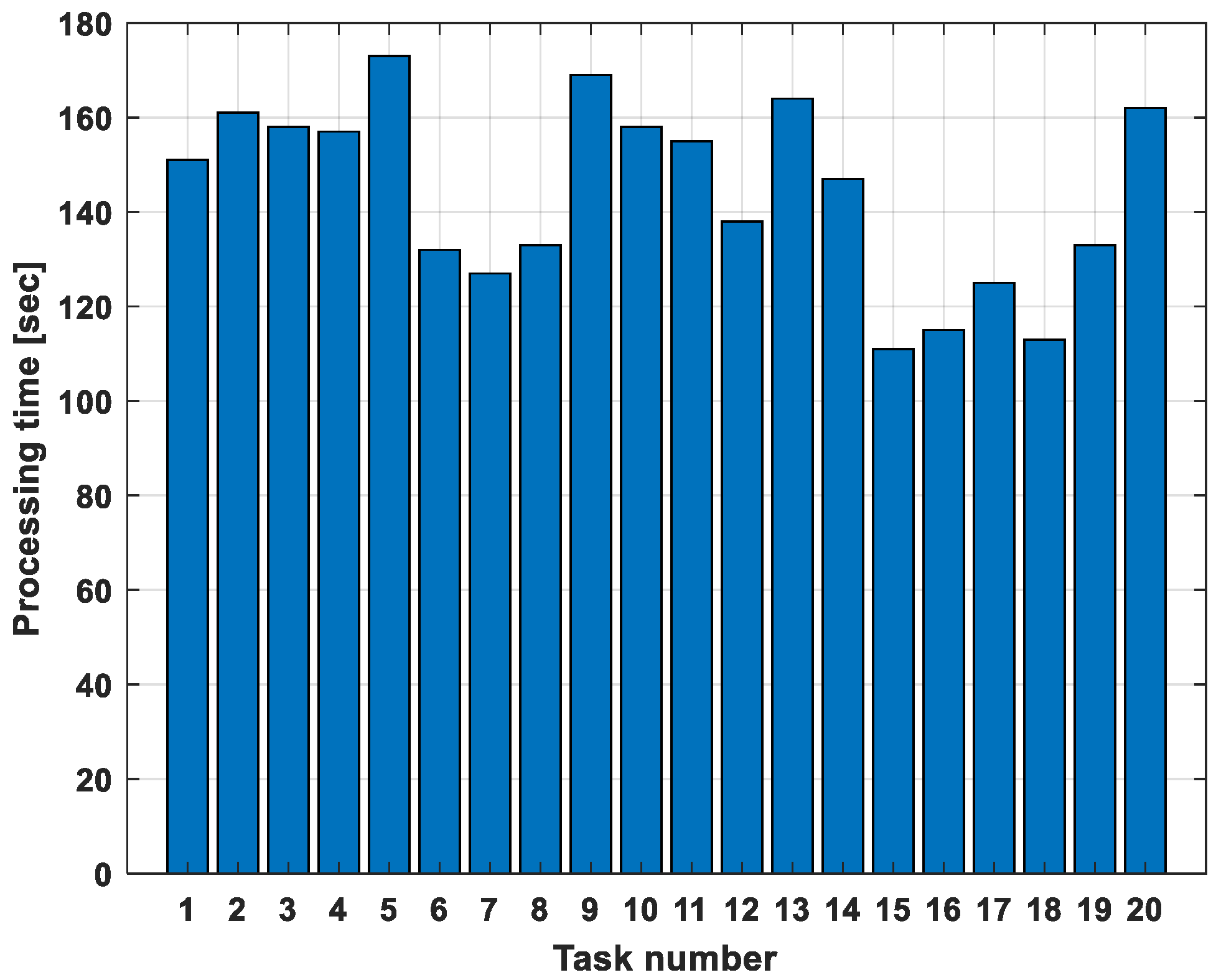

4.2. Analysis of SPE Processing Logs

4.2.1. Setup and Log Collection

4.2.2. Analysis of Processing Time Variation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Data | Category | Subcategory | Description |
|---|---|---|---|
| Payload telemetry | Science data | Observation data | EO, SAR, magnetometer, or other instruments |
| Payload telemetry | Engineering data | Housekeeping data | Health state of payload instruments |
| Payload telemetry | Engineering data | Ancillary data | Information necessary for processing science data |

| Item | OLTS | ESSO |
|---|---|---|
| OS | Windows 7 Professional x64 | Windows Storage Server 2012 R2 Standard x64 |
| CPU | Intel Xeon CPU E5-2620 v3 2.40 GHz | Intel Xeon CPU E5620 2.40 GHz |
| RAM | 8 GB | 16 GB |
| Storage | 1 TB | 16 TB (usable, RAID 5), 2 TB (hot spare), expandable |
| Application | TDT, OLTS Manager | SPE, FileZilla Server |
| Ethernet Interface | 1 Gbps connection | 1 Gbps connection |

| Test Date | Number of Transferred Files | Mean Transfer Time [s] |
|---|---|---|
| 29 June 2016 | 209 | 37 |
| 16 January 2017 | 1359 | 37 |
| 21 January 2017 | 397 | 38 |
| 31 January 2017 | 1190 | 36 |
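The per-date means in the table above can be combined into a single file-weighted mean. The sketch below is an illustration only: the variable and function names are hypothetical, and because the tabulated means are already rounded to whole seconds, the result is an approximation rather than a value reported by the authors.

```python
# (test date, files transferred, mean transfer time in s), copied from the table
runs = [
    ("29 June 2016", 209, 37),
    ("16 January 2017", 1359, 37),
    ("21 January 2017", 397, 38),
    ("31 January 2017", 1190, 36),
]


def weighted_mean_transfer_time(rows):
    """Return (file-weighted mean transfer time in s, total file count)."""
    total_files = sum(count for _, count, _ in rows)
    total_time = sum(count * mean_s for _, count, mean_s in rows)
    return total_time / total_files, total_files


mean_s, total_files = weighted_mean_transfer_time(runs)
print(f"{total_files} files, weighted mean {mean_s:.2f} s")
# prints "3155 files, weighted mean 36.75 s"
```

Weighting by file count keeps the two large test days (1359 and 1190 files) from being treated the same as the 209-file day, which a plain average of the four means would do.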

| Item | Setting |
|---|---|
| File Size | 3,906,252 KB |
| CADU Merge | Off |
| SP Merge | Off |
| SP Sorting by APID | On |
| Descrambling | Off |
| RS Decoding | Off |
| RS Correction | Off |
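To make the "SP Sorting by APID" setting concrete, the following is a minimal sketch of grouping space packets by APID. It is not the SPE implementation. It assumes the input is a byte stream of back-to-back CCSDS space packets, each starting with the standard 6-byte primary header, and it performs no CADU or frame handling. The names `sort_by_apid` and `make_packet` are hypothetical helpers introduced only for this illustration.

```python
import struct
from collections import defaultdict

PRIMARY_HEADER_LEN = 6  # bytes in a CCSDS Space Packet primary header


def make_packet(apid: int, seq_count: int, payload: bytes) -> bytes:
    """Build a telemetry packet without a secondary header (demo helper)."""
    if not payload:
        raise ValueError("a space packet carries at least one data byte")
    word1 = apid & 0x07FF                        # version 0, type 0 (TM), 11-bit APID
    word2 = (0b11 << 14) | (seq_count & 0x3FFF)  # unsegmented, 14-bit sequence count
    word3 = len(payload) - 1                     # length field = data bytes - 1
    return struct.pack(">HHH", word1, word2, word3) + payload


def sort_by_apid(stream: bytes) -> dict:
    """Split a stream of concatenated space packets and group them by APID."""
    groups = defaultdict(list)
    offset = 0
    while offset + PRIMARY_HEADER_LEN <= len(stream):
        word1, _word2, length_field = struct.unpack_from(">HHH", stream, offset)
        apid = word1 & 0x07FF
        end = offset + PRIMARY_HEADER_LEN + length_field + 1
        if end > len(stream):
            break  # truncated final packet: stop rather than emit a partial one
        groups[apid].append(stream[offset:end])
        offset = end
    return dict(sorted(groups.items()))


stream = (
    make_packet(0x020, 0, b"\x01\x02")
    + make_packet(0x010, 0, b"\xAA")
    + make_packet(0x020, 1, b"\x03")
)
by_apid = sort_by_apid(stream)
for apid, packets in by_apid.items():
    print(f"APID 0x{apid:03X}: {len(packets)} packet(s)")
```

Within each APID the packets keep their arrival order, so sequence-count gaps could be checked per APID afterwards. A production tool would also need to handle idle packets and resynchronization after corrupted headers, which this sketch omits.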

| Application | Processing Time for Phase 1 [s] | Processing Time for Phase 2 [s] | Location |
|---|---|---|---|
| TDT (ver. 2.0) | 40 | 213 | OLTS |
| SPE (ver. 2.0) | 144 | 144 | ESSO |
| Total Time | 184 | 358 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Park, J.; Song, Y.-J.; Lee, D. Enhanced Test Data Management in Spacecraft Ground Testing: A Practical Approach for Centralized Storage and Automated Processing. Aerospace 2025, 12, 813. https://doi.org/10.3390/aerospace12090813
