Abstract

With the advancement of intelligent human–computer interaction (IHCI) technology, the accurate recognition of an operator’s intent has become essential for improving the collaborative efficiency in complex tasks. To address the challenges posed by stringent safety requirements and limited data availability in pilot intent recognition within the aviation domain, this paper presents a human intent recognition model based on operational sequence comparison. The model is built based on standard operational sequences and employs multi-dimensional scoring metrics, including operation matching degree, sequence matching degree, and coverage rate, to enable real-time dynamic analysis and intent recognition of flight operations. To evaluate the effectiveness of the model, an experimental platform was developed using Python 3.8 (64-bit) to simulate 46 key buttons in a flight cockpit. Additionally, five categories of typical flight tasks along with three operational test conditions were designed. Data were collected from 10 participants with flight simulation experience to assess the model’s performance in terms of recognition accuracy and robustness under various operational scenarios, including segmented operations, abnormal operations, and special sequence operations. The experimental results demonstrated that both the linear weighting model and the feature hierarchical recognition model enabled all three feature scoring metrics to achieve high intent recognition accuracy. This approach effectively overcomes the limitations of traditional methods in capturing complex temporal relationships while also addressing the challenge of limited availability of annotated data. This paper proposes a novel technical approach for intelligent human–computer interaction systems within the aviation domain, demonstrating substantial theoretical significance and promising application potential.

1. Introduction

With the advancement of artificial intelligence, human–machine interactions have gradually evolved into IHCI [1]. In future complex task scenarios, human operators and intelligent systems will function as an integrated unit to collaboratively accomplish shared objectives. However, fully autonomous intelligent systems have not yet been realized [2], and the nature of these interactions continues to demonstrate the role of intelligent systems as supportive tools in human task execution. This highlights the importance of an intelligent system’s capability to recognize operator intentions, which represents a critical technology for achieving IHCI [3,4]. Currently, this technology has achieved significant advancements across various cutting-edge domains, with prominent applications such as behavior understanding in computational environments [5], human–robot collaborative control in industrial contexts [6], and anticipatory interaction mechanisms in intelligent driving systems [7].

The feature data utilized in intention recognition analysis mainly comprises three categories: human behavioral features, machine-related features, and environmental features [8]. Each of these categories plays a distinct role in intention recognition. Human behavioral features reflect underlying intentions, whereas machine and environmental features constitute the foundational elements that shape these intentions. In terms of human behavioral features, various behaviors are driven by specific intentions. Currently, many researchers have employed eye-tracking [9,10] and gesture interaction actions [11] for intention recognition. Additionally, some researchers have utilized electroencephalography (EEG) [12] and electromyography (EMG) [13] to assist in the recognition process. Machine features and environmental features are also applied in research as the basis for human intention generation. For instance, in pilot intention recognition, machine feature information such as aircraft altitude, speed, and heading [14,15,16] is incorporated into the recognition model. Environmental information encompasses task-related environmental data [17] and situational data [18], which are also included in the recognition model as external factors influencing intention generation. Although previous studies have emphasized human behavioral factors in the selection of feature data, recent research often treats human behavioral features and machine-derived features separately or utilizes machine and environmental features solely as supplementary inputs to improve the accuracy of recognition models. This approach fails to account for the holistic nature of human–machine collaboration. Specifically, within the aviation domain, a pilot’s actions are ultimately translated into aircraft commands, which reflect not only individual human behavioral features but are also influenced by machine and environmental characteristics. 
Executing an intention typically involves a combination of multiple operational behaviors arranged in a specific sequence, with different intentions represented by different sequences of operations. Therefore, this study utilizes the sequence of pilot operations on the aircraft as feature data to conduct research on intention recognition.

The rational selection of feature data provides a solid foundation for intention recognition, while employing appropriate research methods is key to achieving accurate intention recognition. Existing intention recognition methods rely on various principled approaches, reflecting a spectrum that ranges from rule-based experiences to data-driven methodologies [19].

From the perspective of rule-based experience, intention recognition methods primarily rely on expert knowledge, utilizing predefined rules and template libraries established by experts. For instance, Gui et al. [20] focused on the recognition of flight operation actions, employing an expert system inference engine to deduce the degree of match between feature information and intention templates. However, this approach lacks flexibility in adapting to changes in the environment, making it challenging to respond effectively to the complexities of dynamic flight scenarios. Furthermore, the costs associated with updating and maintaining the system based solely on expert knowledge can be high, hindering the ability to quickly adapt to evolving aircraft and flight procedures.

Data-driven approaches have the capability to learn underlying patterns in operational sequences and exhibit stronger generalization abilities. Current intention recognition methods primarily consist of machine learning and deep learning techniques. Machine learning methods utilize manually designed features and established classifiers such as K-Nearest Neighbors (KNN) [21], Support Vector Machines (SVM) [22], Naive Bayes (NB) [23], and Random Forests (RF) [6] to effectively recognize and classify input data. However, these methods struggle to capture the complex temporal relationships in flight operations, particularly when processing long sequences [8]. In contrast, deep learning methods rely on training with large volumes of labeled data to construct neural networks capable of recognizing intentions. However, high-quality labeled data are often limited in the field of flight operations [24]. Additionally, deep learning models are prone to overfitting [25], and their “black box” nature [26] makes the decision-making processes difficult to interpret, which poses a significant drawback in the safety-critical aviation sector.

In summary, whether through rule-based or data-driven recognition methods, the core objective remains the construction of a model that categorizes input samples into predefined classes. However, within the context of human–machine interaction in the aviation domain, intention recognition faces three fundamental challenges. Firstly, regarding the sensitivity to timing and operational tolerance, while flight operations must adhere to strict sequential logic—such as the fixed order of instruments, throttle, and heading for ILS blind landings [27]—practical execution allows for reasonable adjustments to non-critical steps. This creates challenges for traditional sequential models in balancing formality with flexibility. Secondly, concerning feature coupling and decision interpretability, the interplay among humans, machines, and the environment during flight forms a complex intention signal. Existing methods have yet to effectively establish models that correlate these elements [28], resulting in a lack of transparency in the decision-making process [29]. Lastly, with respect to data scarcity and safety requirements, obtaining authentic human–computer interaction (HCI) data from real flights is challenging, while civil aviation ASIL-D safety standards demand an extremely low error rate for recognition [30]. This renders traditional data-driven methods inadequately equipped to meet the stringent demands for high precision and verifiability under small sample conditions.

To address the aforementioned challenges, this paper aims to develop a sequence comparison method for operational actions to establish a more comprehensive and accurate human intention recognition model, thereby providing more effective support for human–machine interaction systems. By utilizing the sequence of pilot actions during flight as feature data, three key metrics were proposed—operation matching degree, sequence matching degree, and coverage rate—to quantify the similarity between a pilot’s operational sequence and the standard operational sequences corresponding to different flight intentions. The intention prediction model was developed through the linear weighting of feature matching scores and a hierarchical approach to feature metrics, and the performance of these three metrics in terms of intention recognition accuracy was validated. This method not only overcomes the limitations of traditional approaches when dealing with highly uncertain tasks but also effectively addresses the issue of scarce labeled samples. This study offers a novel perspective on human intention recognition within the aviation domain, with the potential to substantially improve the performance and reliability of human–machine interaction systems.

2. Methodology

2.1. Overview

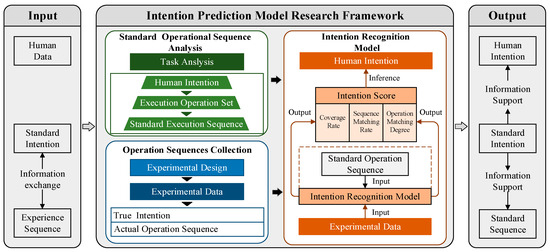

Building upon the research motivations previously outlined and a thorough review of the relevant literature, this paper proposes a methodology for developing a model designed to facilitate intention recognition in complex task environments. The research framework of the intention prediction model is shown in Figure 1. The core function of this framework is to derive potential human–computer interaction intentions by collecting and analyzing the operational behavior sequences of personnel. It aims to achieve a real-time and accurate understanding of human operational intentions through multi-dimensional sequence analysis within dynamic and complex human–machine environments. The implementation of this framework consists of three main steps. Firstly, typical task analysis methods are employed to decompose aviation tasks, allowing for the identification of standard operational sequences of personnel under various task intentions. Secondly, the operational behaviors of personnel are recorded to construct corresponding behavior sequences. Finally, intention recognition algorithms are applied to discern personnel intentions based on the collected operational sequences. In this paper, five typical tasks that commonly occur during flight operations are used as case studies to evaluate the applicability of the proposed framework in real-world, complex HCI tasks.

Figure 1.

Research framework for intent recognition model.

2.2. Standard Operational Sequences Analysis

Standard operational sequence analysis serves as a fundamental step in intention recognition for complex human–machine interaction tasks. Its primary objective is to outline the standard operating procedures followed in intricate tasks, analyze the intentions of the operators, and extract relevant features. This provides a solid foundation for subsequent data collection and the development of hierarchical intention recognition algorithms.

This study focuses on five typical flight tasks: “Proactive Identification of Potential Threats (PIPT)”, “Severe Weather Avoidance and Route Dynamic Adjustment (AWADRA)”, “Flight Management System (FMS) Route Calibration and Fuel Optimization (FMS-RCFO)”, “Low Visibility ILS Blind Landing System Approach and Landing (LVILS-AP)”, and “Multiband Communication Coordination and Conflict Resolution (MCCCR)”. A comprehensive and structured operational sequence dataset was developed through the integration of Hierarchical Task Analysis (HTA) [31] and expert experience synthesis. The specific process is outlined as follows:

Initially, relevant information was gathered based on the overarching objectives of the flight tasks, and the HTA method was utilized to hierarchically decompose each task. This process involved clarifying the primary tasks, identifying subtasks, and establishing their execution order, ultimately breaking them down into precise operational steps. For example, the “Proactive Identification of Potential Threats” task was subdivided into several subtasks, including the initiation of radar monitoring, the observation of Heads-Up Display (HUD) alert information, and the utilization of Head-Mounted Displays (HMD) for assisted identification, thereby ensuring a systematic and comprehensive structure for the task. Following this, the initially generated operational step sequences were rigorously validated against actual operational log data obtained from flight simulators, further enriched by the extensive operational expertise of multiple senior pilots.

Subsequently, the subtasks were further dissected into more granular execution operations, which directly align with the operators’ intentions within the framework of human–computer interaction. For instance, the sub-goal “Initiate Radar Monitoring” was broken down into several specific operations, including the radar sub-mode shortcut button group, the radar frequency/band selection switch, and the scan angle adjustment knob. Each button was clearly labeled to reflect the operators’ actions associated with these controls. The standard operational sequences for the five typical tasks are summarized in Appendix A, Table A1, Table A2, Table A3, Table A4 and Table A5.

Finally, the operational steps of each task are systematically mapped to corresponding button indices, resulting in the creation of traceable digital sequence data. Within this coding framework, each number is assigned to a specific control button or knob on the flight platform. For example, number 7 denotes the “Multi-Function Display (MFD) mode switch knob”, while number 22 corresponds to the “HUD alert prompt area”, among others. This standardized coding scheme enables a consistent representation of operational sequences, thereby facilitating subsequent automated identification, data analysis, and simulation applications. Table 1 illustrates the correspondence between different execution numbers and their respective operations.
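As a minimal illustration of this coding scheme, the sketch below assumes a plain dictionary from button number to control name. Only entries 7 and 22 are taken from the text; the names `BUTTON_LABELS`, `LABEL_TO_NUMBER`, and `encode_steps` are hypothetical and not part of the platform described here.

```python
# Button-number coding scheme. Only entries 7 and 22 are quoted in the text;
# the remaining entries of the 46-button table are intentionally left out.
BUTTON_LABELS = {
    7: "Multi-Function Display (MFD) mode switch knob",
    22: "HUD alert prompt area",
}

# Reverse lookup: control name -> button number.
LABEL_TO_NUMBER = {label: number for number, label in BUTTON_LABELS.items()}


def encode_steps(step_labels):
    """Map a list of control names to their button numbers (hypothetical helper)."""
    return [LABEL_TO_NUMBER[label] for label in step_labels]


encoded = encode_steps([
    "HUD alert prompt area",
    "Multi-Function Display (MFD) mode switch knob",
])
print(encoded)  # [22, 7]
```

Encoding every operational step this way turns a task description into a list of integers that can be compared directly with a recorded click sequence.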

Table 1.

Standard operating procedure sequence numbers for five types of task.

2.3. Operation Sequences Collection

The collection of feature data serves as the foundational basis for recognizing human intent in complex human–computer interaction tasks. These data rely on the temporal logic of pilot operations to determine the sequence of actions, which is then compared with the standard sequence in subsequent research. Even if there are slight variations in timing, this approach ensures consistency in the order of operations. Consequently, we deliberately overlook the time intervals between actions to concentrate on the more crucial sequence of operations. The purpose of collecting feature data is to replicate task scenarios within an experimental simulation environment, grounded in the previously mentioned task analysis, and to gather participants’ action feature data during task execution. This facilitates the creation of an intent feature dataset, offering data samples for subsequent algorithm research. This section provides a detailed description of the experiment and task design, the participants involved, and the experimental procedures.

2.3.1. Experiment and Task Design

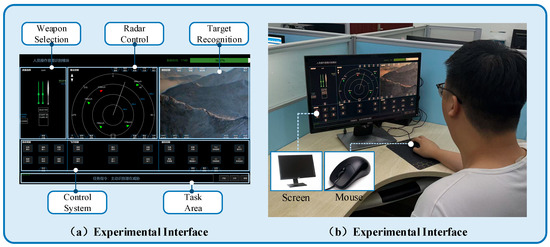

The experimental platform is designed to simulate a realistic flight environment and is developed using Python. It features 46 essential control buttons typically found in an aircraft cockpit, with each button assigned a unique identifier, as depicted in Figure 2. When an operator clicks a button, the system’s backend logs the corresponding button number. These click actions are combined to create the operator’s sequence of operations, which is then compared against the five predefined operational sequences outlined in Table 1. This setup facilitates real-time data collection and dynamic intent recognition. Five typical tasks have been preconfigured to simulate various situations that commonly arise during flight. Each task is equipped with a standard operational sequence, and participants complete the button operations sequentially according to the task instructions. The system records the operational sequences in real time and evaluates task matching and intent prediction through a multi-dimensional scoring mechanism. The interface includes task instruction displays, a countdown timer, a progress bar, and a grid of operation buttons, ensuring that operational feedback is clear and intuitive.
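The click-logging behavior described above can be sketched as follows. This is an illustrative outline only, not the platform's actual code: the class name `OperationRecorder` and its methods are hypothetical, and the sketch assumes button numbers run from 1 to 46 and that time intervals between clicks are not stored.

```python
class OperationRecorder:
    """Collects clicked button numbers in order (hypothetical helper)."""

    NUM_BUTTONS = 46  # the platform simulates 46 cockpit buttons

    def __init__(self):
        self.sequence = []

    def record(self, button_number):
        """Append one click and return a copy of the sequence so far."""
        if not 1 <= button_number <= self.NUM_BUTTONS:
            raise ValueError(f"unknown button number: {button_number}")
        self.sequence.append(button_number)
        return list(self.sequence)


recorder = OperationRecorder()
for clicked in (7, 22, 7):
    recorder.record(clicked)
print(recorder.sequence)  # [7, 22, 7]
```

Because `record` returns the sequence after every click, a scoring routine could be re-run on each call to update the intent scores in real time.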

Figure 2.

Display of operation numbers corresponding to software interface buttons.

In the diagram, red circles denote the operation numbers of various buttons, corresponding to Table 1. Each button is labeled with its specific function. For example, Circle 1 indicates the joystick flight control button, Circle 2 represents the joystick radar linkage button, Circle 3 is for the joystick weapon selection and interception button, and this continues up to Circle 46, which denotes the emergency communication button. During the experiment, participants should simply follow these numerical labels and perform operations according to the standard sequence logic outlined in Table 1. Moreover, yellow circles highlight other task-related buttons and indicators. Circle A serves as the task start button, initiating the task; Circle B is the task prompt display, which shows the current task name, such as “Proactive Identification of Potential Threats” or other task titles; Circle C functions as the task countdown timer. The text on any additional prompts or buttons does not impact the outcomes of this study.

The experiment was designed with three distinct testing conditions. The first condition focuses on segment operation intent recognition: participants were instructed to follow the standard operation sequence provided by the system but to execute only a segment of 7 to 9 steps of this sequence. This aimed to assess the system’s accuracy in recognizing segment sequences under controlled operational conditions. The second condition focuses on abnormal operation intent recognition, permitting participants to randomly insert operations that deviate from the standard task sequence. This condition simulates emergency responses during aircraft anomalies or adverse weather conditions, evaluating the system’s fault tolerance and its robustness in identifying such abnormal operations. The third condition emphasizes special sequence intent recognition, in which participants perform tasks in a non-standard but logically acceptable order. This simulates scenarios where participants prioritize certain operations or subtasks based on various considerations while managing the task. It tests the system’s capability to recognize potential intents within these special sequences, thereby enhancing its adaptability to different operational modes. During the experiment, the system collected participants’ operation sequences in real time, dynamically updating intent recognition scores and visual charts. A countdown timer set to 180 s was employed to limit the completion time for each task, with feedback on the results provided after task completion. All tasks were executed sequentially, and the system saved the operational data for further analysis.

2.3.2. Participants

The experiment recruited 10 graduate students from the School of Aero-engines at Shenyang Aerospace University, with an average age of 24.6 years (standard deviation (SD) = 0.94). All participants had normal or corrected vision and possessed experience in operating the aforementioned flight tasks. All participants were right-handed and did not have color blindness or color weakness. On the day before the experiment, all participants maintained good health, ensuring they had sufficient rest, and avoided intense physical activity.

2.3.3. Experimental Procedure

Prior to the experiment, detailed explanations of the task requirements and operating procedures were provided to the 10 participants, along with training sessions to familiarize them with the operating interface. Each participant sequentially completed three typical testing conditions derived from five preconfigured tasks: segment operational intent recognition, abnormal operational intent recognition, and special sequence intent recognition. The order of these tasks was randomly assigned by the system. Participants executed the corresponding operations within a 180-s countdown as directed by the system, which collected key press sequences in real time and dynamically updated intent recognition scores along with visual charts. The segment sequence task required strict adherence to the predefined operational sequence. Conversely, in the abnormal operation task, deviations from the standard sequence were allowed to simulate emergency procedures under abnormal conditions, whereas the special sequence task was executed in a non-standard but logical order. After completing each task, participants were given a brief break, during which the system displayed an operational feedback interface to present the results. All data were automatically saved by the system for subsequent analysis. The experimental setup is illustrated in Figure 3, with the numbered information indicating the labels for the buttons.

Figure 3.

Experimental interface and scene.

2.4. Intent Recognition Model

2.4.1. Model Process and Methods

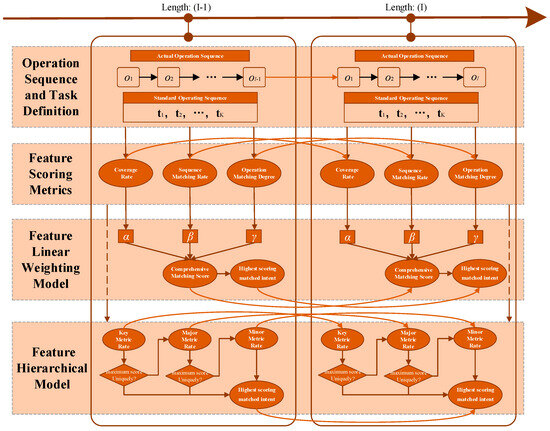

The model compares the real-time operational sequences collected from participants with the predefined standard operational sequences, generating three feature scoring metrics: operation matching degree, sequence matching degree, and coverage rate. These metrics are utilized to evaluate the extent of the match. Based on these feature metrics, two scoring systems were developed: a feature linear weighting model and a feature hierarchical model, which aim to achieve a comprehensive recognition of participants’ intents. The implementation method is illustrated in Figure 4.

Figure 4.

Implementation logic of intent recognition based on feature scoring metrics.

This method considers the execution characteristics and uncertainties of personnel during actual operations. On one hand, the operation matching degree emphasizes the recognition of unique task operations, thereby enhancing the model’s sensitivity to task features. On the other hand, the sequence match scoring metric permits a certain degree of sequence deviation to accommodate instances of non-strict linear execution during the operational process. Additionally, the coverage rate metric quantifies the extent of task completion, evaluating how well the current operational sequence represents the overall task progress.

2.4.2. Operation Sequence and Task Definition

The currently collected operational sequence is:

$$O = (o_1, o_2, \ldots, o_N)$$

where $o_i$ represents the $i$-th human–computer interaction operation, recorded in this study as the number of the button pressed, and $N$ is the total length of the sequence.

The set of task categories is defined as:

$$T = \{T_1, T_2, \ldots, T_L\}$$

In this context, $L$ represents the number of defined tasks ($L = 5$ in this study). Each task $T_k$ comprises an operation sequence made up of a series of operation numbers.

Each standard sequence lists, in order, the button numbers that personnel operate under standard conditions:

$$T_k = (t_{k,1}, t_{k,2}, \ldots, t_{k,n_k})$$

where $t_{k,j}$ is the $j$-th operation number of task $T_k$ and $n_k$ is the length of the standard operational sequence for task $T_k$. In this paper, the sequences corresponding to the five tasks are represented by the codes in Table 1.

2.4.3. Feature Scoring Metrics

(1) Operation Matching Score Metric

The operation matching score is designed to assess the inclusion of pairs of unique operations that possess strong distinguishing capabilities for specific tasks within the current input sequence. This metric effectively differentiates between “general” operations that frequently occur across various tasks and “signature” operations that are unique to a particular task, thereby improving the accuracy of task discrimination.

Here, the unique operation set $U_k$ is defined as the collection of operations that appear only in task $T_k$; it is used to count the operations in the sequence $O$ that correspond to the standard operational sequence $T_k$.

The operation matching degree score Mk is derived through the process of normalization:

This ratio represents the relative proportion of unique operations belonging to task k within the input sequence.
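A minimal Python sketch of one reading of this metric follows. It assumes that $U_k$ is obtained by removing from task $k$ every operation that also occurs in another task's standard sequence, and that normalization divides each task's count of unique-operation hits by the total number of such hits over all tasks. Both the normalization and the function names are assumptions made for illustration, and the two toy tasks are invented.

```python
def unique_operation_sets(standard_sequences):
    """For each task, the operations that occur in no other task's standard sequence."""
    unique = {}
    for task, seq in standard_sequences.items():
        others = set()
        for other_task, other_seq in standard_sequences.items():
            if other_task != task:
                others.update(other_seq)
        unique[task] = set(seq) - others
    return unique


def operation_matching_scores(observed, standard_sequences):
    """Share of unique-operation hits attributed to each task (assumed normalization)."""
    unique = unique_operation_sets(standard_sequences)
    counts = {
        task: sum(1 for op in observed if op in ops)
        for task, ops in unique.items()
    }
    total = sum(counts.values())
    return {task: (count / total if total else 0.0) for task, count in counts.items()}


# Toy standard sequences (invented, not the sequences of Table 1).
toy_standard = {"A": [1, 2, 3, 4], "B": [3, 4, 5, 6]}
toy_scores = operation_matching_scores([1, 3, 2, 5], toy_standard)
print(toy_scores)
```

In the toy case, operations 3 and 4 are shared by both tasks and therefore contribute to neither score, which is the "general" versus "signature" distinction drawn above.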

(2) Sequence Matching Score Metric

The sequence matching score metric is utilized to evaluate the degree of alignment between the input sequence and the standard task sequence concerning the order of operations. This metric measures the consistency of timing and assesses whether the input sequence can be mapped to the operation sequence outlined in the task specification path in a non-decreasing order. It not only emphasizes the types of operations involved but also takes into account the relative order of these operations.

For the task sequence $T_k$ and the currently collected operation sequence $O$, we define the matching index sequence $(m_{k1}, m_{k2}, \ldots, m_{kN})$, where $m_{ki}$ represents the position index of the $i$-th operation $o_i$ in the task sequence $T_k$. The definition is as follows:

The number of matching operations Nk is defined as follows:

The sequence matching score Ok is defined as follows:
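As a hedged illustration of how such an order-sensitive score can be computed, the sketch below makes three assumptions that go beyond the text: the position index of an operation is its first occurrence in the standard sequence (0 if absent), the number of matching operations counts the non-zero indices, and the score is the length of the longest non-decreasing subsequence of those indices divided by that count. The function names are hypothetical.

```python
from bisect import bisect_right


def matching_indices(observed, standard):
    """1-based position of each observed operation in the standard sequence, 0 if absent."""
    first_pos = {}
    for pos, op in enumerate(standard, start=1):
        first_pos.setdefault(op, pos)  # first occurrence only (simplifying assumption)
    return [first_pos.get(op, 0) for op in observed]


def longest_non_decreasing(values):
    """Length of the longest non-decreasing subsequence (patience-sorting method)."""
    tails = []
    for value in values:
        i = bisect_right(tails, value)
        if i == len(tails):
            tails.append(value)
        else:
            tails[i] = value
    return len(tails)


def sequence_matching_score(observed, standard):
    """Fraction of matched operations that respect the standard order (assumed form)."""
    matched = [m for m in matching_indices(observed, standard) if m > 0]
    if not matched:
        return 0.0
    return longest_non_decreasing(matched) / len(matched)


toy_standard_seq = [10, 20, 30, 40]
print(sequence_matching_score([10, 30, 20, 40, 99], toy_standard_seq))  # 0.75
```

Using a subsequence rather than strict adjacency lets an inserted or swapped operation lower the score without resetting it, in line with the tolerance for non-strict linear execution described in Section 2.4.1.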

(3) Coverage Rate Metric

The coverage rate metric quantifies the proportion of operational elements within the input sequence that align with the prescribed task path, thereby reflecting the completeness and representativeness of the input sequence at the operational element level. This metric serves as a vital indicator of the extent to which users have accomplished a specific task, playing a crucial role in the assessment of task progress and the provision of timely assistance.

The coverage rate Ck is defined as:
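One simple reading of this metric, given here only as a sketch, is the fraction of distinct operations in the standard sequence that already appear in the input sequence. Treating both sequences as sets and dividing by the number of distinct standard operations are assumptions, and `coverage_rate` is a hypothetical name.

```python
def coverage_rate(observed, standard):
    """Fraction of distinct standard operations present in the observed sequence."""
    required = set(standard)
    if not required:
        return 0.0
    return len(required & set(observed)) / len(required)


print(coverage_rate([10, 30, 99], [10, 20, 30, 40]))  # 0.5
```

Under this reading the value rises as more of the task's operations are performed, so it behaves as a progress indicator and is unaffected by extra operations that do not belong to the task.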

2.4.4. Comprehensive Evaluation of Metrics

The mapping function is defined as follows:

For the current operation sequence O, the function f (O) returns the standard task sequence corresponding to that operation sequence.

(1) Feature Linear Weighting Model

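A comprehensive matching scoring model is constructed by integrating the above metrics using a weighted linear combination. The standard sequence type with the highest matching score is selected as the prediction result:

$$S_k = \alpha M_k + \beta O_k + \gamma C_k$$

where $S_k$ denotes the matching score for the standard task $k$, and the weight parameters $\alpha$, $\beta$, $\gamma$ are subject to the following conditions:

$$\alpha + \beta + \gamma = 1, \quad \alpha, \beta, \gamma \geq 0$$

The final task score is the maximum value among the scores, and the task $k^{*}$ that attains it is output as the recognized intent:

$$k^{*} = \arg\max_{k \in \{1, \ldots, L\}} S_k$$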

Based on the critical impact of various indicators on flight intention recognition, the weight values are analyzed and determined. The operation matching degree directly reflects the consistency between the pilot’s actions and the standard procedures, serving as the core basis for judging intentions, with a weight set as α = 0.4. The sequence matching degree considers the reasonable adjustment space for operational sequences in actual flights, with a weight set as β = 0.2. Coverage effectively verifies the completeness of operations and plays an important supporting role in the final determination, with the weight also set as γ = 0.4. Through Grid Search (GS) validation, this weight allocation scheme is identified as the optimal parameter selection, ensuring that the contribution scores of each indicator strictly match their actual importance.
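The weighted combination with the reported weights can be sketched in a few lines of Python. The weights 0.4, 0.2, and 0.4 come from the text. The metric values in the example are invented, the task names are used only as labels, and the function names are hypothetical.

```python
ALPHA, BETA, GAMMA = 0.4, 0.2, 0.4  # weights reported in the text


def weighted_scores(metrics, alpha=ALPHA, beta=BETA, gamma=GAMMA):
    """Combine (operation match, sequence match, coverage) into one score per task."""
    return {
        task: alpha * m + beta * o + gamma * c
        for task, (m, o, c) in metrics.items()
    }


def predict_task(metrics):
    """Return the task with the highest weighted score, plus all scores."""
    scores = weighted_scores(metrics)
    return max(scores, key=scores.get), scores


# Invented metric values for two of the five tasks.
example_metrics = {
    "PIPT": (0.75, 0.5, 0.5),
    "AWADRA": (0.25, 1.0, 0.25),
}
predicted, scores = predict_task(example_metrics)
print(predicted)  # PIPT
```

Here the second task has the better sequence match, but the lower weight on that metric means the first task still wins with a score of about 0.6 against about 0.4.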

(2) Feature Hierarchical Model

The contribution rate of indicators is used to assess the degree of impact each scoring indicator has on the model results. This is done by calculating the contribution rates of various indicators based on the scores obtained through linear weighting, determining the hierarchical structure of different indicators. The formula for the contribution rate is as follows:

where the contribution rate is computed for each evaluation indicator X (operation matching degree, sequence matching degree, or coverage rate), and the three score terms represent the individual scores of the separate indicators within the maximum task score.
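One form consistent with this description, assuming the contribution rate of an indicator is its weighted share of the maximum task score, is given below. The symbols $R_X$, $w_X$, $X_{k^*}$, and $k^*$ (the task with the maximum score) are introduced here for illustration and are not taken from the original notation.

```latex
R_X = \frac{w_X \, X_{k^*}}{S_{k^*}}, \qquad
X \in \{M, O, C\}, \qquad
(w_M, w_O, w_C) = (\alpha, \beta, \gamma), \qquad
S_{k^*} = \alpha M_{k^*} + \beta O_{k^*} + \gamma C_{k^*}
```

Under this assumption the three rates sum to 1. For example, with $\alpha = 0.4$, $\beta = 0.2$, $\gamma = 0.4$ and hypothetical scores $M_{k^*} = 0.75$, $O_{k^*} = 0.5$, $C_{k^*} = 0.5$, the maximum task score is $0.6$ and the rates are $R_M = 0.3/0.6 = 0.5$, $R_O = 0.1/0.6 \approx 0.167$, and $R_C = 0.2/0.6 \approx 0.333$.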

Based on the magnitude of the contribution rates, indicators are categorized into key indicators, primary indicators, and secondary indicators. If the key indicator yields a unique highest-scoring task, that task is output as the result of intent recognition. If not, the calculation continues with the next indicator in the hierarchy.
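The cascade can be sketched as follows. The sketch assumes that when an indicator leaves several tasks tied for the highest score, only those tied tasks are passed on to the next indicator. That narrowing rule, the function name, the example indicator order, and the metric values are all assumptions made for illustration.

```python
from math import isclose


def hierarchical_predict(metrics, order):
    """Walk the indicators in priority order until one task stands alone.

    metrics: {task: {indicator_name: score}}
    order:   indicator names from key to secondary indicator
    Returns the recognized task, or None if every indicator leaves a tie.
    """
    candidates = list(metrics)
    for indicator in order:
        best = max(metrics[task][indicator] for task in candidates)
        candidates = [
            task for task in candidates
            if isclose(metrics[task][indicator], best)
        ]
        if len(candidates) == 1:
            return candidates[0]
    return None


# Invented scores: the first indicator ties, the second one decides.
example = {
    "PIPT": {"coverage": 0.5, "operation": 0.5, "sequence": 1.0},
    "AWADRA": {"coverage": 0.5, "operation": 0.25, "sequence": 1.0},
}
result = hierarchical_predict(example, ("coverage", "operation", "sequence"))
print(result)  # PIPT
```

Returning `None` for an unresolved tie keeps the ambiguous case explicit, so a caller can decide separately how to handle it.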

3. Results

The dataset for this study comprises structured data collected from 30 trials involving 10 participants. It encompasses a total of 450 samples distributed across three testing conditions: 150 operation segment sequences categorized under five types of human–machine interaction intentions, 150 sequences representing abnormal operation orders, and 150 sequences of special orders. A comparative analysis was performed on the linear weighted model and the feature hierarchical model using the aforementioned data, followed by a comparison with existing typical recognition models.

3.1. Performance of Pilot Intent Model Based on Feature Linear Weighting

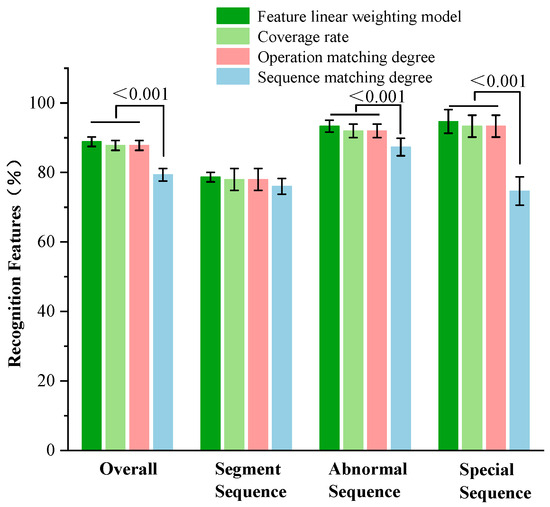

The model’s recognition accuracy is detailed in Table 2, while Figure 5 illustrates the comparative outcomes. The figures individually present the recognition accuracy when utilizing each of the three distinct metrics (coverage rate, operation matching degree, and sequence matching degree) as well as the overall recognition accuracy of pilot intent achieved through a linear weighted combination of these metrics. The data shown reflect the average results, along with standard deviations, derived from experiments conducted with 10 participants.

Table 2.

Recognition accuracy of individual features and linear weighted model.

Figure 5.

Comparison of recognition accuracy between individual features and linear weighting model.

The results demonstrate that the three feature indicators perform well when evaluated individually, especially the coverage rate and operation matching degree indicators, which both achieved an overall recognition rate of 87.78%. Integrating the features through linear weighting raises the overall accuracy to 88.89%, slightly higher than that of any individual feature indicator. It is important to note that the sequence matching degree exhibits a recognition accuracy that is significantly lower than that of the other indicators (p < 0.001) for segment, abnormal, and special sequences alike. This discrepancy may be attributed to the indicator’s dependence on the similarity in the order of sequences, which leads to reduced robustness.

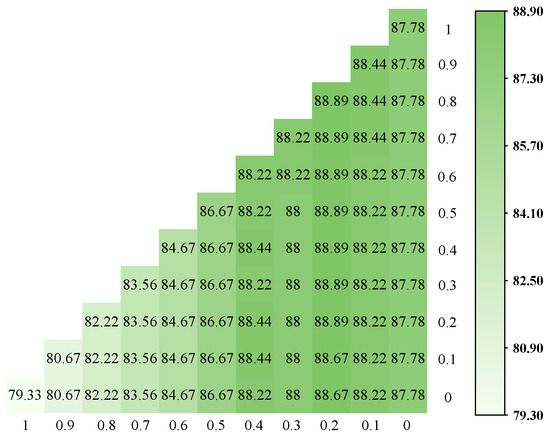

Figure 6 illustrates the recognition accuracy of the linear weighted model across different metric weight configurations. It presents the average recognition accuracy of the model over the three types of sequences (segment sequences, abnormal sequences, and special sequences) under varying weight assignments. The horizontal axis represents the weight assigned to the sequence matching degree, while the vertical axis denotes the weight assigned to the coverage rate.

Figure 6.

Recognition accuracy of the linear weighted model at different weights.

The sensitivity analysis over the weights of the three metrics (sequence matching degree, coverage, and operation matching degree) shows that raising the weight of sequence matching degree (e.g., to 1.00) lowers recognition accuracy to 79.33%, which suggests that sequence matching degree is not a critical factor in the model. When the weight of coverage lies in a moderate range (such as 0.20 to 0.40), recognition accuracy can reach 88.89%, underscoring the role of coverage in capturing data comprehensiveness and enhancing model adaptability. Likewise, assigning a high weight to operation matching degree (such as 0.60) raises accuracy to 88.89%, indicating that this metric is central to recognition performance across operational conditions.
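A weight sensitivity analysis of this kind can be sketched as a grid sweep over all weight triples that sum to 1. The sketch below is illustrative only: the grid resolution, the function names, and the placeholder evaluator `toy_accuracy` are assumptions, and a real evaluator would replay the recorded operation sequences and return the measured recognition accuracy.

```python
from typing import Callable, Iterator, List, Tuple

Weights = Tuple[float, float, float]  # (coverage, operation matching, sequence matching)


def weight_grid(steps: int = 5) -> Iterator[Weights]:
    """Yield every weight triple on a regular grid whose entries sum to 1."""
    for i in range(steps + 1):          # index of the sequence matching weight
        for j in range(steps + 1 - i):  # index of the coverage weight
            w_seq = i / steps
            w_cov = j / steps
            w_op = (steps - i - j) / steps  # remainder goes to operation matching
            yield (w_cov, w_op, w_seq)


def sweep(evaluate: Callable[[Weights], float],
          steps: int = 5) -> List[Tuple[Weights, float]]:
    """Evaluate every grid point and return the results sorted best first."""
    results = [(w, evaluate(w)) for w in weight_grid(steps)]
    return sorted(results, key=lambda item: item[1], reverse=True)


def toy_accuracy(w: Weights) -> float:
    """Placeholder evaluator (hypothetical): accuracy drops as sequence weight grows."""
    _, _, w_seq = w
    return 0.9 - 0.1 * w_seq


best_weights, best_acc = sweep(toy_accuracy)[0]
print(best_weights, best_acc)
```

Parameterizing the grid by the sequence matching and coverage weights, with the operation matching weight as the remainder, matches the two axes described for Figure 6.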

Further analysis, illustrated in Figure 7, shows that the similarity between the weather avoidance and active recognition task sequences in the standard sequence library is as high as 68% (calculated using Levenshtein distance [32]), while the average similarity among the five task types in the library is 43.9%. Note that the extracted segment sequences are 7 to 9 operational steps long, a range that may coincide with operational subsequences shared by multiple tasks and thereby introduce some ambiguity into the recognition results.

Figure 7.

Similarity of standard operation sequences for five predefined tasks.
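The similarity between two standard sequences can be sketched with a Levenshtein distance computed over operation tokens. The text does not state how the distance is converted into a percentage, so the normalization used here (one minus the distance divided by the longer sequence length) is an assumption, and the operation labels in the example are hypothetical.

```python
from typing import Sequence


def levenshtein(a: Sequence[str], b: Sequence[str]) -> int:
    """Edit distance between two sequences; insert, delete, substitute each cost 1."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # delete x from a
                            curr[j - 1] + 1,      # insert y into a
                            prev[j - 1] + cost))  # substitute x with y (or match)
        prev = curr
    return prev[-1]


def similarity(a: Sequence[str], b: Sequence[str]) -> float:
    """Assumed normalization: 1 - distance / length of the longer sequence."""
    longest = max(len(a), len(b))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(a, b) / longest


# Hypothetical operation labels for two short sequences.
seq_a = ["radar_mode", "radar_band", "radar_gain", "radar_reset"]
seq_b = ["radar_mode", "radar_band", "radar_gain", "scan_offset"]
print(similarity(seq_a, seq_b))
```

Treating each button press as one token, rather than comparing label strings character by character, keeps the distance in units of operational steps.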

3.2. Pilot Intent Recognition Model Based on Feature Hierarchy

3.2.1. Research on Feature Hierarchy of Indicator Contribution Rate

The score contribution rate denotes the proportion of the total score attributable to a specific factor or feature [33]. It quantifies how strongly that factor influences the final outcome by comparing its individual score with the overall score. The raw contribution rates of the features were normalized, and the average weighted score contribution of each feature is reported below.

Table 3 lists the average weighted score contributions and contribution rates of the three recognition features across all tasks. The contribution rate is 34.61% for coverage, 36.89% for operation matching degree, and 28.50% for sequence matching degree. Although the three rates are relatively balanced, operation matching degree and coverage play the most important roles in recognizing task intentions.
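The contribution rate described above can be sketched as each feature's weighted score divided by the sum of all weighted scores. The function name and the example scores below are hypothetical and chosen only to make the arithmetic easy to follow; they are not the values in Table 3.

```python
from typing import Dict


def contribution_rates(weighted_scores: Dict[str, float]) -> Dict[str, float]:
    """Share of the total score contributed by each feature (rates sum to 1)."""
    total = sum(weighted_scores.values())
    if total <= 0:
        raise ValueError("total score must be positive")
    return {name: score / total for name, score in weighted_scores.items()}


# Made-up weighted scores for one recognition result.
scores = {
    "coverage": 0.30,
    "operation_matching": 0.45,
    "sequence_matching": 0.25,
}
rates = contribution_rates(scores)
for name, rate in rates.items():
    print(f"{name}: {rate:.2%}")
```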

Table 3.

Average contribution score and contribution rate of recognition features.

Table 4 presents the contribution rates of the three recognition features across three distinct operational sequences. Notably, the contribution rate for coverage in segment sequences is the highest, reaching 37.12%. Conversely, in anomaly sequences, the operation matching degree exhibits the highest contribution rate at 36.72%. In special sequences, the contribution rates for coverage and operation matching degree are relatively comparable, standing at 35.30% and 35.90%, respectively.

Table 4.

Contribution rate of recognition features under three operation sequences.

In summary, both coverage and operation matching degree exhibit relatively high contribution rates in sequence recognition. However, the recognition accuracy for anomaly sequences within the linear weighted pilot intention recognition model is comparatively low. To improve recognition performance, we propose a feature hierarchy method aimed at developing a new recognition model based on an analysis of feature contribution rates. The structure of the feature hierarchy architecture is organized as follows: operation matching–coverage–sequence matching.
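One way to picture a feature hierarchy of this kind is as a layered filter: candidates are screened by the first feature, and only near-ties are passed to the next feature. The decision rule is not specified in the text, so the sketch below is purely an assumption. The `margin` threshold, the function name, and the example scores are all hypothetical.

```python
from typing import Dict, Sequence

# Layer order stated in the text: operation matching, coverage, sequence matching.
HIERARCHY = ("operation_matching", "coverage", "sequence_matching")


def hierarchical_recognize(candidates: Dict[str, Dict[str, float]],
                           order: Sequence[str] = HIERARCHY,
                           margin: float = 0.05) -> str:
    """Assumed rule: at each layer keep tasks within `margin` of the best score."""
    remaining = list(candidates)
    for feature in order:
        best = max(candidates[task][feature] for task in remaining)
        remaining = [task for task in remaining
                     if best - candidates[task][feature] <= margin]
        if len(remaining) == 1:
            break
    # If several tasks survive every layer, fall back to the first one listed.
    return remaining[0]


# Made-up scores for three candidate tasks (task labels from Appendix A).
candidates = {
    "PIPT": {"operation_matching": 0.80, "coverage": 0.70, "sequence_matching": 0.50},
    "AWADRA": {"operation_matching": 0.78, "coverage": 0.85, "sequence_matching": 0.40},
    "MCCCR": {"operation_matching": 0.50, "coverage": 0.90, "sequence_matching": 0.90},
}
print(hierarchical_recognize(candidates))
```

In this example the first layer discards the task with a low operation matching score despite its high coverage and sequence scores, and the second layer separates the two near-ties by coverage. Reordering `order` gives the alternative architectures under the same assumed rule.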

3.2.2. Model Performance

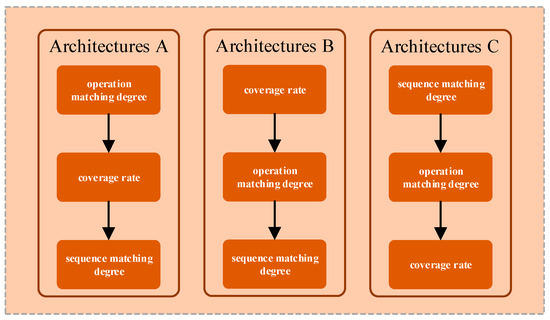

To validate the universality of the feature hierarchical strategy, this study constructs and compares the following three hierarchical architectures, as illustrated in Figure 8.

Figure 8.

Order of feature metrics for architectures A, B, and C.

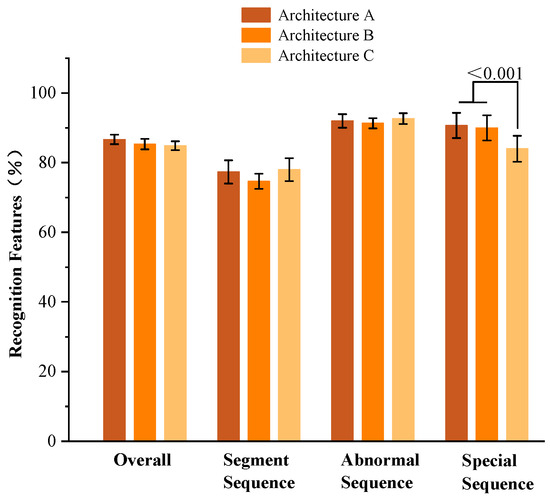

The results of the testing accuracy are presented in Table 5, which details the model recognition accuracy across the three feature hierarchical architectures. Additionally, a comparison of the accuracy among the different models is illustrated in Figure 9.

Table 5.

Recognition accuracy data of linear weighted model and feature hierarchical model.

Figure 9.

Comparison of recognition accuracy for feature hierarchical models A, B, and C.

Overall, the three hierarchical architectures perform comparably, with accuracies of approximately 85%. Architecture A achieves a marginally higher recognition accuracy (86.77%) than the other architectures, but the differences among the architectures are not statistically significant. Across sequence types, Architecture C performs slightly better on anomaly sequences, with an accuracy of 92.67%, whereas Architecture A performs best on special sequences, with an accuracy of 90.67%. On segment sequences, however, Architecture C significantly underperforms the other two models (p < 0.001), with an accuracy of only 84.00%. These results suggest that particular feature hierarchies may offer advantages for particular subtasks. They are also consistent with the feature contribution rates obtained from the linear weighted model, reinforcing the notion that operation matching degree and coverage account for most of the weight in sequence comparison.

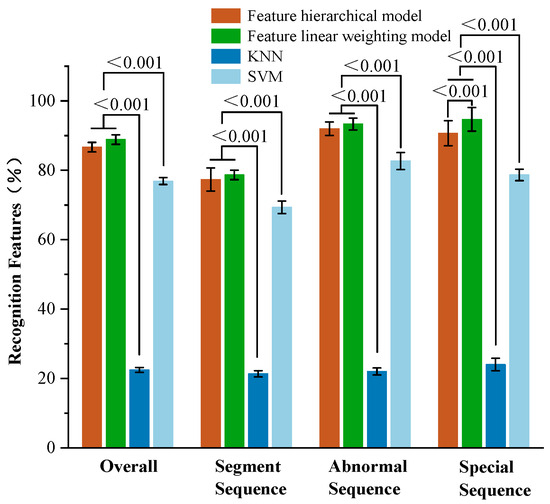

3.3. Comparative Study

Table 6 reports the recognition accuracy of four models evaluated on the same training dataset, and Figure 10 illustrates the comparison. The models are the feature linear weighted model, the feature hierarchical Architecture A model, and the traditional classifiers KNN and SVM. The comparison highlights the differences in recognition accuracy and assesses the effectiveness of the proposed intent recognition models relative to existing models, clarifying the strengths and weaknesses of each model in intent recognition.

Table 6.

Comparison of model recognition accuracy.

Figure 10.

Comparison of the accuracy across four models.

The results reveal no significant difference in overall recognition accuracy between the feature hierarchical model and the linear weighted model (p > 0.05), indicating that the two models perform similarly on the data as a whole. However, the linear weighted model is more accurate than the feature hierarchical model on segment sequences, anomaly sequences, and special sequences considered separately. Notably, on special sequences the linear weighted model achieves an accuracy of 94.67%, which significantly surpasses that of the feature hierarchical model. This outcome can be attributed to the linear weighted model’s ability to quantify the contributions of independent feature metrics. Although the feature hierarchical model is, in theory, designed to capture interactions between features, its performance in the current task may be constrained by insufficient optimization of the hierarchical divisions. In contrast, the KNN model performs notably worse, with recognition accuracy significantly lower than that of the two models proposed in this study (p < 0.001). The SVM model achieves a moderate level of recognition accuracy, but its performance remains average both overall and on individual sequence types.

4. Conclusions

In light of the complex task scenarios characterized by the dynamic changes in human, machine, and environmental information in the field of aviation, this paper proposes a multidimensional intention recognition model based on operational sequence comparison. This model categorizes the similarity between real-time operational sequences and standard sequences into three feature scoring metrics. By constructing a linear weighted model for the feature metrics and a hierarchical model, it facilitates the recognition of pilots’ intentions, allowing for adaptability to the dynamically changing flight environment while accurately inferring the pilots’ operational intent.

This study selects five typical flight tasks as case studies and establishes standard operating procedures through task decomposition, systematically outlining the standard operational sequences under different flight intentions. A flight cockpit simulation platform was built using Python to simulate a realistic flying environment, and 10 participants with flight simulation experience were recruited, resulting in the collection of 450 sets of experimental data under three operational conditions. The research employs operation matching degree, sequence matching degree, and coverage to construct a scoring metric system. Two intention recognition models were developed through linear weighting and hierarchical construction of the scoring metrics to infer pilots’ intentions.

The experimental results indicate that, even in data-scarce situations, models built exclusively on standard operational sequences, utilizing linear weighting and feature stratification, achieve superior recognition accuracy and robustness across three metrics compared to traditional recognition models. These models can interpret pilot intentions dynamically and in real time, thus improving the adaptability and safety of intelligent human–machine interaction systems in complex aviation tasks. This study presents an innovative solution for pilot intention recognition technology. However, this research has some limitations. First, the limited scale and diversity of the dataset may restrict the model’s generalization capability. Second, the model’s applicability across various flight task environments requires further validation. Future work will concentrate on intent prediction and active recognition, broaden the diversity of the dataset, and evaluate the model in higher-fidelity simulators or real flight environments, with the aim of further enhancing the model’s practicality and reliability.

Author Contributions

Conceptualization, investigation, methodology, formal analysis, validation, visualization, and writing—original draft preparation, X.M.; conceptualization, formal analysis, investigation, methodology, and resources, L.D.; conceptualization, data curation, funding acquisition, methodology, project administration, resources, supervision, and writing—review and editing, X.S.; investigation, methodology, and funding acquisition, L.P.; investigation and resources, investigation and project administration, Y.D.; investigation, methodology, and visualization, X.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author. Due to privacy concerns, they are not publicly available.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Table A1.

Standard operational sequence for PIPT.

| Subtask | Execution Actions |
|---|---|
| Initiate Radar Monitoring | Radar sub-mode shortcut button group, radar frequency/band selection switch, scan angle adjustment knob, beam width adjustment knob, radar gain/sensitivity adjustment knob, radar emergency reset button |
| Observe HUD Alert Information | HUD alert prompt area, HUD weapon aiming crosshair, HUD attitude guidance line |
| Use HMD for Assisted Identification | HMD attitude sensor, HMD navigation guidance line, HMD night vision enhancement module |
| Target Interception and Lock | Target interception/forced tracking button, target handover data link control key, target classification and filtering button, joystick radar linkage button, joystick weapon selection and interception button |
| System Status Control | System reset master switch, autopilot mode switch, autopilot altitude hold knob, autopilot heading lock knob |

Table A2.

Standard operational sequence for AWADRA.

| Subtask | Execution Actions |
|---|---|
| Radar Weather Monitoring | Radar sub-mode shortcut button group, radar frequency/band selection switch, radar gain/sensitivity adjustment knob, radar scan center offset button |
| Adjust Autopilot Route Parameters | Autopilot mode switch, autopilot altitude hold knob, autopilot heading lock knob, autopilot speed control slider |
| MFD Interface Operation and Information Display | MFD touchscreen, MFD mode switch knob, MFD system status indicator lights |
| Route Adjustment and Fuel Management | FMC keyboard input module, fuel sensors (capacitive/temperature/density) |
| System Reset and Alarm Response | System reset master switch, HUD alert prompt area, frequency conflict alert light |

Table A3.

Standard operational sequence for FMS-RCFO.

| Subtask | Execution Actions |
|---|---|
| IRS System Calibration | IRS calibration indicator light, system reset master switch |
| FMC Data Input and Verification | FMC keyboard input module, FMC control panel knobs, physical keyboard parameter setting keys |
| MFD Mode Switching and Display | MFD touchscreen, MFD mode switch knob |
| Fuel Sensing and Management | Fuel sensors (capacitive/temperature/density), automatic throttle switching switch, throttle control knob |
| Autopilot Altitude and Heading Control | Autopilot altitude hold knob, autopilot heading lock knob, autopilot speed control slider |
| Blind Landing Instrument and ILS Control | ILS control panel knob, system monitoring of ILS signal stability, HUD alert prompt area |

Table A4.

Standard operational sequence for LVILS-AP.

| Subtask | Execution Actions |
|---|---|
| System Monitoring ILS Signal Stability | System monitoring of ILS signal stability, ILS control panel knob |
| Autopilot Mode Configuration | Autopilot mode switch, autopilot heading lock knob, autopilot altitude hold knob |
| FMC Input and Route Confirmation | FMC keyboard input module, FMC control panel knobs |
| Radar and Target Recognition Assistance | Radar sub-mode shortcut button group, radar frequency/band selection switch, radar gain/sensitivity adjustment knob, radar emergency reset button |
| HUD and HMD Navigation Display | HUD alert prompt area, HUD weapon aiming crosshair, HUD attitude guidance line, HMD navigation guidance line |
| Throttle and Flight Control Adjustment | Throttle control knob, joystick flight control knob, joystick radar linkage button, joystick weapon selection and interception button |
| System Alarm Handling | System reset master switch, frequency conflict alert light |

Table A5.

Standard operational sequence for MCCCR.

| Subtask | Execution Actions |
|---|---|
| Communication Frequency Selection and Control | Communication control panel knob, frequency conflict alert light, emergency communication button |
| FMC and MFD Coordinated Operation | FMC keyboard input module, MFD touchscreen, MFD mode switch knob |
| Radar and Equipment Status Monitoring | Radar sub-mode shortcut button group, radar frequency/band selection switch, radar gain/sensitivity adjustment knob |
| Joystick Control Related Buttons | Joystick radar linkage button, joystick weapon selection and interception button, joystick emergency operation button |
| System Alarm and Reset | HUD alert prompt area, system reset master switch |

References

- Duric, Z.; Gray, W.; Heishman, R.; Li, F.; Rosenfeld, A.; Schoelles, M.; Schunn, C.; Wechsler, H. Integrating perceptual and cognitive modeling for adaptive and intelligent human-computer interaction. Proc. IEEE 2002, 90, 1272–1289.

- Kong, Z.; Ge, Q.; Pan, C. Current status and future prospects of manned/unmanned teaming networking issues. Int. J. Syst. Sci. 2024, 56, 866–884.

- Miller, C.A.; Hannen, M.D. The Rotorcraft Pilot’s Associate: Design and evaluation of an intelligent user interface for cockpit information management. Knowl. Base Syst. 1999, 12, 443–456.

- Karaman, C.C.; Sezgin, T.M. Gaze-based predictive user interfaces: Visualizing user intentions in the presence of uncertainty. Int. J. Hum. Comput. Stud. 2018, 111, 78–91.

- Cig, C.; Sezgin, T.M. Gaze-based prediction of pen-based virtual interaction tasks. Int. J. Hum. Comput. Stud. 2015, 73, 91–106.

- Wang, H.; Pan, T.; Si, H.; Li, Y.; Jiang, N. Research on influencing factor selection of pilot’s intention. Int. J. Aero. Eng. 2020, 2020, 4294538.

- Wei, D.; Chen, L.; Zhao, L.; Zhou, H.; Huang, B. A vision-based measure of environmental effects on inferring human intention during human robot interaction. IEEE Sens. J. 2022, 22, 4246–4256.

- Zhang, R.; Qiu, X.; Han, J.; Wu, H.; Li, M.; Zhou, X. Hierarchical intention recognition framework in intelligent human–computer interactions for helicopter and drone collaborative wildfire rescue missions. Eng. Appl. Artif. Intell. 2025, 143, 110037.

- Chen, X.-L.; Hou, W.-J. Gaze-based interaction intention recognition in virtual reality. Electronics 2022, 11, 1647.

- Koochaki, F.; Najafizadeh, L. Predicting intention through eye gaze patterns. In Proceedings of the IEEE Biomedical Circuits and Systems Conference (BioCAS)—Advanced Systems for Enhancing Human Health, Cleveland, OH, USA, 17–19 October 2018; pp. 25–28.

- Dong, L.; Chen, H.; Zhao, C.; Wang, P. Analysis of single-pilot intention modeling in commercial aviation. Int. J. Aero. Eng. 2023, 2023, 9713312.

- Huang, W.; Wang, C.; Jia, H. Ergonomics analysis based on intention inference. J. Intell. Fuzzy Syst. 2021, 41, 1281–1296.

- Feleke, A.G.; Bi, L.; Fei, W. EMG-based 3D hand motor intention prediction for information transfer from human to robot. Sensors 2021, 21, 1316.

- Lin, X.; Zhang, J.; Zhu, Y.; Liu, W. Simulation study of algorithms for aircraft trajectory prediction based on ADS-B technology. In Proceedings of the Asia Simulation Conference/7th International Conference on System Simulation and Scientific Computing, Beijing, China, 10–12 October 2008.

- Xia, J.; Chen, M.; Fang, W. Air combat intention recognition with incomplete information based on decision tree and GRU network. Entropy 2023, 25, 671.

- Zhou, T.; Chen, M.; Wang, Y.; He, J.; Yang, C. Information entropy-based intention prediction of aerial targets under uncertain and incomplete information. Entropy 2020, 22, 279.

- Tong, T.; Setchi, R.; Hicks, Y. Context change and triggers for human intention recognition. Procedia Comput. Sci. 2022, 207, 3826–3835.

- Xiang, W.; Li, X.; He, Z.; Su, C.; Cheng, W.; Lu, C.; Yang, S. Intention estimation of adversarial spatial target based on fuzzy inference. Intell. Autom. Soft Comput. 2023, 35, 3627–3639.

- Dubois, D.; Hájek, P.; Prade, H. Knowledge-driven versus data-driven logics. J. Logic Lang. Inf. 2000, 9, 65–89.

- Gui, C.; Zhang, L.; Bai, Y.; Shi, G.; Duan, Y.; Du, J.; Wang, X.; Zhang, Y. Recognition of flight operation action based on expert system inference engine. In Proceedings of the 11th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 24–25 August 2019.

- Jang, Y.-M.; Mallipeddi, R.; Lee, S.; Kwak, H.-W.; Lee, M. Human intention recognition based on eyeball movement pattern and pupil size variation. Neurocomputing 2014, 128, 421–432.

- Qi, H.; Zhang, L.; Li, S.; Fu, Y. The identification method research for the helicopter flight based on decision-tree-based support vector machine with the parameter optimization. In Proceedings of the 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017.

- Kang, J.-S.; Park, U.; Gonuguntla, V.; Veluvolu, K.C.; Lee, M. Human implicit intent recognition based on the phase synchrony of EEG signals. Pattern Recogn. Lett. 2015, 66, 144–152.

- Liu, S. Aviation Safety Risk Analysis and Flight Technology Assessment Issues. arXiv 2023, arXiv:2309.12324.

- Bejani, M.M.; Ghatee, M. A systematic review on overfitting control in shallow and deep neural networks. Artif. Intell. Rev. 2021, 54, 6391–6438.

- Cambasi, H.; Kuru, O.; Amasyali, M.F.; Tahar, S. Comparison of dynamic bayesian network tools. In Proceedings of the Innovations in Intelligent Systems and Applications Conference (ASYU), Izmir, Turkey, 31 October–2 November 2019.

- Pariès, J.; Wreathall, J.; Woods, D.D.; Hollnagel, E. Resilience Engineering in Practice: A Guidebook; Ashgate Publishing, Ltd.: London, UK, 2012; Volume 94, p. 291.

- Sorensen, L.J. Cognitive Work Analysis: Coping with Complexity. Ergonomics 2010, 53, 139.

- Visser, R.; Peters, T.M.; Scharlau, I.; Hammer, B. Trust, distrust, and appropriate reliance in (X) AI: A conceptual clarification of user trust and survey of its empirical evaluation. Cogn. Syst. Res. 2025, 91, 101357.

- Tang, W.; Mao, K.Z.; Mak, L.O.; Ng, G.W. Adaptive Fuzzy Rule-Based Classification System Integrating Both Expert Knowledge and Data. In Proceedings of the International Conference on Tools with Artificial Intelligence, Athens, Greece, 7–9 November 2012.

- Stanton, N.A. Hierarchical task analysis: Developments, applications, and extensions. Appl. Ergon. 2006, 37, 55–79.

- Beernaerts, J.; Debever, E.; Lenoir, M.; De Baets, B.; Van de Weghe, N. A method based on the Levenshtein distance metric for the comparison of multiple movement patterns described by matrix sequences of different length. Expert Syst. Appl. 2019, 115, 373–385.

- Ribeiro, M.; Singh, S.; Guestrin, C. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Diego, CA, USA, 12–17 June 2016; pp. 1135–1144.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).