1. Introduction

Air traffic and, correspondingly, the workload of air traffic controllers (ATCOs) are expected to increase in the future [1,2], while there is an ongoing ATCO shortage in many parts of the world [3,4]. Accordingly, solutions are needed that support ATCOs in managing current and future demands. According to Mertes et al. [5], as early as 1969 the Air Traffic Control Advisory Committee (ATCAC) of the US Department of Transportation (DOT) predicted significant growth in air traffic. This led the committee to recommend future systems with higher levels of automation, with the goal of making highly automated systems operational between 1995 and 2020 [5]. As noted by Jenney and Ratner [6], this high level of automation was intended to automate approximately 75–80% of tasks. In such a system, human operators were envisioned to work collaboratively with automation in different roles [6]. The ATCAC’s vision for increased automation aligns with the predictions of Hunt and Zellweger in 1987 [7]. They projected that by 1995, many planning and control tasks traditionally performed by ATCOs would be handled by computers. Beyond 1995, computers were expected to manage routine operations autonomously, such as generating and transmitting clearances directly to aircraft. In such automated systems, the role of ATCOs would shift to supervising the system and handling exceptions [7].

However, this prediction did not come true and ATCOs managing the upper airspace still have a wide variety of tasks. According to Kallus et al. [

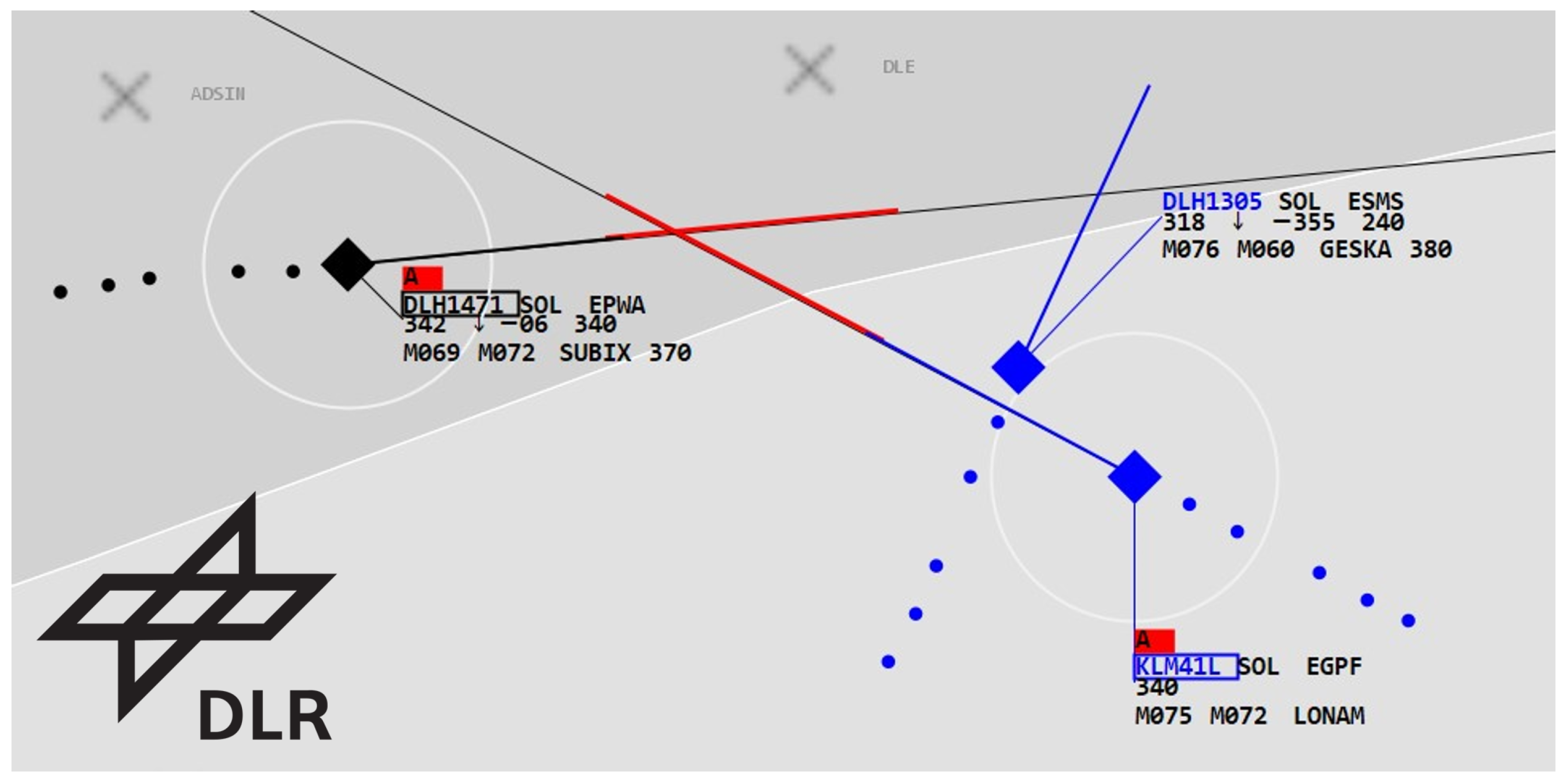

8], these can be summarized as follows: (1) maintaining situation awareness, (2) developing and receiving a sector control plan/conflict avoidance, (3) making decisions on control actions, (4) solving conflicts, (5) providing tactical air traffic management (ATM), and (6) other complementary tasks. All these tasks contribute to ensuring safe operations. While most of the mentioned tasks are less time-critical and focus more on pre-planning and maintaining situation awareness, “solving conflicts” stands out as a high priority task that is also time-critical. A conflict occurs when two or more aircraft are projected to violate minimum separation standards, as shown in

Figure 1. Because such conflicts demand timely and well-considered action, en-route ATCOs must continuously monitor traffic, anticipate potential conflicts, and implement, if necessary, resolutions to maintain flight safety. Therefore, conflict advisory tools play a crucial role in supporting en-route ATCOs by suggesting possible resolutions in the future. Accordingly, a notable early development during the 1980s was the application of symbolic artificial intelligence (AI) in the form of rule-based expert systems to automate conflict resolution [

9]. This demonstrated the potential of AI to automate tasks of ATCOs and, therefore, potentially reduce the workload of ATCOs. This set the stage for research into more sophisticated AI technologies to support ATCOs.
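The notion of a projected loss of separation can be illustrated with a minimal closest-point-of-approach (CPA) check. This sketch rests on several assumptions:

- straight-line, constant-velocity motion in a flat local frame, with positions in NM and velocities in NM/min;
- level flight, so the vertical check uses the current altitudes only;
- illustrative minima of 5 NM horizontally and 1000 ft vertically.

Operational conflict probes use far richer trajectory prediction. All names here are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Aircraft:
    x: float    # east position [NM]
    y: float    # north position [NM]
    vx: float   # east ground speed [NM/min]
    vy: float   # north ground speed [NM/min]
    alt: float  # altitude [ft], assumed constant (level flight)


def closest_point_of_approach(a, b, horizon=20.0):
    """Return (time to CPA [min], horizontal distance at CPA [NM]).

    Straight-line projection; the CPA time is clamped to [0, horizon].
    """
    dx, dy = b.x - a.x, b.y - a.y          # relative position
    dvx, dvy = b.vx - a.vx, b.vy - a.vy    # relative velocity
    dv2 = dvx * dvx + dvy * dvy
    # t* = -(dp . dv) / |dv|^2 minimizes |dp + t * dv|; parallel tracks keep a constant distance
    t = 0.0 if dv2 == 0 else -(dx * dvx + dy * dvy) / dv2
    t = min(max(t, 0.0), horizon)
    return t, math.hypot(dx + dvx * t, dy + dvy * t)


def in_conflict(a, b, h_min=5.0, v_min=1000.0, horizon=20.0):
    """Projected conflict: horizontal AND vertical minima violated at the CPA."""
    t, d = closest_point_of_approach(a, b, horizon)
    conflict = d < h_min and abs(a.alt - b.alt) < v_min
    return conflict, t, d


# Two aircraft on opposite tracks at 480 kt (8 NM/min), 2 NM lateral offset, same level
a = Aircraft(0, 0, 8, 0, 35000)
b = Aircraft(80, 2, -8, 0, 35000)
print(in_conflict(a, b))  # (True, 5.0, 2.0)
```

Assigning one of the aircraft a level 1000 ft higher removes the projected conflict in this sketch, because the vertical minimum is then no longer violated.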

With the rapid advancements in AI during the 2010s and 2020s, particularly in deep learning, its potential applications in aviation and air traffic control (ATC) have garnered significant attention. Several aviation organizations have responded by publishing AI roadmaps [10,11]. Accordingly, current research investigates AI-based decision support and automation for tasks such as conflict resolution [12]. A significant challenge researchers face is the interaction between AI and human ATCOs. An important aspect of this interaction is the trustworthiness of AI, which goes hand in hand with the ATCO’s acceptance of the system [11]. Previous research has shown that ATCOs are often reluctant to relinquish control, which could result in low acceptance of highly automated systems [13]. Even for low-level automation in the form of recommender systems, low acceptance could lead ATCOs to frequently reject the system’s advisories. As a result, the full potential of these tools would not be realized.

Accordingly, current research is investigating different approaches to increase trust in, or acceptance of, these systems. A common approach in many domains, including aviation, is to increase transparency or explainability. Würfel et al. [14] demonstrated this approach by developing a pilot advisory system with explainability components to meet the trust requirements for AI set by EASA [11]. Ternus [15] investigated the influence of transparency on user trust in such a pilot advisory system. Similar concepts have also been implemented in the ATC domain. For example, Tyburzy et al. proposed a human–machine interaction display for ATC to improve transparency [16], and Westin et al. [17] investigated the effects of more transparent conflict resolution advisories.

Another approach aims to align system-generated advisories with human decision-making patterns. This makes the advisories more intuitive and thus easier for ATCOs to understand and, therefore, accept [18]. This direction holds significant potential in aviation and, more specifically, in ATC. As previously discussed, one of the core responsibilities of ATCOs is resolving conflicts between aircraft [8]. Westin et al. explore the broader implications of personalization in human–automation interaction, and they explicitly highlight air traffic conflict resolution as a relevant use case for personalized systems [18]. Typically, ATCOs can resolve conflicts using lateral, vertical, or speed-based maneuvers. Lateral resolutions involve assigning new headings or directing aircraft to alternative waypoints, while vertical resolutions are achieved by assigning different flight levels. Additionally, controllers may resolve conflicts by adjusting aircraft speed. To address a conflict, an ATCO may issue one or multiple instructions to one or several aircraft. This makes conflict resolution a complex task with many different strategies that ATCOs can follow to find a suitable solution. Accordingly, it is an ideal problem for implementing personalized advisories and testing the effects of different personalization approaches.

Therefore, this literature review explores current works that contribute to this concept in the domain of air traffic conflict resolution and provides an overview of the opportunities and open challenges of this research direction.

First, papers that aim to identify different strategies that ATCOs use to resolve conflicts are investigated. After that, the technologies available to model these strategies and develop personalized systems capable of generating advisories tailored to either individual users or groups are examined. Then, the paper provides an overview of approaches that aim to combine and balance the benefits of personalized advisories with advisories that strive for efficiency. Finally, papers that examine the influence of personalization on the acceptance rate of conflict resolution advisories are reviewed. Accordingly, the research questions to be answered by this literature review are as follows:

RQ 1: What strategies do ATCOs use to resolve conflicts and how can these strategies be identified?

RQ 2: What methods can be used to train a personalized model?

RQ 3: What approaches can be used to balance personalized advisories with advisories that strive for efficiency?

RQ 4: Does the personalization of conflict resolution advisories (group-based or individual) improve their acceptance rates among ATCOs?

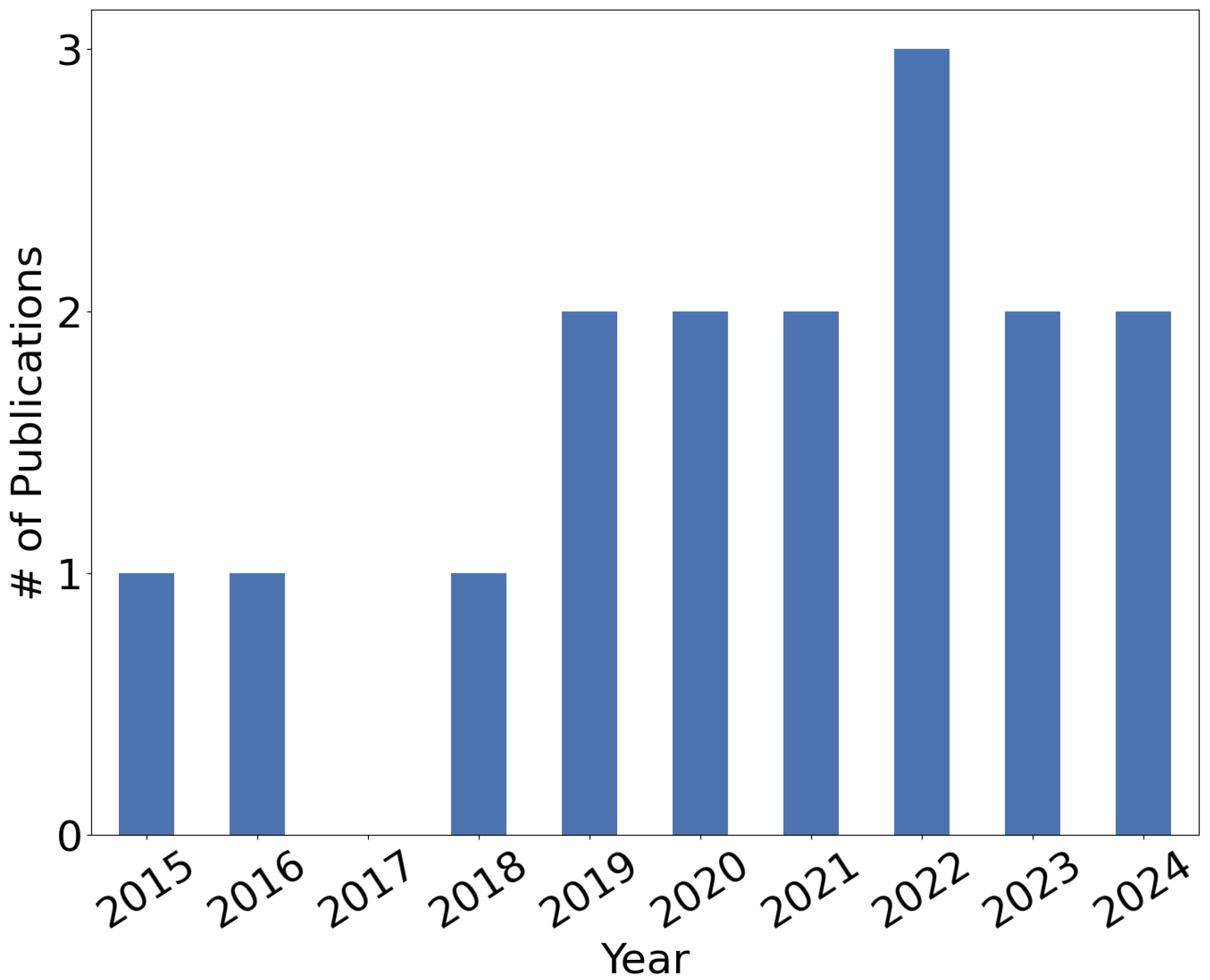

The remainder of this paper is structured as follows: First, Section 2 discusses the effects and benefits of personalized systems. Then, Section 3 describes the method of the conducted literature review. After that, current publication trends are analyzed in Section 4. This is followed by the core of the paper, Section 5, which presents the results of the conducted review. Section 6 then explains the opportunities resulting from current research, and Section 7 covers the challenges. Finally, Section 8 concludes the results of the review, and Section 9 provides an outlook.

2. Personalization in Artificial Intelligence

The use of personalization has proven to be an effective approach for increasing user acceptance and system efficiency across a range of domains. In web-based recommender systems, for instance, the goal is to tailor content to the needs and preferences of individual users. These systems typically analyze user behavior to detect patterns in interests and preferences. Methods such as clustering are used to uncover underlying structures in the data, enabling the generation of targeted and relevant suggestions [19]. Personalized recommendations have been shown to enhance user engagement and satisfaction [20].

Techniques such as behavioral cloning are also applied in more complex and interactive domains, such as autonomous driving. In these cases, systems are trained on demonstrations from human drivers to learn behaviors like steering or lane following [21,22]. While the primary goal in this context is often functional performance rather than personalization, the system may nonetheless inherit individual driving styles as a side effect. This form of imitation learning typically requires relatively little training data to achieve reasonable performance [21]. However, the resulting personalization can also be a limitation: The human driver may not always demonstrate ideal behavior or cover rare but critical scenarios, such as how to recover from mistakes [21].

In other scenarios, system behavior is shaped through human feedback: Models are guided to act in ways that align with human expectations and are discouraged when deviating from them. This helps prevent undesired or unpredictable behavior and supports applications in control tasks. It also extends to domains like recommender systems and generative models, where systems are adapted to better reflect the preferences and goals of individual users [23].

The observed benefits in other domains using personalized systems suggest that similar approaches could be valuable in ATC. Given the central role of conflict resolution in the work of ATCOs, it is promising to investigate how the personalization of system advisories could increase the acceptance of proposed solutions. Accordingly, this paper reviews and analyzes existing research that explores personalization in conflict resolution to support ATCOs and discusses the potential benefits, challenges, and implications of such approaches.

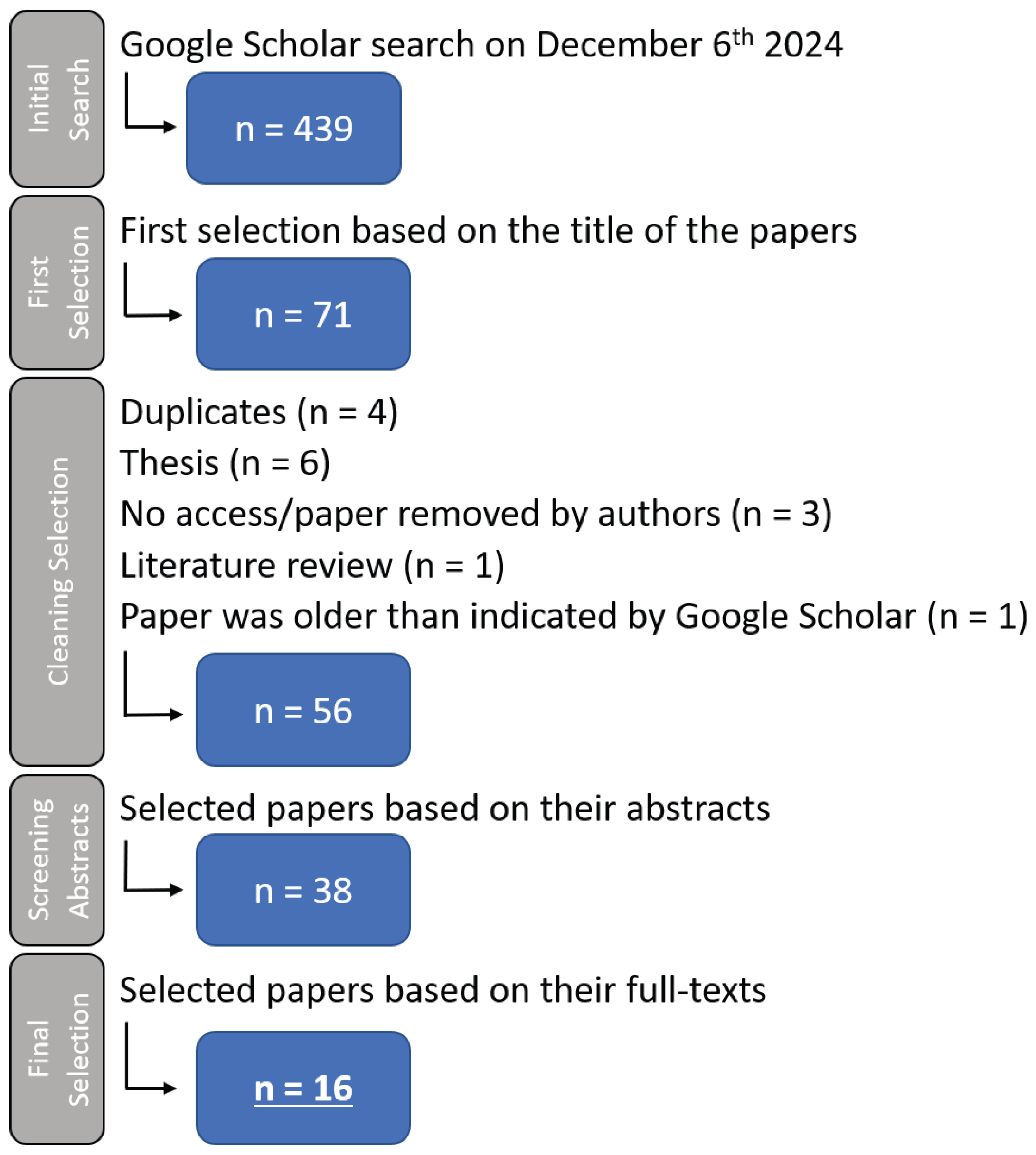

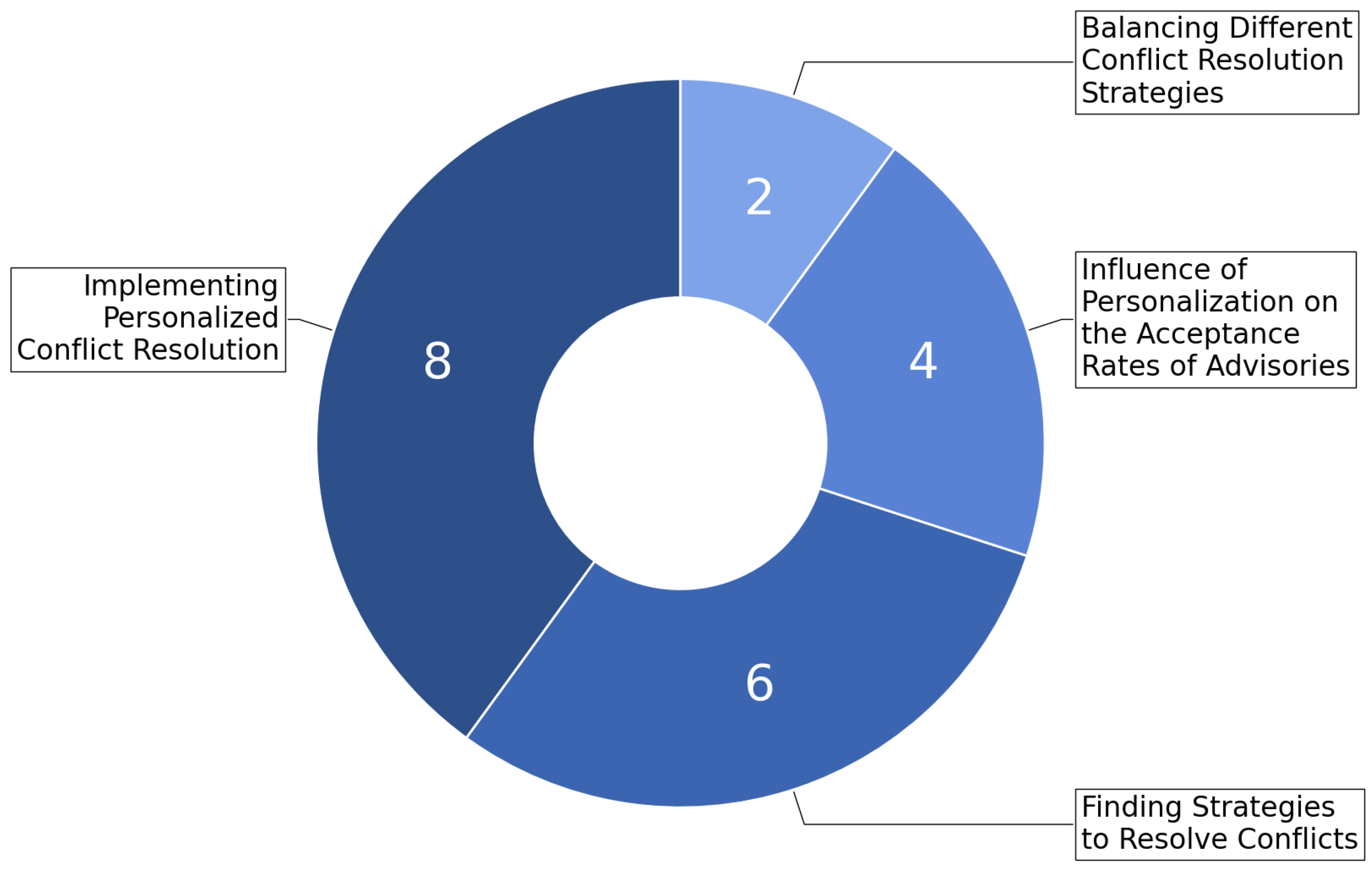

5. Thematic Analysis of Human-Centered Conflict Resolution

To further explore this research landscape, the 16 selected papers were grouped into thematic categories based on their primary focus and contributions. An overview of these categories can be seen in Figure 4, and an overview of the papers in Table 1. Given the interdisciplinary nature of the field, some papers were relevant to multiple categories and were, therefore, included in more than one grouping. The topics identified are “Finding Strategies to Resolve Conflicts”, “Implementing Personalized Conflict Resolution”, “Balancing Different Conflict Resolution Strategies”, and, lastly, “Influence of Personalization on the Acceptance Rates of Advisories”.

5.1. Finding Strategies to Resolve Conflicts

Recent research provides insights into the decision-making strategies of ATCOs, revealing individual differences, inconsistencies, and preferences in conflict resolution methods. These findings have to be taken into account when implementing personalized conflict resolution advisories. Zakaria et al. [31] classified ATCO strategies into three categories: altitude changes, heading changes, and a mixed approach. Since speed changes are rarely used to resolve conflicts, they were not considered a viable strategy [31]. Some studies, such as Westin et al. [25], found that heading changes were the most frequently used actions to resolve conflicts. Others, such as Palma et al. [29] and Zakaria et al. [31], reported a preference for altitude changes, with heading and speed changes less favored. Trapsilawati et al. [30] conducted a study with undergraduate students trained for only three hours in ATC procedures. Their findings showed a strong preference for altitude changes (72%), followed by speed changes (19%) and heading changes (9%), diverging from the behavior of professional ATCOs. This suggests that experiments with students are of limited value in this context, given that their approaches differ greatly from those of professional ATCOs. Furthermore, the findings of Palma et al. [29] suggest that strategy selection is often driven by three main considerations: the characteristics of the conflict, a desire to improve customer service (e.g., providing more direct routes), and sector-specific constraints.

Beyond strategy selection, research highlights differences in how consistently ATCOs apply their preferred approaches. Guleria et al. analyzed decision-making patterns across multiple ATCOs, initially studying two individuals [27] and later expanding their research to eight [28]. Their results showed that ATCOs differ not only in their strategic preferences but also in their adherence to them. Some ATCOs demonstrated high consistency in their maneuver choices, while others showed greater flexibility. The follow-up study [28] suggested that experience may play a role, with more experienced ATCOs adhering more consistently to a particular strategy. This has implications for incorporating ATCO behavior into automation models, as inconsistencies could affect the reliability of personalized recommendations. Inconsistency is a recurring theme across multiple studies. Westin et al. [25] also found that more experienced ATCOs seem to be more consistent. To address this problem, Regtuit et al. [26] proposed an approach to distinguish between an ATCO’s primary strategy and occasional deviations. Their approach uses k-means clustering with the average silhouette width to separate strategic preferences from inconsistencies. They applied this method to a dataset in which an ATCO was instructed to behave inconsistently across three predefined strategies. The algorithm successfully identified the strategies used, demonstrating the potential of such analytical tools for understanding decision-making [26].
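The general idea of combining k-means with the average silhouette width can be shown in a small, dependency-free sketch. Here, the silhouette width is used to choose the number of strategy clusters. This is one common way of combining the two and is an assumption, not a reproduction of the pipeline of Regtuit et al. [26].

The following elements are also hypothetical:

- the two-dimensional feature vectors, for example a normalized heading-change and altitude-change magnitude per resolved conflict;
- the data points;
- all function names.

A real analysis would typically use a library implementation such as scikit-learn.

```python
import math


def kmeans(points, k, iters=100):
    """Lloyd's k-means with deterministic farthest-point initialization."""
    centroids = [points[0]]
    while len(centroids) < k:
        # Next centroid: the point farthest from its nearest existing centroid
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    for _ in range(iters):
        labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        new = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            # Keep the old centroid if a cluster becomes empty
            new.append(tuple(sum(x) / len(members) for x in zip(*members)) if members else centroids[j])
        if new == centroids:
            break
        centroids = new
    return labels


def silhouette(points, labels):
    """Average silhouette width; points in singleton clusters score 0 by convention."""
    clusters = set(labels)
    if len(clusters) < 2:
        return 0.0
    scores = []
    for i, p in enumerate(points):
        def mean_dist(c):
            ds = [math.dist(p, q) for j, q in enumerate(points) if labels[j] == c and j != i]
            return sum(ds) / len(ds) if ds else None
        a = mean_dist(labels[i])                       # cohesion
        if a is None:
            scores.append(0.0)
            continue
        b = min(mean_dist(c) for c in clusters if c != labels[i])  # separation
        m = max(a, b)
        scores.append((b - a) / m if m > 0 else 0.0)
    return sum(scores) / len(scores)


def best_k(points, k_values=range(2, 6)):
    """Choose the number of clusters with the highest average silhouette width."""
    scores = {k: silhouette(points, kmeans(points, k)) for k in k_values}
    return max(scores, key=scores.get), scores


# Hypothetical resolutions of one ATCO drawn from three distinct strategies
points = [(0, 0), (0.1, 0), (0, 0.1), (0.1, 0.1),
          (5, 0), (5.1, 0), (5, 0.1), (5.1, 0.1),
          (0, 5), (0.1, 5), (0, 5.1), (0.1, 5.1)]
k, scores = best_k(points)
print(k)  # 3
```

Points that do not fit well into their cluster have low or negative silhouette values. This is one way such a method can flag occasional deviations from a primary strategy.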

These studies highlight the complexity of ATCO decision-making in conflict resolution, revealing that ATCOs adopt a variety of strategies that can differ not only between different ATCOs but also within the same ATCO over time. These strategies are influenced by individual decision-making tendencies, experience levels, and contextual factors such as regional conventions, rules, and airspace structures. The research presents mixed results regarding the most common conflict resolution strategies. Some studies emphasize heading changes, while others highlight altitude adjustments. This variability in strategy choice reflects the potential influence of regional differences, but it is also compounded by the inconsistency with which ATCOs may adhere to particular strategies. In addressing RQ 1—What strategies do ATCOs use to resolve conflicts and how can these strategies be identified?—the findings suggest that ATCOs’ conflict resolution strategies are diverse and dynamic, shaped by both personal and contextual factors. To identify these strategies, most studies rely on recording ATCOs’ actions during conflict resolution and analyzing various metrics to describe the strategies employed. Clustering techniques can also be utilized to uncover the underlying strategies, helping to reduce random variations and providing a clearer picture of the primary strategies used by individual ATCOs. This approach not only helps in identifying the main strategies but also in assessing the consistency with which an ATCO adheres to a particular strategy.

5.2. Implementing Personalized Conflict Resolution

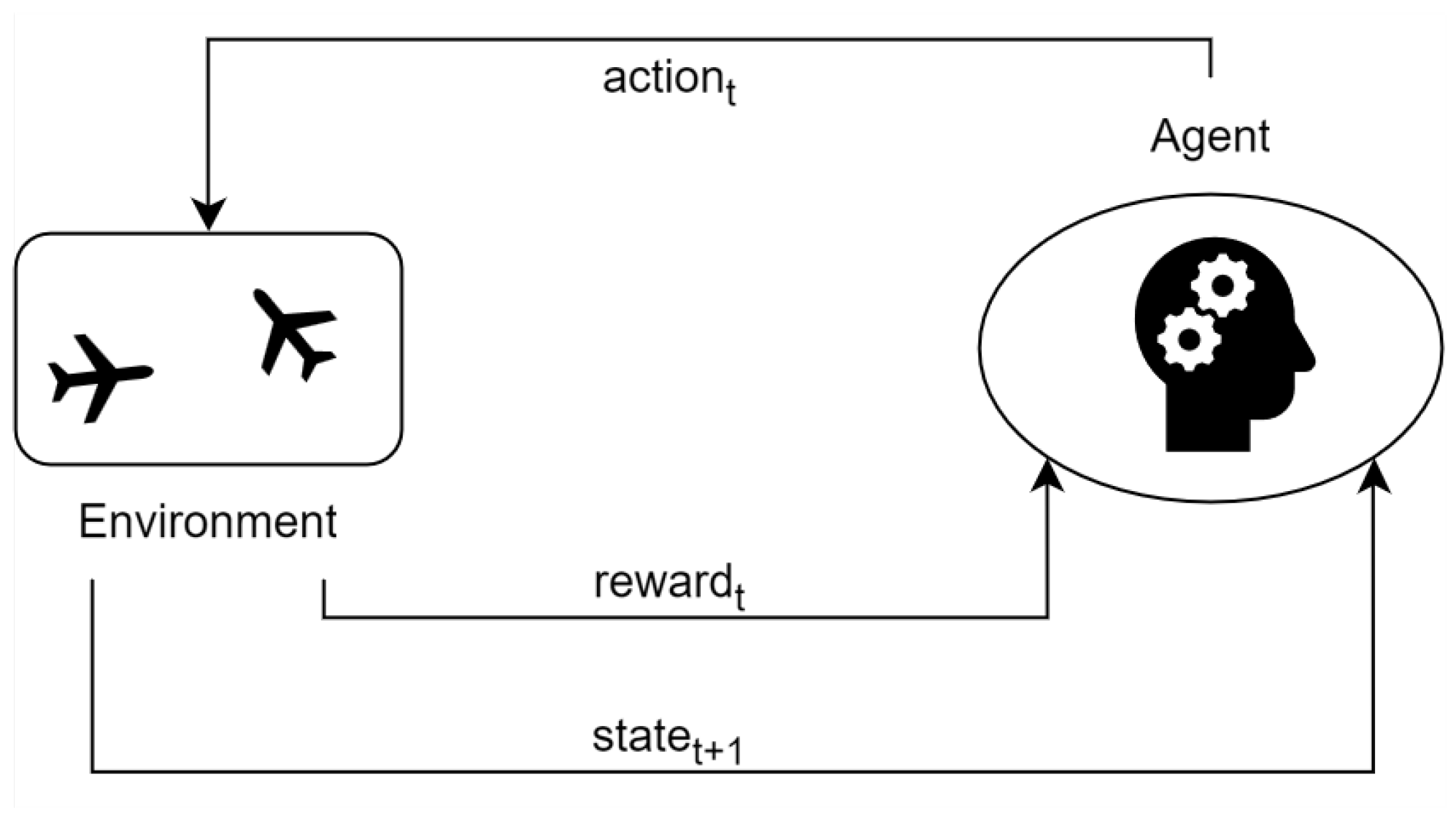

Several studies have explored the use of machine learning to create systems that provide personalized conflict resolution advisories. Typically, these works use either reinforcement learning (RL) or supervised learning (SL). RL is a popular approach for developing conflict resolution advisory systems [12]. The process of resolving a conflict can be described as a Markov Decision Process (MDP), a mathematical framework for modeling decision-making in a specific environment. As described by Naeem et al. [40], an MDP can be defined as a tuple

$$\mathcal{M} = (S, A, P, R),$$

where $S$ is a set of states, $A$ is a set of actions, $P(s' \mid s, a)$ describes the probability of transitioning from state $s$ to state $s'$ when action $a$ is taken, and $R(s, a)$ describes a reward function. Problems that can be described by an MDP can typically be tackled with RL. The goal is to find an optimal policy (a strategy for choosing actions) that maximizes cumulative rewards over time. Accordingly, by solving an MDP, it is possible to determine the best sequence of actions to take in order to achieve success in a given environment [40]. This process is visualized in Figure 5.
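To make the tuple of states, actions, transition probabilities, and rewards concrete, the sketch below solves a tiny, entirely hypothetical conflict MDP with value iteration. Value iteration is a classical dynamic-programming method that needs the transition model. RL methods such as Q-learning instead learn from interaction.

In this sketch, rewards are attached to transitions, $R(s, a, s')$, which is a common variant of the $R(s, a)$ form. The states, actions, probabilities, rewards, and discount factor are all invented for illustration.

```python
# Hypothetical toy MDP: P[state][action] -> list of (probability, next_state, reward).
# Terminal states have no actions. All numbers are illustrative only.
P = {
    "conflict": {
        "maintain": [(0.3, "resolved", 0.0), (0.4, "conflict", -1.0),
                     (0.3, "loss_of_separation", -100.0)],
        "turn": [(0.9, "resolved", -5.0), (0.1, "conflict", -1.0)],    # extra track miles
        "climb": [(0.95, "resolved", -8.0), (0.05, "conflict", -1.0)],  # e.g. fuel cost
    },
    "resolved": {},
    "loss_of_separation": {},
}


def q_value(P, V, s, a, gamma):
    """Expected return of taking action a in state s, then following V."""
    return sum(p * (r + gamma * V[s2]) for p, s2, r in P[s][a])


def value_iteration(P, gamma=0.9, tol=1e-10):
    V = {s: 0.0 for s in P}
    while True:
        delta = 0.0
        for s, actions in P.items():
            if not actions:  # terminal state: value stays 0
                continue
            best = max(q_value(P, V, s, a, gamma) for a in actions)  # Bellman optimality backup
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    # Greedy policy with respect to the converged values
    policy = {s: max(acts, key=lambda a: q_value(P, V, s, a, gamma))
              for s, acts in P.items() if acts}
    return V, policy


V, policy = value_iteration(P)
print(policy)                   # {'conflict': 'turn'}
print(round(V["conflict"], 3))  # -5.055
```

With these made-up numbers, turning is optimal. It resolves the conflict almost surely at a lower cost than climbing, while waiting risks the large loss-of-separation penalty.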

When implementing an RL approach, one of the primary challenges is defining a reward function that leads the system to learn the desired behavior. As described in Section 5.1, Regtuit et al. [26] used clustering algorithms to find and isolate the conflict resolution strategies of ATCOs. Based on these strategies, they constructed a reward function that compares the agent’s actions to an estimated trace derived from the strategy used by the ATCO. Accordingly, an important factor influencing the received reward is how well the agent’s actions align with the ATCO’s strategy.

Regtuit et al. demonstrated the effectiveness of their approach using Q-learning, a fundamental RL algorithm. Q-learning enables an agent to learn optimal decision-making through trial and error, by interacting with its environment and updating its strategy based on received rewards. It assigns values to state-action pairs and updates them, allowing the agent to learn which actions yield the highest expected future rewards [42].

Similarly, Tran et al. [32,33] applied RL using the Deep Deterministic Policy Gradient (DDPG) algorithm, a more advanced method than Q-learning. While Q-learning learns values over discrete state-action pairs, the DDPG is an actor–critic approach designed for continuous action spaces. In this approach, the reward function is structured to reward resolved conflicts and penalize violations of minimum separation standards. Because the DDPG is an actor–critic method, it uses two neural networks. The actor network determines the agent’s actions, and the critic network (value network) estimates the expected return of those actions [43]. In this case, the value network assesses the similarity between the agent’s predictions and actual ATCO behavior, ensuring that the trained agent aligns with real-world operational strategies [32,33].
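The reward-shaping idea can be illustrated with a minimal tabular Q-learning sketch in which a conformance bonus is added to an efficiency reward. The sketch is in the spirit of, but does not reproduce, Regtuit et al. [26]. The following elements are hypothetical:

- the two-step decision (which aircraft to maneuver, then heading or level change);
- the maneuver costs;
- the "ATCO trace";
- the weight `w_conf`.

The update is the standard rule `Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))`.

```python
import random

# Hypothetical two-step conflict: choose the aircraft, then the maneuver type.
ACTIONS = {
    "conflict": ["ac1", "ac2"],
    "ac1_selected": ["heading", "level"],
    "ac2_selected": ["heading", "level"],
}
# Illustrative efficiency cost of the final maneuver (e.g. extra track miles)
COST = {("ac1_selected", "heading"): -2.0, ("ac1_selected", "level"): -5.0,
        ("ac2_selected", "heading"): -3.0, ("ac2_selected", "level"): -4.0}
# Hypothetical ATCO trace, e.g. derived from clustering recorded resolutions
ATCO_TRACE = {"conflict": "ac2", "ac2_selected": "level"}


def step(state, action, w_conf):
    """Return (next_state, reward); next_state is None when the episode ends."""
    bonus = w_conf if ATCO_TRACE.get(state) == action else 0.0  # conformance term
    if state == "conflict":
        return f"{action}_selected", bonus
    return None, COST[(state, action)] + bonus


def q_learning(w_conf, episodes=5000, alpha=0.1, gamma=1.0, eps=0.3, seed=0):
    rng = random.Random(seed)
    Q = {(s, a): 0.0 for s, acts in ACTIONS.items() for a in acts}
    for _ in range(episodes):
        s = "conflict"
        while s is not None:
            acts = ACTIONS[s]
            if rng.random() < eps:
                a = rng.choice(acts)                       # explore
            else:
                a = max(acts, key=lambda x: Q[(s, x)])     # exploit
            s2, r = step(s, a, w_conf)
            target = r if s2 is None else r + gamma * max(Q[(s2, x)] for x in ACTIONS[s2])
            Q[(s, a)] += alpha * (target - Q[(s, a)])      # temporal-difference update
            s = s2
    return Q


def greedy_plan(Q):
    """Follow the learned greedy policy from the initial state."""
    plan, s = [], "conflict"
    while s is not None:
        a = max(ACTIONS[s], key=lambda x: Q[(s, x)])
        plan.append(a)
        s = f"{a}_selected" if s == "conflict" else None
    return plan


print(greedy_plan(q_learning(w_conf=0.0)))  # ['ac1', 'heading']
print(greedy_plan(q_learning(w_conf=2.0)))  # ['ac2', 'level']
```

Without the conformance term, the agent learns the cheapest resolution. With a large enough weight, it adopts the ATCO's preferred aircraft and maneuver. The choice of `w_conf` is therefore itself a balancing decision between conformance and efficiency.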

Compared to RL, a more straightforward way to develop personalized systems is SL, which trains models on labeled examples. Consequently, several recent studies have explored SL approaches to train personalized models for conflict resolution.

Van Rooijen et al. [34] investigated the feasibility of personalized automation using convolutional neural networks (CNNs) on data from 12 novice ATCOs. The study trained both personalized and general models and found that personalized models outperformed general models in predicting ATCO decisions. This supports the notion that ATCOs follow distinct strategies. Moreover, a correlation was observed between ATCO consistency and model performance, suggesting that more consistent ATCOs are better suited for personalized automation. This underscores the potential of personalized machine learning models in ATC, provided that ATCOs maintain a certain level of decision consistency.

A similar pattern emerged in the studies by Guleria et al. [27,28], where random forests, XGBoost, and support vector machines (SVMs) were used in a multi-step prediction pipeline. Their approach sequentially predicted conflict resolution factors, such as the aircraft chosen for maneuvering, the maneuver initiation time, and the heading angle. Initially, the framework was tested on data from two ATCOs, demonstrating that the models could successfully replicate individual ATCOs’ decision patterns. The follow-up study expanded the dataset to eight experienced ATCOs, further confirming that the approach is suitable for creating personalized models.

Bastas et al. [35] approached the problem using variational autoencoders (VAEs) to model when and how ATCOs resolve conflicts. In their initial study, the authors trained a VAE and found that it outperformed a basic encoder-based model. This study primarily focused on predicting the timing of ATCO reactions rather than specific resolution maneuvers. In their follow-up research [36], random forests, gradient tree boosting, and neural networks were used to predict how ATCOs resolve conflicts. While the models performed well overall on the classification tasks, the study noted difficulties in accurately predicting vectoring values, likely due to the small number of training samples.
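The multi-step idea behind the pipeline of Guleria et al. [27,28], in which each stage's prediction feeds the next, can be sketched as a two-stage cascade. To stay dependency-free, the sketch swaps in a 1-nearest-neighbour learner for their random forests, XGBoost, and SVMs. It also omits the initiation-time stage. The following are hypothetical:

- the features (conflict angle, time to CPA), which are left unscaled for brevity;
- the labels;
- the records;
- the class name.

Training one such model on a single ATCO's records yields a personalized model. Training it on pooled records yields a general one.

```python
import math


def nn_predict(train_x, train_y, x):
    """1-nearest-neighbour prediction (works for class labels or numeric targets)."""
    i = min(range(len(train_x)), key=lambda j: math.dist(train_x[j], x))
    return train_y[i]


class SequentialResolutionModel:
    """Hypothetical two-stage cascade.

    Stage 1 predicts which aircraft is maneuvered (0 or 1).
    Stage 2 predicts the heading change [deg], using stage 1's output as an extra feature.
    """

    def fit(self, X, aircraft, heading):
        self.x1, self.y1 = [tuple(x) for x in X], list(aircraft)
        # Stage 2 is trained on the true stage-1 labels
        self.x2 = [tuple(x) + (float(ac),) for x, ac in zip(X, aircraft)]
        self.y2 = list(heading)
        return self

    def predict(self, x):
        ac = nn_predict(self.x1, self.y1, tuple(x))
        hdg = nn_predict(self.x2, self.y2, tuple(x) + (float(ac),))
        return ac, hdg


# Hypothetical records of one ATCO: (conflict angle [deg], time to CPA [min])
X = [(30, 8), (90, 6), (150, 4), (35, 7)]
aircraft = [0, 1, 1, 0]        # index of the maneuvered aircraft
heading = [20, -30, -25, 15]   # heading change [deg]
model = SequentialResolutionModel().fit(X, aircraft, heading)
print(model.predict((32, 8)))  # (0, 20)
```

Chaining the stages means that an error in the aircraft choice propagates to the maneuver prediction. This is one reason ATCO consistency matters for such pipelines.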

A key limitation shared by several studies is the exclusion of flight level changes as a conflict resolution strategy, as seen in both Bastas et al. [35,36] and Guleria et al. [27,28]. This is at odds with the findings of [29] and [31] that, for many ATCOs, flight level adjustments are the preferred way to resolve conflicts.

Accordingly, RQ 2 (What methods can be used to train a personalized model?) can be answered as follows: A personalized model can be trained using different approaches. SL is a common one, where data are collected from ATCOs and used to train models on these examples. Another approach is RL, in which ATCO data can serve either of two purposes. The data can help determine strategies that form the basis of a reward function guiding the training process. Alternatively, they can be used to compare the actions of an AI agent with the actual behavior of ATCOs, which allows the model’s performance to be assessed and improved over time. Both SL and RL provide effective methods for training personalized models, each offering distinct benefits.

5.3. Balancing Different Conflict Resolution Strategies

Recent studies have explored approaches to balance the preferences of ATCOs with optimal solutions. Westin et al. [17] discussed a hybrid learning approach to balancing personalized and optimal models. They proposed combining SL (to achieve conformance) with RL to explore and identify optimal solutions. While the study did not implement this system, it emphasized that a combined framework could allow AI to replicate ATCO preferences while improving upon them where necessary, maintaining a balance between high acceptance rates and efficiency.

Another study, building on the work of Guleria et al. [28], investigated how ATCOs respond to different conflict resolution advisories [37]. Using an SL model, three types of resolution advisories were generated:

(1) a conformal advisory maximizing alignment with the ATCO’s typical behavior;
(2) an optimal advisory that minimizes travel time for greater efficiency;
(3) a balanced advisory, which is a trade-off between conformance and optimality.

To find a balanced advisory, the paper assigns an optimality score and a conformance score to multiple generated advisories that lie between the conformal and the optimal solution. The scores are weighted according to a balancing factor and summed. The advisory with the highest overall score is selected as the balanced advisory. Results showed that the conformal advisory was the most favored, closely followed by the balanced advisory, while the purely optimal advisory was less accepted. This suggests that ATCOs prefer solutions that align with their existing strategies. Both approaches, the hybrid SL/RL framework and the weighted scoring of candidate advisories, would be valid answers to RQ 3: What approaches can be used to balance personalized advisories with advisories that strive for efficiency?

5.4. Influence of Personalization on the Acceptance Rates of Advisories

Acceptance of AI is fundamental to its adoption and effective use. It is shaped by multiple factors, including technological readiness, perceived usefulness and risk, ease of use, and impacts at the organizational and societal levels. A study by Rane et al. [44] highlights that the technological attributes of AI, such as performance and reliability, critically influence user acceptance, alongside ease of integration into existing systems. Trust, transparency, and fairness are also key components, while ethical concerns, privacy issues, and potential job displacement present notable challenges to acceptance [44].

In the context of conflict resolution advisories, acceptance is often measured as the proportion of presented advisories that the ATCO accepts. Previous studies have shown that the alignment between conflict resolution advisories and ATCOs’ own resolution strategies influences the acceptance rate of such advisories. Hilburn et al. [45] and Westin et al. [46] indicate that ATCOs are more likely to accept advisories that mirror their own prior solutions, especially in highly complex scenarios. Compared to non-conformal advisories, personalized advisories had higher acceptance rates and agreement ratings and also resulted in faster response times. This suggests that aligning AI with familiar strategies can foster trust and reduce cognitive load in critical settings [46].

Westin et al. [38] examined the role of personalization in AI-generated conflict resolution advisories. In their experiment, resolution advisories were either conformal, aligning with the ATCO’s own conflict resolution style, or non-conformal, based on the decision patterns of a different ATCO. The ATCOs were not explicitly informed about the conformance manipulation. They accepted 77.8% of conformal advisories compared to 66.7% of non-conformal ones, suggesting a preference for suggestions that matched their own behavior; however, the difference was not statistically significant. The study also explored the role of transparency. Richer visual interfaces helped ATCOs better understand conflict resolution options, but transparency did not significantly influence the acceptance rates of advisories.

Building on this, Westin et al. [17] explored the relationship between transparency, conformance, and optimality within the SESAR project MAHALO (https://mahalo01.lr.tudelft.nl/, accessed on 25 February 2025). They found that personalization appeared to increase acceptance. Transparency, in turn, appeared to help nudge ATCOs toward more optimal solutions while maintaining trust. However, the study also found that combining transparency with conformal solutions can have unintended negative effects. Because a strategically conformal advisory can be a sub-optimal solution, transparency can highlight its inefficiency, leading ATCOs to reject it despite its familiarity [17]. This suggests that transparent AI should not merely emphasize optimality but should also account for user preferences and biases in decision-making.

In another paper by Westin et al. [39], the behavior of 34 ATCOs was examined in different conflict scenarios. They used different approaches to generate individually personalized, group-based, and optimal solutions. An individually personalized model is trained solely on the recorded data of the specific person who is meant to use that model. A group-based model, in contrast, is trained on data recorded from all participants. The results indicated that the effects of individual personalization and group-based models were not consistent across all scenarios, potentially due to small sample sizes or inherent ATCO inconsistencies in conflict resolution. The study identified that ATCOs with stable, predictable strategies were better suited for personalized models, while variability in decision-making negatively affected the effectiveness of both group-based and individually personalized models.

Moreover, ATCOs’ preferred separation distances played a key role in their acceptance of AI-generated advisories. In particular, their acceptance of optimal advisories depended on how closely the optimal advisory’s closest point of approach (CPA) matched their personal preferences. ATCOs whose preferences were further from the optimal CPA showed lower acceptance rates and made more adjustments with increased transparency [39].

In a study by Guleria et al. [28], two ATCOs, ATCO A and ATCO B, were compared. These two ATCOs exhibited different levels of consistency in their conflict resolution strategies. ATCO A demonstrated highly consistent decision-making and rarely deviated from their preferred resolution strategies. ATCO A accepted another ATCO’s solution only when it was visually identical to their own preferred approach. In contrast, ATCO B, who had less radar operations experience, was more open to alternative solutions and occasionally adopted ATCO A’s strategies when they were perceived as more efficient. This suggests that experienced ATCOs may place greater importance on conformance, whereas less experienced ATCOs may be more adaptable to new strategies. According to Guleria et al. [28], these findings raise an important research question:

Does conformance become more relevant only for experienced ATCOs who have already established consistent decision-making strategies? If so, this could have implications for how personalized advisory systems should be tailored to ATCO experience levels [28]. Since Guleria et al. [28] tested this with only two ATCOs, it would be worthwhile to analyze it on a larger scale.

Overall, the literature suggests that personalization, whether at a group or individual level, plays an important role in improving acceptance rates of conflict resolution advisories. This answers RQ 4: Does the personalization of conflict resolution advisories (group-based or individual) improve their acceptance rates among ATCOs? Individually personalized models tend to demonstrate the highest acceptance rates, but they require substantial data from each ATCO, which presents challenges for the actual implementation. Furthermore, their effectiveness is contingent upon the consistency of the ATCO’s strategies, which may vary. Group-based models, while less demanding in terms of individual data collection, pose the risk of incorporating conflicting strategies within the group, potentially reducing conformance and, consequently, acceptance rates.

7. Open Challenges

There are various challenges involved in implementing personalized conflict resolution systems, with three stakeholder groups being particularly affected. These are the challenges faced by developers, those encountered by certifying bodies, and those experienced by ATCOs.

7.1. Challenges for Developers

Developers face the challenge of translating current research into practical, real-world applications. At the same time, they must remain mindful of the responsibility and potential impact that personalized systems can have on ATCOs. These considerations result in the challenges outlined below.

Most existing approaches rely on artificially created, isolated scenarios. Implementing such a system in a real-world environment, however, would require a thorough analysis of ATCOs’ strategies under actual operational conditions, which remains an open challenge. One potential way to develop a personalized conflict resolution system from previously recorded traffic data is a group-based approach. This would first require identifying the strategies employed by ATCOs in real environments, which could be achieved using methods similar to those outlined in Section 5.1. Techniques such as clustering could be applied to datasets like the one created by Gaume et al. [51] to distinguish between the different approaches taken by ATCOs and to identify the underlying strategies. Once these strategies are established, the behavior of an ATCO interacting with the system could be classified accordingly. This classification would allow the system’s advisories to be tailored to the preferences and strategies of the specific ATCO.
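The group-based route described above can be sketched in a few lines. The example below is a minimal illustration, not a method from the reviewed literature: it assumes each recorded resolution has already been reduced to a small, normalised feature vector (the three features, the toy data, and the function names `kmeans`, `nearest`, and `classify_atco` are all hypothetical), clusters the recordings into strategies with plain k-means, and then assigns a new ATCO to the strategy that most of their own resolutions fall into.

```python
import math
from collections import Counter


def nearest(point, centroids):
    """Index of the centroid closest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))


def kmeans(points, k, iterations=20):
    """Plain k-means with deterministic farthest-point initialisation."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        # Next seed: the point farthest from all seeds chosen so far.
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(far))
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        for i, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centroid
                centroids[i] = [sum(axis) / len(members) for axis in zip(*members)]
    return centroids


def classify_atco(resolutions, centroids):
    """Assign an ATCO to the strategy cluster that most of their resolutions fall into."""
    votes = Counter(nearest(r, centroids) for r in resolutions)
    return votes.most_common(1)[0][0]


# Hypothetical, already normalised features per recorded resolution:
# (heading change, flight level change, lead time before closest approach)
recorded = [
    (0.8, 0.0, 0.9), (0.7, 0.1, 0.8), (0.9, 0.0, 0.85),   # early lateral resolutions
    (0.1, 0.9, 0.3), (0.0, 0.8, 0.2), (0.05, 1.0, 0.25),  # late vertical resolutions
]
strategies = kmeans(recorded, k=2)
new_atco = [(0.75, 0.05, 0.85), (0.85, 0.0, 0.9), (0.1, 0.85, 0.3)]
print(classify_atco(new_atco, strategies) == nearest((0.8, 0.0, 0.9), strategies))  # True
```

Majority voting keeps the classification robust to an occasional atypical resolution; a real system would need validated features, a principled choice of the number of clusters, and far more data than shown here.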

Another key challenge for developers is finding the right balance between personalized and optimized advisories. Relying solely on personalized advisories may cap their efficiency at the level of the individual ATCO’s own performance, whereas optimized advisories may not match the ATCO’s expectations or decision-making style and therefore result in low acceptance rates. Future research should investigate how to integrate both approaches dynamically so that overall efficiency improves without compromising acceptance rates. Guleria et al. [37] highlight the potential of combining optimized advisories with personalization; however, identifying the optimal trade-off between system performance and the personalization of advisories remains an open challenge.
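One simple way to make this trade-off explicit, assuming a personalized model that estimates how likely the ATCO is to choose each candidate and an optimizer that assigns each candidate an efficiency cost, is a weighted score with a single tuning parameter. This linear weighting is an illustrative assumption, not the combination used by Guleria et al.; `Candidate`, `select_advisory`, and the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A hypothetical resolution candidate scored by two separate models."""
    action: str
    conformance: float  # personalized model: estimated probability the ATCO would pick it (0..1)
    cost: float         # optimizer: e.g. extra track miles or delay, in arbitrary units


def select_advisory(candidates, weight, max_cost):
    """Pick the candidate maximising weight * conformance - (1 - weight) * normalised cost.

    weight = 1.0 reproduces the purely personalized choice,
    weight = 0.0 the purely optimized one.
    """
    def score(c):
        return weight * c.conformance - (1.0 - weight) * min(c.cost / max_cost, 1.0)
    return max(candidates, key=score)


options = [
    Candidate("turn left 20 degrees", conformance=0.9, cost=6.0),
    Candidate("climb 1000 ft", conformance=0.4, cost=1.0),
]
print(select_advisory(options, weight=0.7, max_cost=10.0).action)  # turn left 20 degrees
print(select_advisory(options, weight=0.2, max_cost=10.0).action)  # climb 1000 ft
```

The open question raised above then becomes how `weight` should be chosen, and whether it should vary with traffic load, the individual ATCO, or the criticality of the conflict.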

Another challenge, often overlooked in existing work, is that conflicts are dynamic situations that evolve over time. Consequently, any solution, whether personalized or optimal, should also adapt over time. However, current methods for personalized conflict resolution typically provide static solutions.
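To illustrate why a static advisory is insufficient, the sketch below re-runs conflict detection at every surveillance update instead of only once. It assumes straight-line, constant-speed extrapolation in the horizontal plane, a 5 NM separation minimum, and a 10-minute look-ahead; vertical separation and the advisory generator itself are left out, and all names are hypothetical. Speeds of 8 NM per minute correspond to roughly 480 kt.

```python
import math

SEPARATION_NM = 5.0   # assumed horizontal separation minimum
LOOKAHEAD_MIN = 10.0  # assumed conflict detection horizon


def cpa(p1, v1, p2, v2):
    """Time (min) and distance (NM) of closest approach for straight, constant-speed flight.

    Positions in NM (x east, y north), velocities in NM per minute.
    """
    rx, ry = p2[0] - p1[0], p2[1] - p1[1]
    vx, vy = v2[0] - v1[0], v2[1] - v1[1]
    vv = vx * vx + vy * vy
    t = 0.0 if vv == 0 else max(0.0, -(rx * vx + ry * vy) / vv)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def reassess(track_updates):
    """Return the update times at which a new advisory would have to be generated."""
    times = []
    for t_now, p1, v1, p2, v2 in track_updates:
        t_cpa, d_cpa = cpa(p1, v1, p2, v2)
        if t_cpa <= LOOKAHEAD_MIN and d_cpa < SEPARATION_NM:
            times.append(t_now)
    return times


updates = [
    # t = 0: opposite-direction tracks 10 NM apart, no conflict predicted
    (0.0, (0.0, 0.0), (8.0, 0.0), (40.0, 10.0), (-8.0, 0.0)),
    # t = 1: aircraft 2 has turned towards aircraft 1, predicted miss distance about 3 NM
    (1.0, (8.0, 0.0), (8.0, 0.0), (32.0, 10.0), (-6.0, -4.0)),
]
print(reassess(updates))  # [1.0]
```

Because aircraft 2 turns after the first update, a conflict that was not predicted at t = 0 appears at t = 1, which is the point at which a personalized or optimized advisory would have to be recomputed.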

Furthermore, because ATC is a safety-critical domain, systems must be thoroughly validated, and some form of redundancy or independent control mechanism must ensure that any proposed action can be executed safely. The validation of AI, especially deep learning, is still an ongoing research topic [52]. This is particularly problematic if the system is meant to keep learning in order to adapt further to an ATCO’s preferences, because the system would then have to be monitored continuously to ensure that no undesired behavior is learned. Accordingly, a continuous verification and validation process would be necessary. In addition, ensuring the safety of predictions made by deep learning-based systems is an important research direction in its own right, and many of the approaches proposed there could be applied to and tested for personalized conflict resolution [53].
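For a system that keeps learning, one possible form of continuous verification is a regression gate: every retrained model must pass an independent, non-learned safety check on a fixed set of benchmark encounters before it replaces the deployed version. The sketch below assumes that the model proposes only a heading change for one aircraft and that "safe" means a straight-line miss distance of at least 5 NM; the benchmark, both toy models, and all function names are hypothetical, and such a check would complement, not replace, a full verification and validation process.

```python
import math

SEPARATION_NM = 5.0  # assumed horizontal separation minimum


def turn(v, degrees_right):
    """Rotate a velocity vector (x east, y north) clockwise, i.e. a right turn."""
    a = math.radians(degrees_right)
    return (v[0] * math.cos(a) + v[1] * math.sin(a),
            -v[0] * math.sin(a) + v[1] * math.cos(a))


def miss_distance(p1, v1, p2, v2):
    """Closest-approach distance for straight, constant-speed flight (NM, NM/min)."""
    rx, ry = p2[0] - p1[0], p2[1] - p1[1]
    vx, vy = v2[0] - v1[0], v2[1] - v1[1]
    vv = vx * vx + vy * vy
    t = 0.0 if vv == 0 else max(0.0, -(rx * vx + ry * vy) / vv)
    return math.hypot(rx + vx * t, ry + vy * t)


def passes_safety_gate(model, benchmark):
    """Independent check: every advisory on the benchmark must restore separation."""
    for p1, v1, p2, v2 in benchmark:
        heading_change = model((p1, v1, p2, v2))  # model proposes a turn for aircraft 1
        if miss_distance(p1, turn(v1, heading_change), p2, v2) < SEPARATION_NM:
            return False
    return True


def maybe_deploy(current_model, updated_model, benchmark):
    """Only replace the deployed model if the retrained version passes the gate."""
    return updated_model if passes_safety_gate(updated_model, benchmark) else current_model


# Hypothetical benchmark: a head-on and a slightly offset head-on encounter.
BENCHMARK = [
    ((0.0, 0.0), (8.0, 0.0), (60.0, 0.0), (-8.0, 0.0)),
    ((0.0, 0.0), (8.0, 0.0), (60.0, 2.0), (-8.0, 0.0)),
]


def deployed(scenario):   # current model: always turn right by 30 degrees
    return 30.0


def retrained(scenario):  # retrained model has drifted towards very small turns
    return 5.0


print(maybe_deploy(deployed, retrained, BENCHMARK) is deployed)  # True
```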

Moreover, personalization requires substantial amounts of data from each ATCO using the system. Collecting these data can be both time-consuming and challenging. For example, Guleria et al. [28] recorded over 570 conflict scenarios from two different ATCOs to train two personalized models. However, these models were limited to predicting heading changes. Training more complex models that can also propose flight level or speed changes would require even larger datasets. Consequently, personalizing conflict resolution advisories for every individual ATCO would require enormous effort, and developers need to explore ways to reduce the amount of data needed. One approach could be to train a model on a group of ATCOs, similar to the group-based models proposed by Westin et al. [39], and then fine-tune it for individual controllers. A further complication is that ATCOs do not always work consistently, as discussed in Section 5.1. It is, therefore, a major challenge not only to record sufficient data, but also to decide which of the recorded data are meaningful and which should be excluded because of inconsistencies in an ATCO’s behavior.
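The data-selection problem can be made concrete with a simple consistency filter. The sketch assumes that recorded conflicts have been grouped into coarse scenario types and keeps an ATCO’s recordings only for types in which one resolution clearly dominates; the scenario types, the thresholds, and the function name `filter_consistent` are illustrative assumptions. It covers only the filtering step, not the group-to-individual fine-tuning mentioned above.

```python
from collections import Counter, defaultdict


def filter_consistent(records, min_agreement=0.7, min_samples=3):
    """Keep only records from scenario types in which the ATCO resolved conflicts consistently.

    records: list of (scenario_type, action) pairs. Both the discretisation of conflicts
    into scenario types and the two thresholds are illustrative assumptions.
    """
    by_type = defaultdict(list)
    for scenario_type, action in records:
        by_type[scenario_type].append(action)
    kept = []
    for scenario_type, actions in by_type.items():
        if len(actions) < min_samples:
            continue  # too few examples to judge consistency
        most_common_count = Counter(actions).most_common(1)[0][1]
        if most_common_count / len(actions) >= min_agreement:
            kept.extend((scenario_type, a) for a in actions)
    return kept


log = (
    [("head-on", "turn right")] * 4 + [("head-on", "climb")]   # 4/5 agree: kept
    + [("crossing", "turn left"), ("crossing", "climb"),
       ("crossing", "descend"), ("crossing", "turn left")]     # 2/4 agree: dropped
    + [("overtaking", "reduce speed")] * 2                     # too few samples: dropped
)
print(len(filter_consistent(log)))  # 5
```

Dropping inconsistent scenario types reduces noise but also discards data, so the thresholds directly trade data quantity against data quality.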

Lastly, developers have to consider that a decision support system, especially one designed to achieve high acceptance rates, could lead to issues such as over-trust [54]. Accordingly, developers have to ensure that their systems are not so “persuasive” that they lead ATCOs to bad or even dangerous decisions. This problem has not yet been widely considered for decision support systems in ATC.

7.2. Challenges for ATCOs

Several human factors challenges emerge when ATCOs collaborate with AI systems, and these challenges extend to personalized conflict resolution systems as well.

First of all, it must be ensured that ATCOs do not rely excessively on decision support systems in the future. Previous research has shown that people tend to over-rely on AI recommendations in a variety of situations [54,55], which can lead to knowledge complacency [56]. Accordingly, ATCOs need to learn about the limitations of AI-based recommendation systems and be aware of the risks of using them. They need to critically question advisories, calibrate their trust to an appropriate level, and avoid complacency. To do so, they must be capable of detecting AI errors and verifying the system’s outputs, which depends on an adequate understanding of the system’s reasoning and constraints [57]. Furthermore, it has been found that pilots’ cognitive skills can be negatively affected by reliance on a high degree of cockpit automation [58]. This problem might also apply to ATCOs using highly automated systems. To prevent it, ATCOs might need to take part in additional training regularly to maintain their skill level and stay prepared for situations in which they must act without system support. Alternatively, the systems would have to be designed to avoid this problem in the first place. More generally, achieving shared situation awareness and building shared mental models remains a central challenge in the collaboration between humans and autonomous systems [59], such as the proposed personalized conflict resolution systems.

7.3. Certification and Adoption Challenges

There are also open challenges for the certification and adoption of personalized conflict resolution systems. These concern, e.g., training procedures and the certification of systems that may change dynamically depending on the user.

To enable the certification and safe adoption of personalized conflict resolution systems in ATC, a clearly defined and consistently applied concept for human–AI collaboration is essential. This includes specifying how roles are distributed and how communication with such systems takes place. As mentioned in the previous section, effective collaboration depends on the human and the AI sharing a common mental model of the situation. This can be supported by systems that transparently communicate their own intentions, goals, and reasoning processes, as proposed by Chen et al. [59]. The alignment of mental models may be further enhanced through personalization, which allows the AI to adapt its behavior to the specific strategies of individual operators.
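As a rough illustration of such transparency, an advisory could carry not just the proposed action but also its goal, the reasoning behind it, and the expected outcome with an uncertainty estimate. The structure below is a hypothetical sketch loosely inspired by this idea, not the model proposed by Chen et al.; the field names, the `matched_strategy` field linking the advisory to a learned ATCO strategy, and the callsigns are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TransparentAdvisory:
    """Hypothetical advisory that states what is proposed, why, and what is expected."""
    action: str                                       # intention: what the system proposes
    goal: str                                         # goal the action serves
    reasons: List[str] = field(default_factory=list)  # reasoning behind the choice
    expected_min_separation_nm: float = 0.0           # projected outcome if executed
    confidence: float = 0.0                           # system's own certainty, 0..1
    matched_strategy: Optional[str] = None            # learned ATCO strategy it mirrors, if any

    def render(self) -> str:
        lines = [f"PROPOSAL: {self.action}", f"GOAL: {self.goal}"]
        lines += [f"WHY: {reason}" for reason in self.reasons]
        if self.matched_strategy:
            lines.append(f"MATCHES YOUR USUAL STRATEGY: {self.matched_strategy}")
        lines.append(f"EXPECTED: min. separation {self.expected_min_separation_nm:.1f} NM "
                     f"(confidence {self.confidence:.0%})")
        return "\n".join(lines)


advisory = TransparentAdvisory(
    action="ABC123 turn right 20 degrees",
    goal="resolve conflict with XYZ456 before closest approach",
    reasons=["keeps both aircraft at their requested flight levels"],
    expected_min_separation_nm=7.2,
    confidence=0.85,
    matched_strategy="early lateral resolution",
)
print(advisory.render())
```

Exposing the matched strategy is one way personalization and transparency could reinforce each other: the ATCO sees not only what is proposed but also why it resembles their own way of working.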

Nevertheless, as noted by Westin et al. [25], balancing personalization with standardization remains a critical challenge. To be certified, a system must comply with specific regulations and standards. Introducing personalization would make it more difficult to ensure the safety of the system, complicating the process for certification agencies. Accordingly, further research is needed to explore how personalization can be implemented without compromising operational safety and procedural uniformity.

Another challenge is that new training procedures would be needed to train first-time users and to avoid problems like over-trust and knowledge complacency. This is a challenge on the administrative side in that these new training procedures would need to be developed, and on the operational side in that time would be needed for ATCOs to learn and adapt to these systems.