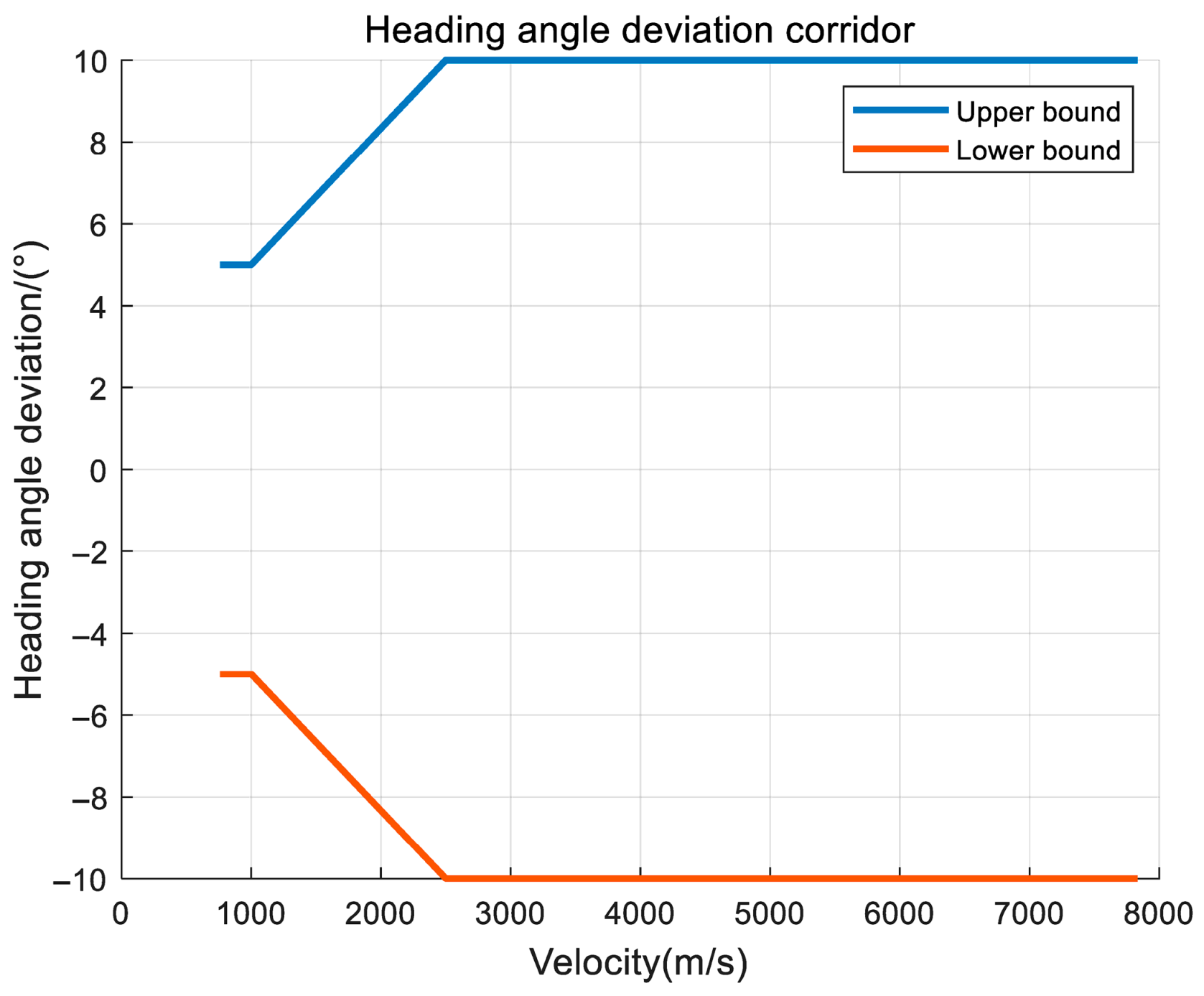

3.1. Angle of Attack Profile and Reentry Corridor

- 1. Angle of attack profile

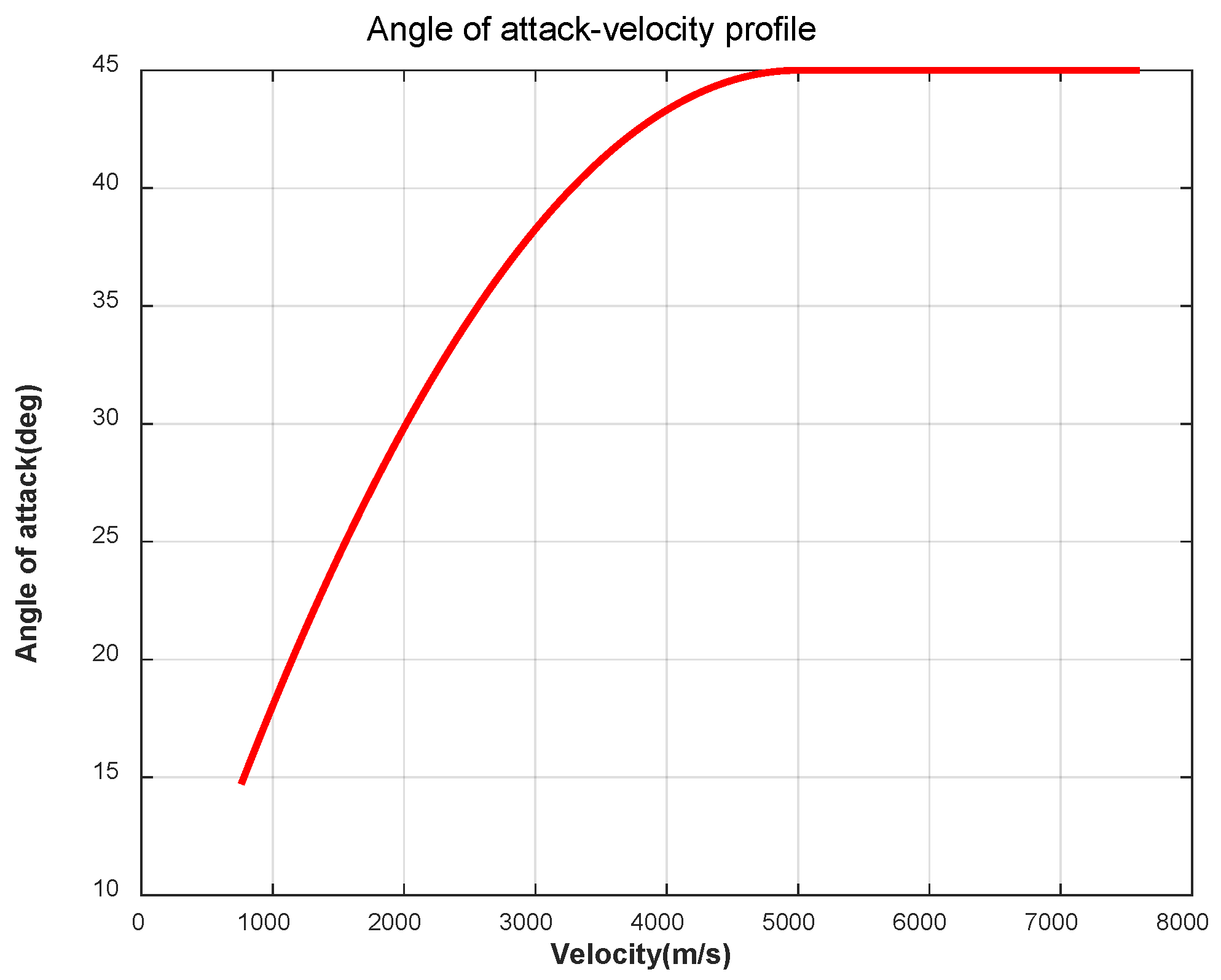

The angle of attack profile gives the angle of attack as a function of velocity. At the beginning of reentry, the RLV needs to fly at a high angle of attack to increase lift and drag, so as to avoid the excessive heat flux caused by losing altitude too quickly. As speed and altitude decrease, the angle of attack should be reduced gradually to lower the lift and drag, which avoids trajectory skips caused by excessive lift and, by reducing drag, also increases the range. As the reentry phase comes to an end, the angle of attack must transition to a value near that of the maximum lift-to-drag ratio, so that the RLV can perform guidance tracking in the energy management phase [7].

Following these design principles, this paper adopts the angle of attack profile planning method of the space shuttle. The vehicle flies at a high angle of attack at the beginning of reentry. Once the velocity falls below a certain value, the angle of attack decreases with velocity until it reaches the value for the maximum lift-to-drag ratio at the end of reentry. To ensure a smooth connection with the subsequent track angle curve, the angle of attack–velocity profile is designed as follows:

where the three quantities introduced in the formula are design parameters.

The angle of attack profile designed in this paper is shown in Figure 1.
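The shape described above (a constant high angle of attack, followed by a smooth decrease with velocity toward the maximum lift-to-drag value) can be illustrated with a minimal sketch. The cosine blend and all numerical values below are assumptions chosen for illustration; they are not the formula or the design parameters of this paper.

```python
import math

def alpha_profile(v, alpha_max_deg=40.0, alpha_ld_deg=15.0, v1=5000.0, v2=2000.0):
    """Hypothetical angle of attack schedule (deg) versus velocity (m/s).

    alpha_max_deg: high angle of attack held at the start of reentry (assumed)
    alpha_ld_deg:  angle of attack near maximum lift-to-drag ratio (assumed)
    v1, v2:        velocities where the decrease starts and ends (assumed)
    """
    if v >= v1:
        return alpha_max_deg
    if v <= v2:
        return alpha_ld_deg
    # Cosine blend: zero slope at both ends, so the profile joins smoothly.
    s = (v1 - v) / (v1 - v2)
    w = 0.5 * (1.0 - math.cos(math.pi * s))
    return alpha_max_deg + (alpha_ld_deg - alpha_max_deg) * w

if __name__ == "__main__":
    for v in (7000.0, 5000.0, 3500.0, 2000.0, 1000.0):
        print(v, round(alpha_profile(v), 3))
```

A blend with zero slope at both ends is only one way to obtain a smooth connection; a low-order polynomial in velocity would serve the same purpose.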

- 2. Reentry corridor

The reentry corridor is calculated by converting the multiple process constraints into constraints on a single physical quantity through a series of mathematical transformations [17]. The three process constraints on heat flux, overload, and dynamic pressure form the hard constraints of the reentry corridor, and the QEGC forms its soft constraint; together they define the upper and lower boundaries of the corridor.

Transforming Equations (4)–(7) into the drag acceleration–velocity plane gives the corridor expression

where the four boundaries are the drag acceleration limits corresponding to the heat flux, overload, dynamic pressure, and QEGC constraints, respectively, and the remaining quantity is the drag acceleration reference profile.

It is assumed that the overall constraint of RLV is as follows: the maximum heat flux rate is

; the maximum overload is

; and the maximum dynamic pressure is

. The relationship between the drag acceleration and the relative velocity is then calculated from Equation (12), which gives the reentry corridor in the drag acceleration–velocity plane, as shown in Figure 2.

In the height–velocity plane, the heat flux, overload, and dynamic pressure boundaries together constitute the lower boundary of the corridor, and the QEGC boundary forms its upper boundary.
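The conversion of the process constraints into drag acceleration bounds can be sketched as follows. The sketch assumes the commonly used models: heat flux $\dot{Q} = k_Q \sqrt{\rho} V^{m}$, overload $n = \sqrt{L^2 + D^2}/g_0$, dynamic pressure $q = \rho V^2/2$, and a QEGC with near-zero track angle. The function names, the exponent `m_exp`, and all arguments are hypothetical and are not taken from Equations (4)–(7) or (12) of this paper.

```python
import math

G0 = 9.80665          # standard gravity, m/s^2
MU = 3.986004418e14   # Earth gravitational parameter, m^3/s^2

def drag_upper_bounds(v, cd, cl, s_ref, mass, q_dot_max, n_max, q_max,
                      k_q, m_exp=3.15):
    """Drag acceleration limits (m/s^2) from the three hard constraints."""
    # Heat flux: k_q*sqrt(rho)*v**m_exp <= q_dot_max gives a density limit,
    # which is mapped to drag through D = rho*v^2*s_ref*cd/(2*mass).
    rho_heat = (q_dot_max / (k_q * v**m_exp)) ** 2
    d_heat = 0.5 * rho_heat * v**2 * s_ref * cd / mass
    # Overload: sqrt(L^2 + D^2)/G0 <= n_max with L = (cl/cd)*D.
    d_load = n_max * G0 / math.sqrt(1.0 + (cl / cd) ** 2)
    # Dynamic pressure: 0.5*rho*v^2 <= q_max.
    d_dyn = q_max * s_ref * cd / mass
    return d_heat, d_load, d_dyn

def drag_lower_bound_qegc(v, r, cd, cl, sigma_eq=0.0):
    """Drag acceleration on the QEGC boundary: L*cos(sigma_eq) = g - v^2/r."""
    g = MU / r**2
    return (cd / cl) * (g - v**2 / r) / math.cos(sigma_eq)

def drag_corridor(v, r, cd, cl, s_ref, mass, q_dot_max, n_max, q_max, k_q):
    """Return (lower, upper) drag acceleration bounds at velocity v."""
    upper = min(drag_upper_bounds(v, cd, cl, s_ref, mass,
                                  q_dot_max, n_max, q_max, k_q))
    lower = drag_lower_bound_qegc(v, r, cd, cl)
    return lower, upper
```

In the drag acceleration–velocity plane the hard constraints act as the upper boundary and the QEGC as the lower boundary, which is the mirror image of the height–velocity corridor because lower altitude means higher drag.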

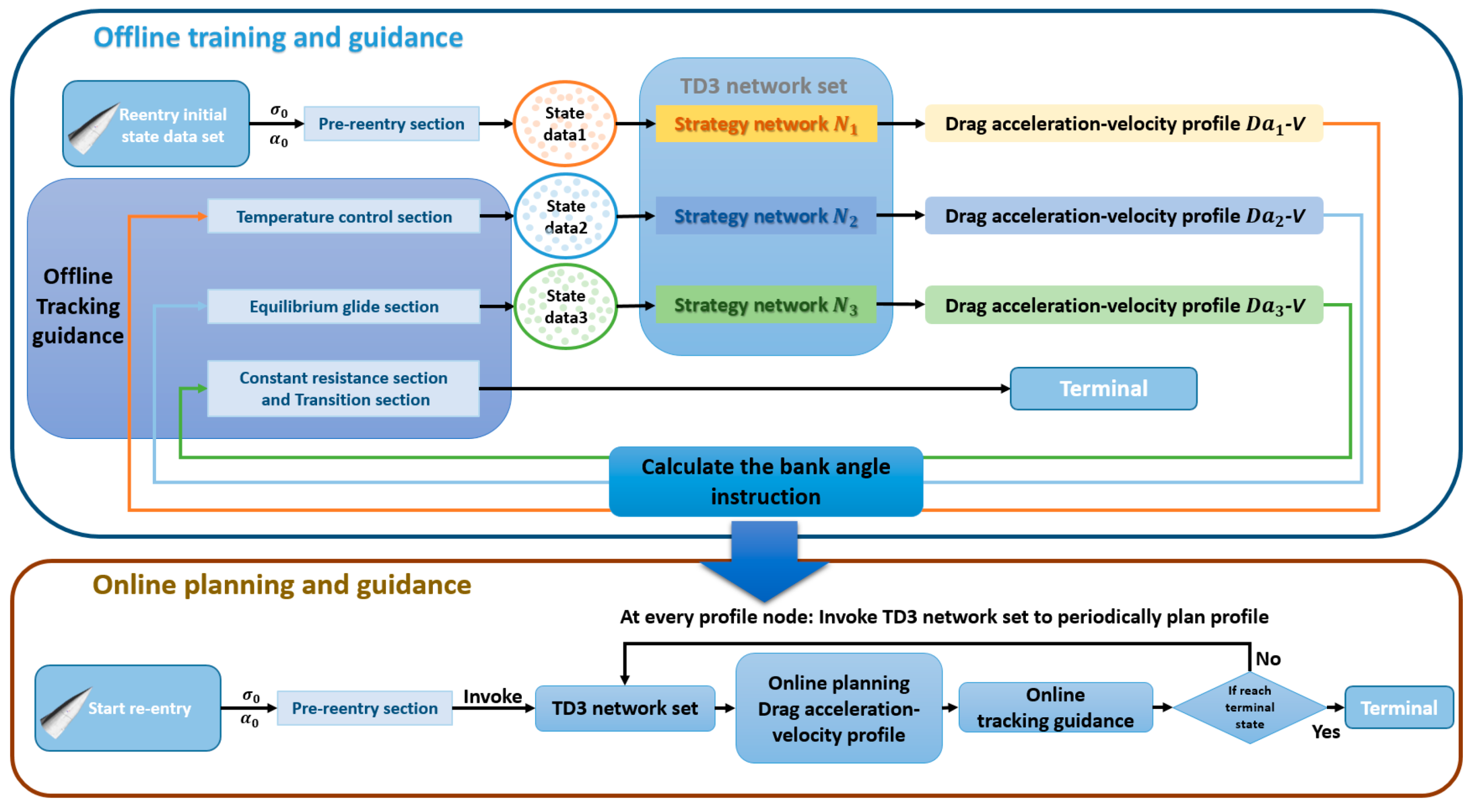

3.2. Reentry Drag Acceleration Reference Trajectory Design

The reentry trajectory of an unpowered RLV is usually divided into a pre-reentry section and a glide section [18]. The pre-reentry section usually lies at altitudes of 60–120 km. At these altitudes the atmosphere is very thin and aerodynamic effects are weak, so it is difficult for the vehicle to adjust its trajectory by aerodynamic force; it therefore usually flies at a constant, large angle of attack and a constant bank angle. Once the vehicle enters the glide section, the aerodynamic force grows as the altitude decreases, and the vehicle gradually acquires guidance capability. The aerodynamic force can then be used to adjust the reentry trajectory, which makes this section the key part of reentry trajectory planning.

While the vehicle flies in the rarefied atmosphere, the aerodynamic force is weak, the vehicle has no guidance capability, and the control inputs have little effect on the descent trajectory, so its motion in this stage can be treated as approximately free fall. The angle of attack and the bank angle in the pre-reentry section can therefore each be held at a constant value. The reentry trajectory in the pre-reentry section is obtained by numerical integration of the dynamic Equation (1) [18].

The conditions for the end of the pre-reentry section are set as follows:

where the tolerance is a small quantity preset according to the accuracy requirement.

Equation (14) is used as the end condition of the pre-reentry trajectory because, as the RLV passes from the thin atmosphere into the dense atmosphere and the overload reaches a certain value, the vehicle can rely on aerodynamic force to change its flight trajectory and gradually gains the ability to do so.

- 2. Glide section

The glide section requires a segmented trajectory design and is generally composed of four sections [7]: the temperature control section, the equilibrium glide section, the constant resistance (constant drag) section, and the transition section. To ensure a smooth connection between sections, first- or second-order functions are selected for the profile design. The drag acceleration–velocity expression of each section is given below.

where the coefficients appearing in Equations (15)–(18) are trajectory design parameters, and the five velocities are the terminal velocities of the pre-reentry section and of the four sections of the glide section.

According to Equations (15)–(18), the reference profile has 9 design parameters. Designing these parameters directly is complicated and unintuitive; if the endpoints of each section are instead taken as the parameters to be designed, the design is greatly simplified.

After the angle of attack–velocity profile is determined, the process constraints can be directly converted into the boundary of the reentry corridor. As long as the aircraft flies within the reentry corridor, all constraints are satisfied.

By the principle of smooth connection between segments, the terminal states of the pre-reentry section and the reentry terminal state are known. The remaining quantities follow section by section:

Given the terminal state of the temperature control section, and requiring that the track angle does not jump between the pre-reentry section and the glide section, the parameters of the temperature control section can be calculated.

Given the terminal state of the temperature control section and the terminal state of the equilibrium glide section, the parameters of the equilibrium glide section and its remaining terminal state can be calculated.

Given the terminal state of the equilibrium glide section, the parameter and the terminal state of the constant resistance section can be calculated.

Given the terminal state of the constant resistance section and the reentry terminal state, the parameters of the transition section can be calculated.
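The idea of recovering segment coefficients from endpoint states can be illustrated with a minimal sketch for first- and second-order segments. It assumes that each segment is a polynomial in velocity and that slope continuity of the drag acceleration–velocity curve stands in for the no-jump condition on the track angle; the function names and the numbers in the example are hypothetical and do not reproduce Equations (15)–(18).

```python
def quadratic_segment(v_a, d_a, slope_a, v_b, d_b):
    """Coefficients (c0, c1, c2) of D(V) = c0 + c1*V + c2*V**2.

    The segment starts at (v_a, d_a) with slope dD/dV = slope_a, so that it
    joins the previous segment smoothly, and ends at (v_b, d_b).
    """
    dv = v_b - v_a
    c2 = (d_b - d_a - slope_a * dv) / dv**2
    c1 = slope_a - 2.0 * c2 * v_a
    c0 = d_a - c1 * v_a - c2 * v_a**2
    return c0, c1, c2

def linear_segment(v_a, d_a, v_b, d_b):
    """Coefficients (c0, c1) of D(V) = c0 + c1*V through two endpoints."""
    c1 = (d_b - d_a) / (v_b - v_a)
    c0 = d_a - c1 * v_a
    return c0, c1

def evaluate(coeffs, v):
    """Evaluate a polynomial given as (c0, c1, ...) at velocity v."""
    return sum(c * v**k for k, c in enumerate(coeffs))

if __name__ == "__main__":
    # Hypothetical endpoints: velocities in m/s, drag acceleration in m/s^2.
    quad = quadratic_segment(7000.0, 2.0, 0.0, 6000.0, 5.0)
    line = linear_segment(2500.0, 8.0, 1000.0, 6.0)
    print(evaluate(quad, 6000.0), evaluate(line, 1000.0))
```

Working with endpoints rather than raw coefficients keeps every candidate profile continuous by construction, which is the simplification the text refers to.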

Let the parameters to be designed be

According to the flight mission and characteristics of each segment, the basic principles of design parameter adjustment are as follows:

The endpoints, and the drag acceleration profile they determine, must lie within the reentry corridor;

The connection at the endpoints of each section should be as smooth as possible;

Control quantities such as the bank angle must not exceed their constraints;

The velocity at the end of the temperature control section should come after (that is, be lower than) the velocity corresponding to the maximum heat flux;

Considering the uncertainty of the aerodynamic parameters in flight, the drag acceleration profile should keep a certain distance from the boundaries of the reentry corridor.
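The first and last principles can be checked numerically on a velocity grid. The sketch below is an illustration only: the function name `within_corridor`, the relative `margin`, and the constant bounds in the example are assumptions, not values from this paper.

```python
def within_corridor(velocities, d_ref, d_lower, d_upper, margin=0.05):
    """Check that d_ref stays inside the corridor with a safety margin.

    d_ref, d_lower, d_upper are callables mapping velocity to drag
    acceleration. margin is the fraction of the local corridor width that
    must be kept clear at each boundary (hypothetical choice).
    """
    for v in velocities:
        lo, hi = d_lower(v), d_upper(v)
        band = margin * (hi - lo)
        if not (lo + band <= d_ref(v) <= hi - band):
            return False
    return True

if __name__ == "__main__":
    grid = [1000.0 + 100.0 * i for i in range(61)]
    # Hypothetical constant bounds and reference profile, in m/s^2.
    print(within_corridor(grid, lambda v: 6.0, lambda v: 1.0, lambda v: 11.0))
```

A margin expressed as a fraction of the corridor width tightens automatically where the corridor is narrow; an absolute margin would be an equally valid choice.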

According to Equations (12) and (13), the functions describing the upper and lower boundaries of the reentry corridor are as follows:

The reference drag acceleration profile is uniquely determined by the design parameters and can therefore be described as a function of the velocity and those parameters.

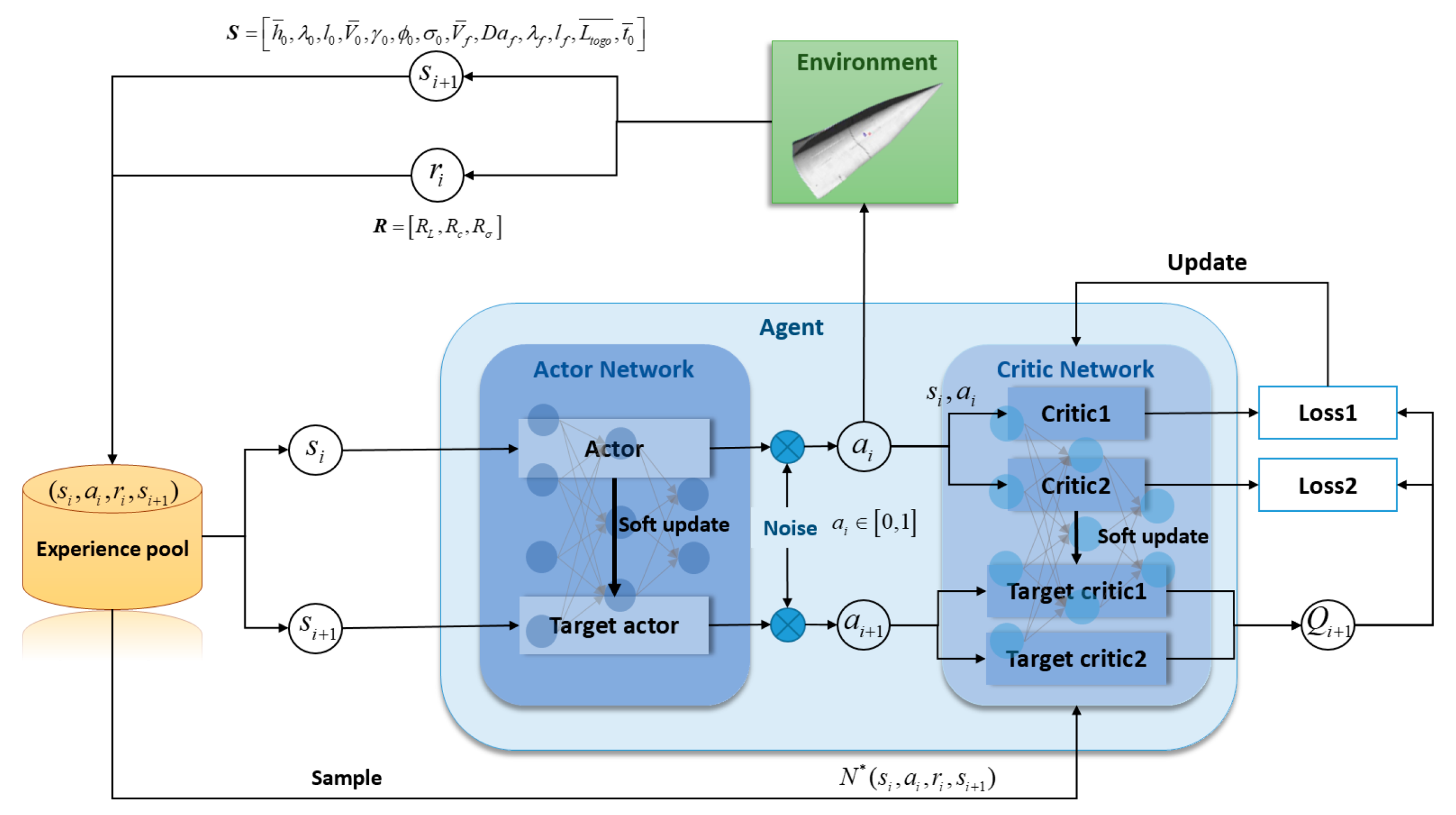

Considering the above principles and the characteristics of the reentry process, the online planning problem for the reentry drag acceleration reference profile is stated as follows: given the initial conditions, and subject to the process constraints, control constraints, and terminal constraints, the trajectory parameters are optimized online, with the minimum flight distance of the vehicle as the optimization objective, so as to plan a feasible trajectory. The expression is

where

is the distance to be flown;

and

the latitude and longitude coordinates of the current aircraft position;

is the objective optimization function,

and

are the maximum heat flux decrease with

and

being the corresponding velocity, respectively;

is the corresponding velocity when the angle of attack is pressed down; and

and

are the thresholds.
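Two quantities that typically enter such a planning problem are the distance to be flown, computed from the current latitude and longitude, and the range predicted along a candidate drag acceleration profile. The sketch below assumes a spherical Earth, a small track angle, and a negligible gravity component along the velocity, so that $\mathrm{d}s \approx -(V/D)\,\mathrm{d}V$. The function names and numerical values are hypothetical, and the sketch is not the objective function of this paper.

```python
import math

R_EARTH = 6371000.0  # mean Earth radius in m (spherical Earth assumed)

def range_to_go(lat, lon, lat_t, lon_t, radius=R_EARTH):
    """Great-circle distance (m) from (lat, lon) to the target, in radians."""
    a = (math.sin((lat_t - lat) / 2.0) ** 2
         + math.cos(lat) * math.cos(lat_t) * math.sin((lon_t - lon) / 2.0) ** 2)
    return 2.0 * radius * math.asin(math.sqrt(a))

def predicted_range(d_ref, v_start, v_end, steps=1000):
    """Range (m) flown while decelerating from v_start to v_end along d_ref.

    Uses ds = -(V/D) dV with trapezoidal integration over velocity.
    """
    h = (v_start - v_end) / steps
    total = 0.0
    for i in range(steps):
        va = v_end + i * h
        vb = va + h
        total += 0.5 * h * (va / d_ref(va) + vb / d_ref(vb))
    return total

def range_error(d_ref, v_start, v_end, lat, lon, lat_t, lon_t):
    """Mismatch between predicted range and distance to be flown."""
    return abs(predicted_range(d_ref, v_start, v_end)
               - range_to_go(lat, lon, lat_t, lon_t))
```

An optimizer over the endpoint parameters could evaluate a mismatch of this kind for each candidate profile, rejecting candidates that violate the corridor or control constraints.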