Dynamic Resource Target Assignment Problem for Laser Systems’ Defense Against Malicious UAV Swarms Based on MADDPG-IA

Abstract

1. Introduction

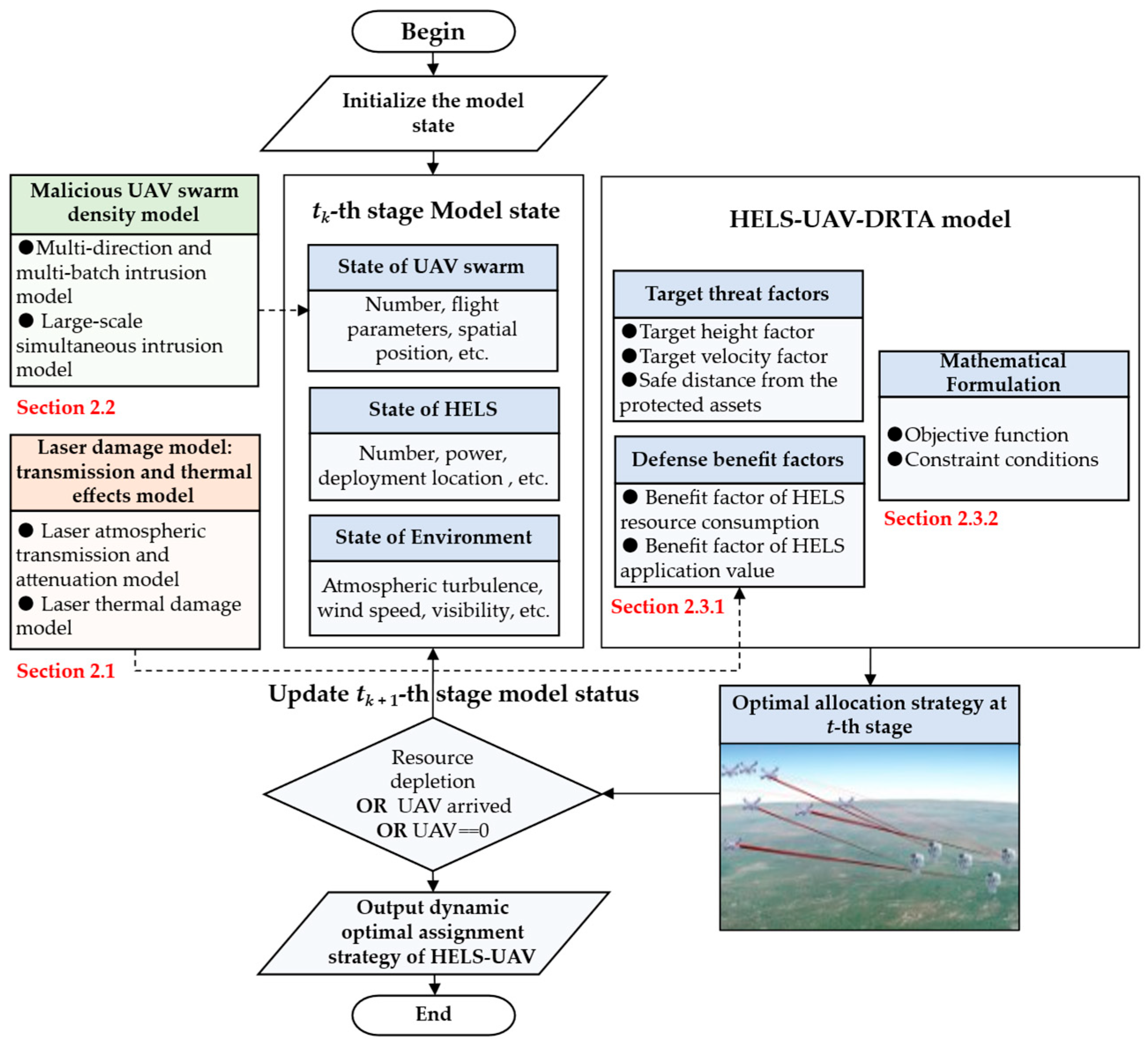

- (1) By analyzing the thermal damage mechanism of high-energy laser systems (HELSs) and accounting for factors such as the spatial situation and weather conditions, we construct an HELS damage-capability model that incorporates atmospheric-transmission and thermal-damage effects. Based on the real-time situation of the malicious UAV swarm and the required defense benefit, we establish an HELS–UAV dynamic resource target assignment (HELS–UAV–DRTA) model that targets optimal damage effectiveness. Under this model, the defenders evolve strategies such as delaying decisions to await the optimal timing and coordinating interception across different areas.

- (2)

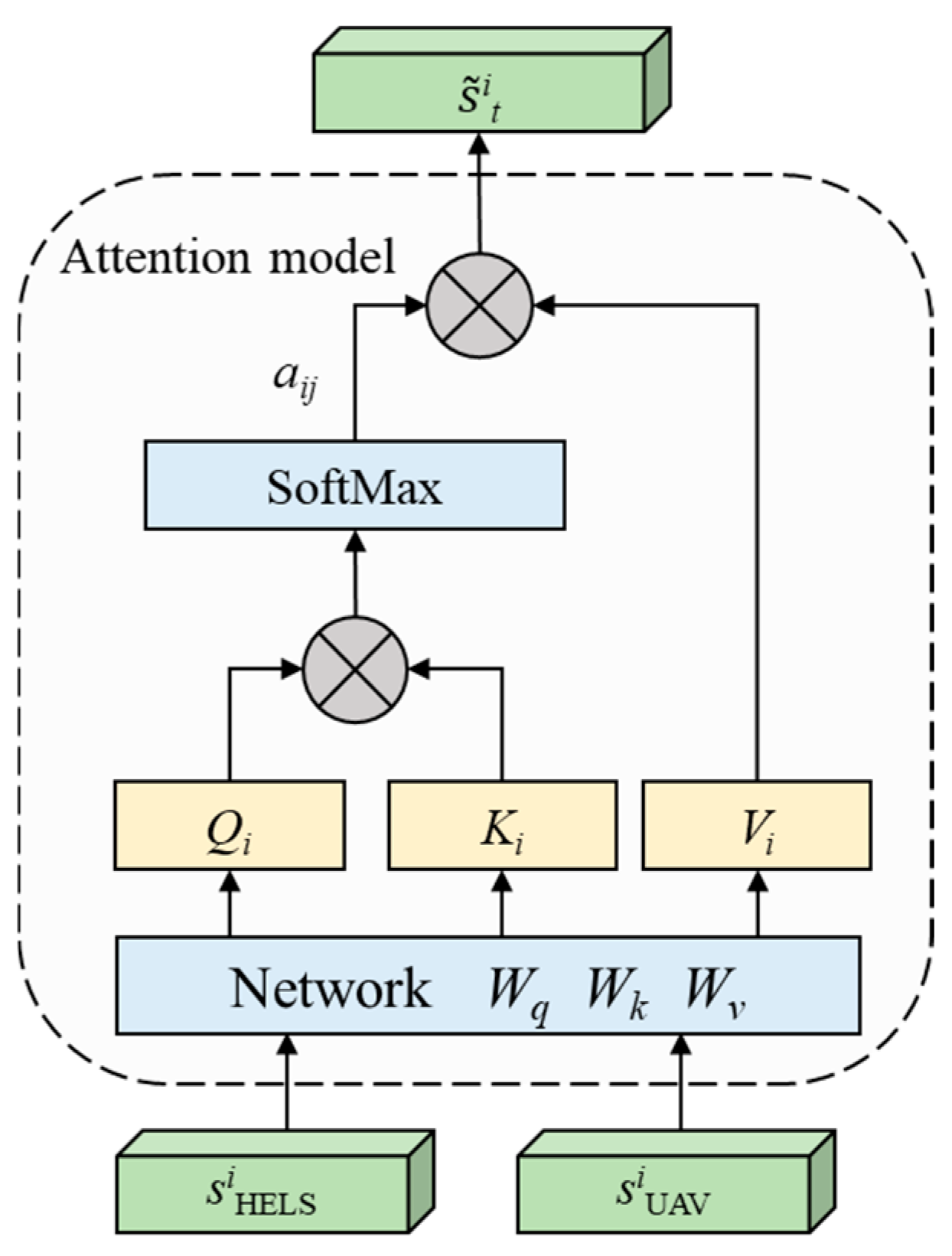

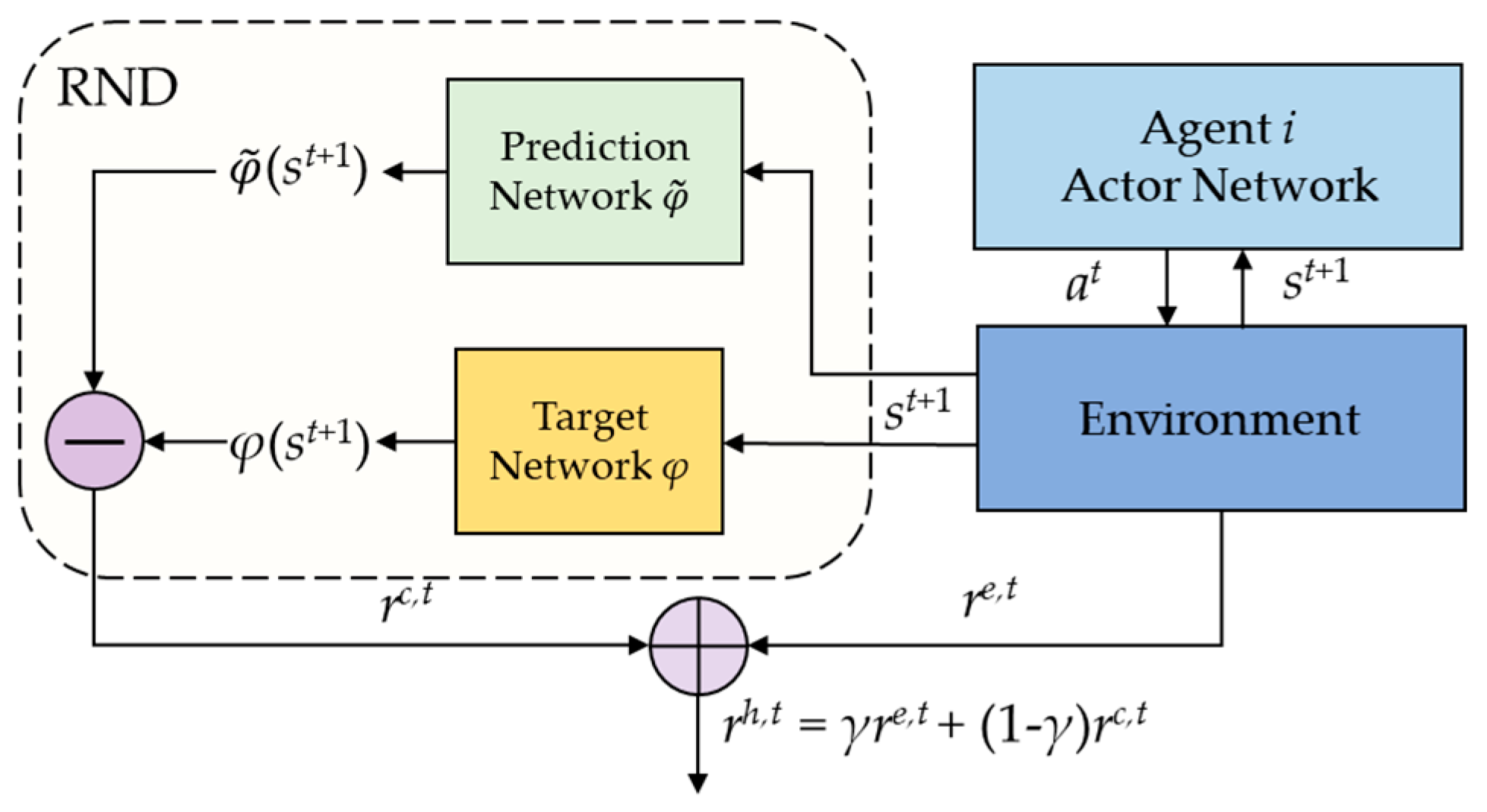

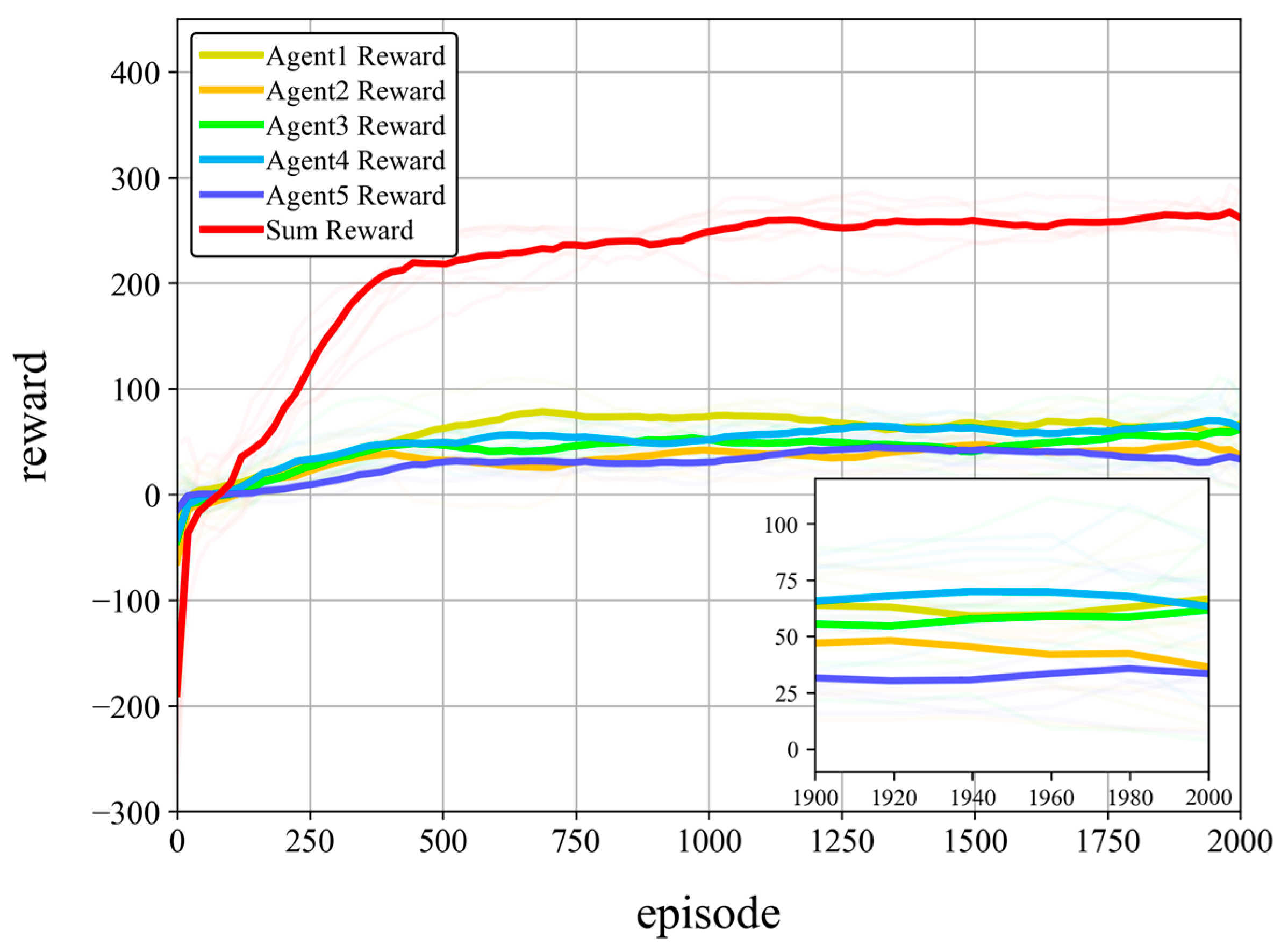

- To tackle the challenges of dynamically varying state dimensions, sparse extrinsic rewards, and limited resources when solving the HELS–UAV–DRTA problem via DRL, we proposed the MADDPG-IA (Multi-Agent Deep Deterministic Policy Gradient, MADDPG; I: intrinsic reward, and A: attention mechanism) algorithm. An attention-based encoder aggregates variable-length target states into fixed-size representations, while a Random Network Distillation (RND)-based intrinsic reward module provides dense exploration rewards, substantially boosting both performance and practicality.

- (3)

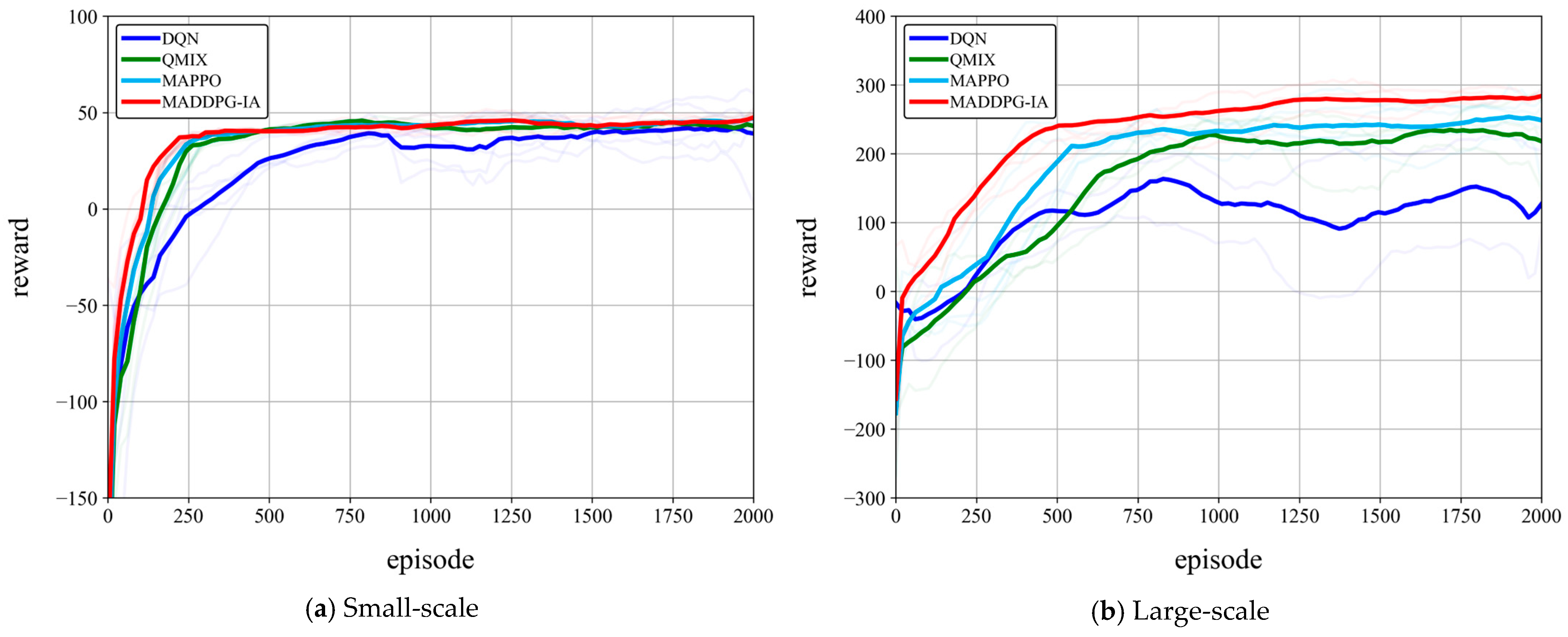

- Taking the defense problems of small-scale and large-scale UAV swarms as examples, comprehensively considering factors such as the atmospheric environment and swarms’ density, a typical HELS–UAV–DRTA scenario with the background of rural, desert, and coastal regions was established, providing ideas for solving actual problems. Experiments in various scenarios show the effectiveness and applicability of the MADDPG-IA algorithm in solving the HELS–UAV–DRTA problem. Through ablation experiments and algorithm comparison experiments, it is verified that the MADDPG-IA algorithm has significant convergence ability and stability, and has a stronger exploration ability.
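The attention-based aggregation mentioned in contribution (2) can be illustrated with a minimal sketch. It assumes single-head scaled dot-product attention with one query vector; the function name `attention_pool`, the projection matrices `w_key` and `w_value`, and all dimensions are hypothetical and are not taken from the paper's encoder.

```python
import math
import random


def attention_pool(targets, query, w_key, w_value):
    """Pool a variable-length list of target feature vectors into one
    fixed-size vector (hypothetical single-head attention, one query)."""
    d_v = len(w_value)
    if not targets:  # no targets in view: return an all-zero summary
        return [0.0] * d_v

    def project(matrix, x):
        # matrix is a list of rows; each row has len(x) entries
        return [sum(m * xi for m, xi in zip(row, x)) for row in matrix]

    keys = [project(w_key, t) for t in targets]
    values = [project(w_value, t) for t in targets]
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    top = max(scores)  # subtract the max before exp for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]  # attention weights, sum to 1
    return [sum(a * v[i] for a, v in zip(alphas, values)) for i in range(d_v)]


rng = random.Random(0)
FEAT, D_K, D_V = 4, 3, 5  # illustrative sizes only (assumed)
w_key = [[rng.gauss(0, 1) for _ in range(FEAT)] for _ in range(D_K)]
w_value = [[rng.gauss(0, 1) for _ in range(FEAT)] for _ in range(D_V)]
query = [rng.gauss(0, 1) for _ in range(D_K)]

few = [[rng.random() for _ in range(FEAT)] for _ in range(3)]
many = [[rng.random() for _ in range(FEAT)] for _ in range(7)]
pooled_few = attention_pool(few, query, w_key, w_value)
pooled_many = attention_pool(many, query, w_key, w_value)
print(len(pooled_few), len(pooled_many))  # both equal D_V
```

Because the softmax weights are computed per target and then summed, the output size depends only on `D_V`, and reordering the targets leaves the pooled vector unchanged up to floating-point rounding.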

2. Modeling the HELS–UAV–DRTA Problem

2.1. Laser Damage Model: Transmission and Thermal Effects

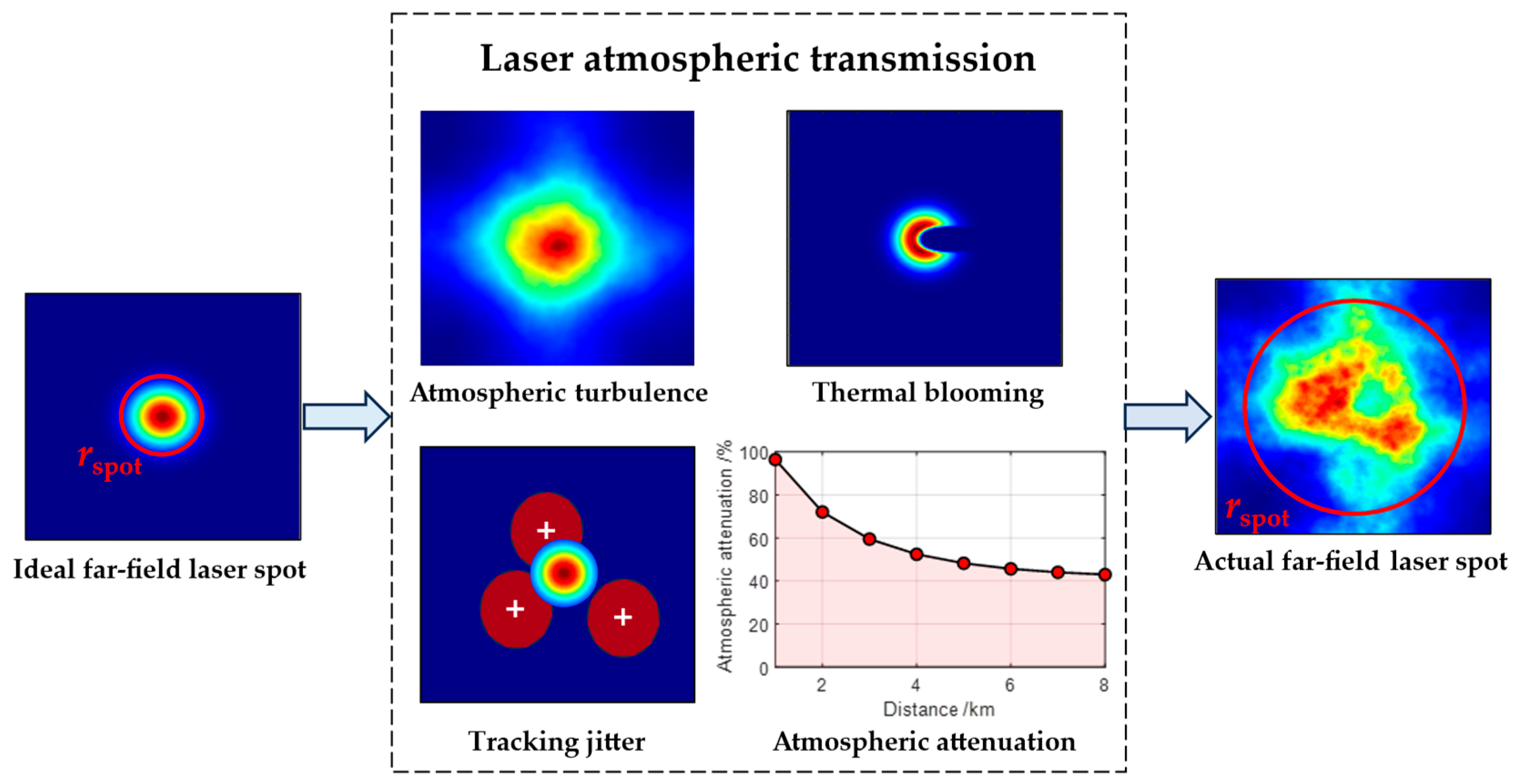

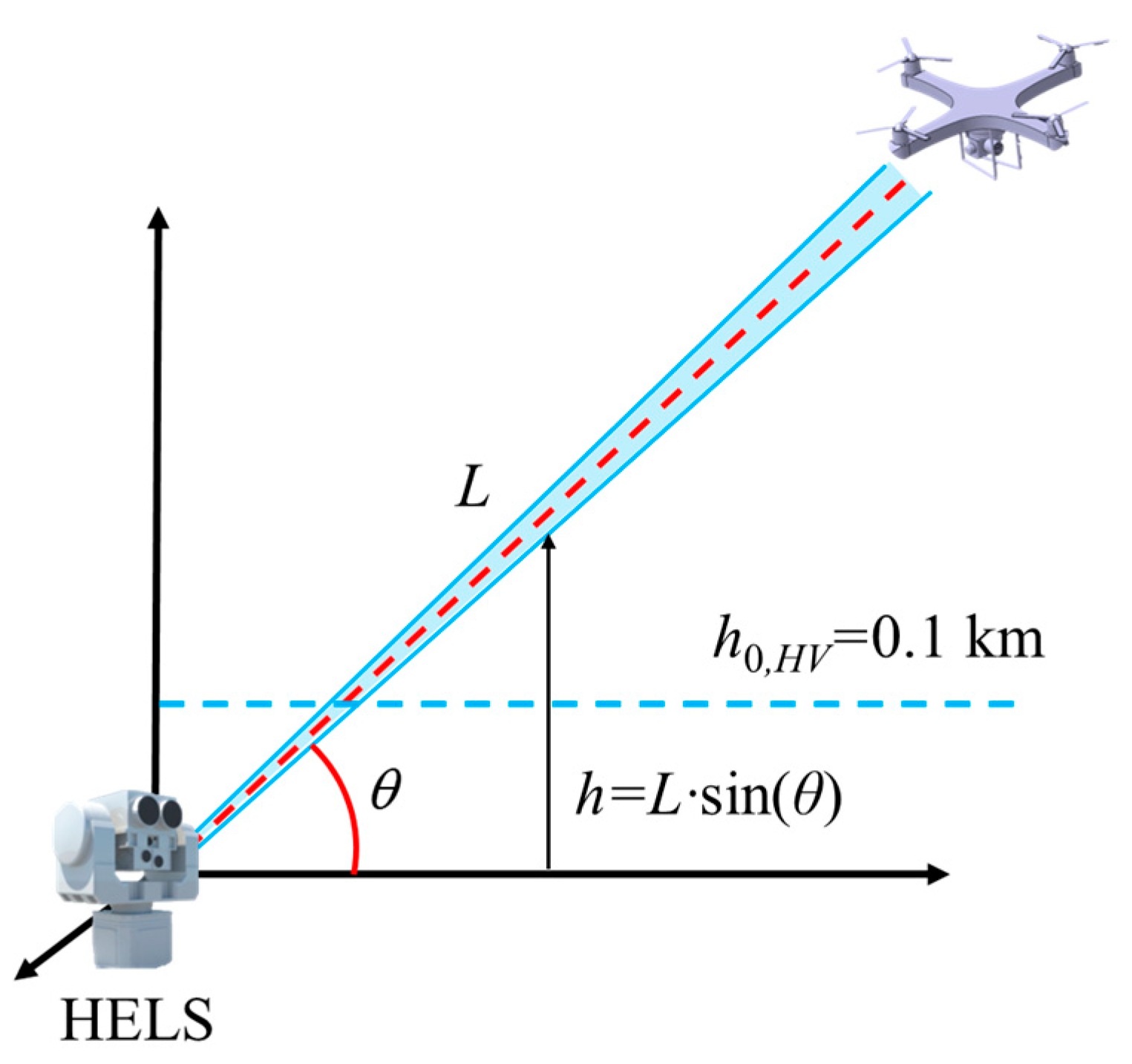

2.1.1. Laser Atmospheric Transmission Model

- (1) The influence of atmospheric turbulence on βT

- (2) The influence of the thermal blooming effect on βB

- (3) The influence of tracking jitter on βJ

- (4) Atmospheric attenuation of laser power

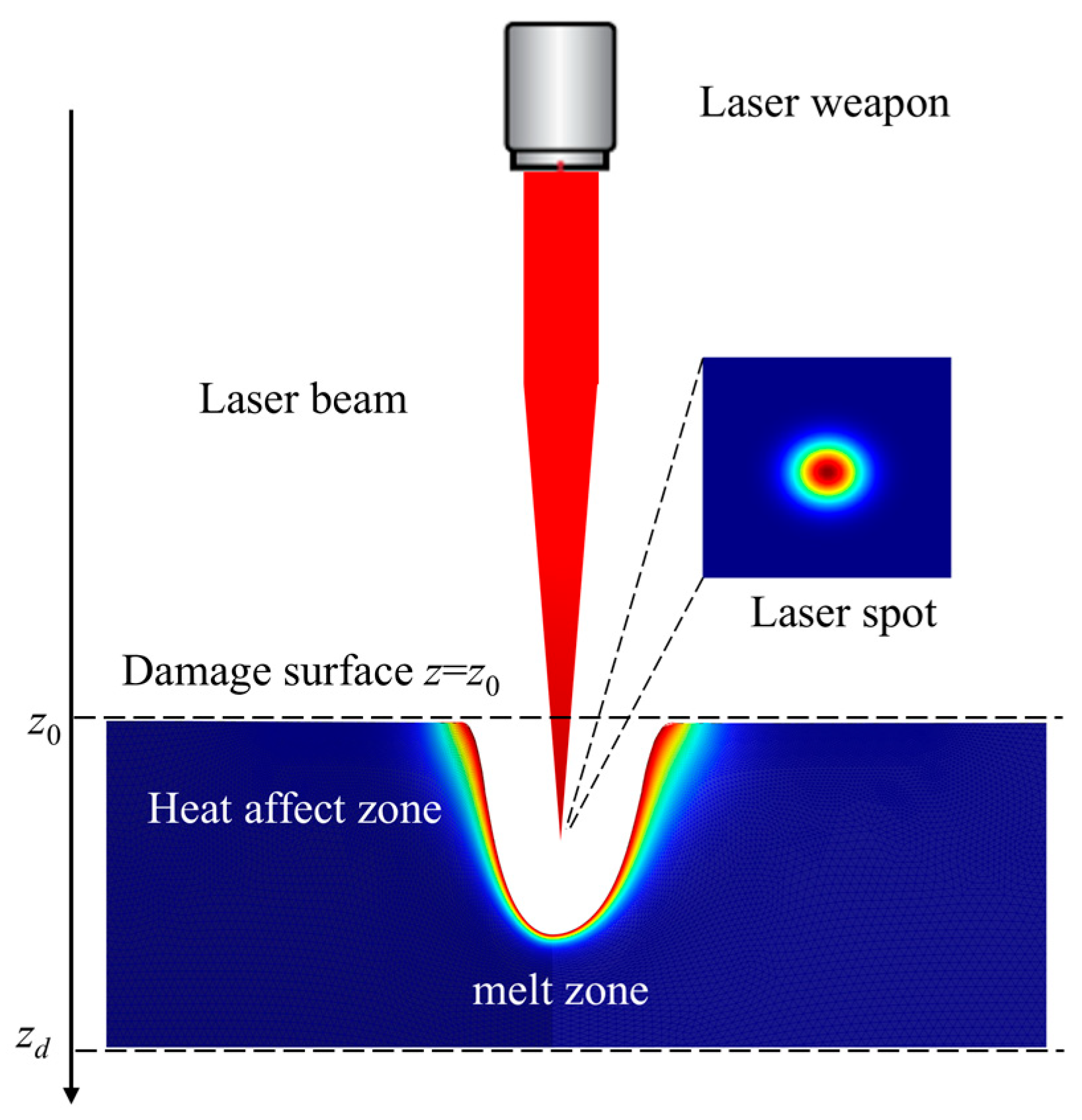

2.1.2. Laser Thermal Damage Model

2.2. Malicious UAV Swarm Density Model

- (1)

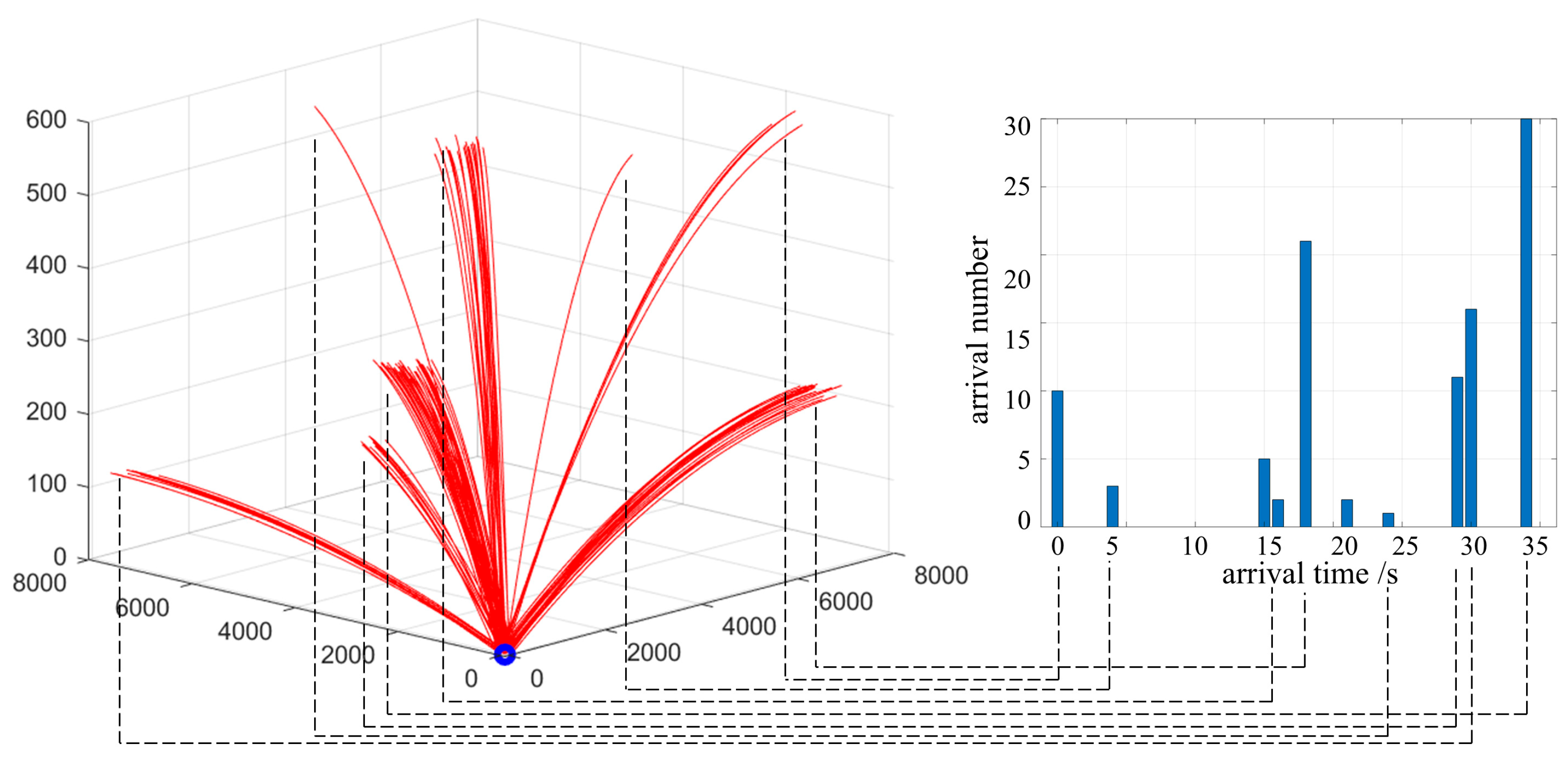

- The malicious UAV swarm density model of multi-direction and multi-batch intrusion. UAV swarms may fly from multiple directions and disperse into multiple waves, and each wave invades at certain time intervals. This mode can disperse the defense forces and improve the efficiency of invasion. If each wave of unmanned aerial vehicles takes off in a random order, the time interval for the defense side to discover the target can be considered to follow a lognormal distribution:where μ and σ2 are, respectively, the expectation and variance of the lognormal distribution. If μ increases, the average arrival time interval will increase. If σ increases, the reached density will become more dispersed. The schematic diagram of the multi-direction and multi-batch intrusion model is shown in the Figure 7. The left side of Figure 7 shows the spatial situation of the UAV swarm in the fan-shaped space of the defending side, and the right side of Figure 7 shows the arrival time interval and quantity of each wave.

- (2) The malicious UAV swarm density model of large-scale simultaneous intrusion. By launching a large number of UAVs in a short period of time, the attacker saturates the processing capacity of the defense side so that it cannot defend effectively, which increases the probability of a UAV intrusion. The core idea is to form an absolute quantitative advantage in a short period of time. When the UAVs take off simultaneously, the time interval at which the defense side discovers targets follows a uniform distribution on [a, b], where a and b are the lower and upper limits of the UAV arrival time interval.
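The two arrival models above can be sketched as a small sampler. This is an illustration under assumptions: the function name `detection_times` and the time unit are hypothetical, the defaults μ = 1 and σ = 2 are the target flow density parameters listed in the parameter table, and the uniform bounds are placeholders. In Python's `random.lognormvariate(mu, sigma)`, `mu` and `sigma` are the mean and standard deviation of the underlying normal distribution.

```python
import random


def detection_times(n_waves, mode, rng, mu=1.0, sigma=2.0, a=0.0, b=1.0):
    """Return cumulative detection times for n_waves arrivals.

    mode="lognormal": multi-direction, multi-batch intrusion.
    mode="uniform":   large-scale simultaneous intrusion on [a, b].
    """
    times, t = [], 0.0
    for _ in range(n_waves):
        if mode == "lognormal":
            dt = rng.lognormvariate(mu, sigma)  # interval between waves
        elif mode == "uniform":
            dt = rng.uniform(a, b)
        else:
            raise ValueError(f"unknown mode: {mode}")
        t += dt
        times.append(t)
    return times


rng = random.Random(42)
multi_batch = detection_times(5, "lognormal", rng)
simultaneous = detection_times(50, "uniform", rng, a=0.0, b=0.5)
print(len(multi_batch), len(simultaneous))
```

A larger `sigma` spreads the lognormal intervals over several orders of magnitude, while the uniform mode keeps every interval inside the fixed window, which is what produces the saturation effect described for simultaneous intrusion.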

2.3. HELS–UAV–DRTA Model Formulation with Threat and Benefit Factors

2.3.1. Quantification of Target Threat and Defense Benefit Factors

- (1) The threat factor of the target height. The height of a UAV above the ground directly affects its probability of invasion. UAVs flying at low altitudes are more likely to penetrate, and the atmospheric environment at low altitude is more complex, which directly affects the damage time of HELSs; for both reasons, their threat factor is higher than that of targets flying at high altitudes. The height threat factor is therefore modeled with an exponential decay function. The model yields a normalized height-based threat factor from the height of the j-th target and the maximum height at which any target is first discovered by the detection system, with a positive parameter that controls the steepness of the decay curve. The larger this parameter, the more sharply the low-altitude threat increases, which meets the sensitivity requirement of low-altitude threat assessment. Physically, the lower the target flies, the faster its threat factor grows and the more easily it can invade.

- (2) The threat factor of the target velocity. The maneuverability of a UAV increases with its velocity. However, because power performance is limited, an excessive speed means that the payload capacity of the UAV is insufficient. The velocity threat factor is therefore modeled with an exponential function of the actual flight velocity of each UAV and a preset speed, and is normalized. Physically, the closer the velocity of the UAV is to the preset speed, the more threatening it is; a velocity that is either too high or too low reduces its threat level.

- (3) The threat factor of the safe distance from the protected assets. The safe distance is the remaining distance the UAV must fly, along the shortest path from its current state, to invade the protected target. The shorter this remaining distance, the shorter the time window for defense and the greater the urgency. The distance threat factor is therefore modeled as a normalized linear function of the distance between each UAV and the protected assets, using the farthest distance at which a target is first discovered and the radius of the secure airspace of the protected assets. Physically, the smaller the remaining distance between the UAV and the protected assets, the greater the threat.

- (4) The benefit factor of HELS resource consumption. The energy storage of an HELS is limited [43]. When dealing with UAV swarms, priority should be given to targets with shorter damage times so that more targets can be defended with limited resources. Furthermore, UAVs with similar spatial angles should be selected to reduce the irradiation transfer time and improve the damage efficiency. The resource consumption benefit factor of HELS i selecting target j is therefore modeled with a sigmoid function, with a positive parameter that controls the steepness of the benefit curve. The larger this parameter, the more sharply the interception benefit decreases. Physically, when defending against UAV swarms, an extremely high priority is given to targets that can be destroyed in a short time, while the preference for targets that need a long irradiation time drops rapidly.

- (5) The benefit factor of the HELS application value. HELSs differ in performance, and so do their application values. Although high-performance HELSs can destroy targets quickly, their usage cost is relatively high. In addition, HELSs with more remaining power should be used first, so that the concentrated use of one HELS does not deplete its resources and make subsequent tasks impossible. The application value benefit factor of the i-th HELS irradiating the j-th target is therefore modeled as a linear function, governed by a linear weighting control parameter, of the initial power Pi of the i-th HELS and its remaining battery magazine. Physically, HELSs with a lower power and a larger remaining battery magazine are used first to save application value.
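The five factors above can be sketched in code to show their qualitative shapes. The functional forms, function names, and parameter defaults below (`k_h`, `t_mid`, `k_t`, `w`, and the 1 km safe radius in the usage lines) are assumptions chosen to match the verbal descriptions (exponential decay, exponential bump, linear, sigmoid, linear weighting); they are not the paper's equations.

```python
import math


def height_threat(h, h_max, k_h=3.0):
    """Exponential decay in normalized height: lower targets score higher."""
    return math.exp(-k_h * h / h_max)


def velocity_threat(v, v_ref):
    """Exponential (Gaussian-shaped) bump that peaks at the preset speed."""
    return math.exp(-((v - v_ref) / v_ref) ** 2)


def distance_threat(dist, d_max, d_safe):
    """Linear in remaining distance, clipped to [0, 1]; closer scores higher."""
    ratio = (d_max - dist) / (d_max - d_safe)
    return min(1.0, max(0.0, ratio))


def resource_benefit(t_damage, t_mid=10.0, k_t=0.5):
    """Decreasing sigmoid in damage time: short irradiation is preferred."""
    return 1.0 / (1.0 + math.exp(k_t * (t_damage - t_mid)))


def value_benefit(p_init, p_max, t_remaining, t_full, w=0.5):
    """Linear weighting of low power and large remaining battery magazine."""
    return w * (1.0 - p_init / p_max) + (1.0 - w) * (t_remaining / t_full)


# Illustrative values within the ranges of the parameter table
# (heights 0.2-0.8 km, speeds 0.02-0.03 km/s, detection at 8 km).
print(height_threat(0.2, 0.8), height_threat(0.8, 0.8))
print(velocity_threat(0.025, 0.025), velocity_threat(0.03, 0.025))
print(distance_threat(2.0, 8.0, 1.0), distance_threat(7.0, 8.0, 1.0))
print(resource_benefit(5.0), resource_benefit(20.0))
print(value_benefit(20.0, 50.0, 200.0, 200.0), value_benefit(50.0, 50.0, 50.0, 200.0))
```

Each function returns a value in [0, 1], so the factors can be combined into a weighted objective without further scaling; how the paper actually combines them is not shown in this sketch.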

2.3.2. Mathematical Formulation

- (1) Environmental boundary. The model is built upon the Hufnagel–Valley turbulence model and the LOWTRAN-7 atmospheric-attenuation model. It applies to atmospheric conditions with medium or weaker turbulence (Cn² < 2.5 × 10⁻¹³ m^(−2/3)) and with visibility no worse than light haze (visibility ≥ 5 km). Under extreme weather conditions such as heavy rain and fog, the damage conditions no longer hold.

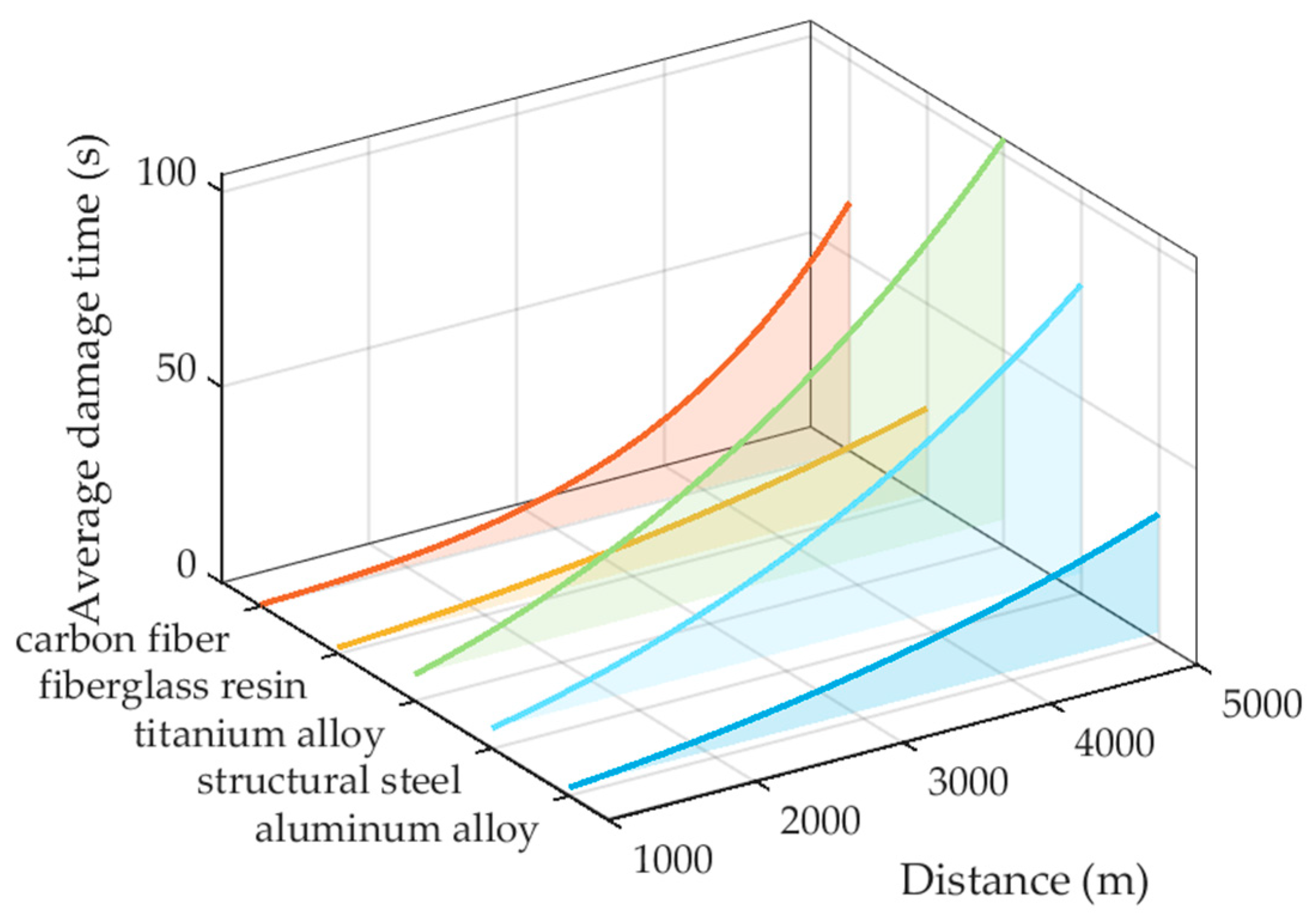

- (2) Target boundary. The model is designed for small commercial UAVs and assumes that their material is easily damaged; it is not applicable to high-maneuverability military UAVs or supersonic targets. Given that the effective damage range of HELSs is at the kilometer level, the target distance is typically set to L ≤ 10 km. If the target is instead made of a carbon-fiber–ceramic-coated composite structure, titanium alloy, or a surface-coated polyimide with high laser resistance, a higher-power HELS should be used in the calculation.

- (3) System boundary. The results are valid only within the “material–power” feasible region; that is, the HELS battery magazine must be able to support the cumulative irradiation time of all selected targets. If the required damage time far exceeds the capability of the system, the system should drop that target or adjust the laser power or illumination strategy at the initial stage.
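The three boundaries above translate into simple validity checks. The sketch below uses the thresholds stated in the text (Cn² < 2.5 × 10⁻¹³ m^(−2/3), visibility ≥ 5 km, L ≤ 10 km); the function names and the greedy filtering rule in `feasible_targets` are assumptions for illustration, not the paper's procedure.

```python
def within_model_bounds(cn2, visibility_km, distance_km):
    """Check the environmental and target boundaries stated in the text."""
    return cn2 < 2.5e-13 and visibility_km >= 5.0 and distance_km <= 10.0


def feasible_targets(damage_times_s, battery_s):
    """Keep targets, in order, whose cumulative irradiation time still fits
    the battery magazine; targets that do not fit are dropped (assumed rule)."""
    kept, used = [], 0.0
    for idx, t in enumerate(damage_times_s):
        if used + t <= battery_s:
            kept.append(idx)
            used += t
    return kept, used


print(within_model_bounds(1e-15, 5.0, 7.5))   # True: inside all bounds
print(within_model_bounds(1e-15, 3.0, 7.5))   # False: visibility too low
print(feasible_targets([60.0, 90.0, 120.0, 30.0], 200.0))  # ([0, 1, 3], 180.0)
```

With a 200 s battery magazine, the third target (120 s) would push the cumulative irradiation time to 270 s, so it is skipped while the shorter fourth target still fits.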

3. MADDPG-IA Algorithm Design and Implementation

3.1. MADDPG Framework in HELS–UAV–DRTA

- (1) State space design

- (2) Discrete action space

- (3) Reward function

3.2. Enhanced MADDPG with Attention and Intrinsic Reward Mechanisms

3.2.1. Attention-Based State Encoding

3.2.2. Intrinsic Reward-Driven Exploration

3.2.3. MADDPG-IA Algorithm Workflow

| Algorithm 1. Pseudocode of the MADDPG-IA Algorithm for the HELS–UAV–DRTA Environment |
|---|
| 1. Initialize the parameters of the Actor and Critic networks and of their target networks, the experience replay pool D, the discount factor γ, the soft update parameter, the adaptive coefficient, and the target-network and prediction-network parameters of the RND-based intrinsic reward module. |
| 2. For episode = 1 to MaxEpisode Do |
| 3. Reset the HELS–UAV–DRTA environment; obtain the initial state. |
| 4. While not Done |
| 5. Attention-encode the observation of each agent to obtain its state at time t. |
| 6. Select the action of each agent based on the current Actor network. |
| 7. Execute the joint action; obtain the observed reward and the next state. |
| 8. Calculate the intrinsic reward using Equation (46) and the hybrid reward using Equation (47). |
| 9. Store the experience in the experience replay pool D. |
| 10. For agent i = 1 to N Do |
| 11. Sample a batch of data from D. |
| 12. Update the Critic network by minimizing the loss function according to Equation (36). |
| 13. Update the Actor network through gradient descent according to Equation (33). |
| 14. Soft-update the target-network parameters according to Equation (37). |
| 15. Update the prediction network of the intrinsic reward module through gradient descent to minimize its prediction error. |
| 16. End For |
| 17. If tbattery,i = 0 or nUAV = 0 or min(L) < Lsafe, set Done. |
| 18. End While |
| 19. End For |
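Steps 8, 14, and 15 of Algorithm 1 can be illustrated with a minimal sketch. It assumes linear target and prediction maps instead of neural networks, a mean-squared prediction error as the intrinsic reward, and an additive hybrid reward scaled by a coefficient; these forms, the class name `RND`, and the helper names are hypothetical stand-ins, since Equations (37), (46), and (47) are not reproduced here. The soft update uses the standard form θ' ← τθ + (1 − τ)θ'.

```python
import random


def make_linear(in_dim, out_dim, rng):
    """Random weight matrix stored as a list of rows."""
    return [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]


def forward(weights, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


class RND:
    """Random Network Distillation with linear maps (simplified sketch)."""

    def __init__(self, state_dim, embed_dim, rng, lr=0.1):
        self.target = make_linear(state_dim, embed_dim, rng)     # fixed, never trained
        self.predictor = make_linear(state_dim, embed_dim, rng)  # trained to match target
        self.lr = lr

    def intrinsic_reward(self, state):
        """Mean squared error between predictor and target outputs."""
        t = forward(self.target, state)
        p = forward(self.predictor, state)
        return sum((pi - ti) ** 2 for pi, ti in zip(p, t)) / len(t)

    def update(self, state):
        """One gradient-descent step on the prediction error (step 15)."""
        t = forward(self.target, state)
        p = forward(self.predictor, state)
        n = len(t)
        for k, row in enumerate(self.predictor):
            grad_scale = 2.0 * (p[k] - t[k]) / n
            for j in range(len(row)):
                row[j] -= self.lr * grad_scale * state[j]


def hybrid_reward(r_ext, r_int, coeff):
    """Assumed additive mix of extrinsic and intrinsic reward (step 8)."""
    return r_ext + coeff * r_int


def soft_update(target_params, online_params, tau):
    """Soft update of target parameters (step 14)."""
    return [tau * o + (1.0 - tau) * t for t, o in zip(target_params, online_params)]


rnd = RND(state_dim=4, embed_dim=3, rng=random.Random(1))
state = [0.5, -0.2, 0.1, 0.3]
novel = rnd.intrinsic_reward(state)
for _ in range(200):          # revisiting the same state makes it less novel
    rnd.update(state)
familiar = rnd.intrinsic_reward(state)
print(novel > familiar, hybrid_reward(1.0, familiar, 0.1))
print(soft_update([0.0, 1.0], [1.0, 1.0], tau=0.01))
```

The intrinsic reward shrinks for states that have been visited often, which is what gives a dense exploration signal when extrinsic rewards are sparse; τ = 0.01 matches the soft update parameter in the parameter table.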

4. Simulation and Analysis

4.1. Experimental Environment and Parameter Settings

4.2. Typical Scenario Experiments of HELS–UAV–DRTA

4.2.1. Experimental Simulation Analysis of Typical Scenarios

4.2.2. Model Generalization Experiment

- (1)

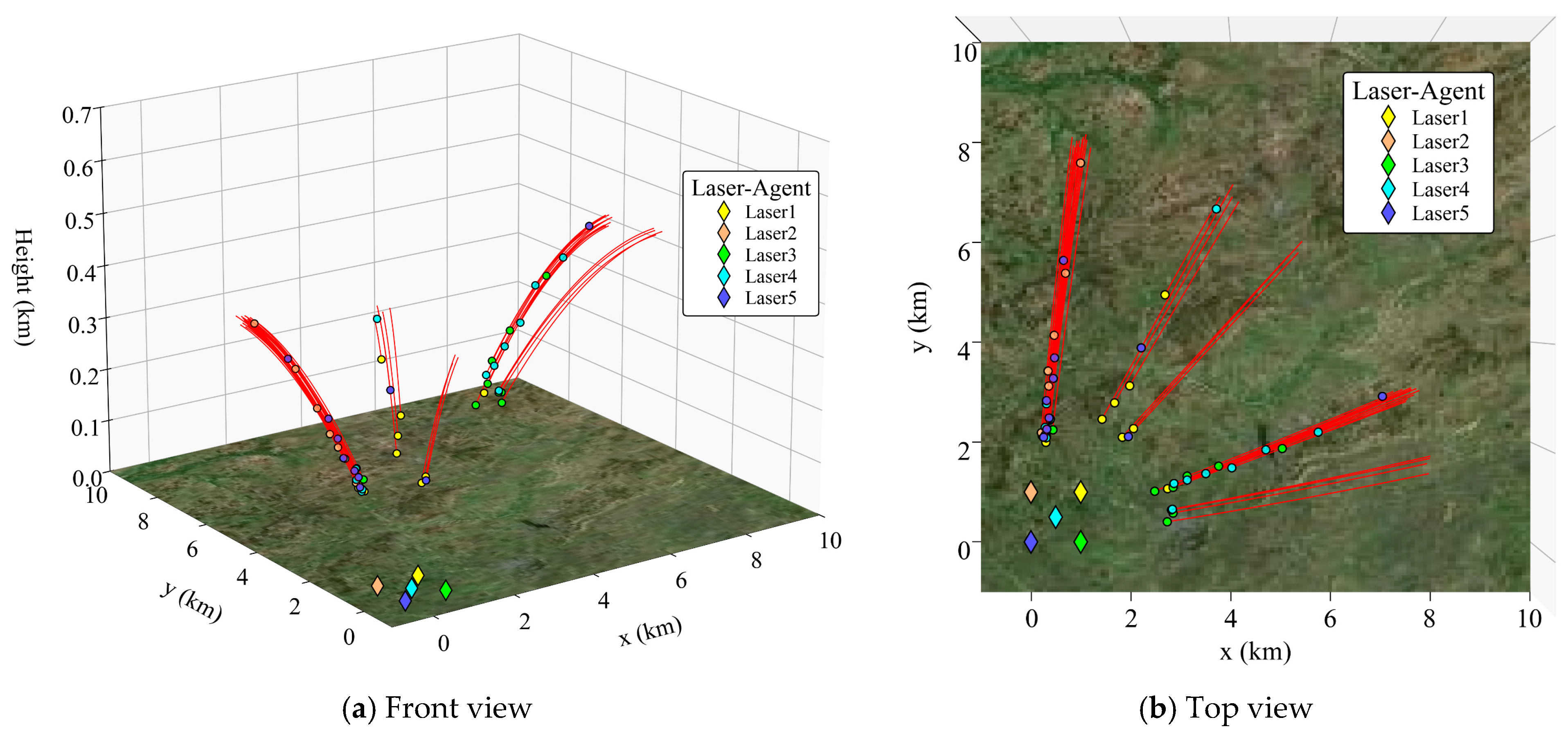

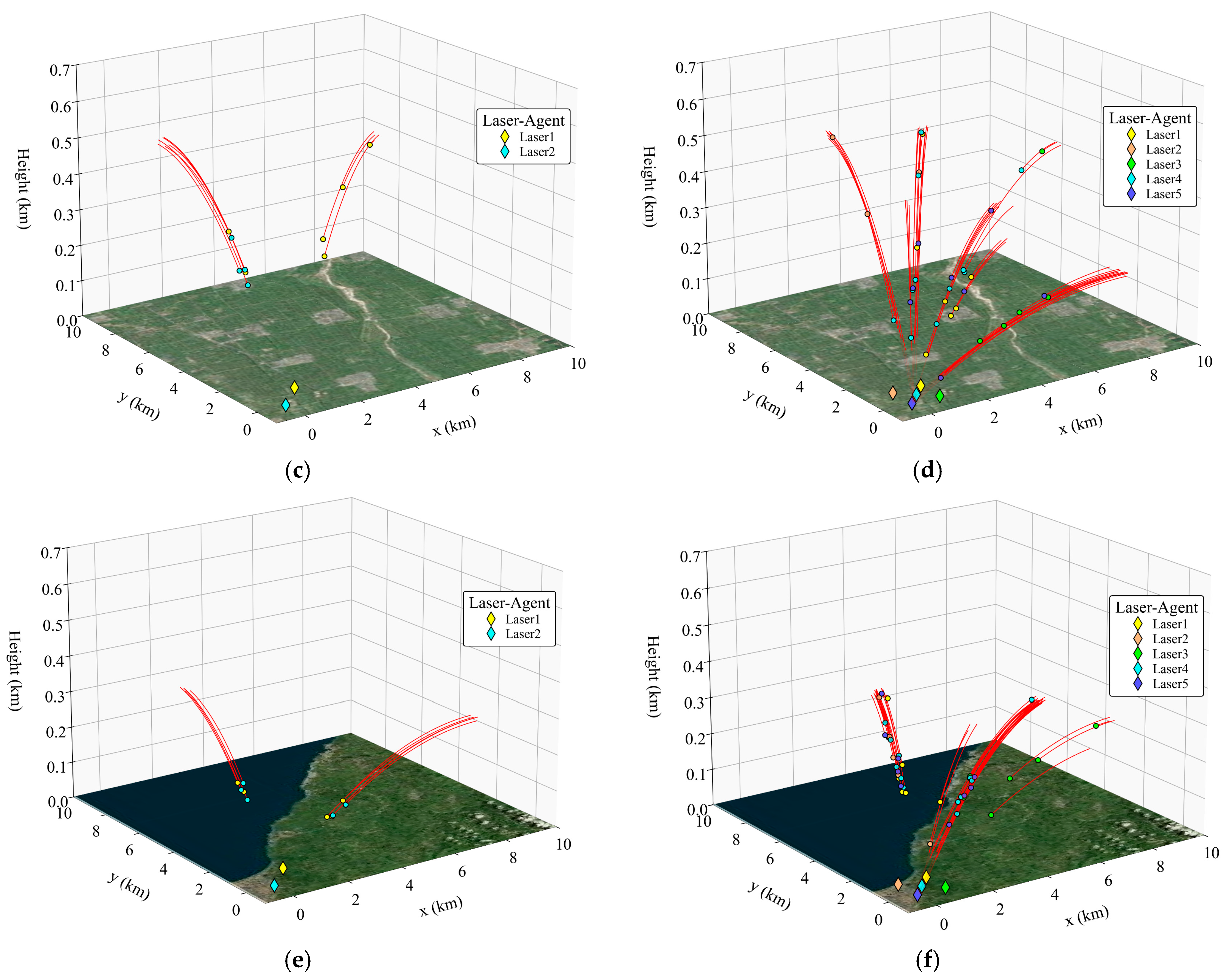

- The scale of the UAV swarm affects the damage decision. Under the same atmospheric environment, the decision-making timing of each HELS agent changes with the variation in the scale of the UAV swarm. Take Figure 13a,b as examples. In the small-scale scenario shown in Figure 13a, due to the smaller number of targets in the airspace, the HELS agent has more reaction time. Therefore, on the premise of ensuring safety, the HELS agent will delay the damage decision, choose to irradiate the target at a closer distance, and exchange a shorter irradiation time for damage for a higher damage benefit. In Figure 13b, as the number of targets increases, the agent cannot bear the risk of the strategy of delaying decision-making to wait for the optimal timing. Therefore, it will make decisions as early as possible to damage as many targets as possible. In the above-mentioned desert environment, the distance for the first irradiation of the target in the small-scale scene was 3.79 km, and that in the large-scale scene was 7.18 km.

- (2) The atmospheric environment affects the damage decision. When the atmospheric environment is favorable, the damage capability of HELSs is strong, so the HELS agent has more flexible decision-making strategies and can obtain more decision-making opportunities by intercepting at a closer distance. In the small-scale scenarios (Figure 13a,c,e), the distances of the first target interception were 3.79 km (desert), 6.69 km (rural), and 4.21 km (coastal), respectively. In addition, when the atmospheric environment differs significantly between the HELSs and the UAVs, the atmospheric fluctuations are pronounced and the damage capabilities differ considerably. For instance, in the coastal atmospheric environment, the HELS agent prioritizes irradiating targets over the sea surface that are easy to intercept, to achieve a higher damage efficiency. In the large-scale scenarios (Figure 13b,d,f and Table 2), the mean damage rates over 100 independent runs per scenario are 99.65% ± 0.32% (rural), 79.37% ± 2.15% (desert), and 91.25% ± 1.78% (coastal), indicating that the HELS–UAV–DRTA model adapts its decisions to the atmospheric environment. The traditional “detect and intercept” strategy, evaluated under identical conditions for a fair comparison, achieves average interception rates of 72.64% ± 3.21%, 51.29% ± 4.87%, and 67.38% ± 3.95% in the same large-scale scenarios (100 runs per scenario); the proposed model thus significantly improves the interception efficiency of multiple HELSs against UAV swarms. The performance of HELSs and the distribution pattern and maneuverability of UAVs also affect damage decision-making, but they are not discussed here.
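The size of the improvement over the traditional strategy follows directly from the reported means. The short calculation below uses only the values from Table 2; the dictionary layout and the function name `gains_in_points` are illustrative.

```python
# Mean damage/interception rates (%) from Table 2, large-scale scenarios:
# (MADDPG-IA, traditional "detect and intercept")
results = {
    "rural": (99.65, 72.64),
    "desert": (79.37, 51.29),
    "coastal": (91.25, 67.38),
}


def gains_in_points(table):
    """Absolute improvement in percentage points, rounded to two decimals."""
    return {env: round(ours - base, 2) for env, (ours, base) in table.items()}


gains = gains_in_points(results)
print(gains)  # {'rural': 27.01, 'desert': 28.08, 'coastal': 23.87}
```

The gain is between roughly 24 and 28 percentage points in all three environments, with the largest absolute gain in the desert (light haze) scenario, where both strategies perform worst.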

4.3. Algorithm Performance Verification of MADDPG-IA

4.3.1. Algorithm Performance in Typical Scenarios

4.3.2. Ablation Experiment

4.3.3. Comparison Experiment of Algorithms

5. Conclusions and Outlook

- (1) Based on the effects of laser atmospheric transmission and thermal damage, we constructed a quantitative characterization of the damage capability of HELSs with the damage time as the core index. Considering the spatio-temporal characteristics of malicious UAV swarms, we proposed an HELS–UAV–DRTA model whose objective functions are the threat factors of UAVs and the damage benefit factors of HELSs. Within the MADDPG framework, the state space, action space, and reward function were adapted to the problem. In the typical scenario experiments designed in this paper, the HELS–UAV–DRTA model dynamically optimizes HELS resource allocation according to weather conditions and real-time information, evolving strategies such as delaying decisions to await the optimal timing and cross-region coordination.

- (2) We proposed the MADDPG-IA algorithm for the HELS–UAV–DRTA problem. A state-encoding network based on the attention mechanism handles the dynamically changing state dimension, and an RND-based intrinsic reward module overcomes the exploration difficulty under sparse rewards. Experiments on typical scenarios of various scales show the effectiveness and applicability of the MADDPG-IA algorithm in solving the HELS–UAV–DRTA problem. In large-scale scenarios, damage rates of 99.65% ± 0.32%, 79.37% ± 2.15%, and 91.25% ± 1.78% are achieved in rural (sunshine/weak turbulence), desert (light haze/medium turbulence), and coastal (sunshine/weak turbulence) environments, respectively (100 runs per scenario). Compared with the interception rates of 72.64% ± 3.21%, 51.29% ± 4.87%, and 67.38% ± 3.95% of the traditional “detect and intercept” strategy (100 runs per scenario), MADDPG-IA significantly enhances the interception efficiency of multiple HELSs against UAV swarms. The algorithm comparison experiments show that MADDPG-IA has a better convergence speed and stability in small-scale scenarios and maintains its progressive optimization ability in large-scale scenarios. Its global average returns exceed those of DQN, QMIX, and MAPPO by 48.2%, 20.1%, and 14.7%, respectively, and it explores more effectively. The high damage rates across scales and atmospheric conditions indicate practical defense value: a lower intrusion risk for critical assets, an efficient use of laser resources that enables sustained defense, and a cost-effective alternative to traditional interceptors for swarm suppression.

- (3) In future research, we will further study the dynamic combinatorial optimization problem, the soft/hard damage modes and probability model of laser destruction of UAVs, the intelligent path planning of UAV swarms, and the game confrontation between the two sides, and we will construct a comprehensive research framework that provides optimal strategies for defending against UAV swarms.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Javed, S.; Hassan, A.; Ahmad, R.; Ahmed, W.; Ahmed, R.; Saadat, A.; Guizani, M. State-of-the-Art and Future Research Challenges in UAV Swarms. IEEE Internet Things J. 2024, 11, 19023–19045.
2. Extance, A. Military Technology: Laser Weapons Get Real. Nature 2015, 521, 408–411.
3. Manne, A. A Target-Assignment Problem. Oper. Res. 1958, 3, 346–357.
4. Andersen, A.C.; Pavlikov, K.; Toffolo, T.A.M. Weapon-Target Assignment Problem: Exact and Approximate Solution Algorithms. Ann. Oper. Res. 2022, 312, 581–606.
5. Peng, Z.; Lu, Z.; Mao, X.; Ye, F.; Huang, K.; Wu, G.; Wang, L. Multi-Ship Dynamic Weapon-Target Assignment via Cooperative Distributional Reinforcement Learning With Dynamic Reward. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 9, 1843–1859.
6. Yang, R.; Li, C. Application of particle swarm optimization in anti-UAV fire allocation of laser weapon. Command. Inf. Syst. Technol. 2021, 12, 70–75.
7. Shi, L.; Pei, Y.; Yun, Q.; Ge, Y. Agent-Based Effectiveness Evaluation Method and Impact Analysis of Airborne Laser Weapon System in Cooperation Combat. Chin. J. Aeronaut. 2023, 36, 442–454.
8. Hemani, K.; Georges, K. Applications of Lasers for Tactical Military Operations. IEEE Access 2017, 5, 20736–20753.
9. Karr, T.; Trebes, J. The New Laser Weapons. Phys. Today 2024, 77, 32–38.
10. Li, M.; Chang, X.; Shi, J.; Chen, C.; Huang, J.; Liu, Z. Developments of weapon target assignment: Models, algorithms and applications. Syst. Eng. Electron. 2023, 45, 1049–1071.
11. Chang, T.; Kong, D.; Hao, N.; Xu, K.; Yang, G. Solving the Dynamic Weapon Target Assignment Problem by an Improved Artificial Bee Colony Algorithm with Heuristic Factor Initialization. Appl. Soft Comput. 2018, 70, 845–863.
12. Xu, H.; Zhang, A.; Bi, W.; Xu, S. Dynamic Gaussian Mutation Beetle Swarm Optimization Method for Large-Scale Weapon Target Assignment Problems. Appl. Soft Comput. 2024, 162, 111798.
13. Hanák, J.; Novák, J.; Ben-Asher, J.Z.; Chudý, P. Cross-Entropy Method for Laser Defense Applications. J. Aerosp. Inf. Syst. 2025, 22, 53–58.
14. Taylor, A.B. Counter-Unmanned Aerial Vehicles Study: Shipboard Laser Weapon System Engagement Strategies for Countering Drone Swarm Threats in the Maritime Environment. Ph.D. Thesis, Naval Postgraduate School, Monterey, CA, USA, 2021.
15. Gong, H.; Liu, Y.; Xu, K.; Xu, W.; Sui, G. Research on Dynamic Photoelectric Weapon-Target Assignment Problem Based on NSGA-II. In Proceedings of the 2023 11th China Conference on Command and Control, Beijing, China, 24–25 October 2023; Chinese Institute of Command and Control, Ed.; Springer Nature: Singapore, 2024; pp. 626–637.
16. Xu, W.; Chen, C.; Ding, S.; Pardalos, P.M. A Bi-Objective Dynamic Collaborative Task Assignment under Uncertainty Using Modified MOEA/D with Heuristic Initialization. Expert Syst. Appl. 2020, 140, 112844.
17. Guo, D.; Liang, Z.; Jiang, P.; Dong, X.; Li, Q.; Ren, Z. Weapon-Target Assignment for Multi-to-Multi Interception with Grouping Constraint. IEEE Access 2019, 7, 34838–34849.
18. Davis, M.T.; Robbins, M.J.; Lunday, B.J. Approximate Dynamic Programming for Missile Defense Interceptor Fire Control. Eur. J. Oper. Res. 2017, 259, 873–886.
19. Wang, X.; Zhang, Y.; Wang, G. Target Assignment for Multiple Stages of Weapons Systems Using a Deep Q-Learning Network and a Modified Artificial Bee Colony Method. Comput. Electr. Eng. 2024, 118, 109378.
20. Xin, B.; Wang, Y.; Chen, J. An Efficient Marginal-Return-Based Constructive Heuristic to Solve the Sensor–Weapon–Target Assignment Problem. IEEE Trans. Syst. Man Cybern. Syst. 2019, 49, 2536–2547.
21. Chen, L.; Zhang, Y.; Feng, Y.; Zhang, L.; Liu, Z. A Human-Machine Agent Based on Active Reinforcement Learning for Target Classification in Wargame. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 9858–9870.
22. Liu, J.; Wang, G.; Fu, Q.; Yue, S.; Wang, S. Task Assignment in Ground-to-Air Confrontation Based on Multiagent Deep Reinforcement Learning. Def. Technol. 2023, 19, 210–219.
23. Huang, T.; Cheng, G.; Huang, K.; Huang, J.; Liu, Z. Task assignment method of compound anti-drone based on DQN for multi type interception equipment. Control Decis. 2022, 37, 142–150.
24. Hu, T.; Zhang, X.; Luo, X.; Chen, T. Dynamic Target Assignment by Unmanned Surface Vehicles Based on Reinforcement Learning. Mathematics 2024, 12, 2557.
25. Hua, G.; Shaoyong, Z.; Ke, X.; We, S. Weapon Targets Assignment for Electro-Optical System Countermeasures Based on Multi-Objective Reinforcement Learning. In Proceedings of the 2022 China Automation Congress (CAC), Xiamen, China, 25–27 November 2022; pp. 6714–6719.
26. Shojaeifard, A.; Amroudi, A.N.; Mansoori, A.; Erfanian, M. Projection Recurrent Neural Network Model: A New Strategy to Solve Weapon-Target Assignment Problem. Neural Process Lett. 2019, 50, 3045–3057.
27. Bahman, Z. Directed Energy Weapons Physics of High Energy Lasers (HEL); Springer: Berlin/Heidelberg, Germany, 2016.
28. Sun, X.; Zhang, Q.; Zhong, Z.; Zhang, B. Scaling law for beam spreading during high-energy laser propagation in atmosphere. Acta Opt. Sin. 2022, 42, 74–80.
29. Qiao, C.; Fan, C.; Huang, Y.; Wang, Y. Scaling laws of high energy laser propagation through atmosphere. Chin. J. Laser 2010, 37, 433–437.
30. Jabczyński, J.K.; Gontar, P. Impact of Atmospheric Turbulence on Coherent Beam Combining for Laser Weapon Systems. Def. Technol. 2021, 17, 1160–1167.
31. Hudcová, L.; Róka, R.; Kyselák, M. Atmospheric Turbulence Models for Vertical Optical Communication. In Proceedings of the 2023 33rd International Conference Radioelektronika (RADIOELEKTRONIKA), Pardubice, Czech Republic, 19–20 April 2023; pp. 1–6.
32. Toyoshima, M.; Takenaka, H.; Takayama, Y. Atmospheric Turbulence-Induced Fading Channel Model for Space-to-Ground Laser Communications Links. Opt. Express 2011, 19, 15965–15975.
33. Quatresooz, F.; Vanhoenacker-Janvier, D.; Oestges, C. Computation of Optical Refractive Index Structure Parameter From Its Statistical Definition Using Radiosonde Data. Radio Sci. 2023, 58, e2022RS007624.
34. Bradley, L.C.; Herrmann, J. Phase Compensation for Thermal Blooming. Appl. Opt. 1974, 13, 331–334.
35. Khalatpour, A.; Paulsen, A.K.; Deimert, C.; Wasilewski, Z.R.; Hu, Q. High-Power Portable Terahertz Laser Systems. Nat. Photonics 2021, 15, 16–20.
36. Kiteto, M.K.; Mecha, C.A. Insight into the Bouguer-Beer-Lambert Law: A Review. Sustain. Chem. Eng. 2024, 5, 567–587.
37. Kneizys, F.; Shettle, E.; Abreu, L.; Chetwynd, J.; Anderson, G. User Guide to LOWTRAN 7; Air Force Geophysics Laboratory: Bedford, MA, USA, 1988; Volume 88.
38. Liu, W.; Zhang, L.; Wang, W.; Zhang, M.; Zhang, J.; Gao, F.; Zhang, B. Damage Capability of Laser System in Ground-Air Defense Environments. Chin. J. Aeronaut. 2025, 103625.
39. Wiśniewski, T.S. Transient Heat Conduction in Semi-Infinite Solid with Specified Surface Heat Flux. In Encyclopedia of Thermal Stresses; Hetnarski, R.B., Ed.; Springer: Dordrecht, The Netherlands, 2014; pp. 6164–6171. ISBN 978-94-007-2739-7.
40. Liu, L.; Xu, C.; Zheng, C.; Cai, S.; Wang, C.; Guo, J. Vulnerability Assessment of UAV Engine to Laser Based on Improved Shotline Method. Def. Technol. 2023, 3, 588–600.
41. Li, Q. Damage Effects of Vehicles Irradiated by Intense Lasers; China Astronautic Publishing House: Beijing, China, 2012.
42. Ahmed, S.A.; Mohsin, M.; Ali, S.M.Z. Survey and Technological Analysis of Laser and Its Defense Applications. Def. Technol. 2021, 17, 583–592.
43. High Energy Lasers. Available online: https://www.rtx.com/raytheon/what-we-do/integrated-air-and-missile-defense/lasers (accessed on 24 March 2025).
44. Wu, L.; Lu, J.; Xu, J. Modeling and effectiveness evaluation on UAV cluster interception using laser weapon systems. Laser Infrared 2022, 52, 887–892.
45. Kline, A.; Ahner, D.; Hill, R. The Weapon-Target Assignment Problem. Comput. Oper. Res. 2019, 105, 226–236.
46. Li, B.; Wang, J.; Song, C.; Yang, Z.; Wan, K.; Zhang, Q. Multi-UAV Roundup Strategy Method Based on Deep Reinforcement Learning CEL-MADDPG Algorithm. Expert Syst. Appl. 2024, 245, 123018.
47. Chen, W.; Nie, J. A MADDPG-Based Multi-Agent Antagonistic Algorithm for Sea Battlefield Confrontation. Multimed. Syst. 2023, 29, 2991–3000.
48. Cai, H.; Li, X.; Zhang, Y.; Gao, H. Interception of a Single Intruding Unmanned Aerial Vehicle by Multiple Missiles Using the Novel EA-MADDPG Training Algorithm. Drones 2024, 8, 524.
49. Tilbury, C.R.; Christianos, F.; Albrecht, S.V. Revisiting the Gumbel-Softmax in MADDPG. arXiv 2023, arXiv:2302.11793.
50. Fu, X.; Wang, X.; Qiao, Z. Attack-defense strategy of multi-UAVs based on ASDDPG algorithm. Syst. Eng. Electron. 2025, 47, 1867–1879.
51. Hu, K.; Xu, K.; Xia, Q.; Li, M.; Song, Z.; Song, L.; Sun, N. An Overview: Attention Mechanisms in Multi-Agent Reinforcement Learning. Neurocomputing 2024, 598, 128015.
52. Hu, M.; Gao, R.; Suganthan, P.N. Self-Distillation for Randomized Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 16119–16128.
53. Chen, W.; Shi, H.; Li, J.; Hwang, K.-S. A Fuzzy Curiosity-Driven Mechanism for Multi-Agent Reinforcement Learning. Int. J. Fuzzy Syst. 2021, 23, 1222–1233.
54. Song, C.; Zhang, Q.; He, J.; Zhou, W.; Wang, H.; Kong, W.; Tian, W. Edge computing task offloading method of satellite-terrestrial collaborative based on MADDPG algorithm. Syst. Eng. Electron. 2025, 1–15.
55. Li, Q. Numerical simulation of laser thermal ablation effect in atmosphere and study of damage effect assessment method. Master’s Thesis, Xidian University, Xi’an, China, 2019.
56. Guo, W.; Liu, G.; Zhou, Z.; Wang, L.; Wang, J. Enhancing the Robustness of QMIX against State-Adversarial Attacks. Neurocomputing 2024, 572, 127191.
57. Liu, X.; Yin, Y.; Su, Y.; Ming, R. A Multi-UCAV Cooperative Decision-Making Method Based on an MAPPO Algorithm for Beyond-Visual-Range Air Combat. Aerospace 2022, 9, 563.

| Category | Attribute | Value |
|---|---|---|
| HELS parameters | Number of HELSs nHELS | 2 (small-scale), 5 (large-scale) |
| | Initial emitted power P0 | 20 kW~50 kW |
| | Battery magazine tbattery | 200 s, 250 s |
| | Wavelength λ | 1.064 μm |
| | Diameter of telescope aperture D | 0.6 m |
| | Initial beam quality factor β0 | 1 |
| | Maximum detection distance | 7.5 km~8 km |
| UAV parameters | Number of UAVs nUAV | 10 (small-scale), 50 (large-scale) |
| | Attack direction | 2 (small-scale), 5 (large-scale) |
| | Target flow density parameter | μ = 1, σ = 2 |
| | Flight speed vj | 0.02 km/s~0.03 km/s |
| | Flight height hj | 0.2 km~0.8 km |
| | Material and thickness of the irradiated area | 2024 Al, zd = 5 mm (thermophysical properties are from Ref. [55]) |
| Atmospheric parameters | Atmospheric turbulence Cn²(0) | 1 × 10⁻¹⁷, 1 × 10⁻¹⁵ |
| | Aerosol model constant K | 2.828 (rural), 2.496 (desert), 4.453 (maritime) |
| | Visibility | 10 km (sunshine), 5 km (light haze) |
| | Wind speed vg | 5 m/s |
| MADDPG-IA parameters | Size of the experience pool | 1 × 10⁶ |
| | Batch size | 2048 |
| | Max episode | 2000 |
| | Actor network learning rate | 1 × 10⁻³ |
| | Critic network learning rate | 1 × 10⁻³ |
| | Soft update parameter | 1 × 10⁻² |
| | Discount factor γ | 0.95 |

| Environment | MADDPG-IA Mean ± Std (%) | Traditional Strategy Mean ± Std (%) | MADDPG-IA 95% CI |
|---|---|---|---|
| Rural (sunshine) | 99.65 ± 0.32 | 72.64 ± 3.21 | [99.54, 99.76] |
| Desert (light haze) | 79.37 ± 2.15 | 51.29 ± 4.87 | [78.82, 79.92] |
| Coastal (sunshine) | 91.25 ± 1.78 | 67.38 ± 3.95 | [90.82, 91.68] |

| Parameter | Damage rate (mean ± std) at each parameter value | | | | |
|---|---|---|---|---|---|
| HELS number | 3 HELSs: 72.3% ± 3.5% | 4 HELSs: 89.5% ± 2.1% | 5 HELSs: 99.6% ± 0.3% | 6 HELSs: 99.7% ± 0.25% | 7 HELSs: 99.8% ± 0.2% |
| Average laser power | 20 kW: 65.1% ± 4.1% | 25 kW: 72.5% ± 3.1% | 30 kW: 85.2% ± 2.3% | 40 kW: 99.7% ± 0.21% | 50 kW: 99.9% ± 0.05% |
| UAV number | 10: 100% ± 0% | 30: 99.9% ± 0.03% | 50: 99.6% ± 0.3% | 70: 89.3% ± 2.5% | 100: 62.7% ± 4.8% |
| Atmospheric turbulence | 1 × 10⁻¹⁷: 99.6% ± 0.3% | 5 × 10⁻¹⁶: 95.4% ± 1.5% | 1 × 10⁻¹⁵: 79.4% ± 2.1% | 5 × 10⁻¹⁵: 68.2% ± 3.8% | 5 × 10⁻¹⁴: 58.7% ± 5.2% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, W.; Zhang, L.; Wang, W.; Fang, H.; Zhang, J.; Zhang, B. Dynamic Resource Target Assignment Problem for Laser Systems’ Defense Against Malicious UAV Swarms Based on MADDPG-IA. Aerospace 2025, 12, 729. https://doi.org/10.3390/aerospace12080729
