Abstract

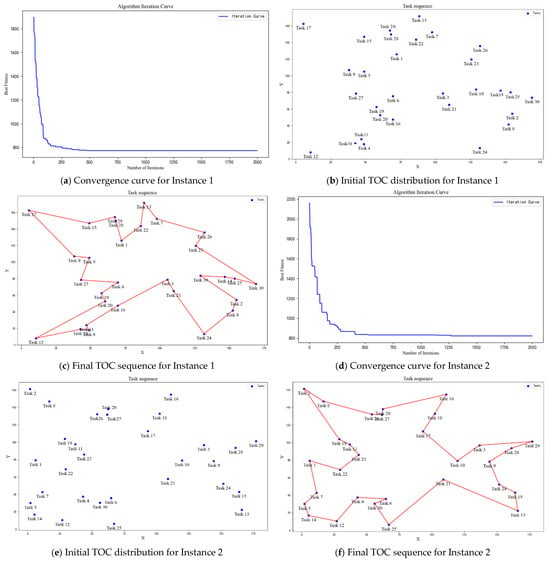

Traditional spacecraft task planning has relied on ground control centers issuing commands through ground-to-space communication systems. However, as the number of deep space exploration missions grows, ground-to-space communication delays have become significant, reducing the effectiveness of real-time command and control and increasing the risk of missed opportunities for scientific discovery. Adaptive Space Scientific Exploration requires that spacecraft make autonomous decisions to complete known and unknown scientific exploration missions without ground control. Based on this requirement, this paper proposes an actor–critic-based hyper-heuristic autonomous mission planning algorithm, which is used for mission planning and execution at different levels to support spacecraft Adaptive Space Scientific Exploration in deep space environments. At the low level of the hyper-heuristic algorithm, this paper uses the particle swarm optimization algorithm, grey wolf optimization algorithm, differential evolution algorithm, and sine cosine optimization algorithm as the basic operators. At the high level, a reinforcement learning strategy based on the actor–critic model is used, combined with a neural network architecture, to construct a framework for the selection of advanced heuristic algorithms. The experimental results show that the algorithm meets the requirements of Adaptive Space Scientific Exploration and produces higher-quality solutions with better comprehensive evaluation scores in the tests. This study also designs an example application of the algorithm to a space engineering mission based on a collaborative space–ground control system to demonstrate the usability of the algorithm. This study provides an autonomous mission planning method for spacecraft in the complex and ever-changing deep space environment, which supports the further construction of spacecraft autonomous capabilities and is of great significance for improving the efficiency of deep space exploration missions.

1. Introduction

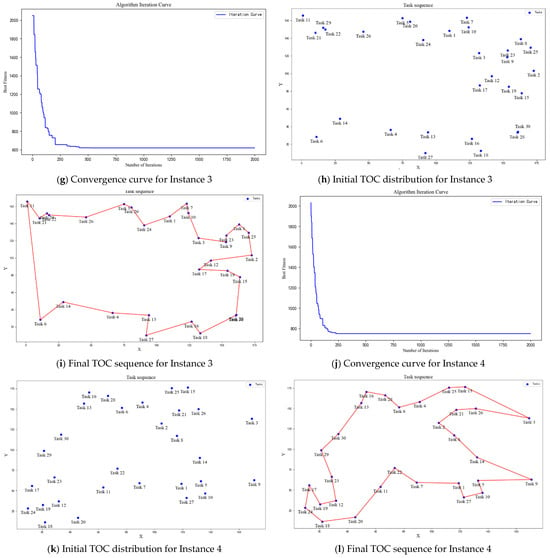

Traditional spacecraft payload operation modes predominantly rely on ground control centers to issue commands through Earth–space communication systems, guiding spacecraft in task planning, orbital adjustments, data collection, and processing. This approach is highly efficient in Earth–Moon systems or near-Earth orbital missions, where communication delays are minimal, allowing ground control centers to monitor spacecraft status almost in real time and adjust mission plans accordingly. However, as space exploration progressively delves into the unknown realms of deep space, accompanied by an increasing number of deep space exploration projects, this mode of operation faces significant challenges. The extended distances of Earth–space communication result in considerable communication delays, adversely affecting real-time command control and increasing the risk of missing scientific discovery opportunities. Moreover, the uncertainty of deep space exploration environments, coupled with the often unknown nature of exploration targets, demands a higher level of spacecraft autonomy.

Adaptive Space Scientific Exploration (ASSE) refers to the capability of spacecraft to make autonomous decisions based on their limited capabilities, resources, and knowledge, without reliance on ground control centers, to fulfill both known and unknown scientific exploration tasks. This requires spacecraft to determine the necessary tasks and objectives based on telemetry data, health status, operational parameters, and the real-time conditions of deep space, ensuring high operational precision, robust performance, strong adaptability to the environment, and longevity. Implementing ASSE necessitates the development of a system architecture platform that supports intelligent capabilities for spacecraft, along with leveraging a variety of intelligent technologies to enhance autonomy.

From the perspective of the capabilities of a spacecraft’s integrated electronic system, ASSE requires several key capabilities: discovering and identifying both known and previously unseen scientific targets, autonomous mission planning for the tasks or instructions to be executed, autonomous task execution management, and comprehensive self-management and monitoring. Autonomous mission planning builds on the results of target identification and largely determines the operational state of the spacecraft after identification, which makes it a central research topic in spacecraft autonomous operation and control. Deploying such planning methods directly on the spacecraft, rather than in ground control systems, places higher demands on the flexibility, robustness, and reliability of both the systems and the algorithms.

Within the domain of autonomous operation and control of spacecraft, Daniel D. Dvorak and his colleagues have pioneered a revolutionary operational paradigm termed goal-driven operation [1]. This paradigm marks a fundamental shift in operational underpinnings from executing a sequenced set of commands to a declarative specification of operational intents, thereby facilitating guidance via well-defined objectives. This method significantly augments operational robustness amidst uncertainties and amplifies the system’s autonomous decision-making capabilities through the lucid articulation of operational intents.

In this study, the essence of spacecraft task planning is fundamentally oriented toward the planning of “objectives”. During autonomous operation, spacecraft are required to complete numerous pending tasks, among which there are variations in priority, resource consumption, and scientific detection needs. The sequence in which tasks are executed significantly impacts the spacecraft’s operational status, which in turn directly affects the efficiency, quality, and stability of scientific detection. Therefore, the development of a planning algorithm that is both adaptable to the deep space environment and capable of effectively addressing these challenges is particularly crucial.

Confronted with the dynamic nature and unpredictability of space exploration, the limitations of existing planning methods are increasingly evident. These traditional approaches are largely based on the predictability of a pre-set environment, utilizing pre-programmed instructions and models. Researchers choose appropriate heuristic methods to optimize task sequences based on controlled environments. However, the effectiveness of these methods relies on accurate environmental predictions, making them ill-suited for unknown or changing objectives. The complexity of deep space exploration demands that planning algorithms possess a high degree of flexibility and adaptability to address unforeseen challenges. In short, a singular algorithm cannot guarantee superiority across all environments and instances in deep space due to a lack of necessary adaptive mechanisms. This discrepancy can lead to difficulties in estimating convergence speed and optimization efficiency, as well as adapting to the unknown environments of deep space, missing opportunities for scientific discovery, and sometimes even jeopardizing the success of missions. This situation underscores the urgent need to move beyond traditional methods and adopt more advanced planning strategies.

Hyper-heuristic algorithms offer a novel approach to tackling such issues by operating at a higher level of abstraction. This method manages and manipulates a series of low-level heuristics (LLH) to forge new heuristic solutions, applicable to a wide array of combinatorial optimization problems [2]. The process encompasses two principal layers: initially, at the problem’s lower level, algorithms build mathematical models grounded in the problem’s representation and characteristics, designing specific solutions within a predetermined meta-heuristic framework. At a higher heuristic layer, an “intelligent computation expert” role is established, employing an efficient management and manipulation mechanism to derive new heuristic solutions from the lower level’s algorithm library and characteristic information [3]. This design enables the intelligent computation expert to autonomously select the most fitting heuristic algorithm based on current environmental data, allowing, in theory, for adaptability to various environmental conditions if the lower-level algorithms are judiciously chosen.
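The two-layer structure described above can be illustrated with a minimal sketch. It is not the algorithm proposed in this paper: the objective function, the three toy low-level heuristics (`small_step`, `large_step`, `shrink`), and the epsilon-greedy selection rule are all assumptions chosen only to show how a high-level selector credits and picks among low-level heuristics.

```python
import random


def objective(x):
    """Toy objective to minimise (sphere function); an assumption for illustration."""
    return sum(v * v for v in x)


# Hypothetical low-level heuristics (LLHs): each maps a solution to a candidate.
def small_step(x, rng):
    return [v + rng.gauss(0.0, 0.1) for v in x]


def large_step(x, rng):
    return [v + rng.gauss(0.0, 1.0) for v in x]


def shrink(x, rng):
    return [0.9 * v for v in x]


def hyper_heuristic(x0, llhs, iterations=200, epsilon=0.2, seed=0):
    """High-level loop: choose an LLH, apply it, and credit it by the improvement."""
    rng = random.Random(seed)
    scores = [0.0] * len(llhs)  # running credit for each LLH
    best, best_f = list(x0), objective(x0)
    for _ in range(iterations):
        # Epsilon-greedy selection over the LLH library.
        if rng.random() < epsilon:
            k = rng.randrange(len(llhs))
        else:
            k = max(range(len(llhs)), key=scores.__getitem__)
        candidate = llhs[k](best, rng)
        f = objective(candidate)
        improvement = best_f - f
        # Exponential moving average of the improvement obtained by LLH k.
        scores[k] = 0.9 * scores[k] + 0.1 * improvement
        if improvement > 0:  # accept only improving candidates
            best, best_f = candidate, f
    return best, best_f, scores


best, best_f, scores = hyper_heuristic([3.0, -2.0], [small_step, large_step, shrink])
print(best_f, scores)
```

The selector never inspects the problem itself; it only observes which heuristic recently produced improvements, which is what allows the same high-level layer to be reused with a different low-level library.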

Traditional hyper-heuristic algorithm research has primarily focused on methods based on Simple Random [4], Choice Function [5], Modified Choice Function [6], Tabu Search [7], Ant Colony [8], and reinforcement learning [9,10,11]. Recently, integrating hyper-heuristic algorithms based on reinforcement learning with neural network technologies has emerged as a growing research area. This new direction aims to develop more accurate and reliable “intelligent computation experts” through deep reinforcement learning methods [12], capitalizing on neural networks’ non-linearity, adaptability, robustness, and parallel information processing capabilities. Deep reinforcement learning utilizes the perceptual power of deep learning to comprehend the environment, combined with the decision-making mechanisms of reinforcement learning, to identify optimal behavioral strategies across various settings [13]. By amalgamating deep reinforcement learning methods, optimized neural network architectures, and diverse heuristic algorithms, this approach not only merges the strengths of multiple technologies but also crafts algorithms specifically for deep-space mission planning, thereby enhancing spacecraft’s planning capabilities in the face of complex and changing environments.

Based on this, the main contributions of this paper are as follows:

- Relying on the philosophy of building autonomous capabilities onboard spacecraft and the “goal-driven” methodology, this study introduces a spacecraft task planning framework designed to meet the requirements of adaptive scientific exploration and conducts mathematical modeling of the planning issue.

- Based on a mathematical model, we designed an Actor–Critic-based Hyper-heuristic Autonomous Task Planning Algorithm (AC-HATP) to support spacecraft Adaptive Space Scientific Exploration.

- At the lower tier of hyper-heuristic algorithms, by considering three aspects, namely global search capabilities, quality of solution optimization, and speed of convergence, we selected and designed suitable heuristic algorithms, establishing an algorithmic library.

- At the higher tier of hyper-heuristic algorithms, we employed a reinforcement learning strategy based on the actor–critic model, in conjunction with the network architecture, to construct an advanced heuristic algorithm selection framework.

- Through designed experiments, our research validated that the algorithm meets the needs of adaptive scientific exploration. Compared with other algorithm types, it was demonstrated that our approach achieves faster convergence speeds and superior solution quality in addressing deep space exploration challenges.

2. Related Work

The problem of autonomous mission planning for spacecraft can be defined as an optimization issue of how to efficiently allocate a series of tasks to satellites within the constraints of limited satellite maneuverability and fixed time windows, aiming to maximize payload utilization efficiency and optimize target information collection [14]. This represents a variant of common planning optimization problems. Planning typically involves specifying a problem’s initial and target states, along with a description of actions, requiring the automatic discovery of a sequence of actions that allows the system to transition from the initial state to the target state [15]. In this paper, we categorize autonomous spacecraft mission planning methods into three main groups for discussion: traditional methods (including planning based on predicate logic, network graphs, and timelines), heuristic algorithm methods, and reinforcement learning methods.
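The general notion of planning stated above (an initial state, a target state, and a description of actions, from which a sequence of actions is discovered automatically) can be sketched as follows. The state facts and the three actions are hypothetical and serve only as an example; breadth-first search is used here for simplicity and is not a method taken from the cited works.

```python
from collections import deque
from typing import FrozenSet, NamedTuple


class Action(NamedTuple):
    name: str
    pre: FrozenSet[str]     # facts that must hold before the action
    add: FrozenSet[str]     # facts made true by the action
    delete: FrozenSet[str]  # facts made false by the action


def plan(initial, goal, actions):
    """Breadth-first search; returns a shortest list of action names, or None."""
    start, goal = frozenset(initial), frozenset(goal)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, path = queue.popleft()
        if goal <= state:  # every goal fact holds in this state
            return path
        for a in actions:
            if a.pre <= state:  # action is applicable
                nxt = (state - a.delete) | a.add
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, path + [a.name]))
    return None


# Hypothetical action descriptions for a simple imaging task.
ACTIONS = [
    Action("point_at_target", frozenset({"idle"}), frozenset({"pointed"}), frozenset({"idle"})),
    Action("take_image", frozenset({"pointed"}), frozenset({"image_stored"}), frozenset()),
    Action("downlink", frozenset({"image_stored"}), frozenset({"image_sent"}), frozenset({"image_stored"})),
]

print(plan({"idle"}, {"image_sent"}, ACTIONS))
```

For this toy domain the planner returns the sequence point_at_target, take_image, downlink.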

2.1. Traditional Methods of Autonomous Task Planning

The earliest methods for addressing planning problems relied on strict linguistic and logical structures for problem description and resolution. At this stage, various planning representations existed, such as first-order logic [16] and situation calculus [17], the latter a second-order logic language for describing the dynamics of the world. Subsequently, Nilsson and colleagues introduced the STRIPS planning description methodology, marking the preliminary formation of methodologies within the planning domain [18]. Building on STRIPS, McDermott [19] formally proposed the Planning Domain Definition Language (PDDL), which has since undergone continuous refinement and development, resulting in multiple versions including PDDL 2.1 [20], PDDL 2.2 [21], PDDL 3.0 [22], and PDDL+ [23]. Owing to its expressiveness, PDDL has been widely applied in spacecraft mission planning, particularly in onboard autonomous mission planning for satellites, for task description and modeling. For instance, Zhu Liying et al., working on intelligent planning for autonomous flight in small-body exploration, designed knowledge models based on PDDL, mathematical models based on CSP, and solving algorithms based on genetic strategies, achieving efficient task management and simplified operational processes [24]. Emma et al. integrated task planning with execution monitoring to enhance the autonomous operational capabilities of space robots, using PDDL to improve the robots’ intelligent task processing [25]. Li Xuan [26] used PDDL to model and validate inter-satellite transmission mission planning in a collaborative satellite network where microwave and laser links coexist. Ma Manhao et al. used PDDL to capture the constraints between observation tasks, modeling constraints, activities, and planning objectives to construct an applied mission planning model for Earth Observation Satellites (EOSs) [27]. Chen et al. [28], based on a detailed analysis of the characteristics of imaging satellite mission planning, used PDDL to address duration constraints, complex resource constraints, and special external resource constraints, establishing a mission planning model for urban and rural satellites. Xue [29] established an autonomous mission planning model for satellites in emergency situations, defined its constraints in PDDL, and constructed a model that comprehensively considers the constraints of satellite platforms and payloads.

In practical planning, especially for time-sensitive problems, descriptions based solely on predicate and propositional logic struggle to express factors such as time constraints. By contrast, the timeline model represents time constraints simply and intuitively, which makes it well suited to satellite mission planning with low concurrency and relatively uniform tasks. For example, Xu et al. [30], in their study of autonomous mission planning for deep space probes, adopted representations based on states and state timelines to describe the tasks of the probes and their constraint relationships. Wang et al. [31] used an object-oriented formal description method to categorize domain knowledge into four models, including the timeline model, and simplified the method of establishing constraints. NASA’s ASPEN system [32], built on an iterative repair philosophy, provides a variety of reasoning mechanisms and a mission planning method centered on the state timeline. The European Space Agency’s APSI system models scientific tasks as timelines, treats flaws as the core object, and drives the planning process by collecting, selecting, and resolving flaws [33].

Additionally, various other methodologies have been adopted for solving spacecraft mission planning problems. The SPIKE system in the United States, designed to meet the scheduling needs of the Hubble Space Telescope, employs an algorithm based on the Constraint Satisfaction Problem (CSP) for task planning [34]. Jiang [35] devised a task planning strategy based on constraint grouping. This method, which emphasizes action constraints, avoids the loss of constraint-handling capacity as the problem size grows. Du [36] used colored Petri nets for system modeling, dividing the model into a top-level model, a control model, a target imaging mission planning model, and an image transmission mission planning model, and applied this planning approach to imaging satellites. Liang [37] designed a priority-based autonomous mission planning method that uses timeline technology and takes task priorities into account to plan task sequences effectively. Bucchioni [38] proposed a rendezvous strategy in cis-lunar space that combines passive and active collision avoidance to ensure safety during the approach to the Earth–Moon L2 point, addressing a gap in the literature on autonomous guidance systems in the presence of third-body influences.

2.2. Task Planning Based on Heuristic and Metaheuristic Algorithms

Heuristic algorithms are strategies that rely on experience and intuition to find solutions, and are particularly suitable for scenarios where exact solutions cannot be obtained within a reasonable time frame. Although heuristic algorithms do not guarantee the optimal solution, they often provide a satisfactory solution within an acceptable time. For example, NASA’s DS-1 spacecraft used a planning-space-based heuristic algorithm for mission planning. This method scales well and produces partially ordered plans, thereby enhancing the flexibility of plan execution [39]. Xue [29] employed Relaxation-based Graph Planning (RGP), an Enhanced Hill-Climbing Method, and Greedy Best-First Search (GBFS) to divide satellite mission planning into sequence planning and time scheduling. Chang et al. [40] addressed the challenges in planning for optical video satellites with variable imaging durations, proposing a Simple Heuristic Greedy Algorithm (SHGA) to enhance performance. Zhao et al. [41] explored the scheduling of satellite observation missions, implementing a task clustering planning algorithm to improve the observational efficiency of agile satellites and using tabu search to generate local and global observation paths within the clustered regions. Jin et al. [42] introduced a heuristic estimation strategy and search algorithm to enhance planning efficiency on spacecraft, with experimental results showing superior performance compared to Europa2. Federici [43] used the A* algorithm to solve the optimal design of an active space debris removal mission, a problem similar to the time-dependent orientation problem.

Metaheuristic algorithms (MHAs) are higher-level optimization strategies that guide and control heuristic search processes in order to find the best possible solutions within a solution space. Their primary advantage is independence from specific domain knowledge, which makes them versatile and applicable across a wide range of optimization problems. Common metaheuristic algorithms include genetic algorithms, simulated annealing, and particle swarm optimization. These algorithms have been extensively explored for the autonomous task planning of spacecraft. For instance, Long [44] developed an autonomous management and collaboration architecture for multi-agent systems tailored to the complexity and variability of managing multi-satellite systems, and introduced a hybrid genetic algorithm with simulated annealing (H-GASA) to address autonomous mission planning in multi-satellite cooperation. Xiao et al. [45] investigated a hybrid optimization algorithm that integrates tabu search and an enhanced ant colony optimization algorithm, designed for the maintenance task planning of large-scale space solar power stations. Wang [46] considered time and resource constraints, proposed the concept of dynamic resources, and devised an individual coding rule based on fixed-length integer sequence coding to reduce the search space. They introduced a genetic algorithm that combines multi-mode crossover and mutation, and designed a replanning framework based on rolling-horizon replanning. Zhao and Chen [47], in the context of Earth observation satellite design, incorporated two-generation competition and an optimal retention strategy into an improved genetic algorithm to address local multi-conflict observation tasks. Feng et al. [48] designed a payload mission planning algorithm based on genetic algorithms that can generate a complete command sequence from tasks and directives, thereby implementing an autonomous operation system architecture for spacecraft based on multi-agent systems.

2.3. Task Planning Based on Reinforcement Learning

Reinforcement learning (RL) is a class of algorithms that learn optimal behavioral strategies through a system of rewards and punishments. In the context of spacecraft task planning, RL algorithms define reward functions and actions, and use feedback on the effect of each action on the final outcome to refine their action choices toward an optimal solution. The exploratory nature of RL conflicts with the high reliability requirements of spacecraft, which has limited its application in the aerospace field; nevertheless, ongoing research has explored this artificial intelligence technique in spacecraft task planning and decision-making. Harris et al. [49] applied deep reinforcement learning (DRL) to spacecraft decision-making problems, addressing issues of problem modeling, dimensionality reduction, simplification using expert knowledge, sensitivity to hyperparameters, and robustness, and ensured safety by integrating appropriately designed control techniques. Hu et al. [9] proposed an end-to-end DRL-based step planner named SP-ResNet for global path planning of planetary rovers, employing a dual-branch residual network for action value estimation, validated on the real lunar terrain of the CE2TMap2015 dataset. Huang et al. [50] explored the scheduling of Earth observation satellite missions, adopting a deep deterministic policy gradient algorithm to address continuous-time satellite mission scheduling, with experimental results indicating superiority over traditional meta-heuristic optimization algorithms. Wei et al. [51] introduced a method based on deep reinforcement learning and parameter transfer (RLPT) for iteratively solving the Multi-Objective Agile Earth Observing Satellite Scheduling Problem (MO-AEOSSP), surpassing three classical multi-objective evolutionary algorithms (MOEAs) in terms of solution quality, distribution, and computational efficiency, and demonstrating high universality and scalability. Zhao et al. [52] proposed a dual-phase neural combinatorial optimization method based on reinforcement learning for the scheduling of agile Earth observing satellites (AEOSs). Eddy and Kochenderfer [53] presented a semi-Markov decision process (SMDP) formulation for satellite mission scheduling that considers multiple operational goals and plans transitions between different functional modes. This method performed comparably to baseline methods in single-objective scenarios while running faster, and achieved higher scheduling rewards in multi-objective scenarios.

2.4. Summary of Related Work

The research for the related work can be summarized as shown in Table 1.

Table 1. Summary of research in autonomous mission planning methods.

In the complex and uncertain environment of deep space, traditional rule-based methods are limited in flexibility, making it challenging to meet the requirements for problem resolution. Heuristic and meta-heuristic algorithms, constrained by their generalization capabilities and environmental adaptability, require integration with a high-level architecture of hyper-heuristic algorithms, and the construction of a heuristic algorithm library at the lower level to cover as diverse a range of potential environmental states as possible. Regarding reinforcement learning methods, given the unique environmental constraints in deep space, using online reinforcement learning to train models is impractical. For offline reinforcement learning methods, the significant increase in environmental uncertainty may result in trained models that fail to meet practical needs, presenting challenges similar to those faced by meta-heuristic algorithms. Therefore, considering the autonomous learning capabilities of reinforcement learning, although it cannot be directly applied to spacecraft onboard task planning in deep space, it can serve as an upper-layer “expert system” within a hyper-heuristic algorithm framework, responsible for selecting appropriate algorithms. By designing a sufficiently rich algorithm library, and utilizing reinforcement learning for algorithm selection, the system can adapt to a broad and variable environment, thus enhancing the model’s adaptability. Moreover, the limited action space of this type of reinforcement learning significantly reduces the difficulty of training the model.
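The point about the limited action space can be made concrete with a small sketch of actor–critic learning over a handful of discrete choices. This is a single-state, tabular simplification and not the network-based selector developed in this paper: the labels in `LLH_LABELS`, the learning rates, and the reward function used in the usage example are all hypothetical.

```python
import math
import random

# Hypothetical labels for four low-level heuristics in the algorithm library.
LLH_LABELS = ["llh_a", "llh_b", "llh_c", "llh_d"]


def softmax(prefs):
    """Numerically stable softmax over a list of preferences."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_selector(reward_fn, episodes=2000, lr_actor=0.1, lr_critic=0.1, seed=0):
    """Single-state actor-critic: the actor holds one preference per heuristic,
    the critic holds one scalar value estimate used as a baseline."""
    rng = random.Random(seed)
    prefs = [0.0] * len(LLH_LABELS)  # actor parameters
    value = 0.0                      # critic estimate of expected reward
    for _ in range(episodes):
        probs = softmax(prefs)
        a = rng.choices(range(len(prefs)), weights=probs)[0]
        r = reward_fn(a, rng)
        advantage = r - value            # one-step error with no successor state
        value += lr_critic * advantage   # critic update
        for i in range(len(prefs)):      # actor update (softmax policy gradient)
            grad = (1.0 if i == a else 0.0) - probs[i]
            prefs[i] += lr_actor * advantage * grad
    return softmax(prefs), value


# Usage with an assumed reward: heuristic index 2 is best on this toy "environment".
def toy_reward(a, rng):
    return (1.0 if a == 2 else 0.0) + rng.gauss(0.0, 0.1)


probs, value = train_selector(toy_reward)
print(dict(zip(LLH_LABELS, probs)), value)
```

With only four actions, the policy is a four-element probability vector, which is far easier to train than a policy that must output a full task sequence directly.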

Consequently, this study proposes a hyper-heuristic algorithm framework with reinforcement learning at the upper layer and meta-heuristic algorithms at the base layer, aimed at enhancing the adaptability of spacecraft in the uncertain conditions of deep space.

3. Framework and Modeling for Spacecraft Onboard Autonomous Task Planning

According to the literature [54], spacecraft operating in deep space can have their intelligence levels classified into three categories: “automatic”, “autonomous”, and “self-governing”. In this classification, “automatic” spacecraft are capable of substituting manual operations with software, hardware, and algorithms, though their operations still depend on human intervention, such as receiving and executing commands. At the “autonomous” level, spacecraft simulate human operational processes and are able to independently carry out simple task executions and self-learning, such as executing commands in a pre-determined sequence. “Self-governing” spacecraft are capable of analyzing their current state and surrounding environment, and making rational decisions based on this analysis to more effectively achieve predefined objectives.

ASSE poses a challenge for spacecraft intelligence capabilities to transition from autonomous to self-governing operation, ensuring stable and continuous functioning in the complex environment of deep space. This requires comprehensive coordination and integration across three critical aspects: spacecraft architectural design, data description methods, and algorithm development. Firstly, it is essential to design a spacecraft architecture that supports self-governing capabilities, facilitates the operation and deployment of relevant algorithms, and controls the spacecraft based on the outcomes of these algorithms. Secondly, to enable effective data interchange between the architecture and algorithms, a suitable data description format must be designed. Finally, problem-specific algorithms need to be developed and deployed on the architecture using established data description formats.

3.1. Target-Driven and Task-Level Objective Commands

Traditional spacecraft operations primarily involve the method of data injection to transmit action commands to the spacecraft. This method is widely used due to its directness and reliability. However, with the increasing uncertainties of deep space exploration missions and extended communication delays, this approach can result in missed opportunities for scientific objectives, thus impacting the efficiency of the exploration. As the number of spacecraft increases and operational modes become more mature, some routine operations can transition from manual to automatic execution. Consequently, the scope of spacecraft operations should shift from specific “actions” to specific “objectives”, allowing the spacecraft to autonomously select and execute commands that align with the current objectives. This operational mode is referred to as “goal-driven”.

According to research by Maullo [55], the concept of “goal-driven” operations involves shifting the basis of operations from a sequence of command instructions to declarative operational intentions, or goals, thereby reducing the workload of operators and allowing them to focus on “what” to do rather than “how” to do it. This method enhances the system’s autonomy and its ability to respond to unpredictable environments. By clearly defining operational intentions, the system can verify the successful achievement of objectives and, when necessary, employ alternative methods to achieve these goals [1].
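The difference between a command sequence and a declarative goal can be sketched as follows. The `Goal` structure, the state keys, and the two charging methods are hypothetical and are not taken from the cited works; the sketch only shows a goal that carries its own success check and a list of alternative ways of reaching it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

State = Dict[str, float]


@dataclass
class Goal:
    name: str
    achieved: Callable[[State], bool]       # declarative intent: "what"
    methods: List[Callable[[State], None]]  # alternative "how"s, tried in order


def pursue(goal: Goal, state: State) -> bool:
    """Try each method in turn until the goal condition is verified."""
    if goal.achieved(state):
        return True
    for method in goal.methods:
        method(state)
        if goal.achieved(state):  # verify the outcome, not the command execution
            return True
    return False


# Hypothetical methods for a battery-charging goal.
def solar_charge(state: State) -> None:
    if state["sunlit"] > 0:
        state["battery"] = 90.0


def reorient_then_charge(state: State) -> None:
    state["sunlit"] = 1.0
    state["battery"] = 90.0


goal = Goal("battery_above_80", lambda s: s["battery"] >= 80.0,
            [solar_charge, reorient_then_charge])
state = {"battery": 40.0, "sunlit": 0.0}
print(pursue(goal, state), state)
```

In this example the first method has no effect because the spacecraft is not sunlit, so the goal falls back to the second method; a fixed command sequence would have no such recourse.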

Through the use of “goal-oriented” commands, spacecraft can encapsulate specific sequences of behavioral instructions, thereby concentrating on the objectives to be fulfilled rather than the operational details of the commands themselves. These commands do not have a fixed design framework; each organization and spacecraft manufacturer can customize the command format based on their specific requirements. In this study, given the emphasis on planning for spacecraft mission objectives, these are designated as “Task-Oriented Commands” (TOCs) [56].

This research utilizes TOCs as the fundamental unit of mission planning. When a spacecraft is required to manage multiple TOCs simultaneously, it must holistically assess the current resource information, the resource consumption associated with the objectives, and the environmental conditions in which the spacecraft operates. An optimal mission execution strategy is then selected, aiming to achieve maximum efficiency and minimal resource consumption in the shortest possible time and with the fewest iterations.

3.2. Spacecraft Autonomous and Task Planning Framework

The task planning framework in this study is based on the intelligent flight software architecture for spacecraft proposed by Lyu [57]. This architecture incorporates the Spacecraft Onboard Interface Services (SOIS), selecting services according to the needs of intelligent capabilities. The entire framework is divided into the subnet layer, application support layer, and application layer, with the task planning module positioned at the top of the application layer. This study focuses on the design of task planning capabilities at this upper level of the application layer.

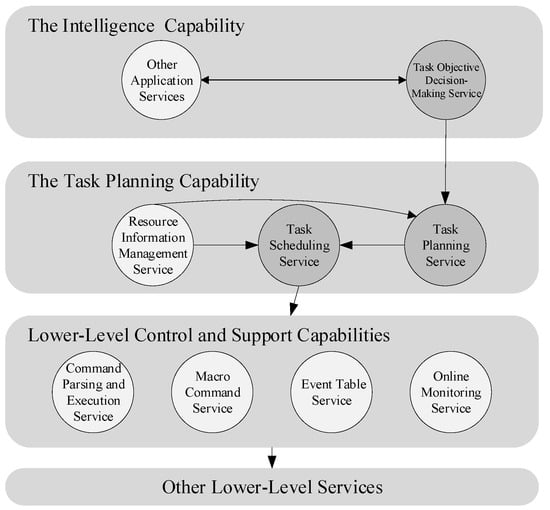

The autonomous task planning capabilities of the spacecraft comprise three main services: decision-making, planning, and scheduling. The relationship between these services and the overall architecture is depicted in Figure 1. The decision-making service involves the spacecraft generating and formulating task objectives based on the current environment and status, outputting several TOCs. The planning service is responsible for determining the execution sequence of various TOCs and generating a TOC execution sequence. The scheduling service entails decomposing each TOC into specific actions and commands executable by the spacecraft, ensuring that each TOC’s implementation effectively reaches the intended target state. Detailed descriptions of these services and their inputs and outputs are provided in Table 2. In summary, the task planning capabilities generate appropriate TOCs based on the spacecraft’s environment and status and plan their execution sequence. Subsequently, during implementation, these TOCs are broken down into concrete, executable commands, enabling the spacecraft to autonomously generate action commands in response to the current environment, thus fulfilling the requirements of ASSE.
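The flow through the three services can be sketched as a simple pipeline. Everything in this sketch is illustrative: the `TOC` fields, the status flags, the command table, and in particular the priority sort used as a stand-in for the planning service are assumptions, not the design described in this paper.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class TOC:
    """Hypothetical minimal task-oriented command."""
    name: str
    priority: int


def decide(status: Dict[str, bool]) -> List[TOC]:
    """Decision-making service: turn environment and status into TOCs."""
    tocs = []
    if status.get("target_detected"):
        tocs.append(TOC("observe_target", priority=3))
    if status.get("storage_nearly_full"):
        tocs.append(TOC("downlink_data", priority=2))
    tocs.append(TOC("housekeeping", priority=1))
    return tocs


def plan_sequence(tocs: List[TOC]) -> List[TOC]:
    """Planning service: order the TOCs (placeholder rule: highest priority first)."""
    return sorted(tocs, key=lambda t: t.priority, reverse=True)


# Hypothetical decomposition of each TOC into executable commands.
COMMANDS: Dict[str, List[str]] = {
    "observe_target": ["slew_to_target", "power_on_payload", "acquire_data"],
    "downlink_data": ["point_antenna", "transmit"],
    "housekeeping": ["collect_telemetry"],
}


def schedule(sequence: List[TOC]) -> List[str]:
    """Scheduling service: expand each TOC into its commands, in order."""
    return [cmd for toc in sequence for cmd in COMMANDS[toc.name]]


status = {"target_detected": True, "storage_nearly_full": True}
print(schedule(plan_sequence(decide(status))))
```

Only `plan_sequence` would change when a more capable planner is substituted, since the decision-making and scheduling services exchange nothing with it except lists of TOCs.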

Figure 1.

Interaction diagram of task planning capabilities and overall architecture.

Table 2.

Summary of autonomous spacecraft task planning processes.

This study aims to design a task planning service that optimizes the execution sequence of TOCs based on the onboard environment of the spacecraft, thereby enhancing the efficiency of task execution and the computational speed of the algorithm.

3.3. Mathematical Description of Spacecraft Autonomous Mission Planning Problem

This study explores the sequence planning problem for TOCs, with the objective of maximizing scientific exploration benefits within the shortest possible operational duration, thereby optimizing the cost–benefit ratio. The spacecraft is required to execute each mission objective in turn until all known targets are completed. This problem is a variant of the traveling salesman problem (TSP), a combinatorial optimization problem that seeks the shortest route by which a salesman departs from a city, visits every other city exactly once, and returns to the starting city, so that the total path length (or cost) is minimized [58]. In this research, the total cost is defined in terms of the cost–benefit ratio, with additional consideration given to resources and environmental factors during the completion of each task.

Table 3 provides a comprehensive list of the symbols and their definitions used throughout the model.

Table 3.

Summary of symbols and definitions for the task sequence planning model.

The cost–benefit ratio can be expressed as

The mathematical model established in this study is as follows:

subject to

The objective function of the model is given in Equation (2), with the primary aim of maximizing the total cost–benefit ratio during scientific exploration. Constraint set (3) ensures that each task i is scheduled only once within the entire sequence of tasks. Constraint set (4) guarantees that, for each resource n, the consumption of resources during task execution, minus any potential replenishment, does not exceed the total capacity of that resource. Constraint set (5) defines the order of task execution to form a closed loop, preventing temporal conflicts between tasks. This is achieved by assigning a specific position to each task, thereby preventing the formation of multiple independent sub-cycles. Constraint set (6) precludes sub-cycles in the solution, ensuring a complete operational loop rather than multiple fragmented cycles. Constraint set (7) ensures that the cost–benefit ratio of any executed sequence of tasks does not fall below a predefined minimum threshold. Constraint set (8) accounts for the need to avoid hazardous areas or maintain safe distances when executing tasks in deep space environments, and assesses the feasibility of moving from task i to task j. Constraint sets (9) and (10) ensure that the sequence starts with a specified initial task and concludes with a designated final task. Constraint set (11) guarantees that the residual amount of each resource never falls below zero at any point during task execution. Constraint set (12) specifies that certain tasks may only utilize specific resources within designated time frames. Constraint set (13) stipulates the maximum time interval for task execution, requiring that the interval between specific tasks does not exceed the set maximum time limit to avoid missing exploration opportunities. Constraint set (14) notes that, for some resources, consumption may be linked to the duration of task execution, necessitating consideration of the consumption rate. Constraint set (15) stipulates that the anticipated benefit of each task does not exceed the maximum limit.

Based on the description provided, this mathematical model can be constructed to plan the TOC execution in deep space. This model stipulates that the execution of TOCs consumes relevant resources and yields corresponding benefits. The sequence in which tasks are executed impacts both the amount of resources consumed and the benefits realized. Consequently, the model incorporates an objective function designed to maximize the cost–benefit ratio, thereby facilitating the optimization of the TOC execution sequence for spacecraft operations in deep space.
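To illustrate why the execution order affects feasibility, the following minimal sketch checks a single resource along a candidate TOC sequence, in the spirit of the capacity and non-negativity constraints described above. It is not the paper's model: the function name, the single-resource simplification, and the rule that replenishment is applied after each task and capped at capacity are all assumptions made for illustration.

```python
from typing import Sequence


def sequence_is_feasible(order: Sequence[int],
                         consumption: Sequence[float],
                         replenishment: Sequence[float],
                         initial: float,
                         capacity: float) -> bool:
    """Return True if the residual resource never drops below zero.

    Hypothetical single-resource check: each task first consumes its
    resource, then any replenishment is added (capped at capacity).
    """
    level = initial
    for task in order:
        level -= consumption[task]          # resource spent by this task
        if level < 0:                       # residual must never be negative
            return False
        # assumed rule: replenishment cannot exceed the total capacity
        level = min(capacity, level + replenishment[task])
    return True


# Same two tasks, two different orders: only one order is feasible.
consumption = [3.0, 2.0]
replenishment = [0.0, 2.0]
print(sequence_is_feasible([0, 1], consumption, replenishment, 4.0, 5.0))
print(sequence_is_feasible([1, 0], consumption, replenishment, 4.0, 5.0))
```

In this toy case, running the replenishing task first keeps the residual non-negative, while the reverse order does not.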

4. Methodology

4.1. Overview

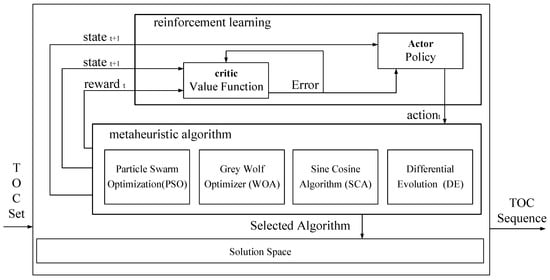

The architecture of the hyper-heuristic autonomous task planning algorithm based on the actor–critic model is depicted in Figure 2. The algorithm is structured into two levels: a higher level and a lower level. The lower level comprises multiple meta-heuristic algorithms, employed as core operators. These meta-heuristics are inspired by real-life scenarios and are chosen to meet the operational needs of spacecraft in the uncertain environment of deep space, with the four algorithms providing complementary features. The higher-level algorithm is based on reinforcement learning. In operation, the higher level first selects an appropriate lower-level operator based on the current environmental conditions and TOC parameters. The selected operator then executes multiple times, altering the current state of the environment. The higher level then selects an operator again based on the updated state, and this cycle repeats until a predetermined number of iterations is completed. Once the reinforcement learning model is fully trained, the spacecraft can, in principle, select the most suitable operator based on real-time environmental data, thereby reaching optimized solutions more quickly. This design offers advantages in adaptability and flexibility over traditional single meta-heuristic algorithms. The high-level reinforcement learning strategy employs a policy-based actor–critic approach, using deep neural networks to construct the actor and critic networks, which enhances the adaptability of operator selection and broadens the range of environments the model can handle.
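The select-then-execute cycle described above can be sketched as a short control loop. This is a toy illustration only: `run_hyper_heuristic`, `select`, `rounds`, and `inner_steps` are hypothetical names, the population is a plain list of numbers rather than a solution matrix, and the high-level policy is passed in as an arbitrary callable instead of a trained actor network.

```python
from typing import Callable, List, Sequence

Population = List[float]                       # toy stand-in for the solution matrix
Operator = Callable[[Population], Population]  # one low-level heuristic step


def run_hyper_heuristic(population: Population,
                        operators: Sequence[Operator],
                        select: Callable[[Population], int],
                        fitness: Callable[[float], float],
                        rounds: int = 10,
                        inner_steps: int = 5) -> float:
    """Alternate high-level operator selection with low-level execution."""
    best = max(population, key=fitness)
    for _ in range(rounds):
        op = operators[select(population)]      # high level: pick an operator
        for _ in range(inner_steps):            # low level: run it several times
            population = op(population)
        candidate = max(population, key=fitness)
        if fitness(candidate) > fitness(best):  # keep the best solution seen so far
            best = candidate
    return best


# Toy usage: two operators that shift the population up or down by one.
ops = [lambda p: [x + 1 for x in p], lambda p: [x - 1 for x in p]]
print(run_hyper_heuristic([0.0, 1.0], ops, select=lambda p: 0,
                          fitness=lambda x: -abs(x - 10), rounds=2, inner_steps=3))
```

In a full system, `select` would be replaced by the trained policy and each operator by one of the low-level meta-heuristics.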

Figure 2.

Schematic and data flow diagram of an actor–critic-based hyper-heuristic autonomous task planning algorithm.

4.2. Low-Level Heuristic Algorithm Selection and Design

- (1) Model mapping method

The TSP is intrinsically a discrete optimization problem, whereas the heuristic algorithms used in this paper operate on continuous values. Within the framework of heuristic algorithms, the data of each computational instance effectively constitute a matrix. Let $X$ denote the solution matrix, where $X_i$ (for $i = 1, 2, \dots, m$) represents the row vector of the $i$th solution, and each column corresponds to a specific TOC, formally represented as

$$X = \begin{bmatrix} X_1 \\ X_2 \\ \vdots \\ X_m \end{bmatrix} = \begin{bmatrix} x_{11} & x_{12} & \cdots & x_{1n} \\ x_{21} & x_{22} & \cdots & x_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ x_{m1} & x_{m2} & \cdots & x_{mn} \end{bmatrix} \tag{16}$$

Here, $X_i$ is the vector for the $i$th solution. In heuristic algorithms, $x_{ij}$ denotes the optimization value of the $j$th task in solution $i$. However, in the context of the TSP, $x_{ij}$ does not represent a specific numerical value. In this study, we define the value of each solution by its magnitude factor, thus conceptualizing the entire solution as a sequence ordered by the magnitude of the $x_{ij}$. The resolution strategy involves sorting all task optimization values, subsequently assigning a sequential factor to each to denote its position in the sequence, and restoring these tasks to their pre-sorted state. Finally, the original task values are replaced by their sequential factors, completing the construction of the solution.

The rationality of such mappings can be elucidated through mathematical principles.

Define $S(\cdot)$ as the operation of sorting vector $X_i$, arranging the elements in ascending order based on their values, thereby generating a new sequence $X_i'$:

$$X_i' = S(X_i) = \left(x_{i(1)}, x_{i(2)}, \dots, x_{i(n)}\right), \quad x_{i(1)} \le x_{i(2)} \le \dots \le x_{i(n)} \tag{17}$$

During this process, each $x_{ij}$ is mapped to its respective position post-sorting. The sorting operation relies on comparisons among elements, and the sorting map ensures that the relative size relationships among the vector’s elements are preserved after the mapping, satisfying transitivity (if $a \le b$ and $b \le c$, then $a \le c$). This guarantees the consistency and uniqueness of the sort. The process leverages sorting as an intermediary step, thus ensuring that the mapping from a continuous space to a discrete ordinal space is both consistent and effective.

Furthermore, this type of mapping must be well defined. Because it depends only on the relative sizes of the elements in the original sequence, every sequence without ties maps to exactly one ordinal sequence, and two original sequences whose elements are ordered differently map to distinct ordinal sequences. Sequences that share the same relative ordering map to the same ordinal sequence, which is the intended behavior, since they encode the same TOC execution order.
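The sort, assign position, and restore procedure described above can be sketched with NumPy as follows. The function names `keys_to_ranks` and `keys_to_sequence` are hypothetical, ascending order is assumed, and ties are broken by original index through a stable sort, which is an assumption rather than something the text specifies.

```python
import numpy as np


def keys_to_ranks(x: np.ndarray) -> np.ndarray:
    """Replace each continuous value by its position in ascending order."""
    order = np.argsort(x, kind="stable")     # task indices sorted by value
    ranks = np.empty(len(x), dtype=int)
    ranks[order] = np.arange(len(x))         # write positions back in original layout
    return ranks


def keys_to_sequence(x: np.ndarray) -> np.ndarray:
    """Return the task visiting order implied by the continuous values."""
    return np.argsort(x, kind="stable")


keys = np.array([0.7, 0.1, 0.4])
print(keys_to_ranks(keys))      # sequential factor of each task
print(keys_to_sequence(keys))   # tasks listed in execution order
```

Any continuous vector produced by a low-level operator can be passed through this mapping before the fitness of the resulting TOC sequence is evaluated.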

- (2) Low-level heuristic operator selection

High-level operator selection works at the granularity of whole low-level algorithms, so the overall optimization quality depends on which low-level algorithms are available. Given the uncertainty of the deep space environment, selecting operators with different emphases is crucial to improving the adaptability and flexibility of the algorithm. At the same time, a balanced selection of algorithms is essential to ensure the convergence of the model. This research therefore uses the following dimensions to select pertinent operators:

1. Global Search Capability:

Global search capability refers to an optimization algorithm’s ability to extensively explore the entire search space. This capability enables the algorithm to thoroughly probe the search space, thereby preventing it from merely settling into local optima and, ultimately, facilitating the discovery of global optima. In mathematical models, global search is often achieved by introducing randomness and diversity [59].

2. Quality of Solution Optimization:

A high-quality solution is not just a locally optimal solution but rather the best or near-best solution within the context of the optimization problem. An effective optimization algorithm should be capable of providing sufficiently high-quality solutions [60]. The quality of solutions is evaluated through a fitness function, which should be designed to differentiate between solutions of varying qualities and guide the algorithm towards developing higher-quality solutions.

3. Convergence Speed:

Convergence speed refers to the number of iterations or time required for an algorithm to meet its stopping criteria. Algorithms that converge quickly can find satisfactory solutions more rapidly, which directly impacts the efficiency of the optimization process [61]. Rapid convergence is demonstrated by the algorithm’s ability to quickly reduce the solution space and swiftly adjust solutions towards the optimal direction.

Consequently, this research selects four algorithms, each with specific characteristics, so that the chosen set exhibits both flexibility and good adaptability. The four algorithms are particle swarm optimization (PSO), the grey wolf optimizer (GWO), the sine cosine algorithm (SCA), and differential evolution (DE). Their foundational principles, features, and corresponding characteristics are detailed in Table 4.

Table 4.

Comparative overview of selected optimization algorithms.

Among the four algorithms in the table, each particle in particle swarm optimization (PSO) adjusts its position based on both its own historical best position and the global best position of the swarm. This information-sharing mechanism lets each particle explore a wide area of the search space and, through communication with other particles, avoid getting trapped in local optima, which improves the global search ability of the algorithm. The grey wolf optimizer (GWO) simulates the hunting behavior of grey wolves by tracking the prey’s position and adjusting according to the prey’s dynamics, allowing the algorithm to converge quickly to the optimal solution. The sine cosine algorithm (SCA) is based on the periodic characteristics of sine and cosine functions, which allows it to explore the search space extensively in the early stages of the algorithm, enhancing global search capability. The differential evolution (DE) algorithm generates new candidate solutions through differential operations and combines multiple solutions to create new ones, thus avoiding premature convergence to local optima. Through this global exploration mechanism, DE is able to find the optimal solution in complex optimization problems, achieving a high quality of solution optimization.

In the following sections, these four algorithms will be described in detail.

1. Particle Swarm Optimization (PSO)

Particle swarm optimization (PSO) is a swarm intelligence-based optimization technique, initially proposed by Kennedy and Eberhart [62]. Inspired by the social behaviors of bird flocks, it simulates the foraging process of birds, enabling particles (i.e., solutions) within the algorithm to seek the optimal solution based on both individual and collective experiences in the solution space. The movement of particles is guided by both their historical best positions and the global best position, aiming to enhance global search efficiency through collaborative efforts.

PSO comprises four quantities: particle position, particle velocity, individual best position, and global best position. Within an n-dimensional search space, the position of particle i is denoted as $x_i = (x_{i1}, x_{i2}, \dots, x_{in})$, which correlates with potential task execution sequences. The velocity of a particle dictates the direction and magnitude of its movement in the search space and is represented as $v_i = (v_{i1}, v_{i2}, \dots, v_{in})$. The individual best position (pbest) refers to the optimal location each particle identifies during the search, formulated as $p_i = (p_{i1}, p_{i2}, \dots, p_{in})$. The global best position (gbest) represents the optimal position discovered by the entire swarm during the search process, expressed as $g = (g_1, g_2, \dots, g_n)$.

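The velocity of each particle is updated according to the following formula:

$$v_{id}^{t+1} = w\, v_{id}^{t} + c_1 r_1 \left(p_{id} - x_{id}^{t}\right) + c_2 r_2 \left(g_{d} - x_{id}^{t}\right) \tag{18}$$

where $v_{id}$ is the velocity of particle i in dimension d, $w$ is the inertia weight, $c_1$ and $c_2$ are learning factors, $r_1$ and $r_2$ are random numbers between 0 and 1, and $p_{id}$ and $g_d$ are the components in dimension d of the individual best and global best positions.

The formula for updating the position is as follows:

$$x_{id}^{t+1} = x_{id}^{t} + v_{id}^{t+1} \tag{19}$$

where $x_{id}$ is the position of particle i in dimension d.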

The PSO algorithm first initializes the positions and velocities of the particle swarm. It then calculates the fitness value of each particle and updates the individual and global best positions. Thereafter, the velocity and position of each particle are adjusted according to Formulas (18) and (19). This process is repeated until the stopping criteria are met. With Equation (2) as the fitness function, the pseudo-code of the algorithm is shown in Algorithm 1.

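| Algorithm 1: Particle Swarm Optimization (PSO) with Cost-Benefit Ratio |
| --- |
| Initialize particle positions and velocities based on tasks |
| while termination criteria not met do |
| for each particle do |
| Calculate the fitness of the particle using the cost-benefit ratio |
| if the fitness of the current position is better than that of pbest then |
| Update pbest with the current position |
| end if |
| if the fitness of pbest is better than that of gbest then |
| Update global best gbest with pbest |
| end if |
| Update the velocity and position based on pbest and gbest |
| end for |
| end while |
| return the global best solution |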
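As a concrete illustration of one velocity and position update, the sketch below applies the standard PSO update rule to a whole swarm at once with NumPy. The function name `pso_step` and the default values of `w`, `c1`, and `c2` are assumptions chosen for illustration, not parameters reported in this study.

```python
import numpy as np


def pso_step(x, v, pbest, gbest, rng, w=0.7, c1=1.5, c2=1.5):
    """One standard PSO update for a swarm.

    x, v, pbest: arrays of shape (particles, dimensions)
    gbest:       array of shape (dimensions,)
    """
    r1 = rng.random(x.shape)                 # fresh random factors in [0, 1)
    r2 = rng.random(x.shape)
    v_new = (w * v                           # inertia
             + c1 * r1 * (pbest - x)         # pull towards each particle's best
             + c2 * r2 * (gbest - x))        # pull towards the swarm's best
    return x + v_new, v_new


rng = np.random.default_rng(0)
x = rng.random((5, 4))
v = np.zeros_like(x)
x_next, v_next = pso_step(x, v, pbest=x.copy(), gbest=x[0], rng=rng)
print(x_next.shape, v_next.shape)
```

The updated continuous positions would then be mapped to TOC sequences by ranking before the cost-benefit fitness is evaluated.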

2. Grey Wolf Optimizer (GWO)

The grey wolf optimizer (GWO) was proposed by Mirjalili [63], inspired by the social hierarchy and hunting behaviors of grey wolves. The algorithm emulates the strategies of tracking, encircling, and capturing prey employed by wolf packs during the search process. It divides the wolf pack into leaders (alpha, beta, and delta wolves) and followers, with the leaders guiding and the followers updating their positions, thereby facilitating effective global and local searches to find optimal solutions.

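The core of the GWO is to emulate the hunting mechanisms of wolf packs. The algorithm designates three lead wolves, identified as $\alpha$, $\beta$, and $\delta$. Initially, the distances between the pack and the prey are calculated as follows:

$$D_\alpha = \left|C_1 \cdot X_\alpha - X_i(t)\right|, \quad D_\beta = \left|C_2 \cdot X_\beta - X_i(t)\right|, \quad D_\delta = \left|C_3 \cdot X_\delta - X_i(t)\right| \tag{20}$$

where each $C$ is a coefficient vector determined by the formula $C = 2 r_2$, with $r_2$ a vector of random numbers, each within the interval $[0, 1]$.

Subsequent to this, the position update of the “wolf pack” is performed using Formula (21):

$$X_1 = X_\alpha - A_1 \cdot D_\alpha, \quad X_2 = X_\beta - A_2 \cdot D_\beta, \quad X_3 = X_\delta - A_3 \cdot D_\delta \tag{21}$$

Formula (21) models the pack’s behavior of encircling and hunting the prey. Here, each $A$ is computed using $A = 2a \cdot r_1 - a$, with $r_1$ a vector of random numbers in $[0, 1]$ and $a$ a parameter that decreases linearly from 2 to 0.

Finally, the position update is finalized using Formula (22):

$$X_i(t+1) = \frac{X_1 + X_2 + X_3}{3} \tag{22}$$

Here, $X_i(t)$ represents the current position of wolf $i$, and $X_i(t+1)$ is the newly calculated position.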

The GWO starts by evaluating fitness, then simulates the hunting process of the wolf pack, updates the positions of the wolves and the global optimum solution, and repeats these steps until the termination conditions are met. With Equation (2) as the fitness function, the pseudo-code of the algorithm is shown in Algorithm 2.

| Algorithm 2: Grey Wolf Optimizer (GWO) with Cost-Benefit Ratio |
| --- |
| Initialize wolf positions based on tasks |
| Identify alpha, beta, and delta wolves based on fitness |
| while termination criteria not met do |
| for each wolf do |
| Update the position towards alpha, beta, and delta using the coefficient vectors A and C |
| Calculate fitness using the cost-benefit ratio |
| end for |
| Update alpha, beta, and delta positions based on best fitness values |
| end while |
| return the position of the alpha wolf |
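The position update towards the three leaders can be sketched as follows, using the standard grey wolf optimizer equations from the literature. The function name `gwo_step` and its argument layout are hypothetical, and the sketch draws independent random coefficients for every wolf and dimension, which is a common convention but an assumption here.

```python
import numpy as np


def gwo_step(wolves, alpha, beta, delta, a, rng):
    """One standard GWO position update.

    wolves:             array of shape (pack_size, dimensions)
    alpha, beta, delta: leader positions, each of shape (dimensions,)
    a:                  control parameter, decreased linearly from 2 to 0
    """
    new_positions = np.zeros(wolves.shape)
    for leader in (alpha, beta, delta):
        A = 2.0 * a * rng.random(wolves.shape) - a   # encircling coefficient
        C = 2.0 * rng.random(wolves.shape)           # prey-weighting coefficient
        D = np.abs(C * leader - wolves)              # distance to this leader
        new_positions += leader - A * D              # candidate guided by this leader
    return new_positions / 3.0                       # average of the three candidates


rng = np.random.default_rng(1)
pack = rng.random((6, 4))
moved = gwo_step(pack, pack[0], pack[1], pack[2], a=1.0, rng=rng)
print(moved.shape)
```

When `a` reaches 0 the coefficient `A` vanishes and every wolf moves to the mean of the three leaders, which is the exploitation end of the schedule.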

3. Sine Cosine Algorithm (SCA)

The sine cosine algorithm (SCA) was developed by Mirjalili [64], utilizing the mathematical sine and cosine functions to update the positions of solutions. By dynamically adjusting the search direction and step size, the algorithm strikes a balance between global exploration and local search. This method is particularly well-suited for solving complex multimodal optimization problems, as it flexibly adjusts the search paths through the sine and cosine rules, thus avoiding local optima.

In the SCA algorithm, the position of each solution is updated in every iteration according to Formulas (23) and (24):

These formulas compute the updated position of a solution from its current position. Three randomly generated parameters adjust the search trajectory of the solution: one controls the step size, one determines whether a sine or cosine function is used for the update, and one dictates the direction of the search. The update is taken relative to the position of the optimal solution in the current iteration. With Equation (2) as the fitness function, the pseudo-code of the algorithm is shown in Algorithm 3.

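| Algorithm 3: Sine Cosine Algorithm (SCA) with Cost-Benefit Ratio |
| --- |
| Initialize solutions for all tasks |
| Calculate fitness for all solutions using the cost-benefit ratio |
| Identify the best solution |
| while not converged do |
| for each solution do |
| for each dimension do |
| Calculate the random parameters |
| if rand() < 0.5 then |
| Update the position with the sine rule |
| else |
| Update the position with the cosine rule |
| end if |
| end for |
| Update the solution if the new position improves the fitness |
| end for |
| Update the best solution if better solutions are found |
| end while |
| return the best solution |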
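For reference, the sketch below implements the sine cosine update in the form commonly given in the SCA literature, where four random quantities are used: `r1` (amplitude, decreasing over iterations), `r2` (angle), `r3` (weight on the best position), and `r4` (sine or cosine switch). These names, the constant `a`, and the linear schedule for `r1` follow that common formulation and are assumptions; they may differ from the exact parameterization used in this study.

```python
import numpy as np


def sca_step(x, best, t, t_max, rng, a=2.0):
    """One sine cosine update in the commonly published form.

    x:    array of shape (solutions, dimensions)
    best: best position found so far, shape (dimensions,)
    """
    r1 = a - t * a / t_max                      # amplitude shrinks linearly to 0
    r2 = 2.0 * np.pi * rng.random(x.shape)      # angle of the move
    r3 = 2.0 * rng.random(x.shape)              # random weight on the destination
    r4 = rng.random(x.shape)                    # switch between sine and cosine
    dist = np.abs(r3 * best - x)
    sine_move = x + r1 * np.sin(r2) * dist
    cosine_move = x + r1 * np.cos(r2) * dist
    return np.where(r4 < 0.5, sine_move, cosine_move)


rng = np.random.default_rng(2)
x = rng.random((5, 4))
print(sca_step(x, best=x[0], t=1, t_max=100, rng=rng).shape)
```

Early iterations use a large amplitude and therefore explore widely, while late iterations make progressively smaller moves around the best position.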

4. Differential Evolution Algorithm (DE)

The differential evolution (DE) algorithm is a global optimization algorithm mainly used to solve optimization problems on continuous parameter spaces. Its principle is based on simple but effective genotype mutation, crossover, and selection operations on individuals in a population to explore the solution space and find the optimal solution [65].

In the mutation step, three different individuals $a$, $b$, and $c$ are randomly selected from the current population and used to generate a new candidate solution $v$. The mutation vector is given by

$$v = a + F \cdot (b - c)$$

where F is a positive scaling factor, usually between 0.5 and 1.0. This factor controls the strength of the perturbation of the solution vector a by the difference vector $(b - c)$.

In the crossover step, the algorithm combines the mutation vector $v$ and the target individual $x$ to generate the trial vector $u$. For each dimension j, the jth component of the trial vector is determined in the following way:

$$u_j = \begin{cases} v_j, & \text{if } \mathrm{rand}_j \le CR \text{ or } j = j_{\mathrm{rand}} \\ x_j, & \text{otherwise} \end{cases}$$

CR is the crossover probability, which determines the acceptance probability of the mutation vector components in each dimension; $\mathrm{rand}_j$ is a random number uniformly distributed in the range [0,1]; $j_{\mathrm{rand}}$, an index drawn at random from $\{1, \dots, D\}$, ensures that at least one of the dimensions is selected from $v$ to introduce new genetic information, where D is the dimension of the problem.

In the selection step, the fitness of the target individual $x$ is directly compared with that of the trial individual $u$:

$$x^{\text{next}} = \begin{cases} u, & \text{if } f(u) \text{ is better than or equal to } f(x) \\ x, & \text{otherwise} \end{cases}$$

If the fitness of the trial vector $u$ is better than (or equal to) the fitness of the current individual $x$, then $u$ will replace $x$ in the next-generation population. With Equation (2) as the fitness function, the pseudo-code of the algorithm is shown in Algorithm 4.

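| Algorithm 4: Differential Evolution Algorithm (DE) |
| --- |
| Initialize population vectors for every individual |
| Evaluate the fitness of each individual |
| Identify the best individual |
| while not converged do |
| for each individual in the population do |
| Select three random individuals from the population |
| Generate the donor vector from the three selected individuals |
| Initialize trial vector to be an empty vector |
| for each dimension j do |
| if the random number for j does not exceed CR or j is the randomly chosen dimension then |
| Take component j of the trial vector from the donor vector |
| else |
| Take component j of the trial vector from the current individual |
| end if |
| end for |
| Evaluate the fitness of the trial vector |
| if the trial vector is at least as fit as the current individual then |
| Replace the current individual with the trial vector |
| else |
| Keep the current individual |
| end if |
| end for |
| Update the best individual if a better solution is found |
| end while |
| return the best individual |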
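The mutation, crossover, and selection steps can be sketched as two small functions. The names `de_trial` and `de_select` are hypothetical, the defaults for `F` and `CR` are illustrative, and excluding the target individual when drawing the three donors follows the usual DE convention rather than anything stated explicitly above. Fitness is treated as a quantity to maximize, in line with the cost-benefit objective.

```python
import numpy as np


def de_trial(pop, i, rng, F=0.8, CR=0.9):
    """Build the trial vector for individual i (mutation + binomial crossover)."""
    m, d = pop.shape
    candidates = [k for k in range(m) if k != i]        # assumed: exclude the target
    a, b, c = pop[rng.choice(candidates, size=3, replace=False)]
    donor = a + F * (b - c)                             # mutation
    mask = rng.random(d) <= CR                          # per-dimension crossover test
    mask[rng.integers(d)] = True                        # force at least one donor component
    return np.where(mask, donor, pop[i])


def de_select(target, trial, fitness):
    """Keep the trial vector if it is at least as fit as the target (maximization)."""
    return trial if fitness(trial) >= fitness(target) else target


rng = np.random.default_rng(3)
pop = rng.random((6, 4))
trial = de_trial(pop, 0, rng)
survivor = de_select(pop[0], trial, fitness=lambda z: -np.sum(z ** 2))
print(survivor.shape)
```

The population needs at least four individuals so that three distinct donors other than the target can be drawn.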

4.3. High-Level Algorithm Based on Actor–Critic Reinforcement Learning

- (1) Overview

Because the lower-level operators must cope with a variety of uncertain initial states, a “smart computing expert” is needed at the higher level to assess the current environment and state and to select an appropriate algorithm accordingly. Once chosen, the algorithm is executed with its set parameters as the lower-level operator; this modifies the current environment and state, which in turn prompts the selection of the next operator under the new conditions, and so forth. The performance of the entire process therefore depends critically on the ability of the “smart computing expert” to select and apply appropriate algorithms.

During the operator selection phase, it is essential to consider relevant design methodologies from reinforcement learning, such as methods for describing states, definitions of reward functions, criteria for action definition and selection, network architecture design, and other training and strategy designs.

Overall, the algorithm describes the spacecraft’s relevant resources and attributes as state features, utilizing the deep neural network to enhance the model’s parameterization and adaptability to complex and diverse environments. Moreover, action selection is based on the ε-Greedy Strategy, and dynamic reward functions are defined across four dimensions: global search capabilities, solution optimization quality, algorithm convergence speed, and types of applicable problems. This approach aims to differentiate reward functions in the early and late phases of training, thereby enhancing the model’s training effectiveness.

- (2) State

The design of the state significantly affects the computational accuracy of hyper-heuristic algorithms. In this study, we regard several solutions optimized by heuristic algorithms as part of the state, which are integrated with other elements (such as resource consumption, cost, and current resources) to form a comprehensive state representation matrix. According to Equation (16), the current solution matrix is denoted as $X$, thereby defining the state space as follows:

Here, resource information includes all details pertaining to the resources required for task execution, the total available resources, and the resources accrued after completing tasks. Cost and benefit information encompasses the cost–benefit ratio from task i to task j and the benefits obtained after completing task i. The state also contains the threshold for the minimum cost–benefit ratio and an indicator of whether time-related constraints are present.

Given the variations in dimensions and shapes of matrices formed by these constraints, methods such as normalization, padding, and feature extraction are necessary to process these matrices. This allows the extracted features to be input as a cohesive state into the model. Such processed state inputs enable the trained model to adapt to diverse tasks and constraints effectively.

- (3) Action selection

In this paper, the reinforcement learning algorithm is defined with an action set comprising the four heuristic algorithm operators (PSO, GWO, SCA, and DE). Based on the current environmental conditions, the algorithm selects the appropriate operator (i.e., heuristic algorithm) and executes the corresponding code according to pre-set parameters.

During model training, the early action selection strategy has a significant impact on the performance of the algorithm since the initial probability distribution is random or directly specified. For instance, if high probability actions are consistently chosen early on, the algorithm may overly rely on these known optimal actions, thereby causing the model to converge on local optima as it struggles to explore more advantageous strategies.

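The action selection strategy employed in this study is the ε-Greedy Strategy, which addresses the trade-off between exploration and exploitation. The basic strategy is as follows:

$$a_t = \begin{cases} \text{a randomly chosen action}, & \text{if } \mathrm{rand} < \varepsilon \\ \text{the action with the highest estimated expected reward}, & \text{otherwise} \end{cases}$$

where $a_t$ is the action chosen at time $t$. The algorithm defines an exploration rate $\varepsilon$. If a random number $\mathrm{rand}$ drawn uniformly from $[0, 1]$ satisfies $\mathrm{rand} < \varepsilon$, the exploration mechanism is activated; otherwise, the action estimated to yield the highest expected reward is greedily selected, facilitating exploitation.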

Regarding the adjustment of the exploration rate, given the instability of the initial probability distribution, a higher exploration rate is warranted initially. As training progresses to ensure stability in the decision-making process, the exploration rate should gradually decrease. The adjustment formula for the exploration rate is

Here, it is necessary to define the initial exploration rate, the minimum exploration rate, and the decay rate; the formula then gives the exploration rate at time $t$. Through this method, the exploration rate starts at a higher value and gradually decreases over time to a lower value.
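A minimal sketch of ε-greedy selection with a decaying exploration rate is given below. The exponential schedule and the names `exploration_rate`, `eps_start`, `eps_min`, and `decay` are assumptions for illustration; the schedule actually used in this study may have a different functional form.

```python
import math
import random
from typing import Sequence


def exploration_rate(t: int, eps_start: float = 1.0,
                     eps_min: float = 0.05, decay: float = 0.01) -> float:
    """Assumed exponential decay from eps_start towards eps_min."""
    return eps_min + (eps_start - eps_min) * math.exp(-decay * t)


def select_action(scores: Sequence[float], eps: float, rng: random.Random) -> int:
    """Epsilon-greedy choice among operators, given one score per operator."""
    if rng.random() < eps:                       # explore: uniform random operator
        return rng.randrange(len(scores))
    # exploit: operator with the highest estimated score
    return max(range(len(scores)), key=lambda k: scores[k])


rng = random.Random(0)
scores = [0.1, 0.7, 0.15, 0.05]                  # e.g. one score per low-level operator
for step in (0, 100, 1000):
    eps = exploration_rate(step)
    print(step, round(eps, 3), select_action(scores, eps, rng))
```

With `eps` close to 1 the choice is nearly uniform over the four operators, and as `eps` approaches its minimum the highest-scoring operator is chosen almost every time.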

- (4) Actor–Critic network structure design

The actor–critic (AC) method is a sophisticated reinforcement learning algorithm that amalgamates the advantages of policy gradient methods with those of value function optimization techniques [66]. In the upper layer of our study’s algorithm, we have adopted this method as the primary reinforcement learning framework.

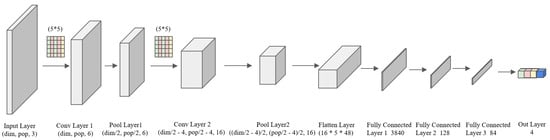

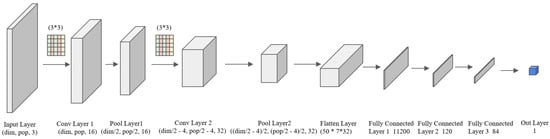

In this approach, there are two interconnected network structures: the actor network (Figure 3) and the critic network (Figure 4). The actor network (A) receives the state of the environment as input and outputs the probabilities of selecting each possible action. Its primary objective is to select actions based on the current policy, with the aim of maximizing expected rewards through policy learning. The critic network (C) also inputs the state of the environment but outputs an estimate of the current state’s value, assessing the value of states or state–action pairs by learning the value function of actions [67].

Figure 3.

Schematic representation of the structure and shape of the actor network in the actor–critic method.

Figure 4.

Schematic representation of the structure and shape of the critic network in the actor–critic method.

Initially, the actor network acts within the environment according to the current policy. Subsequently, the critic network evaluates the effectiveness of this action and computes the temporal difference (TD) error. The actor network then updates its strategy based on feedback from the critic network. Simultaneously, the critic network updates its estimate of the value function based on the TD error. The pseudo-code for the algorithm is provided in Algorithm 5.

| Algorithm 5: Actor-Critic Based Metaheuristic Algorithm |
| --- |
| Initialize policy network parameters and value network parameters |
| Initialize environment and state |
| for each episode do |
| Reset environment and observe initial state |
| while not done do |
| Select action according to the policy |
| Execute action in the environment |
| Observe reward and new state |
| Compute advantage estimate |
| Update policy |
| Update value |
| end while |
| if end of evaluation period then |
| Evaluate the policy |
| end if |
| end for |

For the actor network, the action probability distribution is defined as follows:

$$\pi_\theta(a \mid s) = \frac{\exp\left(f_\theta(s, a)\right)}{\sum_{a'} \exp\left(f_\theta(s, a')\right)}$$

Here, $a$ represents the action, $s$ is the state, and $\theta$ are the parameters of the actor network, with $f_\theta$ denoting its function. This formula delineates the probability of selecting action $a$ in state $s$. Initially, $f_\theta$ computes scores for all actions, which are subsequently converted into a probability distribution using the softmax function, ensuring that the sum of all action probabilities equals one and that the selection probability is positively correlated with the scores. Moreover, the exploration rate $\varepsilon$ ensures a degree of random exploration.

In this study, we designed the architecture of the actor network, which is tailored to our setting: it takes a complex input matrix (the state) and outputs a selection over four operations (operators). The entire network consists of two convolutional layers, two pooling layers, and two fully connected layers, culminating in a Softmax output. The network architecture is illustrated as follows:

The loss function for the actor network is defined in two parts. The first part consists of the expected negative log probability of the chosen action multiplied by the action’s advantage function $A(s, a)$, aimed at guiding the policy to enhance reward values. The second part involves the entropy of the policy to encourage exploration of new actions, defined as

The network parameters are updated through gradient ascent. The entropy term is weighted by a regularization coefficient; high entropy implies greater randomness in action selection. The advantage function measures how much better the chosen action is than the average for a given state and is defined as

$$A(s, a) = r + \gamma V(s') - V(s)$$

Here, $r$ is the immediate reward obtained after executing action $a$, $\gamma$ is the discount rate for future rewards, $V(s)$ is the estimated value of the current state $s$, and $V(s')$ is the estimated value function for the next state $s'$. This methodology allows for the approximation of the advantage function through the value function estimated by the critic network, simplifying the computation.

For the critic network, the value function is defined as

Here, the value estimate is produced by the critic network with its own set of parameters, and the network function is composed of two convolutional layers and three fully connected layers. This simplified network version is chosen due to the simplicity of the output from the policy function.

The loss function of the critic network calculates the error between the network’s value estimation and the actual rewards:

Here, the target is the actual return starting from the current state. The network parameters are updated through gradient descent.
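The quantities exchanged between the actor and the critic in one update can be sketched numerically. This is a generic one-step advantage actor-critic formulation, not necessarily the exact losses of this study: the squared-error critic loss, the sign convention (both losses are minimized), the one-step TD target, and the entropy coefficient `beta` are assumptions, and no neural network is involved.

```python
import numpy as np


def softmax(scores: np.ndarray) -> np.ndarray:
    """Convert operator scores into selection probabilities."""
    z = scores - np.max(scores)              # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def td_advantage(r: float, v_s: float, v_next: float, gamma: float = 0.99) -> float:
    """One-step TD estimate of the advantage: r + gamma * V(s') - V(s)."""
    return r + gamma * v_next - v_s


def actor_loss(probs: np.ndarray, action: int, advantage: float,
               beta: float = 0.01) -> float:
    """Negative log-probability weighted by advantage, minus an entropy bonus."""
    entropy = -np.sum(probs * np.log(probs + 1e-12))
    return float(-np.log(probs[action] + 1e-12) * advantage - beta * entropy)


def critic_loss(v_s: float, target: float) -> float:
    """Assumed squared error between the value estimate and its target."""
    return (target - v_s) ** 2


probs = softmax(np.array([1.0, 2.0, 0.5, 0.1]))  # four operators
adv = td_advantage(r=1.0, v_s=0.5, v_next=0.8)
print(actor_loss(probs, action=1, advantage=adv), critic_loss(0.5, 1.0 + 0.99 * 0.8))
```

In a full implementation these scalars would be computed by the actor and critic networks and differentiated with an automatic differentiation framework.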

Based on the described methods, an adaptable actor–critic network can be constructed, providing ample justification for action selection.

- (5) Optimization Metrics

Before defining the reward function, it is essential to clarify the key performance metrics that the algorithm aims to improve, as the design of the reward function in high-level reinforcement learning is closely tied to these metrics. In this study, the defined optimization metrics are directly proportional to the level of improvement achieved; thus, higher improvements correspond to higher reward values. Consequently, the goal of the algorithm is to enhance these metrics by obtaining high reward values.

This research establishes four critical metrics: global search capability, quality of solution optimization, algorithm convergence speed, and applicability to problem types. For each metric, both stepwise and overall rewards must be considered within the reward function.

Global search index: The global search index quantifies the diversity of solutions in heuristic algorithms by evaluating the ratio of distinct solutions generated during a specified iteration period to the theoretical maximum number of possible solutions. This metric reflects the algorithm’s ability to explore the search space globally, indicating how well it maintains diversity throughout the search process. Its mathematical expression is defined in terms of the number of distinct solutions, the number of iterations, and the size of the solution space.

Quality of solution optimization: This metric assesses the improvement of a solution relative to the initial state. The stepwise reward is defined in terms of P(X), the path length of the current solution X. The overall reward is calculated from the specific improvement over the initial solution.

Weighted convergence speed: This metric quantifies the average number of steps the algorithm requires to converge to the current optimal solution. It measures the speed and stability of the algorithm by assigning a weight to each fitness improvement.

Here, the weight of the i-th fitness improvement is set according to the actual situation, the improvement value is the gain in fitness after the i-th iteration, and Iteration is the total number of iterations required to reach the highest fitness value.

Under this metric, an algorithm that reaches a high fitness quickly in the early stages and then remains unchanged receives a higher value, because early improvements carry greater weight. If the algorithm is still making small improvements as it approaches its final fitness, these improvements are also factored into the overall evaluation, although they do not significantly affect the metric.
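The behavior described for the weighted convergence speed can be illustrated with a small sketch. It assumes that fitness is maximized, that the weights decay as 1/i, and that the weighted sum of improvements is divided by the number of iterations needed to reach the best fitness; all three are illustrative assumptions, since the exact expression and weights are set according to the actual situation.

```python
def weighted_convergence_speed(fitness_history, weights=None):
    """fitness_history[0] is the initial fitness; entry i is the best fitness after iteration i."""
    # Fitness gain contributed by each iteration (never negative).
    improvements = [max(0.0, b - a) for a, b in zip(fitness_history, fitness_history[1:])]
    if weights is None:
        # Hypothetical weights: earlier improvements count more.
        weights = [1.0 / i for i in range(1, len(improvements) + 1)]
    # Iteration at which the highest fitness is first reached (at least 1).
    best = max(fitness_history)
    iterations_to_best = max(1, fitness_history.index(best))
    weighted_gain = sum(w * d for w, d in zip(weights, improvements))
    return weighted_gain / iterations_to_best
```

With these assumptions, a run that jumps to its final fitness in the first iteration scores higher than a run that reaches the same fitness in equal steps over four iterations.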

Algorithm Composite Evaluation Index: This metric aims to quantify the average performance of the algorithm. Taken in isolation, the algorithm that achieves the best fitness would be considered superior. In aerospace applications, however, real-time performance and optimization efficiency must also be considered, so that the algorithm achieves the best possible result in as short a time as possible while still covering an adequate number of solutions and reaching a good fitness. To meet these needs, this study defines the Algorithm Composite Evaluation Index, which combines the above three metrics to comprehensively evaluate an algorithm’s performance for aerospace and related scenarios.

The index compares the combined performance on the above three metrics across multiple algorithms on the same instance, so it must be calculated for all algorithms together for the values to be comparable. A higher index indicates that the algorithm performed relatively better (i.e., converged faster and produced better results) in that instance. The specific calculation method of the index is as follows:

In the above formula, the reference terms are the optimal values of the corresponding metrics among the algorithms over the same period, and the remaining terms are used to normalize the metrics. According to the importance ranking of the three metrics, the weights in this study are chosen so that the algorithm’s fitness is the most important, followed by the speed of convergence, and lastly the extent of the algorithm’s coverage of the solution space. The comprehensive evaluation of the algorithm in the remainder of this study is based on this index.
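A composite index of this kind can be sketched as a weighted sum of metrics normalized by the best value observed among the compared algorithms. The weights `(0.5, 0.3, 0.2)` below are hypothetical and only respect the stated ordering (fitness, then convergence speed, then coverage); the function name and the "larger is better" convention are likewise assumptions.

```python
def composite_index(metrics_by_algorithm, weights=(0.5, 0.3, 0.2)):
    """Map name -> (fitness, convergence_speed, search_index), larger is better, to a score."""
    names = list(metrics_by_algorithm)
    # Best value of each metric among the algorithms compared on the same instance.
    best = [max(metrics_by_algorithm[n][k] for n in names) for k in range(3)]
    scores = {}
    for n in names:
        normalized = [
            metrics_by_algorithm[n][k] / best[k] if best[k] > 0 else 0.0
            for k in range(3)
        ]
        scores[n] = sum(w * x for w, x in zip(weights, normalized))
    return scores
```

Because each metric is normalized against the best value in the same comparison, the scores are only meaningful relative to the other algorithms evaluated on that instance.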

- (6) Reward

In this study, the designed algorithm aims to meet flexibility requirements in diverse environments, which places stringent demands on maintaining consistent performance under varying conditions. Each of the four underlying algorithms must offer significant advantages to ensure the efficiency and effectiveness of the overarching hyper-heuristic algorithm. Initially, the goal is to surpass the performance of the individual algorithms through optimization based on a relative evaluation of higher-level decisions. As training progresses, the model should in theory select solutions that exceed the performance of every standalone algorithm, at which point simple relative evaluation becomes inadequate. Hence, it is also necessary to assess the merits and demerits of algorithms and decisions from an absolute perspective to guide the training direction.

Consequently, the reward function in this research is divided into two main parts: the absolute factor and the relative factor. As training time increases and training effects improve, the proportion of the absolute factor gradually increases, while that of the relative factor correspondingly decreases. The weighting is parameterized by the point in time at which the two factors carry equal weight.
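One way to realize a time-dependent blend of this kind is a logistic weight centered on the crossover time. The sketch below is only an illustration under that assumption: the logistic form, the `steepness` parameter, and the function names are hypothetical and are not taken from this paper.

```python
import math


def factor_weights(t, t_equal, steepness=0.01):
    """Return (absolute_weight, relative_weight) at training time t."""
    # Logistic curve: 0.5 at t_equal, approaching 1 as training continues.
    absolute = 1.0 / (1.0 + math.exp(-steepness * (t - t_equal)))
    return absolute, 1.0 - absolute


def blended_reward(r_absolute, r_relative, t, t_equal, steepness=0.01):
    """Combine the two reward factors with weights that shift over training time."""
    w_abs, w_rel = factor_weights(t, t_equal, steepness)
    return w_abs * r_absolute + w_rel * r_relative
```

Early in training the relative factor dominates, at `t_equal` both factors contribute equally, and later the absolute factor dominates.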

In the reward function, the metrics are the four optimization indicators previously mentioned, calculated differently depending on whether the perspective is absolute or relative. For the absolute factor, the focus is on the absolute values of the optimization metrics and the changes before and after algorithm implementation. The formula is as follows:

Here, the formula combines the normalized step reward and the normalized global reward of each metric at each time step, weighted by the weight assigned to that metric, over the total number of iterations; a discount factor adjusts the weight of future rewards. The set of metrics comprises the four optimization indicators defined above.

For the relative factor, it is only necessary to compare the ranking of the hyper-heuristic algorithm against the other four standalone heuristic algorithms. The higher the ranking on a given metric, the greater the reward obtained. To encourage higher rankings, the relative reward is specified so that rewards increase exponentially with ranking.

Here, the relative reward depends on the ranking position of the algorithm, the total number of algorithms, and the raw reward value obtained through the optimization indicators associated with the absolute factor. Each metric has an assigned weight and ranking.
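The idea of rank-based rewards that grow exponentially toward the top position can be sketched as follows. The specific scaling, which maps the best rank to the full raw reward and the worst rank to zero, is an assumption chosen for illustration; the function name is hypothetical.

```python
import math


def relative_reward(raw_reward, rank, total):
    """Scale raw_reward by rank; rank 1 is best, total is the number of compared algorithms."""
    if total < 2 or not 1 <= rank <= total:
        raise ValueError("need total >= 2 and 1 <= rank <= total")
    # Exponential in the number of algorithms outperformed, rescaled to [0, 1].
    scale = (math.exp(total - rank) - 1.0) / (math.exp(total - 1) - 1.0)
    return raw_reward * scale
```

Under this scaling, the gap between first and second place is larger than the gap between second and third, so moving up near the top of the ranking is rewarded most strongly.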

After integrating both absolute and relative factors, the training of the algorithm focuses on surpassing the performance of individual underlying algorithms and striving for better solutions, thereby enhancing the overall performance while optimizing the four specified metrics.

- (7) Training strategy

Building upon the above description, this study further incorporates the following training strategies. First, a dynamic learning rate adjustment is used. Within the optimizer, the learning rate is updated after each epoch by a scheduler that follows an exponential decay strategy.

Here, the learning rate for the upcoming epoch is obtained from the learning rate of the current epoch and a decay rate (typically less than 1) that controls how quickly the learning rate decreases. By progressively reducing the learning rate, the algorithm can converge more effectively while limiting parameter fluctuations and overfitting in the later stages of training.
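The exponential decay schedule can be sketched in a few lines. This assumes the common multiplicative form, in which the learning rate of the next epoch equals the current rate times the decay rate; the function name and the example values are illustrative only.

```python
def exponential_lr_schedule(initial_lr, decay_rate, epochs):
    """Return the learning rate used in each epoch: lr_next = decay_rate * lr_current."""
    rates = []
    lr = initial_lr
    for _ in range(epochs):
        rates.append(lr)
        lr *= decay_rate  # applied once after every epoch
    return rates
```

In PyTorch, the same multiplicative rule is provided by `torch.optim.lr_scheduler.ExponentialLR`, whose `gamma` argument plays the role of the decay rate; whether that particular scheduler class was used in this study is not stated.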

Second, the Adam optimizer is employed for both the actor and critic networks. Its update rule involves the network parameters, the learning rate, the bias-corrected estimate of the first-order moment, the bias-corrected estimate of the second-order moment, and a small constant added to ensure numerical stability. The Adam optimizer adjusts the model parameters with an adaptive learning rate for each parameter.
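For reference, the standard Adam update can be written out for a single scalar parameter. The hyperparameter defaults below are the commonly used ones and are assumptions here, not values reported in this paper.

```python
import math


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    # Exponential moving averages of the gradient and the squared gradient.
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad * grad
    # Bias correction compensates for the zero initialization of m and v.
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v
```

On the first step the update magnitude is approximately the learning rate regardless of the gradient scale, which illustrates the per-parameter adaptive step size mentioned above.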

5. Experiment

To validate the effectiveness and performance of the proposed algorithm in real-world environments, this study designed several sets of experiments. First, the validity and training process of the model are analyzed by examining how the reward function values and the model's expected behavior change over the course of training. Second, the four operators at the base of the hyper-heuristic algorithm are integrated, and the effectiveness of the higher-level algorithm in selecting among them is assessed. Finally, the algorithm is compared with other heuristic algorithms and common algorithms to verify its comprehensive effectiveness on the relevant evaluation metrics.

5.1. Parameter and Environment Settings

Due to the unique nature of this problem, no standard datasets are available for testing. Therefore, the datasets used in this paper consist of task instances randomly generated according to actual engineering requirements. We define two parameters for the spacecraft mission objectives, each with a value range of (0,100), and the spacecraft’s initial position and state are set to default values. Based on the task sequence, the spacecraft then calculates and generates an optimized command sequence. The relevant parameters for the instances are defined in Table 5.

Table 5. Environment parameter settings.

In the proposed hyper-heuristic algorithm, the choice of parameters influences the algorithm’s performance. Based on multiple preliminary tests and on experience from related research, we determined the parameters for the high-level strategy of the hyper-heuristic algorithm and for the low-level operators. These parameters are shown in Table 6.

Table 6. Hyper-heuristic algorithm and low-level operator parameters.

In this study, all algorithms were coded using PyTorch 2.0.0 and Python 3.9.18 and run on a personal computer with an Intel(R) Core(TM) Ultra 5 125H processor (manufactured by Intel Corporation, Santa Clara, CA, USA) running at 1.2 GHz with 32 GB RAM for training and proof-of-principle testing. During the training process, CUDA and an NVIDIA GeForce RTX 4060 Laptop GPU (manufactured by NVIDIA Corporation, Santa Clara, CA, USA) were used for computational acceleration.

5.2. Model Training

In this study, the training scenario is as follows. Task parameters are defined based on a TOC command structure, where each task (TOC) represents a motion planning action for a gimbal system and is characterized by two key parameters: azimuth (horizontal direction, ranging from 0 to 180 degrees) and elevation (vertical direction, ranging from 0 to 180 degrees). Task instances are generated according to the rules in Table 4, producing between 30 and 70 TOCs, with parameters such as time window, resource consumption, and resource replenishment set randomly within reasonable ranges. For example, the time window is set to 0 or 1 to simulate task timing constraints, and resource consumption is uniformly distributed such that the total consumption does not exceed the resource limit. The environment simulation assumes that both the spacecraft and the target positions are randomly distributed within a two-dimensional space ranging from (0,0) to (100,100).
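The instance generation described above can be sketched as follows. The dictionary field names, the `resource_limit` value, and the way consumption is capped (each task draws at most an equal share of the limit) are illustrative assumptions; resource replenishment is omitted because its rule is not specified here.

```python
import random


def generate_instance(rng, resource_limit=100.0):
    """Generate one random task instance with 30 to 70 TOCs."""
    n_tasks = rng.randint(30, 70)
    # Capping each draw at an equal share keeps the total within the limit.
    max_cost = resource_limit / n_tasks
    tasks = []
    for _ in range(n_tasks):
        tasks.append({
            "azimuth": rng.uniform(0.0, 180.0),     # degrees, horizontal
            "elevation": rng.uniform(0.0, 180.0),   # degrees, vertical
            "time_window": rng.randint(0, 1),       # 0 or 1, timing constraint flag
            "consumption": rng.uniform(0.0, max_cost),
            "target": (rng.uniform(0.0, 100.0), rng.uniform(0.0, 100.0)),
        })
    spacecraft = (rng.uniform(0.0, 100.0), rng.uniform(0.0, 100.0))
    return {"spacecraft": spacecraft, "tasks": tasks}
```

Passing a seeded generator such as `random.Random(0)` makes the generated instances reproducible across training sessions.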

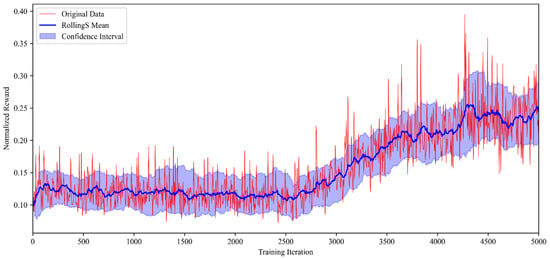

The training process of the model described in this study was based on CUDA and utilized the aforementioned GPU acceleration. During training, the algorithm’s reward function was defined in accordance with the earlier discussion on rewards, and continuous monitoring and recording were conducted. The study carried out 20 training sessions, each with a sufficient number of iterations. The trend graphs of the average, upper, and lower bounds of the reward function during these trainings are shown in Figure 5.

Figure 5. Change in reward function during reinforcement learning training.

Here, the red line represents the change in the reward function for one of the training sessions, the blue line represents the average reward across sessions, and the blue fill represents the sliding-window band between the highest and lowest reward values over the multiple training sessions described above. Each value on the red line is the reward obtained when the algorithm completes one planning run at the current number of iterations.

As the figure shows, the reward is low in the initial phase of training and then gradually increases as training proceeds. At the same time, the hyper-heuristic algorithm gradually outperforms each of the four underlying heuristics used alone and obtains higher scores. The reward values fluctuate slightly because the reward function itself changes during the training iterations, shifting the emphasis from outperforming the four individual algorithms toward global performance, although the reward ultimately stays within a bounded interval. Overall, the figure shows that the reward improves gradually, indicating that the reinforcement learning algorithm effectively improves the evaluation results on the relevant indicators and supporting the effectiveness of the algorithm.

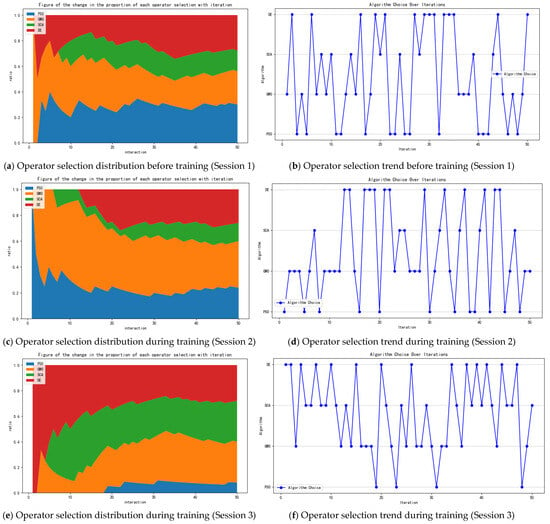

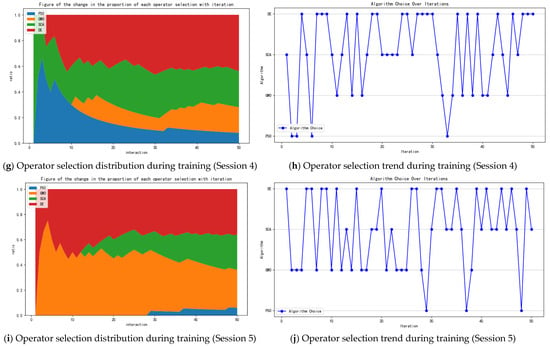

Meanwhile, the selection of the four underlying algorithms is recorded at each training step. Figure 6 shows line and stacked area plots of the proportion of times each operator was selected over the iteration cycle, both at the start of training and in four runs after model training ended.

Figure 6. Schematic line and stacked area plots of operator choices before (1 session) and after (4 sessions) training.

In Figure 6, the first two panels show the distribution of the model’s action selection before training: the choices tend to be random, and each action is selected with roughly equal frequency. The remaining eight panels show the model’s operator choices in four runs after multiple rounds of training. The choice of operators has become differentiated according to the problem. For example, in Figure 6c,d the model tends to use the grey wolf optimization (GWO) algorithm and the particle swarm optimization (PSO) algorithm to speed up the iterations at the beginning of the computation, while in Figure 6e–h it tends to use the differential evolution (DE) algorithm to iterate quickly. In Figure 6i,j, by contrast, GWO and DE are used to speed up the early iterations, and PSO is used a number of times in the later stages to try to obtain more solutions. This illustrates the algorithm’s ability to select suitable operators and achieve better results when faced with different particle states.