Generating Large-Scale Datasets for Spacecraft Pose Estimation via a High-Resolution Synthetic Image Renderer

Abstract

1. Introduction

- (1)

- A method for creating a labeled spacecraft pose estimation dataset is presented.

- (2)

- The proposed generated dataset method is based on RGB images, which tend to be used in vision-based spacecraft navigation operations.

- (3)

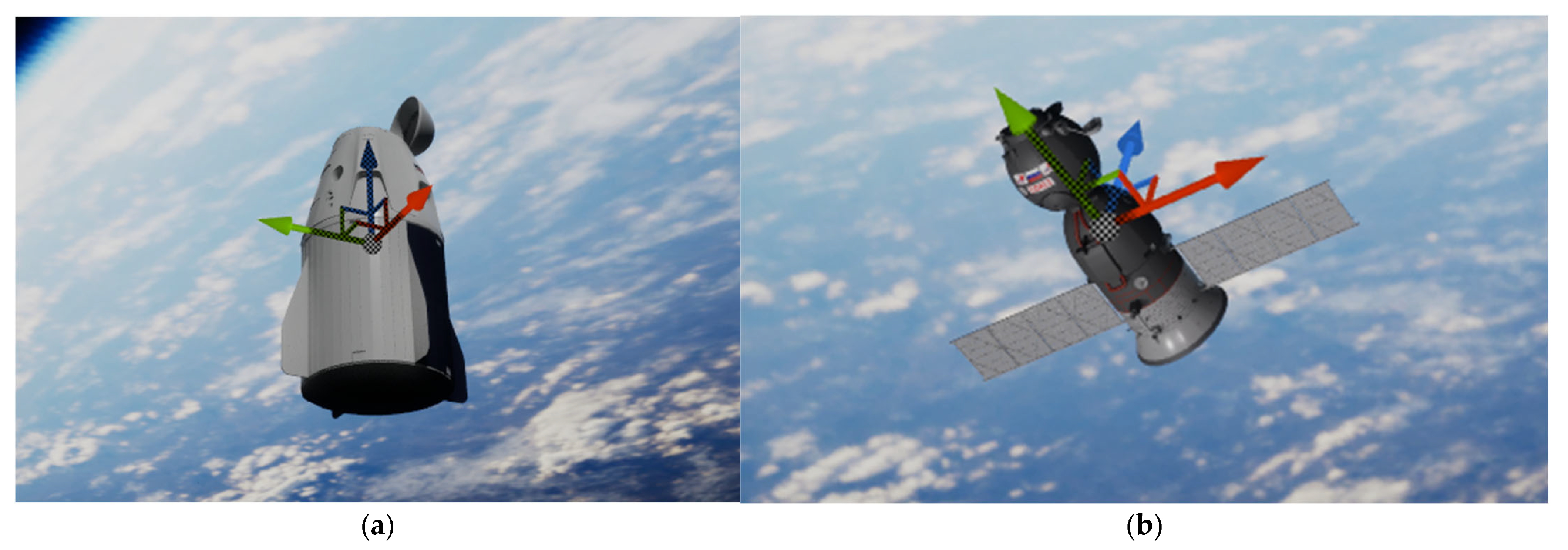

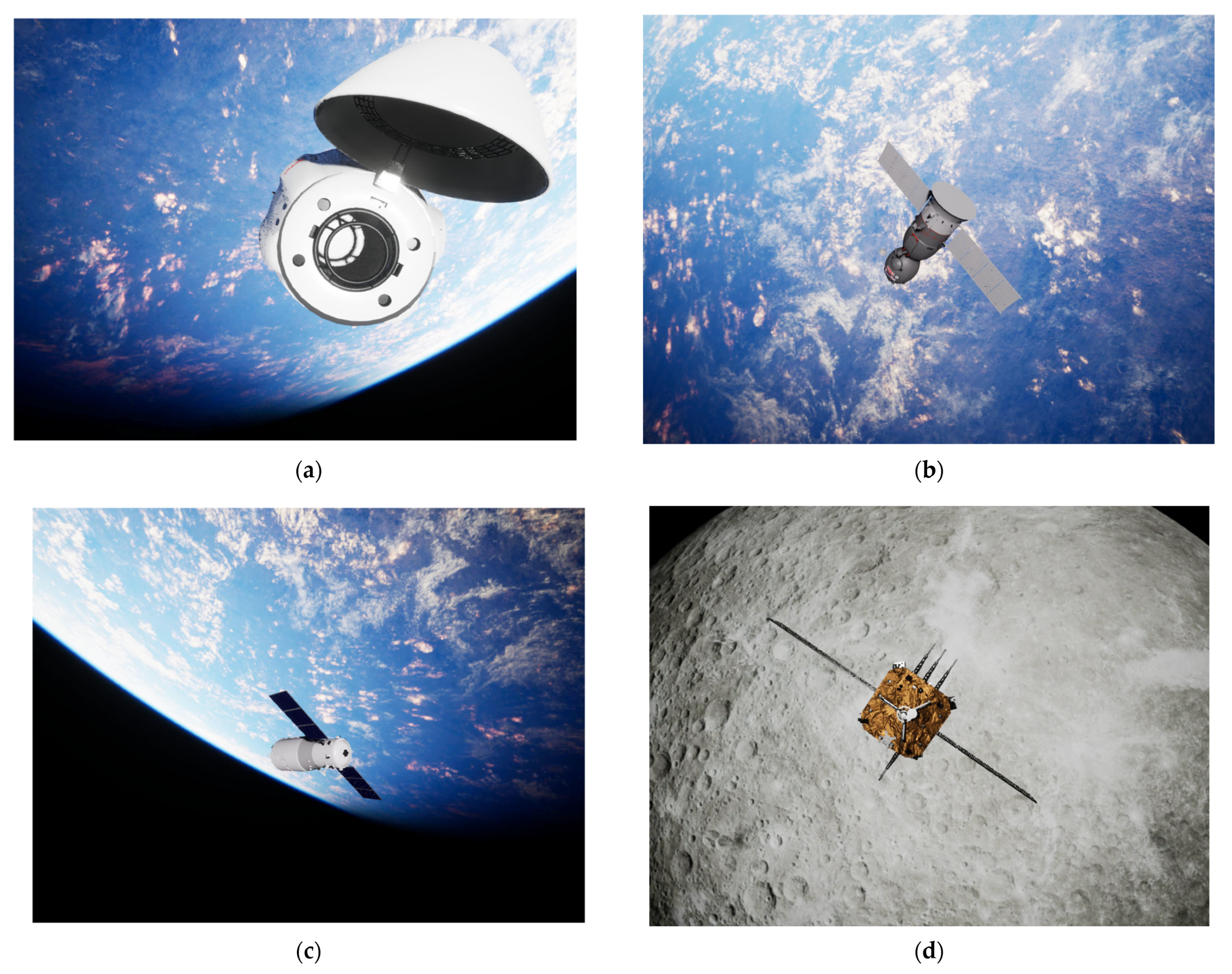

- The datasets generated from the four spacecraft, namely Dragon, Soyuz, Tianzhou, and ChangE-6, are publicly accessible.

2. Related Works

3. Methods

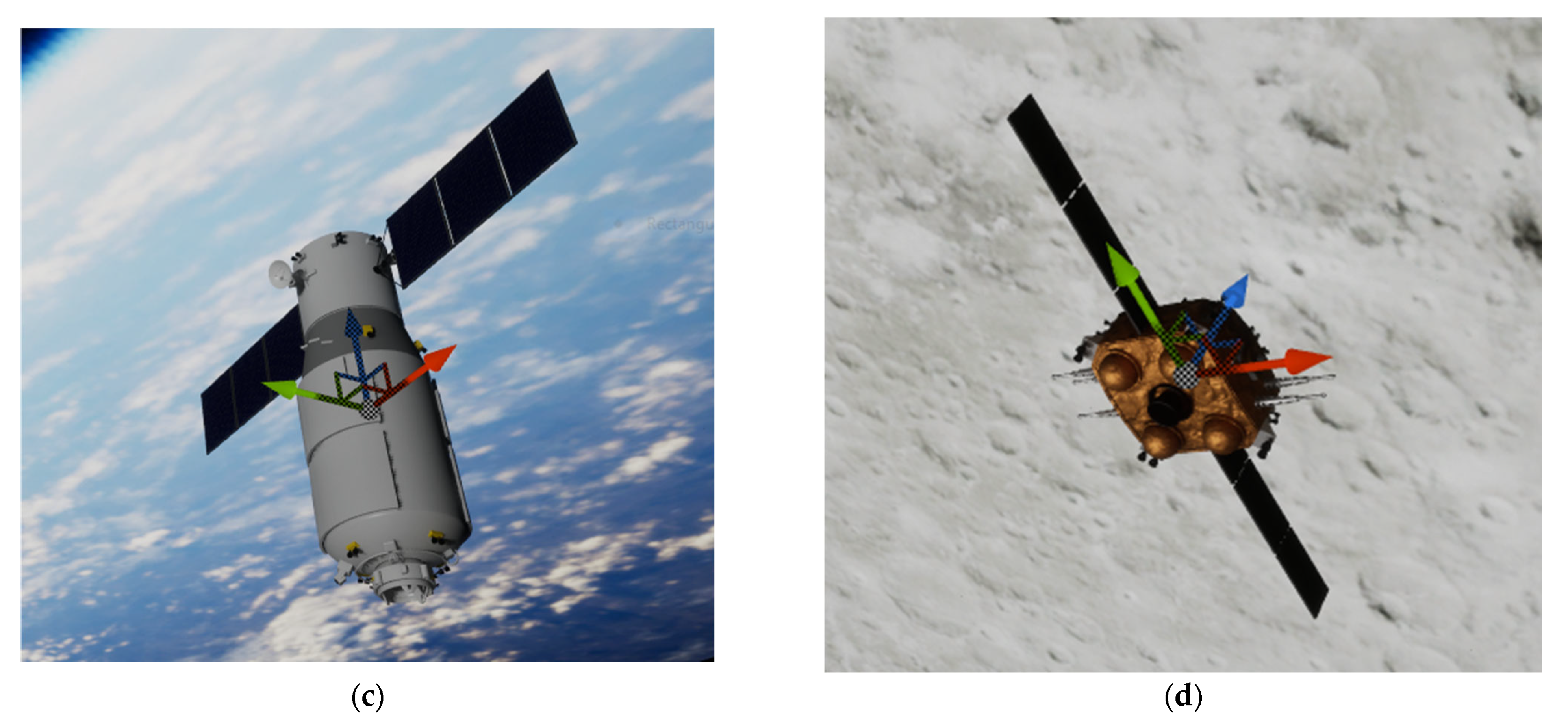

3.1. Raw Data Generation

3.1.1. Position Generation

3.1.2. Orientation Generation

| Algorithm 1: Pseudocode for the Raw Data Generation Process |
|---|
| Input: […] |
| Output: […] |
| […] = empty list |
| for […] do |
| […] = calculated from Equation (1) |
| […] = calculated from Equation (1) |
| […] = calculated from Equation (3) |
| […] = calculated from Equation (4) |
| […] = calculated from Equation (5) |
| […] = calculated from Equation (6) |
| […] as last element |
| end for |
| […] list |
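
The symbols and Equations (1) to (6) referenced in Algorithm 1 are not available in this copy, so the loop can only be illustrated under assumptions. The Python sketch below follows the same shape: start with an empty list, compute one pose per iteration, append it as the last element, and return the list. It assumes that the position is drawn as a camera-frame depth plus lateral offsets bounded by the field of view, and that the orientation is a unit quaternion drawn uniformly over rotations with Shoemake's method. The function names, the field-of-view half-angles, and the 5 m to 40 m depth range are illustrative choices, not the paper's equations.

```python
import math
import random


def random_unit_quaternion(rng):
    """Draw a unit quaternion (w, x, y, z) uniformly over 3D rotations (Shoemake's method)."""
    u1, u2, u3 = rng.random(), rng.random(), rng.random()
    a, b = math.sqrt(1.0 - u1), math.sqrt(u1)
    return (
        b * math.cos(2.0 * math.pi * u3),
        a * math.sin(2.0 * math.pi * u2),
        a * math.cos(2.0 * math.pi * u2),
        b * math.sin(2.0 * math.pi * u3),
    )


def random_position(rng, depth_min, depth_max, half_fov_h_deg, half_fov_v_deg):
    """Assumed sampling: uniform depth, then lateral offsets that stay inside the view cone."""
    depth = rng.uniform(depth_min, depth_max)
    max_x = depth * math.tan(math.radians(half_fov_h_deg))
    max_y = depth * math.tan(math.radians(half_fov_v_deg))
    return (rng.uniform(-max_x, max_x), rng.uniform(-max_y, max_y), depth)


def generate_raw_data(n_samples, seed=0, depth_min=5.0, depth_max=40.0,
                      half_fov_h_deg=20.0, half_fov_v_deg=15.0):
    """Build the raw pose list: one (position, quaternion) record per image."""
    rng = random.Random(seed)  # fixed seed so the label set is reproducible
    raw_data = []
    for _ in range(n_samples):
        position = random_position(rng, depth_min, depth_max,
                                   half_fov_h_deg, half_fov_v_deg)
        quaternion = random_unit_quaternion(rng)
        raw_data.append({"position": position, "quaternion": quaternion})
    return raw_data


raw = generate_raw_data(100, seed=1)
```

Sampling the orientation as a quaternion avoids the clustering near the poles that independent uniform Euler angles would produce, which is one reason this choice is often preferred for pose datasets.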

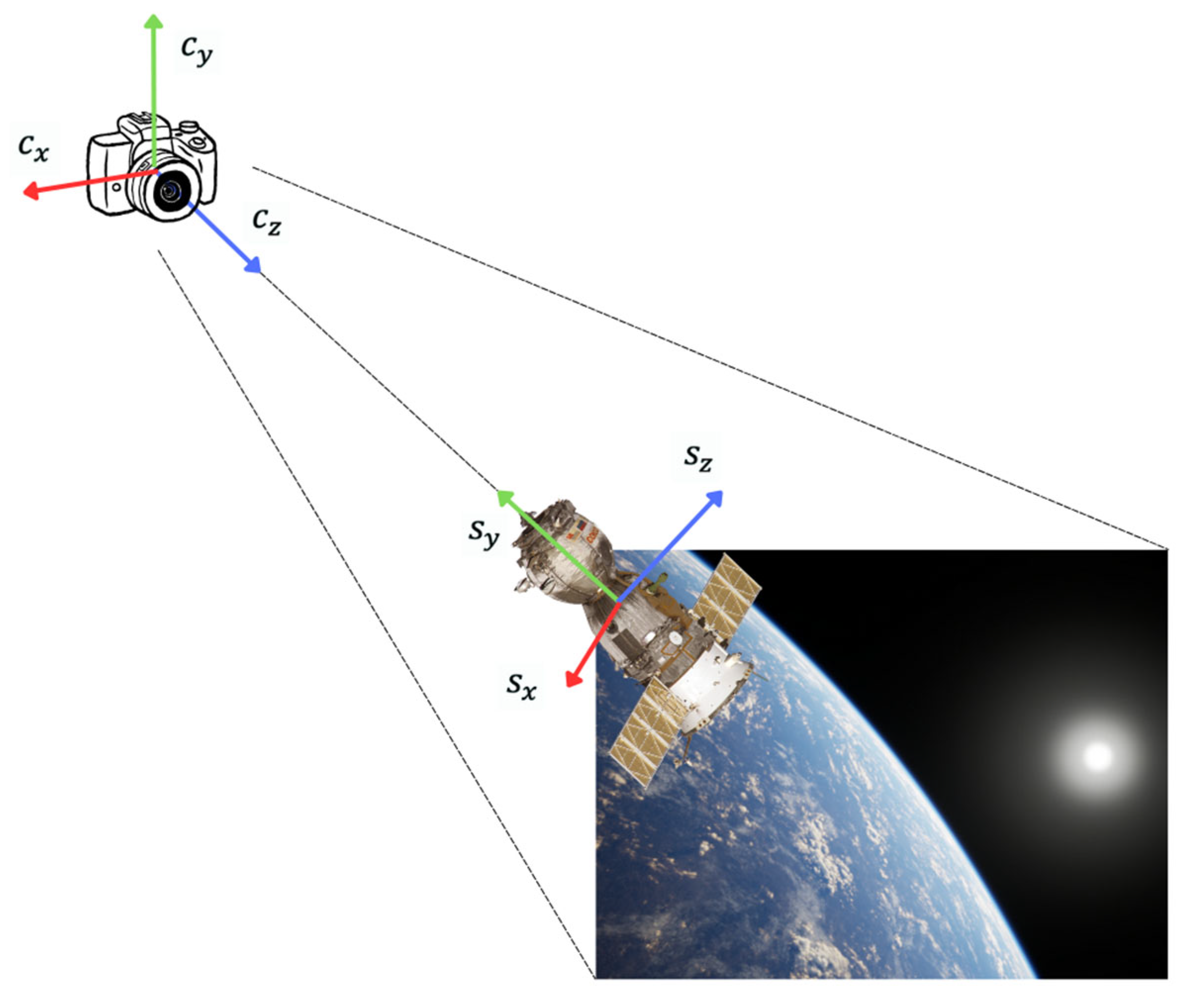

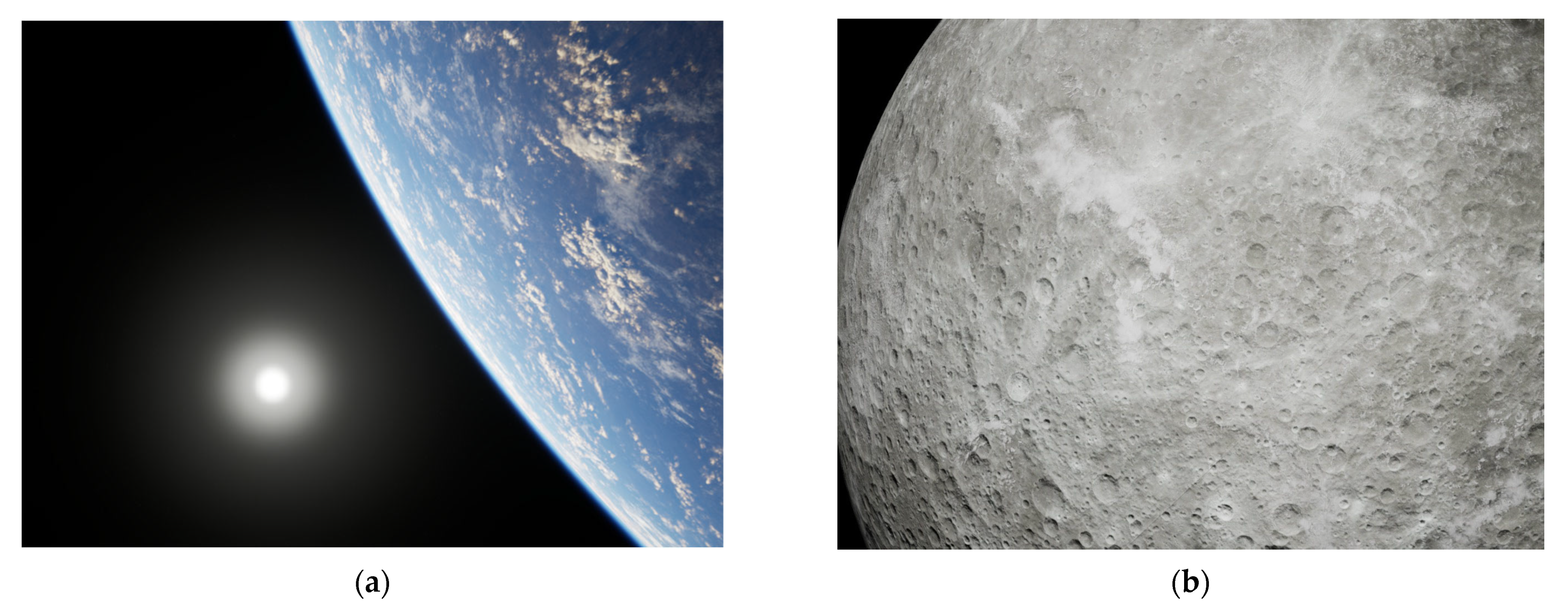

3.2. Background Image Generation

| Algorithm 2: Pseudocode for the Background Image Generation Process |
|---|
| Input: […], camera actor label |
| Output: background image files |
| Obtain camera actor from the label |
| Initialize the camera position to (x: 0, y: 0, z: 0) |
| for […] do |
| […]) |
| Take high-resolution screenshot from the camera view |
| end for |
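
The loop in Algorithm 2 can be sketched in Python without tying it to a particular engine API. The `StubCamera` class below is a hypothetical stand-in for the camera actor and only records what it is asked to do. The step inside the loop whose text is missing in this copy is assumed here to set a random camera rotation before each screenshot; the angle ranges and the file naming scheme are also assumptions.

```python
import random
from dataclasses import dataclass, field


@dataclass
class StubCamera:
    """Hypothetical stand-in for the engine's camera actor; it records calls only."""
    position: tuple = (0.0, 0.0, 0.0)
    rotation: tuple = (0.0, 0.0, 0.0)
    shots: list = field(default_factory=list)

    def set_rotation(self, pitch, yaw, roll):
        self.rotation = (pitch, yaw, roll)

    def take_screenshot(self, filename):
        # A real renderer would write an image file here.
        self.shots.append((filename, self.rotation))


def generate_backgrounds(camera, n_images, seed=0):
    """Render n_images background views from a camera fixed at the origin."""
    rng = random.Random(seed)
    camera.position = (0.0, 0.0, 0.0)  # camera stays at the origin, as in Algorithm 2
    files = []
    for i in range(n_images):
        # Assumed step: point the camera in a random direction.
        camera.set_rotation(rng.uniform(-90.0, 90.0),
                            rng.uniform(-180.0, 180.0),
                            rng.uniform(-180.0, 180.0))
        filename = f"background_{i:05d}.png"
        camera.take_screenshot(filename)
        files.append(filename)
    return files


camera = StubCamera()
background_files = generate_backgrounds(camera, 3)
```

Rendering the backgrounds once and reusing them later keeps the per-image cost of the main dataset loop low, since the environment does not have to be re-lit for every sample.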

3.3. Dataset Generation

| Algorithm 3: Pseudocode for the Dataset Generation Process |
|---|
| Input: Output of Algorithm 1, output of Algorithm 2, camera actor label, satellite actor label, background image plate actor label |
| Output: Dataset image files |
| Obtain camera actor from its label |
| Obtain satellite actor from its label |
| Obtain background image plate actor from its label |
| Initialize the camera position and orientation |
| for […] in raw data list do |
| […]) |
| […]) |
| Uniformly and randomly select the generated background image from Section 3.2 |
| Set the selected background image to the image plate actor |
| Take a high-resolution screenshot from the camera view |
| end for |
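
A minimal Python sketch of the Algorithm 3 loop is given below. `StubScene` is a hypothetical placeholder for the engine actors (satellite, image plate, camera) and only records requests. The two steps whose text is missing in this copy are assumed to apply the sampled position and orientation to the satellite actor; the record layout (`position`, `quaternion`) and the file names are illustrative as well.

```python
import random


class StubScene:
    """Hypothetical stand-in for the engine scene; it records requests only."""

    def __init__(self):
        self.satellite_pose = None
        self.background = None
        self.rendered = []

    def set_satellite_pose(self, position, quaternion):
        self.satellite_pose = (position, quaternion)

    def set_background(self, image_file):
        self.background = image_file

    def take_screenshot(self, filename):
        # A real renderer would write the image; here the scene state is logged.
        self.rendered.append((filename, self.satellite_pose, self.background))


def generate_dataset(scene, raw_data, background_files, seed=0):
    """Render one image per raw pose and return the matching label records."""
    rng = random.Random(seed)
    labels = []
    for i, sample in enumerate(raw_data):
        # Assumed steps: apply the sampled pose to the satellite actor.
        scene.set_satellite_pose(sample["position"], sample["quaternion"])
        # Uniform random choice among the pre-rendered backgrounds.
        scene.set_background(rng.choice(background_files))
        filename = f"image_{i:05d}.png"
        scene.take_screenshot(filename)
        labels.append({"file": filename, **sample})
    return labels


example_raw = [
    {"position": (0.0, 0.0, 10.0), "quaternion": (1.0, 0.0, 0.0, 0.0)},
    {"position": (1.0, -0.5, 25.0), "quaternion": (0.0, 1.0, 0.0, 0.0)},
]
scene = StubScene()
labels = generate_dataset(scene, example_raw, ["bg_a.png", "bg_b.png"])
```

Returning the labels alongside the file names keeps each image tied to the pose that produced it, so the label file can be written in one pass after rendering.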

4. Results

5. Conclusions

6. Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | Spacecraft | Range | Resolution | Tools |
|---|---|---|---|---|
| URSO | Dragon, Soyuz | 10 m–40 m | 1080 × 960 | Unreal Engine 4 |
| SPEED | Tango | 3 m–40.5 m | 1920 × 1200 | OpenGL |
| SPEED+ | Tango | 10 m | 1920 × 1200 | OpenGL |
| SwissCube | SwissCube | 0.1 m–1 m | 1024 × 1024 | Mitsuba 2 |

| Satellite Model | Background Scene | (m) | (m) | (m) | (m) | Number of Images |
|---|---|---|---|---|---|---|
| Dragon | Earth | 2.0 | 1.0 | 5.0 | 40.0 | 10,000 |
| Soyuz | Earth | 1.0 | 0.8 | 5.0 | 40.0 | 10,000 |
| Tianzhou | Earth | 2.0 | 1.0 | 7.0 | 40.0 | 10,000 |
| ChangE-6 | Moon | 2.0 | 1.0 | 5.0 | 40.0 | 10,000 |

| Dataset | Location Error (m) | Orientation Error (°) | Best Epoch (of 100) |
|---|---|---|---|
| Soyuz hard (URSO dataset) | 1.4701 | 21.1169 | 96 |
| Soyuz proposed dataset | 1.0671 | 11.3927 | 100 |
| Dragon proposed dataset | 0.9905 | 12.3303 | 91 |
| Tianzhou proposed dataset | 1.3729 | 15.7545 | 97 |
| ChangE-6 proposed dataset | 0.8886 | 11.9321 | 99 |
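
The table reports location and orientation errors, but the formulas behind them are not stated in this copy. One common convention, assumed here, takes the location error as the Euclidean distance between the true and predicted positions, and the orientation error as the rotation angle between the true and predicted unit quaternions, each averaged over the test set. The Python sketch below implements that convention; the function names and the record layout are illustrative.

```python
import math


def location_error(p_true, p_pred):
    """Euclidean distance between two 3D positions, in the positions' own units."""
    return math.dist(p_true, p_pred)


def orientation_error_deg(q_true, q_pred):
    """Rotation angle in degrees between two unit quaternions (w, x, y, z)."""
    dot = abs(sum(a * b for a, b in zip(q_true, q_pred)))  # abs: q and -q are the same rotation
    dot = min(1.0, dot)  # guard against rounding slightly above 1
    return math.degrees(2.0 * math.acos(dot))


def mean_errors(truth, predictions):
    """Average both errors over paired lists of {'position', 'quaternion'} records."""
    pairs = list(zip(truth, predictions))
    loc = sum(location_error(t["position"], p["position"]) for t, p in pairs)
    ori = sum(orientation_error_deg(t["quaternion"], p["quaternion"]) for t, p in pairs)
    return loc / len(pairs), ori / len(pairs)


# Example: a 90 degree rotation about the z-axis and a 5 m position offset.
half = math.sqrt(0.5)
truth = [{"position": (0.0, 0.0, 0.0), "quaternion": (1.0, 0.0, 0.0, 0.0)}]
preds = [{"position": (3.0, 4.0, 0.0), "quaternion": (half, 0.0, 0.0, half)}]
mean_loc, mean_ori = mean_errors(truth, preds)
```

Taking the absolute value of the quaternion dot product matters in practice: a network that outputs the negated quaternion has predicted the same attitude and should not be penalized for it.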


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hematulin, W.; Kamsing, P.; Phisannupawong, T.; Panyalert, T.; Manuthasna, S.; Torteeka, P.; Boonsrimuang, P. Generating Large-Scale Datasets for Spacecraft Pose Estimation via a High-Resolution Synthetic Image Renderer. Aerospace 2025, 12, 334. https://doi.org/10.3390/aerospace12040334
