1. Introduction

To meet the quality-of-service requirements of different types of space missions, the Consultative Committee for Space Data Systems (CCSDS) proposed the concept of Advanced Orbiting Systems (AOS) [1] and established a set of related protocol frameworks [2]. AOS divides data streams into virtual channels (VCs), allowing data with different service requirements from various space-based services to be transmitted over separate virtual channels and then aggregated into a single data stream for transmission over one physical channel. Traditional AOS employs a two-level multiplexing technique consisting of frame multiplexing and virtual channel multiplexing. The design of the multiplexing algorithm directly affects the key performance indicators of AOS channels, such as latency, residual frame quantity, and channel transmission efficiency [3,4]. As space missions become increasingly complex and diverse, the architecture of in-orbit satellites is shifting from the traditional single-satellite, independent, centralized mode to a multi-satellite, networked, collaborative, distributed mode [5]. In 2020, SpaceX's deployment of the low-Earth-orbit satellite constellation Starlink and the start of public beta services for its satellite network [6] marked the official entry of satellite broadband networks into the service application phase. In-orbit satellite networking has gained significant attention within the industry, and satellite internet technology based on the integrated space-ground network has become a research hotspot and a key development direction in the commercial satellite sector [7]. The explosive growth of space network services [8] presents new challenges for space link AOS multiplexing and scheduling. Network traffic differs significantly from traditional traffic in that it exhibits considerable randomness, volatility, and diversity. Additionally, the increase in network bandwidth [9] places higher demands on the multiplexing efficiency of space links. Therefore, when AOS carries this new type of space network traffic, traditional AOS multiplexing algorithms cannot achieve optimal multiplexing performance.

Traditional frame generation algorithms include isochronous frame generation algorithms [10], high-efficiency frame generation algorithms [11], and adaptive frame generation algorithms [12]. The isochronous frame generation algorithm ensures that data frames have strict periodicity and fixed delays but greatly reduces multiplexing efficiency. The high-efficiency frame generation algorithm waits until the data fill a frame before sending it to the channel, which gives a frame multiplexing efficiency of 1 but may cause significant delays when too little data arrive over a long period, and this may in turn lead to packet loss on delay-sensitive links. The adaptive frame generation algorithm balances frame multiplexing efficiency and transmission delay by setting a waiting time threshold but cannot adjust automatically under complex traffic conditions, which limits its practical effectiveness. In [13], a method for AOS isochronous frame generation based on self-similar traffic was proposed. It uses an ON/OFF source arrival model and the Pareto distribution to derive computable formulas for average packet delay and multiplexing efficiency, which helps to improve AOS multiplexing performance. However, it mainly targets specific types of self-similar traffic and does not address diverse network environments or the mixed transmission of different traffic types.
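The trade-off between the high-efficiency and adaptive strategies described above can be illustrated with a minimal Python sketch. It is not taken from any of the cited works: the class name `AdaptiveFramer`, its fields, and the event-driven interface (`on_packet`, `on_timer`) are hypothetical, and header overhead is ignored. A frame is emitted with efficiency 1 as soon as the payload is full, and a partly filled frame is flushed once the oldest buffered data have waited for the threshold.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class AdaptiveFramer:
    """Hypothetical adaptive framer: emit on full payload or on wait timeout."""

    frame_payload_bytes: int   # assumed fixed payload size of one frame
    wait_threshold_s: float    # assumed waiting-time threshold
    buffered: int = field(default=0, init=False)
    oldest_arrival: Optional[float] = field(default=None, init=False)

    def on_packet(self, now: float, size: int) -> List[float]:
        """Buffer a packet; return the efficiency of each frame emitted."""
        if self.buffered == 0:
            self.oldest_arrival = now
        self.buffered += size
        emitted = []
        while self.buffered >= self.frame_payload_bytes:
            self.buffered -= self.frame_payload_bytes
            emitted.append(1.0)  # a full frame has multiplexing efficiency 1
        if emitted:
            # Any leftover bytes belong to the packet that just arrived.
            self.oldest_arrival = now if self.buffered else None
        return emitted

    def on_timer(self, now: float) -> List[float]:
        """Flush a partly filled frame once the threshold has expired."""
        if self.buffered and now - self.oldest_arrival >= self.wait_threshold_s:
            efficiency = self.buffered / self.frame_payload_bytes
            self.buffered = 0
            self.oldest_arrival = None
            return [efficiency]
        return []
```

Setting the threshold to infinity reproduces the high-efficiency behaviour (only full frames), while a small threshold trades efficiency for delay.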

Traditional virtual channel multiplexing algorithms include first-come-first-served scheduling algorithms [14], time-slice polling scheduling algorithms [15], and dynamic priority scheduling algorithms [16,17]. These algorithms have three main limitations. First, they use the same multiplexing strategy for traffic with different characteristics, making it difficult to meet the quality-of-service requirements of different types of traffic. Second, they usually consider only a single parameter for channel multiplexing. As channel characteristics become more complex, problems such as delay and frame residue caused by this incomplete parameter design become increasingly apparent. Finally, they lack effective time slot management strategies and allocate scheduling time slots in a fixed manner, making it difficult to achieve high multiplexing efficiency when the characteristics of the source traffic are unknown. In [18], a new AOS virtual channel scheduling algorithm based on frame urgency was proposed. It treats service priority, scheduling delay urgency, and frame residue urgency as the key factors affecting the scheduling order of virtual channels, and it jointly considers the priority of asynchronous data, the isochronous nature of synchronous data, and the urgency of VIP data. In [19], a cross-layer optimized polling weight scheduling method for AOS multiplexing was proposed. It establishes a cross-layer optimization model that combines channel state information at the physical layer with queue state information at the link layer and optimizes the polling weight allocation of virtual channels to achieve more effective resource allocation and scheduling. In recent years, researchers have also studied virtual channel scheduling based on intelligent algorithms such as ant colony algorithms [20], genetic algorithms [21,22], and machine learning [23,24]. In [22], the authors established an AOS hybrid scheduling model and proposed an asynchronous virtual channel algorithm based on genetic-particle swarm sorting. This algorithm combines the evolutionary operators of genetic algorithms with the search capabilities of particle swarm algorithms and establishes a fitness function that considers service priority, scheduling delay urgency, and frame residue urgency to optimize the scheduling order of asynchronous virtual channels. Reference [24] combines AOS multiplexing technology with the demands of Industry 5.0 and proposes an AOS adaptive framing algorithm based on optimized thresholds. This algorithm adaptively adjusts the frame waiting time according to packet arrivals and uses a differential evolution algorithm to optimize the frame waiting time thresholds. It also proposes an AOS virtual channel scheduling algorithm based on a deep Q-network (DQN), which considers service priority, scheduling delay, and frame residue to find the optimal virtual channel scheduling order. Intelligent algorithms can optimize AOS scheduling performance to some extent, but their tendency to fall into local optima, together with high algorithm complexity, high resource consumption, and poor real-time performance, makes practical application and engineering implementation difficult.

As network traffic becomes more complex, using traffic prediction to optimize AOS algorithms offers clear advantages. In [25], an intelligent optimization threshold algorithm based on traffic prediction is proposed. A wavelet neural network is used to predict self-similar traffic, and a comprehensive evaluation function is optimized by the artificial fish swarm algorithm to dynamically determine the optimal threshold and improve system performance. In [26], an adaptive frame generation algorithm based on wavelet neural network traffic prediction is proposed. The network parameters are optimized by a genetic algorithm, and the framing time is adjusted according to the prediction results to optimize the frame multiplexing efficiency. These studies show that wavelet neural networks can achieve high traffic prediction accuracy and can be applied effectively in AOS algorithms. However, they optimize only the frame generation algorithm and do not consider joint optimization with the virtual channel scheduling algorithm.

To address the performance issues faced by AOS multiplexing under space network traffic, this paper proposes an efficient network AOS comprehensive multiplexing algorithm based on elastic time slots. First, space network traffic is categorized into synchronous traffic, asynchronous real-time traffic, and asynchronous non-real-time traffic according to its characteristics. A comprehensive multiplexing model with strong scalability is established based on the packet multiplexing layer, virtual channel multiplexing layer, and decision layer. Second, independent multiplexing algorithms are designed for each traffic type to accommodate its specific traffic characteristics, thereby meeting the quality-of-service requirements of different types of traffic. Special attention is given to asynchronous real-time services, which exhibit strong randomness and have demanding latency and efficiency requirements. The traffic prediction algorithm in Reference [26] is adopted. In the packet multiplexing layer, the multiplexing delay is optimized based on traffic prediction. In the virtual channel multiplexing layer, scheduling decisions are made from the virtual channel scheduling status, transmission frame scheduling status, virtual channel priority status, and traffic prediction status, which enables the scheduling algorithm to adjust its decisions proactively before traffic arrives. Finally, an AOS comprehensive scheduling strategy based on elastic time slots is proposed. Starting with an initial allocation of time slots, the three traffic types can dynamically preempt time slots based on the current slot occupancy and service request conditions. The boundaries of service time slots are flexible, allowing the multiplexing algorithm to efficiently adapt to dynamic changes in the channel, thereby improving the overall multiplexing efficiency.

2. Efficient Network AOS Comprehensive Multiplexing Model

Spacecraft network traffic can be categorized into three types based on its different characteristics, as follows: synchronous traffic, asynchronous real-time traffic, and asynchronous non-real-time traffic. Synchronous traffic data refers to data that are essential for maintaining the basic operation of the spacecraft and determine the success or failure of the mission. This includes data such as remote control, telemetry, mission management, and astronaut health monitoring data. These data typically have low transmission rates and exhibit periodic synchronization characteristics. They have strict requirements for data transmission reliability and arrival latency. Asynchronous real-time service data consist of real-time platform and payload data generated during spacecraft operations, including voice, image, crew, and payload data. These data streams often exhibit significant randomness and have higher demands for transmission reliability and low latency. Asynchronous non-real-time service data pertain to non-real-time data generated by spacecraft operations, such as space experiment data, delayed payload data, and delayed telemetry data. These data typically have higher transmission rates and more relaxed latency requirements but have stricter demands for transmission efficiency and low packet loss rates.

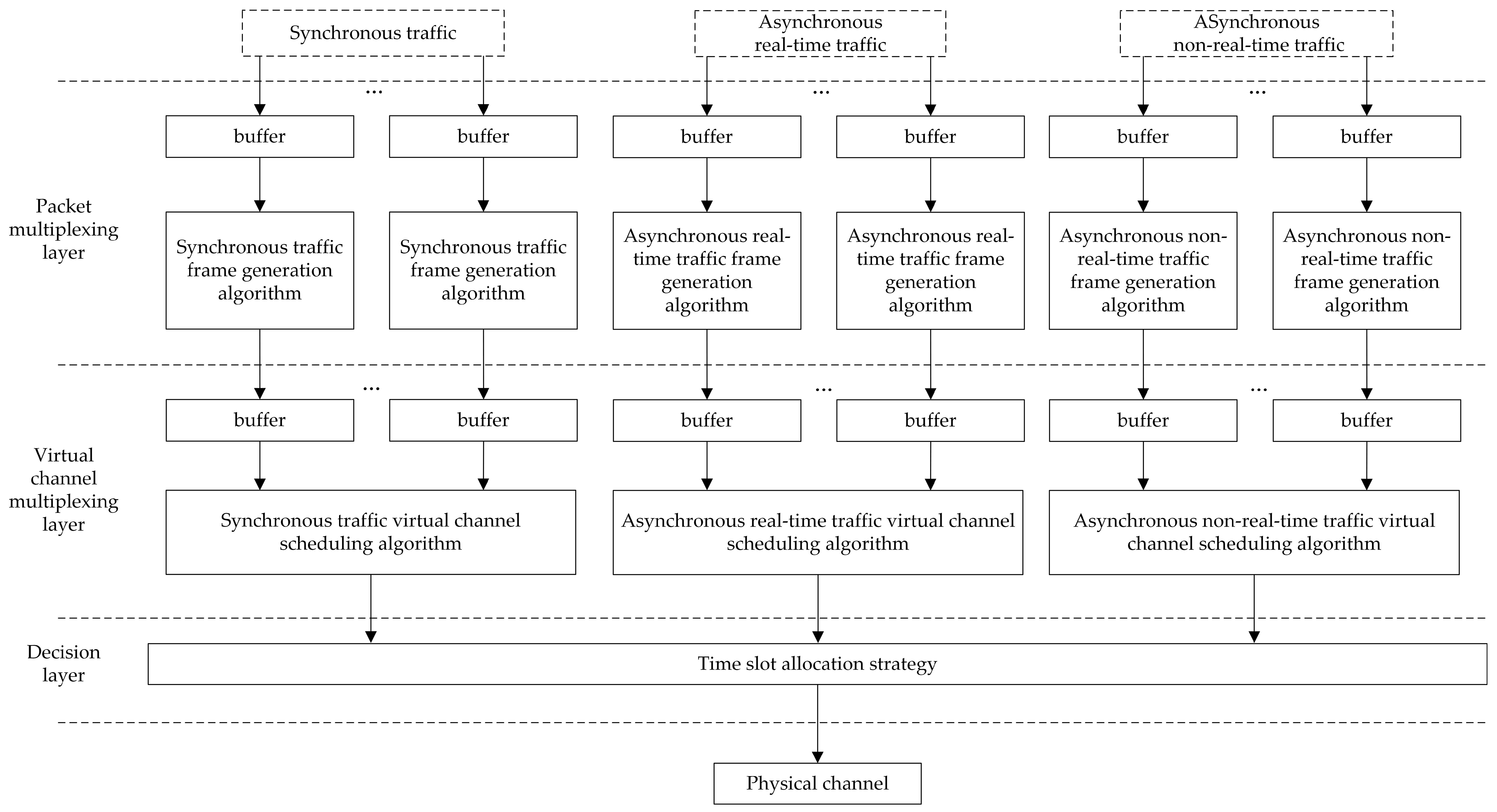

To enhance the flexibility and scalability of the multiplexing model, a hierarchical architecture is employed for the model design. Additionally, a decision layer is introduced on top of the traditional multiplexing architecture, as shown in Figure 1.

The model is divided into the following three layers: the packet multiplexing layer, the virtual channel multiplexing layer, and the decision layer. The packet multiplexing layer concatenates and segments data packets sent by different users, which have different formats but the same service requirements, into fixed-length protocol data transmission frames. These frames are then transmitted over the same virtual channel. The virtual channel multiplexing layer schedules the input transmission frames with different service requirements according to a specific algorithm, enabling data from different virtual channels to be transmitted over the same physical channel. The decision layer allocates virtual channel scheduling slots to the different service types and makes comprehensive scheduling decisions across the different types of virtual channels to achieve optimal multiplexing performance.

In this model, one buffer is allocated for each virtual channel at both the packet multiplexing layer and the virtual channel multiplexing layer. The packet multiplexing layer buffer temporarily stores data before frame generation. For synchronous services, its capacity is determined by the maximum amount of data arriving in the virtual channel within two framing intervals. For asynchronous services, its capacity is determined by the maximum amount of framed data in the framing algorithm. The virtual channel multiplexing layer buffer temporarily stores data before virtual channel scheduling. Synchronous services have no bursts, a low rate, and the highest scheduling priority, so their buffer requirement is low, and a capacity of up to ten frames is usually sufficient. For asynchronous real-time services, the burst data volume of each virtual channel is the main consideration: the buffer must be able to hold one burst, plus a certain margin. Asynchronous non-real-time services have the lowest overall priority and a large data volume, so the buffer must hold one burst as well as the data that accumulate while waiting for other virtual channels to be served, and a larger capacity is therefore usually required. Because the AOS multiplexing and scheduling process is complex, the buffer capacities chosen by theoretical analysis can be further refined through simulation and practical debugging.

In the model, virtual channel scheduling adopts a regularly spaced scheduling cycle, with a fixed number K of scheduling slots in each scheduling cycle. The value of K should be greater than the number of virtual channels, and each time slot transmits one channel transmission frame. Since AOS uses a fixed transmission frame length, the total amount of data transmitted in one transmission frame time slot and the time the slot occupies are both fixed values. The amount of data per slot is determined by the number of bytes in the transmission frame specified by the AOS protocol, and the slot duration is determined by that amount of data and the AOS processing speed. The virtual channel scheduling algorithm makes scheduling decisions at the beginning of each scheduling slot. Each scheduling moment is therefore identified by the current scheduling cycle and the scheduling slot k within that cycle (where k takes values from 0, 1, …, K − 1).
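The slot timing described above can be made concrete with a short Python sketch. The function names, the zero-based cycle index, the time origin at the first slot, and the use of a link rate in bits per second are all assumptions introduced for illustration. The source only states that the slot duration follows from the frame size and the AOS processing speed.

```python
def slot_duration_s(frame_bytes: int, link_rate_bps: float) -> float:
    """Time occupied by one fixed-length transmission frame (assumed model)."""
    if frame_bytes <= 0 or link_rate_bps <= 0:
        raise ValueError("frame size and link rate must be positive")
    return frame_bytes * 8 / link_rate_bps


def scheduling_moment_s(cycle: int, slot: int, slots_per_cycle: int,
                        slot_s: float) -> float:
    """Start time of slot `slot` (0 .. K-1) in scheduling cycle `cycle`.

    Assumes cycles are numbered from 0 and the first slot starts at t = 0.
    """
    if not 0 <= slot < slots_per_cycle:
        raise ValueError("slot index must lie in 0 .. K-1")
    return (cycle * slots_per_cycle + slot) * slot_s
```

For example, with K = 16 slots of 0.5 s each, slot 3 of cycle 2 would start at (2 × 16 + 3) × 0.5 = 17.5 s under these assumptions.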

4. AOS Elastic Slot Scheduling Strategy

To accommodate the scheduling requirements of synchronous data with isochronous period constraints, asynchronous real-time data with priority and delay requirements, and asynchronous non-real-time data with fairness considerations, and to improve scheduling efficiency, this paper proposes an AOS elastic slot scheduling strategy.

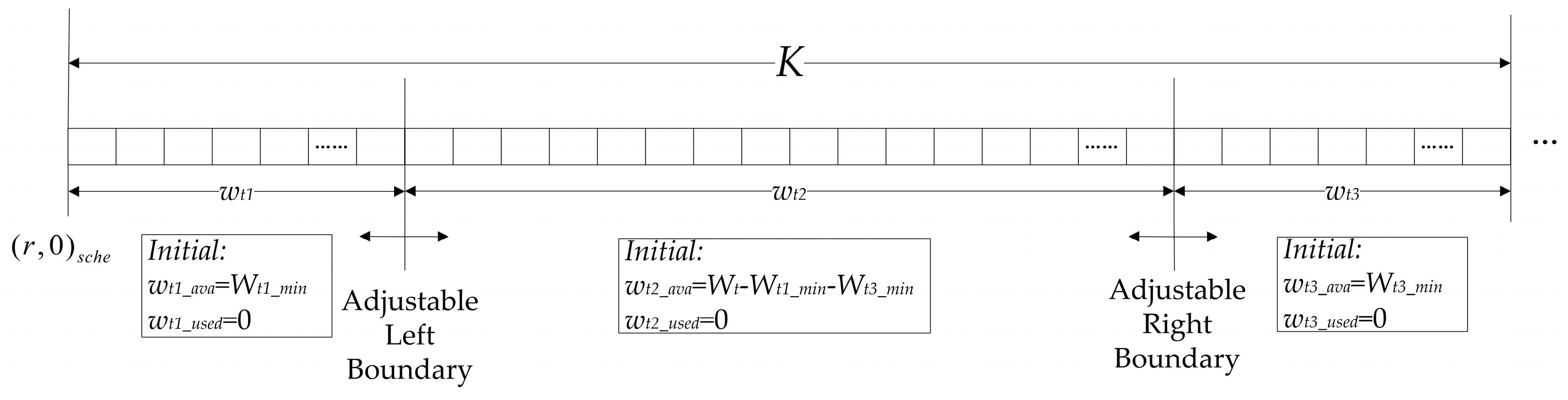

The scheduling slots within the VC scheduling cycle are divided into three portions: one is dedicated to synchronous VC transmissions, another is allocated to asynchronous non-real-time VC transmissions, and the remaining portion is assigned to asynchronous real-time VC transmissions. At any given scheduling moment, each of the three traffic types has a total number of assigned slots, a number of available slots, and a number of used slots. For each traffic type, the total number of assigned slots equals the sum of its available and used slot counts.

The total number of slots in each scheduling cycle is K. To ensure fairness, synchronous VCs and asynchronous non-real-time VCs are each guaranteed a minimum number of slots. The initial slot allocation and initial values are set as shown in Figure 2.

From the figure, it can be observed that after the allocation of slots for the three types of data, there are two slot boundaries on the left and right. During the scheduling process, based on the initial slot allocation, the slots can be dynamically adjusted according to the data request status and the current slot allocation situation, allowing for mutual preemption of the scheduling slots by the three types of data. Through the elastic preemption mechanism, the slot boundaries of the three types of data dynamically shift as the available and used slot numbers are updated, thereby adapting to the actual channel conditions and achieving a better overall scheduling effect.
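The boundary shift described in this paragraph can be sketched in Python as a transfer of one unused slot between two traffic types. This is an illustrative reading, not the paper's slot adjustment formula: the `SlotAccount` class, the `preempt` function, the bookkeeping rule that available slots equal total minus used slots, and the way the guaranteed minimum is enforced are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class SlotAccount:
    """Hypothetical per-traffic-type slot bookkeeping for one cycle."""

    total: int        # slots currently assigned to this traffic type
    used: int = 0     # slots already consumed in the current cycle
    minimum: int = 0  # guaranteed floor (assumed 0 when no floor applies)

    @property
    def available(self) -> int:
        # Assumed relationship: total = available + used.
        return self.total - self.used


def preempt(taker: SlotAccount, donor: SlotAccount) -> bool:
    """Move one unused slot from `donor` to `taker`; return True on success."""
    if donor.available <= 0 or donor.total <= donor.minimum:
        return False  # nothing to give, or the donor's floor would be violated
    donor.total -= 1
    taker.total += 1
    return True
```

Because a transfer only moves a slot from one account to another, the sum of the three totals stays equal to the number of slots per cycle, which is what allows the boundaries to shift without changing the cycle length.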

In the scheduling process, when a VC of one traffic type preempts a slot belonging to a VC of another traffic type, the slot counts of both types are adjusted dynamically according to Formula (18).

At any scheduling moment, each of the three traffic types has a transmission request state, with a value of 1 indicating a request and 0 indicating no request. The pseudocode for the fixed time slot scheduling algorithm and the elastic time slot scheduling algorithm is shown in Algorithm 1 and Algorithm 2, respectively.

| Algorithm 1: Fixed Time Slot Scheduling Algorithm |
| --- |
| 01: Input: For the three types of traffic, the number of available time slots , and the transmission request states . |
| 02: Output: Scheduling results for three types of traffic. |
| 03: if ( 1 && 0) then |
| 04: Schedule synchronous traffic. |
| 05: else if ( == 1 && 0) then |
| 06: Schedule asynchronous real-time traffic. |
| 07: else if ( == 1 && 0) then |
| 08: Schedule asynchronous non-real-time traffic. |
| 09: else then |
| 10: Send padding frame. |
| 11: end if |
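A minimal Python sketch of the fixed time slot decision follows. It assumes that each branch of Algorithm 1 tests the request state of a traffic type together with a non-zero count of available slots, in the order synchronous, asynchronous real-time, asynchronous non-real-time. The function name, the dictionary keys, and the decrement of the available count after scheduling are hypothetical details added for illustration.

```python
from typing import Dict

# Assumed fixed priority order of the three traffic types.
TRAFFIC_ORDER = ("sync", "async_rt", "async_nrt")


def fixed_slot_schedule(available: Dict[str, int],
                        request: Dict[str, bool]) -> str:
    """Pick the traffic type served in the current slot, or 'padding'.

    `available` is updated in place: the chosen type loses one slot
    (an assumed bookkeeping step, not stated in the pseudocode).
    """
    for kind in TRAFFIC_ORDER:
        if request[kind] and available[kind] > 0:
            available[kind] -= 1
            return kind
    return "padding"  # no type can be served, so a padding frame is sent
```

A type that has a pending request but no slots left is simply skipped here, which is the situation the elastic strategy is designed to handle through preemption.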

| Algorithm 2: Elastic Time Slot Scheduling Algorithm |
| --- |
| 01: Input: For the three types of traffic, the number of available time slots , the number of used time slots , and the transmission request states . |
| 02: Output: The total number of time slots dynamically allocated for three types of traffic . |
| 03: if ( == 1 && == 0) then |
| 04: if ( < ) then |
| 05: Synchronous services preempt asynchronous non-real-time services’ time slots, calculated according to Formula (18) ( = 3). |
| 06: else if ( < ) then |
| 07: if ( == 0 && == 0) then |
| 08: Synchronous services preempt asynchronous real-time services’ time slots, calculated according to Formula (18) ( = 2). |
| 09: end if |
| 10: else then |
| 11: if ( == 0) then |
| 12: Synchronous services preempt asynchronous real-time services’ time slots, calculated according to Formula (18) ( = 2). |
| 13: end if |
| 14: end if |
| 15: else if ( == 1 && == 0) then |
| 16: if ( > ) then |
| 17: Asynchronous real-time services preempt synchronous services’ time slots, calculated according to Formula (18) ( = 1). |
| 18: else then |
| 19: Asynchronous real-time services preempt asynchronous non-real-time services’ time slots, calculated according to Formula (18) ( = 1). |
| 20: end if |
| 21: else if ( == 1 && == 0) then |
| 22: if ( < ) then |
| 23: Asynchronous non-real-time services preempt synchronous services’ time slots, calculated according to Formula (18) ( = 1). |
| 24: else then |
| 25: Asynchronous non-real-time services preempt asynchronous real-time services’ time slots, calculated according to Formula (18) ( = 2). |
| 26: end if |
| 27: else if ( == 1 && 0) then |
| 28: Schedule synchronous traffic. |
| 29: else if ( == 1 && 0) then |
| 30: Schedule asynchronous real-time traffic. |
| 31: else if ( == 1 && 0) then |
| 32: Schedule asynchronous non-real-time traffic. |
| 33: else then |
| 34: Send padding frame. |
| 35: end if |

5. Simulation and Analysis

5.1. Simulation Parameters and Algorithms

The simulation parameters are set as follows:

Simulation time T = 300 s;

Processing speed ranges from 0.1 × 10³ to 1.6 × 10³ frames/s, covering the data source speed range;

The average traffic prediction accuracy is 10%;

Three types of data sources are simulated: synchronous traffic, asynchronous real-time traffic, and asynchronous non-real-time traffic, with a total of 10 VCs. The detailed data source settings are shown in Table 1.

When setting up the data source, two working conditions were selected based on the spacecraft’s traffic fluctuation: the normal condition and the stressed condition. In the normal condition, synchronous traffic uses a uniform distribution, asynchronous real-time traffic uses a Poisson distribution, and asynchronous non-real-time traffic uses a uniform distribution, with the average variance of overall traffic fluctuation being 0.428. In the stressed condition, synchronous traffic uses a Poisson distribution, asynchronous real-time traffic uses actual terrestrial internet traffic datasets, and asynchronous non-real-time traffic uses a Poisson distribution, with the average variance of overall traffic fluctuation being 4.76. The actual dataset used is from the MAWI Working Group Traffic Archive [27], which primarily originates from actual internet traffic sampling points on the WIDE backbone network, and its traffic fluctuation characteristics are inevitably more complex than those of spacecraft network traffic. We use a 15-min-long packet trace taken on 18 October 2024, at 14:00 JST, containing about 248 million IPv4 packets. Four types of actual traffic data were selected, and the data were scaled proportionally to match the average rate of the data to be simulated, with the scaled traffic data serving as the data source.
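The proportional scaling step mentioned above can be sketched as follows. This is a minimal illustration, assuming the trace has already been reduced to a list of per-interval rates. The function name and parameters are hypothetical, and the actual preprocessing of the MAWI trace is not described in the source.

```python
from typing import List


def scale_to_mean_rate(trace: List[float], target_mean: float) -> List[float]:
    """Scale a rate series so that its average equals `target_mean`."""
    if not trace:
        raise ValueError("trace must not be empty")
    mean = sum(trace) / len(trace)
    if mean <= 0:
        raise ValueError("trace must have a positive mean rate")
    factor = target_mean / mean
    return [rate * factor for rate in trace]
```

Multiplying every sample by the same factor changes the average rate but leaves the relative fluctuation (the ratio of standard deviation to mean) unchanged, so the burst structure of the real traffic is preserved.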

In this simulation, the following three algorithms are selected for comparison: the traditional AOS multiplexing algorithm (TAMA), the fixed-time-slot-based AOS integrated multiplexing algorithm (FAMA), and the elastic-time-slot-based efficient AOS integrated multiplexing algorithm (EAMA). The detailed configurations of these three algorithms are presented in Table 2.

5.2. Average Scheduling Delay

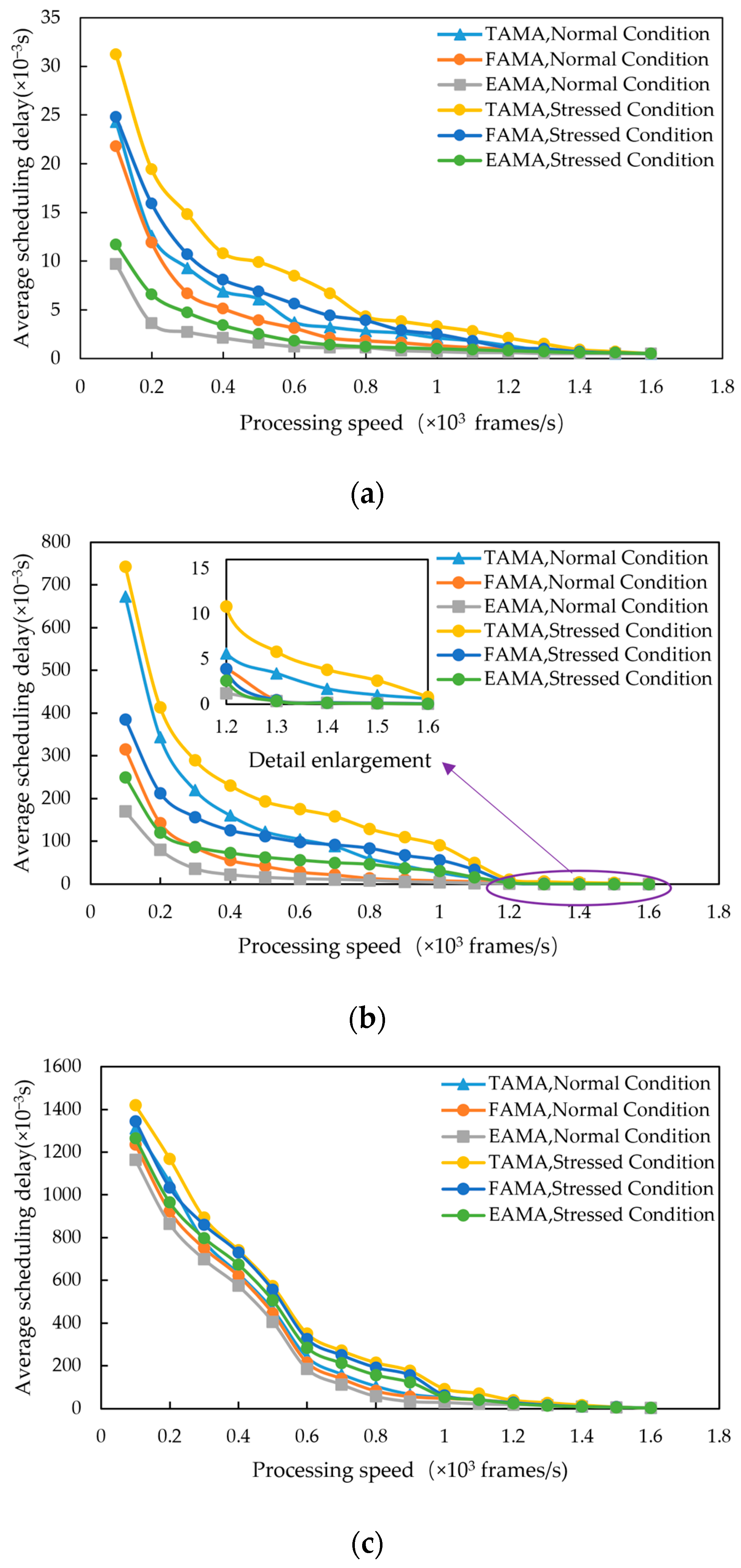

The simulation results of the average scheduling delay for the three types of traffic under the three algorithms are shown in Figure 3a–c.

As can be seen from the figure, for synchronous traffic, EAMA exhibits the lowest delay under both the normal condition and the stressed condition. This is because TAMA and FAMA lack a slot preemption mechanism. When periodic messages arrive and no slot is available, they must wait for the next available slot. In contrast, EAMA preempts scheduling slots when periodic messages arrive, which lowers the delay of these critical messages.

For asynchronous real-time traffic, it can be observed that when the processing speed is lower than the network load, EAMA shows a significant performance advantage under both the normal condition and the stressed condition. For example, when the processing speed is 0.5 × 10³ frames/s, EAMA reduces the delay by 67.6% compared to TAMA, and by 44% compared to FAMA under the stressed condition. This is because EAMA, by integrating channel scheduling status information, avoids the problems of unfair scheduling, slow response to sudden traffic changes, and large scheduling time jitter caused by the traditional algorithm, thus reducing the average VC scheduling delay. When the processing speed exceeds the network load, the delays for both EAMA and TAMA further decrease. This is because EAMA and TAMA predict the traffic volume and estimate the channel capacity during the scheduling period. When there is available capacity within the scheduling period, they reduce the frame generation algorithm’s efficiency to lower the data delay.

For asynchronous non-real-time traffic, it can be seen that the delays of all three algorithms are generally similar under both the normal condition and the stressed condition. However, EAMA has a slightly lower average delay due to its slot preemption capability.

5.3. Maximum Frame Remaining

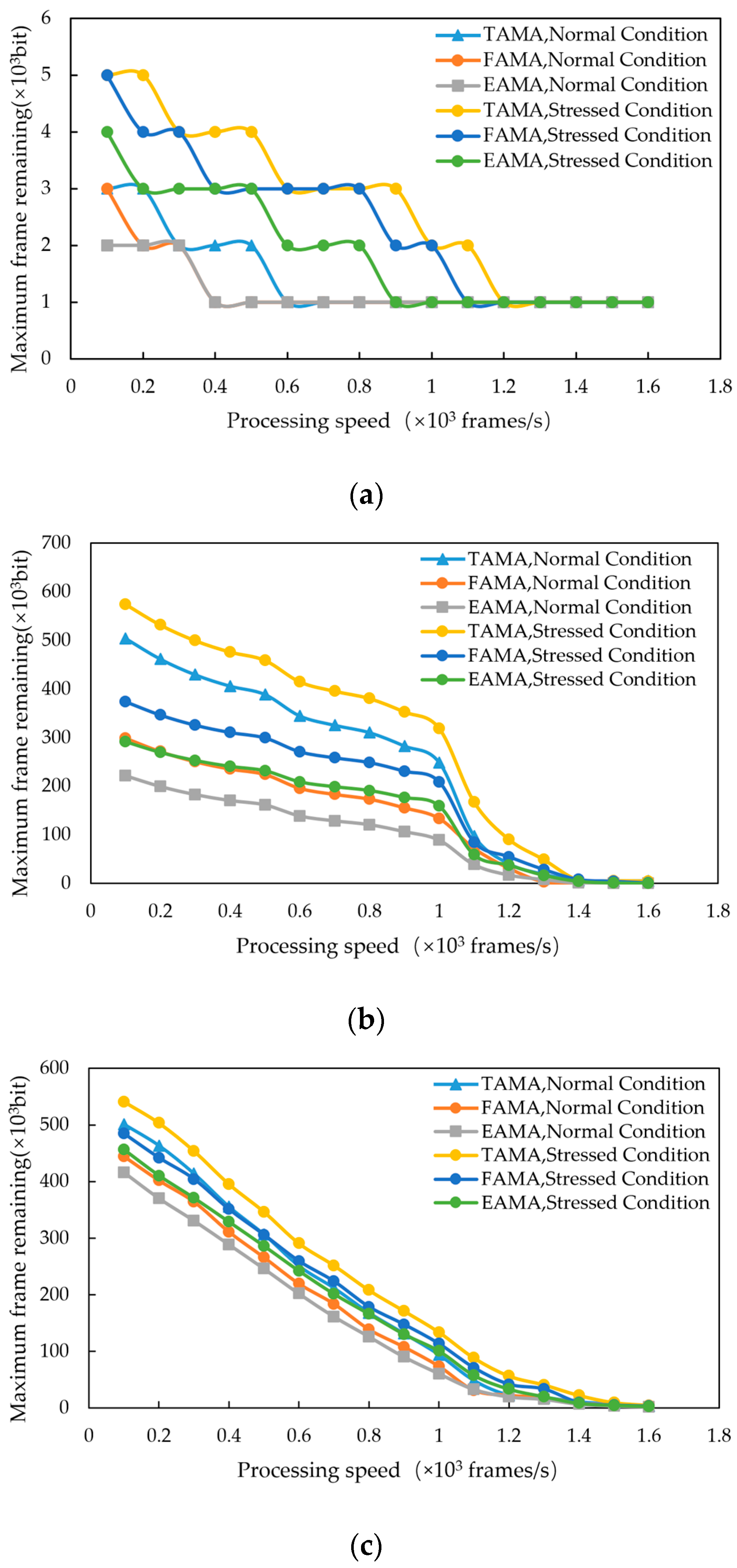

The simulation results of the maximum frame remaining for the three types of traffic under the three algorithms are shown in Figure 4a–c.

As can be seen from the figure, for synchronous traffic, the maximum remaining frame quantity for the three algorithms is similar under both the normal condition and the stressed condition. This is because periodic messages account for a small proportion of the network traffic, and their priority is relatively high under all conditions, resulting in a remaining frame quantity generally ranging from 1 to 5 frames.

For asynchronous real-time traffic, it can be observed that when the processing speed is lower than the network load, EAMA effectively reduces the maximum remaining frame quantity, thereby lowering the demand for system buffering under both the normal condition and the stressed condition. For instance, when the processing speed is 0.5 × 10³ frames/s, the maximum remaining frame quantity in EAMA is reduced by 49.6% compared to TAMA and by 22.7% compared to FAMA under the stressed condition. This is because EAMA incorporates the traffic prediction state information and slot preemption strategies, which provide stronger adaptability to dynamic network traffic changes, thus preventing certain virtual channels from being delayed in scheduling, thereby reducing the maximum remaining frame quantity.

For asynchronous non-real-time traffic, it can be observed that the TAMA algorithm exhibits a relatively higher frame residual, while FAMA, which uses the current virtual channel’s cache occupation as the scheduling decision parameter to ensure the fairness of scheduling across multiple VCs, results in a smaller maximum frame residual. On the other hand, EAMA, with its slot preemption capability, demonstrates better scheduling fairness than FAMA.

5.4. Transmission Efficiency

The channel transmission efficiency is calculated as the ratio of the effective bits transmitted to the total bits transmitted in the channel. The simulation results of the channel transmission efficiency under the three algorithms are shown in Figure 5.

From the figure, it can be observed that the EAMA algorithm proposed in this paper achieves higher channel transmission efficiency under both the normal condition and the stressed condition, especially when the processing speed is below the network load, maintaining a transmission efficiency of over 90%. When the processing speed is 0.5 × 10³ frames/s, the transmission efficiency of EAMA is improved by 45.8% and 17.9% compared to TAMA and FAMA, respectively, under the stressed condition. At the same time, it can be seen that EAMA maintains high stability under different working conditions. This improvement is attributed to the optimization of multiplexing efficiency in the EAMA algorithm, which adopts an elastic slot scheduling strategy. This strategy enhances the multiplexing efficiency at the frame multiplexing layer and significantly reduces the generation of padding frames at the virtual channel multiplexing layer, thereby improving the overall transmission efficiency of the AOS channel.
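The efficiency definition at the start of this subsection, together with the effect of padding frames, can be illustrated with a short Python sketch. It assumes fixed-length frames, ignores header overhead, and represents each transmitted frame by the number of effective payload bytes it carries (0 for a padding frame). The function and parameter names are hypothetical.

```python
from typing import List


def transmission_efficiency(effective_bytes_per_frame: List[int],
                            frame_payload_bytes: int) -> float:
    """Ratio of effective bits to total bits over all transmitted frames."""
    if frame_payload_bytes <= 0:
        raise ValueError("frame payload size must be positive")
    if not effective_bytes_per_frame:
        return 0.0  # nothing was transmitted
    if any(b < 0 or b > frame_payload_bytes for b in effective_bytes_per_frame):
        raise ValueError("effective bytes must lie in 0 .. frame payload size")
    effective_bits = 8 * sum(effective_bytes_per_frame)
    total_bits = 8 * frame_payload_bytes * len(effective_bytes_per_frame)
    return effective_bits / total_bits
```

Under these assumptions, four frames carrying 100, 50, 0, and 100 effective bytes in a 100-byte payload give an efficiency of 250/400 = 0.625. Both the partly filled frame and the padding frame lower the ratio, which is why reducing padding frames raises the channel efficiency.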

6. Conclusions

This paper addresses the pressing need for the efficient transmission of increasingly complex and diverse space network data over AOS links. A comprehensive AOS virtual channel multiplexing model is developed based on three different types of services with distinct characteristics. An efficient network AOS integrated multiplexing algorithm based on elastic time slots is then proposed. Within the frame multiplexing layer and the virtual channel multiplexing layer, efficient multiplexing algorithms are designed to accommodate the unique traffic characteristics of each service type. At the decision layer, an elastic time slot scheduling strategy is applied to seamlessly integrate the multiplexing algorithms, further optimizing the overall efficiency of the system. It should be noted that changes to the protocol will lead to an increased computation time, and different algorithms are required to detect packets in noisy environments. The following conclusions can be drawn:

Based on the traditional AOS two-layer multiplexing model, this paper adds a decision-making layer. The upper-layer algorithms can independently match the network traffic characteristics and integrate them together with the decision-making layer, which gives the model stronger flexibility and scalability.

This paper classifies space network traffic into three types according to its characteristics and carries out theoretical analysis and design of the multiplexing algorithm for each type. Additionally, a slot-preemption-based elastic time slot scheduling strategy is proposed at the decision layer. The performance of the algorithm is verified through simulation.

The simulation shows that, compared with the traditional algorithms, the algorithm proposed in this paper has a lower average delay, smaller frame residual amount, and higher channel transmission efficiency, which is more suitable for the effective transmission of multi-type space network traffic.