1. Introduction


In recent years, on-orbit servicing (OOS) has received increasing attention [1,2,3]. Typical OOS missions include refueling, fault diagnosis and repair, satellite retrieval, and debris mitigation [4,5,6]. Depending on the nature of the target, these missions can be classified into operations involving cooperative targets and those involving non-cooperative targets. Missions involving non-cooperative targets are considerably more challenging because such targets offer no cooperative aids, such as artificial markers or communication links. In addition, their unknown physical parameters lead to uncertain dynamics, which increases operational risk. Consequently, it is essential to identify the physical parameters, particularly the inertia parameters, prior to mission execution to ensure safety and reliability.

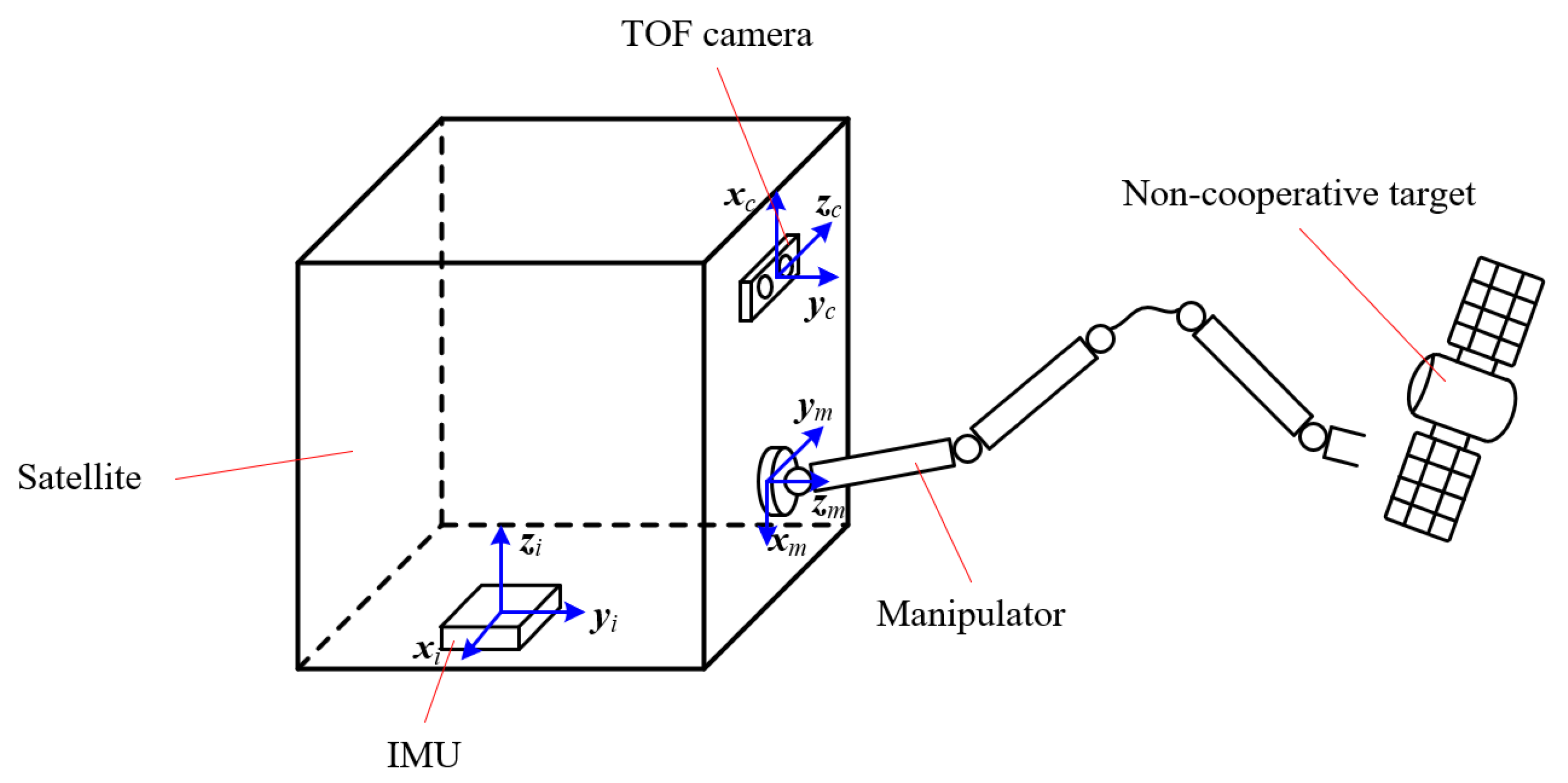

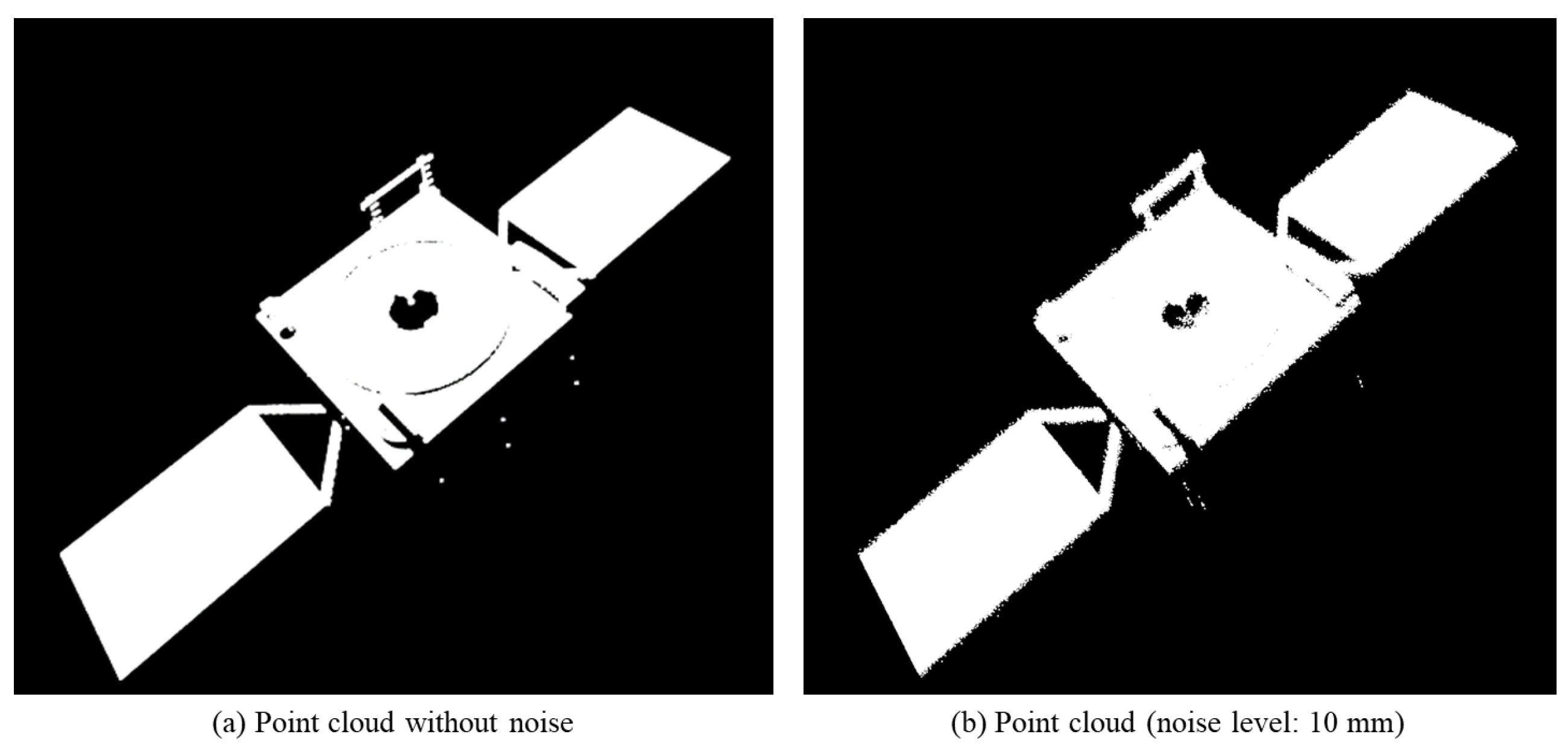

To identify all the inertia parameters, the first step is to estimate the position and attitude of the target. Various types of sensors can currently be employed for target observation, including visible-light cameras [7], time-of-flight (TOF) cameras [8], infrared cameras [9], and laser radar [10]. Because illumination conditions in space are often uncontrollable, TOF cameras or laser-based sensors are generally preferred. Once point clouds have been acquired, several approaches can be used to determine the position and attitude of the target. These methods are typically classified into two categories: global registration and local registration. Global registration methods generally provide a coarse initial estimate of the transformation matrix between two point clouds. Common techniques include Random Sample Consensus (RANSAC) combined with Fast Point Feature Histograms (FPFH) [11], 4-Point Congruent Sets (4PCS) [12], and Fast Global Registration (FGR) [13]. The objective function of FGR, one of the most commonly used global registration methods, is given in Equation (1). Local registration methods often achieve higher accuracy but are sensitive to the initial conditions and prone to converging to local minima. The iterative closest point (ICP) method [14,15] is widely used because of its simplicity, efficiency, and accuracy. In this study, the ICP method is adopted because the point clouds do not require global registration.

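$$
(\mathbf{R}^{*},\mathbf{t}^{*}) = \arg\min_{\mathbf{R},\,\mathbf{t}} \sum_{i} \rho\left(\left\| \mathbf{q}_i - \left(\mathbf{R}\mathbf{p}_i + \mathbf{t}\right) \right\|\right) \tag{1}
$$

where $P=\{\mathbf{p}_i\}$ is the source point cloud, $Q=\{\mathbf{q}_i\}$ is the target point cloud, $\mathbf{R}$ is the rotation matrix, $\mathbf{t}$ is the translation vector, and $\rho(\cdot)$ is the robust loss function; the sum runs over corresponding point pairs $(\mathbf{p}_i,\mathbf{q}_i)$.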
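Because ICP is the registration method adopted here, a minimal sketch of the textbook point-to-point variant may help. It alternates between brute-force nearest-neighbor matching and a closed-form least-squares rigid alignment (the SVD-based Kabsch solution), i.e., it uses a plain squared loss on point-pair residuals and re-estimates the correspondences at every iteration. The function names, the convergence tolerance, and the brute-force search are illustrative assumptions, not the configuration used in this study.

```python
import numpy as np

def best_fit_transform(src, dst):
    """Least-squares rigid transform (R, t) with dst ~ R @ src + t (Kabsch/SVD).

    src, dst: (N, 3) arrays of corresponding points.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)      # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:                 # reject a reflection, keep a proper rotation
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(src, dst, max_iter=50, tol=1e-10):
    """Point-to-point ICP; returns (R, t) such that dst ~ src @ R.T + t."""
    R_total = np.eye(3)
    t_total = np.zeros(3)
    cur = src.copy()
    prev_err = np.inf
    for _ in range(max_iter):
        # Brute-force nearest neighbor in dst for every point of cur.
        d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        idx = d2.argmin(axis=1)
        # Closed-form alignment of cur onto its current matches.
        R, t = best_fit_transform(cur, dst[idx])
        cur = cur @ R.T + t
        # Compose the incremental transform with the accumulated one.
        R_total = R @ R_total
        t_total = R @ t_total + t
        # Mean matching distance before this update, used as a stopping test.
        err = np.sqrt(d2[np.arange(len(cur)), idx]).mean()
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, t_total
```

For realistic point-cloud sizes, the brute-force matching step would normally be replaced by a k-d tree, and a good initial guess (for example, the pose from the previous frame) is what keeps ICP away from the local minima mentioned above.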

After point cloud registration is complete, the target's motion state must be estimated before its inertia parameters can be identified. Approaches to target motion estimation generally fall into two principal categories: image-based methods and filter-based methods. Regarding image-based methods, Scharr et al. [16] proposed a model for the simultaneous estimation of full 3D motion and 3D positions in world coordinates from multi-camera sequences. Shakernia et al. [17] presented an algorithm for infinitesimal motion estimation from multiple central panoramic views based on optical flow equations. Haller et al. [18] introduced a highly robust and precise global motion estimation (GME) approach using motion vectors (MVs), which enables accurate short-term estimation of global motion parameters. However, these image-based techniques primarily estimate positional information and are generally unable to determine the target's attitude. As for filter-based methods, Yang et al. [19] proposed a dual Kalman filter framework to sequentially estimate the position and attitude parameters of a target. Olama et al. [20] used signal strength and wave scattering models to estimate the position and velocity of the target. De Jongh et al. [21] used a stereo camera pair to extract distinct surface features via the scale-invariant feature transform (SIFT) and adopted an extended Kalman filter to estimate the position, attitude, velocity, and moments of inertia of the target. Tweddle et al. [22] proposed a comprehensive approach combining a factor graph and a multiplicative extended Kalman filter (MEKF) to identify both the pose and some of the inertia parameters of the target.
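To make the filter-based idea concrete, the following is a minimal linear Kalman filter that tracks the position and velocity of a target centroid under a constant-velocity model, assuming the centroid position is measured directly (for instance, from registered point clouds). The function name and the noise parameters `q` and `r` are illustrative assumptions; the sketch does not reproduce any of the cited filters.

```python
import numpy as np

def cv_kalman(zs, dt, q=1e-6, r=1e-4):
    """Constant-velocity Kalman filter on 3-D position measurements.

    zs : (N, 3) measured positions sampled every dt seconds.
    q  : spectral density of the white-noise acceleration (assumed value).
    r  : variance of each position-measurement component (assumed value).
    Returns the (N, 6) filtered states [px, py, pz, vx, vy, vz].
    """
    I3, Z3 = np.eye(3), np.zeros((3, 3))
    F = np.block([[I3, dt * I3], [Z3, I3]])              # state transition
    H = np.hstack([I3, Z3])                              # only position is observed
    Q = q * np.block([[dt**3 / 3 * I3, dt**2 / 2 * I3],
                      [dt**2 / 2 * I3, dt * I3]])        # continuous white-noise acceleration
    R = r * I3
    x = np.concatenate([zs[0], np.zeros(3)])             # start at first measurement, v = 0
    P = np.diag([r] * 3 + [1.0] * 3)                     # velocity initially very uncertain
    out = []
    for k, z in enumerate(zs):
        if k > 0:                                        # predict (skipped for the first sample)
            x = F @ x
            P = F @ P @ F.T + Q
        S = H @ P @ H.T + R                              # innovation covariance
        K = P @ H.T @ np.linalg.inv(S)                   # Kalman gain
        x = x + K @ (z - H @ x)                          # measurement update
        P = (np.eye(6) - K @ H) @ P
        out.append(x.copy())
    return np.array(out)
```

A smaller `q` trusts the constant-velocity model more and averages over a longer window, which suits a free-floating target whose centroid moves uniformly.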

If the target has been captured, all of its inertia parameters can be identified using manipulators or another mechanism capable of altering the pose of the entire system [23,24,25]. Murotsu et al. [26] employed the least squares method (LSM) to determine the inertia parameters of the target by adjusting the pose of the arm-body system after the target was securely fixed. The core LSM equation is given in Equation (2). Zhang et al. [27] used a tethered system to approach and capture the target, identifying the full set of inertia parameters from the dynamic response of the target. Nguyen-Huynh et al. [28] employed a control strategy that minimizes disturbance to the base to modify the attitude of the entire system, thereby enabling the identification of all inertia parameters. Wu et al. [29] proposed an inertia matrix identification approach that integrates a structure optimization strategy, which effectively improves the computational efficiency of deep neural network (DNN)-based inertia estimation under complex measurement noise. Platanitis et al. [30] presented an inertia parameter estimation framework for cargo-carrying spacecraft based on causal learning, which enables parameter identification without prior knowledge of the system's state following a deployment event. However, these capture-based methods involve high-risk operations because the target's dynamics are unknown, which makes them less desirable.

On the other hand, many studies have explored methods that do not require capturing the target. Matthew et al. [31,32] proposed an architecture for estimating dynamic states, geometric shapes, and model parameters; however, their approach can identify only the normalized principal moments of inertia. Richard et al. [33] estimated the inertia parameters of a space object using photometric and astrometric data. Sheinfeld et al. [34] presented a general framework for estimating the inertia of any rigid body. Although valuable, these three methods are limited to identifying normalized inertia parameters rather than the full inertia matrix. Meng et al. [35] extended Matthew's work by using a touch probe to make physical contact with the target and applying the impulse theorem to identify all inertia parameters. Their main identification equation is shown in Equation (3). Mammarella et al. [36] introduced a recursive algorithm that combines physics-based modeling with black-box learning techniques to improve the accuracy and reliability of spacecraft inertia estimation and its convergence toward the true values. Baek et al. [37] proposed a fast, learning-based inertia parameter estimation framework that models the dynamics of unknown objects with a time-series, data-driven regression model, significantly increasing identification speed.
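Why purely observational methods stop at normalized inertia can be seen from the torque-free Euler equations, $J\dot{\boldsymbol{\omega}} + \boldsymbol{\omega}\times(J\boldsymbol{\omega}) = \mathbf{0}$: multiplying every principal moment by the same positive factor leaves the angular-velocity history unchanged, so the observed rotation of a free-floating target cannot reveal the absolute scale of its inertia. The sketch below checks this numerically with classical Runge-Kutta integration in the principal frame; the moments and initial rates are arbitrary illustrative values.

```python
import numpy as np

def euler_rhs(w, J):
    """Torque-free Euler equations in the principal frame.

    J is the vector of principal moments, so J * w is the angular momentum.
    Returns dw/dt = -(w x (J w)) / J, evaluated component-wise.
    """
    return -np.cross(w, J * w) / J

def propagate(w0, J, dt=1e-3, steps=5000):
    """Integrate the angular velocity with classical 4th-order Runge-Kutta."""
    w = np.asarray(w0, dtype=float)
    J = np.asarray(J, dtype=float)
    traj = [w.copy()]
    for _ in range(steps):
        k1 = euler_rhs(w, J)
        k2 = euler_rhs(w + 0.5 * dt * k1, J)
        k3 = euler_rhs(w + 0.5 * dt * k2, J)
        k4 = euler_rhs(w + dt * k3, J)
        w = w + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
        traj.append(w.copy())
    return np.array(traj)

J = np.array([1.0, 2.0, 3.0])        # illustrative principal moments (kg m^2)
w0 = np.array([0.05, 0.1, 0.4])      # illustrative initial body rates (rad/s)
traj_a = propagate(w0, J)
traj_b = propagate(w0, 7.5 * J)      # same normalized inertia, 7.5 times larger scale
print(np.max(np.abs(traj_a - traj_b)))   # only floating-point round-off remains
```

This is why a contact, or some other source of known momentum exchange, is needed to recover the full inertia matrix.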

where the identified parameters include the mass $m_U$, the position vector ${}^{U}\mathbf{a}_{U}$, and the six-dimensional inertia vector ${}^{U}\mathbf{I}_{rr}$.

In this paper, a novel position and velocity estimation method is proposed to facilitate the identification of inertia parameters. Because filter-based approaches can estimate a broader range of motion parameters, both the Kalman filter (KF) and the extended Kalman filter (EKF) are employed. Unlike conventional methods, which require reconstructing the entire target model to determine its geometric center [31,32,33,34,35], the proposed method relies solely on the point cloud captured by the camera at a given moment, making it more general and practical for non-cooperative targets. Furthermore, this paper introduces a new method for identifying the complete set of inertia parameters of a non-cooperative target. Traditional approaches typically construct an LSM equation involving a 10-dimensional parameter vector and require either continuous contact monitoring with a force sensor or, in the absence of one, at least three collisions [23,24,25,26,27,28]. In contrast, the proposed method eliminates the need for a force sensor and, after visual processing, reduces the identification problem to only two unknown parameters. As a result, a single contact with the target suffices to identify all remaining unknown inertia parameters, which significantly simplifies the identification procedure.
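To illustrate why two unknowns can be recovered from a single contact, consider a deliberately simplified, hypothetical model; it is not the formulation derived in this paper. Assume that the normalized principal moments $\mathbf{r}$ are known from vision, so the principal inertia is $k\,\mathrm{diag}(\mathbf{r})$ with an unknown scale $k$; that the changes in the target's velocity and angular velocity across the contact, $\Delta\mathbf{v}$ and $\Delta\boldsymbol{\omega}$, have been estimated in the principal frame; and that the linear momentum $\Delta\mathbf{p}$ and the angular momentum $\Delta\mathbf{L}$ (about the target's center of mass) transferred to the target are known, for instance from momentum conservation with a chaser whose inertia parameters are known. The six resulting equations are linear in the mass $m$ and the scale $k$ and can be solved by least squares. The function name `identify_mass_and_scale` is hypothetical.

```python
import numpy as np

def identify_mass_and_scale(dv, dw, r, dp, dL):
    """Least-squares estimate of (m, k) from a single contact (hypothetical model).

    Model, written in the target's principal frame:
        m * dv       = dp   (change in linear momentum)
        k * (r * dw) = dL   (change in angular momentum about the center of mass)
    where r holds the normalized principal moments, i.e. J = k * diag(r).
    """
    dv, dw, r = (np.asarray(a, dtype=float) for a in (dv, dw, r))
    A = np.zeros((6, 2))
    A[:3, 0] = dv                 # column multiplying the mass m
    A[3:, 1] = r * dw             # column multiplying the inertia scale k
    b = np.concatenate([dp, dL])  # momentum transferred to the target
    (m, k), *_ = np.linalg.lstsq(A, b, rcond=None)
    return m, k
```

Because each unknown multiplies its own block of equations, the system has full column rank whenever $\Delta\mathbf{v}$ and $\mathbf{r}\odot\Delta\boldsymbol{\omega}$ are non-zero, which is consistent with a single contact sufficing in the error-free case; with noisy data, additional contacts would simply append more rows to `A` and `b`.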

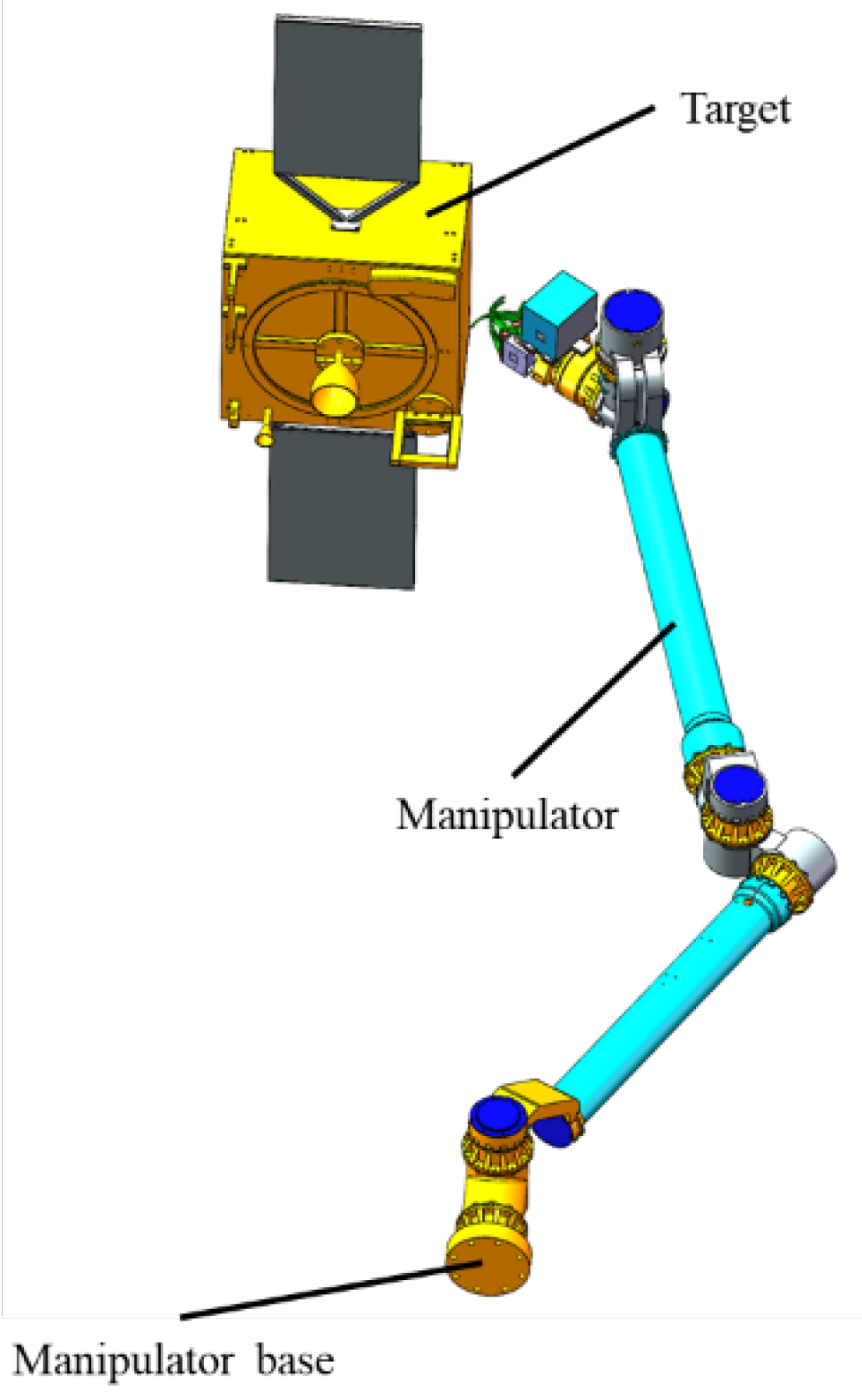

The structure of this paper is organized as follows: First,

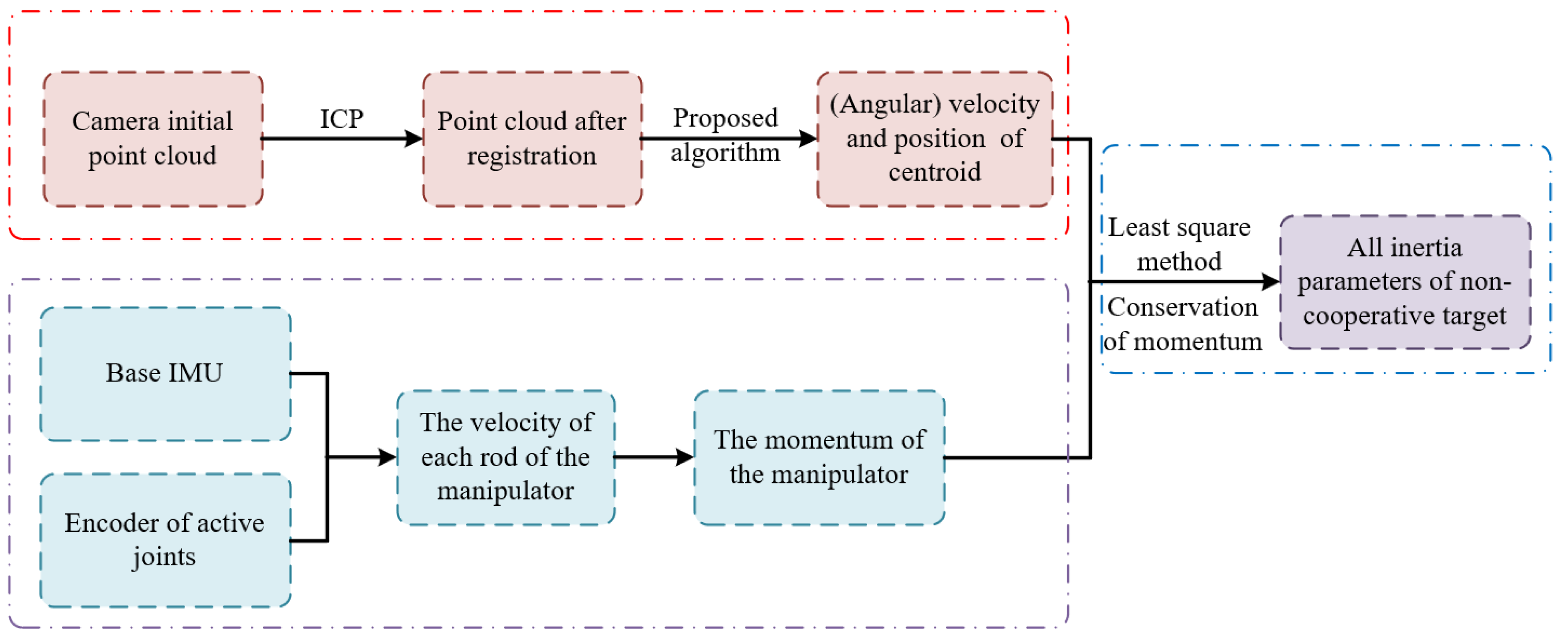

Section 2 briefly introduces the hardware system and the identification process for the non-cooperative target. Second,

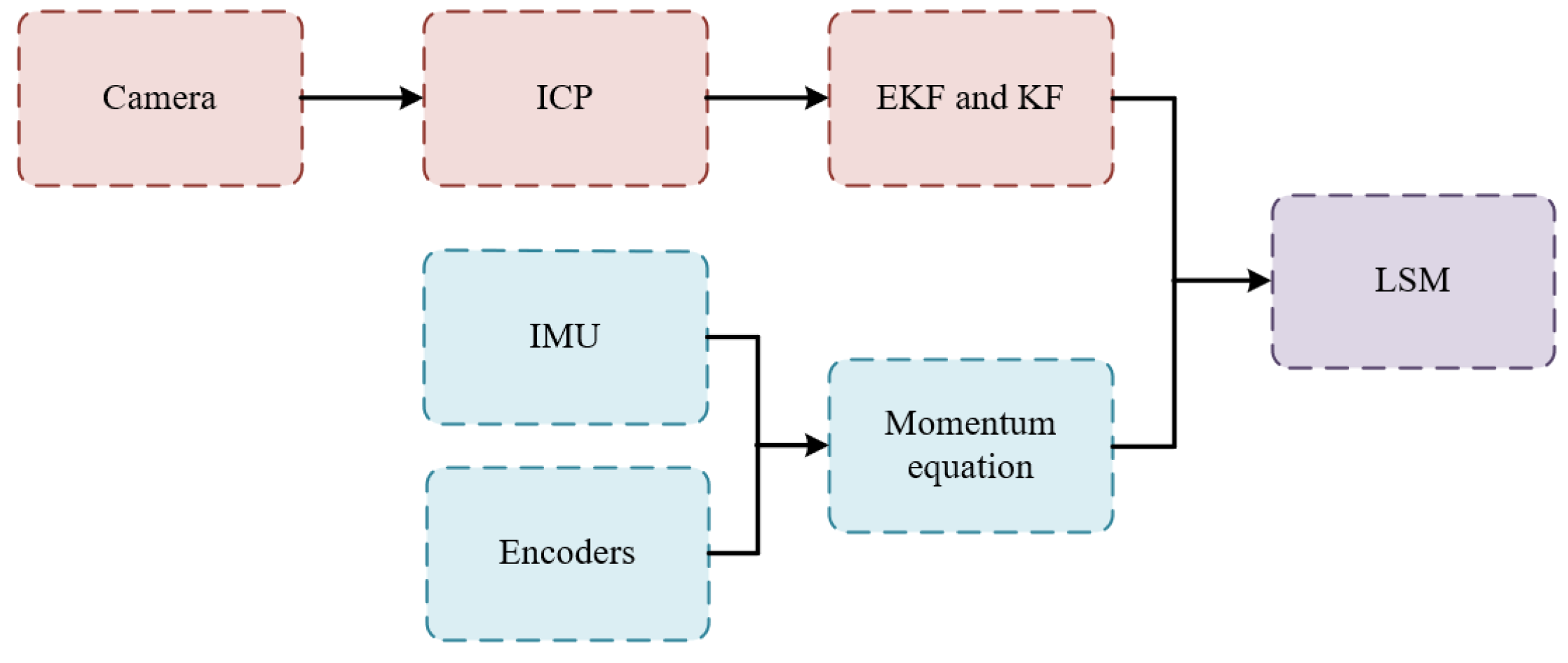

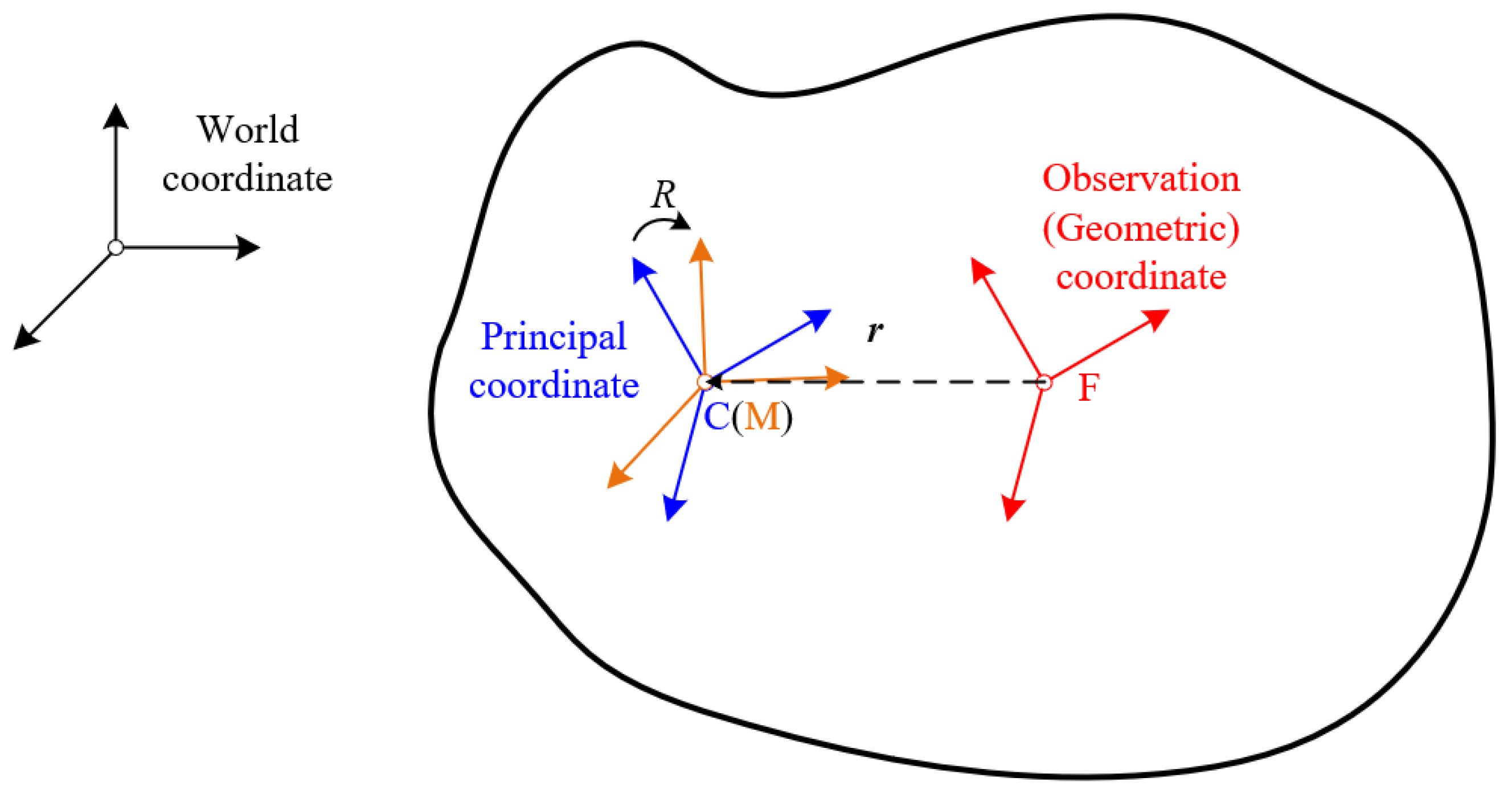

Section 3 presents the procedure for processing the collected data, as well as the newly proposed approach for non-cooperative target identification based on a novel position and velocity estimation method. Third, simulations are performed and analyzed in

Section 4. Finally,

Section 5 is illustrated.

5. Conclusions and Discussion

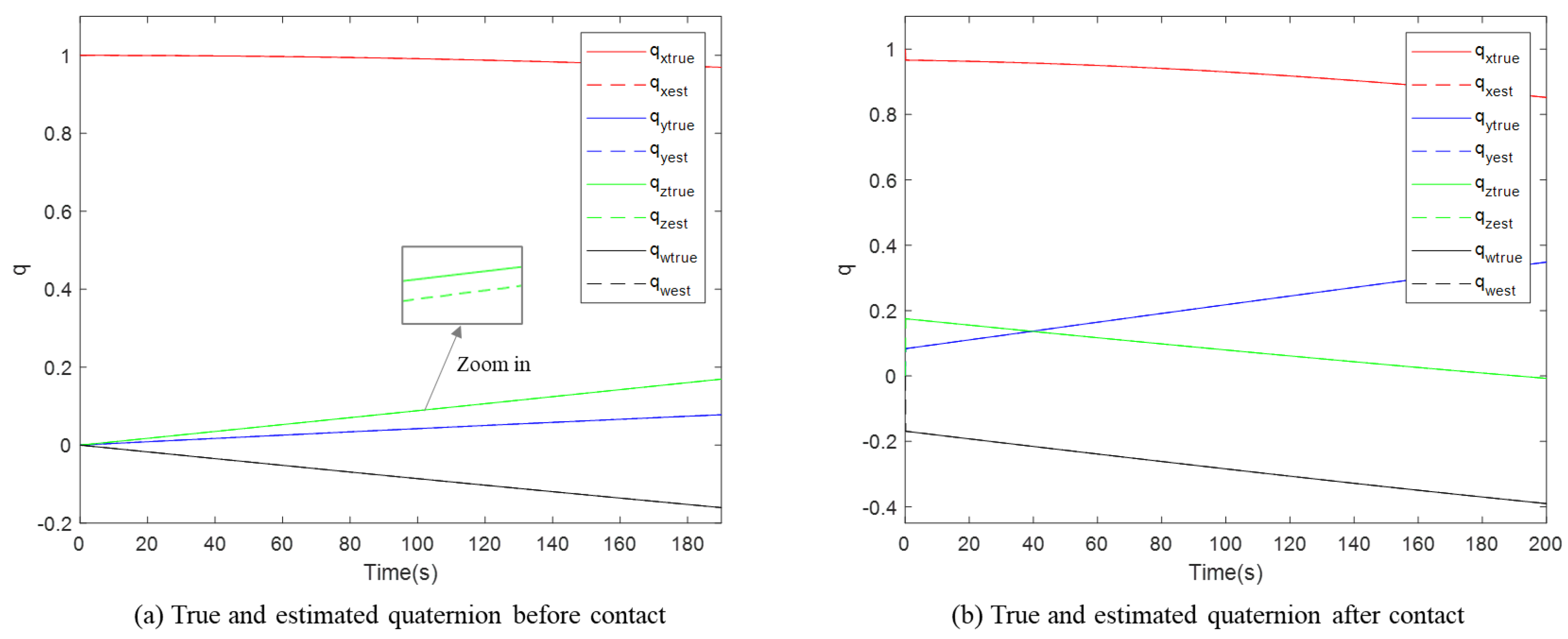

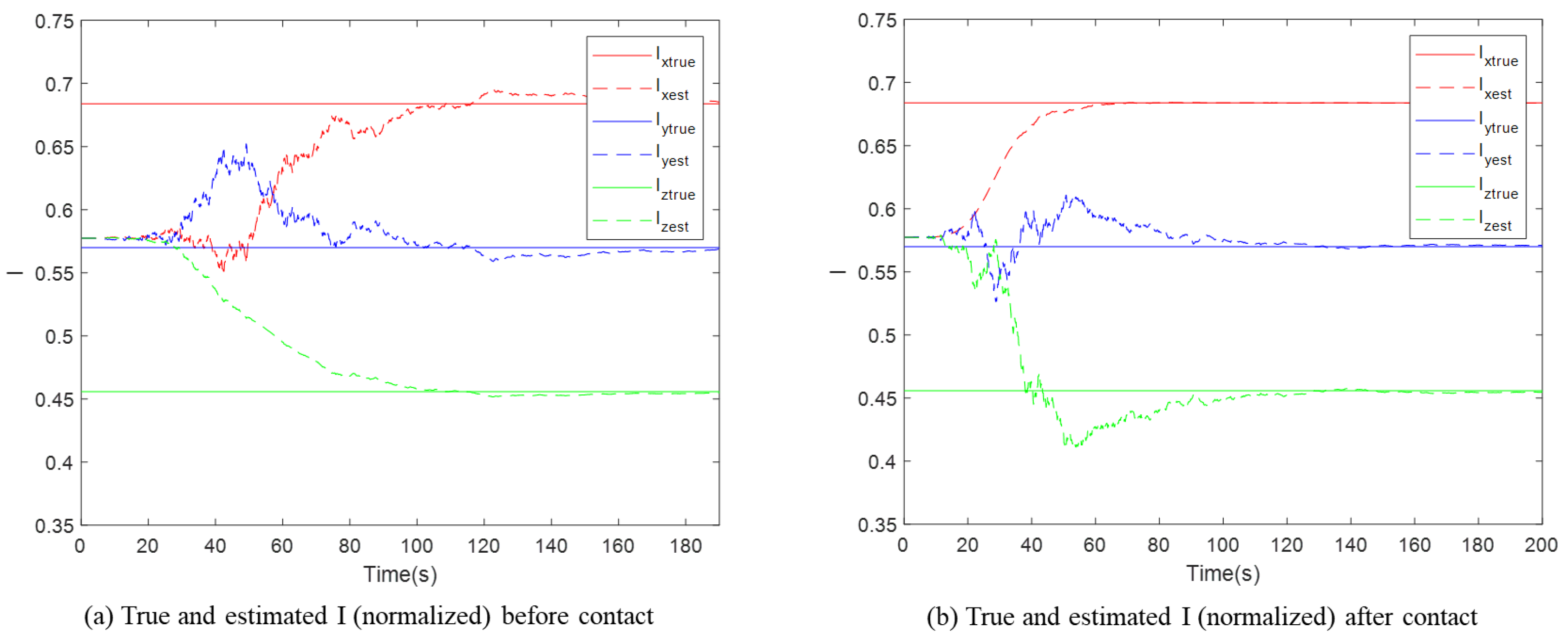

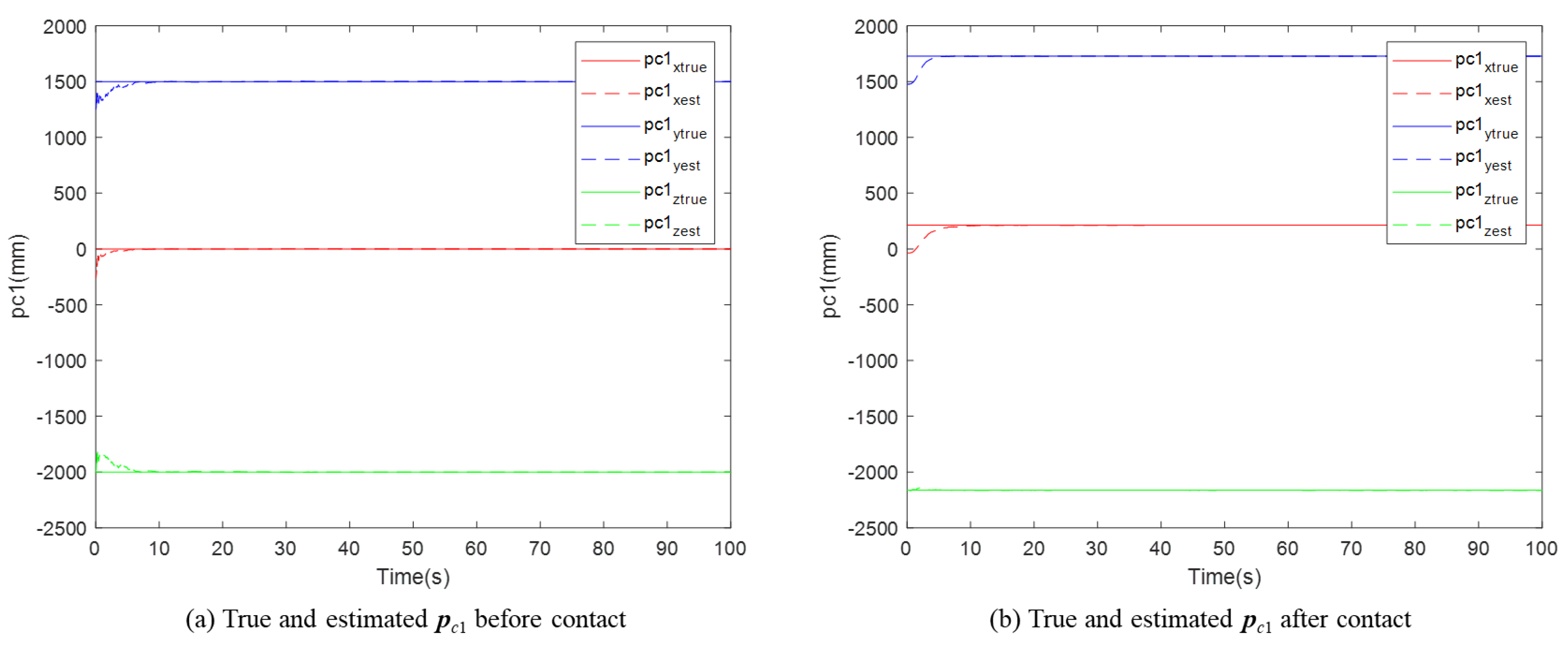

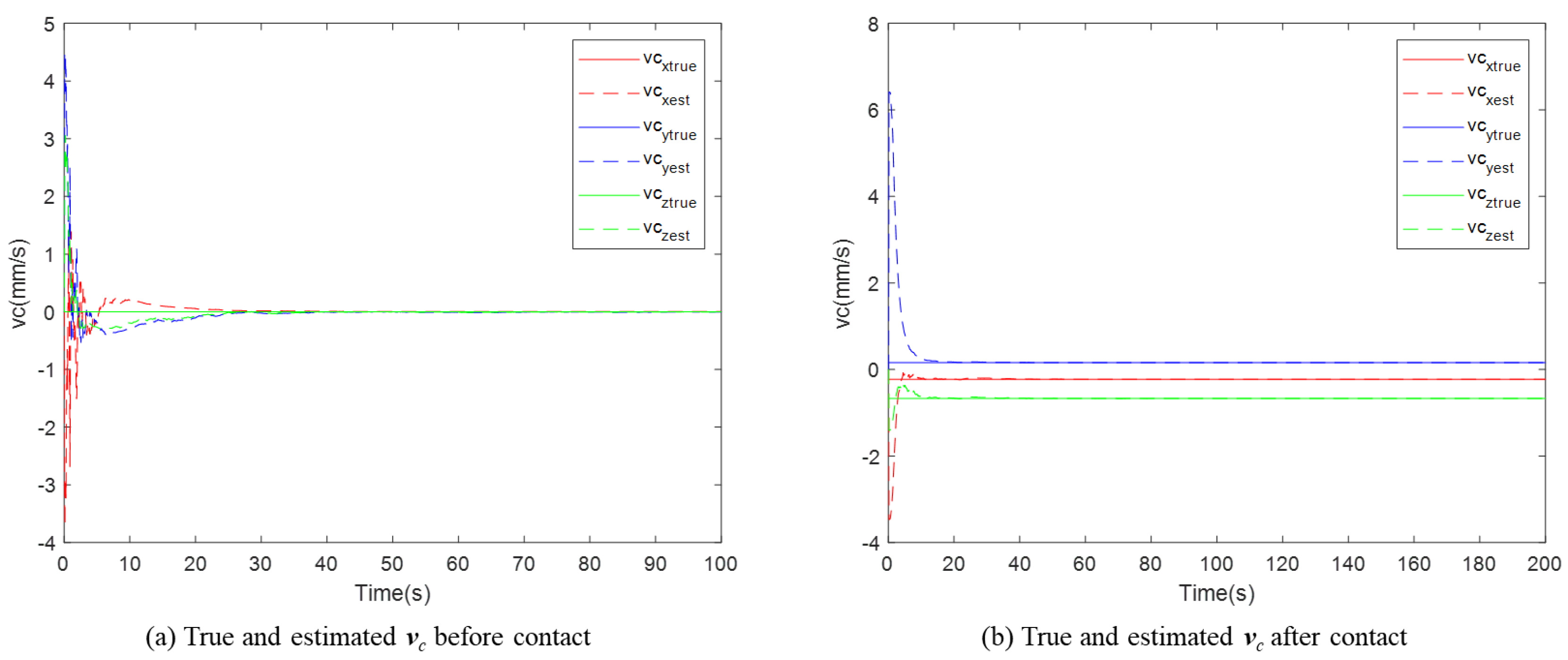

For a free-floating non-cooperative target in space, performing operations is challenging when all of its inertia parameters are unknown. This paper proposed a novel method for identifying inertia parameters based on motion estimation. First, the physical composition of the system was introduced, and the overall identification framework was outlined. Then, the methods involved in the identification process were analyzed step by step. The acquisition and processing of the data essential for identification were considered, with particular emphasis on accurate motion estimation of the target. Attitude parameters were identified using the EKF, and position and velocity were identified using the KF. Finally, numerical simulations were performed to validate the proposed methods. The final identification errors for all inertia parameters were below 0.3%, demonstrating the effectiveness and accuracy of the proposed approach.

The position and velocity estimation combines the output of the ICP algorithm with a novel modeling approach. Unlike conventional position estimation, this approach identifies only the initial position vector; the position at any subsequent time is then derived from the estimated velocity and the elapsed time. Moreover, because the normalized principal moments of inertia can be estimated from visual data, the final LSM contains only two parameters to be identified. This significantly reduces the dimensionality of the problem compared with the six-dimensional output vector. For this reason, a single contact is sufficient to ensure stable identification if all errors are neglected.
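The idea of identifying only the initial position and propagating it with the estimated velocity can be sketched as a Kalman filter with a static state $\mathbf{x} = [\mathbf{p}_0^{\top}, \mathbf{v}^{\top}]^{\top}$ and no process noise, so that a centroid measurement at time $t_k$ obeys $\mathbf{z}_k = \mathbf{p}_0 + t_k\mathbf{v} + \text{noise}$. This is a minimal sketch under those assumptions (constant velocity, centroid position measured directly, illustrative noise values), not the paper's exact measurement model; the function names are hypothetical.

```python
import numpy as np

def estimate_p0_v(ts, zs, r=1e-4, p_init=1e2):
    """Kalman filter (equivalently, recursive least squares) for x = [p0, v].

    ts : (N,) measurement times; zs : (N, 3) measured positions.
    r  : measurement variance per component; p_init : prior variance.
    The state is constant, so there is no prediction step.
    """
    I3 = np.eye(3)
    x = np.zeros(6)
    P = p_init * np.eye(6)
    R = r * I3
    for t, z in zip(ts, zs):
        H = np.hstack([I3, t * I3])             # measurement model z = p0 + t * v
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ (z - H @ x)
        P = (np.eye(6) - K @ H) @ P
    return x[:3], x[3:]

def position_at(p0, v, t):
    """Position at any later time from the identified p0 and v."""
    return p0 + t * v
```

Since the velocity is shared by all measurements, every new frame refines both $\mathbf{p}_0$ and $\mathbf{v}$, and the position at any time follows without re-estimating it frame by frame.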

However, this paper assumes that the principal moments of inertia about the three axes are distinct. When two or all three of them are identical, the derivation differs, and the proposed method may not be applicable. This limitation will be addressed in future work. In addition, the observed quaternion is assumed to coincide with the orientation of the principal axis frame, which may not always hold, especially when the mass distribution is non-uniform; the case in which the two differ requires further attention. Finally, this paper assumes that the inertia parameters of both the manipulator and the satellite are known. If these values contain errors, the momentum conservation equation will also be affected, which should be considered in subsequent studies.