Research on Visual Target Detection Method for Smart City Unmanned Aerial Vehicles Based on Transformer

Abstract

1. Introduction

2. Related Works

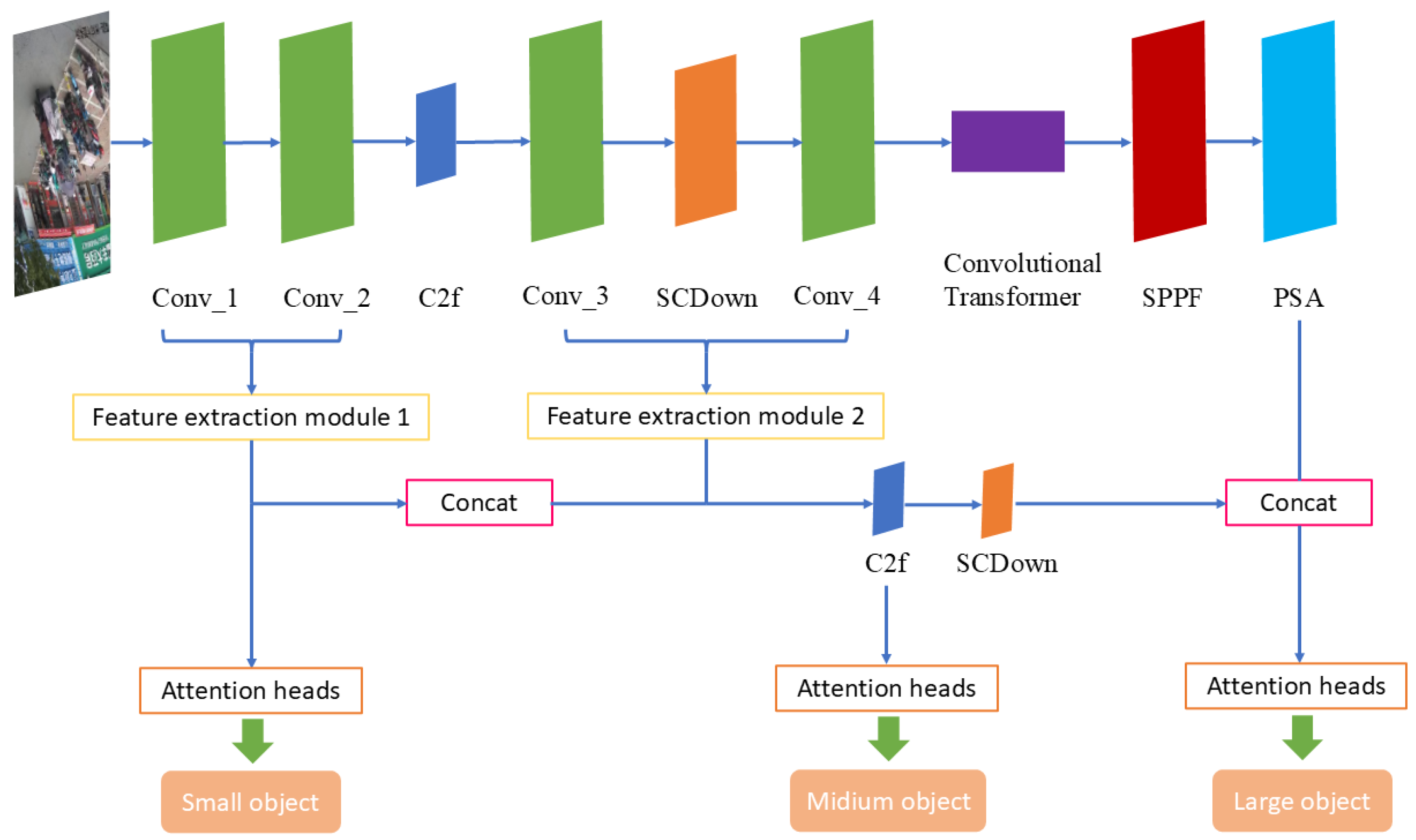

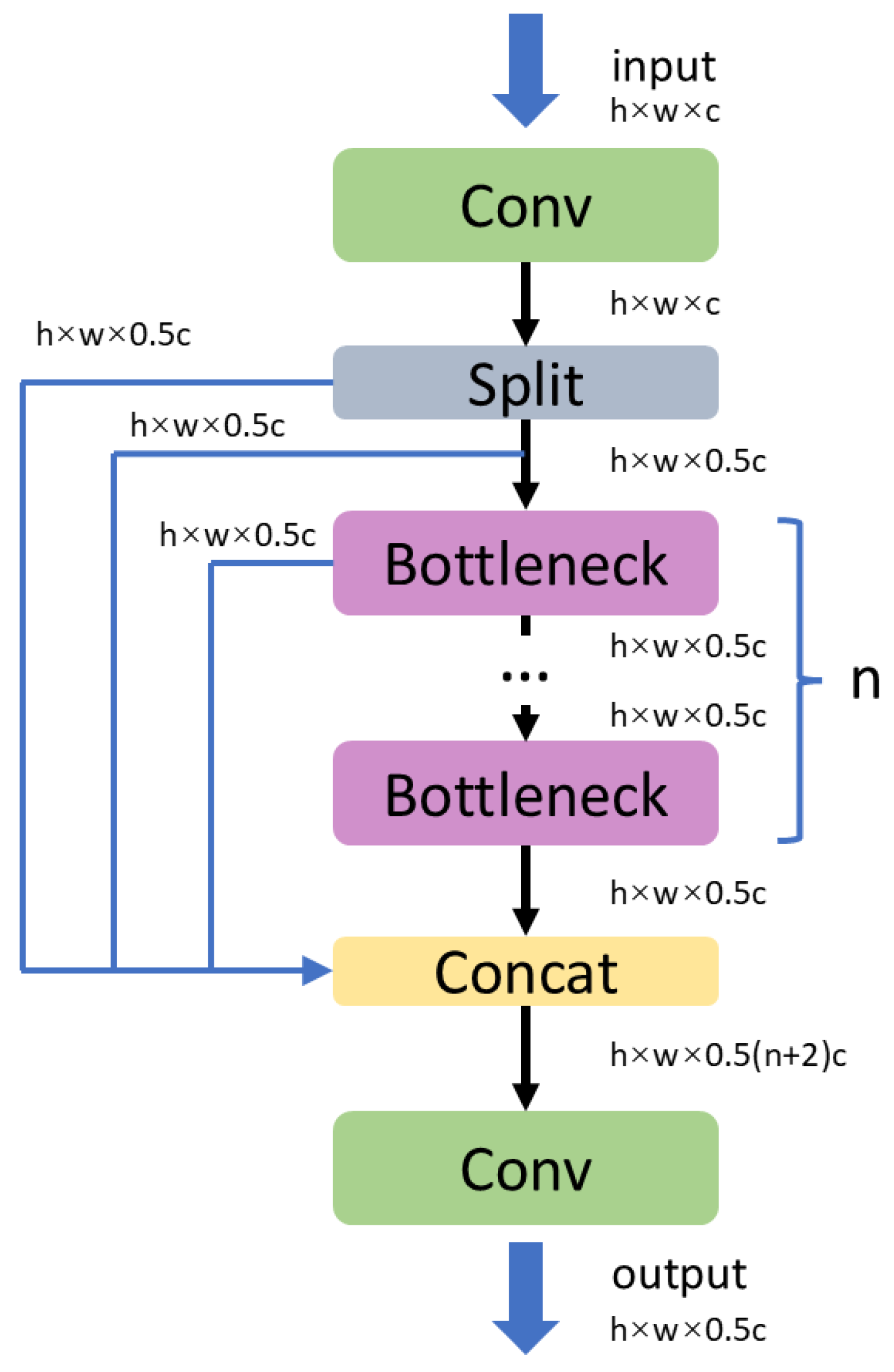

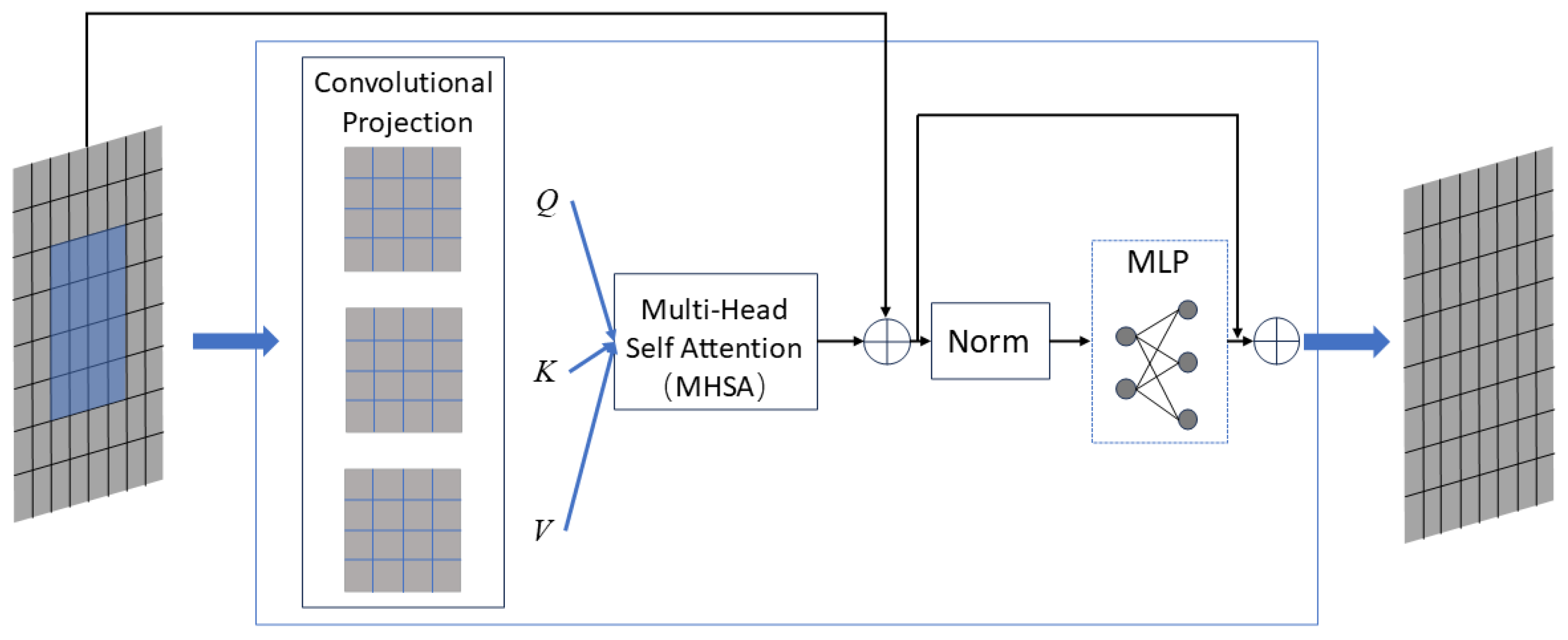

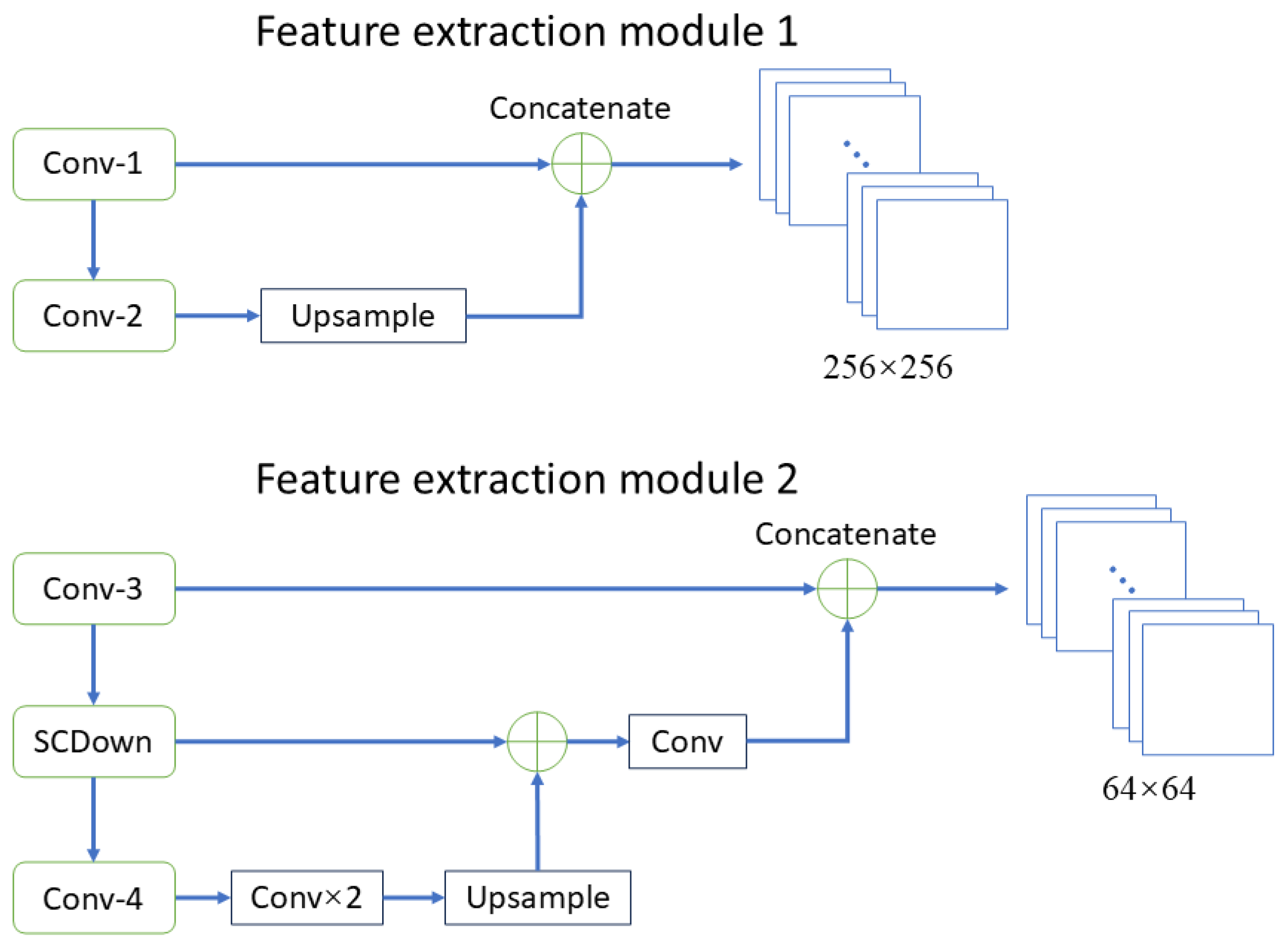

3. Proposed Network Framework

4. Experiments and Analysis

4.1. Training Process

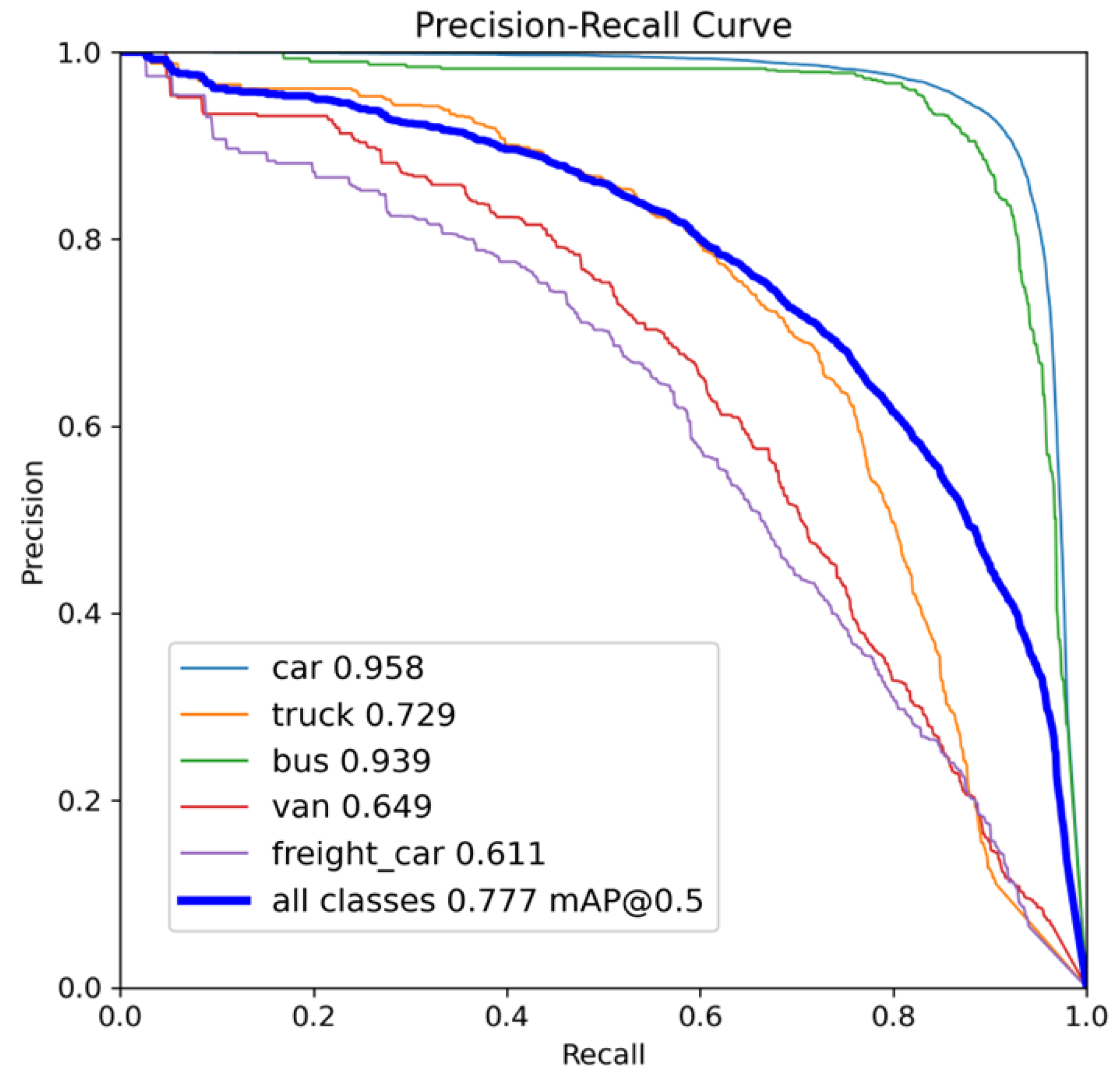

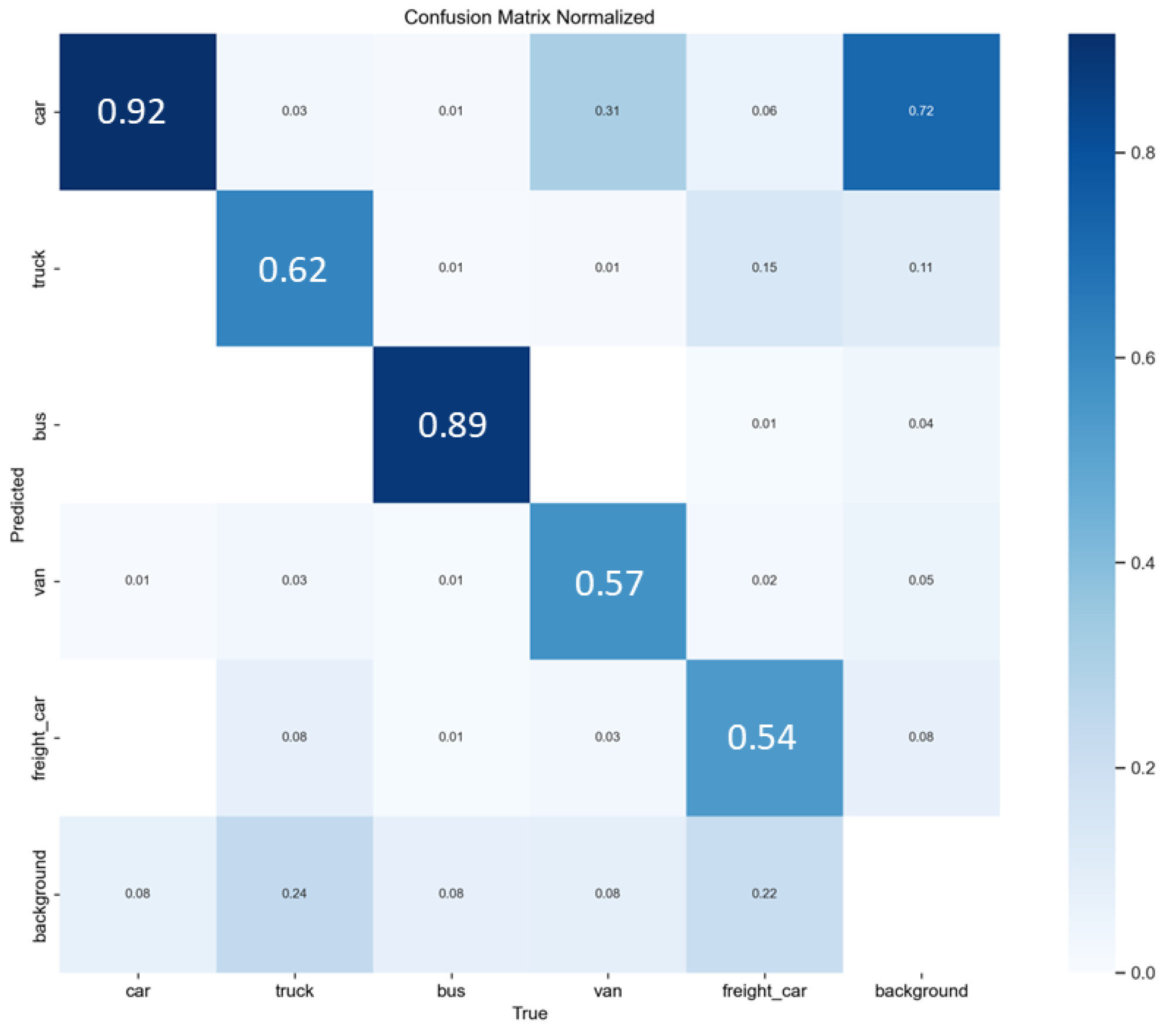

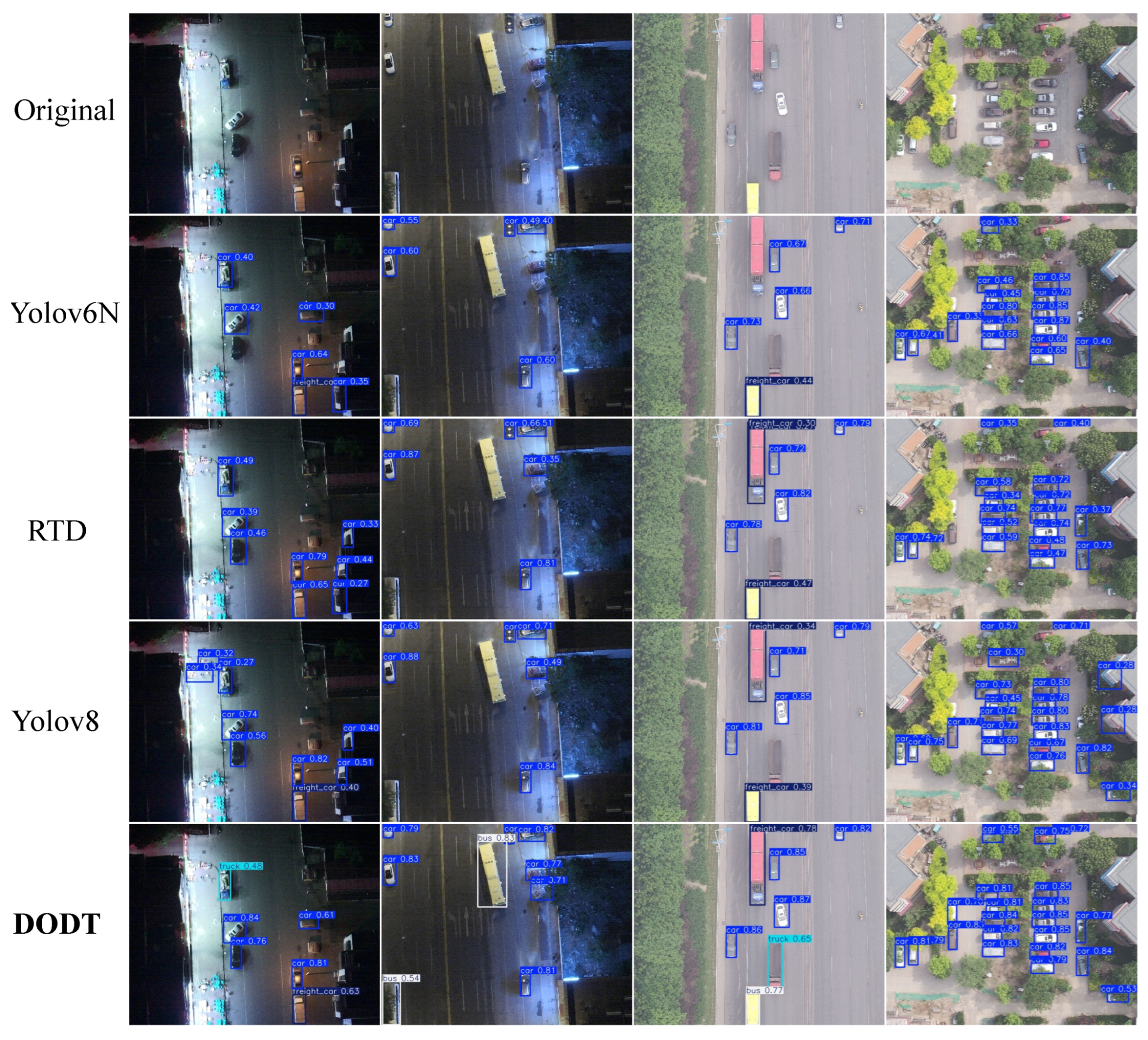

4.2. Test Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Hyperparameter | Value |
|---|---|
| Image size | 640 × 512 |
| Batch size | 4 |
| Epochs | 300 |
| Weight decay | 0.0005 |
| Momentum | 0.91 |
| Learning rate | 0.01 |
| Optimizer | SGD |
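
To show how the learning rate, momentum, and weight decay in the table interact, the following is a minimal plain-Python sketch of one SGD update. It assumes the common variant in which weight decay is added to the gradient before the momentum buffer is updated; the table does not say which variant or framework was used. The names `TrainConfig` and `sgd_step` are hypothetical, and the order of the two numbers in the image size (width or height first) is not stated in the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Values copied from the hyperparameter table; field names are hypothetical."""
    image_size: tuple = (640, 512)  # order (width/height) is not stated in the table
    batch_size: int = 4
    epochs: int = 300
    weight_decay: float = 0.0005
    momentum: float = 0.91
    learning_rate: float = 0.01


def sgd_step(weight, grad, velocity, cfg):
    """One SGD update with momentum and L2 weight decay (assumed variant)."""
    g = grad + cfg.weight_decay * weight      # weight decay folded into the gradient
    velocity = cfg.momentum * velocity + g    # momentum buffer accumulates gradients
    weight = weight - cfg.learning_rate * velocity
    return weight, velocity


# Two updates on a single scalar weight with a constant toy gradient of 0.5.
cfg = TrainConfig()
w, v = 1.0, 0.0
for _ in range(2):
    w, v = sgd_step(w, grad=0.5, velocity=v, cfg=cfg)
```

With momentum 0.91, the second step moves the weight almost twice as far as the first, because the buffer still holds most of the previous gradient.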

| Method | Car (%) | Truck (%) | Bus (%) | Van (%) | Fcar (%) | Model Size (M) | mAP (%) | FPS |
|---|---|---|---|---|---|---|---|---|
| YOLOv3 | 90.2 | 49.8 | 85.9 | 57.9 | 56.1 | 24.3 | 66.4 | 52.4 |
| YOLOv4 | 91.3 | 52.5 | 86.6 | 58.4 | 55.3 | 25.6 | 68.7 | 30.3 |
| R-CNN | 90.5 | 50.3 | 84.3 | 59.4 | 61.7 | 32.3 | 70.5 | 8.9 |
| YOLOv5-S | 92.3 | 65.2 | 88.4 | 60.7 | 58.1 | 17.2 | 68.4 | 87.2 |
| YOLOv6-N | 92.5 | 66.4 | 88.4 | 60.4 | 58.4 | 9.5 | 69.7 | 93.2 |
| SSD | 89.8 | 53.3 | 91.2 | 63.2 | 59.9 | 20.3 | 72.8 | 13.7 |
| YOLOv7-TR | 91.5 | 60.7 | 89.3 | 58.2 | 57.6 | 42.3 | 75.1 | 38.0 |
| RT-DETR | 94.2 | 63.5 | 92.1 | 61.8 | 59.3 | 34.8 | 77.1 | 105.3 |
| YOLOv8 | 93.2 | 68.8 | 90.5 | 58.6 | 62.2 | 8.7 | 74.3 | 103.1 |
| RTD | 94.1 | 73.2 | 90.2 | 62.5 | 60.3 | 14.1 | 75.2 | 90.3 |
| DODT | 95.8 | 72.9 | 93.9 | 64.9 | 61.1 | 13.7 | 77.7 | 89.7 |
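
The mAP column can be cross-checked against the per-class columns. The sketch below assumes that mAP is the unweighted mean of the five per-class AP values, which the table itself does not state; the helper name `mean_ap` and the choice of rows are illustrative only. Under that assumption the DODT row reproduces its reported mAP to within rounding. Where a row shows a larger gap, the table may be using a different averaging convention or the values may have been altered in transcription; the source does not say which.

```python
def mean_ap(per_class_ap):
    """Unweighted mean of per-class AP values (assumed definition of mAP)."""
    return sum(per_class_ap) / len(per_class_ap)


# (per-class AP in the order Car, Truck, Bus, Van, Fcar; reported mAP),
# copied from the comparison table.
rows = {
    "YOLOv8": ([93.2, 68.8, 90.5, 58.6, 62.2], 74.3),
    "DODT": ([95.8, 72.9, 93.9, 64.9, 61.1], 77.7),
}

# Difference between the recomputed mean and the reported mAP, in points.
gaps = {
    name: round(mean_ap(ap) - reported, 2)
    for name, (ap, reported) in rows.items()
}

for name, gap in gaps.items():
    print(f"{name}: recomputed minus reported = {gap:+.2f} points")
```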

| Method | Input Size | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|
| SSD | 512 × 512 | 48.5 | 28.8 |
| YOLOv8 | 608 × 608 | 52.3 | 37.3 |
| RTD | 608 × 608 | 54.3 | 33.6 |
| YOLOv10 | 640 × 640 | 54.8 | 39.2 |
| DODT | 640 × 640 | 60.5 | 38.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qi, B.; Shi, H.; Zhao, B.; Mu, R.; Huo, M. Research on Visual Target Detection Method for Smart City Unmanned Aerial Vehicles Based on Transformer. Aerospace 2025, 12, 949. https://doi.org/10.3390/aerospace12110949
