1. Introduction

On 3 November 2022, the successful on-orbit assembly of the China Space Station in its “T” configuration marked a major milestone in China’s manned spaceflight program [1]. Following this achievement, the space station transitioned into a long-term phase of crewed on-orbit operations [2]. A key feature of the space station is its maintainability [3], with fault diagnosis technology serving as the basis for efficient on-orbit maintenance [4]. Given the increasing complexity of spacecraft systems, traditional fault diagnosis methods struggle to meet the demands for efficiency and adaptability [5]. As a result, intelligent fault diagnosis technologies are gradually replacing conventional approaches and have become a major research focus [6], as well as a core technology for the intelligent operation and maintenance of spacecraft [7]. Intelligent fault diagnosis, particularly on space stations, is expected to advance rapidly from theoretical research to practical engineering applications.

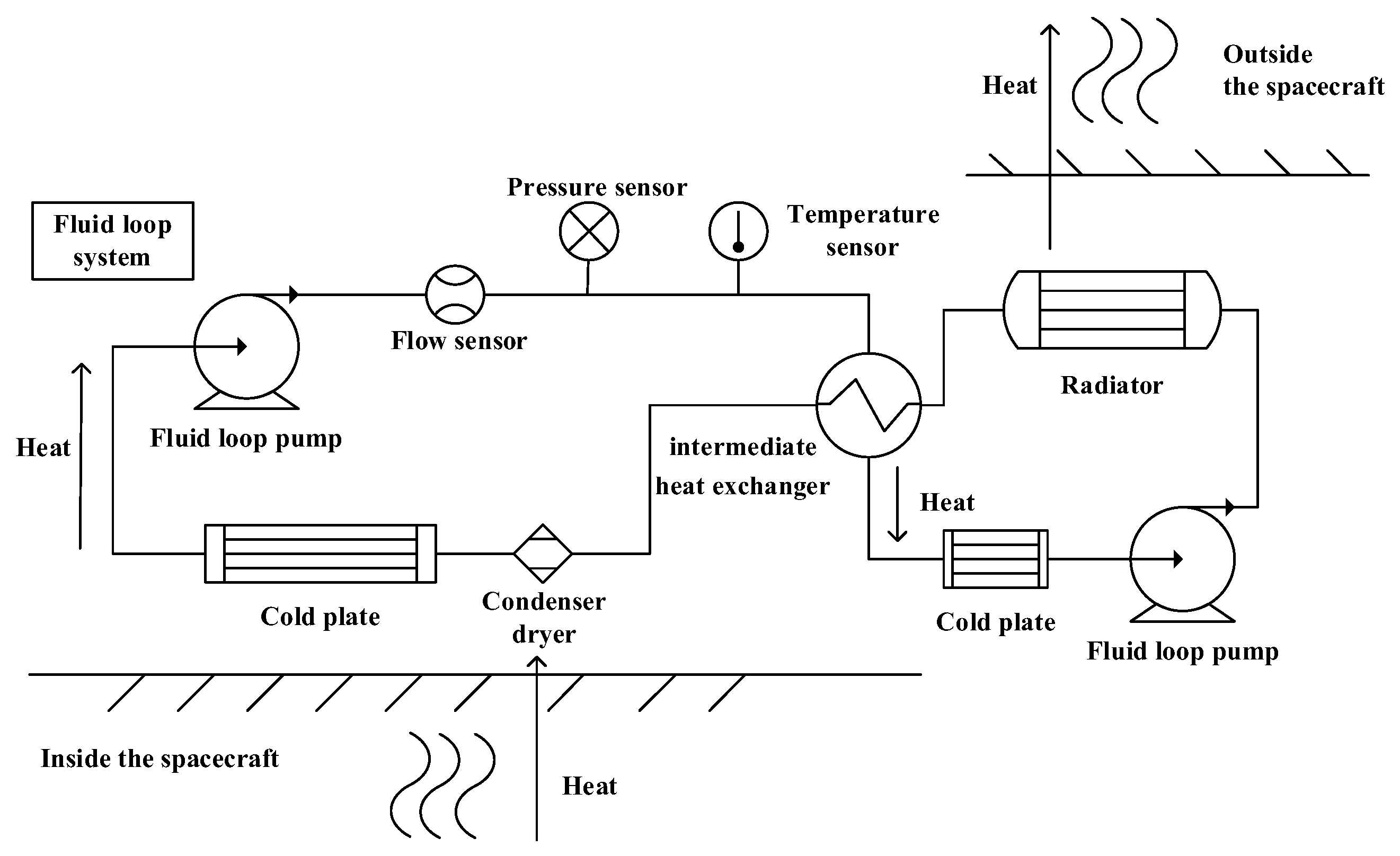

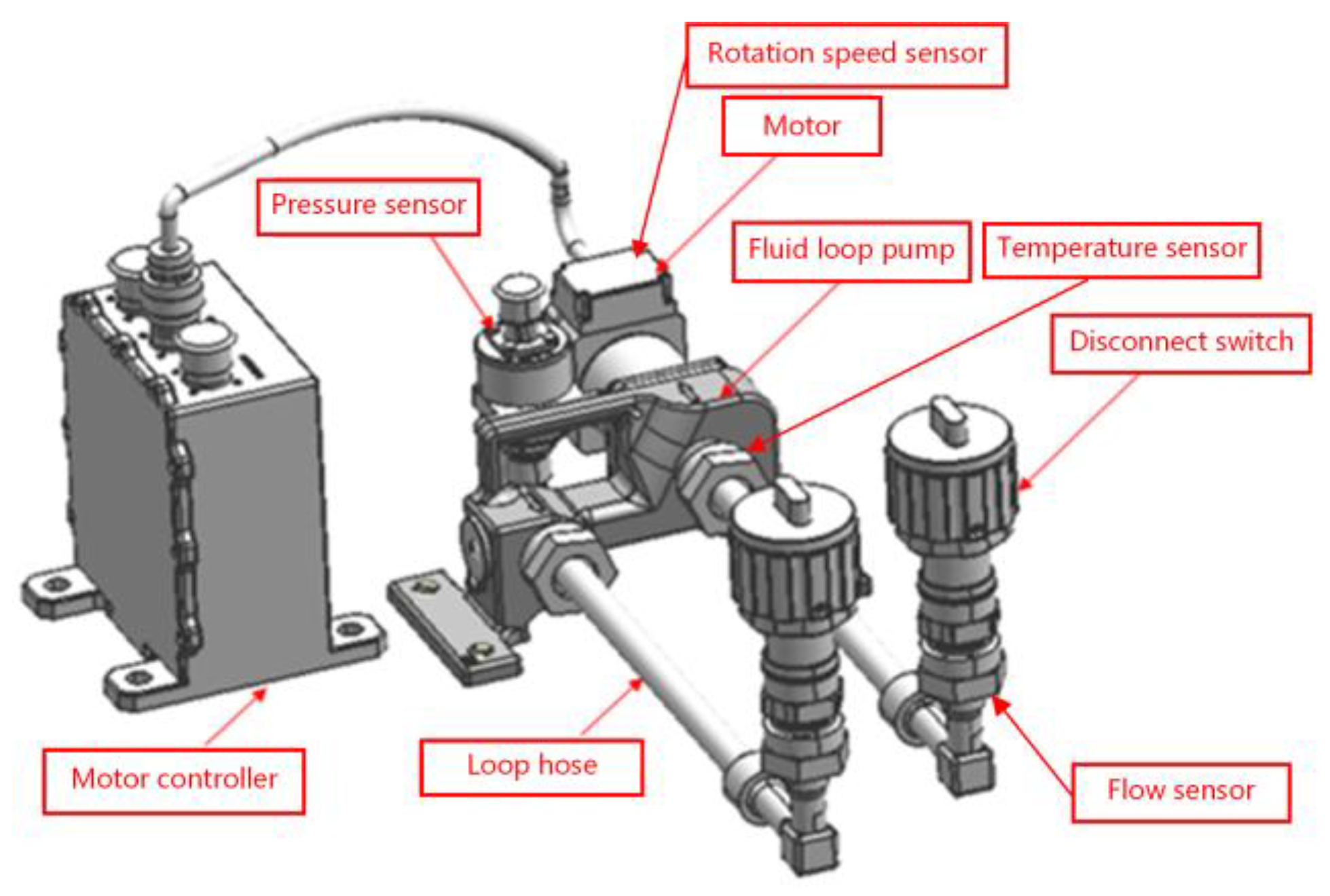

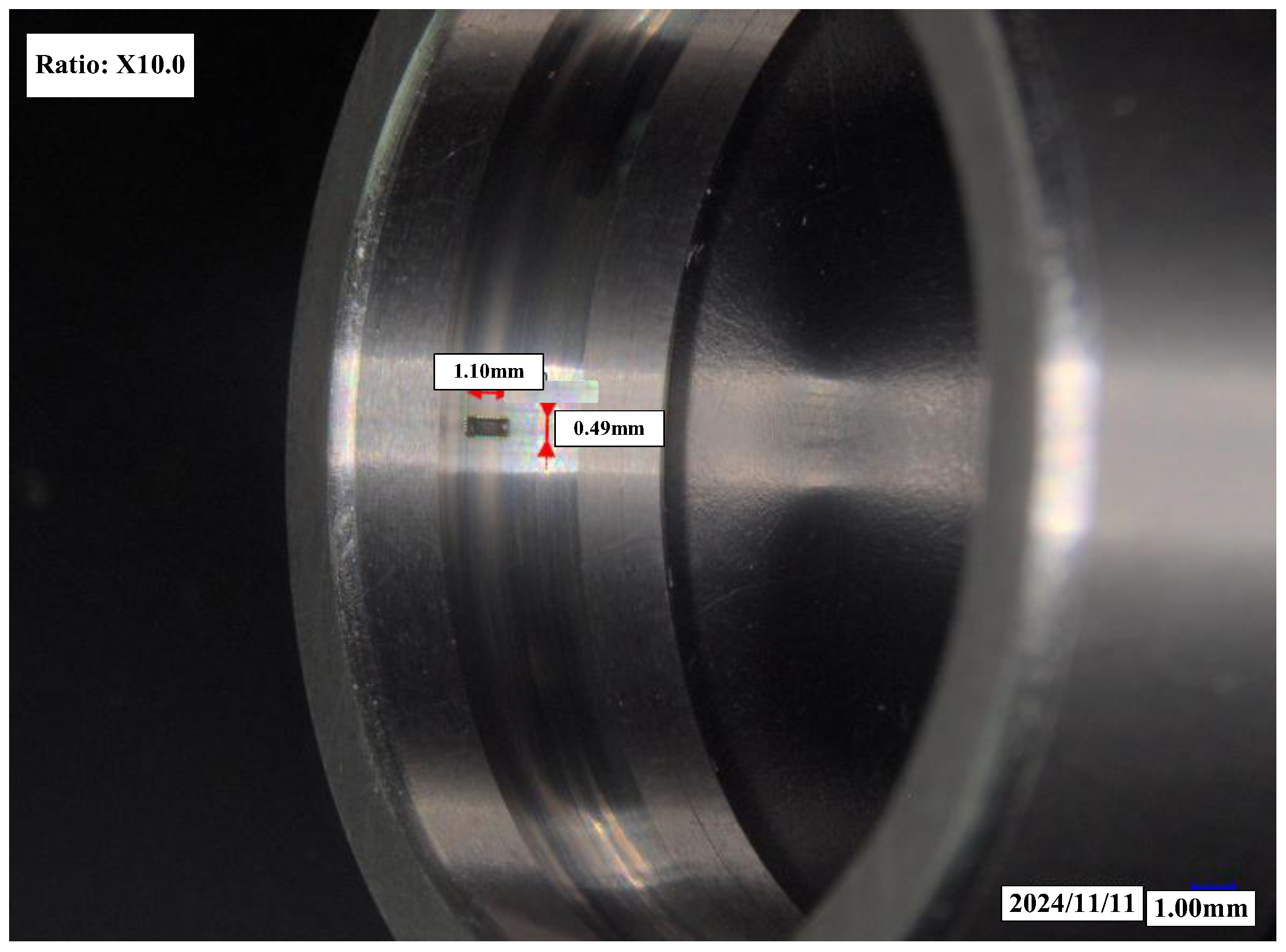

Based on the experiences of the Mir space station and the International Space Station [8], the thermal control subsystem, particularly the fluid loop pumps, has been identified as having a high failure rate [9]. These components are key targets for fault diagnosis and on-orbit maintenance in space stations. Fluid loop pumps function as the “heart” of the thermal control system in spacecraft; a failure in these pumps could result in a total system breakdown and loss of temperature regulation. Such failures would not only critically affect the safe and reliable operation of various spacecraft systems but also directly jeopardize the health and safety of astronauts [10]. Therefore, research into fault diagnosis technologies for spacecraft fluid loop pumps is crucial for ensuring spacecraft reliability and crew safety.

Research on spacecraft fluid loop pumps has primarily focused on design and heat transfer characteristics [11,12,13], with relatively few studies addressing fault diagnosis. However, there has been significant research on centrifugal pumps, which share similar structures with fluid loop pumps. These studies mainly use physics-model-driven and signal-processing-based approaches for fault diagnosis. For instance, Kalleso et al. [14] proposed a physics-model-driven fault diagnosis method for centrifugal pumps that combines structural analysis, redundancy analysis, and observer design. This approach has proven effective in detecting and isolating five types of mechanical and hydraulic faults in centrifugal pumps. Beckerle et al. [15] discussed the use of a balanced filter in model-based fault diagnosis for centrifugal pumps, achieving accurate fault identification. Muralidharan and Sugumaran [16] employed wavelet transform techniques to extract multi-dimensional, multi-scale features from centrifugal pump operation signals, using a decision tree algorithm to swiftly and accurately identify various fault types. While physics-model-driven methods require detailed knowledge of pump structures, limiting their generalizability, signal-processing methods have yielded promising results for specific issues [17]. Nevertheless, both approaches face challenges in addressing the increasing diversity of fault types and achieving higher methodological intelligence.

In recent years, neural networks have become a mainstream approach for intelligent fault diagnosis. Zaman et al. [18] introduced a centrifugal pump fault diagnosis method using a SobelEdge spectrogram as input to a convolutional neural network (CNN). The results demonstrated that the SobelEdge spectrogram effectively enhanced the identification of fault-related information, while the CNN, with its strong feature extraction and classification capabilities, achieved accurate fault classification. AlTobi et al. [19] explored the combined application of multi-layer perceptron (MLP) neural networks and support vector machines (SVMs) for centrifugal pump fault diagnosis, providing a comprehensive evaluation of these methods in improving fault classification accuracy and efficiency. Ranawat et al. [20] proposed the use of SVM and artificial neural networks (ANNs) to diagnose centrifugal pump faults under various operating conditions. They extracted different statistical features from vibration signals in both time and frequency domains, utilizing various feature ranking methods to compare fault diagnosis efficiency. Yu et al. [21] investigated the application of four neural networks for the intelligent fault diagnosis of spacecraft fluid loop pumps, finding that fuzzy neural networks performed the best. Despite these advancements, several challenges remain. Factors such as randomness in training and test set divisions, variability in initial weight and threshold values, and hyperparameter variations (such as the number of hidden layer neurons and learning rates) can introduce significant randomness and instability into prediction results. These issues may hinder the practical application of these technologies in spacecraft.

For practical application of spacecraft fault diagnosis technology, software development is essential. NASA and ESA have conducted extensive research on on-orbit fault diagnosis technology for manned spacecraft, resulting in systems like the Advanced Caution and Warning System (ACAWS), which enables system-level fault diagnosis and health management [22,23]. NASA has also developed several fault diagnosis software tools, including TEAMS-RT (Testability Engineering and Maintenance System Real-time Diagnostics Tool), based on graphical models [24], Livingstone, based on discrete models [25], and HyDE (Hybrid Diagnostic Engine), based on hybrid models [26]. These systems have been applied on the International Space Station. Nevertheless, while these software tools have significantly contributed to the initial development of on-orbit fault diagnosis technology, they were designed in an earlier era and primarily rely on traditional fault diagnosis methods. As a result, they may face challenges in achieving high diagnostic accuracy, simplifying operational procedures, and incorporating advanced intelligent features.

Looking ahead, fault diagnosis technology for spacecraft fluid loop pumps is expected to advance, becoming more capable of handling diverse faults, multi-dimensional data, and complex models [27]. Although neural networks are at the forefront of intelligent fault diagnosis due to their high accuracy, they continue to be challenged by issues of randomness and instability [28]. Therefore, enhancing the stability of neural networks and integrating them into practical software applications remains a central challenge in unlocking the engineering potential of intelligent fault diagnosis technologies for spacecraft.

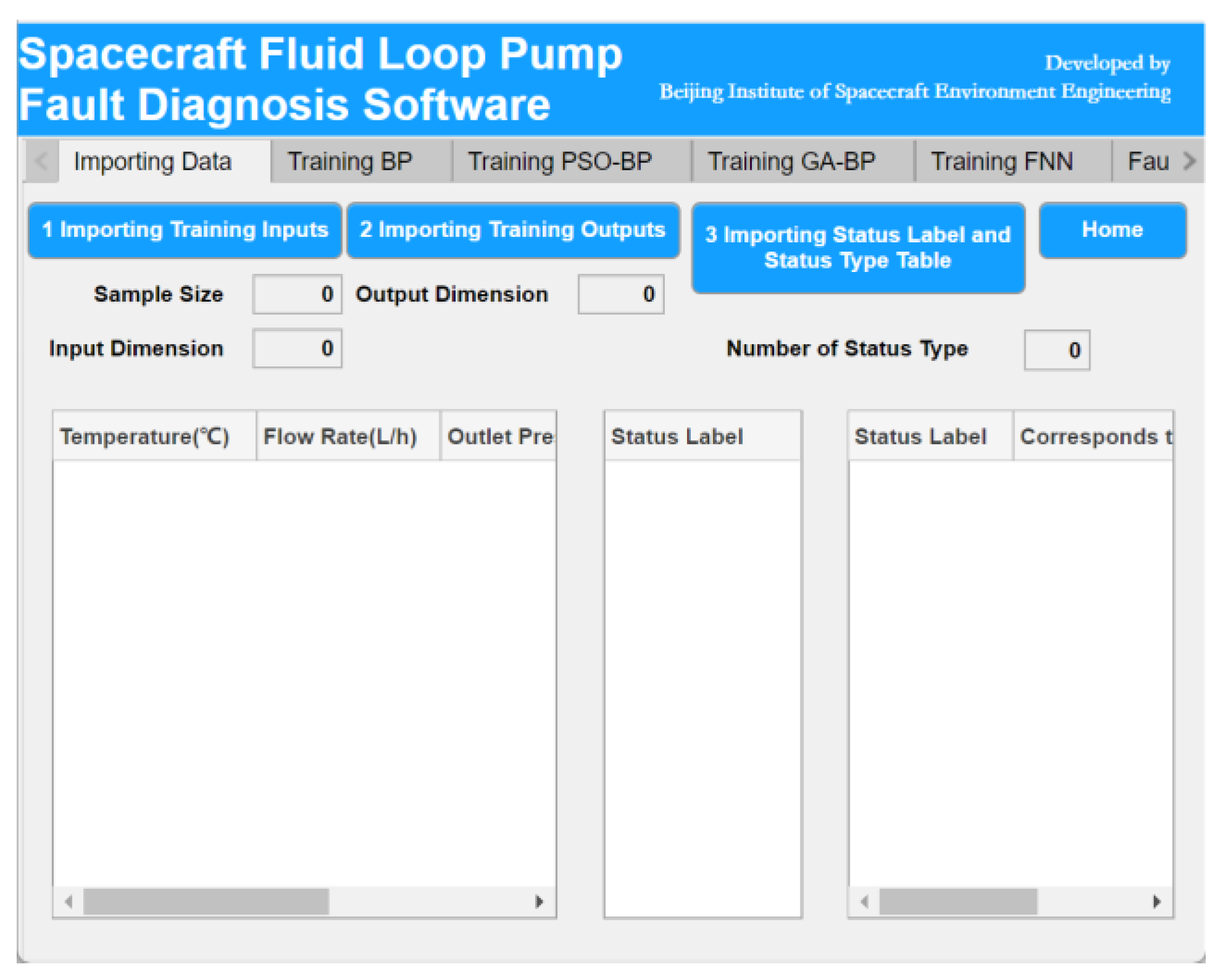

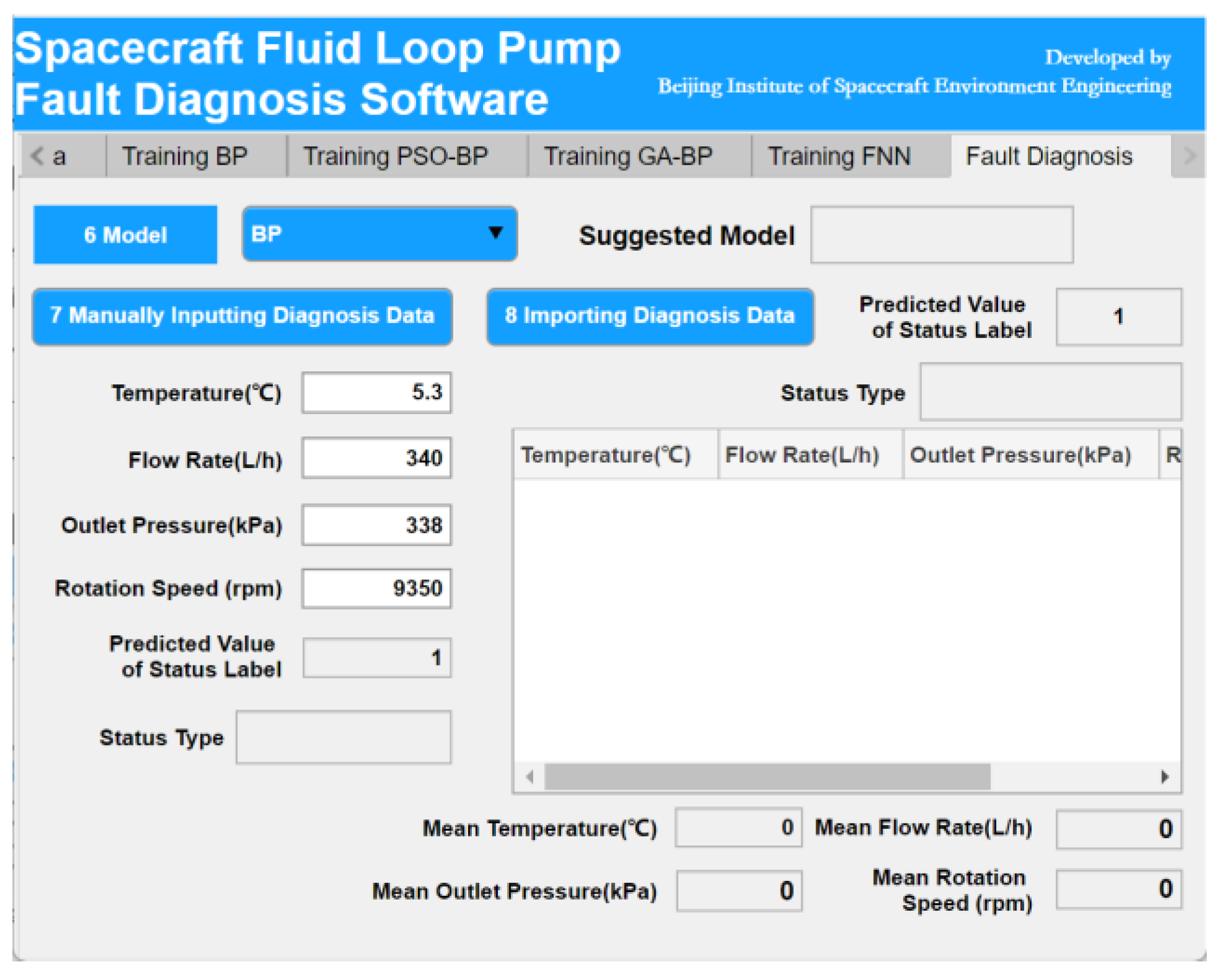

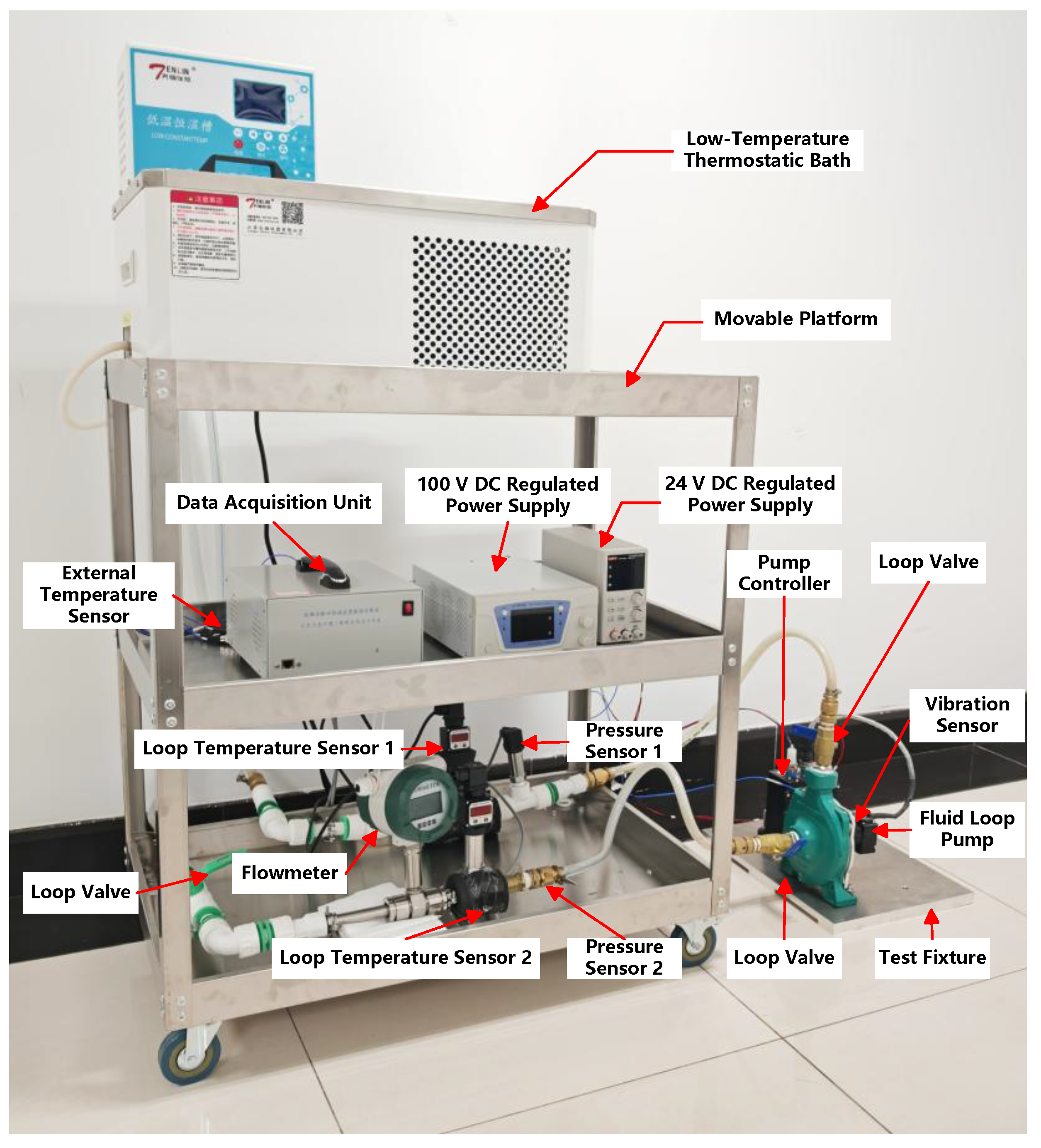

In response to the urgent need for highly accurate and stable intelligent fault diagnosis in spacecraft, this paper proposes an intelligent fault diagnosis method for spacecraft fluid loop pumps based on a multi-neural network fusion model (MNN). The paper also designs software for practical applications and validates the method using both on-orbit telemetry data and ground-based test data. The remainder of this paper is organized as follows.

Section 2 introduces the structure and operation principle of the spacecraft fluid loop pump, providing the physical foundation for subsequent analysis.

Section 3 describes the proposed intelligent fault diagnosis methodology, including the four base neural network models and the construction of the MNN.

Section 4 presents the development and implementation of the intelligent fault diagnosis software.

Section 5 validates the proposed method using both on-orbit telemetry data and ground-based test data and includes a detailed parametric and performance analysis.

Finally, Section 6 concludes the paper and discusses future research directions.

3. Intelligent Fault Diagnosis Method

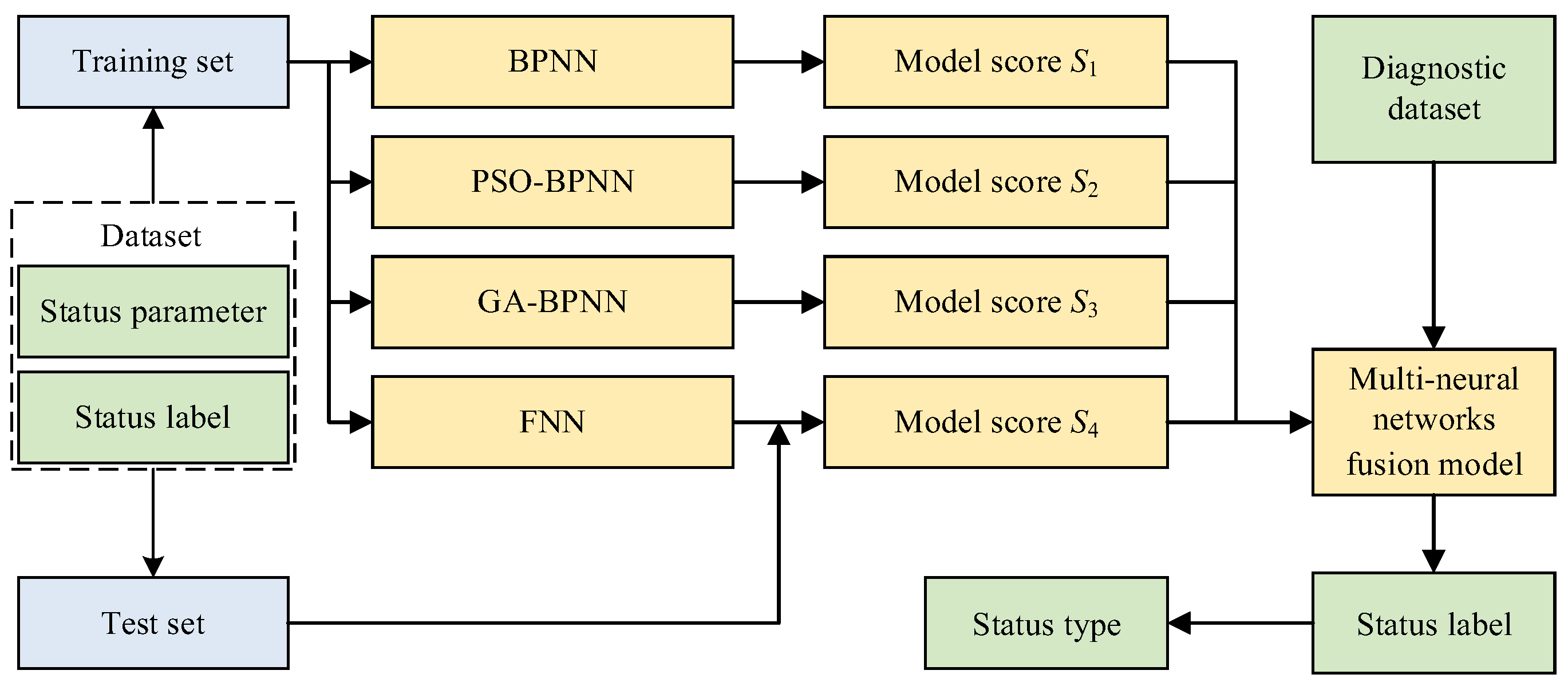

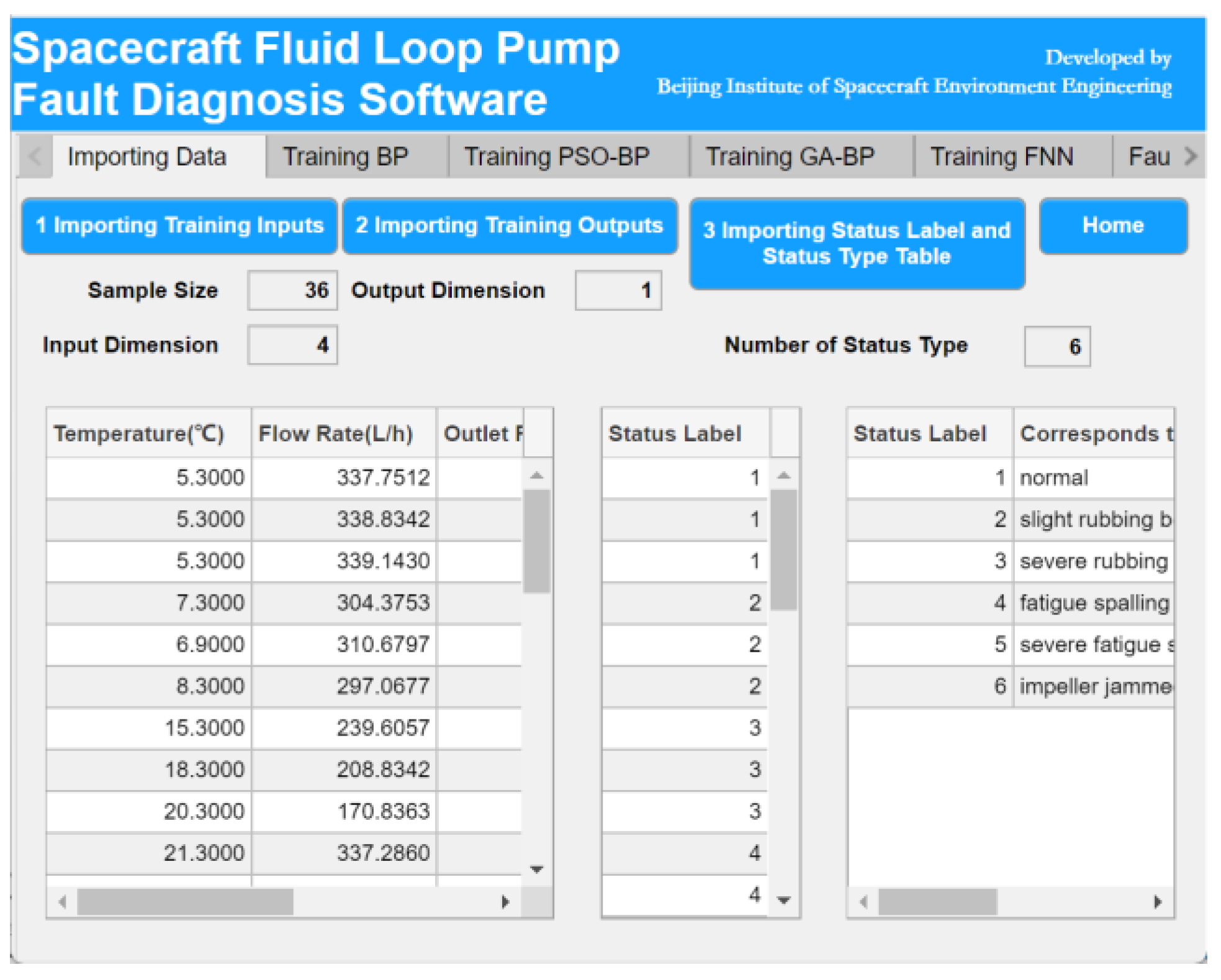

The intelligent fault diagnosis method uses the four status parameters of fluid loop pumps as inputs and their corresponding status labels as outputs. Four distinct neural network models are trained individually on this dataset. After training, each model is evaluated to generate a model score. These scores serve as the basis for weighting each model, and the weighted models are then integrated into an MNN. This fusion model is subsequently used to diagnose the system data with enhanced accuracy and stability.

3.1. Four Neural Network Models

The structures of these four neural networks are defined by specific model parameters, which are detailed in the “Model Parameter Settings” section. The dataset, comprising the four status parameters and their corresponding status labels for the fluid loop pump, is split into training and test sets. Each neural network is trained independently, taking the status parameters of fluid loop pumps as inputs and generating predicted status labels as outputs.

3.1.1. BPNN Model

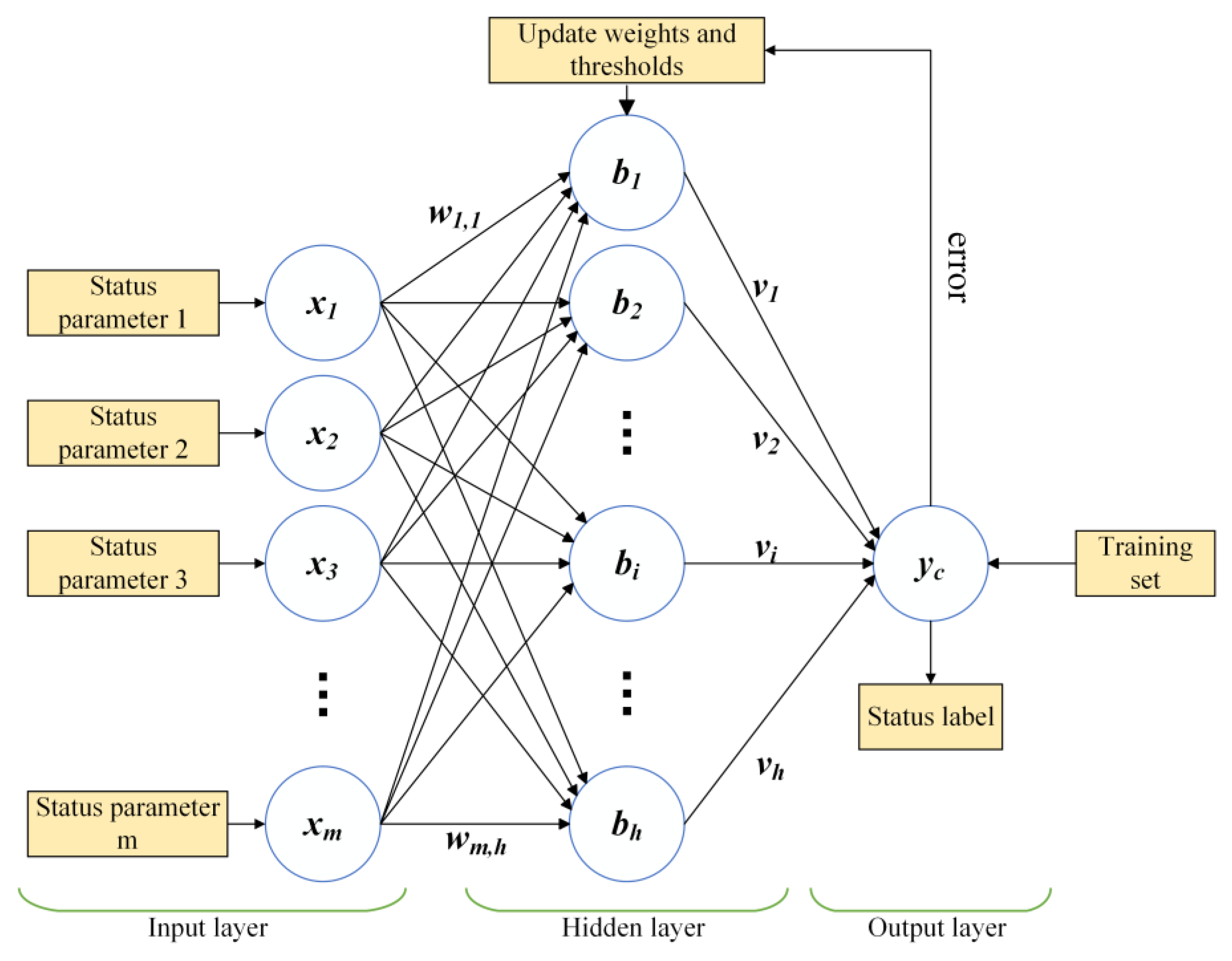

The back propagation neural network (BPNN) is composed of an input layer, a hidden layer, and an output layer. During training, the network iteratively adjusts the weights and thresholds to minimize the discrepancy between the predicted outputs and the actual status labels. Key parameters for constructing a BPNN include the number of neurons in the hidden layer, error threshold, number of iterations, and learning rate.

Figure 3 illustrates the BPNN structure used in this study, with the input layer comprising four neurons that represent the fluid loop pump status parameters and the output layer containing one neuron that represents the status label.

The mathematical relationships governing the connections between the input and hidden layers, and between the hidden and output layers, are described by Equations (1) and (2) [29].

where $x_i$ is the $i$-th input to the input neurons (the $i$-th status parameter) and $m$ is the number of input neurons; $b_j$ is the $j$-th input to the hidden layer neurons, $h$ is the number of hidden layer neurons, and $\theta_j$ is the threshold of the $j$-th hidden layer neuron; $w_{j,i}$ is the connection weight between the $j$-th hidden layer neuron and the $i$-th input neuron; and $f(\cdot)$ is the Sigmoid activation function. In addition, $y_c$ is the prediction value of the neural network, $\beta$ is the threshold of the prediction value, and $v_j$ is the connection weight between the $j$-th hidden layer neuron and the prediction value of the neural network.
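The forward pass through such a three-layer network can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes that each threshold is subtracted from the weighted sum, that the Sigmoid is applied in the hidden layer only, and that the output neuron is linear. The function name `bpnn_forward` and the all-zero example weights are hypothetical.

```python
import math


def sigmoid(z):
    """Sigmoid activation f(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def bpnn_forward(x, w, theta, v, beta):
    """Forward pass of a single-hidden-layer network (assumed form).

    x     : list of m inputs (the status parameters)
    w     : h rows of m weights, w[j][i] links input i to hidden neuron j
    theta : h hidden-layer thresholds
    v     : h weights linking hidden neurons to the output
    beta  : threshold of the output neuron
    """
    # Hidden layer: weighted sum minus threshold, passed through the Sigmoid.
    b = [
        sigmoid(sum(w_ji * x_i for w_ji, x_i in zip(w_j, x)) - theta_j)
        for w_j, theta_j in zip(w, theta)
    ]
    # Output layer: linear combination of hidden outputs minus the threshold.
    return sum(v_j * b_j for v_j, b_j in zip(v, b)) - beta


# Hypothetical example: four inputs, two hidden neurons, all weights zero,
# so every hidden neuron outputs sigmoid(0) = 0.5.
y_c = bpnn_forward([1.0, 2.0, 3.0, 4.0],
                   [[0.0] * 4, [0.0] * 4],
                   [0.0, 0.0],
                   [1.0, 1.0],
                   0.5)
```

Training then amounts to adjusting `w`, `theta`, `v`, and `beta` so that `y_c` approaches the actual status label.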

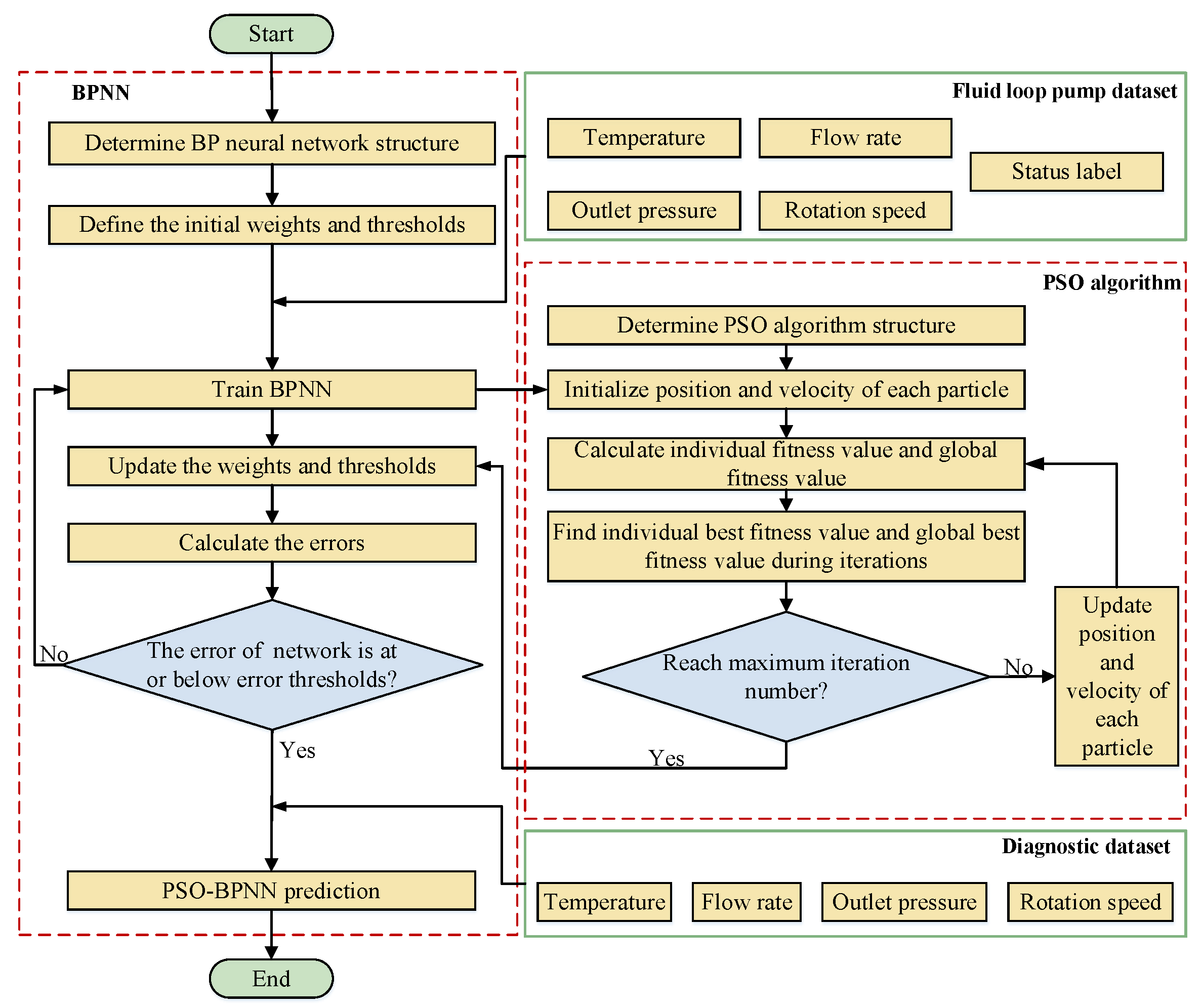

3.1.2. PSO-BPNN Model

The particle swarm optimization-back propagation neural network (PSO-BPNN) is an enhanced version of the BPNN. It uses the particle swarm optimization (PSO) algorithm to optimize the weights and thresholds of the BPNN, thereby improving its performance. This process is illustrated in Figure 4. In the PSO algorithm, each particle represents a potential solution in the solution space for the weights and thresholds of the neural network. By simulating particle velocities and positions, the algorithm aims to find the global optimum while avoiding being trapped in local optima. Key parameters of the PSO algorithm include the learning factor, number of iterations, population size, and the maximum and minimum velocities and positions of particles. The positions and velocities of particles are updated according to Equations (3) and (4) [30].

Equations (3) and (4) involve the position and the velocity of the $j$-th particle in the $\gamma$-th generation, the individual best of the $j$-th particle at the $\gamma$-th iteration, and the global best among $\gamma$ iterations; $c_1$ and $c_2$ are the learning factors, while $r_1$ and $r_2$ are random numbers.
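A single particle update can be sketched as follows. This is a minimal illustration assuming the textbook PSO rule without an inertia weight, with velocities and positions clamped to the configured bounds. The function name `pso_step`, the default learning factors, and the bound values are hypothetical and are not taken from the paper.

```python
import random


def clamp(value, low, high):
    """Restrict value to the interval [low, high]."""
    return max(low, min(high, value))


def pso_step(position, velocity, personal_best, global_best,
             c1=1.5, c2=1.5, v_bounds=(-1.0, 1.0), x_bounds=(-5.0, 5.0),
             rng=random):
    """Update one particle (a flat list of weights and thresholds).

    Assumed rule, applied per dimension:
        v <- v + c1 * r1 * (personal_best - x) + c2 * r2 * (global_best - x)
        x <- x + v
    """
    new_position, new_velocity = [], []
    for x, v, p, g in zip(position, velocity, personal_best, global_best):
        r1, r2 = rng.random(), rng.random()  # fresh random numbers in [0, 1)
        v_next = clamp(v + c1 * r1 * (p - x) + c2 * r2 * (g - x), *v_bounds)
        x_next = clamp(x + v_next, *x_bounds)
        new_velocity.append(v_next)
        new_position.append(x_next)
    return new_position, new_velocity


# A particle already sitting on both bests, with zero velocity, stays put.
pos, vel = pso_step([1.0, 2.0], [0.0, 0.0], [1.0, 2.0], [1.0, 2.0])
```

Clamping is one simple way to honor the maximum and minimum velocities and positions listed among the key parameters.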

During the iteration optimization process, the fitness of individual particles and the global fitness are assessed using Equations (5) and (6).

Equations (5) and (6) involve the prediction value and the actual value of the $j$-th particle in the $\gamma$-th generation; $n$ is the population size; $f$ is the fitness of the $\gamma$-th generation; and $f_g$ is the global fitness.
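As a rough illustration of this bookkeeping, the sketch below assumes that the generation fitness is the mean squared difference between predicted and actual values over the $n$ particles, and that the global fitness is the smallest generation fitness seen so far. Both choices, and the function names, are assumptions; the paper's Equations (5) and (6) may use a different error measure.

```python
def generation_fitness(predicted, actual):
    """Assumed fitness of one generation: mean squared prediction error
    over the n particles (lower is better)."""
    n = len(predicted)
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / n


def global_fitness(fitness_history):
    """Assumed global fitness: the best (smallest) generation fitness so far."""
    return min(fitness_history)


# Hypothetical values for three generations of a two-particle swarm.
history = [
    generation_fitness([1.0, 2.0], [1.0, 4.0]),
    generation_fitness([1.0, 3.0], [1.0, 4.0]),
    generation_fitness([1.5, 3.5], [1.0, 4.0]),
]
best = global_fitness(history)
```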

3.1.3. GA-BPNN Model

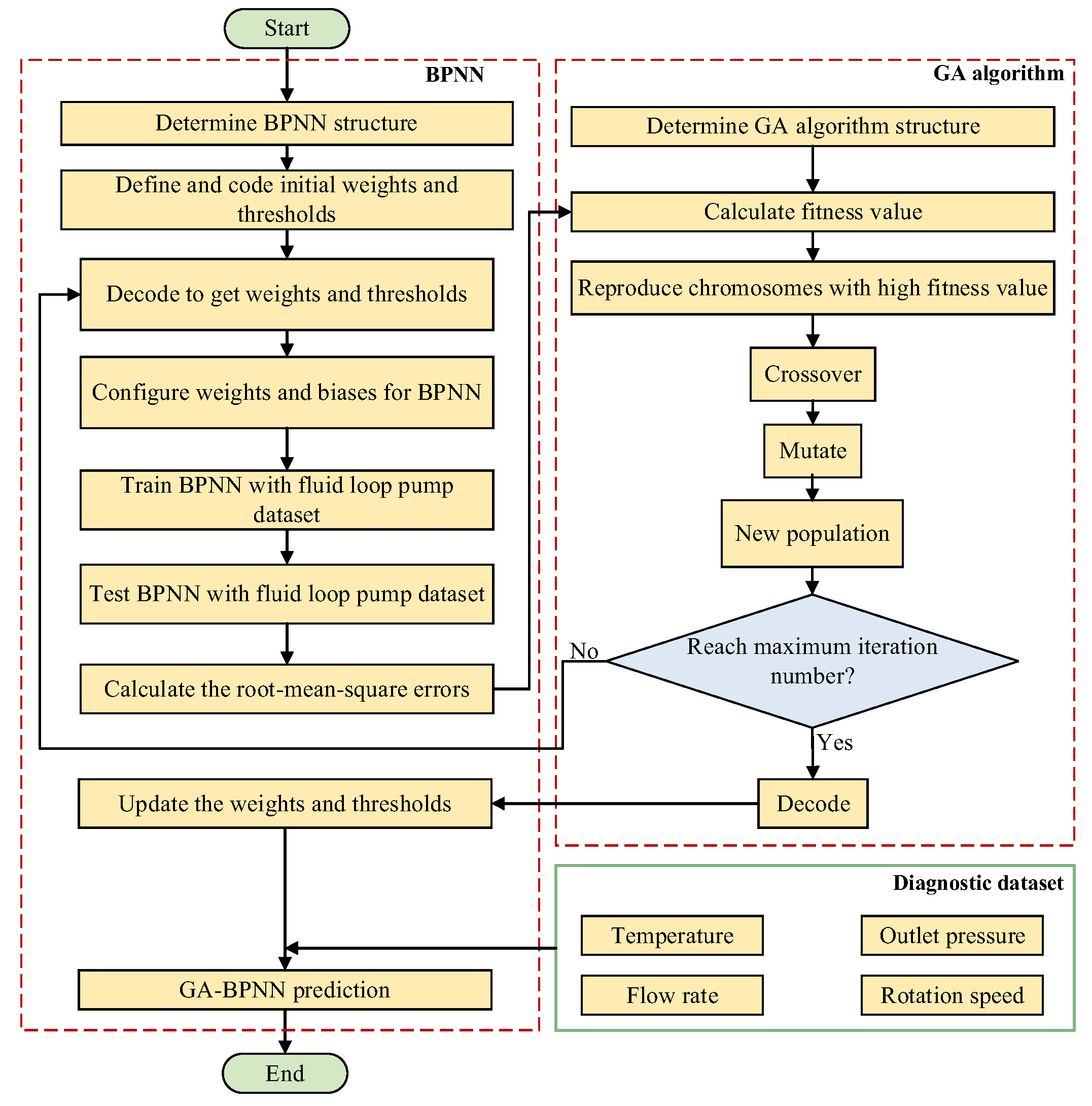

The genetic algorithm-back propagation neural network (GA-BPNN) is also an enhanced variant of the BPNN. It uses the genetic algorithm (GA) to optimize the thresholds and weights of the BPNN, as shown in Figure 5. The GA mimics the evolutionary processes of natural populations through selection, crossover, and mutation. It iteratively searches for the optimal initial weights and thresholds of the network, thereby achieving global optimization [31]. In this study, the GA’s performance is tuned by configuring parameters such as the number of generations and population size.
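One generation of such a search can be sketched as below. The paper names only selection, crossover, and mutation; the specific operators used here (binary tournament selection, single-point crossover, Gaussian mutation) and all rate values are assumptions chosen for brevity, and `evolve` is a hypothetical helper name. Each chromosome is a flat list of candidate initial weights and thresholds, and lower fitness is treated as better.

```python
import random


def evolve(population, fitness, crossover_rate=0.8, mutation_rate=0.05,
           mutation_scale=0.1, rng=random):
    """Produce the next generation from a list of chromosomes.

    population : list of equal-length lists of floats (at least two)
    fitness    : callable mapping a chromosome to an error (lower is better)
    """
    def select():
        # Binary tournament: draw two distinct chromosomes, keep the fitter.
        a, b = rng.sample(population, 2)
        return a if fitness(a) <= fitness(b) else b

    next_population = []
    while len(next_population) < len(population):
        parent1, parent2 = select(), select()
        child = list(parent1)
        # Single-point crossover between the two parents.
        if len(child) > 1 and rng.random() < crossover_rate:
            cut = rng.randrange(1, len(child))
            child = list(parent1[:cut]) + list(parent2[cut:])
        # Gaussian mutation applied gene by gene.
        child = [
            gene + rng.gauss(0.0, mutation_scale)
            if rng.random() < mutation_rate else gene
            for gene in child
        ]
        next_population.append(child)
    return next_population


# Hypothetical usage: minimize the sum of squares of a 5-gene chromosome.
rng = random.Random(0)
population = [[rng.uniform(-1.0, 1.0) for _ in range(5)] for _ in range(6)]
next_generation = evolve(population, lambda c: sum(g * g for g in c), rng=rng)
```

In a GA-BPNN setting, `fitness` would train or evaluate a BPNN initialized from the chromosome and return its prediction error.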

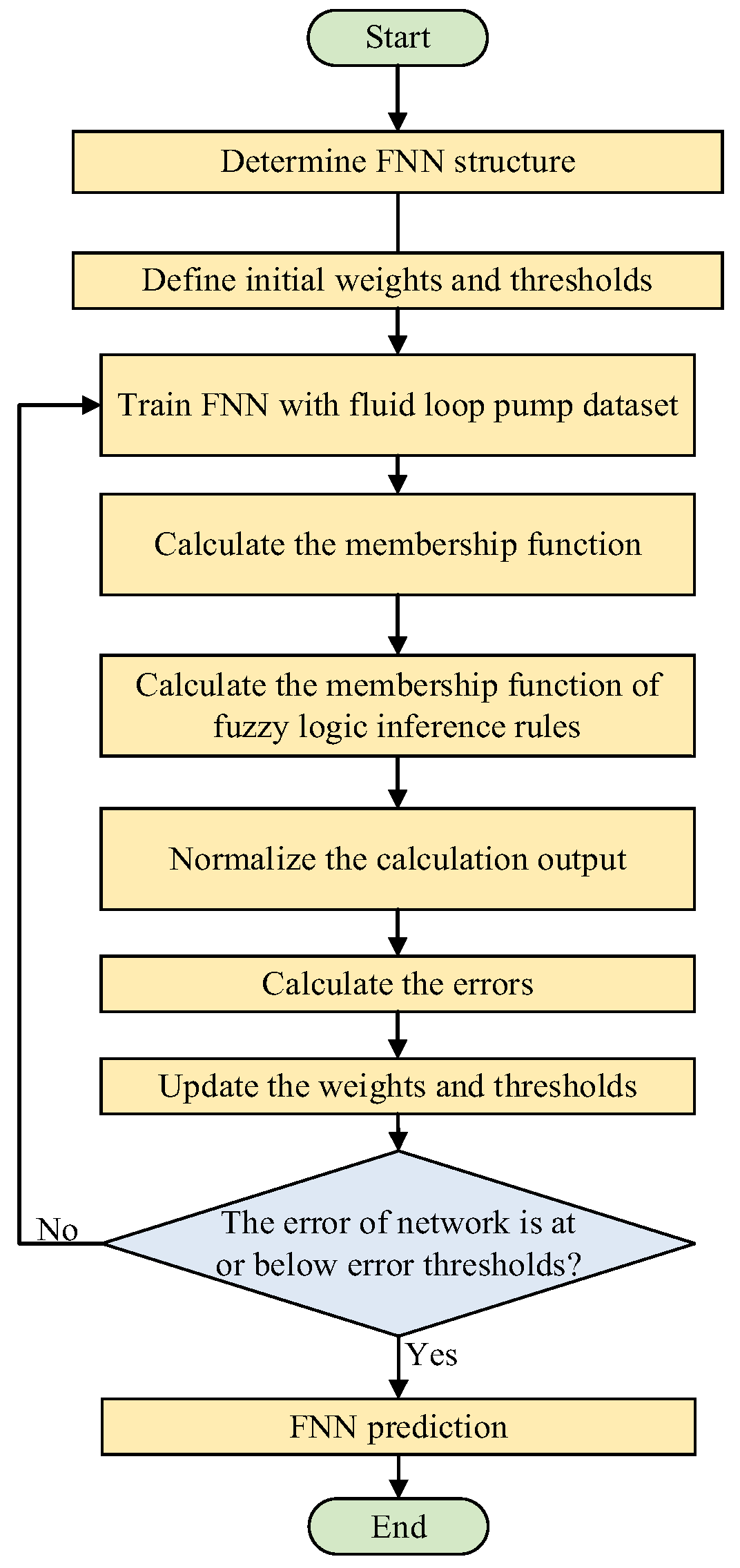

3.1.4. Fuzzy Neural Network Model

The fuzzy neural network (FNN) integrates fuzzy logic with an artificial feedforward neural network, offering strong self-learning capabilities and efficient direct data processing. The construction process of the FNN is illustrated in Figure 6. Initially, the network structure is established based on the inputs and outputs of the training set. Subsequently, the membership function, network parameters, and the weights and thresholds of the output layer are initialized. The Gaussian function is used for the membership function [32]. The training process involves calculating errors and updating the weights and thresholds iteratively until the error or iteration count meets the predefined termination conditions.

Here, the membership function is defined for the $j$-th network input in the $i$-th fuzzy subset, with $l$ the number of fuzzy subsets; its two parameters are the center point and the width of the membership function for the $j$-th network input in the $i$-th fuzzy subset.
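A Gaussian membership function of this kind can be sketched as follows. The sketch assumes the common form $\exp\left(-(x - c)^2 / (2\sigma^2)\right)$ with center $c$ and width $\sigma$; the exact scaling of the width term in the paper's membership equation may differ, and the names `gaussian_membership` and `fuzzify` are hypothetical.

```python
import math


def gaussian_membership(x, center, width):
    """Degree (0 to 1] to which input x belongs to a fuzzy subset with the
    given center and width (assumed form: exp(-(x - c)^2 / (2 * width^2)))."""
    return math.exp(-((x - center) ** 2) / (2.0 * width ** 2))


def fuzzify(inputs, centers, widths):
    """Membership degrees of every input j in every fuzzy subset i.

    centers[j][i] and widths[j][i] are the center and width for input j
    in fuzzy subset i; the result has the same shape.
    """
    return [
        [gaussian_membership(x, c, s) for c, s in zip(c_row, s_row)]
        for x, c_row, s_row in zip(inputs, centers, widths)
    ]


# Hypothetical example: two inputs, three fuzzy subsets each.
degrees = fuzzify(
    [0.0, 1.0],
    [[-1.0, 0.0, 1.0], [-1.0, 0.0, 1.0]],
    [[0.5, 0.5, 0.5], [0.5, 0.5, 0.5]],
)
```

The membership degree peaks at 1 when the input equals the center and decays symmetrically as the input moves away from it.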

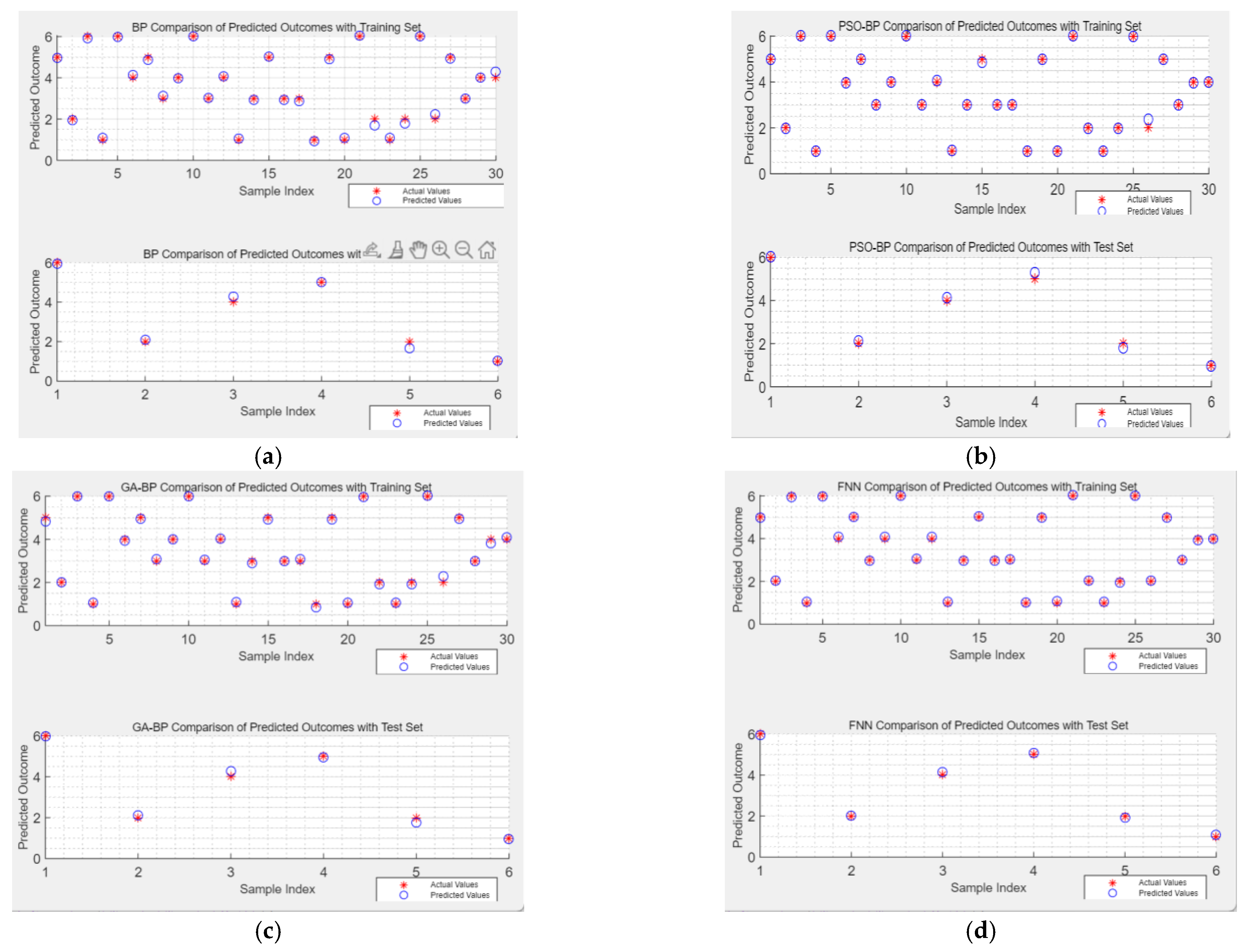

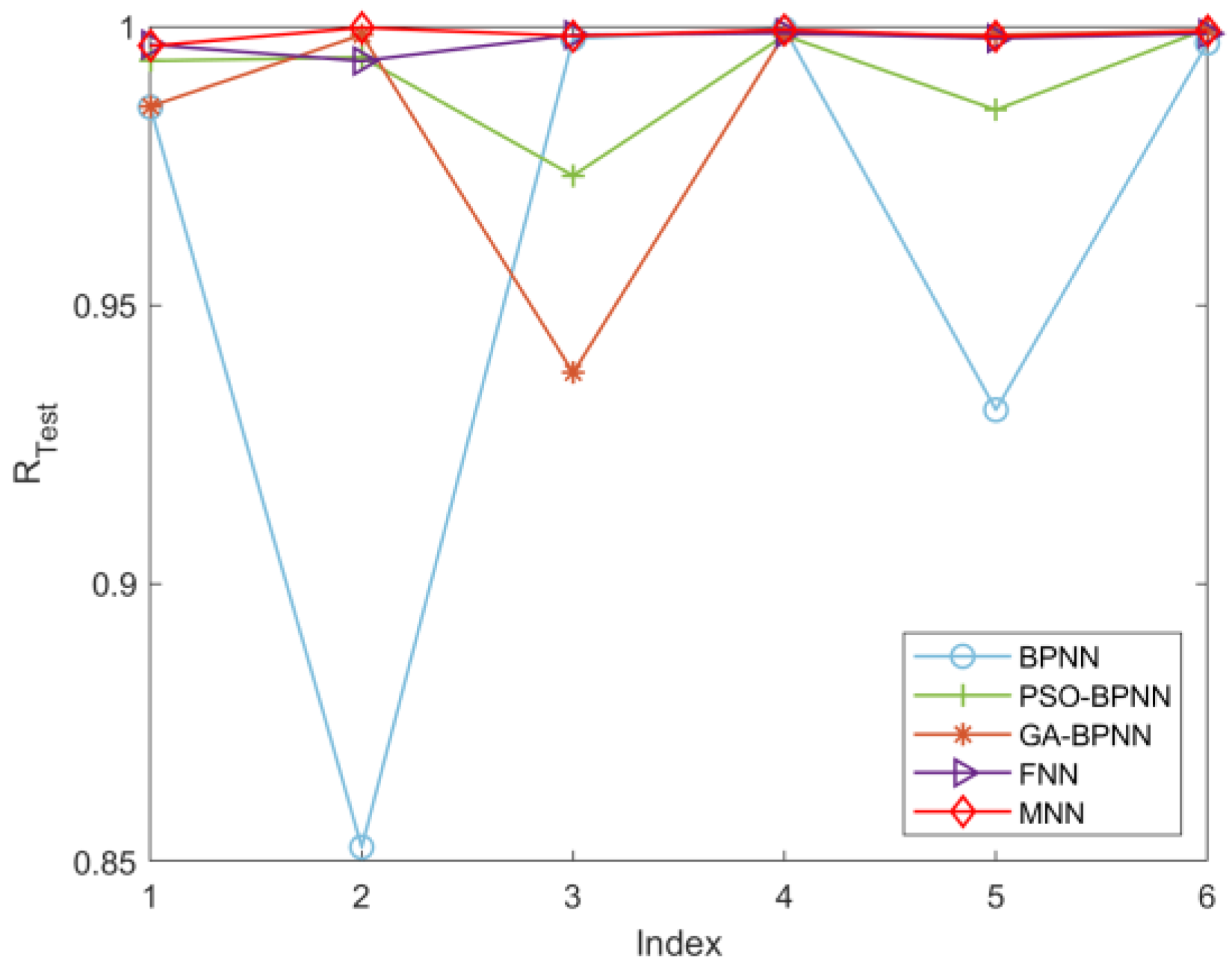

3.2. Model Evaluation Method

After training, each neural network is evaluated by calculating the mean squared error (MSE) $M_{\mathrm{train}}$ and the correlation coefficient (R) $R_{\mathrm{train}}$ between the actual status labels and the predicted values for the training set. Similarly, $M_{\mathrm{test}}$ and $R_{\mathrm{test}}$ are computed for the test set. The MSE and R, as shown in Equations (8) and (9), are chosen as evaluation metrics. It is crucial to minimize the prediction error, measured by the MSE, to accurately identify the operational status of fluid loop pumps. Additionally, a high R ensures that the model’s predictions are reliable and closely aligned with the actual status labels.
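Both metrics are straightforward to compute. The sketch below assumes that R is the Pearson correlation coefficient between actual and predicted values, which is the usual reading but is not stated explicitly in the text; the function names and sample values are hypothetical.

```python
import math


def mean_squared_error(actual, predicted):
    """Average of the squared differences between labels and predictions."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def correlation_coefficient(actual, predicted):
    """Pearson correlation between labels and predictions (assumed form of R).

    Raises ZeroDivisionError if either sequence is constant.
    """
    n = len(actual)
    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n
    covariance = sum((a - mean_a) * (p - mean_p)
                     for a, p in zip(actual, predicted))
    spread_a = sum((a - mean_a) ** 2 for a in actual)
    spread_p = sum((p - mean_p) ** 2 for p in predicted)
    return covariance / math.sqrt(spread_a * spread_p)


# Hypothetical status labels and raw network outputs.
labels = [1.0, 2.0, 3.0, 4.0]
outputs = [1.1, 1.9, 3.2, 3.8]
m_test = mean_squared_error(labels, outputs)
r_test = correlation_coefficient(labels, outputs)
```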

To comprehensively evaluate the model’s performance, a scoring mechanism is introduced, which combines the MSE and R from both the training and test sets. Since a higher R and a lower MSE indicate better model performance, the R/MSE ratio is used to integrate these two metrics. Additionally, considering that the MSE is typically much smaller than one, a logarithmic form is adopted to mitigate the significant impact of its minor fluctuations on the results. The training set $R_{\mathrm{train}}$ and $M_{\mathrm{train}}$ are combined with the test set $R_{\mathrm{test}}$ and $M_{\mathrm{test}}$ in a proportional manner, as shown in Equation (10).

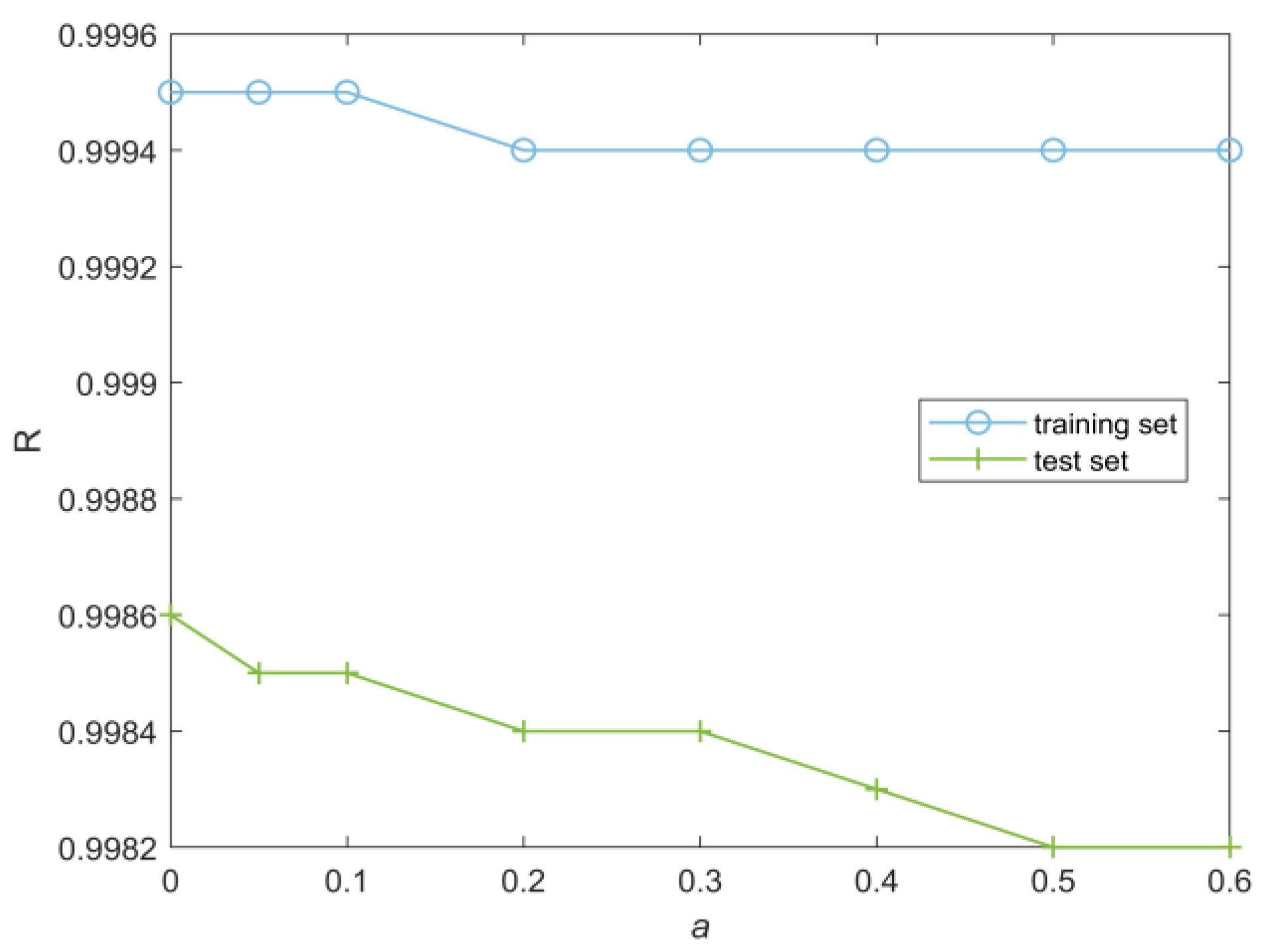

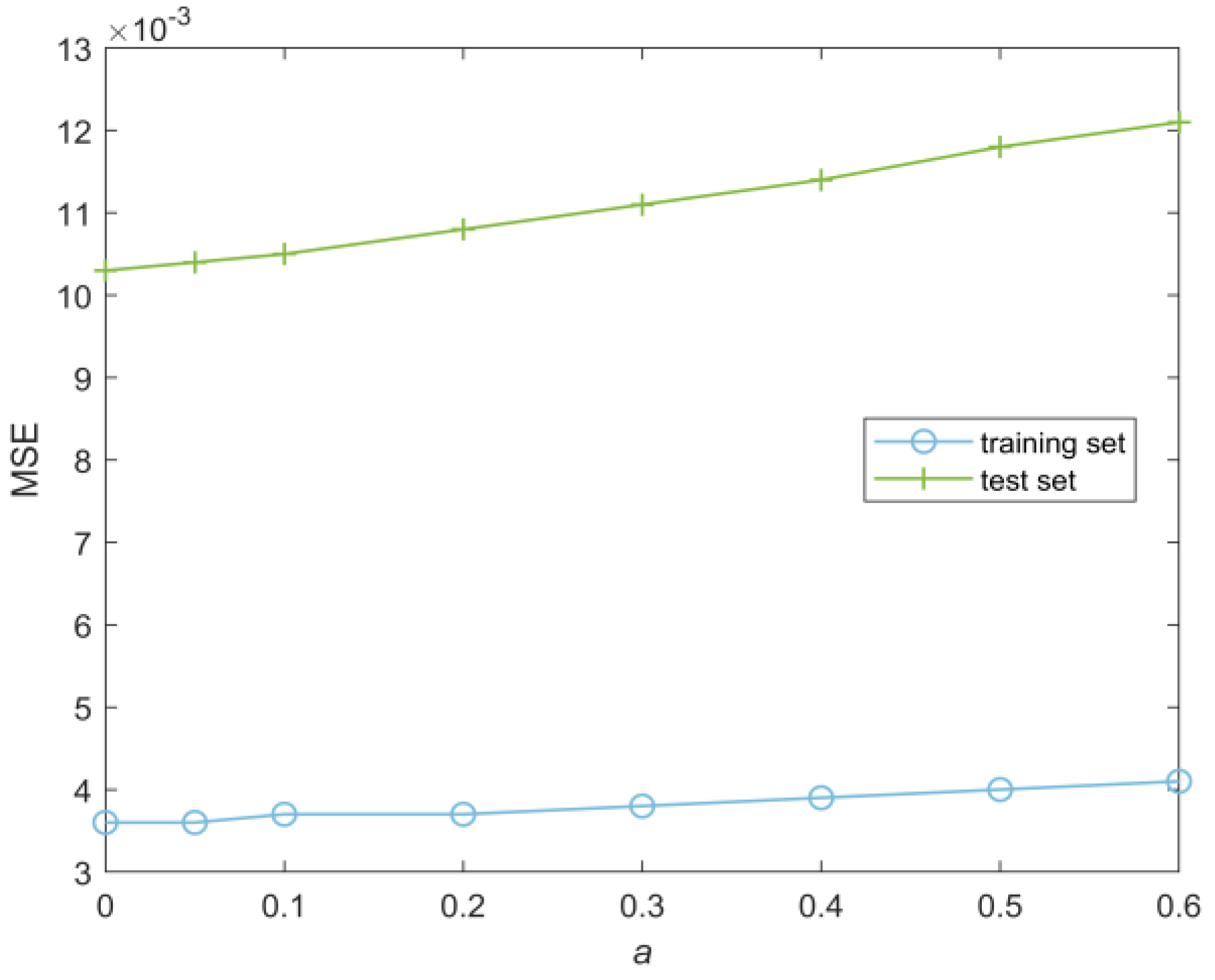

where $a$ ($0 \le a \le 1$) represents the weight assigned to the training set performance (including both $R_{\mathrm{train}}$ and $M_{\mathrm{train}}$) in the scoring formula. Meanwhile, $(1 - a)$ represents the weight assigned to the test set performance (including both $R_{\mathrm{test}}$ and $M_{\mathrm{test}}$) in the scoring formula. The optimal $a$ value will be further determined through $k$-fold cross-validation to minimize the variance of the test set’s MSE and R, ensuring the model’s stability and generalization ability. A higher model score indicates better training and testing performance. Based on the model scores of the four neural networks, an MNN can be constructed using a weighting algorithm.
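One way to read this description is a weighted sum of the logarithms of the two R/MSE ratios. The sketch below makes that reading concrete under stated assumptions: a base-10 logarithm, strictly positive R and MSE values, and a default of $a = 0.5$. None of these choices is confirmed by the text, and `model_score` is a hypothetical name.

```python
import math


def model_score(r_train, m_train, r_test, m_test, a=0.5):
    """Assumed score: a * log10(R_train / M_train)
                      + (1 - a) * log10(R_test / M_test).

    Requires positive R and MSE values so that the logarithms are defined.
    """
    if not 0.0 <= a <= 1.0:
        raise ValueError("a must lie in [0, 1]")
    if min(r_train, m_train, r_test, m_test) <= 0.0:
        raise ValueError("R and MSE values must be positive")
    return (a * math.log10(r_train / m_train)
            + (1.0 - a) * math.log10(r_test / m_test))


# Hypothetical metrics for a well-fitted and a poorly fitted model.
strong = model_score(0.99, 0.001, 0.98, 0.002)
weak = model_score(0.90, 0.010, 0.90, 0.020)
```

With this form, a tenfold reduction in MSE raises the corresponding term by one unit regardless of how small the MSE already is, which matches the stated motivation for the logarithm.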

To provide a more comprehensive assessment of the model’s diagnostic accuracy, we will also calculate Accuracy, Precision, Recall, and F1-score for the test set. Accuracy measures the proportion of all predictions that are correctly predicted, Precision measures the proportion of true positive predictions among all positive predictions, Recall measures the proportion of true positive predictions among all actual positive instances, and F1-score is the harmonic mean of Precision and Recall, providing a balanced measure of the model’s performance.
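These four measures can be computed directly from the actual and predicted status labels. Because the pump has six status labels, the sketch below treats one chosen label as the positive class (one-vs-rest) when computing Precision, Recall, and F1-score; that choice, the function name, and the sample labels are assumptions, since the text does not state how the multi-class case is averaged.

```python
def classification_metrics(y_true, y_pred, positive):
    """Accuracy over all classes, plus Precision, Recall and F1-score
    for the class `positive` treated one-vs-rest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2.0 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical labels: one "normal" sample (1) is misdiagnosed as label 2.
metrics = classification_metrics([1, 1, 2, 2], [1, 2, 2, 2], positive=2)
```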

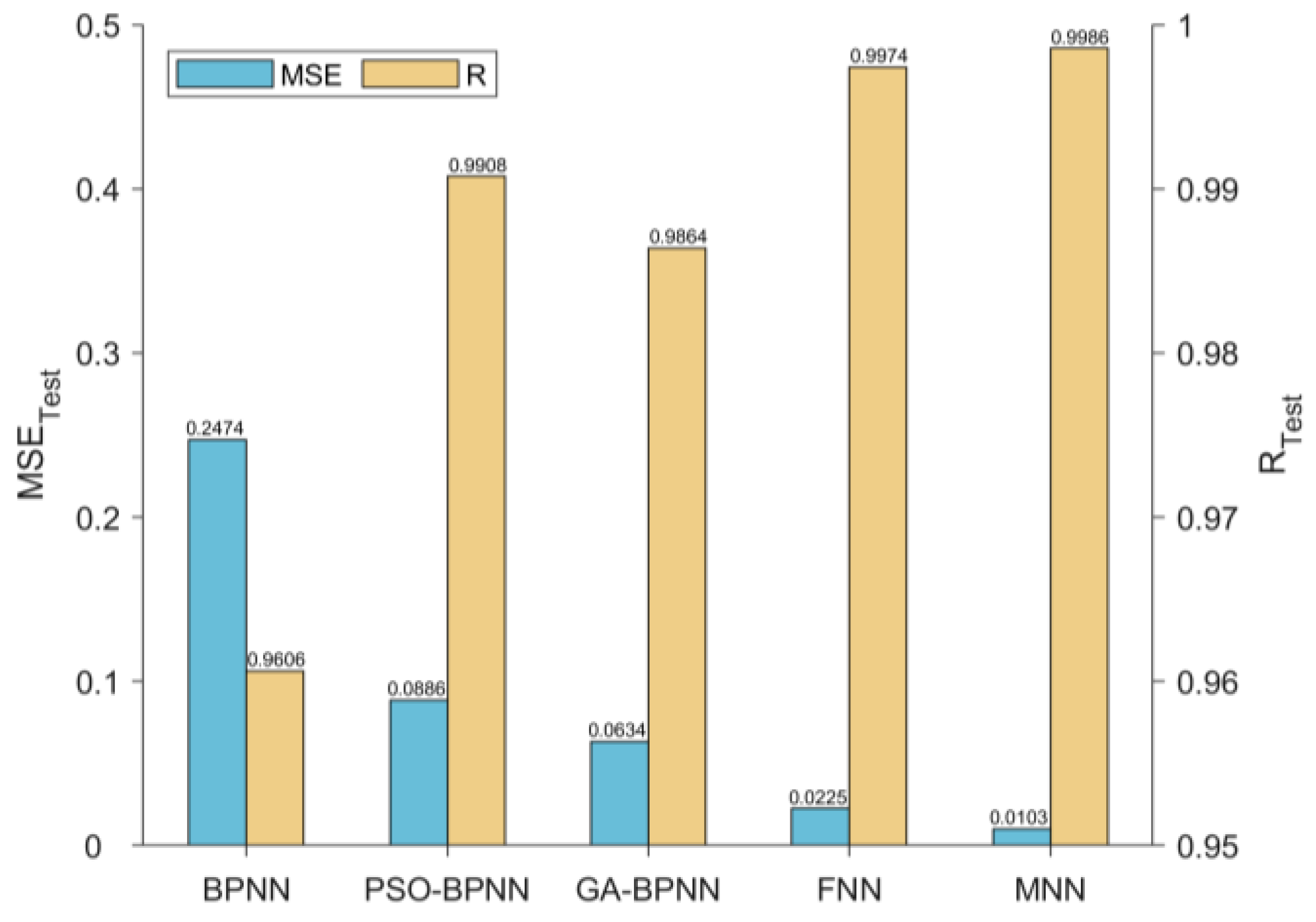

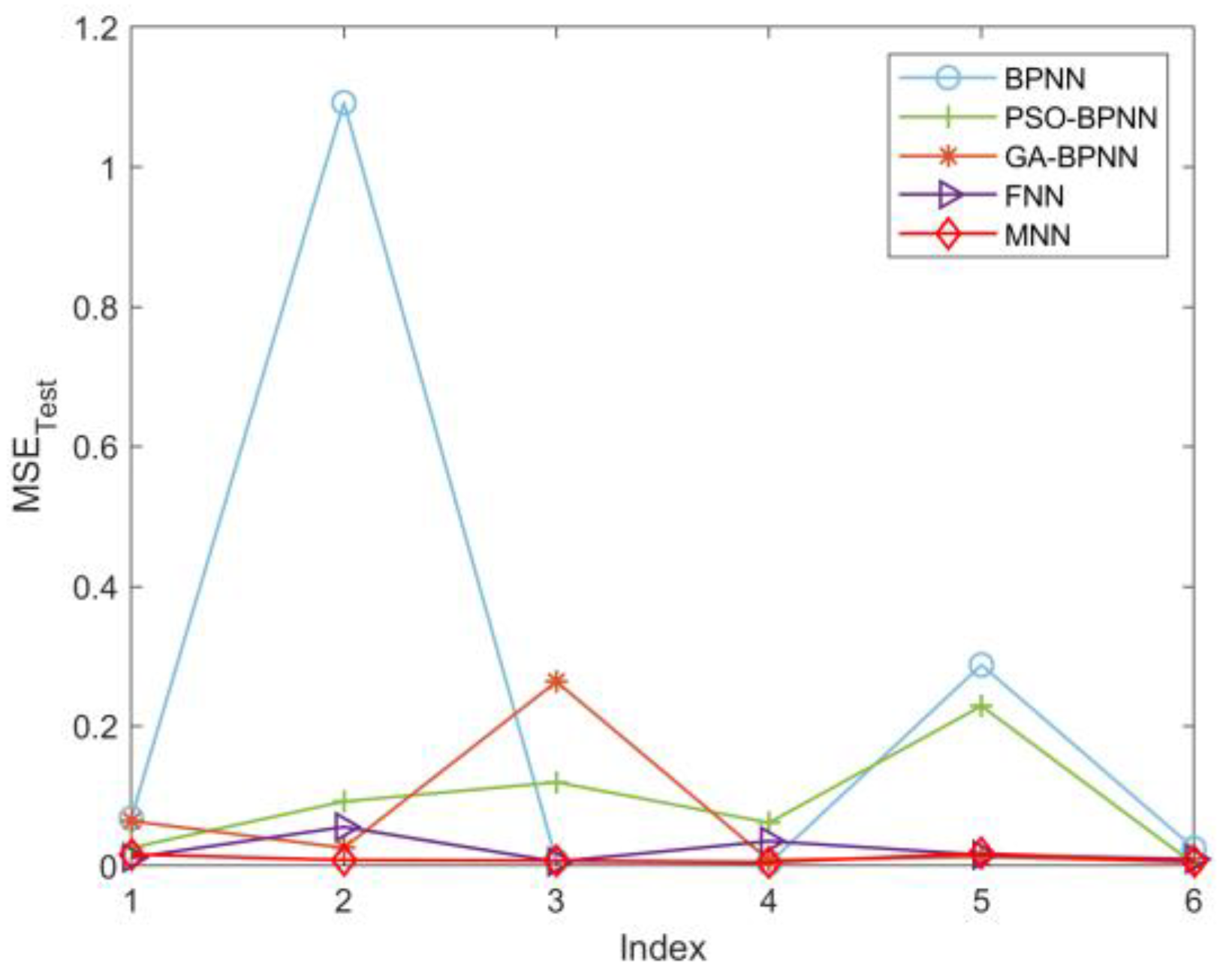

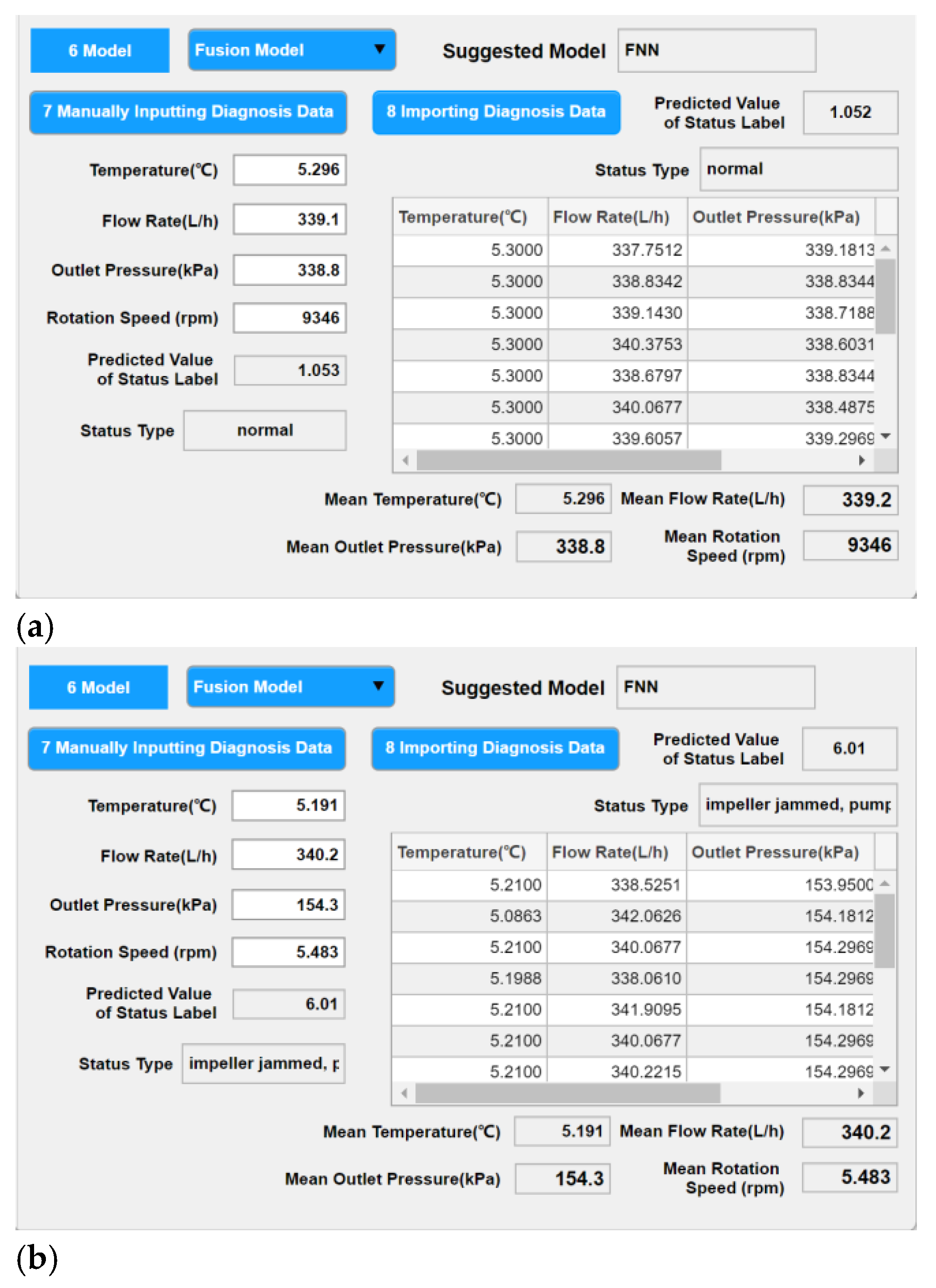

3.3. Multi-Neural Network Fusion Model Intelligent Fault Diagnosis Method

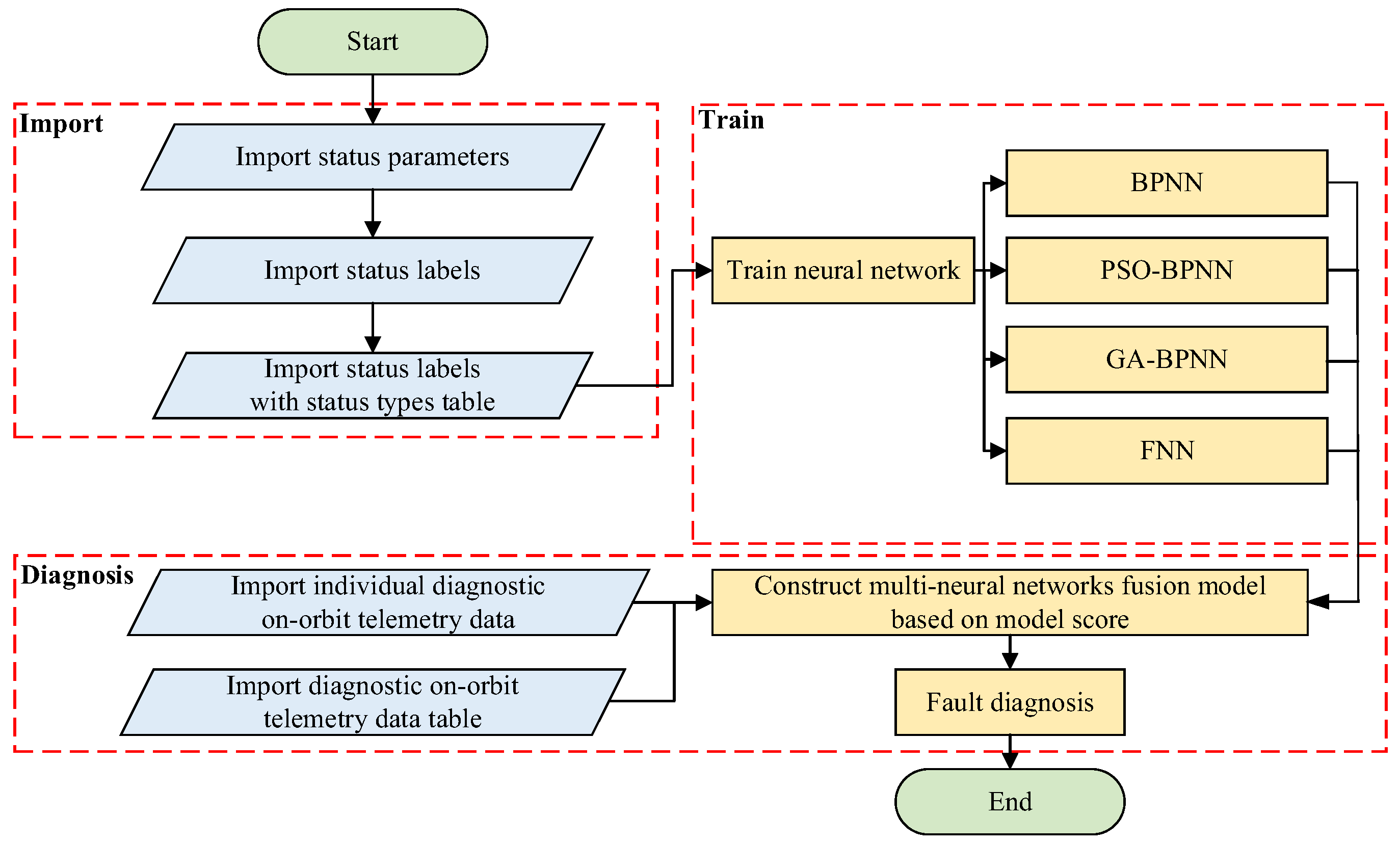

Referring to the flowchart shown in Figure 7, based on the model scores of the four neural networks, the weights for each neural network in the MNN are calculated using Equation (11). The weight $W_i$ of each neural network is proportional to its model score $S_i$, ensuring that neural networks with higher scores contribute more to the final prediction. The output of the MNN is obtained by taking a weighted sum of the predicted status labels from each neural network, as shown in Equation (12).

This weighted sum allows the fusion model to leverage the strengths of each individual neural network, thereby improving the overall prediction accuracy and stability. The closest integer value $p$ to the predicted value $P_0$ is taken as the final status label. The status labels are as follows: 1 represents “normal,” 2 represents “slight rubbing between the impeller and pump casing,” 3 represents “severe rubbing between the impeller and pump casing,” 4 represents “fatigue spalling of bearing raceway,” 5 represents “severe fatigue spalling of bearing raceway,” and 6 represents “impeller jammed, pump functionality lost.”
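The fusion step can be sketched as follows, assuming that each weight is the model score divided by the sum of all scores (the simplest rule that makes the weights proportional to the scores and sum to one) and that all scores are positive. The function names and the sample scores and predictions are hypothetical; the status-label table is taken from the text above.

```python
import math

STATUS_LABELS = {
    1: "normal",
    2: "slight rubbing between the impeller and pump casing",
    3: "severe rubbing between the impeller and pump casing",
    4: "fatigue spalling of bearing raceway",
    5: "severe fatigue spalling of bearing raceway",
    6: "impeller jammed, pump functionality lost",
}


def fusion_weights(scores):
    """Assumed weighting: W_i = S_i / sum of all S (scores must be positive)."""
    total = sum(scores)
    return [s / total for s in scores]


def fuse_predictions(predictions, scores):
    """Return the weighted prediction and the nearest integer status label."""
    weights = fusion_weights(scores)
    p0 = sum(w * p for w, p in zip(weights, predictions))
    # Round half up explicitly; Python's round() rounds halves to even.
    p = int(math.floor(p0 + 0.5))
    return p0, p


# Hypothetical raw outputs of the four networks and their model scores.
p0, p = fuse_predictions([2.1, 1.9, 2.4, 2.0], [2.0, 2.0, 3.0, 3.0])
diagnosis = STATUS_LABELS[p]
```

A production version would also need to decide what to do when the rounded value falls outside the range 1 to 6; the text does not address that case.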