4.2.1. Training Data Generation

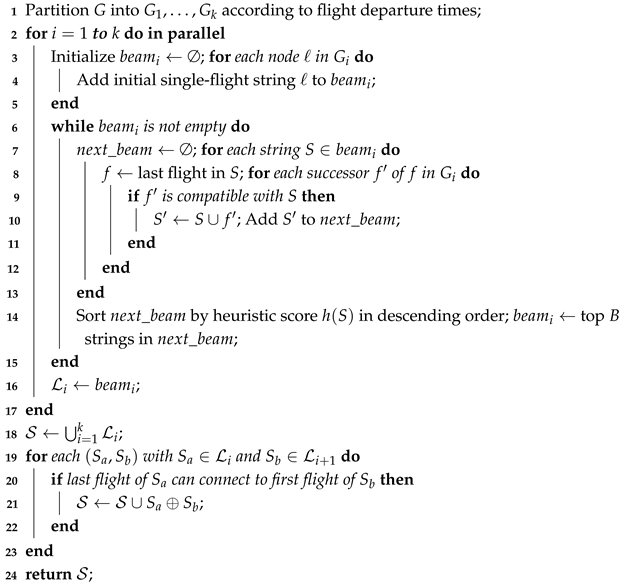

The pseudocode of the algorithm is shown in Algorithm A1. The following is an explanation of the symbols and formulas appearing in the pseudocode.

In training data generation, one of the most crucial aspects is collecting enough usable training data [32]. For the problem addressed in this study, the data consist of a large number of solvable Set Covering Problems. For the Aircraft Routing Problem, collecting such data poses several challenges. Public datasets (such as CORLAT, MIPLIB, and Google Production Packing) suffer from scattered problem scales and are randomly generated, with no regularity in the constraint coefficients of the variables. Models trained on these datasets fit the Aircraft Routing Problem poorly, which significantly reduces accuracy.

If real-world data are used for model training, the available samples may still be insufficient to train the model fully, even when data covering a long time span are collected, because aircraft routings are typically released on a quarterly basis. In addition, adjacent flight schedules differ only slightly, which makes overfitting a common issue. Therefore, this paper designs a data generation algorithm that mimics real-world scenarios and generates simulated SCP (Set Covering Problem) instances specific to the Aircraft Routing Problem, in which both the problem scale and the parameters can be customized.

We design a training data generator for the Aircraft Routing Problem that can generate ARP instances and their corresponding solutions. This generator first builds a covering seed solution and then injects noise columns under hub/time-structured rules. This guarantees feasibility while matching stylized regularities of aircraft routing. For example, hub airports tend to have higher connection rates, so the coefficients for flights connected to hubs are generally higher, reflecting their importance in the system. Similarly, time constraints between flights exhibit patterns, such as common time buckets (e.g., morning, afternoon, evening) with regular sequencing based on typical airline schedules.

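Let $H$ be a set of airports with hub weights $w_h$, $h \in H$ (Zipf-like [33]), and let $I$ be the set of flights. Each flight $i \in I$ has the following properties:

Origin and destination airports $o_i, d_i \in H$;

Departure and arrival times $\tau_i^{\mathrm{dep}}$ and $\tau_i^{\mathrm{arr}}$;

A time bucket $b_i \in \{\text{morning}, \text{noon}, \text{evening}\}$;

A maintenance flag $m_i \in \{0, 1\}$.

The minimum turnaround time is $\Delta_{\min}$. A route $S$ is an ordered subset of $I$ that obeys connectivity and time monotonicity: for consecutive flights $i$ and $j$ in $S$, we require $d_i = o_j$ and $\tau_j^{\mathrm{dep}} \ge \tau_i^{\mathrm{arr}} + \Delta_{\min}$. The specific process of the algorithm is as follows: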
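The route definition can be checked mechanically. The following Python sketch is illustrative only: the `Flight` fields, the minute-based times, and the airport labels are assumptions rather than the paper's data format. It tests both conditions on every pair of consecutive flights: the next flight must depart from the airport where the previous one arrived, and no earlier than the previous arrival plus the minimum turnaround time.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Flight:
    """One flight leg; the field names are illustrative assumptions."""
    fid: int
    origin: str
    dest: str
    dep: float           # departure time, minutes after midnight
    arr: float           # arrival time, minutes after midnight
    bucket: str          # "morning", "noon" or "evening"
    maint: bool = False  # maintenance-required flag


def is_feasible_route(route: List[Flight], min_turn: float) -> bool:
    """Connectivity and time monotonicity for every pair of consecutive flights."""
    for prev, nxt in zip(route, route[1:]):
        if prev.dest != nxt.origin:        # must depart where the aircraft landed
            return False
        if nxt.dep < prev.arr + min_turn:  # must respect the minimum turnaround
            return False
    return True


f1 = Flight(1, "HUB", "A", dep=480, arr=600, bucket="morning")
f2 = Flight(2, "A", "B", dep=645, arr=780, bucket="noon")
f3 = Flight(3, "A", "HUB", dep=620, arr=760, bucket="noon")
print(is_feasible_route([f1, f2], min_turn=40))  # True: 645 >= 600 + 40
print(is_feasible_route([f1, f3], min_turn=40))  # False: 620 < 600 + 40
```

A single-flight route is always feasible, since there is no consecutive pair to check.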

Sample flights with hub/time structure: For each flight $i$, sample the departure airport $o_i$ and the arrival airport $d_i$ with probabilities proportional to the hub weights (reflecting the idea that “hub airports are more likely to be selected”); $o_i = d_i$ is prohibited to avoid degenerate flights that return to their own origin. Sample a time bucket $b_i$, and then sample the departure time $\tau_i^{\mathrm{dep}}$ within that bucket; the arrival time is $\tau_i^{\mathrm{arr}} = \tau_i^{\mathrm{dep}} + \delta_i$, where the flight duration $\delta_i$ follows a log-normal distribution. Flights that satisfy the maintenance selection rule of Algorithm A1 are marked as “maintenance-required flights” ($m_i = 1$); all other flights have $m_i = 0$.
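A minimal Python sketch of Step 1 follows, using only the standard library. The bucket windows (minutes after midnight), the log-normal parameters, the Zipf exponent, and the Bernoulli rule that marks maintenance-required flights are hypothetical placeholders; the actual maintenance selection rule is the one given in Algorithm A1.

```python
import math
import random

# Assumed departure windows per time bucket, in minutes after midnight.
BUCKETS = {"morning": (360, 660), "noon": (660, 1020), "evening": (1020, 1380)}


def zipf_hub_weights(airports, s=1.0):
    """Zipf-like hub weights: the airport of rank r gets weight proportional to 1 / r**s."""
    raw = [1.0 / rank ** s for rank in range(1, len(airports) + 1)]
    total = sum(raw)
    return {a: w / total for a, w in zip(airports, raw)}


def sample_flights(n, hub_w, mu=math.log(120), sigma=0.3, maint_rate=0.1, seed=0):
    """Sample n flights with hub-biased airports, bucketed departures, log-normal durations."""
    if len(hub_w) < 2:
        raise ValueError("need at least two airports")
    rng = random.Random(seed)
    airports = list(hub_w)
    probs = [hub_w[a] for a in airports]
    flights = []
    for i in range(n):
        origin = rng.choices(airports, weights=probs)[0]
        dest = origin
        while dest == origin:  # origin == destination is not allowed
            dest = rng.choices(airports, weights=probs)[0]
        bucket = rng.choice(list(BUCKETS))
        lo, hi = BUCKETS[bucket]
        dep = rng.uniform(lo, hi)
        duration = rng.lognormvariate(mu, sigma)  # block time in minutes
        flights.append({
            "id": i, "origin": origin, "dest": dest,
            "dep": dep, "arr": dep + duration, "bucket": bucket,
            "maint": rng.random() < maint_rate,  # hypothetical maintenance rule
        })
    return flights


hub_w = zipf_hub_weights(["H1", "H2", "A", "B", "C"])
flights = sample_flights(50, hub_w)
```

Because the first airports in the list receive the largest weights, they behave as hubs and appear more often as origins and destinations.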

Build a feasible seed cover: Step 2 constructs “seed routes” that together cover every flight, thereby forming an initial feasible solution of the Set Covering Problem (SCP). The procedure first initializes an empty set of seed-route indices, the route-node set U, the incidence matrix A (with no columns), and the set of uncovered flights, which initially contains all flights. While uncovered flights remain, it repeatedly selects a starting flight among them with probability proportional to a weight that combines the flight degree (the number of connections a flight has) and the hub weight (the importance of its airport as a hub). This implements “preferential attachment”, a concept borrowed from network theory in which entities with higher connectivity are more likely to be selected. It mimics real-world airline networks, where hub airports, owing to their high connectivity, are more likely to appear in routes.

The route S is initialized with the starting flight, and a route length L is sampled to determine how many flights the route will contain. Subsequent flights are then added iteratively: with a fixed probability, the time bucket of the next flight is restricted to match that of the current flight ℓ; otherwise, this restriction is relaxed. Candidate flights are those whose origin matches the destination of ℓ and whose departure leaves at least the minimum turnaround time. If the maintenance-reach constraint is active and the current route contains no maintenance-required flight, the candidate set is biased toward flights with $m_j = 1$. A candidate flight j is sampled according to a score that combines flight degree and hub weights and penalizes longer connection times, and it is appended to S. If the route reaches length L without a maintenance-required flight (with the constraint active), an attempt is made to append the nearest feasible maintenance-required flight; if this attempt fails, construction of the current route restarts. Each completed route becomes a new column of the incidence matrix A, and its flights are removed from the set of uncovered flights. Finally, the seed solution sets $x_j = 1$ for every seed route, which ensures that each flight is covered by at least one route, i.e., $(A x)_i \ge 1$ for every flight $i$.
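Step 2 can be sketched in Python as below. This is a simplified illustration, not Algorithm A1 itself: flights are assumed to be dictionaries with hypothetical keys `id`, `origin`, `dest`, `dep`, `arr`, and `bucket`; a flight's degree is taken to be its number of feasible successors; the route length is drawn uniformly from 1 to `max_len`; the start weight `(1 + degree) * hub weight` and the ground-time-discounted candidate score are illustrative stand-ins for the paper's formulas; and the maintenance-reach logic is omitted.

```python
import random


def build_seed_cover(flights, hub_w, min_turn=40, p_stay=0.7, max_len=4, seed=0):
    """Randomised seed cover: start routes at uncovered flights until all are covered."""
    rng = random.Random(seed)
    by_id = {f["id"]: f for f in flights}

    def successors(f):
        # feasible next legs: same airport and enough turnaround time
        return [g for g in flights
                if g["origin"] == f["dest"] and g["dep"] >= f["arr"] + min_turn]

    degree = {f["id"]: len(successors(f)) for f in flights}
    uncovered = set(by_id)
    routes = []
    while uncovered:
        # preferential attachment: well-connected flights out of hubs start routes more often
        pool = sorted(uncovered)
        weights = [(1 + degree[i]) * hub_w[by_id[i]["origin"]] for i in pool]
        route = [by_id[rng.choices(pool, weights=weights)[0]]]
        target_len = rng.randint(1, max_len)
        while len(route) < target_len:
            cur = route[-1]
            cands = successors(cur)
            if rng.random() < p_stay:  # restrict to the current time bucket
                cands = [g for g in cands if g["bucket"] == cur["bucket"]]
            if not cands:
                break
            # connectivity x hub weight, discounted by ground time in hours
            scores = [(1 + degree[g["id"]]) * hub_w[g["dest"]]
                      / (1 + (g["dep"] - cur["arr"]) / 60) for g in cands]
            route.append(rng.choices(cands, weights=scores)[0])
        ids = [f["id"] for f in route]
        routes.append(ids)
        uncovered -= set(ids)
    return routes


demo_flights = [
    {"id": 0, "origin": "H", "dest": "A", "dep": 400, "arr": 500, "bucket": "morning"},
    {"id": 1, "origin": "A", "dest": "H", "dep": 560, "arr": 660, "bucket": "morning"},
    {"id": 2, "origin": "H", "dest": "B", "dep": 700, "arr": 800, "bucket": "noon"},
    {"id": 3, "origin": "B", "dest": "H", "dep": 850, "arr": 950, "bucket": "noon"},
    {"id": 4, "origin": "A", "dest": "B", "dep": 1100, "arr": 1200, "bucket": "evening"},
]
routes = build_seed_cover(demo_flights, {"H": 0.6, "A": 0.25, "B": 0.15}, seed=1)
```

Each outer iteration removes at least the starting flight from the uncovered set, so the loop terminates, and because every appended flight is a feasible successor, each seed route satisfies the connectivity and turnaround conditions.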


Add noise columns for redundancy/realism: Step 3 adds “noise routes” so that each flight is covered by more routes, closer to the real-world situation in which one flight has multiple route options. This redundancy reflects the variety of feasible paths in real airline networks, and the additional routes improve realism by simulating alternative, less likely routes that are still valid, thereby better representing the complexity and flexibility of actual flight scheduling. First, a target coverage count (degree) is set for every flight $i$: $\deg(i) \ge 1 + k_i$, where the number of extra covers follows a Poisson distribution, $k_i \sim \mathrm{Poisson}(\lambda)$. Noise routes are then generated iteratively until the number of seed columns plus the number of noise columns reaches the column budget or all flights meet their degree requirements. Each noise route is generated as in Step 2, but with inflated hub weights, a relaxed time-bucket constraint, and optionally a longer route length L; it is added as a new column of the incidence matrix, its coverage relationships are recorded, and the degrees of its flights are updated.
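The stopping logic of Step 3 can be sketched in Python under stated assumptions: routes are lists of flight indices, the target degree is one cover plus a Poisson-distributed number of extra covers (sampled with Knuth's multiplication method, since the standard `random` module has no Poisson sampler), and `make_route` is a hypothetical callable standing in for the Step-2 route builder with inflated hub weights and a relaxed bucket constraint. The demo uses single-flight routes, which are trivially feasible.

```python
import math
import random


def poisson(lam, rng):
    """Poisson sample by Knuth's multiplication method (adequate for small lam)."""
    threshold, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def add_noise_columns(seed_routes, n_flights, make_route, lam=1.5, max_cols=200, seed=0):
    """Append noise routes until every flight meets its target degree or the budget is hit."""
    rng = random.Random(seed)
    target = [1 + poisson(lam, rng) for _ in range(n_flights)]  # seed cover + extra covers
    degree = [0] * n_flights
    for route in seed_routes:
        for i in route:
            degree[i] += 1
    noise = []
    while (len(seed_routes) + len(noise) < max_cols
           and any(d < t for d, t in zip(degree, target))):
        route = make_route(rng)  # stand-in for the relaxed Step-2 route builder
        noise.append(route)
        for i in route:
            degree[i] += 1
    return noise, degree, target


seed_routes = [[0, 1], [2], [3, 4]]
noise, degree, target = add_noise_columns(
    seed_routes, 5, lambda rng: [rng.randrange(5)], lam=1.0, max_cols=1000)
```

The two loop conditions mirror the two stopping criteria in the text: the column budget and the per-flight degree targets.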

Assign costs and control SNR: Step 4 assigns a cost to each route and scales the cost of noise columns to control the signal-to-noise ratio (SNR), so that the cost distinction between seed routes and noise routes is meaningful. The base cost of route $j$ combines four terms: the number of flights in the route, $|S_j|$; the total connection time $g_j$ between consecutive flights in the route; an indicator $\mathbb{1}_j^{\mathrm{mnt}}$ that equals 1 if the route contains a maintenance-required flight and 0 otherwise; and a normally distributed noise term $\varepsilon_j$ that simulates random fluctuations in cost. If $j$ is a noise column, its cost is additionally multiplied by a scaling factor greater than one, which makes noise columns more costly and reduces their probability of being selected.
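A minimal sketch of Step 4 follows, assuming a linear base cost with hypothetical coefficients `alpha`, `beta`, and `gamma`, zero-mean Gaussian noise with standard deviation `sigma`, and multiplicative scaling of noise columns by `rho > 1`. The coefficient values are placeholders, not values from the paper.

```python
import random


def route_cost(route, is_noise, rng, alpha=1.0, beta=0.01, gamma=0.5, sigma=0.1, rho=1.5):
    """Illustrative route cost; alpha, beta, gamma, sigma and rho are hypothetical values.

    base = alpha * (#flights) + beta * (total connection minutes)
           + gamma * [route has a maintenance flight] + N(0, sigma^2) noise
    Noise columns are then multiplied by rho > 1.
    """
    n_flights = len(route)
    connection = sum(nxt["dep"] - prev["arr"] for prev, nxt in zip(route, route[1:]))
    has_maint = float(any(f["maint"] for f in route))
    cost = alpha * n_flights + beta * connection + gamma * has_maint + rng.gauss(0.0, sigma)
    if is_noise:
        cost *= rho  # makes noise columns less attractive to the SCP
    return cost


rng = random.Random(0)
route = [{"dep": 400, "arr": 500, "maint": False},
         {"dep": 545, "arr": 650, "maint": True}]
seed_cost = route_cost(route, is_noise=False, rng=rng, sigma=0.0)  # 2 + 0.45 + 0.5
noise_cost = route_cost(route, is_noise=True, rng=rng, sigma=0.0)  # 1.5 * 2.95
```

Setting `sigma=0` removes the random fluctuation so that the scaling effect of `rho` is visible in isolation.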

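Let $J$ index the columns, and let $A \in \{0,1\}^{|I| \times |J|}$ be the incidence matrix with $a_{ij} = 1$ if flight $i$ belongs to route $S_j$ and $a_{ij} = 0$ otherwise. The SCP decision vector is $x \in \{0,1\}^{|J|}$, and the instance is

$$\min_{x \in \{0,1\}^{|J|}} c^\top x \quad \text{s.t.} \quad A x \ge \mathbf{1}.$$

By construction, we generate a seed cover $x^{0}$ such that $A x^{0} \ge \mathbf{1}$. A signal-to-noise parameter $\rho > 1$ scales the costs of noise columns: for every noise column $j$, we set $c_j \leftarrow \rho\, c_j$. An optional maintenance-reach constraint requires every route to include at least one flight with $m_i = 1$.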
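The covering constraint and the claim that noise columns cannot break feasibility can be illustrated with plain Python lists. The helper names `covers_all` and `add_columns` are hypothetical; the toy matrix has three flights and two seed routes.

```python
def covers_all(A, x):
    """Check the SCP constraint A x >= 1: every flight (row) is covered by a chosen route."""
    return all(sum(a_ij * x_j for a_ij, x_j in zip(row, x)) >= 1 for row in A)


def add_columns(A, new_cols):
    """Append noise columns (each a 0/1 list with one entry per flight) to A."""
    return [row + [col[i] for col in new_cols] for i, row in enumerate(A)]


# Seed cover: 3 flights, seed routes {0, 1} and {2}
A = [[1, 0],
     [1, 0],
     [0, 1]]
x0 = [1, 1]
A_ext = add_columns(A, [[1, 0, 1], [0, 1, 0]])  # two noise routes
x0_ext = x0 + [0, 0]                            # noise columns stay unselected
print(covers_all(A, x0), covers_all(A_ext, x0_ext))  # True True
```

Padding the seed solution with zeros for the new columns leaves every row sum unchanged, which is why appending noise columns preserves feasibility.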

Output: Return the incidence matrix A, cost vector c, and bipartite graph G, along with the feasible seed solution $x^{0}$.

Bartunov et al. (2017) [16] proposed a method to transform integer linear programming problems into bipartite graph data structures. Following this bipartite view, we output a bipartite graph $G = (U, V, E)$, where $U$ represents the routes (variable nodes), $V$ represents the flights (constraint nodes), and $E$ is the set of edges indicating which routes cover which flights, with one edge per nonzero entry of $A$.
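A short sketch of the conversion from incidence matrix to bipartite graph, assuming integer node ids and edges stored as (route, flight) pairs; `incidence_to_bipartite` is a hypothetical helper, not a library function.

```python
def incidence_to_bipartite(A):
    """Convert a flights-by-routes 0/1 incidence matrix into G = (U, V, E).

    U: route (variable) node ids, V: flight (constraint) node ids,
    E: (route, flight) pairs with A[flight][route] == 1.
    """
    n_flights = len(A)
    n_routes = len(A[0]) if A else 0
    U = list(range(n_routes))
    V = list(range(n_flights))
    E = [(j, i) for i in range(n_flights) for j in range(n_routes) if A[i][j]]
    return U, V, E


A = [[1, 0, 1],
     [0, 1, 1]]
U, V, E = incidence_to_bipartite(A)
print(E)  # [(0, 0), (2, 0), (1, 1), (2, 1)]
```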

The seed solution guarantees feasibility, and adding noise columns cannot destroy it: new columns only enlarge the set of candidate routes, so the seed solution, extended with zeros for the noise columns, still covers every flight. Because flights are sampled with probabilities that depend on the hub weights, flights connecting to hub airports acquire higher degrees. Bucket-based sampling and the minimum turnaround time enforce realistic temporal sequencing, and the same-bucket probability controls how likely a route is to stay within one time bucket. Three further generator parameters jointly control the redundancy and correlation of the columns, thus shaping the difficulty of the SCP instance without sacrificing feasibility. Finally, the maintenance-reach parameter enforces the constraint that every route must contain at least one maintenance flight.

To analyze the complexity, let denote the average route length and represent the total number of columns. The complexity of constructing each route is as this process involves sampling and feasibility checks. Meanwhile, the overall complexity for generating the entire instance is .

4.2.2. Network Structure

In the hybrid optimization framework for the ARP proposed in this study, the core prediction task associated with the TRS-GCN is the quantification of flight string usefulness. This task aims to accurately determine the probability that a particular flight string will be included in the optimal solution of the ARP (with the imitation target being the optimal solution of generated simulated ARP instances) and its potential to improve the objective function. This task is not only crucial for reducing the variable dimension of Mixed-Integer Programming (MIP) and alleviating enumeration redundancy but also provides a foundation for variable elimination in the subsequent presolve procedure and strong branching ordering in the Branch-and-Bound (B&B) process. The heterogeneous correlation of its multi-modal features (including the newly added linear programming-related mathematical features) has motivated the design of TRS-GCN to adapt to the complex dependency relationships inherent in the problem.

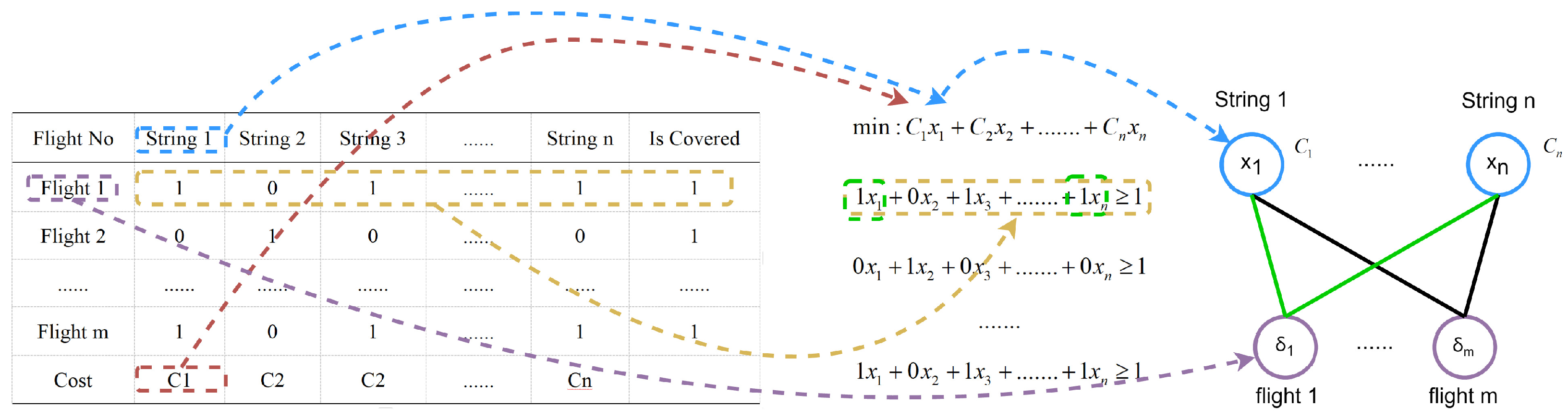

This study retains the traditional bipartite graph of the ARP as the network input, as shown in Figure 2: red denotes the objective function coefficients, blue the variables to be allocated, yellow the constraints, and green the constraint coefficients. The upper-layer nodes represent flight strings and the lower-layer nodes represent individual flights, with edges denoting inclusion relationships. Traditional methods use simplistic feature extraction: objective function coefficients serve as flight-string node features, constraint constants serve as flight node features, and edges carry binary (0/1) features. This study identifies a limitation of such features: because the existence of nodes and edges already encodes this binary information, they add little representational depth. To address this, the study introduces a novel feature extraction approach that categorizes nodes into flight and flight-string types and computes tailored feature vectors for each.

These features are defined in Table A2 and Table A3, which offer a more informative and problem-specific representation [34]. It is particularly important to emphasize that all feature calculations, especially those related to LP features, can be completed either directly or via the SCIP solver’s API with a time complexity of

or

. This ensures that the computation time during the feature extraction phase, prior to solving, is negligible.

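To capture solutions close to the optimal one, we define the near-optimal feasible set with tolerance $\varepsilon \ge 0$:

$$\mathcal{X}_\varepsilon(I) = \left\{ x \in \{0,1\}^{n} : A x \ge \mathbf{1},\; c^\top x \le (1+\varepsilon)\, c^\top x^{*} \right\},$$

where $\mathcal{X}_\varepsilon(I)$ represents the near-optimal feasible set for a given ARP instance $I$ with tolerance $\varepsilon$, and $n$ is the number of flight strings. In this expression, $x$ is a binary vector representing a solution, where each element indicates whether a flight string is included (1) or excluded (0) from the solution; $A$ is the constraint matrix, $c$ is the cost vector, and $x^{*}$ is the optimal solution vector.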
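For intuition, the near-optimal set of a toy instance can be enumerated by brute force. This sketch assumes a relative tolerance (a cover is near-optimal if its cost is within a factor `1 + eps` of the optimum) and positive costs; enumerating all binary vectors is exponential, so it is only viable for tiny instances, and a solver's solution pool would be a more practical source for realistic ones.

```python
from itertools import product


def near_optimal_set(A, c, eps):
    """Enumerate all covers x with c^T x <= (1 + eps) * c^T x* (tiny instances only)."""
    feasible = []
    for x in product((0, 1), repeat=len(c)):
        if all(sum(a * xi for a, xi in zip(row, x)) >= 1 for row in A):
            feasible.append((x, sum(ci * xi for ci, xi in zip(c, x))))
    if not feasible:
        raise ValueError("instance has no feasible cover")
    best = min(cost for _, cost in feasible)
    return [(x, cost) for x, cost in feasible if cost <= (1 + eps) * best], best


A = [[1, 0, 1],
     [0, 1, 1]]
c = [1.0, 1.0, 1.5]
near, best = near_optimal_set(A, c, eps=0.4)
print(best, [x for x, _ in near])  # 1.5 [(0, 0, 1), (1, 1, 0)]
```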

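Next, the importance score of flight string $s$ is calculated as a weighted average of its occurrences in near-optimal solutions:

$$\phi_s(I) = \frac{\sum_{x \in \mathcal{X}_\varepsilon(I)} w(x)\, x_s}{\sum_{x \in \mathcal{X}_\varepsilon(I)} w(x)},$$

where both sums run over the near-optimal feasible set $\mathcal{X}_\varepsilon(I)$ defined above, and $w(x)$ is the weight of a solution $x$, which penalizes higher-cost solutions:

$$w(x) = \exp\!\left(-\frac{c^\top x - c^\top x^{*}}{T}\right).$$

Here, $\phi_s(I)$ is the importance score of flight string $s$ in instance $I$, reflecting its prevalence in near-optimal solutions. The temperature parameter $T > 0$ controls the sensitivity to the solution’s cost. The binary indicator $x_s$ denotes whether flight string $s$ is included in the solution $x$.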

The vector of importance scores $\phi(I) = (\phi_s(I))_s$ encodes the true importance ranking of all flight strings in instance $I$. From it, we derive the ground-truth sequence of the top-$K$ most important variables, denoted $y^{*} = (s_1^{*}, \dots, s_K^{*})$.
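A minimal sketch of the scoring and top-$K$ extraction, assuming Boltzmann-style weights `exp(-(cost - best_cost) / T)` over a given list of near-optimal solutions and their costs. The toy solutions below are hand-written inputs, not data from the paper.

```python
import math


def importance_scores(solutions, best_cost, n_vars, T=1.0):
    """Weighted frequency of each variable across near-optimal solutions.

    solutions: list of (x, cost) pairs; weights are exp(-(cost - best_cost) / T).
    """
    weights = [math.exp(-(cost - best_cost) / T) for _, cost in solutions]
    z = sum(weights)
    return [sum(w * x[s] for w, (x, _) in zip(weights, solutions)) / z
            for s in range(n_vars)]


def top_k(scores, k):
    """Indices of the k highest scores; ties keep the lower index first."""
    return sorted(range(len(scores)), key=lambda s: -scores[s])[:k]


solutions = [((0, 0, 1), 1.5), ((1, 1, 0), 2.0)]
phi = importance_scores(solutions, best_cost=1.5, n_vars=3, T=1.0)
print(top_k(phi, 3))  # [2, 0, 1]
```

Subtracting the optimal cost inside the exponent does not change the normalized scores; it only keeps the weights at or below one for numerical safety.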

Instead of predicting all scores at once in a static manner, the proposed TRS-GCN treats the task of ranking as a sequential decision-making process. Rather than assigning importance scores to all variables simultaneously, the model generates a ranked sequence step by step, selecting the most important variables one at a time. At each step, the model’s choice is influenced by the variables it has already selected, meaning that each decision is conditioned on the previous selections. This autoregressive approach allows the model to learn how the importance of one variable is related to the others, which is especially useful in complex optimization tasks where variables are interdependent.

TRS-GCN is trained end-to-end by maximizing the likelihood of producing the correct ranking sequence $y^{*} = (s_1^{*}, \dots, s_K^{*})$. Equivalently, we minimize the negative log-likelihood of the target sequence, which decomposes into the sum of cross-entropy losses at the individual decoding steps; each term measures how far the model’s predicted distribution at that step diverges from the ground-truth selection. Training uses a set of ARP instances sampled i.i.d. from a distribution $\mathcal{D}$, and the model parameters $\theta$ are adjusted to minimize

$$\mathcal{L}(\theta) = \mathbb{E}_{I \sim \mathcal{D}}\left[-\sum_{t=1}^{K} \log p_\theta\left(s_t^{*} \mid s_1^{*}, \dots, s_{t-1}^{*}, I\right)\right],$$

so that the model learns to rank the variables as accurately as possible.
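The per-sequence loss can be computed as below, assuming teacher forcing (each step is conditioned on the ground-truth prefix) and masking of already-selected variables. The logits are plain lists standing in for the decoder's scores; `sequence_nll` is a hypothetical helper name.

```python
import math


def sequence_nll(step_logits, target):
    """Negative log-likelihood of a target ranking under masked per-step softmaxes.

    step_logits[t][u]: unnormalised score of variable u at decoding step t.
    target: ground-truth sequence of variable indices (teacher forcing).
    """
    chosen = set()
    loss = 0.0
    for logits, y in zip(step_logits, target):
        avail = [u for u in range(len(logits)) if u not in chosen]
        assert y in avail, "target repeats an already selected variable"
        m = max(logits[u] for u in avail)  # log-sum-exp stabilisation
        log_z = m + math.log(sum(math.exp(logits[u] - m) for u in avail))
        loss += log_z - logits[y]          # cross-entropy term for this step
        chosen.add(y)
    return loss


uniform_loss = sequence_nll([[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]], [0, 1])
print(uniform_loss)  # equals log(6), about 1.7918
```

With uniform scores the first step chooses among three variables and the second among the two that remain, so the loss is log 3 + log 2.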

To address the limitations of static, one-shot prediction models in quantifying variable importance for Mixed-Integer Programming (MIP), we propose the Two-Stage Route Selection Graph Convolutional Network (TRS-GCN). Existing Graph Neural Network (GNN) approaches typically predict scores for all variables simultaneously. While effective, this paradigm does not capture the interdependent nature of variable selection in combinatorial optimization, where the importance of a variable is often conditional on which other variables have been considered.

Inspired by the success of the encoder–decoder framework in sequence-to-sequence tasks such as machine translation [35] and of autoregressive generation in time-series forecasting, we reformulate the variable ranking problem as a sequential decision-making task. The core philosophy of TRS-GCN is to first form a holistic understanding of the entire optimization problem and then to autoregressively generate a ranked list of high-importance variables, where each selection is conditioned on the previous selections.

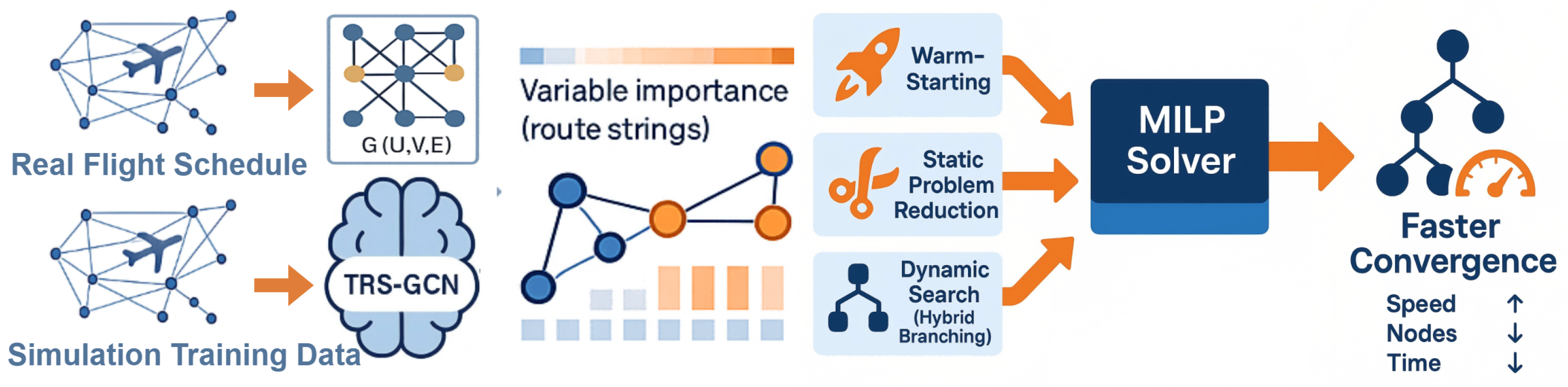

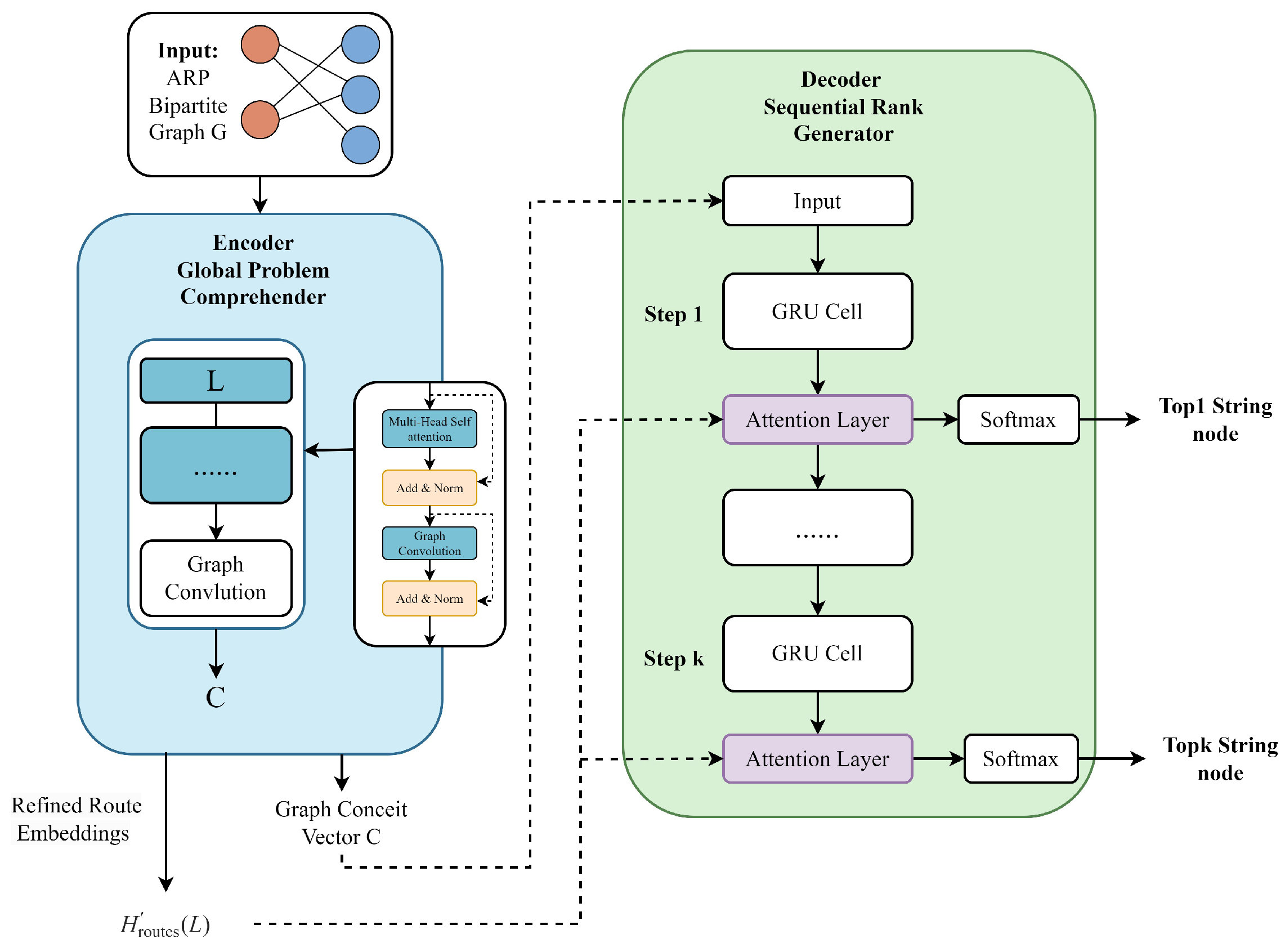

As illustrated in Figure 3, the TRS-GCN architecture is based on an encoder–decoder framework. The encoder, a deep graph neural network, is responsible for comprehending the complex structure and features of the Aircraft Routing Problem (ARP) instance, represented as a bipartite graph. The decoder, a recurrent neural network equipped with an attention mechanism, then utilizes this comprehensive understanding to sequentially identify and rank the most salient variables (flight strings).

4.2.3. Encoder

The encoder aims to learn task-informative, permutation-invariant representations of an ARP instance. Given the bipartite graph with multi-modal node features, it produces context-aware embeddings that jointly encode (i) incidence topology and local feasibility signals (e.g., turnaround and maintenance reachability), (ii) global regularities (hubness and temporal order), and (iii) LP-derived cues (such as reduced costs and slacks). A permutation-invariant readout over variable nodes aggregates these embeddings into a global context vector that summarizes instance scale and coupling patterns and conditions the decoder. The resulting representations are size-agnostic and capture both long-range dependencies (via attention) and local structure (via graph convolution), providing sufficient statistics for the downstream ranking task.

The input to the encoder is the bipartite graph representation $G = (U, V, E)$ of the ARP instance, where $U$ is the set of variable nodes (flight strings) and $V$ is the set of constraint nodes (flights). Each node $v \in U \cup V$ is associated with a multi-modal feature vector $h_v^{(0)}$, derived from the categories defined in Table A2 and Table A3.

The encoder is composed of L stacked Hybrid Graph Attention (HGA) layers. Each HGA layer is designed to capture both global, long-range dependencies and local, structural relationships within the graph. An HGA layer consists of two main components followed by a fusion and normalization step:

Multi-Head Graph Self-Attention: To capture global dependencies, we employ a multi-head self-attention mechanism [36], inspired by Graph Attention Networks (GAT) [37]. This allows each node to weigh the importance of all other nodes in the graph when updating its representation. For each attention head $k$, the attention coefficient $e_{ij}^{(k)}$ between node $i$ and node $j$ is computed as

$$e_{ij}^{(k)} = \mathrm{LeakyReLU}\left(\mathbf{a}^{(k)\top}\left[W^{(k)} h_i^{(l)} \,\Vert\, W^{(k)} h_j^{(l)}\right]\right),$$

where $h_i^{(l)}$ is the feature vector of node $i$ at layer $l$, $W^{(k)}$ is a learnable weight matrix, $\mathbf{a}^{(k)}$ is a weight vector for the attention head, and $\Vert$ denotes concatenation. These coefficients are then normalized using the softmax function to obtain the attention weights

$$\alpha_{ij}^{(k)} = \frac{\exp\left(e_{ij}^{(k)}\right)}{\sum_{j'} \exp\left(e_{ij'}^{(k)}\right)}.$$
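A single-head NumPy sketch of the attention coefficients, assuming the LeakyReLU nonlinearity (slope 0.2) used in the original GAT and global attention over all node pairs (no adjacency mask). The last line shows one possible use of the weights, a GAT-style weighted sum of projected features; how the heads are combined is left out and would be an additional assumption.

```python
import numpy as np


def attention_weights(H, W, a, slope=0.2):
    """Single-head GAT-style attention over all node pairs.

    e_ij = LeakyReLU(a^T [W h_i || W h_j]); alpha_ij = softmax_j(e_ij).
    H: (n, d_in) node features, W: (d_out, d_in), a: (2 * d_out,).
    """
    Z = H @ W.T                   # rows are W h_i
    d_out = Z.shape[1]
    # a^T [W h_i || W h_j] splits into a source term plus a target term
    e = (Z @ a[:d_out])[:, None] + (Z @ a[d_out:])[None, :]
    e = np.where(e > 0, e, slope * e)      # LeakyReLU
    e = e - e.max(axis=1, keepdims=True)   # numerical stability
    alpha = np.exp(e)
    return alpha / alpha.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
H = rng.normal(size=(5, 4))  # 5 nodes with 4 input features
W = rng.normal(size=(3, 4))  # head projection to 3 dimensions
a = rng.normal(size=6)       # attention vector for [W h_i || W h_j]
alpha = attention_weights(H, W, a)
H_att = alpha @ (H @ W.T)    # weighted sum of projected features (one head)
```

Splitting the attention vector into a source half and a target half lets all pairwise scores be formed with one broadcasted addition instead of explicit concatenation.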

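Neighborhood Aggregation: Following the self-attention module, a Graph Convolutional Network (GCN) layer [38] is applied to aggregate information from the immediate local neighborhood of each node. This step reinforces the structural relationships encoded by the graph edges. The GCN update rule is given by

$$H_{\mathrm{loc}}^{(l)} = \sigma\left(\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H_{\mathrm{att}}^{(l)} W_{\mathrm{gcn}}^{(l)}\right),$$

where $H_{\mathrm{att}}^{(l)}$ is the output from the self-attention module, $\tilde{A}$ is the adjacency matrix with self-loops, $\tilde{D}$ is the corresponding degree matrix, $W_{\mathrm{gcn}}^{(l)}$ is a learnable weight matrix, and $\sigma$ is a nonlinear activation.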
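A NumPy sketch of the symmetric-normalized GCN propagation, assuming ReLU as the activation and a node ordering with routes first and flights second. `bipartite_adjacency` is a hypothetical helper that turns a flights-by-routes incidence matrix into the square adjacency matrix the rule needs.

```python
import numpy as np


def bipartite_adjacency(inc):
    """Symmetric adjacency over [route nodes U; flight nodes V] from a flights-by-routes matrix."""
    n_v, n_u = inc.shape
    adj = np.zeros((n_u + n_v, n_u + n_v))
    adj[:n_u, n_u:] = inc.T  # route -> flight edges
    adj[n_u:, :n_u] = inc    # flight -> route edges
    return adj


def gcn_layer(adj, H, W):
    """One GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    a_tilde = adj + np.eye(adj.shape[0])  # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_tilde.sum(axis=1))
    a_norm = d_inv_sqrt[:, None] * a_tilde * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ H @ W, 0.0)


inc = np.array([[1, 0],
                [1, 1]])        # 2 flights x 2 routes
adj = bipartite_adjacency(inc)  # nodes ordered u0, u1, v0, v1
out = gcn_layer(adj, np.eye(4), np.eye(4))
```

With identity features and weights, `out` is exactly the normalized adjacency; for example, route node u0 (degree 3 with its self-loop) and flight node v0 (degree 2) are linked with weight 1/sqrt(6).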

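Fusion and Layer Update: The global and local representations are fused, and the layer update is completed with a residual connection [39] and layer normalization [40] to ensure stable training of the deep architecture. The final update for layer $l$ is

$$H^{(l+1)} = \mathrm{LayerNorm}\left(H^{(l)} + \mathrm{Fuse}\left(H_{\mathrm{att}}^{(l)}, H_{\mathrm{loc}}^{(l)}\right)\right),$$

where $\mathrm{Fuse}(\cdot,\cdot)$ denotes the fusion of the global and local representations.

After passing through $L$ HGA layers, the encoder produces two outputs: the final variable-node embeddings $h_u^{(L)}$ for $u \in U$, over which the decoder attends, and a global context vector $g$, obtained by a permutation-invariant readout over these embeddings, which summarizes the instance and conditions the decoder.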
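The fusion, residual update, and readout can be sketched in NumPy as follows. Fusing the two views by summation, a layer normalization without learnable gain and bias, and mean pooling as the permutation-invariant readout are all assumptions chosen for illustration; the exact fusion operator of TRS-GCN may differ.

```python
import numpy as np


def layer_norm(X, eps=1e-5):
    """Row-wise layer normalisation without learnable gain and bias."""
    mu = X.mean(axis=-1, keepdims=True)
    var = X.var(axis=-1, keepdims=True)
    return (X - mu) / np.sqrt(var + eps)


def hga_update(H, H_att, H_loc):
    """Residual update; fusing global and local views by summation is an assumption."""
    return layer_norm(H + H_att + H_loc)


def readout(H, n_var):
    """Mean pooling over variable nodes (assumed to occupy the first n_var rows)."""
    return H[:n_var].mean(axis=0)


rng = np.random.default_rng(0)
H, H_att, H_loc = (rng.normal(size=(6, 8)) for _ in range(3))
H_next = hga_update(H, H_att, H_loc)
g = readout(H_next, n_var=4)  # global context vector for the decoder
```

Mean pooling is invariant to the order of the variable nodes, which is the property the readout needs.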

4.2.4. Decoder

The decoder’s objective is to utilize the rich representations learned by the encoder to generate a ranked sequence of the top-$K$ most important variable nodes, denoted $\hat{y} = (\hat{s}_1, \dots, \hat{s}_K)$. The generation process is autoregressive, meaning the selection of the variable at step $t$ is conditioned on the variables selected in all previous steps. The decoder is implemented as a Gated Recurrent Unit (GRU) [41] coupled with an attention mechanism [42] that dynamically focuses on the most relevant parts of the input problem, and it generates the ranked sequence one variable at a time over $K$ steps:

Initialization: The initial hidden state of the GRU, $q_0$, is computed from the global context vector $g$ of the encoder via a linear transformation: $q_0 = W_0\, g$.

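Decoding at Step $t$ (for $t = 1, \dots, K$): The GRU updates its hidden state as $q_t = \mathrm{GRU}\left(q_{t-1}, h_{\hat{s}_{t-1}}^{(L)}\right)$, where $h_{\hat{s}_{t-1}}^{(L)}$ is the embedding of the previously selected variable (a special learnable start token is used for $t = 1$). An attention mechanism then computes a score $e_{t,u}$ for each candidate variable $u$ based on the current decoder state $q_t$ and the encoder outputs $h_u^{(L)}$:

$$e_{t,u} = \mathbf{v}^\top \tanh\left(W_1 h_u^{(L)} + W_2\, q_t\right),$$

where $\mathbf{v}$, $W_1$, and $W_2$ are learnable parameters. The scores are normalized into a probability distribution $p_t(u)$ over all available (not yet selected) variables $u \in U \setminus \{\hat{s}_1, \dots, \hat{s}_{t-1}\}$ using a softmax function with masking.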

The variable for the current step, $\hat{s}_t$, is selected from this distribution (e.g., via $\arg\max$ during inference).

The final output is the ordered sequence $\hat{y} = (\hat{s}_1, \dots, \hat{s}_K)$, representing the predicted ranking of the top-$K$ most important variables.
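A minimal NumPy sketch of inference-time decoding follows, assuming Bahdanau-style additive attention scores, a standard GRU cell without biases, a linear initialization of the hidden state from the global context vector, and greedy arg-max selection. All parameter names are hypothetical and the weights are random, so the resulting ranking is meaningless until trained; the sketch only demonstrates masking and the autoregressive feedback loop.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class GreedyDecoder:
    """GRU + additive-attention pointer decoder (biases omitted; names hypothetical)."""

    def __init__(self, d, rng, scale=0.1):
        def mat():
            return rng.normal(0.0, scale, (d, d))
        self.W0 = mat()                         # global context -> initial state
        self.Wz, self.Uz = mat(), mat()         # update gate
        self.Wr, self.Ur = mat(), mat()         # reset gate
        self.Wh, self.Uh = mat(), mat()         # candidate state
        self.W1, self.W2 = mat(), mat()         # attention projections
        self.v = rng.normal(0.0, scale, d)      # attention vector
        self.start = rng.normal(0.0, scale, d)  # learnable start-token embedding

    def gru(self, q, x):
        z = sigmoid(self.Wz @ x + self.Uz @ q)
        r = sigmoid(self.Wr @ x + self.Ur @ q)
        q_cand = np.tanh(self.Wh @ x + self.Uh @ (r * q))
        return (1.0 - z) * q + z * q_cand

    def decode(self, H_u, g, K):
        """Greedily select K distinct variable indices from embeddings H_u (n x d)."""
        n = H_u.shape[0]
        if K > n:
            raise ValueError("K cannot exceed the number of variables")
        q, x = self.W0 @ g, self.start
        selected = np.zeros(n, dtype=bool)
        order = []
        for _ in range(K):
            q = self.gru(q, x)
            scores = np.tanh(H_u @ self.W1.T + self.W2 @ q) @ self.v
            scores[selected] = -np.inf                 # mask chosen variables
            p = np.exp(scores - scores[~selected].max())
            p /= p.sum()                               # masked softmax
            u = int(np.argmax(p))                      # greedy choice at inference
            order.append(u)
            selected[u] = True
            x = H_u[u]                                 # feed back the chosen embedding
        return order


rng = np.random.default_rng(0)
H_u = rng.normal(size=(6, 8))  # 6 flight-string embeddings of width 8
decoder = GreedyDecoder(8, rng)
ranking = decoder.decode(H_u, H_u.mean(axis=0), K=4)
```

Because selected variables receive a score of negative infinity before the softmax, their probability is exactly zero and no variable can be chosen twice.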