1. Introduction

The rapid advancement of unmanned aerial vehicle (UAV) technology has significantly expanded its applications across diverse domains, including smart city development [1,2], ecological conservation [3,4], and resource exploration [5,6]. Consequently, object detection in aerial imagery has emerged as a prominent research focus within the computer vision community. Owing to their exceptional mobility and operational efficiency, UAV systems have become a novel sensing platform with remarkable technical advantages in application scenarios such as traffic monitoring [7,8,9], infrastructure inspection [10,11,12], and security surveillance [13,14]. However, the unique imaging characteristics of aerial photography pose substantial challenges for object detection: (1) the bird’s-eye perspective causes dramatic scale variations among objects, and small objects make up a much higher proportion of instances than in conventional natural scenes; (2) complex low-altitude environments frequently cause occlusion by buildings and vegetation, resulting in discontinuous appearance features of targets; and (3) the wide field of view introduces cluttered backgrounds and dynamic lighting conditions. These factors collectively compromise the accuracy and robustness of existing object detection algorithms in aerial scenarios. Therefore, developing object detection algorithms with improved accuracy and adaptability for UAV applications is essential to realizing their full practical deployment potential.

In recent years, the rapid advancement of deep learning has revolutionized object detection in drone-captured aerial imagery [15]. Aerial images present unique challenges, including small object sizes, complex backgrounds, and limited resolution, which significantly hinder the performance of conventional detection approaches. Traditional methods relying on handcrafted features exhibit notable limitations in adaptability and generalization under complex scenarios [16]. In contrast, convolutional neural network (CNN)-based approaches learn discriminative features automatically, substantially improving detection accuracy and robustness in challenging environments. To address the distinctive characteristics of aerial imagery, researchers have developed various solutions. Liu et al. [17] introduced Trans-R-CNN detectors with self-attention mechanisms to enhance feature representation for small objects. Sharifuzzaman et al. [18] proposed the MSA R-CNN system, which incorporates multi-scale feature analysis to handle scale variations, object rotations, and obfuscated backgrounds. He et al. [19] augmented ResNet’s feature extraction capability through modified self-correcting convolutions and dilated convolutions, while Wu et al. [20] introduced SCMaskR-CNN with optimizations for instance segmentation. Although these methods improve small object detection to some extent, their increased computational complexity often reduces inference speed. Given the stringent hardware constraints of UAV platforms [21], an ideal detection algorithm must maintain accuracy while meeting real-time and lightweight requirements. Consequently, achieving a favorable trade-off between performance and complexity has become a critical research focus, and efficient architectures that balance detection performance with computational cost are needed for practical deployment.

As a representative single-stage object detector, the YOLO series has been widely adopted in computer vision owing to its real-time performance and high detection accuracy. However, when applied to small object detection in aerial imagery, it exhibits significant limitations: targets with weak feature representations are susceptible to interference from complex backgrounds, which often results in suboptimal performance. To address this challenge, researchers have proposed improvements along several technical lines, mainly multi-scale feature fusion, data augmentation, and network architecture optimization. In feature fusion research, Shi et al. [22] employed deformable convolutions to construct a cross-level feature fusion module, significantly enhancing multi-scale integration. Zhu et al. [23] improved detection sensitivity by adding shallow semantic prediction heads to YOLOv5, albeit at the cost of more parameters. Zhao et al. [24] developed the CA-Trans module to fuse detail-rich low-level features, although it requires larger training datasets. For feature enhancement, Wang et al. [25] designed an adaptive receptive field network to enrich semantic representation, but detailed information may be lost in deep layers. Huang et al. [26] reduced background interference through image cropping, yet the preprocessing steps lowered efficiency. Zhao et al. [27] proposed a context-guided Foreground Enhancement Module (FEM) to simultaneously boost foreground features and suppress noise. Regarding attention mechanisms, Wang et al. [28] optimized feature processing by introducing the BiFormer attention mechanism and expanding the detection scales. In addition, Hao et al. [29] applied EfficientViT, a multi-scale attention module, to the backbone network to enhance feature learning. However, these attention-based approaches struggle to meet real-time requirements. Overall, while these methods improve YOLO’s small object detection capability, performance remains limited in complex scenarios involving multi-category objects, severe occlusion, and irregular shapes.

To address critical challenges in UAV aerial imagery analysis, such as high proportions of small objects, complex backgrounds, and significant scale variations, this study proposes DDF-YOLO, a novel object detection model based on multi-scale dynamic feature fusion. The proposed model improves both detection accuracy and computational efficiency, enabling precise identification of multi-scale, dense small objects against complex backgrounds. The core contributions of this work are as follows:

- (1) A multi-scale dense object detection method is proposed specifically for UAV aerial imagery. Systematic experiments on the VisDrone2019 and UAVDT benchmark datasets demonstrate that the proposed approach achieves significant performance improvements in detecting multi-scale, densely distributed small objects under complex background conditions.

- (2) A dynamic feature extraction module, C2f-DCNv4, is designed based on DCNv4 [30], using deformable convolution to effectively capture features of objects with complex contours and irregular shapes. In addition, the dynamic upsampling weight adjustment strategy of the DySample [31] module significantly improves multi-scale feature fusion, leading to better feature representation for deformed objects, occluded scenes, and multi-scale targets.

- (3) A dedicated small object detection layer is introduced to optimize the interaction between high-resolution shallow features and deep semantic features. This design effectively improves the model’s ability to generalize across targets of varying scales in aerial imagery, particularly extremely small objects.

- (4) A Focaler-ECIoU loss function with a dynamic focus mechanism is proposed, incorporating a task-adaptive strategy for adjusting sample contributions. This loss enables rapid convergence in bounding box regression while ensuring precise localization, further boosting detection accuracy.

The remainder of this paper is organized as follows. Section 2 introduces the overall framework of the model and the proposed improvements. Section 3 details the experimental design and analyzes the results, and Section 4 concludes the paper.

2. Proposed Methods

2.1. Overview of the Proposed Methods

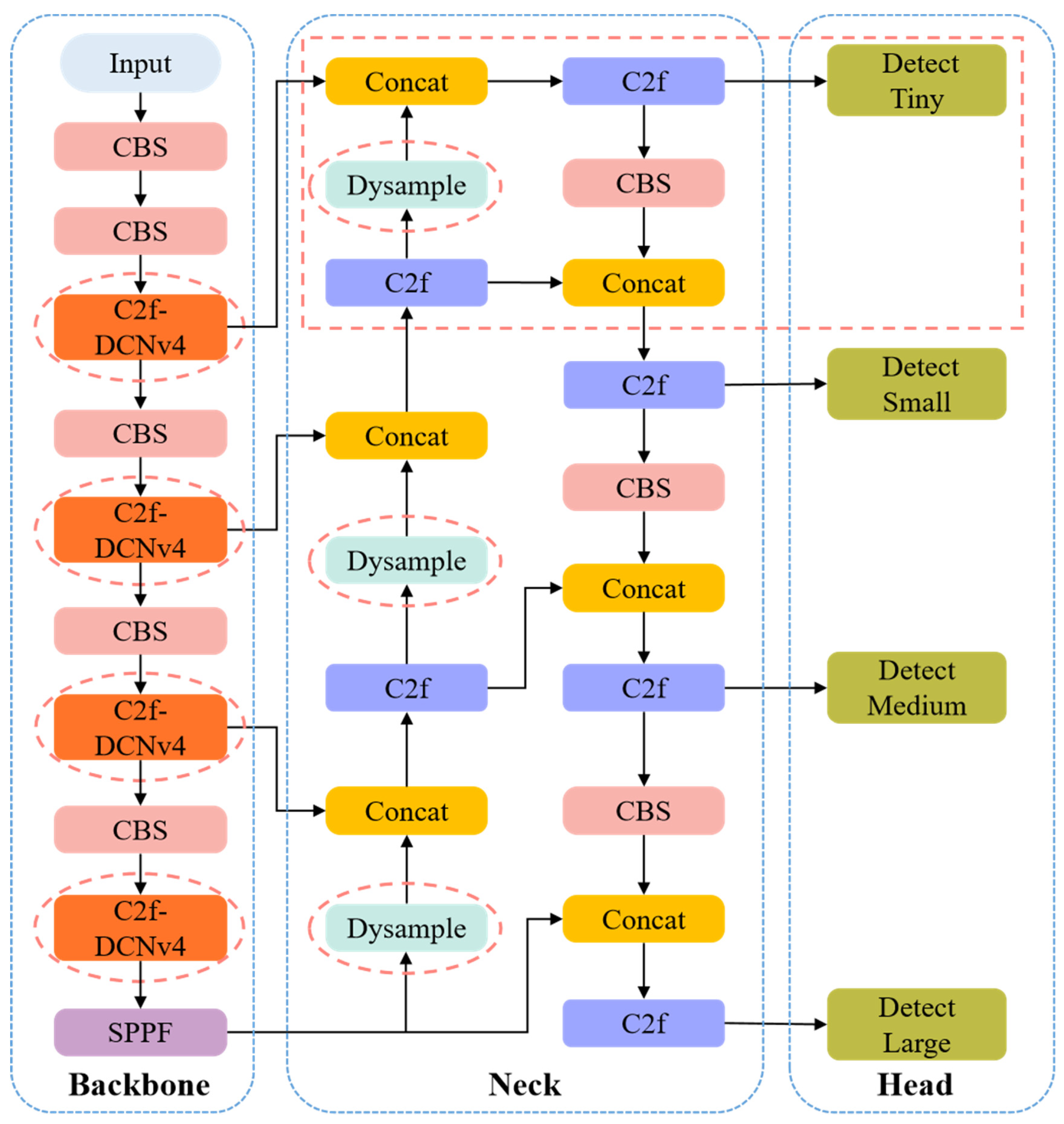

Building upon the YOLOv8n architecture, we propose DDF-YOLO, a lightweight object detection framework specifically designed to address the challenges of UAV imagery, such as high object density, significant scale variation, and complex background clutter. As illustrated in Figure 1, our enhancements are integrated into the backbone, neck, and head. The subsequent subsections elaborate on each key improvement:

Section 2.2 introduces the deformable feature extraction module (C2f-DCNv4) in the backbone network;

Section 2.3 details the dynamic upsampling mechanism (DySample) in the neck network;

Section 2.4 describes the hierarchical detection head with an added dedicated layer for small objects; and

Section 2.5 explains the composite loss function design (Focaler-ECIoU).

The data flow within DDF-YOLO can be summarized as follows:

- (1) The input image is processed by the enhanced backbone, where C2f-DCNv4 modules at specific stages extract features with adaptive receptive fields, which is particularly beneficial for irregular objects. The backbone generates multi-scale features {P3, P4, P5} that are fed into the neck.

- (2) In the top-down path of the neck, features are upsampled using DySample, merged with lateral connections, and refined through convolutional blocks.

- (3) The dedicated small-object detection layer incorporates high-resolution features from the earlier layers of the backbone and passes them to the detection head.

- (4) The output features of the neck are fed into the head for final prediction, with bounding box regression optimized using the Focaler-ECIoU loss.

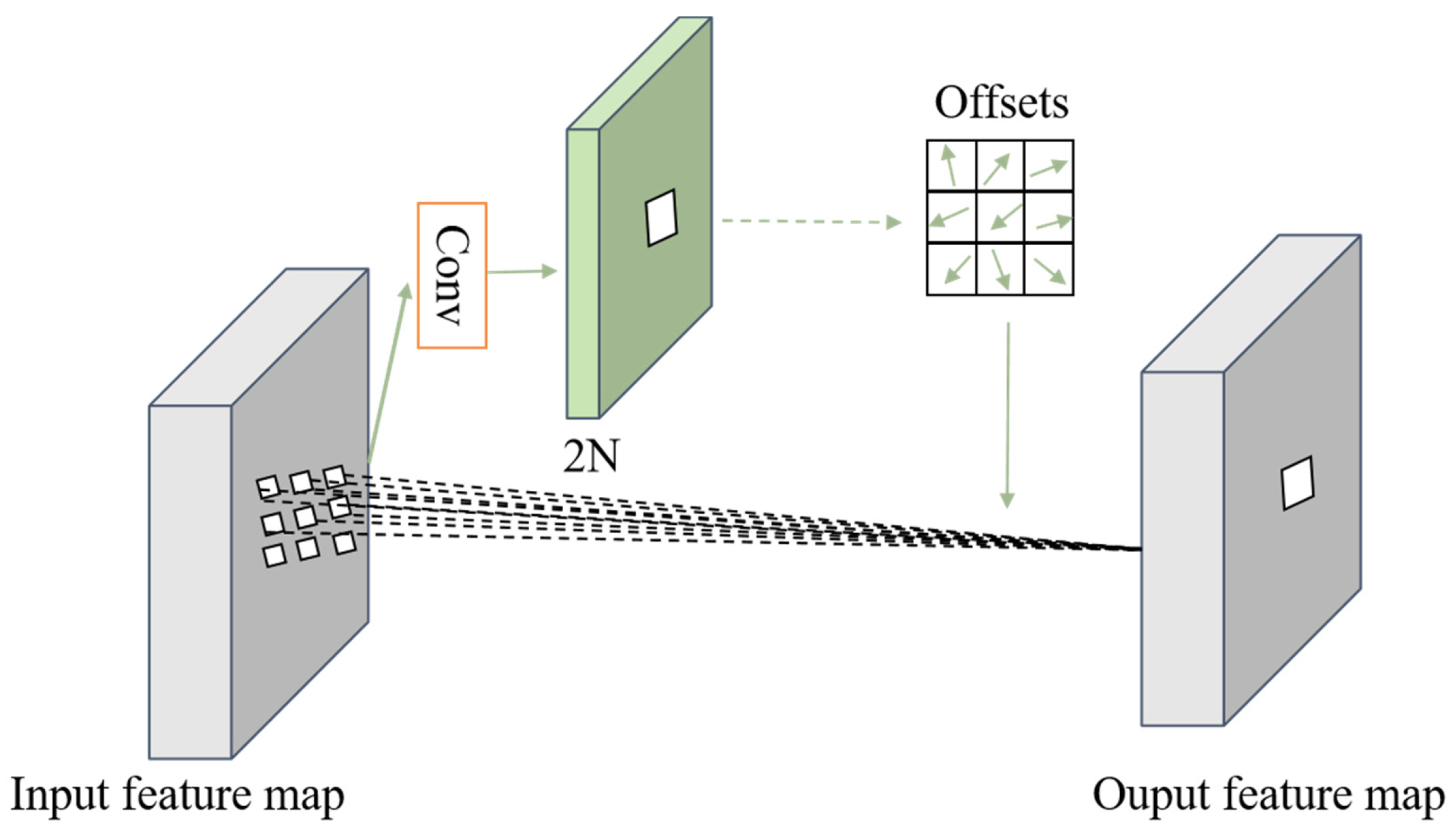

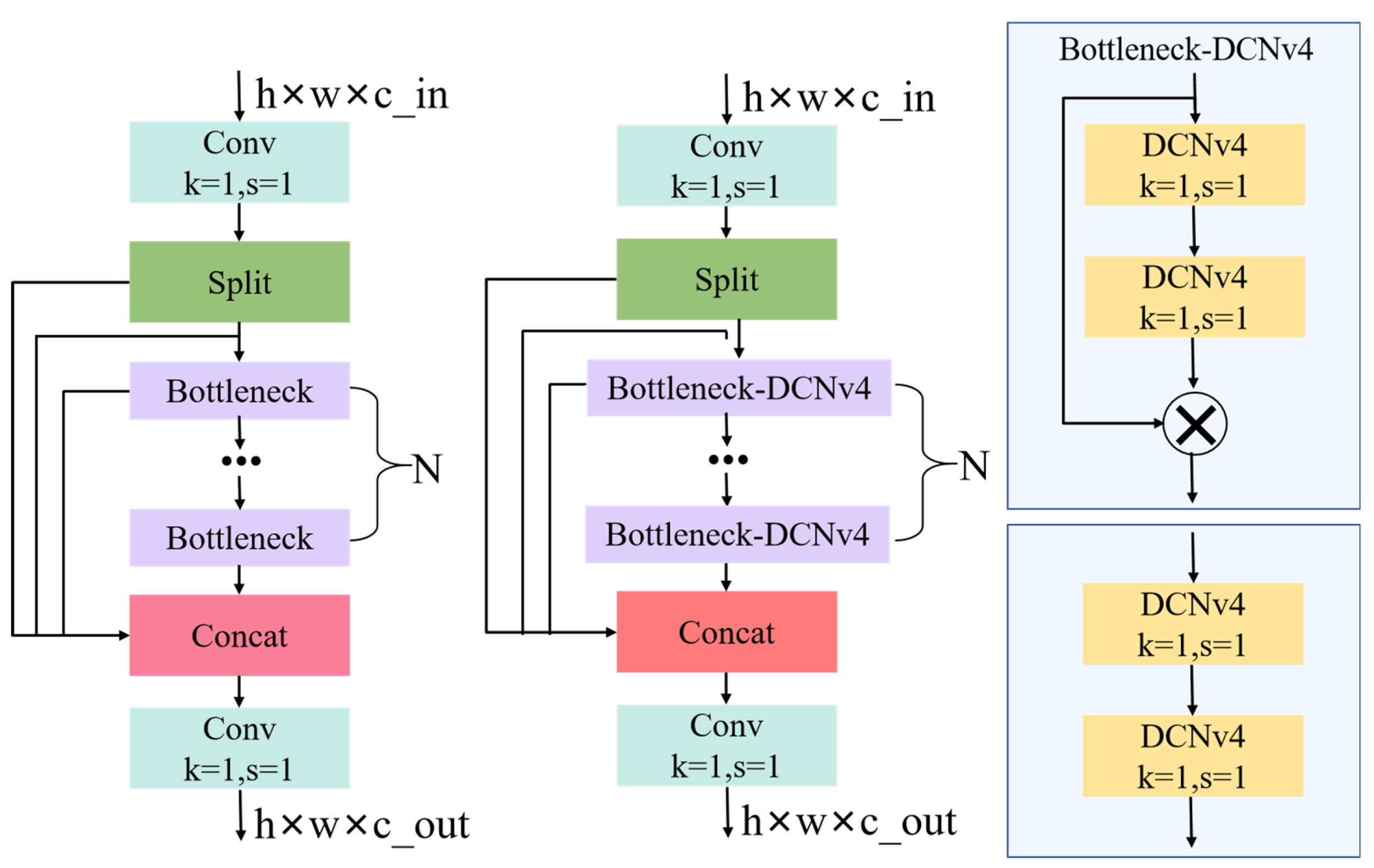

2.2. C2f-DCNv4

A key challenge in UAV detection is the dramatic size variation among targets, which range from large trucks to tiny pedestrians. Conventional convolutions sample on a fixed rectangular grid and therefore have only a limited ability to model irregular geometric deformations within the receptive field, which can lead to inaccurate extraction of target features. We therefore introduce the latest deformable convolution, DCNv4 (see Figure 2), whose 3 × 3 kernel dynamically adjusts where input features are sampled based on predicted offsets. The features at the nine offset sampling locations are obtained by interpolation and aggregated with learned weights, producing an output with the same spatial size as the input. This improves the accuracy of detecting targets with irregular contours and of different sizes.
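To make the sampling step concrete, the following is a minimal single-channel sketch of a DCN-style 3 × 3 deformable convolution evaluated at one output location, written in plain Python so it runs without PyTorch. It is not DCNv4’s grouped, memory-optimized CUDA operator. The offsets and aggregation weights are passed in as plain numbers rather than predicted by a network, and the function names (`bilinear`, `deformable_3x3`) are hypothetical. DCNv4 is described as dropping the softmax normalization of these weights, so the sketch leaves them unnormalized.

```python
import math

# Regular 3x3 kernel grid p_k, relative to the output location p
KERNEL_GRID = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def bilinear(feat, y, x):
    """Bilinearly sample a 2-D map (list of rows) at real-valued (y, x).

    Taps outside the map contribute zero (zero padding).
    """
    h, w = len(feat), len(feat[0])
    y0, x0 = math.floor(y), math.floor(x)
    fy, fx = y - y0, x - x0
    value = 0.0
    for yy, wy in ((y0, 1.0 - fy), (y0 + 1, fy)):
        for xx, wx in ((x0, 1.0 - fx), (x0 + 1, fx)):
            if 0 <= yy < h and 0 <= xx < w:
                value += wy * wx * feat[yy][xx]
    return value


def deformable_3x3(feat, py, px, offsets, weights):
    """Output at (py, px): sum_k m_k * feat(p + p_k + delta_p_k).

    offsets: nine (dy, dx) shifts (predicted from the input in a real DCN).
    weights: nine aggregation weights m_k (unnormalized, no softmax).
    """
    out = 0.0
    for (ky, kx), (oy, ox), m in zip(KERNEL_GRID, offsets, weights):
        out += m * bilinear(feat, py + ky + oy, px + kx + ox)
    return out


if __name__ == "__main__":
    feat = [[4 * r + c for c in range(4)] for r in range(4)]
    ones = [1.0] * 9
    print(deformable_3x3(feat, 1, 1, [(0.0, 0.0)] * 9, ones))  # 45.0
    print(deformable_3x3(feat, 1, 1, [(0.0, 0.5)] * 9, ones))  # 49.5
```

With all offsets zero and all weights equal to one, the result equals a plain 3 × 3 box sum. Fractional offsets move the taps between pixels, which is what lets the kernel follow irregular object contours.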

This paper presents a new feature extraction module, C2f-DCNv4, which incorporates DCNv4 into C2f, as illustrated in Figure 3, to improve the adaptive extraction and representation of complex shapes. Residual connections within each Bottleneck and serial concatenation of the Bottleneck outputs along the channel dimension reduce redundant gradient information while preserving the original features as much as possible.
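The channel topology described above can be illustrated with a toy sketch that treats a feature map as a flat list of per-channel values. This is a simplification under stated assumptions: the 1 × 1 convolutions that C2f applies before the split and after the concatenation are omitted, and `c2f_forward` and the `bottleneck` callable are hypothetical names. In C2f-DCNv4 the bottleneck would contain the DCNv4 operator.

```python
def c2f_forward(x, n, bottleneck):
    """Toy C2f data flow on a feature given as a list of channel values.

    Assumes x already holds 2*c channels (the leading 1x1 conv is omitted)
    and omits the trailing 1x1 conv that maps (2 + n)*c channels to c_out.
    """
    c = len(x) // 2
    branches = [x[:c], x[c:]]  # split into two halves
    for _ in range(n):
        y = branches[-1]
        z = bottleneck(y)  # e.g. a DCNv4-based block in C2f-DCNv4
        branches.append([a + b for a, b in zip(y, z)])  # residual add
    # Serial concatenation of every intermediate output along channels
    return [ch for branch in branches for ch in branch]


if __name__ == "__main__":
    out = c2f_forward([1.0, 2.0, 3.0, 4.0], 2, lambda y: [0.5 * v for v in y])
    print(len(out), out)  # 8 [1.0, 2.0, 3.0, 4.0, 4.5, 6.0, 6.75, 9.0]
```

Because every intermediate output is kept and concatenated, the final feature carries (2 + n)·c channels, so shallow and progressively refined features both reach the next layer.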

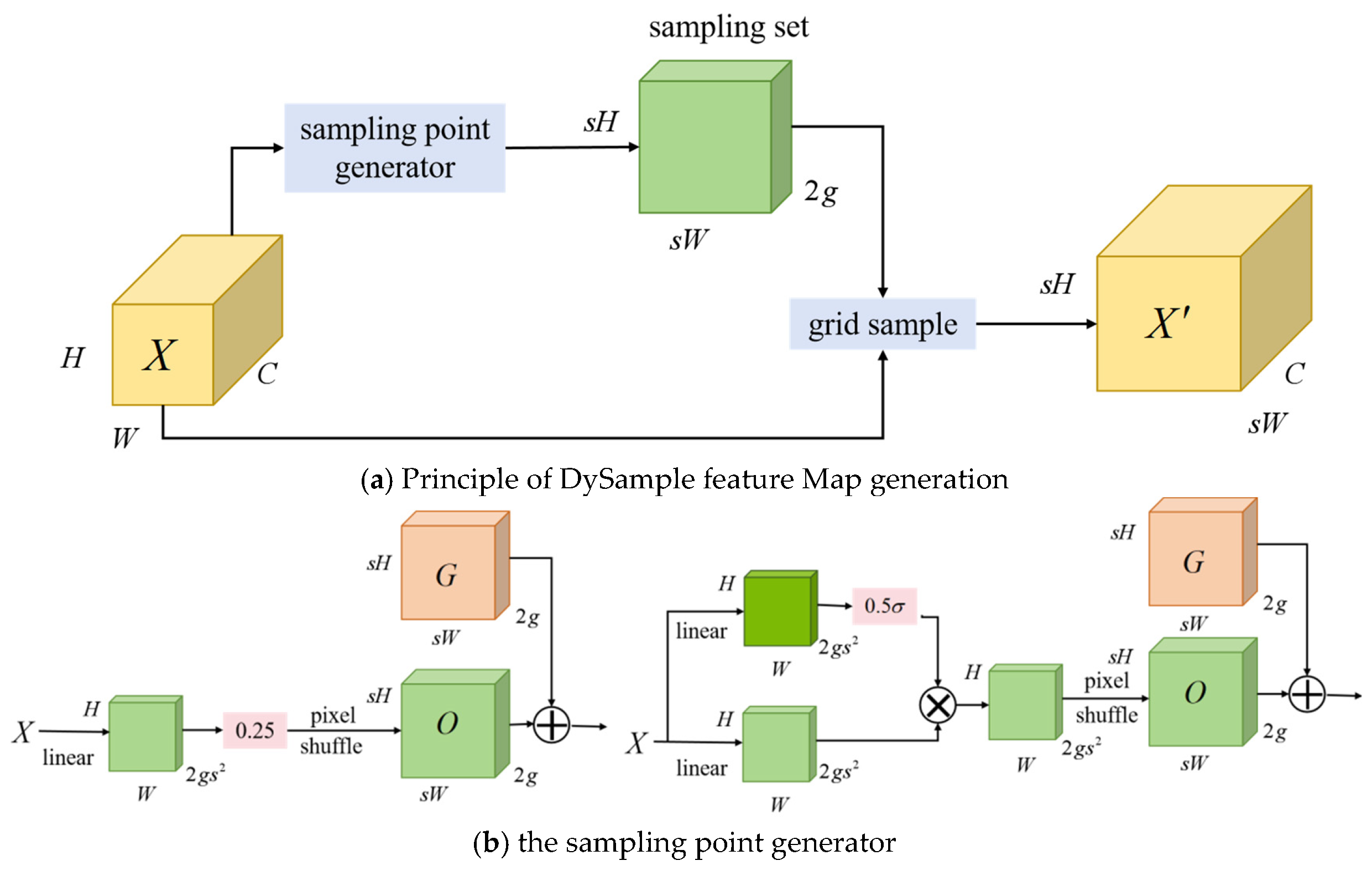

2.3. DySample

The nearest-neighbor interpolation upsampling employed in the feature fusion stage of the original YOLOv8n model has a substantial drawback: it relies exclusively on pixel spatial location while disregarding semantic information and feature correlation, which leads to loss of detail and image distortion. This problem is especially evident in aerial image detection, where small targets captured by UAVs are particularly sensitive to pixel-level information and traditional static upsampling methods are prone to blurring or false detections. For this reason, we adopt the lightweight dynamic upsampling operator DySample [31], whose principal structure is shown in Figure 4. Given an input feature map x of size H × W × C (height × width × number of channels) and an upsampling scale factor s, a sampling point generator first constructs a set of sH × sW × 2 sampling coordinates. Each point in the set carries dynamic offsets in the x and y directions, which guide the high-resolution reconstruction of the feature map. Content-aware interpolation is then computed on the original feature map using PyTorch’s grid_sample function, yielding an upsampled feature map x′ of size sH × sW × C. This process achieves a dynamic mapping from low to high resolution while preserving semantic information.
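The point-sampling idea can be sketched on a single channel in plain Python: each of the sH × sW output pixels is mapped back to a base position on the input grid, shifted by its own (dy, dx) offset, and read with bilinear interpolation, which is the role grid_sample plays in the PyTorch implementation. This is a hedged illustration, not the DySample code. The offsets are passed in directly (DySample predicts them from x with a light linear layer), border padding is an assumption, and `sample_border` and `point_sampling_upsample` are hypothetical names.

```python
import math


def sample_border(feat, y, x):
    """Bilinear sample with border padding (coordinates clamped to the map)."""
    h, w = len(feat), len(feat[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    fy, fx = y - y0, x - x0
    top = (1 - fx) * feat[y0][x0] + fx * feat[y0][x1]
    bottom = (1 - fx) * feat[y1][x0] + fx * feat[y1][x1]
    return (1 - fy) * top + fy * bottom


def point_sampling_upsample(feat, s, offsets):
    """Upsample an H x W map to sH x sW by sampling at offset points.

    offsets[i][j] = (dy, dx) shift of output pixel (i, j) in input-pixel units,
    i.e. the sH x sW x 2 offset set described in the text.
    """
    h, w = len(feat), len(feat[0])
    out = []
    for i in range(s * h):
        row = []
        for j in range(s * w):
            # Base grid: centre of output pixel mapped back to input coordinates
            by, bx = (i + 0.5) / s - 0.5, (j + 0.5) / s - 0.5
            dy, dx = offsets[i][j]
            row.append(sample_border(feat, by + dy, bx + dx))
        out.append(row)
    return out


if __name__ == "__main__":
    zero = [[(0.0, 0.0)] * 4 for _ in range(4)]
    up = point_sampling_upsample([[0.0, 2.0], [4.0, 6.0]], 2, zero)
    print(up[0])  # [0.0, 0.5, 1.5, 2.0]
```

With all offsets zero this reduces to ordinary bilinear upsampling. The learned, input-dependent offsets are what make the operator content-aware.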

The primary benefits of DySample fall into three aspects: dynamism, light weight, and multi-scale compatibility. First, the content-aware mechanism dynamically adjusts the sampling strategy according to the input features, significantly improving the restoration of image details (e.g., small aerial targets), while dynamic range constraints prevent sampling points from overlapping. Second, the scheme can be implemented with only linear layers and parameter constraints, without complex sub-networks; it is more computationally efficient than traditional dynamic upsampling methods, and backpropagation speed is almost unaffected. Third, DySample facilitates cross-scale feature fusion, working in conjunction with the original C2f module and a lightweight attention mechanism to make detection more robust to targets of varying sizes. In UAV aerial photography missions, DySample can mitigate the information loss caused by downsampling and reduce both false alarms and missed detections of small targets. Its design paradigm of ‘dynamic adjustment + lightweight computation’ is applicable not only to the YOLOv8 architecture but also, more generally, to other vision tasks that require high-precision feature reconstruction while balancing accuracy and efficiency.

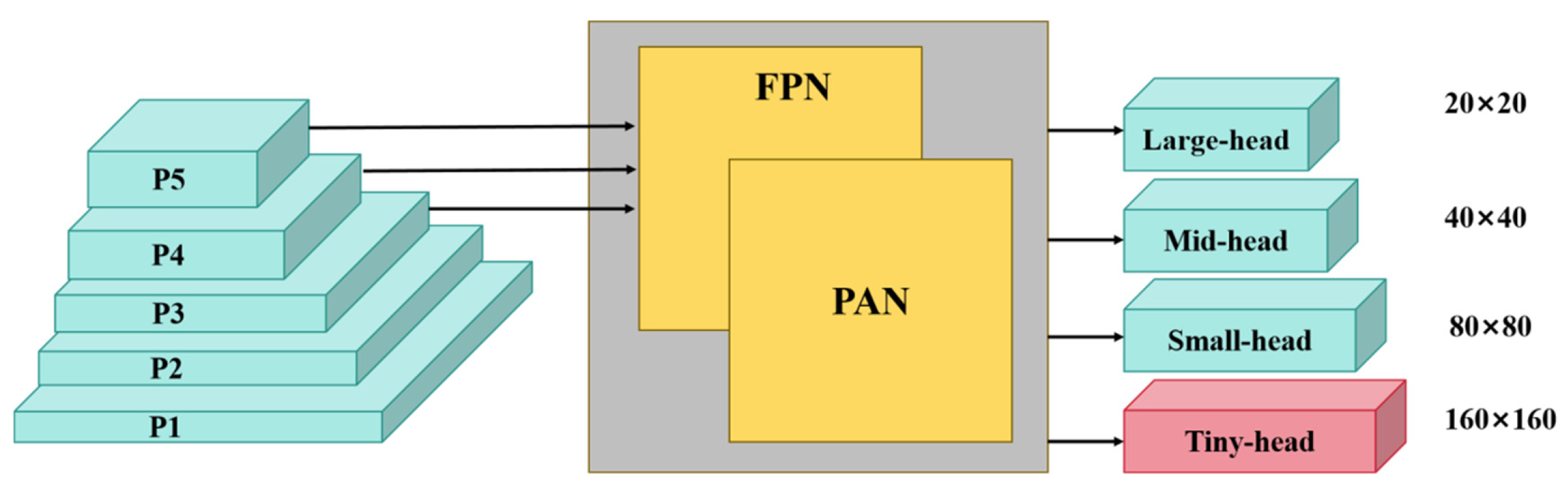

2.4. Small-Size Object Detection Layer

The flight altitude of UAVs frequently results in long-distance imaging, in which targets appear at small scales within a wide field of view. After five downsampling stages in the feature extraction network, the original YOLOv8 model retains only 80 × 80, 40 × 40, and 20 × 20 detection heads (for a 640 × 640 input). However, small-target information resides mainly in shallow features, and the large downsampling factors cause small targets to be lost.

To avoid losing fine details through feature compression, a small-target detection layer is added at the early downsampling stage, as illustrated in Figure 5. The resulting 160 × 160 feature maps are then fused with the smaller-scale feature maps. Integrating shallow and deep semantics in this way enhances the network’s understanding of overall contextual information and retains richer low-level feature information, thereby improving the detector’s ability to generalize to targets of different scales in aerial views and, ultimately, its perception of small targets in complex environments.

Figure 5. Multi-scale feature extraction and fusion networks.
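The scale argument can be checked with simple arithmetic. The sketch below assumes a 640 × 640 input, strides 8, 16, and 32 for YOLOv8’s three original heads, and stride 4 for the added 160 × 160 layer. It computes each head’s grid size and how many grid cells an 8-pixel object spans. The 8-pixel object size is an illustrative choice, not a statistic from the datasets, and the function names are hypothetical.

```python
def head_grids(img_size=640, strides=(4, 8, 16, 32)):
    """Map each detection stride to the side length of its square prediction grid."""
    return {s: img_size // s for s in strides}


def cells_spanned(obj_px, stride):
    """Number of grid cells (per side) covered by an object obj_px pixels wide."""
    return obj_px / stride


if __name__ == "__main__":
    print(head_grids())  # {4: 160, 8: 80, 16: 40, 32: 20}
    print(cells_spanned(8, 4), cells_spanned(8, 32))  # 2.0 0.25
```

At stride 32 an 8-pixel object occupies a quarter of a single cell, whereas at stride 4 it spans two cells per side, which is why the added high-resolution layer helps with very small targets.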


2.5. Focaler-ECIoU Loss Function

The CIoU bounding box regression loss in the YOLOv8 detection framework has an inherent defect. Because it uses a single aspect-ratio factor to characterize the difference in dimensions between the predicted and ground-truth boxes, it does not account for width and height differences independently, which makes it difficult for the model to adjust width and height simultaneously during optimization. Specifically, when the predicted and ground-truth boxes have similar aspect ratios, the CIoU aspect-ratio penalty vanishes, preventing further optimization of the bounding box’s localization accuracy. The EIoU (Efficient Intersection over Union) loss improves upon CIoU by decoupling the penalty terms for width and height, so that the two dimensions are optimized independently. However, when the dimensions of the predicted and ground-truth boxes differ significantly, using EIoU alone markedly slows convergence.
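The failure case described above can be reproduced numerically. The sketch below implements the standard CIoU and EIoU losses as published in their original papers (not the authors’ ECIoU, whose formula is not reproduced here) for boxes given as (cx, cy, w, h). The box format, the `EPS` stabilizer, and the function names are assumptions.

```python
import math

EPS = 1e-9  # small constant to avoid division by zero


def _corners(box):
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def iou_terms(pred, gt):
    """IoU, squared centre distance, and enclosing-box width/height."""
    px1, py1, px2, py2 = _corners(pred)
    gx1, gy1, gx2, gy2 = _corners(gt)
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    union = pred[2] * pred[3] + gt[2] * gt[3] - inter
    iou = inter / (union + EPS)
    rho2 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    return iou, rho2, cw, ch


def ciou_loss(pred, gt):
    iou, rho2, cw, ch = iou_terms(pred, gt)
    c2 = cw ** 2 + ch ** 2 + EPS
    # Single aspect-ratio term: zero whenever the two aspect ratios match
    v = (4 / math.pi ** 2) * (math.atan(gt[2] / gt[3]) - math.atan(pred[2] / pred[3])) ** 2
    alpha = v / (1 - iou + v + EPS)
    return 1 - iou + rho2 / c2 + alpha * v


def eiou_loss(pred, gt):
    iou, rho2, cw, ch = iou_terms(pred, gt)
    c2 = cw ** 2 + ch ** 2 + EPS
    # Separate width and height penalties, normalized by the enclosing box
    return (1 - iou + rho2 / c2
            + (pred[2] - gt[2]) ** 2 / (cw ** 2 + EPS)
            + (pred[3] - gt[3]) ** 2 / (ch ** 2 + EPS))


if __name__ == "__main__":
    pred, gt = (0, 0, 1, 1), (0, 0, 2, 2)  # same centre, same aspect ratio
    print(round(ciou_loss(pred, gt), 6), round(eiou_loss(pred, gt), 6))  # 0.75 1.25
```

For a prediction that is half the size of the ground truth with the same aspect ratio, CIoU’s aspect term is zero and only 1 − IoU remains, whereas EIoU still adds explicit width and height penalties.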

Based on this, the study proposes a hybrid optimization strategy (ECIoU) that combines the advantages of CIoU and EIoU. First, CIoU is used for coarse-grained adjustment in the initial optimization stage, quickly bringing the aspect ratios of the predicted boxes into a reasonable range. Then, EIoU is used for fine-grained optimization, accurately adjusting the positions of the box edges until the optimal solution is reached. This staged strategy preserves convergence speed while improving final localization accuracy. The core idea is to enhance localization performance by separating the influence of the aspect ratio and measuring the similarity between predicted and ground-truth boxes more accurately. Specifically, the ECIoU loss is defined as follows:

where $IoU$ denotes the intersection over union of the predicted box and the ground-truth box; $\rho^2(b, b^{gt})$ is the squared Euclidean distance between the centers $b$ and $b^{gt}$ of the predicted and ground-truth boxes; $c^2$ is the squared diagonal length of the smallest box enclosing both boxes; and $v$ measures the consistency of the aspect ratios of the two boxes, calculated as:

$$v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2$$

where $w$, $h$ and $w^{gt}$, $h^{gt}$ are the widths and heights of the predicted and ground-truth boxes, respectively.

The innovations of ECIoU are as follows. a. Separate aspect-ratio penalty: by computing width and height differences independently, it avoids the penalty failure that CIoU suffers when the aspect ratios are equal. b. Dynamic adjustment mechanism: when the predicted box deviates widely or its edges are far from those of the ground-truth box, CIoU is prioritized for coarse adjustment; once the aspect ratio is within a reasonable range, EIoU takes over for fine adjustment, thus accelerating convergence. c. Computational efficiency: ECIoU is more computationally efficient than CIoU because it reduces the need for complex inverse trigonometric calculations. In summary, by combining the advantages of CIoU and EIoU, ECIoU improves the convergence efficiency of the model and its detection accuracy, making it a reliable solution for object detection in complex scenes.

To improve the generalization ability of the detection model, this study employs a sample optimization strategy based on dynamic weight allocation. The core idea is to adaptively adjust the contribution of regression samples according to task requirements through a linear transformation. Specifically, we optimize bounding box regression by reconstructing the IoU as follows:

$$IoU^{focaler} = \begin{cases} 0, & IoU < d \\ \dfrac{IoU - d}{u - d}, & d \le IoU \le u \\ 1, & IoU > u \end{cases}$$

The Focaler-ECIoU proposed in this work extends the standard ECIoU with a dynamic focus adjustment mechanism. It differentiates between samples of different quality through a loss weight adjustment strategy based on linear interpolation, implementing a linear mapping within the threshold interval $[d, u] \subseteq [0, 1]$:

$$L_{Focaler\text{-}ECIoU} = L_{ECIoU} + IoU - IoU^{focaler}$$
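The linear focusing step can be sketched in a few lines. This follows the Focaler-IoU formulation (remap IoU on an interval [d, u], then add IoU − IoU^focaler to a base IoU-style loss). The thresholds d and u are left as inputs because their values are not stated in this section, and the function names are hypothetical.

```python
def focaler_iou(iou, d, u):
    """Linearly remap IoU on [d, u] to [0, 1]; clamp outside (0 <= d < u <= 1)."""
    if not 0.0 <= d < u <= 1.0:
        raise ValueError("require 0 <= d < u <= 1")
    if iou < d:
        return 0.0
    if iou > u:
        return 1.0
    return (iou - d) / (u - d)


def focaler_loss(base_loss, iou, d, u):
    """Focaler variant of an IoU-based loss: L_base + IoU - IoU_focaler."""
    return base_loss + iou - focaler_iou(iou, d, u)


if __name__ == "__main__":
    for iou in (0.1, 0.5, 0.9):
        print(iou, focaler_iou(iou, 0.2, 0.8))
```

Samples with IoU below d are treated as fully misaligned and samples above u as fully aligned, so the gradient emphasis shifts towards the interval the thresholds select. With d = 0 and u = 1 the mapping is the identity and the base loss is recovered unchanged.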

3. Experiments and Discussion

In this study, the effectiveness of the proposed method for UAV target detection is verified through systematic experiments consisting of three parts. First, a complete test environment is built on two widely used UAV datasets, VisDrone2019 and UAVDT, and the experimental conditions (hardware configuration, software environment, etc.) and performance evaluation metrics are specified. Second, to evaluate the method comprehensively, the proposed technique is compared with current mainstream algorithms on the VisDrone2019 dataset, and the results demonstrate the advantages of the method. Third, the contribution of each component of the model is analyzed in depth through a systematic ablation study on the UAVDT dataset, which provides an important basis for optimizing the method.

3.1. Experiment Environment

All experiments were conducted with PyTorch 2.1.2 (Python 3.9) on Ubuntu 18.04 to ensure consistent testing and validation. Models were trained and evaluated on a 16 GB NVIDIA GeForce RTX 4080Ti GPU with CUDA 11.8 and cuDNN to accelerate training. To maintain fairness and comparability, no pre-trained weights were used.

3.2. Datasets

VisDrone2019 [32] is a large-scale UAV vision benchmark created by the AISKYEYE team at Tianjin University and is widely used in research on object detection and scene understanding. It was collected in diverse scenes across 14 cities in China and contains 10,209 high-resolution images (6471 for training, 548 for validation, and 3190 for testing) and 288 video sequences, with a total of 2.6 million annotated instances of 10 categories of traffic-related targets (pedestrians, vehicles, etc.) and fine-grained attributes such as occlusion degree and motion state. Its notable characteristics are a high proportion of small targets (about 60% are smaller than 32 × 32 pixels), multi-scale variation, and complex occlusion, which effectively simulate the detection challenges of real aerial scenes and make it an authoritative benchmark for evaluating algorithm robustness.

UAVDT (Unmanned Aerial Vehicle Detection and Tracking) [33] is a large-scale benchmark dataset for UAV vision tasks. It contains 100 aerial video sequences totalling about 80,000 frames at a resolution of 1024 × 540, covering diverse scenarios such as city roads, highways, and intersections. The dataset is strictly divided into 31 training videos (23,829 frames) and 19 test videos (16,580 frames) to ensure data independence. It is labelled with three types of vehicle targets (cars, trucks, and buses) and 14 environmental attributes (e.g., weather, altitude, and occlusion). Its difficulty stems from UAV motion, illumination variation, and target occlusion, making it suitable for object detection and tracking studies. For our experiments, images are resized to 640 × 640.

3.3. Evaluation Metrics

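Quantitative analysis was conducted using standard evaluation metrics: Precision (P), Recall (R), Average Precision (AP), and mean Average Precision (mAP), which describe different aspects of network performance. They are computed as

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

$$AP = \int_0^1 P(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $TP$ denotes positive samples correctly identified as positive; $FP$ denotes negative samples incorrectly classified as positive; $FN$ denotes positive samples incorrectly classified as negative; and $N$ is the number of object categories. mAP50 is the mAP at an IoU threshold of 0.5, and mAP50-95 is the mAP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05.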
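The AP integral is evaluated in practice from a ranked list of detections. The sketch below is a minimal single-class version using all-point interpolation (a monotone precision envelope), which is one common variant. Evaluation toolkits differ in interpolation details, so numbers may not match a given framework exactly. Matching detections to ground truth at an IoU threshold is assumed to have been done already, and the function names are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """P = TP / (TP + FP), R = TP / (TP + FN); 0 when a denominator is 0."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class (assumes num_gt > 0).

    scores: confidence of each detection; is_tp: whether each detection
    matched a ground-truth box at the chosen IoU threshold.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp_cum = fp_cum = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp_cum += 1
        else:
            fp_cum += 1
        recalls.append(tp_cum / num_gt)
        precisions.append(tp_cum / (tp_cum + fp_cum))
    # Precision envelope: make precision non-increasing as recall grows
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p  # area of each recall step
        prev_r = r
    return ap


def mean_ap(ap_per_class):
    """mAP: mean of per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)
```

For example, four detections ranked TP, FP, TP, TP against three ground-truth boxes give an AP of 5/6. mAP50-95 repeats this at each IoU threshold from 0.5 to 0.95 and averages the results.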

3.4. Performance Comparison of Similar Models

To evaluate the effectiveness of the proposed algorithm, comparative experiments were conducted on the VisDrone2019 dataset between the original YOLOv8n algorithm as the baseline and the improved DDF-YOLO algorithm. To ensure statistical significance and robustness, all experiments were repeated five times with a consistent set of random seeds. As shown in Table 1, all performance metrics of the enhanced model improve significantly. Precision increased from 46.6% to 53.3%, and recall improved from 34.4% to 42.6%. mAP50 rose substantially from 34.9% to 43.5%, a relative improvement of 24.6%. In addition, inference speed increased by 11.2%, from 161 to 179 FPS.
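Because the metrics are themselves percentages, absolute gains (percentage points) and relative gains are easy to conflate. The short check below reproduces the figures quoted above from the reported values; the helper names are hypothetical.

```python
def relative_gain(before, after):
    """Relative improvement in percent: (after - before) / before * 100."""
    return (after - before) / before * 100.0


def absolute_gain(before, after):
    """Absolute improvement in percentage points (for metrics already in %)."""
    return after - before


if __name__ == "__main__":
    print(round(absolute_gain(34.9, 43.5), 1))  # mAP50: 8.6 points
    print(round(relative_gain(34.9, 43.5), 1))  # mAP50: 24.6 (% relative)
    print(round(relative_gain(161, 179), 1))    # FPS: 11.2 (% relative)
```

The 24.6% mAP50 figure is therefore a relative gain corresponding to an absolute increase of 8.6 percentage points.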

To further validate the reliability of our results, we report the mean ± standard deviation and 95% confidence intervals for all key metrics over five independent runs. As shown in Table 1 and Table 2, our method consistently outperforms the baseline on all evaluation metrics. Paired t-tests further confirm these improvements, yielding p-values below 0.001 for all key metrics. These results indicate that, through enhanced global feature processing, DDF-YOLO delivers strong performance and good deployment potential in UAV target detection scenarios.
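For five runs per model, the paired t statistic and the 95% confidence interval can be computed with the standard library alone. The sketch below uses tabulated two-sided critical values of Student’s t with 4 degrees of freedom (2.776 for α = 0.05 and 8.610 for α = 0.001) instead of computing p-values directly. Exact p-values would need a t-distribution CDF, for example via `scipy.stats.ttest_rel`. The per-run numbers are not given in this section, so the test uses synthetic values, and the helper names are hypothetical.

```python
import math
import statistics

# Two-sided critical values of Student's t with 4 degrees of freedom (n = 5 runs)
T_CRIT_DF4 = {0.05: 2.776, 0.001: 8.610}


def paired_t(baseline, model):
    """Paired t statistic for per-run metric values of two models."""
    diffs = [m - b for b, m in zip(baseline, model)]
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.mean(diffs) / se


def ci95(values, t_crit=T_CRIT_DF4[0.05]):
    """95% confidence interval of the mean for n = 5 runs."""
    mean = statistics.mean(values)
    half = t_crit * statistics.stdev(values) / math.sqrt(len(values))
    return mean - half, mean + half


def significant_at_0001(baseline, model):
    """True if |t| exceeds the two-sided 0.001 critical value for df = 4."""
    return abs(paired_t(baseline, model)) > T_CRIT_DF4[0.001]
```

With only five runs the 0.001 threshold requires |t| > 8.61, so a p < 0.001 claim implies a large gain relative to the run-to-run spread of the per-run differences.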

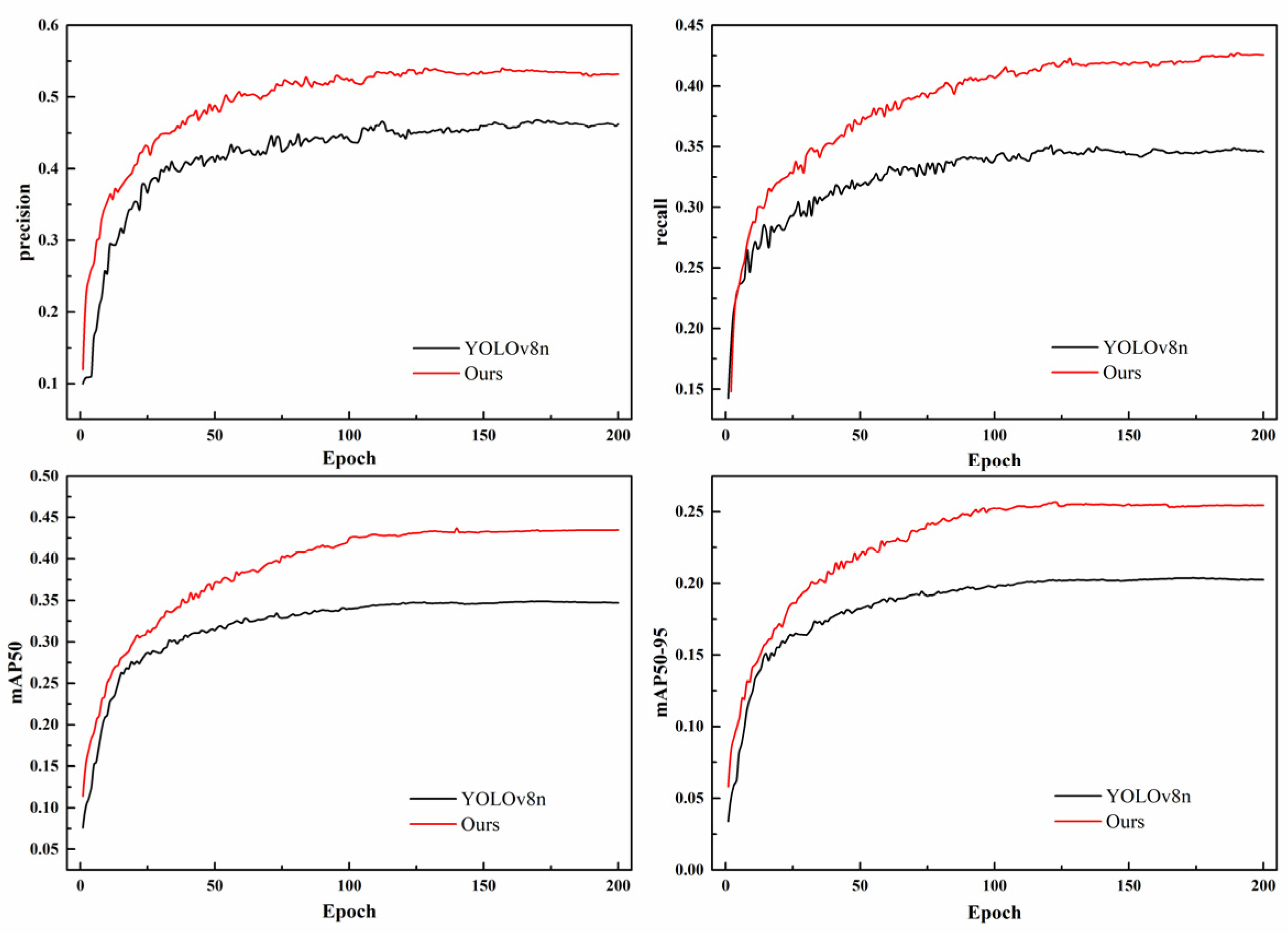

To illustrate the effect of the model enhancements more intuitively, Figure 6 plots the training curves of the key detection metrics (precision, recall, mAP50, and mAP50-95) on the validation set before and after the enhancements. The improved model outperforms the baseline YOLOv8 model on all metrics: (1) throughout training (epochs 0–200), every metric curve of the improved model lies above that of the baseline; and (2) the improved model converges faster and stabilizes at higher values. The overall upward trend of the curves further confirms the performance advantage of the improved model in the object detection task.

3.5. Comparison of Results of Different Models Experiments

To verify the performance advantages of the proposed algorithm, several representative works in UAV target detection were selected for comparative analysis. Specifically, a comprehensive comparison was conducted between DDF-YOLO and current state-of-the-art UAV target detection models. The comparison benchmarks include: (1) YOLO baseline models at different scales, (2) multiple improved algorithms based on the YOLO framework, and (3) other classical object detection architectures. The quantitative results on the VisDrone2019 benchmark are shown in Table 3, which compares the models in terms of key metrics such as the number of parameters, precision, recall, and mAP.

As shown in Table 3, DDF-YOLO exhibits significant advantages in overall performance. (1) Compared with the baseline YOLO series, although DDF-YOLO has slightly more parameters than some lightweight versions (e.g., YOLOv5n, YOLOv10n, and YOLOv11n), it shows clear advantages in key metrics such as precision (improved by 2–9.7%), recall (improved by 2.1–11.8%), mAP50 (improved by 2.2–11.3%), and FPS (up to 179). (2) Compared with other enhanced YOLO algorithms, DDF-YOLO also achieves notable advantages in key performance indicators, maintaining its position as a leading solution in this field. (3) Compared with conventional object detection models, DDF-YOLO achieves substantial model compression while preserving higher detection accuracy, with more than 16.6 M fewer parameters and a 4.6% improvement in mAP50. These results show that DDF-YOLO effectively balances light weight and high accuracy and performs well in UAV small target detection tasks.

3.6. Ablation Study

To verify the effectiveness of the improved method, a series of ablation experiments was conducted on the UAVDT dataset to test each module, using the same testing platform and model hyperparameters for comparative analysis. Recall, precision, mAP, FLOPs, and the number of parameters were used as evaluation indicators to assess the effect of each improvement; the results are shown in Table 4.

Comprehensive ablation studies were conducted with eight experimental configurations to systematically evaluate the contribution of each component: (1) the original YOLOv8 model served as the baseline for performance comparison; (2) the C2f-DCNv4 module was added to examine its effectiveness in detecting irregular small objects in UAV imagery; (3) the DySample module replaced the conventional upsampling operation in the neck network to assess its effects on computational efficiency and detection accuracy; (4) an additional detection layer designed for small objects was incorporated to assess its ability to enhance multi-scale feature representation, particularly for small targets; and (5) the Focaler-ECIoU loss function was introduced to validate its improvement in bounding box regression precision. Experiments (6)–(8) then progressively integrated these components in a cumulative manner to investigate their synergistic effects on overall performance.

Ablation studies are a fundamental methodology for evaluating performance improvements in object detection algorithms for UAV imagery. As shown in Table 4, the experimental results validate the effectiveness of each proposed module:

Experiment 2 shows that the dynamic feature extraction module (C2f-DCNv4), built on DCNv4, significantly improves feature representation for deformed objects, occluded scenes, and multi-scale targets. By leveraging dynamic sparse attention and adaptive spatial sampling, it maintains a good balance between detection accuracy and inference speed. Notably, it reduces model parameters by 5.5% while improving mAP through simultaneous gains in both precision and recall.

Experiment 3 demonstrates that the DySample module effectively improves multi-scale feature fusion through dynamic upsampling weight adjustment. Particularly for small object detection in aerial imagery, the module reduces both false positive and false negative rates with only a negligible number of additional learnable parameters, ultimately achieving a 1% improvement in mAP50.

Experiment 4 indicates that the newly added small-object detection layer substantially mitigates missed detections of tiny objects in aerial images by strengthening high-resolution feature extraction. The refined multi-scale fusion strategy contributes to a remarkable overall mAP improvement.

Experiment 5 confirms the efficacy of the proposed Focaler-ECIoU loss function, which not only maintains bounding box regression accuracy but also accelerates convergence without increasing model complexity.

Experiments 6–8 systematically assess the synergy between module combinations. The results show consistent performance gains, with mAP50 increasing by 2.6%, 3.9%, and 4.8% in Experiments 6, 7, and 8, respectively. Notably, adding the small-object detection layer does not increase the parameter count beyond that of the baseline YOLOv8 model. This design addresses three key requirements: (1) a lightweight model, (2) simple deployment on mobile platforms, and (3) real-time detection with retained accuracy.

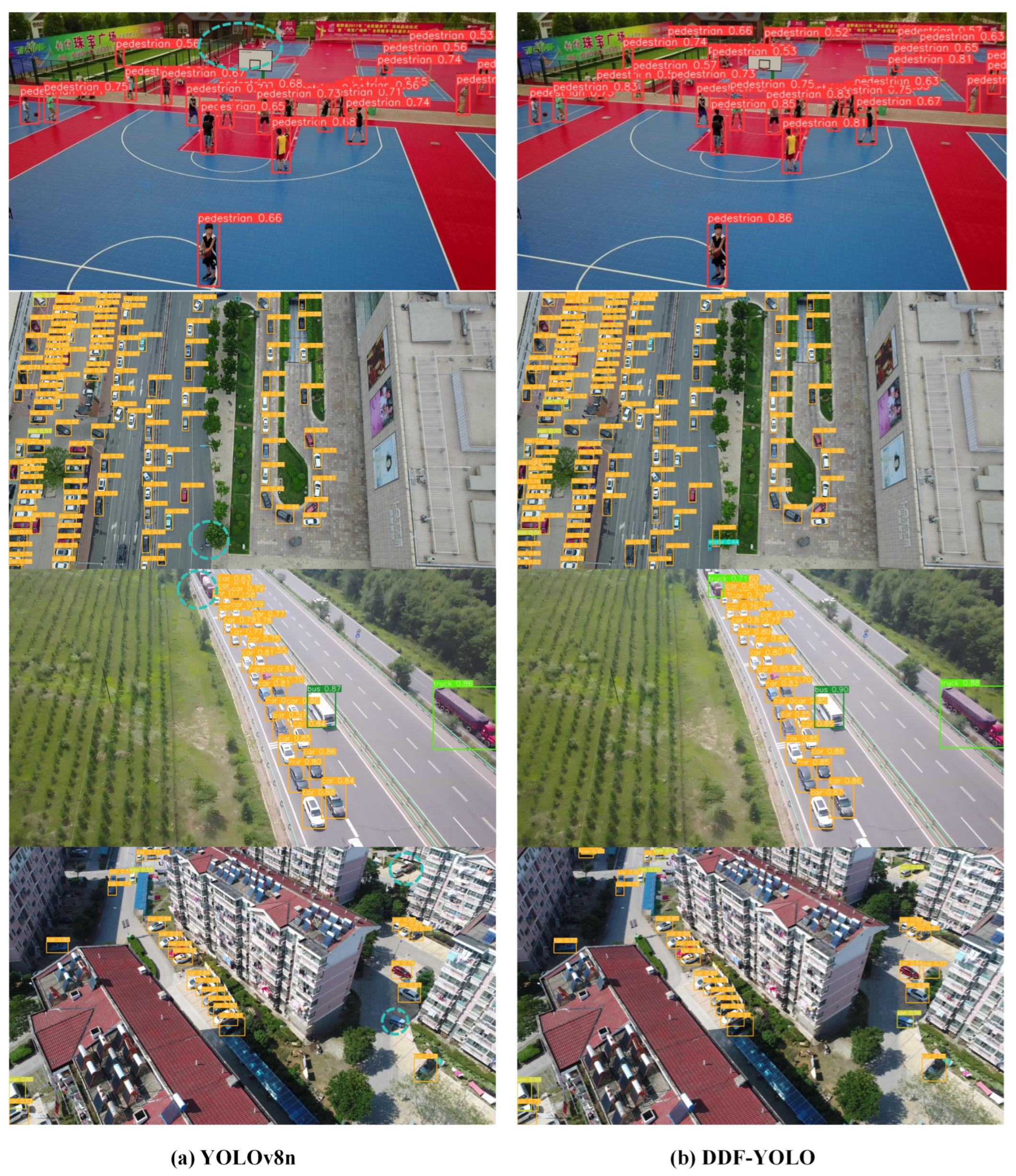

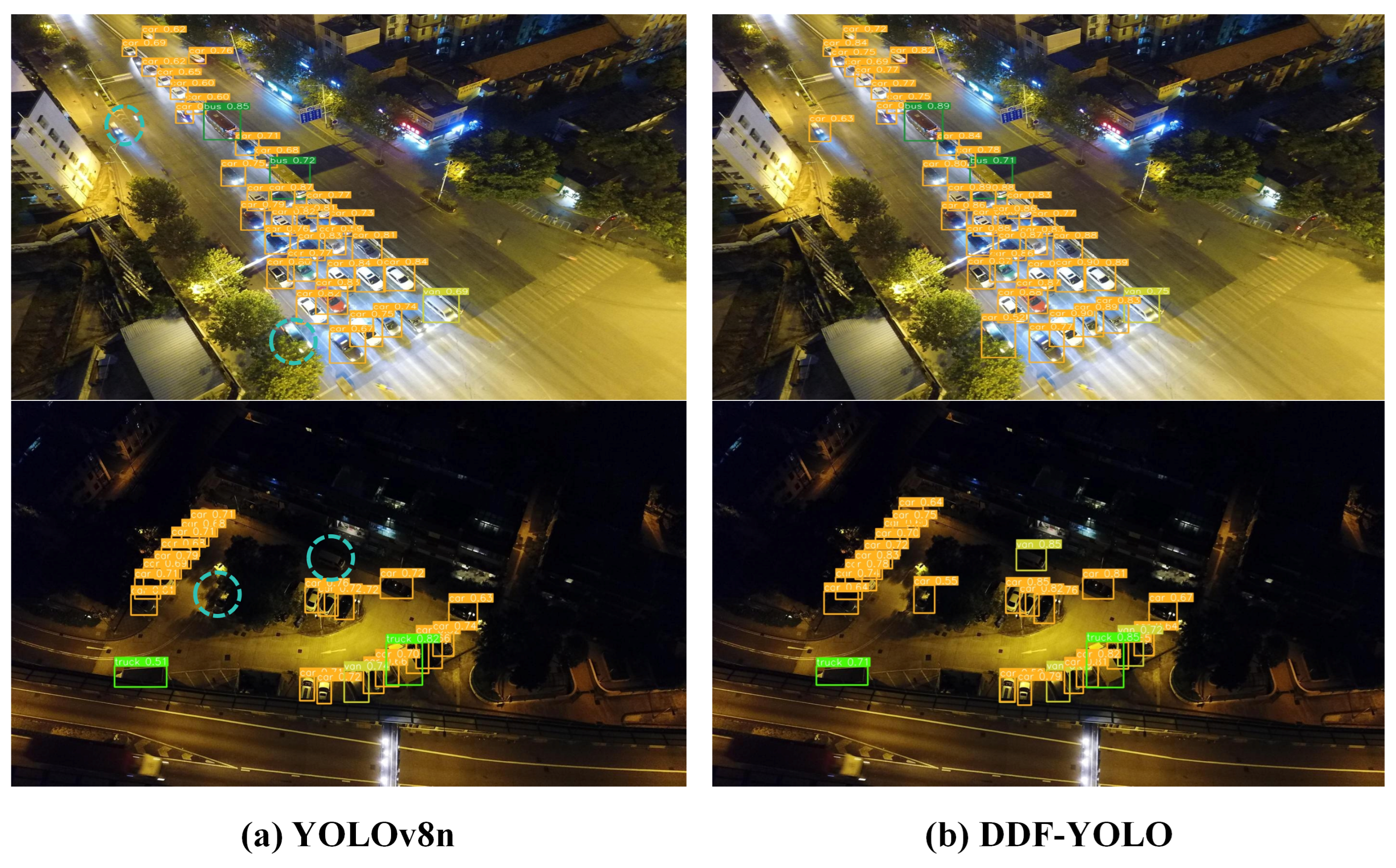

3.7. Visualization of Test Results

To comprehensively evaluate the robustness and generalization capability of the proposed algorithm for aerial image detection, we conducted experimental validation on representative multi-scenario samples selected from two publicly available benchmark datasets, VisDrone2019 and UAVDT. The selected samples cover the following challenging detection scenarios: (1) complex background environments, including playgrounds, urban roads, and residential areas; (2) varying illumination conditions, covering both daytime and nighttime scenes; and (3) diverse shooting perspectives, involving both top-view and side-view aerial angles. These scenarios contain numerous densely distributed objects with significant scale variations as well as severe occlusion, providing representative test data for evaluating algorithm performance. Furthermore, we compared the proposed algorithm with the YOLOv8 baseline model under identical test scenarios to analyze qualitatively the improvement achieved by our approach.

3.7.1. Detection Results on the VisDrone2019 Dataset

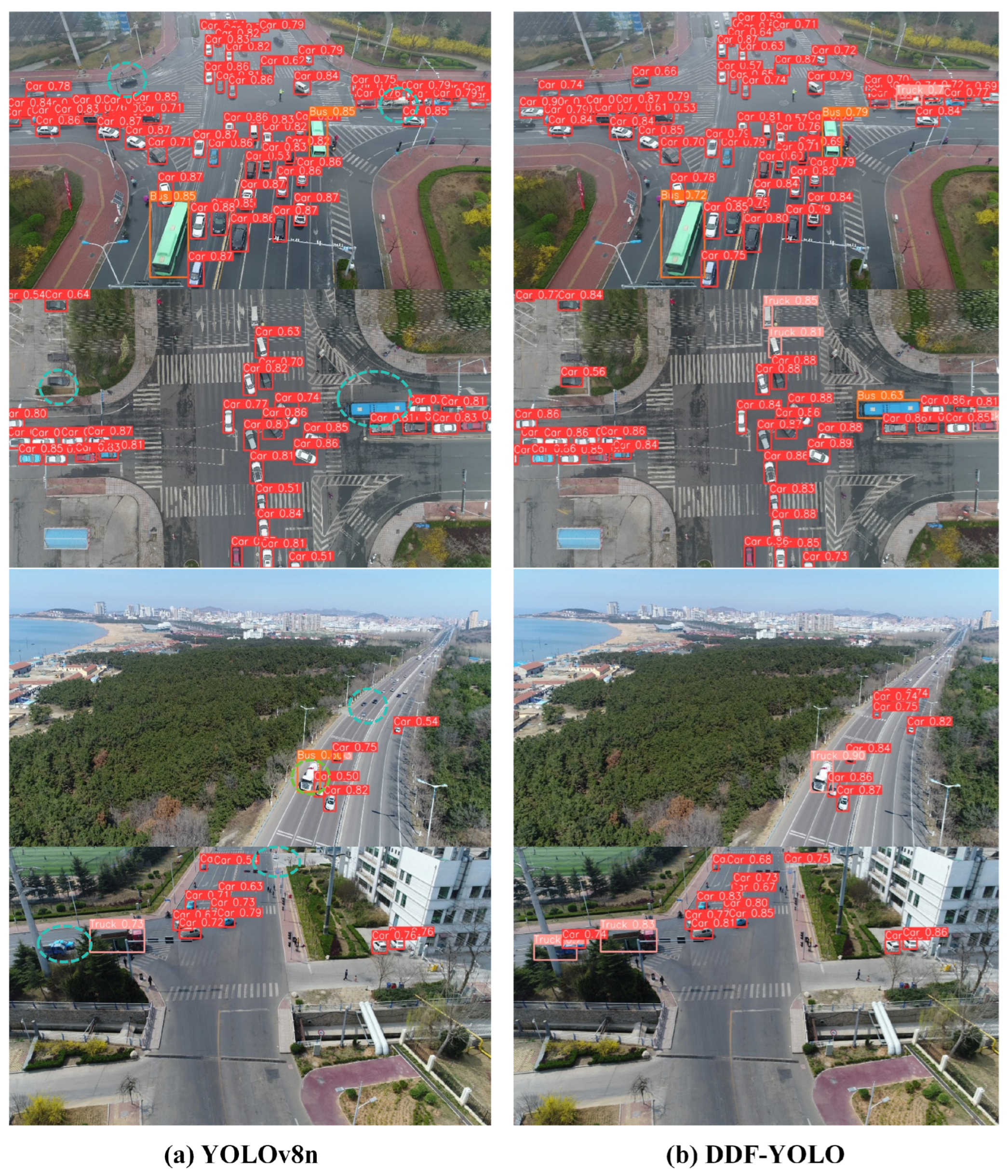

This study evaluates the performance advantages of the DDF-YOLO model through multi-scenario comparative experiments. As illustrated in Figure 7, the comparison of detection results in daytime scenarios (including playgrounds, urban intersections, highways, and residential areas) reveals clear limitations of the original YOLOv8n model, particularly (1) frequent missed detections and false alarms for occluded objects and dense small targets, and (2) inadequate perception of distant objects near image boundaries. In contrast, the proposed DDF-YOLO model shows marked improvements in these respects, with higher detection sensitivity and accuracy, especially for small objects.

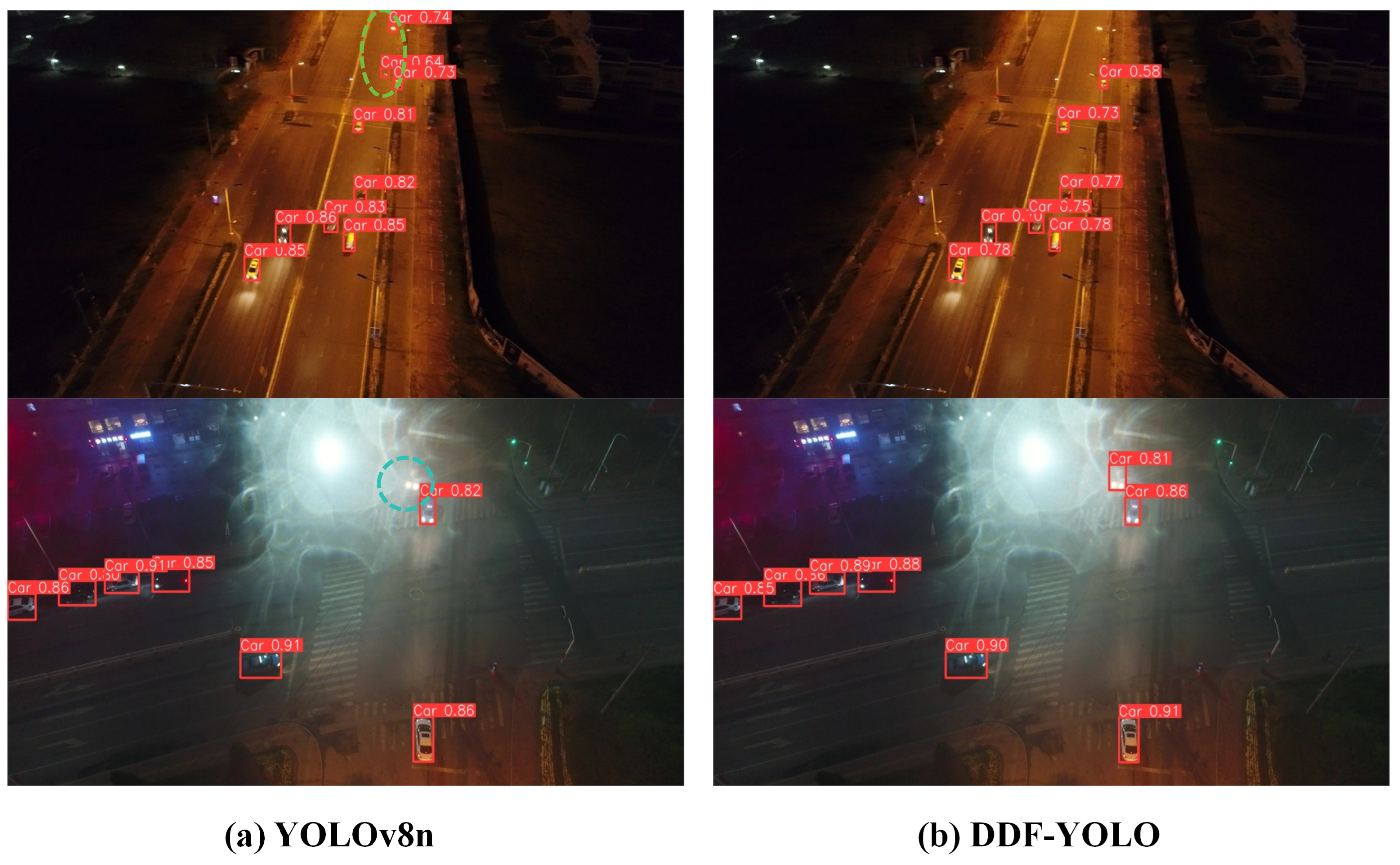

To further assess the model’s robustness in challenging environments, we evaluated its detection performance under various nighttime illumination conditions. The results presented in Figure 8 indicate that, while the baseline YOLOv8n model has limited capability to detect low-contrast objects under both strong and dim lighting, DDF-YOLO adapts considerably better to these conditions. Specifically, the proposed model shows three advantages: (1) effective adaptation to illumination variations, (2) stable detection performance under extreme lighting conditions, and (3) accurate identification of low-contrast small objects. These qualitative results indicate that DDF-YOLO handles unevenly illuminated environments better than the baseline and improves object detection in complex nighttime scenes.

3.7.2. Detection Results on the UAVDT Dataset

To systematically evaluate the model’s generalization capability, we conducted comparative experiments using multi-scenario samples from the UAVDT dataset. As illustrated in Figure 9, our analysis of detection performance in daytime scenarios (including both rainy and sunny conditions) across typical environments such as urban intersections and suburban roads revealed three primary limitations of the baseline YOLOv8n model: (1) a marked increase in missed detections of vehicle targets under low-visibility conditions (e.g., rain and fog); (2) weak recognition of distant targets captured at large viewing angles; and (3) poor tracking continuity for partially occluded objects. In contrast, DDF-YOLO performed substantially better across these challenging scenarios and was particularly robust in aerial small-object detection.

To further validate the model’s adaptability to extreme environments, we selected nighttime scenarios characterized by (1) extremely low visibility, (2) complex background interference, and (3) high-density small-object distributions. The comparative results presented in Figure 10 show that the conventional YOLOv8n model suffered from nighttime light interference, manifested as false positives (e.g., misidentifying streetlights as vehicles) or complete failure to detect valid targets, whereas DDF-YOLO maintained detection quality comparable to that observed under well-lit conditions. Together, these experiments verify DDF-YOLO’s adaptability to complex environmental variations and support its feasibility for all-weather aerial image detection tasks.

3.8. Discussion

The proposed DDF-YOLO model effectively tackles the persistent challenges in UAV-based object detection, particularly the difficulty of detecting small targets against complex backgrounds with significant scale variations. Central to this improvement is the introduction of a multi-scale dynamic feature fusion mechanism, which substantially enhances the model’s ability to integrate and utilize features across different levels of abstraction.

The integration of the dynamic feature extraction module (C2f-DCNv4) and the dynamic upsampling module (DySample) forms the core of this multi-scale dynamic fusion strategy. Unlike static fusion approaches, our method dynamically adjusts receptive fields and fusion weights based on input characteristics, allowing the model to better adapt to irregular object shapes and significant scale differences. Specifically, the deformable convolution in C2f-DCNv4 enhances feature capture for non-rigid and partially occluded objects, while DySample optimizes the fusion process between shallow spatial details and deep semantic information. This dynamic fusion preserves critical fine-grained details necessary for small object detection while maintaining rich contextual awareness, thereby improving both localization accuracy and classification reliability across varying object sizes.
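As a rough illustration of how a dynamic upsampler differs from fixed interpolation, the PyTorch sketch below follows the point-sampling idea behind DySample: a 1 × 1 convolution predicts per-pixel sampling offsets from the input features, the offsets are added to a regular upsampling grid, and `torch.nn.functional.grid_sample` resamples the feature map at the shifted positions. It is a simplified single-group variant with a fixed 0.25 offset scope and zero-initialised offsets; the class name `DySampleSketch` and these choices are assumptions, not the configuration used in DDF-YOLO, and the C2f-DCNv4 module is not sketched here.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class DySampleSketch(nn.Module):
    """Simplified point-sampling upsampler in the spirit of DySample (single group, static scope)."""

    def __init__(self, channels: int, scale: int = 2, scope: float = 0.25):
        super().__init__()
        self.scale = scale
        self.scope = scope
        # 1x1 conv predicts an (x, y) offset for each of the scale*scale sub-pixel positions
        self.offset = nn.Conv2d(channels, 2 * scale * scale, kernel_size=1)
        # zero init (an assumption): the module starts out as plain bilinear upsampling
        nn.init.zeros_(self.offset.weight)
        nn.init.zeros_(self.offset.bias)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        _, _, h, w = x.shape
        s = self.scale
        # content-dependent offsets in input-pixel units, damped by the static scope factor
        off = F.pixel_shuffle(self.offset(x) * self.scope, s)  # (b, 2, s*h, s*w)
        # regular grid: centres of the output pixels in normalised [-1, 1] coordinates
        ys = (torch.arange(s * h, device=x.device, dtype=x.dtype) + 0.5) / (s * h) * 2 - 1
        xs = (torch.arange(s * w, device=x.device, dtype=x.dtype) + 0.5) / (s * w) * 2 - 1
        gy, gx = torch.meshgrid(ys, xs, indexing="ij")
        # shift the grid by the predicted offsets, converted from pixels to normalised units
        grid = torch.stack((gx + off[:, 0] * 2 / w, gy + off[:, 1] * 2 / h), dim=-1)
        return F.grid_sample(x, grid, mode="bilinear", padding_mode="border", align_corners=False)


if __name__ == "__main__":
    feats = torch.randn(1, 8, 5, 7)
    print(DySampleSketch(8)(feats).shape)  # torch.Size([1, 8, 10, 14])
```

With zero offsets the output matches standard bilinear upsampling, so training starts from a conventional neck and only learns where to deviate from the fixed grid, which is one way to read the "dynamic" fusion described above.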

Furthermore, the addition of a dedicated small-object detection layer strengthens the interaction between high-resolution shallow features and deep features, explicitly guiding the model to retain and emphasize low-level details that are often lost in standard feature pyramids. This design significantly boosts generalization across scales, as evidenced by the notable gains in both mAP50 and mAP50-95 on the VisDrone2019 and UAVDT datasets. The adaptive Focaler-ECIoU loss complements this architecture by dynamically modulating training emphasis based on sample difficulty, which further refines detection performance for challenging examples.

While the improvement in absolute mAP over recent models such as DDSC-YOLO and Drone-YOLO is moderate, the significantly reduced computational cost (only 7.3 GFLOPs) and higher inference speed (179 FPS) highlight the practical efficiency of our approach. This balance between accuracy and computational economy is especially valuable for real-time applications on resource-constrained platforms such as drones and mobile edge devices. Moreover, the model’s robustness across diverse scenarios may reduce the need for extensive post-processing or domain-specific tuning, which would ease real-world deployment.
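The throughput figure can be translated into a per-frame time budget. The sketch below converts the reported 179 FPS into milliseconds per frame and compares it with a 30 FPS video stream. The 30 FPS stream rate and the helper names are illustrative assumptions, not values from the paper, and because the 179 FPS figure was measured on a high-end GPU, the headroom will not carry over unchanged to embedded hardware.

```python
def frame_latency_ms(fps: float) -> float:
    """Average time per frame (ms) implied by a throughput in frames per second."""
    return 1000.0 / fps


def realtime_headroom(model_fps: float, stream_fps: float) -> float:
    """How many times faster than the input stream the detector runs (>1 means it keeps up)."""
    return model_fps / stream_fps


REPORTED_FPS = 179.0  # inference speed reported for DDF-YOLO
STREAM_FPS = 30.0     # assumed camera frame rate, for illustration only

print(f"{frame_latency_ms(REPORTED_FPS):.2f} ms per frame")            # 5.59 ms per frame
print(f"stream budget {frame_latency_ms(STREAM_FPS):.2f} ms")          # stream budget 33.33 ms
print(f"headroom x{realtime_headroom(REPORTED_FPS, STREAM_FPS):.2f}")  # headroom x5.97
```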

In summary, the multi-scale dynamic feature fusion strategy employed in DDF-YOLO offers a scalable and efficient solution for UAV-based detection tasks. Future work may explore end-to-end optimization of dynamic fusion parameters and extend the approach to video detection scenarios where temporal consistency is critical.

4. Conclusions

To address the technical challenges of UAV aerial imagery, including a high proportion of small objects, complex backgrounds, and significant scale variations among targets, this study proposes an enhanced object detection model named DDF-YOLO, which improves both detection accuracy and computational efficiency in identifying dense, multi-scale small objects against complex backgrounds. First, a dynamic feature extraction module (C2f-DCNv4) is integrated into the backbone network; its deformable convolution operations effectively capture the distinctive features of objects with complex contours and irregular shapes. Second, a DySample module is employed in the neck network to optimize multi-scale feature fusion, improving detection in challenging scenarios involving occlusions and small objects while maintaining computational efficiency. Third, a dedicated small-object detection layer is added to the architecture, improving the interaction between high-resolution shallow features and deep semantic features. This strengthens the model’s generalization across target scales, particularly for extremely small objects in aerial views. Finally, a novel Focaler-ECIoU loss function accelerates bounding box convergence and improves localization accuracy through an enhanced penalty mechanism. Comprehensive evaluations on the VisDrone2019 and UAVDT benchmark datasets demonstrate that DDF-YOLO achieves notable performance gains over the baseline model without additional computational overhead: mAP50 improves by 8.6% and 4.8%, and mAP50-95 by 5.0% and 3.3%, on VisDrone2019 and UAVDT, respectively. These results substantiate the model’s strong capability for UAV-based aerial target detection.

Despite demonstrating robust performance on established benchmarks, this study is subject to certain limitations. The models were trained and evaluated on large-scale public datasets, which may not adequately capture the full spectrum of real-world complexity, such as extreme weather, heavy occlusion, or niche operational scenarios. Furthermore, although the system achieves real-time performance on high-end GPUs, practical deployment on embedded or mobile platforms would require additional optimizations—such as model quantization, pruning, or neural architecture search—to maintain a suitable balance between accuracy, latency, and computational footprint. In the future, we plan to extend this work by rigorously evaluating its robustness under a wider range of conditions, specifically focusing on the impact of image scale, viewpoint tilt, and object size, to bridge the gap between benchmark performance and real-world applications.