Ontological Airspace-Situation Awareness for Decision System Support

Abstract

1. Introduction

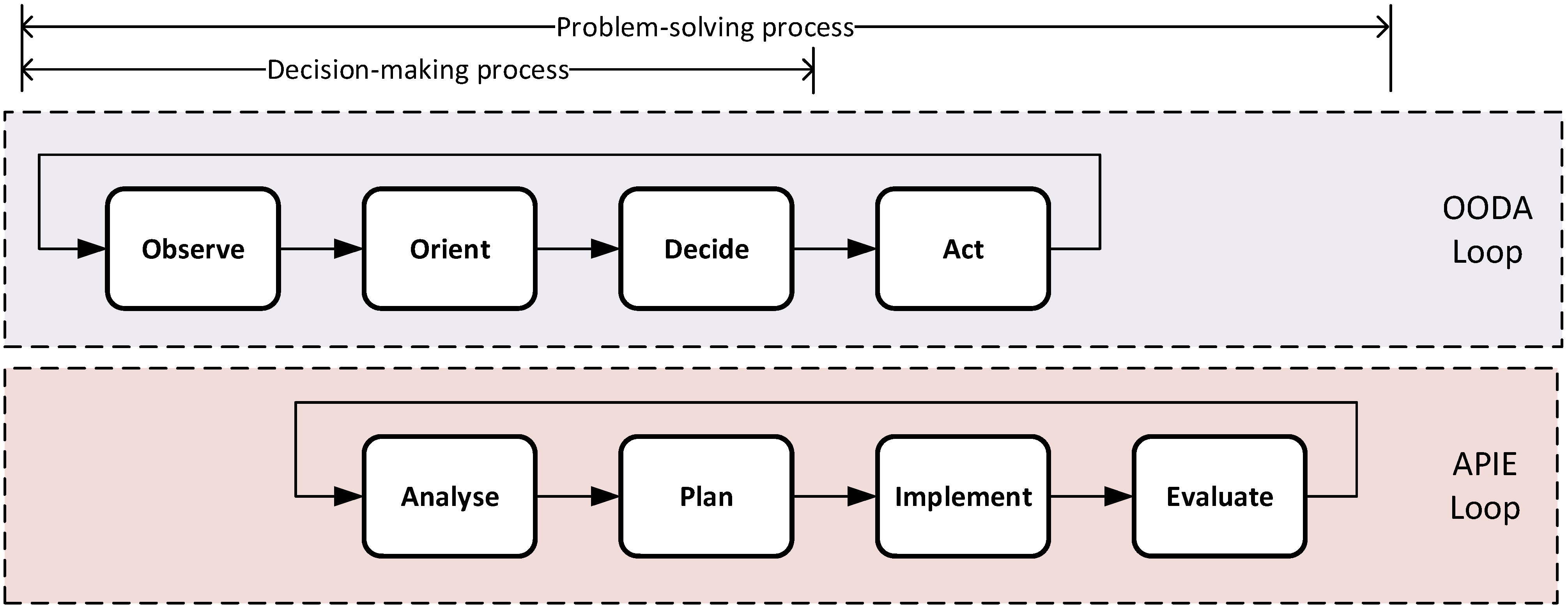

2. Related Works

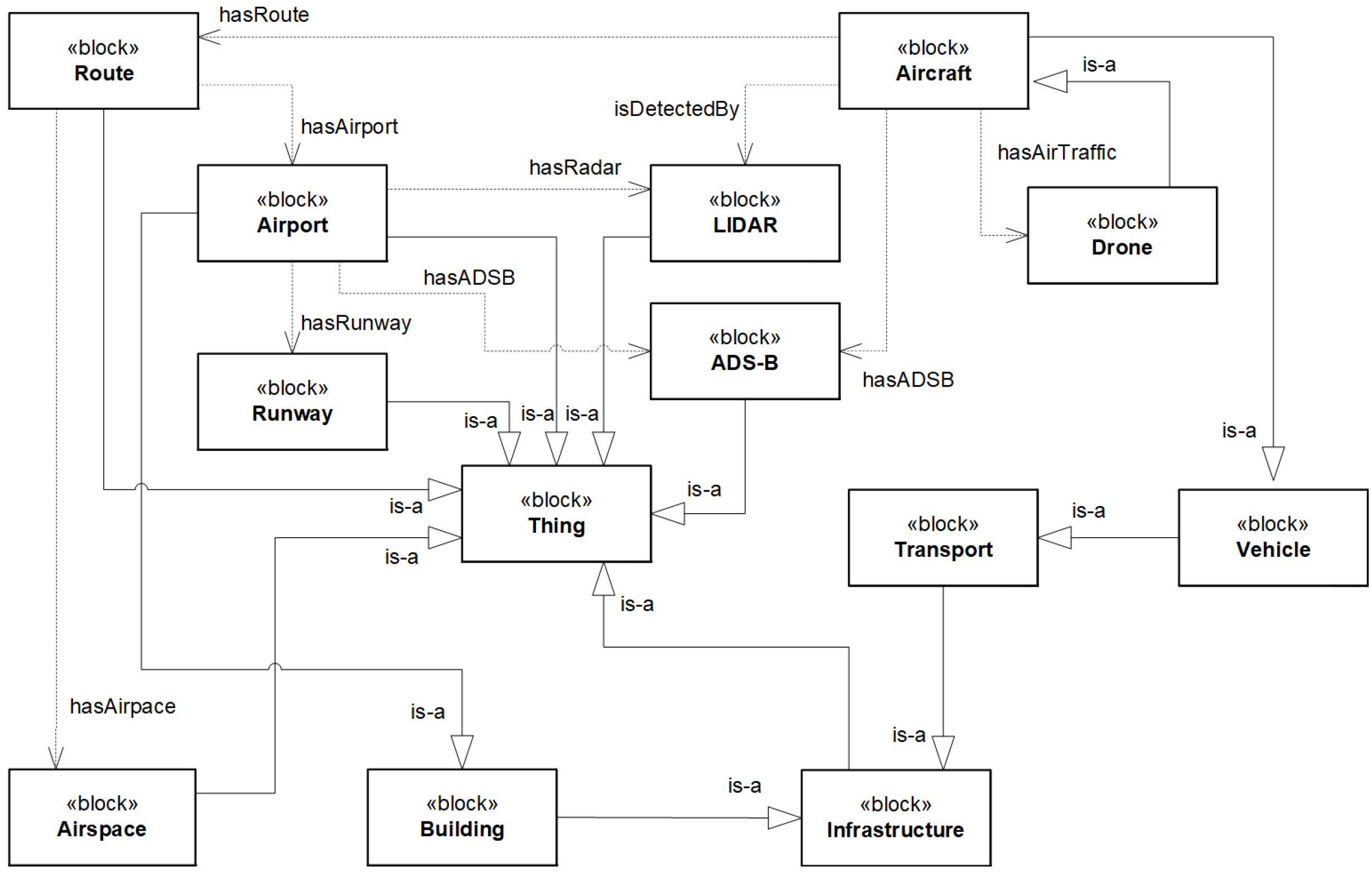

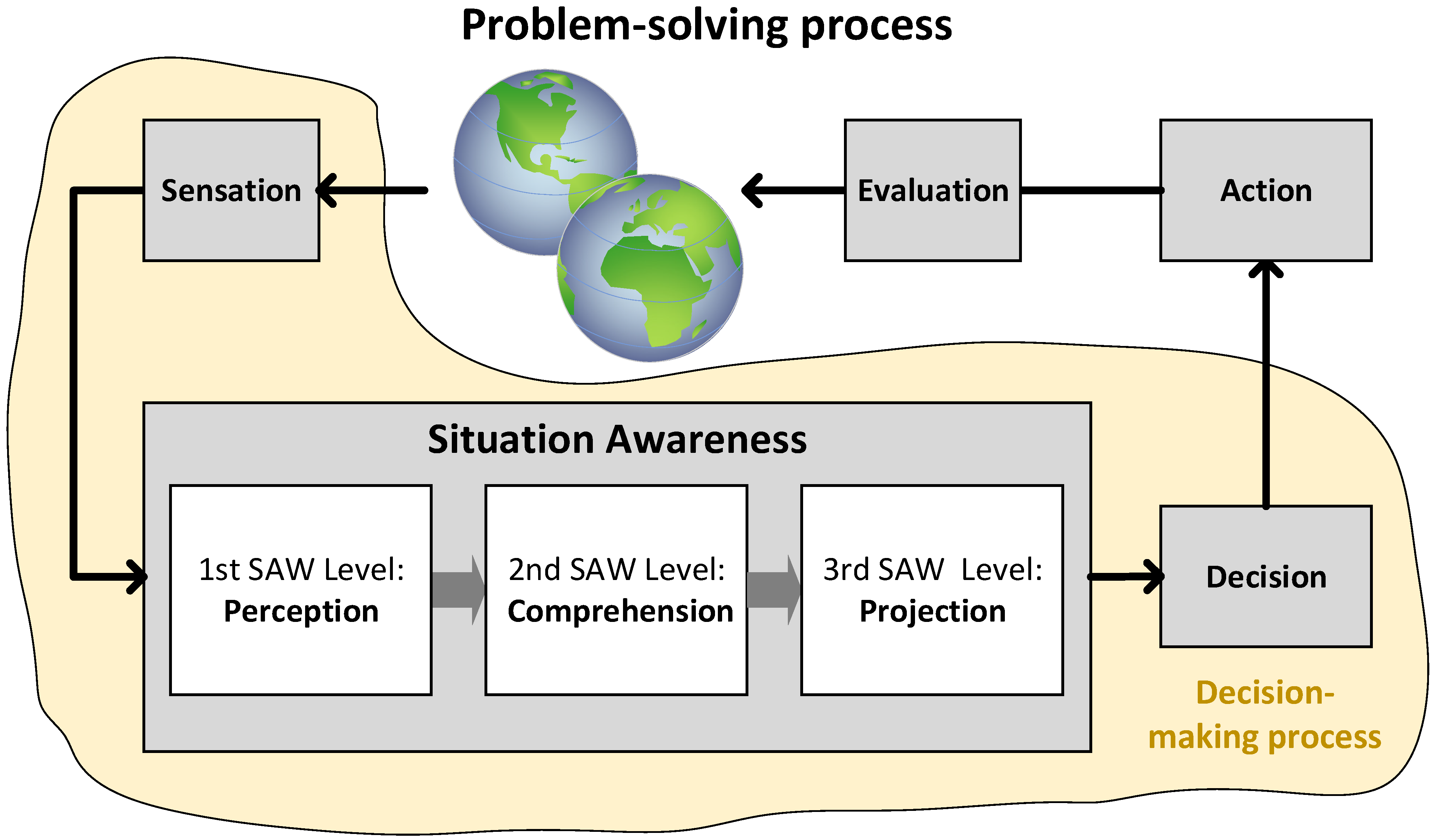

3. Knowledge-Based Situation Awareness

3.1. Air Traffic Situation Awareness

3.2. Description Logic

4. Framework Prototype

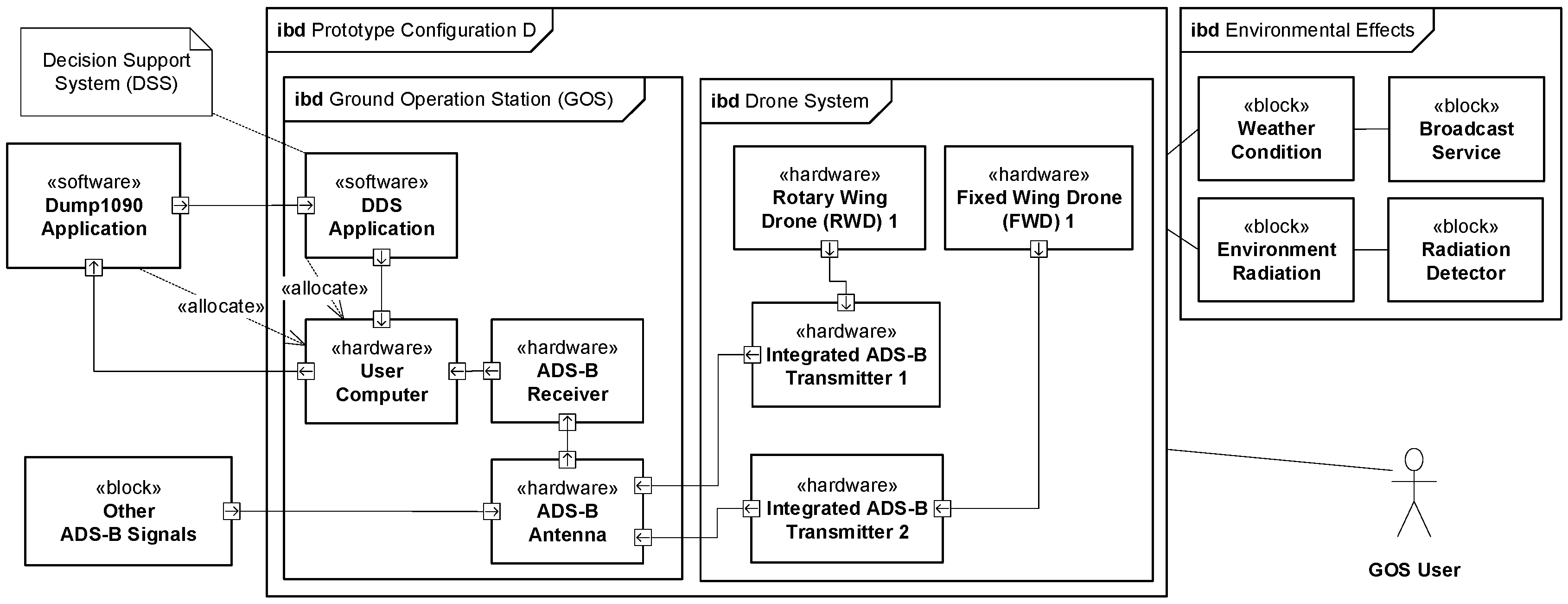

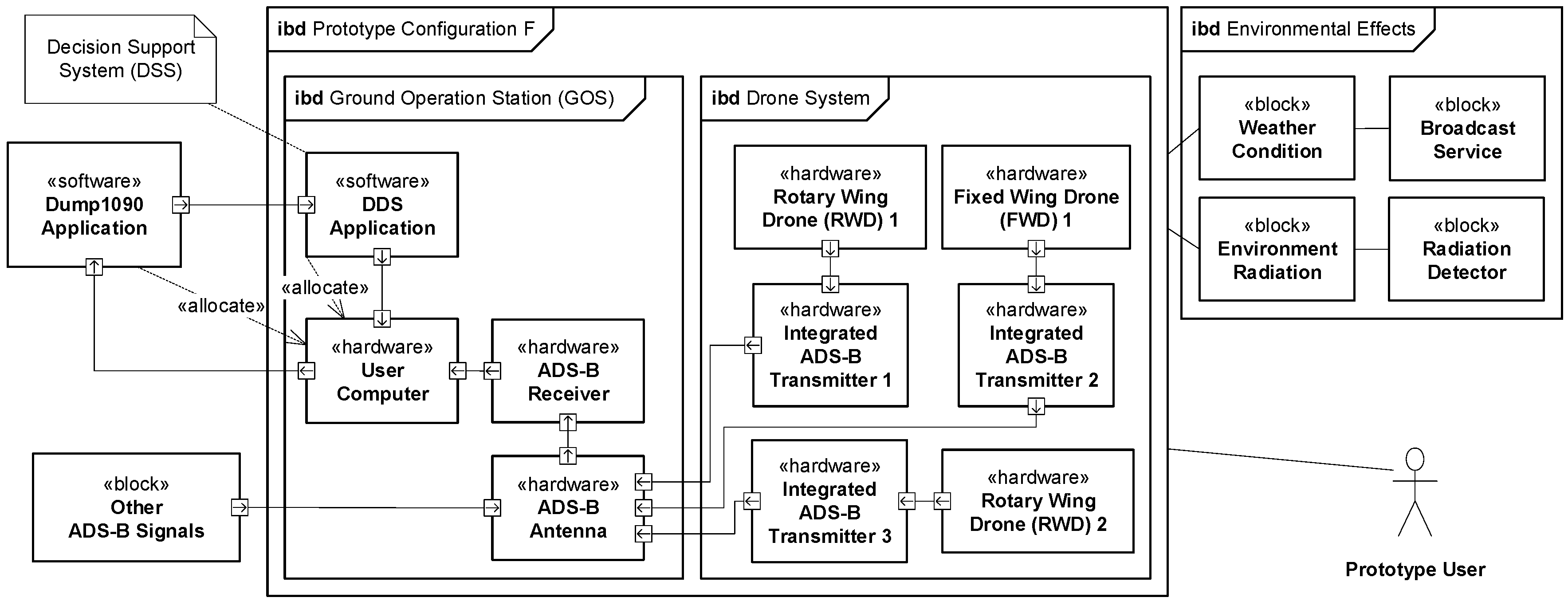

4.1. Hardware Prototype for the Framework

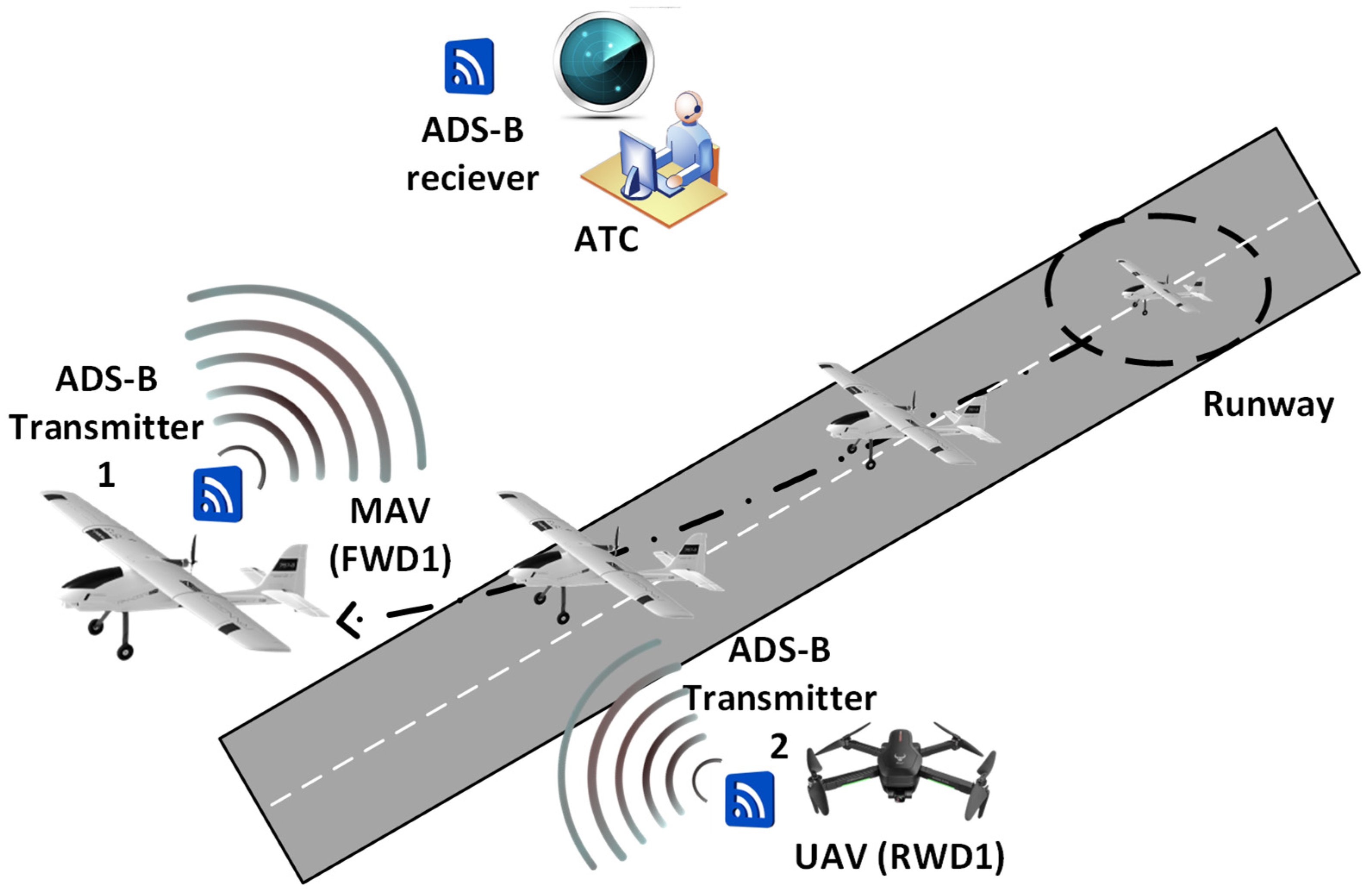

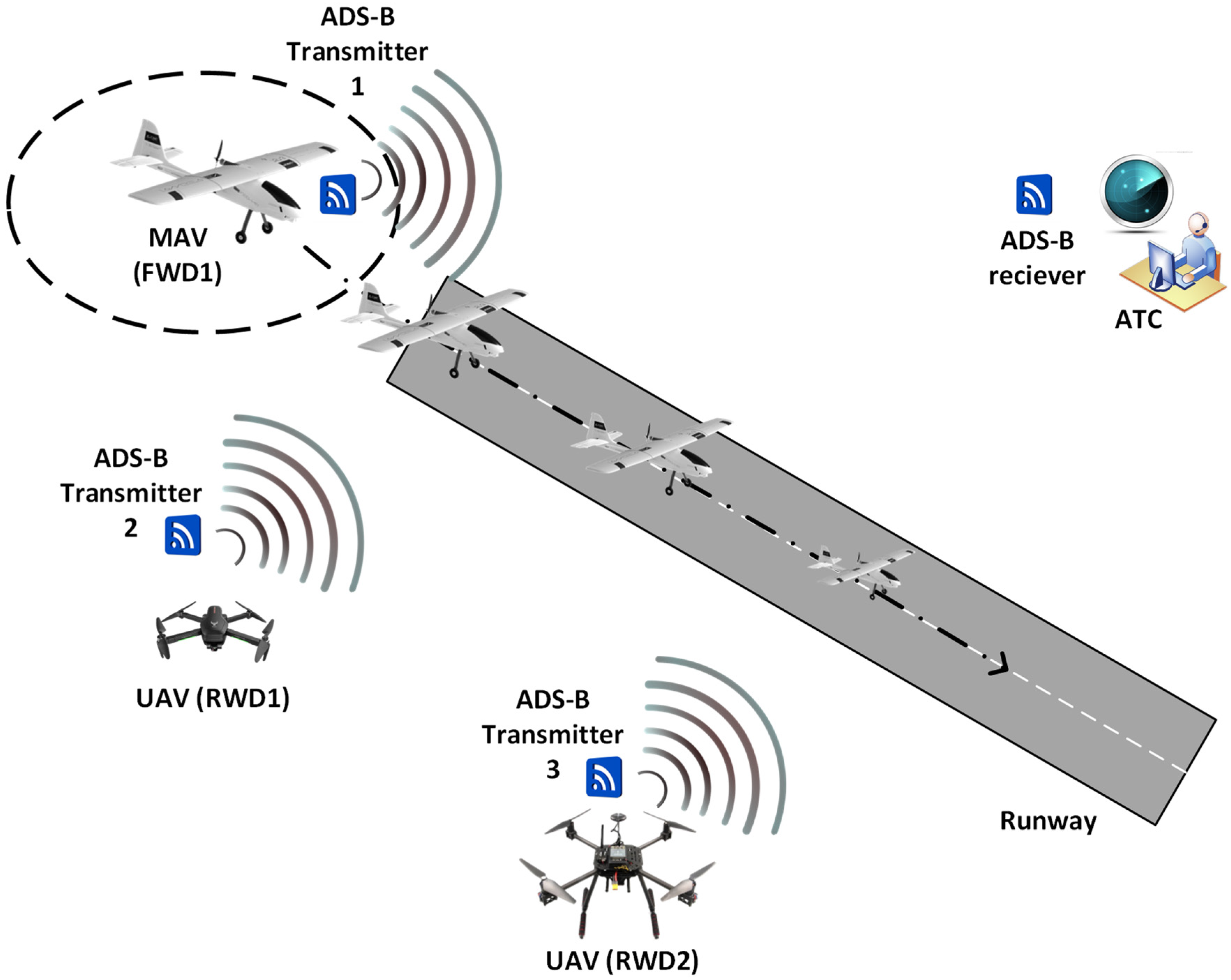

- RWD1 (RC quadcopter): ZLL SG906 Pro 5G WIFI FPV With 4K HD Camera 2-Axis Gimbal Optical Flow Positioning Brushless RC Drone Quadcopter RTF [49] equipped with an ADS-B transmitter.

- RWD2 (RC quadcopter): Holybro X500 Pixhawk 4 Mini 500 mm Wheelbase Frame Kit Combo 2216 880 KV Motor 1045 Propeller for RC Drone [50] equipped with an ADS-B transmitter.

- FWD1 (RC airplane; main role: passenger aircraft): ADS-B antenna and transmitter, battery, power manager, flight controller, motor driver, motor, servo driver, and servo.

- FWD2 (RC airplane; main role: drone): ADS-B antenna and transmitter (optional), battery, power manager, flight controller, motor driver, motor, servo driver, and servo.

- RWD1 (RC quadcopter; main role: drone): battery, power manager, flight controller, motor driver, and motors.

- RWD2 (RC quadcopter; main role: drone): ADS-B antenna and transmitter, battery, power manager, flight controller, motor driver, motors, and telemetry transceiver and antenna.

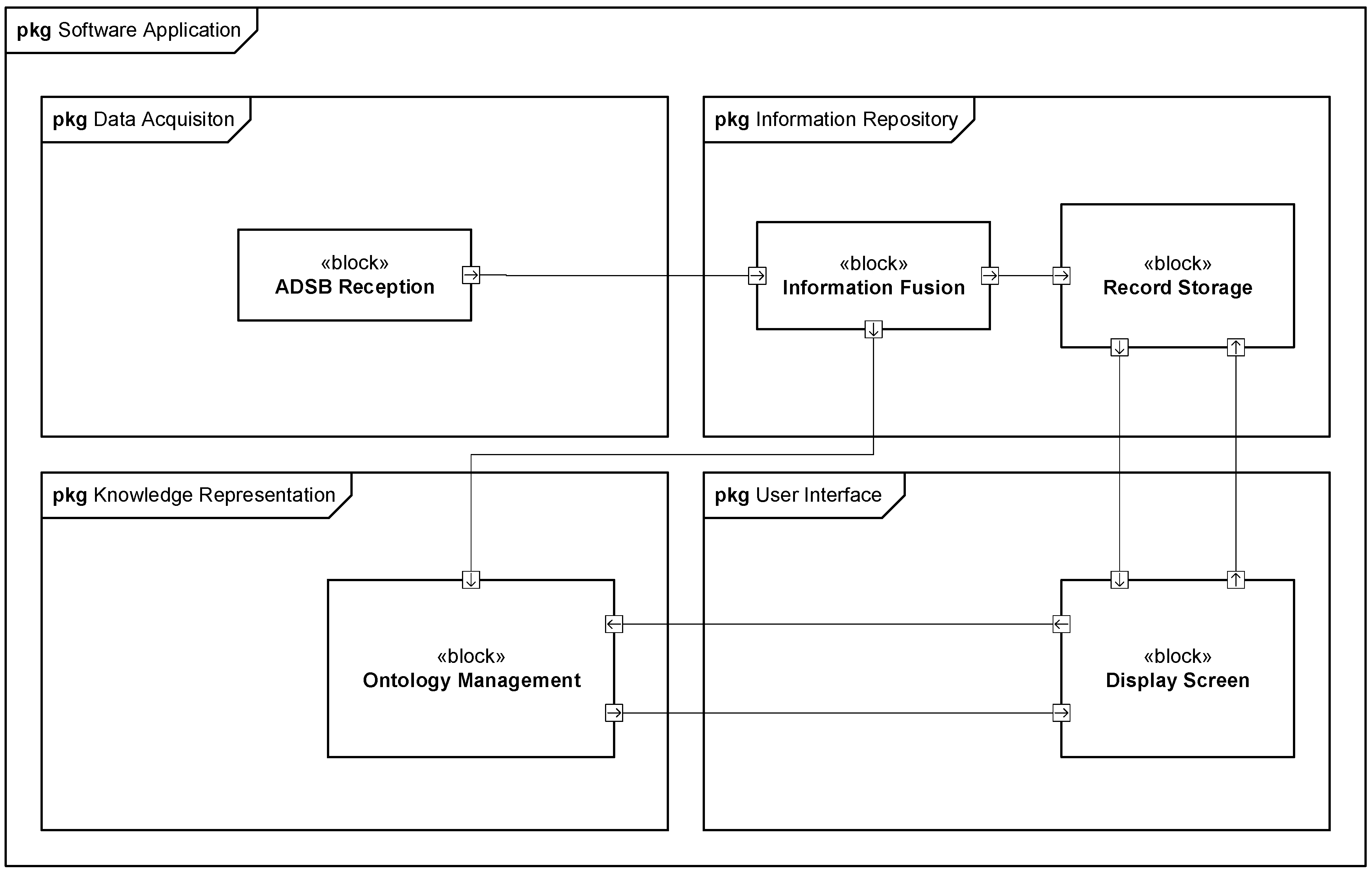

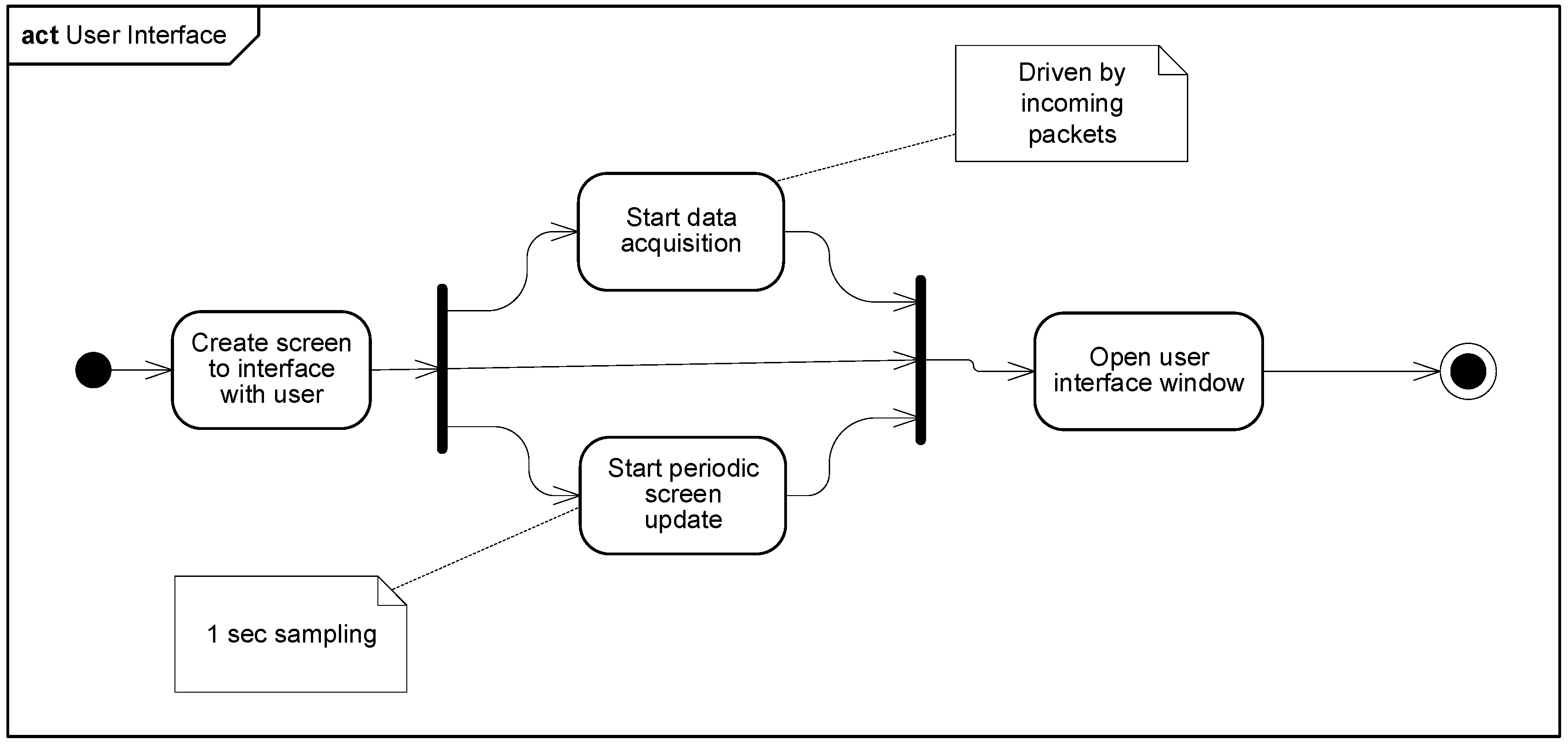

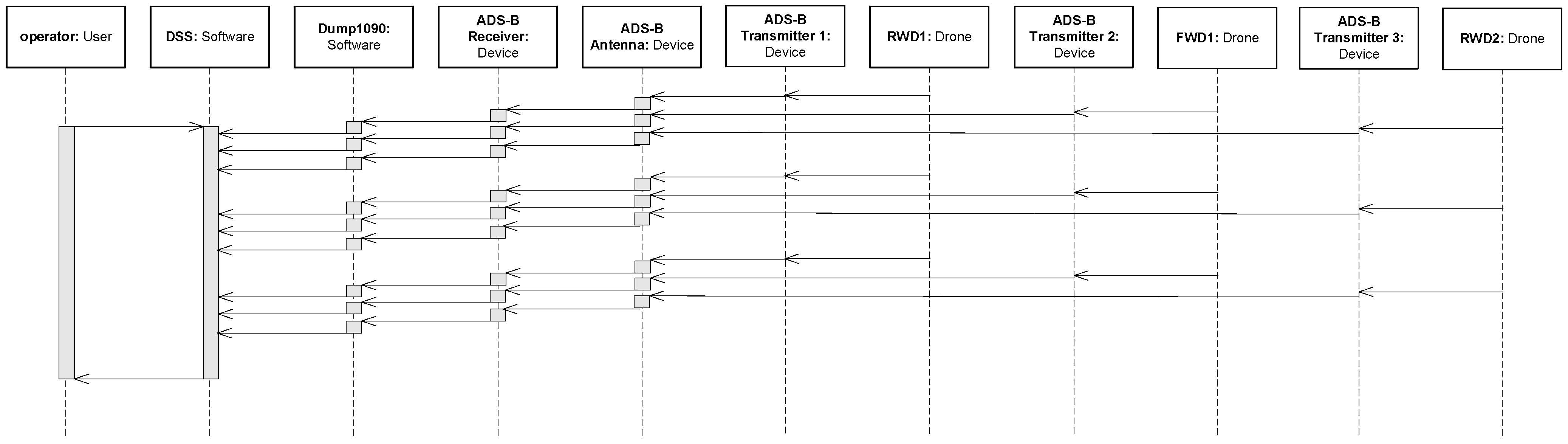

4.2. Software Prototype for the Framework

4.3. Air Traffic Scenarios for Experiments

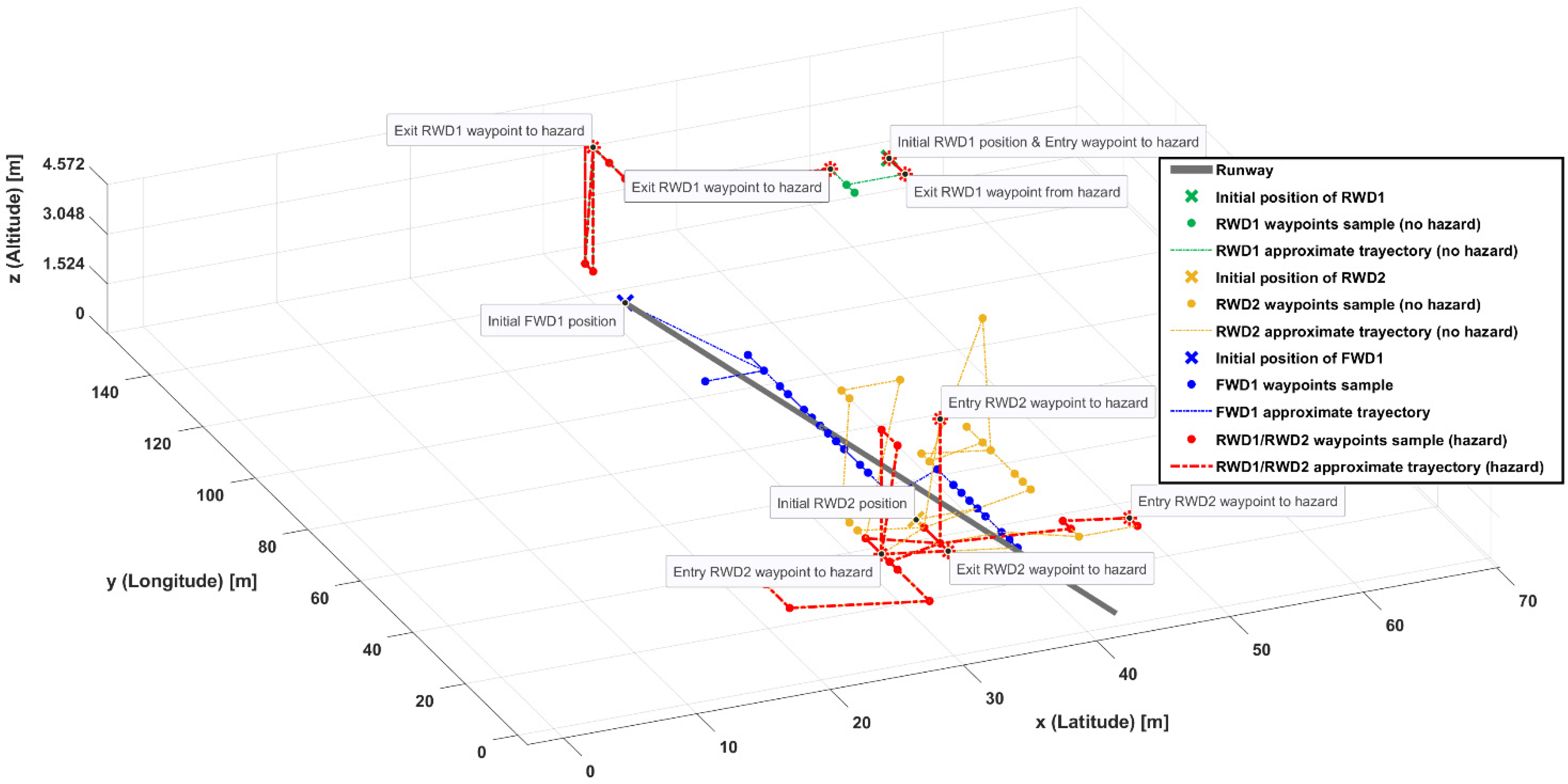

4.3.1. Scenario 1: Airplane Takeoff in the Presence of UAVs

4.3.2. Scenario 2: Airplane Landing in the Presence of UAVs

5. Experimental Application Results

5.1. Setup of the Application Scenarios

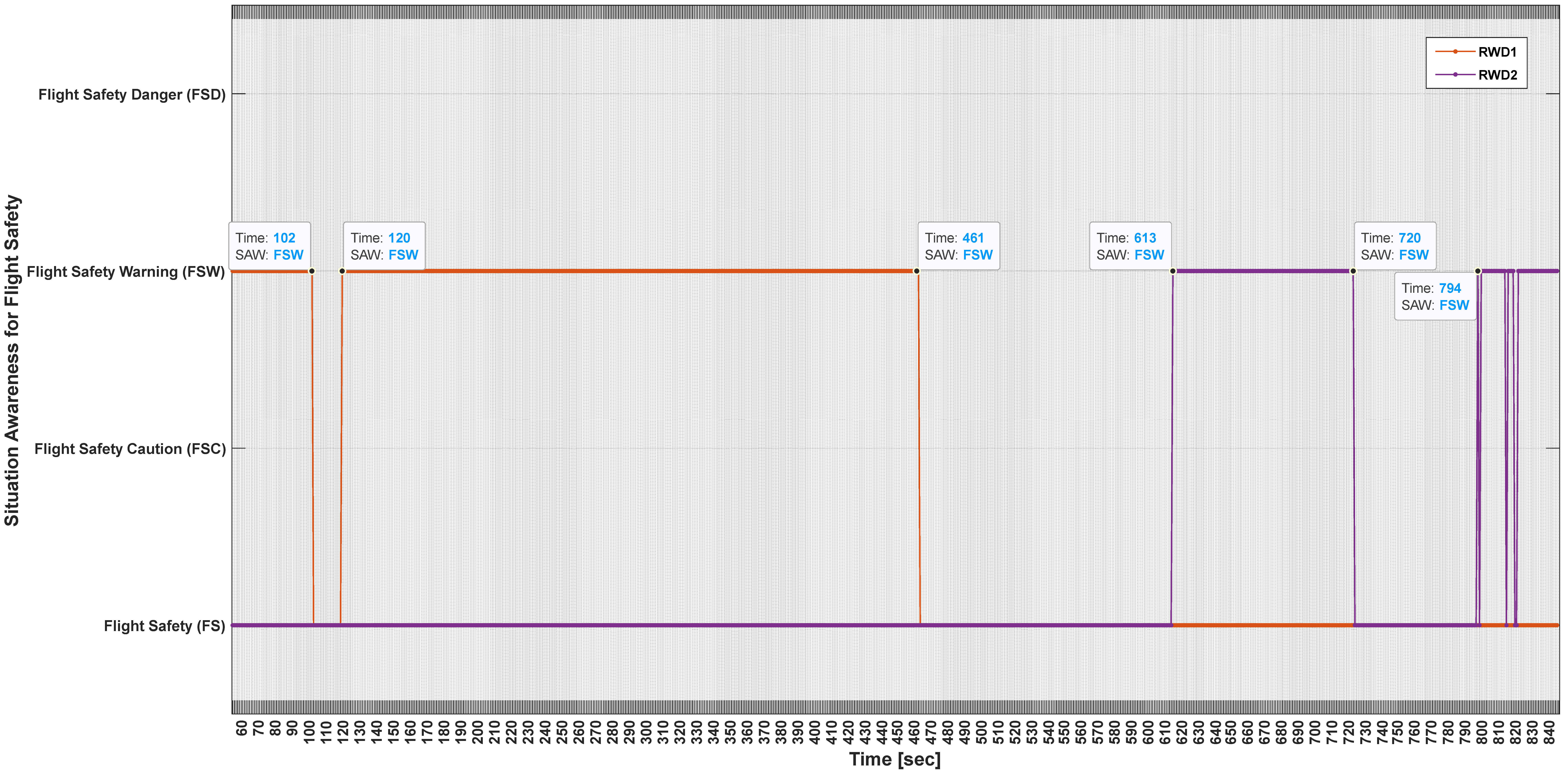

- Flight Safety Danger (FSD). This SAW is triggered and displayed to the user when the RWDs are closer to FWD1 than the permitted separation and no countermeasure is in place to avoid a collision.

- Flight Safety Warning (FSW). This SAW is triggered and displayed to the user when the RWDs are closer to FWD1 than the permitted separation but some countermeasure is in place to avoid a collision.

- Flight Safety Caution (FSC). This SAW is triggered and displayed to the user when the RWDs are close to FWD1 but still respect the permitted separation, and no countermeasure is in place to avoid a collision.

- Flight Safety (FS). This SAW is triggered and displayed to the user when the RWDs are close to FWD1 but still respect the permitted separation, and some countermeasure is in place to avoid a collision.
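The four SAW levels form a two-by-two decision table over two conditions: whether the permitted separation is violated and whether a countermeasure is in place. The Python sketch below makes that table explicit. It assumes that each RWD has already been found to be close to FWD1, the names `SAW` and `classify_saw` are hypothetical, and it is not the ontology-based reasoning performed by the DSS.

```python
from enum import Enum


class SAW(Enum):
    """The four situation awareness (SAW) levels listed above."""
    FSD = "Flight Safety Danger"
    FSW = "Flight Safety Warning"
    FSC = "Flight Safety Caution"
    FS = "Flight Safety"


def classify_saw(separation_violated: bool, has_countermeasure: bool) -> SAW:
    """Return the SAW level for one RWD that is already close to FWD1.

    separation_violated -- True if the RWD is closer to FWD1 than permitted.
    has_countermeasure  -- True if some countermeasure (e.g., a contactable
                           pilot) is in place to avoid a collision.
    """
    if separation_violated:
        return SAW.FSW if has_countermeasure else SAW.FSD
    return SAW.FS if has_countermeasure else SAW.FSC


# Print the full 2 x 2 decision table.
for violated in (True, False):
    for countermeasure in (False, True):
        print(violated, countermeasure, classify_saw(violated, countermeasure).name)
```

Encoding the levels as a lookup over two Boolean conditions keeps the mapping symmetric: the separation test selects the danger/warning row or the caution/safety row, and the countermeasure test selects the column.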

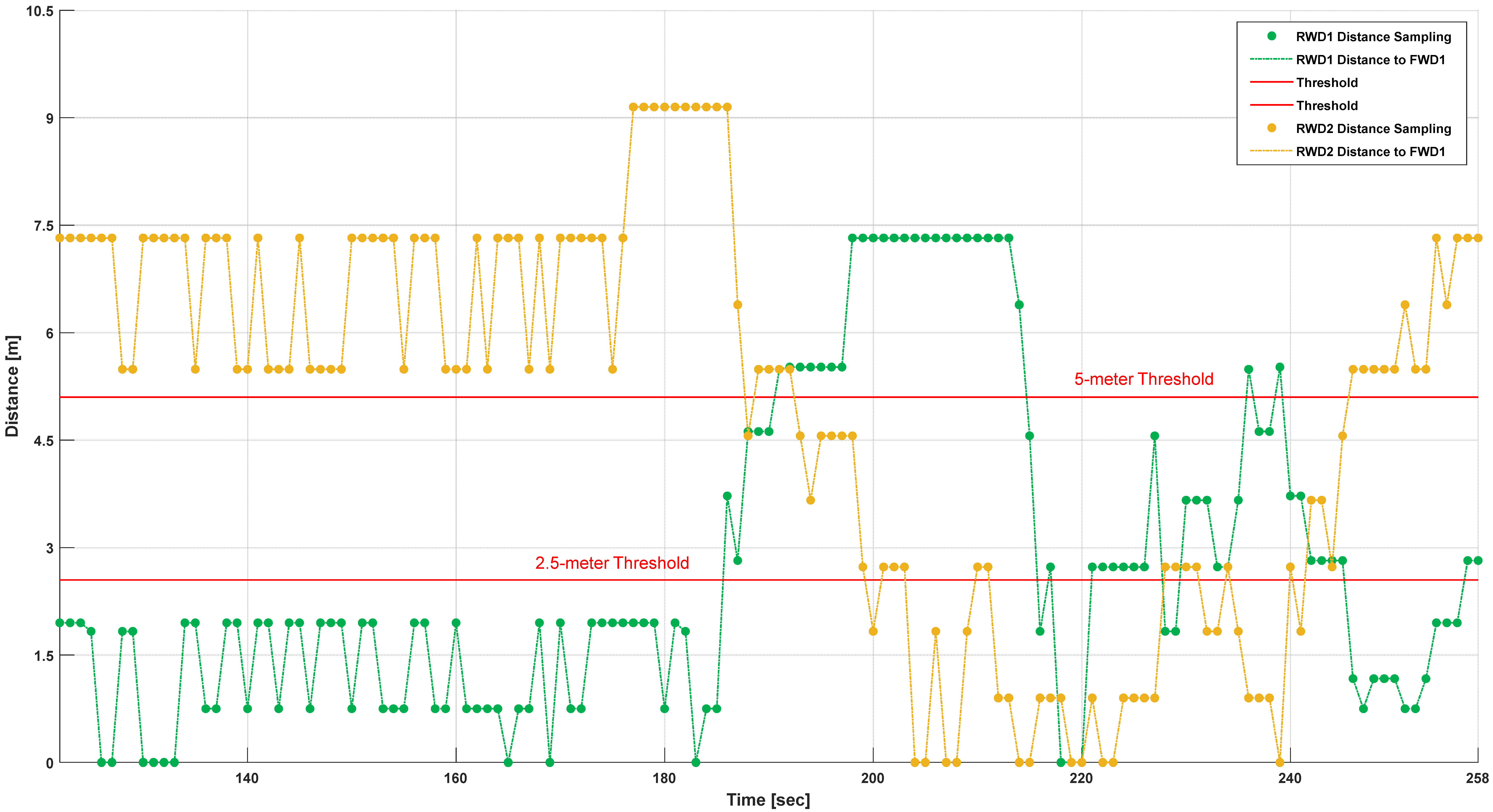

5.2. Scenario 1 Results: Airplane Rolling Out for Takeoff

- FWD1 is an airplane, which is an aircraft; (A4) and (A1).

- RWD1 is a quadcopter, which is an aircraft; (A5) and (A1).

- FWD1 and RWD1 are in Airspace 1; (A15) and (A16), which is an airspace having air vehicles; (A3). Hence, RWD1 and FWD1 are in the same airspace, and RWD1 is part of the air traffic of FWD1 (and vice versa); (A1).

- Airspace 1 is a controlled airspace; (A17).

- RWD1 is not authorized to fly in Airspace 1; (A10).

- RWD1 has a contactable pilot; (A12).

- Separation is a requirement; (A23). Aircraft have a requirement for separation; (A24). Thus, FWD1 and RWD1 have a separation requirement.

- FWD1 requires a separation of 170 units from intruders when intruders are ahead of it and 85 units when intruders are behind it; (A25).

- RWD1 is at a distance from FWD1 that is continuously updated.

- Proper separation is when RWD1 is farther from FWD1 than the separation distance; (A27).

- Improper separation is when RWD1 is closer to FWD1 than the separation distance, i.e., the opposite (complement) of proper separation; (A28).

- Aircraft are required to have proper separation; (A26). Thus, FWD1 and RWD2 have the same requirement, as they are aircraft.

- Proper separation can avoid a mid-air collision; (A27).

- RWD1 is an intruder; (A29), as it is not authorized to fly in the same airspace as FWD1, as per (A17).

- Intruders can infringe on safety; (A30), which is a status; (A31).

- Aircraft have a no-collision constraint; (A35).

- Aircraft have safety; (A36).

- RWD1 has separation from FWD1; (A37).

- RWD1 has a direction (ahead of FWD1 or behind it); (A38).

- RWD2 has separation from FWD1; (A39).

- RWD2 has a direction (ahead of FWD1 or behind it); (A40).

- A contactable pilot is a countermeasure; (A41).

- A countermeasure of “None” means that there is no countermeasure; (A42).

- RWD1 has no countermeasure; (A43).

- RWD2 has a countermeasure that is a contactable pilot; (A44).

- Flight safety caution is when RWD1 becomes close to FWD1 (but remains farther than the required separation); (A56). Queries on this class/concept show the airplane in caution, FWD1 in this case. The caution comes from RWD1 not having a countermeasure (None).

- What causes a flight safety caution is a UAV near an airplane (FWD1); (A57). Queries on this class/concept show the UAV causing the caution, RWD1 in this case since it does not have countermeasures.

- Flight safety danger is when RWD1 becomes close to FWD1 (closer than the required separation); (A61). Queries on this class/concept show the airplane in danger, FWD1 in this case. The danger comes from RWD1 not having a countermeasure (None).

- What causes a flight safety danger is a UAV near an airplane (FWD1); (A62). Queries on this class/concept show the UAV causing the danger, RWD1 in this case since it does not have countermeasures.
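The chain of inferences above can be approximated procedurally. The Python sketch below is not the authors' DL reasoner. It hard-codes the 170/85 separation minima of (A25), treats proper separation as strictly farther than the minimum and improper separation as its complement (A27, A28), represents a missing countermeasure as Python `None` (A42), and returns the two kinds of query result described for (A56) to (A62): the airplane in caution or danger, and the UAVs causing it. Drones with a countermeasure are skipped, since the statements above only derive caution and danger for drones without one. The names `Drone`, `REQUIRED_SEPARATION`, and `evaluate`, and the sample distances, are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Separation FWD1 requires from intruders, per (A25); units as in the scenario.
REQUIRED_SEPARATION = {"ahead": 170.0, "behind": 85.0}


@dataclass
class Drone:
    name: str
    distance: float                # current distance to FWD1 (continuously updated)
    direction: str                 # "ahead" or "behind" FWD1, cf. (A38)/(A40)
    countermeasure: Optional[str]  # e.g. "contactable pilot"; None = no countermeasure


def has_proper_separation(d: Drone) -> bool:
    # Proper separation: strictly farther than the direction-dependent minimum.
    # Improper separation is the complement, so equality counts as improper.
    return d.distance > REQUIRED_SEPARATION[d.direction]


def evaluate(airplane: str, drones: list[Drone]) -> dict[str, list[str]]:
    """Mimic the caution/danger queries: who is affected, and which UAV causes it."""
    results: dict[str, list[str]] = {
        "caution": [], "caution_cause": [], "danger": [], "danger_cause": []
    }
    for d in drones:
        if d.countermeasure is not None:
            continue  # caution/danger are only derived for drones without one
        level = "caution" if has_proper_separation(d) else "danger"
        if airplane not in results[level]:
            results[level].append(airplane)
        results[level + "_cause"].append(d.name)
    return results


# Hypothetical snapshot: RWD1 ahead of FWD1 inside the 170-unit minimum.
print(evaluate("FWD1", [
    Drone("RWD1", 120.0, "ahead", None),
    Drone("RWD2", 60.0, "behind", "contactable pilot"),
]))
```

For this snapshot, FWD1 is reported in danger and RWD1 as its cause, while RWD2 is ignored because it has a contactable pilot.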

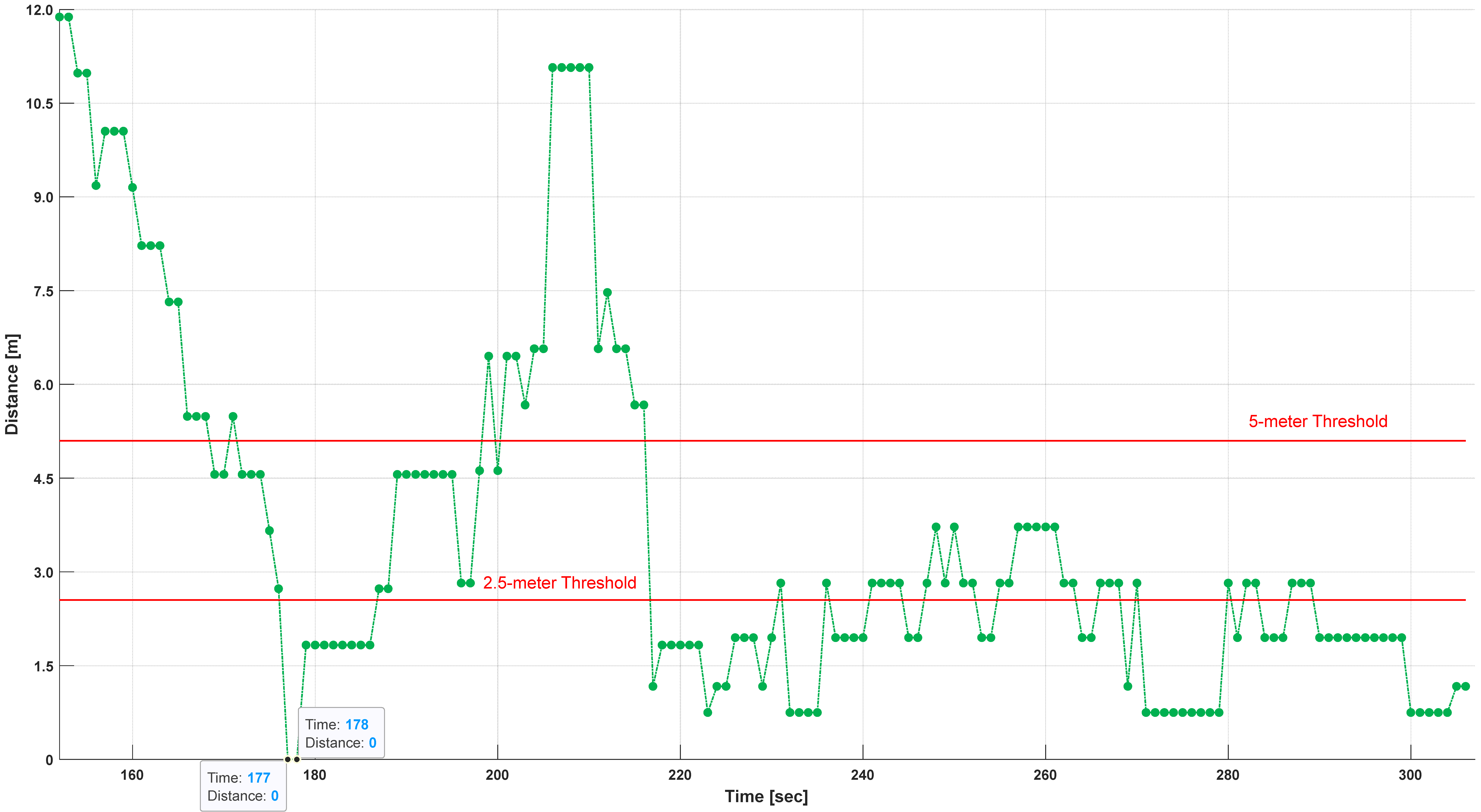

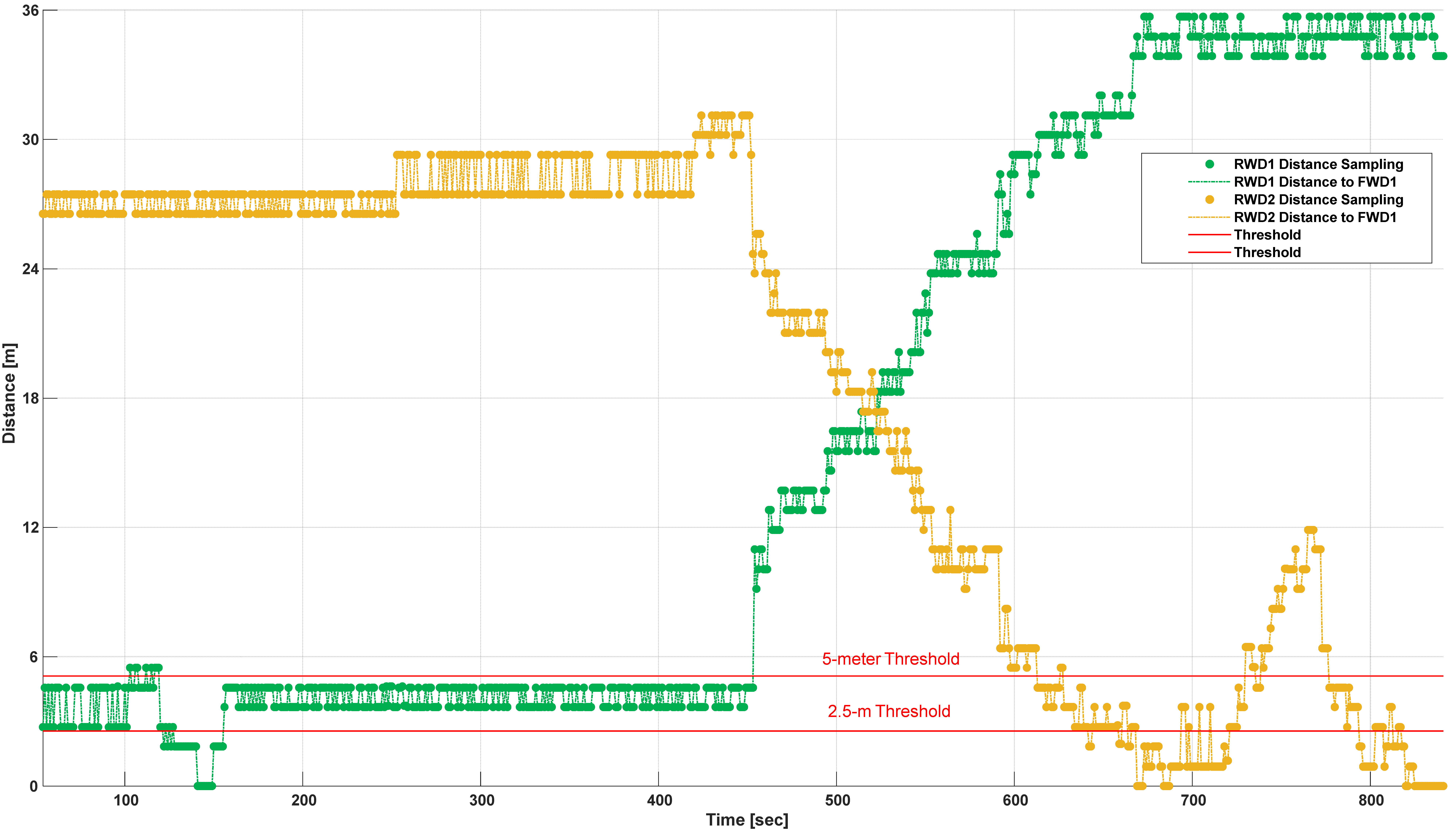

5.3. Scenario 2 Results: Airplane During After-Landing Roll Out

6. Discussion

- The battery of RWD1 (Beast RC quadcopter) must be at least 50% charged for the drone to take off and fly properly. This requirement is due to the payload (ADS-B transmitter) attached to it.

- When RWD1 carries a payload (ADS-B transmitter), the calibration of its inertial unit sensors (compass, accelerometer, etc.) must be performed slowly. Otherwise, the calibration is not completed properly, and RWD1 becomes unstable and difficult to control and fly.

- The distances calculated between aircraft (RWD1 and FWD1) have improved compared with previous trials, as there is more space to operate the drones and, therefore, to fly them in different locations. However, the calculated distance still fluctuates noticeably when the separation threshold is crossed. The choice of threshold also affects the SAW provided by the DSS software application, which shows similar fluctuations.

- The latitude, longitude, and altitude are real measurements provided by the ADS-B devices. Because the scenarios are downscaled, changes in these parameters are sometimes too small to be detected. Besides the ±1 m GPS error, which must be taken into account, any small delay can affect the measurement, as the drones fly fast. Likewise, the altitude readings change by only around 7 feet (a bit more than 2 m).
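One common way to obtain the distance between two aircraft from their ADS-B latitude, longitude, and altitude, assumed in the Python sketch below and not necessarily the formula used by the DSS, is the haversine great-circle distance on a spherical Earth combined with the altitude difference converted from feet. The function names `haversine_m` and `separation_m` are hypothetical.

```python
from __future__ import annotations

import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)
FEET_TO_M = 0.3048


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle (horizontal) distance in metres between two positions in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def separation_m(p1: tuple[float, float, float], p2: tuple[float, float, float]) -> float:
    """Approximate 3D separation from (lat_deg, lon_deg, alt_ft) ADS-B reports."""
    horizontal = haversine_m(p1[0], p1[1], p2[0], p2[1])
    vertical = (p2[2] - p1[2]) * FEET_TO_M  # ADS-B altitude is reported in feet
    return math.hypot(horizontal, vertical)
```

With this formula, a single 7-foot altitude step alone changes the separation by about 2.13 m, and a ±1 m error on each of the two position fixes can shift the horizontal distance by up to about 2 m. At downscaled distances, errors of this size are enough to push the result back and forth across a separation threshold.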

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

$$\exists\,\mathit{hasDistance}.\{>\text{"85"}\} \sqcap \exists\,\mathit{hasDirection}.\{\text{"6"}\}$$

- Airspace regulations define a proper distance between aircraft (airplanes and drones), given by the value of “MandatorySeparation”, which is the minimum distance that the drones (RWD1 and RWD2) are allowed to be from FWD1.

- Proper separation can avoid collisions between airplanes and drones in the airspace.

- Improper separation is the complement (i.e., the opposite) of proper separation.

- FWD1 (e.g., a commercial airplane) can have a safe flight (concept “FlightSafety”) if its distance to both RWD1 and RWD2 is larger than the “value” defined as the permitted-separation threshold. The reasoned result is the airplane, i.e., FWD1.

- RWD1 or RWD2 is the cause (concept “FlightSafetyCause”) of FWD1 having a safe flight if the above logic is satisfied.
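A DL reasoner classifies individuals against the restriction at the start of this appendix automatically. As a rough procedural equivalent, the Python sketch below checks each drone observation against that restriction and derives the members of “FlightSafety” and “FlightSafetyCause” as described in the list above. Only this one restriction is encoded. The class `Observation`, the functions, and the sample values are hypothetical, and the direction value "6" is treated as an opaque label; reading it as the "behind" case of the 85-unit minimum in (A25) is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    drone: str            # e.g. "RWD1"
    has_distance: float   # value of hasDistance (distance to FWD1)
    has_direction: str    # value of hasDirection, e.g. "6"


def satisfies_restriction(obs: Observation,
                          min_distance: float = 85.0,
                          direction: str = "6") -> bool:
    # Procedural reading of (∃hasDistance.{>"85"} ⊓ ∃hasDirection.{"6"}).
    return obs.has_distance > min_distance and obs.has_direction == direction


def flight_safety(airplane: str,
                  observations: list[Observation]) -> tuple[list[str], list[str]]:
    """Return (members of FlightSafety, members of FlightSafetyCause)."""
    causes = [o.drone for o in observations if satisfies_restriction(o)]
    # The airplane is safe only if every observed drone satisfies the restriction.
    all_ok = bool(observations) and len(causes) == len(observations)
    return ([airplane] if all_ok else []), causes


print(flight_safety("FWD1", [Observation("RWD1", 90.0, "6"),
                             Observation("RWD2", 120.0, "6")]))
```

Unlike an OWL reasoner, which works under the open-world assumption, this sketch only considers the observations it is given, so a drone that is not reported cannot prevent FWD1 from being classified as safe.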

Appendix B

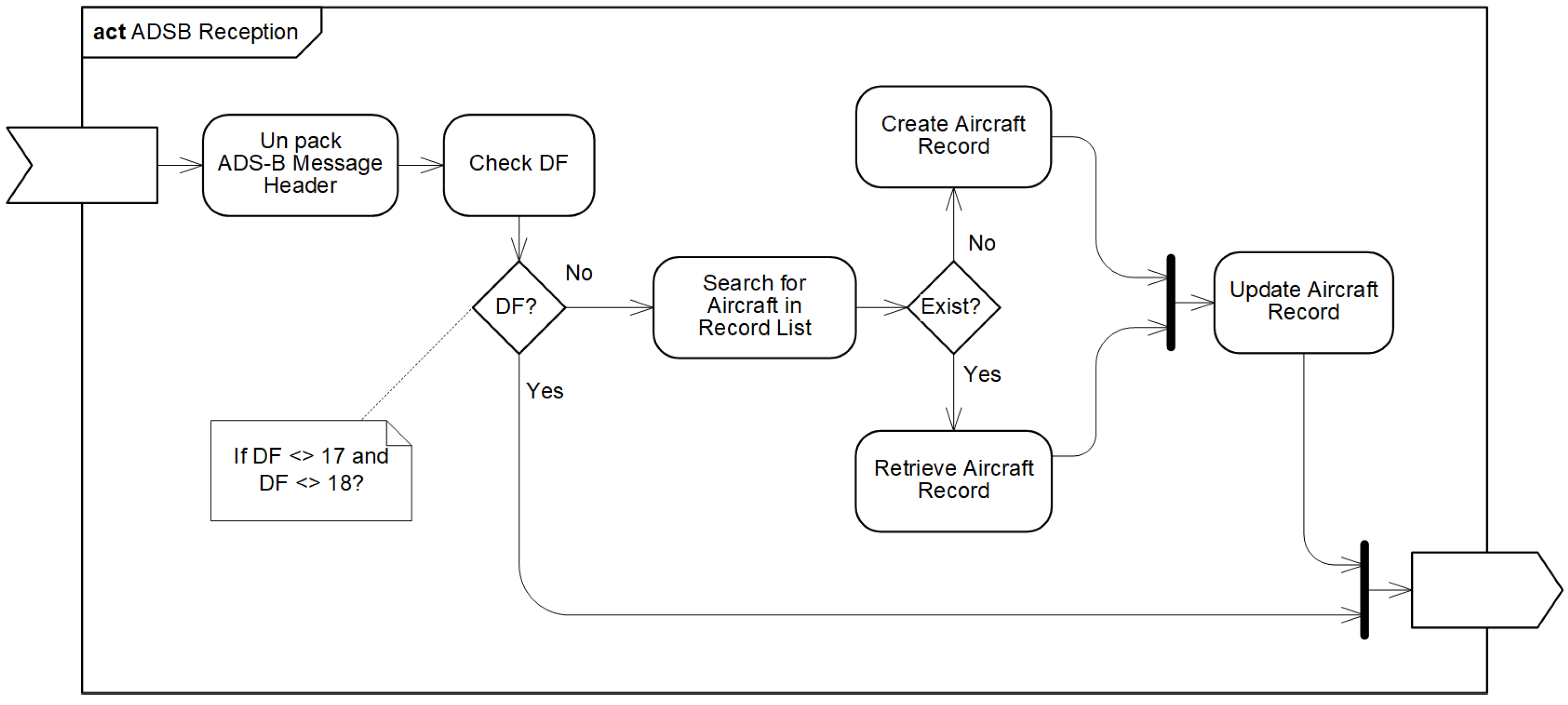

Appendix B.1. ADS-B Data Collection

- 48 bits: “000075A50B46” or 0x000075A50B46 is for the time stamp.

- 5 bits: the first (most significant) 5 bits of “8D” or 0b10001101, i.e., 0b10001 for the DF (Downlink Format).

- 3 bits: the remaining 3 bits of “8D”, i.e., 0b101 for the CA (Capability).

- 24 bits: “406D79” or 0b010000000110110101111001 for the ICAO address.

- 56 bits: “58BB65DF172AB9” for the ME (Extended Squitter Message).

- 24 bits: “AD4652” for the PI (Parity/Interrogator ID).
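The bit layout above can be checked programmatically. The Python sketch below (function names hypothetical) slices a 28-hex-digit (112-bit) extended squitter, here the fields above concatenated, into DF, CA, ICAO, ME, and PI using shifts and masks. It also extracts the ME type code, a standard ADS-B field formed by the first 5 bits of ME that is not discussed above. It assumes the 48-bit time stamp is stored separately as 12 hex digits, does not interpret the time stamp's units, and does not verify the parity.

```python
from __future__ import annotations


def parse_timestamp(ts_hex: str) -> int:
    """Convert the 48-bit (12 hex digit) time stamp to an integer; units not interpreted."""
    if len(ts_hex) != 12:
        raise ValueError("expected 12 hex digits (48 bits)")
    return int(ts_hex, 16)


def parse_extended_squitter(msg_hex: str) -> dict[str, object]:
    """Split a 112-bit Mode S extended squitter (28 hex digits) into its fields."""
    if len(msg_hex) != 28:
        raise ValueError("expected 28 hex digits (112 bits)")
    bits = int(msg_hex, 16)
    # Field layout, most significant bit first:
    # DF (5) | CA (3) | ICAO (24) | ME (56) | PI (24)
    df = bits >> 107                      # top 5 bits
    ca = (bits >> 104) & 0b111            # next 3 bits
    icao = (bits >> 80) & 0xFFFFFF        # 24-bit aircraft address
    me = (bits >> 24) & ((1 << 56) - 1)   # 56-bit message field
    pi = bits & 0xFFFFFF                  # 24-bit parity/interrogator ID
    return {
        "DF": df,
        "CA": ca,
        "ICAO": f"{icao:06X}",
        "ME": f"{me:014X}",
        "TC": me >> 51,                   # type code: first 5 bits of ME
        "PI": f"{pi:06X}",
    }


print(parse_timestamp("000075A50B46"))
print(parse_extended_squitter("8D406D7958BB65DF172AB9AD4652"))
```

For the example message, this yields DF 17, CA 5, ICAO address 406D79, ME 58BB65DF172AB9, and PI AD4652, matching the breakdown above. The type code is 11, which falls in the ADS-B range for airborne position messages with barometric altitude.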

Appendix B.2. Ontological Knowledge Representation

Appendix C

References

- CNS/ATM Systems. Available online: https://www.icao.int/Meetings/AMC/MA/1998/rio/EXECSUM.pdf (accessed on 10 November 2024).

- Mid Air Collision—Our Safety Plan, UK Civil Aviation Authority (CAA). Available online: https://www.caa.co.uk/safety-initiatives-and-resources/how-we-regulate/safety-plan/mitigating-key-safety-risks/mid-air-collision/ (accessed on 10 November 2024).

- Artificial Intelligence Roadmap 2.0. 2023. Available online: http://easa.europa.eu/ai (accessed on 10 November 2024).

- Single European Sky ATM Research Joint Undertaking. Available online: www.sesarju.eu (accessed on 10 November 2024).

- Dy, L.R.I.; Mott, J.H. Airspace Saturation and Midair Collision Risk: A Case Study at a Class D Airport. Int. J. Aviat. Aeronaut. Aerosp. 2024, 10, 4. Available online: https://commons.erau.edu/cgi/viewcontent.cgi?article=1882&context=ijaaa (accessed on 10 November 2024).

- ICAO. Manual on Airspace Planning Methodology for the Determination of Separation Minima, 1st ed.; International Civil Aviation Organization: Montreal, QC, Canada, 1998. Available online: https://www.icao.int/Meetings/anconf12/Document%20Archive/9689_cons_en.pdf (accessed on 10 November 2024).

- Aircraft ASE and RVSM Collision Risk Analyses, Federal Aviation Administration (FAA). 2017. Available online: https://www.faa.gov/sites/faa.gov/files/air_traffic/separation_standards/ase/3.1_RVSM_Safety_and_ASE.pdf (accessed on 10 November 2024).

- Civil Aviation Authority (CAA), Drone Safety Risk: An assessment, CAP 1627. 2018. Available online: https://www.caa.co.uk/publication/download/16315 (accessed on 10 November 2024).

- European Union Aviation Safety Agency, EASA Research project ‘Vulnerability of Manned Aircraft to Drone Strikes’. 2023. Available online: https://www.easa.europa.eu/en/newsroom-and-events/events/vulnerability-manned-aircraft-drone-strikes#:~:text=In%20particular%2C%20regarding%20the%20risk,means%20of%20compliance%20(AMC) (accessed on 10 November 2024).

- Insaurralde, C.C.; Blasch, E. Ontological Knowledge Representation for Avionics Decision-Making Support. In Proceedings of the 35th Digital Avionics Systems Conference (DASC), Sacramento, CA, USA, 25–29 September 2016.

- Wilson, S.; Suzic, R.; Van der Stricht, S. The SESAR ATM information reference model within the new ATM system. In Proceedings of the Integrated Communications, Navigation and Surveillance Conference (ICNS), Herndon, VA, USA, 8–10 April 2014.

- Keller, R.M. The NASA Air Traffic Management Ontology; Technical Documentation, NASA/TM-2017-219526; NASA: Washington, DC, USA, 2017.

- Vennesland, A.; Keller, R.M.; Schuetz, C.G.; Gringinger, E.; Neumayr, B. Matching Ontologies for Air Traffic Management: A Comparison and Reference Alignment of the AIRM and NASA ATM Ontologies. In Proceedings of the CEUR Workshop Proceedings, Auckland, New Zealand, 26–30 October 2019.

- Aghdam, M.; Tabbakh, S.; Chabok, S.; Kheyrabadi, M. A New Ontology-Based Multi-Agent System Model for Air Traffic Management. Int. J. Transp. Eng. 2022, 10, 1055–1068.

- Kabashkin, I.; Tikanashvili, N. Ontology-Based Approach for Human Competency Gap Analysis in Air Traffic Management. Transp. Telecommun. 2019, 20, 279–285.

- Insaurralde, C.C.; Blasch, E.P.; Costa, P.C.G.; Sampigethaya, K. Uncertainty-Driven Ontology for Decision Support System in Air Transport. Electronics 2022, 11, 362.

- Martin-Lammerding, D.; Astrain, J.; Cordoba, A.; Villadangos, J. An ontology-based system to avoid UAS flight conflicts and collisions in dense traffic scenarios. Expert Syst. Appl. 2023, 215, 119027.

- Neal, C.; De Miceli, J.-Y.; Barrera, D.; Fernandez, J. Ontology-Based Anomaly Detection for Air Traffic Control Systems. arXiv 2022, arXiv:2207.00637.

- Aghdam, M.; Tabbakh, S.; Chabok, S.; Kheyrabadi, M. Ontology generation for flight safety messages in air traffic management. J. Big Data 2021, 8, 61.

- Chevallier, J. Enabling Autonomy in Commercial Aviation: An Ontology and Framework for Automating Unmanned Aircraft Systems (UAS). Master’s Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2021.

- Kasmier, D.; Merrell, E.; Kelly, R.; Smith, B.; Heisey, C.; Maki, D.E.; Brittain, M.; Ankner, R.; Bush, K. Ontology of Plays for Autonomous Teaming and Collaboration. In Proceedings of the XIV Seminar on Ontology Research, Bolzano, Italy, 13–17 September 2021.

- Lv, M.; Cao, X.; Wu, T.; Li, Y. A Civil Aviation Customer Service Ontology and Its Applications. Data Intell. 2023, 5, 1063–1081.

- Jafer, S.; Chava, B.; Updegrove, J.; Durak, U. Schema-based Ontological Representations of a Domain-Specific Scenario Modeling Language. J. Simul. Eng. 2019, 1, 2:1–2:15.

- Palacios-Medinacelli, L. Knowledge Discovery for Avionics Maintenance: An Unsupervised Concept Learning Approach. Ph.D. Thesis, Université Paris Saclay, Orsay, France, 2019. Available online: https://tel.archives-ouvertes.fr/tel-02285443/ (accessed on 10 November 2024).

- Verhagen, W.J.C.; Curran, R. An Ontology-Based Approach for Aircraft Maintenance Task Support. In Proceedings of the 20th ISPE International Conference on Concurrent Engineering, Melbourne, Australia, 2–6 September 2013; pp. 494–506.

- Grof, C.; Kamtsiuris, A. Ontology-based Process Reengineering to Support Digitalization Of MRO Operations: Application To An Aviation Industry Case. Procedia CIRP 2021, 104, 1322–1327.

- Abdallah, A.; Fan, I.-S. Towards Building Ontology-Based Applications for Integrating Heterogeneous Aircraft Maintenance Records. In Proceedings of the IEEE 20th International Conference on Industrial Informatics (INDIN), Perth, Australia, 25–28 July 2022.

- The ICARUS Ontology: An Ontology for the Representation of the Knowledge of the Aviation Sector. Available online: https://www.icarus2020.aero/the-icarus-ontology-an-ontology-for-the-representation-of-the-knowledge-of-the-aviation-sector (accessed on 10 November 2024).

- Glas, M. Ontology-based Model Integration for the Conceptual Design of Aircraft. Technische Universität München, Munich, Germany, 2013. Available online: https://d-nb.info/1035274477/34 (accessed on 10 November 2024).

- Arista, R.; Zheng, X.; Lu, J.; Mas, F. An Ontology-based Engineering system to support aircraft manufacturing system design. J. Manuf. Syst. 2023, 68, 270–288.

- Chen, J.; Chen, Y.; Hu, Z.; Lu, J.; Zheng, X.; Zhang, H.; Kiritsis, D. A Semantic Ontology-Based Approach to Support Model-Based Systems Engineering Design for an Aircraft Prognostic Health Management System. Front. Manuf. Technol. 2022, 2, 886518.

- Shobowale, K.O.; Mohammed, A.; Ibrahim, B.G.; Suleiman, A.A.; Muhammad, B.B.; Ubadike, O. Impact of Ontology in Aviation Incident and Accident Knowledge Repository. J. Sci. Technol. Educ. 2021, 9, 255–267.

- Wang, Y.; Li, Q.; Sun, Y.; Chen, J. Aviation Equipment Fault Information Fusion Based on Ontology. In Proceedings of the International Conference on Computer, Communications and Information Technology, Beijing, China, 16–17 January 2014.

- Ahang, X.; Sun, Y.; Zhang, Y. Ontology modelling of intelligent HCI in aircraft cockpit. Aircr. Eng. Aerosp. Technol. 2021, 93, 794–808.

- Qasim, L.; Hein, A.; Olaru, S.; Jankovic, M.; Garnier, J.-L. An Ontology for System Reconfiguration: Integrated Modular Avionics IMA Case Study. In Recent Trends and Advances in Model Based Systems Engineering; Springer: Berlin/Heidelberg, Germany, 2022; pp. 189–198.

- Du, X.; Du, C.; Chen, J.; Dong, C.; Liu, Y. Ontology-Based Resource Modeling and Matching Framework in Avionics Systems. Int. J. Aerosp. Eng. 2022, 2022, 8284857.

- Zamboni, J.; Zamfir, A.; Moerland, E.; Nagel, B. A Semantic Knowledge Based Engineering Framework for The Rapid Generation Of Novel Air Vehicle Configurations. In Proceedings of the 33rd Congress of the International Council of the Aeronautical Sciences, Stockholm, Sweden, 4–9 September 2022.

- OntoCommons Original 11 Demonstrators, Airbus Design and Manufacturing. Available online: https://ontocommons.eu/ontocommons-demonstrators#OntoCommons%20Demonstrators (accessed on 10 November 2024).

- The FLY AI Report, Demystifying and Accelerating AI in Aviation/ATM, European Aviation Artificial Intelligence High Level Group, 2020. Available online: https://www.eurocontrol.int/sites/default/files/2020-03/eurocontrol-fly-ai-report-032020.pdf (accessed on 10 November 2024).

- Aviation Performance Measuring System (APMS), SKYbrary. Available online: https://skybrary.aero/articles/aviation-performance-measuring-system-apms (accessed on 10 November 2024).

- McIntosh, S.E. The Wingman-Philosopher of MiG Alley: Boyd and the OODA Loop. Air Power Hist. 2011, 58, 24–33.

- Yura, H.; Walsh, M.B. The Nursing Process: Assessing, Planning, Implementing, and Evaluating, Proceedings of the Continuing Education Series Conducted at the Catholic University of America; Catholic University of America Press: New York, NY, USA, 1967.

- Blasch, E.; Paces, P.; Leuchter, J. Pilot Timeliness of Safety Decisions Using Information Situation Awareness. In Proceedings of the IEEE/AIAA Digital Avionics Systems Conference, Colorado Springs, CO, USA, 5–9 October 2014.

- Endsley, M.R. Toward a Theory of Situation Awareness in Dynamic Systems. Hum. Factors J. 1995, 37, 32–64.

- Baader, F. (Ed.) The Description Logic Handbook—Theory, Implementation and Applications; Cambridge University Press: Cambridge, UK, 2003.

- FlightAware Pro Stick and Pro Stick Plus—High Performance USB SDR ADS-B Receivers. Available online: https://uk.flightaware.com/adsb/prostick (accessed on 10 November 2024).

- Volantex RC Ranger EX, Professional FPV Platform. Available online: http://myosuploads3.banggood.com/products/20210506/20210506214624VolantexRangerExManual.pdf (accessed on 10 November 2024).

- Sky Echo II Manual. Available online: https://uavionix.com/products/skyecho/ (accessed on 10 November 2024).

- ZLL SG906 Pro 5G WIFI FPV, Specification. Available online: http://myosuploads3.banggood.com/products/20201022/20201022030844SG906PRO1.jpg (accessed on 10 November 2024).

- Holybro X500 Pixhawk 4 Mini 500 mm Wheelbase Frame Kit Combo 2216 880 KV Motor 1045 Propeller for RC Drone, Assembly Guide. Available online: https://www.3dxr.co.uk/multirotor-c3/multirotor-frames-c97/holybro-x500-frame-kit-with-pixhawk-4-mini-motors-and-escs-p3605 (accessed on 10 November 2024).

- Insaurralde, C.C.; Blasch, E. Avionics Analytics Ontology: Preliminary Flight Test Results for Decision Support. In Proceedings of the Integrated Communications, Navigation and Surveillance (ICNS) Conference, Washington, DC, USA, 23–25 April 2024.

| Environmental Aspect | Description |
|---|---|
| Weather Condition * | |
| Environment Radiation | The following measurements were taken with the ADS-B transmitter(s) and remote controllers switched on: |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Insaurralde, C.C.; Blasch, E. Ontological Airspace-Situation Awareness for Decision System Support. Aerospace 2024, 11, 942. https://doi.org/10.3390/aerospace11110942
