Thrust Prediction of Aircraft Engine Enabled by Fusing Domain Knowledge and Neural Network Model

Abstract

1. Introduction

2. On-Board Adaptive Model

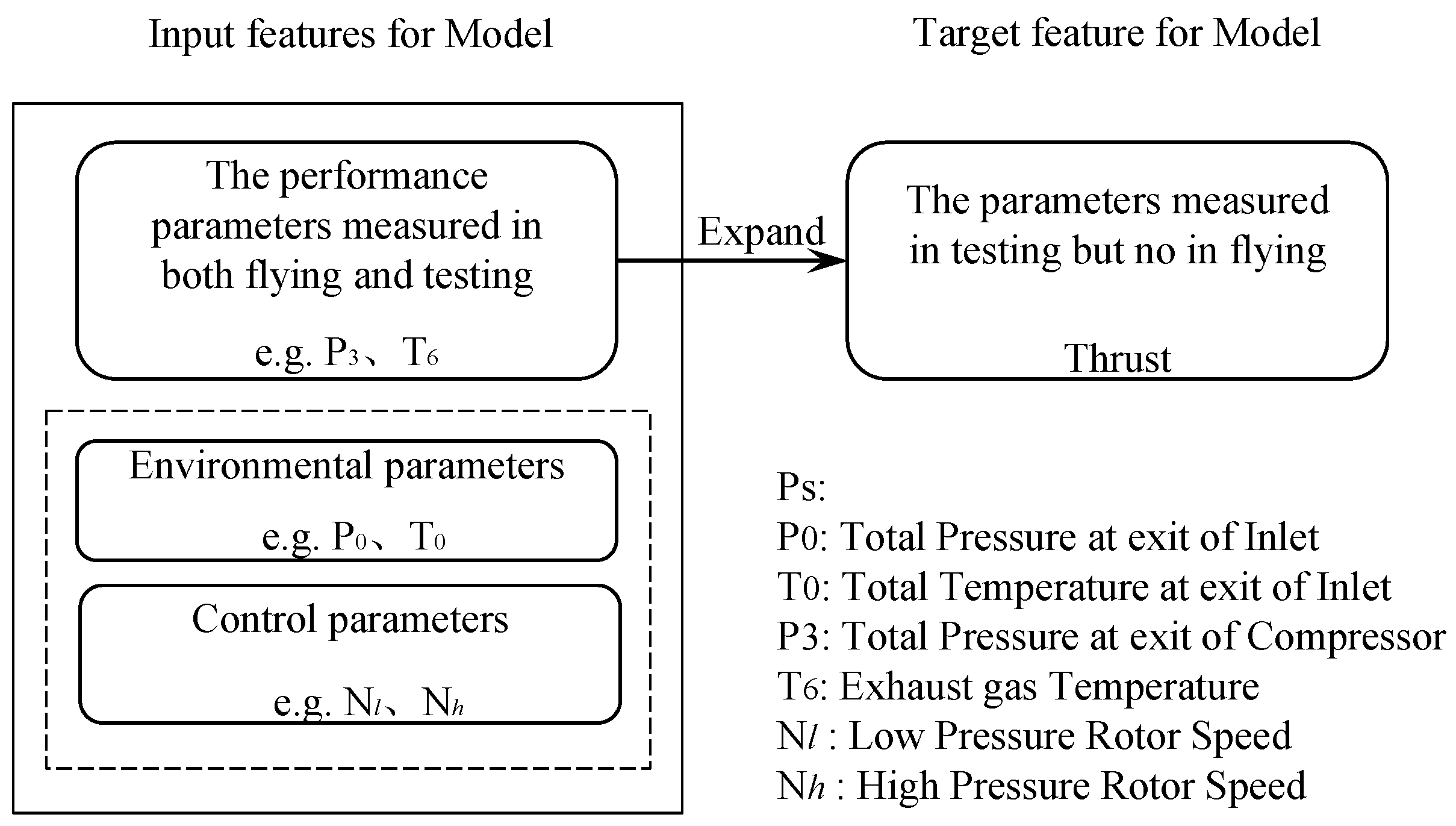

2.1. Architecture by Fusing Physical Structure and Neural Network

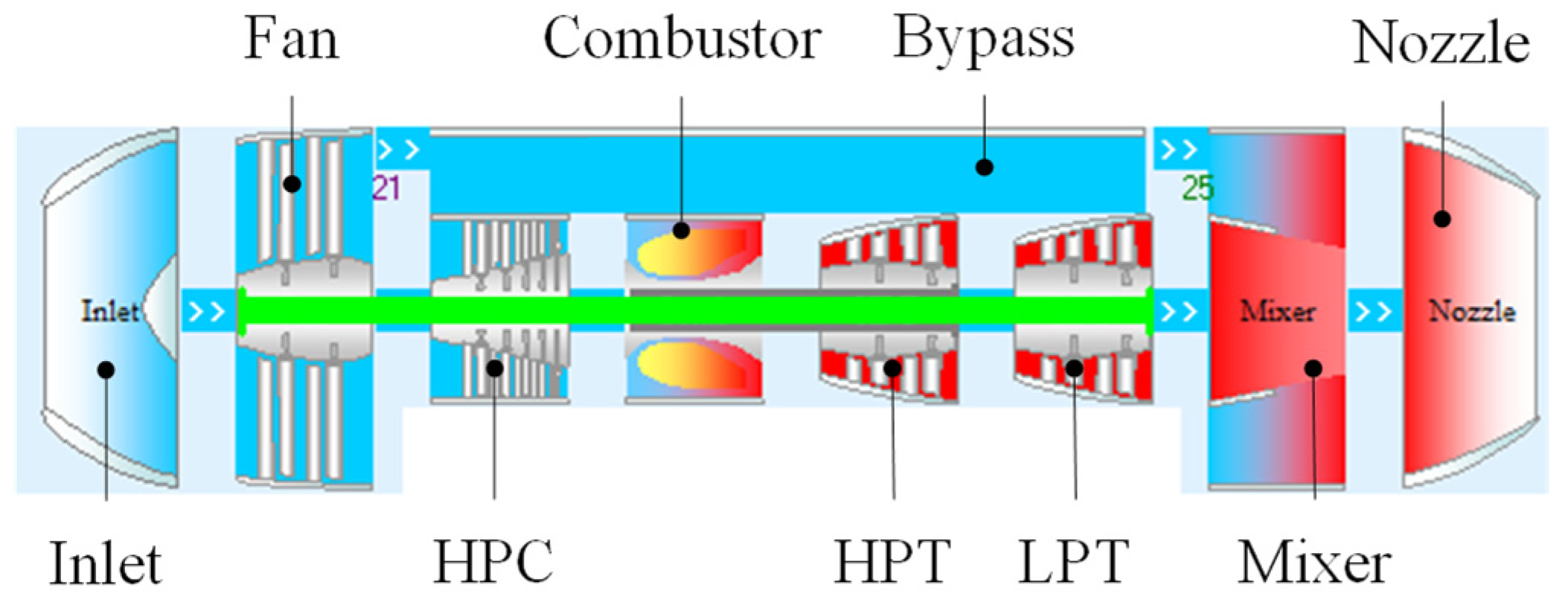

- Based on domain decomposition, the neural network is divided into multiple subnetworks, one for each engine component.

- Each subnetwork represents one engine component, and its input features are the measurable parameters related to that component.

- The subnetworks are interconnected according to the connections between the engine components, and data flow through the subnetworks in the order in which air flows through the engine components. For example, air flows sequentially through the inlet, the fan, and then the compressor; correspondingly, data flow sequentially through the inlet subnetwork, the fan subnetwork, and then the compressor subnetwork.

- The physical constraint on the networks is that components on the same shaft rotate at the same speed. For example, the low-pressure rotor speed is used as an input to both the fan subnetwork and the low-pressure turbine subnetwork.
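
The decomposition rules above can be sketched as a small routing structure. This is a minimal illustration, not the authors' implementation: it assumes a two-spool layout with six components (inlet, fan, compressor, combustor, HP turbine, LP turbine) and routes the measurable parameters as listed in the parameter table of this article. The names `AIRFLOW_ORDER`, `COMPONENT_INPUTS`, `SHAFTS`, and `route_inputs`, as well as the sample values, are hypothetical.

```python
from typing import Dict, List

# Components in the order air flows through a two-spool engine (assumed layout).
AIRFLOW_ORDER: List[str] = [
    "inlet", "fan", "compressor", "combustor", "hp_turbine", "lp_turbine",
]

# Measurable parameters routed to each component network (after the parameter table).
COMPONENT_INPUTS: Dict[str, List[str]] = {
    "inlet": ["T0", "P0"],
    "fan": ["T25", "P25", "Nl"],
    "compressor": ["P3", "Nh"],
    "combustor": ["Wf"],
    "hp_turbine": ["Nh"],
    "lp_turbine": ["T6", "Nl"],
}

# Physical constraint: components on the same shaft share one rotor-speed input.
SHAFTS: Dict[str, List[str]] = {
    "Nl": ["fan", "lp_turbine"],
    "Nh": ["compressor", "hp_turbine"],
}


def check_shaft_constraint() -> None:
    """Raise if a component on a shaft does not receive that shaft's speed."""
    for speed, components in SHAFTS.items():
        for comp in components:
            if speed not in COMPONENT_INPUTS[comp]:
                raise ValueError(f"{comp} is missing shaft speed {speed}")


def route_inputs(sample: Dict[str, float]) -> List[List[float]]:
    """Split one measurement sample into per-component input vectors,
    ordered along the air-flow path so they feed the subnetworks in sequence."""
    check_shaft_constraint()
    return [[sample[name] for name in COMPONENT_INPUTS[comp]] for comp in AIRFLOW_ORDER]


# Arbitrary illustrative values (not data from the paper).
sample = {"T0": 288.15, "P0": 101.3, "T25": 330.0, "P25": 150.0, "P3": 900.0,
          "Wf": 0.5, "T6": 800.0, "Nl": 0.85, "Nh": 0.95}
print(route_inputs(sample))
# [[288.15, 101.3], [330.0, 150.0, 0.85], [900.0, 0.95], [0.5], [0.95], [800.0, 0.85]]
```

Because the same `Nl` and `Nh` entries are read for every component on the corresponding shaft, the shared-speed constraint is enforced by construction rather than learned.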

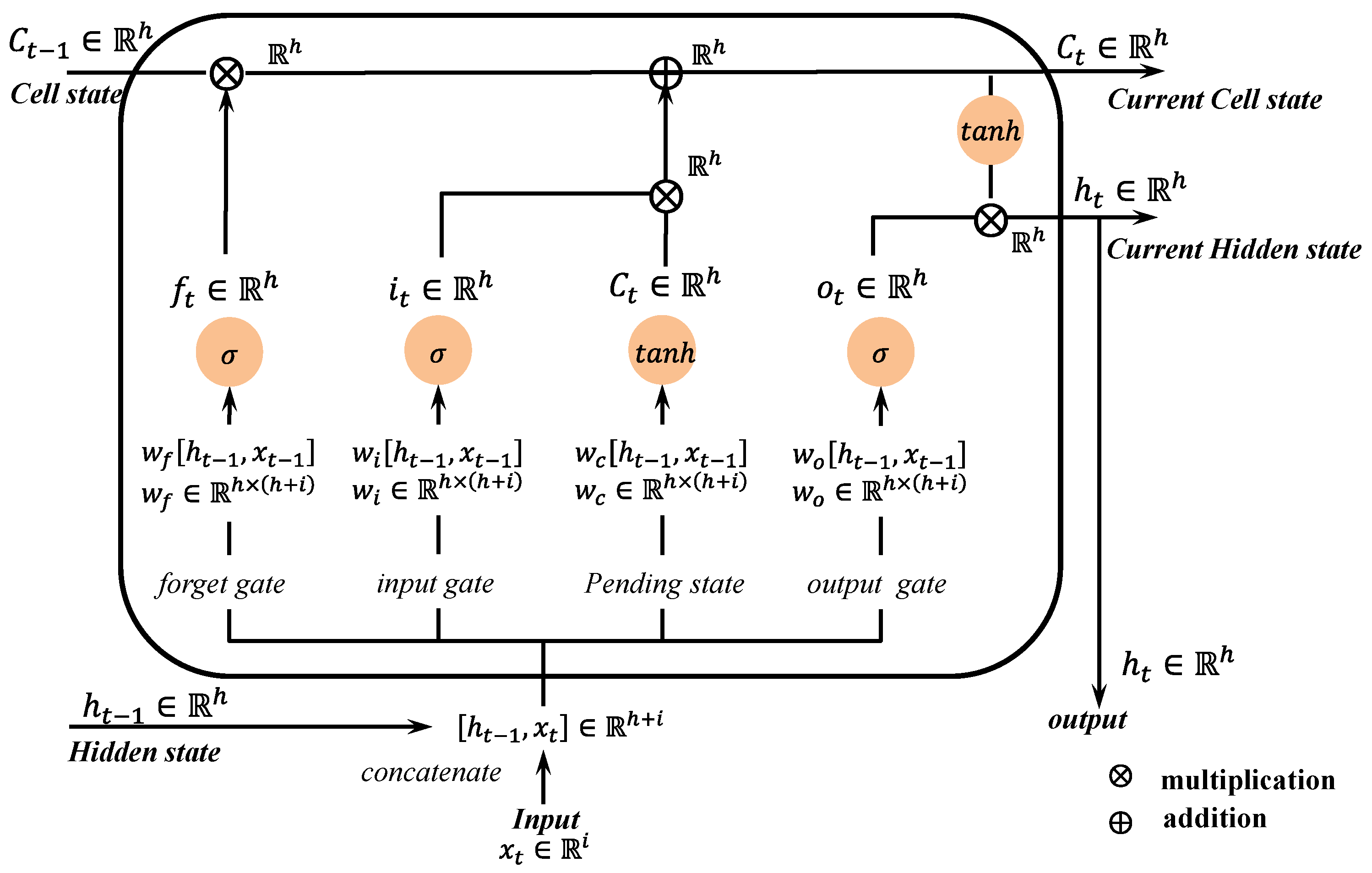

2.2. Component Network

2.3. Model for Predicting Thrust

Algorithm 1. The hybrid architecture-based thrust prediction model.

| Step | Operation |
|---|---|
| 1 | Determine the number of component networks according to the aircraft engine type. |
| 2 | Connect the component networks to build the component learning layer. |
| 3 | Arrange the component networks in the order in which air flows through the engine components. |
| 4 | Feed the outputs of the component networks to the coupling layer and the output of the coupling layer to the mapping layer; the output of the mapping layer is the target parameter. |
| 5 | Determine the neural network type for each layer: component learning layer (component networks): FNN; coupling layer: Bi-LSTM; mapping layer: FNN. |
| 6 | Classify the measurable parameters by component correlation; use them as inputs to the component networks, with thrust as the target. |
| 7 | Preprocess the data with Min–Max normalization. |
| 8 | Set the batch size and the number of nodes of each network; choose MSE as the loss function and RMSE for optimization. |
| 9 | Train the model: for i = 1 to iter, tune the model weights to minimize the loss function; end. |
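
Steps 7 to 9 can be illustrated on a deliberately tiny model. The sketch below is a stand-in, not the authors' network or optimizer: it applies Min–Max normalization with x' = (x - min) / (max - min), trains with MSE as the loss, computes RMSE as a monitoring metric (one possible reading of step 8), and runs the "for i = 1 to iter" loop as plain full-batch gradient descent on a one-variable linear model. All function names and the synthetic data are hypothetical.

```python
import math
from typing import List, Tuple


def min_max_fit(values: List[float]) -> Tuple[float, float]:
    """Return (min, max), to be computed on the training data only."""
    return min(values), max(values)


def min_max_apply(values: List[float], lo: float, hi: float) -> List[float]:
    """Scale values to [0, 1] with x' = (x - min) / (max - min)."""
    span = hi - lo
    if span == 0:
        return [0.0 for _ in values]
    return [(v - lo) / span for v in values]


def mse(pred: List[float], target: List[float]) -> float:
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def rmse(pred: List[float], target: List[float]) -> float:
    return math.sqrt(mse(pred, target))


def train_linear(x: List[float], y: List[float],
                 iters: int = 2000, lr: float = 0.5) -> Tuple[float, float]:
    """Fit y ~ w*x + b by full-batch gradient descent on the MSE loss."""
    w, b = 0.0, 0.0
    n = len(x)
    for _ in range(iters):  # "For i = 1 to iter"
        pred = [w * xi + b for xi in x]
        # Gradients of the MSE loss with respect to w and b.
        grad_w = sum(2 * (p - t) * xi for p, t, xi in zip(pred, y, x)) / n
        grad_b = sum(2 * (p - t) for p, t in zip(pred, y)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Synthetic, illustrative data only (not from the paper): one input, one target.
x_raw = [0.2, 0.4, 0.6, 0.8, 1.0]
y_raw = [10.0, 20.0, 30.0, 40.0, 50.0]
x = min_max_apply(x_raw, *min_max_fit(x_raw))
y = min_max_apply(y_raw, *min_max_fit(y_raw))
w, b = train_linear(x, y)
print(w, b, rmse([w * xi + b for xi in x], y))
```

In the actual model the weights of the component FNNs, the Bi-LSTM coupling layer, and the FNN mapping layer would all be updated in this loop; the linear model only keeps the example short and checkable.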

3. Verification and Discussion

3.1. Case Settings

3.2. Performance Metric

3.3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Type of Parameter | Cross-Section of Component/Acronym | Component Network |
|---|---|---|
| Total temperature/K | Outlet of inlet/T0 | Inlet network |
| | Outlet of fan/T25 | Fan network |
| | Exhaust gas temperature/T6 | LP turbine network |
| Total pressure/kPa | Outlet of inlet/P0 | Inlet network |
| | Outlet of fan/P25 | Fan network |
| | Outlet of compressor/P3 | Compressor network |
| Control/controlled parameter | Low-pressure rotor speed/Nl | Fan network; LP turbine network |
| | High-pressure rotor speed/Nh | Compressor network; HP turbine network |
| | Fuel flow rate/Wf | Combustor network |
| Aircraft engine performance parameter | Thrust/Fn | Target parameter |

| Model Name | Architecture | First Layer | Second Layer | Other Layers | The Total Number of Nodes |

|---|---|---|---|---|---|

| AN-1 | Conventional | FC (100) | FC (100) | \ | 11,201 |

| AN-2 | Conventional | FC (100) | FC (60) | FC (60) | 10,781 |

| AN-3 | Conventional | FC (100) | FC (50) | FC (50)-FC (40) | 10,681 |

| Str-1 | Simple block | BiL (4) | BiL (32) | \ | 11,713 |

| Str-2 | Simple block | FC (50)-FC (4) | BiL (32) | \ | 11,513 |

| Str-3 | Simple block | FC (100) | BiL (30) | Lambda | 10,101 |

| Str-4 | Simple block | BiL (2) | BiL (32) | \ | 9821 |

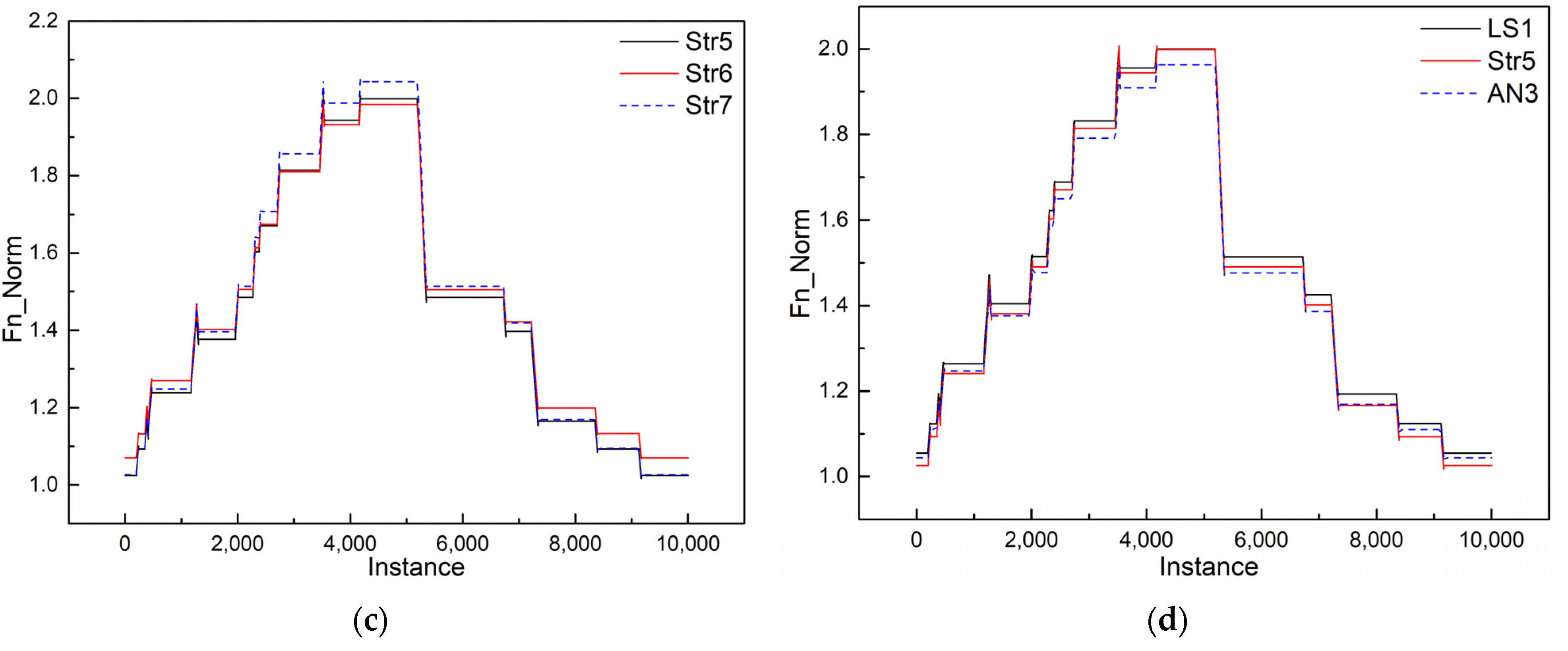

| Str-5 | Hybrid | FC (4) | BiL (32) | \ | 10,149 |

| Str-6 | Hybrid | FC (8) | BiL (32) | \ | 10,313 |

| Str-7 | Hybrid | FC (4) | BiL (48) | \ | 13,857 |

| LS-1 | Conventional | BiL (12) | BiL (24) | Sequence Length (9) | 11,569 |
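
The totals for the conventional models in the model table above can be checked by counting trainable weights and biases. This minimal sketch assumes nine input features (the nine measurable parameters in the parameter table), a single thrust output, fully connected layers with one bias per node, and a Keras-style LSTM cell with four gates and one bias vector per gate; under these assumptions it reproduces the listed totals for AN-1, AN-2, AN-3, and LS-1, which suggests the last column counts parameters rather than neurons. The helper names are hypothetical, and the hybrid models are not covered because their exact wiring is not specified in the table.

```python
from typing import List


def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus one bias per output node of a fully connected layer."""
    return n_in * n_out + n_out


def lstm_params(n_in: int, units: int) -> int:
    """One LSTM direction: 4 gates, each with input weights, recurrent weights and a bias."""
    return 4 * units * (n_in + units + 1)


def fnn_total(n_in: int, hidden: List[int], n_out: int = 1) -> int:
    """Stack of fully connected hidden layers followed by a dense output layer."""
    total, prev = 0, n_in
    for width in hidden:
        total += dense_params(prev, width)
        prev = width
    return total + dense_params(prev, n_out)


def bilstm_total(n_in: int, hidden: List[int], n_out: int = 1) -> int:
    """Stack of Bi-LSTM layers followed by a dense output layer.
    The count does not depend on the sequence length."""
    total, prev = 0, n_in
    for units in hidden:
        total += 2 * lstm_params(prev, units)  # forward + backward directions
        prev = 2 * units  # concatenated outputs feed the next layer
    return total + dense_params(prev, n_out)


print(fnn_total(9, [100, 100]))         # AN-1: 11201
print(fnn_total(9, [100, 60, 60]))      # AN-2: 10781
print(fnn_total(9, [100, 50, 50, 40]))  # AN-3: 10681
print(bilstm_total(9, [12, 24]))        # LS-1: 11569
```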

| Model | ARD Max % | ARD Min % | ARD Std. | ARD Mean % | MRD Max % | MRD Min % | MRD Std. | MRD Mean % |
|---|---|---|---|---|---|---|---|---|
| Testing Dataset 1 | | | | | | | | |
| AN-1 | 1.3633 | 0.3610 | 0.0048 | 0.7324 | 2.3970 | 1.0077 | 0.0065 | 1.5453 |
| AN-2 | 1.332 | 0.2609 | 0.0045 | 0.8602 | 3.3842 | 0.7831 | 0.0101 | 2.1747 |
| AN-3 | 2.3639 | 0.8563 | 0.0068 | 1.534 | 6.0953 | 2.0993 | 0.0166 | 3.3400 |
| Str-1 | 2.0158 | 0.2241 | 0.0070 | 1.3764 | 3.8817 | 0.5153 | 0.0149 | 2.7367 |
| Str-2 | 2.9108 | 0.4683 | 0.0098 | 1.2643 | 4.0064 | 1.2529 | 0.0117 | 2.4521 |
| Str-3 | 1.2415 | 0.3763 | 0.0035 | 0.6599 | 2.0010 | 1.0146 | 0.0037 | 1.4305 |
| Str-4 | 2.5134 | 0.2215 | 0.0101 | 1.4086 | 4.0629 | 0.7235 | 0.0133 | 2.6834 |
| Str-5 | 1.6190 | 0.2531 | 0.0053 | 0.8957 | 2.8639 | 0.7364 | 0.0092 | 2.0084 |
| Str-6 | 2.6263 | 0.5867 | 0.0081 | 1.9309 | 5.0158 | 1.2956 | 0.0139 | 3.3564 |
| Str-7 | 2.0936 | 0.0780 | 0.0054 | 1.3602 | 3.4931 | 1.7716 | 0.0067 | 2.6448 |
| LS-1 | 1.2987 | 0.3633 | 0.0042 | 0.9355 | 31.642 | 29.221 | 0.0117 | 30.407 |
| Testing Dataset 2-1 | | | | | | | | |
| AN-1 | 3.7842 | 2.6882 | 0.0045 | 3.3160 | 7.7096 | 6.0131 | 0.0071 | 6.9651 |
| AN-2 | 2.9477 | 1.0322 | 0.0078 | 2.3805 | 6.9304 | 2.7426 | 0.0164 | 5.3353 |
| AN-3 | 4.2153 | 2.1237 | 0.0095 | 3.1021 | 10.203 | 5.1283 | 0.1871 | 7.1988 |
| Str-1 | 5.4999 | 1.2474 | 0.0168 | 2.6325 | 7.8605 | 2.2850 | 0.0223 | 4.9320 |
| Str-2 | 4.5492 | 0.2893 | 0.0172 | 2.5289 | 7.2269 | 1.3958 | 0.0250 | 4.6247 |
| Str-3 | 3.1393 | 1.2299 | 0.0076 | 2.0684 | 6.9090 | 2.7207 | 0.0157 | 4.9956 |
| Str-4 | 2.5373 | 0.6163 | 0.0090 | 1.5616 | 6.0721 | 1.6456 | 0.0183 | 3.9637 |
| Str-5 | 2.2245 | 0.8123 | 0.0055 | 1.3605 | 5.4286 | 1.8546 | 0.0137 | 3.3643 |
| Str-6 | 3.6926 | 0.6533 | 0.0154 | 2.0156 | 8.0805 | 1.8475 | 0.0305 | 4.7318 |
| Str-7 | 3.6972 | 1.4409 | 0.0101 | 2.3213 | 4.8455 | 3.2047 | 0.0071 | 4.1794 |
| LS-1 | 4.0474 | 1.6943 | 0.00916 | 2.8629 | 8.0474 | 4.1085 | 0.0145 | 6.1554 |

| Model | ARD | MRD | ||||||

|---|---|---|---|---|---|---|---|---|

| Max % | Min % | Std. | Mean % | Max % | Min % | Std. | Mean % | |

| Testing Dataset 2-2 | ||||||||

| AN-1 | 3.8091 | 2.7000 | 0.0044 | 3.3428 | 7.6944 | 6.0316 | 0.0070 | 6.9876 |

| AN-2 | 3.0014 | 1.0153 | 0.0081 | 2.4119 | 6.9846 | 2.8165 | 0.0162 | 5.5582 |

| AN-3 | 4.2774 | 2.1498 | 0.0096 | 3.1463 | 10.229 | 5.1809 | 0.0186 | 7.2430 |

| Str-1 | 5.5318 | 1.2685 | 0.0168 | 2.6604 | 7.8891 | 2.3098 | 0.0224 | 4.9705 |

| Str-2 | 4.6903 | 0.3445 | 0.0171 | 2.6489 | 7.2254 | 1.2766 | 0.0252 | 4.7164 |

| Str-3 | 3.1902 | 1.3591 | 0.0075 | 2.1474 | 6.9461 | 2.8543 | 0.0154 | 5.0725 |

| Str-4 | 2.8107 | 0.5281 | 0.0130 | 1.6001 | 6.3564 | 1.5174 | 0.0196 | 4.0076 |

| Str-5 | 2.4076 | 0.8850 | 0.0063 | 1.4650 | 5.6173 | 1.9695 | 0.0143 | 3.4874 |

| Str-6 | 3.7327 | 0.6196 | 0.0154 | 2.0597 | 8.1307 | 1.9709 | 0.0301 | 4.8170 |

| Str-7 | 3.7408 | 1.3976 | 0.0103 | 2.3859 | 4.9623 | 3.1957 | 0.0075 | 4.2571 |

| LS-1 | 4.0642 | 1.7461 | 0.0090 | 2.8997 | 8.0599 | 4.1571 | 0.0143 | 6.1853 |

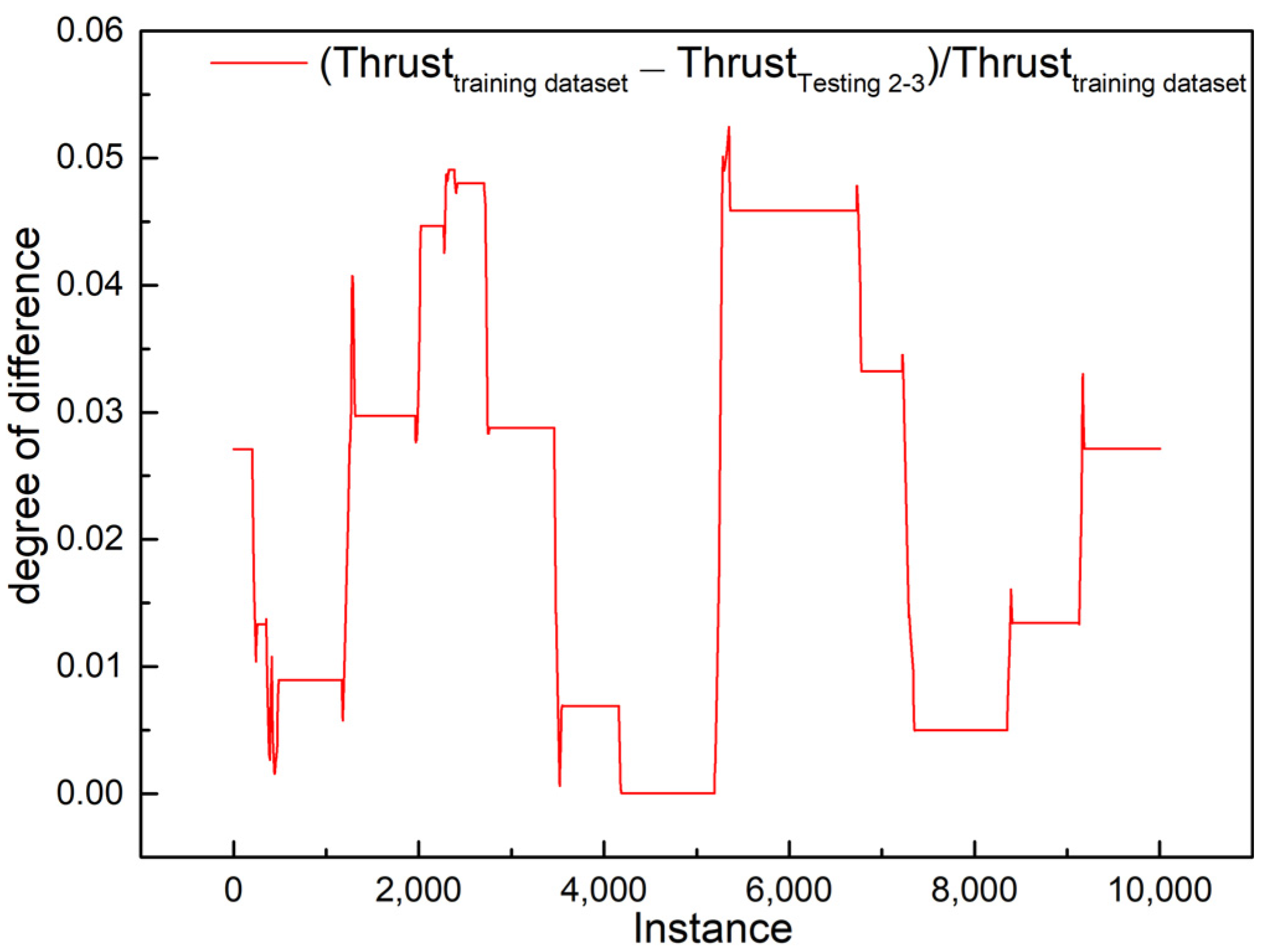

| Testing Dataset 2-3 | ||||||||

| AN-1 | 3.9344 | 2.8795 | 0.0042 | 3.5255 | 7.8176 | 6.1732 | 0.0069 | 7.1332 |

| AN-2 | 3.1761 | 1.0413 | 0.0087 | 2.5571 | 7.1321 | 2.9314 | 0.0163 | 5.7190 |

| AN-3 | 4.4586 | 2.3525 | 0.0095 | 3.3026 | 10.287 | 5.2857 | 0.0183 | 7.3590 |

| Str-1 | 5.7983 | 1.4903 | 0.0172 | 2.8747 | 8.1181 | 2.4377 | 0.0229 | 5.1395 |

| Str-2 | 4.8208 | 0.0449 | 0.0171 | 2.7945 | 7.2504 | 1.4033 | 0.0246 | 4.8515 |

| Str-3 | 3.4819 | 1.5350 | 0.0079 | 2.3974 | 7.1504 | 2.9540 | 0.0158 | 5.2498 |

| Str-4 | 3.1511 | 0.3398 | 0.0118 | 1.7532 | 6.5569 | 1.4514 | 0.0194 | 4.2091 |

| Str-5 | 2.5754 | 0.8158 | 0.0072 | 1.5926 | 5.7334 | 2.0804 | 0.0146 | 3.5879 |

| Str-6 | 3.8069 | 0.6172 | 0.0155 | 2.1314 | 8.1673 | 2.0353 | 0.0301 | 4.8812 |

| Str-7 | 3.8111 | 1.3497 | 0.0106 | 2.4564 | 5.0550 | 3.1940 | 0.0078 | 4.3224 |

| LS-1 | 4.3430 | 1.9969 | 0.0091 | 3.1685 | 8.2561 | 4.3434 | 0.0144 | 6.3692 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, Z.; Xiao, H.; Zhang, X.; Wang, Z. Thrust Prediction of Aircraft Engine Enabled by Fusing Domain Knowledge and Neural Network Model. Aerospace 2023, 10, 493. https://doi.org/10.3390/aerospace10060493
