Observability-Driven Path Planning Design for Securing Three-Dimensional Navigation Performance of LiDAR SLAM

Abstract

1. Introduction

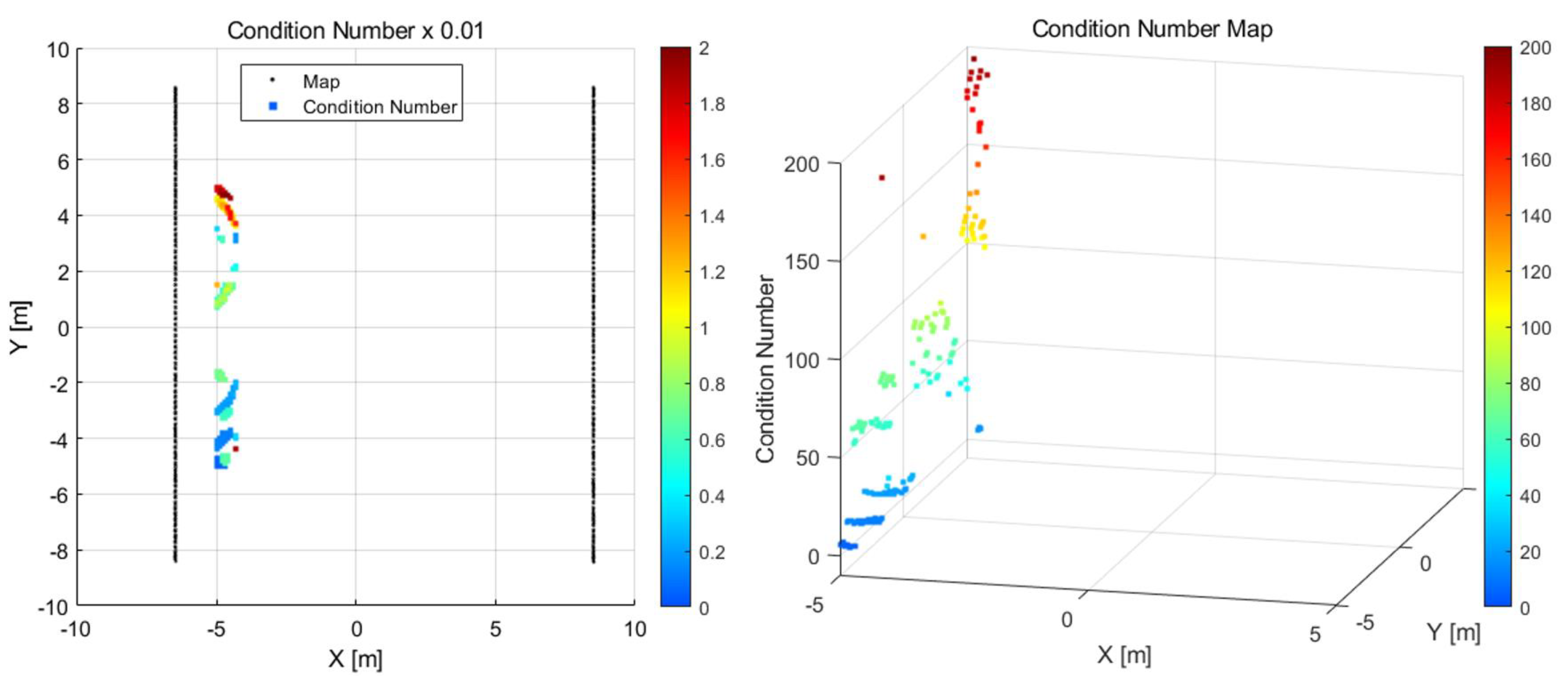

2. Observability Analysis

2.1. Observability Model

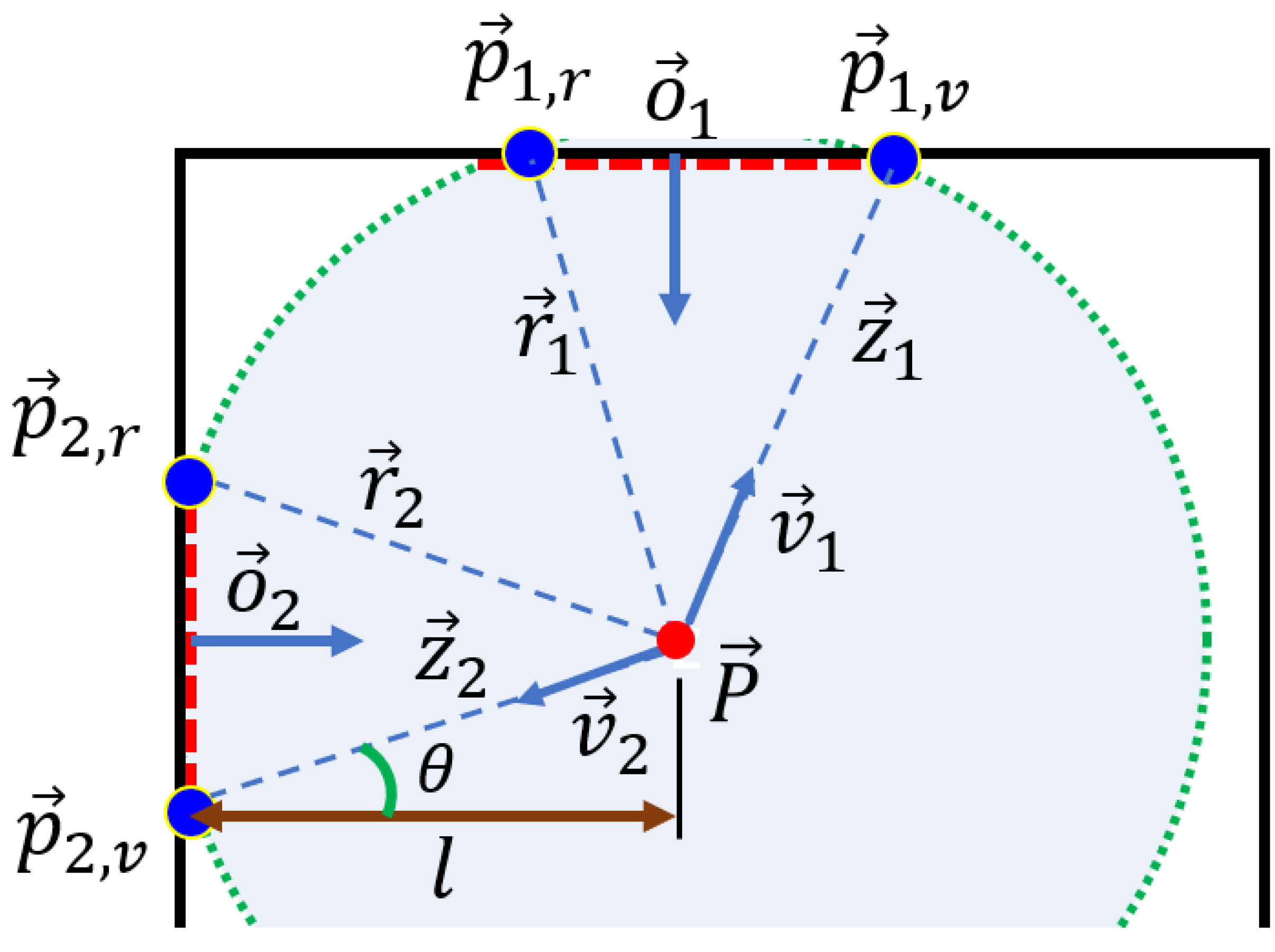

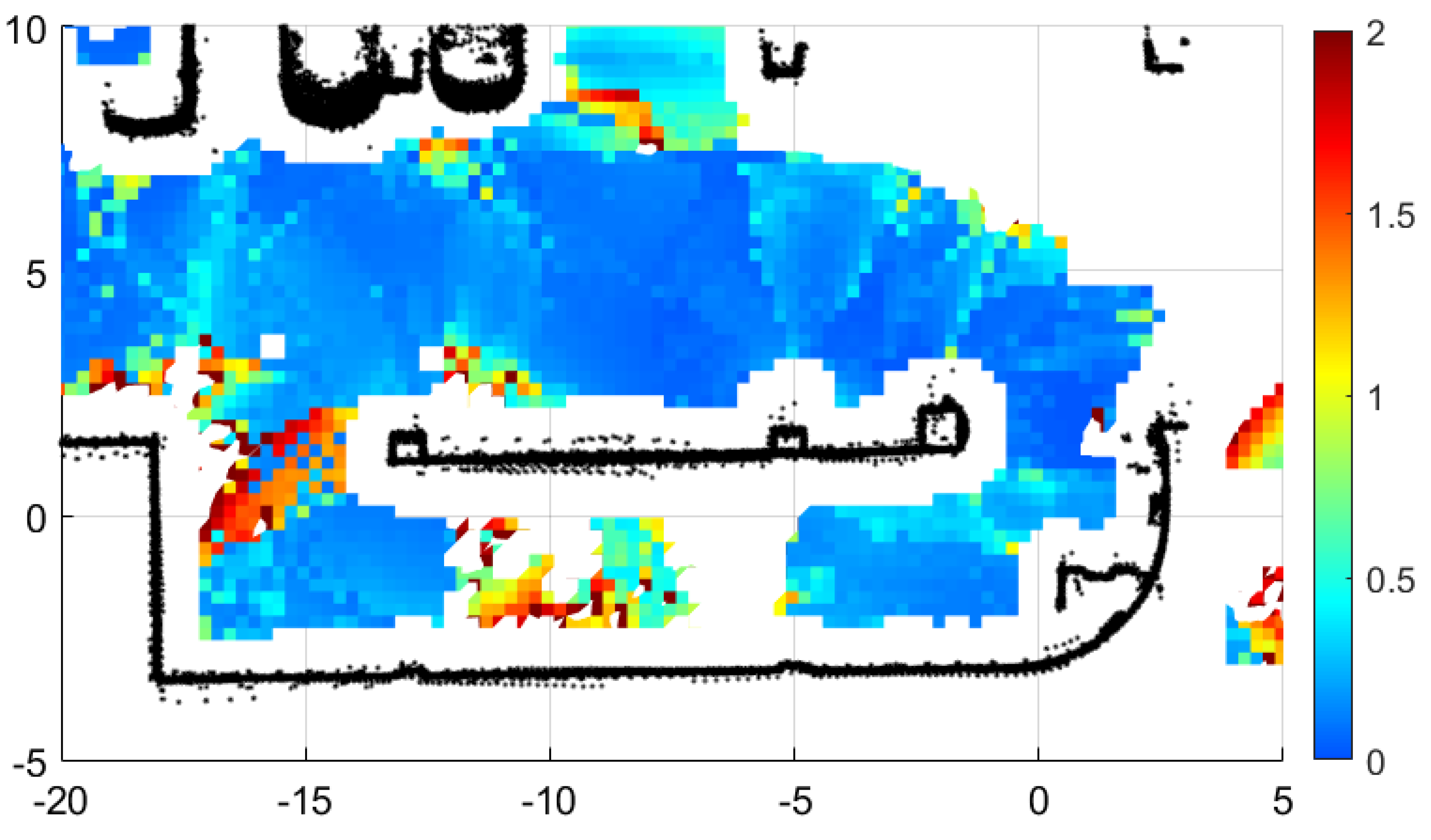

2.2. Geometric Detection

3. Observability Verification Study

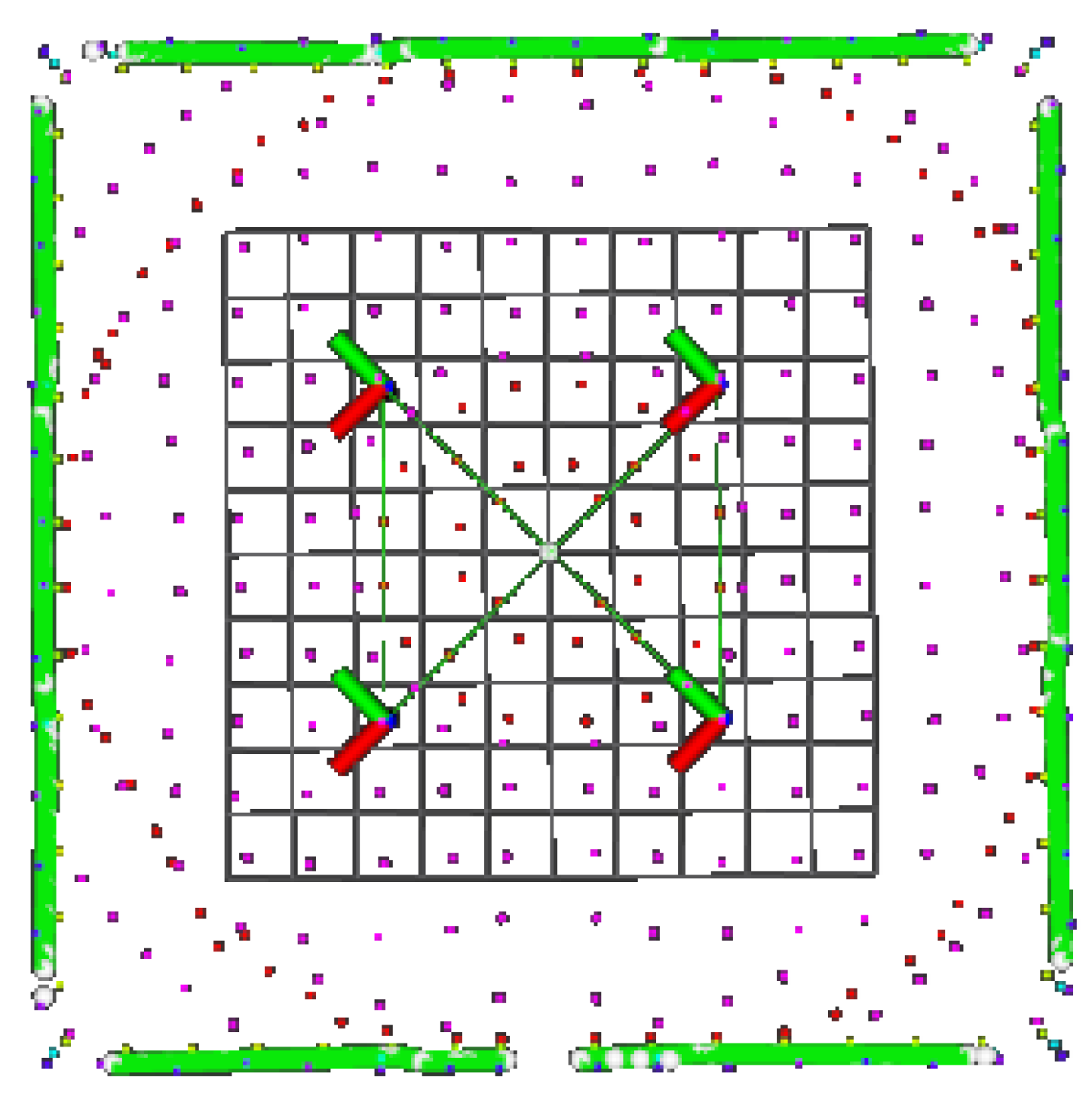

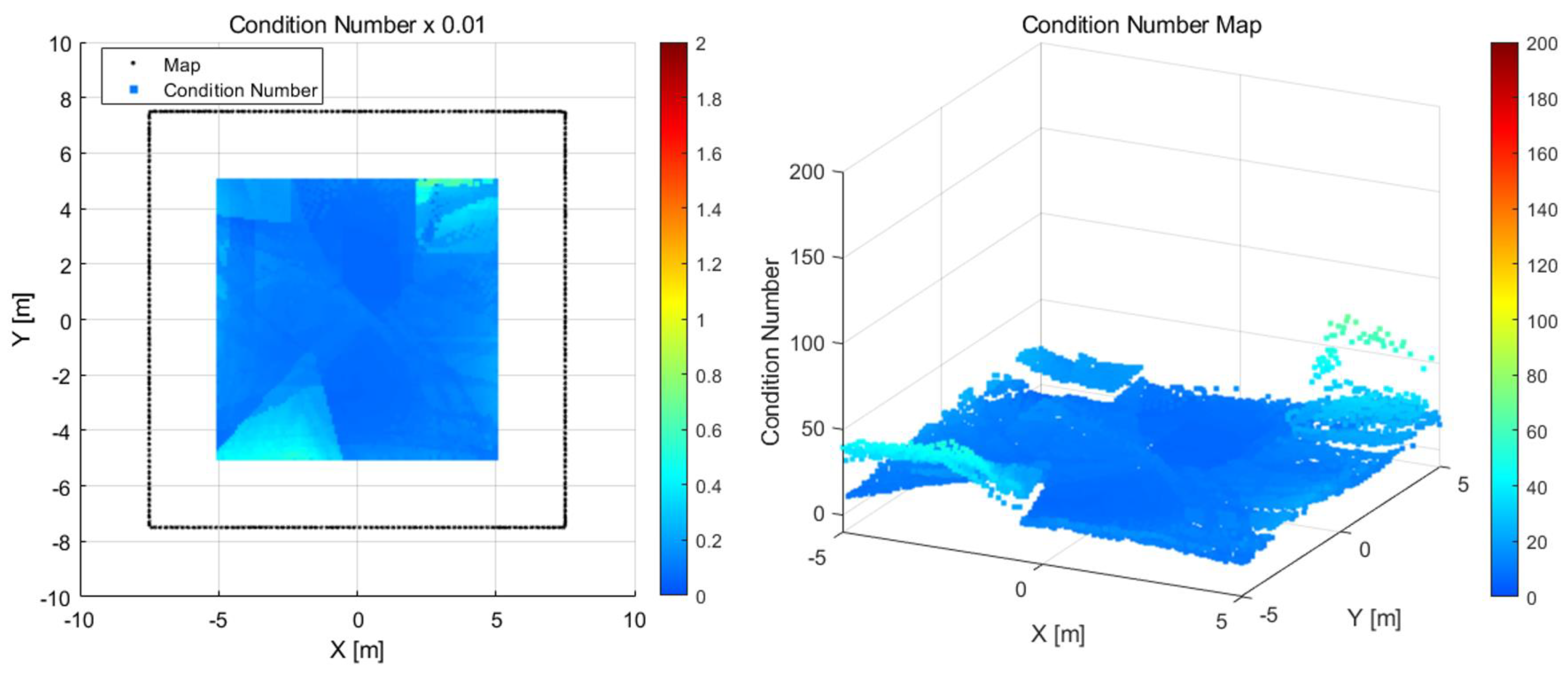

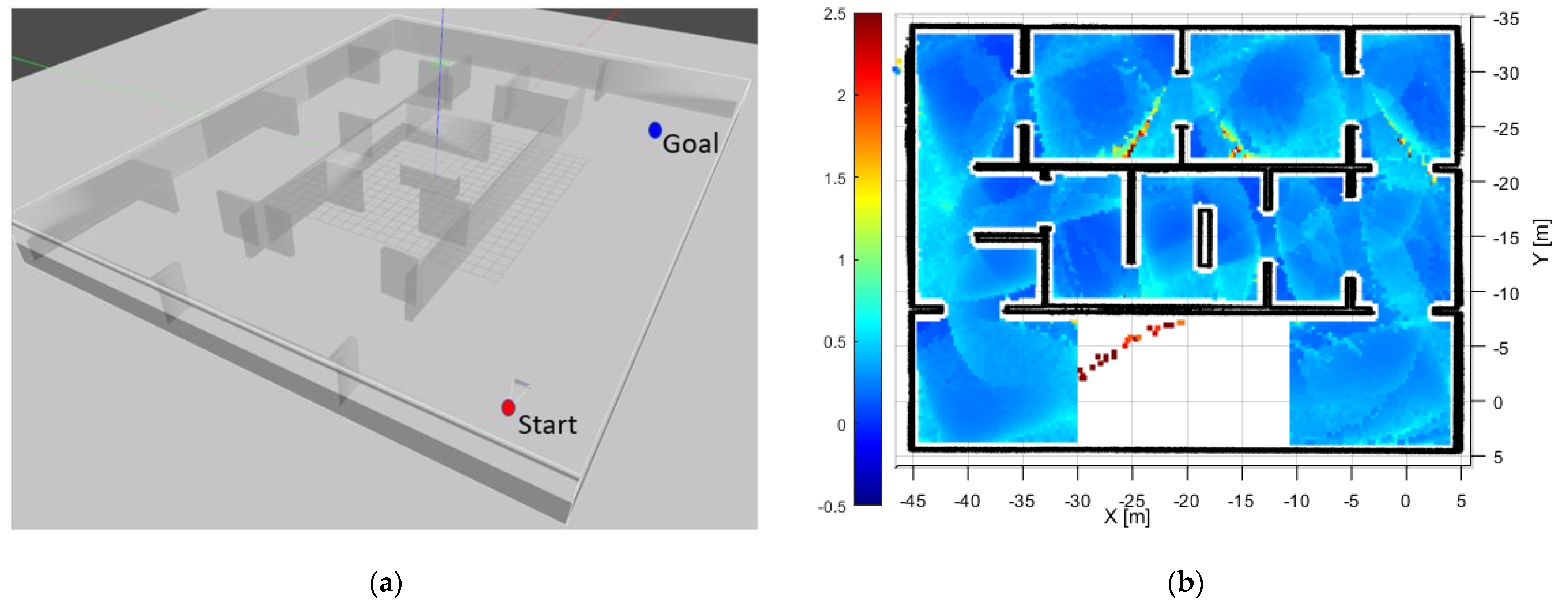

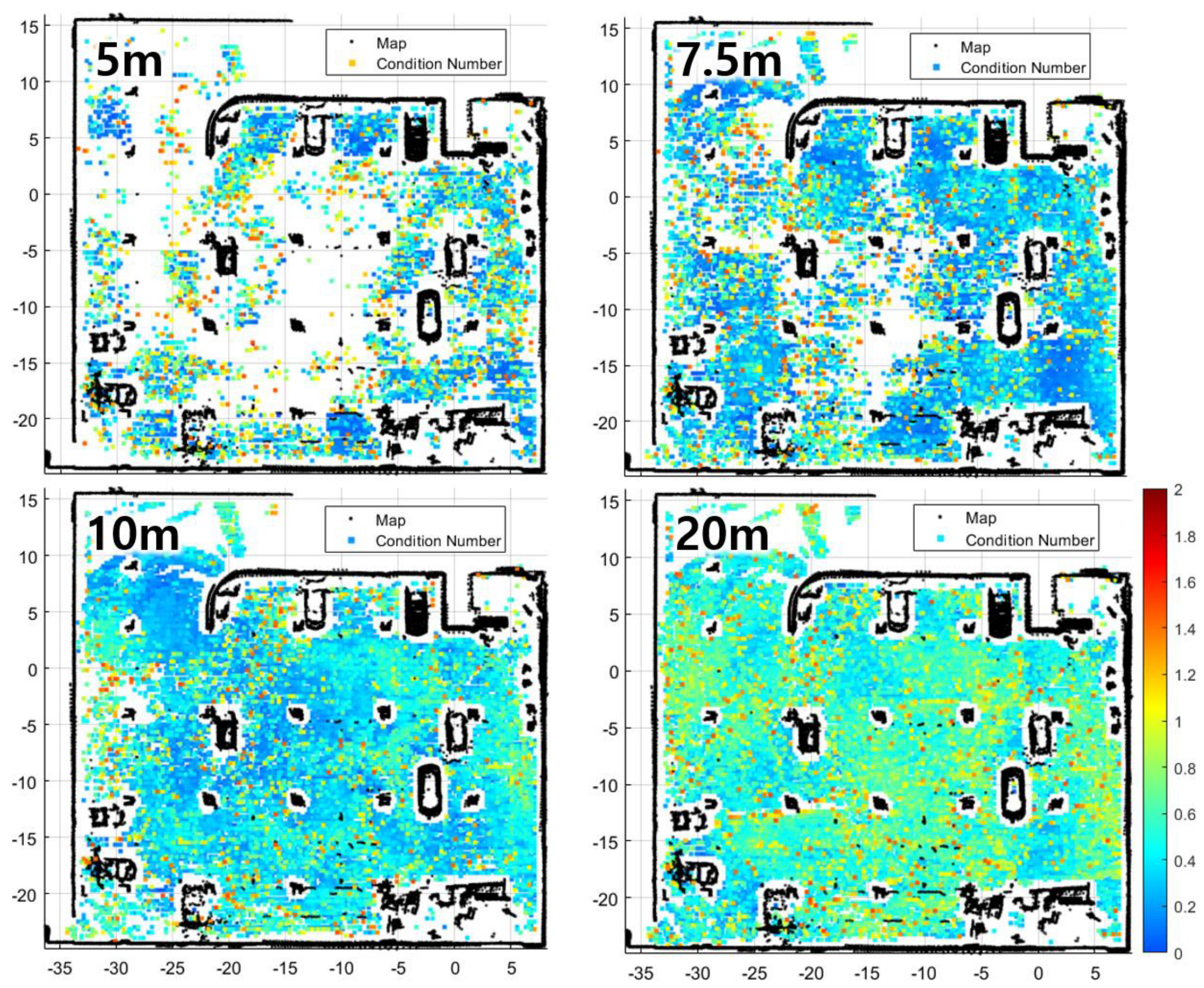

3.1. Simulation Results

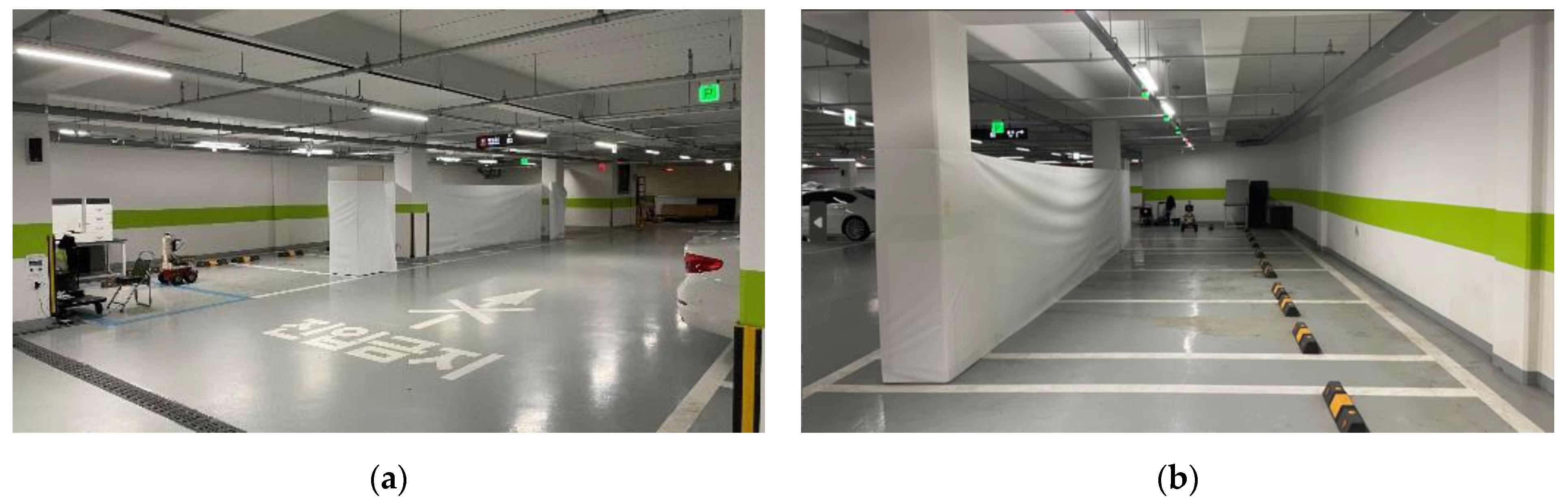

3.2. Results for the Real Environment

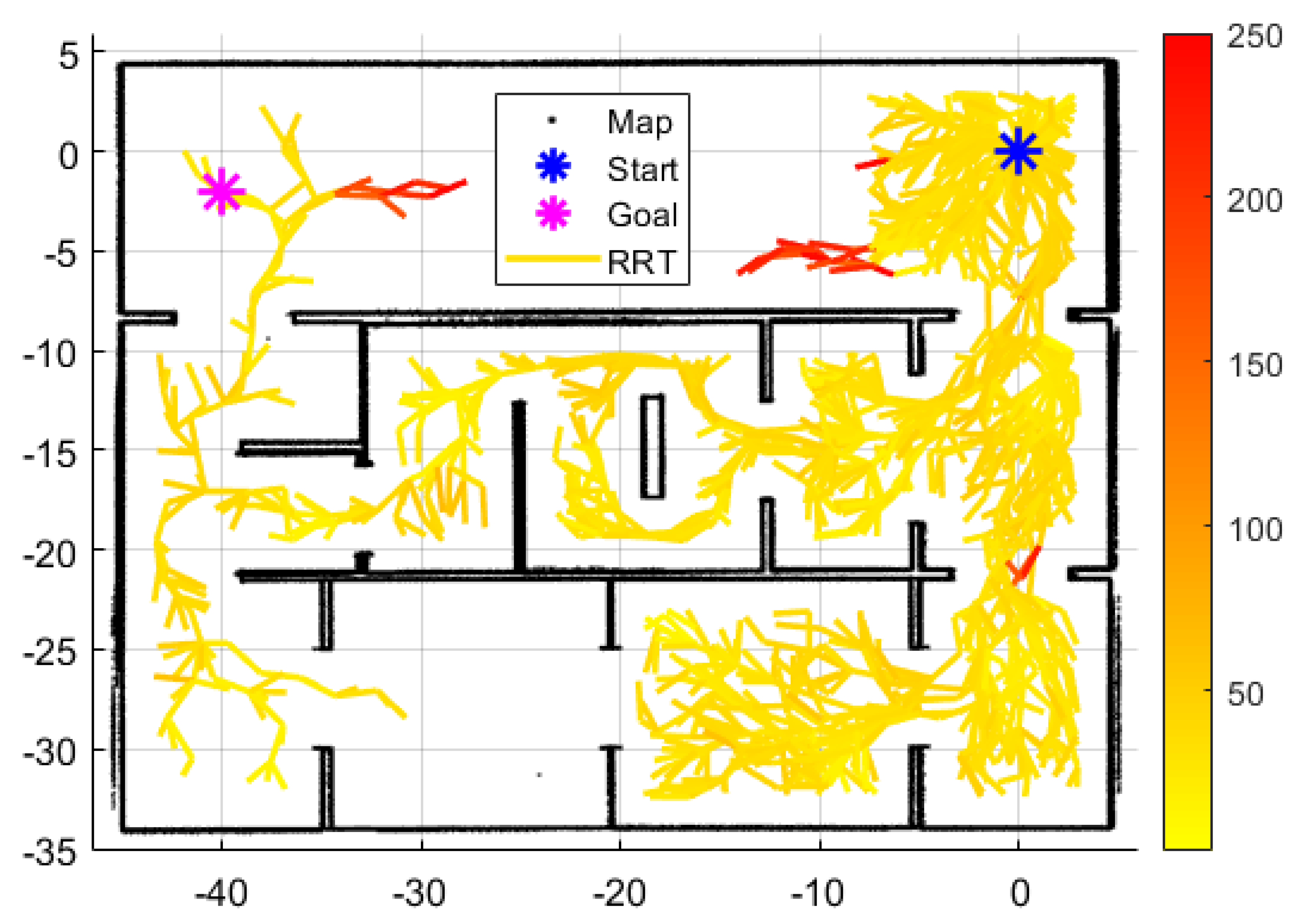

4. Observability-Driven Path Planning

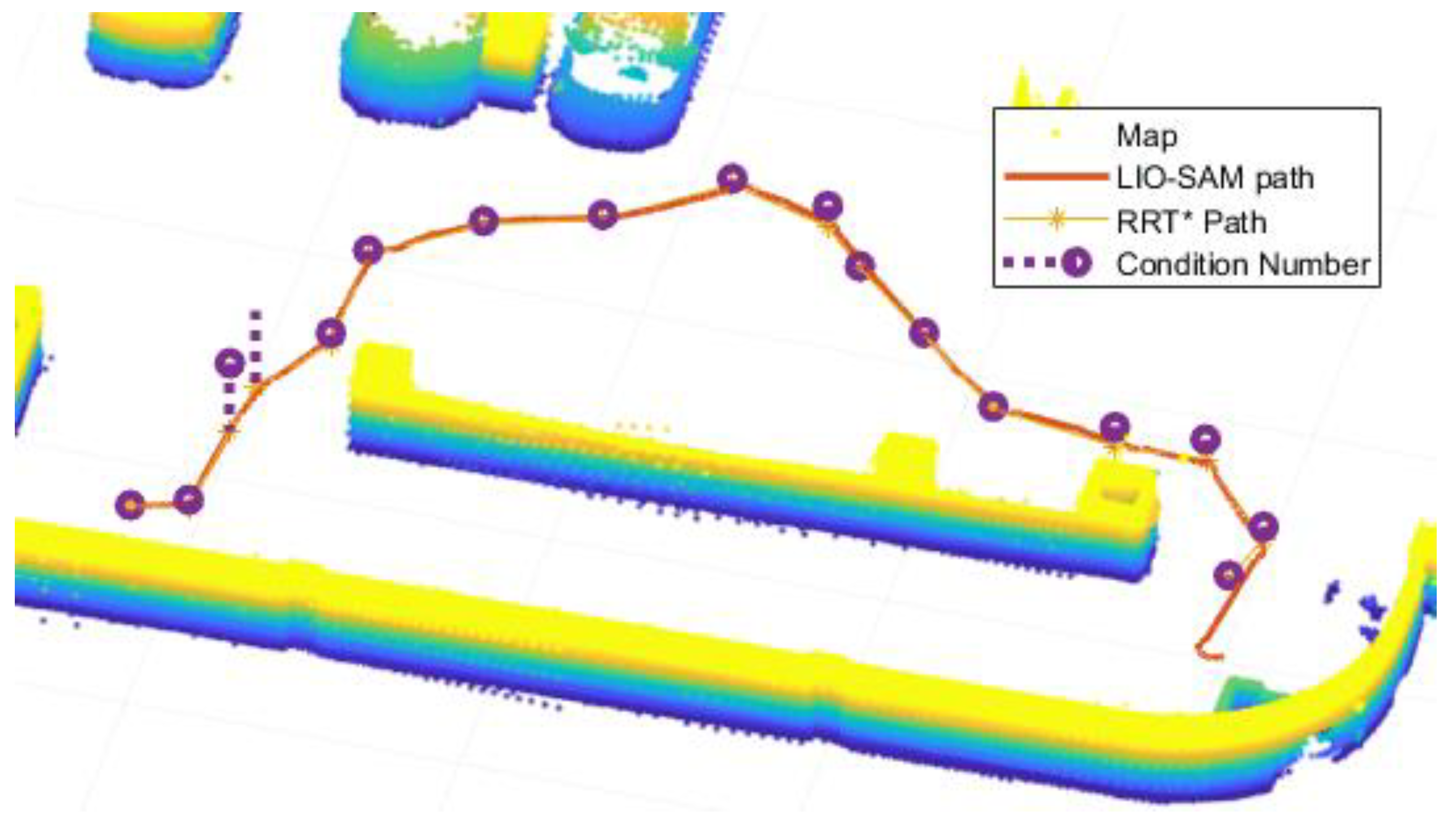

4.1. Algorithm Implementation

Algorithm 1. Observability-Driven Path Planning.

- MapConv: Receives a point cloud map from SLAM and returns an Octomap and the map size S. Octomap is an octree-based map that offers fast search and efficient memory management. The conversion is needed because the wall search algorithm, which runs before the observability calculation, operates on the Octomap.

- Sample: Returns a random position within the map of size S.

- Nearest: Searches the nodes of the path tree τ and returns the node closest to the sampled position.

- Steer: Calculates the distance and direction between the nearest node and the sampled position. If the distance exceeds a set limit, the new node location is created at that limited distance from the nearest node, in the calculated direction.

- Observability: Calculates the observability and the condition number at the new node location. The wall detection method finds walls close to the node location. It also serves as obstacle avoidance by returning 0 if a wall or obstacle is too close. Orthogonal vectors are extracted from the detected walls, and the observability and condition number are calculated from these vectors and their locations.

- Near: Finds the nodes within a certain radius centered on the new node.

- ChooseParent: Among the nearby nodes, finds and returns the one that gives the new node the smallest cost.

- InsertNode: Adds the new node to the tree τ, connected as a child of the chosen parent.

- Rewire: Among the nearby nodes, finds those whose cost becomes smaller when the new node is set as their parent, and changes their parent accordingly.
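The way these functions fit together can be illustrated with a minimal Python sketch of an RRT*-style loop. It is not the authors' implementation, and several parts are assumptions made only for illustration: the environment is a hypothetical box room (`box_walls`) rather than an Octomap produced by MapConv, the condition number is taken from the stacked normal vectors of the walls within a hypothetical `sense_range`, and the cost (path length plus a weight `w` times the condition number) is one possible way to penalize poorly conditioned nodes. Only node locations are checked, not the edges between them, and rewired costs are not propagated to descendants.

```python
import math
import random
import numpy as np

def box_walls(size):
    """Six planes of a box room as (unit normal n, offset d); signed distance is n.x - d."""
    walls = []
    for axis in range(3):
        n = np.zeros(3)
        n[axis] = 1.0
        walls.append((n, 0.0))             # wall at coordinate 0
        walls.append((-n, -size[axis]))    # opposite wall at coordinate size[axis]
    return walls

def observability(x, walls, sense_range=6.0, min_clearance=0.5):
    """Return (ok, cond); ok is False if a wall is too close (obstacle check)."""
    normals = []
    for n, d in walls:
        dist = float(n @ x - d)
        if dist < min_clearance:
            return False, math.inf
        if dist <= sense_range:
            normals.append(n)
    if len(normals) < 3:
        return True, math.inf              # too few walls to constrain a 3D position
    s = np.linalg.svd(np.array(normals), compute_uv=False)
    return True, (math.inf if s[-1] < 1e-9 else float(s[0] / s[-1]))

def plan(start, goal, size, iters=400, step=1.0, radius=2.0, w=0.5, seed=0):
    rng = random.Random(seed)
    walls = box_walls(size)
    start, goal = np.array(start, float), np.array(goal, float)
    nodes, parent, cost = [start], [None], [0.0]
    conds = [observability(start, walls)[1]]
    for _ in range(iters):
        x_rand = np.array([rng.uniform(0.0, s) for s in size])           # Sample
        d_all = [np.linalg.norm(p - x_rand) for p in nodes]
        i_near = int(np.argmin(d_all))                                   # Nearest
        if d_all[i_near] <= step:                                        # Steer
            x_new = x_rand
        else:
            x_new = nodes[i_near] + (x_rand - nodes[i_near]) / d_all[i_near] * step
        ok, cond = observability(x_new, walls)                           # Observability
        if not ok or math.isinf(cond):
            continue                       # reject unsafe or unobservable locations
        d_new = [np.linalg.norm(p - x_new) for p in nodes]
        near = [i for i, d in enumerate(d_new) if d <= radius]           # Near
        best = min(near, key=lambda i: cost[i] + d_new[i] + w * cond)    # ChooseParent
        nodes.append(x_new)                                              # InsertNode
        parent.append(best)
        cost.append(cost[best] + d_new[best] + w * cond)
        conds.append(cond)
        k = len(nodes) - 1
        for i in near:                                                   # Rewire
            c = cost[k] + d_new[i] + w * conds[i]
            if c < cost[i]:
                parent[i], cost[i] = k, c
    # Walk back from the tree node closest to the goal to the start.
    i = int(np.argmin([np.linalg.norm(p - goal) for p in nodes]))
    path = []
    while i is not None:
        path.append(nodes[i])
        i = parent[i]
    return path[::-1]
```

Calling `plan((1.0, 1.0, 1.0), (9.0, 9.0, 3.0), (10.0, 10.0, 4.0))` returns a list of positions from the start to the tree node closest to the goal. Because `radius` is at least as large as `step`, the node returned by Nearest always lies inside the Near set, so every accepted node has at least one candidate parent.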

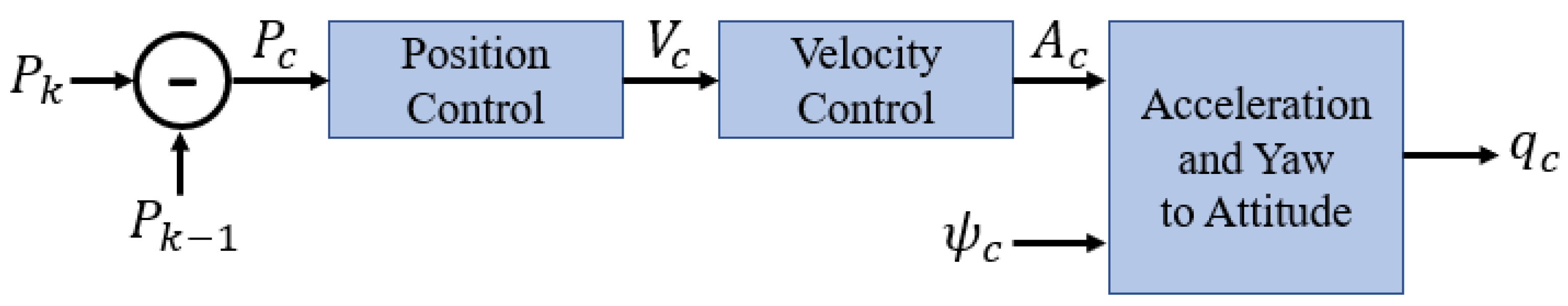

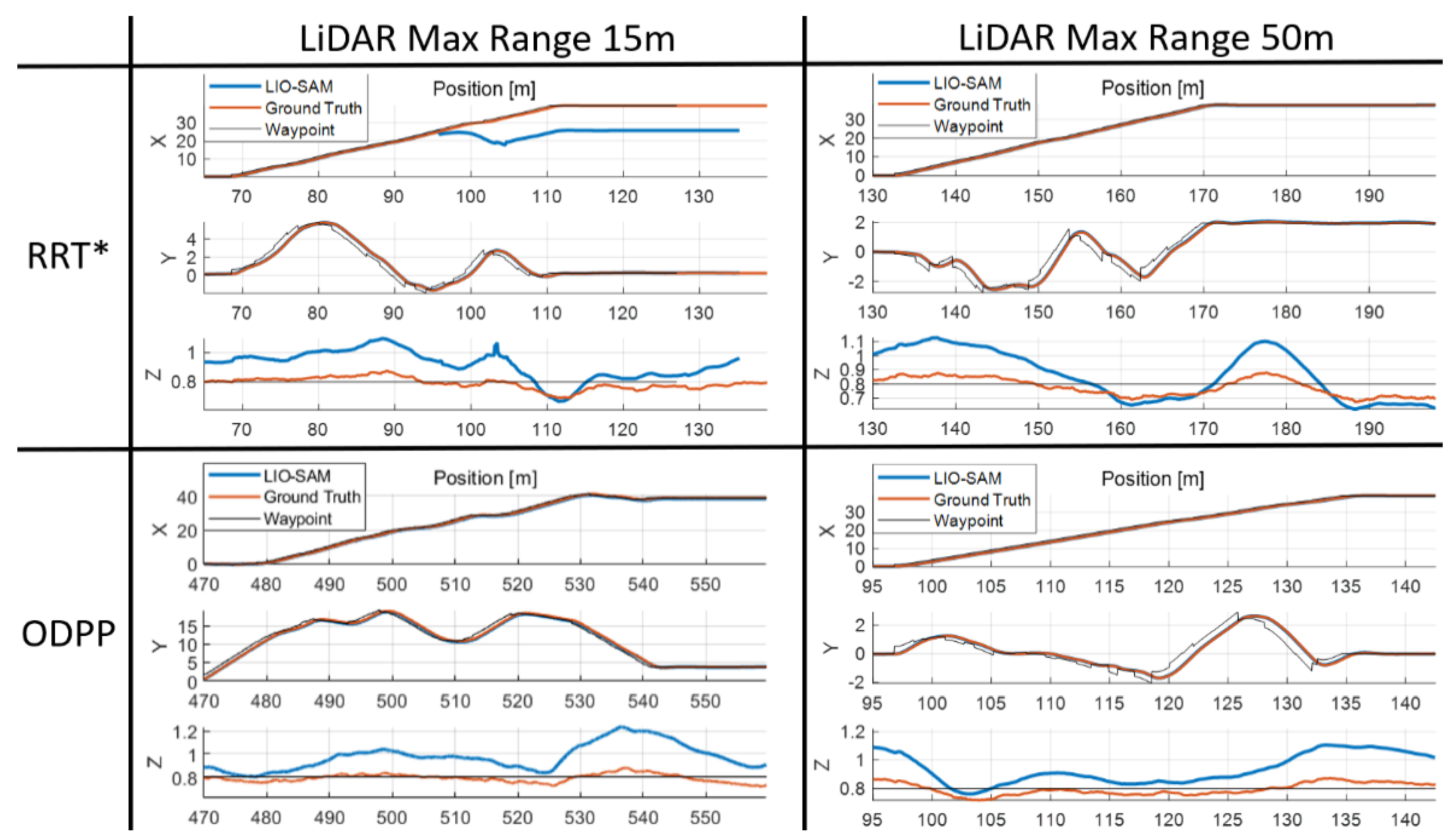

4.2. Simulation and Experimental Result

5. Results and Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest



© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, D.; Lee, B.; Sung, S. Observability-Driven Path Planning Design for Securing Three-Dimensional Navigation Performance of LiDAR SLAM. Aerospace 2023, 10, 492. https://doi.org/10.3390/aerospace10050492
