1. Introduction

The intensification of space operations has led to significant growth in the number of satellites and space debris orbiting the Earth. Current space surveillance and tracking services monitor up to 28,000 objects larger than 10 cm every day, including both active payloads and space debris. The geostationary orbit is of particular importance and is currently populated by over 1000 objects, ranging from active satellites to rocket bodies and defunct payloads [1]. Geostationary satellites play a crucial role in the global telecommunications infrastructure, enabling television broadcasts and voice communications on a global scale. Satellites in this orbit are also crucial for meteorological services, providing valuable data for environmental monitoring [2].

During their operational phase, geostationary satellites require periodic station-keeping maneuvers to correct for orbital perturbations and maintain their stable position and orientation [3]. At the end of their operational life, they are moved to a designated graveyard orbit, freeing up valuable orbital slots for new satellites [4].

Ground-based optical observations can serve as a complementary and cost-effective tool for monitoring and managing these space assets, allowing for the extraction of various information. This includes the dynamic state at a specific time and verification of ongoing orbital maneuvers. Furthermore, through the analysis of light curves, it is possible to identify the presence of a tumbling motion or evaluate the correct alignment of solar panels [5]. Photometry is also valuable in estimating the shape and materials of an object [6].

The sunlight backscattered by orbiting objects and captured by ground-based observatories is influenced by various factors, including the attitude, shape, and materials of the satellite, as well as the phase angle [7]. These aspects are of great significance because they can substantially affect the amount of light reflected or emitted by the satellite that ultimately reaches the observer on Earth.

The phase angle holds significant relevance not only in the context of geostationary object observations but also for objects in other orbital regimes. Defined as the angle between the observer-satellite and the sun-satellite directions, the phase angle directly influences the intensity of the received luminous flux. In general, when the phase angle is small (the satellite is “frontally illuminated”), the received luminous flux is at its maximum. Conversely, when the phase angle is large (the satellite is “laterally illuminated”), the luminous flux is reduced. Understanding this relationship is meaningful not only for maximizing the visibility of geostationary satellites but also for enhancing the geostationary spectral characterization.
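The definition above can be made concrete with a short sketch. The function name and the example positions below are illustrative assumptions, not taken from the processing software used in this study; all positions are assumed to be expressed in the same inertial frame and in the same units.

```python
import numpy as np

def phase_angle_deg(r_sat, r_obs, r_sun):
    """Angle at the satellite between the directions to the observer and to the Sun."""
    sat = np.asarray(r_sat, dtype=float)
    to_obs = np.asarray(r_obs, dtype=float) - sat   # satellite -> observer
    to_sun = np.asarray(r_sun, dtype=float) - sat   # satellite -> Sun
    cos_phi = np.dot(to_obs, to_sun) / (np.linalg.norm(to_obs) * np.linalg.norm(to_sun))
    # Clip guards against rounding slightly outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos_phi, -1.0, 1.0))))

# Illustrative geometry (km): Sun and observer on the same side of the satellite.
frontal = phase_angle_deg([42164, 0, 0], [6378, 0, 0], [-1.5e8, 0, 0])
# Illustrative geometry (km): Sun at right angles to the line of sight.
lateral = phase_angle_deg([42164, 0, 0], [6378, 0, 0], [42164, 1.5e8, 0])
```

In the first geometry the satellite is frontally illuminated and the phase angle is close to zero; in the second it is laterally illuminated.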

The attitude of the satellite in space is highly significant in the context of optical observations. The position of the satellite’s reflective or emitting surfaces relative to the observer can determine how much of its light is detected. For instance, when the satellite is oriented with its reflective surfaces toward the observer, the perceived brightness will be greater compared to when the reflective surfaces are oriented differently.

Another aspect to take into account is the shape of the satellite. The distribution of reflected light can vary depending on its overall geometry and the arrangement of solar panels, antennas, and other components. Satellites with irregular shapes or specific surface details can produce variations in observed brightness on Earth, as these components can act as reflectors or absorbers.

In specific spectral regions, the materials employed in the satellite assembly can be more or less reflective or emissive, while other components may be good absorbers. The selection of materials for elements like solar panels or antennas can affect the satellite’s reflection in a particular bandwidth and, as a result, the observed brightness.

Due to all the factors mentioned above, it is evident that satellites, unlike a Lambertian reflector, reflect light in a highly variable manner. In an ideal Lambertian model, light reflected from the surface would be uniformly distributed in all directions, regardless of the angle of incident light. However, this idealization cannot be applied as a general rule for satellites in orbit [8]. An in-depth knowledge of each of these factors is essential to properly interpret optical observation outcomes and derive meaningful results about the nature and state of geostationary satellites. These factors can also contribute to the development of a distinct spectral signature for each satellite, which may vary substantially between different satellites in orbit [9,10,11,12,13].
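As a point of comparison for the observed light curves, the textbook phase function of an ideal diffuse (Lambertian) sphere can be evaluated as follows. This is a minimal sketch of the standard reference model, not part of the analysis in this study, and the function names are illustrative.

```python
import math

def lambert_sphere_phase(phi_rad):
    """Phase function of a diffuse sphere: F = 2/(3*pi^2) * (sin(phi) + (pi - phi) * cos(phi))."""
    return 2.0 / (3.0 * math.pi ** 2) * (
        math.sin(phi_rad) + (math.pi - phi_rad) * math.cos(phi_rad)
    )

def delta_mag_vs_zero_phase(phi_rad):
    """Magnitude increase (dimming) relative to a phase angle of zero."""
    return -2.5 * math.log10(lambert_sphere_phase(phi_rad) / lambert_sphere_phase(0.0))

dimming_at_90_deg = delta_mag_vs_zero_phase(math.pi / 2)
```

For this ideal model the brightness decreases monotonically with phase angle, and at 90° the flux ratio relative to zero phase is 1/π. Departures from this trend in a measured light curve point to the attitude, shape, and material effects discussed above.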

To characterize the spectral response of a geostationary satellite as a function of phase angle, it is essential to take into account that the phase angle in this orbital regime varies only slowly over time. Therefore, it is crucial to observe these satellites continuously throughout the night, except during periods when the satellite is in eclipse. Furthermore, it is important to plan the observations in a way that allows continuous data collection through different photometric filters. Indeed, to collect detailed information through optical observations, it is possible to use various sets of photometric filters that enable the analysis of specific portions of the electromagnetic spectrum separately [14]. However, obtaining simultaneous observations in different spectral bands requires highly precise coordination and synchronization of the different filters. Moreover, if the optical filter selects a single band during the observation, it is necessary to involve multiple observatories simultaneously at the same site, each equipped with a specific spectral filter [15].

These filters enable the analysis of specific spectral bands separately, providing valuable details about the spectral characterization of geostationary satellites. The goal is to minimize the time between acquisitions through different photometric bands, ensuring that data referred to different filters are collected as closely as possible in time. Observations in different spectral bands can be useful to identify the spectral signature of an object.

This study starts with an overview of two distinct optical systems developed and operated by the Sapienza Space Systems and Space Surveillance Laboratory (S5Lab) at Sapienza University of Rome. The first system is a telescope paired with an sCMOS camera and Sloan photometric filters that represents a specialized approach tailored for astronomical observations. The second one, designed for non-astronomical professional use, incorporates DSLR cameras equipped with RGB channels attributed to the Bayer mask and offers a more cost-effective alternative without compromising functionality. A comparative analysis of these systems serves as a valuable resource for understanding the advantages they present for geostationary satellite monitoring. Then, strategies employed for data acquisition will be clarified, describing the observation session conducted. Furthermore, the details of data processing will be explored, and the results and discussions concerning the observed light curves will be presented. Sloan and RGB light curves associated with four distinct active satellites will be illustrated in order to investigate their spectral response.

2. State of the Art and Related Work

Until the 1990s, observations of the geostationary ring were mostly focused on active satellites as part of space surveillance programs aimed at maintaining an updated catalog of objects in orbit. Optical observations of space debris in geostationary orbits began in the late 1990s, followed by a growing international interest. In this context, due to the limited availability of data, observation methodologies primarily consisted of surveys of the geosynchronous region, such as the campaigns promoted by ESA, aimed at correlating detected objects and estimating their magnitudes. The first observation campaigns by ESA and IADC were conducted in Tenerife, Spain, and were documented by Schildknecht et al. in 2004. These observation campaigns discovered new and unexpected populations of space debris, known as High Area to Mass Ratio (HAMR) objects [16]. HAMR objects posed a significant challenge to numerous observers in determining their orbits and shapes [17]. In the subsequent years, various techniques were applied to observe geostationary orbits, such as the “parked telescope” method [18], to implement orbit determination methodologies. Research also moved forward by applying methods from other fields to GEO observations, such as light curves and photometry [19]. Furthermore, other researchers conducted studies to highlight the different behaviors of GEO objects in BVRI passbands, providing both photometric characterization [9] and physical characterization using the Loiano observatory in Italy [14]. BVRI magnitudes were also estimated with observations from the Cerro Tololo Inter-American Observatory in Chile [20]. Related areas of research encompass studies on materials, the evolution of debris clouds, spectroscopy, and more. The majority of research focuses on space debris in GEO, primarily because of the risks it poses to operational satellites, which represent significant investments. It is worth noting that the aforementioned works also addressed active satellites.

With regard to the focus of this article, Sloan filters were applied to GEO observations with the Chinese Quad Channel Telescope in order to investigate multiband observations of space debris in GEO [21]. Sloan spectral bands have only recently emerged as a tool for the characterization of man-made orbiting objects and were employed in 2020 at NASA’s John Africano Observatory [22]. No applications of commercial cameras to the observation of GEO objects in the Bayer RGB channels were found in the literature. For this reason, the comparative study presented below can be useful for understanding satellite behavior in geostationary orbit.

3. Description of the Optical Systems and Observation Strategies

The observations were performed using the network of telescopes and observation systems managed by the S5Lab [23]. Specifically, the SCUDO telescope and the SURGE system were selected.

The SCUDO telescope is located in a mountainous region near Rome, in Collepardo (Figure 1). The location was chosen for its advantageous characteristics for astronomical observations. One of its primary advantages is its distance from major sources of artificial light pollution, which ensures that the night sky in Collepardo remains relatively unaffected by the glare of urban illumination. These favorable conditions give the S5Lab research team a clearer view of celestial objects and orbiting spacecraft. Additionally, the observatory altitude of 555 m above sea level further enhances the quality of the observations by helping to reduce astronomical seeing, that is, the degradation of the image of an astronomical object caused by atmospheric turbulence. Hence, the SCUDO site ensures a more consistent and dependable view of the sky, enhancing the overall reliability of the optical observations.

The SCUDO observation equipment consists of two Newtonian telescopes: a smaller telescope with a 15 cm diameter and a larger telescope with a 25 cm diameter. For the research discussed in this article, the larger telescope was selected. The optical tube was paired with a scientific Complementary Metal-Oxide-Semiconductor (sCMOS) sensor. These sensors are known for their high sensitivity and rapid data-acquisition capabilities, making them well suited to capturing detailed astronomical images and data.

The system is also equipped with a filter wheel that holds a set of Sloan photometric filters. For this study, only the g’, r’, i’, and z’ filters were utilized. The transmission curves for each filter are illustrated in Figure 2. Further detailed characteristics of SCUDO are provided in Table 1.

The SURveillance GEostationary system (SURGE) was completely designed, developed, and manufactured by the S5Lab research team. The optical setup consists of fixed Digital Single-Lens Reflex (DSLR) cameras connected to the control system. This control system comprises a computer, two electronic boards (for control and power supply), a USB hub for managing data flow, and a power supply unit. Detailed characteristics of SURGE are provided in Table 1.

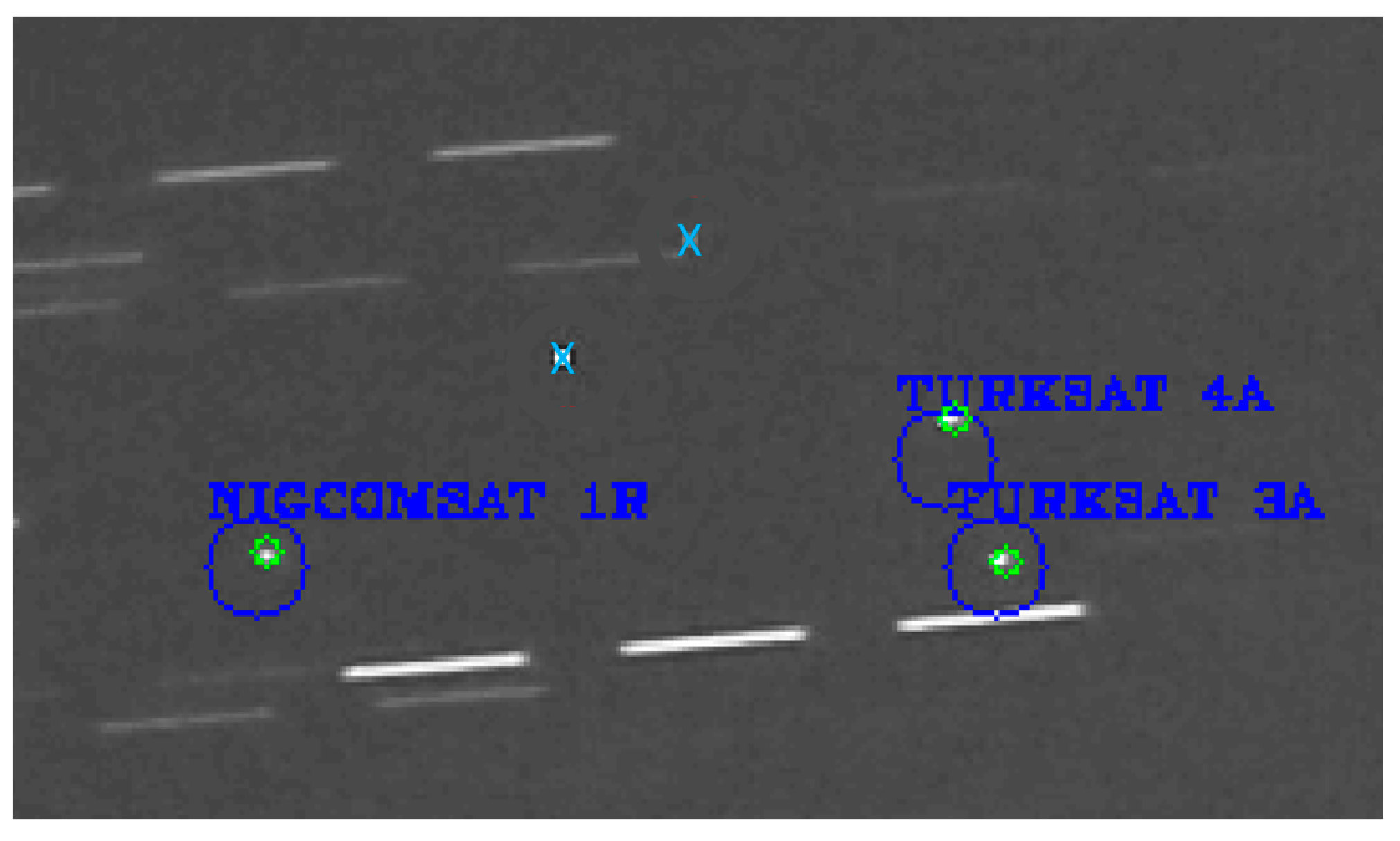

Each camera is mounted on a fixed tripod, so that the Field of View (FoV) remains the same while the sky appears to move across the background. In the resulting frames, stars appear as streaks and geostationary satellites as dots, since the latter have no relative velocity with respect to the Earth. This effect can be appreciated in Figure 3 (on the right).

Figure 3 (on the left) shows the FoV of SURGE projected onto the celestial sphere, along with the geostationary satellite catalog. This allows us to determine the number of satellites present within SURGE FoV.

To illustrate the spectral diversity of SURGE compared to SCUDO, Figure 4 reports, on the left, the quantum efficiency as a function of wavelength for two commercial cameras designed for professional non-astronomical use [25] and, on the right, the same quantity for two example sCMOS sensors [26].

Comparing the graphs in Figure 2 and Figure 4, the advantages of using Sloan filters over a Bayer mask on a CMOS sensor for spectral characterization can be discerned. Sloan filters cover the entire range from 300 nm to 1100 nm, dividing the photometric information into five channels within this range. In contrast, a Bayer mask on a CMOS sensor results in a narrower total bandwidth (400 nm to 700 nm). However, within the interval covered by both Sloan filters and RGB channels, the latter differentiate the retrievable information better because of the narrower bandwidth of their channels. While the Sloan set covers this interval with two channels, g’ and r’, the Bayer mask divides it into three channels: red, green, and blue. As a result, RGB filters hold the promise of a more detailed characterization of the spectral properties of observed objects within this wavelength range, despite some small overlaps between the channels (see Figure 4). However, the Sloan filter system remains essential for acquiring the widest range of wavelengths reflected by objects, overcoming the limitations imposed by RGB filters. Sloan filters are more selective as they do not overlap, and they yield a larger received signal due to their higher transmittance compared to RGB filters. Moreover, their transmittance is essentially constant across the considered bandwidth.

Observing with the two systems requires two different strategies, reflecting the different nature of the observatories. Since SURGE is fixed to the ground, it cannot track satellites and instead performs a continuous survey of the portion of the sky in the camera’s FoV. SCUDO, in contrast, tracks satellites using the available TLEs, which are propagated for the duration of the scheduled observation to provide the telescope with pointing instructions in right ascension and declination, allowing it to follow the trajectory of the object. The main concept for both observatories is to take images throughout the night in order to exploit the largest possible time span, covering different phase angles of the orbiting objects. The pause between two subsequent images is dictated by the time required for the necessary operations, which include the image download time, the camera’s stabilization time for SURGE, and the time needed by the filter wheel to change filters for SCUDO.

4. Data Processing

In this section, the critical tasks performed by the image processing algorithms are illustrated. Once the images have been acquired during the observation campaign, the first operation is image calibration, which removes the undesirable contributions to the acquired signal. The light collected by the sensor comes from both natural and artificial sources, and the recorded signal also contains contributions from thermal noise and voltage bias. Additionally, the non-uniform light sensitivity of the pixels in the array introduces defects in astronomical images, and vignetting is a common problem in astronomical imaging. Thermal noise and voltage bias are handled using dark frames and bias frames, respectively, whereas flaws caused by the sensor itself are corrected using a flat-field frame. There is a vast amount of literature on how to take these calibration frames, and observers often use their own techniques tailored to their objectives.

To correct the images that constitute the focus of this paper, a series of calibration frames was captured. For the bias, 60 individual frames were taken with the shutter closed and the shortest possible sensor exposure, and were then combined to produce a master bias frame. Additionally, 60 dark frames, captured with the shutter closed and matching the exposure of the main images, were averaged to generate a master dark frame. For flat-field frames, the sensor was pointed at a uniformly illuminated light source, and data were collected until the sensor reached 40% saturation; 60 frames were averaged to create a master flat-field frame. Bias and dark frames must be collected on each observation night because they depend heavily on the environment, especially temperature, at the time of observation. In contrast, flat-field frames may be taken only once, for example at the midpoint of the campaign, as they mostly depend on the cleanliness of the sensor and optics. It is important to stress that flat-field frames were acquired separately for each filter used during the observations from SCUDO.
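The calibration steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the software used in this study: the function names are hypothetical, master frames are built by a plain average, the master dark is assumed to still contain the bias level (so that subtracting it from a science frame of equal exposure removes both), and the flat field is bias-subtracted and normalized to unit mean.

```python
import numpy as np

def master_frame(frames):
    """Average a list of equally sized frames into a master frame."""
    return np.mean(np.stack(frames), axis=0)

def calibrate(raw, master_bias, master_dark, master_flat):
    """Dark-subtract and flat-field a raw science frame."""
    flat = master_flat - master_bias      # remove the bias level from the flat
    flat = flat / np.mean(flat)           # normalize to unit mean response
    return (raw - master_dark) / flat     # the dark is assumed to include the bias

# Synthetic check: uniform sky signal seen through a non-uniform pixel response.
response = np.array([[0.9, 1.1], [1.0, 1.0]])
bias = np.full((2, 2), 100.0)
dark = bias + 10.0                        # bias plus thermal signal
flat = bias + 2000.0 * response
raw = dark + 50.0 * response
calibrated = calibrate(raw, bias, dark, flat)
```

With one master flat per filter, the same `calibrate` call would simply be repeated with the flat matching the filter of each science frame.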

The second operation is the Astrometric Solution, also known as Plate Solving. This procedure consists of correlating the stars visible in the image with those cataloged in a reference star catalog, such as Gaia, Tycho-2, or others. The outcome of this process is the establishment of a mathematical model that precisely matches astronomical coordinates with each pixel within the image. Without this procedure, it would be impossible to obtain astrometric measurements of celestial objects in terms of their celestial coordinates (i.e., right ascension and declination). Additionally, collecting photometric data relies on Plate Solving since this procedure also provides information on the flux and magnitude of the stars present in the sensor FoV. This information is directly retrieved from the catalog used for the astrometric solution; thus, selecting an appropriate catalog is crucial. The ATLAS All-Sky Stellar Reference Catalog (“ATLAS-REFCAT2”) [

27] was chosen to analyze SCUDO frames, as it provides standard magnitude data for stars within the Sloan filters wavelength range. Instead, stars from the Gaia EDR3 catalog were employed for the astrometric-referencing process of SURGE images. Moreover, the RGB magnitudes of these stars were retrieved in [

28]. After the astrometric solution, the image data-processing procedure can be initiated to extract the necessary information (i.e., celestial coordinates and photometric data). In

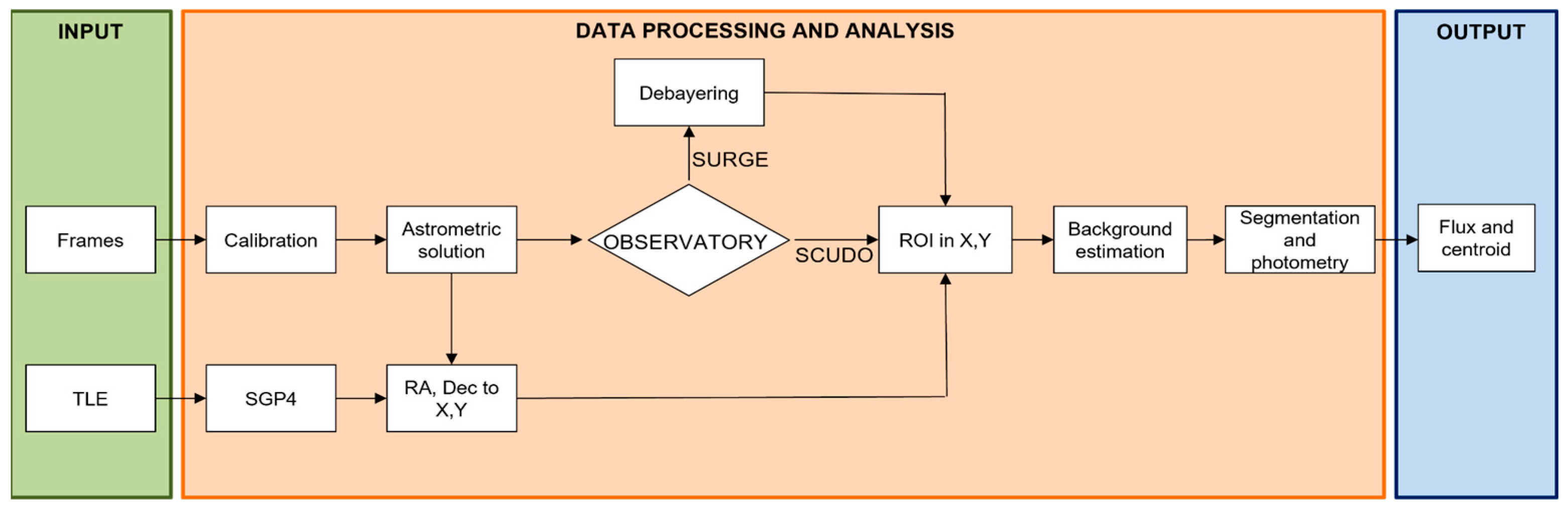

Figure 5, a flowchart of the image analysis software is shown.

The detection of objects within each frame is a crucial step in retrieving their celestial coordinates and photometric features. This task is particularly challenging in the first frame since many objects are present (including galaxies, stars, and space objects), and the algorithm needs to efficiently recognize only man-made space objects. To avoid incorrect detections, the algorithm takes advantage of an orbital propagator. In this research, the Simplified General Perturbations 4 (SGP4) [29] propagator is used to estimate the state of the object at the time of the image by propagating its initial state as provided by the Two-Line Elements (TLE) released by the North American Aerospace Defense Command (NORAD). Then, the algorithm converts the object’s celestial coordinates (RA, Dec) into pixel coordinates (x, y) within the image using the previously computed plate solution (Figure 6). The SGP4 propagator, coupled with the Development Ephemeris 421 (DE421), is also used to retrieve the phase angle.
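To give an idea of what the conversion from (RA, Dec) to (x, y) involves, the sketch below applies a plain gnomonic (tangent-plane) projection. It is a simplified stand-in for a full plate solution: it assumes a north-up, east-left image with a single uniform pixel scale, no rotation, and no optical distortion, and all names and parameters are hypothetical.

```python
import math

def radec_to_pixel(ra_deg, dec_deg, ra0_deg, dec0_deg, x0, y0, scale_arcsec):
    """Project (RA, Dec) onto the pixel grid around the tangent point (ra0, dec0) at (x0, y0)."""
    ra, dec = math.radians(ra_deg), math.radians(dec_deg)
    ra0, dec0 = math.radians(ra0_deg), math.radians(dec0_deg)
    cos_c = (math.sin(dec0) * math.sin(dec)
             + math.cos(dec0) * math.cos(dec) * math.cos(ra - ra0))
    # Standard (tangent-plane) coordinates in radians.
    xi = math.cos(dec) * math.sin(ra - ra0) / cos_c
    eta = (math.cos(dec0) * math.sin(dec)
           - math.sin(dec0) * math.cos(dec) * math.cos(ra - ra0)) / cos_c
    scale_rad = math.radians(scale_arcsec / 3600.0)
    x = x0 - xi / scale_rad   # assumed convention: RA increases toward smaller x
    y = y0 + eta / scale_rad  # assumed convention: Dec increases toward larger y
    return x, y

# The tangent point maps to the reference pixel.
center = radec_to_pixel(120.0, -5.0, 120.0, -5.0, 512.0, 512.0, 2.0)
# A point one degree north of the tangent point, with a 1 deg/pixel scale.
north = radec_to_pixel(120.0, -4.0, 120.0, -5.0, 0.0, 0.0, 3600.0)
```

A real astrometric solution additionally fits rotation, scale, and distortion terms from the matched catalog stars, which is why the ROI described next is needed to absorb residual offsets.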

A Region of Interest (ROI) is centered on the pixel coordinates, allowing the algorithm to focus its analysis on the specific object of interest, reducing the likelihood of incorrect detections and enhancing the accuracy of subsequent processing steps. Here, a background estimation is performed using the SExtractor algorithm [30]. To estimate the background map, SExtractor first scans the pixel data and divides the image into a grid of rectangular cells. For each cell, it estimates the local background by iteratively clipping the background histogram until it converges to within ±3σ of its median.
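The iterative clipping applied to each cell can be sketched as below. This is a simplified illustration of the idea, not SExtractor's actual implementation, which includes further refinements; the function name and parameters are hypothetical.

```python
import numpy as np

def clipped_background(cell, kappa=3.0, max_iter=20):
    """Iteratively reject pixels farther than kappa*sigma from the median of a cell."""
    values = np.asarray(cell, dtype=float).ravel()
    for _ in range(max_iter):
        median = np.median(values)
        sigma = values.std()
        keep = np.abs(values - median) <= kappa * sigma
        if keep.all():            # converged: nothing left to reject
            break
        values = values[keep]
    return float(np.median(values)), float(values.std())

# Flat background of 10 counts contaminated by one bright star pixel.
cell = np.full((10, 10), 10.0)
cell[4, 4] = 1000.0
background, noise = clipped_background(cell)
```

In the example, the bright pixel is rejected in the first iteration and the estimate converges to the underlying background level.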

After the grid is created, a median filter can be applied to remove any local overestimations of the background caused by bright stars. The final background map is then created by interpolating between the grid cells using a natural bicubic spline. The median filter helps to reduce any ringing artifacts that may be introduced by the bicubic spline interpolation around bright features. The estimated background is then subtracted from the foreground in order to highlight the objects. There exists the possibility that the algorithm finds more than one object within the ROI; therefore, a strategy to exclude the wrong ones must be implemented. In this case, the algorithm computes for each object some properties, including the ellipticity, circularity, and other geometrical properties; lastly, the distance from the propagation point is considered since this depends on the accuracy of the TLE. The centroid of the object is retrieved after the segmentation procedure: a suitable threshold value is chosen in order to binarize the image (black and white) and to return a connected component with its properties (centroid, ellipticity, and circularity). Lastly, the photometric characteristics of the selected object are extracted through an aperture photometry algorithm. Hence, the luminous flux emitted by the object is extracted. Finally, the conversion from the centroid coordinates (x, y) into celestial ones (RA, Dec) occurs through a geometrical transformation called World Coordinate Systems (WCS). An example of a geostationary satellite found in the ROI of a camera of the SURGE system is shown in

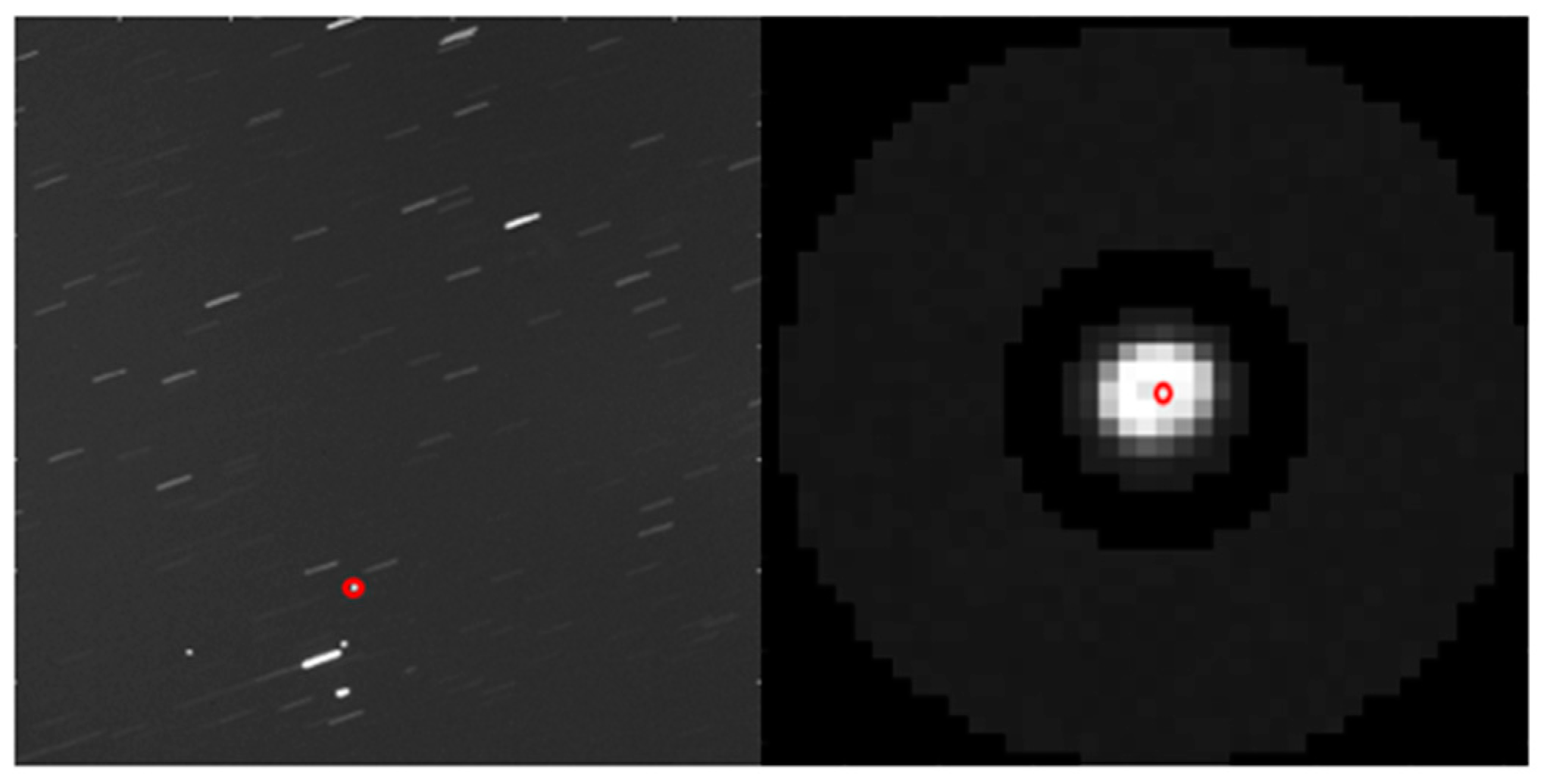

Figure 7.
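The aperture photometry step can be illustrated with a minimal sketch. It assumes a circular aperture centered on the centroid and a concentric annulus for the local sky level; the radii and the function name are hypothetical and do not reflect the settings used in this study.

```python
import numpy as np

def aperture_flux(image, xc, yc, r_ap, r_in, r_out):
    """Sum the pixels inside a circular aperture and subtract the local sky level."""
    img = np.asarray(image, dtype=float)
    yy, xx = np.indices(img.shape)
    dist = np.hypot(xx - xc, yy - yc)
    aperture = dist <= r_ap
    annulus = (dist >= r_in) & (dist <= r_out)
    sky_per_pixel = np.median(img[annulus])   # robust to faint contaminants
    return float(img[aperture].sum() - sky_per_pixel * aperture.sum())

# Synthetic ROI: uniform sky of 5 counts plus a point source of 100 counts.
roi = np.full((21, 21), 5.0)
roi[10, 10] += 100.0
flux = aperture_flux(roi, xc=10, yc=10, r_ap=3, r_in=5, r_out=8)
```

The median of the annulus is used here so that a faint star falling in the sky region has limited influence on the result.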

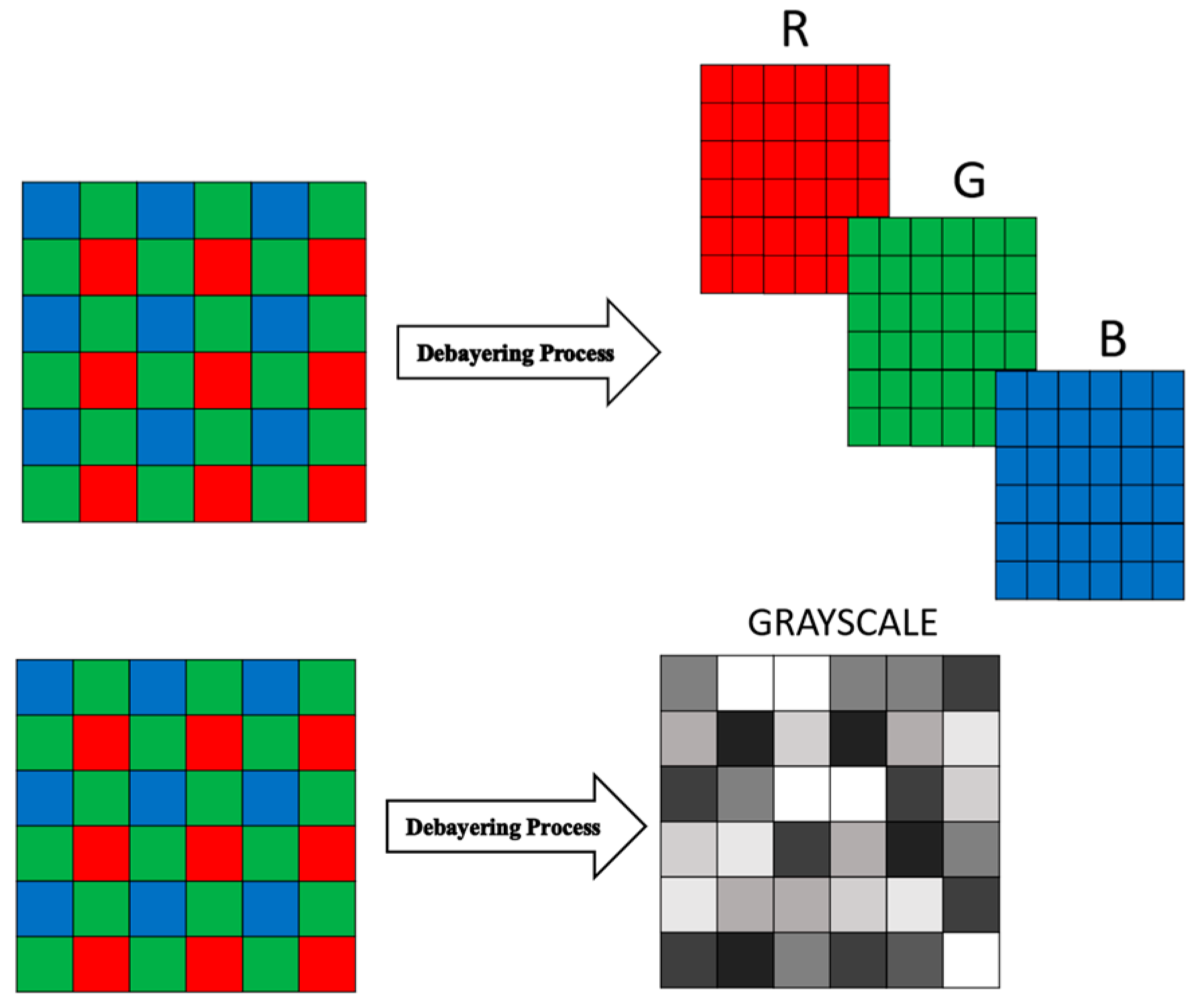

For the images taken from SURGE, a preliminary conversion procedure was performed. Most color digital cameras have a Bayer filter above the sensor, which makes it possible to reconstruct a color image. The Bayer filter is a mosaic of red, green, and blue filters placed over the light-sensitive cells (photosites) of the camera sensor. The filter is arranged in a 2 × 2 pattern, with two green filters (G), one blue filter (B), and one red filter (R) that can be ordered in different ways: BGGR, RGGB, GBRG, and GRBG. When light enters the camera and passes through the lens, it reaches the Bayer filter array on the sensor. Each filter element absorbs photons of certain colors while allowing photons of one specific color to reach the pixel underneath it, where they are absorbed by the photosensitive material. This selective transmission is what enables the camera to differentiate between colors. The arrangement of green filters is designed to match the way the human eye sees color: the eye is more sensitive to green light than to red or blue light, so the Bayer filter oversamples the green channel to produce more accurate color reproduction. The debayering, or demosaicing, process is an algorithm that reconstructs a full color image starting from raw data. In this way, it is possible to retrieve an RGB image, split it into its color channels, and convert each channel into a grayscale image, as shown in Figure 8.
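The separation of a raw Bayer mosaic into its color channels can be sketched as follows. Note that this is a simpler stand-in for full demosaicing: instead of interpolating the missing colors at every pixel, it extracts one half-resolution plane per color and averages the two green samples of each 2 × 2 cell. The function name is hypothetical, and even image dimensions are assumed.

```python
import numpy as np

def split_bayer(raw, pattern="RGGB"):
    """Return half-resolution R, G, B planes from a raw Bayer mosaic."""
    raw = np.asarray(raw, dtype=float)
    planes = {"R": [], "G": [], "B": []}
    for idx, color in enumerate(pattern):
        row, col = divmod(idx, 2)              # position inside the 2 x 2 cell
        planes[color].append(raw[row::2, col::2])
    # R and B have one sample per cell; the two G samples are averaged.
    return {color: np.mean(samples, axis=0) for color, samples in planes.items()}

# Synthetic 4 x 4 mosaic made of identical RGGB cells [[1, 2], [3, 4]].
mosaic = np.tile(np.array([[1.0, 2.0], [3.0, 4.0]]), (2, 2))
channels = split_bayer(mosaic, "RGGB")
```

The same call with `"BGGR"`, `"GBRG"`, or `"GRBG"` handles the other orderings listed above.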

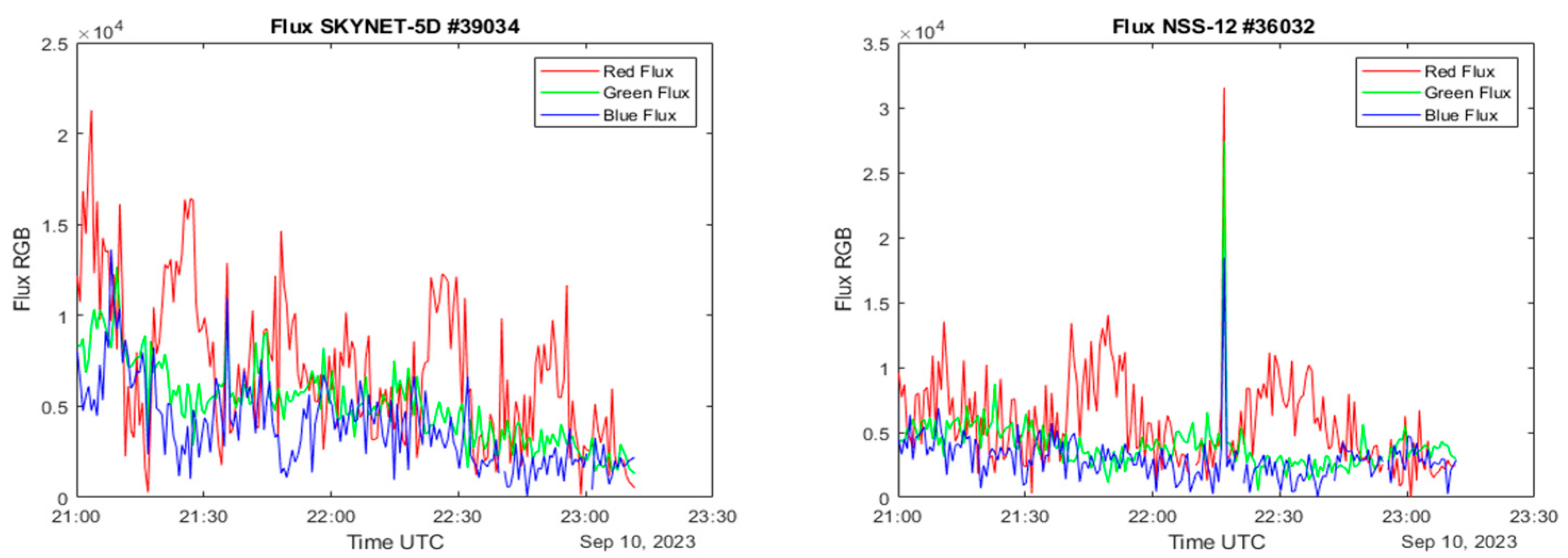

This analysis process is repeated for each frame of the observation, resulting in a series of flux curves in different RGB channels as a function of time, as illustrated in Figure 9.

If the images are collected from the SCUDO observatory, the image-analysis procedure is the same as explained above. The native images are already in greyscale, and therefore, no conversion from a color image is needed. An example of a geostationary satellite in the ROI of the SCUDO observatory is shown in Figure 10.

In the case of the SCUDO observatory, the SDSS/Sloan filter set, specifically g’, r’, i’, z’, was employed. With only one observatory available, the observation strategy entailed capturing frames sequentially with each filter. Since the images taken with different filters were not simultaneous, individual flux curves were generated, with each curve lacking data for the times when other filters were in use.

Figure 11 shows two flux curves, one as a function of time and the other as a function of the phase angle, acquired with the SCUDO observatory for the object with NORAD ID 36582 during the night of 10 September 2023.

The image processing software offers the user the capability to manually correct any errors in accurately identifying the object. This feature proves especially useful in cases where a star passes over the object or when the object is not correctly recognized. Once the flux curves are extracted, they are transformed into light curves by using the magnitudes of the stars observed in the FoV.
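The conversion from flux to magnitude using reference stars can be sketched as follows. This is a minimal illustration that assumes a single photometric zero point per frame, estimated as the median over the catalog stars measured in the same frame and band; the function names and the numerical values are hypothetical.

```python
import math
import statistics

def zero_point(star_fluxes, star_mags):
    """Median zero point from stars with known catalog magnitudes."""
    return statistics.median(
        mag + 2.5 * math.log10(flux) for flux, mag in zip(star_fluxes, star_mags)
    )

def flux_to_mag(flux, zp):
    """Convert an instrumental flux into a calibrated magnitude."""
    return zp - 2.5 * math.log10(flux)

# Two reference stars that agree on the same zero point.
zp = zero_point([1000.0, 100.0], [10.0, 12.5])
satellite_mag = flux_to_mag(10.0, zp)
```

Applying this frame by frame, with the catalog magnitudes of the matching band, turns each flux curve into a light curve.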

5. Results and Discussion

The results discussed in the following sections aim to identify similarities and differences in the observations produced by the two imaging systems previously described. The observation session took place on the night of 10 September 2023. Measurements were taken from 21:00:00 until 03:00:00 UTC.

Table 2 illustrates observation parameters for both systems. The sample time and, consequently, the number of acquisitions depend on the exposure time and other constraints imposed by the hardware, in particular, for SCUDO, the time needed to cycle filters and adjust the focus of the optics.

The observation night targeted the Eutelsat telecommunication satellites, several of which are located in the orbital slot at 13° E longitude. By tracking the TLE of Eutelsat Hotbird 13E, cataloged as 28946 by NORAD, three other satellites belonging to the Eutelsat constellation were found in the FoV. They are listed in Table 3.

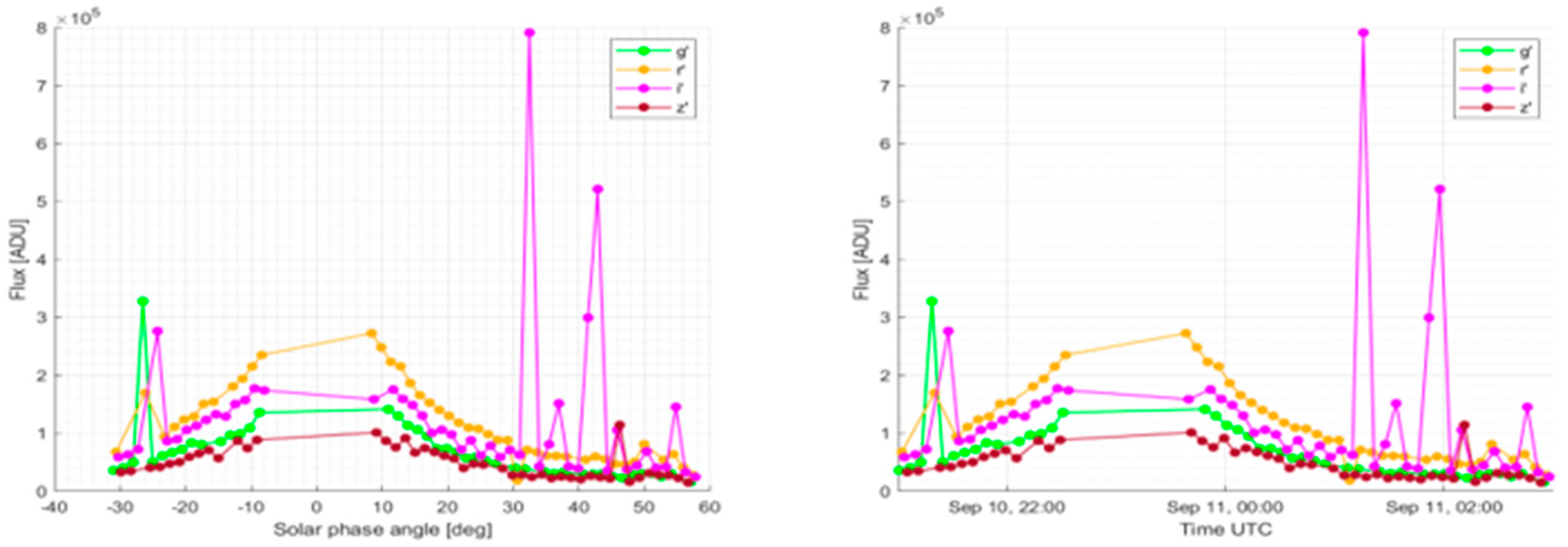

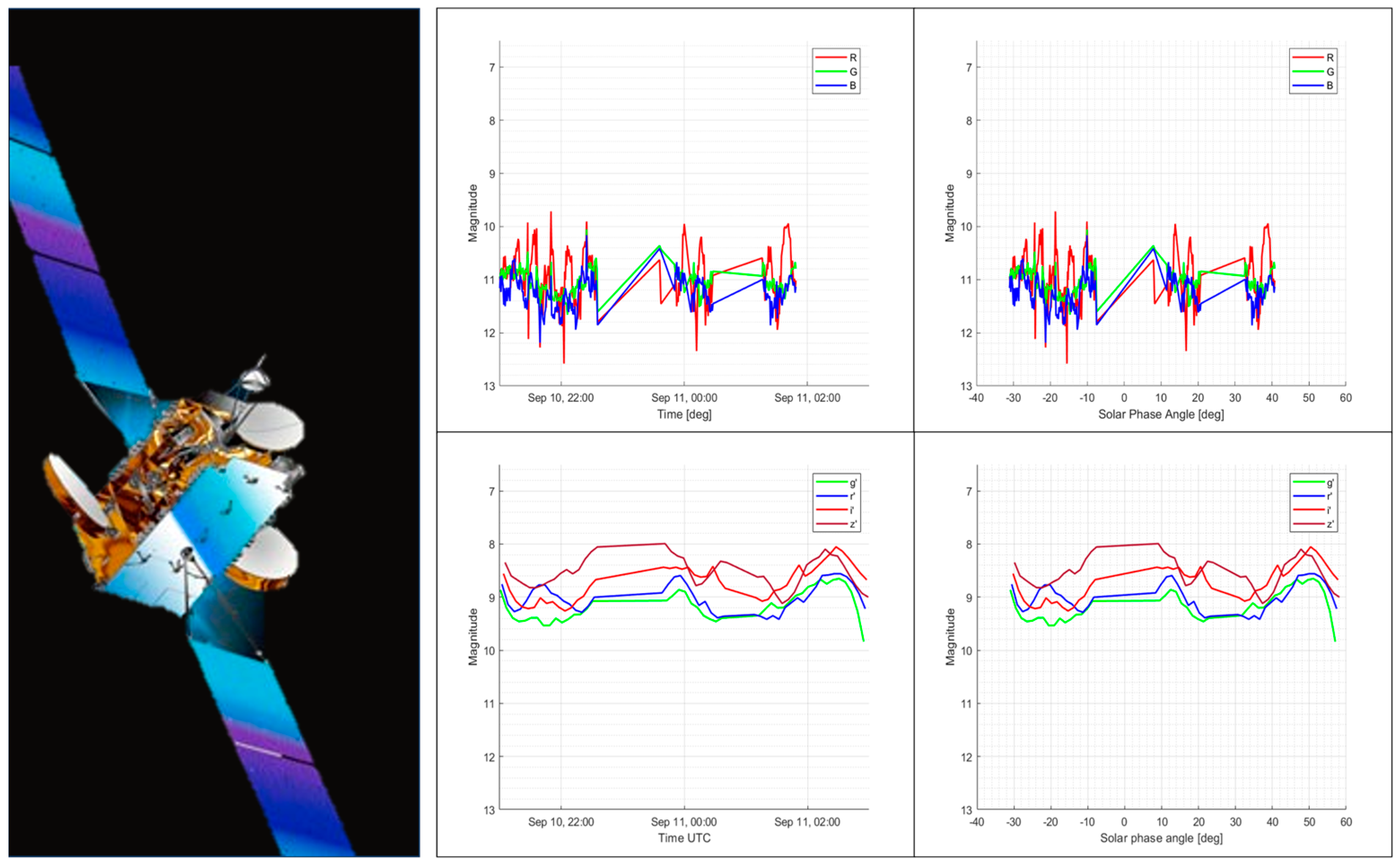

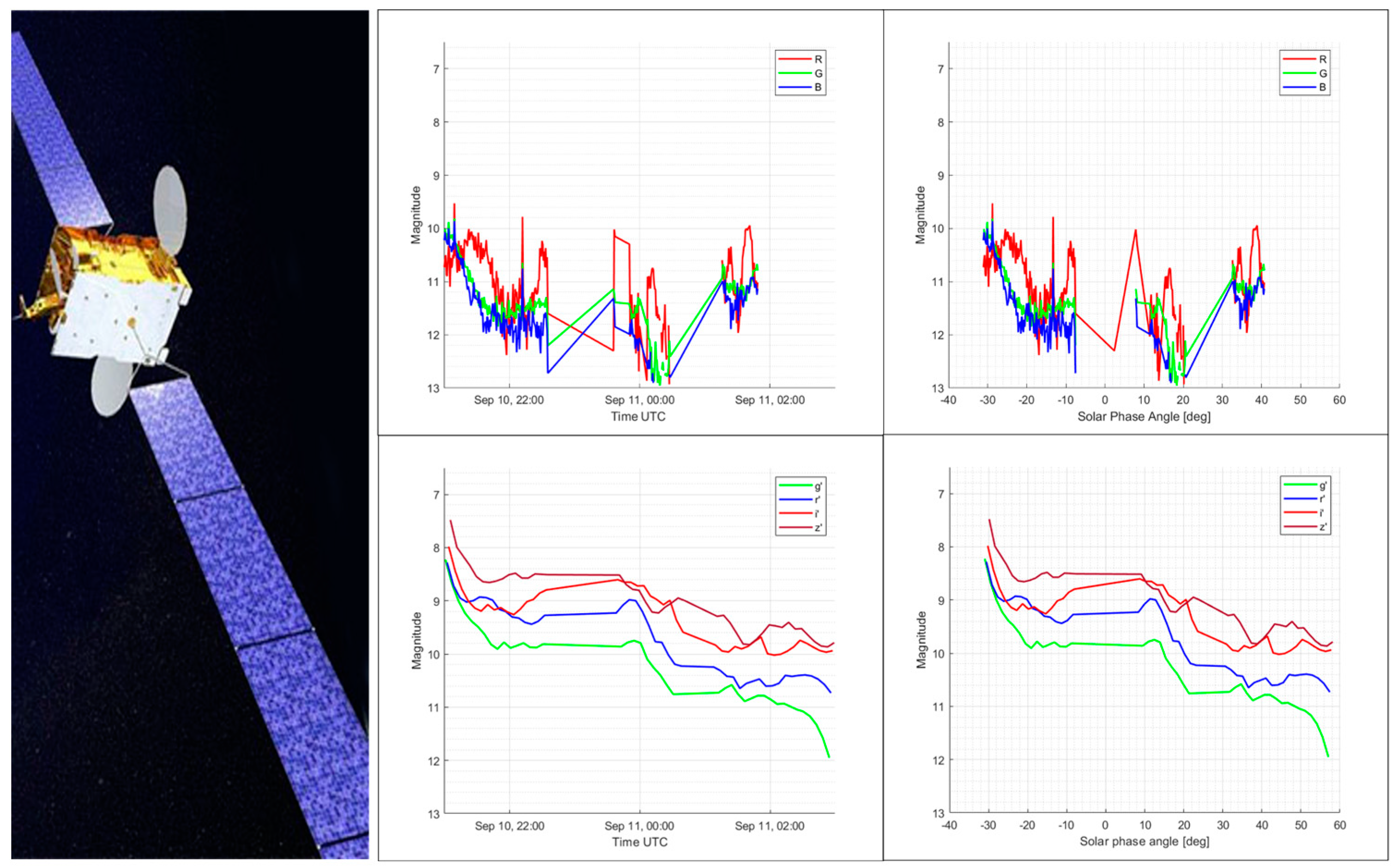

In the figures that follow, the light curves are reported as a function of the observation time and solar phase angle. The upper panels report the observations from SCUDO, while the lower panels are from SURGE. Observations are differentiated by the observed wavelength band. For each object, an artist’s impression of the satellite is also shown.

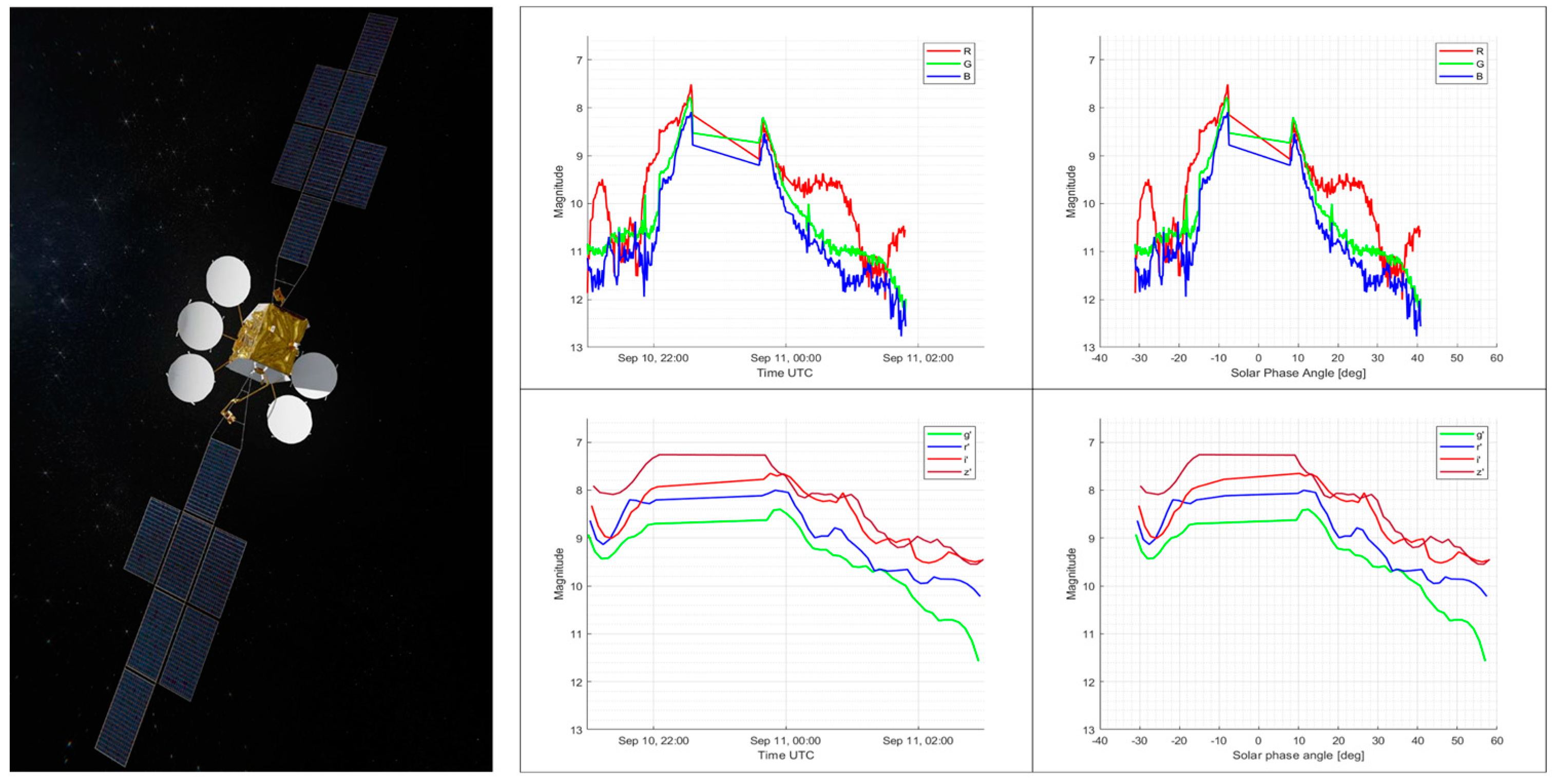

The satellite with NORAD ID 28946 is built on the Spacebus 3000 bus, designed by Thales Alenia Space. Referring to Figure 12, the light curves show three intervals separated by two interruptions. The first interruption results from the eclipse of the satellite, while the second is due to the satellite overlapping with another object in the FoV (on the same line of sight). In the light curves, the z’ (near infrared) and R (red) bands significantly predominate, illustrating that the satellite is brighter when observed in regions close to the infrared part of the spectrum. In the second interval, when the satellite is visible again, SCUDO measurements reveal a light curve that is flat with respect to the phase angle, with no global increasing or decreasing trend. Finally, the third interval shows a local maximum in the reflectance at a phase angle of about 50 deg. Due to the lack of SURGE data at the end of the observation period, the data generated by the two systems cannot be compared in this particular case.

Object 29270 is built on the Eurostar-3000 bus, a typical satellite bus belonging to the Eurostar family designed by Airbus Defence and Space. The two systems exhibit very similar patterns in all the considered spectral bands except for the R channel in the SURGE system, where spikes are particularly evident, as shown in Figure 13. In this case, too, two interruptions are found: the first is due to the eclipse of the satellite, and the second is due to the overlap with another object. Contrary to expectations, the satellite appears to reflect less as it approaches a 0-degree phase angle. This is observed by both systems, and it may be a consequence of the attitude of the object. This demonstrates that the ideal behavior expected of a Lambertian sphere cannot always be found in an orbiting object.

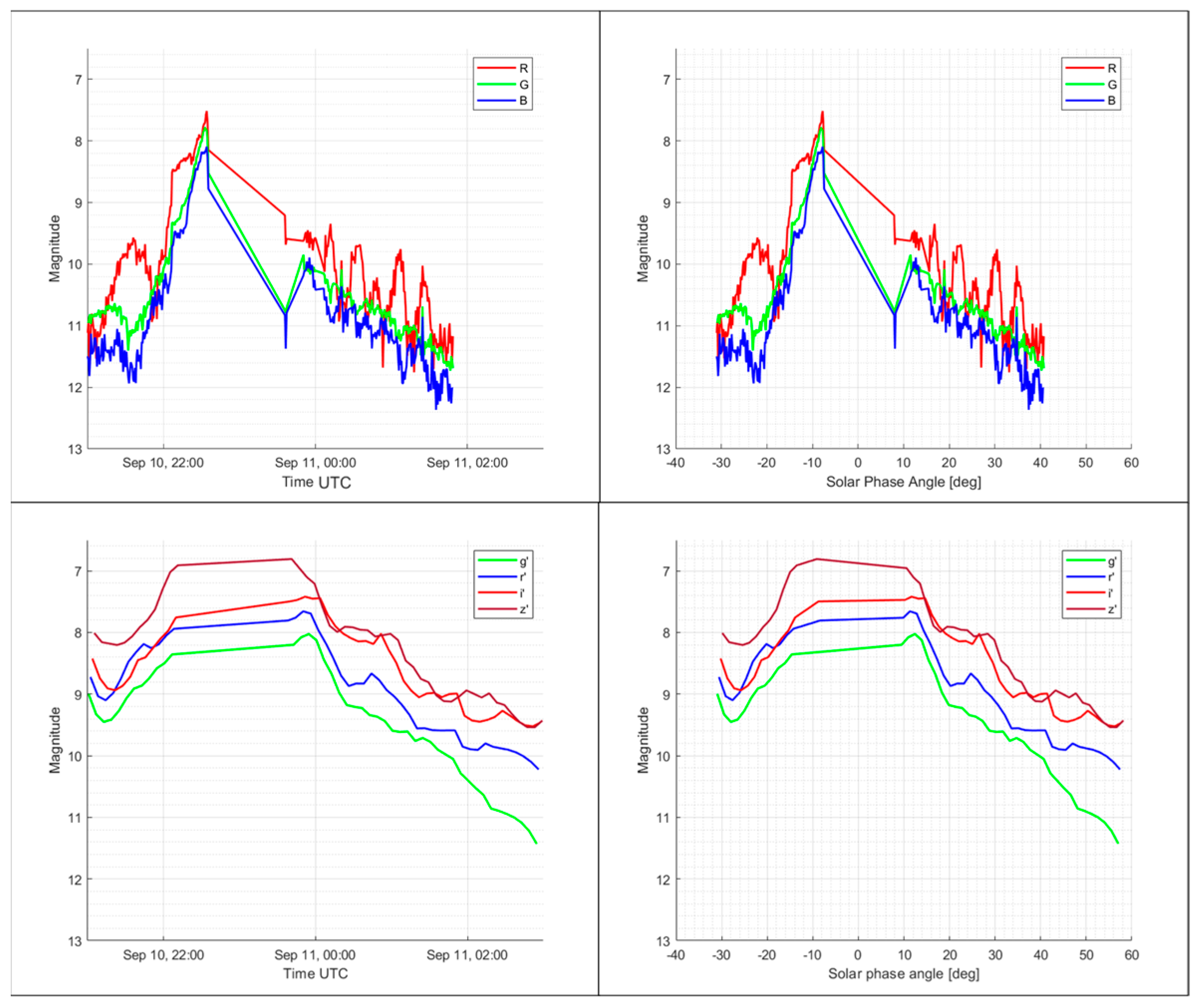

The observations for the objects 54048 and 54225 are shown in Figure 14 and Figure 15. Both are built on the Eurostar-Neo bus, produced by Airbus Defence and Space. Given the same satellite bus and the same phase angle, relatively similar light curves can reasonably be expected. In this case, the dominant bands are z’ for SCUDO and R for SURGE. Additionally, the ideal influence of the solar phase angle, related to the observation geometry, is observed in these examples: the brightness decreases as the solar phase angle increases and increases as the satellite approaches the 0° phase angle. These considerations apply to both objects and to observations made from both systems. The light curves of the two objects observed with SCUDO are nearly identical in each of the observed spectral bands, both in the visible and in the near-infrared. This suggests that a typical signature for spacecraft of this type can be revealed and repeatedly observed over time, yielding useful outcomes for satellite identification via optical observations. On the other hand, the light curves observed from SURGE are similar but not identical, especially in the R channel. This may be because parts of the RGB transmittance curves overlap, so that some wavelengths contribute to multiple channels, as illustrated in Figure 4. This does not happen with Sloan filters, which enable a more selective spectral characterization and lead to the repetition of the same spectral signature when two structurally similar satellites are observed at the same phase angle.

Some general considerations apply to every observed object. The z’ band, which corresponds to the near-infrared, is the brightest in all SCUDO observations, as each of the curves shown clearly demonstrates. The g’ band, on the other hand, is the faintest. Specifically, the z’ magnitude is approximately one magnitude lower than the g’ magnitude, which corresponds to a flux about 2.5 times higher in the near-infrared than in the visible (one magnitude is a flux ratio of 10^0.4 ≈ 2.51). This disparity may be related to the materials used by satellite manufacturers, as suggested by objects 54048 and 54225, which use the same satellite bus and exhibit similar brightness in each observed band. This effect is not observed in the RGB channels, which have weaker wavelength selection compared to Sloan filters. The overall brighter level of the light curves observed from SCUDO with respect to those acquired by SURGE is probably due to the higher quantum efficiency of the sensor used. The light curves, illustrated as a function of phase angle, present different behaviors with respect to the observation geometry.

It is worth noting that the R channel in the SURGE images behaves differently from the G and B channels. This is perceptible in all the figures above. A good starting point for future improvements of this study would be to consider satellites with different buses, to see whether the behavior in the R channel depends on the materials and the configuration of the satellite.

6. Conclusions

A comparative analysis was conducted between two observation systems characterized by significant hardware differences in terms of performance and usage. Specifically, SCUDO consists of a telescope with a monochromatic sCMOS sensor and a set of photometric filters (SDSS/Sloan), while SURGE is a DSLR-based system with a CMOS sensor with a Bayer mask. The photographic optics and sensor used in SURGE allow for a wide FoV, enabling the simultaneous observation of multiple geostationary satellites. In contrast, the optical tube and sensor of SCUDO produce a narrower field of view but with greater angular precision, generally allowing the observation of a few geostationary satellites at a time.

The CMOS sensor with a Bayer mask used in SURGE offers a narrower spectral bandwidth than a monochromatic sensor but provides information on three spectral bands simultaneously. The case studies show that the light curves in the g’, r’, i’, and z’ filters are more distinguishable from each other than the curves in R, G, and B. The RGB bands overlap, with each channel containing information from the others, although in a reduced proportion. This contrasts with the Sloan filter set used in SCUDO, where each filter is spectrally separated from the others. Hence, the advantage of using Sloan bands is a more precise spectral characterization of the light reflected by an orbiting object. In addition, compared with the Bayer mask array, Sloan filters have constant transmittance and do not overlap with each other. For this reason, a system equipped with Sloan filters is preferable to one based on the Bayer mask for spectral characterization. However, if characterization is specifically required in the R, G, and B bands, the test cases show that the light curves are consistent with the results obtained in the Sloan bands. In fact, in the light curves obtained with both systems, the brightest band was the one closest to the infrared region. Moreover, an advantage of systems based on the Bayer mask is the simultaneous acquisition in all channels without the need to cycle filters, which decreases the sampling time.

Both systems recorded similar light curves for two different satellites, objects 54048 and 54225, which mount the same bus structure. Since they were observed at the same phase angle, the observations also suggest that they have similar attitude motions. Specifically, the light curves observed with Sloan filters are nearly identical, thus enabling the identification of a spectral signature associated with this category of objects. On the other hand, an identical spectral signature was not recognized when using the RGB channels, with the R channel showing several discrepancies. As a result, Sloan filters are considerably more spectrally selective than RGB channels, allowing for a better photometric characterization of observed objects.
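One possible way to quantify how close two light curves are in a given band is the root-mean-square magnitude difference over their common phase-angle range. The Python sketch below is only an illustration under stated assumptions: the metric, the function names, and the sample values are hypothetical (the numbers are synthetic, not measurements), and this is not the comparison procedure used in this work.

```python
import math


def interpolate(x, xs, ys):
    """Linear interpolation of (xs, ys) at x; xs must be strictly increasing."""
    for i in range(len(xs) - 1):
        x0, x1 = xs[i], xs[i + 1]
        if x0 <= x <= x1:
            return ys[i] + (ys[i + 1] - ys[i]) * (x - x0) / (x1 - x0)
    raise ValueError("x lies outside the sampled phase-angle range")


def rms_mag_difference(phase_a, mag_a, phase_b, mag_b, n_points=50):
    """RMS magnitude difference of two light curves, evaluated on a
    common grid spanning the overlapping phase-angle interval."""
    lo = max(phase_a[0], phase_b[0])
    hi = min(phase_a[-1], phase_b[-1])
    if lo >= hi:
        raise ValueError("the two curves share no phase-angle interval")
    step = (hi - lo) / (n_points - 1)
    # min() guards against rounding slightly past the upper bound
    grid = [min(hi, lo + i * step) for i in range(n_points)]
    squared = [
        (interpolate(p, phase_a, mag_a) - interpolate(p, phase_b, mag_b)) ** 2
        for p in grid
    ]
    return math.sqrt(sum(squared) / len(squared))


# Synthetic example: the second curve is the first one shifted by 0.1 mag
phase = [5.0, 15.0, 25.0, 35.0, 45.0]        # phase angle, degrees
curve_1 = [10.2, 10.4, 10.7, 11.0, 11.4]     # magnitudes (made up)
curve_2 = [m + 0.1 for m in curve_1]
print(round(rms_mag_difference(phase, curve_1, phase, curve_2), 3))  # 0.1
```

A value close to zero in every band would correspond to the "nearly identical" case, while a larger value in a single channel would flag a discrepancy confined to that channel.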

The observations show that it cannot be assumed as a general rule that lowering the phase angle increases reflectivity; the attitude motion must also be considered, as illustrated by the curves of 28946 and 29270, which present local peaks for both satellites at phase angle values different from zero. On the other hand, objects 54048 and 54225 present the typical behavior expected of a Lambertian sphere, with reflectivity increasing as the phase angle approaches zero. It should be noted that these satellites have a complex structure due to antennas and solar panels; thus, the observed behavior is due to an attitude motion that is compatible with that of a Lambertian sphere.
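For reference, the brightness trend expected of an ideal diffuse sphere can be sketched with the classical Lambertian-sphere phase function, which is proportional to sin φ + (π − φ) cos φ, where φ is the phase angle. The short Python example below normalizes this function to its zero-phase value and converts it to a magnitude change; the function names are illustrative, and the sketch is not the model or code used to produce the results discussed here.

```python
import math


def lambert_sphere_phase(phi_rad):
    """Lambertian (diffuse) sphere phase function, normalized so that
    its value is 1 at zero phase angle."""
    return (math.sin(phi_rad) + (math.pi - phi_rad) * math.cos(phi_rad)) / math.pi


def dimming_vs_zero_phase(phi_deg):
    """Magnitude increase (dimming) relative to the zero-phase brightness."""
    f = lambert_sphere_phase(math.radians(phi_deg))
    return 2.5 * math.log10(1.0 / f)


# Brightness falls monotonically as the phase angle grows from 0 to 180 deg
for phi in (0, 30, 60, 90):
    print(f"phase {phi:3d} deg -> dimming {dimming_vs_zero_phase(phi):.2f} mag")
```

Because this function decreases monotonically with phase angle, local peaks away from zero phase, such as those of 28946 and 29270, cannot be reproduced by a diffuse sphere alone.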

It should be highlighted that the described procedure is only applicable to actively controlled satellites, since the repeatability of light curves is guaranteed by attitude stabilization. For uncontrolled satellites, it can be applied only after an in-depth analysis of light curve variations over a longer time span, in order to take long-term attitude variations into account.