Improving Audio Steganography Transmission over Various Wireless Channels

Abstract

1. Introduction

1.1. Problem Statement

- In previous works, audio steganography techniques concealed multimedia data (text, images, or audio) in a cover audio file, so the capacity of the embedded data was low.

- In previous studies, researchers designed different audio steganography techniques without testing them in communication systems and without studying the effect of wireless channel noise.

- The quality of the data reconstructed from transmitted audio steganography suffered from the noise introduced by wireless channels.

- Although OFDM is considered a wideband modulation method for coping with multipath channel problems, the reconstructed multimedia data are still affected by these channel impairments.

- In previous studies, OFDM systems did not address the effect of different wireless channels (AWGN, Fading, and SUI) on audio steganography techniques.

1.2. Main Contributions

- Constructing two models to hide various secret data in an audio cover file. The first model is based on the LSB technique, and the second is based on DWT and the LSB techniques to increase the capacity of the embedded data.

- Analyzing the behavior of the two proposed models using BPSK modulation over an AWGN wireless channel.

- Applying a forward error control scheme, known as a convolutional (1, 2, 7) encoding scheme, when transmitting the audio steganography produced by the two models, in order to cope with the noise coming from wireless channels.

- Merging OFDM and differential phase shift keying (DPSK) modulations over an AWGN wireless channel in order to enhance the OFDM system.

- Studying the behavior of the two proposed secure models through enhanced OFDM over various wireless channels, such as AWGN, multipath fading, and SUI-6.

2. Background and Related Work

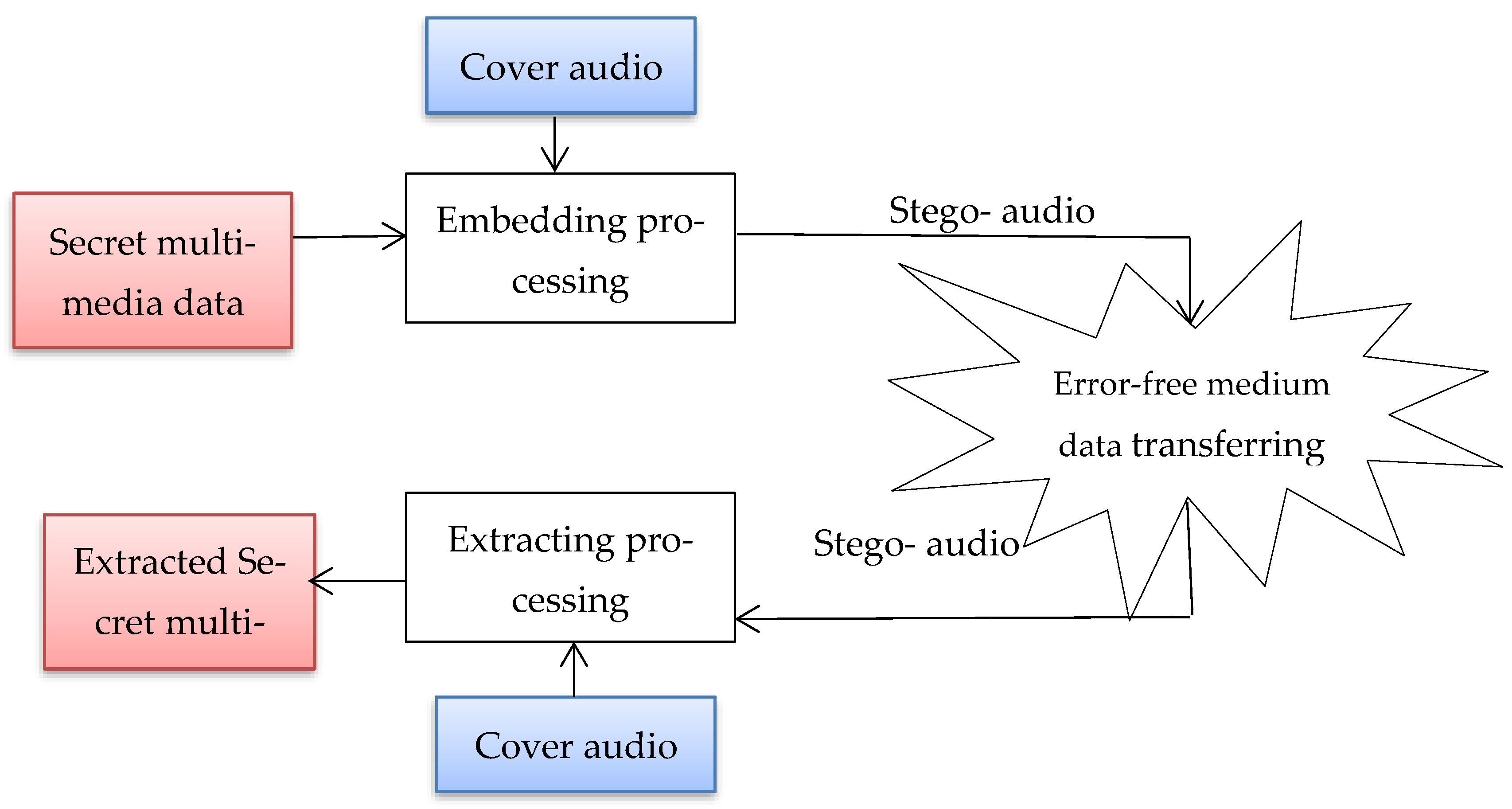

2.1. Description of Audio Steganography Technique

2.2. Related Works

3. The Proposed Models of Audio Steganography

3.1. The First Proposed Algorithm

- To prepare the secret images with secret text, the DWT is applied to the images, producing four bands (LL, LH, HL, HH). The text is then converted into binary data, and its bits are embedded in the LL band of the image using the LSB technique.

- To embed the secret images with secret text in an audio cover file, the audio cover is first converted into 16-bit samples. The 8 LSBs of each sample can be used for embedding data; the characteristics of the audio do not change much, and the sound remains clear to the listener. The 8 LSBs of cover samples 1 to 256 × 256 are used to embed the secret images with text.

- Before the secret audio is embedded, the condition for using the audio compression stage is checked. If the secret audio is longer than the cover audio, DWT decomposition is applied to the secret audio in the audio compression stage. The number of DWT decomposition levels is determined by the length of the cover audio file, so that the length of the cover audio is equal to or greater than (the length of the secret audio + 256 × 256). If the secret audio is shorter than the cover audio, the audio compression stage is not performed on the secret audio.

- The 8 LSBs of the audio cover samples, from (256 × 256 + 1) to the end of the cover audio, are used to embed the 8 MSBs (most significant bits) of the secret audio. Finally, the audio steganography file is produced at the transmitter.

- At the receiver, the inverse of the embedding process is applied. To extract the secret audio, the audio steganography is converted into binary format, and the 8 LSBs extracted from the corresponding location act as the 8 MSBs of the reconstructed secret audio.

- To extract the secret images with text, the 8 LSBs of the audio steganography are extracted from the corresponding location and used to reconstruct the secret images with text.

- The text is extracted from the secret image by applying the DWT to the secret image and reading the LSBs of the LL band.
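The embedding and extraction steps of the first model can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the cover and secret audio are NumPy `int16` arrays and that the secret image with text has already been flattened to 256 × 256 bytes. The names `embed_model1`, `extract_model1`, and `IMG_SLOTS` are hypothetical.

```python
import numpy as np

IMG_SLOTS = 256 * 256  # cover samples reserved for the secret image with text


def embed_model1(cover, image_bytes, secret_audio):
    """Write image bytes, then the 8 MSBs of the secret audio, into the 8 LSBs of the cover."""
    cover_u = np.asarray(cover, dtype=np.int16).copy().view(np.uint16)
    image_bytes = np.asarray(image_bytes, dtype=np.uint8)
    secret_u = np.asarray(secret_audio, dtype=np.int16).copy().view(np.uint16)
    if image_bytes.size != IMG_SLOTS:
        raise ValueError("image payload must contain 256 x 256 bytes")
    if cover_u.size < IMG_SLOTS + secret_u.size:
        raise ValueError("cover too short; compress the secret audio first")
    # Keep only the 8 most significant bits of every secret sample.
    audio_payload = secret_u >> 8
    payload = np.concatenate([image_bytes.astype(np.uint16), audio_payload])
    n = payload.size
    # Clear the low byte of each used cover sample and write the payload byte there.
    cover_u[:n] = (cover_u[:n] & 0xFF00) | payload
    return cover_u.view(np.int16)


def extract_model1(stego, n_audio):
    """Recover the image bytes and a coarse (8-MSB) version of the secret audio."""
    low = np.asarray(stego, dtype=np.int16).copy().view(np.uint16) & 0x00FF
    image_bytes = low[:IMG_SLOTS].astype(np.uint8)
    # The extracted low bytes become the high bytes of the reconstructed audio.
    audio = (low[IMG_SLOTS:IMG_SLOTS + n_audio] << 8).view(np.int16)
    return image_bytes, audio
```

Because only the low byte of each cover sample changes, each stego sample differs from the cover by at most 255, while the recovered secret audio loses its 8 least significant bits.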

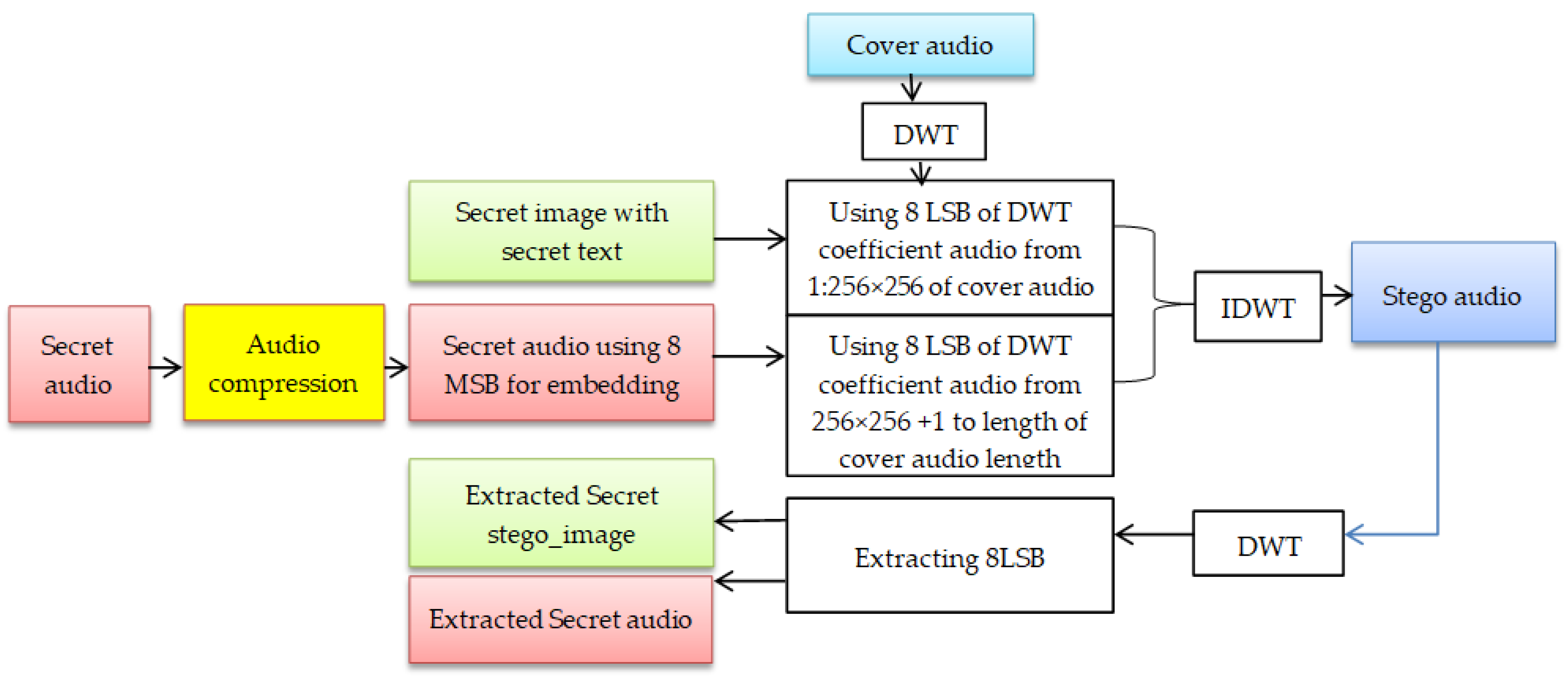

3.2. The Second Proposed Algorithm

- After applying DWT on the audio cover file, the two low and high-frequency bands (LL, HH) appear. The coefficients of the LL band are used for the embedding process.

- To embed a secret image with text, the 8 LSBs of LL band coefficients 1 to 256 × 256 of the transformed cover are used.

- Before the secret audio is embedded, the condition for using the audio compression stage is checked. If the secret audio is longer than the band obtained from the DWT decomposition of the cover audio file, the secret audio is compressed, and the number of DWT decomposition levels is determined by the length of that band. The length of the band must therefore be equal to or greater than (the length of the secret audio + 256 × 256). If the secret audio is shorter than the cover audio, the audio compression stage is not performed on the secret audio.

- The 8 LSBs of the LL band coefficients, from (256 × 256 + 1) to the end of the transformed cover LL band, are used to embed the 8 MSBs of the secret audio.

- Finally, the audio steganography is constructed after applying inverse DWT (IDWT).
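The length condition behind the audio compression stage can be illustrated with a short helper. This is a sketch under an assumption the text does not state explicitly: each DWT level keeps only the approximation band, so it roughly halves the secret audio length. The function name `compression_levels` is hypothetical; `host_len` is the cover audio length in the first model or the LL band length in the second.

```python
IMG_SLOTS = 256 * 256  # host positions reserved for the secret image with text


def compression_levels(host_len, secret_len):
    """Return (levels, compressed_len) so that compressed_len + 256*256 <= host_len."""
    available = host_len - IMG_SLOTS
    if available <= 0:
        raise ValueError("host is too short to hold the secret image")
    levels = 0
    length = secret_len
    while length > available:
        # Assumed: one DWT level keeps the approximation band, about half the samples.
        length = (length + 1) // 2
        levels += 1
    return levels, length
```

For example, a host of 200,000 positions leaves 134,464 for audio, so a secret audio of 500,000 samples would need two levels under this assumption.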

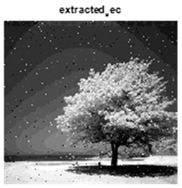

- At the receiver side, the extraction process is applied to the audio steganography. The DWT is applied to the audio steganography, and the LL and HH bands appear.

- To extract the secret images with secret text, the 8 LSBs of the LL band coefficients are read from the corresponding location in the LL band and used to reconstruct the secret image.

- To reconstruct the secret audio, the 8 LSBs of the LL band coefficients at the corresponding location act as the 8 MSBs of the secret audio.

- The text-extracting process from the secret image is performed by applying DWT on the secret image and extracting the LSB from the LL band.
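The second model writes LSBs into DWT coefficients and then applies the IDWT, which only preserves the bits exactly if the transform is integer-reversible. The text does not say which wavelet is used or how coefficients are quantized, so the sketch below swaps in an integer Haar transform (the S-transform) purely as an illustration; `int_haar_forward`, `int_haar_inverse`, `embed_ll`, and `extract_ll` are hypothetical names.

```python
import numpy as np


def int_haar_forward(x):
    """One-level integer Haar (S-transform): returns (approximation, detail)."""
    x = np.asarray(x, dtype=np.int64)
    if x.size % 2:
        raise ValueError("signal length must be even")
    even, odd = x[0::2], x[1::2]
    detail = even - odd
    approx = odd + (detail >> 1)  # floor((even + odd) / 2)
    return approx, detail


def int_haar_inverse(approx, detail):
    """Exact inverse of int_haar_forward."""
    odd = approx - (detail >> 1)
    even = odd + detail
    x = np.empty(approx.size * 2, dtype=np.int64)
    x[0::2], x[1::2] = even, odd
    return x


def embed_ll(cover, payload_bytes):
    """Replace the 8 LSBs of the first approximation coefficients with payload bytes."""
    approx, detail = int_haar_forward(cover)
    payload = np.asarray(payload_bytes, dtype=np.uint8).astype(np.int64)
    n = payload.size
    if n > approx.size:
        raise ValueError("payload exceeds approximation band length")
    approx[:n] = (approx[:n] & ~0xFF) | payload
    return int_haar_inverse(approx, detail)


def extract_ll(stego, n):
    """Read the payload bytes back from the approximation band."""
    approx, _ = int_haar_forward(stego)
    return (approx[:n] & 0xFF).astype(np.uint8)
```

The sketch returns `int64` samples and does not clip them back to the 16-bit range; a practical system would need to handle samples that overflow after embedding.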

| Algorithm 1: Embedding procedure of the proposed approach (Transmitter side) | |||

| 1: | Input: | ||

| 2: | I: Cover image (uint8), size H × W | ||

| 3: | M: Binary payload | ||

| 4: | K: Secret key | ||

| 5: | params: {d, DWTL, Sub bands, α, Nsubcarriers, CC, interleaver} | ||

| 6: | Output: | ||

| 7: | Istego: Stego image (uint8), size H × W | ||

| 8: | Mcrc ← CRC.APPEND(M) | ||

| 9: | Mfec ← FEC.ENCODE_CC(Mcrc, CC = (1, 2, 7)) | ||

| 10: | Xofdm ← OFDM.MODULATE(Xsym, N = Nsubcarriers) | ||

| 11: | Lbits, Tbits ← SPLIT(Xofdm, ratio = rspatial:rwavelet) | ||

| 12: | C0 ← DWT.DECOMPOSE(I, levels = DWTL) | ||

| 13: | Ω ← SELECT_COEFF_INDICES(C0, Sub bands, policy = “mid-band, energy-capped”, seed = rand(K,“dwtidx”), |Tbits|) | ||

| 14: | For (i, idx) in enumerate(Ω) do | ||

| 15: | c ← C0[idx] | ||

| 16: | b ← Tbits[i] | ||

| 17: | C0[idx]← c + α * SIGN_FOR_EMBED(b, policy = “LSB-on-coeff”) | ||

| 18: | End For | ||

| 19: | I′ ← DWT.RECONSTRUCT(C0) | ||

| 20: | Π ← SELECT_PIXEL_INDICES(I′, strategy = “blue-channel-first or grayscale-scan”, seed = rand(K,“LSBidx”), count = ceil(|Lbits|/d)) | ||

| 21: | j ← 0 | ||

| 22: | For each p in Π do | ||

| 23: | seg ← Lbits[j:j + d] | ||

| 24: | v ← PIXEL_VALUE(I′, p) | ||

| 25: | vnew ← (v & ~((1 << d)−1)) | BITS_TO_INT(seg) | ||

| 26: | SET_PIXEL(I′, p, vnew) | ||

| 27: | j ← j + d | ||

| 28: | if j ≥ |Lbits| then break | ||

| 29: | End For | ||

| 30: | Istego ← CLIP_TO_UINT8(I′) | ||

| 31: | assert PAYLOAD_CAPACITY_OK(I, d, |Lbits|) | ||

| 32: | Return Istego | ||

| 33: | End | ||
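The spatial-domain loop of Algorithm 1 (steps 21 to 29) can be sketched in Python as below. This is an illustration only: it walks pixels in sequential order instead of the key-seeded selection `SELECT_PIXEL_INDICES`, zero-pads the last segment, and checks capacity before embedding rather than after. The names `bits_to_int` and `embed_lsb` are hypothetical.

```python
import numpy as np


def bits_to_int(bits):
    """Interpret a bit sequence as an unsigned integer, most significant bit first."""
    value = 0
    for b in bits:
        value = (value << 1) | int(b)
    return value


def embed_lsb(pixels, bits, d):
    """Replace the d least significant bits of successive uint8 pixels with payload bits."""
    if not 1 <= d <= 8:
        raise ValueError("d must be between 1 and 8")
    out = np.array(pixels, dtype=np.uint8).ravel().copy()
    bits = list(bits)
    needed = -(-len(bits) // d)  # ceil(len(bits) / d)
    if needed > out.size:
        raise ValueError("payload exceeds capacity")
    bits += [0] * (needed * d - len(bits))  # zero-pad the final segment
    mask = (1 << d) - 1
    for k in range(needed):
        seg = bits[k * d:(k + 1) * d]
        # Same operation as step 25: clear the d low bits, then OR in the segment.
        out[k] = (int(out[k]) & ~mask) | bits_to_int(seg)
    return out
```

For instance, with `d = 2` the pixel value 183 (binary 10110111) carrying the segment `[0, 1]` becomes 181 (binary 10110101).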

| Algorithm 2: Extraction procedure of the proposed approach (Receiver side) | ||||

| 1: | Input: | |||

| 2: | Istego: Stego image (uint8), size H × W | |||

| 3: | K: Secret key | |||

| 4: | params: {d, DWTL, Sub bands, α, Nsubcarriers, CC, interleaver} | |||

| 5: | Output: | |||

| 6: | Mhat: Recovered payload (binary) | |||

| 7: | status: {OK | CRC_FAIL} | |||

| 8: | C1 ← DWT.DECOMPOSE(Istego, levels = DWTL) | |||

| 9: | Ω ← RESELECT_COEFF_INDICES(C1, Sub bands, seed = rand(K,“dwtidx”)) | |||

| 10: | Tbits_hat ← [] | |||

| 11: | For idx in Ω do | |||

| 12: | c ← C1[idx] | |||

| 13: | bhat ← DETECT_BIT_FROM_COEFF(c, policy = “sign-threshold”, α) | |||

| 14: | APPEND(Tbits_hat, bhat) | |||

| 15: | End For | |||

| 16: | Π ← RESELECT_PIXEL_INDICES(strategy = “blue-channel-first or grayscale-scan”, seed = rand(K,“LSBidx”), count = ceil(|image|/stride)) | |||

| 17: | Lbits_hat ← [] | |||

| 18: | For each p in Π do | |||

| 19: | v ← PIXEL_VALUE(Istego, p) | |||

| 20: | seg ← INT_TO_BITS(v & ((1 << d)−1), d) | |||

| 21: | APPEND(Lbits_hat, seg) | |||

| 22: | if |Lbits_hat| ≥ EXPECTED_LSB_LENGTH then break | |||

| 23: | End For | |||

| 24: | Xofdm_hat ← MERGE(Lbits_hat, Tbits_hat, ratio = rspatial:rwavelet) | |||

| 25: | Xsym_hat ← OFDM.DEMODULATE(Xofdm_hat, N = Nsubcarriers) | |||

| 26: | Mfec_hat ← DEINTERLEAVE(Xsym_hat, π = BUILD_INTERLEAVER(rand(K,“π”), length(Xsym_hat))) | |||

| 27: | Mcrc_hat ← FEC.DECODE_CC(Mfec_hat, CC = (1, 2, 7)) | |||

| 28: | Mhat, ok ← CRC.CHECK_AND_STRIP(Mcrc_hat) | |||

| 29: | IF ok = TRUE then | |||

| 30: | Return Mhat, OK | |||

| 31: | else | |||

| 32: | Return Mhat, CRC_FAIL | |||

| 33: | End IF | |||

| 34: | End | |||
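The integrity check that brackets both algorithms (`CRC.APPEND` at the transmitter, `CRC.CHECK_AND_STRIP` at the receiver) can be sketched as follows. The algorithms do not specify the CRC polynomial or width, so this example assumes CRC-32 from Python's standard `zlib` module with a 4-byte big-endian tag; `crc_append` and `crc_check_and_strip` are hypothetical names.

```python
import zlib


def crc_append(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 tag (assumed width) to the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def crc_check_and_strip(frame: bytes):
    """Return (payload, ok); ok is False when the tag does not match the payload."""
    if len(frame) < 4:
        return b"", False
    payload, tag = frame[:-4], frame[-4:]
    ok = zlib.crc32(payload).to_bytes(4, "big") == tag
    return payload, ok
```

The receiver would report `OK` or `CRC_FAIL` from the boolean, mirroring the final branch of Algorithm 2.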

4. Experimental Evaluation

4.1. Simulation Setup

4.2. Key Parameters Justifications

- (1) LSB embedding depth

- (2) Convolutional code (1, 2, 7)

- (3) Number of OFDM subcarriers
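To make the convolutional code parameter concrete, the sketch below encodes a bit stream with a rate-1/2 code of constraint length 7. It reads (1, 2, 7) as one input bit, two output bits, and constraint length 7, and it assumes the widely used generator polynomials 171 and 133 (octal); the paper's actual generators are not given here, so both the reading and the polynomials are assumptions. Decoding, typically done with a Viterbi decoder, is omitted.

```python
import numpy as np

K = 7                # constraint length
G = (0o171, 0o133)   # assumed generator polynomials (octal); not stated in the text


def conv_encode(bits):
    """Rate-1/2 convolutional encoder; appends K-1 zero bits to flush the register."""
    state = 0  # the previous K-1 input bits
    out = []
    for b in list(bits) + [0] * (K - 1):
        reg = (int(b) << (K - 1)) | state  # newest bit sits in the top position
        for g in G:
            # Each output bit is the parity of the register bits selected by g.
            out.append(bin(reg & g).count("1") & 1)
        state = reg >> 1  # shift: drop the oldest bit
    return np.array(out, dtype=np.uint8)
```

Encoding a single 1 bit yields the impulse response, whose two interleaved output streams spell out the binary forms of the generators (1111001 and 1011011).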

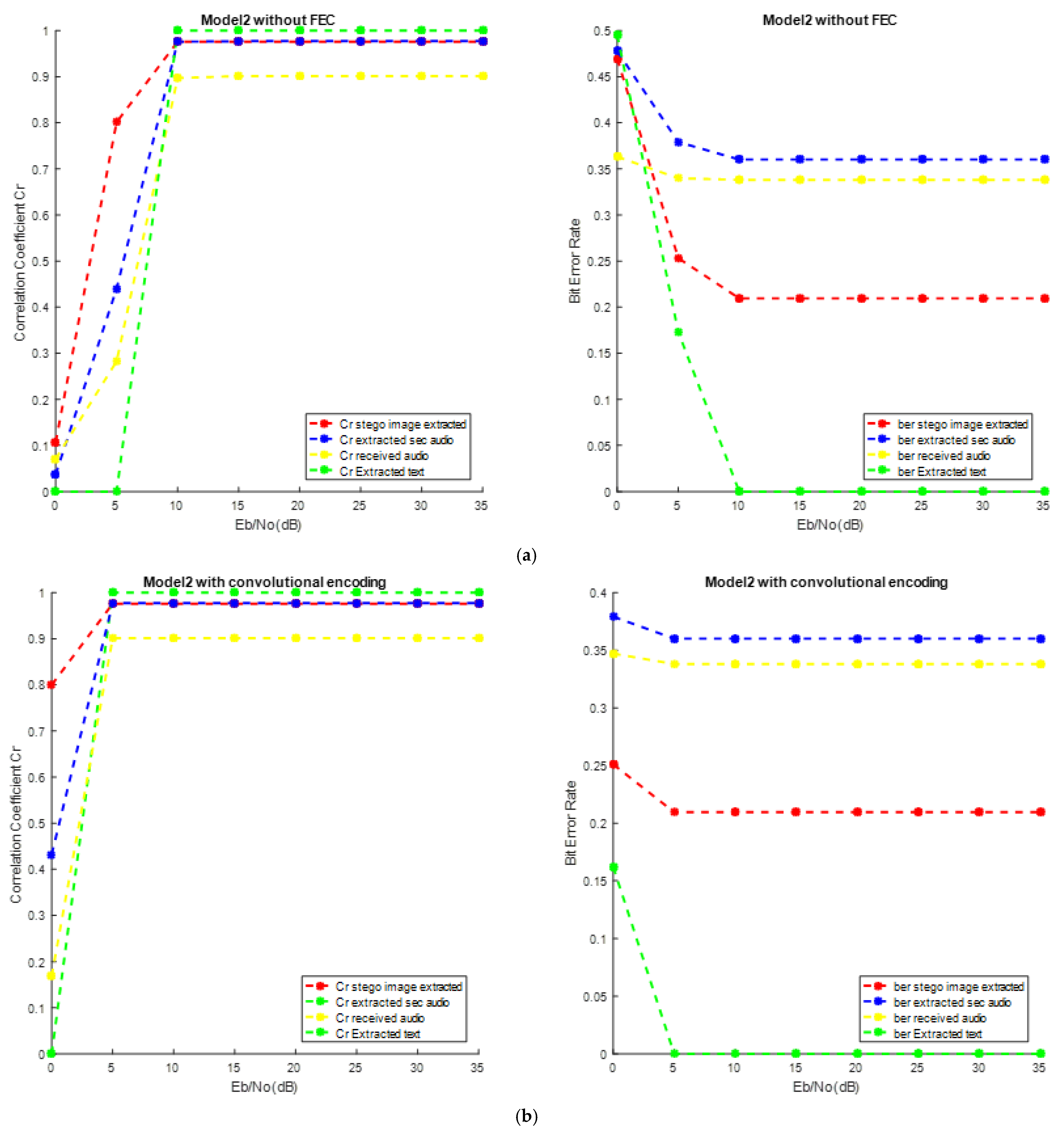

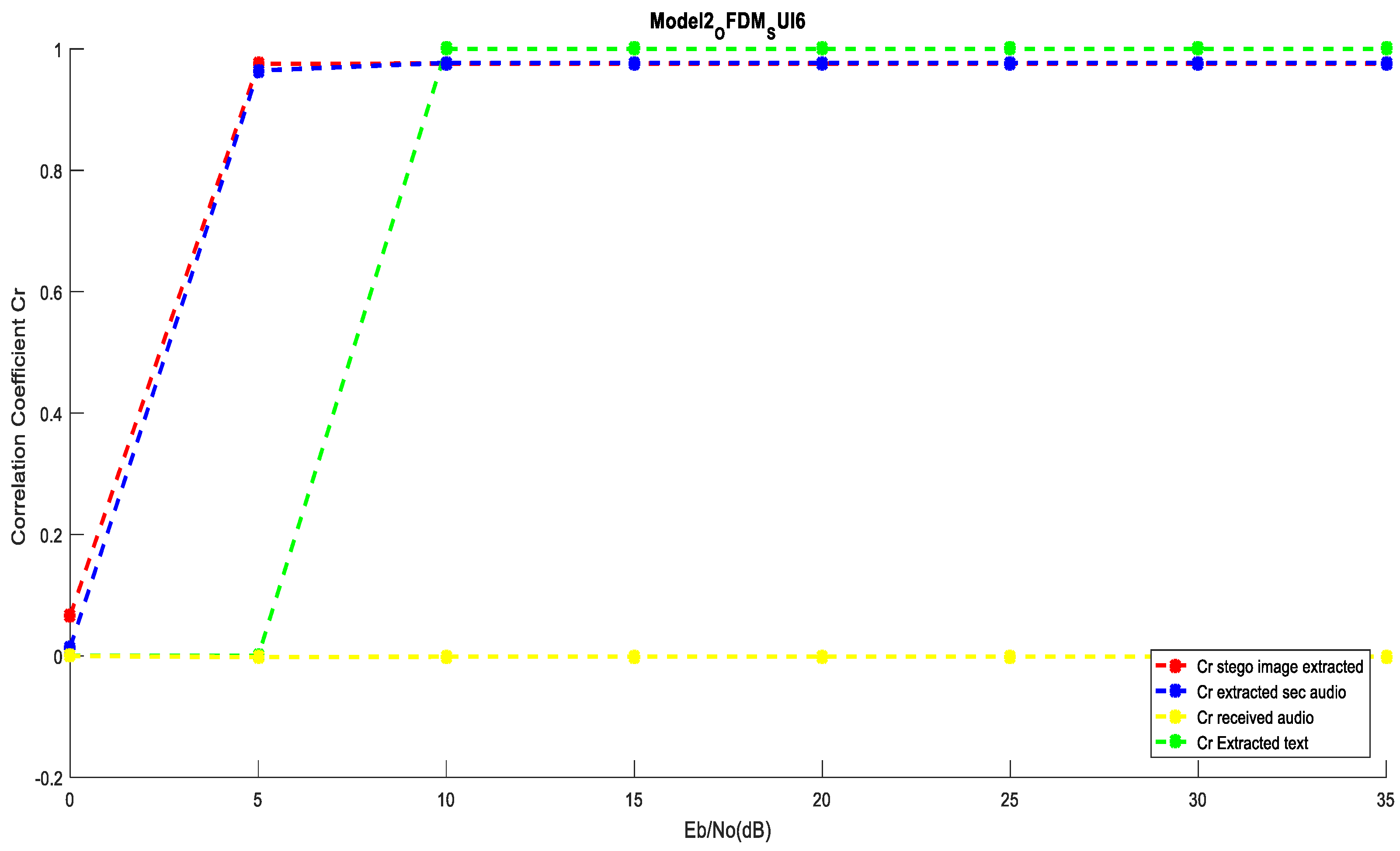

4.3. Performance Investigation of the Two Secured Models over AWGN

5. Performance Evaluation Using OFDM System

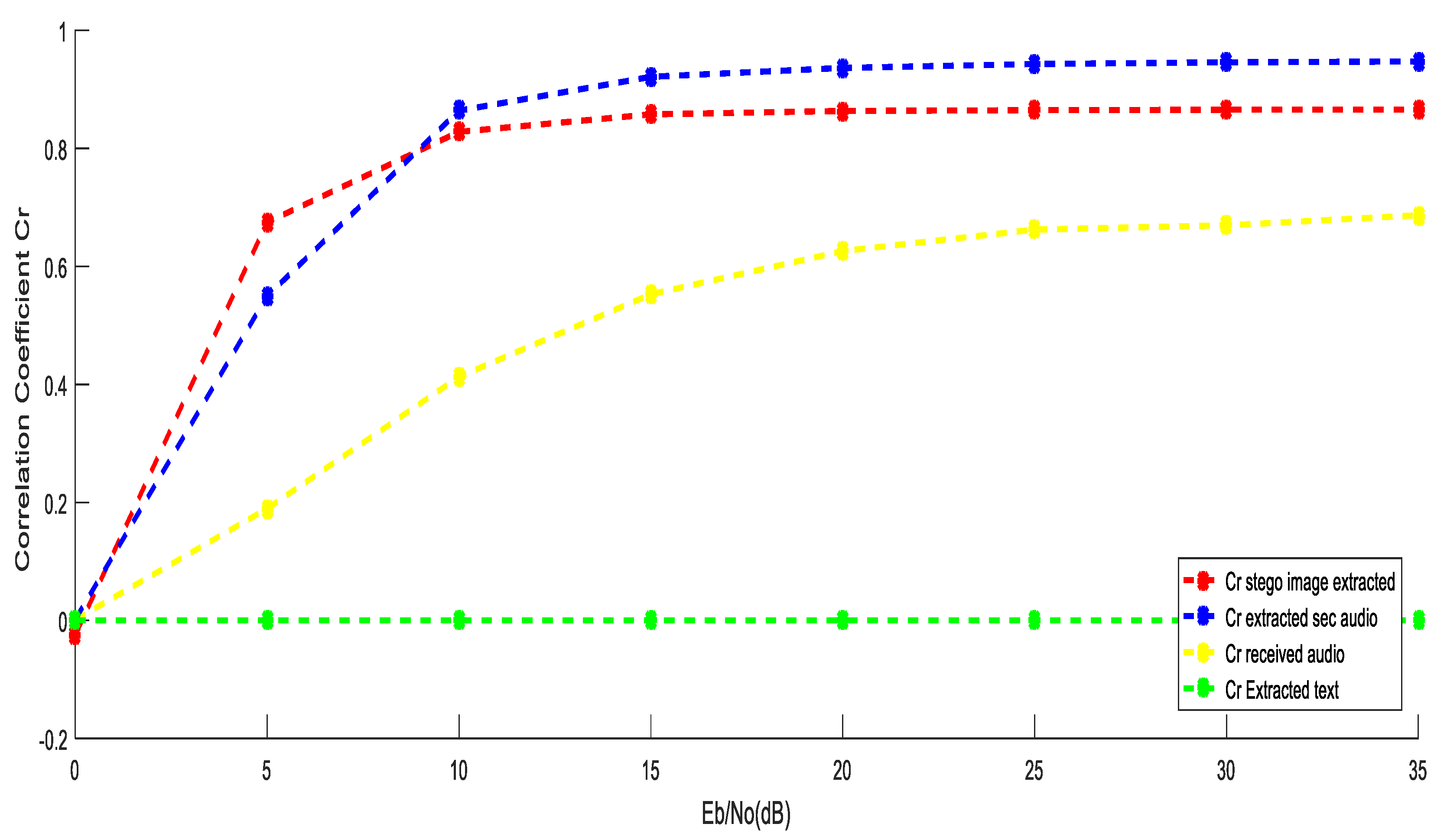

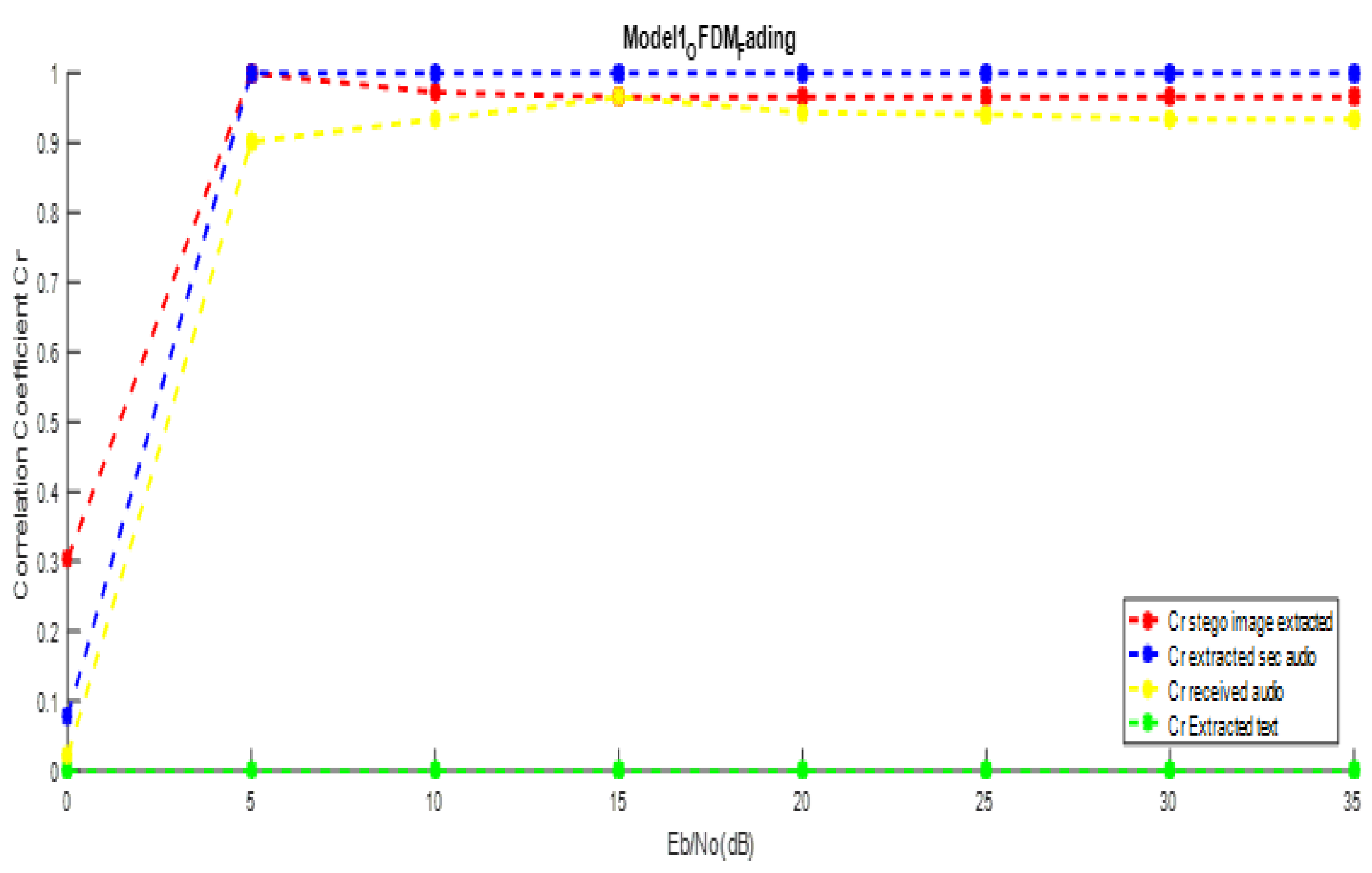

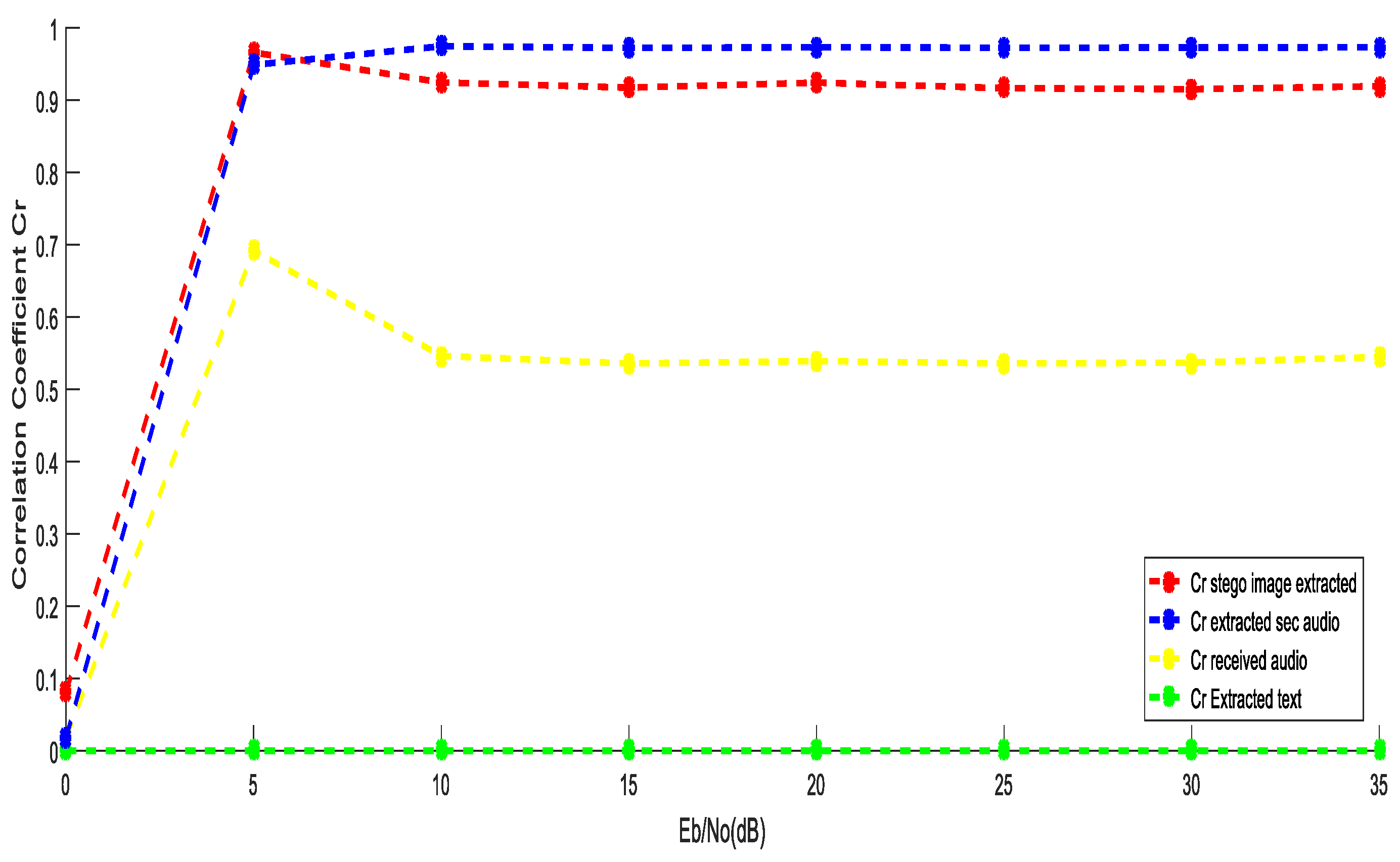

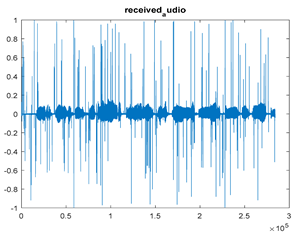

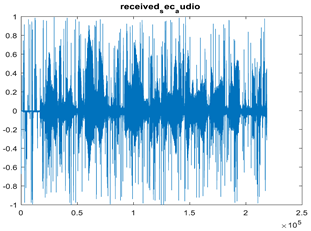

5.1. Performance Evaluation Using OFDM over AWGN Channel

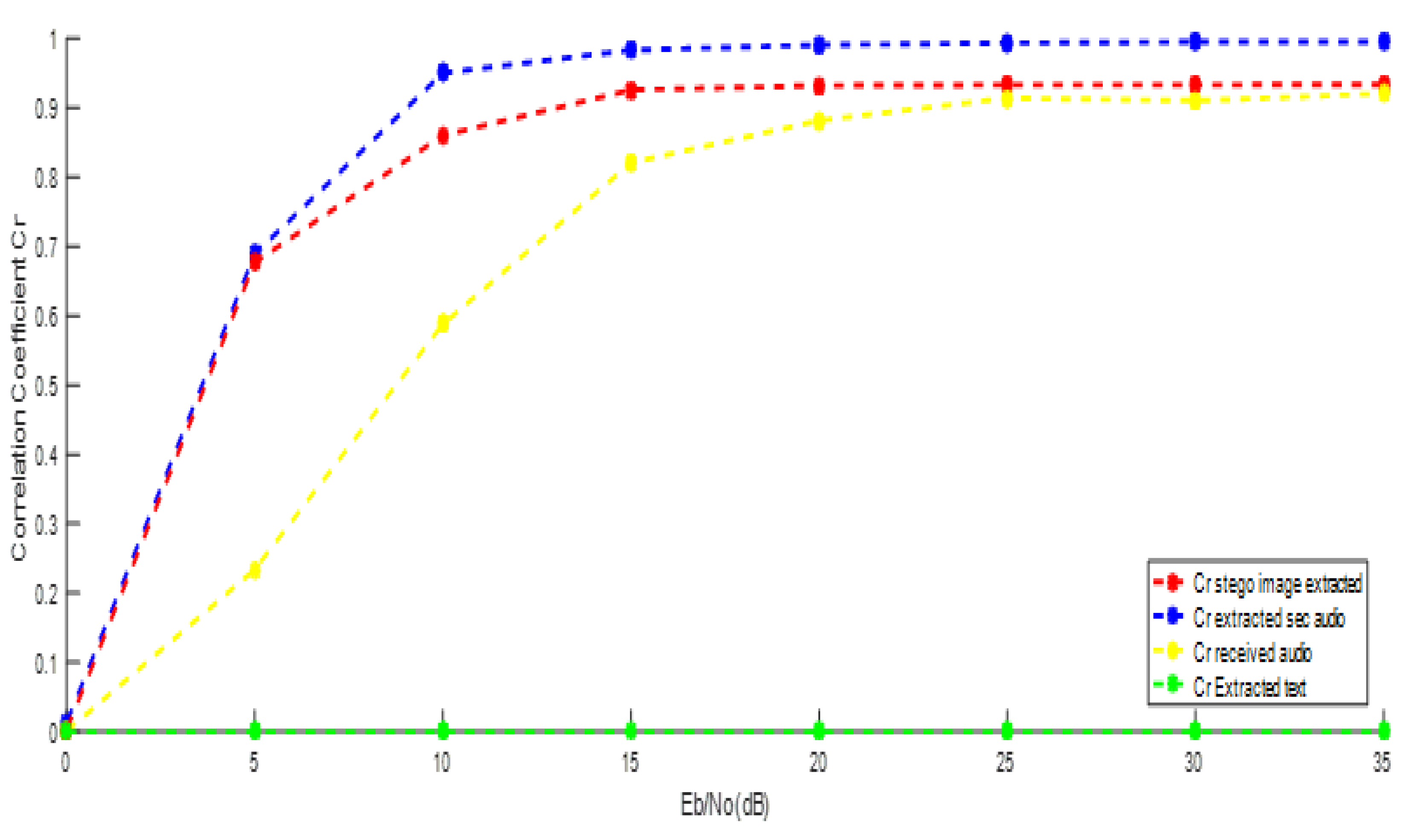

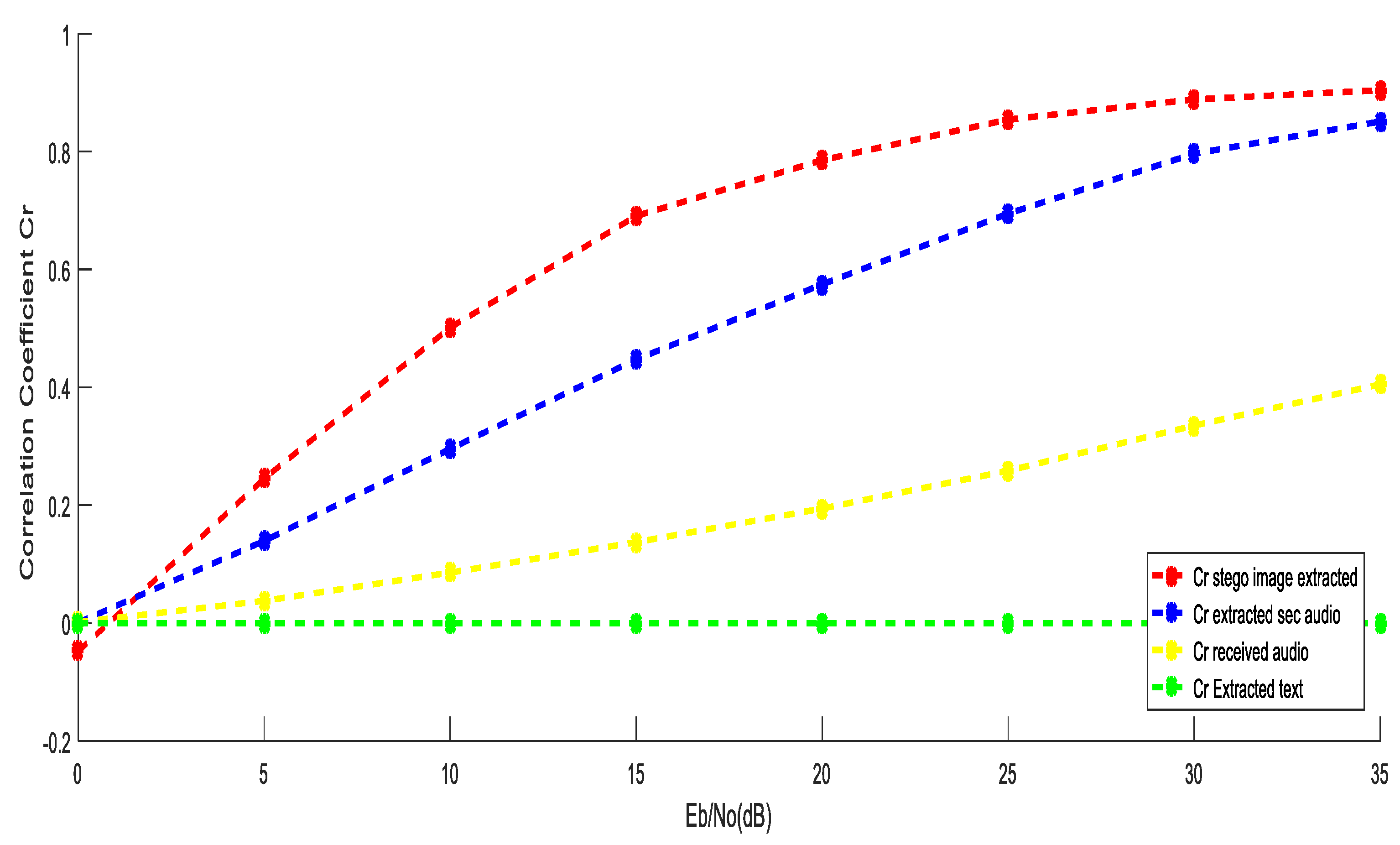

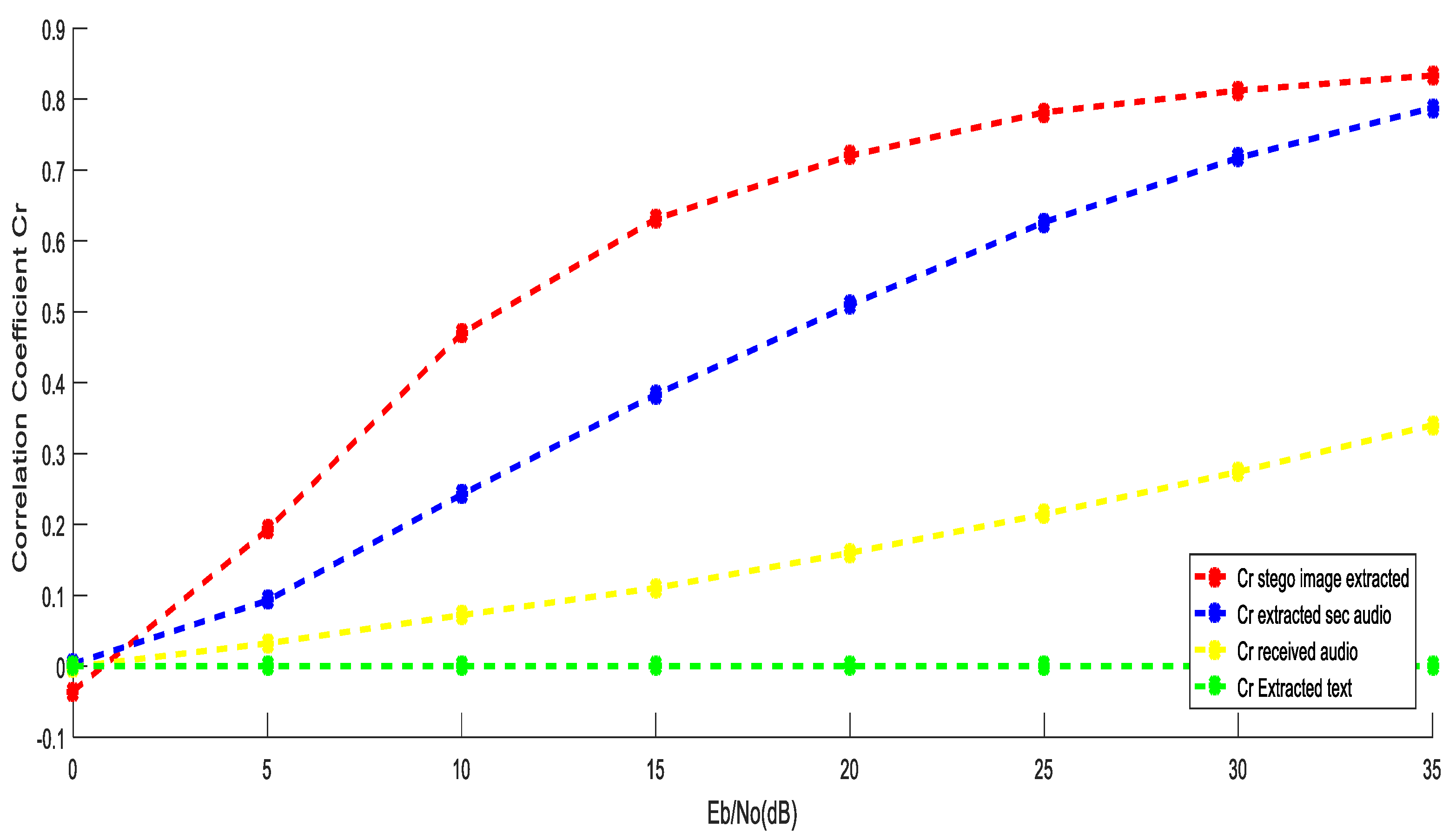

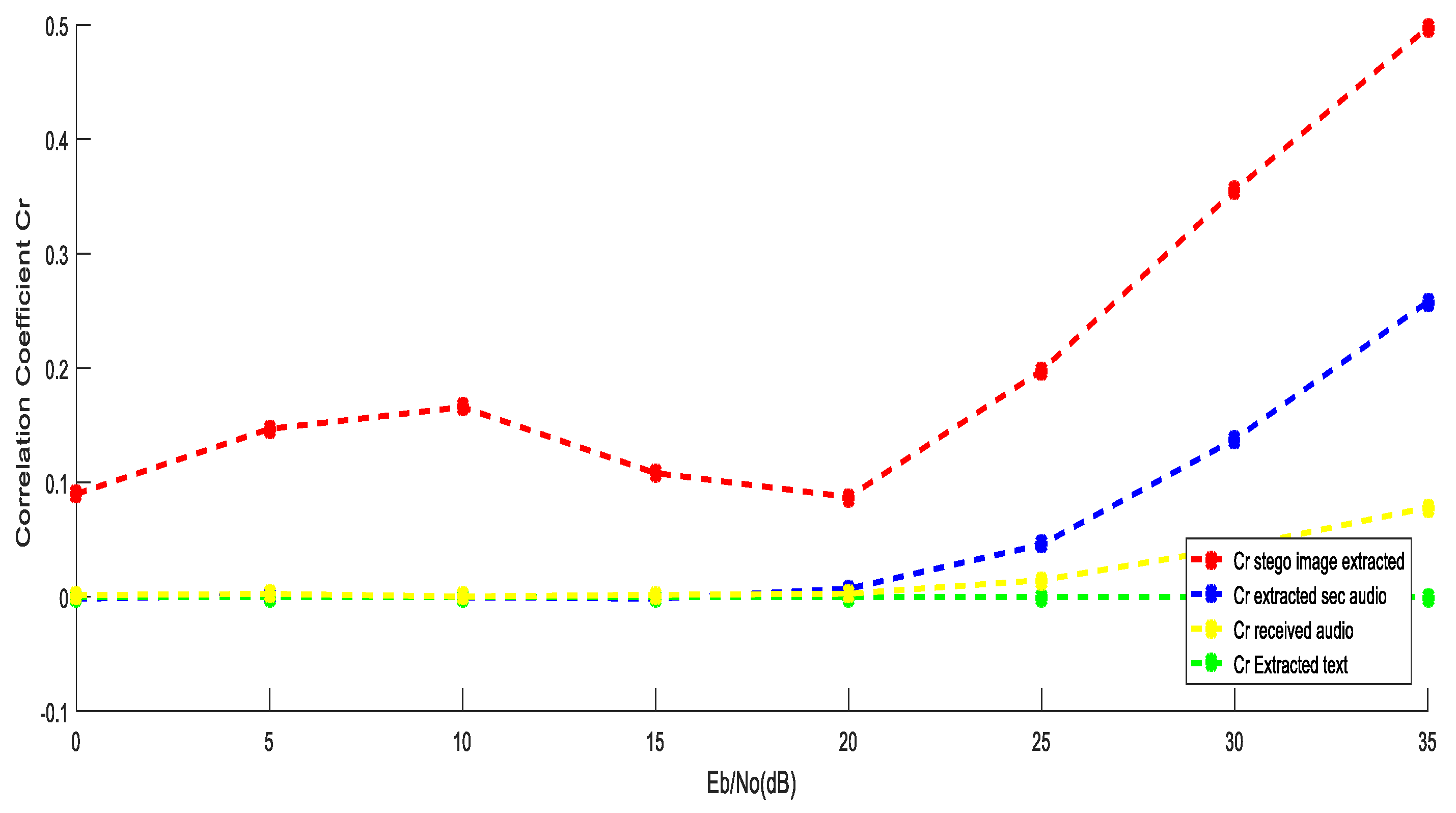

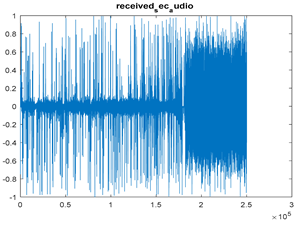

5.2. Performance Evaluation Using OFDM over Fading Channel

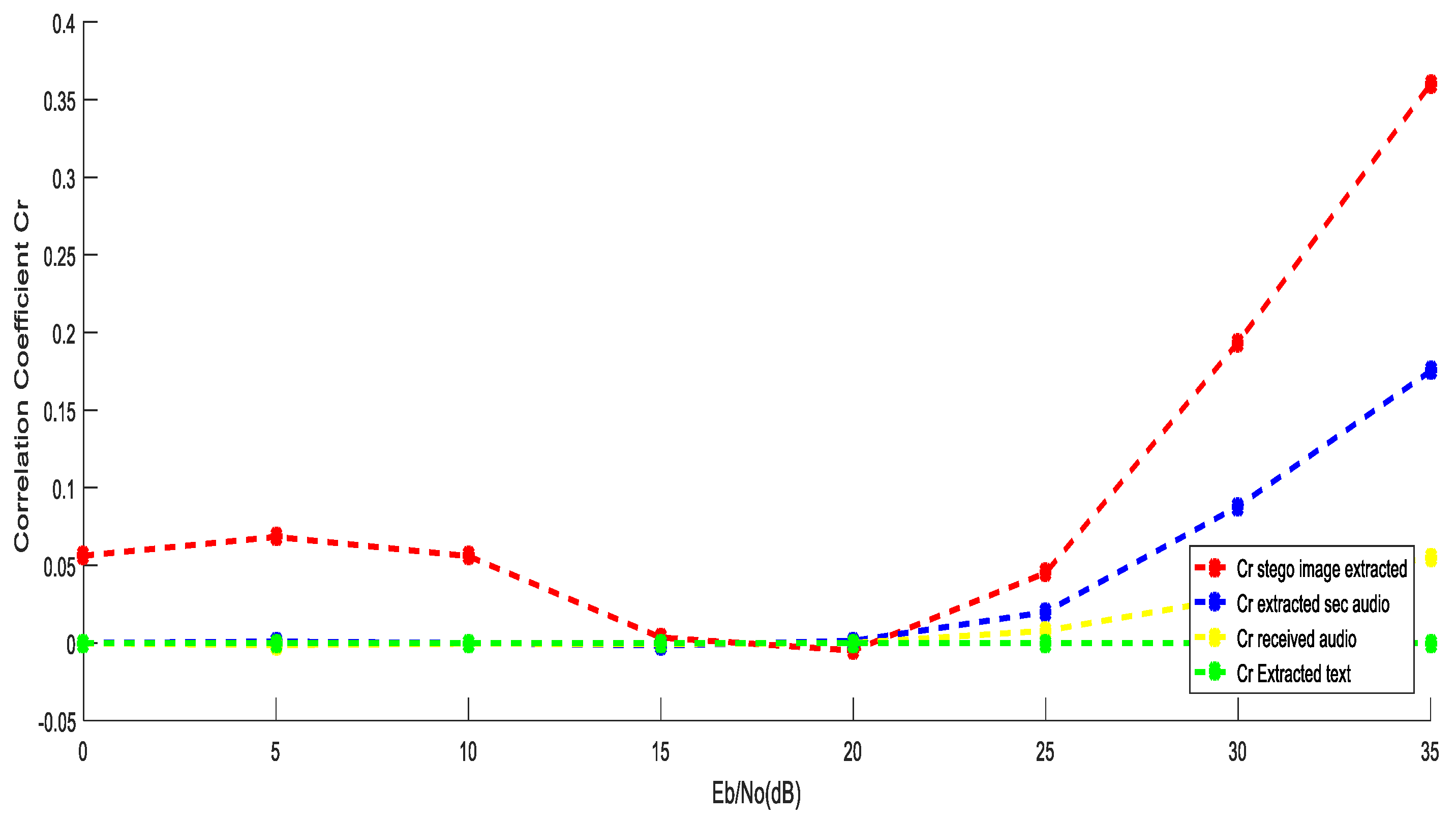

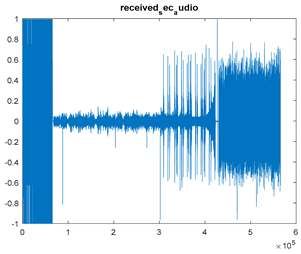

5.3. Performance Evaluation Using OFDM over SUI6 Channel

5.4. Attack Resistance Investigation

- (1) Statistical detection attacks: LSB techniques are prone to histogram analysis, chi-square testing, and RS steganalysis. Placing half of the data in the DWT domain reduces statistical regularities in the spatial domain, considerably increasing undetectability.

- (2) Differential attacks: Differential steganalysis involves comparing the cover and modified media to identify the modifications. In the present scheme, information is distributed between the LSB and DWT domains; therefore, analysis of only one of these domains (e.g., pixel-level differencing) cannot expose the total hidden information. This two-domain distribution increases protection from differential attacks [29].

- (3) Compression attacks: JPEG compression is a common real-world attack that reduces redundancy and can destroy spatial-domain LSB embeddings. Embedding in selected DWT sub-bands gives some resilience, since low-frequency terms remain stable under compression.

- (4) Filtering and noise attacks: Spatial filtering (e.g., Gaussian blurring) and additive noise can disrupt LSB embedding. However, wavelet-domain embeddings, especially within mid- and low-frequency sub-bands, are more robust, allowing recovery of data under tolerable distortions.

- (5) Geometric attacks: Most watermarking schemes face challenges with rotation, cropping, and scaling. Although hybrid approaches are not completely exempt from this limitation, the inclusion of DWT coefficients offsets it to a considerable degree, as transform-domain features are relatively robust to geometric transformations [30].

- Better undetectability against statistical analysis.

- Security against differential and compression attacks.

- Resilience against common noise and filtering processes.

- A good balance between efficiency, strength, and security.

5.5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wendzel, S.; Caviglione, L.; Mazurczyk, W.; Mileva, A.; Dittmann, J.; Krätzer, C.; Lamshöft, K.; Vielhauer, C.; Hartmann, L.; Keller, J.; et al. A Generic Taxonomy for Steganography Methods. ACM Comput. Surv. 2025, 57, 1–37.

- Nasr, M.A.; El-Shafai, W.; El-Rabaie, E.-S.M.; El-Fishawy, A.S.; El-Hoseny, H.M.; Abd El-Samie, F.E.; Abdel-Salam, N. A Robust Audio Steganography Technique Based on Image Encryption Using Different Chaotic Maps. Sci. Rep. 2024, 14, 22054.

- Aravind Krishnan, A.; Ramesh, Y.; Urs, U.; Arakeri, M. Audio-in-Image Steganography Using Analysis and Resynthesis Sound Spectrograph. IEEE Access 2025, 13, 75184–75193.

- Nasr, M.A.; El-Shafai, W.; El-Rabaie, E.-S.M.; El-Fishawy, A.S.; Abdel-Salam, N.; El-Samie, F.E.A. Robust and Secure Systems for Audio Signals. J. Electr. Syst. Inf. Technol. 2025, 12, 31.

- Su, W.; Ni, J.; Hu, X.; Li, B. Efficient Audio Steganography Using Generalized Audio Intrinsic Energy with Micro-Amplitude Modification Suppression. IEEE Trans. Inf. Forensics Secur. 2024, 19, 6559–6572.

- Helmy, M. Audio Transmission Based on Hybrid Crypto-Steganography Framework for Efficient Cyber Security in Wireless Communication System. Multimed. Tools Appl. 2024, 84, 18893–18917.

- Fadhil, A.M.; Jaber, A.Y. Securing Communication Channels: An Advanced Steganography Approach with Orthogonal Frequency Division Multiplexing (OFDM). J. Electr. Comput. Eng. 2025, 2025, 2468585.

- Aldababsa, M.; Özyurt, S.; Kurt, G.K.; Kucur, O. A Survey on Orthogonal Time Frequency Space Modulation. IEEE Open J. Commun. Soc. 2024, 5, 4483–4518.

- Huang, G.; Zhang, K.; Zhang, Y.; Liao, K.; Jin, S.; Ding, Y. Orthogonal Frequency Division Multiplexing Directional Modulation Waveform Design for Integrated Sensing and Communication Systems. IEEE Internet Things J. 2024, 11, 29588–29599.

- Jiang, S.; Wang, W.; Miao, Y.; Fan, W.; Molisch, A.F. A Survey of Dense Multipath and Its Impact on Wireless Systems. IEEE Open J. Antennas Propag. 2022, 3, 435–460.

- Mahmood, A.; Khan, S.; Hussain, S.; Zeeshan, M. Performance Analysis of Multi-User Downlink PD-NOMA under SUI Fading Channel Models. IEEE Access 2021, 9, 52851–52859.

- Imoize, A.L.; Ibhaze, A.E.; Atayero, A.A.; Kavitha, K.V.N. Standard Propagation Channel Models for MIMO Communication Systems. Wirel. Commun. Mob. Comput. 2021, 2021, 8838792.

- Li, Y.; Chen, K.; Wang, Y.; Zhang, X.; Wang, G.; Zhang, W.; Yu, N. CoAS: Composite Audio Steganography Based on Text and Speech Synthesis. IEEE Trans. Inf. Forensics Secur. 2025, 20, 5978–5991.

- Khan, S.; Abbas, N.; Nasir, M.; Haseeb, K.; Saba, T.; Rehman, A.; Mehmood, Z. Steganography-Assisted Secure Localization of Smart Devices in Internet of Multimedia Things (IoMT). Multimed. Tools Appl. 2021, 80, 17045–17065.

- Almomani, I.; Alkhayer, A.; El-Shafai, W. A Crypto-Steganography Approach for Hiding Ransomware within HEVC Streams in Android IoT Devices. Sensors 2022, 22, 2281.

- Wang, J.; Wang, K. A Novel Audio Steganography Based on the Segmentation of the Foreground and Background of Audio. Comput. Electr. Eng. 2025, 123, 110026.

- Ali, B.Q. Covert Voip Communication Based on Audio Steganography. Int. J. Comput. Digit. Syst. 2022, 11, 821–830.

- Hameed, A.S. A High Secure Speech Transmission Using Audio Steganography and Duffing Oscillator. Wirel. Pers. Commun. 2021, 120, 499–513.

- Mahmoud, M.M.; Elshoush, H.T. Enhancing LSB Using Binary Message Size Encoding for High Capacity, Transparent and Secure Audio Steganography—An Innovative Approach. IEEE Access 2022, 10, 29954–29971.

- Kasetty, P.K.; Kanhe, A. Covert Speech Communication through Audio Steganography Using DWT and SVD. In Proceedings of the 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kharagpur, India, 1–3 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–5.

- Abdulrazzaq, S.T.; Siddeq, M.M.; Rodrigues, M.A. A Novel Steganography Approach for Audio Files. SN Comput. Sci. 2020, 1, 97.

- Gao, S.; Ding, S.; Ho-Ching Iu, H.; Erkan, U.; Toktas, A.; Simsek, C.; Wu, R.; Xu, X.; Cao, Y.; Mou, J. A Three-Dimensional Memristor-Based Hyperchaotic Map for Pseudorandom Number Generation and Multi-Image Encryption. Chaos 2025, 35, 073105.

- Gao, S.; Ho-Ching Iu, H.; Erkan, U.; Simsek, C.; Toktas, A.; Cao, Y.; Wu, R.; Mou, J.; Li, Q.; Wang, C. A 3D Memristive Cubic Map with Dual Discrete Memristors: Design, Implementation, and Application in Image Encryption. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 7706–7718.

- Saranya, S.S.; Reddy, P.L.C.; Prasanth, K. Digital Audio Steganography Using LSB and RC7 Algorithms for Security Applications. In AIP Conference Proceedings, Proceedings of the 4th International Conference on Internet of Things 2023: ICIoT2023, Kattankalathur, India, 26–28 April 2023; AIP Publishing: New York, NY, USA, 2024; Volume 3075, p. 020083.

- Singha, A.; Ullah, M.A. Development of an Audio Watermarking with Decentralization of the Watermarks. J. King Saud Univ.—Comput. Inf. Sci. 2022, 34, 3055–3061.

- Anwar, M.; Sarosa, M.; Rohadi, E. Audio Steganography Using Lifting Wavelet Transform and Dynamic Key. In Proceedings of the 2019 International Conference of Artificial Intelligence and Information Technology (ICAIIT), Yogyakarta, Indonesia, 13–15 March 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 133–137.

- Indrayani, R. Modified LSB on Audio Steganography Using WAV Format. In Proceedings of the 2020 3rd International Conference on Information and Communications Technology (ICOIACT), Yogyakarta, Indonesia, 24–25 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 466–470.

- Anitha, M.; Azhagiri, M. Uncovering the Secrets of Stegware: An in-Depth Analysis of Steganography and Its Evolving Threat Landscape. In Human Machine Interaction in the Digital Era; CRC Press: London, UK, 2024; pp. 277–282. ISBN 9781003428466.

- Biryukov, A. Impossible Differential Attack. In Encyclopedia of Cryptography, Security and Privacy; Springer Nature: Cham, Switzerland, 2025; pp. 1188–1189. ISBN 9783030715205.

- Su, Q.; Liu, D.; Sun, Y. A Robust Adaptive Blind Color Image Watermarking for Resisting Geometric Attacks. Inf. Sci. 2022, 606, 194–212.

- Kasban, H.; Nassar, S.; El-Bendary, M.A.M. Medical Images Transmission over Wireless Multimedia Sensor Networks with High Data Rate. Analog Integr. Circuits Signal Process. 2021, 108, 125–140.

| Ref. | Techniques | Channels | Quality |
|---|---|---|---|
| [26] | LWT + a dynamic key for encryption | -- | Stego audio is similar to the original audio. |
| [27] | Message in cover audio by different LSB techniques | -- | BER (%) from 0.00441 to 0.00507. |
| [18] | Different capacities of secret audio in cover audio by contourlet transform and Duffing oscillator | -- | Cr of full-size retrieved secret speech = 0.8529; Cr of full-size stego speech = 0.9999 |
| [19] | Message in audio file by LSB-BMSE (Binary Message Size Encoding) | -- | Histogram error rate for stego Jazz = 2.86 × 10⁻⁷ using a 100 KB secret message |
| [20] | Secret audio in cover audio by 5th DWT + SVD | AWGN | PSNR = 34.67 |
| [21] | Compressed image by GMPR in an audio file using DCT + DWT | -- | RMSE for decompressed Lena image = 4.3 |
| [25] | Multiple image watermarks in audio by DWT + SVD | -- | Cr (host file) = 0.9682; Cr (1st watermark) = 0.9803; Cr (2nd watermark) = 0.9963; Cr (3rd watermark) = 0.9982; Cr (4th watermark) = 0.9999 |

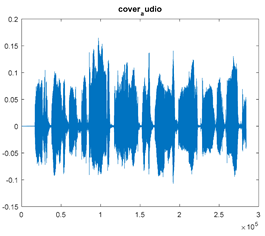

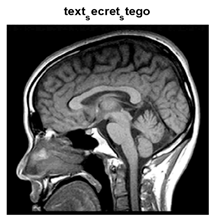

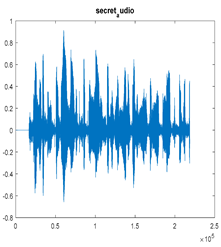

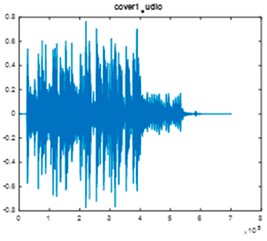

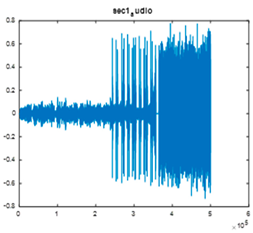

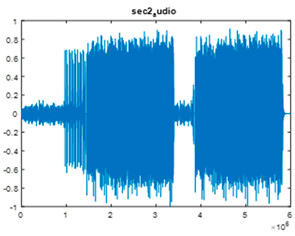

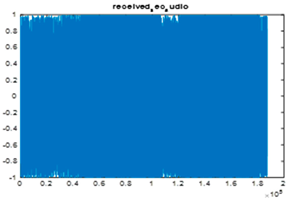

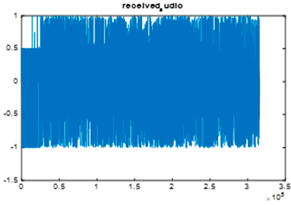

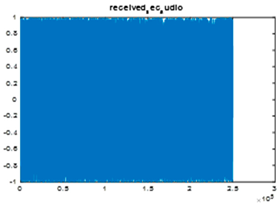

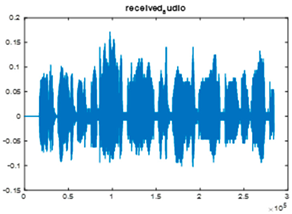

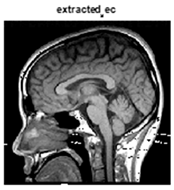

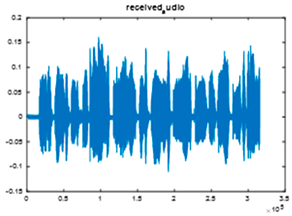

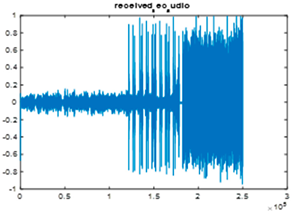

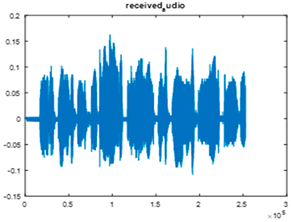

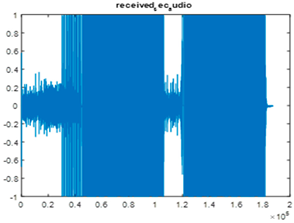

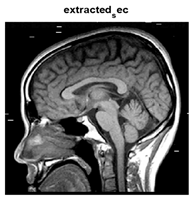

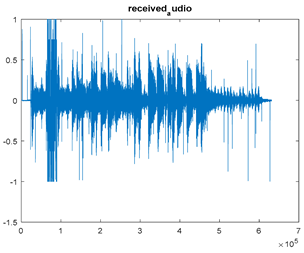

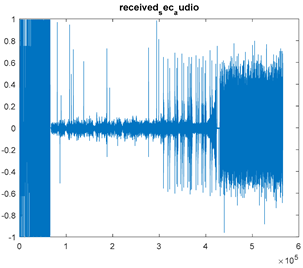

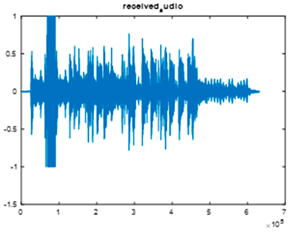

| Cover Audio | Secret Text Image | Secret Audio |
|---|---|---|
|  |  |
| Bit rate = 705 kbps (.wav), Sample rate = 44.1 kHz | - | Bit rate = 705 kbps (.wav), Sample rate = 44.1 kHz |
|  |  |
| Bit rate = 63 kbps (.mp3), Sample rate = 44.1 kHz | - | Bit rate = 8 kbps (.mp3), Sample rate = 8 kHz |
| - |  |  |
| - |  | Bit rate = 47 kbps (.mp3), Sample rate = 32 kHz |

| Parameter | Value |
|---|---|
| Doppler shift frequency | 250 Hz |
| Wireless communication channels | AWGN; Rayleigh fading; SUI-6 model |
| Coding | Convolutional encoding (1, 2, 7) |
| Modulation | BPSK; OFDM (128 subcarriers, number of symbols = 2); OFDM + DPSK |
| SNR | 0 to 35 dB |
| Performance metrics | Correlation coefficient (Cr) |
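The OFDM + DPSK configuration in the table can be illustrated with a small baseband simulation. This sketch makes several assumptions that the source does not confirm: binary DPSK, differential encoding along time on each of the 128 subcarriers with an all-ones reference symbol, no cyclic prefix, and no channel coding. The function names `dbpsk_ofdm_modulate`, `dbpsk_ofdm_demodulate`, and `awgn` are hypothetical.

```python
import numpy as np

N_SUB = 128  # number of OFDM subcarriers, as listed in the parameter table


def dbpsk_ofdm_modulate(bits):
    """Map bits to differential BPSK on each subcarrier, then apply the IFFT."""
    bits = np.asarray(bits, dtype=np.uint8).reshape(-1, N_SUB)  # one row per OFDM symbol
    flips = np.where(bits == 1, -1.0, 1.0)    # bit 1 flips the phase, bit 0 keeps it
    reference = np.ones((1, N_SUB))           # assumed known reference symbol
    symbols = np.cumprod(np.vstack([reference, flips]), axis=0)
    return np.fft.ifft(symbols, axis=1) * np.sqrt(N_SUB)  # unitary scaling


def dbpsk_ofdm_demodulate(rx):
    """Apply the FFT and compare each symbol with the previous one per subcarrier."""
    symbols = np.fft.fft(rx, axis=1) / np.sqrt(N_SUB)
    metric = np.real(symbols[1:] * np.conj(symbols[:-1]))
    return (metric < 0).astype(np.uint8).ravel()


def awgn(signal, snr_db, rng):
    """Add complex white Gaussian noise at the requested SNR (in dB)."""
    power = np.mean(np.abs(signal) ** 2)
    sigma = np.sqrt(power / 10 ** (snr_db / 10) / 2)
    noise = rng.standard_normal(signal.shape) + 1j * rng.standard_normal(signal.shape)
    return signal + sigma * noise
```

Because the receiver compares consecutive symbols rather than absolute phases, it does not need a channel phase estimate, which is one common motivation for pairing DPSK with OFDM.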

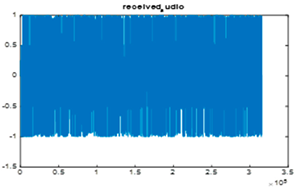

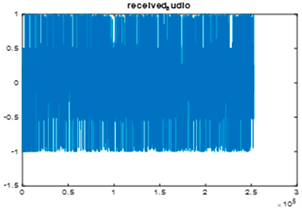

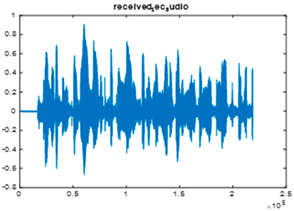

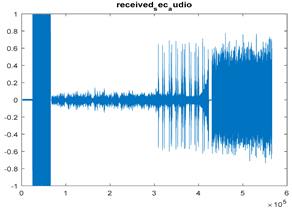

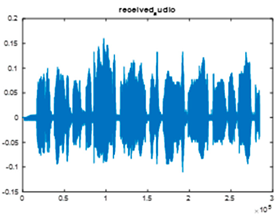

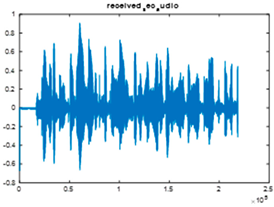

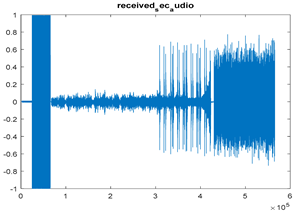

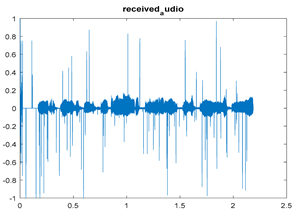

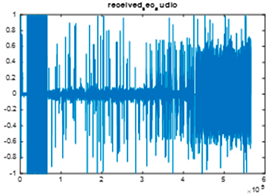

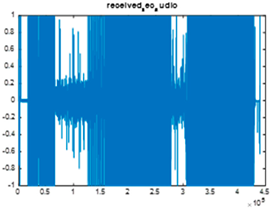

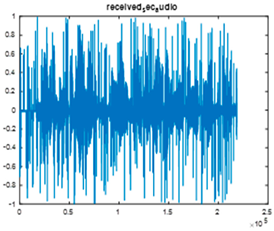

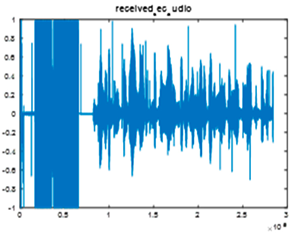

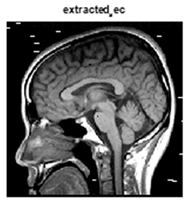

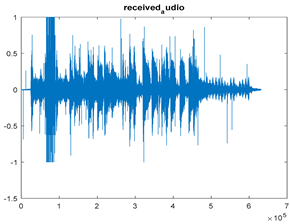

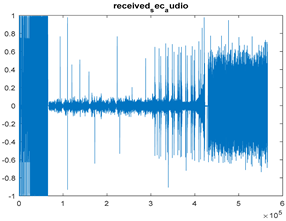

|  |  |

| Cr = 0.3582, BER = 0.1790 (audio.wav) | Cr = 0.4365, BER = 0.3790 (audio.wav) | Cr = 0.8031, BER = 0.2524 |

| Extracted text = “In The” (Cr = 0, BER = 0.0619) | ||

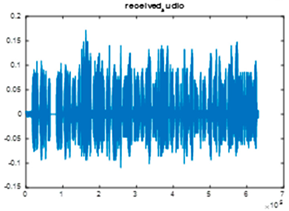

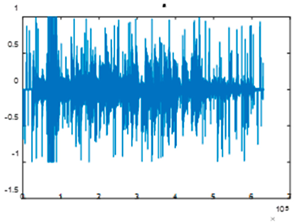

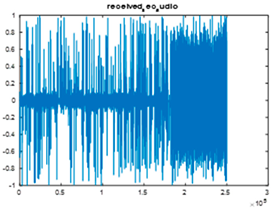

|  |  |

| Cr = 0.3325, BER = 0.2206 (audio.wav) | Cr = 0.7822, BER = 0.2497 (audio.mp3) | Cr = 0.9802, BER = 0.2935 |

| Extracted text = “In TLd”NaMd/f Slhah,!tana}e kf$thE case8is!Esmaa Qbbelmnem yssa” thE sg” (Cr = 0, BER = 0.0556) | ||

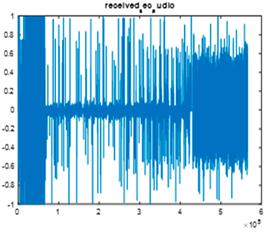

|  |  |

| Cr = 0.3382, BER = 0.2096 (audio.wav) | Cr = 0.9699, BER = 0.2509 (audio.mp3) | Cr = 0.8826, BER = 0.2860 |

| Extracted text (Cr = 0, BER = 0.0571) | ||

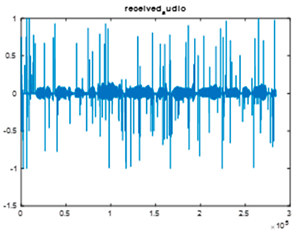

|  |  |

| Cr = 0.2294, BER = 0.2206 (audio.mp3) | Cr = 0.7798, BER = 0.2498 (audio.mp3) | Cr = 0.9827, BER = 0.3061 |

| Extracted text (Cr = 0, BER = 0.0651) | ||

|  |  |

| Cr = 0.2809, BER = 0.3399(audio.wav) | Cr = 0.4365, BER = 0.3790 (audio.wav) | Cr = 0.8031, BER = 0.2524 |

| Extracted text = “In `a(Nqme,_n a|l@hl The8oimb o`” (Cr = 0, BER = 0.1635) | ||

|  |  |

| Cr = 0.3158, BER = 0.3238 (audio.wav) | Cr = 0.3610, BER = 0.4864 (audio.mp3) | Cr = 0.8223, BER = 0.3226 |

| Extracted text = “In Dhu$NalA`kF!@,jah,Bt lil” (Cr = 0, BER = 0.1524) | ||

|  |  |

| Cr = 0.2683, BER = 0.3601 (audio.wav) | Cr = 0.3090, BER = 0.4835 (audio.mp3) | Cr = 0.5579, BER = 0.3112 |

| Extracted text (Cr = 0, BER = 0.1810) | ||

|  |  |

| Cr = 0.7795, BER = 0.3046 (audio.mp3) | Cr = 0.3706, BER = 0.4866 (audio.mp3) | Cr = 0.8207, BER = 0.3335 |

| Extracted text (Cr = 0), (BER = 0.1873) | ||

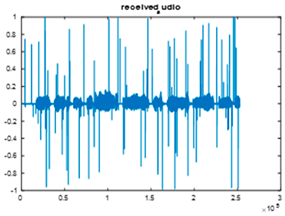

|  |  |

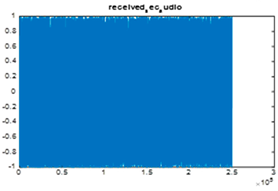

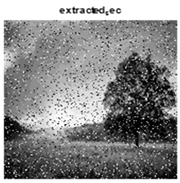

| Cr = 0.9934, BER = 0.1752 (audio.wav) | Cr = 0.9998, BER = 0.2413 (audio.wav) | Cr = 0.9988, BER = 0.2049 |

| Extracted text = “In The Name of Allah, the name of the case is Asmaa Abdelmonem Eyssa the age is 34 years” (Cr = 1, BER = 0) | ||

|  |  |

| Cr = 0.9914, BER = 0.2173 (audio.wav) | Cr = 0.9998, BER = 0.2468 (audio.mp3) | Cr = 0.9978, BER = 0.2911 |

| Extracted text (Cr = 1, BER = 0) | ||

|  |  |

| Cr = 0.9945, BER = 0.2062 (audio.wav) | Cr = 0.9818, BER = 0.2474 (audio.mp3) | Cr = 0.9856, BER = 0.2835 |

| Extracted text (Cr = 1, BER = 0) | ||

|  |  |

| Cr = 0.9995, BER = 0.2171 (audio.mp3) | Cr = 0.9998, BER = 0.2468 (audio.mp3) | Cr = 0.9979, BER = 0.3038 |

| Extracted text (Cr = 1, BER = 0) | ||

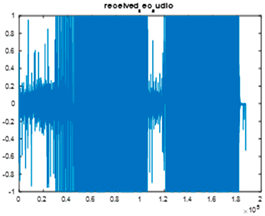

|  |  |

| Cr = 0.9013, BER = 0.3380 (audio.wav) | Cr = 0.9771, BER = 0.3601 (audio.wav) | Cr = 0.9760, BER = 0.2095 |

| Extracted text = “In The Name of Allah, the name of the case is Asmaa Abdelmonem Eyssa the age is 34 years” (Cr = 1, BER = 0) | ||

|  |  |

| Cr = 0.9673, BER = 0.3217 (audio.wav) | Cr = 0.7177, BER = 0.4843 (audio.mp3) | Cr = 0.9961, BER = 0.2927 |

| Extracted text (Cr = 1, BER = 0) | ||

|  |  |

| Cr = 0.8532, BER = 0.3588 (audio.wav) | Cr = 0.3538, BER = 0.4810 (audio.mp3) | Cr = 0.9844, BER = 0.2836 |

| Extracted text (Cr = 1, BER = 0) | ||

|  |  |

| Cr = 0.9888, BER = 0.3024 (audio.mp3) | Cr = 0.7178, BER = 0.4848 (audio.mp3) | Cr = 0.9903, BER = 0.3061 |

| Extracted text (Cr = 1, BER = 0) | ||

| SNR | Extracted Text |

|---|---|

| 0 dB | |

| 5 dB | |

| 10 dB | Inp |

| 15 dB | In The Name.of0Allah, the name of the(cmse is Asmai Abdelmonem Eyssa0 the(age2is 34 yecrs |

| 20 dB | In The Name!of0Allah, the name of the(cese is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 25 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 30 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 35 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

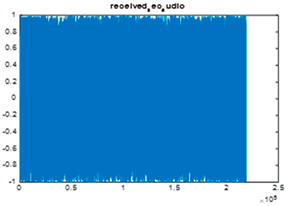

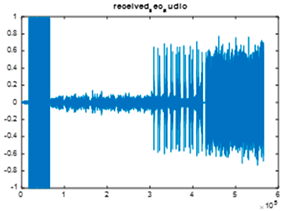

|  |  |

| Cr = 0.9160, BER = 0.1764 (audio.wav) | Cr = 0.9925, BER = 0.4067 (audio.wav) | Cr = 0.9925, BER = 0.3387 |

| Extracted text (Cr = 0, BER = 0.481) | ||

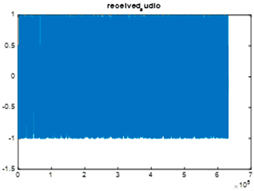

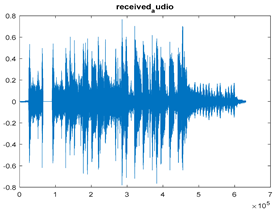

|  |  |

| Cr = 0.8966, BER = 0.2180 (audio.wav) | Cr = 0.9935,BER = 0.2469 (audio.mp3) | Cr = 0.9974, BER = 0.2911 |

| Extracted text (Cr = 1, BER = 0) | ||

|  |  |

| Cr = 0.9177, BER = 0.2068 (audio.wav) | Cr = 0.9813, BER = 0.2469(audio.mp3) | Cr = 0.9830, BER = 0.2835 |

| Extracted text (Cr = 1, BER = 0) | ||

|  |  |

| Cr = 0.9916, BER = 0.2211 (audio.mp3) | Cr = 0.9926, BER = 0.2469 (audio.mp3) | Cr = 0.9976, BER = 0.3038 |

| Extracted text (Cr = 1, BER = 0) | ||

|  |  |

| Cr = 0.6453, BER = 0.3376 (audio.wav) | Cr = 0.9580, BER = 0.3604 (audio.wav) | Cr = 0.9610, BER = 0.2121 |

| Extracted text = “In Uhe*nume of Ullch,!the name of`vhe case`is Asmaa Abdelmonem Gyssa the age is 34 years” (Cr = 0, BER = 0.0222) | ||

|  |  |

| Cr = 0.8158, BER = 0.3217 (audio.wav) | Cr = 0.7086, BER = 0.4843 (audio.mp3) | Cr = 0.9939, BER = 0.2930 |

| Extracted text = “In The Name of Allah, the name of the case is Asmaa Abdelmonem Eyssa the age is `34 years” (Cr = 0, BER = 0.0016) | ||

|  |  |

| Cr = 0.5486, BER = 0.3575 (audio.wav) | Cr = 0.3531, BER = 0.4811 (audio.mp3) | Cr = 0.9761, BER = 0.2839 |

| Extracted text (Cr = 0, BER = 0.0032) | ||

|  |  |

| Cr = 0.9679, BER = 0.3140 (audio.mp3) | Cr = 0.7104, BER = 0.4848 (audio.mp3) | Cr = 0.9881, BER = 0.3064 |

| Extracted text (Cr = 0, BER = 0.0016) | ||

| SNR | Extracted Text |

|---|---|

| 0 dB | Re(;ky7NPc3VmR?#FtNQ |

| 5 dB | In!The Name of Allah, the name of the case is Asmaa Abdelmonem Eyssa the age is 34 years |

| 10 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 15 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 20 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 25 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 30 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 35 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| SNR | Extracted Text |

|---|---|

| 0 dB | gg = gK5L|kt, |

| 5 dB | In The Name of Allah,0the name of0the case is Asmaa Abdelmonem Eyssa the(age is 34 years |

| 10 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 15 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 20 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 25 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 30 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| 35 dB | In The Name of0Allah, the name of the(case is Asmaa Qbdelmonem Eyssa 0the(age2is 34 years |

| Model 1 | ||

|  |  |

| Cr = 0.9522, BER = 0.1770 (audio.wav) PSNR = 39.3251 | Cr = 0.9984, BER = 0.2414 (audio.wav) PSNR = 42.0124 | Cr = 0.9830, BER = 0.2074 PSNR = 25.7678 |

| Extracted text = “In!The Name of Qllah, the name of0the case is Asmaa Abdelmonem Eyssa the(age is 34 years” (Cr = 0, BER = 0.0063) | ||

|  |  |

| Cr = 0.9924, BER = 0.2234 (audio.mp3) PSNR = 35.7209 | Cr = 0.9988, BER = 0.2468 (audio.mp3) PSNR = 43.3394 | Cr = 0.9977, BER = 0.3038 PSNR = 35.2872 |

| Extracted text (Cr = 1, BER = 0) | ||

| Model 2 | ||

|  |  |

| Cr = 0.7124, BER = 0.3379 (audio.wav) PSNR = 31.6726 | Cr = 0.9511, BER = 0.3604 (audio.mp3) PSNR = 27.9513 | Cr = 0.9697, BER = 0.2107 PSNR = 23.2955 |

| Extracted text (Cr = 0, BER = 0.0143) | ||

|  |  |

| Cr = 0.9716, BER = 0.3079 (audio.mp3) PSNR = 31.4113 | Cr = 0.7053, BER = 0.4848 (audio.mp3) PSNR = 18.4474 | Cr = 0.9876, BER = 0.3065 PSNR = 27.9792 |

| Extracted text (Cr = 0, BER = 0.0048) | ||

| Model 1 over an AWGN Channel Through an OFDM System Using (OFDM + DPSK) Modulation | ||

|  |  |

| Cr = 0.9893, BER = 0.2316 (audio.mp3) PSNR = 34.2353 | Cr = 0.9993, BER = 0.2468 (audio.mp3) PSNR = 44.9526 | Cr = 0.9979, BER = 0.3038 PSNR = 35.6745 |

| Extracted text (Cr = 1, BER = 0) | ||

|  |  |

| Cr = 0.9934, BER = 0.2235 (audio.mp3) PSNR = 36.3781 | Cr = 0.9989, BER = 0.2468 (audio.mp3) PSNR = 43.6093 | Cr = 0.9978, BER = 0.3038 PSNR = 35.6745 |

| Extracted text (Cr = 1, BER = 0) | ||

| Model 1 over the SUI6 channel through the OFDM system using (OFDM + DPSK) modulation | ||

|  |  |

| Cr = 0.9917, BER = 0.2509 (audio.mp3) PSNR = 35.3370 | Cr = 0.9997, BER = 0.2468 (audio.mp3) PSNR = 46.4769 | Cr = 0.9979, BER = 0.3038 PSNR = 35.6771 |

| Extracted text (Cr = 1, BER = 0) | ||

| Attack Type | LSB Domain | DWT Domain | Hybrid (LSB + DWT) | Remarks |

|---|---|---|---|---|

| Statistical steganalysis (e.g., chi-square, RS analysis) | Weak (easily detectable due to direct pixel modification) | Moderate (Coefficient changes are less obvious) | Strong (spatial + frequency embedding reduces detectability) | Hybrid disperses changes across domains, lowering statistical bias. |

| Differential attack (frame/image differencing) | Weak (differences amplified at high LSB embedding) | Moderate (frequency domain dampens minor variations) | Strong (distribution across domains resists direct differencing) | Improved resilience by balancing modifications between LSB and DWT. |

| Noise attack (Gaussian, Salt and Pepper) | Weak (bit error is highly impactful) | Moderate (frequency domain coefficients more robust) | Strong (error correction + redundancy improve survival) | OFDM + convolutional coding aid robustness. |

| Compression (JPEG/MP3) | Very weak (lossy compression destroys embedded bits) | Moderate (low-frequency subbands survive compression) | Strong (DWT selection + error correction mitigate loss) | Hybrid embeds redundantly in robust bands and LSBs. |

| Cropping/Partial data loss | Weak (localized LSB loss destroys payload) | Moderate (global transform gives partial recovery) | Strong (redundant embedding across domains increases recovery rate) | Hybrid ensures payload survival even under partial cropping. |

| Cachin’s information-theoretic measure (security level) | Low (p-value deviates significantly) | Moderate (closer to uniform distribution) | High (embedding imperceptibility enhanced) | Hybrid meets stronger security criteria under Cachin’s framework. |

| Criterion | LSB (Spatial Domain) | DWT (Transform Domain) | Hybrid LSB–DWT (Proposed) |

|---|---|---|---|

| Embedding capacity | High (can embed more bits per pixel) | Moderate (limited by transform coefficients) | High–Moderate (capacity enhanced by LSB, controlled by DWT to preserve quality) |

| Imperceptibility | Very high (changes occur in insignificant pixel bits) | High (modifications in the frequency domain are less visible) | Very high (imperceptibility preserved via balanced embedding in both domains) |

| Robustness | Low (fragile against compression, filtering, scaling) | High (robust against compression, noise, and filtering) | High (DWT ensures robustness; LSB provides additional redundancy for improved error tolerance) |

| Security | Low (easy to detect by statistical analysis) | Higher (frequency-domain embedding is harder to detect) | Higher (dual-domain embedding increases resistance against steganalysis) |

| Computational complexity | Low: O(n) for embedding/extraction | for DWT decomposition | , dominated by DWT, but with lightweight LSB stage, maintaining efficiency |

| Space complexity | O(n) (only pixels) | O(n) (requires storing transform coefficients) | O(n) (hybrid requires storage of both pixel data and transform coefficients, still linear in input size) |

| Overall trade-off | High capacity, low robustness | High robustness, moderate capacity | Balanced capacity, imperceptibility, and robustness with manageable computational overhead |
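To make the dual-domain idea in the comparison above concrete, the sketch below hides payload bits in the least significant bits of wavelet detail coefficients. It is an illustration under stated assumptions, not the authors' Model 2: it uses a single-level integer Haar transform in lifting form so that the round trip is exactly reversible on integer samples, and it does not guard against sample overflow. The wavelet family, decomposition level, and coefficient selection actually used in the paper are not given in this section, and all function names are hypothetical.

```python
def haar_forward(samples):
    """One level of the integer Haar transform (lifting form)."""
    if len(samples) % 2:
        raise ValueError("need an even number of samples")
    approx, detail = [], []
    for a, b in zip(samples[0::2], samples[1::2]):
        d = a - b              # detail (difference) coefficient
        s = b + (d >> 1)       # approximation; >> floors for negative ints too
        approx.append(s)
        detail.append(d)
    return approx, detail

def haar_inverse(approx, detail):
    """Exact inverse of haar_forward on integers."""
    samples = []
    for s, d in zip(approx, detail):
        b = s - (d >> 1)
        a = d + b
        samples.extend((a, b))
    return samples

def embed_in_detail(cover, bits):
    """Write one payload bit into the LSB of each leading detail coefficient."""
    approx, detail = haar_forward(cover)
    if len(bits) > len(detail):
        raise ValueError("payload longer than the number of detail coefficients")
    for i, bit in enumerate(bits):
        detail[i] = (detail[i] & ~1) | (bit & 1)
    return haar_inverse(approx, detail)

def extract_from_detail(stego, n_bits):
    """Recompute the detail coefficients and read back their LSBs."""
    _, detail = haar_forward(stego)
    return [d & 1 for d in detail[:n_bits]]

cover = [1000, 998, -37, -40, 5, 5, 0, 1]   # toy integer audio samples
payload = [1, 0, 1]
stego = embed_in_detail(cover, payload)
recovered = extract_from_detail(stego, len(payload))
```

Each function makes a single pass over the samples, so this one-level sketch runs in time linear in the input length. Changing only the LSB of a detail coefficient alters at most one sample of each pair by one quantization step.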

| Model | SNR (AWGN channel) | Reconstructed Text | Reconstructed Sec_Image | Reconstructed Sec_Audio | Reconstructed Stego_Audio |
|---|---|---|---|---|---|
| Ref. [17] | 10 dB | -- | -- | Cr = 0.841 | PSNR = 32.61 |
| Model 1 | 10 dB | -- | -- | Cr = 0.9998 | PSNR = 48.9 |
| Model 2 | 10 dB | -- | -- | Cr = 0.9771 | PSNR = 37.32 |
| Ref. [31] | 15 dB | -- | Cr = 0.964 | -- | -- |
| Model 1 | 15 dB | -- | Cr = 0.965 | -- | -- |
| Model 2 | 15 dB | -- | Cr = 0.921 | -- | -- |
| Ref. [6] | 6 dB | -- | -- | PSNR = 36.10 | -- |
| Model 1 | 6 dB | -- | -- | PSNR = 44.9526 | -- |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hamdi, A.A.; Eyssa, A.A.; Abdalla, M.I.; ElAffendi, M.; AlQahtani, A.A.S.; Ateya, A.A.; Elsayed, R.A. Improving Audio Steganography Transmission over Various Wireless Channels. J. Sens. Actuator Netw. 2025, 14, 106. https://doi.org/10.3390/jsan14060106
