The RoboPeak A1M8 laser rangefinder from Slamtec Co., Ltd. was selected for the experimental research [

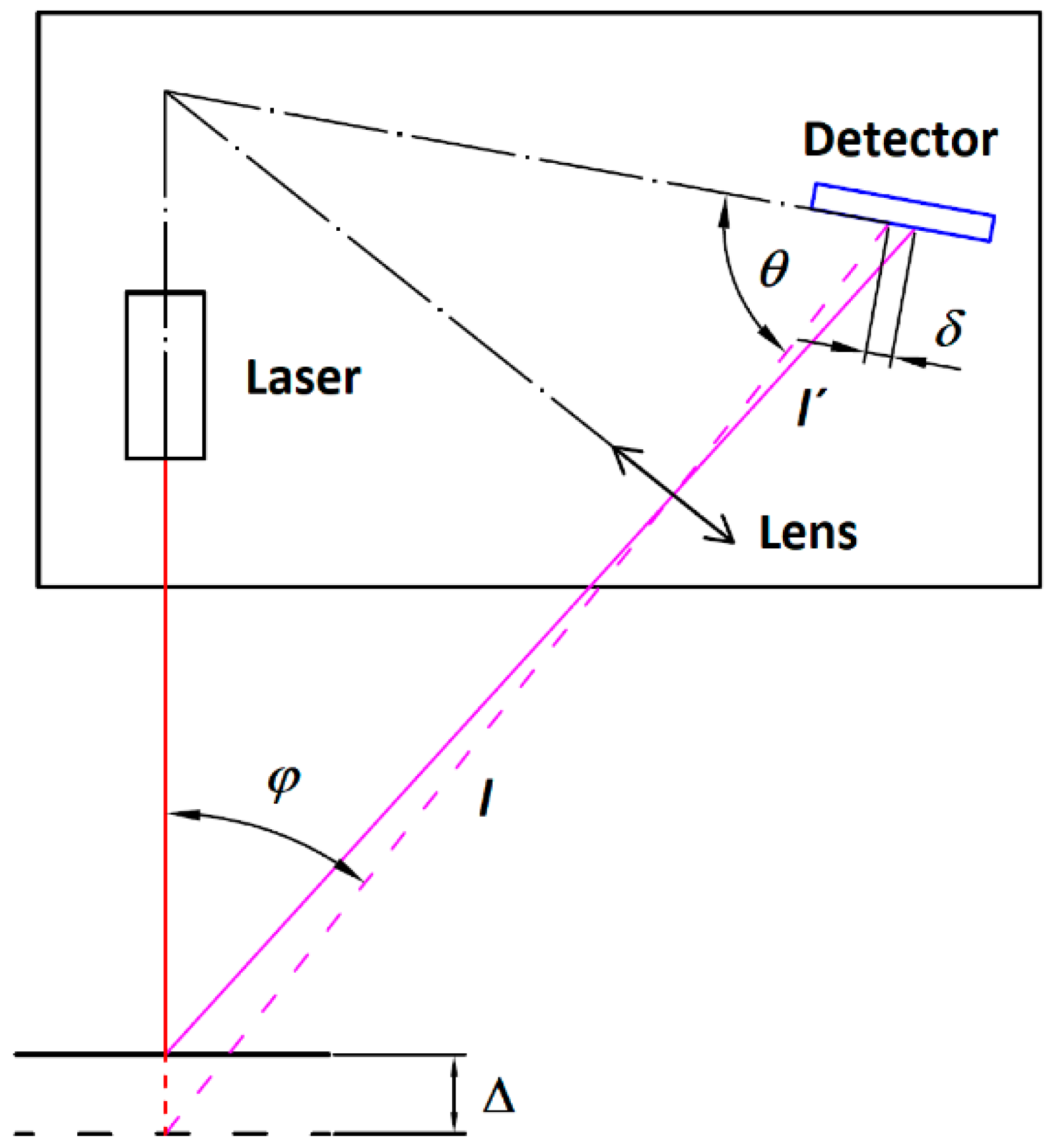

30]. The system of this device can perform 360-degree environmental scanning at a range of up to 12 m, with a standard rotation of range finder module (laser spot onto the target); the reflected light falls on the receiver element at an angle depending on the distance. The principle of the laser triangulation sensor is presented in

Figure 1 [

31]. Δ is the geometric relationship between the two laser spot positions (initial and shifted due to the object displacement) and the corresponding image displacement in the detector δ. The device has the following optical characteristics: wavelength λ = 795 nm, laser power is 5 mW, and pulse duration 300 μs [

32].

I and I″ are the optical paths from the object to the receiver before and after the object shift, whereas φ is the angle between the laser beam and the axis of the receiver imaging optics.

The light source is a low-power (<5 mW) infrared laser controlled by modulated pulses. In addition, this model has integrated high-speed environmental image acquisition and processing hardware. The system can take 2000 measurements per second and process the measurement data to create a two-dimensional point map. The resolution of RoboPeak A1M8 can reach up to 1% of the actual measuring distance. A belt attached to the electric motor pulley drives the unit that scans the environment.

Experiments are carried out in both clear (no rain) and rainy conditions by scanning a reference object (target, stationary sphere of 135 mm diameter) at different frequencies and storing the surrounding map data on the computer using the RP-LiDAR frame grabber software program (v1.12.0).

3.1. Test in Clear Weather Conditions

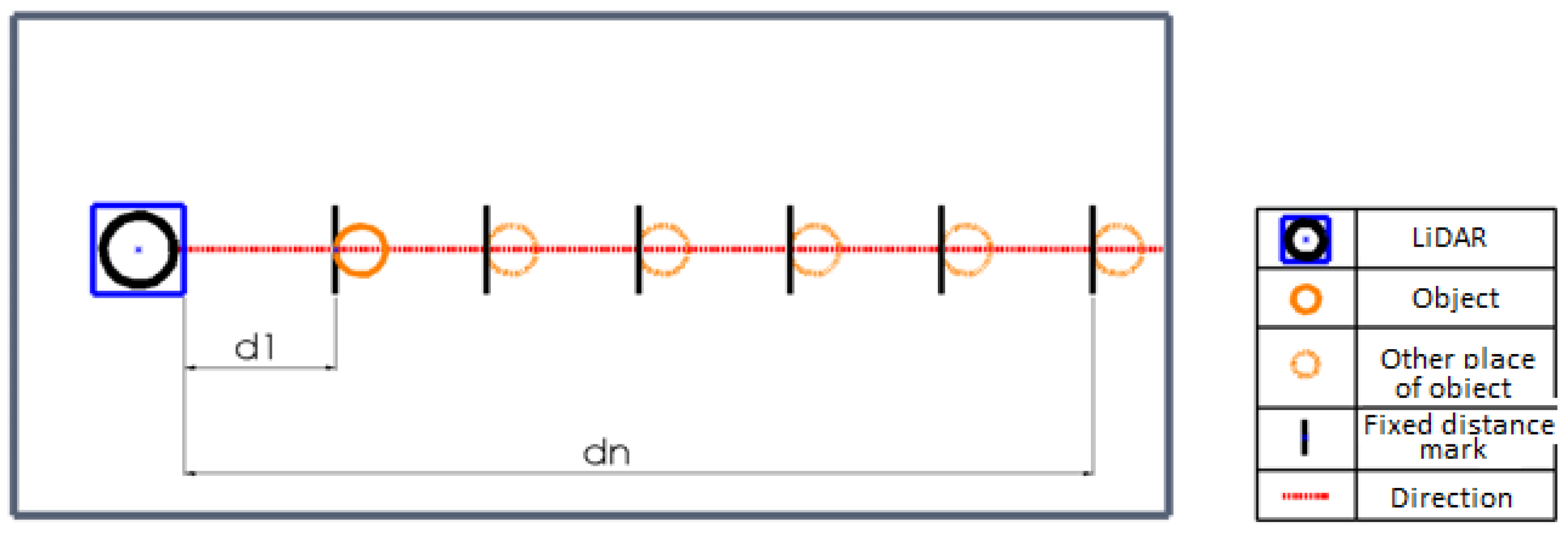

The setup used for the experimental evaluation of the LiDAR sensor performance in clear weather conditions is shown in Figure 2. The legend in the right part of the figure explains the symbols used.

The measurement data collected in this configuration are summarized in Table 1 for the set distance d = 1 m and in Table 2 for the set distance d = 6 m; the set distance is also given in the first column of both tables as d [m], the distance to the object under investigation.

In addition, f is the scanning frequency [Hz], i is the number of captured shape points of the object, and d is the distance [m] actually measured to the closest point of the target (which is treated as an obstacle in real use, so the shortest distance is the critical one). The tables also report the mean square error and the dispersion of the measurements.

Calculations of the RMS (Formula (3)) revealed that the largest distance errors occurred at a scanning frequency of 2 Hz, while the smallest were obtained at 5.5 or 7 Hz (the RMS at 2 Hz was almost 10 times higher than at 7 Hz). The RMS therefore decreases as the scanning frequency increases (Table 1). At the closest distance (1 m), a scanning frequency of 5.5 or 7 Hz yields errors about 9 times smaller than those recorded at the longest distance (6 m); even there, the errors at high scanning frequencies are still 6 times smaller than at 2 Hz. This test revealed a clear dependence of the distance variation on the scanning frequency: the distances are most accurate at the higher frequencies of 5.5 and 7 Hz (Table 1, Figure 3).
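The error statistics discussed above can be sketched in a few lines. Formula (3) is not reproduced in this section, so the snippet assumes the common definition of the RMS as the root of the mean squared deviation from the reference distance, and takes the dispersion as the population variance around the sample mean. The function names and the readings are hypothetical and serve only as an illustration.

```python
import math


def rms_error(measured, reference):
    """Root mean square deviation of measured distances from a reference distance.

    Assumed definition; the paper's Formula (3) may differ in detail.
    """
    if not measured:
        raise ValueError("need at least one measurement")
    return math.sqrt(sum((m - reference) ** 2 for m in measured) / len(measured))


def dispersion(measured):
    """Population variance of the measurements around their own mean."""
    if not measured:
        raise ValueError("need at least one measurement")
    mean = sum(measured) / len(measured)
    return sum((m - mean) ** 2 for m in measured) / len(measured)


# Hypothetical readings in metres for a target placed at 1 m (illustrative only).
readings = [0.99, 1.01, 1.00, 0.98, 1.02]
print(f"RMS error:  {rms_error(readings, 1.0) * 1000:.2f} mm")
print(f"Dispersion: {dispersion(readings):.6f} m^2")
```

Measuring the RMS against the set distance, rather than against the sample mean, keeps a systematic offset of the sensor visible in the error figure.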

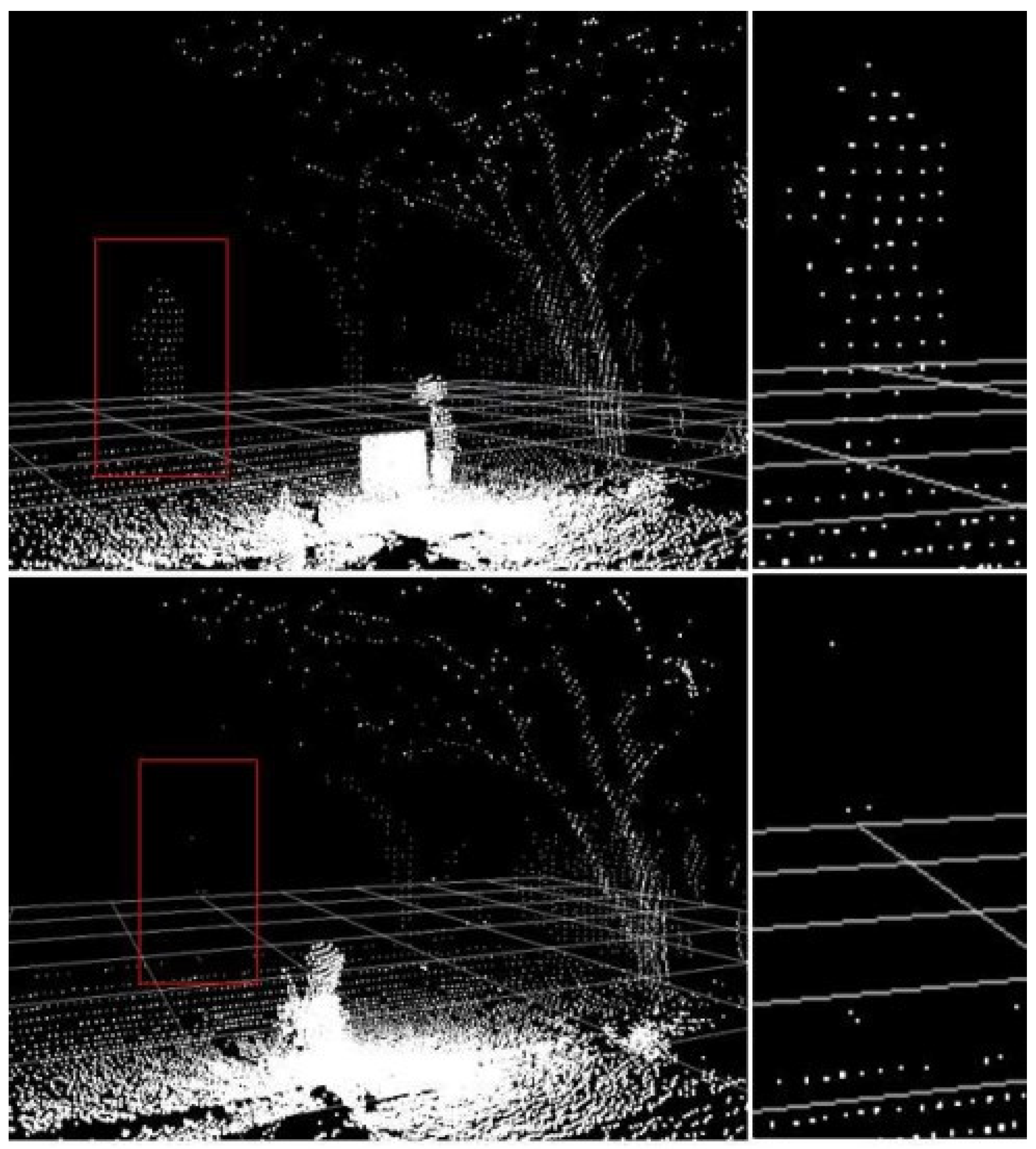

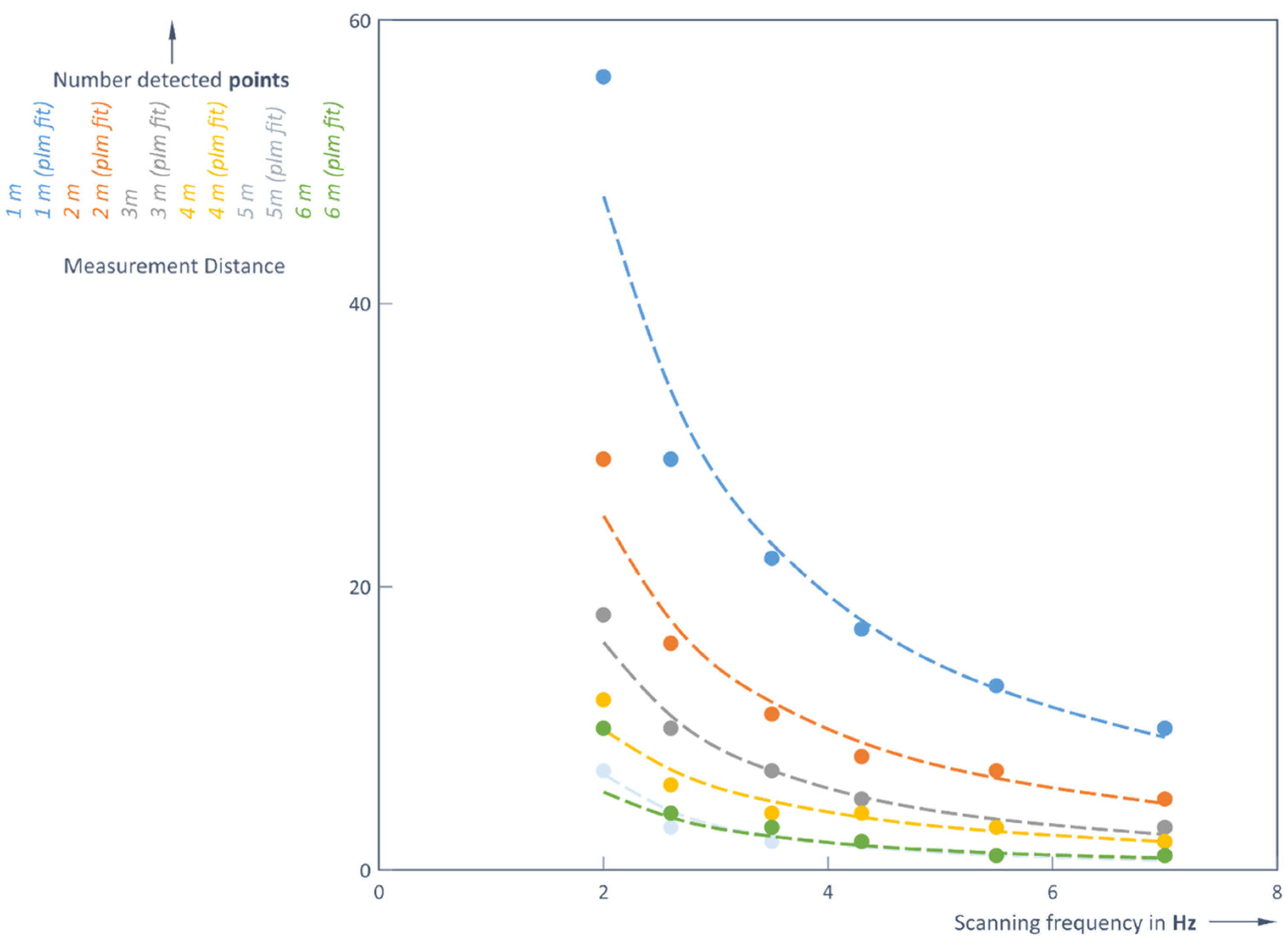

The number of points that the LiDAR receiver can detect determines the accuracy of the shape of the object. This number strongly depends on both the distance to the object and the scanning frequency of the LiDAR (Figure 4). Here, as a first example, the silhouette of a person is marked with a red frame (the magnification is shown to the right of the complete picture). It can be seen very clearly that in the case of rain at the given distance (5 m), the silhouette of a person is difficult to recognize. Although most points of the object were registered at lower frequencies (2 and 2.6 Hz), these frequencies resulted in a larger loss of distance accuracy (Table 1): when the real distance was 1 m, the LiDAR captured distances of 0.944 m and 0.971 m.

The upper and lower images show the situation without rain and with rain, respectively. A scanning frequency of 7 Hz was used, with measurements taken at a distance of 5 m. Rain intensity is 9.84 mm per hour. Rain fell on the person and between them and the LiDAR. The tree was unaffected by the rain.

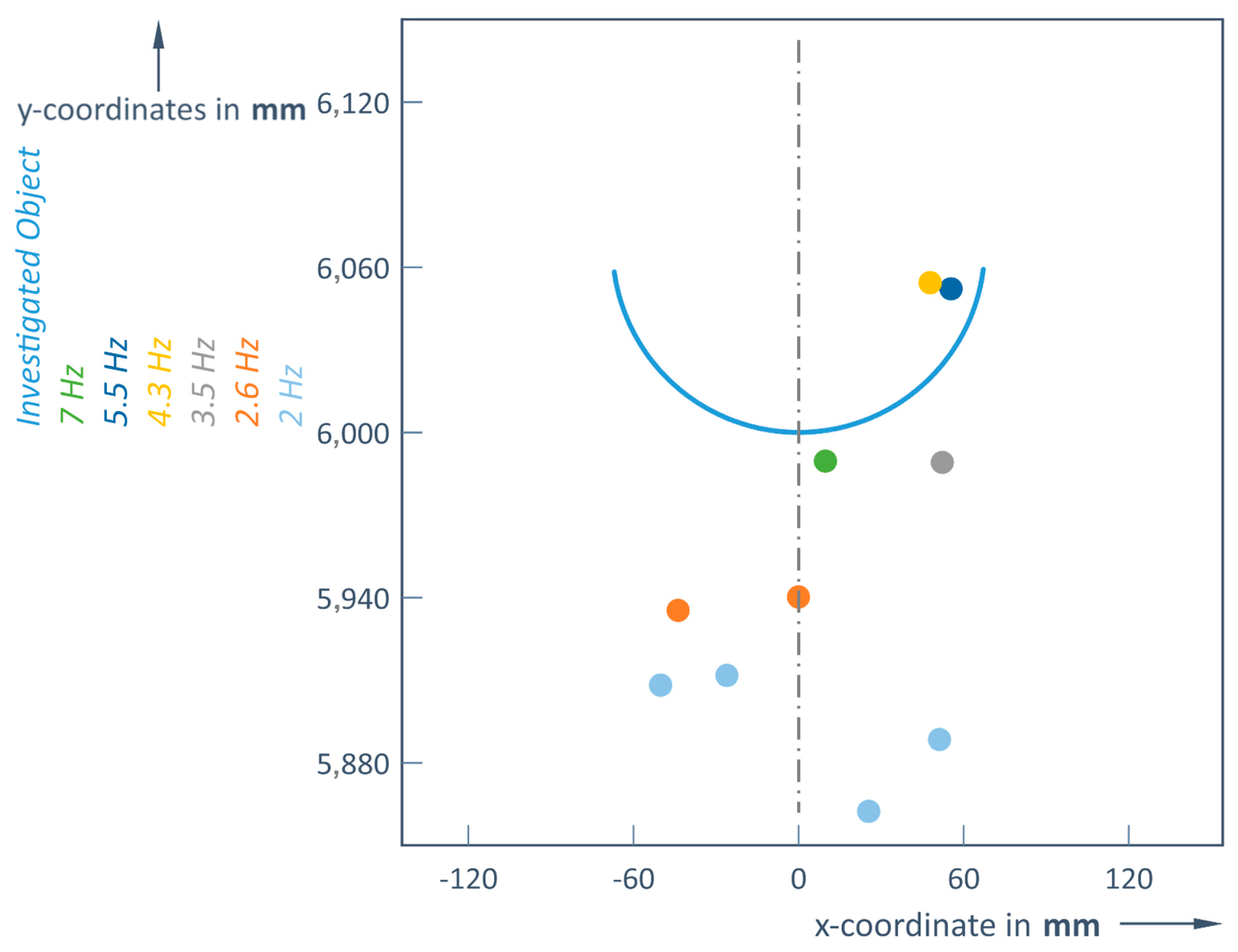

Therefore, it is important to determine an optimal scanning frequency depending on the distance to the object. For an object at a distance of 6 m, the detector can only pick up more than one reflected signal from that object if a frequency of 4.3 Hz or less is used. This means that at higher scanning frequencies (5.5 or 7 Hz), the object (again a ball of 135 mm diameter) becomes practically invisible at this 6 m distance limit; the Slamtec RoboPeak A1M8 LiDAR could only detect 1 point (Table 2, Figure 5).

From this, it can be concluded that there must be an object at the measured distance. This information is still useful for rough obstacle detection, but no longer for control routines, as the shape and size information of the object is lost.

3.2. Test in Rainy Weather Conditions

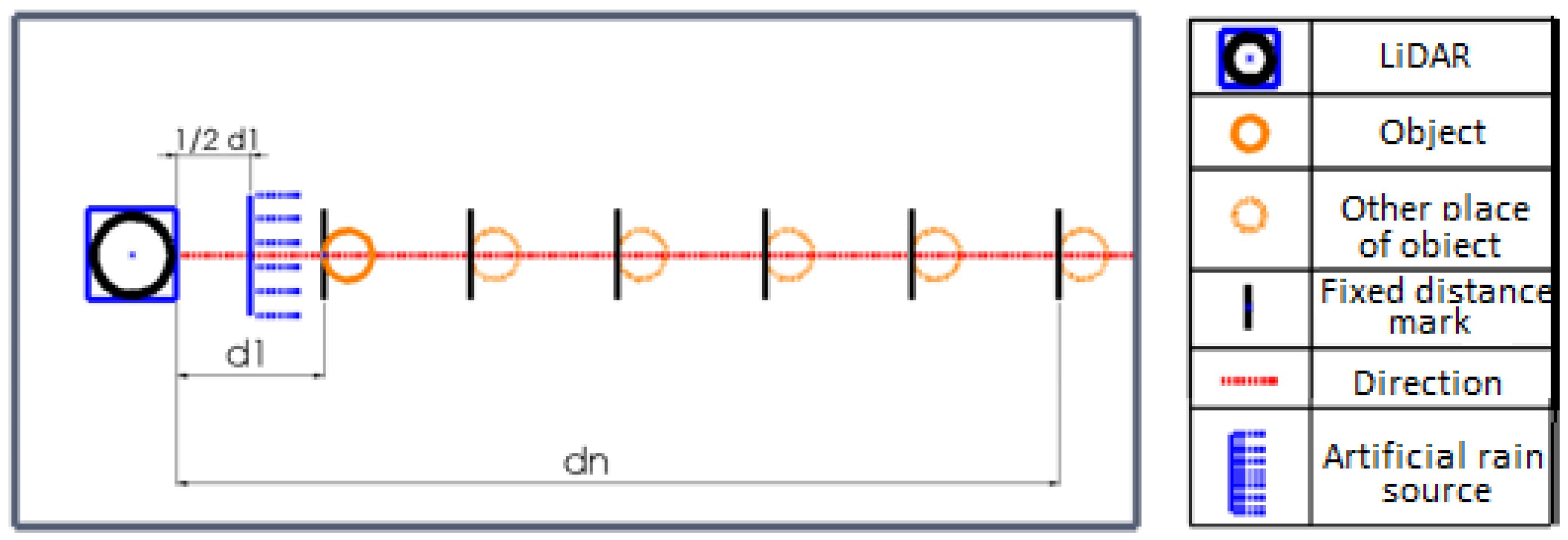

This experimental scenario is similar to the one for clear weather conditions, but artificial rain is generated between the LiDAR transmitter and the object (Figure 6). The artificial rain source was placed next to the sensor, between the sensor and the object.

The object was again placed at distances from 1 to 6 m. The frequencies were selected based on the specifications of the LiDAR device used [30]. Technical characteristics: sampling at 7 Hz, 6 m measurement range, 360-degree scan.

To determine the direct influence of rain on the measured distance to the obstacle and on its detected shape, the experiment was performed in two contrasting weather conditions: clear weather and heavy rain. The measurements are performed instantaneously at discrete frequencies. The controlled variables are the distance to the object in meters, measured to the closest point of the target, and the number of captured shape points of the object. The results at distances (d) of 1 m and 6 m are shown in Table 3 and Table 4, respectively.

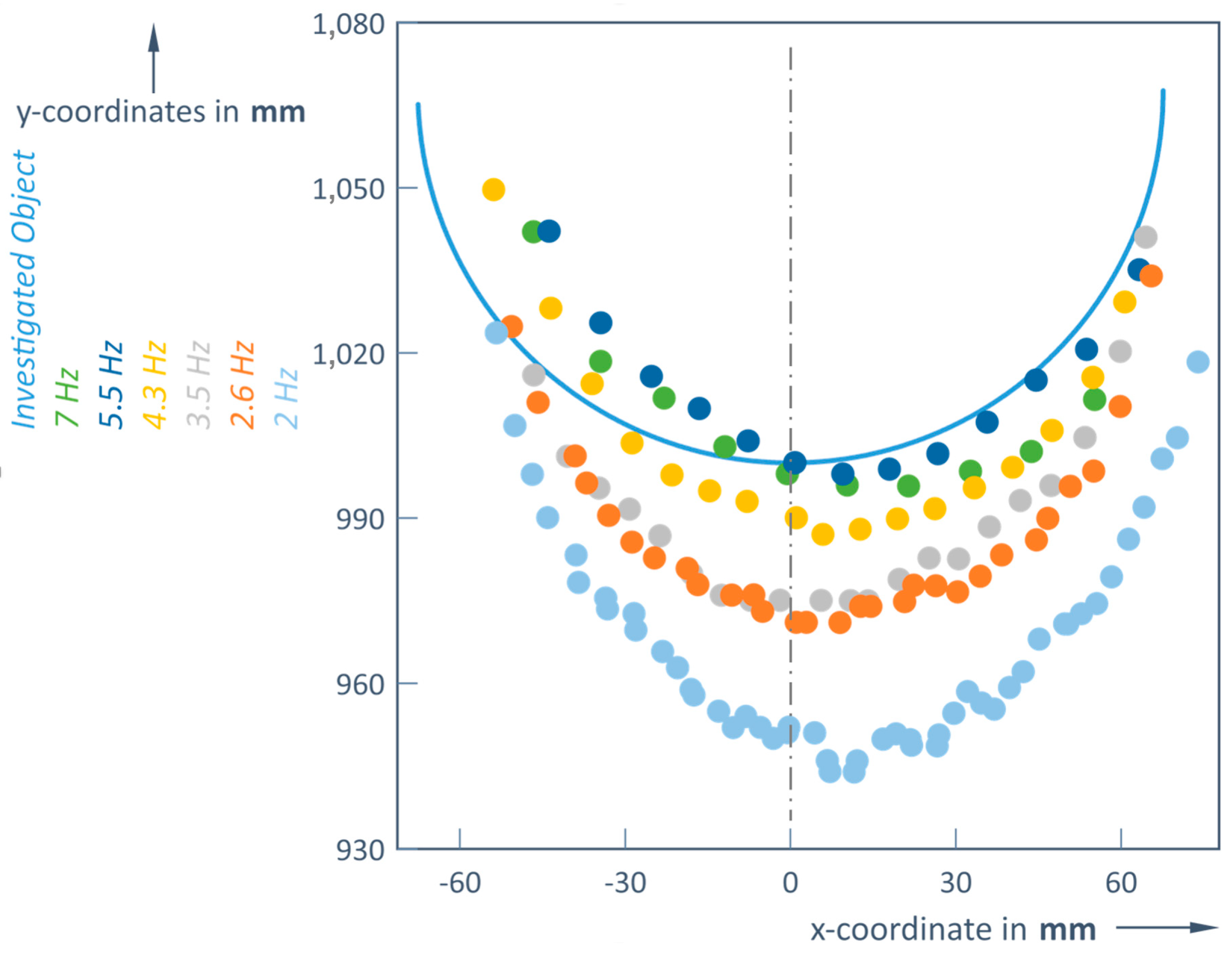

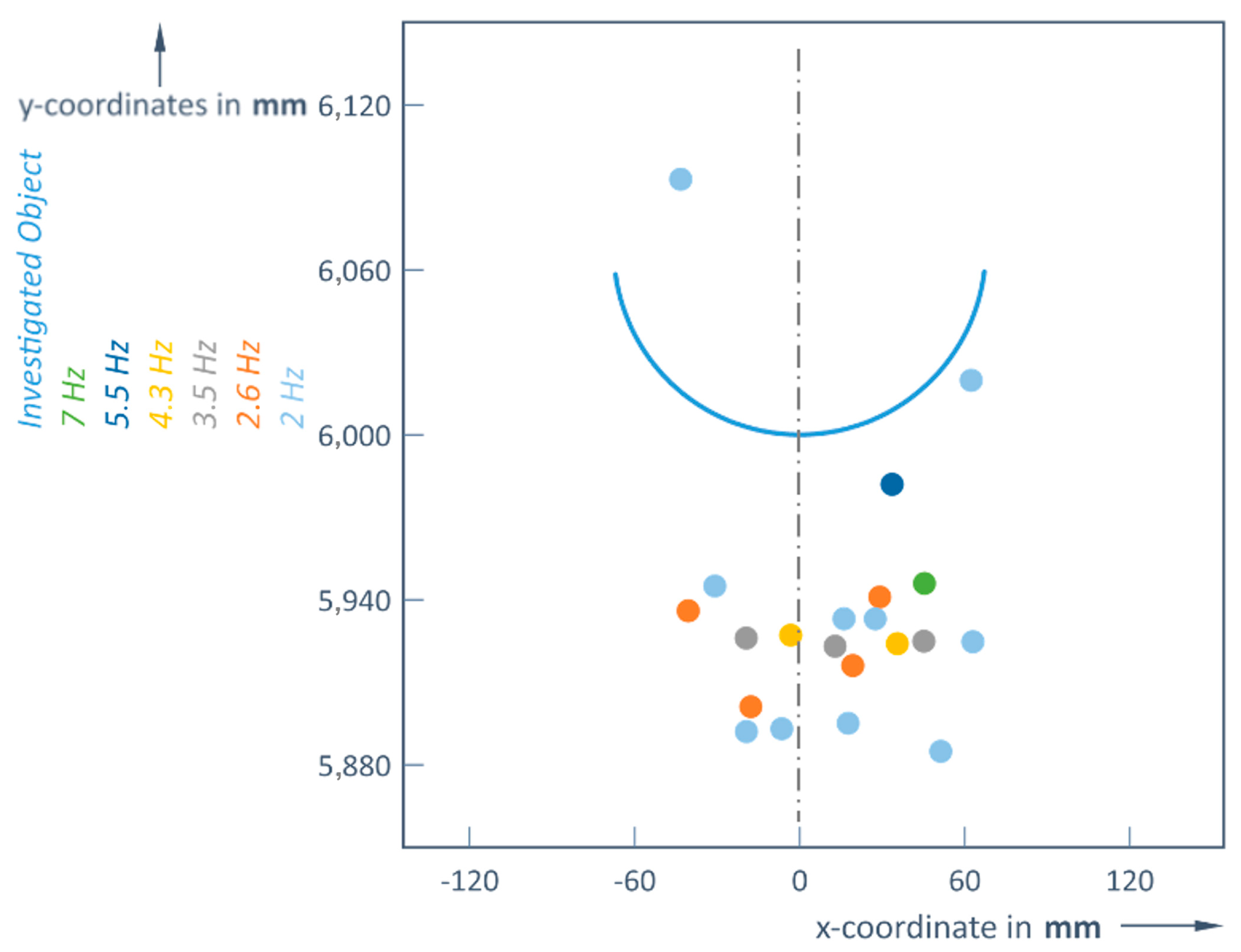

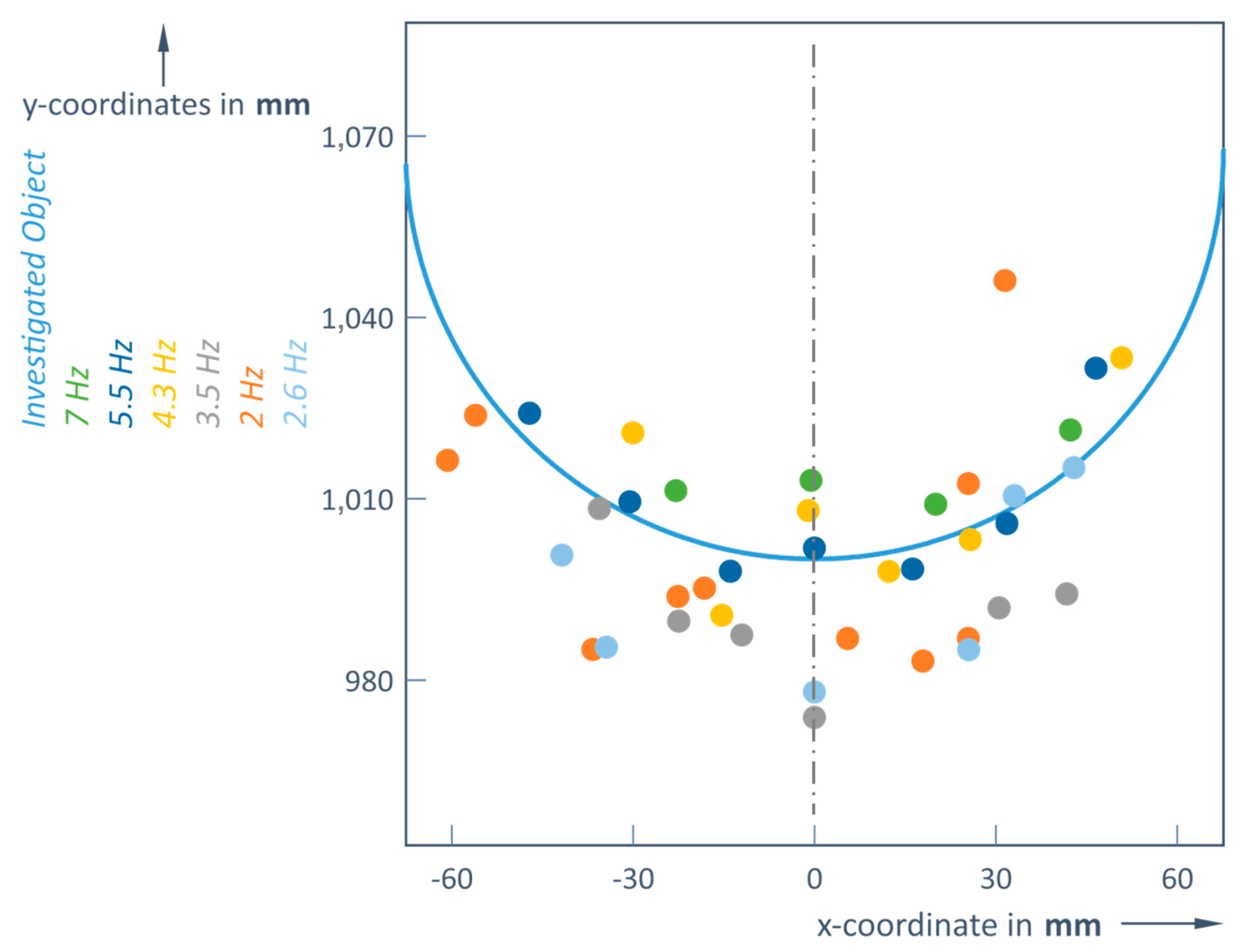

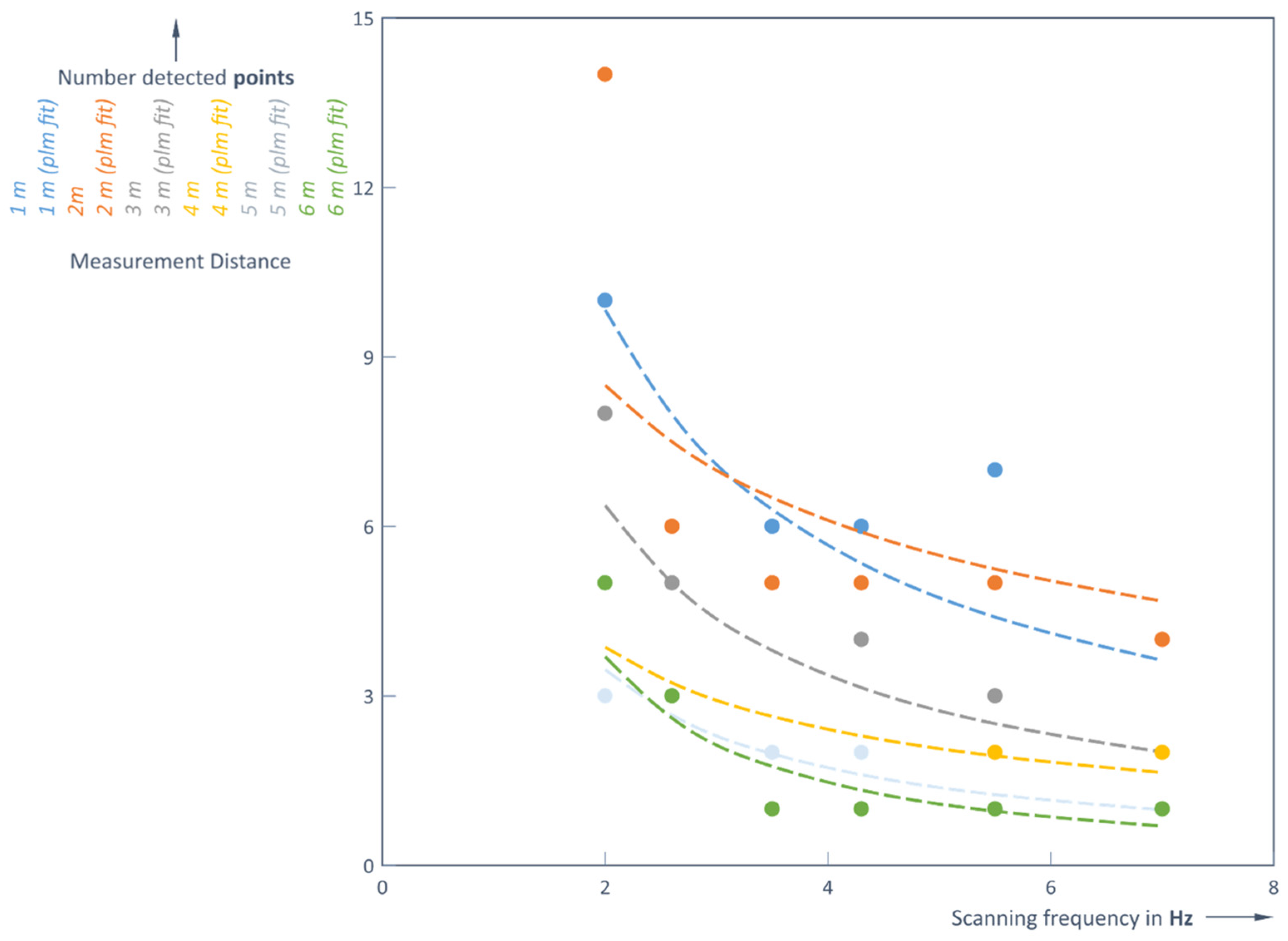

Rainy weather data (Table 3) revealed that when measuring at 1 m, the distance is determined quite accurately using higher frequencies (5.5 and 7 Hz), which is very similar to the clear weather experiment (the 1 m reference distance was measured as 0.998 m and 1.009 m). The object becomes invisible at a distance of 6 m when the scanning frequency is 3.5 Hz or higher, while at a frequency lower than 2.6 Hz the object is detected but its shape is lost (Table 4). Thus, with increasing distance, the dispersion of points recorded from the object increases as well (Figure 7 and Figure 8). However, at a 2 Hz LiDAR scan rate, the RMS at 1 m is about 3 times smaller than under good weather conditions (Table 1 and Table 3). This could be explained by the self-compensation effect on multiple paths due to heavy rain.

It can be clearly seen that the number of points of the detected object decreases, since the field increases with the increasing distance at a constant angle of view, resulting in fewer points being detected for more distant objects (

Table 1,

Table 2,

Table 3,

Table 4 and

Figure 3,

Figure 5,

Figure 7 and

Figure 8, correspondingly). This inverse dependence is seen in

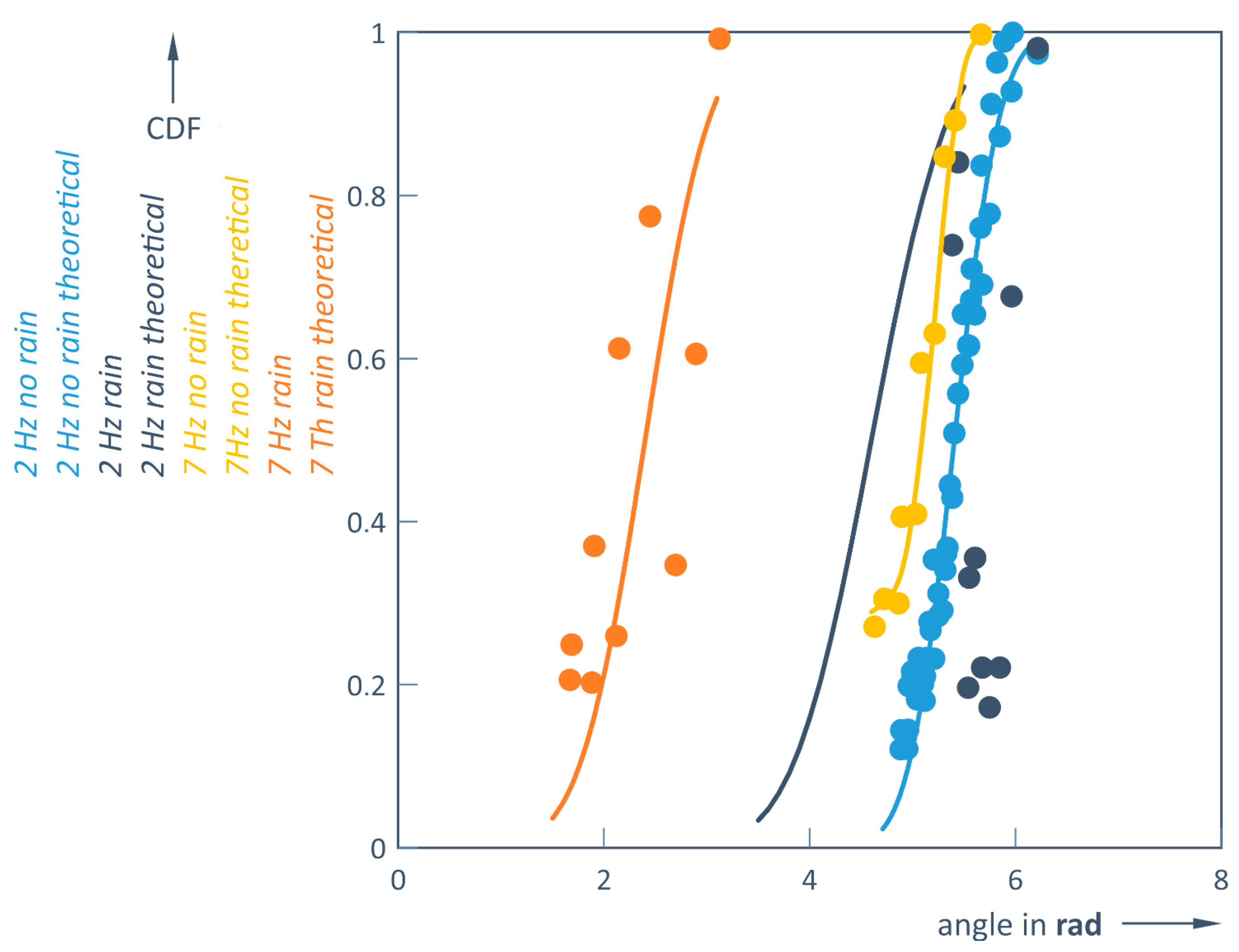

Figure 9 and

Figure 10 for both weather conditions.
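The geometric argument above can be made concrete with a rough estimate. The sketch below is not Formula (9) or (10); it is an idealised calculation that assumes the 2000 measurements per second are spread evenly over one revolution and that every beam hitting the 135 mm sphere returns a valid point. Rain, surface reflectivity and detection thresholds are ignored, and all function names are hypothetical.

```python
import math

SAMPLE_RATE_HZ = 2000.0    # measurements per second, as stated for the A1M8
TARGET_DIAMETER_M = 0.135  # reference sphere used in the experiments


def angular_step_deg(scan_freq_hz, sample_rate_hz=SAMPLE_RATE_HZ):
    """Angle between consecutive samples, assuming evenly spaced sampling."""
    return 360.0 * scan_freq_hz / sample_rate_hz


def subtended_angle_deg(distance_m, diameter_m=TARGET_DIAMETER_M):
    """Angle under which the sphere is seen; distance is taken to its centre."""
    radius = diameter_m / 2.0
    if distance_m <= radius:
        raise ValueError("distance must exceed the sphere radius")
    return math.degrees(2.0 * math.asin(radius / distance_m))


def expected_points(distance_m, scan_freq_hz):
    """Idealised estimate of samples landing on the target per revolution."""
    return subtended_angle_deg(distance_m) / angular_step_deg(scan_freq_hz)


for d, f in [(1.0, 2.0), (1.0, 7.0), (6.0, 2.0), (6.0, 7.0)]:
    print(f"d = {d} m, f = {f} Hz: about {expected_points(d, f):.1f} points")
```

Under these assumptions the estimate falls inversely with both distance and scanning frequency, and it drops to about one point at 6 m and 7 Hz, which is in line with the single point reported for that configuration.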

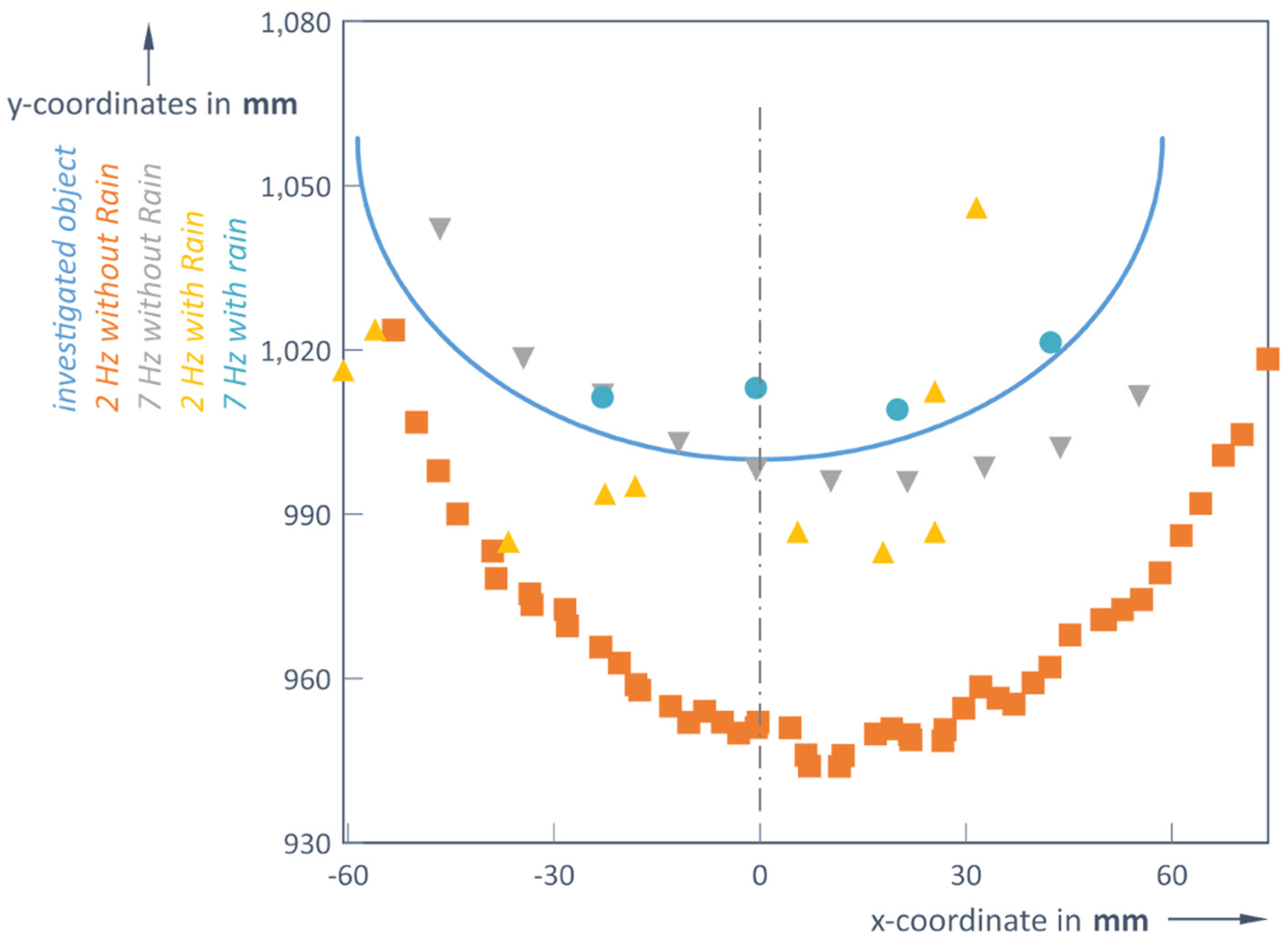

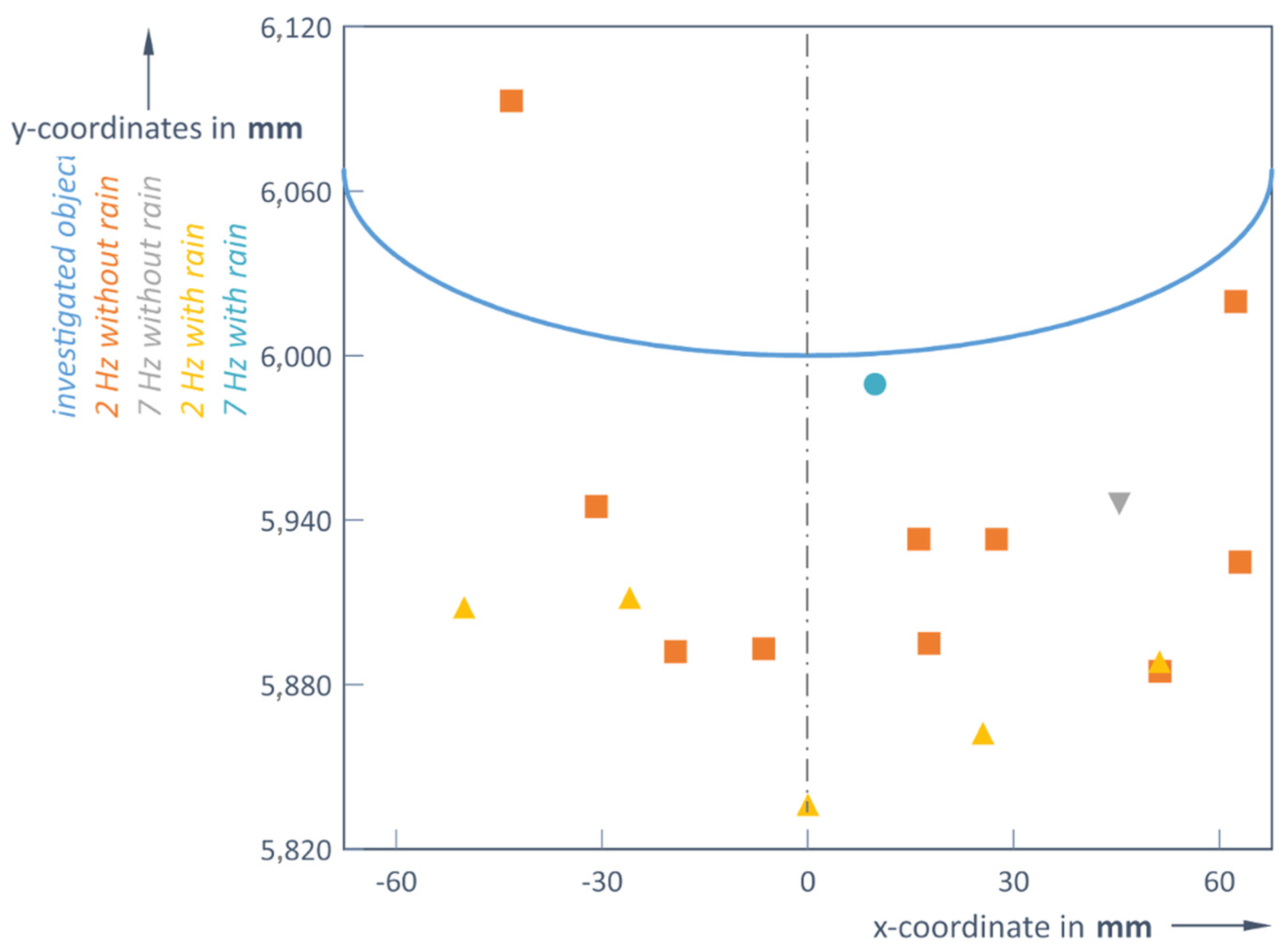

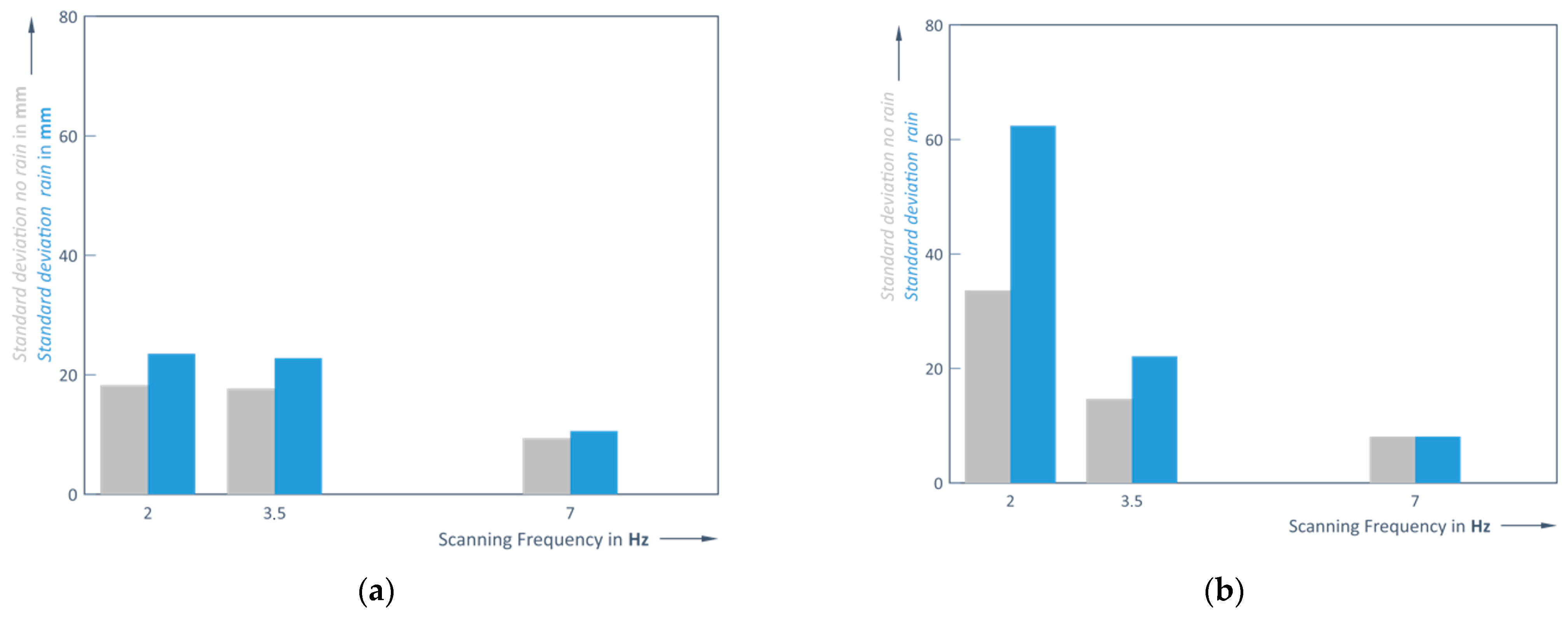

Heavy rain increases the errors in detecting the precise distance to the object and its shape, compared with clear weather (Figure 11 and Figure 12). It yields fewer points of the object shape, which is especially visible when measuring at a distance of 1 m (Figure 11).

When measuring at 6 m, an object is poorly detected in both clear conditions and heavy rain, and the frequency used does not play a significant role (Figure 12).

When the distance increases, the number of points of the detected object decreases significantly. At a number of detected points per object below five, just the presence of the object and distance to it is detected, but not its shape and size. The same trend occurs at all scanning frequencies, as also shown in the following two diagrams.
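The five-point threshold mentioned above can be expressed as a simple decision rule. This is a minimal sketch; the function name and the returned labels are hypothetical, and only the threshold value of five comes from the text.

```python
MIN_POINTS_FOR_SHAPE = 5  # below this count, shape and size are not recoverable


def interpret_detection(n_points):
    """Classify what a given number of detected object points allows."""
    if n_points < 0:
        raise ValueError("point count cannot be negative")
    if n_points == 0:
        return "not detected"
    if n_points < MIN_POINTS_FOR_SHAPE:
        return "presence and distance only"
    return "shape and size available"


for n in (0, 1, 4, 5, 20):
    print(n, "->", interpret_detection(n))
```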

Such dependencies of the number of points can be approximated very well by these corresponding equations: without rain (9) and with rain (10):

where n and nr are the number of detected points without rain and in rainy conditions, respectively; d is the distance from the LiDAR to the object in meters (m); and f is the scanning frequency of the LiDAR sensor in hertz (Hz).

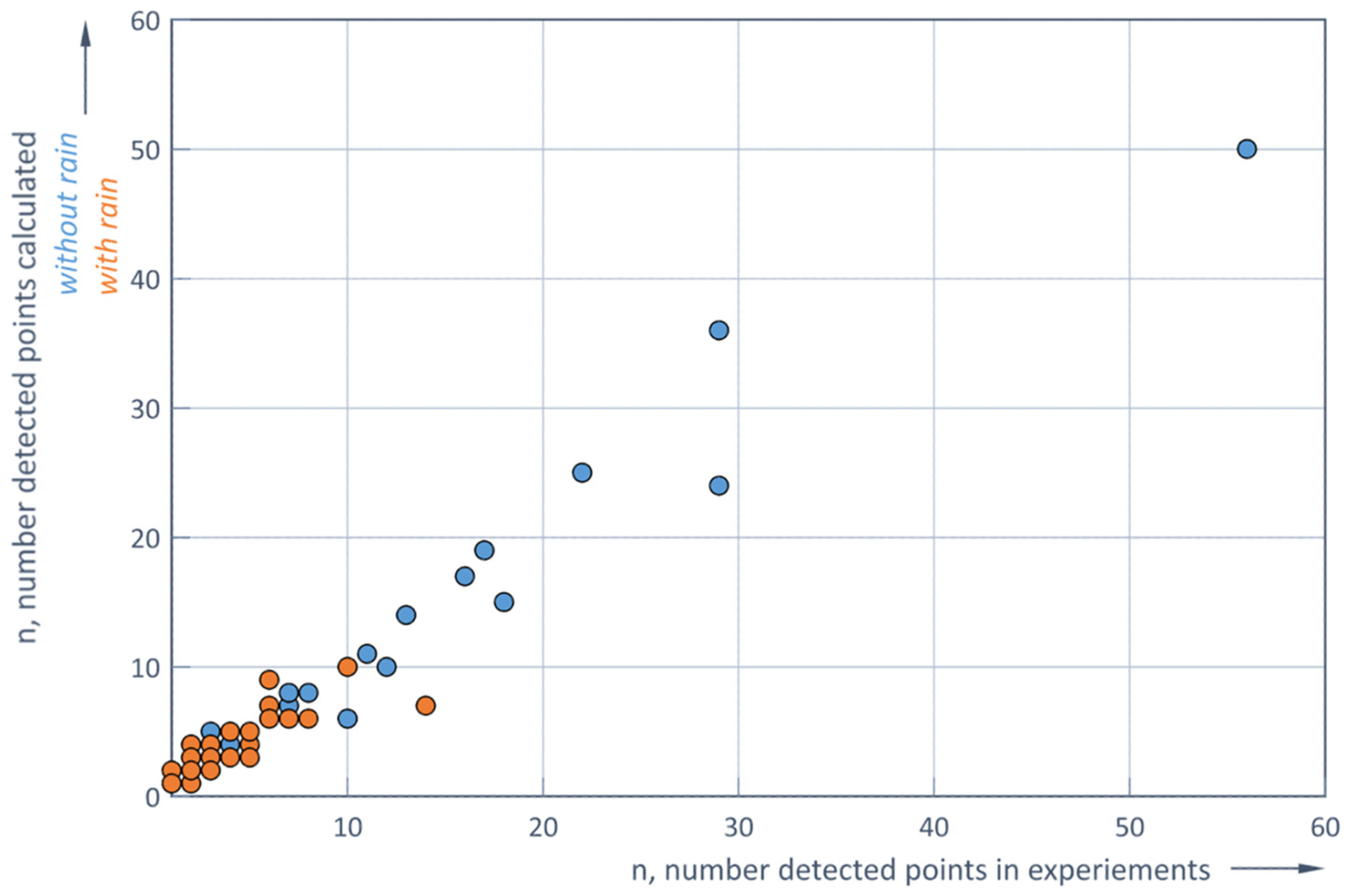

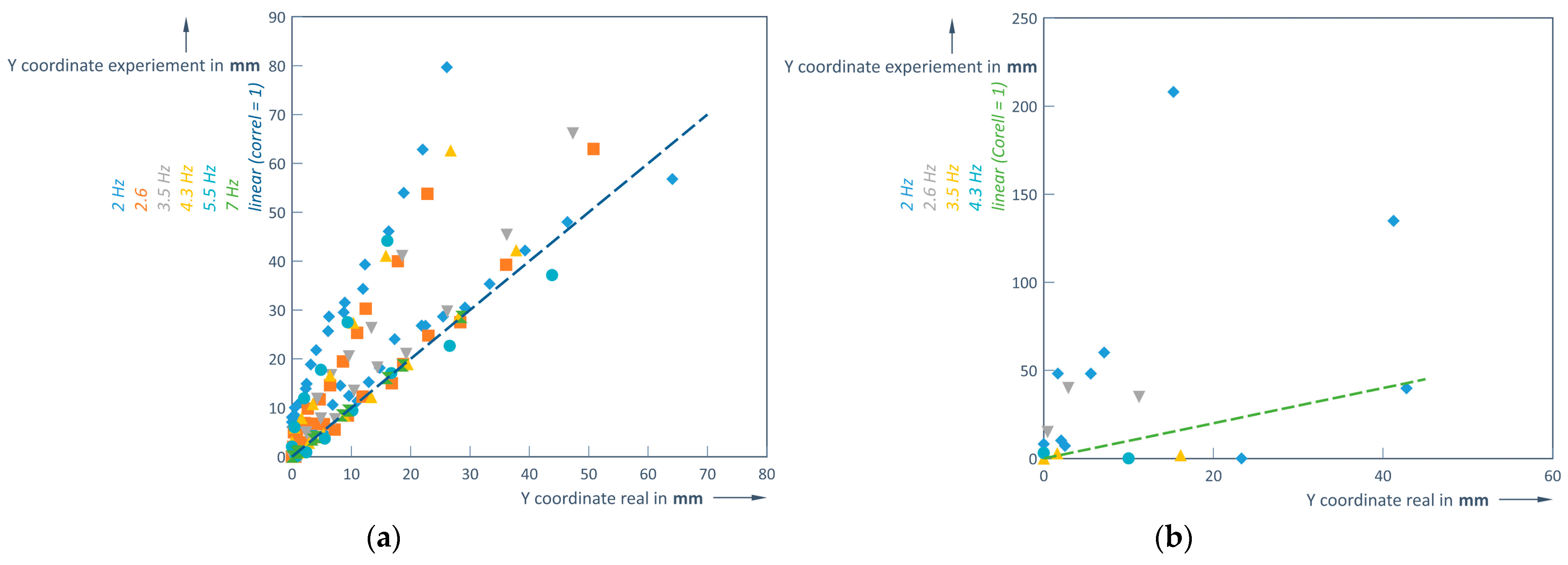

Figure 13 shows the correlation between the experimentally detected number of points and the number of points calculated using Formulas (9) and (10) for conditions without and with rain. The correlation coefficients are 0.98 without rain and 0.83 with rain. These strong correlations confirm the adequacy of both models, Formulas (9) and (10), with respect to the experimental results (Figure 13).
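A correlation check of this kind can be sketched as follows. The snippet computes the standard Pearson coefficient between measured and modelled point counts; the two lists are hypothetical placeholder values, not data from the tables, and the function name is likewise an assumption.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    if s_xx == 0 or s_yy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return s_xy / math.sqrt(s_xx * s_yy)


# Hypothetical point counts (illustrative only): experiment vs. model.
measured = [20, 12, 7, 4, 2]
modelled = [21, 11, 8, 4, 1]
print(f"r = {pearson_r(measured, modelled):.3f}")
```

A coefficient close to 1 indicates that the model reproduces the trend of the measurements; it does not by itself show that the absolute values agree.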

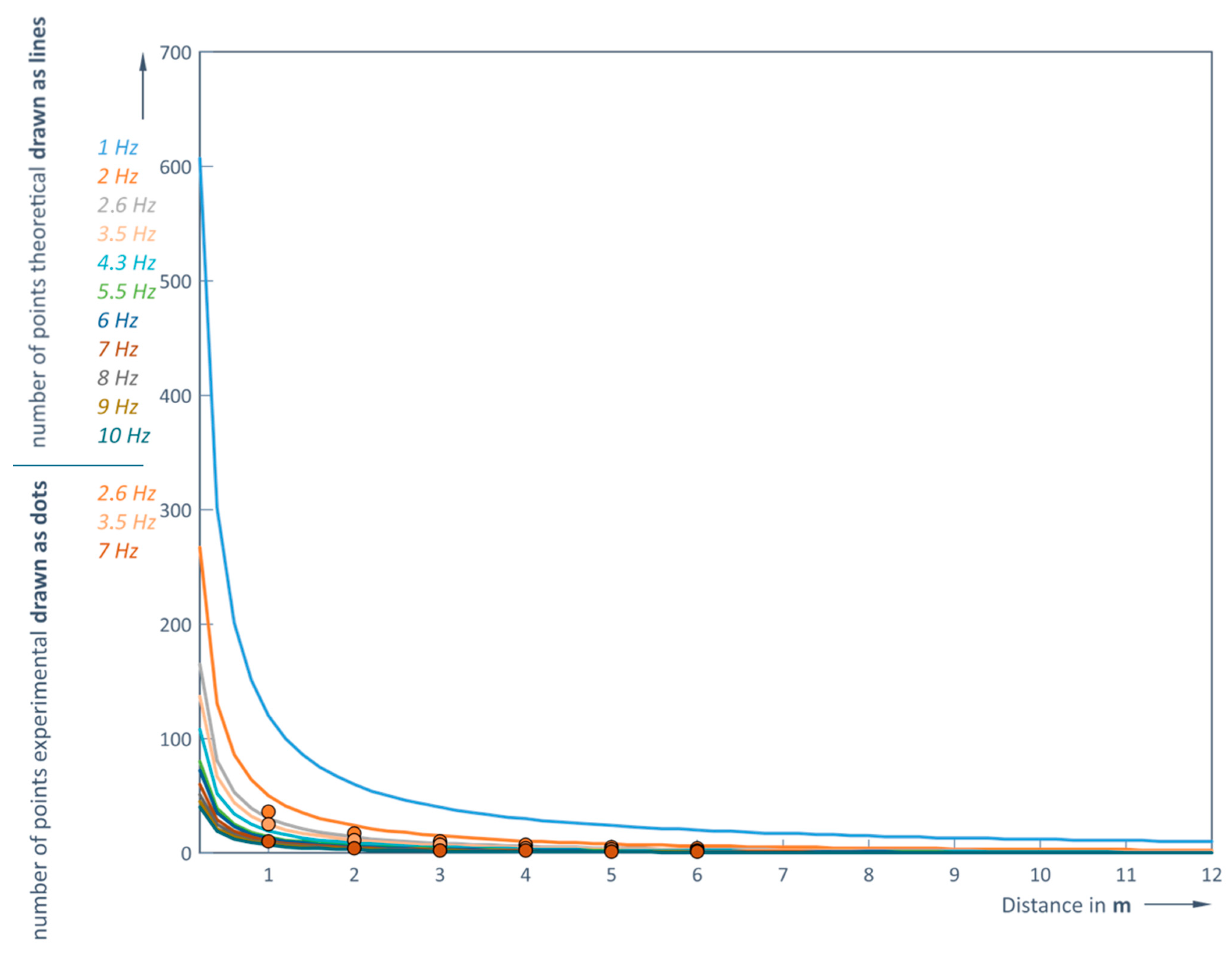

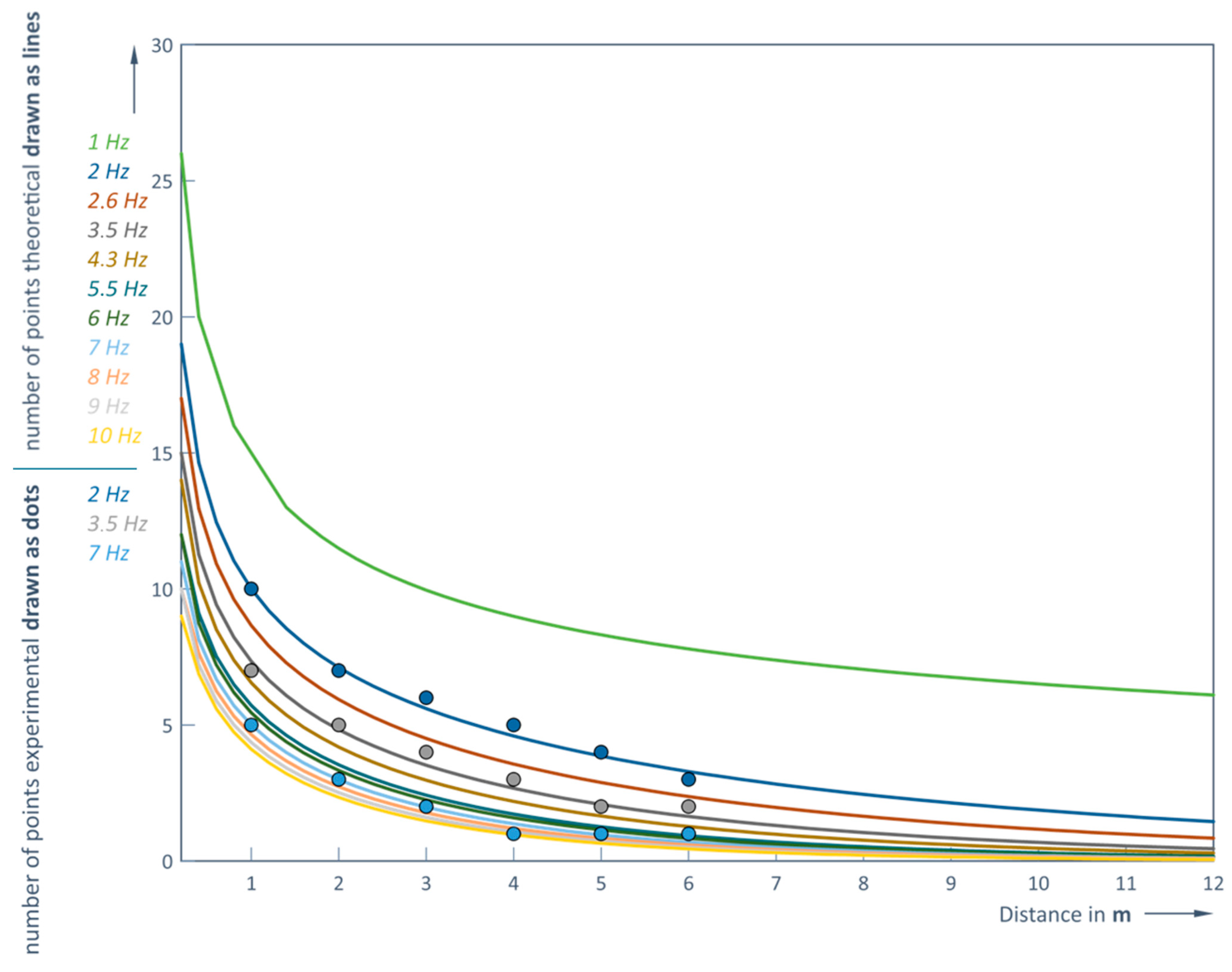

Thus, it is possible to predict the number of captured points that the sensor under study can detect depending on the distance and the LiDAR operating frequency without rain. The results of this prediction and the comparison with the experimental data are shown in Figure 14 (for the clear weather conditions) and in Figure 15 (for the rainy conditions).

It can be concluded that the modeled dependencies and experimental results correspond very well (Figure 14 and Figure 15). At a LiDAR operating frequency of 1 Hz (in clear weather conditions), the number of points recorded at the minimum distance of 0.2 m between the sensor and the obstacle is more than 600, and at the maximum distance of 12 m this number is reduced to 10 (Figure 14). However, at the maximum LiDAR operating frequency of 10 Hz, it is possible to capture a sufficient number of points (at least one) only at a distance of no more than 5 m (Table 5 and Table 6).

In rainy weather and at a LiDAR operating frequency of 10 Hz, the object points should no longer be recorded from 3.8 m onward (Figure 15). The actual values of the measured distance at 1 m are 0.983 m (clear weather) and 0.978 m (rainy weather), and at 6 m they are 5.836 m and 5.823 m, respectively. The influence of the rain is therefore quite small in these measurement conditions. The smallest measurement errors are obtained at 5.5 or 7 Hz. For example, the RMS at 1 m and 5.5 Hz is 4.42 mm, at 1 m and 7 Hz it is 8.18 mm, at 6 m and 5.5 Hz it is 27.18 mm, and at 6 m and 7 Hz it is 11.23 mm. Thus, in rainy weather conditions, when the measured object is 6 m away, a scanning frequency of 5.5 Hz yields errors (RMS) about 5–6 times smaller than at 2 Hz. Both the distance to the object and its shape are therefore determined much more accurately at higher frequencies.

The measured distance values obtained in this case after simulation at a distance of 1 m are strongly linearly correlated: the calculated Pearson correlation coefficient (Formula (4)) is 0.81. At a distance of 6 m the correlation coefficient is 0.49, so the correlation between the measurements is considered moderate. In this case, the evaluation of the results includes only the measurements at 2 and 2.6 Hz.

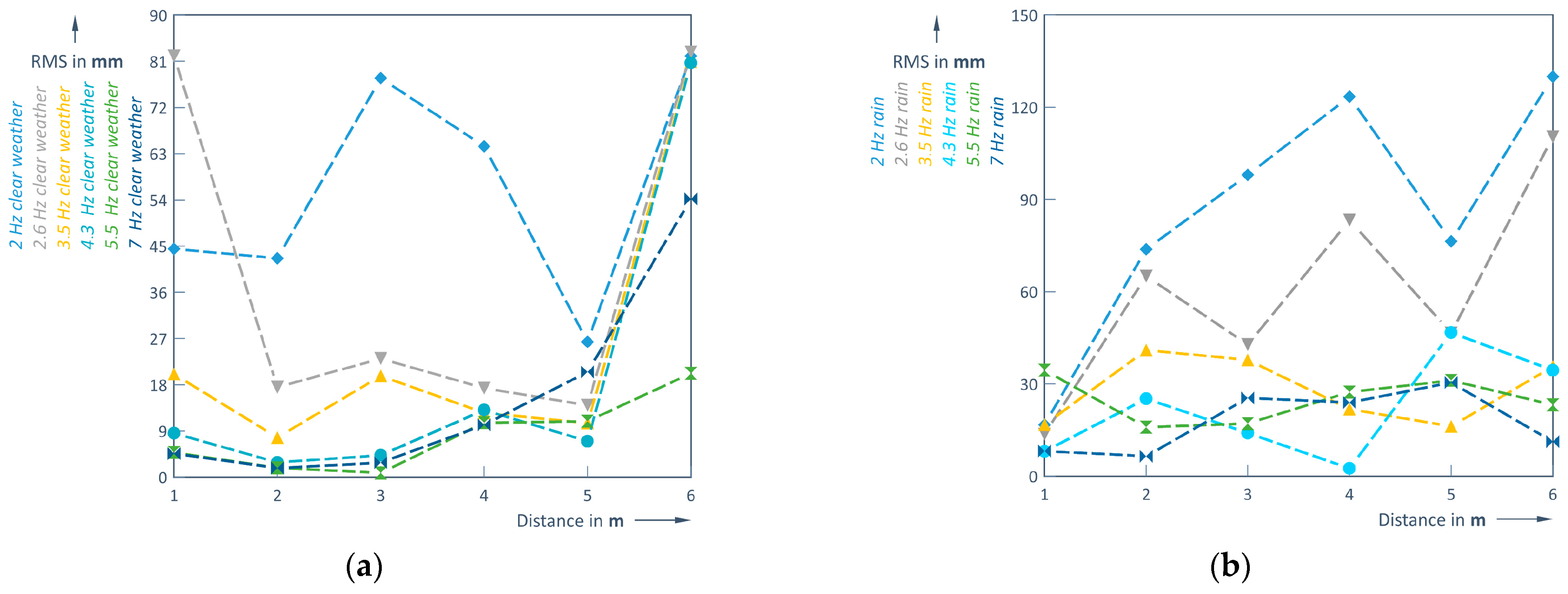

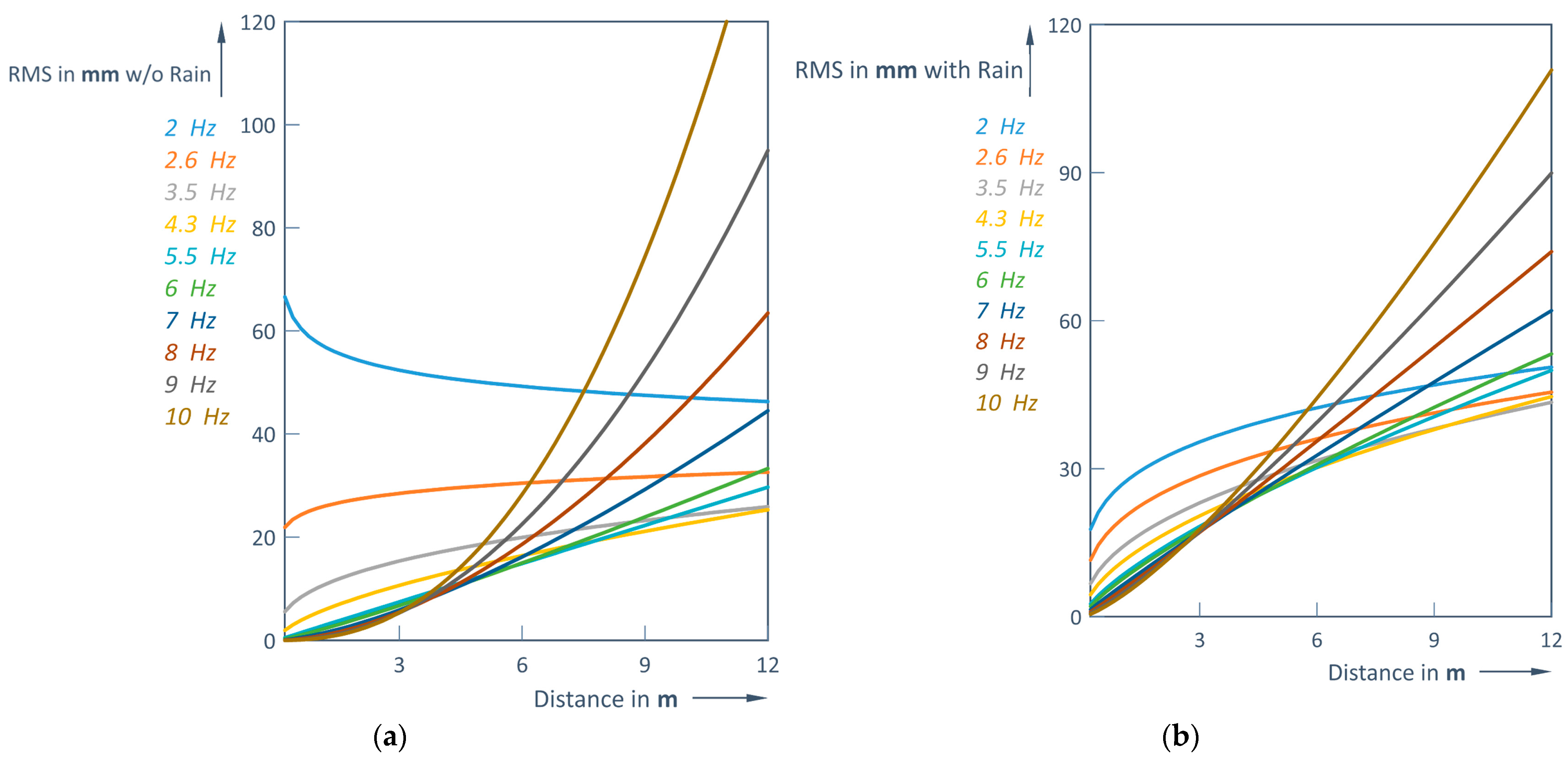

It is worthwhile depicting the dependence of the RMS variation on distance d [m] at different LiDAR operating frequencies f [Hz]. A quite large dispersion of the empirical results is observed in Figure 16a,b:

Calculation of the RMS shows that the largest error occurs at a scanning frequency of 2 Hz (the RMS is 16.74 mm at a measuring distance of 1 m and 123.43 mm at 4 m). In this case, the correlation coefficients are 0.61 in clear weather and 0.73 in rain. In the latter case (Formula (12)) the correlation is strong, whereas in the case without rain (Formula (11)) it is only moderate. Furthermore, when the correlation is assessed at individual frequencies, a strong correlation occurs only at higher LiDAR operating frequencies (>5 Hz) (Figure 16).

Approximate Formulas (11) and (12) are used to depict the RMS forecast under clear and rainy weather conditions (Figure 17a,b):

An interesting trend in how the RMS behaves in different frequency ranges can be seen in Figure 17. At frequencies below 6 Hz, the RMS changes significantly with increasing distance d up to about 3 m, but grows more slowly as the distance increases further (stabilization behavior). Meanwhile, at higher frequencies (f > 6 Hz), the RMS increases almost linearly with distance, and the higher the frequency, the steeper the gradient. As a result, higher frequencies (6 Hz and above) give a lower RMS for distances up to about 6 m, while at longer distances lower scanning frequencies (below 6 Hz) give a lower RMS. This should be considered when taking practical measurements.