A Fusion Visualization Method for Disaster Information Based on Self-Explanatory Symbols and Photorealistic Scene Cooperation

Abstract

1. Introduction

2. Methodology

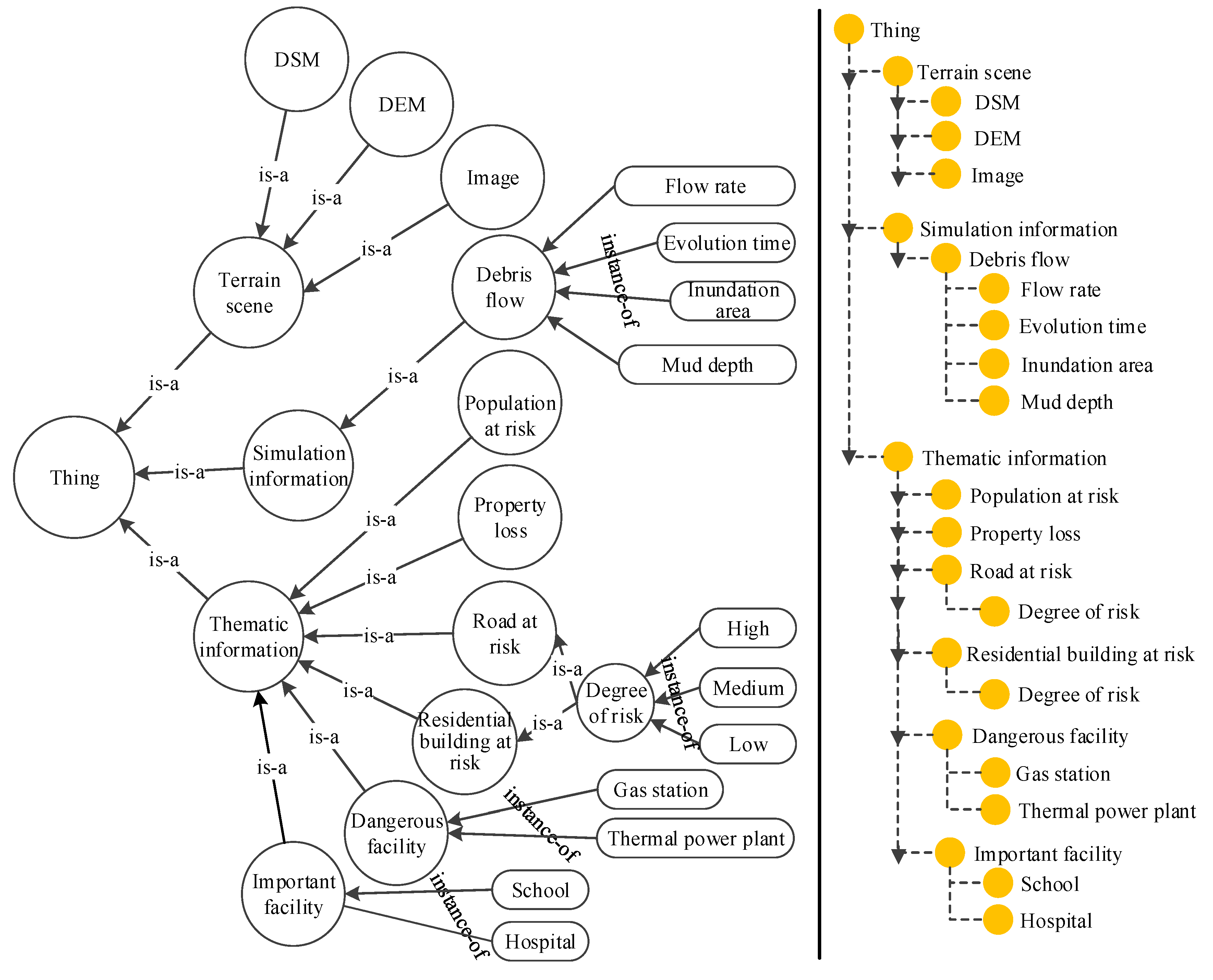

2.1. Disaster Scene Division and Semantic Description

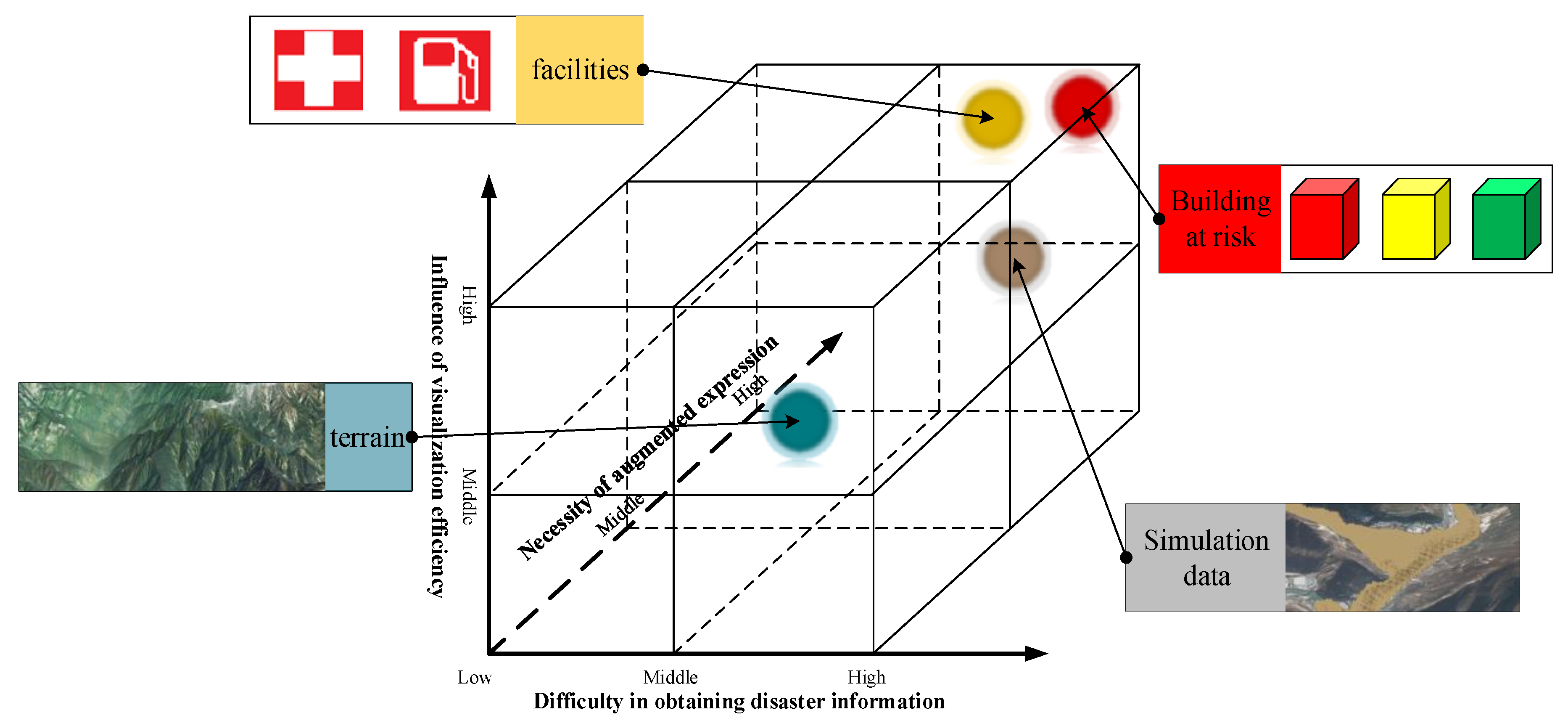

2.2. Fusion Visualization of Self-Explanatory Symbols and Photorealistic Scene Cooperation

2.2.1. Continuous Hierarchy of Non-Photorealistic and Photorealistic Expression

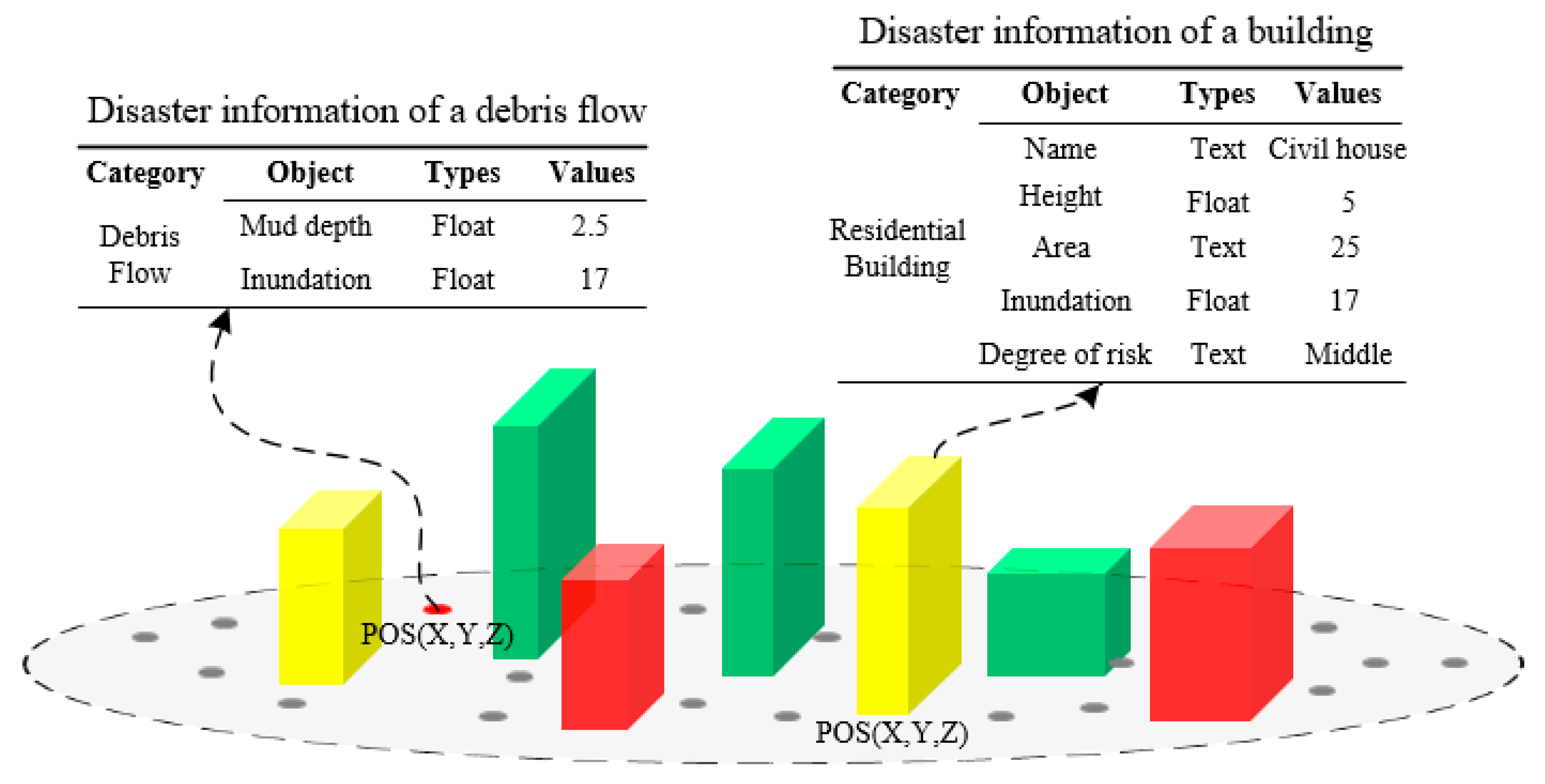

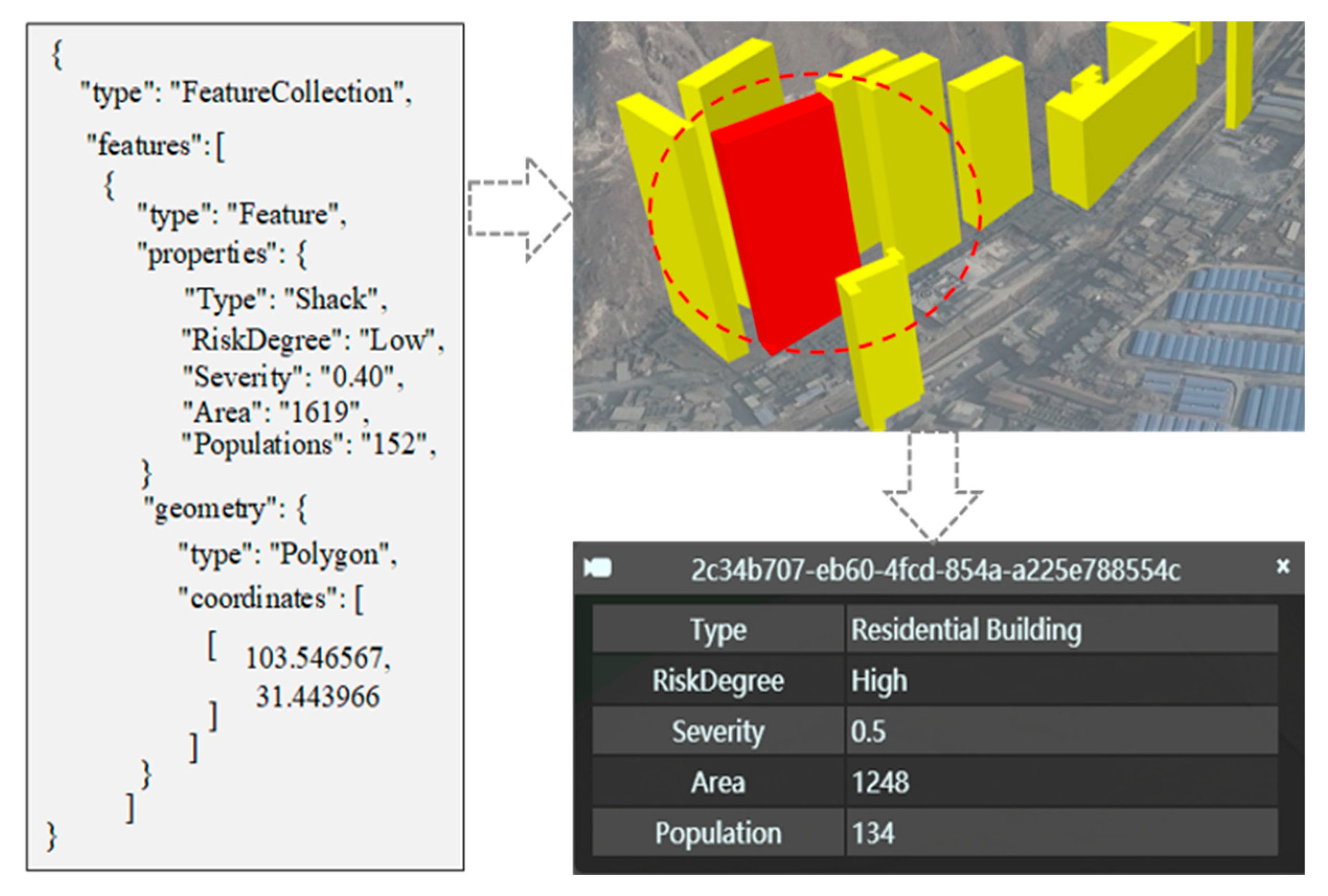

2.2.2. Self-Explanatory Symbols and Photorealistic Scene Cooperation

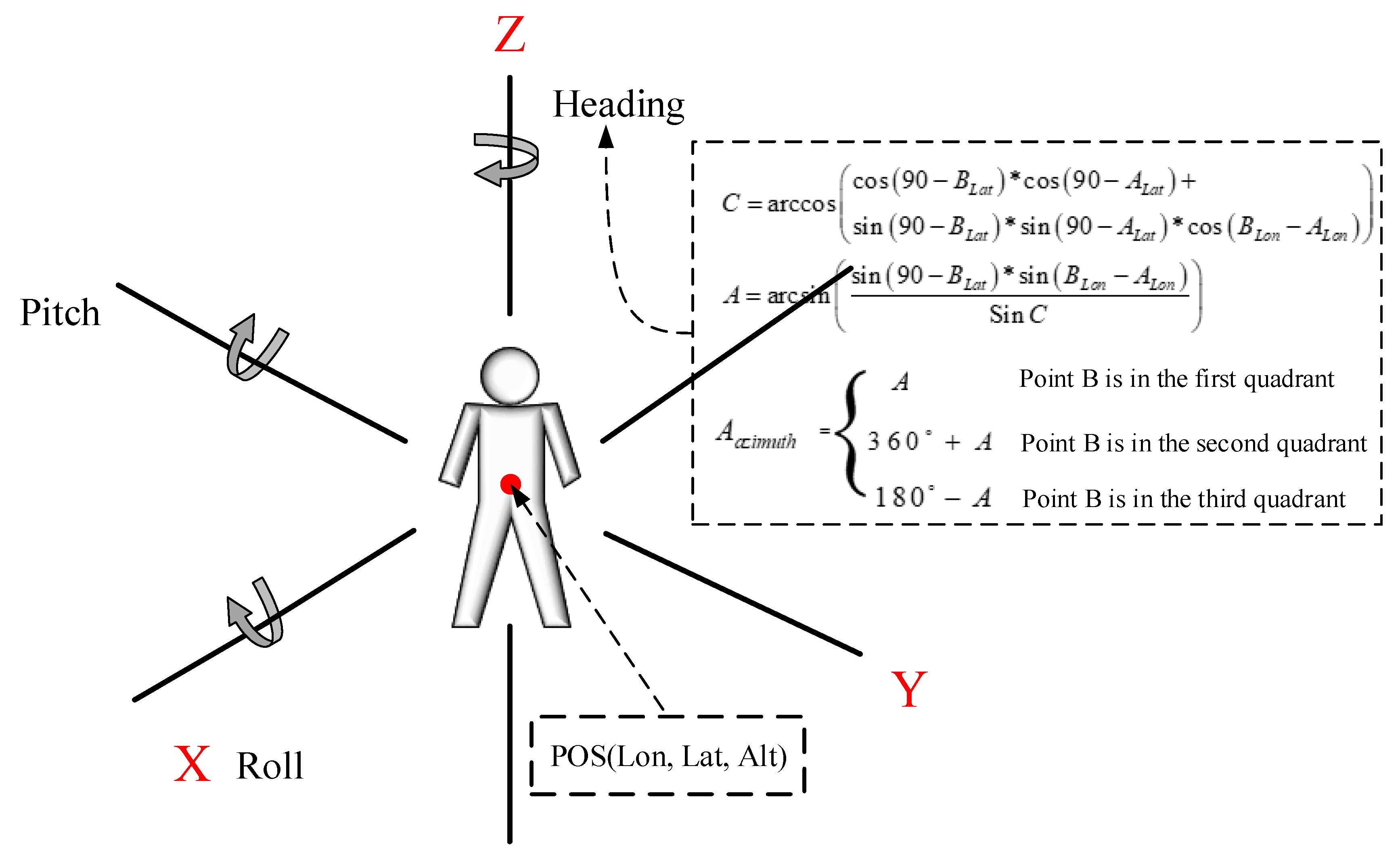

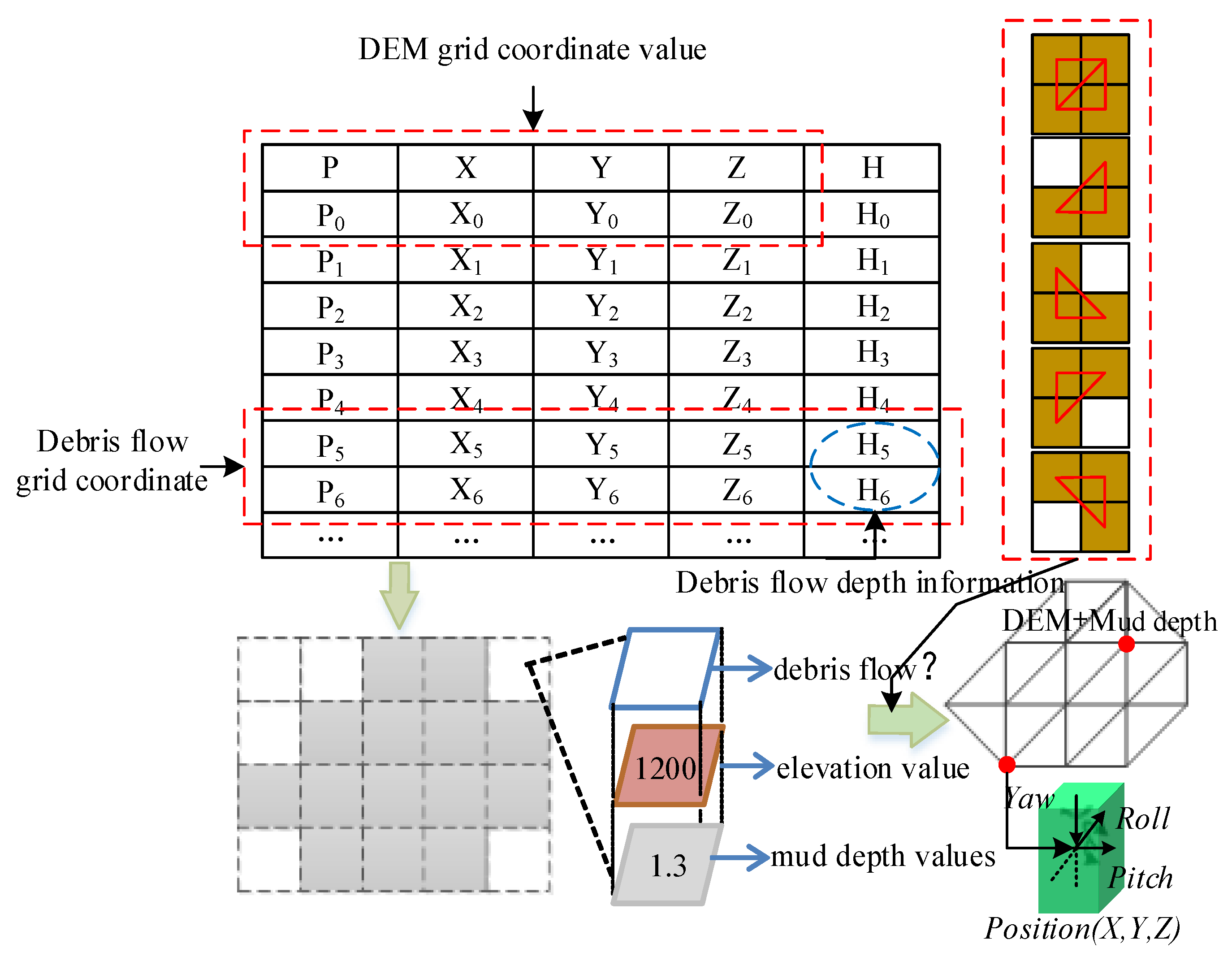

2.2.3. Fusion of Scene Objects with Spatial Semantic Constraints

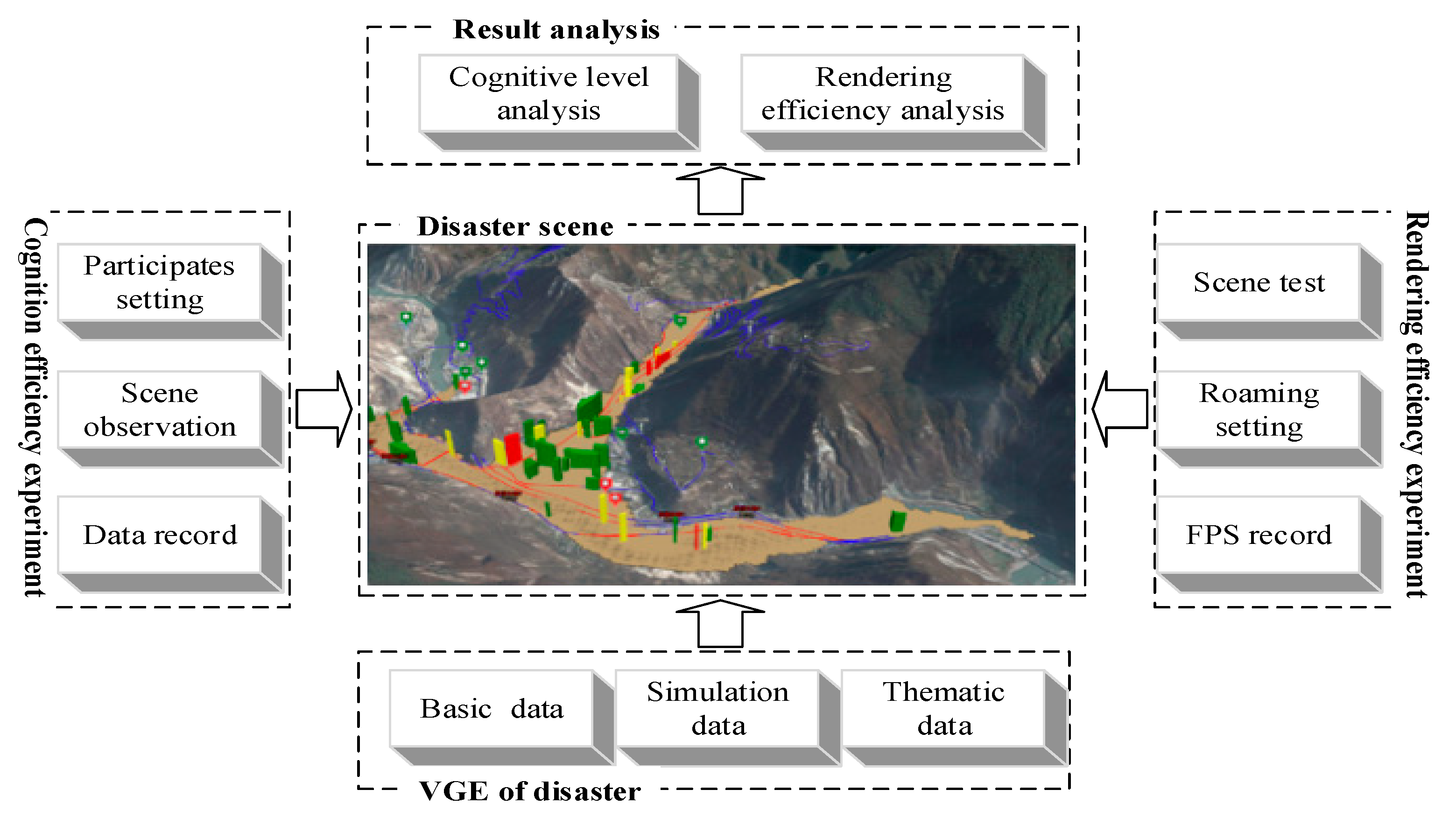

2.2.4. Disaster Scene Cognition and Visualization Efficiency Evaluation

3. System Implementation and Experimental Analysis

3.1. Study Area and Data Processing

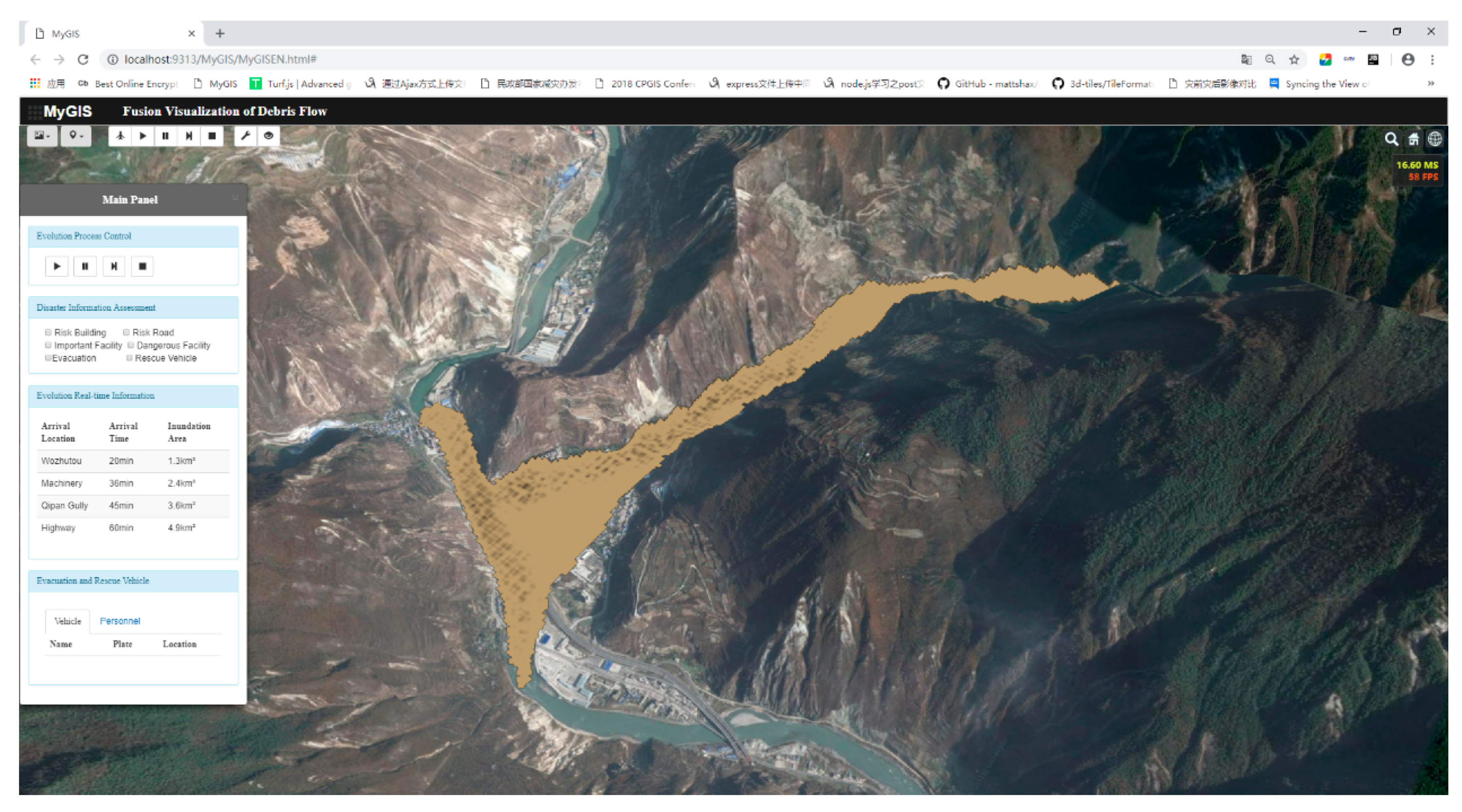

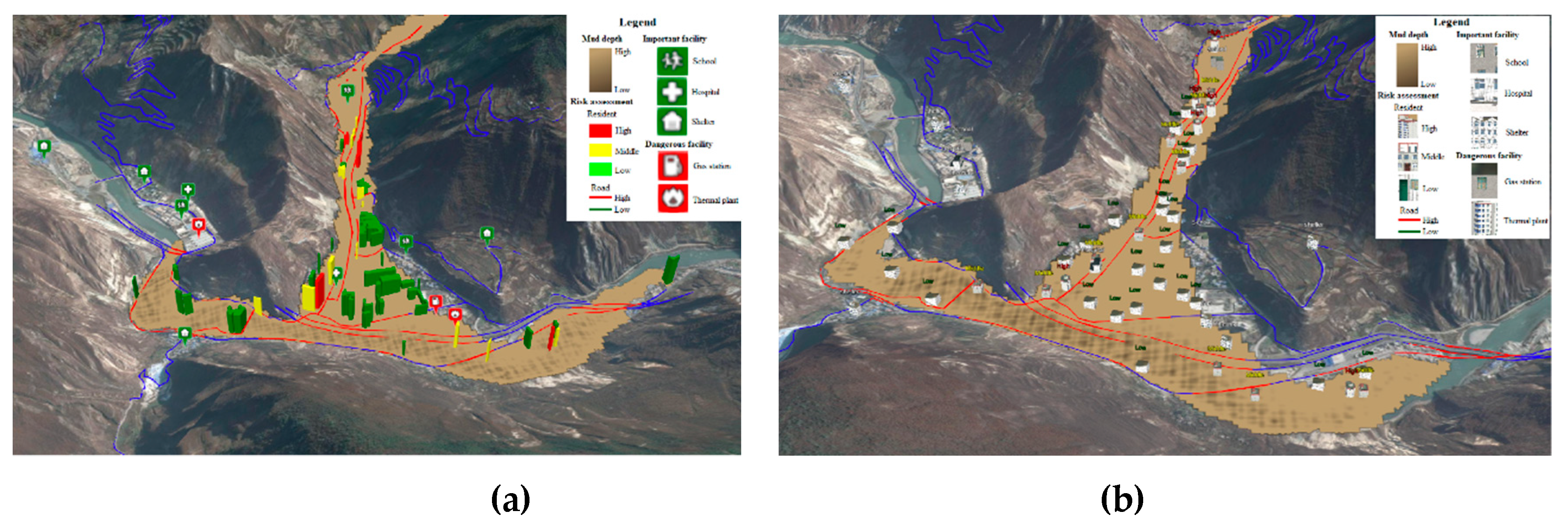

3.2. Prototype System Implementation and Fusion Visualization of the Disaster Information

3.3. Cognition and Visualization Efficiency of the Disaster Scene Experiments

3.3.1. Cognition Efficiency of the Disaster Scene Experiments

3.3.2. Rendering Efficiency of the Disaster Scene Experiment

4. Conclusions and Future Work

Author Contributions

Acknowledgments

Conflicts of Interest


| Category | Content | Data format (before) | Data format (after) |
|---|---|---|---|
| Basic geographic data | Terrain data/image data | .tif | .terrain/.png |
| Debris flow simulation data | Location/range/depth/velocity | .txt | .json |
| Thematic analysis data | Residential buildings/roads/important facilities/dangerous facilities/population | .shp/.txt/.3ds | .json/.glTF/.png |
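The format conversion listed in the table above, for example debris flow simulation data going from .txt to .json, could be sketched roughly as follows. This is only an illustration under stated assumptions: the source does not specify the layout of the .txt files, so the whitespace-separated `x y depth velocity` columns, the function name `simulation_txt_to_json`, the JSON keys, and the sample values are all hypothetical.

```python
import json


def simulation_txt_to_json(text):
    """Convert rows of 'x y depth velocity' (assumed layout) to a JSON string."""
    cells = []
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and comment lines in the assumed text format.
        if not line or line.startswith("#"):
            continue
        x, y, depth, velocity = (float(value) for value in line.split())
        cells.append({"location": [x, y], "depth": depth, "velocity": velocity})
    return json.dumps({"cells": cells})


# Hypothetical sample rows, not data from the study.
sample = """# x y depth velocity (assumed column order)
103.50 31.00 0.8 2.5
103.51 31.00 1.2 3.1
"""

converted = json.loads(simulation_txt_to_json(sample))
print(len(converted["cells"]), "cells converted")
```

A JSON structure of this kind is convenient for web-based rendering because it can be parsed directly in the browser, which is presumably why the target formats in the table are .json, .glTF, and .png.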

| Test Groups | Visualization Types | Important Facilities | Dangerous Facilities | Degree of Risk of Residential Buildings |
|---|---|---|---|---|
| A | Self-explanatory symbols | Schools, hospitals, shelters | Gas station, thermal power plant | High, medium, low |
| B | Detailed texture, annotations | | | |

| Evaluation criterion | Index | Description |
|---|---|---|
| Accuracy | Scene object identification and question-and-answer accuracy | |
| Time | The average time taken to finish the cognitive experiment | |

| Evaluation Index | Group A | Group B |
|---|---|---|
| Average accuracy (%)/variance | 91.93/94.49 | 80.20/159.03 |
| Average finish time (s)/variance | 62.20/332.45 | 168.93/3361.78 |
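To make the comparison in the table above easier to read, the short sketch below derives a few summary quantities from the reported means and variances: the accuracy difference in percentage points, the relative reduction in average finish time, and the standard deviations. The numbers are copied from the table; the variable names and the choice of derived quantities are illustrative assumptions, not part of the original analysis.

```python
import math

# (mean, variance) pairs copied from the results table.
results = {
    "A": {"accuracy": (91.93, 94.49), "time": (62.20, 332.45)},
    "B": {"accuracy": (80.20, 159.03), "time": (168.93, 3361.78)},
}

# Difference in average accuracy, in percentage points (Group A minus Group B).
accuracy_gain = results["A"]["accuracy"][0] - results["B"]["accuracy"][0]

# Relative reduction in average finish time of Group A compared with Group B.
time_reduction = 1 - results["A"]["time"][0] / results["B"]["time"][0]

# Standard deviations are the square roots of the reported variances.
std = {
    group: {name: math.sqrt(variance) for name, (_, variance) in metrics.items()}
    for group, metrics in results.items()
}

print(f"Accuracy gain: {accuracy_gain:.2f} percentage points")
print(f"Finish time reduction: {time_reduction:.1%}")
for group in ("A", "B"):
    print(
        f"Group {group}: accuracy std {std[group]['accuracy']:.2f} %, "
        f"time std {std[group]['time']:.2f} s"
    )
```

Under these figures, Group A is about 11.7 percentage points more accurate and finishes in roughly 63% less time, with a noticeably smaller spread in finish time than Group B.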

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, W.; Zhu, J.; Zhang, Y.; Cao, Y.; Hu, Y.; Fu, L.; Huang, P.; Xie, Y.; Yin, L.; Xu, B. A Fusion Visualization Method for Disaster Information Based on Self-Explanatory Symbols and Photorealistic Scene Cooperation. ISPRS Int. J. Geo-Inf. 2019, 8, 104. https://doi.org/10.3390/ijgi8030104
