The main goal of the study was to examine the possibility of detecting shore structures with the use of an automotive radar on a floating ASV. The idea was to compare a radar traffic image with an orthophotomap to identify detectable structures.

First, the general concept is presented, followed by a detailed description of the research equipment and configuration and of the methodology of the photogrammetric and radar measurements. Finally, the evaluation methodology for the comparative study is described.

2.1. Research Concept

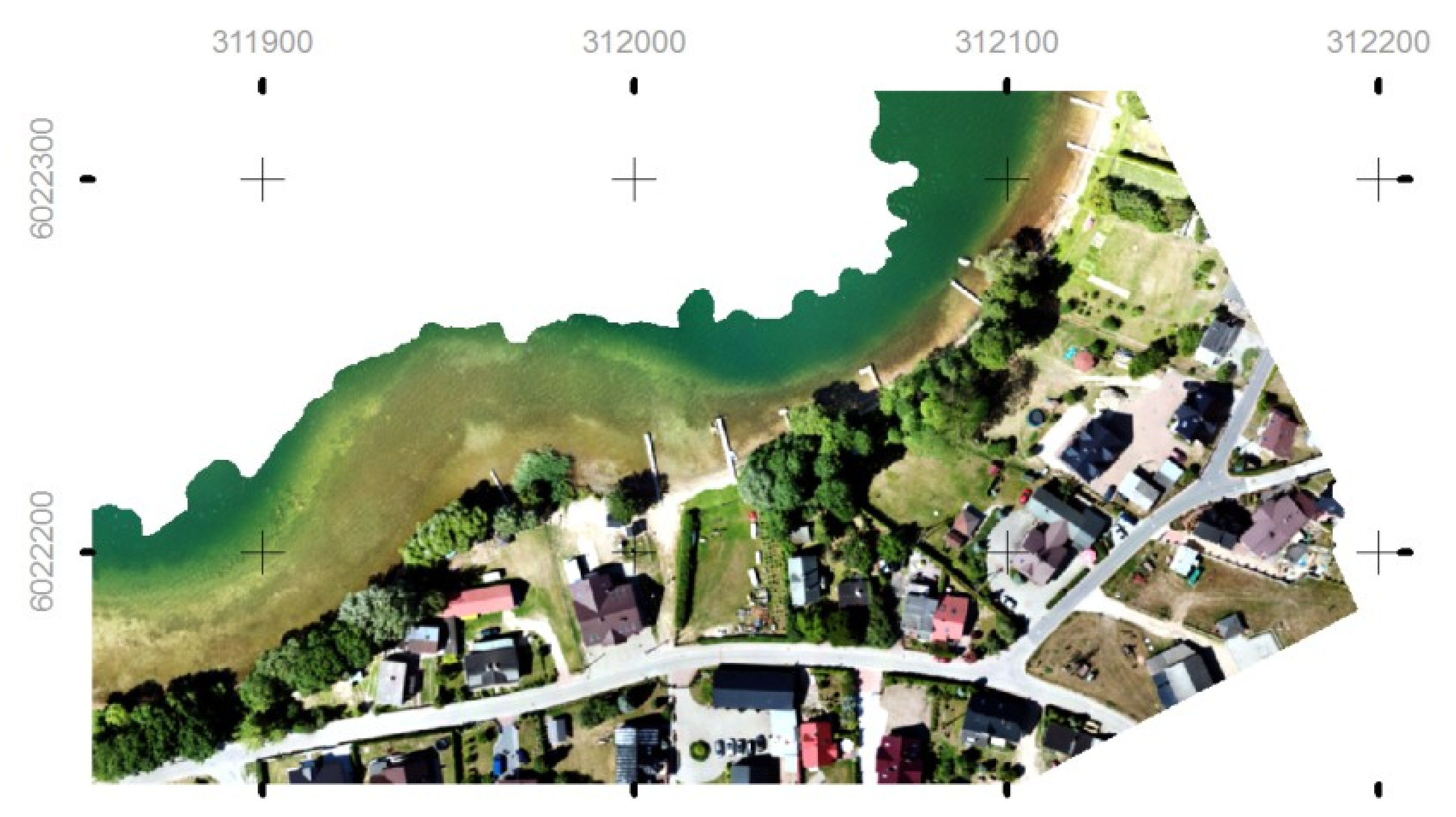

A comparative analysis of photogrammetric and radar images of the same area was performed, with the aim of finding and selecting objects in the orthophotomap and analyzing their echoes in the radar image.

Photogrammetric measurements were made from an autonomous flying platform, and radar measurements were recorded with an automotive radar mounted on a floating autonomous vessel. Thus, for the same area, we obtained images using different techniques and from different perspectives: a bird’s-eye view and a surface view.

The subsequent steps were as follows:

1. georeferencing of the radar traffic image;
2. overlaying of the radar traffic image on the orthophotomap;
3. selection of radar targets for analysis;
4. identification of the selected objects on the orthophotomap;
5. analysis of the possibility of detecting shore structures;
6. identification of important objects that were not detected by radar.

In addition, a statistical analysis of possible detections by radar was performed, based on a number of runs of the ASV over different courses.

In the approach presented here, a photogrammetric picture serves as the reference for identifying radar targets and analyzing their accuracy. The novelty of this approach lies mainly in the specific character of the automotive radar. This radar does not provide an image itself, but rather a set of target measurements. Spatially extended objects therefore become sets of point targets, which makes their identification non-trivial. A further challenge is the use of automotive radar in a water environment, which can be regarded as a novel technological concept that itself requires further investigation.

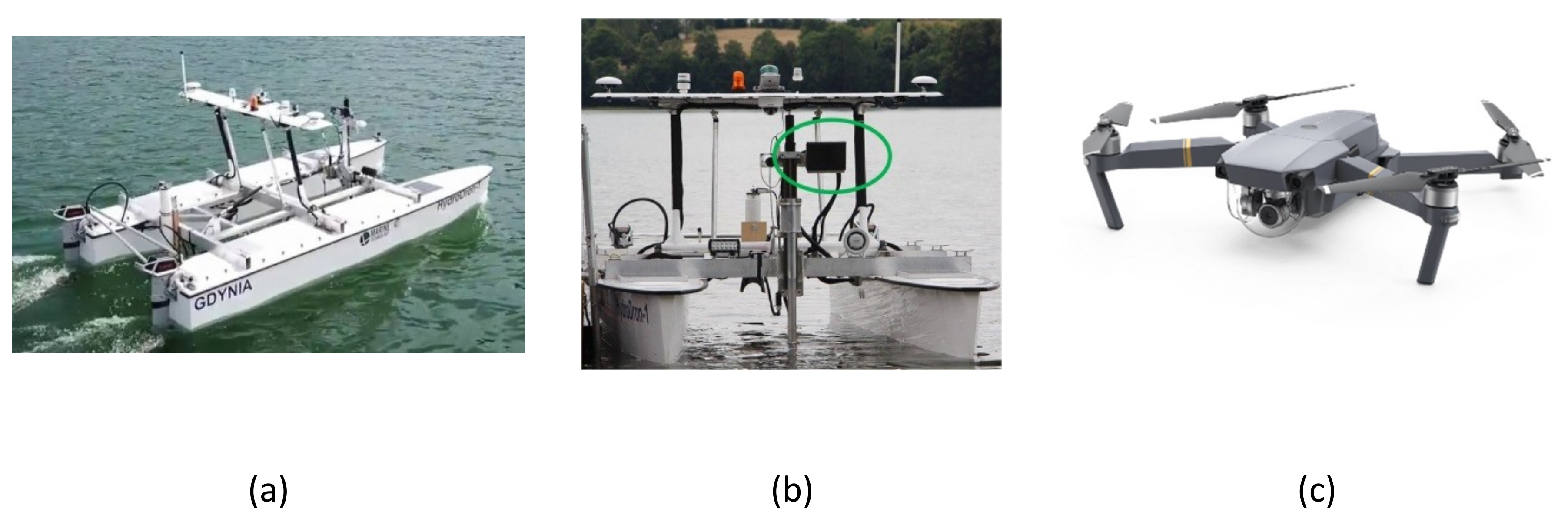

The research equipment deployed is presented in Figure 1 and includes the HydroDron ASV, a 3D automotive radar, and a UAV.

The HydroDron is a double-hulled catamaran made of lightweight but durable material, 4 m long and 2 m wide. It also has a shallow draught, ranging from 20 cm at the bow to 50 cm at the aft motor section. The aft motor section is equipped with two Torqueedo Cruise 4.0 electric motors, which work independently of each other. The main specifications of the HydroDron are given in Table 1.

The HydroDron is mobile in the sense that it can be transported to the measurement area by road on a trailer, or by water on another, larger surface vessel. It is possible to launch the catamaran from a trailer at the shoreline, from a beach, jetty, or quay, or from a ship.

The system is powered by rechargeable batteries located in two independent bays, while two photovoltaic panels located in the bow section of the vessel allow for complementary recharging. The catamaran system is capable of operating for 12 h.

The HydroDron is designed as a multipurpose ASV, but its main use is for various hydrographic measurements. Therefore, it is equipped with a wide range of measuring equipment.

Table 2 presents the major navigational and hydrographic sensors mounted onboard, all of which can be easily dismantled. This configuration allows most hydrographic measurements in shallow waters to be performed. The hydrographic head is mounted on a movable cylinder, and the navigation sensors are installed on a mast that folds down automatically. The sound speed meter can be lowered into or raised out of the water automatically by means of an anchor lift. The catamaran transmits the recorded data to a shore station consisting of two consoles and a Getac S410 for data acquisition. More information about the HydroDron’s equipment can be found in Reference [41].

The crucial sensors from the point of view of the present study are the navigational system and the radar.

The high-quality inertial navigation system provides information about position, speed, and heading. Its specifications are presented in Table 3.

The specifications of the radar used in this study are presented in Table 4. It is a 3D UMRR 42HD automotive radar with a 24 GHz microwave sensor and a type 42 antenna with a wide field of view. The sensor is a 3D/UHD radar for motion management that can operate under adverse conditions, measuring angle, radial speed, range, and reflectivity in parallel. It is usually used as a standalone radar and for detecting approaching and receding motion. The sensor can detect up to 256 target objects (reflectors) simultaneously. The target list can also be sorted so that short-range targets are reported first when more than 128 targets are detected. Each sensor provides the following data: volume, occupancy, average speed, vehicle presence, 85th percentile speed, headway, gap, and a wrong-way detection trigger. The recorded data can be saved in the internal flash memory and downloaded later [44].
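To make the notion of a target list concrete, the sketch below models one radar cycle as a list of point targets carrying the quantities named above (range, angle, radial speed, reflectivity) and applies the short-range-first ordering when more than 128 targets are present. The `Target` type, its field names and units, and the `order_targets` helper are hypothetical illustrations, not the UMRR data interface; whether targets beyond some count are dropped is not stated in the text, so none are dropped here.

```python
from dataclasses import dataclass
from typing import List

MAX_TARGETS = 256     # stated capacity: up to 256 simultaneous targets
SORT_THRESHOLD = 128  # stated: short-range targets first when more than 128 are detected


@dataclass
class Target:
    """One detection in the sensor's local polar frame (hypothetical field names)."""
    range_m: float           # radial distance from the sensor [m]
    azimuth_deg: float       # angle from the sensor boresight [deg]
    radial_speed_mps: float  # radial (Doppler) speed [m/s]
    reflectivity_db: float   # reflectivity; unit assumed here [dB]


def order_targets(targets: List[Target]) -> List[Target]:
    """Return one cycle's targets, sorted short-range first if more than 128 were detected."""
    if len(targets) > MAX_TARGETS:
        raise ValueError("at most 256 targets per cycle are expected")
    if len(targets) > SORT_THRESHOLD:
        return sorted(targets, key=lambda t: t.range_m)
    return list(targets)


# Usage: 130 targets arriving in descending range come back in ascending range.
cycle = [Target(200.0 - i, 0.0, 0.0, 10.0) for i in range(130)]
ordered = order_targets(cycle)
print(ordered[0].range_m, ordered[-1].range_m)  # 71.0 200.0
```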

The reference frame for analyzing the possibility of detection by radar was an orthophotomap obtained from low-altitude photogrammetric measurements made with a DJI UAV. UAV photogrammetry, as a low-cost alternative to conventional aerial photogrammetry, can deliver accurate spatial data for a given mapping area in a short time. Each case requires an appropriate UAV, chosen on the following bases: during each flight, the platform must cover the maximum area; the system must execute an autonomous flight along a programmed track at a specified height; the flight must be possible under the prevailing weather conditions (wind resistance); and the take-off and landing areas must not be restricted. To select the most suitable platform for the present study, the methodology described in Reference [42] was used, which indicated that a multirotor UAV was suitable. A DJI Mavic Pro MAV (micro air vehicle) (Figure 1c) was used, and the data were processed with Bentley ContextCapture software (georeferenced UAV mapping). For navigation, the MAV employed a GPS/GLONASS module, two inertial measurement unit (IMU) modules, and forward- and downward-facing vision systems for automatic self-stabilization, navigation between obstacles, and tracking of moving objects. The MAV was equipped with a DJI FC220 non-metric camera with a 1/2.3″ sensor (6.16 mm × 4.55 mm) and a pixel size of 1.55 µm (Table 5). The camera tagged each image with geolocation data from the MAV’s GPS, written into the EXIF metadata (direct image georeferencing).
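The sensor dimensions and pixel size given above determine the ground resolution of the orthophotomap through the standard pinhole relations GSD = p·H/f and footprint = s·H/f. The sketch below evaluates them; the focal length of 4.73 mm and the 50 m flight height are assumptions for illustration (neither is stated in this section), so the numbers are indicative only and should be checked against Table 5 and the actual flight plan.

```python
SENSOR_W_MM, SENSOR_H_MM = 6.16, 4.55  # FC220 sensor size (from the text)
PIXEL_UM = 1.55                        # FC220 pixel size (from the text)
FOCAL_MM = 4.73                        # ASSUMED focal length; check against Table 5


def pixels_across(sensor_mm: float, pixel_um: float) -> int:
    """Approximate pixel count along one sensor side."""
    return round(sensor_mm * 1000.0 / pixel_um)


def gsd_m(height_m: float, pixel_um: float = PIXEL_UM, focal_mm: float = FOCAL_MM) -> float:
    """Ground sampling distance of a nadir image over flat ground: GSD = p * H / f."""
    return (pixel_um * 1e-6) * height_m / (focal_mm * 1e-3)


def footprint_m(height_m: float, sensor_mm: float, focal_mm: float = FOCAL_MM) -> float:
    """Ground length imaged by one sensor side: L = s * H / f."""
    return sensor_mm * height_m / focal_mm


# Usage at a hypothetical flight height of 50 m.
H = 50.0
print(pixels_across(SENSOR_W_MM, PIXEL_UM), pixels_across(SENSOR_H_MM, PIXEL_UM))  # 3974 2935
print(round(gsd_m(H) * 100, 2), "cm/px")                                          # 1.64 cm/px
print(round(footprint_m(H, SENSOR_W_MM), 1), "x", round(footprint_m(H, SENSOR_H_MM), 1), "m")  # 65.1 x 48.1 m
```

The pixel counts derived from the stated sensor and pixel sizes (about 3974 × 2935) are consistent with a roughly 4000 × 3000 image, which is a quick sanity check on the Table 5 values.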

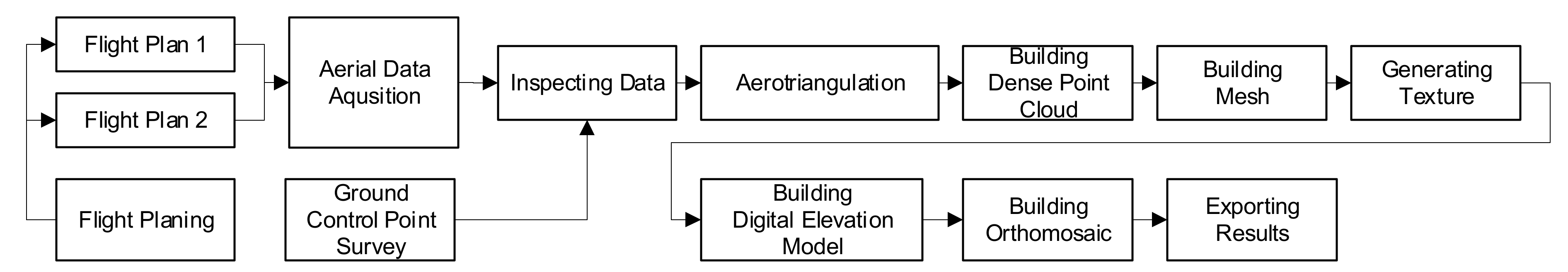

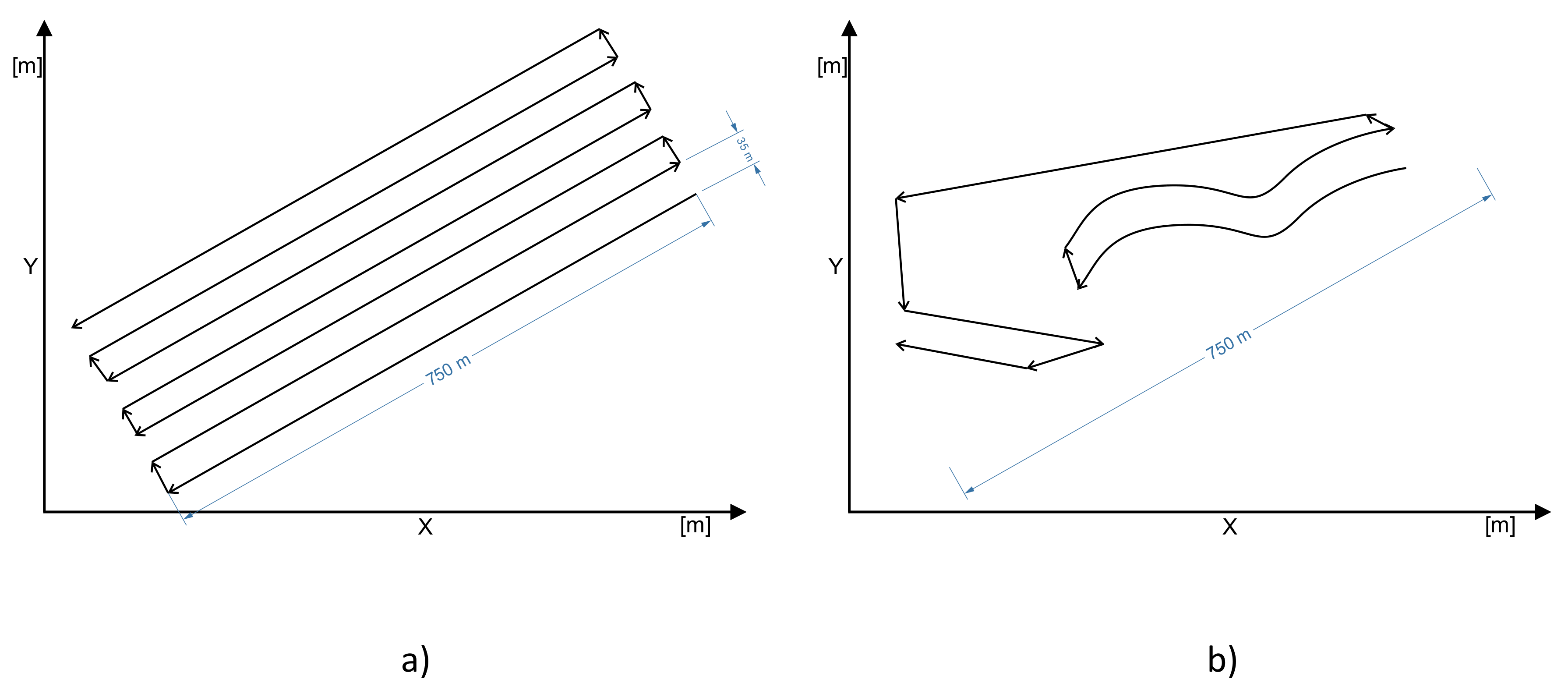

2.2. Methodology for Photogrammetric Measurements

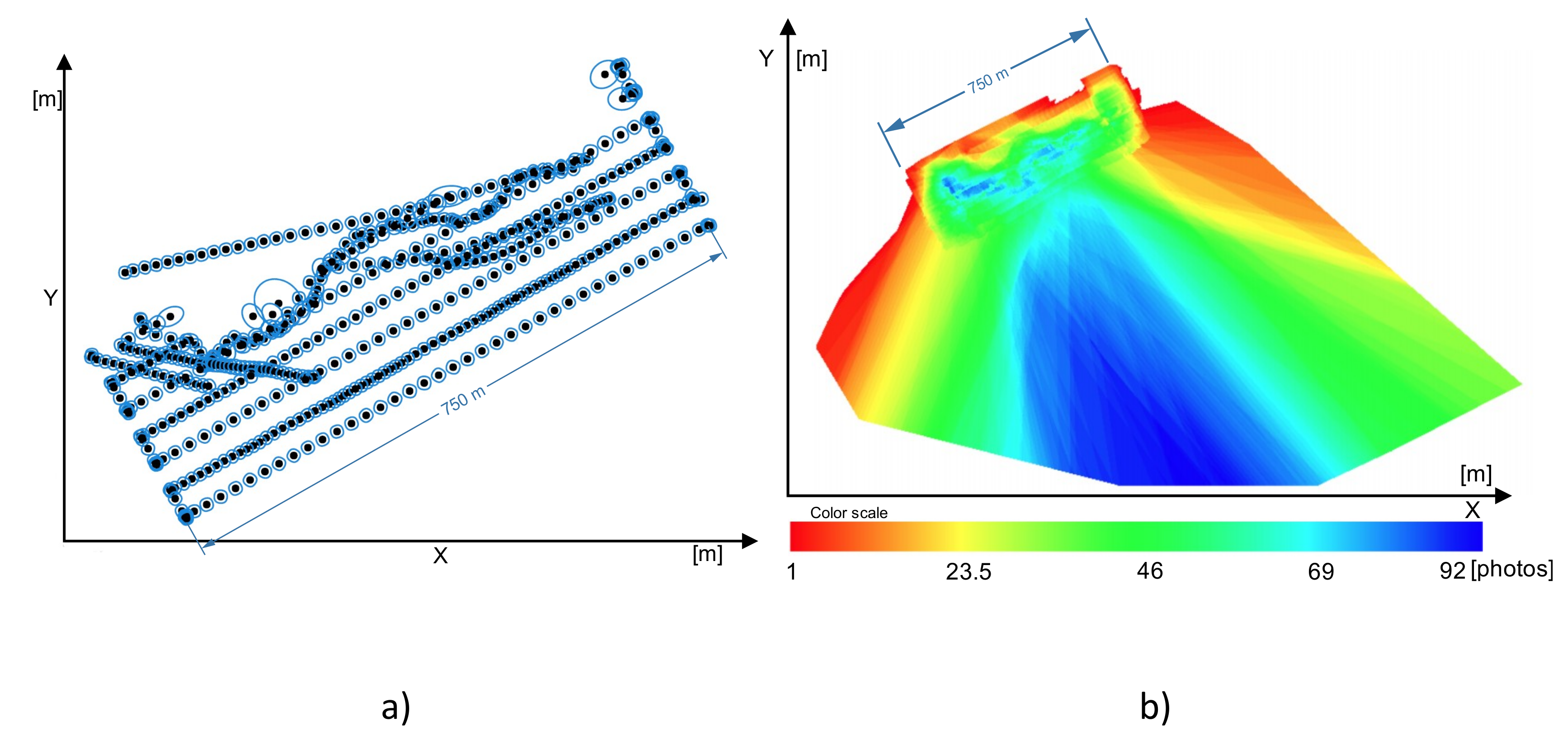

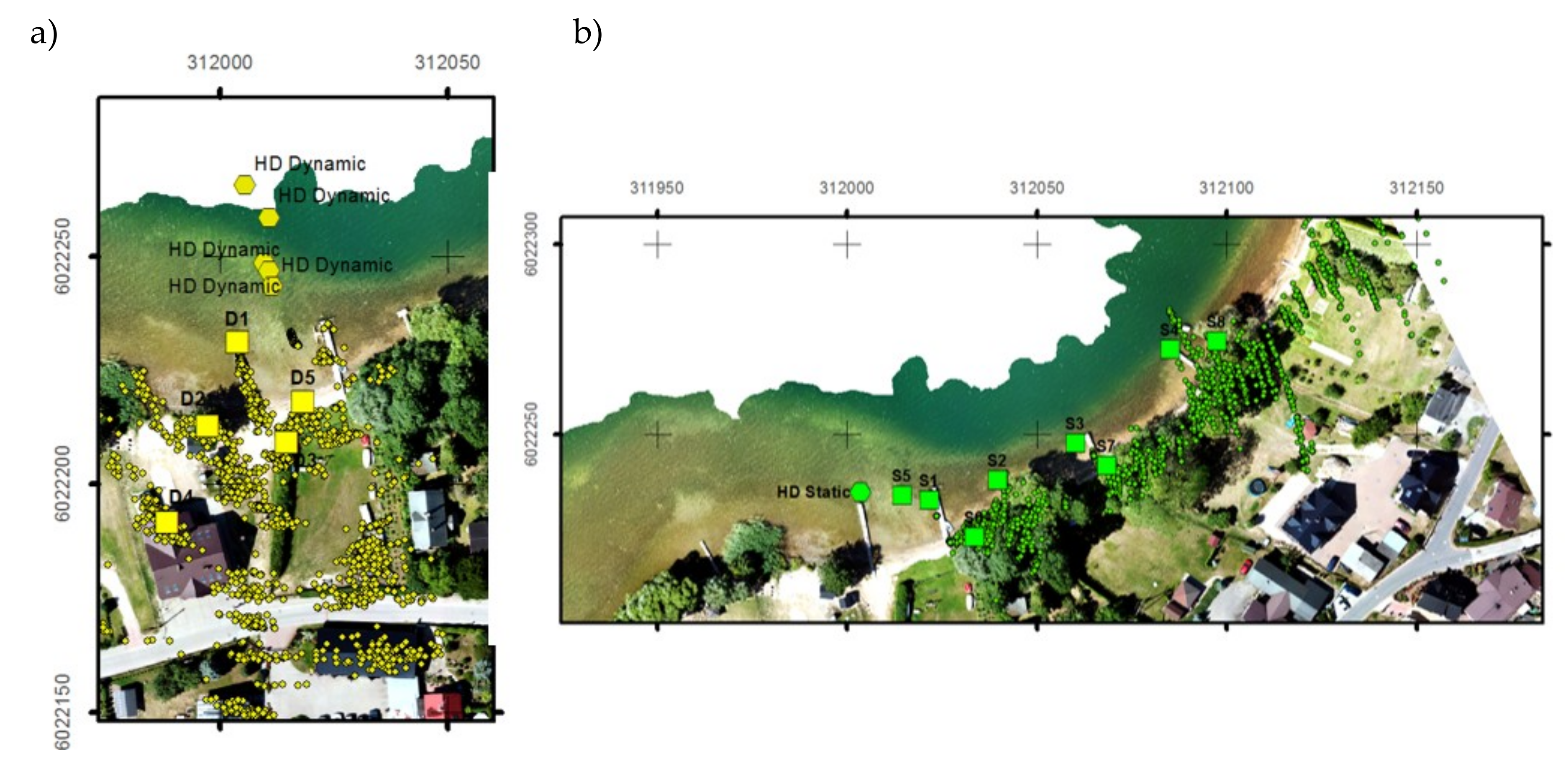

The photogrammetric workflow comprised image acquisition along two different flight paths, post-flight geodetic-grade ground control point (GCP) measurements, and data processing (Figure 2). The area to be mapped and the specific task required non-standard flight paths. The first flight path followed a single-grid mission plan (Figure 3a) and was used for general measurements with a nadir camera orientation. This plan is suitable for most environments and is recommended when the main interest is in 2D map outputs, relatively flat surfaces, and large areas. However, this type of flight does not deliver enough data to model objects on the shore or situated under trees; where trees also cover the shoreline, the shore structures beneath them cannot be modeled from nadir images.
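A single-grid plan of this kind is typically a set of parallel flight lines flown in alternating directions, with the line spacing set by the across-track image footprint and the desired side overlap. The sketch below generates such a pattern in a local metric frame; the 60 m footprint, 70% side overlap, and 100 m × 200 m block are hypothetical values, and the helpers are illustrative rather than the planning software actually used.

```python
import math
from typing import List, Tuple


def line_spacing_m(footprint_across_m: float, side_overlap: float) -> float:
    """Distance between adjacent flight lines for a given side overlap in [0, 1)."""
    if not 0.0 <= side_overlap < 1.0:
        raise ValueError("side_overlap must be in [0, 1)")
    return footprint_across_m * (1.0 - side_overlap)


def single_grid_waypoints(width_m: float, length_m: float, spacing_m: float) -> List[Tuple[float, float]]:
    """Serpentine waypoints (x, y) over a width x length rectangle in local metres.

    Flight lines run along y, are spaced along x, and alternate direction; the last
    line is clamped to the far edge so that the whole width is covered.
    """
    n_lines = math.ceil(width_m / spacing_m) + 1
    waypoints: List[Tuple[float, float]] = []
    for i in range(n_lines):
        x = min(i * spacing_m, width_m)
        y_start, y_end = (0.0, length_m) if i % 2 == 0 else (length_m, 0.0)
        waypoints += [(x, y_start), (x, y_end)]
    return waypoints


# Usage: hypothetical 60 m across-track footprint, 70 % side overlap, 100 m x 200 m block.
spacing = line_spacing_m(60.0, 0.7)  # about 18 m
for wp in single_grid_waypoints(100.0, 200.0, spacing):
    print(wp)
```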

The second flight path, following a free flight plan (Figure 3b), was used to measure objects on the shore. Such a plan is suitable for acquiring data on more difficult objects that require greater flexibility. The camera shutter is triggered automatically at set horizontal and vertical distance intervals. The plan requires manual operation of the MAV and is recommended when the main interest is in 3D model outputs, small areas, and complex or vertical structures (e.g., building facades, cliffs, or bridges). The free flight was used to model objects on the shore, including those hidden under trees or covered by other objects. The measurements from the two flight plans were fused for the final modeling. The modeling software was a reality modeling application, originally designed to process images of the physical environment into 3D representations that provide context within geospatial modeling environments.
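Distance-interval triggering can be illustrated as follows: a photo is taken at the start, and again whenever the horizontal or the vertical distance from the last photo position reaches its interval. The "either interval" rule, the local east/north/up track representation, and the `trigger_indices` helper are assumptions made for this sketch; the actual DJI triggering logic is not described in the text.

```python
import math
from typing import Iterable, List, Optional, Tuple

Position = Tuple[float, float, float]  # local east, north, up [m]


def trigger_indices(track: Iterable[Position], dh_m: float, dv_m: float) -> List[int]:
    """Indices of track samples at which the shutter would fire (hypothetical rule).

    Fires at the first sample, then whenever the horizontal distance OR the vertical
    distance from the last photo position reaches dh_m or dv_m respectively.
    """
    shots: List[int] = []
    last: Optional[Position] = None
    for i, (e, n, u) in enumerate(track):
        if last is None:
            shots.append(i)
            last = (e, n, u)
            continue
        horizontal = math.hypot(e - last[0], n - last[1])
        vertical = abs(u - last[2])
        if horizontal >= dh_m or vertical >= dv_m:
            shots.append(i)
            last = (e, n, u)
    return shots


# Usage: move 3 m east at constant height, then climb 2 m.
track = [(0, 0, 10), (1, 0, 10), (2, 0, 10), (3, 0, 10), (3, 0, 11), (3, 0, 12)]
print(trigger_indices(track, dh_m=2.0, dv_m=2.0))  # [0, 2, 5]
```

Triggering on vertical as well as horizontal displacement is what makes this mode useful for facades and other vertical structures, where the MAV may climb without moving much horizontally.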

Eight natural GCPs were established and surveyed with geodetic-grade real-time kinematic (RTK) GNSS measurements. In aerial surveys, GCPs are needed to improve the positioning and accuracy of the mapping outputs.
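A common way to quantify how well a photogrammetric model fits its control points is the root-mean-square error between the model coordinates and the RTK-surveyed coordinates, reported separately for the horizontal and vertical components. The section does not state that this metric was used, so the `gcp_rmse` helper and the sample coordinates below are illustrative assumptions only.

```python
import math
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]  # easting, northing, height [m]


def gcp_rmse(model: Sequence[Point3], surveyed: Sequence[Point3]) -> Tuple[float, float]:
    """Horizontal and vertical RMSE between model and RTK-surveyed GCP coordinates."""
    if len(model) != len(surveyed) or not model:
        raise ValueError("need matching, non-empty point lists")
    sq_h = sq_v = 0.0
    for (me, mn, mh), (se, sn, sh) in zip(model, surveyed):
        sq_h += (me - se) ** 2 + (mn - sn) ** 2  # planar squared residual
        sq_v += (mh - sh) ** 2                   # height squared residual
    n = len(model)
    return math.sqrt(sq_h / n), math.sqrt(sq_v / n)


# Usage with two hypothetical points (residuals in metres).
print(gcp_rmse([(3.0, 4.0, 1.0), (0.0, 0.0, 0.0)], [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]))
```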

2.3. Methodology for Radar Measurements

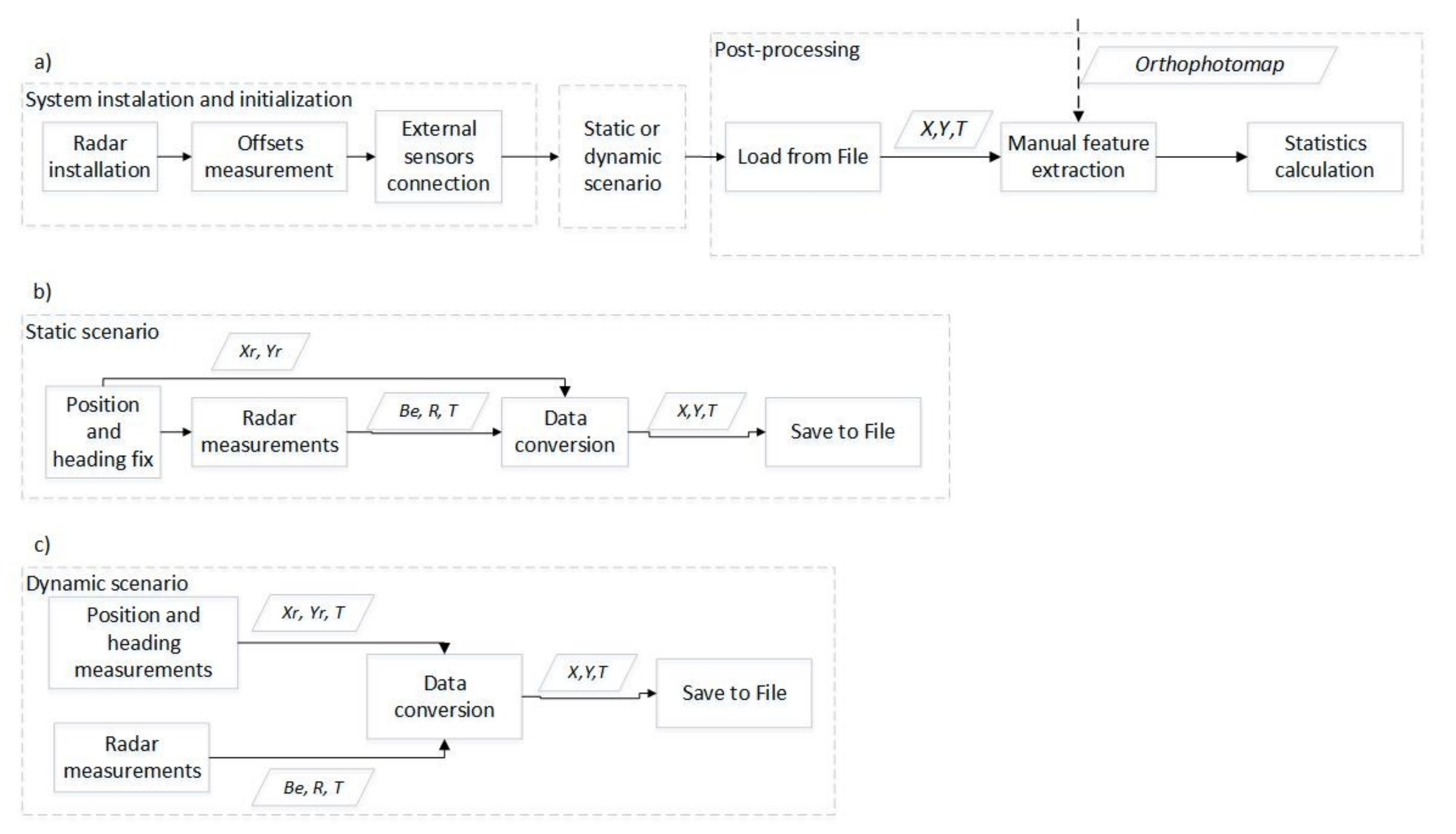

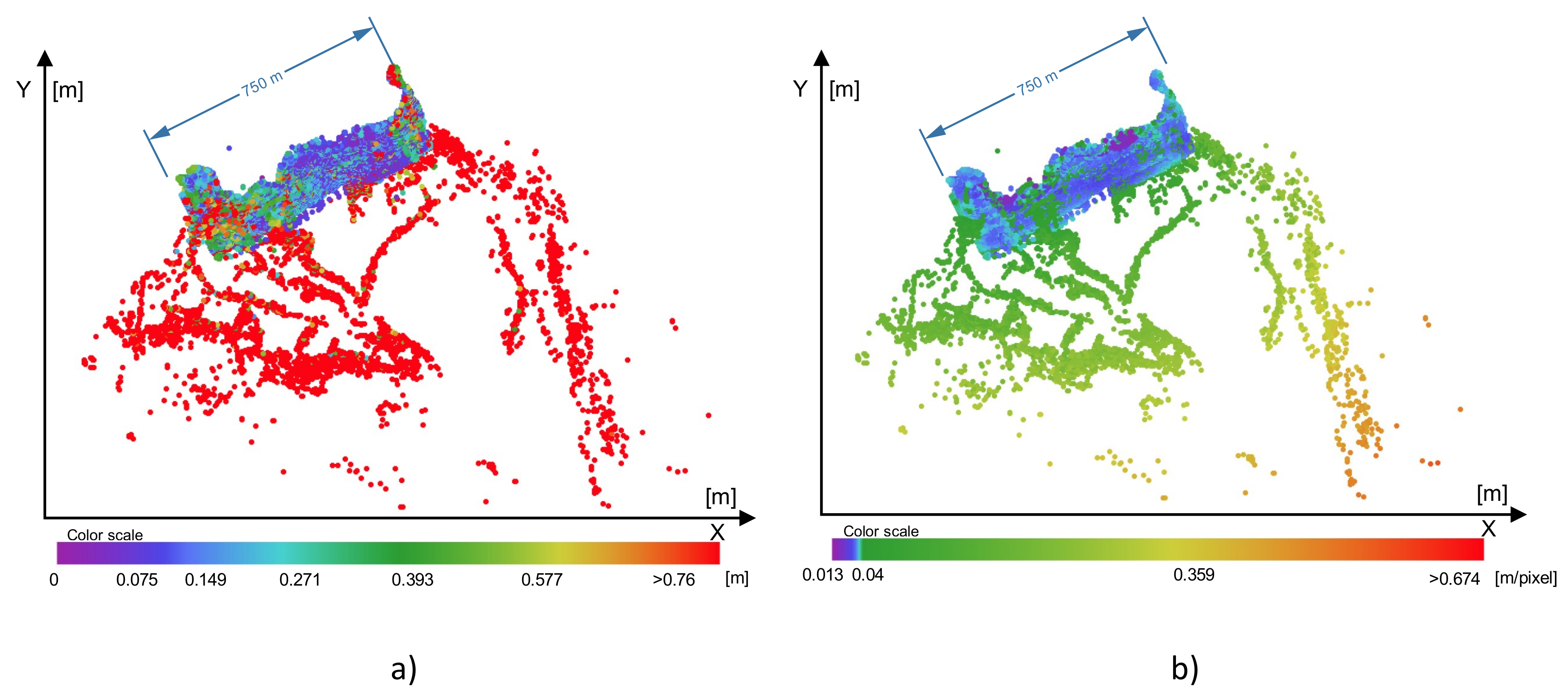

As already mentioned, the radar data obtained in this study could not be analyzed in the same way as data from classical marine or inland water radar. Above all, they did not form an image as a whole, but rather a set of point targets. Rather than a radar image, we therefore speak here of a radar traffic image. Object extraction takes on a new meaning here, being less a matter of image processing and more a signal processing task. Nevertheless, the high temporal (update) frequency allows usable images to be obtained for anti-collision and mooring purposes. The key problem in such identification tasks is the organization of the measurement strategy. The radar methodology used is presented schematically in Figure 4.
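One simple way to see how many sparse point-target frames add up to a usable picture is to accumulate the georeferenced plots from successive radar cycles into a grid of hit counts. The sketch below does this with a hypothetical cell size; it is an illustration of the accumulation idea, not the procedure used in the study (where the plots were overlaid in ArcGIS).

```python
import math
from collections import Counter
from typing import Iterable, Tuple


def accumulate_plots(points: Iterable[Tuple[float, float]], cell_m: float) -> Counter:
    """Count georeferenced plots (easting, northing) per square grid cell.

    Keys are integer (column, row) indices. Cells that collect many hits over many
    radar cycles are candidates for persistent reflectors; isolated hits may be clutter.
    """
    grid: Counter = Counter()
    for e, n in points:
        grid[(math.floor(e / cell_m), math.floor(n / cell_m))] += 1
    return grid


# Usage: four plots from successive cycles, hypothetical 1 m cells.
grid = accumulate_plots([(0.2, 0.3), (0.9, 0.1), (1.5, 0.5), (-0.1, 0.0)], cell_m=1.0)
print(grid.most_common(1))  # [((0, 0), 2)]
```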

The first step was installation of sensors and system initialization (

Figure 4a). The radar was mounted in the front of the HydroDron. The offset to the global navigational satellite system (GNSS) antenna was measured to find a common reference point. Measurements from external systems (e.g., GNSS and heading) were provided to the measurement recording software on a PC installed in the HydroDron. Measurements were then performed in a static or a dynamic scenario, as shown in

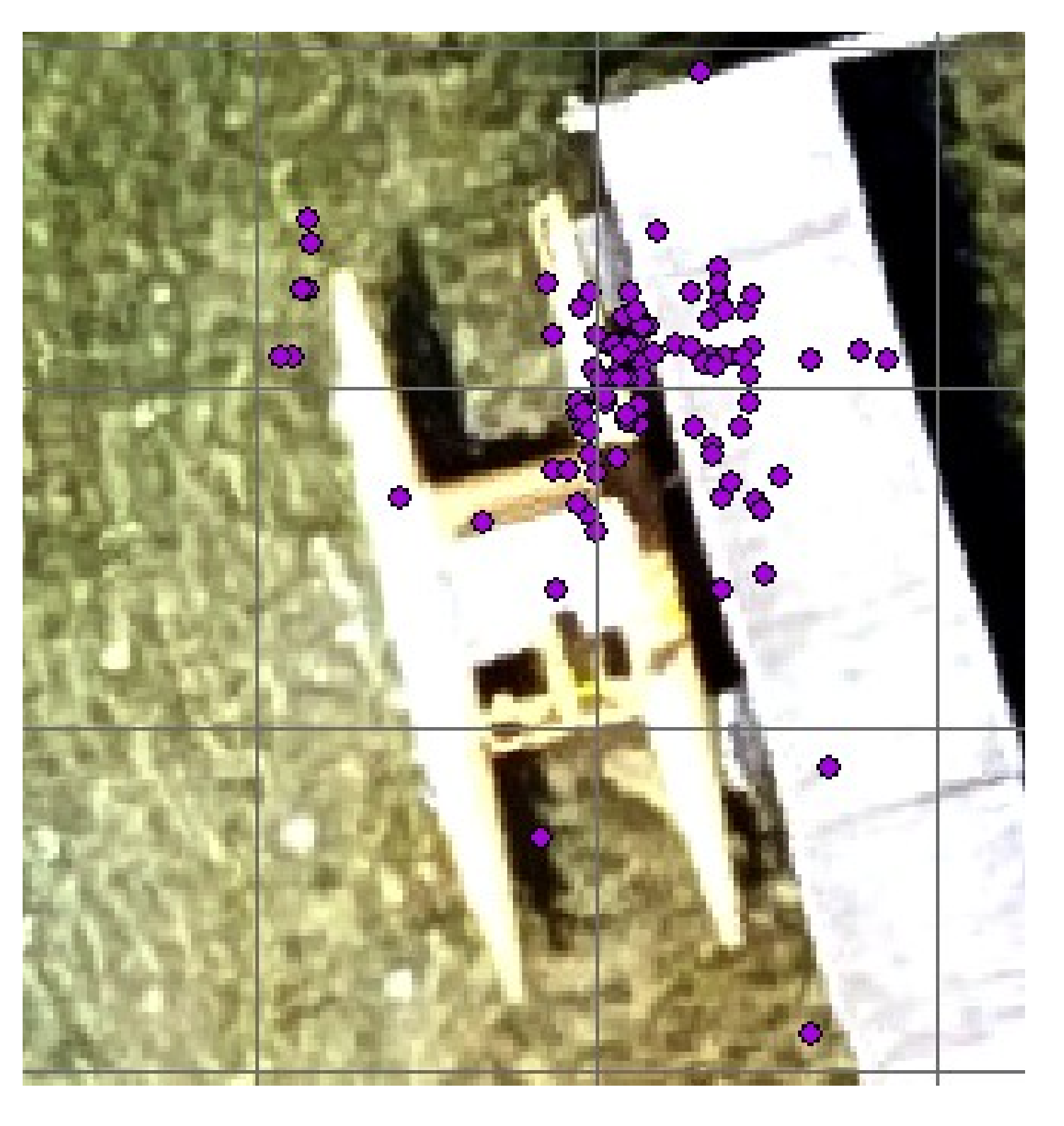

Figure 4b,c and described below. The results from the scenarios were files in which recorded radar measurements were converted to universal transverse mercator (UTM) coordinates. These were then processed in ESRI ArcGIS software together with the orthophotomap. Manual extraction of features was performed, radar measurements were associated with each target, and the statistics for the results were calculated.
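The conversion of a single radar measurement to UTM can be sketched as a planar rigid transformation: the target's range and azimuth give its position in the vessel frame, the measured radar-to-antenna offset shifts it to the GNSS antenna, and the heading rotates it into east/north. The angle conventions (azimuth positive to starboard, boresight along the bow, heading clockwise from grid north) and the `radar_to_utm` helper are assumptions for illustration; elevation, pitch, roll, and meridian convergence are ignored, in line with neglecting cartographic errors over short ranges.

```python
import math
from typing import Tuple


def radar_to_utm(
    range_m: float,
    azimuth_deg: float,
    antenna_e: float,
    antenna_n: float,
    heading_deg: float,
    offset_fwd_m: float = 0.0,
    offset_stbd_m: float = 0.0,
) -> Tuple[float, float]:
    """Georeference one radar target (hypothetical conventions, see below).

    - azimuth is measured from the radar boresight, positive to starboard;
    - the boresight is assumed to be aligned with the vessel's bow;
    - heading is clockwise from grid north (meridian convergence ignored);
    - (offset_fwd_m, offset_stbd_m) is the radar position relative to the GNSS
      antenna in the vessel frame (forward, starboard).
    Returns (easting, northing) in the same UTM zone as the antenna position.
    """
    az = math.radians(azimuth_deg)
    # Target in the vessel frame, relative to the GNSS antenna.
    x_fwd = range_m * math.cos(az) + offset_fwd_m
    y_stbd = range_m * math.sin(az) + offset_stbd_m
    # Rotate vessel frame -> east/north: forward = (sin psi, cos psi), starboard = (cos psi, -sin psi).
    psi = math.radians(heading_deg)
    east = antenna_e + x_fwd * math.sin(psi) + y_stbd * math.cos(psi)
    north = antenna_n + x_fwd * math.cos(psi) - y_stbd * math.sin(psi)
    return east, north


# Usage: vessel heading east, target 10 m away, 30 deg to starboard, radar 1.5 m ahead of antenna.
print(radar_to_utm(10.0, 30.0, 500000.0, 6000000.0, 90.0, offset_fwd_m=1.5))  # approx. (500010.16, 5999995.0)
```

In the static scenario the antenna position and heading would simply be held constant after the initial measurement, whereas in the dynamic scenario they change from cycle to cycle.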

The measurements were performed in two variants: static measurements, when the ASV was moored at the quay (henceforth referred to as the static scenario; Figure 4b), and dynamic measurements, when the ASV was traveling on a lake (henceforth referred to as the dynamic scenario; Figure 4c). In both cases, the position of the radar was obtained online from high-precision GNSS-RTK measurements. The positions of targets were thus georeferenced, and the georeferenced targets were used to create a composite radar traffic image. In the static scenario, however, position and heading needed to be measured only once, at the start. The radar data were recorded in a local polar coordinate system, whereas the RTK data were measured in the UTM system. Georeferencing therefore involved transforming the local radar coordinates to UTM. This entire process is called “Data conversion” in Figure 4. Cartographic errors were neglected in this step, owing to the short measurement distances (maximum 200 m). The key element here was correct determination of the time shift between the radar and navigation measurements, for which a linear model of ASV movement was employed. In parallel, the orthophotomap was converted from its original World Geodetic System (WGS-84) coordinates to the UTM system.
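Under a linear motion model, the vessel pose at a radar timestamp can be obtained by interpolating between the two navigation fixes that bracket it, after shifting the radar time onto the navigation clock. The sketch below assumes the time shift is already known (how it was determined is not described here), treats heading interpolation along the shorter arc so that 359° to 1° passes through 0°, and uses hypothetical fix values; `interpolate_pose` is an illustrative helper, not the software used in the study.

```python
import bisect
from typing import List, Tuple

NavFix = Tuple[float, float, float, float]  # time [s], easting [m], northing [m], heading [deg]


def interpolate_pose(fixes: List[NavFix], t_radar: float, time_shift_s: float = 0.0) -> Tuple[float, float, float]:
    """Vessel pose at a radar timestamp, assuming linear motion between navigation fixes.

    time_shift_s (hypothetical) is added to the radar clock to align it with the
    navigation clock. fixes must be sorted by time.
    """
    t = t_radar + time_shift_s
    times = [f[0] for f in fixes]
    if not times[0] <= t <= times[-1]:
        raise ValueError("timestamp outside the navigation record")
    i = bisect.bisect_right(times, t) - 1
    if i == len(fixes) - 1:  # exactly at the last fix
        return fixes[i][1], fixes[i][2], fixes[i][3]
    t0, e0, n0, h0 = fixes[i]
    t1, e1, n1, h1 = fixes[i + 1]
    w = (t - t0) / (t1 - t0)
    # Heading difference wrapped to [-180, 180) so interpolation takes the shorter arc.
    dh = (h1 - h0 + 180.0) % 360.0 - 180.0
    heading = (h0 + w * dh) % 360.0
    return e0 + w * (e1 - e0), n0 + w * (n1 - n0), heading


# Usage: two fixes 1 s apart; radar stamp 0.4 s with a 0.1 s clock offset -> midpoint.
fixes = [(0.0, 0.0, 0.0, 359.0), (1.0, 2.0, 4.0, 1.0)]
print(interpolate_pose(fixes, 0.4, time_shift_s=0.1))
```

Even a small uncorrected time shift matters when the vessel is moving: at 2 m/s, a 0.1 s offset already displaces every target by about 0.2 m along track.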

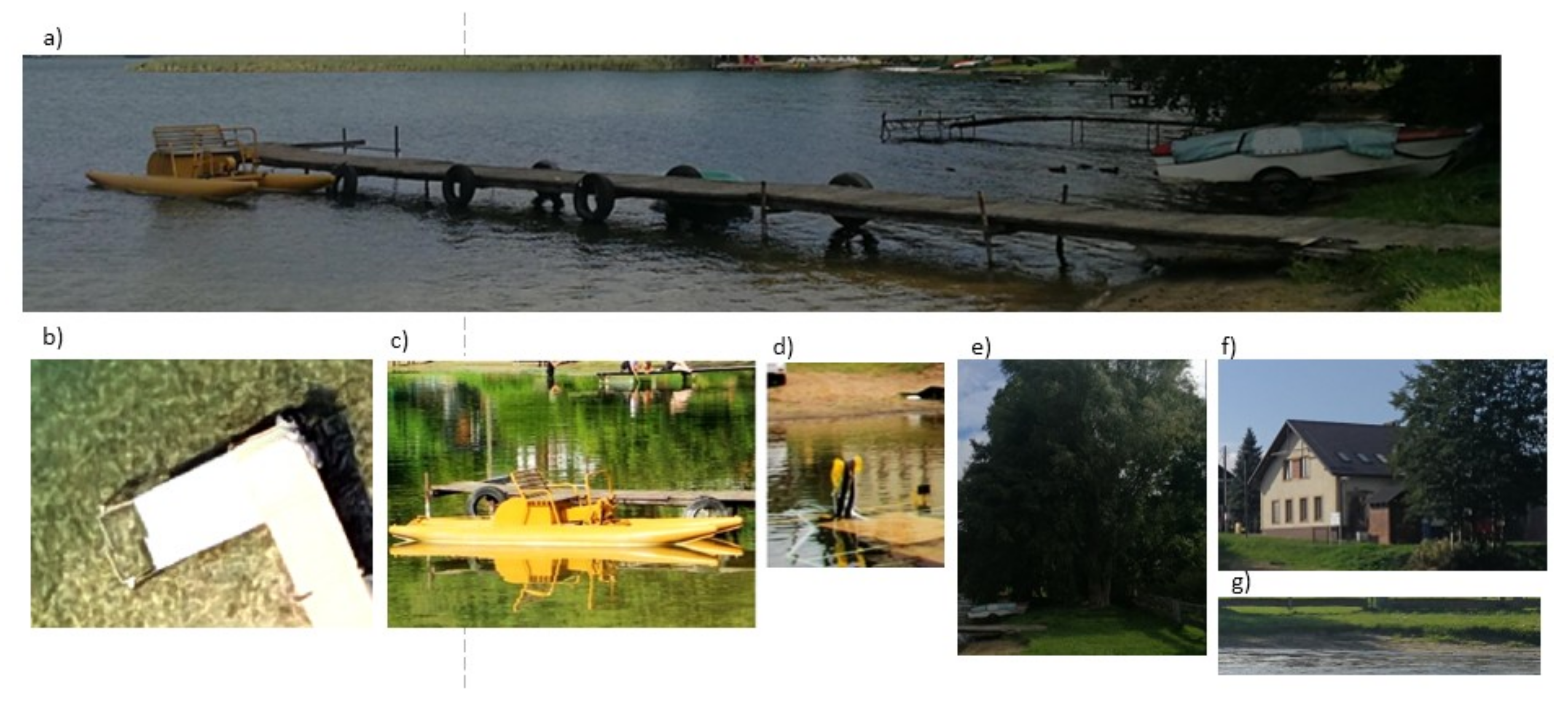

2.4. Evaluation Methodology

The measurements described above provided photogrammetric and radar pictures of the same scene. Owing to the high temporal density of the radar measurements, many plots were obtained for each target. These radar targets were overlaid on the orthophotomap, and a set of test objects was chosen. For these objects, the possibility and accuracy of detection were analyzed statistically. The selected objects are described in detail in the next subsection; in general, they included the most common targets in an inland area, namely vegetation (trees and bushes), piers, buildings, and other boats. This allowed a comprehensive analysis of the possibility of detecting shore structures using automotive radar in an inland shipping environment.
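In the study, radar plots were associated with the test objects manually in ArcGIS. Purely as an illustration of what that association step amounts to, the sketch below assigns each plot to the nearest reference point within a gating radius and leaves plots outside every gate unassociated. Representing objects by single reference points, the 2 m gate, and the `associate` helper are all hypothetical simplifications; extended objects such as piers or tree lines would in practice call for polygon-based tests.

```python
import math
from typing import Dict, List, Optional, Tuple

XY = Tuple[float, float]  # UTM easting, northing [m]


def associate(plots: List[XY], objects: Dict[str, XY], gate_m: float) -> Dict[str, List[XY]]:
    """Assign each plot to the nearest reference object lying within gate_m.

    Plots with no object inside the gate are treated as unassociated and dropped.
    """
    assigned: Dict[str, List[XY]] = {name: [] for name in objects}
    for p in plots:
        best: Optional[str] = None
        best_d = math.inf
        for name, ref in objects.items():
            d = math.dist(p, ref)
            if d <= gate_m and d < best_d:
                best, best_d = name, d
        if best is not None:
            assigned[best].append(p)
    return assigned


# Usage with hypothetical reference points picked on the orthophotomap.
refs = {"pier": (0.0, 0.0), "tree": (10.0, 0.0)}
print(associate([(1.0, 0.0), (9.0, 0.5), (5.0, 5.0)], refs, gate_m=2.0))
# {'pier': [(1.0, 0.0)], 'tree': [(9.0, 0.5)]}
```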

The radar sampling frequency was 20 Hz, which means that during the study (which lasted about 20 min), a large number of plots was received for each object. Although such a large sample is convenient for statistical analysis, an object is unlikely to remain under observation for that long in real operations. It was therefore decided to analyze the data in sliding sampling windows. For both scenarios, the window length was set to 5 s. At the typical speed of an ASV during measurement and mooring operations (about 3–4 knots), this is a period in which anti-collision action can feasibly be taken after an obstacle is detected. The statistics for each selected object were calculated within these time windows.
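The figures behind this choice are easy to check: at 20 Hz, a 5 s window holds 100 radar cycles, about 20 min of recording yields roughly 24,000 cycles, and at 3–4 knots the ASV covers about 7.7–10.3 m per window. The sketch below reproduces this arithmetic and shows one possible per-window statistic, the fraction of cycles in which an object produced at least one plot. That statistic and the 1 s window step are assumptions for illustration; the statistics actually computed are not specified in this section.

```python
from typing import List, Sequence, Tuple

KNOT_MPS = 1852.0 / 3600.0  # 1 knot = 1852 m/h


def sliding_detection_ratio(
    detected: Sequence[bool],
    rate_hz: float = 20.0,
    window_s: float = 5.0,
    step_s: float = 1.0,
) -> List[Tuple[float, float]]:
    """Fraction of radar cycles with a detection of one object in each sliding window.

    detected[k] is True if cycle k produced at least one plot associated with the object.
    Returns (window start time [s], detection ratio) pairs. step_s is hypothetical.
    """
    win = round(window_s * rate_hz)  # 100 cycles for 5 s at 20 Hz
    step = max(1, round(step_s * rate_hz))
    ratios = []
    for start in range(0, len(detected) - win + 1, step):
        hits = sum(detected[start:start + win])
        ratios.append((start / rate_hz, hits / win))
    return ratios


# Scale of the problem: cycles in ~20 min and distance covered in one 5-s window.
print(20 * 60 * 20, "cycles in 20 min")  # 24000 cycles in 20 min
for kn in (3.0, 4.0):
    print(kn, "kn ->", round(kn * KNOT_MPS * 5.0, 1), "m per window")  # 7.7 m, then 10.3 m
```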