Recovering Human Motion Patterns from Passive Infrared Sensors: A Geometric-Algebra Based Generation-Template-Matching Approach

Abstract

1. Introduction

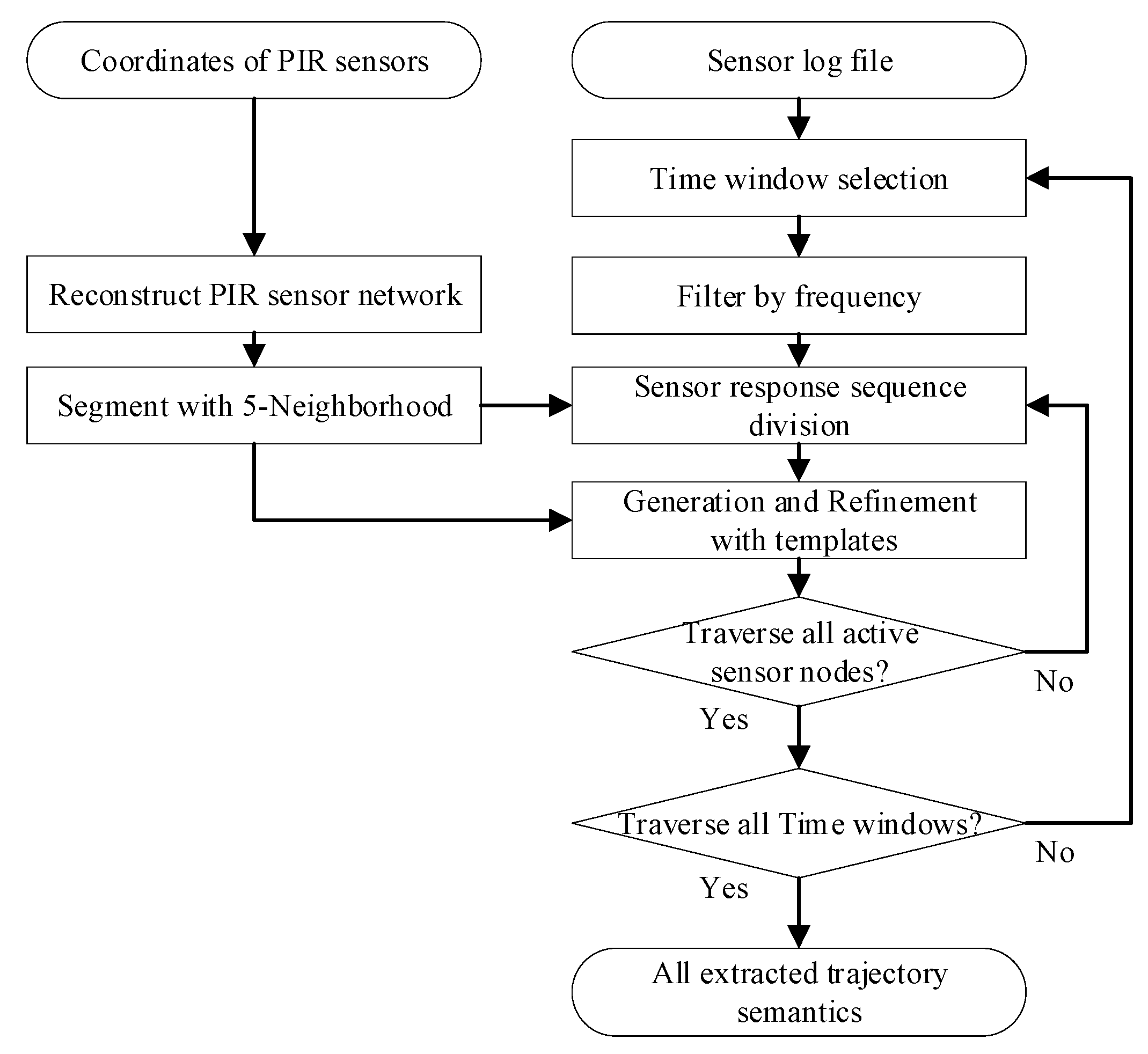

2. Materials and Methods

2.1. Problem Definition and Basic Idea

2.2. Basic Idea

2.2.1. Template Generation

2.2.2. Spatial and Temporal Filtering

2.2.3. Template Matching

3. Methods

3.1. Templates with the Neighborhood Model

3.2. Spatial and Temporal Constraint Window

3.2.1. Spatial Constraint Window

3.2.2. Temporal Constraint Window

3.3. Meta Response Pattern Generation

- Front_seq and Back_seq are both empty, which means only the center sensor node responds;

- Front_seq and Back_seq both contain responding sensor nodes;

- Either Front_seq or Back_seq is empty.
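The three cases can be written as a small decision function. This is a minimal sketch, not the authors' implementation. It assumes that the two sets are the front and back response sequences of the center node, represented as Python lists of node IDs. The enum names and the node IDs `n12`/`n14` are hypothetical.

```python
from enum import Enum
from typing import Sequence


class MetaCase(Enum):
    # Hypothetical labels for the three meta response cases.
    CENTER_ONLY = "only the center sensor node responds"
    BOTH_SIDES = "both sequences contain responding nodes"
    ONE_SIDED = "exactly one sequence is empty"


def classify_meta_pattern(front: Sequence[str], back: Sequence[str]) -> MetaCase:
    """Assign a meta response pattern to one of the three cases.

    `front` and `back` are assumed to hold the IDs of neighbor nodes that
    responded before and after the center node, respectively.
    """
    if not front and not back:
        return MetaCase.CENTER_ONLY
    if front and back:
        return MetaCase.BOTH_SIDES
    return MetaCase.ONE_SIDED


print(classify_meta_pattern([], []).name)            # CENTER_ONLY
print(classify_meta_pattern(["n12"], ["n14"]).name)  # BOTH_SIDES
print(classify_meta_pattern(["n12"], []).name)       # ONE_SIDED
```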

3.4. Template Matching of Meta Response Pattern and Template

3.5. Generation-Template Matching Algorithm

| Algorithm 1. Pseudocode of the generation and matching algorithm. |
|---|
| Input: basic parameters, coordinate set of sensors C, sensor activation log Data, time division step Δt |
| Output: semantic set of trajectories ST |
| Function explanation: The functions used in the pseudocode are explained in Table 1. TC denotes all sensor nodes after coordinate conversion; Aj denotes the set of nodes adjacent to TC; M denotes the adjacency matrix; E denotes the adjacent-domain code; T denotes the total time; W denotes the duration time window; GA_code denotes the code sequence combining Front_seq and Back_seq; Templates denotes the predefined 5-neighborhood motion templates. |
| 1: TC ← DataCoordTrans(C); |
| 2: for i ← 0 to Count of TC do |
| 3: Aji ← DataFindAdj(TCi); |
| 4: for each element e in Aji do |
| 5: if AdjJudgeCon(e, TCi) then |
| 6: M ← IniAdjMatrix(e, TCi); |
| 7: End if |
| 8: End for |
| 9: End for |
| 10: for i ← 0 to Count of TC do |
| 11: M5i ← DataNeighScreen(TCi, M); |
| 12: E5i ← DataNeighCode(M5i); |
| 13: for j ← 0 to T/W do |
| 14: E5i,j ← FreqFilter(E5i,j); |
| 15: Front_seq ← DataDivSeq(Data, E5i,j, Wj, Δt).Front; |
| 16: Back_seq ← DataDivSeq(Data, E5i,j, Wj, Δt).Back; |
| 17: GA_code ← DataSemGenerate(Front_seq, Back_seq); |
| 18: End for |
| 19: End for |
| 20: Motion_Pattern ← DataSemMatch(GA_code, Templates); |
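The network-reconfiguration and spatial-constraint steps of Algorithm 1 (DataFindAdj, AdjJudgeCon, IniAdjMatrix, then DataNeighScreen) can be sketched in Python as follows. This is a sketch under stated assumptions, not the paper's code. The connectedness criterion is not given here, so two sensors are assumed to be connected when their Euclidean distance is at most a hypothetical threshold `max_gap`. The 5-neighborhood is assumed to be the center node plus its nearest connected neighbor in each of the Top, Bottom, Left and Right directions, with the direction chosen by the dominant axis of the offset. All function names are hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple

Coord = Tuple[float, float]


def build_adjacency(coords: List[Coord], max_gap: float) -> List[List[int]]:
    """Symmetric 0/1 adjacency matrix; nodes i and j are treated as
    connected when their distance is at most max_gap (assumed criterion)."""
    n = len(coords)
    m = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(coords[i], coords[j]) <= max_gap:
                m[i][j] = m[j][i] = 1
    return m


def direction(src: Coord, dst: Coord) -> str:
    """Classify the offset src -> dst by its dominant axis (y grows upward)."""
    dx, dy = dst[0] - src[0], dst[1] - src[1]
    if abs(dx) >= abs(dy):
        return "Right" if dx > 0 else "Left"
    return "Top" if dy > 0 else "Bottom"


def five_neighborhood(i: int, coords: List[Coord],
                      m: List[List[int]]) -> Dict[str, Optional[int]]:
    """Center node plus the nearest connected neighbor in each direction."""
    hood: Dict[str, Optional[int]] = {
        "Center": i, "Top": None, "Bottom": None, "Left": None, "Right": None}
    best: Dict[str, float] = {}
    for j, connected in enumerate(m[i]):
        if not connected:
            continue
        d = direction(coords[i], coords[j])
        dist = math.dist(coords[i], coords[j])
        if d not in best or dist < best[d]:
            best[d] = dist
            hood[d] = j
    return hood


# A plus-shaped corridor with one extra sensor to the right of node 4.
coords = [(0, 0), (0, 1), (0, -1), (-1, 0), (1, 0), (2, 0)]
m = build_adjacency(coords, max_gap=1.0)
print(five_neighborhood(0, coords, m))
# {'Center': 0, 'Top': 1, 'Bottom': 2, 'Left': 3, 'Right': 4}
```

Keeping only the nearest neighbor per direction is one simple way to cap each node at five entries. The paper's screening rule may differ.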

4. Case Study

4.1. Data and Analysis Environment

4.2. Analysis and Verification

4.2.1. Verification Based on the Environment

4.2.2. Verification Based on the Event

4.3. Algorithm Efficiency

5. Conclusions and Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Operator Set | Operator | Illustration |
|---|---|---|
| Network reconfiguration | DataCoordTrans() | Coordinate space conversion |
| | DataFindAdj() | Connection point search |
| | AdjJudgeCon() | Connectedness judgment |
| | IniAdjMatrix() | Establish the adjacency matrix |
| Spatial constraints | DataNeighScreen() | 5-neighborhood search |
| | DataNeighCode() | Coding of 5-neighborhood |
| Time constraints | FreqFilter() | Filter the code by frequency |
| | DataDivSeq() | Divide front and back sequences |
| Generation and matching | DataSemGenerate() | Generate the motion codes |
| | DataSemMatch() | Match motion patterns |
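The time-constraint and generation operators (DataDivSeq and DataSemGenerate) can be illustrated with a hedged Python sketch. It assumes a log of `(timestamp, node)` events, a center activation time `t_center` and a window length `window` in seconds. Neighbor activations inside the window before the center activation form the front sequence, and those after it form the back sequence. Each entry direction is then paired with each different exit direction to give codes such as `BottomToLeft`. The event format, the pairing rule and the function names are all assumptions made for illustration.

```python
from typing import Dict, List, Optional, Set, Tuple

Event = Tuple[float, int]  # (timestamp in seconds, node id) -- assumed log format


def divide_sequences(events: List[Event], hood: Dict[str, Optional[int]],
                     t_center: float, window: float) -> Tuple[List[str], List[str]]:
    """Split neighbor activations around a center activation at t_center.

    Front: directions whose node fired within `window` s before t_center.
    Back: directions whose node fired within `window` s after t_center.
    """
    node_to_dir = {n: d for d, n in hood.items() if d != "Center" and n is not None}
    front: List[str] = []
    back: List[str] = []
    for t, node in sorted(events):
        d = node_to_dir.get(node)
        if d is None:  # center node or a node outside the 5-neighborhood
            continue
        if t_center - window <= t < t_center:
            front.append(d)
        elif t_center < t <= t_center + window:
            back.append(d)
    return front, back


def motion_codes(front: List[str], back: List[str]) -> Set[str]:
    """Pair each entry direction with each different exit direction."""
    return {f"{a}To{b}" for a in front for b in back if a != b}


hood = {"Center": 0, "Top": 1, "Bottom": 2, "Left": 3, "Right": 4}
events = [(9.0, 2), (10.0, 0), (11.0, 3), (30.0, 1)]
front, back = divide_sequences(events, hood, t_center=10.0, window=5.0)
print(front, back, motion_codes(front, back))  # ['Bottom'] ['Left'] {'BottomToLeft'}
```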

| Trajectory Semantics | Proposed Type | Environment Type |
|---|---|---|
| Contains none of the turns | Type-I | |
| Contains one of the turns | Type-L | |
| Contains two of the turns, except the pairs (BottomToLeft & TopToRight) and (TopToLeft & BottomToRight) | Type-T | |
| Contains three or four of the turns, or two of the turns forming the pair (BottomToLeft & TopToRight) or (TopToLeft & BottomToRight) | Type-Cross | |
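The decision rules in this table can be sketched as a single Python function. The sketch makes several assumptions. The "turns" are taken to be the four named codes, and any other code, such as a straight pass, is ignored. Repeated codes count once. The Type-Cross condition on two turns is read as "either of the two listed pairs". The function name is hypothetical.

```python
from typing import Iterable

TURNS = frozenset({"BottomToLeft", "TopToRight", "TopToLeft", "BottomToRight"})
# Two-turn combinations that the table assigns to Type-Cross instead of Type-T.
CROSS_PAIRS = (frozenset({"BottomToLeft", "TopToRight"}),
               frozenset({"TopToLeft", "BottomToRight"}))


def environment_type(codes: Iterable[str]) -> str:
    """Map the motion codes observed at one location to a proposed type."""
    turns = frozenset(codes) & TURNS  # non-turn codes are ignored (assumption)
    if len(turns) == 0:
        return "Type-I"
    if len(turns) == 1:
        return "Type-L"
    if len(turns) == 2 and turns not in CROSS_PAIRS:
        return "Type-T"
    return "Type-Cross"  # three or four turns, or one of the two listed pairs


print(environment_type(["BottomToLeft", "BottomToRight"]))  # Type-T
print(environment_type(["BottomToLeft", "TopToRight"]))     # Type-Cross
```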

| Confusion Matrix: Proposed Type (rows) vs. Actual Type (columns) | I | L | T | Cross |
|---|---|---|---|---|
| I | 105 | 0 | 0 | 0 |
| L | 3 | 17 | 0 | 0 |
| T | 1 | 2 | 23 | 0 |
| Cross | 0 | 0 | 0 | 3 |
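The confusion matrix above can be summarized with standard metrics. Because the rows are the proposed types and the columns are the actual types, precision is computed row-wise and recall column-wise. The sketch below derives these values directly from the reported counts. Of the 154 cases, 148 lie on the diagonal.

```python
LABELS = ["I", "L", "T", "Cross"]
# Rows: proposed type; columns: actual type (counts from the table above).
CM = [
    [105, 0, 0, 0],
    [3, 17, 0, 0],
    [1, 2, 23, 0],
    [0, 0, 0, 3],
]


def summarize(cm):
    """Overall accuracy plus per-class precision (row-wise) and recall (column-wise)."""
    total = sum(map(sum, cm))
    correct = sum(cm[k][k] for k in range(len(cm)))
    precision = {lab: cm[k][k] / sum(cm[k]) for k, lab in enumerate(LABELS)}
    recall = {lab: cm[k][k] / sum(row[k] for row in cm) for k, lab in enumerate(LABELS)}
    return correct / total, precision, recall


acc, prec, rec = summarize(CM)
print(f"accuracy = {acc:.3f}")  # accuracy = 0.961
for lab in LABELS:
    print(f"{lab:>5}: precision {prec[lab]:.3f}, recall {rec[lab]:.3f}")
```

Most errors are actual Type-I or Type-L cases proposed as Type-L or Type-T. This lowers precision for L (17/20) and T (23/26) and recall for I (105/109) and L (17/19).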

| Size of Nodes | Size of Extraction | Time (s) | Memory (MB) |
|---|---|---|---|
| 10 | 1000 | 0.28 | 10.4 |
| 50 | 1000 | 0.39 | 17.1 |
| 100 | 1000 | 0.60 | 25.6 |

| Time Interval | No. of Records | Total Time (s) | Spatial Constraints (s) | Time Constraints (s) | Generation (s) | Memory (MB) |
|---|---|---|---|---|---|---|
| One hour | 6923 | 1.973 | 0.027 | 0.022 | 1.083 | 14.0 |
| One day | 77,556 | 26.98 | 0.305 | 0.248 | 20.001 | 79.8 |
| One week | 355,702 | 155.18 | 1.439 | 1.148 | 122.22 | 357.7 |
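Dividing the number of records by the total time gives a simple throughput figure for each interval. The sketch below uses only the numbers reported in the table. Throughput falls from roughly 3500 to roughly 2300 records per second as the log grows from one hour to one week, so the per-record cost rises somewhat with data volume.

```python
# (time interval, number of records, total time in seconds) from the table above
RUNS = [("One hour", 6923, 1.973), ("One day", 77_556, 26.98), ("One week", 355_702, 155.18)]


def throughput(records: int, seconds: float) -> float:
    """Records processed per second of total run time."""
    return records / seconds


for label, records, seconds in RUNS:
    print(f"{label}: {throughput(records, seconds):.0f} records/s")
# One hour: 3509 records/s
# One day: 2875 records/s
# One week: 2292 records/s
```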

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xiao, S.; Yuan, L.; Luo, W.; Li, D.; Zhou, C.; Yu, Z. Recovering Human Motion Patterns from Passive Infrared Sensors: A Geometric-Algebra Based Generation-Template-Matching Approach. ISPRS Int. J. Geo-Inf. 2019, 8, 554. https://doi.org/10.3390/ijgi8120554

Xiao S, Yuan L, Luo W, Li D, Zhou C, Yu Z. Recovering Human Motion Patterns from Passive Infrared Sensors: A Geometric-Algebra Based Generation-Template-Matching Approach. ISPRS International Journal of Geo-Information. 2019; 8(12):554. https://doi.org/10.3390/ijgi8120554

Chicago/Turabian Style: Xiao, Shengjun, Linwang Yuan, Wen Luo, Dongshuang Li, Chunye Zhou, and Zhaoyuan Yu. 2019. "Recovering Human Motion Patterns from Passive Infrared Sensors: A Geometric-Algebra Based Generation-Template-Matching Approach." ISPRS International Journal of Geo-Information 8, no. 12: 554. https://doi.org/10.3390/ijgi8120554

APA Style: Xiao, S., Yuan, L., Luo, W., Li, D., Zhou, C., & Yu, Z. (2019). Recovering Human Motion Patterns from Passive Infrared Sensors: A Geometric-Algebra Based Generation-Template-Matching Approach. ISPRS International Journal of Geo-Information, 8(12), 554. https://doi.org/10.3390/ijgi8120554